We’ve talked about using public search engines as components of a custom search application. Let’s work through two examples of that idea. The first gathers information from a set of technology news sites. The second trolls a set of public LDAP directories.

The Web nowadays offers more search engines than you can keep track of. Even metasearchers—that is, applications that aggregate multiple search engines—are becoming common. For example, I use a tool called Copernic (http://www.copernic.com/) to do parallel searches of AltaVista, Excite, and a number of other engines.

Despite the wealth of searchers and metasearchers, there’s always a need for a more customized solution. The analysts at our fictitious Ronin Group, for example, track technology news by subject. The technology news sites, including PR Newswire (http://www.prnewswire.com/), Business Wire (http://www.businesswire.com/), and Yahoo! (http://www.yahoo.com/), deliver lots of fresh technology news. But consider the plight of the Ronin Group’s XML analyst. None of the available metasearchers cover all the technology news sites that she’d like to include in her daily search for XML-related news.

What to do? It’s straightforward to build a custom metasearcher. You discover the web API for each engine that you want to search, create a URL template, interpolate a search term into that URL template, transmit the URL, and interpret the results.
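As a minimal sketch of the interpolation step, the Perl below fills a URL template with a percent-encoded search term. The {TERM} placeholder and the fill_template and escape_term names are hypothetical, chosen only for illustration; the module later in this section interpolates the term directly into double-quoted strings instead. Encoding starts to matter as soon as a query contains spaces or characters such as + or &, which would otherwise corrupt the query string.

```perl
use strict;
use warnings;

# Percent-encode a search term so it can be spliced safely into a query
# string. Unreserved characters (RFC 3986) pass through unchanged;
# everything else, including spaces, becomes %XX.
sub escape_term {
    my ($term) = @_;
    $term =~ s/([^A-Za-z0-9\-._~])/sprintf("%%%02X", ord($1))/ge;
    return $term;
}

# Fill a URL template. {TERM} is a hypothetical placeholder token used
# only in this sketch.
sub fill_template {
    my ($template, $term) = @_;
    my $escaped = escape_term($term);
    (my $url = $template) =~ s/\{TERM\}/$escaped/g;
    return $url;
}

# The TechWeb template from the text, with the search term parameterized.
my $template = 'http://www.techweb.com/se/techsearch.cgi?queryText={TERM}'
             . '&sorted=true&collname=current&publication=All';
print fill_template($template, 'xml schema'), "\n";
```

Keeping the template as data and the substitution as code is what lets one routine drive many engines: adding an engine means adding a string, not writing a new function.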

In some cases, you’ll find your URL template sitting on the browser’s command line after you run a search. For example, when you search CMP’s TechWeb site (http://www.techweb.com), the following URL appears on the command line:

http://www.techweb.com/se/techsearch.cgi?queryText=xml&sorted=true&collname=current&publication=All

At the PR Newswire site, though, the search form uses the HTTP POST method. So the URL template we’re looking for isn’t on the browser’s command line. But it’s easy to discover by viewing the form’s HTML source. Here’s the relevant piece of that form:

<FORM ACTION="http://199.230.26.105/fulltext" METHOD="POST">
<INPUT TYPE="text" NAME="SEARCH" SIZE=35 MAXLENGTH=70>
<INPUT NAME="NUMDAYS" TYPE=Radio VALUE="3" CHECKED>
</FORM>

As long as the server-side script accepts GET as well as POST (many CGI scripts do), that’s equivalent to this HTTP GET request, which you can formulate and test on the browser’s command line:

http://199.230.26.105/fulltext?SEARCH=xml&NUMDAYS=3
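To see why the two forms are interchangeable, it helps to build the encoded string yourself. A browser submitting the form encodes its fields as application/x-www-form-urlencoded; with POST that string travels in the request body, and with GET it follows the ? in the URL. This sketch builds that string from ordered name/value pairs. The form_encode and query_string names are hypothetical, and whether a particular server honors GET as well as POST is up to its script, so test before relying on it.

```perl
use strict;
use warnings;

# Encode one field the way a browser does for
# application/x-www-form-urlencoded: spaces become '+', and other
# reserved characters become %XX.
sub form_encode {
    my ($s) = @_;
    $s =~ s/([^A-Za-z0-9\-._~ ])/sprintf("%%%02X", ord($1))/ge;
    $s =~ tr/ /+/;
    return $s;
}

# Build a query string from name/value pairs, keeping their order so the
# result matches what the form itself would send.
sub query_string {
    my @pairs = @_;
    my @parts;
    while (my ($name, $value) = splice(@pairs, 0, 2)) {
        push @parts, form_encode($name) . '=' . form_encode($value);
    }
    return join('&', @parts);
}

# Field names and action URL come from the PR Newswire form shown above.
my $action = 'http://199.230.26.105/fulltext';
print "$action?", query_string(SEARCH => 'xml', NUMDAYS => 3), "\n";
```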

Figure 14.2 illustrates the general strategy for a metasearcher. To each of a set of engines, it sends a query. From each, it receives an HTML page in response. To each of these HTML pages, it applies a regular expression to identify search results and normalize them.

To identify the patterns that define search results for each of the target engines, you need to inspect the HTML source of the result pages. Then you write regular expressions to isolate the desired elements. For example, here’s a search result from Business Wire:

<a href="http://www.businesswire.com/webbox/bw.052599/191451672.htm"> SoftQuad Now Shipping XMetaL 1.0--Setting the Standard for XML Content Creation</a></b><br>

We’d like to capture two pieces of this link: its address and its label—that is, the story’s headline. Here’s a Perl regular expression that will match this search result:

"(http:[^"]+)(">)([^<]+)

This expression captures the URL in Perl’s special variable $1 and the headline in $3. The middle group, $2, just consumes the closing quote and bracket of the anchor tag.
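A quick way to check a result pattern before wiring it into a metasearcher is to run it against a saved fragment of the result page. This sketch applies the Business Wire pattern to the sample result above and trims the whitespace that precedes the headline.

```perl
use strict;
use warnings;

# The Business Wire result shown above, as a single string.
my $html = '<a href="http://www.businesswire.com/webbox/bw.052599/191451672.htm">'
         . ' SoftQuad Now Shipping XMetaL 1.0--Setting the Standard for XML'
         . ' Content Creation</a></b><br>';

# Same pattern as in the text: $1 = URL, $2 = '">', $3 = headline.
my $pattern = qr{"(http:[^"]+)(">)([^<]+)};

my @results;
while ($html =~ /$pattern/g) {
    my ($url, $headline) = ($1, $3);
    $headline =~ s/^\s+|\s+$//g;    # trim whitespace around the label
    push @results, [$url, $headline];
}

printf "%s\n  %s\n", @$_ for @results;
```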

The Perl module shown in Example 14.3 packages these techniques. Its central data structure is a hash of lists (HoL) whose keys are the names of news sites and whose values are three-element lists. Each list comprises the following.

A parameterized URL template to drive the search

A regular expression to match search results

A hostname to prefix to the resulting link (not always needed)

Example 14-3. A Technology News Metasearcher

#!/usr/bin/perl -w

use strict;

package NewsWire;

use LWP::Simple;

sub new
{
    my ($pkg, $search_term) = @_;

    # Percent-encode the search term so multiword queries survive in URLs.
    (my $term = $search_term) =~ s/([^A-Za-z0-9])/sprintf("%%%02X", ord($1))/ge;

    # TechWeb's template needs today's date, as two-digit fields,
    # for the end of its date range.
    my ($mday, $month, $year) = (localtime)[3, 4, 5];
    $mday  = sprintf('%02d', $mday);
    $month = sprintf('%02d', $month + 1);
    $year  = sprintf('%02d', $year % 100);

    # Each entry: [ URL template, result pattern, prefix for relative links ]
    my $self =
    {
        'headlines' => {},
        'nw_enum' =>
        {
            'bizwire' =>
            [
                "http://search.businesswire.com/query.html?qm=1&qt=$term",
                '"(http:[^"]+)(">)([^<]+)',
                '',
            ],
            'cnet' =>
            [
                "http://www.news.com/Searching/Results/1,18,1,00.html?querystr=$term"
                  . "&startdate=&lastdate=&numDaysBack=03&newsCategory=null&newscomTopics=0",
                '"(/News/Item/[^"]+)(">)([^<]+)</a>, ([^<]+)',
                'http://www.news.com',
            ],
            'yahoo' =>
            [
                "http://search.news.yahoo.com/search/news?n=10&p=$term",
                '(http://biz.yahoo.com/[^"]+)(">)(.+)</A>',
                '',
            ],
            'infoseek' =>
            [
                "http://infoseek.go.com/Titles?col=NX&sv=IS&lk=noframes&nh=10"
                  . "&qt=%2B$term%2B&rf=i500sRD&kt=A&ak=news1486",
                '(Content\?[^"]+)(">)(.+)</a>',
                'http://infoseek.go.com/',
            ],
            'techweb' =>
            [
                "http://www.techweb.com/se/techsearch.cgi?queryText=$term"
                  . "&sorted=true&collname=current&publication=WIR&submitbutton=Search"
                  . "&from_month=01&from_day=01&from_year=99"
                  . "&to_month=$month&to_day=$mday&to_year=$year",
                '(http://www.techweb.com/wire/story/[^"]+)("[^>]+>)(.+)</a>',
                '',
            ],
            'newspage' =>
            [
                "http://www.newspage.com/cgi-bin/np.Search?previous_module=NASearch"
                  . "&PreviousSearchPage=NewSearch&offerID=&Query=$term&NumDays=7"
                  . "&NewSearchSubmitBtn.x=15&NewSearchSubmitBtn.y=5",
                'href="(/cgi-bin/NA.GetStory[^>]+)(>)(.+)</A>',
                'http://www.newspage.com',
            ],
            'prnewswire' =>
            [
                "http://199.230.26.105/fulltext?SEARCH=$term&NUMDAYS=3",
                '(http://www.prnewswire.com/cgi-bin/stories.pl[^>]+)(>)([^<]+)',
                '',
            ],
        },
    };

    bless $self, $pkg;
    return $self;
}

sub getNewswire
{
    my ($self, $newswire) = @_;
    my ($search_url, $result_pat, $server_pre) = @{ $self->{nw_enum}->{$newswire} };

    print STDERR "search_url: $search_url, $result_pat\n";
    print STDERR "trying $newswire... ";

    my $raw_results = get $search_url;         # undef if the request fails
    unless ( defined $raw_results && length $raw_results )
    {
        print STDERR "Error: no response from $newswire\n";
        return;
    }

    my $count = 0;
    while ( $raw_results =~ m#$result_pat#g )
    {
        $count++;
        my $url      = "$server_pre$1";
        my $headline = "$3 ($newswire)";
        $headline =~ s#</?b>##g;               # strip bold tags around search hits
        if ( $url eq '' )
            { print STDERR "Error: empty URL in $newswire\n"; }
        if ( $headline eq '' )
            { print STDERR "Error: empty headline in $newswire\n"; }
        $self->{headlines}->{$headline} = $url;
    }

    if ( ! $count )
        { print STDERR "Error: $newswire returned nothing\n"; }
    else
        { print STDERR "$count results\n"; }
}

sub getAllNewswires
{
    my ($self) = shift;
    foreach my $nw (sort keys %{$self->{nw_enum}})
    {
        $self->getNewswire($nw);
    }
}

sub printResults
{
    my ($self) = shift;
    print "<ul>\n";
    foreach my $headline ( sort keys %{$self->{headlines}} )
    {
        my $url = $self->{headlines}->{$headline};
        (my $label = $headline) =~ s/^\s+//;   # trim leading whitespace for display
        print "<li><a href=\"$url\">$label</a>\n";
    }
    print "</ul>\n";
}

1;

Here’s a script that uses this module to gather a list of current XML-related news stories:

use NewsWire;

my $nw = NewsWire->new("xml");

$nw->getAllNewswires;

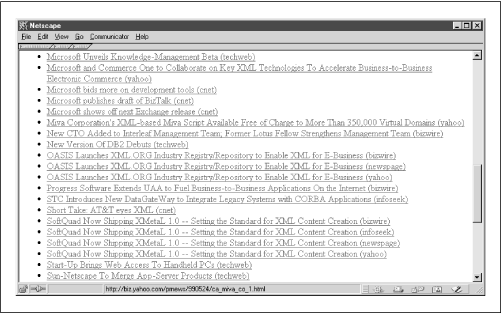

$nw->printResults;Some of the output from this script appears in Figure 14.3.

Note that some of the stories are carried by multiple sources. Why not condense these into single entries? You could do that, though it’s interesting to see the overlap among the various services. And the degree of repetition is one measure of the importance of a story.

Suppose you want to count the repeated items. The results structure in the NewsWire module could easily be adapted for that purpose. But the real point here is that even without explicitly adding that capability, the existing HTML output can be further transformed by another stage of a web pipeline. You don’t have to anticipate every possible requirement and build every imaginable feature into a web component. It’s arguably better that you don’t, and that you instead follow the Unix tradition: build parts that do simple, well-defined jobs and that can be embedded in a pipeline.
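Such a downstream stage can be sketched as a separate Perl filter that reads the HTML list produced by printResults and counts how many sources carried each headline. It assumes one list item per line and that two sources report a story under exactly the same headline text, which real feeds often will not; a fuzzier comparison would be a natural further stage. The count_sources name and the script names in the usage line are hypothetical.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Group <li> entries by headline, splitting off the trailing "(source)"
# tag that NewsWire appends, and collect the sources for each story.
sub count_sources {
    my @lines = @_;
    my %sources;    # headline => list of source names
    for my $line (@lines) {
        next unless $line =~ m{<li><a href="[^"]*">(.+) \((\w+)\)</a>};
        push @{ $sources{$1} }, $2;
    }
    return \%sources;
}

# When given files (or "-" for STDIN), report the most-repeated stories first.
if (@ARGV) {
    my $sources = count_sources(<>);
    for my $h (sort { @{ $sources->{$b} } <=> @{ $sources->{$a} } or $a cmp $b }
               keys %$sources) {
        my @s = @{ $sources->{$h} };
        printf "%d  %s [%s]\n", scalar @s, $h, join(', ', @s);
    }
}
```

In use, the two stages would be chained as in perl metasearch.pl | perl count_sources.pl -, with neither script knowing about the other.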

To show that any Internet service can be aggregated and repackaged, we’ll switch gears and focus on public LDAP directories. The Netscape and Microsoft mailreaders both include LDAP clients. They come preloaded with the addresses of several public directories, including Bigfoot, Switchboard, and Four11. It’s remarkable that you can search these directories in real time as you compose messages, but the feature isn’t as useful or well used as it might be. One reason is that it’s never clear which directory to search. None are authoritative; any of them might turn up the right answer to a given query.

Searching the directories one after the other is a tedious affair. Suppose you start with Switchboard, and you’re looking for me. It’s a big Internet, and there are a few different Jon Udells, so if you know I live in New Hampshire, you’ll want to start with a restrictive query, and if that fails then broaden it. What if even the broader search fails? You might try Four11, first restrictively and then broadly. It soon adds up to a lot of wasted motion. You’d like to apply the restrictive search to several sites at once and then, if necessary, search all those sites a second time more broadly. You can achieve that effect with a simple form/script pair. Figure 14.4 shows the search form.

Example 14.4 shows the accompanying script.

Example 14-4. Aggregated LDAP Search Script

use strict;
use TinyCGI;
use Mozilla::LDAP::Conn;                       # use the PerLDAP module

my $cgi = TinyCGI->new;
print $cgi->printHeader;
my $vars = $cgi->readParse();

my %searchroots =
(
    'ldap.bigfoot.com'     => "",
    'ldap.four11.com'      => "",
    'ldap.yahoo.com'       => "",
    'ldap.switchboard.com' => "o=switchboard,c=us",
);

my @servers = ();
foreach my $server (keys %searchroots)
{
    if ( $vars->{$server} eq 'on' )            # if LDAP server selected on form
        { push (@servers, $server); }          # add to list to search
}

my $person;
if ( $vars->{person} eq '' )                   # by default
    { $person = "cn=*"; }                      # use wildcard for cn
else
    { $person = "cn=$vars->{person}"; }        # else use supplied name

my $state;
if ( $vars->{state} eq 'Choose state' )        # by default
    { $state = "st=*"; }                       # use wildcard for state
else
{
    my ($st1, $st2) = split(',', $vars->{state}); # else value like "NH,New Hampshire"
    $state = "| (st=$st1)(st=$st2)";           # use either part
}

my $city;
if ( $vars->{city} eq '' )                     # by default
    { $city = "l=*"; }                         # use wildcard for location
else
    { $city = "l=$vars->{city}"; }             # else use supplied name

my $search =                                   # construct the query
    "(& ($person) ($city) ($state) )";

print "<p>search: $search";
print "<pre>";
foreach my $server (@servers)
{
    print "<p>$server: ($searchroots{$server})";
    my $conn =                                 # connect to LDAP server
        Mozilla::LDAP::Conn->new($server, 389);
    unless ($conn)                             # skip unreachable servers
        { print "could not connect to $server\n"; next; }
    my $entry =                                # transmit query
        $conn->search($searchroots{$server}, "subtree", $search);
    while ($entry)                             # enumerate and print results
    {
        $entry->printLDIF();
        $entry = $conn->nextEntry();
    }
    $conn->close();
}
print "</pre>";
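The script splices form input straight into the filter, so a name containing a parenthesis or backslash would produce a malformed query. The sketch below, a hedged alternative rather than the book's code, escapes filter values along the lines of RFC 4515 and builds both the restrictive filter and a broader name-only fallback, matching the restrictive-then-broad strategy described earlier. It deliberately leaves * unescaped so users can still enter wildcards, treats an empty state as a wildcard (the form's "Choose state" default would need mapping to empty), and emits filters without the spaces the script uses. The ldap_escape, value_or_wildcard, and build_filter names are hypothetical.

```perl
use strict;
use warnings;

# Escape characters that are special in LDAP filter values (RFC 4515):
# backslash, parentheses, and NUL become \XX hex escapes. '*' is left
# alone on purpose so users can still type wildcards such as "Jon*".
sub ldap_escape {
    my ($v) = @_;
    $v =~ s/([\\()\0])/sprintf("\\%02x", ord($1))/ge;
    return $v;
}

# Missing or empty fields become wildcards, as in the script above.
sub value_or_wildcard {
    my ($v) = @_;
    return (defined $v && length $v) ? ldap_escape($v) : '*';
}

# Build an AND filter from form-style fields. A state value such as
# "NH,New Hampshire" becomes an OR of both spellings. When $broad is
# true, only the name is used, for the second, wider pass.
sub build_filter {
    my ($f, $broad) = @_;
    my @terms = ("(cn=" . value_or_wildcard($f->{person}) . ")");
    unless ($broad) {
        push @terms, "(l=" . value_or_wildcard($f->{city}) . ")";
        if (defined $f->{state} && length $f->{state}) {
            my @alts = map { "(st=" . ldap_escape($_) . ")" } split /,/, $f->{state};
            push @terms, @alts > 1 ? "(|" . join('', @alts) . ")" : $alts[0];
        } else {
            push @terms, "(st=*)";
        }
    }
    return "(&" . join('', @terms) . ")";
}

# Restrictive pass first, then the broad fallback.
my %form = (person => 'Jon Udell', city => 'Keene', state => 'NH,New Hampshire');
print build_filter(\%form, $_), "\n" for (0, 1);
```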

This method does more than just aggregate search across multiple directories. In an organization with various LDAP clients deployed (the Microsoft and Netscape mailreaders, Eudora, and perhaps others), it provides a common interface to a fielded LDAP search. That interface can hide idiosyncrasies of the various backend services that would confuse and frustrate users. For example, at one time the st slot in some public LDAP directories used the full state name (e.g., New Hampshire) and in others the abbreviated name (e.g., NH). Users shouldn’t need to worry about this kind of thing, and when you repackage services for them, they won’t have to. These kinds of operational details can be learned once and then shared with the whole group. There are lots of opportunities to create this kind of Internet groupware, and many benefits flow from doing so.
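One way to learn such a detail once and share it is to normalize it on the server rather than in the form. The sketch below accepts a state in either spelling and returns both, so the resulting filter component matches directories that use either convention. The three-entry table is a hypothetical stand-in for a complete list, the state_variants and state_clause names are hypothetical, and values are left unescaped for brevity.

```perl
use strict;
use warnings;

# A tiny, hypothetical lookup table; a real one would list every state.
my %STATE_NAME = (
    NH => 'New Hampshire',
    MA => 'Massachusetts',
    VT => 'Vermont',
);
# Reverse index: lowercased full name => abbreviation.
my %STATE_ABBR = map { lc($STATE_NAME{$_}) => $_ } keys %STATE_NAME;

# Given either spelling, return both (abbreviation first). Unknown input
# is passed through unchanged as a single value.
sub state_variants {
    my ($input) = @_;
    my $abbr = uc $input;
    return ($abbr, $STATE_NAME{$abbr}) if exists $STATE_NAME{$abbr};
    my $found = $STATE_ABBR{lc $input};
    return ($found, $STATE_NAME{$found}) if defined $found;
    return ($input);
}

# OR the variants together as an LDAP filter component.
sub state_clause {
    my @v = state_variants(@_);
    return @v == 1 ? "(st=$v[0])" : '(|' . join('', map { "(st=$_)" } @v) . ')';
}

print state_clause('nh'), "\n";
```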

Consider what happens when an important new LDAP directory becomes available. In the normal course of events, it won’t show up in people’s address books until the next browser release. If it’s an internal directory, that will never happen. Either way there’s a procedure for adding a new LDAP directory to the browser’s address book, but not many users are likely to discover it. And of course it’s a different procedure for every LDAP client, so if you want to document it, you might have to do so three or four times. A centralized lookup service not only gives access to new external and internal directories, it also announces their existence as they come online. Making people aware of information resources is sometimes more than half the battle. Years ago a friend who worked at Lotus told me that he’d sold his car within an hour of posting a notice on an internal Notes database. “That’s amazing,” I said. “Yeah,” he replied, “of course, it took me most of the day to figure out which Notes database to post the ad in.”

A deep problem lurks under the surface of that remark. Computer software can’t yet organize and classify knowledge, and I’m not betting that we’ll see meaningful progress on that front anytime soon. The task requires uniquely human traits—creative synthesis, adaptive logic, flexible analogy making. I don’t pretend that the Internet component model I’ve outlined here relieves us of the burden of organizing and classifying knowledge, because I don’t think anything can—or should. But the techniques of aggregation and repackaging create power tools for knowledge management. And since those tools are programmable components, themselves subject to aggregation and repackaging, they can breed new tools. For Internet groupware developers, the challenge and the opportunity is to apply the existing tools and create new ones in order to help networked teams work smarter.