There is only one constant, one universal. It is the only real truth; causality. Action, reaction. Cause and effect. | ||

| --Merovingian, Matrix Reloaded | ||

In Chapter 6, we discussed the similarities and differences between processes and threads. The most significant difference between threads and processes is that each process has its own address space and threads are contained in the address space of their process. We discussed how threads and processes have an id, a set of registers, a state, and a priority, and adhere to a scheduling policy. We explained how threads are created and managed. We also created a thread class.

In this chapter, we take the next step and discuss communication and cooperation between threads and processes. We cover among other topics:

In Chapter 3, we discussed the challenges of coordinating the execution of concurrent tasks. The example used was software-automated painters who were to paint the house before guests arrived for the holidays. A number of issues were outlined while decomposing of the problem and solution for the purpose of determining what would be the best approach to painting the house. Some of those issues had to deal with communication and the synchronization resource usage.

Did the painters have to communicate with each other?

Should the painters communicate when they had completed a task or when they required some resource like a brush or paint?

Should painters communicate directly with each other or should there be a central painter through which all communications are routed?

Would it be better if only painters in one room communicated or if painters of different rooms communicated?

As far as sharing resources, can multiple painters share a resource or will usage have to be serialized?

These issues are concerned with coordinating communication and synchronization between these concurrent tasks. If communication between dependent tasks is not appropriately designed, then data race conditions can occur. Determining the proper coordination of communication and synchronization between tasks requires matching the appropriate concurrency models during problem and solution decomposition. Concurrency models dictate how and when communication occurs and the manner in which work is executed. For example, for our software-automated painters a boss-worker model (which we discuss later in this chapter) could be used. A single boss painter can delegate work or direct painters as to which rooms to paint at a particular time. The boss painter can also manage the use of resources. All painters then communicate what resources they need in order to complete their tasks to the boss who then determines when resources are delegated to painters. Dependency relationships can be used to examine which tasks are dependent on other tasks for communication or cooperation.

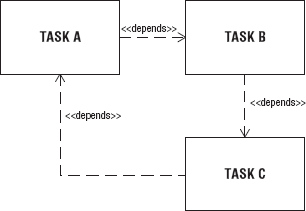

When processes or threads require communication or cooperation among each other to accomplish a common goal, they have a dependency relationship. Task A depends on Task B to supply a value for a calculation, to give the name of the file to be processed, or to release a resource. Task A may depend on Task B, but Task B may not have a dependency on Task A. Given any two tasks, there are exactly four dependency relationships that can exist between them:

In the first and second cases the dependency is a one-way unidirectional dependency. In the third case, there is a two-way bidirectional dependency; A and B are mutually dependent on each other. In the fourth case, there is a NULL dependency between Task A and B; no dependency exists.

When Task A requires data from Task B in order for it to execute its work, then there is a dependency relationship between Task A and Task B. A software-automated painter can be designated to fill all buckets running low on paint with paint at the behest of the boss painter. That would mean all painters (that are actually painting) would have to communicate with the boss painter that they were low on paint. The boss painter would then inform the refill painter that there were buckets of paint to be filled. This would mean that the worker painters have a communication dependency with the boss painter. The refill painter also had a communication dependency with the boss painter.

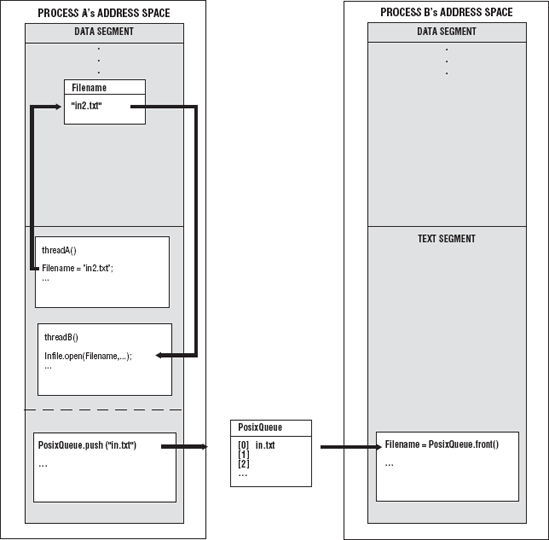

In Chapter 5, a posix_queue object was used to communicate between processes. The posix_queue is an interface to the POSIX message queue, a linked list of strings. The posix_queue object contains the names of the files that the worker processes are to search to find the code. A worker process can read the name of the file from posix_queue. posix_queue is a data structure that resides outside the address space of all processes. On the other hand, threads can also communicate with other threads within the address space of their process by using global variables and data structures. If two threads wanted to pass data between them, thread A would write the name of the file to a global variable, and thread B would simply read that variable. These are examples of unidirectional communication dependencies where only one task depends on another task. Figure 7-1 shows two examples of unidirectional communication dependencies: the posix_queue used by processes and the global variables used to hold the name of a file for threads A and B.

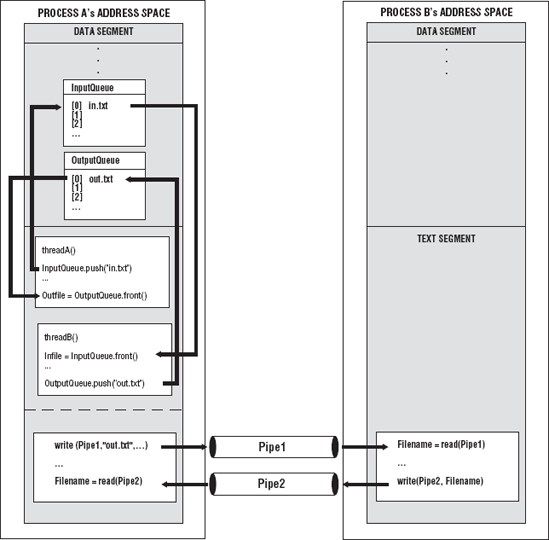

An example of a bidirectional dependency is two First-In, First-Out (FIFO) pipes. A pipe is a data structure that forms a communication channel between two processes. Process A will use pipe 1's input end to send the name of the file that process B has to process. Process B will read the name of the file from the output end of pipe 1. After it has processed the contents of the file, the result is written to a new file. The name of the new file will be written to the input end of pipe 2. Process A will read the name of the file from the output end of pipe 2. This is bidirectional communication dependency. Process B depends on process A to communicate the name of the file, and process A depends on process B to communicate the name of the new file. Thread A and thread B can use two global data structures like queues; one would contain the names of source files, and the other would be used to contain the names of resultant files. Figure 7-2 shows two examples of bidirectional communication dependencies between processes A and B and threads A and B.

When Task A requires a resource that Task B owns and Task B must release the resource before Task A can use it, this is a cooperation dependency. When two tasks are executing concurrently, and both are attempting to utilize the same resource, cooperation is required before either can successfully use the resource. Assume that there are multiple software-automated painters in a single room, and they are sharing a single paint bucket. They all try to access the paint bucket at the same time. Considering that the bucket cannot be accessed simultaneously by painters (it's not thread safe to do so), access has to be synchronized, and this requires cooperation.

Another example of cooperation dependency is write access to the posix_queue. If multiple processes were to write the names of the files where the code was located to the posix_queue, this would require that only one process at a time be able write to the posix_queue. Write access would have to be synchronized.

You can understand the overall task relationships between the threads or processes in an application by enumerating the number of possible dependencies that exist. Once you have enumerated the possible dependencies and then their relationships, you can determine which threads you must code for communication and synchronization. This is similar to truth tables used to determine possible branches of decision in a program or application. Once the dependency relationships among threads are enumerated, the overall thread structure of the process is available.

For example, if there are three threads A, B, and C (three threads from one process or one thread from three processes), you can examine the possible dependencies that exist among the threads. If there are two threads involved in a dependency, use combination to calculate the possible threads involved in the dependency from the three threads: C(n,k) where n is the number of threads and k is the number of threads involved in the dependency. So, for the example C(3,2), the answer is 3; there are three possible combinations of threads: A and B, A and C, B and C.

Now if you consider each combination as a graph (with two nodes and one edge between them), a simple graph, meaning that there are no self-loops and no parallel edges (no two edges will have the same endpoints), then the number of edges in a graph is n(n- 1)/2. So, for the two-node simple graph, there are 2(2 - 1)/2, which is 1. There is one edge for each graph. Now each edge can have a possible four possible dependency relationships as discussed earlier:

A → B: Task A depends on Task B.

A ← B: Task B depends on Task A.

A

B: Task A depends on Task B, and Task B depends on Task A.

A NULL B: There are no dependencies between Task A and Task B.

So, each individual graph has four possible relationships. If you count the number of possible dependency relationships among three threads in which two are involved in the relationship, there are 12 possible relationships.

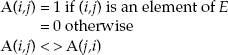

An adjacency matrix can be used to enumerate the actual dependency relationships for two-thread combinations. An adjacency matrix is a graph G = (V,E) in which V is the set of vertices or nodes of the graph and E is the set of edges such that:

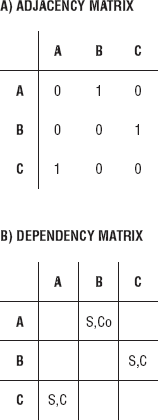

where i denotes a row and j denotes a column. The size of the matrix is n x n, where n is the total number of threads. Figure 7-3(A) shows the adjacency matrix for three threads. The 0 indicates that there is no dependency, and the 1 indicates that there is a dependency. An adjacency matrix can be used to demarcate all of the dependency relationships between any two threads. On a diagonal, there are all 0s because there are no self-dependencies.

A dependency graph is useful for documenting the type of dependency relationship, for example, C for communication or Co for cooperation. S is for synchronization if the communication or cooperation dependency requires synchronization. The dependency graph can be used during the design or testing phase of the Software Development Life Cycle (SDLC). To construct the dependency graph, the adjacency matrix is used. Where there is a 1 in a row column position, it is replaced by the type of relationship. Figure 7-3(B) shows the dependency graph for the three threads. The 0s and 1s have been replaced by C or Co. Where there was a 0, no relationship exists; the space is left blank. For A(1,2), A depends on B for synchronized cooperation, A(2,3) B depends on C for synchronized communication, and A(3,2) C depends on A for synchronized communication. Bidirectional relationships like A

These tools and approaches are very useful. Knowing the number of possible relationships and identifying what those relationships are helps in establishing the overall thread structure of processes and the application. We have used them for small numbers of threads. The matrix is only useful when two threads are involved in the dependency. For large numbers of threads, the matrix approach cannot be used (unless it is multidimensional.) But having to enumerate each relationship for even a moderate number of threads would be unwieldy. This is why the declarative approach is very useful.

Processes have their own address space. Data that is declared in one process is not available in another process. Events that happen in one process are not known to another process. If process A and process B are working together to perform a task such as filtering out special characters in a file or searching files for a code, there must be methods for communicating and coordinating events between the two processes. In Chapter 5, the layout of a process was described. A process has a text, data, and stack segment. Processes may also have other memory allocated in the free store. The data that the process owns is generally in the stack and data segments or is dynamically allocated in memory protected from other processes. For one process to be made aware of another process's data or events, you use operating system Application Program Interfaces (APIs) to create a means of communication. When a process sends data to another process or makes another process aware of an event by means of operating system APIs, this is called Interprocess Communication (IPC). IPC deals with the techniques and mechanisms that facilitate communication between processes. The operating system's kernel acts as the communication channel between the processes. The posix_queue is an example of IPC. Files can also be used to communicate between related or unrelated processes.

The process resides in user mode or space. IPC mechanisms can reside in kernel or user space. Files used for communication reside in the filesystem outside of both user and kernel space. Processes sharing information by utilizing files have to go through the kernel using system calls such as read, write, and lseek or by using iostreams. Some type of synchronization is required when the file is being updated by two processes simultaneously. Shared information between processes resides in kernel space. The operations used to access the shared information will involve a system call into the kernel. An IPC mechanism that does reside in user space is shared memory. Shared memory is a region of memory that each process can reference. With shared memory, processes can access the data in this shared region without making calls to the kernel. This also requires synchronization.

The persistence of an object refers to the existence of an object during or beyond the execution of the program, process, or thread that created it. A storage class specifies how long an object exists during the execution of a program. An object can have a declared storage class of automatic, static, or dynamic.

Automatic objects exist during the invocation of a block of code. The space and values an object is given exist only within the block. When the flow of control leaves the block, the object goes out of existence and cannot be referred to without an error.

A static object exists and retains values throughout the execution of the program.

An object that was dynamically allocated can have no more than static storage but can have less than automatic storage. Programmers determine when an object is dynamically declared during runtime, and that object will exist for the entire execution of the program.

Persistence of an object is not necessarily synonymous with the storage of the object on a storage device. For example, automatic or static objects may be stored in external storage that is used as virtual memory during program execution, but the object will be destroyed after the program is over.

IPC entities reside in the filesystem, in kernel space, or in user space, and persistence is also defined the same way: filesystem, kernel, and process persistence.

An IPC object with filesystem persistence exists until the object is deleted explicitly. If the kernel is rebooted, the object will keep its value.

Kernel persistence defines IPC objects that remain in existence until the kernel is rebooted or the object is deleted explicitly.

An IPC object with process persistence exists until the process that created the object closes it.

There are several types of IPCs, and they are listed in Table 7-1. Most of the IPCs work with related processes — child and parent processes. For processes that are not related and require Interprocess Communication, the IPC object has a name associated with it so that the server process that created it and the client processes can refer to the same object. Pipes are not named; therefore, they are used only between related processes. FIFO or named pipes can be used between unrelated processes. A pathname in the filesystem is used as the identifier for a FIFO IPC mechanism. A name space is the set of all possible names for a specified type of IPC mechanism. For IPCs that require a POSIX IPC name, that name must begin with a slash and contain no other slashes. To create the IPC, one must have write permissions for the directory.

Table 7.1. Table 7-1

Name space | Persistence | Process | |

|---|---|---|---|

Pipe | unnamed | process | Related |

FIFO | pathname | process | Both |

Mutex | unnamed | process | Related |

Condition variable | unnamed | process | Related |

Read-write locks | unnamed | process | Related |

Message queue | Posix IPC name | kernel | Both |

Semaphore (memory-based) | unnamed | process | Related |

Semaphore (named) | Posix IPC name | kernel | Both |

Shared memory | Posix IPC name | kernel | Both |

Table 7-1 also shows that each type of IPC (FIFO and pipes) has process persistence. Message queues and shared memory mst have kernel persistence, but may also use filesystem persistence. When message queues and shared memory utilize filesystem persistence, they are implemented by using a mapping a file to internal memory. This is called mapped files or memory mapped files. Once the file is mapped to memory that is shared between processes, the contents of the files are modified and read by using a memory location.

Parent processes share their resources with child processes. By using posix_spawn, or the exec functions, the parent process can create the child process with exact copies of its environment variables or initialize them with new values. Environment variables store system-dependent information such as paths to directories that contain commands, libraries, functions, and procedures used by a process. They can be used to transmit useful user-defined information between the parent and the child processes. They provide a mechanism to pass specific information to a related process without having it hardcoded in the program code. System environment variables are common and predefined to all shells and processes in that system. The variables are initialized by startup files.

The common environment variables are listed in Chapter 5.

Environment variables and command-line argument can also be passed to newly initialized processes.

int posix_spawn(pid_t *restrict pid, const char *restrict path,

const posix_spawn_file_actions_t *file_actions,

const posix_spawnattr_t *restrict attrp,

char *const argv[restrict], char *const envp[restrict]);argv[] and envp[] are used to pass a list of command-line argument and environment variables to the new process. This is one-way, one-time communication. Once the child process has been created, any changes to those variables by the child will not be reflected in the parent's data, and the parent cannot make any changes to the variables that are seen by its child processes.

Using files to transfer data between processes is one of the simplest and most flexible means of transferring or sharing data. Files can be used to transfer data between processes that are related or unrelated. They can allow processes that were not designed to work together to do so. Of course, files have filesystem persistence; in this case, the persistence can survive a system reboot.

When you use files to communicate between processes, you follow seven basic steps in the file-transferring process:

The name of the file has to be communicated.

You must verify the existence of the file.

Be sure that the correct permission are granted to access to the file.

Synchronize access to the file.

While reading/writing to the file, check to see if the stream is good and that it's not at the end of the file.

Close the file.

First, the name of the file has to be communicated between the processes. You might recall from Chapters 4 and 5 that files stored the work that had to be processed by the workers. Each file contained over a million strings. The posix_queue contained the names of the files. Filenames can also be passed to child processes by means of other IPC-like pipes.

When the process is accessing the file, if more than one process can also access the same file, you need synchronization. You might recall in Chapter 4 in Listing 4-2, that a file was broken up into smaller files, and each process had exclusive access to the file. However, if there was just one file, the access to the file would have to be synchronized. One process at a time would have exclusive read capability and would read a string from the file, advancing the read pointer. We discuss read/write locks and other types of synchronization later in the chapter.

Leaving the file open can lead to data corruption and can prevent other processes from accessing the file. The processes that read or write to or from the file should know the file's file format in order to correctly process the file. The file's format refers to the file type and the file's organization. The file's type also implies the type of data in the file. Is it a text file or a binary file? The processes should also know the file layout or how the data is organized in the file.

File descriptors are unsigned integers used by a process to identify an open file. They are shared between parent and child processes. They are indexes to the file descriptor table, a block maintained by the kernel for each process. When a child process is created, the descriptor table is copied for the child process, which allows the child process to have equal access to the files used by the parent. The number of file descriptors that can be allocated to a process is governed by a resource limit. The limit can be changed by setrlimit(). The file descriptor is returned by the open(). File descriptors are frequently used by other IPCs.

A block of shared memory can be used to transfer information between processes. The block of memory does not belong to any of the processes that are sharing the memory. Each process has its own address space; the block of memory is separate from the address space of the processes. A process gains access to the shared memory by temporarily connecting the shared memory block to its own memory block. Once the piece of memory is attached, it can be used like any other pointer to a block of memory. Like other data transfer mechanisms, shared memory is also set up with the appropriate access permission. It is almost as flexible as using a file to transfer data. If Processes A, B, and C were using a shared memory block, any modifications by any of the processes are visible to all the other processes. This is not a one-time, one-way communication mechanism.

Pipes require that at least two processes be connected before they can be used; shared memory can be written to and read by a single process and held open by that process. Other processes can attach to and detach from the shared memory as needed. This allows much larger blocks of data to be transferred faster than when you use pipes and FIFOs. However, it is important to consider how much memory to allocate to the shared region.

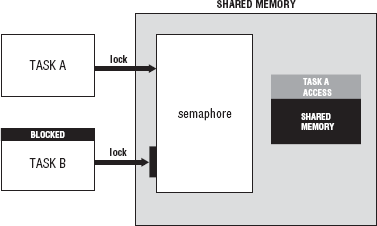

When you are accessing the data contained in the shared memory, synchronization is required. In the same way that file locking is necessary for multiple processes to attempt read/write access for the same file at the same time, access to shared memory must be regulated. Semaphores are the standard technique used for controlling access to shared memory. Controlled access is necessary because a data race can occur when two processes attempt to update the same piece of memory (or file for that matter) at the same time.

The shared memory maps:

a file

internal memory

to the shared memory region:

Synopsis

#include <sys/mman.h> void *mmap(void *addr, size_t len, int prot, int flags, int fd, off_t offset); int mumap(void *addr, size_t len);

The function maps len bytes starting at offset offset from the file or other object specified by the file descriptor fd into memory, preferably at address addr, which is usually specified as 0. The actual place where the object is mapped in memory is returned and is never 0. It is a void *. prot describes the desired memory protection. It must not conflict with the open mode of the file. flags specifies the type of mapped object. It can also specify mapping options and whether modifications made to the mapped copy of the page are private to the process or are to be shared with other references. Table 7-2 shows the possible values for prot and flags with a brief description. To remove the memory mapping from the address space of a process, use mumap().

Table 7.2. Table 7-2

Flag Arguments for mmap | Description |

|---|---|

prot | Describes the protection of the memory-based region. |

| Data can be read. |

| Data can be written. |

| Data can be executed. |

| Data is inaccessible. |

flags | Describes how the data can be used. |

| Changes are shared. |

| Changes are private. |

|

|

To create a shared memory, open a file and store the file descriptor; then call mmap() with the appropriate arguments and store the returning void *. Use a semaphore when accessing the variable. The void * may have to be type cast. depending on the data you are trying to manipulate:

fd = open(file_name,O_RDWR); ptr =casting<type>(mmap(NULL,sizeof(type),PROT_READ,MAP_SHARED,fd,0));

This is an example of memory mapping with a file. When using shared memory with internal memory, a function that creates a shared memory object is used instead of a function that opens a file:

Synopsis

#include <sys/mman.h> int shm_open(const char *name, int oflag, mode_t mode); int shm_unlink(const char *name);

The shm_open() creates and opens a new or opens an existing POSIX shared memory object. The function is very similar to open(). name specifies the shared memory object created and/or opened. To ensure the portability of name use an initial slash (/) and don't use embedded slashes. oflag is a bit mask created by ORing together one of these flags: O_RDONLY or O_RDWR and any of the other flags listed in Table 7-3, along with the possible values for mode with a brief description. shm_open() returns a new file descriptor referring to the shared memory object. The file descriptor is used in the function call to mmap().

fd = sh_open(memory_name,O_RDWR, MODE); ptr =casting<type>(mmap(NULL,sizeof(type),PROT_READ,MAP_SHARED,fd,0));

Now ptr can be used like any other pointer to data. Be sure to use semaphores between processes:

sem_wait(sem); ... *ptr; sem_post(sem);

Table 7.3. Table 7-3

Shared Memory Arguments | Description |

|---|---|

oflag | Describes how the shared memory will be opened. |

| Opens the object for read or write access. |

| Opens the object for read-only access. |

| Creates the shared memory if it does not exist. |

| Checks for the existence and creation of the object. If |

| If the shared memory object exists, then truncates it to zero bytes. |

mode | Specifies the permission. |

| User has read permission. |

| User has write permission. |

| Group has read permission. |

| Group has write permission. |

| Others have read permission. |

| Others have write permission. |

Pipes are communication channels used to transfer data between processes. Whereas data transfer using files generally does not require the sending and receiving of data to be active at the same time, data transfer using pipes includes processes that are active at the same time. Although there are exceptions, the general rule is that pipes are used between two or more active processes. One process (the writer) opens or creates the pipe and then blocks until another process (the reader) opens the same pipe for reading and writing.

There are two kinds of pipes:

Anonymous

Named (also called FIFO)

Anonymous pipes are used to transfer data between related processes (child and parent). Named pipes are used for communication between related or unrelated processes. Related processes created using fork() can use the anonymous pipes. Processes created using posix_spawn() use named pipes. Unrelated processes are created separately by programs. Unrelated processes can be logically related and work together to perform some task, but they are still unrelated. Named pipes are used by unrelated processes and related processes that refer to the pipe by the name associated with it. Named pipes are kernel objects. So, they reside in kernel space with kernel persistence (as far as the data), but the file structure has filesystem persistence until it is explicitly removed from the filesystem.

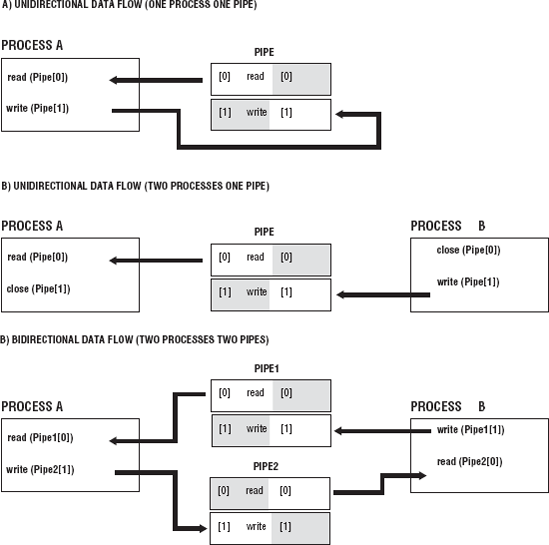

Pipes are created from one process, but they are rarely used in a single process. A pipe is a communication channel to a different process that is related or unrelated. Pipes create a flow of data from one end in one process (input end) to the other end that is in another process (output end). The data becomes a stream of bytes that flows in one direction through the pipe. Two pipes can be used to create bidirectional flow of communication between the processes. Figure 7-5 shows the various uses of pipes from a single process, from to two processes using a single pipe with a one direction flow of data from Process A to Process B, and then with a bidirectional flow of data between two processes that use two pipes.

Two pipes are used to create a bidirectional flow of data because of the way that pipes are set up. Each pipe has two ends, and each pipe has a one-way flow of data. So, one process uses the pipe as an input end (write data to pipe), and the other process uses the same pipe but uses the output end (read data from the pipe). Each process closes the end of the process it does not access, as shown in Figure 7-5.

Anonymous pipes are temporary and exist only while the process that created them has not terminated. Named pipes are special types of files and exist in the filesystem. They can remain after the process that created it has terminated unless the process explicitly removes them from the filesystem. A program that creates a named pipe can finish executing and leave the named pipe in the filesystem, but the data that was placed in the pipe will not be present. Future programs and processes can then access the named pipe later, writing new data to the pipe. In this way, a named pipe can be set up as a kind of permanent channel of communication. Named pipes have file permission settings associated with them, and anonymous pipes do not.

Names pipes are created with mkfifo():

Synposis

#include <sys/types.h> #include <sys/stat.h> int mkfifo(const char *pathname, mode_t mode); int unlink(const char *pathname);

mkfifo() creates a named pipe using pathname as the name of the FIFO with permission specified by mode. mode comprises the file permission bits. They are as listed previously in Table 7-3.

mkfifo() is created with O_CREAT | O_EXCL flags, which means it creates a new named pipe with the name specified if it does not exist. If is does exist, an error EEXIST is returned. So, if you want to open an already existing named pipe, call the function, and check for this error. If the error occurs then use open() instead of mkfifo().

The unlink() removes the filename pathname from the filesystem. The program in Listing 7-1 creates a named pipe with mkfifo.

Listings 7-1 and 7-2 are listings of programs that demonstrate how a named pipe can be used the transfer data from one process to another unrelated process. Listing 7-1 contains the program of the writer, and Listing 7-2 contains the program for the reader.

Example 7.1. Listing 7-1

// Listing 7-1 A program that creates a named pipe with mkfifo(). 1 using namespace std; 2 #include <iostream> 3 #include <fstream> 4 #include <sys/wait.h> 5 #include <sys/types.h> 6 #include <sys/stat.h> 7

8 int main(int argc,char *argv[],char *envp[])

9 {

10

11 fstream Pipe;

12

13 if(mkfifo("Channel-one",S_IRUSR | S_IWUSR

14 | S_IRGRP

15 | S_IWGRP) == −1){

16 cerr << "could not make fifo" << endl;

17 }

18

19 Pipe.open("Channel-one",ios::out);

20 if(Pipe.bad()){

21 cerr << "could not open fifo" << endl;

22 }

23 else{

24 Pipe << "2 3 4 5 6 7 " << endl;

25

26 }

27

28 return(0);

29 }The program in Listing 7-1 creates a named pipe with the mkfifo() system call. The program then opens the pipe with an fstream object called Pipe in Line 19. Notice that Pipe has been opened for output using the ios::out flag. If the Pipe is not in a bad() state after the call to open, then the Pipe is ready for writing data to Channel-one. Although Pipe is ready for input, it blocks (waits) until another process has opened Channel-one for reading. When using the iostreams with pipes, it is important to remember that either the writer or reader must be opened for both input and output using ios::in | ios::out. Opening either the reader or the writer in this manner will prevent deadlock. In this case, we open the reader (Listing 7-2) for both. The program in this listing is called a reader because it reads the information from the pipe. The writer then writes a line of input to Pipe.

Example 7.2. Listing 7-2

// Listing 7-2 A program that reads from a named pipe.

1 using namespace std;

2 #include <iostream>

3 #include <fstream>

4 #include <string>

5 #include <sys/wait.h>

6 #include <sys/types.h>

7 #include <sys/stat.h>

8

9

10 int main(int argc, char *argv[])

11 {

12 int type;

13 fstream NamedPipe;

14 string Input;

15

16 NamedPipe.open("Channel-one",ios::in | ios::out);17

18 if(NamedPipe.bad()){

19 cerr << "could not open Channel-one" << endl;

20 }

21

22 while(!NamedPipe.eof() && NamedPipe.good()){

23

24 getline(NamedPipe,Input);

25 cout << Input << endl;

26 }

27 NamedPipe.close();

28 unlink("Channel-one");

29 return(0);

30

31 }The program in Listing 7-1 uses the << operator to write data into the pipe. Here the reader also has to open the pipe by using an fstream open, using the name of the named pipe Channel-one, and opening the pipe for input and output in Line 16. If the NamedPipe is not in a bad state after opening, then the data is read from the NamedPipe while NamedPipe is not eof() and is still good. The data is read from the pipe and stored in a string Input that is sent to cout. NamedPiped is then closed and the pipe is unlinked. These are unrelated processes. To run, each is launched separately.

Here is Program Profile 7-1 for Listings 7-1 and 7-2.

Listing 7-1 creates a pipe and opens the pipe with a fstream object. Before it writes a string to the pipe, it waits until another process opens the pipe for reading (Listing 7-2). Once the reader process opens the pipe for reading, the writer program writes a string to the pipe, closes the pipe, and then exits. The reader pipe reads the string from the pipe and displays the string to standard out. Run the reader and then the writer.

Run each program in separate terminals.

The use of fstream simplifies the IPC making the named pipe easier to access. All of the functionality of streams comes into play for this example:

mkfifo("Channel-one",...);

vector<int> X( 2,3,4,5,6,7);

ofstream OPipe("Channel-one",ios::out);

ostream_iterator<int> Optr(OPipe,"

");

copy(X.begin(),X.end(),Optr);Here a vector is used to hold all the data. An ofstream object is used this time instead of an fstream object to open the named pipe. An ostream iterator is declared and points to the named pipe (Channel-one). Now instead of successive insertion in a loop, the copy algorithm can copy all the data to the pipe. This is a convenience if have hundreds, thousands, or even more numbers to write to the pipe.

Besides simplifying the use of IPC mechanisms by using iostreams, iterators, and algorithm, you can also simplify use by encapsulating the FIFO into an FIFO interface class. Remember that in doing so you are modeling a FIFO structure. The FIFO class is a model for the communication between two or more processes. It transmits some form of information between them. The information is translated into a sequence of data, inserted into the pipe, and then retrieved by a process on the other side of the pipe. The data is then reassembled by the retrieving process. There must be somewhere for the data to be stored while it is in transit from process A to process B. This storage area for the data is called a buffer. Insertion and extraction operations are used to place the data into or extract the data from this buffer. Before performing insertions or extractions into or from the data buffer, the data buffer must exist. Once communication has been completed, the data buffer is no longer needed. So, your model must be able to remove the data buffer when it is no longer necessary. As indicated, a pipe has two ends, one end for inserting data and the other end for extracting data, and these ends can be accessed from different processes. Therefore the model should also include an input port and an output port that can be connected to separate processes. Here are the basic components of the FIFO model:

Input/output port

Insertion and extraction operation

Creation/initialization operation

Buffer creation, insertion, extraction, destruction

For this example, there are only two processes involved in communication. But if there are multiple processes that can read/write to/ from the named pipe stream, synchronization is required. So, this class also requires a mutex object. Example 7-1 shows the beginnings of the FIFO class:

Example 7.1. Example 7-1

// Example 7-1 Declaration of fifo class.

class fifo{

mutex Mutex;

//...

protected:

string Name;

public:

fifo &operator<<(fifo &In, int X);

fifo &operator<<(fifo &In, char X);

fifo &operator>>(fifo &Out, float X);

//...

};Using this technique, you can easily create fifos in the constructor. You can pass them easily as parameters and return values. You can use them in conjunction with the standard container classes and so on. The construction of such a component greatly reduces the amount of code needed to use FIFOs, provides opportunities for type safety, and generally allows the programmer to work at a higher level.

A message queue is a linked list of strings or messages. This IPC mechanism allows processes with the adequate permissions to the queue to write or remove messages. The sender of the message assigns a priority to it. Message queues do not require more than one process to be used. With a FIFO, the writer process blocks and cannot write to the pipe until there is a process that opens it for reading. With a message queue, a writer process can write to the message queue and then terminate. The data is retained in the queue. Some other process later can read or write to it. The message queue has kernel persistence. When reading a message from the queue, the oldest message with the highest priority is returned. Each message in the queue has these attributes:

A priority

The length of the message

The message or data

With a linked list the head of the list has the maximum number of messages allowed in the queue and the maximum size allowed for a message.

The message queue is created with mq_open():

Synopsis

#include <mqueue.h>

mqd_t mq_open(const char *name, int oflag,mode_t mode,

struct mq_attr *attr);

int mq_close(mqd_t mqdes);

int mq_unlink(const char *name);mq_open() creates a message queue with the specified name. The message queue uses oflag with these possible values to specify the access modes:

O_RDONLY: Open to receive messagesO_WRONLY: Open to send messagesO_RDWR: Open to send or received messages

These flags can be ORred with the following:

O_CREAT: Create a message queue.O_EXCL: If ORred with previous flag, function fails if the pathname already exists.O_NONBLOCK: Determines if queue waits for resources or messages that are not currently available.

The function returns a message queue descriptor of type mq_dt.

The last parameter is a struct mq_attr *attr. This is an attribute structure that describes the properties of the message queue:

struct mq_attr {

long mq_flags; //flags

long mq_maxmsg; //maximum number of messages allowed

long mq_msgsize; //maximum size of message

long mq_curmsgs; //number of messages currently in queue

}mq_close() closes the message queue, but the message queue still exists in the kernel. However, the calling function can no longer use the descriptor. If the process terminates, all message queues associated with the process also close. The data is still retained in the queue.

unlink() removes the message queue specified by name from the system. The number of references to the message queue is tracked, but the queue name can still be removed from the system even if the count is greater than 0. The queue is not destroyed until all processes that utilized the queue have closed or called mq_close().

There are two functions to set and return the attribute object, as shown in the following code synopsis:

Synopsis

#include <mqueue.h> int mq_getattr(mqd_t mqdes,struct mq_attr *attr); int mq_setattr(mqd_t mqdes,struct mq_attr *attr,struct mq_attr *oattr);

When you are setting the attribute with mq_setattr, only the mq_flags are set in the attr structure. Other attributes are not affected. mq_maxmsg and mq_msgsize are set when the message queue is created. mq_curmsg can be returned and not set. oattr contains the previous values for the attributes.

To send or write a message to the queue, use these functions:

Synopsis

#include <mqueue.h>

int mq_send(mqd_t mqdes, const char *ptr, size_t len,

unsigned int prio);

ssize_t mq_receive(mqd_t mqdes, const char *ptr, size_t len,

unsigned int priop);For mq_receive(), the len must be at least the maximum size of the message. The returned message is stored in *ptr.

posix_queue is a simple class that models some of the functionality of a message queue. It encapsulates the basic functions and the message queue attributes. Listing 7-3 shows the declaration of the posix_queue class.

Example 7.3. Listing 7-3

// Listing 7-3 Declaration of the posix_queue class. 1 #ifndef __POSIX_QUEUE 2 #define __POSIX_QUEUE 3 using namespace std; 4 #include <string> 5 #include <mqueue.h> 6 #include <errno.h> 7 #include <iostream> 8 #include <sstream>

9 #include <sys/stat.h>

10

11

12 class posix_queue{

13 protected:

14 mqd_t PosixQueue;

15 mode_t OMode;

16 int QueueFlags;

17 string QueueName;

18 struct mq_attr QueueAttr;

19 int QueuePriority;

20 int MaximumNoMessages;

21 int MessageSize;

22 int ReceivedBytes;

23 void setQueueAttr(void);

24 public:

25 posix_queue(void);

26 posix_queue(string QName);

27 posix_queue(string QName,int MaxMsg, int MsgSize);

28 ~posix_queue(void);

29

30 mode_t openMode(void);

31 void openMode(mode_t OPmode);

32

33 int queueFlags(void);

34 void queueFlags(int X);

35

36 int queuePriority(void);

37 void queuePriority(int X);

38

39 int maxMessages(void);

40 void maxMessages(int X);

41 int messageSize(void);

42 void messageSize(int X);

43

44 void queueName(string X);

45 string queueName(void);

46

47 bool open(void);

48 int send(string Msg);

49 int receive(string &Msg);

50 int remove(void);

51 int close(void);

52

53

54 };

55

#endifThe basic functions performed by a message queue are encapsulated in the posix_queue class:

47 bool open(void); 48 int send(string Msg); 49 int receive(string &Msg); 50 int remove(void); 51 int close(void);

We have discussed what each these functions does already. Examples 7-2 through 7-6 show the definitions of these methods. Example 7-2 is the definition of open():

Example 7.2. Example 7-2

// Example 7-2 The definition of open().

122 bool posix_queue::open(void)

123 {

124 bool Success = true;

125 int RetCode;

126 PosixQueue = mq_open(QueueName.c_str(),QueueFlags,OMode,&QueueAttr);

127 if(errno == EACCES){

128 cerr << "Permission denied to created " << QueueName << endl;

129 Success = false;

130 }

131 RetCode = mq_getattr(PosixQueue,&QueueAttr);

132 if(errno == EBADF){

133 cerr << "PosixQueue is not a valid message descriptor" << endl;

134 Success = false;

135 close();

136

137 }

138 if(RetCode == −1){

139 cerr << "unknown error in mq_getattr() " << endl;

140 Success = false;

141 close();

142 }

143 return(Success);

144 }After the call to mq_open() is made in Line #126, errno is checked to see if message queue failed to open because a message queue by the name QueueName.c_str() already exists. If it does, then the call is not successful. bool Success is returned with a value of false.

In Line 131, the queue attribute structure is returned by mq_getattr(). To ensure that the message queue was opened and successfully initialized its attribute, errno is checked again in Line 132. EBADF means the descriptor was not a valid message queue descriptor. The return code is checked in Line 138.

Example 7-3 is the definition of send().

Example 7.3. Example 7-3

// Example 7-3 The definition of send().

146 int posix_queue::send(string Msg)

147 {

148

149 int StatusCode = 0;

150 if(Msg.size() > QueueAttr.mq_msgsize){

151 cerr << "message to be sent is larger than max queue

message size " << endl;

152 StatusCode = −1;

153 }

154 StatusCode = mq_send(PosixQueue,Msg.c_str(),Msg.size(),0);

155 if(errno == EAGAIN){

156 StatusCode = errno;

157 cerr << "O_NONBLOCK not set and the queue is full " << endl;

158 }

159 if(errno == EBADF){

160 StatusCode = errno;

161 cerr << "PosixQueue is not a valid descriptor open for

writing" << endl;

162 }

163 if(errno == EINVAL){

164 StatusCode = errno;

165 cerr << "msgprio is out side of the priority range for the

message queue or " << endl;

166 cerr << "Thread my block causing a timing conflict with

time out" << endl;

167 }

168

169 if(errno == EMSGSIZE){

170 StatusCode = errno;

171 cerr << "message size exceeds maximum size of message

parameter on message queue" << endl;

172

173 }

174 if(errno == ETIMEDOUT){

175 StatusCode = errno;

176 cerr << "The O_NONBlock flag was not set, but the time expired

before the message " << endl;

177 cerr << "could be added to the queue " << endl;

178 }

179 if(StatusCode == −1){

180 cerr << "unknown error in mq_send() " << endl;

181 }

182 return(StatusCode);

183

184 }In Line 150, the message is checked to ensure that its size does not exceed the allowable size for a message. In Line 154 the call to mq_send() is made. All other code checks for errors.

Example 7-4 is the definition of receive().

Example 7.4. Example 7-4

//Example 7-4 The definition of receive().

187 int posix_queue::receive(string &Msg)

188 {

189

190 int StatusCode = 0;

191 char QueueBuffer[QueueAttr.mq_msgsize];

192 ReceivedBytes = mq_receive(PosixQueue,QueueBuffer,

QueueAttr.mq_msgsize,NULL);

193 if(errno == EAGAIN){

194 StatusCode = errno;

195 cerr << "O_NONBLOCK not set and the queue is full " << endl;

196

197 }

198 if(errno == EBADF){

199 StatusCode = errno;

200 cerr << "PosixQueue is not a valid descriptor open for writing"

<< endl;

201 }

202 if(errno == EINVAL){

203 StatusCode = errno;

204 cerr << "msgprio is out side of the priority range for the message

queue or " << endl;

205 cerr << "Thread my block causing a timing conflict with time out"

<< endl;

206 }

207 if(errno == EMSGSIZE){

208 StatusCode = errno;

209 cerr << "message size exceeds maximum size of message parameter on

message queue" << endl;

210 }

211 if (errno == ETIMEDOUT){

212 StatusCode = errno;

213 cerr << "The O_NONBlock flag was not set, but the time expired

before the message " << endl;

214 cerr << "could be added to the queue " << endl;

215 }

216 string XMessage(QueueBuffer,QueueAttr.mq_msgsize);

217 Msg = XMessage;

218 return(StatusCode);

219

220 }In Line 191, a buffer QueueBuffer with the maximum size of a message is created. mq_receive() is called. The message returned is stored in QueueBuffer, and the number of bytes is returned and stored in ReceivedBytes. In Line 216, the message is extracted from QueueBuffer and assigned to the string in Line 217.

In Example 7-5 is the definition for remove().

Example 7.5. Example 7-5

//Example 7-5 The definition for remove().

221 int posix_queue::remove(void)

222 {

223 int StatusCode = 0;

224 StatusCode = mq_unlink(QueueName.c_str());

225 if(StatusCode != 0){

226 cerr << "Did not unlink " << QueueName << endl;

227 }

228 return(StatusCode);

229 }

230In Line 224, mq_unlink() is called to remove the message queue from the system.

Example 7-6 provides the definition for close().

Example 7.6. Example 7-6

//Example 7-6 The definition for close().

231 int posix_queue::close(void)

232 {

233

234 int StatusCode = 0;

235 StatusCode = mq_close(PosixQueue);

236 if(errno == EBADF){

237 StatusCode = errno;

238 cerr << "PosixQueue is not a valid descriptor open for

writing" << endl;

239 }

240 if(StatusCode == −1){

241 cerr << "unknown error in mq_close() " << endl;

242 }

243 return(StatusCode);

244

245 }In Line 235, mq_close() is called to close the message queue.

Return briefly to Listing 7-3, notice that the bolded methods from Examples 7-2 through 7-6 encapsulate the message queue's attributes to set and return the properties of the message queue:

33 int queueFlags(void); 34 void queueFlags(int X); 35 36 int queuePriority(void); 37 void queuePriority(int X); 38

39 int maxMessages(void); 40 void maxMessages(int X); 41 int messageSize(void); 42 void messageSize(int X);

Some of these attributes are can also be set in the constructor. There are three constructors in Listing 7-3:

25 posix_queue(void); 26 posix_queue(string QName); 27 posix_queue(string QName,int MaxMsg, int MsgSize);

At Line 25 is the default constructor. Example 7-7 shows the definition.

Example 7.7. Example 7-7

// Example 7-7 The definition of the default constructor.

4 posix_queue::posix_queue(void)

5 {

6

7

8 QueueFlags = O_RDWR | O_CREAT | O_EXCL;

9 OMode = S_IRUSR | S_IWUSR;

10 QueueName.assign("none");

11 QueuePriority = 0;

12 MaximumNoMessages = 10;

13 MessageSize = 8192;

14 ReceivedBytes = 0;

15 setQueueAttr();

16

17

18 }This posix_queue class is a simple model of the message queue. All functionality has not been included here, but you can see a message queue class makes the message queue easier to use. The posix_queue class performs error checking for all of the major functions of the IPC mechanism. What should be added is the mq_notify function. With notification signaling, a process is signaled when the empty message queue has a message. This class does not have synchronization capabilities. If multiple processes want to use the posix_queue to write to it, a built-in mutex should be implemented and used when messages are sent or received.

We have discussed the different mechanisms defined by POSIX to perform communication between processes, related and unrelated. We have discussed where those IPC reside and their persistence. Because threads reside in the address space of their process, it is safe and logical to assume that communication between threads would not be difficult or require the use of special mechanisms just for communication. That is true. The most important issue that has to be dealt with when peer threads require communication with each other is synchronization. Data races and indefinite postponement are likely when performing Interthread Communication (ITC).

Communication between threads is used to:

Share data

Send a message

Multiple threads share data in order to streamline processing performed concurrently. Each thread can perform different processing or the same processing on data streams. The data can be modified, or new data can be created as a result, which in turn is shared. Messages can also be communicated. For example, if an event happens in one thread, this could trigger another event in another thread. Threads may communicate a signal to other peer threads, or the main thread may signal the worker threads.

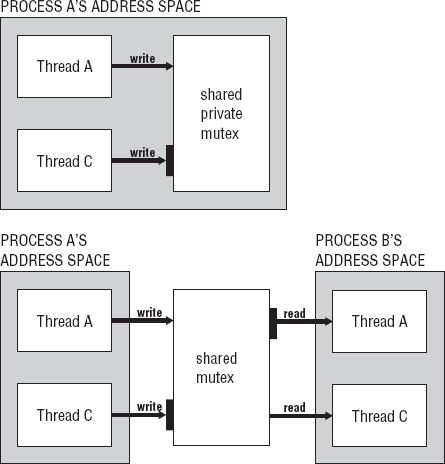

When two processes need to communicate, they use a structure that is external to both processes. When two threads communicate, they typically use structures that are part of the same process to which they both or all belong. Threads cannot communicate with threads outside their process unless you are referring to primary threads of processes. In that case, you refer to them as two processes. Threads within a process can pass values from the data segment of the process or stack segments of each thread.

In most cases, the cost of Interprocess Communication is higher than Interthread Communication. The external structures that must be created by the operating system during IPC require more system processing than the structures involved in ITC. The efficiency of ITC mechanisms makes threads a more attractive alternative in many, but not all programming scenarios that require concurrency.

We discussed some of the issues and drawbacks of using threads as compared to processes in Chapter 5.

Table 7-4 lists the basic Interthread Communications with a brief description.

Table 7.4. Table 7-4

Types of ITC | Description |

|---|---|

Declared outside of the main function or have global scope. Any modifications to the data are instantly accessible to all peer threads. | |

Parameters | Parameters passed to threads during creation. The generic pointer can be converted any data type. |

File handles | Files shared between threads. These threads share the same read-write pointer and offset of the file. |

An important advantage that threads have over processes is that threads can share global data, variables, and data structures. All threads in the process can access them equally. If any thread changes the data, the change is instantly available to all peer threads. For example, take three threads, ThreadA, ThreadB, and ThreadC. ThreadA makes a calculation and stores the results in a global variable Answer. ThreadB reads Answer, performs its calculation on it, and then stores its results in Answer. Then Thread C does the same thing. The final answer is to be displayed by the main thread. This example is shown in Listings 7-4, 7-5, and 7-6.

Example 7.4. Listing 7-4

// Listing 7-4 thread_tasks.h. 1 2 void *task1(void *X); 3 void *task2(void *X); 4 void *task3(void *X); 5

Example 7.5. Listing 7-5

// Listing 7-5 thread_tasks.cc.

1 extern int Answer;

2

3 void *task1(void *X)

4 {

5 Answer = Answer * 32;

6 }

7

8 void *task2(void *X)

9 {

10 Answer = Answer / 2;

11 }

12

13 void *task3(void *X)

14 {

15 Answer = Answer + 5;

16 }Example 7.6. Listing 7-6

// Listing 7-6 main thread.

1 using namespace std;

2 #include <iostream>

3 #include <pthread.h>

4 #include "thread_tasks.h"

5

6 int Answer = 10;

7

8

9 int main(int argc, char *argv[])

10 {

11

12 pthread_t ThreadA, ThreadB, ThreadC;

13

14 cout << "Answer = " << Answer << endl;

15

16 pthread_create(&ThreadA,NULL,task1,NULL);

17 pthread_create(&ThreadB,NULL,task2,NULL);

18 pthread_create(&ThreadC,NULL,task3,NULL);

1920 pthread_join(ThreadA,NULL); 21 pthread_join(ThreadB,NULL); 22 pthread_join(ThreadC,NULL); 23 24 cout << "Answer = " << Answer << endl; 25 26 return(0); 27 28 }

In these listings, the tasks that the threads will execute are defined in a separate file. Answer is the global data declared in the main line in the file program7-6.cc. It is out of scope for use by the tasks that are in defined in thread_tasks.cc. It is declared as extern, so it can have global scope. If the threads are to process the data in the way described earlier — ThreadA, ThreadB, and then ThreadC perform their calculations — it requires synchronization. It is not guaranteed that the correct answer, 165, will be returned if ThreadA and ThreadB have other work they have to do first. The threads are transferring data from one thread to another. With, say, two multicores, ThreadA and ThreadB can be executing. ThreadA works for a time slice, and then ThreadC is given the processor. When ThreadA is preempted, it may still not execute its calculation on Answer. If ThreadC finished when it was preempted, the value of Answer would be 15. Then ThreadB finishes; the Answer is 7. Then ThreadA does its calculation; Answer is 224 not 165. Although a pipeline model of data communication is what was desired, there is no synchronization in place for it to be executed.

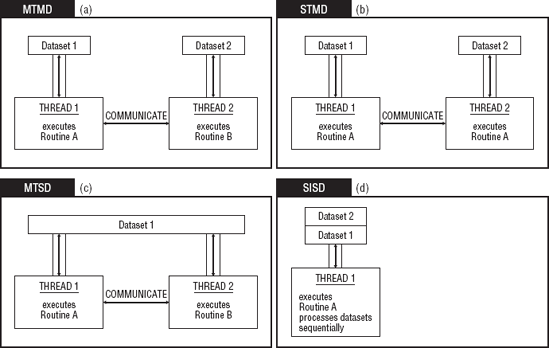

Threads can also share data structures in the same way that the variable was used. IPC supports only a limited set of data structures that can be used (for example, a message queue); in contrast, any type of global set, map, or so forth or any other collection or container class can be used to accomplish ITC. For example, threads can share a set. With a set, membership, intersection, union, and so forth, operations can be performed by different threads using Multiple Instruction Single Data (MISD) or Single Instruction Single Data (SISD) memory access models. The coding that it would take to implement a set container that could be used as an IPC mechanism is prohibitive.

Here is Program Profile 7-2 for Listings 7-4, 7-5, and 7-6.

For this program, there is a global variable Answer declared in the main line in the file program7-6.cc. It is declared as extern, so it can have global scope in thread_tasks.cc. Answer is to be processed by ThreadA, ThreadB, and then ThreadC. They are to perform their calculations, which requires synchronization. The correct answer is 165. Although a pipeline model of data communication is what was desired, there is no synchronization in place for it to be executed.

Parameters to threads can be used for communication between threads or between the primary thread and peer threads. The thread creation API supports thread parameters. The parameter is in the form of a void pointer:

int pthread_create(pthread_t *threadID,const pthread_attr_t *attr,

void *(*start_routine)(void*),

void *restrict parameter);The void pointer in C++ is a generic pointer and can be used to point to any data type. The value of parameter passes values as simple as a char * or a complex as a pointer to a container or user-defined object. In the program in Listing 7-7 and Listing 7-8, we use two queues of strings as global data structures. One thread uses the queue as an output queue, and another thread uses that same queue as a data stream for input and then writes to the second global queue of strings.

Example 7.7. Listing 7-7

// Listing 7-7 Thread tasks that use two global data structures. 1 using namespace std; 2 #include <queue> 3 #include <string> 4 #include <iostream> 5 6 extern queue<string> SourceText; 7 extern queue<string> FilteredText; 8 9 void *task1(void *X)

10 {

11 char Token = '?';

12

13 queue<string> *Input;

14

15

16 Input = static_cast<queue<string> *>(X);

17 string Str;

18 string FilteredString;

19 string::iterator NewEnd;

20

21 for(int Count = 0;Count < 16;Count++)

22 {

23 Str = Input->front();

24 Input->pop();

25 NewEnd = remove(Str.begin(),Str.end(),Token);

26 FilteredString.assign(Str.begin(),NewEnd);

27 SourceText.push(FilteredString);

28

29 }

30

31

32 }

33

34

35 void *task2(void *X)

36 {

37 char Token = '.';

38

39 string Str;

40 string FilteredString;

41 string::iterator NewEnd;

42

43 for(int Count = 0;Count < 16;Count++)

44 {

45 Str = SourceText.front();

46 SourceText.pop();

47 NewEnd = remove(Str.begin(),Str.end(),Token);

48 FilteredString.assign(Str.begin(),NewEnd);

49 FilteredText.push(FilteredString);

50

51

52 }

53

54 }These tasks filter a string of text. task1 removes the (?) from a string and task2 removes a (.) from a string. task1 accepts a queue that serves as the container of strings to be filtered. The void * is type cast to a pointer to a queue of strings in Line #16. task2 does not require a queue for input. It uses the global queue SourceText that is populated by task1. Inside their loops, a string is removed from the queue, the token is removed, and the new string is pushed onto the global queues. For task1 the queue string is SourceText and for task2, the queue string is FilteredText. Both queues are declared extern in Lines 6 and 7.

Example 7.8. Listing 7-8

// Listing 7-8 Main thread declares two global data structures.

1 using namespace std;

2 #include <iostream>

3 #include <pthread.h>

4 #include "thread_tasks.h"

5 #include <queue>

6 #include <fstream>

7 #include <string>

8

9

10

11

12 queue<string> FilteredText;

13 queue<string> SourceText;

14

15 int main(int argc, char *argv[])

16 {

17

18 ifstream Infile;

19 queue<string> QText;

20 string Str;

21 int Size = 0;

22

23

24 pthread_t ThreadA, ThreadB;

25

26 Infile.open("book_text.txt");

27 for(int Count = 0;Count < 16;Count++)

28 {

29 getline(Infile,Str);

30 QText.push(Str);

31

32 }

33

34 pthread_create(&ThreadA,NULL,task1,&QText);

35 pthread_join(ThreadA,NULL);

36

37 pthread_create(&ThreadB,NULL,task2,NULL);

38 pthread_join(ThreadB,NULL);

39

40 Size = FilteredText.size();

41

42 for(int Count = 0;Count < Size;Count++)

43 {

44 cout << FilteredText.front() << endl;

45 FilteredText.pop();

46

47 }

48

49 Infile.close();50 51 return(0); 52 53 }

The program in Listing 7-8 shows the code for the main thread. It declares the two global queues on Line 12 and Line 13. The strings are read in from a file into string queue QText. This is the data source queue for ThreadA in Line 34. The main thread then calls a join on ThreadA and waits for it to return. When ThreadA returns, ThreadB uses the global queue SourceText just populated by task1. When ThreadB returns, the strings in the global queue FilteredText are sent to cout by the main thread. By the main thread calling join in this way these threads are not executed concurrently. The main thread does not create ThreadB until ThreadA returns. If the threads were created one after the other, they would be executed concurrently. The threat of a core dump looms. If ThreadB starts its execution before ThreadA populates its source queue, then ThreadB attempts to pop an empty queue. The size of the queue could be checked before attempting to read it. But you want to take advantage of the multicore in doing this processing. Here you have a few strings in a queue and all you want to do is remove a single token. But if you scale this problem to thousands of string and many tokens to be removed, you realize that another approach has to exist. Again, you do not want to go to a serial solution. Access to the queue can be synchronized but filtering all the strings at once can also be parallelized. We will revisit the problem and present a better solution later in this chapter.

Here is Program Profile 7-3 for Listings 7-7 and 7-8.

The program in Listing 7-8 shows the code for the main thread. It declares the two global queues used for input and output. The strings are read in from a file into string queue QText, the data source queue for ThreadA. The main thread then calls a join on ThreadA and waits for it to return. When ThreadA returns, ThreadB uses the global queue SourceText just populated by ThreadA. When ThreadB returns, the strings in the global queue FilteredText are sent to cout by the main thread.

None

With processes, command-line arguments are passed by using the exec family of functions or posix_spawn, as discussed in Chapter 5. The command-line arguments are restricted to simple data types such as numbers and characters. The parameters passed to processes are one-way communication. The child process simply copies the parameter values. Any modifications made to the data will not be reflected in the parent. With threads, the parameter is not a copy but an address to some data location. Any modification made by the thread to that data can be seen by any thread that uses it.

But this type of transparency may not be what you desire. A thread can keep its own copy of data passed to it. It can copy it to its stack, but what's on its stack will come and go. A thread can be called several times performing the same task over and over again. By using thread-specific data, data can be associated with a thread and made private and persistent.

Sharing files between multiple threads as a form of ITC requires the same caution as using global variables. If Thread A moves the file pointer, then Thread B accesses the file at that location. What if one thread closes the files and another thread attempts to write to the file — what happens? Can a thread read from the file while another thread writes to it? Can multiple threads write to the file? Care must be taken to serialize or synchronize access to files within a multithreaded environment. Since threads can share actual read-write pointers, cooperation techniques must be used.

In any computer system, the resources are limited. There is only so much memory, and there are only so many I/O devices and ports, hardware interrupts, and, yes, even processors cores to go around. The number of I/O devices is usually restricted by the number of I/O ports and the hardware interrupts that a system has. In an environment of limited hardware resources, an application consisting of multiple processes and threads must compete for memory locations, peripheral devices, and processor time. Some threads and processes will be working together intimately using the system's limited sharable resources to perform a task and achieve a goal while other threads and processes work asynchronously and independently competing for those same sharable resources. It is the operating system's job to determine when the process or thread utilizes system resources and for how long. With preemptive scheduling, the operating system can interrupt the process or thread in order to accommodate all the processes and threads competing for the system resources. There are software resources and hardware resources. An example of software resources is a shared library that provides a common set of services or functions to processes and threads. Other sharable software resources are:

To share software resources requires only one copy of the program(s) code to be brought into memory. Data resources are objects, system data files (for example, environment variables), globally defined variables, and data structures. In the last section, we discussed data resources that are used for data communication. It is possible for processes and threads to have their own copy of shared data resources. In other cases, it is desirable, and maybe necessary, that data is shared. Sharing data can be tricky and may lead to race conditions (modifying data simultaneously) or data not being where it should when it is needed. Even attempting to synchronize access to resources can cause problems if this is not properly executed or if the wrong IPC or ITC mechanism is used. This can cause indefinite postponement or deadlock. Synchronization allows multiple threads and processes to be active at the same time while sharing resources without interfering with each other's operation. The synchronization process temporarily serializes (in some cases) execution of the multiple tasks to prevent problems. Serialization occurs if one-at-a-time access has to be granted to hardware or software resources. But too much serialization defeats the advantages of concurrency and parallelism. Then cores sit idle. Serialization is used as the last approach if nothing else can be done. Coordination is the key.

We talked about the resources of the system that are shared, hardware and software resources. These are the entities in a system that require synchronization. What also should be included are tasks, which should also be synchronized. You saw evidence of this in the program in Listing 7-7 and 7-8. task1 had to execute and complete before task2 could begin. Therefore, there are three major categories of synchronization:

Data

Hardware

Task

Table 7-5 summarizes each type of synchronization.

Table 7.5. Table 7-5

Types of synchronization | Description |

|---|---|

Data | Necessary to prevent race conditions. It allows concurrent threads/processes to access a block of memory safely. |

Hardware | Necessary when several hardware devices are needed to perform a task or group of tasks. It requires communication between tasks and tight control over real-time performance and priority settings. |

Task | Necessary to prevent race conditions. It enforces preconditions and postconditions of logical processes. |

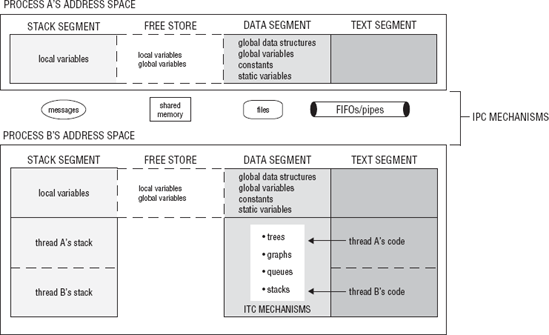

In this chapter thus far, we have discussed IPC and ITC. As we have discussed, the difference between data shared between processes and data shared between threads is that threads share the same address space and processes have separate address spaces. IPC exists outside the address space of the processes involved in the communication, in the kernel space or in the filesystem. Shared memory maps a structure to a block of memory that is accessible to the processes. ITC are global variables and data structures. It is the IPC and ITC mechanisms that require synchronization. Figure 7-6 shows where the IPC and ITC mechanisms exist in the layout of a process.

Data synchronization is needed in order to control race conditions and allow concurrent threads or processes to safely access a block of memory. Data synchronization controls when a block of memory can be read or modified. Concurrent access to shared memory, global variables, and files must be synchronized in a multithreaded environment. Data synchronization is needed at the location in a task's code when it attempts to access the block of memory, global variable, or file shared with other concurrently executing processes or threads. This is called the critical section. The critical section can be any block of code that changes the writes or reads to/from a file, closes a file, reads or writes global variables or data structures.

Critical sections are an area or block of code that accesses a shared resource that must be controlled because the resource is being shared by multiple concurrent tasks. Critical sections are marked by an entry point and an exit point. The actual code in the critical section can be one line of code where the thread/process is reading or writing to memory or a file. It can also be several lines of code where processing and calls to other methods involve the shared data. The entry point marks your entering the critical section and an exit point marks your leaving the critical section.

entry point(synchronization starts here) - - - -critical section - - - -access file, variable or other resource- - - -critical section - - - -exit point(synchronization ends here)

In order to solve the problems caused by multiple concurrent tasks sharing a resource, three conditions should be met:

If a task is in its critical section, other tasks sharing the resource cannot be executing in their critical section. They are blocked. This is called mutual exclusion.

If no tasks are in their critical section, then any blocked tasks can now enter their critical section. This is called progress.

There should be a bounded wait as to the number of times that a task is allowed to reenter its critical sections. A task that keeps entering its critical sections may prevent other tasks from attempting to enter theirs. A task cannot reenter its critical sections if other tasks are waiting in a queue.

These synchronization techniques are what are used to manage critical sections. It is important to determine the how these concurrently executing tasks are using the shared data. Are they writing to the data while others are reading? Are all reading from it? Are all writing to it? How they are sharing the shared data helps determine what type of synchronization is needed and how it should be implemented. Remember applying synchronization incorrectly can also cause problems like deadlock, data race conditions, and so forth.

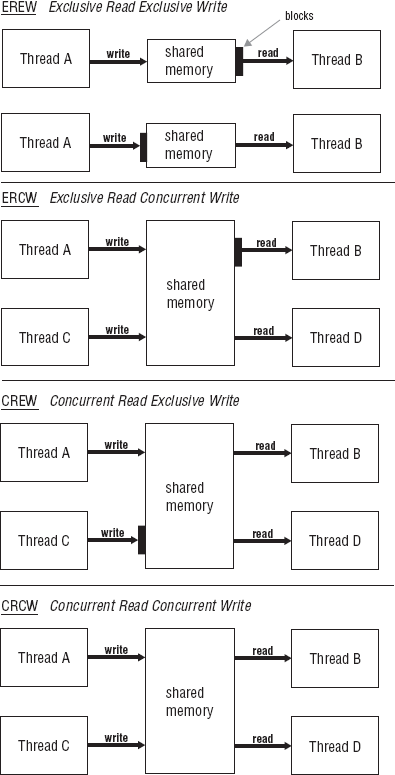

The Parallel Random-Access Machine (PRAM) is a simplified theoretical model in which there are N processors labeled P1, P2, P3, ... PN that share one global memory. All the processors have simultaneous read and write access to shared global memory. Each of these theoretical processors can access the global shared memory in one uninterruptible unit of time. The PRAM model has four algorithms that can be used to access the shared global memory, concurrent read and write algorithms, and exclusive read and write algorithms that work like this:

Concurrent read algorithms are allowed to read the same piece of memory simultaneously with no data corruption.

Concurrent write algorithms allow multiple processors to write to the shared memory.

Exclusive read algorithms are used to ensure that no two processors ever read the same memory location at the same time.

Exclusive write ensures that no two processors write to the same memory at the same time.

Now this PRAM model can be used to characterize concurrent access to shared memory by multiple tasks.

The concurrent and exclusive read-write algorithms can be combined into the following types of algorithm combinations that are possible for read-write access:

These algorithms can be viewed as access policies implemented by the tasks sharing the data. Figure 7-7 shows these access policies. EREW means access to the shared memory is serialized where only one task at a time is given access to the shared memory whether it is access to write or to read. An example of EREW access policy is the producer-consumer. The program in Chapter 5 in Listing 5-7 has an EREW access policy with the shared posix_queue between processes. One process writes the name of a file another process is to search for the code in. Access to the queue that contained the filenames was restricted to exclusive write by the producer and exclusive read by the consumer. Only one task was allowed access to the queue at any given time.

CREW access policy allows multiple reads of the shared memory and exclusive writes. There are no restrictions on how many tasks can read the shared memory concurrently, but only one task can write to the shared memory. Concurrent reads can occur while an exclusive write is taking place. With this access policy, each reading task may read a different value while other task is writing. The next task that reads the shared memory will see different data than some other task. This may be intended, but it also may not. ERCW access policy is direct reverse of CREW. Only one task can read the shared data, but concurrent writes are allowed. CRCW access policy allows concurrent reads and concurrent writes.

Each of these four algorithm types requires different levels and types of synchronization. They can be analyzed on a continuum with the access policy that requires the least amount of synchronization to implement on one end and the access policy that requires the most amount of synchronization at the other end. EREW is the policy that is the simplest to implement because EREW essentially forces sequential processing. You may think that CRCW is the simplest, but it presents the most challenges. It may appear that memory can be accessed without restriction. But this is the most difficult to implement and requires the most synchronization in order to meet the goal to implement a synchronization process that maintains data integrity and satisfactory system performance.

Synchronization is also needed to coordinate the order of execution of concurrent tasks. Order of execution was important in the program in Listings 7-5 and 7-6. If the tasks were executed out of order, the final value for Answer would be wrong. In the program in Listings 7-7 and 7-8, if task1 did not complete, task2 would attempt to read from an empty queue. Synchronization is required to coordinate these tasks so that work can progress or so that the correct results can be produced. Data synchronization (access synchronization) and task synchronization (sequence synchronization) are two types of synchronization required when executing multiple concurrent tasks. Task synchronization enforces preconditions and postconditions of logical processes.

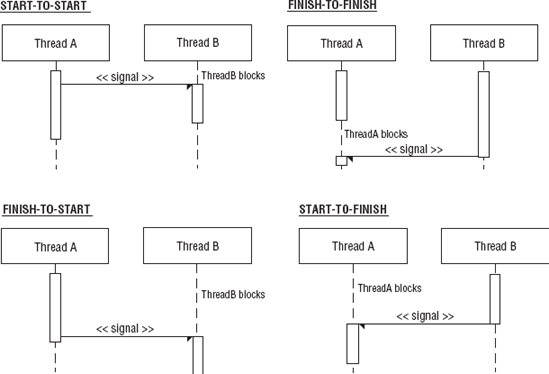

There are four basic synchronization relationships between any two tasks in a single process or between any two processes within a single application:

Start-to-start (SS)

Finish-to-start (FS)

Start-to-finish (SF)

Finish-to-finish (FF)

These four basic relationships characterize the coordination of work between threads and processes. Figure 7-8 shows activity diagrams for each synchronization relationship.