17. Python Database Access

This chapter describes the programming interfaces that Python uses to interface with relational and hash table style databases. Unlike other chapters that describe specific library modules, the material in this chapter partly applies to third-party extensions. For example, if you want Python to interface with a MySQL or Oracle database, you would first have to download a third-party extension module. That module, in turn, would then follow the basic conventions described here.

Relational Database API Specification

For accessing relational databases, the Python community has developed a standard known as the Python Database API Specification V2.0, or PEP 249 for short (the formal description can be found at http://www.python.org/dev/peps/pep-249/). Specific database modules (e.g., MySQL, Oracle, and so on) follow this specification, but may add even more features. This section covers the essential elements needed to use it for most applications.

At a high level, the database API defines a set of functions and objects for connecting to a database server, executing SQL queries, and obtaining results. Two primary objects are used for this: a Connection object that manages the connection to the database and a Cursor object that is used to perform queries.

Connections

To connect to a database, every database module provides a module-level function connect(parameters). The exact parameters depend on the database but typically include information such as the data source name, user name, password, host name, and database name. Typically these are provided with keyword arguments dsn, user, password, host, and database, respectively. So, a call to connect() might look like this:

connect(dsn="hostname:DBNAME",user="michael",password="peekaboo")

If successful, a Connection object is returned. An instance c of Connection has the following methods:

c.close()

Closes the connection to the server.

Commits all pending transactions to the database. If the database supports transactions, this must be called for any changes to take effect. If the underlying database does not support transactions, this method does nothing.

c.rollback()

Rolls back the database to the start of any pending transactions. This method is sometimes used in databases that do not support transactions in order to undo any changes made to the database. For example, if an exception occurred in code that was in the middle of updating a database, you might use this to undo changes made before the exception.

c.cursor()

Creates a new Cursor object that uses the connection. A cursor is an object that you will use to execute SQL queries and obtain results. This is described in the next section.

Cursors

In order to perform any operations on the database, you first create a connection c and then you call c.cursor() method to create a Cursor object. An instance cur of a Cursor has a number of standard methods and attributes that are used to execute queries:

cur.callproc(procname [, parameters])

Calls a stored procedure with name procname. parameters, which is a sequence of values that are used as the arguments to the procedure. The result of this function is a sequence with the same number of items as parameters. This sequence is a copy of parameters where the values of any output arguments have been replaced with their modified values after execution. If a procedure also produces an output set, it can be read using the fetch*() methods described next.

cur.close()

Closes the cursor, preventing any further operations on it.

cur.execute(query [, parameters])

Executes a query or command query on the database. query is a string containing the command (usually SQL), and parameters is either a sequence or mapping that is used to supply values to variables in the query string (this is described in the next section).

cur.executemany(query [, parametersequence])

Repeatedly executes a query or command. query is a query string, and parametersquence is a sequence of parameters. Each item in this sequence is a sequence or mapping object that you would have used with the execute() method shown earlier.

cur.fetchone()

Returns the next row of the result set produced by execute() or executemany(). The result is typically a list or tuple containing values for the different columns of the result. None is returned if there are no more rows available. An exception is raised if there is no pending result or if the previously executed operation didn’t create a result set.

Returns a sequence of result rows (e.g., a list of tuples). size is the number of rows to return. If omitted, the value of cur.arraysize is used as a default. The actual number of rows returned may be less than requested. An empty sequence is returned if no more rows are available.

cur.fetchall()

Returns a sequence of all remaining result rows (e.g., a list of tuples).

cur.nextset()

Discards all remaining rows in the current result set and skips to the next result set (if any). Returns None if there are no more result sets; otherwise, a True value is returned and subsequent fetch*() operations return data from the new set.

cur.setinputsize(sizes)

Gives the cursor a hint about the parameters to be passed on subsequent execute*() methods. sizes is a sequence of type objects (described shortly) or integers which give the maximum expected string length for each parameter. Internally, this is used to predefine memory buffers for creating the queries and commands sent to the database. Using this can speed up subsequent execute*() operations.

cur.setoutputsize(size [, column])

Sets the buffer size for a specific column in result sets. column is an integer index into the result row, and size is the number of bytes. A typical use of this method is to set limits on large database columns such as strings, BLOBs, and LONGs prior to making any execute*() calls. If column is omitted, it sets a limit for all columns in the result.

Cursors have a number of attributes that describe the current result set and give information about the cursor itself.

cur.arraysize

An integer that gives the default value used for the fetchmany() operation. This value may vary between database modules and may be initially set to a value that the module considers to be “optimal.”

cur.description

A sequence of tuples that give information about each column in the current result set. Each tuple has the form (name, type_code, display_size, internal_size, precision, scale, null_ok). The first field is always defined and corresponds to the column name. The type_code can be used in comparisons involving the type objects described in the “Type Objects” section. The other fields may be set to None if they don’t apply to the column.

cur.rowcount

The number of rows in the last result produced by one of the execute*() methods. If set to -1, it means that there is either no result set or that the row count can’t be determined.

Although not required by the specification, the Cursor object in most database modules also implements the iteration protocol. In this case, a statement such as for row in cur: will iterate over the rows the result set created by the last execute*() method.

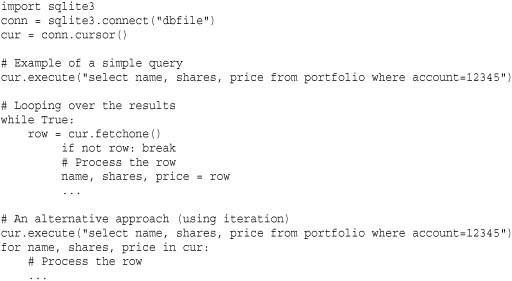

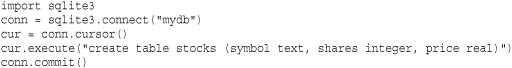

Here is a simple example showing how some of these operations are used with the sqlite3 database module, which is a built-in library:

Forming Queries

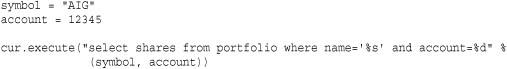

A critical part of using the database API involves forming SQL query strings to pass into the execute*() methods of cursor objects. Part of the problem here is that you need to fill in parts of the query string with parameters supplied by the user. For example, you might be inclined to write code like this:

Although this “works,” you should never manually form queries using Python string operations like this. If you do, it opens up your code to a potential SQL injection attack—a vulnerability that someone can use to execute arbitrary statements on the database server. For example, in the previous code, someone might supply a value for symbol that looks like "EVIL LAUGH'; drop table portfolio;--" which probably does something other than what you anticipated.

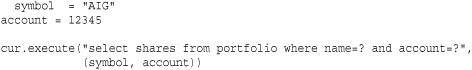

All database modules provide their own mechanism for value substitution. For example, instead of forming the entire query as shown, you might do this instead:

Here, the '?' placeholders are successively replaced with values from the tuple (symbol, account).

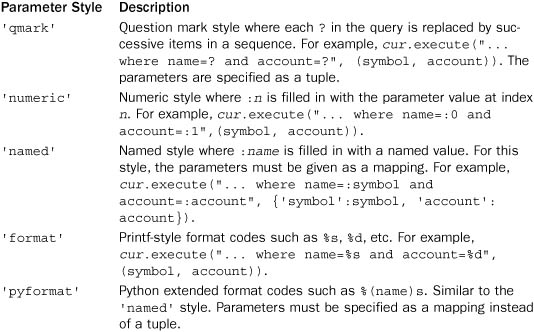

Sadly, there is no standard convention for placeholders across database module implementations. However, each module defines a variable paramstyle that indicates the formatting of value substitutions to be used in queries. Possible values of this variable are as follows:

Type Objects

When working with database data, built-in types such as integers and strings are usually mapped to an equivalent type in the database. However, for dates, binary data, and other special types, data management is more tricky. To assist with this mapping, database modules implement a set of constructor functions for creating objects of various types.

Date(year, month, day)

Creates an object representing a date.

Time(hour, minute, second)

Creates an object representing a time.

Timestamp(year, month, day, hour, minute, second)

Creates an object representing a timestamp.

DateFromTicks(ticks)

Creates a date object from a value of the system time. ticks is the number of seconds as returned by a function such as time.time().

TimeFromTicks(ticks)

Creates a time object from a value of the system time.

TimestampFromTicks(ticks)

Creates a timestamp object from a value of the system time.

Binary(s)

Creates a binary object from a byte-string s.

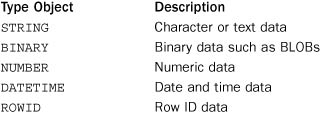

In addition to these constructor functions, the following type objects might be defined. The purpose of these codes is to perform type checking against the type_code field of cur.description, which describes the contents of the current result set.

Error Handling

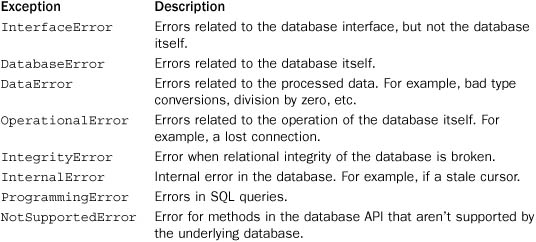

Database modules define a top-level exception Error that is a base class for all other errors. The following exceptions are for more specific kinds of database errors:

Modules may also define a Warning exception that is used by the database module to warn about things such as data truncation during updates.

Multithreading

If you are mixing database access with multithreading, the underlying database module may or may not be thread-safe. The following variable is defined in each module to provide more information.

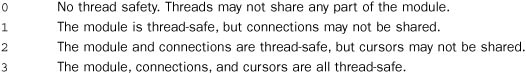

threadsafety

An integer that indicates the thread safety of the module. Possible values are:

Mapping Results into Dictionaries

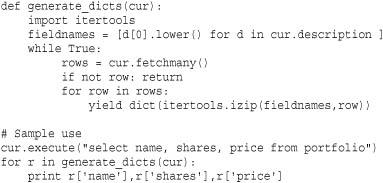

A common issue concerning database results is the mapping of tuples or lists into a dictionary of named fields. For example, if the result set of a query contains a large number of columns, it may be easier to work with this data using descriptive field names instead of hard-coding the numeric index of specific fields within a tuple.

There are many ways to handle this, but one of the most elegant ways to process result data is through the use of generator functions. For example:

Be aware that the naming of columns is not entirely consistent between databases—especially with respect to things such as case sensitivity. So, you’ll need to be a little careful if you try to apply this technique to code that’s meant to work with a variety of different database modules.

Database API Extensions

Finally, many extensions and advanced features can be added to specific database modules—for example, support for two-phase commits and extended error handling. PEP-249 has additional information about the recommended interface for these features and should be consulted by advanced users. Third-party library modules also may simplify the use of relational database interfaces.

sqlite3 Module

The sqlite3 module provides a Python interface to the SQLite database library (http://www.sqlite.org). SQLite is a C library that implements a self-contained relational database entirely within a file or in memory. Although it is simple, this library is attractive for various reasons. For one, it does not rely upon a separate database server nor does it require any kind of special configuration—you can start to use it right away in your programs by simply connecting to a database file (and if it doesn’t exist, a new file is created). The database also supports transactions for improved reliability (even across system crashes) as well as locking to allow the same database file to be simultaneously accessed from multiple processes.

The programming interface to the library follows the conventions described in the previous section on the Database API, so much of that detail is not repeated here. Instead, this section focuses on the technical details of using this module as well as features that are specific to the sqlite3 module.

Module-Level Functions

The following functions are defined by the sqlite3 module:

connect(database [, timeout [, isolation_level [, detect_types]]])

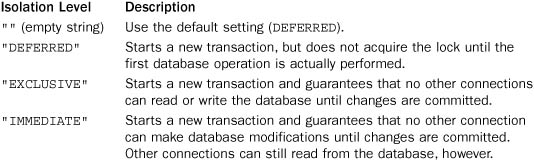

Creates a connection to a SQLite database. database is a string that specifies the name of the database file. It can also be a string ":memory:", in which case an in-memory database is used (note that this kind of database only persists as long as the Python process remains running and would be lost on program exit). The timeout parameter specifies the amount of time to wait for an internal reader-writer lock to be released when other connections are updating the database. By default, timeout is 5 seconds. When SQL statements such as INSERT or UPDATE are used, a new transaction is automatically started if one wasn’t already in effect. The isolation_level parameter is a string that provides an optional modifier to the underlying SQL BEGIN statement that is used to start this transaction. Possible values are "" (the default), "DEFERRED", "EXCLUSIVE", or "IMMEDIATE". The meaning of these settings is related to the underlying database lock and is as follows:

The detect_types parameter enables some extra type detection (implemented by extra parsing of SQL queries) when returning results. By default it is 0 (meaning no extra detection). It can be set to the bitwise-or of PARSE_DECLTYPES and PARSE_COLNAMES. If PARSE_DECLTYPES is enabled, queries are examined for SQL typenames such as "integer" or "number(8)" in order to determine the type of result columns. If PARSE_COLNAMES is enabled, special strings of the form "colname [typename]" (including the double quotes) can be embedded into queries where colname is the column name and typename is the name of a type registered with the register_converter() function described next. These strings are simply transformed into colname when passed to the SQLite engine, but the extra type specifier is used when converting values in the results of a query. For example, a query such as 'select price as "price [decimal]" from portfolio' is interpreted as 'select price as price from portfolio', and the results will be converted according to the “decimal” conversion rule.

register_converter(typename, func)

Registers a new type name for use with the detect_types option to connect(). typename is a string containing the type name as it will be used in queries, and func is a function that takes a single bytestring as input and returns a Python datatype as a result.

For example, if you call sqlite3.register_converter('decimal', decimal.Decimal), then you can have values in queries converted to Decimal objects by writing queries such as 'select price as "price [decimal]" from stocks'.

register_adapter(type, func)

Registers an adapter function for a Python type type that is used when storing values of that type in the datatype. func is a function that accepts an instance of type type as input and returns a int, float, UTF-8–encoded byte string, Unicode string, or buffer as a result. For example, if you wanted to store Decimal objects, you might use sqlite3.register_adapter(decimal.Decimal,float).

complete_statement(s)

Returns True if the string s represents one or more complete SQL statements separated by semicolons. This might be useful if writing an interactive program that reads queries from the user.

enable_callback_tracebacks(flag)

Determines the handling of exceptions in user-defined callback functions such as converters and adapters. By default, exceptions are ignored. If flag is set to True, traceback messages will be printed on sys.stderr.

Connection Objects

The Connection object c returned by the connect() function supports the standard operations described in the Database API. In addition, the following methods specific to the sqlite3 module are provided.

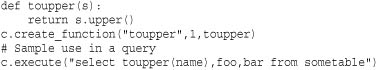

c.create_function(name, num_params, func)

Creates a user-defined function that can be used in SQL statements. name is a string containing the name of the function, num_params is an integer giving the number of parameters, and func is a Python function that provides the implementation. Here is a simple example:

Although a Python function is being defined, the parameters and inputs of this function should only be int, float, str, unicode, buffer, or None.

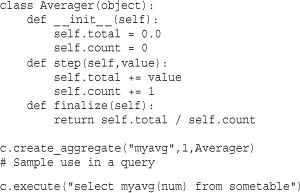

c.create_aggregate(name, num_params, aggregate_class)

Creates a user-defined aggregation function for use in SQL statements. name is a string containing the name of the function, and num_params is an integer giving the number of input parameters. aggregate_class is a class that implements the aggregation operation. This class must support initialization with no arguments and implements a step(params) method that accepts the same number of parameters as given in num_params and a finalize() method that returns the final result. Here is a simple example:

Aggregation works by making repeated calls to the step() method with input values and then calling finalize() to obtain the final value.

c.create_collation(name, func)

Registers a user-defined collation function for use in SQL statements. name is a string containing the name of the collation function, and func is a function that accepts two inputs and returns -1, 0, 1 depending on whether or not the first input is below, equal to, or above the second input. You use the user-defined function using a SQL expression such as "select* from table order by colname collate name".

c.execute(sql [, params])

A shortcut method that creates a cursor object using c.cursor() and executes the cursor’s execute() method with SQL statements in sql with the parameters in params.

c.executemany(sql [, params])

A shortcut method that creates a cursor object using c.cursor() and executes the cursor’s executemany() method with SQL statements in sql with the parameters in params.

c.executescript(sql)

A shortcut method that creates a cursor object using c.cursor() and executes the cursor’s executescript() method with SQL statements in sql.

c.interrupt()

Aborts any currently executing queries on the connection. This is meant to be called from a separate thread.

c.iterdump()

Returns an iterator that dumps the entire database contents to a series of SQL statements that could be executed to recreate the database. This could be useful if exporting the database elsewhere or if you need to dump the contents of an in-memory database to a file for later restoration.

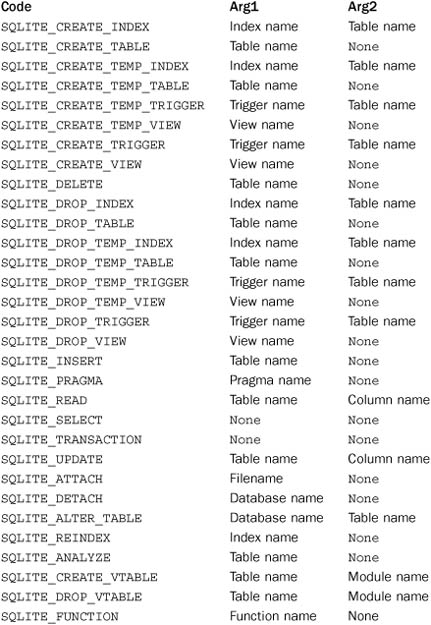

c.set_authorizer(auth_callback)

Registers an authorization callback function that gets executed on every access to a column of data in the database. The callback function must take five arguments as auth_callback(code, arg1, arg2, dbname, innername). The value returned by this callback is one of SQLITE_OK if access is allowed, SQLITE_DENY if the SQL statement should fail with an error, or SQLITE_IGNORE if the column should be ignored by treating it as a Null value. The first argument code is an integer action code. arg1 and arg2 are parameters whose values depend on the value of code. dbname is a string containing the name of the database (usually "main"), and innername is the name of the innermost view or trigger that is attempting access or None if no view or trigger is active. The following table lists the values for code and meaning of the arg1 and arg2 parameters:

c.set_progress_handler(handler, n)

Registers a callback function that gets executed every n instructions of the SQLite virtual machine. handler is a function that takes no arguments.

The following attributes are also defined on connection objects.

c.row_factory

A function that gets called to create the object representing the contents of each result row. This function takes two arguments: the cursor object used to obtain the result and a tuple with the raw result row.

c.text_factory

A function that is called to create the objects representing text values in the database. The function must take a single argument that is a UTF-8–encoded byte string. The return value should be some kind of string. By default, a Unicode string is returned.

c.total_changes

An integer representing the number of rows that have been modified since the database connection was opened.

A final feature of connection objects is that they can be used with the context-manager protocol to automatically handle transactions. For example:

![]()

In this example, a commit() operation is automatically performed after all statements in the with block have executed and no errors have occurred. If any kind of exception is raised, a rollback() operation is performed and the exception is reraised.

Cursors and Basic Operations

To perform basic operations on a sqlite3 database, you first have to create a cursor object using the cursor() method of a connection. You then use the execute(), executemany(), or executescript() methods of the cursor to execute SQL statements. See the Database API section for further details about the general operation of these methods. Instead of repeating that information here, a set of common database use cases are presented along with sample code. The goal is to show both the operation of cursor objects and some common SQL operations for those programmers who might need a brief refresher on the syntax.

Creating New Database Tables

The following code shows how to open a database and create a new table:

When defining tables, a few primitive SQLite datatypes should be used: text, integer, real, and blob. The blob type is a bytestring, whereas the text type is assumed to be UTF-8–encoded Unicode.

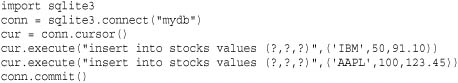

Inserting New Values into a Table

The following code shows how to insert new items into a table:

When inserting values, you should always use the ? substitutions as shown. Each ? is replaced by a value from a tuple of values supplied as parameters.

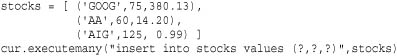

If you have a sequence of data to insert, you can use the executemany() method of a cursor like this:

Updating an Existing Row

The following code shows how you might update columns for an existing row:

cur.execute("update stocks set shares=? where symbol=?",(50,'IBM'))

Again, when you need to insert values into the SQL statement, make sure you use the ? placeholders and supply a tuple of values as parameters.

Deleting Rows

The following code shows how to delete rows:

cur.execute("delete from stocks where symbol=?",('SCOX',))

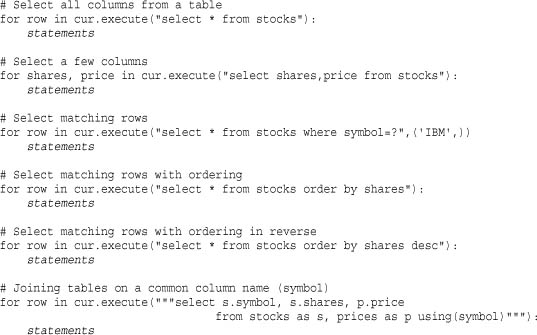

Performing Basic Queries

The following code shows how you can perform basic queries and obtain the results:

DBM-Style Database Modules

Python includes a number of library modules for supporting UNIX DBM-style database files. Several standard types of these databases are supported. The dbm module is used to read standard UNIX-dbm database files. The gdbm module is used to read GNU dbm database files (http://www.gnu.org/software/gdbm). The dbhash module is used to read database files created by the Berkeley DB library (http://www.oracle.com/database/berkeley-db/index.html). The dumbdbm module is a pure-Python module that implements a simple DBM-style database on its own.

All of these modules provide an object that implements a persistent string-based dictionary. That is, it works like a Python dictionary except that all keys and values are restricted to strings. A database file is typically opened using a variation of the open() function.

open(filename [, flag [, mode]])

This function opens the database file filename and returns a database object. flag is 'r' for read-only access, 'w' for read-write access, 'c' to create the database if it doesn’t exist, or 'n' to force the creation of a new database. mode is the integer file-access mode used when creating the database (the default is 0666 on UNIX).

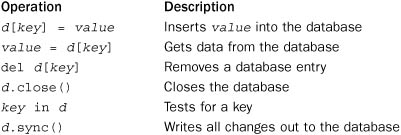

The object returned by the open() function minimally supports the following dictionary-like operations:

Specific implementations may also add additional features (consult the appropriate module reference for details).

One issue with the various DBM-style database modules is that not every module is installed on every platform. For example, if you use Python on Windows, the dbm and gdbm modules are typically unavailable. However, a program may still want to create a DBM-style database for its own use. To address this issue, Python provides a module anydbm that can be used to open and create a DBM-style database file. This module provides an open() function as described previously, but it is guaranteed to work on all platforms. It does this by looking at the set of available DBM modules and picking the most advanced library that is available (typically dbhash if it’s installed). As a fallback, it uses the dumbdbm module which is always available.

Another module is whichdb, which has a function whichdb(filename) that can be used to probe a file in order to determine what kind of DBM-database created it.

As a general rule, it is probably best not to rely upon these low-level modules for any application where portability is important. For example, if you create a DBM database on one machine and then transfer the database file to another machine, there is a chance that Python won’t be able to read it if the underlying DBM module isn’t installed. A high degree of caution is also in order if you are using these database modules to store large amounts of data, have a situation where multiple Python programs might be opening the same database file concurrently, or need high reliability and transactions (the sqlite3 module might be a safer choice for that).

shelve Module

The shelve module provides support for persistent objects using a special “shelf” object. This object behaves like a dictionary except that all the objects it contains are stored on disk using a hash-table based database such as dbhash, dbm or gdbm. Unlike those modules, however, the values stored in a shelf are not restricted to strings. Instead, any object that is compatible with the pickle module may be stored. A shelf is created using the shelve.open() function.

open(filename [,flag='c' [, protocol [, writeback]]])

Opens a shelf file. If the file doesn’t exist, it’s created. filename should be the database filename and should not include a suffix. flag has the same meaning as described in the chapter introduction and is one of 'r', 'w', 'c', or 'n'. If the database file doesn’t exist, it is created. protocol specifies the protocol used to pickle objects stored in the database. It has the same meaning as described in the pickle module. writeback controls the caching behavior of the database object. If True, all accessed entries are cached in memory and only written back when the shelf is closed. The default value is False. Returns a shelf object.

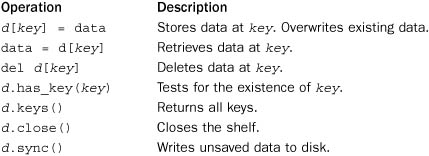

Once a shelf is opened, the following dictionary operations can be performed on it:

The key values for a shelf must be strings. The objects stored in a shelf must be serializable using the pickle module.

Shelf(dict [, protocol [, writeback]])

A mixin class that implements the functionality of a shelf on top of a dictionary object, dict. When this is used, objects stored in the returned shelf object will be pickled and stored in the underlying dictionary dict. Both protocol and writeback have the same meaning as for shelve.open().

The shelve module uses the anydbm module to select an appropriate DBM module for use. In most standard Python installations, it is likely to be the dbhash, which relies upon the Berkeley DB library.