Finding Stuff

18.1 Introduction

For this last chapter in the book, we wanted to take a step back – actually, to take several giant leaps back – and consider what “querying XML” is intended to achieve. In the bigger picture, the technologies we have discussed for querying XML, such as SQL/XML and XQuery, provide some useful infrastructure to achieve universal, intelligent search over all information. Most people don’t care in the slightest whether the underlying technology to achieve this involves SQL or XQuery or XML – all they care about is finding stuff.

We break “finding stuff” down into the various kinds of stuff that you might want to find. We start with structured data, which is the simplest and, currently, the most useful and widely used kind of search. It includes all database search – tables and XML. Then we look at finding stuff on the web – we start by discussing the Google phenomenon, then describe and discuss the Semantic Web. Web search also includes the “deep web” – information that is available on the web, but is not necessarily available as individual web pages (e.g., catalog information that is available via a web form).

After that, we move to finding stuff at work, describing Enterprise Search. This is currently a hot area, with advances in technology (and in perceptions and practices) that promise to make many of us more productive and less stressed at work. We discuss federated search (finding other people’s stuff) and finding services (another hot area), before briefly discussing how people might do all these searches in a more natural way.

Finally, we summarize the chapter and make some predictions for the future of search.

18.2 Finding Structured Data – Databases

So far in this book, we have talked mostly about how to query structured data – dates, numbers, short strings – using query languages such as SQL and XQuery. Finding structured data has been the mainstay of the computer industry for several decades. It’s at the heart of most computer applications, from banking to airline reservations to payroll accounting. What is changing is that this information is shared and exchanged more and more. That means we need standard ways to express the data (to transfer the data from party to party), and standard ways to query the data (so that each party can query any other’s). (A party here may be an application, a data store, a corporation, or a person.)

XML is the obvious choice for a standard way to express structured data. We predict that more and more structured data will be expressed – stored, represented, and/or published – as XML over the next few years. As the amount of structured-data-as-XML grows, the importance of being able to find and extract parts of that XML easily and accurately, in a standard way, will grow too. XQuery is the obvious choice here – it is a rich, expressive language designed specifically to find and extract parts of an XML collection. XQuery has been crafted by some of the computer industry’s leading experts in XML and query languages, and the W3C process (though time-consuming) is thorough and it guarantees participation from a host of experts and users.

Where structured data is stored in a database, SQL/XML is the obvious harness for XQuery, allowing the richness and versatility of XQuery expressions over either XML or relational data, and returning results in either XML or relational form. SQL/XML also forms a bridge between the mature, proven world of relational data and the more flexible, sharable XML data. As the major database vendors roll out SQL/XML with XQuery as standard functionality in the latest versions of their databases, SQL/XML will represent the mainstream adoption of XQuery over the next few years.

18.3 Finding Stuff on the Web – Web Search

18.3.1 The Google Phenomenon

One cannot discuss web search without mentioning Google. Google has been phenomenally successful in a number of dimensions – everyone, it seems, uses Google, whether doing academic or business research, finding out about the latest hot band, or just shopping for T-shirts. The word Google has entered the English language as a verb – “Just Google it.” And then there’s the money – at the time of writing, Google shares are trading at around $350 each, up from an initial public offering price of $85 in August 2004, and Google’s founders, Larry Page and Sergey Brin, are among the top 50 richest men in the world.

But Google were not the first to search the World Wide Web. Alta Vista did a creditable job of making the world’s web pages searchable as far back as 1995, and there have been a whole slew of other web search sites in the past decade (Lycos, Inktomi, Excite, Hotbot, Dogpile, AskJeeves, etc.). Some of these are still in operation, though most have been acquired by larger outfits such as Yahoo or Microsoft.

So, if Google wasn’t the first web search site, what has made it so successful? Quite simply, Google did it better. The Google site is simple and easy to use, performance is crisp, and, above all, the pages you are looking for appear in the top few hits most of the time.1 This accuracy can be attributed (in part) to Google’s use of link analysis to figure out which web pages are more authoritative. In brief, here’s how it works: When the Google spider goes out and crawls web pages, it starts at a number of seed pages. For each page crawled, the crawler adds index information for that page, follows each link on the page, and adds index information for those pages too. Given the interconnectedness of the web, a spider can crawl most of the world’s web pages with relatively few seed sites.2 What you end up with is an index to web pages. What link analysis adds is a catalog of information about which pages link to other pages. The idea is that if lots of people create links to some page, then that page must be authoritative, and so it should appear toward the top of the results page, all other factors being equal.
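The crawl described above can be sketched in a few lines. This is only an illustration under stated assumptions: the "web" is a hypothetical in-memory dictionary (`TOY_WEB`) rather than real HTTP fetches, "adding index information" is reduced to recording the page, and the function name `crawl` is ours. It does show the two outputs the text mentions: the set of pages reached from the seeds, and a catalog of which pages link to which.

```python
from collections import deque

# Hypothetical in-memory "web": each page maps to the links found on that page.
TOY_WEB = {
    "seed.example/a": ["site.example/b", "site.example/c"],
    "site.example/b": ["site.example/c", "site.example/d"],
    "site.example/c": ["seed.example/a"],
    "site.example/d": [],
    "island.example/x": ["seed.example/a"],  # no crawled page links here
}


def crawl(seeds, web):
    """Breadth-first crawl starting from the seed pages.

    Returns (visited, inbound): visited lists pages in crawl order, and
    inbound maps each page to the set of crawled pages that link to it.
    """
    visited = []
    seen = set(seeds)
    inbound = {}
    queue = deque(seeds)
    while queue:
        page = queue.popleft()
        visited.append(page)  # stand-in for "add index information"
        for target in web.get(page, []):
            inbound.setdefault(target, set()).add(page)
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return visited, inbound


visited, inbound = crawl(["seed.example/a"], TOY_WEB)
print(visited)
print(inbound)
```

Note that `island.example/x` is never visited, because no crawled page links to it; a page that cannot be reached by following links stays invisible to this kind of crawler.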

Let’s look at a simple example. Each year, Idealliance presents an XML conference, which is sponsored by some of the major players in the XML world. The home page for this conference (currently showing notes and presentations from XML 2005) is http://www.xmlconference.org/xmlusa/. This page obviously mentions the word “XML” a lot, and the page is very much about XML. Not only does Google count the links to this page, but they are also kind enough to share that information, via the “link” keyword – a Google search for “link: http://www.xmlconference.org/xmlusa/” reveals that around 820 other pages link to the Idealliance (XML 2005) page.3 That’s quite a lot, and it makes the Idealliance page fairly authoritative. But if you do a Google search for “XML,” the Idealliance page does not appear first in the list of results – you have to go to the third page of results to find that. The top result is http://www.xml.com/, which has around 19,400 other pages linking to it.

This example is rather simplistic – there is a lot more going on than a simple link count. The authority of a page is calculated based on the number and authority of the pages that link to it – that is, if a page that links to your target page has itself got a large number of pages linking to it, that counts for more. And of course there’s the whole machinery of keyword search affecting the results and their order (relevancy ranking). For more details, see the original paper on link analysis and relevancy ranking, by Page and Brin (Google’s founders).4
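To make the idea of "number and authority" concrete, here is a minimal sketch of the textbook power-iteration form of PageRank. It is not Google's production algorithm. The damping factor of 0.85, the iteration count, the treatment of pages with no outgoing links, and the toy link graph are all assumptions made for this illustration.

```python
def page_rank(links, damping=0.85, iterations=50):
    """Return a rank per page for a {page: [linked pages]} graph."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page starts each round with the same small baseline rank.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its rank on in equal shares to the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Assumption: a page with no outgoing links shares its rank with everyone.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical graph: target_a and target_b each have exactly one inbound link,
# but target_a's link comes from "hub", which is itself linked to by three pages.
LINKS = {
    "fan1": ["hub"],
    "fan2": ["hub"],
    "fan3": ["hub"],
    "hub": ["target_a"],
    "nobody": ["target_b"],
}

ranks = page_rank(LINKS)
for page, value in sorted(ranks.items(), key=lambda item: item[1], reverse=True):
    print(f"{page:10s} {value:.4f}")
```

Both targets have a link count of one, yet `target_a` ends up with the higher rank because the page linking to it is itself well linked. A simple link count cannot capture this.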

The important point here is that the whole Google phenomenon – a huge leap forward in the utility of the web, a new verb in the English language, and at least two very rich guys – came about because of one incremental advance in search quality, i.e., link analysis (the PageRank algorithm). Web search still has a long way to go, and there are a number of techniques waiting in the wings to be the next PageRank. For the rest of this section, we examine a few of them – metadata, the semantic web, and the deep web.

18.3.2 Metadata

In Part II, “Metadata and XML,” we discussed the importance of metadata. On the web, metadata is found in the title and meta elements of an HTML or XHTML5 document. The web address (URL), too, can be considered metadata – it’s clear that http://www.xml.com/ is about XML without looking into the page at all. A sophisticated web search takes into account not just the existence and number of occurrences of a search term on a page, but also the existence of the keyword (or similar terms) in the URL, title, and meta elements. In this way, web page authors can collaborate with search engines to help ensure that a page is found whenever the page is truly about the search term(s).6 Existence of search terms in metadata can also help with page ranking (ordering hits in a results list to accurately reflect their relevance to the search). For example, if you search for the term “XML,” you would prefer to see pages with “XML” in the URL or in the title element rather than pages that happen to mention XML in passing.
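A metadata-aware ranking of this kind might be sketched as follows. The field weights are invented for the example, the pages are hypothetical dictionaries, and matching is a crude case-insensitive substring count. Real engines use far more sophisticated scoring, so treat this only as an illustration of weighting metadata matches above body matches.

```python
# Assumed weights: a match in the URL or title counts for more than one in the body.
FIELD_WEIGHTS = {"url": 3.0, "title": 2.0, "meta": 1.5, "body": 1.0}


def score(page, term):
    """Weighted count of occurrences of term across the page's fields."""
    term = term.lower()
    total = 0.0
    for field, weight in FIELD_WEIGHTS.items():
        occurrences = page.get(field, "").lower().count(term)
        total += weight * occurrences
    return total


pages = [
    {
        "url": "http://shop.example/tshirts",
        "title": "T-shirts",
        "meta": "shirts",
        "body": "Our catalog is also available as XML. XML feeds update daily. XML!",
    },
    {
        "url": "http://www.xml.com/",
        "title": "XML.com",
        "meta": "xml news, xml tutorials",
        "body": "Articles about XML.",
    },
]

ranked = sorted(pages, key=lambda page: score(page, "xml"), reverse=True)
for page in ranked:
    print(page["url"], score(page, "xml"))
```

The T-shirt page mentions the term more often in its body, but the page with the term in its URL, title, and meta fields is ranked first.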

Moving on to the contents of the page itself, the first few lines, headings, and emphasized terms could all be considered as metadata. And there are a growing number of engines that can do entity extraction – pulling out the names of entities (such as people’s names, place names, company names, etc.) from plain, unmarked-up text, by a combination of context recognition and dictionary lookup.

Link text might also be considered part of the metadata of a web page, even though it is not a part of the document itself. The link text is the text of the <a> element that includes the href attribute that points to a page – the text that is (generally) underlined and highlighted in blue, which you click to get to another page. This text is often very descriptive, and can give good clues about the meaning of the page. Google and other search engines make use of this text as if it were metadata as part of the page.7
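As a small illustration of where link text comes from, the sketch below uses Python's standard `html.parser` module to collect the text inside each `<a href="...">` element. The class name `LinkTextCollector` and the sample HTML are our own; how a search engine then associates that text with the target page is not shown.

```python
from html.parser import HTMLParser


class LinkTextCollector(HTMLParser):
    """Collects (href, link text) pairs from <a href="..."> elements."""

    def __init__(self):
        super().__init__()
        self.links = []     # finished (href, text) pairs
        self._href = None   # href of the <a> element currently open, if any
        self._text = []     # text fragments seen inside that element

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href is not None:
                self._href = href
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Join the fragments and collapse runs of whitespace.
            text = " ".join("".join(self._text).split())
            self.links.append((self._href, text))
            self._href = None


html = '<p>See the <a href="http://www.xml.com/">XML news and <b>tutorials</b></a> site.</p>'
collector = LinkTextCollector()
collector.feed(html)
collector.close()
print(collector.links)
```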

But this is still just keyword metadata – we are looking for keyword matches, we are just looking for them in metadata as well as in the text of the page. Semantic metadata (see also Chapter 4, “Metadata – An Overview”) takes us one step on from keyword search, toward a semantic search, by providing pointers to what the data mean.

18.3.3 The Semantic Web – The Search for Meaning

The term semantic web was coined by Tim Berners-Lee,8 who described it as “data on the Web in a form that machines can naturally understand … a web of data that can be processed directly or indirectly by machines.” The key here is that machines must be able to understand the data. Keyword search (or, in structured data, number or date or string search) does not involve any understanding of the meaning (semantics) of the search terms or of the data being searched.

When you search across text, a search term may have many meanings. If you search for the word “bat,” are you looking for a flying mammal, or an instrument with which to play baseball (or cricket)? When you type “bat” into Google, it does only a keyword search, and returns pages that include “bat” in all its meanings.9 When you search for a number or a date, there is generally only one possible meaning for the search term.10 If you search in an Excel spreadsheet, for example, for the number 42, you would not expect to get results that include the number 24 (which is similar to 42 in many ways – both are numbers, both are integers, both are even, both have the digits 4 and 2, etc.). But number search would also improve if you could search on the meaning of the number, in the sense that you could search for “the number 42 where it is an employee number at Beeswax Corporation,” or “the number 42 where it is the price in dollars of a medium red T-shirt.” This is the essence of the semantic web – to be able to search for documents (and other stuff) as if you were asking a librarian. The librarian understands the subject matter that exists in the library, and understands your description of what you are looking for, and so can come up with the book or article or paper that meets your needs. Imagine approaching the help desk at the Library of Congress and saying just “bat,” and you can see just how much distance there is between even the smartest keyword-based search and a search that involves meaning.

To search for meaning, we need two things. First, we need the ability to describe real-world things in a way that is machine-understandable, in the same way that XML is machine-readable. (Once this is achieved, computers can process and integrate data in an intelligent way. By contrast, the current World Wide Web demonstrates only how good computers are at presenting data to people.) Second, we need to be able to describe a meaning search as easily as we can describe a keyword search (to, say, Google). In the rest of this section, we describe the contribution that RDF, RDF-S, OWL, and SPARQL have made to this “search for meaning,” and assess how close we are to the goals of the semantic web.

URL, URI, URIRef

Anyone who has used a browser has used a URL – it’s the string you type into the browser to get to a web page, also known as a web address. URL stands for Uniform Resource Locator – a standard way to locate a web resource, usually a web page.

RDF (see next subsection) uses the notion of a URI11 – a Uniform Resource Identifier. This is an ambitious extension to the notion of a URL. A URI is a string that can identify anything in the universe, including things that can be located on the web (web pages, documents, services) and things that cannot (a person, a corporation, a physical copy of a book, even a concept). A URIRef is a URI with an optional fragment identifier.12
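The relationship between a URIRef and its URI can be shown with Python's standard `urllib.parse.urldefrag`, which splits off the fragment identifier. The `#cast` fragment below is invented for the illustration.

```python
from urllib.parse import urldefrag

# Hypothetical URIRef: the "#cast" fragment is invented for this illustration.
uri_ref = "http://example.org/movie/42#cast"
uri, fragment = urldefrag(uri_ref)

# The fragment is optional: without one, the fragment part comes back empty.
plain_uri, no_fragment = urldefrag("http://example.org/movie/42")

print(uri, fragment)
print(plain_uri, repr(no_fragment))
```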

RDF Statements

Once we can identify a thing, we can describe its properties. RDF – the Resource Description Framework – defines a framework for describing things (resources) in a machine-readable format. Using RDF, we can make statements about resources, where each statement has a subject, a predicate, and an object.

For example, “An American Werewolf in London was directed by John Landis” is a statement that describes one of our sample movies. “An American Werewolf in London” is the subject, “directed by” is the predicate, “John Landis” is the object. More precisely, the movie with the title “An American Werewolf in London” is the subject, and the person whose name is “John Landis” is the object.

We can express this as an RDF statement. First, we need to know (or assign) a URI for each of the resources.13 Let’s say that the movie is denoted by the URI “http://example.org/movie/42,” the predicate is denoted by “http://example.org/predicates/isDirectedBy,” and the object is denoted by “http://example.org/people/Landis/John/001.” Note that none of these URIs is a URL – i.e., none of them is a web address that resolves to an actual page or document. Each URI is used to identify some specific thing, not to point to its location (hence it’s a URI and not a URL). Of course, these URIs might also point to a physical location that contains a description of the resource (where the resource is a person, it might contain a biography of that person), but that’s not its primary purpose.14

Serializing RDF Statements

RDF gives us a way to formulate a statement about a resource. But how do we express that statement? The RDF Primer describes three ways – a graph, a triple, and an XML fragment.

Figure 18-1 shows a graph representation of our example statement, saying that the movie “An American Werewolf in London” was directed by the person whose name is “John Landis” (we have invented, for these examples, a person-id scheme where each person is identified by first name and last name, plus a number to distinguish between people with the same name). We have added a second statement to the graph, which says that the title of the movie is “An American Werewolf in London.” Note that the value of the title is a literal string, not a URI – in RDF, any object (but not subject or predicate) may be either a URI or a literal. Literals can be typed, and the type of a literal may be an XML Schema type or some implementation-defined type.

The same statements could be expressed as tuples (more specifically, triples), as in Example 18-1. The RDF Primer describes a shorthand form of this fairly verbose serialization, with no angle brackets, and with part or all of the URI represented by a predefined prefix.
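To give a feel for the verbose triple form, the following sketch writes our two statements as one line each, with URIs in angle brackets and the literal in quotes, in the style of the N-Triples notation. It is our own illustration rather than a reproduction of Example 18-1. The use of the Dublin Core `title` URI as the second predicate is an assumption, and only backslashes and double quotes are escaped in literals.

```python
def term(value):
    """Format a ("uri", text) pair as <text> and a ("literal", text) pair as "text"."""
    kind, text = value
    if kind == "uri":
        return "<" + text + ">"
    escaped = text.replace("\\", "\\\\").replace('"', '\\"')
    return '"' + escaped + '"'


def to_triple_line(subject, predicate, obj):
    """Serialize one statement as: subject predicate object ."""
    return f"{term(subject)} {term(predicate)} {term(obj)} ."


MOVIE = ("uri", "http://example.org/movie/42")
DIRECTED_BY = ("uri", "http://example.org/predicates/isDirectedBy")
LANDIS = ("uri", "http://example.org/people/Landis/John/001")
TITLE = ("uri", "http://purl.org/dc/elements/1.1/title")  # assumed predicate

statements = [
    (MOVIE, DIRECTED_BY, LANDIS),
    (MOVIE, TITLE, ("literal", "An American Werewolf in London")),
]

lines = [to_triple_line(*statement) for statement in statements]
print("\n".join(lines))
```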

A third way to represent an RDF statement is as an XML document. Example 18-2 shows one possible XML representation of the RDF statements described in the previous section. The XML document starts with an rdf:RDF tag, with three namespace definitions. The first is the namespace associated with the rdf prefix, and the second is the namespace we invented for RDF predicates that we define, such as isDirectedBy. The third is the namespace for Dublin Core metadata definitions (see Chapter 5, “Structural Metadata”). Dublin Core already has a definition for “title,” so we reused that definition for our XML example. The rdf:RDF tag has a child element rdf:Description, which encloses an RDF statement. The rdf:about attribute gives the subject of the statement. The child of rdf:Description gives the predicate and object of the statement – the predicate is represented by the element name (pred:isDirectedBy), and the object is given by the content of the element. The rdf:Description element has a second child, showing how several statements about the same subject can be represented in the same rdf:Description.
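For readers who want to experiment, the sketch below builds one possible RDF/XML rendering of the two statements with Python's standard `xml.etree.ElementTree`. It is our own illustration and may differ in detail from Example 18-2. In particular, we assume that the URI-valued object is carried in an `rdf:resource` attribute, while the literal title is carried as element content.

```python
import xml.etree.ElementTree as ET

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
PRED_NS = "http://example.org/predicates/"   # the namespace we invented
DC_NS = "http://purl.org/dc/elements/1.1/"   # Dublin Core elements

# Ask ElementTree to use readable prefixes when it serializes.
ET.register_namespace("rdf", RDF_NS)
ET.register_namespace("pred", PRED_NS)
ET.register_namespace("dc", DC_NS)

root = ET.Element(f"{{{RDF_NS}}}RDF")

# rdf:about names the subject shared by both statements.
description = ET.SubElement(
    root,
    f"{{{RDF_NS}}}Description",
    {f"{{{RDF_NS}}}about": "http://example.org/movie/42"},
)

# First statement: the element name is the predicate, the object is a URI.
ET.SubElement(
    description,
    f"{{{PRED_NS}}}isDirectedBy",
    {f"{{{RDF_NS}}}resource": "http://example.org/people/Landis/John/001"},
)

# Second statement: the object is a literal, given as element content.
title = ET.SubElement(description, f"{{{DC_NS}}}title")
title.text = "An American Werewolf in London"

rdf_xml = ET.tostring(root, encoding="unicode")
print(rdf_xml)
```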

At this point we need to emphasize that RDF is not XML. RDF is a framework for describing resources using statements containing subjects, predicates, and objects. RDF/XML describes a possible XML serialization of those statements.

Toward Meaning – RDF-S, OWL

The semantic web is all about describing (and searching for) meaning rather than keywords, semantics rather than syntax. But RDF is just one step on from metadata tags in this respect. Two more standards efforts – RDF-S and OWL – push us further along the spectrum toward meaning.

RDF-S,15 or RDF Schema, lets you define a vocabulary for use in RDF statements. The vocabulary sets out which properties are appropriate for describing which resources.

This gives additional meaning to RDF statements by restricting the vocabulary, in the same way that a data type system adds meaning to unstructured data (“the integer 42” or “the string 42” carry more meaning than “the untyped 42”). RDF-S also allows the definition of a resource class hierarchy, which gives information about how resources relate to each other. The RDF-S vocabulary and class hierarchy constitute a taxonomy.

At this point we need to take a side-trip to discuss the meaning of the terms taxonomy and ontology. There is some debate in the knowledge management and linguistics communities over the precise definitions of these terms, and the differences between them. We describe here our preferred definitions, but warn the reader that she may find the terms used in slightly different ways in different texts.16 A taxonomy is a hierarchical ordering of terms. One obvious example of a taxonomy occurs in biology – a Sheltie is a dog is a mammal is an animal. A taxonomy is generally drawn as a tree with a single root.17 An ontology is a (not necessarily hierarchical)18 representation of concepts and the relationships between them. At first glance, an ontology might look exactly the same as a taxonomy – a Sheltie is a dog is a mammal is an animal. But the ontology node is not “the term Sheltie,” rather it is “the concept which is a breed of dog that is quite small and has long hair and … and is referred to in English by the term Sheltie.” An ontology represents concepts rather than terms. It is language-independent (the term for a concept in some language is one of the properties of that concept), and it implies that we know something about each concept. The relationships between nodes in an ontology tend to be meaning-based (rather than subset/superset-based), so that an ontology is often referred to as a knowledge representation (as opposed to a classification hierarchy).19

Now that we have taken our side trip, we can say that RDF lets you describe the properties of resources; RDF-S lets you define which properties are appropriate for describing which resources, and also lets you define a taxonomy of resources. OWL,20 the Web Ontology Language, takes you one step further on the path to meaning by letting you define an ontology of resources. This ontology describes the meaning of both resources and properties in RDF statements.
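The value of a class hierarchy for search can be sketched with the Sheltie example. The code below is a deliberately simplified, single-parent taxonomy held in a Python dictionary. It does not use RDF-S syntax, and real RDF-S allows a class to have more than one superclass. The instance identifier `ex:rex` is hypothetical.

```python
# Each class maps to its parent class: a tree with the single root "Animal".
SUBCLASS_OF = {"Sheltie": "Dog", "Dog": "Mammal", "Mammal": "Animal"}

# Hypothetical typed resources.
TYPE_OF = {"ex:rex": "Sheltie", "ex:tweety": "Animal"}


def ancestors(cls, subclass_of):
    """Return the chain of superclasses of cls, nearest first."""
    chain = []
    while cls in subclass_of:
        cls = subclass_of[cls]
        chain.append(cls)
    return chain


def is_a(cls, candidate, subclass_of):
    """True if cls is candidate or one of candidate's subclasses."""
    return cls == candidate or candidate in ancestors(cls, subclass_of)


def instances_of(candidate, type_of, subclass_of):
    """Find resources whose declared class falls under candidate."""
    return sorted(
        resource
        for resource, cls in type_of.items()
        if is_a(cls, candidate, subclass_of)
    )


print(ancestors("Sheltie", SUBCLASS_OF))
print(instances_of("Mammal", TYPE_OF, SUBCLASS_OF))
```

A search for mammals finds `ex:rex` even though it was only ever described as a Sheltie, which a plain keyword match on "mammal" would miss.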

Querying RDF Statements – SPARQL

The RDF Data Access Working Group (DAWG)21 was tasked with defining how to query over RDF statements. They came up with SPARQL,22 defined as the SPARQL Protocol And RDF Query Language.23 SPARQL queries across an RDF Dataset, which is a set of one or more RDF graphs (as described above). We give two examples of SPARQL, just to give the reader a general flavor of the language.

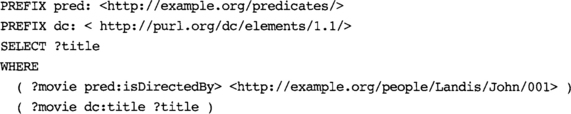

Example 18-3 shows the basic structure of a SPARQL SELECT statement. The WHERE clause is a single triple with a variable (?movie) in place of the subject. This statement returns the value of the variable ?movie, where the triple exists in the RDF Dataset, for any value of ?movie; i.e., the statement returns all movies directed by John Landis. Note the superficial resemblance to the SQL SELECT statement.

Example 18-4 extends Example 18-3 to return the title of each movie directed by John Landis. Note that the two instances of ?movie in the two triples in the WHERE clause must bind to the same value for the match to happen, effectively giving us a “join” across RDF statements.

In addition to SELECT, SPARQL has three other query forms. CONSTRUCT returns an RDF graph given a query or a set of triple templates, DESCRIBE returns an RDF graph that describes the resources returned by a query, and ASK returns yes or no according to whether some statement exists that satisfies the query. Example 18-5 is an ASK query that asks whether or not there is some movie directed by John Landis.
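To show what the WHERE clause is doing, here is a toy matcher written in Python. It is not a SPARQL engine and does not parse SPARQL syntax. Triples are plain tuples of strings, any pattern term beginning with `?` is treated as a variable, and the function names, the second movie, and its director are all invented for the illustration. The point is that a variable shared between two patterns must bind to the same value, which is what produces the "join."

```python
def match_pattern(pattern, triple, binding):
    """Extend binding so that pattern matches triple; return None on a mismatch."""
    new_binding = dict(binding)
    for pattern_term, value in zip(pattern, triple):
        if pattern_term.startswith("?"):
            # A variable must keep any value it was bound to earlier.
            if new_binding.setdefault(pattern_term, value) != value:
                return None
        elif pattern_term != value:
            return None
    return new_binding


def solve(patterns, triples):
    """Return every variable binding that satisfies all of the patterns."""
    bindings = [{}]
    for pattern in patterns:
        bindings = [
            extended
            for binding in bindings
            for triple in triples
            for extended in [match_pattern(pattern, triple, binding)]
            if extended is not None
        ]
    return bindings


def select(variables, patterns, triples):
    """SELECT-like form: project the requested variables from each solution."""
    return [tuple(b[v] for v in variables) for b in solve(patterns, triples)]


def ask(patterns, triples):
    """ASK-like form: is there at least one solution?"""
    return bool(solve(patterns, triples))


EX = "http://example.org/"
DIRECTED_BY = EX + "predicates/isDirectedBy"
TITLE = "http://purl.org/dc/elements/1.1/title"
LANDIS = EX + "people/Landis/John/001"
SOMEONE_ELSE = EX + "people/Doe/Jane/001"  # hypothetical person

TRIPLES = [
    (EX + "movie/42", DIRECTED_BY, LANDIS),
    (EX + "movie/42", TITLE, "An American Werewolf in London"),
    (EX + "movie/43", DIRECTED_BY, SOMEONE_ELSE),
    (EX + "movie/43", TITLE, "A Hypothetical Movie"),
]

titles = select(
    ["?title"],
    [("?movie", DIRECTED_BY, LANDIS), ("?movie", TITLE, "?title")],
    TRIPLES,
)
print(titles)
print(ask([("?movie", DIRECTED_BY, LANDIS)], TRIPLES))
```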

SPARQL – YAXQL?

Is SPARQL “Yet Another XML Query Language”? It could be argued that RDF statements can be expressed as XML, and XQuery is a language designed specifically for querying XML, so we should not reinvent the wheel by coming up with a brand new language. There are some practical difficulties in this approach – for example, any given RDF statement can be serialized as XML in many different forms. However, Jonathan Robie showed in his 2001 paper, “The Syntactic Web,”24 that RDF could be serialized in a normalized XML form, allowing us to query it using XQuery. Nonetheless, the Semantic Web people have insisted that what is needed is a comprehensive language that can query over, and return results as, the RDF graphs that underlie the XML serialization, just as XQuery queries over, and returns results as, instances of the XQuery Data Model. The answer is left for the reader to ponder – we lean toward the opinion that inventing another query language is a necessary evil.

The Search for Meaning – Still a Long Way to Go

We have only briefly touched on the semantic web here. The efforts to express meaning rather than just data are in their infancy, and while the goals are laudable, the barriers to adoption of the standards described in this section are enormous.25 People already have a way of expressing meaning to any level of precision and detail that they choose – via human language – and they are generally unwilling to learn a complicated new syntax and vocabulary just to make that meaning machine-understandable. We believe the only way that the semantic web can achieve mass adoption is for machines to be able to translate human language automatically (or perhaps with some human feedback) into machine-understandable form.26 Once computers can codify meaning from human language, it’s a small step to codifying questions and searches into something that will search over that machine-understandable data. RDF, RDF-S, and OWL are a welcome step in the right direction, but we still have a long way to go.

18.3.4 The Deep Web – Feel the Width

The title of this section borrows from a 1960s British sitcom about tailors, “Never Mind the Quality, Feel the Width.”27 The title of this sitcom shows the two important dimensions of web search – quality (getting the right results, in the right order, for each search), and width (searching across all possible web pages). In Section 18.3.1, we suggested that a web spider or crawler can index most of the pages on the web by following the links on a set of seed pages, then following those links to get more “seed” pages, and so on. But this method of crawling misses any page that cannot be reached directly from some link on another page – it misses the so-called deep web. As an example of deep web information, the United Airlines website – at http://www.united.com/ – allows you to type in start and end points for a trip, and find available flights and prices. We typed in a round-trip from SFO to BOS, and found that United Airlines 176 services the first leg, for 366.90 USD. Now try finding that same information on Google – e.g., search for “United Airlines 176.” It cannot be found! The page showing details of United Airlines flight 176 is not a part of the surface or shallow web, it’s part of the deep web.

The deep web consists of all the information that is available on the web, but cannot be found using (most) search engines. Typically, this information is stored in a database, and is available via some database search on the web page – the result is displayed on a dynamic page (a web page created on the fly), and there is no link to that dynamic page from any other page. Some estimates place the amount of information in the deep web at 500 to 1,000 times as much as on the surface web. If we are to achieve universal search, we must be able to search and present this deep web content.

At the time of writing, deep web search is in the research stage.28 There are two obvious ways to make the deep web available. First, a website administrator can make deep web content available in static pages, and offer it up to search engines via a hidden link – one that the web page user cannot see, but which can be seen and followed by a crawler. This is done most often when the dynamic content in the deep web is really a set of textual web pages that have been stored in a database and retrieved dynamically to support a particular look and feel on the site, or perhaps to maximize reuse of the text. Where the information is more structured – as in our United Airlines example – this is more difficult and expensive, but still possible. Of course, this requires a development effort to set up, and a maintenance effort to keep the static data in sync with the dynamic data, not to mention additional disk space.

Second, the search engine can mine the deep web using a mixture of heuristics and manual input. Bright Planet29 and Deep Web Technologies30 are two companies offering to make deep web content searchable. The techniques include identifying pages with form fields, allowing search administrators to set up stored queries for each form page, and cataloging existing deep websites with useful queries. There is no silver bullet here – there is room for huge improvement, probably involving standards for a web page to identify itself as a deep web portal, and to give hints to the crawler on how to extract the deep web content behind that portal.
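The first of these techniques, identifying pages with form fields, can be sketched with Python's standard `html.parser`. The sample page and the class name `FormFinder` are hypothetical, and we make no claim about how any particular vendor does this. The sketch simply lists each form's action and named input fields, which is the raw material a search administrator would need in order to set up stored queries.

```python
from html.parser import HTMLParser


class FormFinder(HTMLParser):
    """Records the action and named fields of every <form> on a page."""

    def __init__(self):
        super().__init__()
        self.forms = []        # one dict per form found
        self._current = None   # the form currently open, if any

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "form":
            self._current = {"action": attrs.get("action", ""), "fields": []}
            self.forms.append(self._current)
        elif tag in ("input", "select", "textarea") and self._current is not None:
            name = attrs.get("name")
            if name:  # unnamed controls (e.g. a bare submit button) are skipped
                self._current["fields"].append(name)

    def handle_endtag(self, tag):
        if tag == "form":
            self._current = None


# Hypothetical flight-search page.
PAGE = """
<html><body>
<form action="/flights/search" method="post">
  <input type="text" name="origin">
  <input type="text" name="destination">
  <select name="cabin"><option>Economy</option></select>
  <input type="submit" value="Search">
</form>
</body></html>
"""

finder = FormFinder()
finder.feed(PAGE)
finder.close()
print(finder.forms)
```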

18.4 Finding Stuff at Work – Enterprise Search

The Google phenomenon has had a knock-on effect in other areas where people who are not necessarily technically minded need to search for documents (and structured data). Everyone, it seems, has used Google to search the web and wants (and expects) all their search experiences to be just as simple, fast, and accurate as their web search experience. This applies especially to anyone who needs to be able to find documents and data in an enterprise (everyone, it seems, is a “knowledge worker” nowadays).

This expectation seems reasonable enough – if Google can find what I’m looking for out of the bazillion pages on the web, why can’t my enterprise search find the competitive analysis whitepaper a colleague wrote last year? But there are a number of qualitative differences between web search and enterprise search.

• Some searches are easier, not harder, when you have a bazillion documents to choose from. When you go to the web and search for “javascript,” there is a wealth of good information out there to help you dash off a piece of javascript to liven up your web page. There may be thousands of results that would all work equally well for your particular needs. But in an enterprise search, you are often looking for a particular document, and that’s the only result that will work for you.

• Link analysis, described briefly in Section 18.3.1, rarely applies in enterprise search. Most enterprise documents are stored in file systems or databases, where there is no URL to examine, no links to count, no link text to read.

• In enterprise search, there is some kind of access restriction (security) on most documents. In web search, there is no concept of security – you search only across publicly available information. In an enterprise, users want their search results to show all the documents they have access to (personally, or by virtue of membership of a group or a role), and only those documents.
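The security requirement in the last point can be sketched as a filter applied to search hits. This is a simplification under stated assumptions: each hit carries a hypothetical `readers` set of users and groups allowed to see it, and group membership comes from a hypothetical `GROUPS` table. Real systems must also deal with roles, inherited permissions, and permissions that differ from source to source.

```python
# Hypothetical group memberships.
GROUPS = {
    "alice": ["group:marketing", "group:everyone"],
    "bob": ["group:everyone"],
}

# Hypothetical hits, each listing who may read the document.
HITS = [
    {"title": "Competitive analysis whitepaper", "readers": {"group:marketing"}},
    {"title": "Payroll export", "readers": {"group:hr"}},
    {"title": "Cafeteria menu", "readers": {"group:everyone"}},
    {"title": "Draft for Bob", "readers": {"bob"}},
]


def visible_results(hits, user, groups_of):
    """Keep the hits readable by the user personally or through a group."""
    principals = {user} | set(groups_of.get(user, ()))
    return [hit for hit in hits if principals & hit["readers"]]


for user in ("alice", "bob"):
    titles = [hit["title"] for hit in visible_results(HITS, user, GROUPS)]
    print(user, titles)
```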

On the other hand, enterprise search is bounded, and the enterprise has (at least in theory) complete control over location, format, and metadata of every document.31 There is huge potential here for an enterprise search solution that combines the “automatic” high-quality search of Google with the metadata available to an enterprise. The successful enterprise search solution must also be able to search all the different sources in an enterprise – file systems, document repositories, e-mail servers, calendars, applications, etc. (the deep enterprise web), and it must respect the various styles and levels of security in the enterprise.

Two companies that have already declared ambitions in this area are Google and Oracle, an interesting pair because they represent opposite starting points. Google is trying to leverage its brand name and technology in web search by selling the Google search appliance.32 Oracle is trying to leverage its reputation and expertise in databases and document archives with a product called, at the time of writing, Oracle Enterprise Search.33

18.5 Finding Other People’s Stuff – Federated Search

Another area with potential for enormous impact over the next few years is that of federated search. If you want to search data from lots of different sources – websites, databases, document repositories, etc. – you can crawl each of those sources in turn, creating one large index, and build a global search. Alternatively, you can take advantage of the fact that many of those sources already have a local search capability, and “federate out” the search to those sources in parallel, merging the results into a final results set. This last step is famously difficult if the search involves full-text retrieval, for there is no way to merge the relevance of results from multiple sources even when the search at each source is executed using the same search engine. When different search engines are used, even results from the same source are not comparable. (See Chapter 13, “What’s Missing?”)
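The fan-out and the merging problem can both be seen in a small sketch. The two "sources" below are hypothetical functions that return canned results with scores on very different scales. The parallel dispatch uses Python's standard `concurrent.futures`. The min-max rescaling shown is only one crude heuristic, chosen by us for illustration, and it does not make relevance scores genuinely comparable.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical local search functions; each uses its own scoring scale.
def search_wiki(query):
    return [("wiki:xquery-intro", 0.92), ("wiki:sql-xml", 0.40)]


def search_mail(query):
    return [("mail:re-xquery-draft", 870.0), ("mail:lunch", 12.0)]


SOURCES = {"wiki": search_wiki, "mail": search_mail}


def federate(query, sources):
    """Send the query to every source in parallel; return hits per source."""
    with ThreadPoolExecutor(max_workers=len(sources)) as pool:
        futures = {name: pool.submit(fn, query) for name, fn in sources.items()}
        return {name: future.result() for name, future in futures.items()}


def normalize(hits):
    """Rescale one source's scores to the range 0..1 (min-max heuristic)."""
    if not hits:
        return []
    scores = [score for _, score in hits]
    low, high = min(scores), max(scores)
    span = (high - low) or 1.0
    return [(doc, (score - low) / span) for doc, score in hits]


def merge(per_source, rescale=False):
    """Combine all hits into one list ordered by (optionally rescaled) score."""
    merged = []
    for hits in per_source.values():
        merged.extend(normalize(hits) if rescale else hits)
    return sorted(merged, key=lambda hit: hit[1], reverse=True)


results = federate("xquery", SOURCES)
naive = merge(results)
rescaled = merge(results, rescale=True)
print([doc for doc, _ in naive])
print([doc for doc, _ in rescaled])
```

In the naive merge, the irrelevant `mail:lunch` hit outranks the best wiki hit simply because the mail source scores on a larger scale. Rescaling repairs that particular case, but it also pins the weakest hit from every source to zero regardless of its real quality, which is why comparable relevance remains an open problem.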

This is an area of enormous potential – imagine moving from a world where every search engine needs to crawl and index every source, to a world where each source is indexed locally. In this federated world, searches across many sources are done in parallel, each source searching itself on behalf of each searcher. A number of attempts were made in the 1990s to get this kind of collaborative search off the ground, the most famous being the Harvest project. For now, the single universal index approach has won out on the web; in the enterprise, federated search is hampered by the lack of standards. But the benefits are enormous:

• Parallel query across any number of sources – degree of parallelization = the number of sources.

• No duplication of effort – each source is “crawled” and indexed only once, which leads to cost savings across an enterprise.

• All data is accessible – each source can crawl and index in the way most appropriate to that source. Deep web data can be made accessible by (rather than from) the source.

To make this work, we need standards in:

• Query language (of course) – XQuery 1.0 is a good first pass at a standardized definition of the syntax and semantics of a query language that can query (the XML representation of) any data.

• Full-text query – as you read in Chapter 13, XQuery 1.0 does not have any full-text capability (though work on XQuery Full-Text is well under way, with several Public Working Drafts already published, so there is hope here). The queries that are passed from federator to source must be full-text queries.

• Result sets – the result passed back from source to query federator must be in a standard format. The syntax (probably an XML schema) and semantics need to be standardized.

• Relevance – the relevance of results should be comparable across sources, so that the federator can merge and order the results correctly. This is hard!
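
To see why the relevance requirement is hard, consider the simplest thing a federator could do: rescale each source’s scores to a common range and sort. The sketch below does exactly that, using min-max normalization. This is our own choice of a naive heuristic for illustration, not a recommended or standardized technique, and the two result sets, their score scales, and the function names are made up.

```python
def normalize(hits):
    """Rescale one source's scores to the range [0, 1] (min-max normalization)."""
    if not hits:
        return []
    scores = [hit["score"] for hit in hits]
    lowest, highest = min(scores), max(scores)
    span = highest - lowest
    return [
        # If every hit has the same score, treat them all as top hits.
        {**hit, "score": (hit["score"] - lowest) / span if span else 1.0}
        for hit in hits
    ]


def merge(result_sets):
    """Normalize each source's hits separately, then sort them together."""
    merged = [hit for hits in result_sets for hit in normalize(hits)]
    return sorted(merged, key=lambda hit: hit["score"], reverse=True)


# Hypothetical result sets: source A scores on a 0-1000 scale, source B on 0-1.
SOURCE_A = [{"title": "a1", "score": 900}, {"title": "a2", "score": 300}]
SOURCE_B = [
    {"title": "b1", "score": 0.8},
    {"title": "b2", "score": 0.2},
    {"title": "b3", "score": 0.5},
]

if __name__ == "__main__":
    for hit in merge([SOURCE_A, SOURCE_B]):
        print(hit["title"], round(hit["score"], 2))
```

The weakness is easy to spot. After normalization, the best hit from every source scores 1.0, whether that source holds an excellent match or only a poor one. The scores now share a range, but they still do not share a meaning.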

18.6 Finding Services – WSDL, UDDI, WSIL, RDDL

So far we have discussed a number of different kinds of data that you might want to find. The web also offers the possibility of finding services.

A web service is a service provided over the web. Let’s say we want to make our movies database available to anyone with a website – we could define a web service that allows anyone to query for information (director, producer, rating, etc.) about any movie. The vision is that web services will proliferate in the same way as web pages, so that every operation you might want to perform – finding information about movies, calculating the current time in some city, converting from one currency (or language) to another, etc. – could be done by some web service somewhere. When creating a website, or even a web service, you would have access to thousands of existing web services on which to build. Let’s look at an example, then review what’s available today to help achieve this vision.

First, let’s suppose that we have built a web service that takes in a movie title and gives back the director, producer, rating, and reviews for that movie (movie search). Next, let’s suppose that you want to build a website selling books and movies and CDs. To attract people to your site (and to encourage them to buy stuff once they get there), you offer a host of information around everything you sell. Your customer types in the title of a movie, and you display a page with links to director, producer, rating, and reviews. When the customer clicks any of these links, the software behind your website merely finds the cheapest available web service that will deliver that information. It could also plug web services together – if your customer is browsing in French, it could use one web service to get movie reviews, and another to translate those reviews into French. During the ordering process, your website might use other web services to calculate currency exchange rates, shipping costs, local applicable taxes, etc. This is the dream of reusable (open source) software – that any piece of functionality needs to be written only once – but applied in real time.

Now let’s look at the standards that need to be in place to make this all work. First, let’s assume that you know that the movie search service exists. Your website software needs to know how to invoke the movie search, what to send it, and what to expect back. WSDL34 (the Web Services Description Language) describes exactly that – a standard way to describe, in XML, the API to a web service. If we publish a WSDL document for movie search, you know everything you need to know to use that service.
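
To make this concrete, here is a sketch of how a program might read a WSDL document and discover what operations a service offers. The WSDL fragment is our own invention: it is heavily abbreviated (no types, bindings, or service address), and the service name, the example.com namespace, and the searchByTitle operation are hypothetical. Only the WSDL 1.1 namespace and the definitions/portType/operation structure come from the WSDL specification.

```python
import xml.etree.ElementTree as ET

WSDL_NS = "http://schemas.xmlsoap.org/wsdl/"  # WSDL 1.1 namespace

# Hypothetical, heavily abbreviated WSDL for the movie search service.
MOVIE_SEARCH_WSDL = """\
<definitions name="MovieSearch"
    targetNamespace="http://example.com/moviesearch"
    xmlns:tns="http://example.com/moviesearch"
    xmlns="http://schemas.xmlsoap.org/wsdl/">
  <message name="SearchRequest"/>
  <message name="SearchResponse"/>
  <portType name="MovieSearchPortType">
    <operation name="searchByTitle">
      <input message="tns:SearchRequest"/>
      <output message="tns:SearchResponse"/>
    </operation>
  </portType>
</definitions>
"""


def list_operations(wsdl_text):
    """Return (operation name, input message, output message) for each operation."""
    root = ET.fromstring(wsdl_text)
    operations = []
    for port_type in root.findall(f"{{{WSDL_NS}}}portType"):
        for op in port_type.findall(f"{{{WSDL_NS}}}operation"):
            inp = op.find(f"{{{WSDL_NS}}}input")
            out = op.find(f"{{{WSDL_NS}}}output")
            operations.append((
                op.get("name"),
                inp.get("message") if inp is not None else None,
                out.get("message") if out is not None else None,
            ))
    return operations


if __name__ == "__main__":
    # Prints: [('searchByTitle', 'tns:SearchRequest', 'tns:SearchResponse')]
    print(list_operations(MOVIE_SEARCH_WSDL))
```

A complete WSDL document would also say what the request and response messages contain and where to send them, which is what lets your website software invoke a service it has never seen before.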

So how would you find out that the movie search web service exists? There are several possible ways to discover a web service. First, you could look in a web services directory (a sort of yellow pages, or an LDAP35 server, for web services). UDDI36 (Universal Description, Discovery, and Integration) describes a standard way to publish a directory of web services, again as XML. The standard includes an XML Schema for such directories. Both IBM37 and Microsoft38 have created UDDI business registry nodes. They both have simple user interfaces (UIs) allowing a search for a web service. Using Microsoft’s search, we found a web service that claims to “Search web services using all UDDI registries.” This looks promising! Since both the UDDI entries and the web services they refer to are stored in XML, we would expect XQuery to be used in implementing services search.

An alternative to this centralized, directory lookup method is for each website to “publish” its web services, and make details available upon request. The specification of WSIL (Web Services Inspection Language),39 which “complements UDDI by facilitating the discovery of services available on Web sites, but which may not be listed yet in a UDDI registry,”40 came out of a collaboration between IBM and Microsoft.41

While UDDI is a centralized directory for web services, and WSIL (and DISCO) operate at the web server (site) level, RDDL (Resource Directory Description Language)42 has been touted as a way of publishing a link to a web service inside a web page. RDDL provides a way of adding information about a link on a web page by adding attributes to the XHTML <a> element, such as nature, purpose, and resource. So you could do a web search and find a page with a reference to a web service in some <a> element.
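
A crawler could pick out such annotated links with very little code. The following sketch rests on several assumptions of ours: that the nature and purpose attributes live in a namespace with the URI http://www.rddl.org/, that a link to a WSDL document would use the WSDL namespace as its nature, and that the page is well-formed XHTML. The page content, the purpose URI, and the function name are hypothetical.

```python
import xml.etree.ElementTree as ET

XHTML_NS = "http://www.w3.org/1999/xhtml"
RDDL_NS = "http://www.rddl.org/"  # assumed namespace for the RDDL attributes

# Hypothetical XHTML page: one ordinary link, one link annotated for RDDL.
PAGE = """\
<html xmlns="http://www.w3.org/1999/xhtml" xmlns:rddl="http://www.rddl.org/">
  <body>
    <p><a href="http://example.com/about.html">About us</a></p>
    <p><a href="http://example.com/moviesearch.wsdl"
          rddl:nature="http://schemas.xmlsoap.org/wsdl/"
          rddl:purpose="http://example.com/purposes#service-description">Movie
          search service</a></p>
  </body>
</html>
"""


def find_annotated_links(xhtml_text):
    """Return the links on a page that carry an RDDL nature attribute."""
    root = ET.fromstring(xhtml_text)
    links = []
    for anchor in root.iter(f"{{{XHTML_NS}}}a"):
        nature = anchor.get(f"{{{RDDL_NS}}}nature")
        if nature is not None:  # skip ordinary, unannotated links
            links.append({
                "href": anchor.get("href"),
                "nature": nature,
                "purpose": anchor.get(f"{{{RDDL_NS}}}purpose"),
            })
    return links


if __name__ == "__main__":
    for link in find_annotated_links(PAGE):
        print(link["href"], link["nature"])
```

The ordinary “About us” link is ignored; only the link that declares what kind of resource it points to is reported, which is what lets a page-level search distinguish a web service from just another web page.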

Publishing and finding (discovering) web services is a problem that, at the time of writing, does not have a complete solution, though all three areas discussed in this section show promise. UDDI seems an obvious way to publish and find web services. It is seen by many as overly complex, while others think it does not go far enough and would like to see UDDI grow into a full-blown directory service (on the lines of LDAP), capable of holding information such as legal disclaimers, privacy policy, etc. Uptake of UDDI has been slow, partly because there are two “central” repositories (at IBM and Microsoft). DISCO and WSIL (at the web server level) and RDDL (at the page level) also show promise, but have seen little uptake. What is needed is a universal search that will find web services programmatically, searching all these forms of web service publishing.

18.7 Finding Stuff in a More Natural Way

So far in this chapter we have discussed what is searched (web pages, applications and database data, services) but not how it is searched. We have already alluded to the limitations of keyword-based web search. Languages such as XQuery provide rich tools to programmers who build user interfaces, but there is much work to be done on the human interface side of search. Tim Bray provides some motivation for better user interfaces in his “On Search” blog.43 Certainly the Google paradigm – type in a few keywords and get a long list of URLs to click – is severely limited.44 Here are some of the search-user interface technologies that are entering the mainstream.

• Smart browsing – lets the user select from a number of different views of the data, presenting each view as a hierarchy. Lets the user “drill down” into a hierarchy, “drill across” to related hierarchies, and combine browsing and searching.

• Classification and categorization – shows search results (or all available data) categorized according to some ontology. Dynamic categorization of search results allows a combination of searching plus browsing your own custom hierarchy.

• Visualization – shows results in a number of ways, not just a flat list. Result sets can be visualized as hyperbolic trees, heat maps, topographic maps, and so on.45

• Personalization – when you do a search on the web, the search engine gives you the same result it gives everybody else. But that is changing, as search applications gather more data about you – starting with what you search for and which results you look at.

• Context – search applications are beginning to take notice of the context of the search. If you are currently building a spreadsheet that shows sales of database software by region, then when you look for “California” the search engine should show you the latest database software sales for California, not restaurants or airline seats.

The next big challenge in search is to move the burden of expressing a search (and dredging through possible results) from the user to the computer. Instead of asking the user to formulate and run the query that will return precisely the right results, the user should be able to press a button that says “show me something that I would find useful or interesting,” or just “Help.” The result should be an easily-navigable map of information related to the user’s current task.

18.8 Putting It All Together – The Semantic Web+

In this chapter we have indulged in some visioning, imagining what the ultimate search engine might be able to do. It is already a cliché that search has changed most people’s daily lives, and enhanced their experience of the World Wide Web. Efficient, accurate, reliable search is now changing the way many of us do our jobs – from Google’s Gmail,46 which rejects the concept of message foldering in favor of a single folder plus search, to the rise of enterprise search software that will allow us to do the same for all the information we consume (mail, files, calendar appointments, database data, news, websites). But search still has a long way to go – most search is still based on either a highly-structured language such as SQL or XQuery, or on keywords plus a small amount of intelligence. We expect that, in the future, you will be able to find information, goods, and services without having to think about how or where to ask for them. The world will host a true semantic web – a web of rich, meaningful content that you can find and consume without asking for it. But we will not predict when.

1This is deliberately vague. There has been a lot of work done to define objective measurements for the results of this kind of search – see, e.g., the TREC (Text REtrieval Conference) home page at: http://trec.nist.gov/. Also, some people would argue that “the pages you are looking for appear in the top few hits” is misleading – since Google is searching so many pages, it’s more accurate to say “the top few hits are acceptable results,” since there may be many pages that would satisfy your search.

2But see also Section 18.3.4, “The Deep Web.”

3Those nice people at Google even show you which pages link to the target page.

4Sergey Brin and Lawrence Page, The Anatomy of a Large-Scale Hypertextual Web Search Engine (Stanford, CA: Computer Science Department, Stanford University, 1997). Available at: http://www-db.stanford.edu/pub/papers/google.pdf.

5Of course, XHTML allows the inclusion of metadata from other namespaces, so an author can include any metadata, including RDF, as part of her web page. We believe this is rare today, but growing. Of particular interest are some experiments allowing web users to add metadata to pages they view – Adam Bosworth (http://www.adambosworth.net/) is a proponent of this sort of “sloppy” data on the web.

6Ideally, this would be an honest attempt by authors to make sure that their page shows up in the results of appropriate searches. It is well known, however, that some authors try to trick search engines into showing their pages on any results list, whether or not they belong there. This practice is sometimes called search engine spoofing.

7Yet another reason – if one were needed – to not use “Click here” for link text.

8Tim Berners-Lee, Weaving the Web: The Original Design and Ultimate Destiny of the World Wide Web (New York: HarperCollins, 2000).

9We were surprised to find the first result from Google to a search for “bat” was none of these – it was “British American Tobacco.”

10There are, of course, differences due to type – the integer 42 and the string 42 are different.

11Tim Berners-Lee, Uniform Resource Identifiers (URI): Generic Syntax (August 1998). Available at: http://www.isi.edu/in-notes/rfc2396.txt.

12The role of fragment identifiers (which often appear at the end of a URL, beginning with a “#” character, to denote a position within the page) has been a matter of hot debate within the W3C and the XML community. Some believe the fragment identifier should be considered a part of the URL, others that the fragment identifier is an addition to the URL. The RDF specs talk about a URIRef as their basic building block, where a URIRef is a URI with an optional fragment identifier at the end.

13Note that RDF does not provide a vocabulary (valid set of terms), even for predicates.

14This is similar to the use of a URI as a namespace name or a schema location hint.

15RDF Vocabulary Description Language 1.0: RDF Schema (Cambridge, MA: World Wide Web Consortium, 2004). Available at: http://www.w3.org/TR/rdf-schema/. See also Chapter 5 of the RDF Primer, at: http://www.w3.org/TR/2004/REC-rdf-primer-20040210/#rdfschema.

16The OWL definition of an ontology is given in OWL Web Ontology Language Use Cases and Requirements (Cambridge, MA: World Wide Web Consortium, 2004). Available at: http://www.w3.org/TR/webont-req/#onto-def.

17Probably the best-known example of a taxonomy is the scientific classification of living things, the Linnaean taxonomy. A taxonomy often describes a system of classification.

18The relationships between concepts in an ontology often form a network rather than a tree.

19The even more subtle distinction between a taxonomy – a hierarchy of terms – and a thesaurus – a hierarchy of words – is left as an exercise for the reader.

20OWL Web Ontology Language Overview (Cambridge, MA: World Wide Web Consortium, 2004). Available at: http://www.w3.org/TR/owl-features/.

21http://www.w3.org/2001/sw/DataAccess/.

22SPARQL Query Language for RDF (Cambridge, MA: World Wide Web Consortium, 2005). Available at: http://www.w3.org/TR/rdf-sparql-query/.

23SQL veterans will recognize this recursive acronym as a nod to the SQL acronym, originally expanded to Structured Query Language or Standard Query Language, and now accepted to be SQL Query Language.

24Jonathan Robie, The Syntactic Web (XML 2001 Conference). Available at: http://www.idealliance.org/papers/xml2001/papers/html/03-01-04.html.

25We should point out that the semantic web folks claim a small but growing number of real-world implementations: see Testimonials for W3C’s Semantic Web Recommendations – RDF and OWL (Cambridge, MA: World Wide Web Consortium, 2004). Available at: http://www.w3.org/2004/01/sws-testimonial.

26Codifying meaning in a machine-understandable form, and translating from human language to and from that form, has been a goal of the AI community for many years: see, e.g., Terry Winograd, Understanding Natural Language (San Diego, CA: Academic Press, 1972).

27http://www.bbc.co.uk/comedy/guide/articles/n/nevermindthequal_1299002290.shtml.

28http://www.deepwebresearch.info/ is a website that tracks deep web research.

29Michael K. Bergman, The Deep Web: Surfacing Hidden Value (Sioux Falls, SD: Bright Planet, 2001). Available at: http://www.brightplanet.com/technology/deepweb.asp.

30http://www.deepwebtech.com/.

31Anyone who has been involved in an enterprise search or content management project knows how difficult it is to get authors to add a name and date to each document, let alone enforce a long list of required metadata.

32http://www.google.com/enterprise/.

33http://www.oracle.com/technology/products/text/files/trydoingthiswithgoogleowsf04.zip.

34Web Services Description Language (WSDL) 1.1, W3C Note (Cambridge, MA: World Wide Web Consortium, 2001). Available at: http://www.w3.org/TR/wsdl.

35Lightweight Directory Access Protocol – see http://rfc.net/rfc1777.html.

36UDDI Version 3.0.2, UDDI Spec Technical Committee Draft, Dated 20041019. Available at: http://uddi.org/pubs/uddi-v3.0.2-20041019.htm.

37https://uddi.ibm.com/ubr/registry.html.

38http://uddi.microsoft.com/default.aspx.

39Specification: Web Services Inspection Language (WS-Inspection) 1.0, IBM, Microsoft (November 2001). Available at: http://www-106.ibm.com/developerworks/webservices/library/ws-wsilspec.html.

40Peter Brittenham, An Overview of the Web Services Inspection Language (June 2002). Available at: http://www-106.ibm.com/developerworks/library/ws-wsilover/index.html.

41Microsoft also has its own technology for publishing and discovering web services as part of the .NET framework – DISCO. See http://msdn.microsoft.com/msdnmag/issues/02/02/xml/.

42Jonathan Borden and Tim Bray, Resource Directory Description Language (RDDL) (March 2001). Available at: http://www.rddl.org/. See also Jonathan Borden and Tim Bray, Resource Directory Description Language (RDDL) (September 2003). Available at: http://www.tbray.org/tag/rddl4.html.

43Tim Bray, On Search: UI Archeology (2003). Available at: http://tbray.org/ongoing/When/200x/2003/07/04/PatMotif.

44Think Tom Cruise in the movie Minority Report.

45For an overview of visualization of search results, see: Information Visualization with Oracle 10g Text, January 2004. Available at: http://www.oracle.com/technology/products/text/pdf/10g_infovis_vl_0.pdf.