CHAPTER 6

ALTERNATIVE WORLD SCENARIOS TO ASSESS REQUIREMENT STABILITY

David Bush

UK National Air Traffic Services Ltd, London, UK

ALL SYSTEMS change over their lifetimes. Unless you are fortunate enough to be responsible for delivering a very small system, with a limited life expectancy, people will want to change your system. This will almost certainly happen while you are developing and delivering the system, and it will definitely happen when you are operating it—unless it is one of the significant numbers of systems that are delivered and never used—which is hardly a consolation.

There are really only two things you can do about this: you can ignore it and hope that you are moved onto another system before anyone thinks too much about changing the system; or you can specify and build your system so that it is robust in the face of the changes it faces, that is, so that change is not needed, so that it is easy to achieve, and so that it does not detract from the operation of your system.

If you are bold enough to take the responsible approach to this problem, this chapter offers you a mechanism for identifying what changes might occur over your system's life cycle. Armed with that knowledge, you have a chance of future-proofing your system, and probably getting a promotion for your successor.

APPLICABILITY

Using scenarios of the system environment to identify possible requirement changes requires an investment of time, both to establish the scenarios and to carry out the assessment. As change is an effect that accrues over time, it is not worthwhile carrying the overhead for a small project with a short expected lifetime. Where it is worthwhile is in

- Security- or Safety-related systems, where changes to the system are likely to seriously jeopardise the effectiveness of the system, and where redesign and revalidation of the system is inevitably expensive.

- Architecturally complex systems, where in-service changes will rapidly compromise the architecture and impair system performance.

- Long lifetime systems, where the long term changes that the system will face are difficult to predict by extrapolating current trends.

- Business critical systems that are highly sensitive to external factors, such as international agreements, government policy and regulation, and/or public attitudes and opinions.

POSITION IN THE LIFE CYCLE

The approach applies across the life cycle, but is most relevant in the darker box, and somewhat relevant in the lighter ones.

![]()

KEY FEATURES

Strategic planners in business and government settings have long acknowledged the benefit of exploring possible future environments. Scenarios are developed that describe a number of possible future worlds in which the business might exist, and these are used to create robust plans and structures for the business in the future. Similar environmental scenarios can be used to describe the environment surrounding a planned future system. Emerging requirements are then analysed in each of those possible future worlds to identify which requirements are likely to change. Requirements Engineers and System Architects can then use these evaluations to make judgements about what requirements should be specified, and how they might be fulfilled within an architecture that can accommodate the possible changes identified.

STRENGTHS

This approach has a number of benefits over other approaches in identifying requirements changes. Firstly, it is proactive and seeks to predict possible future change, rather than simply measure the change that has taken place through change metrics. Secondly, it addresses change as a system life-cycle issue, unlike risk management based approaches—which tend to concentrate only on project risk. Finally, it provides a more creative approach to identifying possible risks to stability, and so overcomes some of the decision-making biases (concentrating on the familiar, anchoring on a single idea etc.) that hamper many approaches in identifying future problems.

WEAKNESSES

It is important to acknowledge that this approach only addresses the identification of possible future change. Its output is a list of potentially unstable requirements, and the way in which they might be unstable. Requirements Engineers and System Architects will then have to start earning their money by deciding how concerned they are about each possible change (is it likely, and would it have a big impact?), and then deciding what to do about it (architect in a way that accommodates the change, design the change in from the start, etc.). A project without a strong systems engineering approach may well find such activities daunting. Even well-engineered projects, which would be carrying out such activities anyway, will find that the scale and scope of the work have increased.

Indeed, a general increase in the amount of work conducted is one effect of this approach. (As Ada programmers say, ‘Slow is good’.) Time needs to be spent in investigating and developing the alternative world scenarios, and then in evaluating the requirements against each of them. While an accurate traceability structure in the requirements will limit the amount of assessment that needs to be carried out, nonetheless this is additional work, which the project must fund.

This is not an approach suitable for all projects; but on the sort of projects identified above, it has the capacity for identifying significant potential requirement changes, and giving developers a chance to address them while it is still cheap to do so.

TECHNIQUE

Change in Systems

Experience, and the extensive work of Manny Lehman, have taught us of the troubles that afflict large-scale, user-involved systems in respect of change. For our purposes, change is driven by external factors in two key ways (Lehman and Belady 1985):

- Continuing change: In regard to the assumptions made about the performance of systems external to the system under discussion, even if these are entirely correct at the time, they eventually become compromised as the environment of the system changes.

- Continuing growth: In regard to the functional capability of systems, it must be continually increased to maintain user satisfaction over the system lifetime, and so there are continually emerging new and changed requirements.

In other words, change can occur both in our assumptions about the world in which our system will exist and in the requirements the system is expected to fulfil.

Requirements and Assumptions

Jackson (1995) shows that requirements and assumptions are essentially related in the construction of any system—that it is the combination of specified behaviour of the machine and the assumptions about the world, taken together, that guarantee the achievement of the requirements.

In the normal course of system development then, we may record the requirements and the assumptions we expect to depend on, but this is always a view at a point in time. We usually choose this point in time to be the moment at which the system is to enter service: at that time each requirement can be shown to be met by a combination of machine behaviour and assumptions about the environment.

The Effect of Time

But as time moves on, requirements and assumptions change. As they do so, our system must respond, either by changing the specification (and hence the design), or by accepting a compromise in the functionality of the system (existing requirements can no longer be met, new requirements are ignored). At some future moment, then, requirements and assumptions may no longer be valid. This gives us a framework for assessing the risk that requirements will become invalid, and so for forming a view on the stability of requirements.
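The relationship between specification, assumptions and requirements, and the way time erodes it, can be written compactly. The notation below is only a sketch: the symbols $S$ (specified machine behaviour), $A$ (assumptions about the world), $R$ (requirements) and the time index $t$ are labels introduced here for illustration, not notation taken from the cited works.

```latex
% Requires amsmath (for \text) and amssymb (for \nvdash).
% At entry into service (time t_0) the specification and the assumptions
% together guarantee the requirements:
S,\; A_{t_0} \;\vdash\; R_{t_0}
% At some later time t the assumptions and requirements have drifted,
% and it may be that the same specification no longer suffices:
\text{for some } t > t_0: \qquad S,\; A_{t} \;\nvdash\; R_{t}
```

Read this way, a stability assessment asks which members of $A$ and $R$ are most likely to differ between $t_0$ and $t$.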

Assessing Requirements Stability

There are two prerequisites for such an assessment:

- the set of requirements and assumptions (at whatever stage of refinement—business, user, system); and

- scenarios—static, snapshot descriptions of the alternative future worlds.

I assume that readers are familiar with developing and documenting requirements and assumptions (if not, see the recommended books at the end of this chapter). The alternative world may, however, be a less familiar example of the myriad types of scenario used in systems development—and deserves some explanation.

Alternative World Scenarios

The use of what I call here Alternative World Scenarios seems to trace to military planning carried out at the RAND Corporation after WW2. They were used to analyse decisions against alternative enemy courses of action. Herman Kahn was influential in popularising this concept and migrated it into the business world. Perhaps the best-known user of this type of scenario was Royal Dutch/Shell.

It is accurate to call these ‘World’ scenarios because they describe the environment that surrounds the system under examination, in our case a software intensive system. It is also appropriate to call them ‘Scenarios’ as they are creative prose descriptions of possible future environments (Bush et al. 2001).

Generating Scenarios

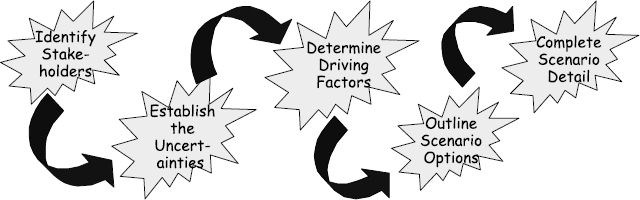

Space precludes a full and detailed description of the mechanism for generating these scenarios (see the recommended reading section for more detailed coverage). However, an outline of the approach is worthwhile to familiarise readers with a perhaps unfamiliar style of scenario. Figure 6.1 shows the key stages.

- Firstly, a set of knowledgeable stakeholders needs to be identified who have some knowledge of aspects of the domain in question.

- Secondly, by interview or group work these stakeholders are used to identify a set of uncertain factors likely to affect the system in question.

FIGURE 6.1 Key stages in generating scenarios

- Thirdly, prioritisation techniques are used to identify the most influential and important of these factors.

- Fourthly, the most important factors are used to identify a small number (2–4) of different possible world states.

- Finally, these outline world states are described in as vivid a detail as possible, and fleshed out with creative prose descriptions (based on the uncertain factors identified) into narrative descriptions of the Alternative Future Worlds.

The result of this activity is a set of prose descriptions of alternative future worlds. By asking the requirements analysts and/or stakeholders to read, and immerse themselves in, these worlds, we can get them to creatively assess the stability of their requirements.
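The prioritisation and world-state stages can be sketched in a few lines of Python. This is only one possible reading: the factor names, the 1 to 5 scores, the ranking by importance times uncertainty, and the idea of crossing the two top factors to obtain four world states are all assumptions made for illustration. The chapter itself only asks for a small number (2–4) of world states.

```python
from itertools import product

# Hypothetical uncertain factors gathered from stakeholders:
# (name, importance 1-5, uncertainty 1-5, two contrasting outcomes)
factors = [
    ("Regulation", 5, 4, ("harmonised", "fragmented")),
    ("Technology uptake", 4, 4, ("rapid", "slow")),
    ("Fuel price", 2, 3, ("low", "high")),
]


def top_factors(candidates, n=2):
    """Rank factors by importance x uncertainty (an assumed scheme) and keep the top n."""
    return sorted(candidates, key=lambda f: f[1] * f[2], reverse=True)[:n]


def world_states(chosen):
    """Cross the contrasting outcomes of the chosen factors into outline world states."""
    names = [f[0] for f in chosen]
    outcomes = [f[3] for f in chosen]
    return [dict(zip(names, combo)) for combo in product(*outcomes)]


chosen = top_factors(factors)
states = world_states(chosen)
for state in states:
    print(state)
```

Each resulting dictionary is only an outline; the final stage, writing the vivid prose description of each world, remains a human, creative task.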

Requirements

To effectively assess the stability of the requirements in the long term, I have argued above that it is essential to consider both requirements and assumptions. At a minimum these must be recorded in the requirements documentation in order to individually assess their stability. Preferably, these need to be recorded in such a way that their relationships are explicitly described—in order to understand the effects of changes in requirements or assumptions, one must know how they are related.

It is my opinion that a goal-based model of requirements is the best way to record them, and their interdependencies, and that is the basis of this description and the worked example that follows. However, it is possible, by using satisfaction arguments (for more detail on these, see Alistair Mavin's Chapter 20 on REVEAL) or rich traceability, to document requirements, assumptions and their interdependence in a traditional document hierarchy model. Therefore, wherever appropriate, I will illustrate the equivalent (document hierarchical) approach. Whichever approach is chosen, suitably documented requirements can be assessed for stability using scenarios generated from them.

Assessing Stability

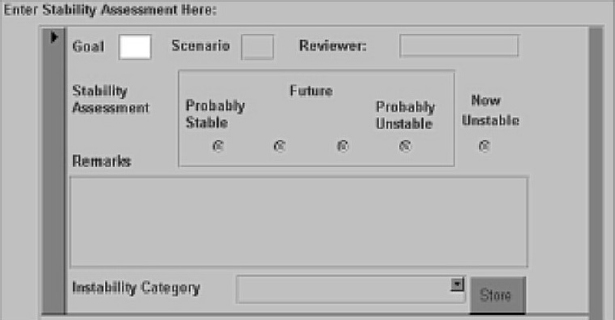

Assessing the stability of the requirements is a relatively mechanical process, where each scenario is taken in turn, read through, and internalised so that the assessors can begin to feel what it would be like to be in that sort of world. Then each of the requirements is considered in turn in the light of that scenario, and in each case an assessment is made of the likely stability. The following information is usefully recorded (Table 6.1):

- Requirement/assumption that prompted the instability.

- The likelihood of the requirement/assumption being unstable.

- The type of instability suggested.

- A brief description of, and rationale for, the assessment.

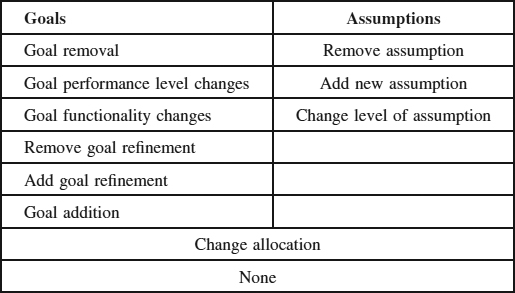

TABLE 6.2 Types of instability

The types of instability referred to (for a goal-based model) could be as shown in Table 6.2.

This process is repeated for each requirement and assumption in each scenario, until all are completed.
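The loop described above, and the information recorded at each step, might be captured as follows. This is a minimal sketch rather than the assessment tool used in the worked example: the `Assessment` record, the `assess_all` function, the integer scale (0 meaning stable, higher meaning more likely to be unstable) and the sample judgements are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assessment:
    scenario: str          # alternative world in which the item was considered
    item: str              # requirement or assumption that prompted the instability
    score: int             # likelihood of instability (assumed scale, 0 = stable)
    instability_type: str  # type of instability suggested
    rationale: str         # brief description of, and rationale for, the assessment


def assess_all(scenarios, items, judge):
    """Take each scenario in turn and consider every item in the light of it.

    `judge` stands in for the human assessor and returns
    (score, instability_type, rationale) for a (scenario, item) pair.
    """
    records = []
    for scenario in scenarios:
        for item in items:
            score, kind, why = judge(scenario, item)
            records.append(Assessment(scenario, item, score, kind, why))
    return records


# Hypothetical judgements; anything not listed is treated as stable.
judgements = {
    ("World A", "R1"): (2, "Goal Functionality Changes", "Alerting route may change."),
}


def judge(scenario, item):
    return judgements.get((scenario, item), (0, "None", "No instability foreseen."))


records = assess_all(["World A", "World B"], ["R1", "R2"], judge)
for record in records:
    print(record)
```

Keeping one record per scenario and item, rather than a single running total, preserves the rationale needed later when individual assessments are reviewed.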

In systems of a significant size, it is useful to carry out this process at a reasonable level of abstraction. For goal-based models this means the highest level of goal, while for a document hierarchy-based system, it means the business or user requirements, initially at least. This helps to prevent the task of assessment from growing excessively.

Reviewing Requirement Stability

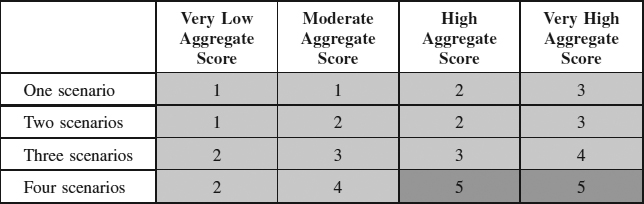

With each requirement/assumption assessed in each of the scenarios, they can be viewed in order of criticality, with the requirements obtaining the highest aggregate score appearing as the most likely to be unstable. It is useful to base this classification on two aspects of the assessment:

- The aggregate score for each requirement/assumption;

- The number of scenarios in which the requirement/assumption was likely to be unstable.

This classification scheme means that adequate emphasis is given to requirements that are slightly unstable in many future worlds, as well as those that are very unstable in one or two. A matrix such as that shown in Table 6.3 can be used to make such a classification.

This provides a severity index for each requirement, which can be used to prioritise the consideration of the potentially unstable requirements and assumptions.
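A two-axis classification of this kind could be sketched as below. The thresholds inside `severity` are purely illustrative assumptions and do not reproduce the matrix in Table 6.3. The function names and sample scores are likewise hypothetical.

```python
from collections import defaultdict


def summarise(scores):
    """scores: iterable of (item, scenario, score).

    Returns {item: (aggregate score, number of scenarios with a non-zero score)}.
    """
    totals = defaultdict(int)
    counts = defaultdict(int)
    for item, _scenario, score in scores:
        totals[item] += score
        if score > 0:
            counts[item] += 1
    return {item: (totals[item], counts[item]) for item in totals}


def severity(aggregate, unstable_count):
    """Assumed stand-in for the classification matrix; thresholds are illustrative."""
    if aggregate >= 6 or unstable_count >= 3:
        return "high"
    if aggregate >= 3 or unstable_count >= 2:
        return "medium"
    if aggregate > 0:
        return "low"
    return "stable"


scores = [
    ("R1", "A", 3), ("R1", "B", 3),                  # very unstable in two worlds
    ("R2", "A", 1), ("R2", "B", 1), ("R2", "C", 1),  # slightly unstable in many
    ("R3", "A", 0),
    ("R4", "A", 2),
]
summary = summarise(scores)
ranked = sorted(summary, key=lambda item: summary[item], reverse=True)
for item in ranked:
    print(item, summary[item], severity(*summary[item]))
```

With these assumed thresholds, R2 is rated as highly as R1 even though its aggregate score is lower, which is the emphasis on requirements that are slightly unstable in many future worlds.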

So far the assessment has made a single pass through the set of requirements and assumptions. Sometimes this will be enough, but it must be recognised that this has only contributed a local view of instability. A more thorough view is possible, if the situation warrants it, by investigating whether the instabilities identified so far suggest or require instabilities elsewhere.

Instability Propagation

Instability propagation allows us to overcome the purely local view we took initially, and establish whether there are any second-order effects that should be addressed. Before considering whether to include this step in a stability assessment activity, the following factors should be taken into account:

How confident are you in the assessments you made with regard to

- The consistency of your scoring;

- The consistency of your assessment of instability between related requirements and assumptions;

- The scope of your instability assessment (was it over all requirements, or just a high-level subset?);

as well as the time and resources available and the severity of the consequences of having only a partial assessment.

Including a propagation stage adds time and effort, but often improves the scoring. If the scope of the assessment was limited (to high-level requirements/goals), and the whole assessment is not going to be repeated at a later requirements stage, propagation is the only mechanism for driving the assessments down to assumptions and goals that can be made operational.

Instability propagation involves the use of propagation heuristics to question, for each goal, if there are other goals that are similarly affected—for example, the deletion of a goal or requirement might suggest the deletion of all the child goals, or derived requirements. The propagation heuristics describe, for each instability type, the likely areas where related goals could be unstable.

When propagating instability, a scoring system identical to that for the original assessment is used, so that at the end of this process each requirement/assumption has both an initial and a propagated assessment. A similar coding scheme to that identified above can then be applied, in order to identify the most critically unstable requirements/assumptions.
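The propagation step can be pictured as a walk along the trace links, guided by a heuristic for each instability type. In the sketch below the goal identifiers, the `EDGES` list and the contents of `HEURISTICS` are hypothetical. Only the idea that deleting a goal suggests looking at its child goals comes from the text. Carrying the score over unchanged is also an assumption: in practice each suggestion would be reviewed by the assessor before a propagated score is recorded.

```python
# Hypothetical goal graph as (parent, child) trace links.
EDGES = [("G1", "G1.1"), ("G1", "G1.2"), ("G1.1", "G1.1.1")]

# Assumed heuristics: for each instability type, which related goals to question.
HEURISTICS = {
    "Goal Deleted": ["children"],
    "Goal Functionality Changes": ["parents", "children"],
}


def related(goal, direction):
    """Return the goals directly linked to `goal` in the given direction."""
    if direction == "children":
        return [child for parent, child in EDGES if parent == goal]
    if direction == "parents":
        return [parent for parent, child in EDGES if child == goal]
    raise ValueError(f"unknown direction: {direction}")


def propagate(initial):
    """initial: {goal: (score, instability_type)} from the first pass.

    Returns {goal: (provisional score, instability_type, source goal)} for goals
    that the heuristics suggest should be re-examined. Initial assessments are
    left untouched, so each goal keeps both an initial and a propagated entry.
    """
    propagated = {}
    for goal, (score, kind) in initial.items():
        for direction in HEURISTICS.get(kind, []):
            for other in related(goal, direction):
                if other not in propagated or score > propagated[other][0]:
                    propagated[other] = (score, kind, goal)
    return propagated


initial = {"G1.1": (2, "Goal Functionality Changes")}
propagated = propagate(initial)
print(propagated)
```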

Using the Stability Assessment Results

Stability assessment results are useful in two aspects of the system development life cycle.

Firstly, they are useful within the requirements development activity in order to assist analysts in selecting between alternative requirements, and in examining any ‘obstacles’ (Potts 1995) or threats to the achievement of requirements or assumptions (e.g. ‘Obstacle Analysis’ (van Lamsweerde and Letier 2000) and Misuse Cases (Sindre and Opdahl 2000); see also Chapter 7 in this volume by Ian Alexander). In this form, the assessment would normally exclude the propagation stage, and make a contribution to the development of better and more robust requirements and assumptions.

Secondly, they are useful at the end of the requirements definition activities. Here propagation activity would normally be useful. In this form, the results of the assessment would be used to inform architectural choices and design constraints (cf. Architecture Based Analysis (Kazman et al. 1996, 1998)).

WORKED EXAMPLE

As I described, the prerequisites of this approach are the requirements, and the scenarios. This worked example will not cover how these are derived, but the next sections introduce them to provide a comprehensive background.

Goals/Requirements for MSAW

This worked example is based on the goals for a Minimum Safe Altitude Warning (MSAW) system. An MSAW system is intended to reduce the number of occurrences of Controlled Flight into Terrain (CFIT) by providing a ground-based early warning of pilots who are flying below the mandated minimum safe altitude.

In essence, the system uses existing external data sources such as radar returns and terrain and obstacle databases as the raw data feed for proximity detection and prediction algorithms. These algorithms then provide warnings to air traffic controllers about potentially hazardous situations, who in turn can warn the pilot.

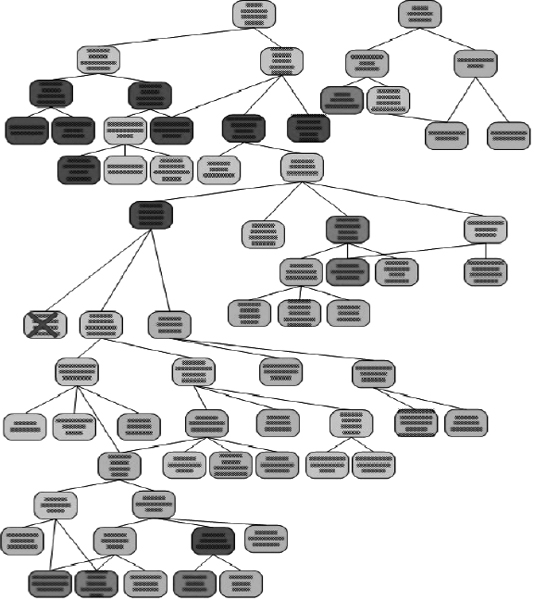

The goal-based model of these requirements is structured like a tree (although technically it should be described as a graph) with the most general and abstract requirements at the top, and the most detailed, operationalisable ones at the bottom.

Each goal is related to one or more parents, which explain ‘Why’ it is there, and one or more children, which explain ‘How’ it will be achieved.
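The ‘Why’ and ‘How’ relationships can be held in a very small data structure. The sketch below is illustrative only: the `GoalGraph` class is hypothetical, and the goal names are loose paraphrases of the MSAW description, not the goals of the actual model. A goal with two parents shows why the structure is a graph and not strictly a tree.

```python
from collections import defaultdict


class GoalGraph:
    """Minimal goal model: parents answer 'Why', children answer 'How'."""

    def __init__(self):
        self._parents = defaultdict(set)
        self._children = defaultdict(set)

    def refine(self, parent, child):
        """Record that `child` is one of the ways in which `parent` is achieved."""
        self._children[parent].add(child)
        self._parents[child].add(parent)

    def why(self, goal):
        """Parent goals, which explain why `goal` is there."""
        return sorted(self._parents.get(goal, ()))

    def how(self, goal):
        """Child goals, which explain how `goal` will be achieved."""
        return sorted(self._children.get(goal, ()))


# Hypothetical goal names, for illustration only.
goals = GoalGraph()
goals.refine("Reduce CFIT occurrences", "Controller alerted to proximity")
goals.refine("Controller alerted to proximity", "Proximity detected")
goals.refine("Controller alerted to proximity", "Proximity predicted")
goals.refine("Proximity detected", "Radar data available")
goals.refine("Proximity predicted", "Radar data available")

print(goals.why("Radar data available"))
print(goals.how("Controller alerted to proximity"))
```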

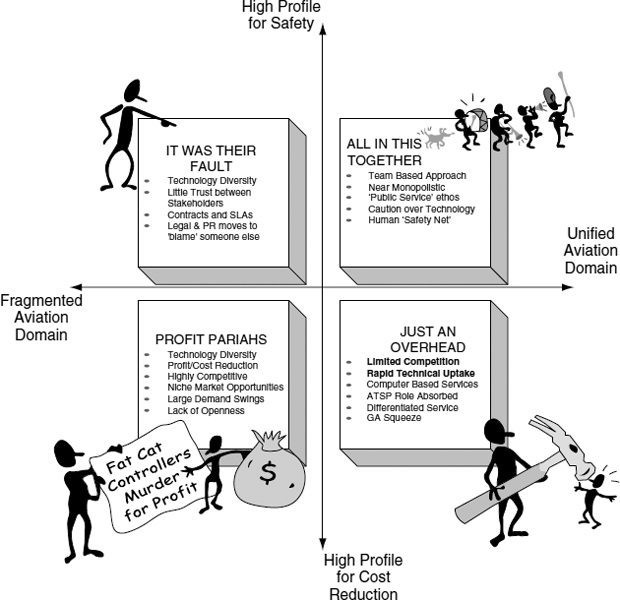

FIGURE 6.2 Air traffic service providers' scenario

In this example, we use a total of four scenarios of the environment surrounding Air Traffic Service Providers. These are the basis on which we can examine the stability of the Goals. These are depicted in Figure 6.2.

Figure 6.3 illustrates one of these scenarios in detail.

Initial Assessment

In the initial assessment stage we examine all the goals, scenario by scenario, scoring each one for its likely stability and instability category, and making any relevant notes, as shown on the screen shot of the assessment tool in Figure 6.4.

For example, in the case of the ‘All in this Together’ scenario, we note that Goal 1 (Controller Alerted to proximity) could well be unstable in this scenario. This is because in this scenario technological advance happens fairly easily, and is fairly rapidly introduced, and this coupled with an emphasis on safety means that it is possible that warnings would no longer be passed through the controller, but could be sent by data-link directly to the pilot. We can therefore note that in the assessment.

Goal 1—Achieve: Controller Alerted to proximity.

(The controller is alerted to the proximity of an aircraft to the ground. This is currently possible by identifying that an aircraft has hit the ground and/or by predicting that it will hit, and then informing the controller.)

Assessment Score: ‘2’ Instability Category: ‘Goal Functionality Changes’

Remarks: ‘Likely that this would be removed altogether and replaced with direct alerting.’

FIGURE 6.3 A scenario

FIGURE 6.4 Stability assessment tool

Once this process is completed for each goal, in each of the four scenarios, we have a set of instability ratings for each goal.

Results Presentation

Prioritising and colour-coding the goals allows us to take an overview of the most and least stable. Figure 6.5 illustrates how this overview can be used to quickly pinpoint the goals of most concern.

In this picture the red goals are the most unstable, and the green ones the most stable. We can therefore concentrate first on those of greatest concern.

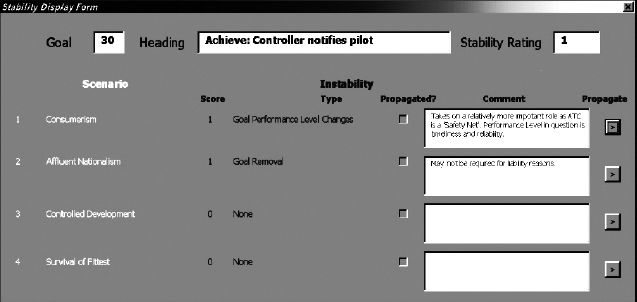

If we then drill down into one of these goals, we can examine the detailed assessments we made in order to make a more considered decision of our assessment. An example of this detail view is shown in Figure 6.6.

On the basis of this information we are in a much better position to decide what we want to do about what we have discovered. For example, can we safely ignore these possibilities? Perhaps we feel that we should make certain architectural or design decisions in order to protect ourselves against them. Perhaps we can use this information to change our requirements.

A final possibility is that we decide we want to examine, in some detail, the effects of this particular instability on other parts of our requirements. In that case, we would carry out the propagation activity.

Propagation

Propagation is the activity in which we attempt to overcome the limitations of the local assessments we made earlier. We choose particular Goals, or possibly all the Goals, and use change heuristics to assess what the effects of that instability might be when we traverse the trace links between Goals or Requirements.

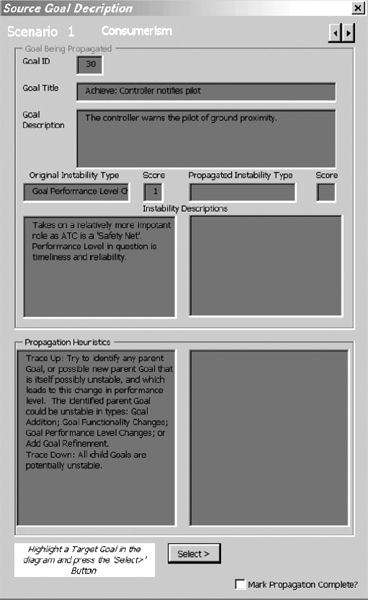

In our example, we might decide that we wish to examine the propagation of a particular goal instability. The screenshot in Figure 6.7 shows the goal we have selected, and the original assessment we made in the ‘Consumerism’ Scenario. Using the Propagation Heuristic shown at the bottom for this instability type, we can identify any parent or child Goals that might be affected. If we find such a Goal we can then edit its original assessment, to provide a propagated instability type and description.

Once we have completed this we have an augmented set of assessments, which we can view in the same way as before—selecting the most critical for overview, and possible resolution action.

FIGURE 6.5 Prioritised and colour-coded overview of goals

FIGURE 6.6 Stability display form – detail of one goal

FIGURE 6.7 Propagation tool

COMPARISONS

Besides the very close comparison we can make between this approach and the classical use of such scenarios in business to assess and select appropriate business decisions, there are a number of close parallels in the systems engineering domain.

Classical requirement stability assessment, for example, is an approach to identifying requirement stability: it measures the amount of change in requirements during their development, or over a number of releases of the system, to obtain a measure of change. This tends to be a historical approach, though, and does not provide much in the way of prediction.

In contrast, Risk Assessment Methods do tend to predict possible problems in the future, but their time horizon is rather different—being focused largely on the project timescale, rather than the system lifetime timescale.

We have already seen the close relationship between the output of this approach and the input to scenario-based assessment techniques such as Obstacle Analysis and Architecture Based Assessment. It could also be used as a creative thinking technique preceding a ‘Misuse Cases’ approach (see Ian Alexander's account in Chapter 7)—helping to elicit knowledge of possible future threats (and therefore likely instabilities when these are designed against).

Most recently, the work of Peri Loucopoulos (see Chapter 21) in using systems dynamic models as ‘design’ tools to explore requirements (no I didn't get my waterfall stages mixed up!) is a most promising-looking partner for this work. The alternative scenarios/dynamic model approach is well established in the business management domain, and may yet prove to be a fruitful technique for system engineers.

KEYWORDS

Scenario

Scenario Planning

Requirements

Assumptions

Goals

Requirement Stability

REFERENCES

Bush, D., Durand, H., Ellison, D., Rhodes-James, C., and Tulloch, A., Alternative Futures for Air Traffic Service Provision in Europe, LBS Project Report, 2001.

Jackson, M., Software Requirements and Specifications, Addison-Wesley, 1995.

van Lamsweerde, A. and Letier, E., Handling obstacles in goal-oriented requirements engineering, IEEE Transactions on Software Engineering, 26(10), 978–1005, 2000.

Kazman, R., Abowd, G., Bass, L., and Clements, P., Scenario-based analysis of software architecture, IEEE Software, 13(6), 47–55, 1996.

Kazman, R., Klein, M., Barbacci, M., Lipson, H., Longstaff, T., and Carrière, S., The architecture tradeoff analysis method, Proceedings of ICECCS, Monterey, CA, August 1998.

Lehman, M.M. and Belady, L.A., Program Evolution—Processes of Software Change, Academic Press, London, 1985.

Potts, C., Using schematic scenarios to understand user needs, Proceedings of DIS '95, ACM Symposium on Designing Interactive Systems: Processes, Practices and Techniques, University of Michigan, 1995.

Sindre, G. and Opdahl, A., Eliciting security requirements by misuse cases, Proceedings of TOOLS Pacific 2000, 20–23 November 2000, pp. 120–131.

RECOMMENDED READING

On ‘Alternative World’ Scenarios

de Geus, A., The Living Company, Nicholas Brealey, 1999 is an excellent and highly readable introduction to the reasons for the use of scenarios in business planning.

Galt, M., Chicoine-Piper, G., Chicoine-Piper, N., and Hodgson, A., Idon Scenario Thinking, IDON Ltd, 1997 provides a straightforward step-by-step guide to creating scenarios, and the mechanism for using these in decision making. It includes a worked example, and is probably the best text I have seen as a simple introduction. Those weaknesses that there are in this book (it is a little too regimented, and is weak on actually writing engaging scenario descriptions), are addressed in

Schwartz, P., The Art of the Long View, Wiley, 1998 which is, in my opinion, unbeatable for the more confident builder of scenarios. It has excellent examples of scenario use, and a strong section (although less of a step-by-step process) on the stages, activities and tools needed for developing scenarios.

On Requirements Engineering

Robertson, S. and J., Mastering the Requirements Process, Addison-Wesley, 1999 is the best ‘take it off the shelf and do it’ guide to requirements that I have found.