
System Center Configuration Manager (ConfigMgr) 2007 represents a significant maturation in Microsoft’s systems management platform. Configuration Manager is an enterprise management tool that provides a total solution for Windows client and server management, including the ability to catalog hardware and software, deliver new software packages and updates, and deploy Windows operating systems with ease. In an increasingly compliance-driven world, Configuration Manager delivers the functionality to detect “shift and drift” in system configuration. ConfigMgr 2007 consolidates information about Windows clients and servers, hardware, and software into a single console for centralized management and control.

Configuration Manager gives you the resources you need to get and stay in control of your Windows environment and helps with managing, configuring, tuning, and securing Windows Server and Windows-based applications. For example, Configuration Manager includes the following features:

• Enterprisewide control and visibility— Whether employing Wake On LAN to power up and apply updates, validating system configuration baselines, or automating client and server operating system deployment, Configuration Manager provides unprecedented control and visibility of your computing resources.

• Automation of deployment and update management tasks— ConfigMgr greatly reduces the administrative effort involved in deployment of client and server operating systems, software applications, and software updates. The scheduling features in software and update deployment ensure minimal interruption to the business. The ConfigMgr summary screens and reporting features provide a convenient view of deployment progress.

• Increased security— Configuration Manager 2007 provides secure management of clients over Internet connections, as well as the capability to validate Virtual Private Network–connected client configurations and remediate deviations from corporate standards. In conjunction with mutual authentication between client and server (available in Configuration Manager native mode only), Configuration Manager 2007 delivers significant advances in security over previous releases.

This chapter serves as an introduction to System Center Configuration Manager 2007. To avoid constantly repeating that very long name, we utilize the Microsoft-approved abbreviation of the product name, Configuration Manager, or simply ConfigMgr. ConfigMgr 2007, the fourth version of Microsoft’s systems management platform, includes numerous additions in functionality as well as security and scalability improvements over its predecessors.

This chapter discusses the Microsoft approach to Information Technology (IT) operations and systems management. This discussion includes an explanation and comparison of the Microsoft Operations Framework (MOF), which incorporates and expands on the concepts contained in the Information Technology Infrastructure Library (ITIL) standard. It also examines Microsoft’s Infrastructure Optimization Model (IO Model), used in the assessment of the maturity of organizations’ IT operations. The IO Model is a component of Microsoft’s Dynamic Systems Initiative (DSI), which aims at increasing the dynamic capabilities of organizations’ IT operations.

These discussions are especially relevant because the objective of all Microsoft System Center products is to optimize and automate IT operations and to increase their process agility and maturity.

Why should you use Configuration Manager 2007 in the first place? How does this make your daily life as a systems administrator easier? Although this book covers the features and benefits of Configuration Manager in detail, it definitely helps to have some quick ideas to illustrate why ConfigMgr is worth a look!

Here’s a list of 10 scenarios that illustrate why you might want to use Configuration Manager:

- The bulk of your department’s budget goes toward paying for teams of contractors to perform OS and software upgrades, rather than paying talented people like you the big bucks to implement the platforms and processes to automate and centralize management of company systems.

- You realize systems management would be much easier if you had visibility and control of all your systems from a single management console.

- The laptops used by the sales team have not been updated in 2 years because they never come to the home office.

- You don’t have enough internal manpower to apply updates to your systems manually every month.

- Within days of updating system configurations to meet corporate security requirements, you find several have already mysteriously “drifted” out of compliance.

- When you try to install Vista for the accounting department, you discover Vista cannot run on half the computers, because they only have 256MB of RAM. (It would have been nice to know that when submitting your budget requests!)

- Demonstrating that your organization is compliant with regulations such as Sarbanes-Oxley (SOX), the Health Insurance Portability and Accountability Act (HIPAA), the Federal Information Security Management Act (FISMA), or <insert your own favorite compliance acronym here> has become your new full-time job.

- You spent your last vacation on a trip from desktop to desktop installing Office 2007.

- Your production environment is so diverse and distributed that you can no longer keep track of which software versions should be installed to which system.

- By the time you update your system standards documentation, everything has changed and you have to start all over again!

Although these scenarios are presented with some humor, they represent very real problems for many systems administrators. If you are one of those people, you owe it to yourself to explore how Configuration Manager can be leveraged to solve these issues. These pain points affect almost all users of Microsoft technologies to some degree, and Configuration Manager offers solutions for each of them.

However, perhaps the most important reason for using Configuration Manager is the peace of mind it brings you as an administrator, knowing that you have complete visibility and control of your IT systems. The stability and productivity this can bring to your organization is a great benefit as well.

The landscape in systems and configuration management has evolved significantly since the first release of Microsoft Systems Management Server, and it continues to advance today. The proliferation of compliance-driven controls and virtualization (server, desktop, and application) has added both significant complexity and exciting new functionality to the management picture.

Configuration Manager 2007 is a software solution that delivers end-to-end management functionality for systems administrators, providing configuration management, patch management, software and operating system distribution, remote control, asset management, hardware and software inventory, and a robust reporting framework to make sense of the various available data for internal systems tracking and regulatory reporting requirements.

These capabilities are significant because today’s IT systems are prone to a number of problems from the perspective of systems management, including the following:

• Configuration “shift and drift”

• Security and control

• Timeliness of asset data

• Automation and enforcement

• Proliferation of virtualization

• Process consistency

This list should not be surprising—these types of problems manifest themselves to varying degrees in IT shops of all sizes. In fact, Forrester Research estimates that 82% of larger IT organizations are pursuing service management, and 67% are planning to increase Windows management. The next sections look at these issues from a systems management perspective.

You may encounter a number of challenges when implementing systems management in a distributed enterprise. These include the following:

• Increasing threats— According to the SANS Institute, the threat landscape is increasingly dynamic, making efficient and proactive update management more important than ever (see http://www.sans.org/top20/).

• Regulatory compliance— Sarbanes-Oxley, HIPAA, and many other regulations have forced organizations to adopt and implement fairly sophisticated controls to demonstrate compliance.

• OS and software provisioning— Rolling out the operating system (OS) and software on new workstations and servers, especially in branch offices, can be both time consuming and a logistical challenge.

• Methodology— With the bar for effective IT operations higher than ever, organizations are forced to adopt a more mature implementation of IT operational processes to deliver the necessary services to the organization’s business units more efficiently.

With increasing operational requirements unaccompanied by linear growth in IT staffing levels, organizations must find ways to streamline administration through tools and automation.

As functionality in client and server systems has increased, so too has complexity. Both desktop and server deployment can be very time consuming when performed manually. With the number and variety of security threats increasing every year, timely application of security updates is of paramount importance. Regulatory compliance issues add a new burden, requiring IT to demonstrate that system configurations meet regulatory requirements.

These problems have a common element: all call for some measure of automation so that IT can meet expectations in these areas with the required accuracy and efficiency. To get a handle on IT operational requirements, organizations need to implement tools and processes that make OS and software deployment, update management, and configuration monitoring more efficient and effective.

Even in those IT organizations with well-defined and documented change management, procedures fall short of perfection. Unplanned and unwanted changes frequently find their way into the environment, sometimes as an unintended side effect of an approved, scheduled change.

You may be familiar with an old philosophical saying: If a tree falls in a forest and no one is around to hear it, does it make a sound?

Here’s the configuration management equivalent: If a change is made on a system and no one knows, does identifying it make a difference?

The answer to this question is absolutely “yes.” Every change to a system has some potential to affect the functionality or security of the system, or that system’s adherence to corporate or regulatory standards.

For example, adding a feature to a web application component may affect the application binaries, potentially overwriting files or settings replaced by a critical security patch. Or, perhaps the engineer implementing the change sees a setting he or she thinks is misconfigured and decides to just “fix” it while working on the system. In an e-commerce scenario with sensitive customer data involved, this could have potentially devastating consequences.

At the end of the day, your selected systems management platform must bring a strong element of baseline configuration monitoring to ensure configuration standards are implemented and maintained with the required consistency.
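Conceptually, baseline configuration monitoring comes down to comparing each system’s observed settings against an approved baseline and flagging every difference. The following Python sketch illustrates only that comparison. It is not how Configuration Manager’s Desired Configuration Management feature is implemented. The `Drift` and `detect_drift` names are hypothetical, as are the setting names and values in the example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Drift:
    """One deviation between the approved baseline and a system's observed state."""
    setting: str
    expected: object
    actual: object  # None means the setting was not found on the system


def detect_drift(baseline: dict, observed: dict) -> list[Drift]:
    """Return every baseline setting whose observed value differs from the baseline.

    Only settings defined in the baseline are evaluated; a setting that is
    missing from the system is reported with actual=None.
    """
    drifts = []
    for setting in sorted(baseline):
        expected = baseline[setting]
        actual = observed.get(setting)
        if actual != expected:
            drifts.append(Drift(setting, expected, actual))
    return drifts


if __name__ == "__main__":
    # Hypothetical baseline and observed values, for illustration only.
    baseline = {"FirewallEnabled": True, "MinPasswordLength": 8, "GuestAccountEnabled": False}
    observed = {"FirewallEnabled": True, "MinPasswordLength": 6}
    for d in detect_drift(baseline, observed):
        print(f"{d.setting}: expected {d.expected!r}, found {d.actual!r}")
```

Run as a script, this reports two deviations: GuestAccountEnabled is missing, and MinPasswordLength is 6 instead of 8. A real systems management platform adds what this sketch leaves out: it schedules the evaluations, collects the results centrally, and reports on compliance over time.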

Managing systems becomes much more challenging when moving outside the realm of the traditional LAN (local area network)-connected desktop or server computer. Traveling users who rarely connect to the trusted network (other than to periodically change their password) can really make this seem an impossible task.

Just keeping these systems up to date on security patches can easily become a full-time job. Maintaining patch levels and system configurations to corporate standards when your roaming users only connect via the Internet can make this activity exceedingly painful. In reality, remote sales and support staff make this an everyday problem. To add to the quandary, these users are frequently among those installing unapproved applications from unknown sources, subsequently putting the organization at greater risk when they finally do connect to the network.

Point-of-sale (POS) devices pose challenges of their own. Their specialized embedded operating systems can be difficult to administer, and many systems management solutions cannot manage them at all. These systems frequently perform critical business functions (such as cash registers and automated teller machines), which makes visibility and control from configuration and security perspectives an absolute necessity.

Mobile devices have moved from the role of a high-dollar phone to that of a mini-computer used for everything: Internet access, Global Positioning System (GPS) navigation, and storage for all manner of potentially sensitive business data. From the Chief Information Officer’s perspective, ensuring that these devices are securely maintained (and appropriately password protected) is somewhat like gravity. It’s more than a good idea. It’s the law!

But seriously, as computing continues to evolve, and more devices release users from the strictures of office life, the problem only gets larger.

Maintaining a current picture of what is deployed and in use in your environment is a constant challenge due to the ever-increasing pace of change. However, failing to maintain an accurate snapshot of current conditions comes at a cost. In many organizations, this is a manual process involving Excel spreadsheets and custom scripting, and asset data is often obsolete by the time a single pass at the infrastructure is complete.

Without this data, organizations can over-purchase (or worse yet, under-purchase) software licensing. Having accurate asset information can help you get a better handle on your licensing costs. Likewise, without current configuration data, areas including Incident and Problem Management may suffer because troubleshooting incidents will be more error prone and time consuming.
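With accurate inventory data, the licensing question becomes simple arithmetic: compare the number of licenses you own with the number of machines that actually have each product installed. The Python sketch below assumes a simple per-machine licensing model. It uses hypothetical inventory records in the form (machine, product), and the `reconcile_licenses` name is likewise hypothetical. Real license agreements are often more complex (per-user or per-processor terms, downgrade rights), so treat the sketch as an illustration rather than a compliance tool.

```python
from __future__ import annotations

from collections import Counter


def reconcile_licenses(installs: list[tuple[str, str]], owned: dict[str, int]) -> dict[str, int]:
    """Return, per product, licenses owned minus machines with the product installed.

    Positive values indicate a surplus (possible over-purchase); negative values
    indicate a shortfall (under-licensed). Assumes one license per machine.
    """
    # set() ensures each (machine, product) pair is counted once, even if
    # inventory reports the same installation more than once.
    deployed = Counter(product for _machine, product in set(installs))
    products = owned.keys() | deployed.keys()
    # Counter returns 0 for products that appear only in the owned licenses.
    return {p: owned.get(p, 0) - deployed[p] for p in sorted(products)}


if __name__ == "__main__":
    # Hypothetical inventory and license counts, for illustration only.
    installs = [("PC01", "Office 2007"), ("PC02", "Office 2007"),
                ("PC02", "Office 2007"), ("PC03", "Visio 2007")]
    owned = {"Office 2007": 5, "Project 2007": 2}
    print(reconcile_licenses(installs, owned))
```

In this example, the positive balances for Office 2007 (3) and Project 2007 (2) point to licenses that could be reallocated. The balance of -1 for Visio 2007 flags an installation with no license behind it.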

With the perpetually increasing and evolving technology needs of the business, the need to automate resource provisioning and to standardize and enforce system configurations becomes increasingly important.

Resource provisioning of new workstations or servers can be a very labor-intensive exercise. Installing a client OS and required applications may take a day or longer if performed manually. Ad-hoc scripting to automate these tasks can be a complex endeavor. Once deployed, ensuring the client and server configuration is consistent can seem an insurmountable task. With customer privacy and regulatory compliance at stake, consequences can be severe if this challenge is not met head on.

There’s an old saying: If you fail to plan, you plan to fail. In no area of IT operations is this truer than when considering virtualization technologies.

When dealing with systems management, you have to consider many different functions, such as software and patch deployment, resource provisioning, and configuration management. Managing server and application configuration in an increasingly “virtual” world, where boundaries between systems and applications are not always clear, will require considering new elements of management not present in a purely physical environment.

Virtualization as a concept is very exciting to IT operations. Whether talking about virtualization of servers or applications, the potential for dramatic increases in process automation and efficiency and reduction in deployment costs is very real. New servers and applications can be provisioned in a matter of minutes. With this newfound agility comes a potential downside, which is the reality that virtualization can increase the velocity of change in your environment. The tools used to manage and track changes to a server often fail to address new dynamics that come when virtualization is introduced into a computing environment.

Many organizations make the mistake of adopting new tools and technologies in an ad hoc fashion, without first reviewing them in the context of the process controls used to manage the introduction of change into the environment. These big gains in efficiency can then lead to a completely new problem: inconsistencies in processes that were not designed to address the new dynamics that come with the virtual territory.

Many IT organizations still “fly by the seat of their pants” when it comes to identifying and resolving problems. Using standard procedures and a methodology can help minimize risk and solve issues faster.

A methodology is a framework of processes and procedures used by those who work in a particular discipline. You can look at a methodology as a structured process defining the who, what, where, when, and why of one’s operations, and the procedures to use when defining problems, solutions, and courses of action.

When employing a standard set of processes, it is important to ensure that the framework you adopt adheres to accepted industry standards or best practices and also takes into account the requirements of the business. This ensures continuity between expectations and the services delivered by the IT organization. Consistently using a repeatable and measurable set of practices allows an organization to quantify its progress more accurately and to adjust processes as necessary to improve future results. The most effective IT organizations build an element of self-examination into their service management strategy to ensure processes can be incrementally improved or modified to meet the changing needs of the business.

With IT’s continually increasing role in running successful business operations, having a structured and standard way to define IT operations aligned to the needs of the business is critical to meeting the expectations of business stakeholders. This alignment results in improved business relationships in which business units engage IT as a partner in developing and delivering innovations to drive business results.

Systems management can be intimidating when you consider the fact that the problems described to this point could happen even in an ostensibly “managed” environment. However, these examples just serve to illustrate that the very processes used to manage change in our environments must themselves be reviewed periodically and updated to accommodate changes in tools and technologies employed from the desktop to the datacenter.

Likewise, meeting the expectations of both the business and compliance regulations can seem an impossible task. As technology evolves, so must IT’s thinking, management tools, and processes. This makes it necessary to continually improve the methodologies used to reduce risk while increasing agility in managing systems, keeping pace with the increasing velocity of change.

Systems management is a journey, not a destination. That is to say, it is not something achieved at a point in time. Systems management encompasses all points in the IT service triangle, as displayed in Figure 1.1, including a set of processes and the tools and people that implement them. Although the role of each varies at different points within the IT service life cycle, the end goals do not change. How effectively these components are utilized determines the ultimate degree of success, which manifests itself in the outputs of productive employees producing and delivering quality products and services.

At a process level, systems management touches nearly every area of your IT operations. It can continually manage a computing resource, such as a client workstation, from the initial provisioning of the OS and hardware to end-of-life, when user settings are migrated to a new machine. The hardware and software inventory data collected by your systems management solution can play a key role in incident and problem management, by providing information that facilitates faster troubleshooting.

As IT operations grow in size, scope, complexity, and business impact, the common denominator at all phases is efficiency and automation, based on repeatable processes that conform to industry best practices. Achieving this requires capturing subject matter expertise and business context in a repeatable, partially or fully automated process. The service life cycle begins with service provisioning, which from a systems management perspective means OS and software deployment. Automation at this phase can save hours or days of manual deployment effort in each iteration.

After resources are in production, the focus expands to include managing and maintaining systems, via ongoing activities IT uses to manage the health and configuration of systems. These activities may touch areas such as configuration management, by monitoring for unwanted changes in standard system and application configuration baselines.

As the service life cycle continues, systems management can affect release management in the form of software upgrades. Activities include software metering, such as reclaiming unused licenses for reuse elsewhere. If you are able to automate these processes to a great degree, you achieve higher reliability and security, greater availability, better asset allocation, and a more predictable IT environment. These translate into business agility and more efficient, less expensive operations, with a greater ability to respond quickly to changing conditions.

Reducing costs and increasing productivity in IT Service Management are important because efficiency in operations frees up money for innovation and product improvements. Information security is also imperative because the price tag of compromised systems and data recovery from security exposures can be large, and those costs continue to rise each year.

Microsoft utilizes a multifaceted approach to IT Service Management. This strategy includes advancements in the following areas:

• Adoption of a model-based management strategy (a component of the Dynamic Systems Initiative, discussed in the next section, “Microsoft’s Dynamic Systems Initiative”) to implement synthetic transaction technology. Configuration Manager 2007 delivers Service Modeling Language–based models in its Desired Configuration Management (DCM) feature, allowing administrators to define intended configurations.

• Using an Infrastructure Optimization (IO) Model as a framework for aligning IT with business needs and as a standard for expressing an organization’s maturity in service management. The “Optimizing Your Infrastructure” section of this chapter discusses the IO Model further. The IO Model describes your IT infrastructure in terms of cost, security risk, and operational agility.

• Supporting a standard Web Services specification for system management. WS-Management is a specification of a SOAP-based protocol, based on Web Services, used to manage servers, devices, and applications (SOAP stands for Simple Object Access Protocol). The intent is to provide a universal language that all types of devices can use to share data about themselves, which in turn makes them more easily managed. Support for WS-Management is included with Windows Vista and Windows Server 2008, and will ultimately be leveraged by multiple System Center components (beginning with Operations Manager 2007).

• Integrating infrastructure and management into OS and server products, by exposing services and interfaces that management applications can utilize.

• Building complete management solutions on this infrastructure, either through making them available in the operating system or by using management products such as Configuration Manager, Operations Manager, and other components of the System Center family.

• Continuing to drive down the complexity of Windows management by providing core management infrastructure and capabilities in the Windows platform itself, thus allowing business and management application developers to improve their infrastructures and capabilities. Microsoft believes that improving the manageability of solutions built on Windows Server System will be a key driver in shaping the future of Windows management.
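The WS-Management bullet above describes a SOAP-based protocol. To make this concrete, the following Python sketch builds, but does not send, a WS-Management Get request envelope using only the standard library. The namespace and action URIs follow the WS-Management 1.0 conventions (a SOAP 1.2 envelope, 2004/08 WS-Addressing, and the DMTF wsman schema). The endpoint, the `build_get_request` helper, and the selector values are hypothetical. Real messages, such as those sent by Windows Remote Management, carry additional headers (mustUnderstand attributes, maximum envelope size, operation timeout) that are omitted here. Consult the DMTF specification for the authoritative schema.

```python
from __future__ import annotations

import uuid
import xml.etree.ElementTree as ET

# Namespaces used by WS-Management 1.0 messages.
NS = {
    "s": "http://www.w3.org/2003/05/soap-envelope",             # SOAP 1.2 envelope
    "a": "http://schemas.xmlsoap.org/ws/2004/08/addressing",     # WS-Addressing
    "w": "http://schemas.dmtf.org/wbem/wsman/1/wsman.xsd",       # DMTF WS-Management
}
GET_ACTION = "http://schemas.xmlsoap.org/ws/2004/09/transfer/Get"
ANONYMOUS = "http://schemas.xmlsoap.org/ws/2004/08/addressing/role/anonymous"


def build_get_request(endpoint: str, resource_uri: str, selectors: dict[str, str]) -> str:
    """Build (but do not send) a simplified WS-Management Get request envelope."""
    for prefix, uri in NS.items():
        ET.register_namespace(prefix, uri)  # keep readable prefixes in the output

    def q(prefix: str, tag: str) -> str:
        return f"{{{NS[prefix]}}}{tag}"  # ElementTree's {namespace}tag notation

    env = ET.Element(q("s", "Envelope"))
    header = ET.SubElement(env, q("s", "Header"))
    ET.SubElement(header, q("a", "To")).text = endpoint
    ET.SubElement(header, q("w", "ResourceURI")).text = resource_uri
    reply_to = ET.SubElement(header, q("a", "ReplyTo"))
    ET.SubElement(reply_to, q("a", "Address")).text = ANONYMOUS
    ET.SubElement(header, q("a", "Action")).text = GET_ACTION
    ET.SubElement(header, q("a", "MessageID")).text = f"uuid:{uuid.uuid4()}"
    # Selectors identify which instance of the resource is requested.
    selector_set = ET.SubElement(header, q("w", "SelectorSet"))
    for name, value in selectors.items():
        ET.SubElement(selector_set, q("w", "Selector"), Name=name).text = value
    ET.SubElement(env, q("s", "Body"))  # a Get request carries an empty body
    return ET.tostring(env, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical endpoint; the resource URI addresses the Win32_Service WMI class.
    print(build_get_request(
        "http://server01.example.com/wsman",
        "http://schemas.microsoft.com/wbem/wsman/1/wmi/root/cimv2/Win32_Service",
        {"Name": "WinRM"},
    ))
```

The point of the exercise is the one the bullet makes: any device that can parse this XML vocabulary can describe itself and be managed, regardless of the platform it runs on.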

A large percentage of IT departments’ budgets and resources typically focuses on mundane maintenance tasks such as applying software patches or monitoring the health of a network, without leaving the staff with the time or energy to focus on more exhilarating (and more productive) strategic initiatives.

The Dynamic Systems Initiative, or DSI, is a Microsoft and industry strategy intended to enhance the Windows platform, delivering a coordinated set of solutions that simplifies and automates how businesses design, deploy, and operate their distributed systems. Using DSI helps IT and developers create operationally aware platforms. By designing systems that are more manageable and automating operations, organizations can reduce costs and proactively address their priorities.

DSI is about building software that enables knowledge of an IT system to be created, modified, transferred, and operated on throughout the life cycle of that system. It is a commitment from Microsoft and its partners to help IT teams capture and use knowledge to design systems that are more manageable and to automate operations, which in turn reduce costs and give organizations additional time to focus proactively on what is most important. By innovating across applications, development tools, the platform, and management solutions, DSI will result in

• Increased productivity and reduced costs across all aspects of IT;

• Increased responsiveness to changing business needs;

• Reduced time and effort required to develop, deploy, and manage applications.

Microsoft is positioning DSI as the connector of the entire system and service life cycles.

DSI focuses on automating datacenter operational jobs and reducing associated labor through self-managing systems. Here are several examples where Microsoft products and tools integrate with DSI:

• Configuration Manager employs model-based configuration baseline templates in its Desired Configuration Management feature to automate identification of undesired shifts in system configurations.

• Visual Studio is a model-based development tool that leverages the Service Modeling Language (SML), enabling operations managers and application architects to collaborate early in the development phase and ensure applications are modeled with operational requirements in mind.

• Windows Server Update Services (WSUS) enables greater and more efficient administrative control through modeling technology that enables downstream systems to construct accurate models representing their current state, available updates, and installed software.

Note

SDM and SML—What’s the Difference?

Microsoft originally used the System Definition Model (SDM) as its standard schema with DSI. SDM was a proprietary specification put forward by Microsoft. The company later decided to implement SML, which is an industrywide published specification used in heterogeneous environments. Using SML helps DSI adoption by incorporating a standard that Microsoft’s partners can understand and apply across mixed platforms. Service Modeling Language is discussed later in the section “The Role of Service Modeling Language in IT Operations.”

DSI focuses on automating datacenter operations and reducing total cost of ownership (TCO) through self-managing systems. Can logic be implemented in management software so that the management software can identify system or application issues in real time and then dynamically take actions to mitigate the problem? Consider the scenario where, without operator intervention, a management system moves a virtual machine running a line-of-business application because the existing host is experiencing an extended spike in resource utilization. This is actually a reality today, delivered in the quick migration feature of Virtual Machine Manager 2008; DSI aims to extend this type of self-healing and self-management to other areas of operations.
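The self-management scenario above hinges on a simple rule: act only on an extended spike, not on a momentary burst. The Python sketch below illustrates one way such a rule could be expressed. It is a toy model under stated assumptions, not how Virtual Machine Manager decides to migrate a virtual machine. The function names, the 90 percent threshold, the five-sample window, and the host names are all hypothetical.

```python
from __future__ import annotations


def sustained_spike(samples: list[float], threshold: float = 90.0, window: int = 5) -> bool:
    """Return True if the most recent `window` utilization samples all exceed `threshold`.

    Requiring several consecutive high readings distinguishes an extended spike
    from a momentary burst, so a VM is not moved back and forth needlessly.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    recent = samples[-window:]
    return len(recent) == window and all(s > threshold for s in recent)


def choose_target_host(host_loads: dict[str, float], current: str) -> str | None:
    """Pick the least-loaded host other than the current one, if any exists."""
    candidates = {h: load for h, load in host_loads.items() if h != current}
    if not candidates:
        return None
    return min(candidates, key=candidates.get)


def plan_migration(vm: str, current_host: str, host_history: list[float],
                   host_loads: dict[str, float]) -> tuple[str, str, str] | None:
    """Return (vm, source, target) if the current host shows a sustained spike, else None."""
    if not sustained_spike(host_history):
        return None
    target = choose_target_host(host_loads, current_host)
    return None if target is None else (vm, current_host, target)


if __name__ == "__main__":
    # Hypothetical host utilization figures (percent), for illustration only.
    loads = {"HostA": 97.0, "HostB": 40.0, "HostC": 20.0}
    print(plan_migration("LOB-App", "HostA", [93, 95, 96, 98, 97], loads))
```

In this example, HostA has stayed above the threshold for all five samples, so the plan moves the line-of-business VM to HostC, the least-loaded alternative. A real system would also weigh memory, affinity rules, and the cost of the migration itself.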

In support of DSI, Microsoft has invested heavily in three major areas:

• Systems designed for systems management— Microsoft is delivering development and authoring tools—such as Visual Studio—that enable businesses to capture the knowledge of everyone from business users and project managers to the architects, developers, testers, and operations staff using models. By capturing and embedding this knowledge into the infrastructure, organizations can reduce support complexity and cost.

• An operationally aware platform— The core Windows operating system and its related technologies are critical when solving everyday operational and service challenges. This requires designing the operating system services for manageability. Additionally, the operating system and server products must provide rich instrumentation and hardware resource virtualization support.

• Virtualized applications and server infrastructure— Virtualization of servers and applications improves the agility of the organization by simplifying the effort involved in modifying, adding, or removing the resources a service utilizes in performing work.

Note

The Microsoft Suite for IT Operations

End-to-end automation could include update management, availability and performance monitoring, change and configuration management, and rich reporting services. Microsoft’s System Center is a family of system management products and solutions that focuses on providing you with the knowledge and tools to manage your IT infrastructure. The objective of the System Center family is to create an integrated suite of systems management tools and technologies, thus helping to ease operations, reduce troubleshooting time, and improve planning capabilities.

There are three architectural elements behind DSI:

• That developers have tools (such as Visual Studio) to design applications in a way that makes them easier for administrators to manage after those applications are in production

• That Microsoft products can be secured and updated in a uniform way

• That Microsoft server applications are optimized for management, to take advantage of Operations Manager 2007

DSI represents a departure from the traditional approach to systems management. DSI focuses on designing for operations from the application development stage, rather than a more customary operations perspective that concentrates on automating task-based processes. This strategy highlights the fact that Microsoft’s Dynamic Systems Initiative is about building software that enables knowledge of an IT system to be created, modified, transferred, and used throughout the life cycle of a system. DSI’s core principles of knowledge, models, and the life cycle are key in addressing the challenges of complexity and manageability faced by IT organizations. By capturing knowledge and incorporating health models, DSI can facilitate easier troubleshooting and maintenance, and thus lower TCO.

A key underlying component of DSI is the XML-based specification called the Service Modeling Language (SML). SML is a standard, developed by several leading information technology companies, that gives infrastructure and application architects a consistent way to define how applications, infrastructure, and services are modeled.

SML facilitates modeling systems from a development, deployment, and support perspective with modular, reusable building blocks that eliminate the need to reinvent the wheel when describing and defining a new service. The end result is systems that are easier to develop, implement, manage, and maintain, resulting in reduced TCO to the organization. SML is a core technology that will continue to play a prominent role in future products developed to support the ongoing objectives of DSI.

Note

SML Resources on the Web

For more information on Service Modeling Language, view the latest draft of the SML standard at http://www.w3.org/TR/sml/. For additional technical information on SML from Microsoft, see http://technet.microsoft.com/en-us/library/bb725986.aspx.

ITIL is widely accepted as an international standard of best practices for operations management, and Microsoft has used ITIL v3 as the basis for Microsoft Operations Framework (MOF) v4, the current version of its own operations framework. Warning: Fasten your seatbelt, because this is where the fun really begins!

As part of Microsoft’s management approach, the company relied on an international standards-setting body as its basis for developing an operational framework. The British Office of Government Commerce (OGC) provides best-practices advice and guidance on using Information Technology in service management and operations. The OGC also publishes the IT Infrastructure Library, known as ITIL.

ITIL provides a cohesive set of best practices for IT Service Management (ITSM). These best practices include a series of books giving direction and guidance on provisioning quality IT services and facilities needed to support Information Technology. The documents are maintained by the OGC and supported by publications, qualifications, and an international users group.

Started in the 1980s, ITIL is under constant development by a consortium of industry IT leaders. ITIL covers a number of areas but is primarily focused on ITSM, and it is considered to be the most consistent and comprehensive documentation of best practices for IT Service Management worldwide.

ITSM is a business-driven, customer-centric approach to managing Information Technology. It specifically addresses the strategic business value generated by IT and the need to deliver high-quality IT services to one’s business organization. ITSM itself has two main components:

• Service support

• Service delivery

Philosophically speaking, ITSM focuses on the customer’s perspective of IT’s contribution to the business, an emphasis it shares with other frameworks that seek to align IT service support and delivery with business goals.

Although ITIL describes the what, when, and why of IT operations, it stops short of describing how a specific activity should be carried out. A driving force behind its development was the recognition that organizations are increasingly dependent on IT for satisfying their corporate objectives relating to both internal and external customers, which increases the requirement for high-quality IT services. Many large IT organizations realize that the road to a customer-centric service organization runs along an ITIL framework.

ITIL also specifies keeping measurements or metrics to assess performance over time. Measurements can include a variety of statistics, such as the number and severity of service outages, along with the amount of time it takes to restore service. These metrics can be used to quantify to management how well IT is performing. This information can be particularly useful for justifying resources during the next budget process!
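To make these metrics concrete, the short Python sketch below computes two of them, the number of outages by severity and the mean time to restore service, from a list of outage records. The record layout (`severity`, `began`, `restored`) and the function name `outage_metrics` are hypothetical illustrations, not something defined by ITIL or by any product.

```python
from collections import Counter
from datetime import datetime
from typing import Dict, List, Tuple


def outage_metrics(outages: List[dict]) -> Tuple[Dict[int, int], float]:
    """Return (outage count per severity, mean minutes to restore service).

    Each record is a hypothetical dict with 'severity', 'began', and 'restored'.
    """
    counts = Counter(o["severity"] for o in outages)
    minutes = [(o["restored"] - o["began"]).total_seconds() / 60 for o in outages]
    mean_minutes = sum(minutes) / len(minutes) if minutes else 0.0
    return dict(counts), mean_minutes


# Hypothetical records for one reporting period.
outages = [
    {"severity": 1, "began": datetime(2009, 1, 5, 9, 0), "restored": datetime(2009, 1, 5, 9, 30)},
    {"severity": 2, "began": datetime(2009, 1, 7, 14, 0), "restored": datetime(2009, 1, 7, 15, 30)},
]
```

Tracked period over period, figures like these are what let you show management whether restore times are trending in the right direction.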

ITIL is generally accepted as the “best practices” for the industry. Being technology-agnostic, it is a foundation that can be adopted and adapted to meet the specific needs of various IT organizations. Although Microsoft chose to adopt ITIL as a standard for its own IT operations for its descriptive guidance, Microsoft designed MOF to provide prescriptive guidance for effective design, implementation, and support of Microsoft technologies.

MOF is a set of publications providing both descriptive (what to do, when and why) and prescriptive (how to do) guidance on IT Service Management. The key focus in developing MOF was providing a framework specifically geared toward managing Microsoft technologies. Microsoft created the first version of the MOF in 1999. The latest iteration of MOF (version 4) is designed to further

• Update MOF to include the full end-to-end IT service life cycle;

• Let IT governance serve as the foundation of the life cycle;

• Provide useful, easily consumable best practice–based guidance;

• Simplify and consolidate service management functions (SMFs), emphasizing workflows, decisions, outcomes, and roles.

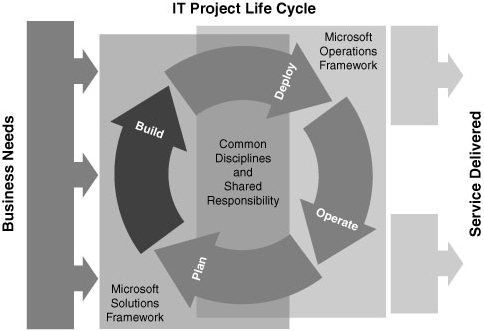

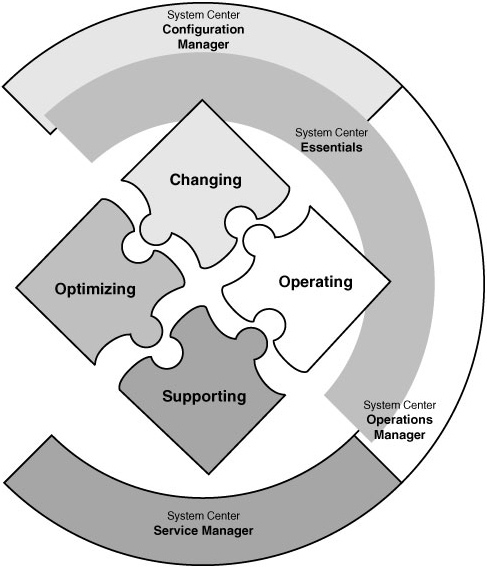

MOF is designed to complement Microsoft’s previously existing Microsoft Solutions Framework (MSF), which provides guidance for application development solutions. Together, the combined frameworks provide guidance throughout the IT life cycle, as shown in Figure 1.2.

Tip

Using MSF for ConfigMgr Deployment

Microsoft uses MOF to describe IT operations and uses Configuration Manager as a tool to put that framework into practice. However, Configuration Manager 2007 is also an application and, as such, is best deployed using a disciplined approach. Although MSF is geared toward application development, it can be adapted to support infrastructure solution design and deployment, as discussed in Chapter 4, “Configuration Manager Solution Design.”

At its core, the MOF is a collection of best practices, principles, and models. It provides direction to achieve reliability, availability, supportability, and manageability of mission-critical production systems, focusing on solutions and services using Microsoft products and technologies. MOF extends ITIL by including guidance and best practices derived from the experience of Microsoft’s internal operations groups, partners, and customers worldwide. MOF aligns with and builds on the IT Service Management practices documented within ITIL, thus enhancing the supportability built on Microsoft’s products and technologies.

MOF uses a process model that describes Microsoft’s approach to IT operations and the service management life cycle. The model organizes the core ITIL processes of service support and service delivery, and it includes additional MOF processes in the four quadrants of the MOF process model, as illustrated in Figure 1.3.

It is important to note that the activities pictured in the quadrants illustrated in Figure 1.3 are not necessarily sequential. These activities can occur simultaneously within an IT organization. Each quadrant has a specific focus and tasks, and within each quadrant are policies, procedures, standards, and best practices that support specific operations management–focused tasks.

Configuration Manager 2007 can be employed to support operations management tasks in different quadrants of the MOF Process Model. Let’s look briefly at each of these quadrants and see how one can use ConfigMgr to support MOF:

• Changing— This quadrant represents instances where new service solutions, technologies, systems, applications, hardware, and processes have been introduced.

The software and OS deployment features of ConfigMgr can be used to automate many activities in the Changing quadrant.

• Operating— This quadrant concentrates on performing day-to-day tasks efficiently and effectively.

ConfigMgr includes many operational tasks that you can initiate from the Configuration Manager console, or that can be automated completely. These are available through various product components, such as update management and software deployment features. The Network Access Protection feature can be utilized to verify clients connecting to the network meet certain corporate criteria, such as antivirus software signatures, before being granted full access to resources.

• Supporting— This quadrant represents the resolution of incidents, problems, and inquiries, preferably in a timely manner.

Using the Desired Configuration Management feature of ConfigMgr in conjunction with software deployment, widespread shifts in system configurations can be identified and reversed with a minimum of effort.

• Optimizing— This quadrant focuses on minimizing costs while optimizing performance, capacity, and availability in the delivery of IT services.

ConfigMgr reporting delivers in a number of functional areas of IT operations. For example, out-of-the-box reports provide instant insight into hardware readiness for operating system deployment to help minimize the hands-on aspects of hardware assessment in upgrade planning. In conjunction with the software metering and asset intelligence features of Configuration Manager, reports can provide insight into unused software licenses that can be reclaimed for use elsewhere.

Service Level Agreements (SLAs) and Operating Level Agreements (OLAs) are tools many organizations use to define acceptable levels of service. Configuration Manager includes the ability to schedule software and update deployment, as well as to define maintenance windows in support of SLAs and OLAs.

Additional information regarding the MOF Process Model is available at http://go.microsoft.com/fwlink/?LinkId=50015.

Microsoft believes that ITIL is the leading body of knowledge of best practices; for that reason, it uses ITIL as the foundation for MOF. Rather than replacing ITIL, MOF complements it and is similar to ITIL in several ways:

• MOF (with MSF) spans the entire IT life cycle.

• Both MOF and ITIL are based on best practices for IT management, drawing on the expertise of practitioners worldwide.

• The MOF body of knowledge is applicable across the business community, from small businesses to large enterprises. MOF also is not limited to those using the Microsoft platform in a homogeneous environment.

• As is the case with ITIL, MOF has expanded to be more than just a documentation set. In fact, MOF is now intertwined with another System Center component, Operations Manager 2007!

Additionally, Microsoft and its partners provide a variety of resources to support MOF principles and guidance, including self-assessments, IT management tools that incorporate MOF terminology and features, training programs and certification, and consulting services.

You can think of ITIL and ITSM as providing a framework for IT to rethink the ways in which it contributes to and aligns with the business. ISO 20000, which is the first international standard for IT Service Management, institutionalizes these processes. ISO 20000 helps companies align IT services with business strategy, create a formal framework for continual service improvement, and benchmark themselves against best practices.

Published in December 2005, ISO 20000 was developed to reflect the best-practice guidance contained within ITIL. The standard also supports other IT Service Management frameworks and approaches, including MOF, Capability Maturity Model Integration (CMMi) and Six Sigma. ISO 20000 consists of two major areas:

• Part 1 promotes adopting an integrated process approach to effectively deliver managed services that meet business and customer requirements.

• Part 2 is a “code of practice” describing the best practices for service management within the scope of ISO 20000-1.

These two areas—what to do and how to do it—have similarities to the approach taken by the other standards, including MOF.

ISO 20000 goes beyond ITIL, MOF, Six Sigma, and other frameworks in providing organizational or corporate certification for organizations that effectively adopt and implement the ISO 20000 code of practice.

Tip

About CMMi and Six Sigma

CMMi is a process-improvement approach that provides organizations with the essential elements of effective processes. It can be used to guide process improvement—across a project, a division, or an entire organization—thus helping to integrate traditionally separate organizational functions, set process improvement goals and priorities, provide guidance for quality processes, and provide a point of reference for appraising current processes.

Six Sigma, a business management strategy originally developed by Motorola, seeks to identify and remove the causes of defects and errors in manufacturing and business processes.

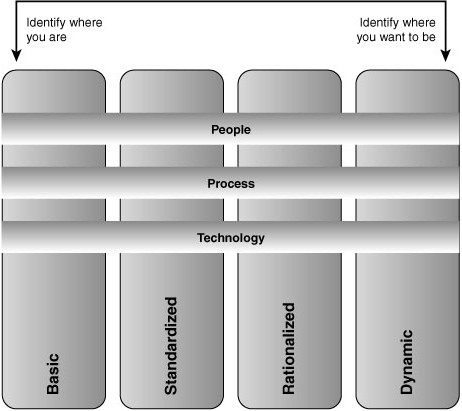

According to Microsoft, analysts estimate that over 70% of the typical IT budget is spent on infrastructure: managing servers, operating systems, storage, and networking. Add to that the challenge of refreshing and managing desktop and mobile devices, and there’s not much left over for anything else. Microsoft describes an Infrastructure Optimization (IO) Model that categorizes the state of one’s IT infrastructure, describing the impacts on cost, security risks, and the ability to respond to changes. Using the model shown in Figure 1.4, you can identify where your organization is, and where you want to be:

• Basic— Reactionary, with much time spent fighting fires

• Standardized— Gaining control

• Rationalized— Enabling the business

• Dynamic— Being a strategic asset

Although most organizations are somewhere between the basic and standardized levels in this model, typically one would prefer to be a strategic asset rather than fighting fires. Once you know where you are in the model, you can use best practices from ITIL and guidance from MOF to develop a plan to progress to a higher level. The IO Model describes the technologies and steps organizations can take to move forward, whereas the MOF explains the people and processes required to improve that infrastructure. Similar to ITSM, the IO Model is a combination of people, processes, and technology.

More information about Infrastructure Optimization is available at http://www.microsoft.com/technet/infrastructure.

At the Basic level, your infrastructure is hard to control and expensive to manage. Processes are manual, IT policies and standards are either nonexistent or not enforced, and you don’t have the tools and resources (or time and energy) to determine the overall health of your applications and IT services. Not only are your desktop and server management costs out of control, but you are in reactive mode when it comes to security threats. In addition, you tend to use manual rather than automated methods for applying software deployments and patches. To try to put a bit of humor into this, you could say that computer management has you all tied up, like the system administrator shown in Figure 1.5.

Does this sound familiar? If you can gain control of your environment, you may be more effective at work! Here are some steps to consider:

• Develop standards, policies, and controls.

• Alleviate security risks by developing a security approach throughout your IT organization.

• Adopt best practices, such as those found in ITIL, and operational guidance found in MOF.

• Build IT to become a strategic asset.

If you can achieve operational nirvana, this will go a long way toward your job satisfaction and IT becoming a constructive part of your business.

A Standardized infrastructure introduces control by using standards and policies to manage desktops and servers. These standards control how you introduce machines into your network. For example, directory services can be used to manage resources, security policies, and access to resources. Shops in a Standardized state realize the value of basic standards and some policies, but still tend to be reactive. Although you now have a managed IT infrastructure and are inventorying your hardware and software assets and starting to manage licenses, your patches, software deployments, and desktop services are not yet automated. Security-wise, the perimeter is now under control, although internal security may still be a bit loose.

To move from a Standardized state to the Rationalized level, you will need to gain more control over your infrastructure and implement proactive policies and procedures. You might also begin to look at implementing service management. At this stage, IT can also move more toward becoming a business asset and ally, rather than a burden.

At the Rationalized level, you have achieved firm control of desktop and service management costs. Processes and policies are in place and beginning to play a large role in supporting and expanding the business. Security is now proactive, and you are responding to threats and challenges in a rapid and controlled manner.

Using technologies such as lite-touch and zero-touch operating system deployment helps you to minimize costs, deployment time, and technical challenges for system rollouts. Because your inventory is now under control, you have minimized the number of images to manage, and desktop management is now largely automated. You also are purchasing only the software licenses and new computers the business requires, giving you a handle on costs. Security is now proactive with policies and control in place for desktops, servers, firewalls, and extranets.

At the Dynamic level, your infrastructure is helping run the business efficiently and stay ahead of competitors. Your costs are now fully controlled. You have also achieved integration between users and data, desktops and servers, and the different departments and functions throughout your organization.

Your Information Technology processes are automated and often incorporated into the technology itself, allowing IT to be aligned and managed according to business needs. New technology investments are able to yield specific, rapid, and measurable business benefits. Measurement is good—it helps you justify the next round of investments!

Using self-provisioning software and quarantine-like systems to ensure patch management and compliance with security policies allows you to automate your processes, which in turn improves reliability, lowers costs, and increases your service levels.

According to IDC research, very few organizations achieve the Dynamic level of the Infrastructure Optimization Model—due to the lack of availability of a single toolset from a single vendor to meet all requirements. Through execution on its vision in DSI, Microsoft aims to change this. To read more on this study, visit http://download.microsoft.com/download/a/4/4/a4474b0c-57d8-41a2-afe6-32037fa93ea6/IDC_windesktop_IO_whitepaper.pdf.

System Center Configuration Manager 2007 is Microsoft’s software platform for addressing systems management issues. It is a key component of Microsoft’s management strategy and of the System Center family, and it can be used to bridge many of the gaps in service support and delivery. Configuration Manager 2007 was designed around four key themes:

• Security— ConfigMgr delivers numerous security enhancements over its predecessor, such as the mutual authentication of native mode and Network Access Protection (NAP), which in conjunction with the NAP feature available with Windows Server protects assets connecting to the network by enforcing compliance with system health requirements such as antivirus version.

• Simplicity— ConfigMgr delivers a simplified user interface with fewer top-level icons, organized in a way that makes resources easier to locate. Investments in simplicity have been made throughout the user interface (UI) in several features, such as the simplified wizard-based UI and common rule templates in DCM 2.0. Such improvements are also evident in the areas of software deployment and metering, as well as OS deployment. Improvements in branch office support also serve to not only simplify management of the branch office, but also reduce ConfigMgr infrastructure costs in these scenarios.

• Manageability— Some of the most important improvements in ConfigMgr come in the form of manageability improvements in common “fringe” scenarios where bandwidth or connectivity is in short supply. Offline OS and driver packages can now be created to support OS deployment in scenarios with no connectivity or only low-bandwidth connectivity. Native Wake On LAN support makes patching workstations after hours a more hands-off scenario. Internet-Based Client Management (IBCM) is now a reality, providing management for remote clients not connected to the corporate network. Finally, with the update management feature of ConfigMgr, clients scan against the WSUS server rather than receiving a local copy of the update catalog.

• Operating system deployment— Systems Management Server (SMS) 2003’s OS deployment feature (OSD) has been integrated into the product, and Microsoft investments in this area have made the feature truly enterprise-ready. For instance, OSD now supports both client and server OS deployment from the same interface, eliminating the need for a separate tool for server deployment.

The driver catalog feature available with OS deployment eliminates the need for a separate OS image for each driver set. Likewise, the task sequencer accommodates configuration of software deployment in conjunction with OS deployment through a wizard more easily than ever before.

Additionally, OEM and offline scenarios are now fully supported through OS deployment using removable media.

While centralized management and visibility are benefits of the platform, ConfigMgr 2007 employs a distributed architecture that delivers an agent-based solution. This brings numerous advantages:

• Once client policy is passed to the ConfigMgr client by the management point, data collection is managed locally on each managed computer, which distributes the load of collecting and handling information. This type of distributed management offers a clear scalability advantage, in that the load on the ConfigMgr server roles is greatly reduced. From the perspective of network load, because all the script execution, Windows Management Instrumentation (WMI) calls, and such are local to the client, network traffic is reduced as well. Data is then passed from the ConfigMgr client back to the management point and is ultimately inserted into the site database, and can then be viewed through the ConfigMgr console.

• A distributed model also enables fault tolerance and flexibility in the event of interruptions in network connectivity. If the network is unavailable, the local client agents still collect information. This model also reduces the impact of data collection on the network by forwarding only information that needs forwarding.

• With a distributed server topology that allows clients to connect to the ConfigMgr server in their local site, clients can access resources no matter where they may roam. This model can reduce response time and improve compliance in a large enterprise, where a traveling client might otherwise attempt to pull software across a slow wide area network (WAN) link, or even require manual intervention to receive needed software applications or updates.
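The collect-locally, forward-later behavior described in these points can be modeled in a few lines of Python. This is a minimal sketch under stated assumptions: `ClientAgent`, its methods, and the dictionary snapshot format are hypothetical and do not reflect ConfigMgr’s actual client implementation or message formats. It illustrates two ideas from the text: data is still gathered on the client while the network is down, and only values that changed since the last report are queued for forwarding.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ClientAgent:
    """Hypothetical model of a client agent that collects locally and forwards later."""
    last_queued: Dict[str, object] = field(default_factory=dict)   # latest value queued per property
    outbox: List[Dict[str, object]] = field(default_factory=list)  # deltas awaiting delivery

    def collect(self, snapshot: Dict[str, object]) -> None:
        # Collection runs on the client; only properties whose value changed are queued.
        delta = {k: v for k, v in snapshot.items() if self.last_queued.get(k) != v}
        if delta:
            self.outbox.append(delta)
            self.last_queued.update(delta)

    def forward(self, network_up: bool, management_point: List[Dict[str, object]]) -> int:
        # If the management point cannot be reached, queued data simply waits locally.
        if not network_up:
            return 0
        sent = len(self.outbox)
        management_point.extend(self.outbox)
        self.outbox.clear()
        return sent
```

For example, two identical inventory snapshots produce a single queued entry, a forward attempt while offline sends nothing, and a later change in memory size is forwarded as a one-property delta once the network returns.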

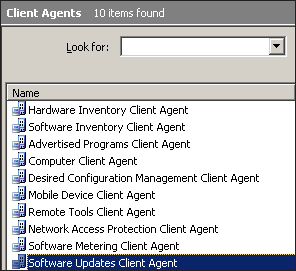

The functionality implemented at the ConfigMgr client is determined by the client agents that are enabled for that client. There are 10 client agents, each of which delivers a subset of ConfigMgr functionality. The client agents, displayed in Figure 1.6, include the following:

• Hardware Inventory

• Software Inventory

• Advertised Programs

• Computer

• Desired Configuration Management

• Mobile Device

• Remote Tools

• Network Access Protection

• Software Metering

• Software Updates

Data is forwarded from the client to the ConfigMgr site server, which inserts data into the ConfigMgr database. From here, data is available for use in a variety of reporting and filtering capacities, allowing granular customization in terms of how data is presented to administrators in the Configuration Manager console.

In an environment with hundreds or even thousands of client and server systems, automating common software provisioning activities becomes a critical component of business agility. Productivity suffers when resources cannot be deployed in a timely manner with a consistent and predictable configuration. Once resources are deployed, ensuring systems are maintained with a consistent and secure configuration can be not only of operational importance, but of legal importance as well. ConfigMgr has several features to address the layers of process automation required to provision and maintain systems in a distributed enterprise. The following sections peel back the layers to explore common issues in each phase and examine how ConfigMgr 2007 addresses them.

One process frequently automated in large IT environments is software deployment. Software deployment can be a time-consuming process, and automating the installation or upgrade of applications such as the Microsoft Office suite can be a huge timesaver. What is perhaps most impressive about the software deployment capabilities of ConfigMgr is the flexibility and control the administrator has in determining what software to deploy, to whom it is deployed, and how it is presented. The software deployment capabilities of ConfigMgr include a range of options, such as the ability to advertise a software package for installation at the user’s option and to assign and deploy by a target deadline. The feature handles software upgrades as easily as new deployments, making that Office 2007 upgrade much less laborious.

Let’s take software deployment a step further. Have you ever asked yourself, “Who is actually using Application X among the users for whom it is installed?” Well, by using the software metering functionality in ConfigMgr, it is possible to report on instances of a particular application that have not been used in a certain period of time. This allows administrators to reclaim unused licenses for reuse elsewhere, saving the organization money on software licensing.
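The metering question above boils down to a simple comparison between what inventory says is installed and when metering last saw each program run. The Python sketch below shows that logic under stated assumptions: the input shapes and the function name `unused_installations` are hypothetical, and in ConfigMgr itself this answer comes from the built-in metering reports rather than from custom code.

```python
from datetime import date, timedelta
from typing import Dict, Iterable, List, Tuple

Install = Tuple[str, str]  # hypothetical (computer, product) pair


def unused_installations(installed: Iterable[Install],
                         last_used: Dict[Install, date],
                         as_of: date,
                         days: int = 90) -> List[Install]:
    """Return installs with no metered use within `days` of `as_of`.

    installed: (computer, product) pairs taken from software inventory.
    last_used: maps (computer, product) to the date it was last seen running.
    """
    cutoff = as_of - timedelta(days=days)
    unused = []
    for key in installed:
        last = last_used.get(key)
        # Never metered, or last run before the cutoff: a candidate license to reclaim.
        if last is None or last < cutoff:
            unused.append(key)
    return sorted(unused)
```

Treating "never seen running" the same as "not run recently" is a deliberate choice here; you might prefer to report those two cases separately before reclaiming any licenses.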

In ConfigMgr 2007 Release 2 (R2), software deployment takes another leap forward by adding support for deployment of virtual applications (using Microsoft Application Virtualization version 4.5) to ConfigMgr clients from the ConfigMgr distribution points. You can read a detailed accounting of software deployment in ConfigMgr in Chapter 14, “Distributing Packages.”

If manually deploying applications is painful from a time perspective, manually deploying operating systems is excruciating. ConfigMgr lets you move a step beyond software deployment to operating system deployment, configuring automated deployment of both client and server operating systems from the same interface in the Configuration Manager console.

One of the most common areas of complexity in OS deployment is device drivers. In the past, drivers have forced administrators to maintain multiple OS images, each image containing the drivers for a particular system manufacturer and model. OS deployment in ConfigMgr 2007 introduces a new feature called driver catalogs. Using driver catalogs lets you maintain a single OS image. Here’s how it works: A scan of driver catalogs is performed at runtime to identify and extract the appropriate drivers for a target system. This allows the teams responsible for desktop and server deployment to maintain a single golden OS image along with multiple driver catalogs for the various hardware manufacturers and systems models. There are some limitations here, which are discussed in Chapter 19, “Operating System Deployment.”
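The runtime matching step can be pictured with a small Python model. It assumes that each driver in the catalog lists the hardware IDs it supports and that the target system reports the device IDs it detected; the `Driver` type, the `select_drivers` function, and the sample IDs are hypothetical and do not describe how ConfigMgr stores or scans its catalog internally.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class Driver:
    name: str
    hardware_ids: frozenset  # device IDs this driver claims to support


def select_drivers(catalog: Sequence[Driver],
                   device_ids: Sequence[str]) -> Tuple[Dict[str, str], List[str]]:
    """Match each detected device ID to the first catalog driver that supports it.

    Returns (device ID -> driver name, device IDs with no matching driver).
    """
    chosen: Dict[str, str] = {}
    missing: List[str] = []
    for dev in device_ids:
        match = next((drv for drv in catalog if dev in drv.hardware_ids), None)
        if match is None:
            missing.append(dev)
        else:
            chosen[dev] = match.name
    return chosen, missing
```

Because driver selection happens at deployment time, the golden image itself never has to change when a new hardware model arrives; only the catalog grows. Reporting unmatched devices separately makes gaps in the catalog visible instead of silently skipping them.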

Task sequences take automation of OS and software deployment yet one step further, allowing administrators, through a relatively simple wizard interface, to define a sequence of actions, incorporating both OS and software deployment activities into an ordered sequence of events. This enables nearly full automation of the resource-provisioning process.

While on the topic, the value of task sequences in advertisements is often overlooked. Task sequences can be deployed as advertisements, allowing administrators to control the order of software distribution and reboot handling, and as diagnostic actions to analyze and respond to those systems with configurations out of compliance with corporate standards.
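The core idea of a task sequence, an ordered list of steps where a failure normally halts the run, is sketched below in Python. This is an illustrative model only: `run_sequence`, the `(name, action, continue_on_error)` tuple layout, and the step names are hypothetical, and real task sequences also handle reboots and state between steps, which this sketch omits.

```python
from typing import Callable, List, Tuple

# Hypothetical step layout: (step name, action returning success, continue on error?)
Step = Tuple[str, Callable[[], bool], bool]


def run_sequence(steps: List[Step]) -> List[str]:
    """Run steps strictly in order; stop at the first failing step
    unless that step is marked to continue on error."""
    log = []
    for name, action, continue_on_error in steps:
        ok = action()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok and not continue_on_error:
            log.append("sequence stopped")
            break
    return log
```

In this model a failed optional step (say, an application install) is logged and skipped, while a failed required step ends the run before later steps such as joining the domain can execute against a half-built system.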

A detailed walkthrough of operating system deployment in ConfigMgr is included in Chapter 19.

Once you automate the provisioning process, what can be done to ensure system configurations remain consistent with corporate standards throughout the environment? With the proliferation of legislated regulatory requirements, ensuring configurations meet a certain standard is critical. The fines levied against an organization for noncompliance and breaching these requirements when sensitive client data is involved can be quite costly. This is an area that cannot be addressed by simple hardware and software inventory, making visibility in this area historically quite challenging. This is where the new Desired Configuration Management feature of ConfigMgr comes into play.

Desired Configuration Management (DCM) allows administrators to define desired settings (called configuration items) and combine them into a group of settings for a particular set of target systems. This group is known as a configuration baseline. To facilitate faster adoption, Microsoft provides predefined configuration baselines (templates, so to speak) called configuration packs, available as free downloads from Microsoft’s website at http://technet.microsoft.com/en-us/configmgr/cc462788.aspx. Microsoft provides configuration packs as a starting point to help organizations evaluate Microsoft server applications against Microsoft best practices or regulatory compliance requirements, such as Sarbanes-Oxley or HIPAA.

With DCM reports (available by default), administrators can identify systems that have “drifted” out of compliance and take corrective action. Although there is no automated enforcement functionality in this version of DCM, noncompliant systems can be dynamically grouped in a collection and then targeted for software deployment, providing some measure of automation in bringing systems back into compliance.
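The relationship between configuration items, a baseline, and the collection of noncompliant systems can be modeled in Python as shown below. This is a conceptual sketch, not the DCM object model: `ConfigurationItem`, `evaluate`, `noncompliant_collection`, and the setting names are hypothetical, and real configuration items can express richer rules than a simple equality check.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ConfigurationItem:
    setting: str      # hypothetical setting name to check
    expected: object  # desired value for that setting


def evaluate(baseline: List[ConfigurationItem], actual: Dict[str, object]) -> List[str]:
    """Return the settings on one system that have drifted from the baseline."""
    return [ci.setting for ci in baseline if actual.get(ci.setting) != ci.expected]


def noncompliant_collection(baseline: List[ConfigurationItem],
                            systems: Dict[str, Dict[str, object]]) -> List[str]:
    """Names of systems with at least one noncompliant item: the group you
    would then target with a remediation software deployment."""
    return sorted(name for name, actual in systems.items() if evaluate(baseline, actual))
```

A setting that a system does not report at all is treated as noncompliant here, which is the conservative choice when the goal is to surface drift.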

You can read more about Desired Configuration Management in ConfigMgr in Chapter 16, “Desired Configuration Management.”

The update management and network access protection features in ConfigMgr provide a platform for securing clients more effectively than ever before. The following sections discuss these capabilities.

Microsoft overhauled the entire patch management process for ConfigMgr 2007, and the product uses WSUS 3.0 as its base technology for patch distribution to clients. However, ConfigMgr extends native WSUS capabilities by grouping clients (in collections) and updates based on user-defined criteria, and by scheduling update packages of desired patches, providing more control than WSUS alone. Using the maintenance window feature of ConfigMgr, you can define a window of time during which a particular group of clients should receive updates, ensuring that the application of updates does not interrupt normal business. Microsoft recommends a four-phase patch management process to ensure your environment is appropriately secured (see Figure 1.7). You can read more about update management in ConfigMgr in Chapter 15, “Patch Management.”
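A maintenance window is essentially a recurring time range that a client checks before installing updates. The Python sketch below models a weekly window, including one that runs past midnight. The function `in_maintenance_window` and its parameters are hypothetical, not the ConfigMgr schedule format, and the sketch ignores time zones and daylight saving changes.

```python
from datetime import datetime, timedelta


def in_maintenance_window(now: datetime, weekday: int, start_hour: int, hours: int) -> bool:
    """True if `now` falls inside a weekly window that opens on `weekday`
    (0 = Monday) at `start_hour` and stays open for `hours` hours.
    The window may extend past midnight into the next day."""
    # Find the most recent opening of the window at or before `now`.
    days_back = (now.weekday() - weekday) % 7
    start = (now - timedelta(days=days_back)).replace(
        hour=start_hour, minute=0, second=0, microsecond=0)
    if start > now:
        start -= timedelta(days=7)
    return start <= now < start + timedelta(hours=hours)
```

For a window that opens Saturday at 22:00 for four hours, 01:30 on the following Sunday is inside the window, while 21:00 on Saturday and 03:00 on Sunday are not, so updates targeted at that collection would wait.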

Many organizations have client machines, such as those belonging to sales staff working remotely, that rarely access the corporate network, which makes timely application of updates to the OS and applications very challenging. Using the Internet-Based Client Management feature in ConfigMgr in conjunction with an Internet-based management point, you can still deliver updates to clients that never attach to the corporate network. This ensures that clients outside the intranet maintain patch levels similar to those of clients on the internal local area network.

However, when Internet-based clients do attach to the trusted network, updates can resume seamlessly on the intranet. This intelligent roaming capability works in both directions, allowing clients to move seamlessly between Internet and intranet connectivity.

You can read more on IBCM in ConfigMgr in Chapter 6, “Architecture Design Planning.”

As the saying goes, “one rotten apple can spoil the barrel.” To that effect, clients connecting to the corporate network with computers that are not appropriately patched or perhaps not running antivirus software are always a concern. When integrated with the Network Access Protection functionality delivered in Windows Server 2008, the NAP feature in ConfigMgr can help IT administrators dynamically control the access of clients that do not meet corporate standards for patch levels, in addition to antivirus and other standard configurations.

NAP allows network administrators to define granular levels of network access based on who a client is, the groups to which the client belongs, and the degree to which that client complies with corporate governance policy. Here’s how it works: NAP provides a policy mechanism that compares client settings to corporate standard settings. If a client is not compliant, NAP automatically restricts it to a quarantine network, where remediation resources can bring the client back into compliance. As the client meets the required configuration criteria, its level of network access increases dynamically.

Chapter 15 provides additional information about Network Access Protection.
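The compare-then-restrict logic NAP applies can be modeled in a few lines. The following Python sketch is a conceptual model only and not the NAP or ConfigMgr API. `ClientState`, `CorporatePolicy`, `Access`, `evaluate`, and the placeholder update IDs `UPDATE-1` and `UPDATE-2` are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Access(Enum):
    FULL = "full network access"
    RESTRICTED = "restricted to remediation network"


@dataclass
class ClientState:
    """Hypothetical health data a client reports when it connects."""

    name: str
    installed_updates: set[str]
    antivirus_running: bool


@dataclass(frozen=True)
class CorporatePolicy:
    """Hypothetical corporate standard that clients are compared against."""

    required_updates: frozenset[str]
    require_antivirus: bool = True


def evaluate(client: ClientState, policy: CorporatePolicy) -> tuple[Access, list[str]]:
    """Compare client settings to policy; return access level and problems found."""
    problems = []
    missing = policy.required_updates - client.installed_updates
    if missing:
        problems.append("missing updates: " + ", ".join(sorted(missing)))
    if policy.require_antivirus and not client.antivirus_running:
        problems.append("antivirus not running")
    # Any deviation confines the client to the remediation network.
    access = Access.RESTRICTED if problems else Access.FULL
    return access, problems


if __name__ == "__main__":
    policy = CorporatePolicy(required_updates=frozenset({"UPDATE-1", "UPDATE-2"}))
    laptop = ClientState("laptop01", installed_updates={"UPDATE-1"}, antivirus_running=False)
    print(laptop.name, evaluate(laptop, policy))
    # Remediation on the restricted network brings the client into compliance.
    laptop.installed_updates.add("UPDATE-2")
    laptop.antivirus_running = True
    print(laptop.name, evaluate(laptop, policy))
```

Returning the list of problems with the access decision mirrors the remediation step. The client learns what it must fix before it is re-evaluated and granted full access.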

You cannot use information you cannot see. The ability to view the state and status of both the resources and the processes in your environment is a critical part of IT operations because it shows where attention is needed. One of the most powerful aspects of the Configuration Manager console (a Microsoft Management Console [MMC] 3.0 application) in ConfigMgr 2007 is the visibility it provides into the status of software, operating system, and update deployments, as well as the inventory and configuration compliance of client agents deployed in the environment.

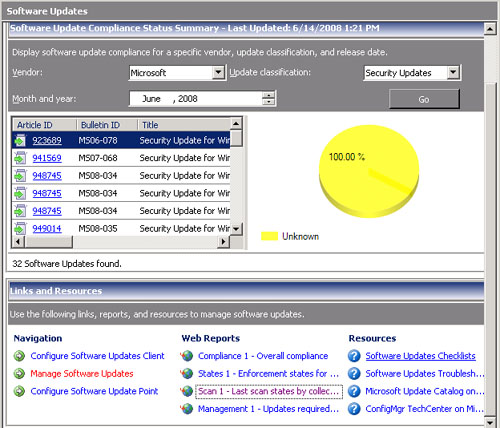

The home pages capability provides at-a-glance status of software deployment progress, application of patches, and so on. Each of the root nodes in the Configuration Manager console provides a home page displaying the status of activity related to that particular feature. For example, the Software Updates home page, shown in Figure 1.8, displays the progress of patch distribution.

If you like having your surroundings organized, you will love search folders. Search folders provide a way to organize groups of similar objects in your ConfigMgr environment, such as packages, advertisements, boot images, OS installation packages, task sequences, driver packages, software metering rules, reports, configuration baselines, and configuration items. You can create custom search folders based on your own criteria, which makes it easy to keep track of the resources deployed in your environment in a way that is meaningful to you.

Queries provide a convenient way to retrieve data stored in the ConfigMgr SQL Server database on an ad hoc basis. You can construct queries with a wizard that lets you select criteria through the UI, which minimizes the need to know the WMI Query Language (WQL) in which these queries are written. However, if you are familiar with WQL or Transact-SQL (T-SQL), you can edit the query statement directly to change its syntax and criteria.

For example, you could create a query that retrieves a list of all computers with hard drives containing less than 2GB of free space. Such a query could help determine client readiness for an upgrade to a new version of Microsoft Office.
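The following Python sketch shows the filtering logic such a query expresses. The WQL string is an assumed shape for the query. It assumes the standard inventory classes `SMS_R_System` and `SMS_G_System_LOGICAL_DISK`, that `FreeSpace` is reported in megabytes, and that `DriveType = 3` denotes a local fixed disk. Verify all three assumptions against your site before use. The `LogicalDisk` rows and `low_disk_computers` function are hypothetical stand-ins that apply the same `where` clause to in-memory data.

```python
from dataclasses import dataclass

# Assumed WQL shape; FreeSpace is assumed to be in MB and DriveType 3 to mean
# a local fixed disk. Check against your site's inventory schema first.
LOW_DISK_WQL = (
    "select SMS_R_System.Name from SMS_R_System "
    "inner join SMS_G_System_LOGICAL_DISK "
    "on SMS_G_System_LOGICAL_DISK.ResourceID = SMS_R_System.ResourceId "
    "where SMS_G_System_LOGICAL_DISK.DriveType = 3 "
    "and SMS_G_System_LOGICAL_DISK.FreeSpace < 2048"
)

FIXED_DISK = 3
TWO_GB_IN_MB = 2 * 1024


@dataclass(frozen=True)
class LogicalDisk:
    """Hypothetical inventory row: one logical disk on one computer."""

    computer: str
    device_id: str
    drive_type: int
    free_space_mb: int


def low_disk_computers(disks, threshold_mb=TWO_GB_IN_MB):
    """Mirror the WQL filter: computers with a fixed disk under the threshold."""
    return sorted(
        {d.computer for d in disks
         if d.drive_type == FIXED_DISK and d.free_space_mb < threshold_mb}
    )


if __name__ == "__main__":
    inventory = [
        LogicalDisk("PC01", "C:", FIXED_DISK, 1500),
        LogicalDisk("PC02", "C:", FIXED_DISK, 40960),
        LogicalDisk("PC02", "E:", 5, 0),  # optical drive, ignored
        LogicalDisk("PC03", "D:", FIXED_DISK, 512),
    ]
    print(low_disk_computers(inventory))  # ['PC01', 'PC03']
```

Restricting the filter to fixed disks keeps optical and removable drives, which often report zero free space, from flagging every computer.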

The default set of reports in ConfigMgr is extensive. The product comes with more than 300 reports in 20 categories out of the box (see Figure 1.9). The Reporting area also provides a filtering feature that displays only the reports matching your criteria, making the reports you care about easier to locate. Reports are categorized by feature, with reporting categories including Asset Management, Desired Configuration Management, Hardware, Network Access Protection, Software Updates, and several others. Each category is further organized into subcategories. For example, the Software Updates category includes approximately 40 reports in six subcategories:

• Compliance

• Deployment Management

• Deployment States

• Scan

• Troubleshooting

• Distribution Status for SMS 2003 Clients

Authoring new reports is quite easy, as is repurposing existing ones. You can clone an existing report and make the changes your particular situation requires without affecting the original. You can even import and export reports between sites, allowing ConfigMgr administrators to share their customizations with administrators at other sites.

You can view reports either through the Configuration Manager console or through the Configuration Manager Report Viewer.

Note

ConfigMgr Reporting and SRS

ConfigMgr reporting is fully integrated into the ConfigMgr console, and incorporates the Report Viewer that was present in SMS 2003. Reports are accessed using the ConfigMgr user interface and rendered in Internet Explorer.

However, in ConfigMgr 2007 R2, administrators have the option of moving from the existing reporting environment to SQL Server Reporting Services (SRS) as the reporting engine. This requires converting existing reports. Once the conversion is complete, the reports function as they did before and can still be administered through the ConfigMgr console. The conversion process is discussed in Chapter 18, “Reporting.”

The Dashboard feature provides additional flexibility in that it allows administrators to group multiple default or custom reports into a single view. This can be used for a number of common scenarios, such as grouping reports that display a certain type of information (for example, hardware and software inventory). This is also very handy for grouping process-related reports, such as the current evaluation and installation state of software and updates. You could further filter your data by site, using a dashboard-per-site strategy to display the status of these processes at individual ConfigMgr sites, each in its own dashboard. All reports are accessible and searchable through the Reports home page, displayed in Figure 1.9.

Chapter 18 discusses the reporting capabilities of Configuration Manager 2007 in detail.

Configuration Manager is also flexible in that it allows incremental deployment. You can begin by managing a specific group of servers or a single department. Once you are comfortable with the management platform and understand its features and how they work, you can deploy it to the rest of your organization.

With ConfigMgr at the core of your systems management toolset, you can take comfort in knowing the tools are available to meet the high expectations of business stakeholders. ConfigMgr plays the role of a trusted partner, helping your IT organization improve service delivery and build a better relationship with the business while working smarter, not harder.

Beginning with SMS 2003, Configuration Manager has been a component of Microsoft’s System Center strategy. System Center is the brand name for Microsoft’s product suite focused on IT service delivery, support, and management. As Microsoft’s management strategy progresses, expect new products and components to be added. System Center is not a single product; the name represents a suite of products designed to address all major aspects of IT service support and delivery.

As part of a multiyear strategy, System Center is being released in “waves.” The first wave included SMS 2003, MOM 2005, and System Center Data Protection Manager 2006. In 2006, additions included System Center Reporting Manager 2006 and System Center Capacity Planner 2006. The second wave includes Operations Manager 2007, Configuration Manager 2007, System Center Essentials 2007, System Center Service Manager, Virtual Machine Manager, and new releases of Data Protection Manager and System Center Capacity Planner. Presentations at popular Microsoft conferences in 2008 and 2009 included discussions of a third wave, expected to begin around 2010-2011.

Microsoft System Center products share the following DSI-based characteristics:

• Ease of use and deployment

• Based on industry and customer knowledge

• Scalability (from the mid-market to the large enterprise)

The data gathered by Configuration Manager 2007 is stored in a self-maintaining SQL Server database. The product includes numerous reports viewable in the Configuration Manager console, with more than 300 delivered out of the box in categories including Asset Intelligence, agent health and status, hardware and software inventory, and several others. Using the native functionality of SQL Server Reporting Services (SRS) in ConfigMgr 2007 R2, you can also export reports to a variety of formats, including a Report Server file share, web archive format, Excel, and PDF. You can configure ConfigMgr to schedule and email reports, enabling users to open them without accessing the Configuration Manager console.

Together with the reporting available in Operations Manager 2007, administrators will find a very complete picture of present system configuration and health, as well as a detailed history of changes in these characteristics over time.

Ultimately, the integrated reporting feature for System Center is moving into the to-be-released System Center Service Manager product, after which it will no longer be a separate product.

Microsoft rearchitected MOM 2005 to create System Center Operations Manager 2007, its operations management solution for service-oriented monitoring. Now in its third release, the product has been completely rewritten. The design pillars of Operations Manager (OpsMgr) include end-to-end service monitoring, best-of-breed management of Windows, reliability and security, and operational efficiency. Features in OpsMgr 2007 include the following:

• Active Directory Integration— Management group information and agent configuration settings can be written to Active Directory, where they can be read by the OpsMgr agent at startup.

• SNMP-enabled device management— OpsMgr can be employed to discover and perform up/down monitoring on any SNMP-enabled server or network device.

• Audit Collection Services (ACS)— ACS provides centralized collection and storage of Windows Security Event Log events for use by auditors in assessment and reporting of an organization’s compliance with internal or external regulatory policies.

• Reporting enhancements— Reporting has been retooled to target common business requirements such as availability reporting. Data is automatically aggregated to facilitate faster reporting and longer data retention.

• Command shell— Based on PowerShell, the OpsMgr Shell provides rich command-line functionality for performing bulk administration and other tasks not available through the Operations console UI.

• Console enhancements— The console interfaces of MOM 2005 have been consolidated into a single Operations console that supports all operational and administrative activities. The new console has an Outlook-like look and feel that minimizes the training users need to navigate the interface. (A separate console is provided for in-depth management pack authoring.)

• Network-Aware Service Management (NASM) and cross-platform monitoring— In Operations Manager 2007 R2, Microsoft delivers network-aware service management using technology acquired from EMC Smarts, along with native cross-platform monitoring for a number of common Linux and Unix platforms.

System Center Essentials 2007 (Essentials for short) is a System Center application targeted to medium-sized businesses. It combines the monitoring features of OpsMgr with the inventory and software distribution functionality of ConfigMgr in a single, easy-to-use interface. Its monitoring function is based on the OpsMgr 2007 engine and uses OpsMgr 2007 management packs, and Essentials adds network device discovery and monitoring out of the box. The platform goes beyond service-oriented monitoring to provide systems management functionality, including software distribution, update management, and hardware and software inventory, all performed using the native Automatic Updates client and WSUS 3.0. Using Essentials, you can centrally manage Windows-based servers and PCs, as well as network devices, by performing the following tasks:

• Discovering and monitoring the health of computers and network devices and viewing summary reports of computer health

• Centrally distributing software updates, tracking installation progress, and troubleshooting problems using the update management feature

• Centrally deploying software, tracking progress, and troubleshooting problems with the software deployment feature

• Collecting and examining computer hardware and software inventory using the inventory feature

Although Essentials 2007 provides many of the same features as OpsMgr (and, to some degree, ConfigMgr), it lacks the granularity of control and extensibility required to support distributed environments, as well as enterprise scalability. The flip side of this reduced functionality is that Essentials greatly simplifies many functions compared to its OpsMgr and ConfigMgr 2007 counterparts. Customization and connectivity options for Essentials are limited, however. An Essentials deployment supports only a single management server, and all managed devices must be in the same Active Directory forest. Reporting functionality is included, but it accommodates only about a 40-day retention period.

Essentials 2007 also limits the number of managed objects per deployment to 30 Windows server-based computers and 500 Windows non-server-based computers. There is no limit to the number of network devices.
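When sizing a deployment, these published limits can be checked mechanically. The following Python sketch encodes the stated per-deployment limits: 30 servers, 500 non-server computers, a single Active Directory forest, and no cap on network devices. `PlannedDeployment` and `essentials_blockers` are hypothetical helpers for planning, not a Microsoft tool.

```python
from dataclasses import dataclass

# Per-deployment limits for Essentials 2007 as stated in the text.
MAX_SERVERS = 30
MAX_CLIENTS = 500
MAX_FORESTS = 1  # all managed devices must be in one Active Directory forest


@dataclass(frozen=True)
class PlannedDeployment:
    """Hypothetical counts for an environment being evaluated."""

    servers: int
    clients: int
    forests: int = 1
    network_devices: int = 0  # unlimited, so never checked


def essentials_blockers(plan: PlannedDeployment) -> list[str]:
    """Return the Essentials 2007 limits this plan exceeds (empty if it fits)."""
    blockers = []
    if plan.servers > MAX_SERVERS:
        blockers.append(f"{plan.servers} servers (limit {MAX_SERVERS})")
    if plan.clients > MAX_CLIENTS:
        blockers.append(f"{plan.clients} client computers (limit {MAX_CLIENTS})")
    if plan.forests > MAX_FORESTS:
        blockers.append(f"{plan.forests} AD forests (limit {MAX_FORESTS})")
    return blockers


if __name__ == "__main__":
    print(essentials_blockers(PlannedDeployment(servers=25, clients=450, network_devices=200)))
    print(essentials_blockers(PlannedDeployment(servers=40, clients=450)))
```

An environment that produces any blockers is a candidate for OpsMgr and ConfigMgr instead.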

System Center Service Manager (not yet released) will implement a single point of contact for all service requests, knowledge, and workflow. Service Manager (previously code-named “Service Desk”) will incorporate processes such as incident, problem, change, and asset management, along with workflow for automating IT processes. From an MOF perspective, Service Manager will be an anchor for the MOF Supporting quadrant. Figure 1.10 illustrates the mapping between the quadrants of the MOF Process Model and System Center components.