B

Hardware Basics

A novice was trying to fix a broken Lisp machine by turning the power off and on. Knight, seeing what the student was doing spoke sternly: “You cannot fix a machine just by power-cycling it with no understanding of what is going wrong.” Knight turned the machine off and on. The machine worked.

(From “AI Koans”, a collection of jokes popular at MIT in the 1980s).

B.1 Introduction (and a Puzzle)

You cannot program a multiprocessor effectively unless you know what a multiprocessor is. You can do a pretty good job of programming a uniprocessor without understanding much about computer architecture, but the same is not true of multiprocessors. We will illustrate this point by a puzzle. We will consider two programs that are logically equivalent, except that one is much less efficient than the other. Ominously, the simpler program is the inefficient one. This discrepancy cannot be explained, nor the danger avoided, without a basic understanding of modern multiprocessor architectures.

Here is the background to the puzzle. Suppose two threads share a resource that can be used by only one thread at a time. To prevent concurrent use, each thread must lock the resource before using it, and unlock it afterward. We will study many ways to implement locks in Chapter 7. For the puzzle, we consider two simple implementations in which the lock is a single Boolean field. If the field is false, the lock is free, and otherwise it is in use. We manipulate the lock with the getAndSet(v) method, which atomically swaps its argument v with the field value. To acquire the lock, a thread calls getAndSet(true). If the call returns false, then the lock was free, and the caller succeeded in locking the object. Otherwise, the object was already locked, and the thread must try again later. A thread releases a lock simply by storing false into the Boolean field.

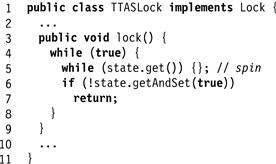

In Fig. B.1, the test-and-set (TASLock) lock repeatedly calls getAndSet(true) (Line 4) until it returns false. By contrast, in Fig. B.2, the test-and-test-and-set lock (TTASLock) repeatedly reads the lock field (by calling state.get() at Line 5) until it returns false, and only then calls getAndSet() (Line 6). It is important to understand that reading the lock value is atomic, and applying getAndSet() to the lock value is atomic, but the combination is not atomic: between the time a thread reads the lock value and the time it calls getAndSet(), the lock value may have changed.

Figure B.1 The TASLock class.

Figure B.2 The TTASLock class.

Before you proceed, you should convince yourself that the TASLock and TTASLock algorithms are logically the same. The reason is simple: in the TTASLock algorithm, reading that the lock is free does not guarantee that the next call to getAndSet() will succeed, because some other thread may have acquired the lock in the interval between reading the lock and trying to acquire it. So why bother reading the lock before trying to acquire it?

Here is the puzzle. While the two lock implementations may be logically equivalent, they perform very differently. In a classic 1989 experiment, Anderson measured the time needed to execute a simple test program on several contemporary multiprocessors. He measured the elapsed time for n threads to execute a short critical section one million times. Fig. B.3 shows how long each lock takes, plotted as a function of the number of threads. In a perfect world, both the TASLock and TTASLock curves would be as flat as the ideal curve on the bottom, since each run does the same number of increments. Instead, we see that both curves slope up, indicating that lock-induced delay increases with the number of threads. Curiously, however, the TASLock is much slower than the TTASLock lock, especially as the number of threads increases. Why?

Figure B.3 Schematic performance of a TASLock, a TTASLock, and an ideal lock.

This chapter covers much of what you need to know about multiprocessor architecture to write efficient concurrent algorithms and data structures. (Along the way, we will explain the divergent curves in Fig. B.3.)

We will be concerned with the following components:

![]() The processors are hardware devices that execute software threads. There are typically more threads than processors, and each processor runs a thread for a while, sets it aside, and turns its attention to another thread.

The processors are hardware devices that execute software threads. There are typically more threads than processors, and each processor runs a thread for a while, sets it aside, and turns its attention to another thread.

![]() The interconnect is a communication medium that links processors to processors and processors to memory.

The interconnect is a communication medium that links processors to processors and processors to memory.

![]() The memory is actually a hierarchy of components that store data, ranging from one or more levels of small, fast caches to a large and relatively slow main memory. Understanding how these levels interact is essential to understanding the actual performance of many concurrent algorithms.

The memory is actually a hierarchy of components that store data, ranging from one or more levels of small, fast caches to a large and relatively slow main memory. Understanding how these levels interact is essential to understanding the actual performance of many concurrent algorithms.

From our point of view, one architectural principle drives everything else: processors and main memory are far apart. It takes a long time for a processor to read a value from memory. It also takes a long time for a processor to write a value to memory, and longer still for the processor to be sure that value has actually been installed in memory. Accessing memory is more like mailing a letter than making a phone call. Almost everything we examine in this chapter is the result of trying to alleviate the long time it takes (“high latency”) to access memory.

Both processor and memory speed change over time, but their relative performance changes slowly. Let us consider the following analogy. We imagine that it is 1980, and you are in charge of a messenger service in mid-town Manhattan. While cars outperform bicycles on the open road, bicycles outperform cars in heavy traffic, so you choose to use bicycles. Even though the technology behind both bicycles and cars has advanced, the architectural comparison remains the same. Then as now, if you are designing an urban messenger service, you should use bicycles, not cars.

B.2 Processors and Threads

A multiprocessor consists of multiple hardware processors, each of which executes a sequential program. When discussing multiprocessor architectures, the basic unit of time is the cycle: the time it takes a processor to fetch and execute a single instruction. In absolute terms, cycle times change as technology advances (from about 10 million cycles per second in 1980 to about 3000 million in 2005), and they vary from one platform to another (processors that control toasters have longer cycles than processors that control web servers). Nevertheless, the relative cost of instructions such as memory access changes slowly when expressed in terms of cycles.

A thread is a sequential program. While a processor is a hardware device, a thread is a software construct. A processor can run a thread for a while and then set it aside and run another thread, an event known as a context switch. A processor may set aside a thread, or deschedule it, for a variety of reasons. Perhaps the thread has issued a memory request that will take some time to satisfy, or perhaps that thread has simply run long enough, and it is time for another thread to make progress. When a thread is descheduled, it may resume execution on another processor.

B.3 Interconnect

The interconnect is the medium by which processors communicate with the memory and with other processors. There are essentially two kinds of interconnect architectures in use: SMP (symmetric multiprocessing) and NUMA (nonuniform memory access).

In an SMP architecture, processors and memory are linked by a bus interconnect, a broadcast medium that acts like a tiny Ethernet. Both processors and the main memory have bus controller units in charge of sending and listening for messages broadcast on the bus. (Listening is sometimes called snooping). Today, SMP architectures are the most common, because they are the easiest to build, but they are not scalable to large numbers of processors because eventually the bus becomes overloaded.

In a NUMA architecture, a collection of nodes are linked by a point-to-point network, like a tiny local area network. Each node contains one or more processors and a local memory. One node’s local memory is accessible to the other nodes, and together, the nodes’ memories form a global memory shared by all processors. The NUMA name reflects the fact that a processor can access memory residing on its own node faster than it can access memory residing on other nodes. Networks are more complex than buses, and require more elaborate protocols, but they scale better than buses to large numbers of processors.

Figure B.4 An SMP architecture with caches on the right and a cacheless NUMA architecture on the left.

The division between SMP and NUMA architectures is a bit of a simplification: one could design hybrid architectures, where processors within a cluster communicate over a bus, but processors in different clusters communicate over a network.

From the programmer’s point of view, it may not seem important whether the underlying platform is based on a bus, a network, or a hybrid interconnect. It is important, however, to realize that the interconnect is a finite resource shared among the processors. If one processor uses too much of the interconnect’s bandwidth, then the others may be delayed.

B.4 Memory

Processors share a main memory, which is a large array of words, indexed by address. Depending on the platform, a word is typically either 32 or 64 bits, and so is an address. Simplifying somewhat, a processor reads a value from memory by sending a message containing the desired address to memory. The response message contains the associated data, that is, the contents of memory at that address. A processor writes a value by sending the address and the new data to memory, and the memory sends back an acknowledgment when the new data has been installed.

B.5 Caches

Unfortunately, on modern architectures a main memory access may take hundreds of cycles, so there is a real danger that a processor may spend much of its time just waiting for the memory to respond to requests. We can alleviate this problem by introducing one or more caches: small memories that are situated closer to the processors and are therefore much faster than main memory. These caches are logically situated “between” the processor and the memory: when a processor attempts to read a value from a given memory address, it first looks to see if the value is already in the cache, and if so, it does not need to perform the slower access to memory. If the desired address’s value was found, we say the processor hits in the cache, and otherwise it misses. In a similar way, if a processor attempts to write an address that is in the cache, it does not need to perform the slower access to memory. The proportion of requests satisfied in the cache is called the cache hit ratio (or hit rate).

Caches are effective because most programs display a high degree of locality: if a processor reads or writes a memory address (also called a memory location), then it is likely to read or write the same location again soon. Moreover, if a processor reads or writes a memory location, then it is also likely to read or write nearby locations soon. To exploit this second observation, caches typically operate at a granularity larger than a single word: a cache holds a group of neighboring words called cache lines (sometimes called cache blocks).

In practice, most processors have two levels of caches, called the L1 and L2 caches. The L1 cache typically resides on the same chip as the processor, and takes one or two cycles to access. The L2 cache may reside either on or off-chip, and may take tens of cycles to access. Both are significantly faster than the hundreds of cycles required to access the memory. Of course, these times vary from platform to platform, and many multiprocessors have even more elaborate cache structures.

The original proposals for NUMA architectures did not include caches because it was felt that local memory was enough. Later, however, commercial NUMA architectures did include caches. Sometimes the term cc-NUMA (for cache-coherent NUMA) is used to mean NUMA architectures with caches. Here, to avoid ambiguity, we use NUMA to include cache-coherence unless we explicitly state otherwise.

Caches are expensive to build and therefore significantly smaller than the memory: only a fraction of the memory locations will fit in a cache at the same time. We would therefore like the cache to maintain values of the most highly used locations. This implies that when a location needs to be cached and the cache is full, it is necessary to evict a line, discarding it if it has not been modified, and writing it back to main memory if it has. A replacement policy determines which cache line to replace to make room for a given new location. If the replacement policy is free to replace any line then we say the cache is fully associative. If, on the other hand, there is only one line that can be replaced then we say the cache is direct mapped. If we split the difference, allowing any line from a set of size k to be replaced to make room for a given line, then we say the cache is k-way set associative.

B.5.1 Coherence

Sharing (or, less politely, memory contention), occurs when one processor reads or writes a memory address that is cached by another. If both processors are reading the data without modifying it, then the data can be cached at both processors. If, however, one processor tries to update the shared cache line, then the other’s copy must be invalidated to ensure that it does not read an out-of-date value. In its most general form, this problem is called cache coherence. The literature contains a variety of very complex and clever cache coherence protocols. Here we review one of the most commonly used, called the MESI protocol (pronounced “messy”) after the names of possible cache line states. This protocol has been used in the Pentium and PowerPC processors. Here are the cache line states.

![]() Modified: the line has been modified in the cache, and it must eventually be written back to main memory. No other processor has this line cached.

Modified: the line has been modified in the cache, and it must eventually be written back to main memory. No other processor has this line cached.

![]() Exclusive: the line has not been modified, and no other processor has this line cached.

Exclusive: the line has not been modified, and no other processor has this line cached.

![]() Shared: the line has not been modified, and other processors may have this line cached.

Shared: the line has not been modified, and other processors may have this line cached.

We illustrate this protocol by a short example depicted in Fig. B.5. For simplicity, we assume processors and memory are linked by a bus.

Figure B.5 Example of the MESI cache coherence protocol’s state transitions. (a) Processor A reads data from address a, and stores the data in its cache in the exclusive state. (b) When processor B attempts to read from the same address, A detects the address conflict, and responds with the associated data. Now a is cached at both A and B in the shared state. (c) If B writes to the shared address a, it changes its state to modified, and broadcasts a message warning A (and any other processor that might have that data cached) to set its cache line state to invalid. (d) If A then reads from a, it broadcasts a request, and B responds by sending the modified data both to A and to the main memory, leaving both copies in the shared state.

Processor A reads data from address a, and stores the data in its cache in the exclusive state. When processor B attempts to read from the same address, A detects the address conflict, and responds with the associated data. Now a is cached at both A and B in the shared state. If B writes to the shared address a, it changes its state to modified, and broadcasts a message warning A (and any other processor that might have that data cached) to set its cache line state to invalid. If A then reads from a, it broadcasts a request, and B responds by sending the modified data both to A and to the main memory, leaving both copies in the shared state.

False sharing occurs when processors that are accessing logically distinct data nevertheless conflict because the locations they are accessing lie on the same cache line. This observation illustrates a difficult tradeoff: large cache lines are good for locality, but they increase the likelihood of false sharing. The likelihood of false sharing can be reduced by ensuring that data objects that might be accessed concurrently by independent threads lie far enough apart in memory. For example, having multiple threads share a byte array invites false sharing, but having them share an array of double-precision integers is less dangerous.

B.5.2 Spinning

A processor is spinning if it is repeatedly testing some word in memory, waiting for another processor to change it. Depending on the architecture, spinning can have a dramatic effect on overall system performance.

On an SMP architecture without caches, spinning is a very bad idea. Each time the processor reads the memory, it consumes bus bandwidth without accomplishing any useful work. Because the bus is a broadcast medium, these requests directed to memory may prevent other processors from making progress.

On a NUMA architecture without caches, spinning may be acceptable if the address in question resides in the processor’s local memory. Even though multiprocessor architectures without caches are rare, we will still ask when we consider a synchronization protocol that involves spinning, whether it permits each processor to spin on its own local memory.

On an SMP or NUMA architecture with caches, spinning consumes significantly fewer resources. The first time the processor reads the address, it takes a cache miss, and loads the contents of that address into a cache line. Thereafter, as long as that data remains unchanged, the processor simply rereads from its own cache, consuming no interconnect bandwidth, a process known as local spinning. When the cache state changes, the processor takes a single cache miss, observes that the data has changed, and stops spinning.

B.6 Cache-Conscious Programming, or the Puzzle Solved

We now know enough to explain why the TTASLock examined in Section B.1 outperforms the TASLock. Each time the TASLock applies getAndSet(true) to the lock, it sends a message on the interconnect causing a substantial amount of traffic. In an SMP architecture, the resulting traffic may be enough to saturate the interconnect, delaying all threads, including a thread trying to release the lock, or even threads not contending for the lock. By contrast, while the lock is busy, the TTASLock spins, reading a locally cached copy of the lock, and producing no interconnect traffic, explaining its improved performance.

The TTASLock is itself however far from ideal. When the lock is released, all its cached copies are invalidated, and all waiting threads call getAndSet(true), resulting in a burst of traffic, smaller than that of the TASLock, but nevertheless significant.

We will further discuss the interactions of caches with locking in Chapter 7. In the meantime, here are some simple ways to structure data to avoid false sharing. Some of these techniques are easier to carry out in languages like C or C++ that provide finer-grained control over memory use than Java.

![]() Objects or fields that are accessed independently should be aligned and padded so that they end up on different cache lines.

Objects or fields that are accessed independently should be aligned and padded so that they end up on different cache lines.

![]() Keep read-only data separate from data that is modified frequently. For example, consider a list whose structure is constant, but whose elements’ value fields change frequently. To ensure that modifications do not slow down list traversals, one could align and pad the value fields so that each one fills up a cache line.

Keep read-only data separate from data that is modified frequently. For example, consider a list whose structure is constant, but whose elements’ value fields change frequently. To ensure that modifications do not slow down list traversals, one could align and pad the value fields so that each one fills up a cache line.

![]() When possible, split an object into thread-local pieces. For example, a counter used for statistics could be split into an array of counters, one per thread, each one residing on a different cache line. While a shared counter would cause invalidation traffic, the split counter allows each thread to update its own replica without causing coherence traffic.

When possible, split an object into thread-local pieces. For example, a counter used for statistics could be split into an array of counters, one per thread, each one residing on a different cache line. While a shared counter would cause invalidation traffic, the split counter allows each thread to update its own replica without causing coherence traffic.

![]() If a lock protects data that is frequently modified, then keep the lock and the data on distinct cache lines, so that threads trying to acquire the lock do not interfere with the lock-holder’s access to the data.

If a lock protects data that is frequently modified, then keep the lock and the data on distinct cache lines, so that threads trying to acquire the lock do not interfere with the lock-holder’s access to the data.

![]() If a lock protects data that is frequently uncontended, then try to keep the lock and the data on the same cache lines, so that acquiring the lock will also load some of the data into the cache.

If a lock protects data that is frequently uncontended, then try to keep the lock and the data on the same cache lines, so that acquiring the lock will also load some of the data into the cache.

B.7 Multi-Core and Multi-Threaded Architectures

In a multi-core architecture, as in Fig. B.6, multiple processors are placed on the same chip. Each processor on that chip typically has its own L1 cache, but they share a common L2 cache. Processors can communicate efficiently through the shared L2 cache, avoiding the need to go through memory, and to invoke the cumbersome cache coherence protocol.

Figure B.6 A multi-core SMP architecture. The L2 cache is on chip and shared by all processors while the memory is off-chip.

In a multi-threaded architecture, a single processor may execute two or more threads at once. Many modern processors have substantial internal parallelism. They can execute instructions out of order, or in parallel (e.g., keeping both fixed and floating-point units busy), or even execute instructions speculatively before branches or data have been computed. To keep hardware units busy, multi-threaded processors can mix instructions from multiple streams.

Modern processor architectures combine multi-core with multi-threading, where multiple individually multi-threaded cores may reside on the same chip. The context switches on some multi-core chips are inexpensive and are performed at a very fine granularity, essentially context switching on every instruction. Thus, multi-threading serves to hide the high latency of accessing memory: whenever a thread accesses memory, the processor allows another thread to execute.

B.7.1 Relaxed Memory Consistency

When a processor writes a value to memory, that value is kept in the cache and marked as dirty, meaning that it must eventually be written back to main memory. On most modern processors, write requests are not applied to memory when they are issued. Rather, they are collected in a hardware queue, called a write buffer (or store buffer), and applied to memory together at a later time. A write buffer provides two benefits. First, it is often more efficient to issue a number of requests all at once, a phenomenon called batching. Second, if a thread writes to an address more than once, the earlier request can be discarded, saving a trip to memory, a phenomenon called write absorption.

The use of write buffers has a very important consequence: the order in which reads–writes are issued to memory is not necessarily the order in which they occur in the program. For example, recall the flag principle of Chapter 1 which was crucial to the correctness of mutual exclusion: if two processors each first write their own flag and then read the other’s flag location, then one of them will see the other’s newly written flag value. Using write buffers this is no longer true, both may write, each in its respective write buffer, but the buffers may both be written only after both processors each read the other’s flag location in memory. Thus, neither reads the other’s flag.

Compilers make matters even worse. They are very good at optimizing performance on single-processor architectures. Often, this optimization requires reordering an individual thread’s reads–writes to memory. Such reordering is invisible for single-threaded programs, but it can have unexpected consequences for multi-threaded programs in which threads may observe the order in which writes occur. For example, if one thread fills a buffer with data and then sets an indicator to mark the buffer as full, then concurrent threads may see the indicator set before they see the new data, causing them to read stale values. The erroneous double-checked locking pattern described in Chapter 3 is an example of a pitfall produced by unintuitive aspects of the Java memory model.

Different architectures provide different guarantees about the extent to which memory reads–writes can be reordered. As a rule, it is better not to rely on such guarantees, and to use more expensive techniques, described in the following paragraph, to prevent such reordering.

All architectures allow you to force your writes to take place in the order they are issued, but at a price. A memory barrier instruction (sometimes called a fence) flushes write buffers, ensuring that all writes issued before the barrier become visible to the processor that issued the barrier. Memory barriers are often inserted transparently by atomic read-modify-write operations such as getAndSet(), or by standard concurrency libraries. Thus, explicit use of memory barriers is needed only when processors perform read–write instructions on shared variables outside of critical sections.

On the one hand, memory barriers are expensive (100s of cycles, maybe more), and should be used only when necessary. On the other, synchronization bugs can be very difficult to track down, so memory barriers should be used liberally, rather than relying on complex platform-specific guarantees about limits to memory instruction reordering.

The Java language itself allows reads–writes to object fields to be reordered if they occur outside synchronized methods or blocks. Java provides a volatile keyword that ensures that reads–writes to a volatile object field that occur outside synchronized blocks or methods are not reordered. Using this keyword can be expensive, so it should be used only when necessary. We notice that in principle, one could use volatile fields to make double-checked locking work correctly, but there would not be much point, since accessing volatile variables requires synchronization anyway.

Here ends our primer on multiprocessor hardware. We will continue to discuss these architectural concepts in the context of specific data structures and algorithms. A pattern will emerge: the performance of multiprocessor programs is highly dependent on synergy with the underlying hardware.

B.8 Hardware Synchronization Instructions

As discussed in Chapter 5, any modern multiprocessor architecture must support powerful synchronization primitives to be universal, that is, provide concurrent computation’s equivalent of a Universal Turing machine. It is therefore not surprising that the implementation of the Java language relies on such specialized hardware instructions (also called hardware primitives) in implementing synchronization, from spin-locks and monitors to the most complex lock-free structures.

Modern architectures typically provide one of two kinds of universal synchronization primitives. The compare-and-swap (CAS) instruction is supported in architectures by AMD, Intel, and Sun. It takes three arguments: an address a in memory, an expected value e, and an update value v. It returns a Boolean. It atomically executes the following steps:

![]() If the memory at address a contains the expected value e,

If the memory at address a contains the expected value e,

On Intel and AMD architectures, CAS is called CMPXCHG, while on SPARC™ it is called CAS.1 Java’s java.util.concurrent.atomic library provides atomic Boolean, integer, and reference classes that implement CAS by a compareAndSet() method. (Because our examples are mostly in Java, we refer to compareAndSet() instead of CAS everywhere else.) C# provides the same functionality with the Interlocked.CompareExchange method.

The CAS instruction has one pitfall. Perhaps the most common use of CAS is the following. An application reads value a from a given memory address, and computes a new value c for that location. It intends to store c, but only if the value a in the address has not changed since it was read. One might think that applying a CAS with expected value a and update value c would accomplish this goal. There is a problem: a thread could have overwritten the value a with another value b, and later written a again to the address. The compare-and-swap will replace a with c, but the application may not have done what it was intended to do (for example, if the address stores a pointer, the new value a may be the address of a recycled object). The CAS call will replace e with v, but the application may not have done what it was intended to do. This problem is known as the ABA problem, discussed in detail in Chapter 10.

Another hardware synchronization primitive is a pair of instructions: load-linked and store-conditional (LL/SC). The LL instruction reads from an address a. A later SC instruction to a attempts to store a new value at that address. The instruction succeeds if the contents of address a are unchanged since that thread issued the earlier LL instruction to a. It fails if the contents of that address has changed in the interval.

The LL and SC instructions are supported by a number of architectures: Alpha AXP (ldl_l/stl_c), IBM PowerPC (lwarx/stwcx) MIPS ll/sc, and ARM (ldrex/strex). LL/SC does not suffer from the ABA problem, but in practice there are often severe restrictions on what a thread can do between a LL and the matching SC. A context switch, another LL, or another load or store instruction may cause the SC to fail.

It is good idea to use atomic fields and their associated methods sparingly because they are often based on CAS or LL/SC. A CAS or LL/SC instruction takes significantly more cycles to complete than a load or store: it includes a memory barrier and prevents out-of-order execution and various compiler optimizations. The precise cost depends on many factors, and varies not only from one architecture to the next, but also from one application of the instruction to the next within the same architecture. It suffices to say that CAS or LL/SC can be an order of magnitude slower than a simple load or store.

B.9 Chapter Notes

John Hennessy and David Patterson [58] give a comprehensive treatment of computer architecture. The MESI protocol is used by Intel’s Pentium processor [75]. The tips on cache-conscious programming are adapted from Benjamin Gamsa, Orran Krieger, Eric Parsons, and Michael Stumm [43]. Sarita Adve and Karosh Gharachorloo [1] give an excellent survey of memory consistency models.

B.10 Exercises

Exercise 219. Thread A must wait for a thread on another processor to change a flag bit in memory. The scheduler can either allow A to spin, repeatedly retesting the flag, or it can deschedule A, allowing some other thread to run. Suppose it takes a total of 10 milliseconds for the operationg system to switch a processor from one thread to another. If the operating system deschedules thread A and immediately reschedules it, then it wastes 20 milliseconds. If, instead, A starts spinning at time t0, and the flag changes at t1, then the operating system will have wasted t1 − t0 time doing unproductive work.

A prescient scheduler is one that can predict the future. If it foresees that the flag will change in less than 20 milliseconds, it makes sense to have A spin, wasting less than 20 milliseconds, because descheduling and rescheduling A wastes 20 milliseconds. If, on the other hand, it takes more than 20 milliseconds for the flag to change, it makes sense to replace A with another thread, wasting no more than 20 milliseconds.

Your assignment is to implement a scheduler that never wastes more than twice the time a prescient scheduler would have wasted under the same circumstances.

Exercise 220. Imagine you are a lawyer, paid to make the best case you can for a particular point of view. How would you argue the following claim: if context switches took negligible time, then processors would not need caches, at least for applications that encompass large numbers of threads.

Extra credit: critique your argument.

Exercise 221. Consider a direct-mapped cache with 16 cache lines, indexed 0 to 15, where each cache line encompasses 32 words.

![]() Explain how to map an address a to a cache line in terms of bit shifting and masking operations. Assume for this question that addresses refer to words, not bytes: address 7 refers to the 7th word in memory.

Explain how to map an address a to a cache line in terms of bit shifting and masking operations. Assume for this question that addresses refer to words, not bytes: address 7 refers to the 7th word in memory.

![]() Compute the best and worst possible hit ratios for a program that loops 4 times through an array of 64 words.

Compute the best and worst possible hit ratios for a program that loops 4 times through an array of 64 words.

![]() Compute the best and worst possible hit ratios for a program that loops 4 times through an array of 512 words.

Compute the best and worst possible hit ratios for a program that loops 4 times through an array of 512 words.

Exercise 222. Consider a direct-mapped cache with 16 cache lines, indexed 0 to 15, where each cache line encompasses 32 words.

Consider a two-dimensional, 32 × 32 array of words a. This array is laid out in memory so that a[0,0] is next to a[0,1], and so on. Assume the cache is initially empty, but that a[0,0] maps to the first word of cache line 0.

Consider the following column-first traversal:

int sum = 0;

for (int i = 0; i < 32; i++) {

for (int j = 0; j < 32; j++) {

sum += a[i,j]; // 2nd dim changes fastest

}

}

and the following row-first traversal:

int sum = 0;

for (int i = 0; i < 32; i++) {

for (int j = 0; j < 32; j++) {

sum += a[j,i]; // 1st dim changes fastest

}

}

Compare the number of cache misses produced by the two traversals, assuming the oldest cache line is evicted first.

Exercise 223. In the MESI cache-coherence protocol, what is the advantage of distinguishing between exclusive and modified modes?

What is the advantage of distinguishing between exclusive and shared modes?

Exercise 224. Implement the test-and-set and test-and-test-and-set locks shown in Figs. B.1 and B.2, test their relative performance on a multiprocessor, and analyze the results.

1 Instead of a Boolean, CAS on Sparc returns the location’s prior value, which can be used to retry an unsuccessful CAS. CMPXCHG on Intel’s Pentium effectively returns both a Boolean and the prior value.