Chapter 13

Network Protocols

Chapter 12 presented the network-communications architecture of 4.4BSD. In this chapter, we examine the network protocols implemented within this framework. The 4.4BSD system supports four major communication domains: DARPA Internet, Xerox Network Systems (NS), ISO/OSI, and local domain (formerly known as the UNIX domain). The local domain does not include network protocols because it operates entirely within a single system. The Internet protocol suite was the first set of protocols implemented within the network architecture of 4.2BSD. Following the release of 4.2BSD, several proprietary protocol families were implemented by vendors within the network architecture. However, it was not until the addition of the Xerox NS protocols in 4.3BSD that the system’s ability to support multiple network-protocol families was visibly demonstrated. Although some parts of the protocol interface were previously unused and thus unimplemented, the changes required to add a second network-protocol family did not substantially modify the network architecture. The implementation of the ISO OSI networking protocols, as well as other changing requirements, led to a further refinement of the network architecture in 4.4BSD.

In this chapter, we shall concentrate on the organization and implementation of the Internet protocols. This protocol implementation is used widely, both in 4BSD systems and in many other systems, because it was publicly available when many vendors were looking for tuned and reliable communication protocols. Developers have implemented other protocols, including Xerox NS and OSI, by following the same general framework set forth by the Internet protocol routines. After describing the overall architecture of the Internet protocols, we shall examine their operation according to the structure defined in Chapter 12. We shall also describe the significant algorithms used by the Internet protocols. We then shall discuss changes that the developers made in the system motivated by aspects of the OSI protocols and their implementation.

13.1 Internet Network Protocols

The Internet network protocols were developed under the sponsorship of DARPA, for use on the ARPANET [McQuillan & Walden, 1977; DARPA, 1983]. They are commonly known as TCP/IP, although TCP and IP are only two of the many protocols in the family. Unlike earlier protocols used within the ARPANET (the ARPANET Host-to-Host Protocol, sometimes called the Network Control Program (NCP)) [Carr et al, 1970], these protocols do not assume a reliable subnetwork that ensures delivery of data. Instead, the Internet protocols were devised for a model in which hosts were connected to networks with varying characteristics, and the networks were interconnected by routers (generally called gateways at the time). Such a model is called a catenet [Cerf, 1978]. The Internet protocols were designed for packet-switching networks ranging from the ARPANET or X.25, which provide reliable message delivery or notification of failure, to pure datagram networks such as Ethernet, which provide no indication of datagram delivery.

This model leads to the use of at least two protocol layers. One layer operates end to end between two hosts involved in a conversation. It is based on a lower-level protocol that operates on a hop-by-hop basis, forwarding each message through intermediate routers to the destination host. In general, there exists at least one protocol layer above the other two: it is the application layer. This three-level layering has been called the ARPANET Reference Model [Padlipsky, 1985]. The three layers correspond roughly to levels 3 (network), 4 (transport), and 7 (application) in the ISO Open Systems Interconnection reference model [ISO, 1984].

The Internet communications protocols that support this model have the layering illustrated in Fig. 13.1. The Internet Protocol (IP) is the lowest-level protocol in the ARPANET Reference Model; this level corresponds to the ISO network layer. IP operates hop by hop as a datagram is sent from the originating host to the destination via any intermediate routers. It provides the network-level services of host addressing, routing, and, if necessary, packet fragmentation and reassembly if intervening networks cannot send an entire packet in one piece. All the other protocols use the services of IP. (The version of IP used in 4.4BSD is version 4. The next generation of IP, version 6, was in development about the time of the release of 4.4BSD.) The Transmission Control Protocol (TCP) and User Datagram Protocol (UDP) are transport-level protocols that provide additional facilities to IP. Each protocol adds a port identifier to IP’s host address so that local and remote sockets can be identified. TCP provides reliable, unduplicated, and flow-controlled transmission of data; it supports the stream socket type in the Internet domain. UDP provides a data checksum for checking integrity in addition to a port identifier, but otherwise adds little to the services provided by IP. UDP is the protocol used by datagram sockets in the Internet domain. The Internet Control Message Protocol (ICMP) is used for error reporting and for other network-management tasks; it is logically a part of IP, but like the transport protocols is layered above IP. It is usually not accessed by users. Raw access to the IP and ICMP protocols is possible through raw sockets; see Section 12.7 for information on this facility.

Figure 13.1 Internet protocol layering. TCP—Transmission Control Protocol; UDP—User Datagram Protocol; IP—Internet Protocol; ICMP—Internet Control Message Protocol.

The Internet protocols were designed to support heterogeneous host systems and architectures. These systems use a wide variety of internal data representations. Even the basic unit of data, the byte, was not the same on all host systems; one common type of host supported variable-sized bytes. The network protocols, however, require a standard representation. This representation is expressed in terms of the octet—an 8-bit byte. We shall use this term as it is used in the protocol specifications to describe network data, although we continue to use the term byte to refer to data or storage within the system. All fields in the Internet protocols that are larger than an octet are expressed in network byte order, with the most significant octet first. The 4.4BSD network implementation uses a set of routines or macros to convert 16-bit and 32-bit integer fields between host and network byte order on hosts (such as the VAX and i386-compatible systems) that have a different native ordering.
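As an illustration of the conversion just described, the following minimal sketch stores a 32-bit value into a packet buffer in network byte order and reads it back. It uses the standard htonl() and ntohl() routines from <arpa/inet.h>; the helper names put_net32() and get_net32() are hypothetical and are not part of 4.4BSD.

```c
#include <arpa/inet.h>   /* htonl(), ntohl(), htons(), ntohs() */
#include <stdint.h>
#include <string.h>

/*
 * Copy a 32-bit host-order value into a buffer in network byte order
 * (most significant octet first).  Hypothetical helper for illustration.
 */
void
put_net32(unsigned char *buf, uint32_t host_value)
{
    uint32_t net_value = htonl(host_value);

    memcpy(buf, &net_value, sizeof(net_value));
}

/* Read a 32-bit network-order field back into host byte order. */
uint32_t
get_net32(const unsigned char *buf)
{
    uint32_t net_value;

    memcpy(&net_value, buf, sizeof(net_value));
    return (ntohl(net_value));
}
```

On a host whose native ordering already matches network byte order, the conversion routines do nothing, so code written this way is portable across both kinds of host.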

Internet Addresses

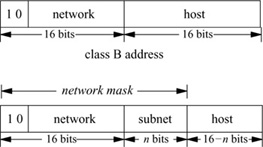

An Internet host address is a 32-bit number that identifies both the network on which a host is located and the host on that network. Network identifiers are assigned by a central agency, whereas host identifiers are assigned by each network’s administrator. It follows that a host with network interfaces attached to multiple networks has multiple addresses. Figure 13.2 shows the original addressing scheme that was tied to the subnetwork addressing used on the ARPANET; each host was known by the number of the ARPANET IMP (Interface Message Processor) to which it was attached and by its host port number on that IMP. The IMP and host numbers each occupied one octet of the address. One remaining octet was used to designate the network and the other was available for uses such as multiplexed host connections (thus the name logical host). This encoding of the address limits the number of networks to 255, a number that quickly proved to be too small. Figure 13.2 also shows how the network portion of the address was encoded such that it could be variable in size. The most significant bits of the network part of the address determine the class of an address. Three classes of network address are defined, A, B, and C, with high-order bits of 0, 10, and 110; they use 8, 16, and 24 bits, respectively, for the network part of the address. Each successive class therefore leaves fewer bits for the host part of each address (24, 16, and 8 bits, respectively), and thus supports fewer hosts per network than does the class before it. This form of frequency encoding supports a larger number of networks of varying size, yet is compatible with the old encoding of ARPANET addresses.

Figure 13.2 Internet addresses. IMP—Interface Message Processor.
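The class encoding can be sketched in a few lines of C. The functions below are a hypothetical illustration, comparable in spirit to (but not copied from) the IN_CLASSA()-style macros in <netinet/in.h>; they take an address in host byte order and test the high-order bits described above.

```c
#include <stdint.h>

/*
 * Return 'A', 'B', or 'C' for a host-order Internet address, or '?'
 * for an address outside those three classes.
 */
char
addr_class(uint32_t addr)
{
    if ((addr & 0x80000000u) == 0)
        return ('A');               /* high-order bit 0 */
    if ((addr & 0xC0000000u) == 0x80000000u)
        return ('B');               /* high-order bits 10 */
    if ((addr & 0xE0000000u) == 0xC0000000u)
        return ('C');               /* high-order bits 110 */
    return ('?');
}

/* Return the network mask implied by the class, or 0 if unknown. */
uint32_t
class_netmask(uint32_t addr)
{
    switch (addr_class(addr)) {
    case 'A':
        return (0xFF000000u);       /* 8-bit network part */
    case 'B':
        return (0xFFFF0000u);       /* 16-bit network part */
    case 'C':
        return (0xFFFFFF00u);       /* 24-bit network part */
    default:
        return (0);
    }
}
```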

Subnets

The basic Internet addressing scheme uses a 32-bit address that contains both a network and a host identifier. All interconnected networks must be known to a central collection of routing agents for full connectivity. This scheme does not handle a large number of interconnected networks well because of the excessive routing information necessary to ensure full connectivity. Furthermore, when networks are installed at a rapid pace, the administrative overhead is significant. However, many networks are installed at organizations such as universities, companies, and research centers that have many interconnected local-area networks with only a few points of attachment to external networks. To handle these problems, the notion of a subnet addressing scheme was added [Mogul & Postel, 1985]; it allows a collection of networks to be known by a single network number.

Subnets allow the addition of another level of hierarchy to the Internet address space. They partition a network assigned to an organization into multiple address spaces (see Fig. 13.3). This partitioning, each part of which is termed a subnet, is visible to only those hosts and routers on the subnetted network. To hosts that are not on the subnetted network, the subnet structure is not visible. Instead, all hosts on subnets of a particular network are perceived externally as being on a single network. The scheme allows Internet routing to be done on a site-by-site basis, as all hosts on a site’s subnets appear to off-site hosts and routers to be on a single Internet network. This partitioning scheme also permits sites to have greater local autonomy over the network topology at their site.

When a subnet addressing scheme is set up at a site, a partitioning of the assigned Internet address space for that site must be chosen. Consider Fig. 13.3: If a site has a class B network address assigned to it, it has 16 bits of the address in which to encode a subnet number and the identifier of a host on that subnet. An arbitrary subdivision of the 16 bits is permitted, but sites must balance the number of subnets they will need against the number of hosts that may be addressed on each subnet. To inform the system of the desired partitioning scheme, the site administrator specifies a network mask for each network interface. This mask shows which bits in the Internet address specify the network part of the local address. The mask includes the normal network portion, as well as the subnet field. This mask also is used when the host part of an address is extracted. When interpreting an address that is not local, the system uses the mask corresponding to the class of the address. The mask does not need to be uniform throughout a subnetted network, although uniformity is common.

The implementation of subnets is isolated, for the most part, to the routines that manipulate Internet addresses. Each Internet address assigned to a network interface is maintained in an in_ifaddr structure that contains an interface address structure and additional information for use in the Internet domain (see Fig. 13.4). When an interface’s network mask is specified, it is recorded in the ia_subnetmask field of the address structure. The network mask, ia_netmask, is calculated based on the type of the network number (class A, B, or C) when the interface’s address is assigned. For nonsubnetted networks, the two masks are identical. The system then interprets local Internet addresses using these values. An address is considered to be local to the subnet if the field under the subnetwork mask matches the subnetwork field of an interface address. The system can also determine whether an address is on the logical network using the network mask and number.

Figure 13.3 Example of subnet address partitioning.

Figure 13.4 Internet interface address structure (in_ifaddr).
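The two locality tests described for the in_ifaddr masks can be sketched as follows. The structure if_addr_info is a hypothetical, much-simplified stand-in for the fields of in_ifaddr that matter here; the field and function names are assumptions made for illustration, and all addresses are in host byte order.

```c
#include <stdint.h>

/* Hypothetical, simplified stand-in for the relevant in_ifaddr fields. */
struct if_addr_info {
    uint32_t net;           /* network number: address & netmask */
    uint32_t netmask;       /* mask for the class of the network */
    uint32_t subnet;        /* subnet number: address & subnetmask */
    uint32_t subnetmask;    /* mask covering network and subnet fields */
};

/* Record the masks and precompute the network and subnet numbers. */
void
if_addr_init(struct if_addr_info *ia, uint32_t addr, uint32_t netmask,
    uint32_t subnetmask)
{
    ia->netmask = netmask;
    ia->subnetmask = subnetmask;
    ia->net = addr & netmask;
    ia->subnet = addr & subnetmask;
}

/* True if addr lies on the same subnet as the interface address. */
int
on_local_subnet(const struct if_addr_info *ia, uint32_t addr)
{
    return ((addr & ia->subnetmask) == ia->subnet);
}

/* True if addr lies on the same logical (classful) network. */
int
on_logical_network(const struct if_addr_info *ia, uint32_t addr)
{
    return ((addr & ia->netmask) == ia->net);
}
```

For a class B address with an 8-bit subnet field, the subnet mask would be 0xFFFFFF00 and the network mask 0xFFFF0000; a host on a different subnet of the same site then passes the second test but not the first.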

As the number of Internet networks has grown, it has become necessary to generalize the handling of Internet addresses to avoid exhausting the set of available network numbers. The new scheme is based on Classless Inter-Domain Routing (CIDR) [Fuller et al, 1993]. The allocation of network addresses does not necessarily follow the boundaries according to class (A, B or C). Instead, an organization may be assigned a contiguous group of addresses described by a single value and mask, such as a group of 16 class C networks (using a 20-bit mask), or one-half of a class C network (using a 25-bit mask). This group of addresses may in turn be subnetted within the organization. In addition, these blocks of addresses are often assigned from a larger block by an Internet service provider, allowing aggregation of routes to clients of the provider. In general, 4.4BSD handles classless addressing in the same fashion as subnets, setting the local network mask along with each address. The local network mask can be set to a value either longer or shorter than that of the mask associated with the network class (A, B, or C). When such a network is subnetted, it would sometimes be desirable to set both the network and subnet masks, although the network mask has little remaining significance. As network routes now include explicit masks (see Section 12.5), the system can route to subnets, traditional network classes, and clusters of networks using the same mechanism.
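The value-and-mask description of a classless block can be made concrete with a short sketch. The function names below are hypothetical; a prefix length is assumed to lie between 0 and 32, and addresses are in host byte order.

```c
#include <stdint.h>

/* Build a mask with the given number of leading 1 bits (0 to 32). */
uint32_t
prefix_mask(int len)
{
    if (len <= 0)
        return (0);
    return ((uint32_t)(0xFFFFFFFFu << (32 - len)));
}

/* True if addr falls within the block described by value and mask length. */
int
in_block(uint32_t addr, uint32_t value, int len)
{
    uint32_t mask = prefix_mask(len);

    return ((addr & mask) == (value & mask));
}

/* Number of addresses covered by a block with the given mask length. */
uint64_t
block_size(int len)
{
    return ((uint64_t)1 << (32 - len));
}
```

With a 20-bit mask, block_size() yields 4096 addresses, which is the 16 class C networks of 256 addresses each mentioned above; with a 25-bit mask it yields 128, or one-half of a class C network.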

Broadcast Addresses

On networks capable of supporting broadcast datagrams, 4.2BSD used the address with a host part of zero for broadcasts. After 4.2BSD was released, the Internet broadcast address was defined as the address with a host part of all 1s [Mogul, 1984]. This change and the introduction of subnets both complicated the recognition of broadcast addresses. Hosts may use a host part of 0 or 1s to signify broadcast, and some may understand the presence of subnets, whereas others may not. For these reasons, 4.3BSD and later systems set the broadcast address for each interface to be the host value of all 1s, but allow the alternate address to be set for backward compatibility. If the network is subnetted, the subnet field of the broadcast address contains the normal subnet number. The logical broadcast address for the network also is calculated when the address is set; this address would be the standard broadcast address if subnets were not in use. This address is needed by the IP input routine to filter input packets. On input, 4.4BSD recognizes and accepts subnet and network broadcast addresses with host parts of 0s or 1s, as well as the address with 32 bits of 1 (“broadcast on this physical network”).
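The set of addresses accepted as broadcasts on input can be sketched as below. This is an illustrative reconstruction from the description above, not the 4.4BSD code; the function names are hypothetical, and the interface address and both masks are passed in host byte order.

```c
#include <stdint.h>

/* Broadcast address with a host part of all 1s under the given mask. */
uint32_t
bcast_ones(uint32_t addr, uint32_t mask)
{
    return (addr | (uint32_t)~mask);
}

/* Older-style broadcast address with a host part of all 0s. */
uint32_t
bcast_zeros(uint32_t addr, uint32_t mask)
{
    return (addr & mask);
}

/*
 * True if dst should be treated as a broadcast for an interface with
 * the given address, network mask, and subnet mask.
 */
int
is_broadcast(uint32_t dst, uint32_t addr, uint32_t netmask,
    uint32_t subnetmask)
{
    if (dst == 0xFFFFFFFFu)         /* broadcast on this physical network */
        return (1);
    if (dst == bcast_ones(addr, subnetmask) ||
        dst == bcast_zeros(addr, subnetmask))
        return (1);                 /* subnet broadcast, either form */
    if (dst == bcast_ones(addr, netmask) ||
        dst == bcast_zeros(addr, netmask))
        return (1);                 /* logical-network broadcast */
    return (0);
}
```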

Internet Multicast

Many link-layer networks, such as the Ethernet, provide a multicast capability that can address groups of hosts, but is more selective than broadcast because it provides a number of different multicast group addresses. IP provides a similar facility at the network-protocol level, using link-layer multicast where available [Deering, 1989]. IP multicasts are sent using class D destination addresses with high-order bits 1110. Unlike host addresses in classes A, B, and C, class D addresses do not contain network and host portions; instead, the entire address names a group, such as a group of hosts using a particular service. These groups can be created dynamically, and the members of the group can change over time. IP multicast addresses map directly to physical multicast addresses on networks such as the Ethernet, using the low-order 23 bits of the IP address along with a constant 25-bit prefix to form a 48-bit link-layer address.
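The mapping from a class D address to an Ethernet multicast address can be sketched as follows. The sketch follows the mapping specified in RFC 1112, in which the low-order 23 bits of the group address are placed under the fixed prefix 01:00:5e (followed by a 0 bit); the function names are hypothetical, and the group address is in host byte order.

```c
#include <stdint.h>

/* True if addr is a class D (multicast) address: high-order bits 1110. */
int
is_class_d(uint32_t addr)
{
    return ((addr & 0xF0000000u) == 0xE0000000u);
}

/*
 * Form the 48-bit Ethernet multicast address for an IP multicast group.
 * Because only 23 bits of the group are used, several groups can map
 * to the same link-layer address.
 */
void
mcast_to_ether(uint32_t group, unsigned char mac[6])
{
    mac[0] = 0x01;                          /* fixed prefix 01:00:5e */
    mac[1] = 0x00;
    mac[2] = 0x5e;
    mac[3] = (unsigned char)((group >> 16) & 0x7f); /* top bit is 0 */
    mac[4] = (unsigned char)((group >> 8) & 0xff);
    mac[5] = (unsigned char)(group & 0xff);
}
```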

For a socket to use multicast, it must join a multicast group using the setsockopt system call. This call informs the link layer that it should receive multicasts for the corresponding link-layer address, and also sends a multicast membership report using the Internet Group Management Protocol (IGMP). Multicast agents on the network can thus keep track of the members of each group. Multicast agents receive all multicast packets from directly attached networks and forward multicast datagrams as needed to group members on other networks. This function is similar to the role of routers that forward normal (unicast) packets, but the criteria for packet forwarding are different, and a packet can be forwarded to multiple neighboring networks.

Internet Ports and Associations

At the IP level, packets are addressed to a host, rather than to a process or communications port. However, each packet contains an 8-bit protocol number that identifies the next protocol that should receive the packet. Internet transport protocols use an additional identifier to designate the connection or communications port on the host. Most protocols (including TCP and UDP) use a 16-bit port number for this purpose. Each protocol maintains its own mapping of port numbers to processes or descriptors. Thus, an association, such as a connection, is fully specified by the tuple <source address, destination address, protocol number, source port, destination port>. Connection-oriented protocols, such as TCP, must enforce the uniqueness of associations; other protocols generally do so as well. When the local part of the address is set before the remote part, it is necessary to choose a unique port number to prevent collisions when the remote part is specified.

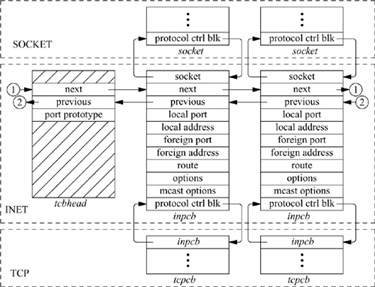

Protocol Control Blocks

For each TCP- or UDP-based socket, an Internet protocol control block (an inpcb structure) is created to hold Internet network addresses, port numbers, routing information, and pointers to any auxiliary data structures. TCP, in addition, creates a TCP control block (a tcpcb structure) to hold the wealth of protocol state information necessary for its implementation. Internet control blocks for use with TCP are maintained on a doubly linked list private to the TCP protocol module. Internet control blocks for use with UDP are kept on a similar list private to the UDP protocol module. Two separate lists are needed because each protocol in the Internet domain has a distinct space of port identifiers. Common routines are used by the individual protocols to add new control blocks to a list, to fix the local and remote parts of an association, to locate a control block by association, and to delete control blocks. IP demultiplexes message traffic based on the protocol identifier specified in its protocol header, and each higher-level protocol is then responsible for checking its list of Internet control blocks to direct a message to the appropriate socket. Figure 13.5 shows the linkage between the socket data structure and these protocol-specific data structures.

Figure 13.5 Internet Protocol data structures.

The implementation of the Internet protocols is rather tightly coupled, as befits the strong intertwining of the protocols. For example, the transport protocols send and receive packets including not only their own header, but also an IP pseudoheader containing the source and destination address, the protocol identifier, and a packet length. This pseudoheader is included in the transport-level packet checksum.

We are now ready to examine the operation of the Internet protocols. We begin with UDP, as it is far simpler than TCP.

13.2 User Datagram Protocol (UDP)

The User Datagram Protocol (UDP) [Postel, 1980] is a simple unreliable datagram protocol that provides only peer-to-peer addressing and optional data checksums.† Its protocol headers are extremely simple, containing only the source and destination port numbers, the datagram length, and the data checksum. The host addresses for a datagram are provided by the IP pseudoheader.

†In 4.4BSD, checksums are enabled or disabled on a system-wide basis and cannot be enabled or disabled on individual sockets.
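To show how the pseudoheader enters the UDP checksum, the following sketch computes the standard Internet checksum (the 16-bit one's complement of the one's-complement sum) over a pseudoheader followed by the UDP header and data. It is an illustration written for this discussion, not the 4.4BSD in_cksum() routine; the function names are hypothetical, addresses are in host byte order, and the caller is assumed to have set the checksum field of the datagram to zero before computing it.

```c
#include <stddef.h>
#include <stdint.h>

/* Add a run of octets to a running sum as big-endian 16-bit words. */
static uint32_t
sum_octets(uint32_t sum, const unsigned char *p, size_t len)
{
    while (len > 1) {
        sum += ((uint32_t)p[0] << 8) | p[1];
        p += 2;
        len -= 2;
    }
    if (len == 1)
        sum += (uint32_t)p[0] << 8;     /* pad an odd octet with zero */
    return (sum);
}

/*
 * Checksum a UDP datagram (header plus data, udp_len octets) together
 * with the pseudoheader: source, destination, zero, protocol, length.
 */
uint16_t
udp_checksum(uint32_t src, uint32_t dst, const unsigned char *udp,
    size_t udp_len)
{
    unsigned char pseudo[12];
    uint32_t sum;
    int i;

    for (i = 0; i < 4; i++) {
        pseudo[i] = (unsigned char)(src >> (24 - 8 * i));
        pseudo[4 + i] = (unsigned char)(dst >> (24 - 8 * i));
    }
    pseudo[8] = 0;
    pseudo[9] = 17;                     /* IP protocol number of UDP */
    pseudo[10] = (unsigned char)(udp_len >> 8);
    pseudo[11] = (unsigned char)(udp_len & 0xff);

    sum = sum_octets(0, pseudo, sizeof(pseudo));
    sum = sum_octets(sum, udp, udp_len);
    while (sum >> 16)                   /* fold carries back in */
        sum = (sum & 0xffff) + (sum >> 16);
    return ((uint16_t)~sum);
}
```

A receiver can run the same computation over a datagram whose checksum field is filled in; a result of zero indicates that the datagram and the addresses in the pseudoheader are consistent.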

Initialization

When a new datagram socket is created in the Internet domain, the socket layer locates the protocol-switch entry for UDP and calls the udp_usrreq() routine with the PRU_ATTACH request and with the socket as a parameter. UDP uses in_pcballoc() to create a new protocol control block on its list of current sockets. It also sets the default limits for the socket send and receive buffers. Although datagrams are never placed in the send buffer, the limit is set as an upper limit on datagram size; the UDP protocol-switch entry contains the flag PR_ATOMIC, requiring that all data in a send operation be presented to the protocol at one time.

If the application program wishes to bind a port number—for example, the well-known port for some datagram service—it calls the bind system call. This request reaches UDP as the PRU_BIND request to udp_usrreq(). The binding may also specify a specific host address, which must be an address of an interface on this host. Otherwise, the address will be left unspecified, matching any local address on input, and with an address chosen as appropriate on each output operation. The binding is done by in_pcbbind(), which verifies that the chosen port number (or address and port) is not in use, then records the local part of the association.

To send datagrams, the system must know the remote part of an association. A program can specify this address and port with each send operation using sendto or sendmsg, or can do the specification ahead of time with the connect system call. In either case, UDP uses the in_pcbconnect() function to record the destination address and port. If the local address was not bound, and if a route for the destination is found, the address of the outgoing interface is used as the local address. If no local port number was bound, one is chosen at this time.

Output

A system call that sends data reaches UDP as a call to udp_usrreq() with the PRU_SEND request and a chain of mbufs containing the data for the datagram. If the call provided a destination address, the address is passed as well; otherwise, the address from a prior connect call is used. The actual output operation is done by udp_output(),

    error = udp_output(inp, m, addr, control);
    struct inpcb *inp;
    struct mbuf *m;
    struct mbuf *addr;
    struct mbuf *control;

where inp is an Internet protocol control block, m is an mbuf chain that contains the data to be sent, and addr is an optional mbuf containing the destination address. Any ancillary data in control are discarded. The destination address could have been prespecified with a connect call; otherwise, it must be provided in the send call. UDP simply prepends its own header, fills in the UDP header fields and those of a prototype IP header, and calculates a checksum before passing the packet on to the IP module for output:

    error = ip_output(m, opt, ro, flags, imo);
    struct mbuf *m, *opt;
    struct route *ro;
    int flags;
    struct ip_moptions *imo;

The call to IP’s output routine is more complicated than is that to UDP’s because the IP routine cannot depend on having a protocol control block that contains information about the current sender and destination. The m parameter indicates the data to be sent, and the opt parameter may specify a list of IP options that should be placed in the IP packet header. For multicast destinations, the imo parameter may reference multicast options, such as the choice of interface and hop count for multicast packets. IP options may be set for a socket with the setsockopt system call specifying the IP protocol level and option IP_OPTIONS. These options are stored in a separate mbuf, and a pointer to this mbuf is stored in the protocol control block for a socket; the pointer is passed to ip_output() with each packet sent. The ro parameter is optional; UDP passes a pointer to the route structure in the protocol control block for the socket. IP will determine a route and leave it in the control block, so that it can be reused on later calls. The flags parameter indicates whether the user is allowed to transmit a broadcast message, and whether routing is to be bypassed for the message being sent (see Section 13.3). The broadcast flag may be inconsequential if the underlying hardware does not support broadcast transmissions. The flags also indicate whether the packet includes an IP pseudoheader or a completely initialized IP header, as when IP forwards packets.

Input

All Internet transport protocols that are layered directly on top of IP use the following calling convention when receiving input packets from IP:

    (void) (*pr_input)(m, hlen);
    struct mbuf *m;
    int hlen;

Each mbuf chain passed is a single packet to be processed by the protocol module. The packet includes the IP header in lieu of a pseudoheader, and the IP header length is passed as the second parameter. The UDP input routine udp_input() is typical of protocol input routines. It first verifies that the length of the packet is at least as long as the IP plus UDP headers, and it uses m_pullup() to make the header contiguous. It then checks that the packet is the correct length and checksums the data if a checksum is present. If any of these tests fail, the packet is simply discarded. Finally, the protocol control block for the socket that is to receive the data is located by in_pcblookup() from the addresses and port numbers in the packet. There might be multiple control blocks with the same local port number, but different local or remote addresses; if so, the control block with the best match is selected. An exact association matches best; but if none exists, a socket with the correct local port number but unspecified local address, remote port number, or remote address will match. A control block with unspecified local or remote addresses thus acts as a wildcard that receives packets for its port if no exact match is found. If a control block is located, the data and the address from which the packet was received are placed in the receive buffer of the indicated socket with sbappendaddr(). If the destination address is a multicast address, copies of the packet are delivered to each socket with matching addresses. Otherwise, if no receiver is found and if the packet was not addressed to a broadcast or multicast address, an ICMP port unreachable error message is sent to the originator of the datagram.†

†This error message normally has no effect, as the sender typically connects to this destination only temporarily, and destroys the association before new input is processed. However, if the sender still has a fully specified association, it may receive notification of the error. The host-name lookup routine in 4.4BSD uses this mechanism to detect the absence of a nameserver at boot time, allowing the lookup routine to fall back to the local host file.
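The best-match selection among control blocks can be sketched as follows. This is a simplified illustration in the spirit of in_pcblookup(), not the 4.4BSD code: the pcb structure is a hypothetical reduction of inpcb, the blocks are held in an array rather than on a linked list, and an address of zero stands for "unspecified."

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, reduced control block; an address of 0 is unspecified. */
struct pcb {
    uint32_t laddr;     /* local address */
    uint32_t faddr;     /* remote (foreign) address */
    uint16_t lport;     /* local port */
    uint16_t fport;     /* remote (foreign) port */
};

/*
 * Return the control block that best matches an incoming packet:
 * the one with the correct local port and the fewest wildcards.
 */
struct pcb *
pcb_lookup(struct pcb *list, size_t n, uint32_t faddr, uint16_t fport,
    uint32_t laddr, uint16_t lport)
{
    struct pcb *best = NULL;
    int best_wild = 3;              /* worse than any real match */
    size_t i;

    for (i = 0; i < n; i++) {
        struct pcb *p = &list[i];
        int wild = 0;

        if (p->lport != lport)
            continue;
        if (p->laddr == 0)
            wild++;                 /* unspecified local address */
        else if (p->laddr != laddr)
            continue;
        if (p->faddr == 0)
            wild++;                 /* unspecified remote address */
        else if (p->faddr != faddr || p->fport != fport)
            continue;
        if (wild < best_wild) {
            best = p;
            best_wild = wild;
            if (wild == 0)
                break;              /* exact association */
        }
    }
    return (best);
}
```

With a wildcard socket, a socket bound to one local address, and a connected socket all on the same port, a packet from the connected peer reaches the connected socket, and packets from other senders fall through to the less specific sockets.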

Control Operations

UDP supports few control operations. It supports no options in 4.4BSD, and passes calls to its pr_ctloutput() entry directly to IP. It has a simple pr_ctlinput() routine that receives notification of any asynchronous errors. Some errors simply cause cached routes to be flushed. Other errors are passed to any datagram socket with the indicated destination; only sockets with a destination fixed by a connect call may be notified of errors asynchronously. Such errors are simply noted in the appropriate socket, and socket wakeups are issued in case the process is selecting or sleeping while waiting for input.

When a UDP datagram socket is closed, udp_usrreq() is called with the PRU_DETACH request. The protocol control block and its contents are simply deleted with in_pcbdetach(); no other processing is required.

13.3 Internet Protocol (IP)

Having examined the operation of a simple transport protocol, we continue with a discussion of the network-layer protocol [Postel, 1981a; Postel et al, 1981]. The Internet Protocol (IP) is the level responsible for host-to-host addressing and routing, packet forwarding, and packet fragmentation and reassembly. Unlike the transport protocols, it does not always operate on behalf of a socket on the local host; it may forward packets, receive packets for which there is no local socket, or generate error packets in response to these situations.

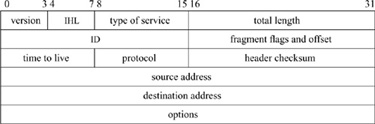

The functions done by IP are illustrated by the contents of its packet header, shown in Fig. 13.6. The header identifies source and destination hosts and the destination protocol, and contains header and packet lengths. The identification and fragment fields are used when a packet or fragment must be broken into smaller sections for transmission on its next hop, and to reassemble the fragments when they arrive at the destination. The fragmentation flags are Don’t Fragment and More Fragments; the latter flag plus the offset are sufficient to assemble the fragments of the original packet at the destination.

Figure 13.6 Internet Protocol header. IHL is the Internet header length specified in units of four octets. Options are delimited by IHL.
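The header-length and fragmentation fields can be decoded as in the following sketch. The bit positions are those of the IP version 4 header (the fragment offset is carried in units of eight octets); the constant, structure, and function names are hypothetical, and the 16-bit flags-and-offset field is assumed to have been converted to host byte order already.

```c
#include <stdint.h>

#define FRAG_DF      0x4000u    /* Don't Fragment flag */
#define FRAG_MF      0x2000u    /* More Fragments flag */
#define FRAG_OFFMASK 0x1fffu    /* fragment offset, in 8-octet units */

struct frag_info {
    int dont_fragment;          /* nonzero if DF is set */
    int more_fragments;         /* nonzero if MF is set */
    unsigned offset_octets;     /* offset of this fragment's data */
};

/* Decode the 16-bit flags-and-offset field of an IP header. */
void
decode_frag(uint16_t field, struct frag_info *fi)
{
    fi->dont_fragment = (field & FRAG_DF) != 0;
    fi->more_fragments = (field & FRAG_MF) != 0;
    fi->offset_octets = (unsigned)(field & FRAG_OFFMASK) * 8;
}

/* A packet is a fragment if MF is set or its offset is nonzero. */
int
is_fragment(uint16_t field)
{
    return ((field & (FRAG_MF | FRAG_OFFMASK)) != 0);
}

/* Header length in octets from the first octet (version and IHL). */
unsigned
header_octets(unsigned char version_ihl)
{
    return ((unsigned)(version_ihl & 0x0f) * 4);
}
```

The final fragment of a packet has More Fragments clear but a nonzero offset, which is why both are examined by is_fragment().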

IP options are present in an IP packet if the header length field has a value larger than the minimum. The no-operation option and the end-of-option-list option are each one octet in length. All other options are self-encoding, with a type and length preceding any additional data. Hosts and routers are thus able to skip over options that they do not implement. Examples of existing options are the timestamp and record-route options, which are updated by each router that forwards a packet, and the source-route options, which supply a complete or partial route to the destination.
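Because every option other than the two single-octet ones carries its own length, a host can walk the option area without understanding each option. The sketch below illustrates that walk; it is not the 4.4BSD option-processing code, and the function name and return conventions are assumptions of the example.

```c
#include <stddef.h>

#define OPT_EOL 0   /* end of option list: one octet */
#define OPT_NOP 1   /* no operation: one octet */

/*
 * Search the option area of an IP header for an option of type `want'.
 * Returns its offset within the area, -1 if it is not present, or -2
 * if the option area is malformed.
 */
long
find_option(const unsigned char *opts, size_t len, unsigned char want)
{
    size_t i = 0;

    while (i < len) {
        unsigned char type = opts[i];
        size_t optlen;

        if (type == OPT_EOL)
            break;                  /* nothing follows */
        if (type == OPT_NOP) {
            i++;                    /* single octet, no length field */
            continue;
        }
        if (len - i < 2)
            return (-2);            /* no room for a length octet */
        optlen = opts[i + 1];       /* length covers type and length */
        if (optlen < 2 || optlen > len - i)
            return (-2);
        if (type == want)
            return ((long)i);
        i += optlen;                /* skip an option we do not want */
    }
    return (-1);
}
```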

Output

We have already seen the calling convention for the IP output routine, which is

    error = ip_output(m, opt, ro, flags, imo);
    struct mbuf *m, *opt;
    struct route *ro;
    int flags;
    struct ip_moptions *imo;

As described in the subsection on output in the previous section, the parameter m is an mbuf chain containing the packet to be sent, including a skeletal IP header; opt is an optional mbuf containing IP options to be inserted after the header. If the route ro is given, it may contain a reference to a routing entry (rtentry structure), which specifies a route to the destination from a previous call, and in which any new route will be left for future use. The flags may allow the use of broadcast or may indicate that the routing tables should be bypassed. If present, imo includes options for multicast transmissions.

The outline of the work done by ip_output() is as follows:

• Insert any IP options.

• Fill in the remaining header fields (IP version, zero offset, header length, and a new packet identification) if the packet contains an IP pseudoheader.

• Determine the route (i.e., outgoing interface and next-hop destination).

• Check whether the destination is a multicast address. If it is, determine the outgoing interface and hop count.

• Check whether the destination is a broadcast address; if it is, check whether broadcast is permitted.

• If the packet size is no larger than the maximum packet size for the outgoing interface, compute the checksum and call the interface output routine.

• If the packet size is larger than the maximum packet size for the outgoing interface, break the packet into fragments and send each in turn.
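The last two steps of the outline can be illustrated by a sketch that plans how a packet would be divided. Each fragment except the last must carry a multiple of eight octets of data, because the fragment offset is expressed in eight-octet units. The structure and function names are hypothetical; the sketch assumes every fragment carries a header of the same length as the original and ignores the Don't Fragment flag.

```c
#include <stddef.h>

struct frag_plan {
    unsigned offset;    /* offset of this fragment's data, in octets */
    unsigned len;       /* octets of data carried by this fragment */
    int more;           /* nonzero if More Fragments would be set */
};

/*
 * Plan the fragments for a packet of total_len octets (including an
 * hlen-octet header) sent on an interface with the given maximum
 * packet size.  Returns the number of fragments, or -1 on error.
 */
int
plan_fragments(unsigned hlen, unsigned total_len, unsigned mtu,
    struct frag_plan *out, int max)
{
    unsigned data_len, per, off;
    int n = 0;

    if (total_len < hlen || mtu <= hlen || max < 1)
        return (-1);
    data_len = total_len - hlen;
    if (total_len <= mtu) {         /* fits: send in one piece */
        out[0].offset = 0;
        out[0].len = data_len;
        out[0].more = 0;
        return (1);
    }
    per = (mtu - hlen) & ~7u;       /* data per fragment, multiple of 8 */
    if (per < 8)
        return (-1);                /* interface too small to fragment */
    for (off = 0; off < data_len; off += per) {
        if (n == max)
            return (-1);
        out[n].offset = off;
        out[n].len = (data_len - off < per) ? data_len - off : per;
        out[n].more = (off + out[n].len < data_len);
        n++;
    }
    return (n);
}
```

For example, 4000 octets of data behind a 20-octet header, sent over an interface with a maximum packet size of 1500 octets, would be planned as fragments carrying 1480, 1480, and 1040 octets.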

We shall examine the routing step in more detail. First, if no route reference is passed as a parameter, an internal routing reference structure is used temporarily. A route structure that is passed from the caller is checked to see that it is a route to the same destination, and that it is still valid. If either test fails, the old route is freed. After these checks, if there is no route, rtalloc() is called to allocate a route. The route returned includes a pointer to the outgoing interface information. This information includes the maximum packet size, flags including broadcast and multicast capability, and the output routine. If the route is marked with the RTF_GATEWAY flag, the address of the next-hop gateway (router) is given by the route; otherwise, the packet’s destination is the next-hop destination. If routing is to be bypassed because of a MSG_DONTROUTE option (see Section 11.1) or a SO_DONTROUTE option, a directly attached network shared with the destination is found; if there is no directly attached network, an error is returned. Once the outgoing interface and next-hop destination are found, enough information is available to send the packet.

As described in Chapter 12, the interface output routine normally validates the destination address and places the packet on its output queue, returning errors only if the interface is down, the output queue is full, or the destination address is not understood.

Input

In Chapter 12, we described the reception of a packet by a network interface, and the packet’s placement on the input queue for the appropriate protocol. The network-interface handler then schedules the protocol to run by setting a corresponding bit in the network status word and scheduling a software interrupt. The IP input routine is invoked via this software interrupt when network interfaces receive messages for an Internet protocol; consequently, it is called without any parameters. The input routine, ipintr(), removes packets from its input queue one at a time and processes them to completion. A packet’s processing is completed in one of four ways: it is passed as input to a higher-level protocol, it encounters an error that is reported back to the source, it is dropped because of an error, or it is forwarded along the path to its destination. In outline form, the steps in the processing of an IP packet on input are as follows:

1. Verify that the packet is at least as long as an IP header, and ensure that the header is contiguous.

2. Checksum the header of the packet, and discard the packet if there is an error.

3. Verify that the packet is at least as long as the header indicates, and drop the packet if it is not. Trim any padding from the end of the packet.

4. Process any IP options in the header.

5. Check whether the packet is for this host. If it is, continue processing the packet. If it is not, and if doing IP packet forwarding, try to forward the packet. Otherwise, drop the packet.

6. If the packet has been fragmented, keep it until all its fragments are received and reassembled, or until it is too old to keep.

7. Pass the packet to the input routine of the next-higher-level protocol.
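Steps 1 through 3 can be sketched as a validation routine over a contiguous buffer. This is a simplified illustration rather than the ipintr() code: the function names are hypothetical, the packet is assumed to be already contiguous in memory (the kernel must arrange this within its mbuf chain), and trimming of padding is left to the caller. The checksum is the standard Internet one's-complement sum, which yields zero when computed over a header whose checksum field is correct.

```c
#include <stddef.h>
#include <stdint.h>

/* One's-complement checksum over len octets, taken as big-endian words. */
uint16_t in_cksum_sketch(const uint8_t *p, size_t len)
{
    uint32_t sum = 0;
    size_t i;

    for (i = 0; i + 1 < len; i += 2)
        sum += ((uint32_t)p[i] << 8) | p[i + 1];
    if (i < len)                         /* odd trailing octet */
        sum += (uint32_t)p[i] << 8;
    while (sum >> 16)                    /* fold carries back in */
        sum = (sum & 0xffff) + (sum >> 16);
    return (uint16_t)~sum;
}

/* Return 1 if the packet passes steps 1-3, or 0 if it should be dropped. */
int ip_header_ok(const uint8_t *pkt, size_t pktlen)
{
    size_t hlen, totlen;

    if (pktlen < 20)                     /* shorter than a minimal header */
        return 0;
    hlen = (size_t)(pkt[0] & 0x0f) * 4;  /* header length is in 32-bit words */
    if (hlen < 20 || hlen > pktlen)
        return 0;
    if (in_cksum_sketch(pkt, hlen) != 0) /* header checksum failed */
        return 0;
    totlen = ((size_t)pkt[2] << 8) | pkt[3];
    if (totlen < hlen || totlen > pktlen) /* shorter than header claims */
        return 0;
    return 1;
}
```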

When the incoming packet is removed from the input queue, it is accompanied by an indication of the interface on which the packet was received. This information is passed to the next protocol, to the forwarding function, or to the error-reporting function. If any error is detected and is reported to the packet’s originator, the source address of the error message will be set according to the packet’s destination and the incoming interface.

The decision whether to accept a received packet for local processing by a higher-level protocol is not as simple as we might think. If a host has multiple addresses, the packet is accepted if its destination matches one of those addresses. If any of the attached networks support broadcast and the destination is a broadcast address, the packet is also accepted. (For reasons that are given in Section 13.1, there may be as many as five possible broadcast addresses for a given network.)

The IP input routine uses a simple and efficient scheme for locating the input routine for the receiving protocol of an incoming packet. The protocol field in the IP packet is 8 bits long; thus, there are 256 possible protocols. Fewer than 256 protocols are defined or implemented, and the Internet protocol switch has far fewer than 256 entries. Therefore, IP input uses a 256-element mapping array to map from the protocol number to the protocol-switch entry of the receiving protocol. Each entry in the array is initially set to the index of a raw IP entry in the protocol switch. Then, for each protocol with a separate implementation in the system, the corresponding map entry is set to the index of the protocol in the IP protocol switch. When a packet is received, IP simply uses the protocol field to index into the mapping array, and uses the value at that location as the index into the protocol-switch table for the receiving protocol.
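The mapping scheme can be sketched as follows. The four-entry switch, the enum names, and the functions proto_map_init and proto_lookup are hypothetical; only the technique (a 256-element array preset to the raw IP entry, then overridden for each implemented protocol) is taken from the description above. The protocol numbers 1, 6, and 17 are the assigned values for ICMP, TCP, and UDP.

```c
#include <stdint.h>

/* Hypothetical, much-reduced protocol switch; entry 0 is raw IP. */
enum { SW_RAW = 0, SW_ICMP, SW_TCP, SW_UDP, SW_NENTRIES };

/* IP protocol number handled by each switch entry (unused for raw IP). */
static const uint8_t sw_protocol[SW_NENTRIES] = { 0, 1, 6, 17 };

/* Map from the 8-bit protocol field to a protocol-switch index. */
static uint8_t proto_map[256];

void proto_map_init(void)
{
    int i;

    for (i = 0; i < 256; i++)            /* default: raw IP entry */
        proto_map[i] = SW_RAW;
    for (i = 0; i < SW_NENTRIES; i++)    /* override implemented protocols */
        if (i != SW_RAW)
            proto_map[sw_protocol[i]] = (uint8_t)i;
}

/* One array index replaces a search of the protocol switch. */
int proto_lookup(uint8_t ip_p)
{
    return proto_map[ip_p];
}
```

A packet carrying a protocol number with no separate implementation thus falls through to the raw IP entry without any special-case test.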

Forwarding

Implementations of IP traditionally have been designed for use by either hosts or routers, rather than by both. That is, a system was either an endpoint for IP packets (as source or destination) or a router (which forwards packets between hosts on different networks, but only uses upper-level protocols for maintenance functions). Traditional host systems do not incorporate packet-forwarding functions; instead, if they receive packets not addressed to them, they simply drop the packets. 4.2BSD was the first common IP implementation that attempted to provide both host and router services in normal operation. This approach had advantages and disadvantages. It meant that 4.2BSD hosts connected to multiple networks could serve as routers as well as hosts, reducing the requirement for dedicated router machines. Early routers were neither inexpensive nor especially powerful. On the other hand, the existence of router-function support in ordinary hosts made it more likely for misconfiguration errors to result in problems on the attached networks. The most serious problem had to do with forwarding of a broadcast packet because of a misunderstanding by either the sender or the receiver of the packet’s destination. The packet-forwarding router functions are disabled by default in 4.4BSD. They may be enabled when a kernel binary is configured, and can be enabled at run time with the sysctl call. Hosts not configured as routers never attempt to forward packets or to return error messages in response to misdirected packets. As a result, far fewer misconfiguration problems are capable of causing synchronized or repetitive broadcasts on a local network, called broadcast storms.

The procedure for forwarding IP packets received at a router but destined for another host is the following:

1. Check that forwarding is enabled. If it is not, drop the packet.

2. Check that the destination address is one that allows forwarding. Packets destined for network 0, network 127 (the official loopback network), or illegal network addresses cannot be forwarded.

3. Save at most 64 octets of the received message, in case an error message must be generated in response.

4. Determine the route to be used in forwarding the packet.

5. If the outgoing route uses the same interface as that on which the packet was received, and if the originating host is on that network, send an ICMP redirect message to the originating host. (ICMP is described in Section 13.8.)

6. Call ip_output() to send the packet to its destination or to the next-hop gateway.

7. If an error is detected, send an ICMP error message to the source host.
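The address test in step 2 might look like the sketch below. The function name is hypothetical, addresses are taken in host byte order, and the treatment of the reserved range (first octet 240 and above) as "illegal" is our assumption about what the text means; multicast destinations are not considered here because they are handled separately.

```c
#include <stdint.h>

/*
 * Return 1 if a packet for dst (host byte order) may be forwarded,
 * or 0 if it must be dropped.
 */
int can_forward_sketch(uint32_t dst)
{
    uint32_t first = dst >> 24;          /* leading octet of the address */

    if (first == 0 || first == 127)      /* network 0 and loopback network */
        return 0;
    if (first >= 240)                    /* assumption: reserved, "illegal" */
        return 0;
    return 1;
}
```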

Multicast transmissions are handled separately from other packets. Systems may be configured as multicast agents independently from other routing functions. Multicast agents receive all incoming multicast packets, and forward those packets to local receivers and group members on other networks according to group memberships and the remaining hop count of incoming packets.

13.4 Transmission Control Protocol (TCP)

The major protocol of the Internet protocol suite is the Transmission Control Protocol (TCP) [Postel, 1981b; Cerf & Kahn, 1974]. TCP is the reliable connection-oriented stream transport protocol on which most application protocols are based. It includes several features not found in the other transport and network protocols described so far:

• Explicit and acknowledged connection initiation and termination

• Reliable, in-order, unduplicated delivery of data

• Flow control

• Out-of-band indication of urgent data

• Congestion avoidance

Because of these features, the TCP implementation is much more complicated than are those of UDP and IP. These complications, along with the prevalence of the use of TCP, make the details of TCP’s implementation both more critical and more interesting than are the implementations of the simpler protocols. We shall begin with an examination of the TCP itself, then continue with a description of its implementation in 4.4BSD.

A TCP connection may be viewed as a bidirectional, sequenced stream of data octets transferred between two peers. The data may be sent in packets of varying sizes and at varying intervals (for example, when they are used to support a login session over the network). The stream initiation and termination are explicit events at the start and end of the stream, and they occupy positions in the sequence space of the stream so that they can be acknowledged in the same manner as data are. Sequence numbers are 32-bit numbers from a circular space; that is, comparisons are made modulo 2^32, so that zero is the next sequence number after 2^32 - 1. The sequence numbers for each direction start with an arbitrary value, called the initial sequence number, sent in the initial packet for a connection. In accordance with the TCP specification, the TCP implementation selects the initial sequence number by sampling a software counter that increments at about 250 kHz, then incrementing the counter so that later connections choose a different starting point, reducing the chance that an old duplicate packet will match the sequence space of a current connection. 4.4BSD includes a random component in the counter value so that the initial sequence number is somewhat less predictable, making it harder to “spoof” a network connection. Each packet of a TCP connection carries the sequence number of its first datum and (except during connection establishment) an acknowledgment of all contiguous data received. A TCP packet is known as a segment because it begins at a specific location in the sequence space and has a specific length. Acknowledgments are specified as the sequence number of the next datum not yet received. Acknowledgments are cumulative, and thus may acknowledge data received in more than one (or part of one) packet. A packet may or may not contain data, but always contains the sequence number of the next datum to be sent.
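Comparisons in a circular sequence space are commonly written by subtracting the two values and examining the sign of the 32-bit result. The sketch below shows that technique; the function names are ours, and the code assumes the usual two's-complement conversion from unsigned to signed 32-bit integers. The comparison is meaningful only when the two values are less than 2^31 apart.

```c
#include <stdint.h>

typedef uint32_t tcp_seq_t;   /* hypothetical name for a sequence number */

/* a precedes b in the circular space */
int seq_lt(tcp_seq_t a, tcp_seq_t b)  { return (int32_t)(a - b) < 0; }

/* a precedes or equals b */
int seq_leq(tcp_seq_t a, tcp_seq_t b) { return (int32_t)(a - b) <= 0; }

/* a follows b */
int seq_gt(tcp_seq_t a, tcp_seq_t b)  { return (int32_t)(a - b) > 0; }

/* a follows or equals b */
int seq_geq(tcp_seq_t a, tcp_seq_t b) { return (int32_t)(a - b) >= 0; }
```

With these definitions, the sequence number 2^32 - 1 precedes zero, as the circular space requires, even though it is numerically larger.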

Flow control in TCP is done with a sliding-window scheme. Each packet with an acknowledgment contains a window, which is the number of octets of data that the receiver is prepared to accept, beginning with the sequence number in the acknowledgment. The window is a 16-bit field, limiting the window to 65535 octets by default; however, the use of a larger window may be negotiated (see the next subsection). Urgent data are handled similarly; if the flag indicating urgent data is set, the urgent-data pointer is used as a positive offset from the sequence number of the packet to indicate the extent of urgent data. Thus, TCP can send notification of urgent data without sending all intervening data, even if the flow-control window would not allow the intervening data to be sent.

The complete header for a TCP packet is shown in Fig. 13.7. The flags include SYN and FIN, denoting the initiation (synchronization) and completion of a connection. Each of these flags occupies a sequence space of one. A complete connection thus consists of a SYN, zero or more octets of data, and a FIN sent from each peer and acknowledged by the other peer. Additional flags indicate whether the acknowledgment field (ACK) and urgent fields (URG) are valid, and include a connection-abort signal (RST). The header includes a header-length field so that the header can be extended with optional fields. Options are encoded in the same way as are IP options: the no-operation and end-of-options options are single octets, and all other options include a type and a length. The only option in the initial specification of TCP indicates the maximum segment (packet) size that a correspondent is willing to accept; this option is used only during initial connection establishment. Several other options have been defined. To avoid confusion, the protocol standard allows these options to be used in data packets only if both endpoints include them during establishment of the connection.

Figure 13.7 TCP packet header.

TCP Connection States

The connection-establishment and connection-completion mechanisms of TCP are designed for robustness. They serve to frame the data that are transferred during a connection, so that not only the data but also their extent are communicated reliably. In addition, the procedure is designed to discover old connections that have not terminated correctly because of a crash of one peer or loss of network connectivity. If such a half-open connection is discovered, it is aborted. Hosts choose new initial sequence numbers for each connection to lessen the chances that an old packet may be confused with a current connection.

The normal connection-establishment procedure is known as a three-way handshake. Each peer sends a SYN to the other, and each in turn acknowledges the other’s SYN with an ACK. In practice, a connection is normally initiated by one of the two (the client) attempting to connect to the other (a server listening on a well-known port). The client chooses a port number and initial sequence number and uses these selections in the initial packet with a SYN. The server creates a new connection block for the pending connection and sends a packet with its initial sequence number, a SYN, and an ACK of the client’s SYN. The client responds with an ACK of the server’s SYN, completing connection establishment. As the ACK of the first SYN is piggybacked on the second SYN, this procedure requires three packets, leading to the term three-way handshake. (The protocol still operates correctly if both peers initiate the connection simultaneously, although it requires four packets in that case.)
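The sequence and acknowledgment numbers carried by the three packets can be written out explicitly. The sketch below fills in a hypothetical, reduced segment structure for a client with initial sequence number client_iss and a server with initial sequence number server_iss; it models only the numbering, not ports, windows, options, or retransmission. The flag values are those of the SYN and ACK bits in the TCP header.

```c
#include <stdint.h>

#define SK_SYN 0x02   /* SYN bit of the TCP flags field */
#define SK_ACK 0x10   /* ACK bit of the TCP flags field */

/* Hypothetical, reduced view of a segment header. */
struct sk_seg {
    uint8_t  flags;
    uint32_t seq;
    uint32_t ack;
};

/* Fill out[0..2] with the three packets of a normal handshake. */
void three_way(uint32_t client_iss, uint32_t server_iss, struct sk_seg out[3])
{
    /* 1. client -> server: SYN */
    out[0].flags = SK_SYN;
    out[0].seq = client_iss;
    out[0].ack = 0;                      /* not valid without ACK */

    /* 2. server -> client: SYN plus ACK of the client's SYN */
    out[1].flags = SK_SYN | SK_ACK;
    out[1].seq = server_iss;
    out[1].ack = client_iss + 1;         /* the SYN occupies one number */

    /* 3. client -> server: ACK of the server's SYN */
    out[2].flags = SK_ACK;
    out[2].seq = client_iss + 1;
    out[2].ack = server_iss + 1;
}
```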

4.4BSD includes three options along with SYN when initiating a connection. One contains the maximum segment size that the system is willing to accept. The other two options are more recent additions [Jacobson et al, 1992]. The first of these options specifies a window-scaling value expressed as a binary shift value, allowing the window to exceed 65535 octets. If both peers include this option during the three-way handshake, both scaling values take effect; otherwise, the window value remains in octets. The third option is a timestamp option. If this option is sent in both directions during connection establishment, it will also be sent in each packet during data transfer. The data field of the timestamp option includes a timestamp associated with the current sequence number, and also echoes a timestamp associated with the current acknowledgment. Like the sequence space, the timestamp uses a 32-bit field and modular arithmetic. The unit of the timestamp field is not defined, although it must fall between 1 millisecond and 1 second. The value sent by each system must be monotonically nondecreasing during a connection. 4.4BSD uses the value of a counter that is incremented twice per second. These timestamps can be used to implement round-trip timing. They also serve as an extension of the sequence space to prevent old duplicate packets from being accepted; this extension is valuable when a large window or a fast path is used.
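The effect of the window-scaling option on the 16-bit window field can be sketched as a single shift. The function name and the negotiated flag are hypothetical; the limit of 14 on the shift value comes from the window-scaling specification rather than from the text above.

```c
#include <stdint.h>

/*
 * Convert the 16-bit window field of a received header into octets.
 * negotiated is nonzero only if both peers sent the window-scale
 * option during the three-way handshake.
 */
uint32_t scaled_window(uint16_t field, unsigned shift, int negotiated)
{
    if (!negotiated)
        return field;                    /* plain octet count */
    if (shift > 14)
        shift = 14;                      /* limit set by the specification */
    return (uint32_t)field << shift;
}
```

A window field of 65535 with a shift of 2 therefore advertises 262140 octets.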

After a connection is established, each peer includes an acknowledgment and window information in each packet. Each may send data according to the window that it receives from its peer. As data are sent by one end, the window becomes filled. As data are received by the peer, acknowledgments may be sent so that the sender can discard the data from its send queue. If the receiver is prepared to accept additional data, perhaps because the receiving process has consumed the previous data, it will also advance the flow-control window. Data, acknowledgments, and window updates may all be combined in a single message.

Table 13.1 TCP connection states.

If a sender does not receive an acknowledgment within some reasonable time, it retransmits data that it presumes were lost. Duplicate data are discarded by the receiver but are acknowledged again in case the retransmission was caused by loss of the acknowledgment. If the data are received out of order, the receiver generally retains the out-of-order data for use when the missing segment is received. Out-of-order data cannot be acknowledged, because acknowledgments are cumulative.†

†A selective acknowledgment mechanism was introduced in [Jacobson et al, 1992], but is not implemented in 4.4BSD.

Each peer may terminate data transmission at any time by sending a packet with the FIN bit. A FIN represents the end of the data (like an end-of-file indication). The FIN is acknowledged, advancing the sequence number by 1. The connection may continue to carry data in the other direction until a FIN is sent in that direction. The acknowledgment of that FIN terminates the connection. To guarantee synchronization at the conclusion of the connection, the peer sending the last ACK of a FIN must retain state long enough that any retransmitted FIN packets would have reached it or have been discarded; otherwise, if the ACK were lost and a retransmitted FIN were received, the receiver would be unable to repeat the acknowledgment. This interval is arbitrarily set to twice the maximum expected segment lifetime (known as 2MSL).

The TCP input-processing module and timer modules must maintain the state of a connection throughout that connection’s lifetime. Thus, in addition to processing data received on the connection, the input module must process SYN and FIN flags and other state transitions. The list of states for one end of a TCP connection is given in Table 13.1. Figure 13.8 shows the finite-state machine made up by these states, the events that cause transitions, and the actions during the transitions. An earlier version of the TCP implementation was implemented as an explicit state machine.

If a connection is lost because of a crash or timeout on one peer, but is still considered established by the other, then any data sent on the connection and received at the other end will cause the half-open connection to be discovered. When a half-open connection is detected, the receiving peer sends a packet with the RST flag and a sequence number derived from the incoming packet to signify that the connection is no longer in existence.

Figure 13.8 TCP state diagram. TCB—TCP control block; 2MSL—twice maximum segment lifetime.

Sequence Variables

Each TCP connection maintains a large set of state variables in the TCP control block. This information includes the connection state, timers, options and state flags, a queue that holds data received out of order, and several sequence number variables. The sequence variables are used to define the send and receive sequence space, including the current window for each. The window is the range of data sequence numbers that are currently allowed to be sent, from the first octet of data not yet acknowledged up to the end of the range that has been offered in the window field of a header. The variables used to define the windows in 4.4BSD are a superset of those used in the protocol specification [Postel, 1981b]. The send and receive windows are shown in Fig. 13.9. The meanings of the sequence variables are listed in Table 13.2.

The area between snd_una and snd_una + snd_wnd is known as the send window. Data for the range snd_una to snd_max have been sent but not yet acknowledged, and are kept in the socket send buffer along with data not yet transmitted. The snd_nxt field indicates the next sequence number to be sent, and is incremented as data are transmitted. The area from snd_nxt to snd_una + snd_wnd is the remaining usable portion of the window, and its size determines whether additional data may be sent. The snd_nxt and snd_max values are normally maintained together except when TCP is retransmitting.
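The size of the usable portion of the send window follows directly from the three variables. In the sketch below, the function name is ours; the arithmetic is done modulo 2^32, and a negative result (possible if the peer has shrunk its window) is reported as zero.

```c
#include <stdint.h>

/*
 * Number of octets that may still be sent: the distance from snd_nxt
 * to the right edge of the send window at snd_una + snd_wnd.
 */
uint32_t usable_window(uint32_t snd_una, uint32_t snd_nxt, uint32_t snd_wnd)
{
    int32_t win = (int32_t)(snd_una + snd_wnd - snd_nxt);

    return win > 0 ? (uint32_t)win : 0;
}
```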

The area between rcv_nxt and rcv_nxt + rcv_wnd is known as the receive window. These variables are used in the output module to decide whether data can be sent, and in the input module to decide whether data that are received can be accepted. When the receiver detects that a packet is not acceptable because the data are all outside the window, it drops the packet, but sends a copy of its most recent acknowledgment. If the packet contained old data, the first acknowledgment may have been lost, and thus it must be repeated. The acknowledgment also includes a window update, synchronizing the sender’s state with the receiver’s state.
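The test for data that lie entirely outside the receive window can be sketched as two circular comparisons. This is a simplification under stated assumptions: the function name is hypothetical, and the sketch covers only segments that carry data arriving at a nonzero window; the protocol specification treats zero-length segments and a zero window as special cases that are omitted here.

```c
#include <stdint.h>

/*
 * Return 1 if any octet of the segment [seq, seq + len) falls inside
 * the receive window [rcv_nxt, rcv_nxt + rcv_wnd), else 0.
 * Assumes len > 0 and rcv_wnd > 0.
 */
int seg_in_window(uint32_t seq, uint32_t len,
                  uint32_t rcv_nxt, uint32_t rcv_wnd)
{
    /* Entirely old: the segment ends at or before rcv_nxt. */
    if ((int32_t)(seq + len - rcv_nxt) <= 0)
        return 0;
    /* Entirely new: the segment starts at or past the right edge. */
    if ((int32_t)(seq - (rcv_nxt + rcv_wnd)) >= 0)
        return 0;
    return 1;
}
```

A segment that fails this test is dropped, and the receiver repeats its most recent acknowledgment.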

Figure 13.9 TCP sequence space.

Table 13.2 TCP sequence variables.

If the TCP timestamp option is in use for the connection, the tests to see whether an incoming packet is acceptable are augmented with checks on the timestamp. Each time that an incoming packet is accepted as the next expected packet, its timestamp is recorded in the ts_recent field in the TCP protocol control block. If an incoming packet includes a timestamp, the timestamp is compared to the most recently received timestamp. If the timestamp is less than the previous value, the packet is discarded as being an old duplicate and a current acknowledgment is sent in response. In this way, the timestamp serves as an extension to the sequence number, avoiding accidental acceptance of an old duplicate when the window is large or sequence numbers can be reused quickly. However, because of the granularity of the timestamp value, a timestamp received more than 24 days ago cannot be compared to a new value, and this test is bypassed. The current time is recorded when ts_recent is updated from an incoming timestamp to make this test. Of course, connections are seldom idle for longer than 24 days.
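The timestamp test, including the 24-day bypass, can be sketched as follows. The function and macro names are hypothetical. Time is counted in ticks of the twice-per-second counter, so 24 days is 24 × 24 × 60 × 60 × 2 = 4,147,200 ticks. The caller is assumed to have already established that a previous timestamp was recorded.

```c
#include <stdint.h>

#define SK_SLOWHZ    2u                                  /* ticks per second */
#define SK_PAWS_IDLE (24u * 24u * 60u * 60u * SK_SLOWHZ) /* 24 days of ticks */

/*
 * Return 1 if a segment carrying timestamp ts_val should be discarded
 * as an old duplicate.  ts_recent is the most recently recorded
 * timestamp, ts_recent_age is the local time at which it was recorded,
 * and now is the current local time.
 */
int paws_reject(uint32_t ts_val, uint32_t ts_recent,
                uint32_t now, uint32_t ts_recent_age)
{
    if ((int32_t)(ts_val - ts_recent) >= 0)
        return 0;                        /* not older than the last one */
    if ((uint32_t)(now - ts_recent_age) > SK_PAWS_IDLE)
        return 0;                        /* record too stale: bypass test */
    return 1;
}
```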

13.5 TCP Algorithms

Now that we have introduced TCP, its state machine, and its sequence space, we can begin to examine the implementation of the protocol in 4.4BSD. Several aspects of the protocol implementation depend on the overall state of a connection. The TCP connection state, output state, and state changes depend on external events and timers. TCP processing occurs in response to one of three events:

1. A request from the user, such as sending data, removing data from the socket receive buffer, or opening or closing a connection

2. The receipt of a packet for the connection

3. The expiration of a timer

These events are handled in the routines tcp_usrreq(), tcp_input(), and tcp_timers(), respectively. Each routine processes the current event and makes any required changes in the connection state. Then, for any transition that may require output, the tcp_output() routine is called to do any output that is necessary.

The criteria for sending a packet with data or control information are complicated, and therefore the TCP send policy is the most interesting and important part of the protocol implementation. For example, depending on the state and flow-control parameters for a connection, any of the following may allow data to be sent that could not be sent previously:

• A user send call that places new data in the send queue

• The receipt of a window update from the peer TCP

• The expiration of the retransmission timer

• The expiration of the window-update (persist) timer

In addition, the tcp_output() routine may decide to send a packet with control information, even if no data may be sent, for any of these reasons:

• A change in connection state (e.g., open request, close request)

• Receipt of data that must be acknowledged

• A change in the receive window because of removal of data from the receive queue

• A send request with urgent data

• A connection abort

We shall consider most of these decisions in greater detail after we have described the states and timers involved. We begin with algorithms used for timing, connection setup, and shutdown; they are distributed through several parts of the code. We continue with the processing of new input and an overview of output processing and algorithms.

Timers

Unlike a UDP socket, a TCP connection maintains a significant amount of state information, and, because of that state, some operations must be done asynchronously. For example, data might not be sent immediately when a process presents them, because of flow control. The requirement for reliable delivery implies that data must be retained after they are first transmitted so that they can be retransmitted if necessary. To prevent the protocol from hanging if packets are lost, each connection maintains a set of timers used to recover from losses or failures of the peer TCP. These timers are stored in the protocol control block for a connection. Whenever they are set, they are decremented every 500 milliseconds by the tcp_slowtimo() routine (called as the TCP protocol switch pr_slowtimo routine) until they expire, triggering a call to tcp_timers().
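The countdown scheme can be sketched as a routine that is called once per 500-millisecond tick for each connection. The structure, the enum names, and the function are hypothetical simplifications; in particular, the sketch returns a bit mask of the timers that expired instead of calling tcp_timers() for each one.

```c
/* Hypothetical timer indices for one connection. */
enum { SK_T_REXMT, SK_T_PERSIST, SK_T_KEEP, SK_T_2MSL, SK_T_NTIMERS };

/* Per-connection timers, counted in 500-millisecond ticks; 0 means not set. */
struct sk_timers {
    short t_timer[SK_T_NTIMERS];
};

/*
 * Process one slow-timeout tick for a connection.  Each running timer
 * is decremented; bit i of the result is set if timer i reached zero
 * on this tick and so requires timeout processing.
 */
unsigned slowtimo_tick(struct sk_timers *tp)
{
    unsigned expired = 0;
    int i;

    for (i = 0; i < SK_T_NTIMERS; i++)
        if (tp->t_timer[i] != 0 && --tp->t_timer[i] == 0)
            expired |= 1u << i;
    return expired;
}
```

Because a value of zero means that a timer is not set, starting a timer is just an assignment of a nonzero tick count, and stopping it is an assignment of zero.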

Two timers are used for output processing. One is the retransmit timer (TCPT_REXMT). Whenever data are sent on a connection, the retransmit timer is started, unless it is already running. When all outstanding data are acknowledged, the timer is stopped. If the timer expires, the oldest unacknowledged data are resent (at most one full-sized packet) and the timer is restarted with a longer value. The rate at which the timer value is increased (the timer backoff) is determined by a table of multipliers that provides an exponential increase in timeout values up to a ceiling.
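A table-driven backoff of this kind can be sketched as shown below. The table contents, the names, and the ceiling parameter are illustrative assumptions rather than values quoted from the text: the multipliers double up to 64 and then stay constant, and the resulting timer value is clamped to a caller-supplied maximum.

```c
#define SK_MAXSHIFT 12   /* hypothetical limit on consecutive retransmissions */

/* Illustrative multipliers: exponential growth, then a flat ceiling. */
static const int sk_backoff[SK_MAXSHIFT + 1] =
    { 1, 2, 4, 8, 16, 32, 64, 64, 64, 64, 64, 64, 64 };

/*
 * Compute the retransmit timer value, in ticks, after shift consecutive
 * timeouts, given the base timeout and an upper bound on the result.
 */
int rexmt_value(int base, int shift, int ceiling)
{
    int v;

    if (shift < 0)
        shift = 0;
    if (shift > SK_MAXSHIFT)
        shift = SK_MAXSHIFT;
    v = base * sk_backoff[shift];
    return v > ceiling ? ceiling : v;
}
```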

The other timer used for maintaining output flow is the persist timer (TCPT_PERSIST). This timer protects against the other type of packet loss that could cause a connection to constipate: the loss of a window update that would allow more data to be sent. Whenever data are ready to be sent, but the send window is too small to bother sending (zero, or less than a reasonable amount), and no data are already outstanding (the retransmit timer is not set), the persist timer is started. If no window update is received before the timer expires, the output routine sends as large a segment as the window allows. If that size is zero, it sends a window probe (a single octet of data) and restarts the persist timer. If a window update was lost in the network, or if the receiver neglected to send a window update, the acknowledgment will contain current window information. On the other hand, if the receiver is still unable to accept additional data, it should send an acknowledgment for previous data with a still-closed window. The closed window might persist indefinitely; for example, the receiver might be a network-login client, and the user might stop terminal output and leave for lunch (or vacation).

The third timer used by TCP is a keepalive timer (TCPT_KEEP). The keepalive timer has two different purposes at different phases of a connection. During connection establishment, this timer limits the time for the three-way handshake to complete. If it expires, the connection is timed out. Once the connection completes, the keepalive timer monitors idle connections that might no longer exist on the correspondent TCP because of timeout or a crash. If a socket-level option is set and the connection has been idle since the most recent keepalive timeout, the timer routine will send a keepalive packet designed to produce either an acknowledgment or a reset (RST) from the peer TCP. If a reset is received, the connection will be closed; if no response is received after several attempts, the connection will be dropped. This facility is designed so that network servers can avoid languishing forever if the client disappears without closing. Keepalive packets are not an explicit feature of the TCP protocol. The packets used for this purpose by 4.4BSD set the sequence number to 1 less than snd_una, which should elicit an acknowledgment from the correspondent TCP if the connection still exists.†

†In 4.4BSD, the keepalive packet contains no data unless the system is configured with a kernel option for compatibility with 4.2BSD, in which case a single null octet is sent. A bug prevented 4.2BSD from responding to a keepalive packet unless the packet contained data. This option should no longer be necessary.

The final TCP timer is known as the 2MSL timer (TCPT_2MSL; “twice the maximum segment lifetime”). TCP starts this timer when a connection is completed by sending an acknowledgment for a FIN (from FIN_WAIT_2) or by receiving an ACK for a FIN (from CLOSING state, where the send side is already closed). Under these circumstances, the sender does not know whether the acknowledgment was received. If the FIN is retransmitted, it is desirable that enough state remain that the acknowledgment can be repeated. Therefore, when a TCP connection enters the TIME_WAIT state, the 2MSL timer is started; when the timer expires, the control block is deleted. If a retransmitted FIN is received, another ACK is sent and the timer is restarted. To prevent this delay from blocking a process closing the connection, any process close request is returned successfully without the process waiting for the timer. Thus, a protocol control block may continue its existence even after the socket descriptor has been closed. In addition, 4.4BSD starts the 2MSL timer when FIN_WAIT_2 state is entered after the user has closed; if the connection is idle until the timer expires, it will be closed. Because the user has already closed, new data cannot be accepted on such a connection in any case. This timer is set because certain other TCP implementations (incorrectly) fail to send a FIN on a receive-only connection. Connections to such hosts would remain in FIN_WAIT_2 state forever if the system did not have a timeout.

In addition to the four timers implemented by the TCP tcp_slowtimo() routine, TCP uses the protocol switch pr_fasttimo entry. The tcp_fasttimo() routine, called every 200 milliseconds, processes delayed acknowledgment requests. These functions will be described in Section 13.6.

Estimation of Round-Trip Time

When connections must traverse slow networks that lose packets, an important decision determining connection throughput is the value to be used when the retransmission timer is set. If this value is too large, data flow will stop on the connection for an unnecessarily long time before the dropped packet is resent. Another round-trip time interval is required for the sender to receive an acknowledgment of the resent segment and a window update, allowing it to send new data. (With luck, only one segment will have been lost, and the acknowledgment will include the other segments that had been sent.) If the timeout value is too small, however, packets will be retransmitted needlessly. If the cause of the network slowness or packet loss is congestion, then unnecessary retransmission only exacerbates the problem. The traditional solution to this problem in TCP is for the sender to estimate the round-trip time (rtt) for the connection path by measuring the time required to receive acknowledgments for individual segments. The system maintains an estimate of the round-trip time as a smoothed moving average, srtt [Postel, 1981b], using

srtt = (ALPHA × srtt) + ((1 - ALPHA) × rtt).

Older versions of the system set the initial retransmission timeout to a constant multiple (BETA) of the current smoothed round-trip time, with a smoothing factor ALPHA of 0.9 (retaining 90 percent of the previous average) and a variance factor BETA of 2. BSD versions, beginning with the 4.3BSD Tahoe release, use a more sophisticated algorithm. In addition to a smoothed estimate of the round-trip time, TCP keeps a smoothed variance (estimated as mean difference, to avoid square-root calculations in the kernel). It employs an ALPHA value of 0.875 for the round-trip time and a corresponding smoothing factor of 0.75 for the variance. These values were chosen in part so that the system could compute the smoothed averages using shift operations on fixed-point values, instead of using floating-point values, as the earlier system did. (On many hardware architectures, it is expensive to use floating-point arithmetic in interrupt routines, because doing so forces floating-point registers and status to be saved and restored.) The initial retransmission timeout is then set to the current smoothed round-trip time plus four times the smoothed variance. This algorithm is substantially more efficient on long-delay paths with little variance in delay, such as satellite links, because it computes the BETA factor dynamically [Jacobson, 1988].
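The shift-based computation can be sketched with two fixed-point values. This is an illustration of the technique under our own assumptions, not the kernel's code: the structure and function names are hypothetical, the smoothed round-trip time is stored multiplied by 8 and the smoothed variance multiplied by 4, and all times are in timer ticks. With those scalings, adding one-eighth of the error gives ALPHA = 0.875, adding one-quarter of the deviation error gives a factor of 0.75, and the stored variance is already four times the estimate.

```c
#include <stdint.h>

/* Hypothetical fixed-point estimator state. */
struct rtt_est {
    int32_t srtt;     /* smoothed round-trip time, in ticks, scaled by 8 */
    int32_t rttvar;   /* smoothed mean deviation, in ticks, scaled by 4 */
};

/*
 * Fold a new round-trip measurement (in ticks) into the estimates and
 * return the retransmission timeout: srtt plus four times the variance.
 */
int32_t rtt_update(struct rtt_est *e, int32_t rtt)
{
    int32_t delta = rtt - (e->srtt >> 3);   /* error in the estimate */

    e->srtt += delta;                       /* srtt = 7/8 srtt + 1/8 rtt */
    if (delta < 0)
        delta = -delta;
    delta -= e->rttvar >> 2;                /* error in the deviation */
    e->rttvar += delta;                     /* var = 3/4 var + 1/4 |error| */
    return (e->srtt >> 3) + e->rttvar;      /* rttvar is already 4 x var */
}
```

For example, starting from a smoothed round-trip time of 10 ticks and a variance of 2 ticks, a measurement of 18 ticks moves the estimates to 11 and 3.5 ticks, giving a timeout of 11 + 4 × 3.5 = 25 ticks.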

For simplicity, the variables in the TCP protocol control block allow measurement of the round-trip time for only one sequence value at a time. This restriction prevents accurate time estimation when the window is large; only one packet per window can be timed. However, if the TCP timestamps option is supported by both peers, a timestamp is sent with each data packet and is returned with each acknowledgment. In this case, estimates of round-trip time can be obtained with each new acknowledgment; the quality of the smoothed average and variance is thus improved, and the system can respond more quickly to changes in network conditions.

Connection Establishment

There are two ways in which a new TCP connection can be established. An active connection is initiated by a connect call, whereas a passive connection is created when a listening socket receives a connection request. We consider each in turn.

The initial steps of an active connection attempt are similar to the actions taken during the creation of a UDP socket. The process creates a new socket, resulting in a call to tcp_usrreq() with the PRU_ATTACH request. TCP creates an inpcb protocol control block just as does UDP, then creates an additional control block (a tcpcb structure), as described in Section 13.1. Some of the flow-control parameters in the tcpcb are initialized at this time. If the process explicitly binds an address or port number to the connection, the actions are identical to those for a UDP socket. Then, a connect call initiates the actual connection. The first step is to set up the association with in_pcbconnect(), again identically to this step in UDP. A packet-header template is created for use in construction of each output packet. An initial sequence number is chosen from a sequence-number prototype, which is then advanced by a substantial amount. The socket is then marked with soisconnecting(), the TCP connection state is set to TCPS_SYN_SENT, the keepalive timer is set (to 75 seconds) to limit the duration of the connection attempt, and tcp_output() is called for the first time.

The output-processing module tcp_output() uses an array of packet control flags indexed by the connection state to determine which control flags should be sent in each state. In the TCPS_SYN_SENT state, the SYN flag is sent. Because it has a control flag to send, the system sends a packet immediately using the prototype just constructed and including the current flow-control parameters. The packet normally contains three option fields: a maximum-segment-size option, a window-scale option and a timestamps option (see Section 13.4). The maximum-segment-size option communicates the largest segment size that TCP is willing to accept. To compute this value, the system locates a route to the destination. If the route specifies a maximum transmission unit (MTU), the system uses that value after allowing for packet headers. If the connection is to a destination on a local network (or a subnet of a local network—see Section 13.1), the maximum transmission unit of the outgoing network interface is used, possibly rounding down to a multiple of the mbuf cluster size for efficiency of buffering. If the destination is not local and nothing is known about the intervening path,† the default segment size (512 octets) is used. The retransmit timer is set to the default value (6 seconds), because no round-trip time information is available yet.

†TCP should use Path MTU Discovery as described in [Mogul & Deering, 1990]. However, this feature is not implemented in 4.4BSD.
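The three cases for choosing the maximum segment size to advertise can be summarized as follows. This is a sketch under stated assumptions: struct path_info and choose_mss() are hypothetical names, the header allowance and cluster size are passed in by the caller, and the rule that rounding applies only when the segment size exceeds one cluster is our reading of "possibly rounding down", not a statement about the kernel source.

```c
#include <assert.h>

#define DEFAULT_MSS 512   /* default segment size given in the text */

/* Hypothetical summary of what is known about the path. */
struct path_info {
    int route_mtu;   /* MTU recorded in the route, or 0 if none */
    int if_mtu;      /* MTU of the outgoing network interface */
    int is_local;    /* nonzero if the destination is on a local network */
};

/* Pick the segment size to offer in the SYN. */
int choose_mss(const struct path_info *p, int hdr_len, int cluster_size)
{
    int mss;

    assert(hdr_len >= 0 && cluster_size > 0);
    if (p->route_mtu > 0)
        return p->route_mtu - hdr_len;      /* route gives an explicit MTU */
    if (p->is_local) {
        mss = p->if_mtu - hdr_len;
        if (mss > cluster_size)             /* assumption: round only if larger */
            mss -= mss % cluster_size;      /* whole clusters buffer efficiently */
        return mss;
    }
    return DEFAULT_MSS;                     /* nothing known about the path */
}
```

For example, with 40 octets of headers and 1024-octet clusters, a local destination reached through an interface with a 1500-octet MTU would be offered 1024 octets in this sketch, whereas a distant destination with no route MTU would be offered 512.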

With a bit of luck, a responding packet will be received from the target of the connection before the retransmit timer expires. If not, the packet is retransmitted and the retransmit timer is restarted with a greater value. If no response is received before the keepalive timer expires, the connection attempt is aborted with a “Connection timed out” error. If a response is received, however, it is checked for agreement with the outgoing request. It should acknowledge the SYN that was sent, and should include a SYN. If it does both, the receive sequence variables are initialized, and the connection state is advanced to TCPS_ESTABLISHED. If a maximum-segment-size option is present in the response, the maximum segment size for the connection is set to the minimum of the offered size and the maximum transmission unit of the outgoing interface; if the option is not present, the default size (512 data bytes) is recorded. The flag TF_ACKNOW is set in the TCP control block before the output routine is called, so that the SYN will be acknowledged immediately. The connection is now ready to transfer data.
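The checks applied to the response can be illustrated with a small sketch. The function names are hypothetical, and the sketch is deliberately simplified: it assumes that only the SYN has been sent, so that the only valid acknowledgment is the initial send sequence number plus one, and it does not cover a simultaneous open. The flag values are the standard TCP header bits.

```c
#include <stdint.h>

#define TH_SYN 0x02       /* TCP header flag bits */
#define TH_ACK 0x10
#define DEFAULT_MSS 512   /* default used when no option is present */

/* Does a reply received in SYN_SENT both acknowledge our SYN and carry one? */
int synsent_reply_ok(uint32_t iss, uint8_t flags, uint32_t ack)
{
    if (!(flags & TH_ACK) || ack != (uint32_t)(iss + 1))
        return 0;                      /* our SYN was not acknowledged */
    return (flags & TH_SYN) != 0;      /* the peer must send its own SYN */
}

/* Segment size for the connection: the smaller of the peer's offer and the
 * limit imposed by the outgoing interface; offered <= 0 means no option. */
int connection_mss(int offered, int if_limit)
{
    if (offered <= 0)
        return DEFAULT_MSS;
    return offered < if_limit ? offered : if_limit;
}
```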

The events that occur when a connection is created by a passive open are different. A socket is created and its address is bound as before. The socket is then marked by the listen call as willing to accept connections. When a packet arrives for a TCP socket in TCPS_LISTEN state, a new socket is created with sonewconn(), which calls the TCP PRU_ATTACH request to create the protocol control blocks for the new socket. The new socket is placed on the queue of partial connections headed by the listening socket. If the packet contains a SYN and is otherwise acceptable, the association of the new socket is bound, both the send and the receive sequence numbers are initialized, and the connection state is advanced to TCPS_SYN_RECEIVED. The keepalive timer is set as before, and the output routine is called after TF_ACKNOW has been set to force the SYN to be acknowledged; an outgoing SYN is sent as well. If this SYN is acknowledged properly, the new socket is moved from the queue of partial connections to the queue of completed connections. If the owner of the listening socket is sleeping in an accept call or does a select, the socket will indicate that a new connection is available. Again, the socket is finally ready to send data. Up to one window of data may have already been received and acknowledged by the time that the accept call completes.

Connection Shutdown

A TCP connection is symmetrical and full-duplex, so either side may initiate disconnection independently. As long as one direction of the connection can carry data, the connection remains open. A socket may indicate that it has completed sending data with the shutdown system call, which results in a call to the tcp_usrreq() routine with request PRU_SHUTDOWN. The response to this request is that the state of the connection is advanced; from the ESTABLISHED state, the state becomes FIN_WAIT_1. The ensuing output call will send a FIN, indicating an end-of-file. The receiving socket will advance to CLOSE_WAIT, but may continue to send. The procedure may be different if the process simply closes the socket; in that case, a FIN is sent immediately, but if new data are received, they cannot be delivered. Normally, higher-level protocols conclude their own transactions such that both sides know when to close. If they do not, however, TCP must refuse new data; it does so by sending a packet with RST set if new data are received after the user has closed. If data remain in the send buffer of the socket when the close is done, TCP will normally attempt to deliver them. If the socket option SO_LINGER was set with a linger time of zero, the send buffer is simply flushed; otherwise, the user process is allowed to continue, and the protocol waits for delivery to conclude. Under these circumstances, the socket is marked with the state bit SS_NOFDREF (no file-descriptor reference). The completion of data transfer and the final close can take place an arbitrary amount of time later. When TCP finally completes the connection (or gives up because of timeout or other failure), it calls tcp_close(). The protocol control blocks and other dynamically allocated structures are freed at this time. The socket also is freed if the SS_NOFDREF flag has been set. Thus, the socket remains in existence as long as either a file descriptor or a protocol control block refers to it.
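From the point of view of a user process, the two ways of ending a connection look roughly like this. The helper names are hypothetical; the system calls and the linger structure are the standard sockets interface. The symbolic constant SHUT_WR is the POSIX spelling, and older programs pass the literal value 1 instead.

```c
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Finish sending: the peer sees end-of-file, but we may still receive. */
int finish_sending(int fd)
{
    return shutdown(fd, SHUT_WR);
}

/* Request that a later close() flush the send buffer instead of
 * waiting for delivery (SO_LINGER with a linger time of zero). */
int set_linger_zero(int fd)
{
    struct linger l;

    memset(&l, 0, sizeof l);
    l.l_onoff = 1;     /* enable the option */
    l.l_linger = 0;    /* linger time of zero */
    return setsockopt(fd, SOL_SOCKET, SO_LINGER, &l, sizeof l);
}

/* Close without attempting to deliver data still in the send buffer. */
int abortive_close(int fd)
{
    if (set_linger_zero(fd) < 0)
        return -1;
    return close(fd);
}
```

A process that wants its peer to receive everything it has written would call finish_sending(), read until end-of-file, and then close the socket normally.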

13.6 TCP Input Processing

Although TCP input processing is considerably more complicated than is UDP input handling, the preceding sections have provided the background that we need to examine the actual operation. As always, the input routine is called with parameters

    (void) tcp_input(m, hlen);
    struct mbuf *m;
    int hlen;

The first few steps probably are beginning to sound familiar:

1. Locate the TCP header in the received IP datagram. Make sure that the packet is at least as long as a TCP header, and use m_pullup() if necessary to make it contiguous.

2. Compute the packet length, set up the IP pseudoheader, and checksum the TCP header and data. Discard the packet if the checksum is bad.

3. Check the TCP header length; if it is larger than a minimal header, make sure that the whole header is contiguous.

4. Locate the protocol control block for the connection with the port number specified. If none exists, send a packet containing the reset flag RST and drop the packet.

5. Check whether the socket is listening for connections; if it is, follow the procedure described for passive connection establishment.

6. Process any TCP options from the packet header.

7. Clear the idle time for the connection, and set the keepalive timer to its normal value.
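Step 2 can be made concrete with a sketch of the checksum calculation. The code below is a portable, byte-at-a-time illustration with hypothetical function names; the kernel uses a tuned routine that operates on mbuf chains. The pseudoheader consists of the source and destination addresses, a zero octet, the protocol number (6 for TCP), and the TCP length.

```c
#include <stddef.h>
#include <stdint.h>

/* Add len bytes, taken as big-endian 16-bit words, to a running sum. */
static uint32_t sum_words(uint32_t sum, const uint8_t *p, size_t len)
{
    while (len > 1) {
        sum += ((uint32_t)p[0] << 8) | p[1];
        p += 2;
        len -= 2;
    }
    if (len)                        /* odd trailing byte, padded with zero */
        sum += (uint32_t)p[0] << 8;
    return sum;
}

/* Fold the carries back in and return the one's complement. */
static uint16_t fold(uint32_t sum)
{
    while (sum >> 16)
        sum = (sum & 0xffff) + (sum >> 16);
    return (uint16_t)~sum;
}

/* Checksum over the pseudoheader plus the TCP header and data. For a
 * received segment with its checksum field intact, a result of 0 means
 * that the checksum is good. */
uint16_t tcp_checksum(const uint8_t src[4], const uint8_t dst[4],
                      const uint8_t *seg, uint16_t seglen)
{
    uint8_t pseudo[12] = {
        src[0], src[1], src[2], src[3],
        dst[0], dst[1], dst[2], dst[3],
        0, 6,                                   /* zero, protocol = TCP */
        (uint8_t)(seglen >> 8), (uint8_t)(seglen & 0xff)
    };
    uint32_t sum = sum_words(0, pseudo, sizeof pseudo);

    return fold(sum_words(sum, seg, seglen));
}
```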

At this point, the normal checks have been made, and we are prepared to deal with data and control flags in the received packet. There are still many consistency checks that must be made during normal processing; for example, the SYN flag must be present if we are still establishing a connection, and must not be present if the connection has been established. We shall omit most of these checks from our discussion, but the tests are important to prevent wayward packets from causing confusion and possible data corruption.

The next step in checking a TCP packet is to see whether the packet is acceptable according to the receive window. It is important that this step be done before control flags—in particular RST—are examined, because old or extraneous packets should not affect the current connection unless they are clearly relevant in the current context. A segment is acceptable if the receive window has nonzero size, and if at least some of the sequence space occupied by the packet falls within the receive window. If the packet contains data, some of the data must fall within the window; portions of the data that precede the window are trimmed, as they have already been received, and portions that exceed the window also are discarded, as they have been sent prematurely. If the receive window is closed (rcv_wnd is zero), then only segments with no data and with a sequence number equal to rcv_nxt are acceptable. If an incoming segment is not acceptable, it is dropped after an acknowledgment is sent.
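The acceptability test and the trimming of data can be sketched as follows. The function names are hypothetical, and the sketch ignores the sequence space occupied by SYN and FIN flags. Sequence numbers are compared modulo 2^32 by examining the sign of their difference, so that the tests remain correct when the sequence space wraps around.

```c
#include <stdint.h>

/* Modular sequence comparisons: is a before (or after) b? */
static int seq_lt(uint32_t a, uint32_t b) { return (int32_t)(a - b) < 0; }
static int seq_gt(uint32_t a, uint32_t b) { return (int32_t)(a - b) > 0; }

/* Is a segment occupying [seq, seq + len) acceptable for this window? */
int segment_acceptable(uint32_t rcv_nxt, uint32_t rcv_wnd,
                       uint32_t seq, uint32_t len)
{
    if (rcv_wnd == 0)                       /* closed window */
        return len == 0 && seq == rcv_nxt;
    if (len == 0)                           /* must start inside the window */
        return !seq_lt(seq, rcv_nxt) && seq_lt(seq, rcv_nxt + rcv_wnd);
    /* Some of the data must overlap [rcv_nxt, rcv_nxt + rcv_wnd). */
    return seq_lt(seq, rcv_nxt + rcv_wnd) && seq_gt(seq + len, rcv_nxt);
}

/* Trim data that fall outside the window. Updates *seq to the first byte
 * kept and returns the number of bytes that remain. */
uint32_t trim_to_window(uint32_t rcv_nxt, uint32_t rcv_wnd,
                        uint32_t *seq, uint32_t len)
{
    int32_t before = (int32_t)(rcv_nxt - *seq);
    int32_t after;

    if (before > 0) {                       /* already received */
        if ((uint32_t)before >= len)
            return 0;
        *seq += (uint32_t)before;
        len -= (uint32_t)before;
    }
    after = (int32_t)(*seq + len - (rcv_nxt + rcv_wnd));
    if (after > 0) {                        /* sent prematurely */
        if ((uint32_t)after >= len)
            return 0;
        len -= (uint32_t)after;
    }
    return len;
}
```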

The processing of incoming TCP packets must be fully general, taking into account all the possible incoming packets and possible states of receiving endpoints. However, the bulk of the packets processed falls into two general categories. Typical packets contain either the next expected data segment for an existing connection or an acknowledgment plus a window update for one or more data segments, with no additional flags or state indications. Rather than considering each incoming segment based on first principles, tcp_input() checks first for these common cases. This algorithm is known as header prediction. If the incoming segment matches a connection in the ESTABLISHED state, if it contains the ACK flag but no other flags, if the sequence number is the next value expected (and the timestamp, if any, is nondecreasing), if the window field is the same as in the previous segment, and if the connection is not in a retransmission state, then the incoming segment is one of the two common types. The system processes any timestamp option that the segment contains, recording the value received to be included in the next acknowledgment. If the segment contains no data, it is a pure acknowledgment with a window update. In the usual case, round-trip–timing information is sampled if it is available, acknowledged data are dropped from the socket send buffer, and the sequence values are updated. The packet is discarded once the header values have been checked. The retransmit timer is canceled if all pending data have been acknowledged; otherwise, it is restarted. The socket layer is notified if any process might be waiting to do output. Finally, tcp_output() is called because the window has moved forward, and that operation completes the handling of a pure acknowledgment.

If a packet meeting the tests for header prediction contains the next expected data, if no out-of-order data are queued for the connection, and if the socket receive buffer has space for the incoming data, then this packet is a pure in-sequence data segment. The sequencing variables are updated, the packet headers are removed from the packet, and the remaining data are appended to the socket receive buffer. The socket layer is notified so that it can notify any interested process, and the control block is marked with a flag indicating that an acknowledgment is needed. No additional processing is required for a pure data packet.
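The header-prediction tests reduce to a handful of comparisons, which is why the common cases are so cheap. The sketch below uses hypothetical, cut-down structures in place of the TCP control block and packet header, and it omits several checks that a full implementation makes on the acknowledgment field. The flag values are the standard TCP header bits; leaving the PSH bit out of the mask is an assumption of this sketch.

```c
#include <stdint.h>

#define TH_FIN 0x01   /* TCP header flag bits */
#define TH_SYN 0x02
#define TH_RST 0x04
#define TH_ACK 0x10
#define TH_URG 0x20

enum fast_path { FP_NONE, FP_PURE_ACK, FP_PURE_DATA };

/* Hypothetical, cut-down connection state. */
struct conn {
    int      established;       /* nonzero in the ESTABLISHED state */
    uint32_t rcv_nxt;           /* next sequence number expected */
    uint32_t snd_wnd;           /* window from the previous segment */
    uint32_t snd_nxt, snd_max;  /* equal unless retransmitting */
    uint32_t ts_recent;         /* last timestamp value received */
    int      reass_empty;       /* no out-of-order data are queued */
    uint32_t rcv_space;         /* free space in the receive buffer */
};

/* Hypothetical summary of an incoming segment. */
struct seg {
    uint8_t  flags;
    uint32_t seq, win, len;
    int      has_ts;            /* timestamp option present */
    uint32_t ts_val;
};

enum fast_path header_predict(const struct conn *c, const struct seg *s)
{
    const uint8_t mask = TH_FIN | TH_SYN | TH_RST | TH_URG | TH_ACK;

    if (!c->established || (s->flags & mask) != TH_ACK)
        return FP_NONE;                     /* ACK and nothing else */
    if (s->seq != c->rcv_nxt || s->win != c->snd_wnd)
        return FP_NONE;                     /* in sequence, same window */
    if (s->has_ts && (int32_t)(s->ts_val - c->ts_recent) < 0)
        return FP_NONE;                     /* timestamp went backward */
    if (c->snd_nxt != c->snd_max)
        return FP_NONE;                     /* retransmission in progress */
    if (s->len == 0)
        return FP_PURE_ACK;
    if (c->reass_empty && s->len <= c->rcv_space)
        return FP_PURE_DATA;
    return FP_NONE;                         /* fall back to the general path */
}
```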

For packets that are not handled by the header-prediction algorithm, the processing steps are as follows:

1. Process the timestamp option if it is present. Reject any packet whose timestamp has decreased, after first sending a current acknowledgment.

2. Check whether the packet begins before rcv_nxt. If it does, ignore any SYN in the packet, and trim any data that fall before rcv_nxt. If no data remain, send a current acknowledgment and drop the packet. (The packet is presumed to be a duplicate transmission.)

3. If the packet still contains data after trimming, and the process that created the socket has already closed the socket, send a reset (RST) and drop the connection. This reset is necessary to abort connections that cannot complete; it typically is sent when a remote-login client disconnects while data are being received.