Chapter 12

Network Communication

In this chapter, we shall study the internal structure of the network subsystem provided in 4.4BSD. The networking facilities provide a framework within which many network architectures may coexist. A network architecture comprises a set of network-communication protocols, the protocol family; conventions for naming communication endpoints, the address family or address format; and any additional facilities that may fall outside the realm of connection management and data transfer. Networking facilities are accessed through the socket abstraction described in Chapter 11. The network subsystem provides a general-purpose framework within which network services are implemented. These facilities include

• A structured interface to the socket level that allows the development of network-independent application software

• A consistent interface to the hardware devices used to transmit and receive data

• Network-independent support for message routing

• Memory management

We describe the internal structure of the network subsystem in Section 12.1. Then, we discuss the interface between the socket layer and the network facilities, and examine the interfaces between the layers of software that make up the network subsystem. In Section 12.5, we discuss the routing services used by the network protocols; in Section 12.6, we describe the mechanisms provided to manage buffering and to control congestion. We present the raw-socket interface that provides direct access to lower-level network protocols in Section 12.7. Finally, in Section 12.8, we discuss an assortment of issues and facilities, including out-of-band data, subnetwork addressing, and the Address Resolution Protocol.

After we have discussed the framework in which the network protocols fit, we shall examine the implementations of several existing network protocols in Chapter 13. A detailed description of the internal data structures and functions of the network layers and protocols can be found in [Wright & Stevens, 1995].

12.1 Internal Structure

The network subsystem is logically divided into three layers. These three layers manage the following tasks:

1. Interprocess data transport

2. Internetwork addressing and message routing

3. Transmission-media support

The first two layers are made up of modules that implement communication protocols. The software in the third layer generally includes a protocol sublayer, as well as a sublayer that is structurally much like a device driver (see Section 6.3).

The topmost layer in the network subsystem is termed the transport layer. The transport layer must provide an addressing structure that permits communication between sockets and any protocol mechanisms necessary for socket semantics, such as reliable data delivery. The second layer, the network layer, is responsible for the delivery of data destined for remote transport or for network-layer protocols. In providing internetwork delivery, the network layer must manage a private routing database or use the systemwide facility for routing messages to their destination host. The bottom layer, the network-interface layer, or link layer, is responsible for transporting messages between hosts connected to a common transmission medium. The network-interface layer is mainly concerned with driving the transmission media involved and doing any necessary link-level protocol encapsulation and decapsulation.

The transport, network, and network-interface layers of the network subsystem most closely resemble the bottom three levels (2 through 0) of the Xerox Network System (XNS) architecture. These layers correspond to the transport, network, and link layers of the ISO Open Systems Interconnection Reference Model [ISO, 1984], respectively. The internal structure of the networking software is not directly visible to users. Instead, all networking facilities are accessed through the socket layer described in Chapter 11. Each communication protocol that permits access to its facilities exports a user request routine to the socket layer. This routine is used by the socket layer in providing access to network services.

The layering described here is a logical layering. The software that implements network services may use more or fewer communication protocols according to the design of the network architecture being supported. For example, raw sockets often use a null implementation at one or more layers. At the opposite extreme, tunneling of one protocol through another uses one network protocol to encapsulate and deliver packets for another protocol, and involves multiple instances of some layers.

Data Flow

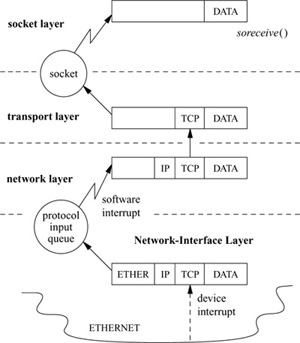

Data flow down to the network subsystem from the socket layer through calls to the transport-layer modules that support the socket abstraction. Data received at a network interface flow upward through communication protocols until they are placed in the receive queue of the destination socket. The downward flow of data typically is started by system calls. Data flowing upward are received asynchronously, and are passed from the network-interface layer to the appropriate communication protocol through per-protocol input message queues, as shown in Fig. 12.1. The system schedules network protocol processing from the network-interface layer by marking a bit assigned to the protocol in the system’s network-interrupt status word, and posting a software interrupt reserved for triggering network activity. Software interrupts are used to schedule asynchronous network activity, rather than protocols being run as independent processes, to avoid context-switching overhead. If a message received by a communication protocol is destined for a higher-level protocol, this protocol is invoked directly at software-interrupt level to process the message. Alternatively, if the message is destined for another host and the system is configured as a router, the message may be returned to the network-interface layer for retransmission.

Figure 12.1 Example of upward flow of a data packet in the network subsystem. ETHER—Ethernet header; IP—Internet Protocol header; TCP—Transmission Control Protocol header.

Communication Protocols

A network protocol is defined by a set of conventions, including packet formats, states, and state transitions. A communication-protocol module implements a protocol, and is made up of a collection of procedures and private data structures. Protocol modules are described by a protocol-switch structure that contains the set of externally visible entry points and certain attributes, shown in Fig. 12.2. The socket layer interacts with a communication protocol solely through the latter’s protocol-switch structure, recording the structure’s address in a socket’s so_proto field. This isolation of the socket layer from the networking subsystem is important in ensuring that the socket layer provides users with a consistent interface to all the protocols supported by a system. When a socket is created, the socket layer looks up the domain for the protocol family to find the array of protocol-switch structures for the family (see Section 11.4). A protocol is selected from the array based on the type of socket supported (the pr_type field) and optionally a specific protocol number (the pr_protocol field). The protocol switch has a back pointer to the domain (pr_domain). Within a protocol family, every protocol capable of supporting a socket directly (for example, most transport protocols) must provide a protocol-switch structure describing the protocol. Lower-level protocols such as network-layer protocols may also have protocol-switch entries, although whether they do can depend on conventions within the family.

Figure 12.2 Protocol-switch structure.

Before a protocol is first used, the protocol’s initialization routine is invoked. Thereafter, the protocol will be invoked for timer-based actions every 200 milliseconds if the pr_fasttimo() entry is present, and every 500 milliseconds if the pr_slowtimo() entry point is present. In general, protocols use the slower timer for most timer processing; the major use of the fast timeout is for delayed-acknowledgment processing. The pr_drain() entry is provided so that the system can notify the protocol if the system is low on space and would like any noncritical data to be discarded. Finally, the pr_sysctl() entry implements sysctl configuration operations specific to the protocol.

Protocols may pass data among themselves in chains of mbufs (see Section 11.3) using the pr_input() and pr_output() routines. The pr_input() routine passes data up toward the user, whereas the pr_output() routine passes data down toward the network. Similarly, control information passes up and down via the pr_ctlinput() and pr_ctloutput() routines. The user request routine, pr_usrreq(), is the interface between a protocol and the socket level; it is described in detail in Section 12.2.

In general, a protocol is responsible for storage space occupied by any of the arguments passed downward via these procedures and must either pass the space onward or dispose of it. On output, the lowest level reached must free space passed as arguments; on input, the highest level is responsible for freeing space passed up to it. Auxiliary storage needed by protocols is allocated from the mbuf store. This space is used temporarily to formulate messages or to hold variablesized socket addresses. (Some protocols also use mbufs for data structures such as state control blocks, although many such uses have been converted to use malloc() directly.) Mbufs allocated by a protocol for private use must be freed by that protocol when they are no longer in use.

The pr_flags field in a protocol’s protocol-switch structure describes the protocol’s capabilities and certain aspects of its operation that are pertinent to the operation of the socket level; the flags are listed in Table 12.1. Protocols that are connection based specify the PR_CONNREQUIRED flag, so that socket routines will never attempt to send data before a connection has been established. If the PR_WANTRCVD flag is set, the socket routines will notify the protocol when the user has removed data from a socket’s receive queue. This notification allows a protocol to implement acknowledgment on user receipt, and also to update flow-control information based on the amount of space available in the receive queue. The PR_ADDR field indicates that any data placed in a socket’s receive queue by the protocol will be preceded by the address of the sender. The PR_ATOMIC flag specifies that each user request to send data must be done in a single protocol send request; it is the protocol’s responsibility to maintain record boundaries on data to be sent. This flag also implies that messages must be received and delivered to processes atomically. The PR_RIGHTS flag indicates that the protocol supports the transfer of access rights; this flag is currently used by only those protocols in the local communication domain (see Section 11.6).

Table 12.1 Protocol flags.

Network Interfaces

Each network interface configured in a system defines a link-layer path through which messages can be sent and received. A link-layer path is a path that allows a message to be sent via a single transmission to its destination, without network-level forwarding. Normally, a hardware device is associated with this interface, although there is no requirement that one be (e.g., all systems have a software loopback interface used for most network traffic sent to local sockets). In addition to manipulating the hardware device, a network-interface module is responsible for encapsulation and decapsulation of any link-layer protocol header required to deliver a message to its destination. For common interface types, the link-layer protocol is implemented in a separate sublayer that is shared by various hardware drivers. The selection of the interface to use in delivering a packet is a routing decision carried out at the network-protocol layer. An interface may have addresses in one or more address families. Each address is set at boot time using an ioctl system call on a socket in the appropriate domain; this operation is implemented by the protocol family after the network interface verifies the operation with an ioctl entry point provided by the network interface. The network-interface abstraction provides protocols with a consistent interface to all hardware devices that may be present on a machine.

An interface and its addresses are defined by the structures shown in Fig.12.3. As interfaces are found at startup time, the ifnet structures are initialized and are placed on a linked list. The network-interface module generally maintains the ifnet interface data structure as part of a larger structure that also contains information used in driving the underlying hardware device. Similarly, the ifaddr interface address structure is often part of a larger structure containing additional protocol information about the interface or its address. Because network socket addresses are variable in size, the protocol is responsible for allocating the space referenced by the address, mask, and broadcast or destination address pointers in the ifaddr structure.

Figure 12.3 Network-interface data structures.

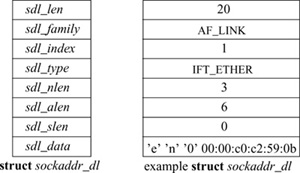

Each network interface contains identification of the interface in two forms: a character string identifying the driver plus a unit number for the driver (e.g. en0) and a binary systemwide index number. The index is used as a shorthand identifier—for example, when a route that refers to the interface is established. As each interface is found during system startup, the system creates an array of pointers to the ifnet structures for the interfaces. It can thus locate an interface quickly given an index number, whereas the lookup using a string name is less efficient. Some operations, such as interface address assignment, name the interface with a string for convenience because performance is not critical. Other operations, such as route establishment, pass a newer style of identifier that can use either a string or an index. The new identifier uses a sockaddr structure in a new address family, AF_LINK, indicating a link-layer address. The family-specific version of the structure is a sockaddr_dl structure, shown in Fig. 12.4, which may contain up to three identifiers. It includes an interface name as a string plus a length, with a length of zero denoting the absence of a name. It also includes an interface index as an integer, with a value of zero indicating that the index is not set. Finally, it may include a binary link-level address, such as an Ethernet address, and the length of the address. An address of this form is created for each network interface as the interface is configured by the system, and is returned in the list of local addresses for the system along with network protocol addresses (see later in this subsection). Figure 12.4 shows a structure describing an Ethernet interface that is the second interface on the system; the structure contains the interface name, the index, and the link-layer (Ethernet) address.

Figure 12.4 Link-layer address structure. The box on the left names the elements of the sockaddr_dl structure. The box on the right shows sample values for these elements for an Ethernet interface. The sdl_data array may contain a name (if sdl_nlen is nonzero, a link-layer address (if sdl_alen is nonzero), and an address selector (if sdl_slen is nonzero). For an Ethernet, sdl_data contains a three-character name, en0, followed by a 6-byte Ethernet address.

The interface data structure includes an if_data structure, which contains the externally visible description of the interface. It includes the link-layer type of the interface, the maximum network-protocol packet size that is supported, and the sizes of the link-layer header and address. It also contains numerous statistics, such as packets and bytes sent and received, input and output errors, and other data required by network-management protocols.

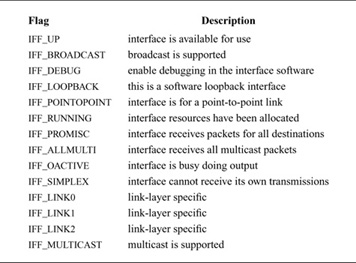

The state of an interface and certain externally visible characteristics are stored in the if_flags field described in Table 12.2. The first set of flags characterizes an interface. If an interface is connected to a network that supports transmission of broadcast messages, the IFF_BROADCAST flag will be set, and the interface’s address list will contain a broadcast address to be used in sending and receiving such messages. If an interface is associated with a point-to-point hardware link (e.g., a serial interface to a telephone circuit), the IFF_POINTOPOINT flag will be set, and the interface’s address list will contain the address of the host on the other side of the connection. (Note that the broadcast and point-to-point attributes are mutually exclusive, and that the two addresses share storage in the interface address structure.) These addresses and the local address of an interface are used by network-layer protocols in filtering incoming packets. The IFF_MULTICAST flag is set by interfaces that support multicast packets in addition to IFF_BROADCAST. Multicast packets are sent to one of several group addresses, and are intended for all members of the group.

Table 12.2 Network interface flags.

Additional interface flags describe the operational state of an interface. An interface sets the IFF_RUNNING flag after it has allocated system resources and has posted an initial read on the device that it manages. This state bit avoids multiple allocation requests when an interface’s address is changed. The IFF_UP flag is set when the interface is configured and is ready to transmit messages. The IFF_OACTIVE flag is used to coordinate between the if_output and if_start routines, described later in this subsection; it is set when no additional output may be attempted. The IFF_PROMISC flag is set by network-monitoring programs to enable promiscuous reception: when they wish to receive packets for all destinations, rather than for just the local system. Packets addressed to other systems are passed to the monitoring packet filter but are not delivered to network protocols. The IFF_ALLMULTI flag is similar, but applies to only multicast packets; it can be used by a multicast forwarding agent. The IFF_SIMPLEX flag is set by Ethernet drivers whose hardware cannot receive packets that they send; here, the output function simulates reception of broadcast and (depending on the protocol) multicast packets that have been sent. Finally, the IFF_DEBUG flag can be set to enable any optional driver-level diagnostic tests or messages. In addition to these interface flags, three additional flags are defined for use by individual link-layer drivers (IFF_LINK0, IFF_LINK1, and IFF_LINK2). They can be used to select link-layer options, such as Ethernet medium type.

Interface addresses and flags are set with ioctl requests. The requests specific to a network interface pass the name of the interface as a string in the input data structure, with the string containing the name for the interface type plus the unit number. Either the SIOCSIFADDR request or the SIOCAIFADDR request is used initially to define each interface’s addresses. The former sets a single address for the protocol on this interface. The latter adds an address, with an associated address mask and broadcast address. It allows an interface to support multiple addresses for the same protocol. In either case, the protocol allocates an ifaddr structure and sufficient space for the addresses and any private data, and links the structure onto the list of addresses for the network interface. In addition, most protocols keep a list of the addresses for the protocol. The result appears somewhat like a two-dimensional linked list, as shown in Fig. 12.5. An address can be deleted with the SIOCDIFADDR request.

The SIOCSIFFLAGS request can be used to change an interface’s state and to do site-specific configuration. The destination address of a point-to-point link is set with the SIOCSIFDSTADDR request. Corresponding operations exist to read each value. Protocol families also can support operations to set and read the broadcast address. Finally, the SIOCGIFCONF request can be used to retrieve a list of interface names and protocol addresses for all interfaces and protocols configured in a running system. Similar information is returned by a newer mechanism based on the sysctl system call with a request in the routing protocol family (see Sections 12.5 and 14.7). These requests permit developers to construct network processes such as the routing daemon without detailed knowledge of the system’s internal data structures.

Figure 12.5 Network-interface and protocol data structures. The linked list of ifnet structures appears on the left side of the figure. The ifaddr structures storing the addresses for each interface are on a linked list headed in the ifnet structure and shown as a horizontal list. The ifaddr structures for most protocols are linked together as well, shown in the vertical lists headed by pf1_addr and pf2_addr.

Each interface has a queue of messages to be transmitted and routines used for initialization and output. The if_output() routine accepts a packet for transmission, and normally handles link-layer encapsulation and queueing that are independent of the specific hardware driver in use. If the IFF_OACTIVE flag is not set, the output routine may then invoke the driver’s if_start() function to begin transmission. The start function then sets the IFF_OACTIVE flag if it is unable to accept additional packets for transmission; the flag will be cleared when transmission completes. The if_done() entry point is provided as a callback function for use when the output queue is emptied. This facility is not yet used, but is intended to support striping of data for a single logical interface across multiple physical interfaces.

If the interface resides on a system bus, a reset routine will be invoked after a bus reset has been done, so that the driver may reinitialize the interface. (This function was used on only the VAX, and should be removed or moved to a machine-dependent data structure.) An interface may also specify a watchdog timer routine and a timer value that (if it is nonzero) the system will decrement once per second, invoking the timer routine when the value expires. The timeout mechanism is typically used by interfaces to implement watchdog schemes for unreliable hardware, and to collect statistics that reside on the hardware device.

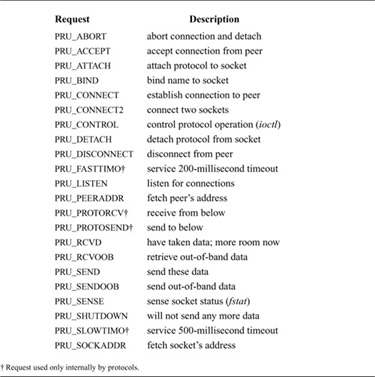

12.2 Socket-to-Protocol Interface

The interface from the socket routines to the communication protocols is through the user request, pr_usrreq(), and control output, pr_ctloutput(), routines, which are defined in the protocol-switch table for each protocol. When the socket layer requires services of a supporting protocol, it makes a call to one of these two routines. The control-output routine implements the getsockopt and setsockopt system calls; the user-request routine is used for all other operations. Calls to pr_usrreq() specify one of the requests shown in Table 12.3 (on page 406). Calls to pr_ctloutput() specify PRCO_GETOPT to get the current value of an option, or PRCO_SETOPT to set the value of an option.

Protocol User-Request Routine

Given a pointer to a protocol-switch entry, pr, a call on the user-request routine is of the form

error = (*pr->pr_usrreq)(so, req, m, addr, control);

struct socket *so;

int req;

struct mbuf *m, *addr, *control;

The so parameter specifies the socket for which the operation is requested, and req names the operation that is requested. The mbuf data chain m is supplied for output operations and for certain other operations where a result is to be returned. The addr parameter is supplied for address-oriented requests, such as PRU_BIND, PRU_CONNECT, and PRU_SEND (when an address is specified—e.g., the sendto call). The address is stored in an mbuf as a sockaddr structure. The control parameter is a pointer to an optional mbuf chain containing protocol-specific control information passed via the sendmsg call, such as user-specified access rights (see Section 11.6). Each protocol is responsible for disposal of the data mbuf chains on output operations. A nonzero return value from the user-request routine indicates an error number that should be passed to higher-level software. A description of each of the possible requests follows.

Table 12.3 pr_usrreq routine requests.

• PRU_ATTACH: Attach protocol to socket When a protocol is first bound to a socket (with the socket system call), the protocol module is called with the PRU_ATTACH request. It is the responsibility of the protocol module to allocate any resources necessary. The attach request will always precede any of the other requests, and will occur only once per socket.

• PRU_DETACH: Detach protocol from socket This operation is the inverse of the attach request, and is used at the time that a socket is deleted. The protocol module may deallocate any resources assigned to the socket.

• PRU_BIND: Bind address to socket When a socket is initially created, it has no address bound to it. This request indicates that an address should be bound to an existing socket. The protocol module must verify that the requested address is valid and is available for use.

• PRU_LISTEN: Listen for incoming connections The listen request indicates that the user wishes to listen for incoming connection requests on the associated socket. The protocol module should make any state changes needed to meet this request (if possible). A listen request always precedes any request to accept a connection.

• PRU_CONNECT: Connect socket to peer The connect request indicates that the user wants to a establish an association. The addr parameter describes the peer to which a connection is desired. The effect of a connect request may vary depending on the protocol. Virtual-circuit protocols use this request to initiate establishment of a network connection. Datagram protocols simply record the peer’s address in a private data structure. They use it as the destination address of all outgoing packets and as a source filter for incoming packets. There are no restrictions on how many times a connect request may be used after an attach, although most stream protocols allow only one connect call.

• PRU_ACCEPT: Accept pending connection Following a successful listen request and the arrival of one or more connections, this request is made to indicate that the user is about to accept a socket from the queue of sockets ready to be returned. The socket supplied as a parameter is the socket that is being accepted; the protocol module is expected to fill in the supplied buffer with the address of the peer connected to the socket.

• PRU_DISCONNECT: Disconnect connected socket This request eliminates an association created with a connect request. It is used with datagram sockets before a new association is created; it is used with connection-oriented protocols only when the socket is closed.

• PRU_SHUTDOWN: Shut down socket data transmission This call indicates that no more data will be sent. The protocol may, at its discretion, deallocate any data structures related to the shutdown and may notify a connected peer of the shutdown.

• PRU_RCVD: Data were received by user This request is made only if the protocol entry in the protocol-switch table includes the PR_WANTRCVD flag. When the socket layer removes data from the receive queue and passes them to the user, this request will be sent to the protocol module. This request may be used by the protocol to trigger acknowledgments, to refresh windowing information, to initiate data transfer, and so on. This request is also made when an application attempts to receive data on a socket that is in the confirming state, indicating that the protocol must accept the connection request before data can be received (see Section 11.5).

• PRU_SEND: Send user data Each user request to send data is translated into one or more PRU_SEND requests. A protocol may indicate that a single user send request must be translated into a single PRU_SEND request by specifying the PR_ATOMIC flag in its protocol description. The data to be sent are presented to the protocol as a chain of mbufs, and an optional address is supplied in the addr parameter. The protocol is responsible for preserving the data in the socket’s send queue if it is not able to send them immediately or if it may need them at some later time (e.g., for retransmission). The protocol must eventually pass the data to a lower level or free the mbufs.

• PRU_ABORT: Abnormally terminate service This request indicates an abnormal termination of service. The protocol should delete any existing associations.

• PRU_CONTROL: Do control operation The control request is generated when a user does an ioctl system call on a socket and the ioctl is not intercepted by the socket routines. This request allows protocol-specific operations to be provided outside the scope of the common socket interface. The addr parameter contains a pointer to a kernel data area where relevant information may be obtained or returned. The m parameter contains the actual ioctl request code. The control parameter contains a pointer to a network-interface structure if the ioctl operation pertains to a particular network interface. This case illustrates the most serious problem with the pr_usrreq entry point: for some requests, the parameters are overloaded with completely different data types.

• PRU_SENSE: Sense socket status The sense request is generated when the user makes an fstat system call on a socket; it requests the status of the associated socket. This call returns a standard stat structure that typically contains only the optimal transfer size for the connection (based on buffer size, windowing information, and maximum packet size).

• PRU_RCVOOB: Receive out-of-band data This operation requests that any out-of-band data now available are to be returned. An mbuf is passed to the protocol module, and the protocol should either place data in the mbuf or attach new mbufs to the one supplied if there is insufficient space in the single mbuf. An error may be returned if out-of-band data are not (yet) available or have already been consumed. The addr parameter contains any options, such as MSG_PEEK, that should be observed while this request is carried out.

• PRU_SENDOOB: Send out-of-band data This request is like the send request, but is used for out-of-band data.

• PRU_SOCKADDR: Retrieve local socket address This request indicates that the local address of the socket is to be returned, if one has been bound to the socket. The address (stored in a sockaddr structure in an address-family–specific format) is returned in the mbuf passed in the addr parameter.

• PRU_PEERADDR: Retrieve peer socket address This request indicates that the address of the peer to which the socket is connected is to be returned. The socket must be in a connected state for this request to be made to the protocol. The address (stored in a sockaddr structure in an address-family–specific format) is returned in the mbuf pointed to by the addr parameter.

• PRU_CONNECT2: Connect two sockets without binding addresses In this request, the protocol module is supplied two sockets, and is asked to establish a connection between the two without binding any addresses, if possible. The system uses this call in implementing the socketpair system call.

Internal Requests

The following requests are used internally by the protocol modules and are never generated by the socket routines. In certain instances, they are used solely for convenience in tracing a protocol’s operation (e.g., the slow timeout request).

• PRU_FASTTIMO: Service fast timeout A fast timeout has occurred. This request is made when a timeout occurs in the protocol’s pr_fasttimo() routine. The addr parameter indicates which timer expired.

• PRU_SLOWTIMO: Service slow timeout A slow timeout has occurred. This request is made when a timeout occurs in the protocol’s pr_slowtimo() routine. The addr parameter indicates which timer expired.

• PRU_PROTORCV: Receive data for protocol This request is used between protocols, rather than by the socket layer; it requests reception of data destined for a protocol and not for the user. No protocols currently use this facility.

• PRU_PROTOSEND: Send data to protocol This request allows a protocol to send data destined for another protocol module, rather than for a user. The details of how data are marked addressed-to-protocol instead of addressed-to-user are left to the protocol modules. No protocols currently use this facility.

Protocol Control-Output Routine

A call on the control-output routine is of the form

error = (*pr->pr_ctloutput)(op, so, level, optname, mp);

int op;

struct socket *so;

int level, optname;

struct mbuf **mp;

where op is PRCO_SETOPT when an option’s value is set, and is PRCO_GETOPT when an option’s value is retrieved. The level parameter indicates the layer of software that should interpret the option request. A level of SOL_SOCKET is specified to control an option at the socket layer. When the option is to be processed by a protocol module below the socket layer, level is set to the appropriate protocol number (the same number used in the socket system call.) Each level has its own set of option names; this name is interpreted by only the targeted layer of software. The final parameter is a pointer to a pointer to an mbuf; the preexisting mbuf contains an option’s new value when setting, and the pointer is used to return an mbuf that contains an option’s value when getting. Mbufs passed to the control-output routine when the socket layer is setting an option value must be freed by the protocol. When the socket layer is getting an option value, mbufs used to return an option value are allocated by the protocol and are returned to the socket layer, where they are freed after data are copied to the user.

In supporting the getsockopt and setsockopt system calls, the socket layer always invokes the control-output routine of the protocol attached to the socket. To access lower-level protocols, each control-output routine must pass control-output requests that are not for itself downward to the next protocol in the protocol hierarchy. Chapter 13 describes some of the options provided by the protocols in the Internet communication domain.

12.3 Protocol–Protocol Interface

The interface between protocol modules uses the pr_usrreq(), pr_input(), pr_output(), pr_ctlinput(), and pr_ctloutput() routines. The pr_usrreq() and pr_ctloutput() routines are used by the socket layer to communicate with protocols and have standard calling conventions. The remaining routines are not normally accessed outside a protocol family, and therefore different calling conventions have evolved.

Although imposing a standard calling convention for all of a protocol’s entry points might theoretically permit an arbitrary interconnection of protocol modules, it would be difficult in practice. Crossing of a protocol-family boundary would require a network address to be converted from the format of the caller’s domain to the format of the receiver’s domain. Consequently, connection of protocols in different communication domains is not generally supported, and calling conventions for the routines listed in the preceding paragraph are typically standardized on a per-domain basis. (However, the system does support encapsulation of packets from one protocol into packets of a protocol in another family to tunnel one protocol through another.)

In this section, we briefly examine the general framework and calling conventions of protocols. In Chapter 13, we examine specific protocols to see how they fit into this framework.

pr_output

The protocol output routine often uses a calling convention designed to send a single message on a connection; for example,

error = (*pr_output)(pcb, m);

struct pcb *pcb;

struct mbuf *m;

would send a message contained in m on a connection described by protocol control block pcb. Lower-level protocol output routines may not always have protocol control blocks, and thus may require more explicit parameters.

pr_input

Upper-level protocol input routines are usually called at software-interrupt level once the network-level protocol has located the protocol identifier. They generally have stricter conventions than do output routines because they are often called via a protocol switch. Depending on the protocol family, they may receive a pointer to a control block identifying the connection, or they may have to locate the control block from information in the received packet. A typical calling convention is

(void) (*pr_input)(m, hlen); struct mbuf *m;

int hlen;

In this example, the incoming packet is passed to a transport protocol in an mbuf m with the network protocol header still in place for the transport protocol to use, as well as the length of the header, hlen, so that the header can be removed. The protocol does the connection-level demultiplexing based on information in the network and transport headers.

pr_ctlinput

This routine passes control information (i.e., information that might be passed to the user, but does not consist of data) upward from one protocol module to another. The common calling convention for this routine is

(void) (*pr_ctlinput)(req, addr);

int req;

struct sockaddr *addr;

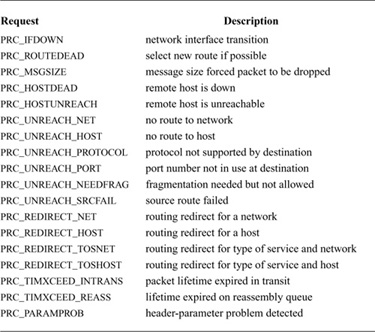

The req parameter is one of the values shown in Table 12.4 (on page 412). The addr parameter is the remote address to which the condition applies. Many of the requests have been derived from the Internet Control Message Protocol (ICMP) [Postel, 1981], and from error messages defined in the 1822 host/IMP convention [BBN, 1978]. Some protocols may pass additional parameters internally, such as local addresses or more specific information.

Table 12.4 Control-input routine requests.

12.4 Interface between Protocol and Network Interface

The lowest layer in the set of protocols that constitutes a protocol family must interact with one or more network interfaces to transmit and receive packets. It is assumed that any routing decisions have been made before a packet is sent to a network interface; a routing decision is necessary to locate any interface at all, unless a single hardwired interface is used. There are two cases with which we should be concerned in the interaction between protocols and network interfaces: transmission of a packet and receipt of a packet. We shall consider each separately.

Packet Transmission

If a protocol has chosen an interface identified by ifp, a pointer to a network interface structure, the protocol transmits a fully formatted network-level packet with the following call:

error = (*ifp->if_output)(ifp, m, dst, rt);

struct ifnet *ifp;

struct mbuf *m;

struct sockaddr *dst;

struct rtentry *rt;

The output routine for the network interface transmits the packet m to the protocol address specified in dst, or returns an error number. In reality, transmission may not be immediate or successful; typically, the output routine validates the destination address, queues the packet on its send queue, and primes an interrupt-driven routine to transmit the packet if the interface is not busy. For unreliable media, such as the Ethernet, successful transmission simply means that the packet has been placed on the cable without a collision. In contrast, an X.25 interface guarantees proper delivery or an error indication for each message transmitted. The model employed in the networking system attaches no promise of delivery to the packets presented to a network interface, and thus corresponds most closely to the Ethernet. Errors returned by the output routine are only those that can be detected immediately and are normally trivial in nature (network down, no buffer space, address format not handled, etc.). If errors are detected after the call has returned, the protocol is not notified.

When messages are transmitted, each network interface usually must formulate a link-layer address for each outgoing packet.† The interface layer must understand each protocol address format that it supports to formulate corresponding link-layer addresses. The network layer for each protocol family selects a destination address for each message, and then uses that address to select the appropriate network interface to use. This destination address is passed to the interface’s output routine as a sockaddr structure. Presuming that the address format is supported by the interface, the interface must map the destination protocol address into an address for the link-layer protocol associated with the transmission medium that the interface supports. This mapping may be a simple algorithm, it may require a table lookup, or it may require more involved techniques, such as use of the Address Resolution Protocol described in Section 12.8.

†A link-layer address may not be required for a point-to-point link.

Packet Reception

Network interfaces receive packets, and dispatch packets to the appropriate network-layer protocol according to information encoded in the link-layer protocol header. Each protocol family must have one or more protocols that constitute the network layer described in Section 12.1. In this system, each network-layer protocol has an input-packet queue assigned to it. Incoming packets received by a network interface are queued in a protocol’s input packet queue, and a software interrupt is posted to initiate network-layer processing; see Fig. 12.6 (on page 414). Similar queues are used to store packets awaiting transmission by network-interface modules.

Figure 12.6 Input packets dispatched to protocol input queues.

Several macros are available for manipulating packet queues:

• IF_ENQUEUE(ifq, m) |

Place the packet m at the tail of the |

• IF_DEQUEUE(ifq, m) |

Place a pointer to the packet at the head |

• IF_PREPEND(ifq, m) |

Place the packet m at the head of the |

Packet queues have a maximum length associated with them as a simple form of congestion control. The macro IF_QFULL() can be used to determine whether a queue is full; if it is, another macro, IF_DROP(), can then be used to record the event in statistics kept for the queue. For example, the following code fragment could be used in a network interface’s output routine:

if (IF_QFULL(ifp->if_snd)) {

IF_DROP(ifp->if_snd);

m_freem(m); /* discard packet */

error = ENOBUFS;

} else

IF_ENQUEUE(ifp->if_snd, m);

On receiving a packet, a network interface decodes the packet type, strips the link-layer protocol header, records the identity of the receiving interface, and then dispatches the packet to the appropriate protocol. For example, packets are enqueued for the Internet domain with

s = splimp();

if (IF_QFULL(&ipintrq)) {

IF_DROP(&ipintrq);

ifp->if_iqdrops++;

m_freem(m);

} else {

schednetisr(NETISR_IP); /* schedule IP input routine */

IF_ENQUEUE(&ipintrq, m); /* place message on IP’s queue */

}

splx(s);

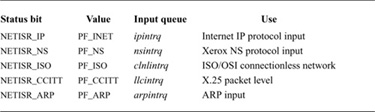

The schednetisr() macro marks a bit in a global status word, and then posts a software interrupt. When the software interrupt occurs, the interrupt handler scans the status word, and, for each preassigned bit that is set, invokes the associated protocol input routine. Note that multiple interfaces can place packets into the same queue, and thus interrupts from other interfaces must be blocked. Bits in the status word are assigned according to the value of their protocol-family identifiers, shown in Table 12.5.

Entries on a protocol’s input queue are mbuf chains with a valid packet header containing the packet’s length and a pointer to the network interface on which the packet was received. The pointer to the interface has many potential uses, such as deciding when to generate routing redirect messages. Input-handling routines that run at software-interrupt level are typically of the form

for (;;) {

s= splimp(); /* block network from queue */

IF_DEQUEUE(&xxintrq, m);

splx(s);

if (m == 0) /* all packets processed */

break;

/* process packet and determine receiving protocol */

(*pr_input)(m, hlen); /* invoke protocol */

}

Table 12.5 Network-interrupt status-word bit assignments.

While an entry is dequeued from an input queue, a protocol blocks all network-interface input handling by raising the processor’s priority level with splimp() to ensure that pointers in the queue data structure are not altered. Once a message is dequeued, it is processed; if there is information in the packet for a higher-level protocol, the message is passed upward.

12.5 Routing

The networking system was designed for an internetwork environment in which a collection of local-area networks is connected at one or more points through network nodes with multiple network interfaces, as shown in the example in Fig. 12.7. Nodes with multiple network interfaces—one on each local-area or long-haul network—may act as routers. †In such an environment, issues related to gatewaying and packet routing are important. Certain of these issues, such as congestion control, are handled simplistically in 4.4BSD (see Section 12.6). For others, the network system provides simple mechanisms on which more involved policies can be implemented. These mechanisms ensure that, as these problems become better understood, their solutions can be incorporated into the system.

†At the time of the original design of this part of the system, a network node that forwarded network-level packets was generally known as a gateway. The current Internet term is router. We use both terms interchangeably, in part because the system data structures continue to use the name gateway.

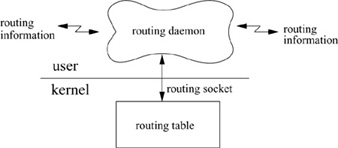

This section describes the facilities provided for packet routing. The routing facilities were designed for use by singly connected and multiply connected hosts, as well as for routers. There are several components involved in routing, illustrated in Fig. 12.8. The design of the routing system places some components within the operating system and others at user level. The routing facilities included in the kernel do not impose routing policies, but instead support a routing mechanism by which externally defined policies can be implemented. By a routing mechanism, we mean a table lookup that provides a first-hop route (a specific network interface and immediate destination) for each destination. Routing policies include all the components involved in choosing the first-hop routes, such as discovery of the local network topology, implementation of various routing protocols, and configuration information specifying local policies. The routing design places enough information in the kernel for packets to be sent on their way without external help; all other components are outside the kernel. User-level processes can be used to implement policies ranging from simple static routing to complex dynamic algorithms. These processes communicate with the kernel via a routing socket to manipulate the kernel routing table and to listen for internal routing events. Each of these components is described in this section. Although there is nothing in the system that prevents protocols from managing their own routing information, the facilities described here were designed to support most needs.

Figure 12.7 Example of the topology for which routing facilities were designed.

Kernel Routing Tables

The kernel routing mechanism implements a routing table for looking up first-hop routes (or next hop, when forwarding packets). It includes two distinct portions: a data structure describing each specific route (a routing entry) and a lookup algorithm to find the correct route for each possible destination. This subsection describes the entries in the routing table, and the next subsection explains the lookup algorithm. A destination is described by a sockaddr structure with an address family, a length, and a value. Routes are typed in two ways: as either host or network routes, and as either direct or indirect. The host–network distinction determines whether the route applies to a specific host, or to a group of hosts with a portion of their addresses in common—usually a prefix of the address. For host routes, the destination address of a route must exactly match the desired destination; the address family, length, and bit pattern of the destination must match those in the route. For network routes, the destination address in the route is paired with a mask. The route matches any address that contains the same bits as the destination in the positions indicated by bits set in the mask. A host route is a special case of a network route, in which the mask bits are set for the whole address, and thus no bits are ignored in the comparison. Another special case is a wildcard route: a network route with an empty mask. Such a route matches every destination and serves as a default route for destinations not otherwise known. This fall-back network route can be pointed to an intelligent gateway that can then make more informed routing decisions.

Figure 12.8 Routing design.

The other major distinction between types of routes is either direct or indirect. A direct route is one that leads directly to the destination: The first hop of the path is the entire path, and the destination is on a network shared with the source. Most routes are indirect: The route specifies a gateway on a local network that is the first-hop destination for the route. Much of the literature (especially for Internet protocols) refers to a local–remote decision, where an implementation checks first whether a destination is local to an attached network or is remote; in the first case, a packet is sent locally (via the link layer) to the destination; in the latter case, it is sent locally to the gateway to the destination. In the implementation, the local–remote decision is made as part of the routing lookup. If the best route is direct, then the destination is local. Otherwise, the route is indirect, the destination is remote, and the route entry specifies the gateway to the destination. In either case, the route specifies a first-hop route: a link-level interface to be used in sending packets, and the destination for the packets in this hop if different from the final destination. This information allows a packet to be sent via a local interface to a destination directly reachable via that interface–either the final destination or a router on the path to the destination. This distinction is needed when the link-layer encapsulation is done. If a packet is destined for a peer at a host or network that is not directly connected to the source, the internetwork packet header will contain the address of the eventual destination, whereas the link-layer protocol header will address the intervening gateway.

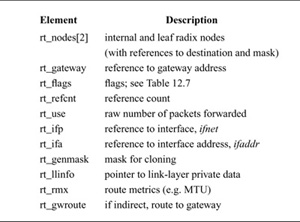

Table 12.6 Elements of a routing-table entry (rtentry) structure.

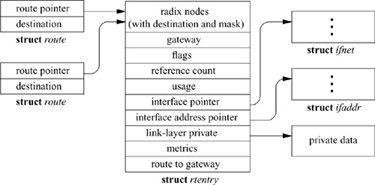

The network system maintains a set of routing tables that is used by protocols in selecting a network interface to use in delivering a packet to its destination. These tables are composed of entries of the form shown in Table 12.6.

Routing entries are stored in an rtentry structure, which contains a reference to the destination address and mask (unless the route is to a host, in which case the mask is implicit). The destination address, the address mask, and the gateway address are variable in size, and thus are placed in separately allocated memory. Routing entries also contain a reference to a network interface, a set of flags that characterize the route, and optionally a gateway address. The flags indicate a route’s type (host or network, direct or indirect) and the other attributes shown in Table 12.7. The route entry also contains a count of the number of packets sent via the route, a field for use by the link-layer driver, and a set of metrics. The RTF_HOST flag in a routing-table entry indicates that the route applies to a single host, using an implicit mask containing all the bits of the address. The RTF_GATEWAY flag in a routing-table entry indicates that the route is to an indirect gateway agent, and that the link-layer header should be filled in from the rt_gateway field, instead of from the final internetwork destination address. The route entry contains a field that can be used by the link layer to cache a reference to the direct route for the gateway. The RTF_UP flag is set when a route is installed. When a route is removed, the RTF_UP flag is cleared, but the route entry is not freed until all users of the route have noticed the failure and have released their references. The route entry contains a reference count because it is allocated dynamically and cannot be freed until all references have been released. The RTF_CLONING flag indicates that a route is a generic route that must be cloned and made more specific before use. This flag is usually used for link-layer routes that apply to a directly attached network, and the cloned routes are generally host routes for hosts on that network that contain some link-level information about that host. When a route is cloned, an external agent may be invoked to complete the link-layer information needed for a destination. Other flags (RTF_REJECT and RTF_BLACKHOLE) mark the destination of the route as being unreachable, causing either an error or a silent failure when an attempt is made to send to the destination. Reject routes are useful when a router receives packets for a cluster of addresses from the outside, but may not have routes for all hosts or networks in the cluster at all times. It is undesirable for packets with unreachable destinations to be sent outside the cluster via a default route, because the default router would send back such packets for delivery within the cluster. Black-hole routes are used during routing transients when a new route may become available shortly.

Table 12.7 Route entry flags.

Network protocols often send to the same destination repeatedly and may desire information about the path. Some of this information can be estimated dynamically for each connection, such as the round-trip time. It is useful to cache such information so that the estimation does not need to begin anew for each connection. The routing entry contains a set of route metrics stored in a rt_metrics structure that may be set externally, or may be determined dynamically by the protocols. These metrics include the maximum packet size for the path, called the maximum transmission unit (MTU); the hop count; the round-trip time and variance; the send and receive buffering requirements implied by the bandwidth–delay product; and congestion-avoidance parameters. Individual metrics can be locked, in which case they cannot be updated with dynamic estimates.

When a route is added or created by cloning, and when a route is deleted, the link layer is called via the ifa_rtrequest entry point stored in the ifaddr structure for this interface address. The link layer can allocate private storage associated with the route entry. This feature is used with direct routes to networks that are marked as cloning routes; the link layer can use this mechanism to manage link-layer address-translation information for each host. The address translation can be arranged within the system—for example, with a dynamic mechanism—or it can be handled outside the kernel when the RTF_XRESOLVE flag is set.

Routing Lookup

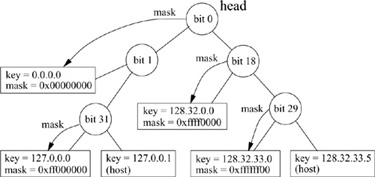

Given a set of routing entries describing various destinations, from specific hosts to a wildcard route, a routing lookup algorithm is required. Earlier versions of the system used a hash lookup in a pair of routing tables: one for host routes and one for network routes. However, this algorithm required the ability to determine the network part of each address to be looked up, which could be expensive or impossible. Not all protocols encode the address with a network part and a host part, and many protocols use multilevel addressing hierarchies. It is useful to create routes at any level of a hierarchy, allowing aggregation of the largest group of hosts for which the next-hop route is the same. Therefore, a new routing lookup algorithm was needed. The lookup algorithm in 4.4BSD uses a modification of the radix search trie [Sedgewick, 1990]. (The initial design was to use a PATRICIA search, also described in [Sedgewick, 1990], which differs only in the details of storage management.) The radix search algorithm provides a way to find a bit string, such as a network address, in a set of known strings. Although the modified search was implemented for routing lookups, the radix code is implemented in a more general way so that it can be used for other purposes. For example, the filesystem code uses a radix tree to manage information about clients to which filesystems can be exported. Each kernel route entry begins with the data structures for the radix tree, including an internal radix node and a leaf node that refers to the destination address and mask.

The radix search algorithm uses a binary tree of nodes beginning with a root node for each address family. Fig. 12.9 shows an example radix tree. A search begins at the root node, and descends through some number of internal nodes until a leaf node is found. Each internal node requires a test of a specific bit in the string, and the search descends in one of two directions depending on the value of that bit. The internal nodes contain an index of the bit to be tested, as well as a precomputed byte index and mask for use in the test. A leaf node is marked with a bit index of −1, which terminates the search. For example, a search for the address 127.0.0.1 with the tree in Fig. 12.9 would start at the head, and would branch left when testing bit 0, right at the node for bit 1, and right on testing bit 31. This search leads to the leaf node containing a host route specific to that host; such a route does not contain a mask, but uses an implicit mask with all bits set.

Figure 12.9 Example radix tree. This simplified example of a radix tree contains routes for one protocol family using 32-bit addresses. The circles represent internal nodes, beginning with the head of the tree at the top. The bit position to be tested is shown within the circle. Leaf nodes are shown as rectangles containing a key (a destination address, listed as four decimal bytes separated by dots) and the corresponding mask (in hexadecimal). Some interior nodes are associated with masks found lower in the tree, as indicated by dashed arrows.

This lookup technique tests the minimum number of bits required to distinguish among a set of bit strings. Once a leaf node is found, either it specifies the specific bit string in question, or that bit string is not present in the table. This algorithm allows a minimal number of bits to be tested in a string to look up an unknown, such as a host route; however, it does not provide for partial matching as required by a routing lookup for a network route. Thus, the routing lookup uses a modified radix search, in which each network route includes a mask, and nodes are inserted into the tree such that longer masks are found earlier in the search [Sklower, 1991]. Interior nodes for subtrees with a common prefix are marked with a mask for that prefix. (Masks generally select a prefix from an address, although the mask does not need to specify a contiguous portion of the address.) As the routing lookup proceeds, the internal nodes that are passed are associated with masks that increase in specificity. If the route that is found at the leaf after the lookup is a network route, the destination is masked before comparison with the key, thus matching any destination on that network. If the leaf node does not match the destination, one of the interior nodes visited during the route lookup should refer to the best match. After a lookup that does not find a match at the leaf node, the lookup procedure iterates backward through the tree, using a parent pointer in each node. At each interior node that contains a mask, a search is made for the part of the destination under that mask from that point. For example, a search for the address 128.32.33.7 in the table in Fig. 12.9 would test bits 0, 18, and 29 before arriving at the host route on the right (128.32.33.5). Because this address is not a match, the search moves up one level, where a mask is found. The mask is a 24-bit prefix, and is associated with the route to 128.32.33.0, which is the best match. If the mask was not a prefix (in the code, a route with a mask specifying a prefix is called a normal route), a search would have been required for the value 128.32.33.7 starting from this point.

The first match found is the best match for the destination; that is, it has the longest mask for any matching route. Matches are thus found by a combination of a radix search, testing 1 bit per node on the way down the tree, plus a full comparison under a mask at the leaf node. If the leaf node (either host or network) does not match, the search backtracks up the tree, checking each parent with a mask until a match is found. This algorithm avoids a complete comparison at each step when searching down the tree, which would eliminate the efficiency of the radix search algorithm. It is optimized for matches to routes with longer masks, and performs least efficiently when the best match is the default route (the route with the shortest mask).

Another complication of using a radix search is that a radix tree does not allow duplicated keys. There are two possible reasons for a key to be duplicated in the routing table: either multiple routes exist to the same destination or the same key is present with different masks. The latter case is not a complete duplicate, but the two routes would occupy the same location in the tree. The routing code does not support completely duplicate routes, but it supports multiple routes that differ in only the mask. When the addition of a route causes a key to be duplicated, the affected routes are chained together from a single leaf node. The routes are chained in order of mask significance, most specific mask first. If the masks are contiguous, longer masks are considered to be more specific (with a host route considered to have the longest possible mask). If a routing lookup visits a node with a duplicated key when doing a masked comparison (either at the leaf node, or while moving back up the tree), the comparison is repeated for each duplicate node on the chain, with the first successful comparison producing the best match.

As we noted, 4.4BSD does not support multiple routes to the same destination (identical key and mask). The main reason to support multiple paths would be to allow the load to be split among the paths. However, most network protocols in 4.4BSD cache a reference to a route, using it as long as the destination is the same and the route is valid. Similarly, when acting as a router, a network protocol may cache references to routes. In either case, interleaving of traffic across the available paths would often be suboptimal. A better design would be to add a pointer to an output function in each route. Most routes would copy the output pointer for the interface used by the route. Routes for which multiple paths were available would be represented by a virtual route containing references to the individual routes, which would not be placed in the radix tree. The virtual route would interpose an intermediate output function that would distribute packets to the output functions for the individual routes. This scheme would allow good packet interleaving even when a path was used by a single connection.

Routing Redirects

A routing redirect is a control request from a protocol to the routing system to modify an existing routing-table entry or to create a new routing-table entry. Protocols usually generate such requests in response to routing-redirect messages that they receive from routers. Routers generate routing-redirect messages when they recognize that a better route exists for a packet that they have been asked to forward. For example, if two hosts A and B are on the same network, and host A sends a packet to host B via a router C, then C will send a routing-redirect message to A indicating that A should send packets to B directly.

On hosts where exhaustive routing information is too expensive to maintain (e.g., small workstations), the combination of wildcard routing entries and routing-redirect messages can be used to provide a simple routing-management scheme without the use of a higher-level policy process. Current connections can be rerouted after notification of the protocols by the protocols’ pr_ctlinput() entries. Statistics are kept by the routing-table routines on the use of routing-redirect messages and on the latter’s effect on the routing tables. A redirect causes the gateway for a route to be changed if the redirect applies to all destinations to which the route applies; otherwise a new, more specific route is added.

Routing-Table Interface

A protocol accesses the routing tables through three routines: one to allocate a route, one to free a route, and one to process a routing-redirect control message. The routine rtalloc() allocates a route; it is called with a pointer to a route structure, which contains the desired destination, as shown in Fig. 12.10, and a pointer that will be set to reference the routing entry that is the best match for the destination. The destination is recorded so that subsequent output operations can check whether the new destination is the same as the previous one, allowing the same route to be used. The route returned is assumed to be held by the caller until released with a call to rtfree(). Protocols that implement virtual circuits, such as the Transmission Control Protocol (TCP), hold onto routes for the duration of the circuit’s lifetime; connectionless protocols, such as the User Datagram Protocol (UDP), allocate and free routes whenever the routes’ destination address changes. The rtalloc() routine simply checks whether the route already contains a reference to a valid route. If no route is referenced or the route is no longer valid, rtalloc() calls the rtalloc1() routine to look up a routing entry for the destination, passing a flag indicating whether the route will be used or is simply being checked. If packets will be sent, the route is created by cloning if necessary.

The rtredirect() routine is called to process a routing-redirect control message. It is called with a destination address and mask, the new gateway to that destination, and the source of the redirect. Redirects are accepted from only the current router for the destination. If a nonwildcard route exists to the destination, the gateway entry in the route is modified to point at the new gateway supplied. Otherwise, a new routing-table entry is inserted that reflects the information supplied. Routes to interfaces and routes to gateways that are not directly accessible from the host are ignored.

Figure 12.10 Data structures used in route allocation.

User-Level Routing Policies

The kernel routing facilities deliberately refrain from making policy decisions. Instead, routing policies are determined by user processes, which then add, delete, or change entries in the kernel routing tables. The decision to place policy decisions in a user process implies that routing-table updates may lag a bit behind the identification of new routes, or the failure of existing routes. This period of instability is normally short if the routing process is implemented properly. Internet-specific advisory information, such as ICMP error messages and IMP diagnostic messages, may also be read from raw sockets (described in Section 12.7).

Several routing-policy processes have been implemented. The system standard routing daemon, routed (8), uses a variant of the Xerox NS Routing Information Protocol [Xerox, 1981] to maintain up-to-date routing tables in a local environment. This protocol has become known as the Routing Information Protocol (RIP) [Hedrick, 1988]. Many sites that require the use of other routing protocols or more configuration options than are provided by routed (8) use a multiprotocol routing process called gated [Hallgren & Honig, 1993].

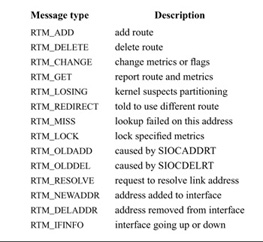

User-Level Routing Interface: Routing Socket

User-level processes that implement routing policy and protocols require an interface to the kernel routing table so that they can add, delete, and change kernel routes. In older versions of the system, route addition and deletion were implemented as ioctl commands that passed a kernel route entry as data. In those versions of the system, the route entry contained fixed-sized sockaddr structures for the destination and gateway values. However, it is no longer possible to use fixed-sized structures, and changes to the routing entry may require other components such as a mask for network routes, a mask for cloning operations, or an identification of the interface to be used by the route. A new interface was designed to accommodate these changes. Another design goal was to allow a routing process such as gated to learn of routing changes due to redirects, and of changes made by other processes.

The interface to the kernel routing layer in 4.4BSD uses a socket in a new protocol family to communicate with the kernel routing layer. A privileged process creates a raw socket in the routing protocol family, and then passes messages to and from the kernel routing layer. This socket operates like a normal datagram socket, including queueing of messages received at the socket, except that communication takes place between a user process and the kernel. Messages include a header with a message type identifying the action, as listed in Table 12.8 (on page 426). Messages to the kernel are requests to add, modify, or delete a route, or are requests for information about the route to a specific destination. The kernel sends a message in reply with the original request, an indication that the message is a reply, and an error number in case of failure. Because routing sockets are raw sockets, each open routing socket receives a copy of the reply. The message header includes a process ID and a sequence number so that each process can determine whether this message is a reply to its own request and can match replies with requests. The kernel also sends messages as indications of asynchronous events, such as redirects and changes in local interface state. These messages allow a daemon to monitor changes in the routing table made by other processes, events detected by the kernel, and changes to the local interface addresses and state. The routing socket is also used to deliver requests for external resolution of a link-layer route when the RTF_XRESOLVE flag is set on a route entry.

Table 12.8 Routing message types.

Requests to add or change a route include all the information needed for the route. The header has a field for the route flags listed in Table 12.7, and contains a rt_metrics structure of metrics that may be set or locked. The header also carries a bit vector that describes the set of addresses carried in the message; the addresses follow the header as an array of variable-sized sockaddr structures. A destination address is required, as is a mask for network routes. A gateway address is generally required as well. The system normally determines the interface to be used by the route from the gateway address, using the interface shared with that gateway. By convention, direct routes contain the local address of the interface to be used. In some cases, the gateway address is not sufficient to determine the interface, and an interface address can be passed as well, generally using a sockaddr_dl structure containing the interface name or index (see Section 12.1).

12.6 Buffering and Congestion Control

A major factor affecting the performance of a protocol is the buffering policy. Lack of a proper buffering policy can force packets to be dropped, cause false windowing information to be emitted by protocols, fragment host memory, and degrade the overall host performance. Because of problems such as these, most systems allocate a fixed pool of memory to the networking system and impose a policy optimized for normal network operation.

The 4.4BSD networking system is not dramatically different in this respect. Mbuf structures are allocated as needed via the general allocator malloc() up to the per-type limit for the network, and are eventually freed for reuse. At boot time, a small, fixed amount of memory is allocated by the networking system for mbuf clusters. At later times, more system memory may be requested for mbuf clusters as the need arises, up to a preconfigured limit; at no time, however, is this memory ever returned to the system. It would be possible to reclaim memory from the network. In the environments where the system has been used, storage use has not been an issue, and thus storage reclamation has been left unimplemented.

Protocol Buffering Policies

When a socket is created, the protocol reserves some amount of buffer space for send and receive queues. These amounts define the high watermarks used by the socket routines in deciding when to block and unblock a process. The reservation of space does not currently result in any action by the memory-management routines.

Protocols that provide connection-level flow control base their decisions on the amount of space in the associated socket queues. That is, windows sent to peers are calculated based on the amount of free space in the socket’s receive queue, whereas utilization of the send window received from a peer is dependent on the high watermark of the send queue.

Queue Limiting

Incoming packets from the network are always received unless memory allocation fails. However, each network-layer protocol input queue has an upper bound on the queue’s length, and any packets exceeding that bound are discarded. It is possible for a host to be overwhelmed by excessive network traffic (e.g., if the host is acting as a gateway from a high-bandwidth network to a low-bandwidth network). As a defense mechanism, the queue limits can be adjusted to throttle network-traffic load on a host. Discarding packets is not always a satisfactory solution to a problem such as this (simply dropping packets is likely to increase the load on a network); the queue lengths were incorporated mainly as a safeguard mechanism. On the other hand, limiting output queue lengths can be valuable on hosts that gateway traffic from a high-bandwidth network to a low-bandwidth network. The queue limit should be sufficiently high that transient overload can be handled by buffering, but allowing the queue to be too large causes network delays to increase to unacceptable levels.

12.7 Raw Sockets

A raw socket allows privileged users direct access to a protocol other than those normally used for transport of user data—for example, network-level protocols. Raw sockets are intended for knowledgeable processes that wish to take advantage of some protocol feature not directly accessible through the normal interface, or for the development of protocols built atop existing protocols. For example, the ping (8) program is implemented using a raw ICMP socket (see Section 13.8). The raw IP socket interface attempts to provide an identical interface to the one a protocol would have if it were resident in the kernel.

The raw socket support is built around a generic raw socket interface, possibly augmented by protocol-specific processing routines. This section describes only the core of the raw socket interface; details specific to particular protocols are not discussed. Some protocol families (including Internet) use private versions of the routines and data structures described here.

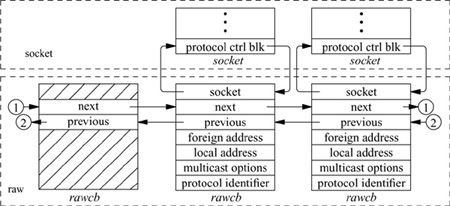

Control Blocks

Every raw socket has a protocol control block of the form shown in Fig. 12.11. All control blocks are kept on a doubly linked list for performing lookups during packet dispatch. Associations may be recorded in fields referenced by the control block and may be used by the output routine in preparing packets for transmission. The rcb_proto field contains the protocol family and protocol number with which the raw socket is associated. The protocol, family, and addresses are used to filter packets on input, as described in the next subsection.

A raw socket interface is datagram oriented: Each send or receive on the socket requires a destination address. Destination addresses may be supplied by the user, or referenced via pointers to sockaddr structures in the control block and automatically installed in the outgoing packet by the output routine. If routing is necessary, it must be performed by an underlying protocol.

Figure 12.11 Raw-socket control block.

Input Processing

Input packets are assigned to raw sockets based on a simple pattern-matching scheme. Each protocol (and potentially some network interfaces) gives unassigned packets to the raw input routine with the call

raw_input(m, proto, src, dst)

struct mbuf *m;

struct sockproto *proto;

struct sockaddr *src, *dst;

This call must be made at software-interrupt level (e.g., from a network-level protocol handler), rather than directly from hardware interrupt level. Input packets are placed into the input queues of all raw sockets that match the header according to the following rules:

1. The protocol family of the socket and header agree.

2. If the protocol number in the socket is nonzero, then it agrees with that found in the packet header.

3. If a local address is defined for the socket, the address format of the socket’s local address is the same as the packet’s destination address, and the two addresses agree exactly.

4. Rule 3 is applied to the socket’s foreign address and the packet’s source address.

A basic assumption in the pattern-matching scheme is that addresses present in the control block and packet header (as constructed by the network interface and any raw input-protocol module) are in a canonical form that can be compared on a bit-for-bit basis. If multiple sockets match the incoming packet, the packet is copied as needed.

Output Processing

On output, each send request results in a call to the raw socket’s user request routine, which is specific to the protocol or protocol family. Any necessary processing is done before the packet is delivered to the appropriate network interface.

12.8 Additional Network-Subsystem Topics

In this section, we shall discuss several aspects of the network subsystem that are not easy to categorize.

Out-of-Band Data