CHAPTER 2

Security Operations and Administration

This is where the planning hits reality; it’s in the day to day of information security operations that you see every decision made during the threat assessments and the risk mitigation plans being live-fire tested by your co-workers, customers, legitimate visitors, and threat actors alike. Whether you’re an on-shift watch-stander in a security operations center (SOC) or network operations center (NOC) or you work a pattern of normal business hours and days, you’ll be exposed to the details of information security in action. Security operations and administration entail a wide breadth of tasks and functions, and the security professional is expected to have a working familiarity with each of them. This can include maintaining a secure environment for business functions and the physical security of a campus and, specifically, the data center. Throughout your career, you will likely have to oversee and participate in incident response activities, which will include conducting investigations, handling material that may be used as evidence in criminal prosecution and/or civil suits, and performing forensic analysis. The Systems Security Certified Practitioner (SSCP) should also be familiar with common tools for mitigating, detecting, and responding to threats and attacks; this includes knowledge of the importance and use of event logging as a means to enhance security efforts. Another facet the security practitioner may have to manage could be how the organization deals with emergencies, including disaster recovery. There is a common thread running through all aspects of this topic: supporting business functions by incorporating security policy and practices with normal daily activities. This involves maintaining an accurate and detailed asset inventory, tracking the security posture and readiness of information technology (IT) assets through the use of configuration/change management, and ensuring personnel are trained and given adequate support for their own safety and security. This chapter will address all these aspects of security operations. The practitioner is advised, however, to not see this as a thorough treatment of all these concepts, each of which could be (and has been) the subject of an entire book (or books) by themselves; for each topic that is unfamiliar, you should look at the following content as an introduction only and pursue a more detailed review of related subject matter. The countries and regions that an organization operates in may have varying, distinct, and at times conflicting legal systems. Beyond considerations of written laws and regulations, the active functioning of court systems and regulatory bodies often has intricate, myriad applications in the real world that extend far beyond how things are codified in written laws. These factors become even more varied and complex when an organization functions in multiple countries and needs to deal with actual scenarios that directly involve international law and the laws of each respective nation. With that in mind, it is always imperative to get the input of a professional legal team to fully understand the legal scope and ramifications of security operations (and basically all operations and responsibilities beyond security as well). Your day-to-day journey along the roadmap of security operations and administration must keep one central ideal clearly in focus. Every day that you serve as an information security professional, you make or influence decisions. Every one of those decision moments is an opportunity or a vulnerability; it is a moment in which you can choose to do the technically and ethically correct thing or the expedient thing. Each of those decision moments is a test for you. Those decisions must be ethically sound; yes, they must be technically correct, cost-effective, and compliant with legal and regulatory requirements, but at their heart they must be ethical. Failure to do so puts your professional and personal integrity at risk, as much as it puts your employer’s or your clients’ reputation and integrity at risk. Being a security professional requires you to work, act, and think in ways that comply with and support the codes of ethics that are fundamental parts of your workplace, your profession, and your society and culture at large. Those codes of ethics should harmonize with if not be the fundamental ethical values and principles you live your life by—if they do not, that internal conflict in values may make it difficult if not impossible to achieve a sense of personal and professional integrity! Professional and personal integrity should be wonderfully, mutually self-reinforcing. Let’s first focus on what ethical decision-making means. This provides a context for how you, as an SSCP, comply with and support the (ISC)2 Code of Ethics in your daily work and life. We’ll see that this is critical to being able to live up to and fulfill the “three dues” of your responsibilities: due care, due diligence, and due process. Let’s start with what it means to be a professional: It means that society has placed great trust and confidence in you, because you have been willing to take on the responsibility to get things done right. Society trusts in you to know your practice, know its practical limits, and work to make sure that the services you perform meet or exceed the best practices of the profession. This is a legal and an ethical responsibility. Everything you do requires you to understand the needs of your employers or clients. You listen, observe, gather data, and ask questions; you think about what you’ve learned, and you come to conclusions. You make recommendations, offer advice, or take action within the scope of your job and responsibilities. Sometimes you take action outside of that scope, going above and beyond the call of those duties. You do this because you are a professional. You would not even think of making those conclusions or taking those actions if they violently conflicted with what known technical standards or recognized best technical practice said was required. You would not knowingly recommend or act to violate the law. Your professional ethics are no different. They are a set of standards that are both constraints and freedoms that you use to inform, shape, and then test your conclusions and decisions with before you act. As a professional—in any profession—you learned what that profession requires of you through education, training, and on-the-job experience. You learned from teachers, mentors, trainers, and the people working alongside of you. They shared their hard-earned insight and knowledge with you, as their part of promoting the profession you had in common. In doing so they strengthened the practice of the ethics of the profession, as well as the practice of its technical disciplines. (ISC)2 provides a Code of Ethics, and to be an SSCP, you agree to abide by it. It is short and simple. It starts with a preamble, which is quoted here in its entirety: The safety and welfare of society and the common good, duty to our principals, and to each other, requires that we adhere, and be seen to adhere, to the highest ethical standards of behavior. Therefore, strict adherence to this Code is a condition of certification. Let’s operationalize that preamble—take it apart, step-by-step, and see what it really asks of us.

The code is equally short, containing just four canons or principles to abide by. Protect society, the common good, necessary public trust and confidence, and the infrastructure. Act honorably, honestly, justly, responsibly, and legally. Provide diligent and competent service to principals. Advance and protect the profession. The canons do more than just restate the preamble’s two points. They show you how to adhere to the preamble. You must take action to protect what you value; that action should be done with honor, honesty, and with justice as your guide. Due care and due diligence are what you owe to those you work for (including the customers of the businesses that employ us!). The final canon talks to your continued responsibility to grow as a professional. You are on a never-ending journey of learning and discovery; each day brings an opportunity to make the profession of information security stronger and more effective. You as an SSCP are a member of a worldwide community of practice—the informal grouping of people concerned with the safety, security, and reliability of information systems and the information infrastructures of the modern world. In ancient history, there were only three professions—those of medicine, the military, and the clergy. Each had in its own way the power of life and death of individuals or societies in its hands. Each as a result had a significant burden to be the best at fulfilling the duties of that profession. Individuals felt the calling to fulfill a sense of duty and service, to something larger than themselves, and responded to that calling by becoming a member of a profession. This, too, is part of being an SSCP. Visit https://www.isc2.org for more information. Most businesses and nonprofit or other types of organizations have a code of ethics that they use to shape their policies and guide them in making decisions, setting goals, and taking actions. They also use these codes of ethics to guide the efforts of their employees, team members, and associates; in many cases, these codes can be the basis of decisions to admonish, discipline, or terminate their relationship with an employee. In most cases, organizational codes of ethics are also extended to the partners, customers, or clients that the organization chooses to do business with. Sometimes expressed as values or statements of principles, these codes of ethics may be in written form, established as policy directives upon all who work there; sometimes, they are implicitly or tacitly understood as part of the organizational culture or shaped and driven by key personalities in the organization. But just because they aren’t written down doesn’t mean that an ethical code or framework for that organization doesn’t exist. Fundamentally, these codes of ethics have the capacity to balance the conflicting needs of law and regulation with the bottom-line pressure to survive and flourish as an organization. This is the real purpose of an organizational ethical code. Unfortunately, many organizations let the balance go too far toward the bottom-line set of values and take shortcuts; they compromise their ethics, often end up compromising their legal or regulatory responsibilities, and end up applying their codes of ethics loosely if at all. As a case in point, consider that risk management must include the dilemma that sometimes there are more laws and regulations than any business can possibly afford to comply with and they all conflict with each other in some way, shape, or form. What’s a chief executive or a board of directors to do in such a circumstance? It’s actually quite easy to incorporate professional and personal ethics, along with the organization’s own code of ethics, into every decision process you use. Strengths, weaknesses, opportunities, and threats (SWOT) analyses, for example, focus your attention on the strengths, weaknesses, opportunities, and threats that a situation or a problem presents; being true to one’s ethics should be a strength in such a context, and if it starts to be seen as a weakness or a threat, that’s a danger signal you must address or take to management and leadership. Cost/benefits analyses or decision trees present the same opportunity to include what sometimes is called the New York Times or the Guardian test: How would each possible decision look if it appeared as a headline on such newspapers of record? Closer to home, think about the responses you might get if you asked your parents, family, or closest friends for advice about such thorny problems—or their reactions if they heard about it via their social media channels. Make these thoughts a habit; that’s part of the practice aspect of being a professional. As the on-scene information security professional, you’ll be the one who most likely has the first clear opportunity to look at an IT security posture, policy, control, or action, and challenge any aspects of it that you think might conflict with the organization’s code of ethics, the (ISC)2 Code of Ethics, or your own personal and professional ethics. What does it mean to “keep information secure?” What is a good or adequate “security posture?” Let’s take questions like these and operationalize them by looking for characteristics or attributes that measure, assess, or reveal the overall security state or condition of our information.

Note that these are characteristics of the information itself. Keeping information authentic, for example, levies requirements on all of the business processes and systems that could be used in creating or changing that information or changing anything about the information. All of these attributes boil down to one thing: decision assurance. How much can we trust that the decisions we’re about to make are based on reliable, trustworthy information? How confident can we be that the competitive advantage of our trade secrets or the decisions we made in private are still unknown to our competitors or our adversaries? How much can we count on that decision being the right decision, in the legal, moral, or ethical sense of its being correct and in conformance with accepted standards? Another way to look at attributes like these is to ask about the quality of the information. Bad data—data that is incomplete, incorrect, not available, or otherwise untrustworthy—causes monumental losses to businesses around the world; an IBM study reported that in 2017 those losses exceeded $3.1 trillion, which may be more than the total losses to business and society due to information security failures. Paying better attention to a number of those attributes would dramatically improve the reliability and integrity of information used by any organization; as a result, a growing number of information security practitioners are focusing on data quality as something they can contribute to. There are any number of frameworks, often represented by their acronyms, which are used throughout the world to talk about information security. All are useful, but some are more useful than others.

These frameworks, and many more, have their advocates, their user base, and their value. That said, in the interest of consistency, we’ll focus throughout this book on CIANA, as its emphasis on both nonrepudiation and authentication have perhaps the strongest and most obvious connections to the vitally important needs of e-commerce and our e-society to be able to conduct personal activities, private business, and governance activities in ways that are safe, respectful of individual rights, responsible, trustworthy, reliable, and transparent. It’s important to keep in mind that these attributes of systems performance or effectiveness build upon each other to produce the overall degree of trust and confidence we can rightly place on those systems and the information they produce for us. We rely on high-reliability systems because their information is correct and complete (high integrity), it’s where we need it when we need it (availability), and we know it’s been kept safe from unauthorized disclosure (it has authentic confidentiality), while at the same time we have confidence that the only processes or people who’ve created or modified it are trusted ones. Our whole sense of “can we trust the system and what it’s telling us” is a greater conclusion than just the sum of the individual CIANA, Parkerian, or triad attributes. Let’s look further at some of these attributes of information security. Often thought of as “keeping secrets,” confidentiality is actually about sharing secrets. Confidentiality is both a legal and ethical concept about privileged communications or privileged information. Privileged information is information you have, own, or create, and that you share with someone else with the agreement that they cannot share that knowledge with anyone else without your consent or without due process in law. You place your trust and confidence in that other person’s adherence to that agreement. Relationships between professionals and their clients, such as the doctor-patient or attorney-client ones, are prime examples of this privilege in action. In rare exceptions, courts cannot compel parties in a privileged relationship to violate that privilege and disclose what was shared in confidence. Confidentiality refers to how much we can trust that the information we’re about to use to make a decision with has not been seen by unauthorized people. The term unauthorized people generally refers to any person or any group of people who could learn something from our confidential information and then use that new knowledge in ways that would thwart our plans to attain our objectives or cause us other harm. Confidentiality needs dictate who can read specific information or files or who can download or copy them; this is significantly different from who can modify, create, or delete those files. One way to think about this is that integrity violations change what we think we know; confidentiality violations tell others what we think is our private knowledge. Business has many categories of information and ideas that it needs to treat as confidential, such as the following:

In many respects, such business confidential information either represents the results of investments the organization has already made or provides insight that informs decisions they’re about to make; either way, all of this and more represent competitive advantage to the company. Letting this information be disclosed to unauthorized persons, inside or outside of the right circles within the company, threatens to reduce the value of those investments and the future return on those investments. It could, in the extreme, put the company out of business! Let’s look a bit closer at how to defend such information. Our intellectual property are the ideas that we create and express in tangible, explicit form; in creating them, we create an ownership interest. Legal and ethical frameworks have long recognized that such creativity benefits a society and that such creativity needs to be encouraged and incentivized. Incentives can include financial reward, recognition and acclaim, or a legally protected ownership interest in the expression of that idea and its subsequent use by others. This vested interest was first recognized by Roman law nearly 2,000 years ago. Recognition is a powerful incentive to the creative mind, as the example of the Pythagorean theorem illustrates. It was created long before the concept of patents, rights, or royalties for intellectual property were established, and its creator has certainly been dead for a long time, and yet no ethical person would think to attempt to claim it as their own idea. Having the author’s name on the cover of a book or at the masthead of a blog post or article also helps to recognize creativity. Financial reward for ideas can take many forms, and ideally, such ideas should pay their own way by generating income for the creator of the idea, recouping the expenses they incurred to create it, or both. Sponsorship, grants, or the salary associated with a job can provide this; creators can also be awarded prizes, such as the Nobel Prize, as both recognition and financial rewards. The best incentive for creativity, especially for corporate-sponsored creativity, is in how that ownership interest in the new idea can be turned into profitable new lines of business or into new products and services. The vast majority of intellectual property is created in part by the significant investment of private businesses and universities in both basic research and product-focused developmental research. Legal protections for the intellectual property (or IP) thus created serve two main purposes. The first is to provide a limited period of time in which the owner of that IP has a monopoly for the commercial use of that idea and thus a sole claim on any income earned by selling products or providing services based on that idea. These monopolies were created by an edict of the government or the ruling monarchy, with the first being issued by the Doge of Venice in the year 1421. Since then, nation after nation has created patent law as the body of legal structure and regulation for establishing, controlling, and limiting the use of patents. The monopoly granted by a patent is limited in time and may even (based on applicable patent law) be limited in geographic scope or the technical or market reach of the idea. An idea protected by a patent issued in Colombia, for example, may not enjoy the same protection in Asian markets as an idea protected by U.S., U.K., European Union, or Canadian patent law. The second purpose is to publish the idea itself to the marketplace so as to stimulate rapid adoption of the idea, leading to widespread adoption, use, and influence upon the marketplace and upon society. Patents may be monetized by selling the rights to the patent or by licensing the use of the patent to another person or business; income from such licensing or sale has long been called the royalties from the patent (in recognition that it used to take an act of a king or a queen to make a patent enforceable). Besides patents and patent law, there exist bodies of law regarding copyrights, trademarks, and trade secrets. Each of these treats the fruits of one’s intellectually creative labors differently, and like patent law, these legal and ethical constructs are constantly under review by the courts and the cultures they apply to. Patents protect an idea, a process, or a procedure for accomplishing a practical task. Copyrights protect an artistic expression of an idea, such as a poem, a painting, a photograph, or a written work (such as this book). Trademarks identify an organization or company and its products or services, typically with a symbol, an acronym, a logo, or even a caricature or character (not necessarily of a person). Trade secrets are the unpublished ideas, typically about step-by-step details of a process, or the recipe for a sauce, paint, pigment, alloy, or coating, that a company or individual has developed. Each of these represent a competitive advantage worthy of protection. Note the contrast in these forms, as shown in Table 2.1. TABLE 2.1 Forms of Intellectual Property Protection The most important aspect of that table for you, as the on-scene information security professional, is the fourth column. Failure to defend and failure to keep secret both require that the owners and licensed or authorized users of a piece of IP must take all reasonable, prudent efforts to keep the ideas and their expression in tangible form safe from infringement. This protection must be firmly in place throughout the entire lifecycle of the idea—from its first rough draft of a sketch on the back of a cocktail napkin through drawings, blueprints, mathematical models, and computer-aided design and manufacturing (CADAM) data sets. All expressions of that idea in written, oral, digital, or physical form must be protected from inadvertent disclosure or deliberate but unauthorized viewing or copying. Breaking this chain of confidentiality can lead to voiding the claim to protection by means of patent, copyright, or trade secret law. In its broadest terms, this means that the organization’s information systems must ensure the confidentiality of this information. Protection of intellectual property must consider three possible exposures to loss: exfiltration, inadvertent disclosure, and failure to aggressively assert one’s claims to protection and compensation. Each of these is a failure by the organization’s management and leadership to exercise due care and due diligence.

Each of these possible exposures to loss starts with taking proper care of the data in the first place. This requires properly classifying it (in terms of the restrictions on handling, use, storage, or dissemination required), marking or labeling it (in human-readable and machine-readable ways), and then instituting procedures that enforce those restrictions. Most software is protected by copyright, although a number of important software products and systems are protected by patents. Regardless of the protection used, it is implemented via a license. Most commercially available software is not actually sold; customers purchase a license to use it, and that license strictly limits that use. This license for use concept also applies to other copyrighted works, such as books, music, movies, or other multimedia products. As a customer, you purchase a license for its use (and pay a few pennies for the DVD, Blu-Ray, or other media it is inscribed upon for your use). In most cases, that license prohibits you from making copies of that work and from giving copies to others to use. Quite often, they are packaged with a copy-protection feature or with features that engage with digital rights management (DRM) software that is increasingly part of modern operating systems, media player software applications, and home and professional entertainment systems. It is interesting to note that, on one hand, digital copyright law authorizes end-user licensees to make suitable copies of a work in the normal course of consuming it—you can make backups, for example, or shift your use of the work to another device or another moment in time. On the other hand, the same laws prohibit you from using any reverse engineering, tools, processes, or programs to defeat, break, side-step, or circumvent such copy protection mechanisms. Some of these laws go further and specify that attempts to defeat any encryption used in these copy protection and rights management processes is a separate crime itself. These laws are part of why businesses and organizations need to have acceptable use policies in force that control the use of company-provided IT systems to install, use, consume, or modify materials protected by DRM or copy-protect technologies. The employer, after all, can be held liable for damages if they do not exert effective due diligence in this regard and allow employees to misuse their systems in this way. By contrast, consider the Creative Commons license, sometimes referred to as a copyleft. The creator of a piece of intellectual property can choose to make it available under a Creative Commons license, which allows anyone to freely use the ideas provided by that license so long as the user attributes the creation of the ideas to the licensor (the owner and issuer of the license). Businesses can choose to share their intellectual property with other businesses, organizations, or individuals by means of licensing arrangements. Copyleft provides the opportunity to widely distribute an idea or a practice and, with some forethought, leads to creating a significant market share for products and services. Pixar Studios, for example, has made RenderMan, its incredibly powerful, industry-leading animation rendering software, available free of charge under a free-to-use license that is a variation of a creative commons license. In March 2019, the National Security Agency made its malware reverse engineering software, called Ghidra, publicly available (and has since issued bug fix releases to it). Both approaches reflect a savvy strategy to influence the ways in which the development of talent, ideas, and other products will happen in their respective marketplaces. Corporations constantly research the capabilities of their competitors to identify new opportunities, technologies, and markets. Market research and all forms of open source intelligence (OSINT) gathering are legal and ethical practices for companies, organizations, and individuals to engage in. Unfortunately, some corporate actors extend their research beyond the usual venue of trade shows and reviewing press releases and seek to conduct surveillance and gather intelligence on their competitors in ways that move along the ethical continuum from appropriate to unethical and, in some cases, into illegal actions. In many legal systems, such activities are known as espionage, rather than research or business intelligence, as a way to clearly focus on their potentially criminal nature. (Most nations consider it an illegal violation of their sovereignty to have another nation conduct espionage operations against it; most nations, of course, conduct espionage upon each other regardless.) To complicate things even further, nearly all nations actively encourage their corporate citizens to gather business intelligence information about the overseas markets they do business in, as well as about their foreign or multinational competitors operating in their home territories. The boundary between corporate espionage and national intelligence services has always been a blurry frontier. When directed against a competitor or a company trying to enter the marketplace, corporate-level espionage activities that might cross over an ethical or legal boundary can include attempts to do the following:

All of the social engineering techniques used by hackers and the whole arsenal of advanced persistent threat (APT) tools and techniques might be used as part of an industrial espionage campaign. Any or all of these techniques can and often are done by third parties, such as hackers (or even adolescents), often through other intermediaries, as a way of maintaining a degree of plausible deniability. You will probably never know if that probing and scanning hitting your systems today has anything to do with the social engineering attempts by phone or email of a few weeks ago. You’ll probably never know if they’re related to an industrial espionage attempt, to a ransom or ransomware attack, or as part of an APT’s efforts to subvert some of your systems as launching pads for their attacks on other targets. Protect your systems against each such threat vector as if each system does have the defense of the company’s intellectual property “crown jewels” as part of its mission. That’s what keeping confidences, what protecting the confidential, proprietary, or business-private information, comes down to, doesn’t it? Integrity, in the common sense of the word, means that something is whole, complete, its parts smoothly joined together. People with high personal integrity are ones whose actions and words consistently demonstrate the same set of ethical principles. Having such integrity, you know you can count on them and trust them to act both in ways they have told you they would and in ways consistent with what they’ve done before. When talking about information systems, integrity refers to both the information in them and the processes (that are integral to that system) that provide the functions we perform on that information. Both of these—the information and the processes—must be complete, correct, function together correctly, and do so in reliable, repeatable, and deterministic ways for the overall system to have integrity. When we measure or assess information systems integrity, therefore, we can think of it in several ways.

Note that in all but the simplest of business or organizational architectures, you’ll find multiple sets of business logic and therefore business processes that interact with each other throughout overlapping cycles of processing. Some of these lines of business can function independently of each other, for a while, so long as the information and information systems that serve that line of business directly are working correctly (that is, have high enough levels of integrity).

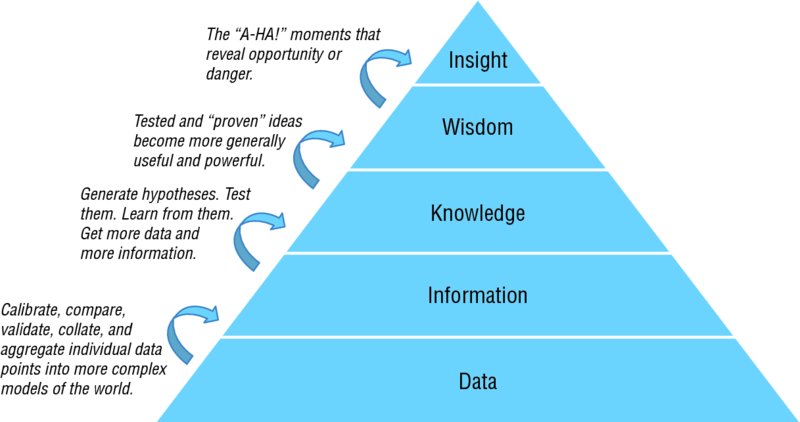

You make a decision to trust in what your systems are telling you. You choose to believe what the test results, the outputs of your monitoring systems, and your dashboards and control consoles are presenting to you as “ground truth,” the truth you could observe if you were right there on the ground where the event reported by your systems is taking place. Most of the time, you’re safe in doing so. The operators of Iran’s nuclear materials processing plant believed what their control systems were reporting to them, all the while the Stuxnet malware had taken control of both the processing equipment and the monitoring and display systems. Those displays lied to their users, while Stuxnet drove the uranium processing systems to self-destruct. An APT that gets deep into your system can make your systems lie to you as well. Attackers have long used the techniques of perception management to disguise their actions and mislead their targets’ defenders. Your defense: Find a separate and distinct means for verifying what your systems are telling you. Get out-of-band or out-of-channel and gather data in some other way that is as independent as possible from your mainline systems; use this alternative source intelligence as a sanity check. Integrity applies to three major elements of any information-centric set of processes: to the people who run and use them, to the data that the people need to use, and to the systems or tools that store, retrieve, manipulate, and share that data. Note, too, that many people in the IT and systems world talk about “what we know” in four very different but strongly related ways, sometimes referred to as D-I-K-W.

Figure 2.1 illustrates this knowledge pyramid. FIGURE 2.1 The DIKW knowledge pyramid Professional opinion in the IT and information systems world is strongly divided about data versus DIKW, with about equal numbers of people holding that they are the same ideas, that they are different, and that the whole debate is unnecessary. As an information security professional, you’ll be expected to combine experience, training, and the data you’re observing from systems and people in real time to know whether an incident of interest is about to become a security issue, whether your organization uses knowledge management terminology like this or not. This is yet another example of just how many potentially conflicting, fuzzy viewpoints exist in IT and information security. Is the data there when we need it in a form we can use? We make decisions based on information; whether that is new information we have gathered (via our data acquisition systems) or knowledge and information we have in our memory, it’s obvious that if the information is not where we need it when we need it, we cannot make as good a decision as we might need.

Those might seem obvious, and they are. Key to availability requirements is that they specify what information is needed; where it will need to be displayed, presented, or put in front of the decision-makers; and within what span of time the data is both available (displayed to the decision-makers) and meaningful. Yesterday’s data may not be what we need to make today’s decision. Note that availability means something different for a system than it does for the information the system produces for us. Systems availability is measurable, such as via a percentage of capacity or a throughput rate. Information availability, by contrast, tells us one of three things.

Information and information systems represent significant investments by organizations, and as a result, there’s a strong bottom-line financial need to know that such investments are paying off—and that their value is not being diluted due to loss of control of that information (via a data breach or exfiltration) or loss or damage to the data’s integrity or utility. Organizations have three functional or operational needs for information regarding accountability. First, they gather information about the use of corporate information and IT systems. Then they consolidate, analyze, and audit that usage information. Finally, they use the results of those reviews to inform decision-making. Due diligence needs, for example, are addressed by resource chargeback, which attributes the per-usage costs of information to each internal user organization. Individuals must also be held accountable for their own actions, including their use or misuse of corporate information systems. Surrounding all of this is the need to know whether the organization’s information security systems are actually working correctly and that alarms are being properly attended to. Although legal and cultural definitions of privacy abound, we each have an internalized, working idea of what it means to keep something private. Fundamentally, this means that when we do something, write something down, or talk with another person, we have a reasonable expectation that what is said and done stays within a space and a place that we can control. We get to choose whom we share our thoughts with or whom we invite into our home. And with this working concept of privacy deep in our minds, we establish circles of trust. The innermost circle, those closest to us, we call our intimates; these are the people with whom we mutually share our feelings, ideas, hopes, worries, and dreams. Layer by layer, we add on other members of our extended family, our neighbors, or even people we meet every morning at the bus stop. We know these people to varying degrees, and our trust and confidence in them varies as well. We’re willing to let our intimates make value judgments about what we consider to be our private matters or accept criticism from them about such matters; we don’t share these with those not in our “inner circle,” and we simply not tolerate them (tolerate criticism or judgments) from someone who is not at the same level of trust and regard. Businesses work the same way. Businesses need to have a reasonable expectation that problems or issues stay within the set of people within the company who need to be aware of them and involved in their resolution. This is in addition to the concept of business confidential or proprietary information—it’s the need to take reasonable and prudent measures to keep conversations and tacit knowledge inside the walls of the business and, when applicable, within select circles of people inside the business. As more and more headline-making data breaches occur, people are demanding greater protection of personally identifiable information (PII) and other information about them as individuals. Increasingly, this is driving governments and information security professionals to see privacy as separate and distinct from confidentiality. While both involve keeping closely held, limited-distribution information safe from inadvertent disclosure, we’re beginning to see that they may each require subtly different approaches to systems design, operation, and management to achieve. In legal terms, privacy relates to three main principles: restrictions on search and seizure of information and property, self-incrimination, and disclosure of information held by the government to plaintiffs or the public. Many of these legal concepts stem from the idea that government must be restricted from taking arbitrary action against its citizens, or people (human beings or fictitious entities) who are within the jurisdiction of those governments. Laws such as the Fourth and Fifth Amendments to the US Constitution, for example, address the first two, while the Privacy Act of 1974 created restrictions on how government could share with others what it knew about its citizens (and even limited sharing of such information within the government). Medical codes of practice and the laws that reflect them encourage data sharing to help health professionals detect a potential new disease epidemic but also require that personally identifiable information in the clinical data be removed or anonymized to protect individual patients. The European Union has enacted a series of policies and laws designed to protect individual privacy as businesses and governments exchange data about people, about transactions, and about themselves. The latest of these, the General Data Protection Regulation 2016/679, is a law binding upon all persons, businesses, or organizations doing anything involving the data related to an EU person. GDPR’s requirements meant that by May 2018, businesses had to change the ways that they collected, used, stored, and shared information about anyone who contacted them (such as by browsing to their website); they also had to notify such users about the changes and gain their informed consent to such use. Many news and infotainment sites hosted in the United States could not serve EU persons until they implemented changes to become GDPR compliant. Privacy as a data protection framework, such as GDPR, provides you with specific functional requirements your organization’s use of information must comply with; you are a vital part in making that compliance effective and in assuring that such usage can be audited and controlled effectively. If you have doubts as to whether a particular action or an information request is legal or ethical, ask your managers, the organizational legal team, or its ethics advisor (if it has one). In some jurisdictions and cultures, we speak of an inherent right to privacy; in others, we speak to a requirement that people and organizations protect the information that they gather, use, and maintain when that data is about another person or entity. In both cases, the right or requirement exists to prevent harm to the individual. Loss of control over information about you or about your business can cause you grave if not irreparable harm. Other key legal and ethical frameworks for privacy around the world include the following. Following World War II, there was a significant renewal and an increased sense of urgency to ensure that governments did not act in an arbitrary manner against citizens. The United Nations drafted the Universal Declaration of Human Rights that set forth these expectations for members. Article 12 states, “No one shall be subjected to arbitrary interference with his privacy, family, home or correspondence, nor to attacks upon his honour and reputation. Everyone has the right to the protection of the law against such interference or attacks.” The Organization for Economic Cooperation and Development (OECD) promotes policies designed to improve the economic and social well-being of people around the world. In 1980, the OECD published “Guidelines on the Protection of Privacy and Transborder Flows of Personal Data” to encourage the adoption of comprehensive privacy protection practices. In 2013, the OECD revised its Privacy Principles to address the wide range of challenges that came about with the explosive growth of information technology. Among other changes, the new guidelines placed greater emphasis on the role of the data controller to establish appropriate privacy practices for their organizations. The OECD Privacy Principles are used throughout many international privacy and data protection laws and are also used in many privacy programs and practices. The eight privacy principles are as follows:

The OECD Privacy Guidelines can be found at www.oecd.org/internet/ieconomy/privacy-guidelines.htm. In developing the guidelines, the OECD recognized the need to balance commerce and other legitimate activities with privacy safeguards. Further, the OECD recognizes the tremendous change in the privacy landscape with the adoption of data breach laws, increased corporate accountability, and the development of regional or multilateral privacy frameworks. The Asia-Pacific Economic Cooperation (APEC) Privacy Framework establishes a set of common data privacy principles for the protection of personally identifiable information as it is transferred across borders. The framework leverages much from the OECD Privacy Guidelines but places greater emphasis on the role of electronic commerce and the importance of organizational accountability. In this framework, once an organization collects personal information, the organization remains accountable for the protection of that data regardless of the location of the data or whether the data was transferred to another party. The APEC Framework also introduces the concept of proportionality to data breach—that the penalties for inappropriate disclosure should be consistent with the demonstrable harm caused by the disclosure. To facilitate enforcement, the APEC Cross-border Privacy Enforcement Arrangement (CPEA) provides mechanisms for information sharing among APEC members and authorities outside APEC. It’s beyond the scope of this book to go into much depth about any of these particular frameworks, legal systems, or regulatory systems. Regardless, it’s important that as an SSCP you become aware of the expectations in law and practice, for the communities that your business serves, in regard to protecting the confidentiality of data you hold about individuals you deal with. Many information security professionals are too well aware of personally identifiable information (PII) and the needs in ethics and law to protect its privacy. If you’ve not worked in the financial services sector, you may not be aware of the much broader category of nonpublished personal information (NPI). The distinction between these two seems simple enough:

However, as identity and credential attacks have grown in sophistication, many businesses and government services providers have been forced to expand their use of NPI as part of their additional authentication challenges, when a person tries to initiate a session with them. Your bank, for example, might ask you to confirm or describe some recent transactions against one of your accounts, before they will let a telephone banking consultation session continue. Businesses may issue similar authentication challenges to someone calling in, claiming to be an account representative from a supplier or customer organization. Three important points about NPI and PII need to be kept in mind:

It may be safest to treat all data you have about any person you deal with as if it is NPI, unless you can show where it has been made public. You may then need to identify subsets of that NPI, such as health care, education, or PII, as defined by specific laws and regulations, that may need additional protections or may be covered by audit requirements. Part of the concept of privacy is connected to the reasonable expectation that other people can see and hear what you are doing, where you are (or are going), and who might be with you. It’s easy to see this in examples: Walking along a sidewalk, you have every reason to think that other people can see you, whether they are out on the sidewalk as well, looking out the windows of their homes, offices, or passing vehicles. The converse is that when out on that public sidewalk, out in the open spaces of the town or city, you have no reason to believe that you are not visible to others. This helps differentiate between public places and private places.

Your home or residence is perhaps the prime example of what we assume is a private place. Typically, business locations can be considered private in that the owners or managing directors of the business set policies as to whom they will allow into their place of business. Customers might be allowed into the sales floor of a retail establishment but not into the warehouse or service areas, for example. In a business location, however, it is the business owner (or its managing directors) who have the most compelling reasonable expectation of privacy, in law and in practice. Employees, clients, or visitors cannot expect that what they say or do in that business location (or on its IT systems) is private to them and not “in plain sight” to the business. As an employee, you can reasonably expect that your pockets or lunch bag are private to you, but the emails you write or the phone calls you make while on company premises are not necessarily private to you. This is not clear-cut in law or practice, however; courts and legislatures are still working to clarify this. The pervasive use of the Internet and the web and the convergence of personal information technologies, communications and entertainment, and computing have blurred these lines. Your smart watch or personal fitness tracker uplinks your location and exercise information to a website, and you’ve set the parameters of that tracker and your web account to share with other users, even ones you don’t know personally. Are you doing your workouts today in a public or private place? Is the data your smart watch collects and uploads public or private data? “Facebook-friendly” is a phrase we increasingly see in corporate policies and codes of conduct these days. The surfing of one’s social media posts, and even one’s browsing histories, has become a standard and important element of prescreening procedures for job placement, admission to schools or training programs, or acceptance into government or military service. Such private postings on the public web are also becoming routine elements in employment termination actions. The boundary between “public” and “private” keeps moving, and it moves because of the ways we think about the information, not because of the information technologies themselves. GDPR and other data protection regulations require business leaders, directors, and owners to make clear to customers and employees what data they collect and what they do with it, which in turn implements the separation of that data into public and private data. As an SSCP, you’ll probably not make specific determinations as to whether certain kinds of data are public or private; but you should be familiar with your organization’s privacy policies and its procedures for carrying out its data protection responsibilities. Many of the information security measures you will help implement, operate, and maintain are vital to keeping the dividing line between public and private data clear and bright. It is interesting to see how the Global War on Terror has transformed attitudes about privacy throughout the Western world. Prior to the 1990s, most Westerners felt quite strongly about their individual rights to privacy; they looked at government surveillance as intrusive and relied upon legal protections to keep it in check. “That’s none of your business” was often the response when a nosy neighbor or an overly zealous official tried to probe too far into what citizens considered as private matters. This agenda changed in 2001 and 2002, as national security communities in the United States and its NATO allies complained bitterly that legal constraints on intelligence gathering, information sharing, and search and seizure hampered their efforts to detect and prevent acts of terrorism. “What have you got to hide,” instead, became the common response by citizens when other citizens sought to protect the idea of privacy. It is important to realize several key facets of this new legal regime for the 21st century. Fundamentally, it uses the idea that international organized crime, including the threat of terrorism, is the fundamental threat to the citizens of law-abiding nations. These new legal systems require significant information sharing between nations, their national police and law enforcement agencies, and international agencies such as the OECD and Interpol, while also strengthening the ability of these agencies to shield or keep secret their demands for information. This sea change in international governance started with the Uniting and Strengthening America by Providing Appropriate Tools Required to Intercept and Obstruct Terrorism Act of 2001, known as the USA PATRIOT Act. This law created the use of National Security Letters (NSLs) as classified, covert ways to demand information from private businesses. The use of NSLs is overseen by the highly secret Foreign Intelligence Surveillance Court, which had its powers and authorities strengthened by this Act as well. Note that if your boss or a company officer is served with an NSL demanding certain information, they cannot disclose or divulge to anyone the fact that they have been served with such a due process demand. International laws regarding disclosure and reporting of financial information, such as bank transactions, invoices and receipts for goods, and property purchases, are also coming under increasing scrutiny by governments. It’s not the purpose of this chapter to frame that debate or argue one way or another about it. It is, however, important that you as an information security specialist within your organization recognize that this debate is not resolved and that many people have strongly held views about it. Those views often clash with legal and regulatory requirements and constraints regarding monitoring of employee actions in the workplace, the use of company information or information systems by employees (or others), and the need to be responsive to digital discovery requests of any and every kind. Those views and those feelings may translate into actions taken by some end users and managers who are detrimental to the organization, harmful to others, illegal, unethical, or all of these to a degree. Such actions—or the failure to take or effectively perform actions that are required—can also compromise the overall information security posture of the organization and are an inherent risk to information security, as well as to the reputation of the organization internally and externally. Your best defense—and your best strategy for defending your company or your organization—is to do as much as you can to ensure the full measure of CIANA protections, including accountability, for all information and information systems within your areas of responsibilities. The fundamental design of the earliest internetworking protocols meant that, in many cases, the sender had no concrete proof that the recipient actually received what was sent. Contrast this with postal systems worldwide, which have long used the concept of registered mail to verify to the sender that the recipient or his agent signed for and received the piece of mail on a given date and time. Legal systems have relied for centuries on formally specified ways to serve process upon someone. Both of these mechanisms protect the sender’s or originator’s rights and the recipient’s rights: Both parties have a vested interest in not being surprised by claims by the other that something wasn’t sent, wasn’t done, or wasn’t received. This is the basis of the concept of nonrepudiation, which is the aspect of a system that prevents a party or user from denying that they took an action, sent a message, or received a message. Nonrepudiation does not say that the recipient understood what you sent or that they agreed with it, only that they received it. You can think of nonrepudiation as being similar to submitting your income tax return every year or the many other government-required filings that we all must make no matter where we live or do business. Sometimes, the only way we can keep ourselves from harm is by being able to prove that we sent it in on time and that the government received it on time. Email systems have been notorious for not providing reliable confirmation of delivery and receipt. Every email system has features built into it that allow senders and server administrators to control whether read receipts or delivery confirmations work reliably or correctly. Email threads can easily be edited to show almost anything in terms of sender and recipient information; attachments to emails can be modified as well. In short, off-the-shelf email systems do not provide anything that a court of law or an employment relations tribunal will accept as proof of what an email user claims it is. Business cannot function that way. The transition from postal delivery of paper to electronic delivery of transactions brought many of the same requirements for nonrepudiation into your web-enabled e-business systems. What e-business and e-commerce did not do a very good job of was bringing that same need for nonrepudiation to email. There are a number of commercial products that act as add-ons, extensions, or major enhancements to email systems that provide end-to-end, legally compliant, evidence-grade proof regarding the sending and receiving of email. A number of national postal systems around the world have started to package these systems as their own government-endorsed email version of registered postal mail. Many industry-facing vertical platforms embed these nonrepudiation features into the ways that they handle transaction processing, rendering reams of fax traffic, uncontrollable emails, or even postal mail largely obsolete. Systems with high degrees of nonrepudiation are in essence systems that are auditable and that are restricted to users who authenticate themselves prior to each use; they also tend to be systems with strong data integrity, privacy, or confidentiality protection built into them. Using these systems improves the organization’s bottom line, while enhancing its reputation for trustworthiness and reliability. This element of the classic CIANA set of information security characteristics brings together many threads from all the others regarding permission to act. None of the other attributes of information security can be implemented, managed, or controlled if the system cannot unambiguously identify the person or process that is trying to take an action involving that system and any or all of its elements and then limit or control their actions to an established, restricted set. Note that the word authentication is used in two different ways.

1984 was a watershed year in public law in this regard, for in the Computer Fraud and Abuse Act (CFAA), the U.S. Congress established that entering into the intangible property that is the virtual world inside a computer system, network, or its storage subsystems was an action comparable to entering into a building or onto a piece of land. Entry onto (or into) real, tangible property without permission or authority is criminal trespass. CFAA extended that same concept to unauthorized entry into the virtual worlds of our information systems. Since then, many changes to public law in the United States and a number of other countries have expanded the list of acts considered as crimes, possibly expanding it too much in the eyes of many civil liberties watchdogs. It’s important to recognize that almost every computer crime possible has within it a violation of permissions to act or an attempt to fraudulently misrepresent the identity of a person, process, or other information system’s element, asset, or component in order to circumvent such restrictions on permitted actions. These authenticity violations are, if you would, the fundamental dishonesty, the lie behind the violation of trust that is at the heart of the crime. Safety is that aspect of a system’s behavior that prevents failures, incorrect operational use, or incorrect data from causing damage to the system itself, to other systems it interfaces with, or to anyone else’s systems or information associated with it. More importantly, safety considerations in design and operation must ensure that humans are not subject to a risk of injury or death as a result of such system malfunctions or misuse. Note that systems designed with an explicit and inherent purpose to cause such damage, injury, or death do not violate safety considerations when they do so—so long as they only damage, destroy, injure, or kill their intended targets. The two most recent crashes of Boeing 737 Max aircraft, as of this writing, are a painful case in point. Early investigation seems to implicate the autopilot software as root or proximate cause of these crashes; and while the investigation is still ongoing, the aviation marketplace, insurance and reinsurance providers, and the flying public have already expressed their concerns in ways that have directly damaged Boeing’s bottom line as well as its reputation. Consider, too that Boeing, Airbus, and their engine and systems manufacturers have built their current generation of aircraft control systems to allow for onboard firmware and software updates via USB thumb drives while aircraft are on the airport ramp. This multiplies the risk of possibly unsafe software, or software operating with unsafe control parameters, with the risk of an inadequately tested update being applied incorrectly. The upshot of all of this is that safety considerations are becoming much more important and influential to the overall systems engineering thought process, as the work of Dr. Nancy Leveson and others at MIT are demonstrating. Her work on the Systems Theoretic Accident Model and Process (STAMP) began in 2004 and has seen significant application in software systems reliability, resilience, and accident prevention and reduction across many industries ever since; it’s also being applied to information security in many powerful and exciting ways. Applying a safety mind-set requires you to ensure that every task involved in mitigating information security risks is done correctly—that you can validate the inputs to that task (be they hardware, software, data, or procedures) or have high confidence that they come to your hands via a trustworthy supply chain. You must further be able to validate that you’ve performed that task, installed that control or countermeasure, or taken other actions correctly and completely; you must then validate that they’re working correctly and producing the required results effectively. Safety, like security, is an end-to-end responsibility. It’s no wonder that some cultures and languages combine both in a single word. For example, in Spanish seguridad unifies both safety and security as one integrated concept, need, and mind-set. Several control principles must be taken into account when developing, implementing, and monitoring people-focused information security risk mitigation controls. Of these, the three most important are need to know, separation of duties, and least privilege. These basic principles are applied in different ways and with different control mechanisms. However, a solid understanding of the principles is essential to evaluating a control’s effectiveness and applicability to a particular circumstance. As we saw in Chapter 1, “Access Controls,” our security classification system for information is what ties all of our information security and decision risk mitigation efforts together. It’s what separates the highest-leverage proprietary information from the routine, nearly-public-knowledge facts and figures. Information classification schemes drive three major characteristics of your information security operations and administration.

Taken together these form a powerful set of functional requirements for the design not just of our information security processes but of our business processes as well! But first, we need to translate these into two control or cybernetic principles. Least privilege as a design and operational principle requires that any given system element (people or software-based) has the minimum level of authority and decision-making capability that the specifically assigned task requires, and no more. This means that designers must strictly limit the access to and control over information, by any subject involved in a process or task, to that minimum set of information that is required for that task and no more. Simply put, least privilege implements and enforces need to know. A few examples illustrate this principle in action.

Each time you encounter a situation in which a person or systems element is doing something in unexpected ways—or where you would not expect that person or element to be present at all—is a red flag. It suggests that a different role, with the right set of privileges, may be a necessary part of a more secure solution. Least privilege should drive the design of business logic and business processes, shaping and guiding the assignment (and separation) of duties to individual systems and people who accomplish their allocated portions of those overall processes. Driven by the Business Impact Analysis (BIA), the organization should start with those processes that are of highest potential impact to the organization. These processes are usually the ones associated with achieving the highest-priority goals and objectives, plus any others that are fundamental to the basic survival of the organization and its ability to carry on day-to-day business activities. Separation of duties is intrinsically tied to accountability. By breaking important business processes into separate sequences of tasks and assigning these separate sequences to different people, applications, servers or devices, you effectively isolate these information workers with accountability boundaries. It also prevents any one person (or application) from having end-to-end responsibility and control over a sequence of high-risk or highly vulnerable tasks or processes. This is easiest to see in a small, cash-intensive business such as a catering truck or small restaurant.

This system protects each worker from errors (deliberate or accidental) made by other workers in this cash flow system. It isolates the error-prone (and tempting!) cash stream from other business transactions, such as accounts payable for inventory, utilities, or payroll. With the bank’s knowledge of its customer, it may also offer reasonable assurances that this business is not involved in money laundering activities (although this is never foolproof with cash businesses). Thus, separation of duties separates hazards or risks from each other; to the best degree possible it precludes any one person, application, system, or server (and thereby the designers, builders, and operators of those systems) from having both responsibility and control over too many hazardous steps in sequence, let alone end to end. Other examples demonstrate how separation of duties can look in practice.

While implementing separation of duties, keep a few additional considerations in mind. First, separation of duties must be well-documented in policies and procedures. To complement this, mechanisms for enforcing the separation must be implemented to match the policies and procedures, including access-level authorizations for each task and role. Smaller organizations may have difficulty implementing segregation of duties, but the concept should be applied to the extent possible and logistically practical. Finally, remember that in cases where it is difficult to split the performance of a task, consider compensating controls such as audit trails, monitoring, and management supervision. Another, similar form of process security is dual control. In dual control, two personnel are required to coordinate the same action from separate workstations (which may be a few feet or miles apart) to accomplish a specific task. This is not something reserved to the military’s release of nuclear weapons; it is inherent in most cash-handling situations and is a vital part of decisions made by risk management boards, loan approval boards, and many other high-risk or safety-critical situations. A related concept is two-person integrity, where no single person is allowed access or control over a location or asset at any time. Entry into restricted areas, such as data centers or network and communications facilities, might be best secured by dual control or two-person integrity processes, requiring an on-shift supervisor to confirm an entry request within a fraction of a minute. Separation of duties is often seen as something that introduces inefficiencies, especially in small organizations or within what seem to be simple, well-bounded sequences of processes or tasks. As with any safeguard, senior leadership has to set the risk appetite or threshold level and then choose how to apply that to specific risks. These types of risk mitigation controls are often put in place to administratively enforce a separation of duties design. By not having one person have such end-to-end responsibility or opportunity, you greatly reduce the exposure to fraud or abuse. In sensitive or hazardous material handling and manufacturing or in banking and casino operations, the work areas where such processes are conducted are often called no lone zones, because nobody is allowed to be working in them by themselves. In information systems, auditing, and accounting operations, this is sometimes referred to as a four-eyes approach for the same reason. Signing and countersigning steps are often part of these processes; these signatures can be pen and ink or electronic, depending upon the overall security needs. The needs for individual process security should dictate whether steps need both people (or all persons) to perform their tasks in the presence of the others (that is, in a no lone zone) or if they can be done in sequence (as in a four-eyes sign/countersign process). Safety considerations also should dictate the use of no lone zones in work process design and layout. Commercial air transport regulations have long required two pilots to be on the flight deck during flight operations; hospital operating theaters and emergency rooms have teams of care providers working with patients, as much for quality of care as for reliability of care. Peer review, structured walkthroughs, and other processes are all a way to bring multiple eyes and minds to the process of producing high-quality, highly reliable software. Note that separation of duties does not presume intent: It will protect the innocent from honest mistakes while increasing the likelihood that those with malicious intent will find it harder, if not impossible, to carry out their nefarious plans (whatever they may be). It limits the exposure to loss or damage that the organization would otherwise face if any component in a vital, sensitive process fails to function properly. Separation of duties, for example, is an important control process to apply to the various event logs, alarm files, and telemetry information produced by all of your network and systems elements. Of course, the total costs of implementing, operating, and maintaining such controls must be balanced against the potential impacts or losses associated with those risks. Implementing separation of duties can be difficult in small organizations simply because there are not enough people to perform the separate functions. Nevertheless, separation of duties remains an effective internal control to minimize the likelihood of fraud or malfeasance and reduce the potential for damage or loss due to accident or other nonhuman causes. In many business settings, the dual concepts of separation of duties and least privilege are seen as people-centric ideas—after all, far too much painful experience has shown that by placing far too much trust and power in one person’s hands, temptation, coercion, or frustration can lead to great harm to the business. Industrial process control, transportation, and the military, by contrast, have long known that any decision-making component of a workflow or process can and will fail, leading also to a potential for great harm. Separation of duties means that autopilot software should not (one hopes!) control the main electrical power systems and buses of the aircraft; nor should the bid-ask real-time pricing systems of an electric utility company direct the CPUs, people, and actuators that run its nuclear reactors or turbine-powered generators. Air gaps between critical sets of duties—gaps into which systems designers insert different people who have assessment and decision authority—become a critical element in designing safe and resilient systems. As you should expect, these key control principles of need to know, separation of duties, and least privilege also drive the ways in which you should configure and manage identity management and access control systems. Chapter 1 explored this relationship in some depth; let’s recap its key recommendations in the following list: