CHAPTER 3

Risk Identification, Monitoring, and Analysis

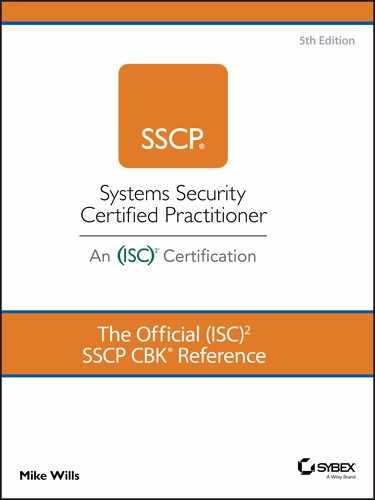

Information security is about controlling and managing risk to information, information systems, and the people, processes, and technologies that support them and make use of them. Most information security risks involve events that can disrupt the smooth functioning of the business processes used by a company, an organization, or even an individual person. Since all systems are imperfect, and all organizations never have enough time or resources to fix every problem, risk management processes are used to identify risks, select and prioritize those that must be dealt with soonest, and implement risk mitigations that control or limit the possibility of the risk event’s occurrence and the damage it can inflict when (not if) it occurs. Risk management also requires ongoing monitoring and assessment of both the real-world context of risks that the organization operates in, and the success or failure of the risk mitigations that management has chosen to implement. Information risk management operationalizes the information security needs of the organization, expressed in terms of the need to preserve the confidentiality, integrity, availability, nonrepudiation, authentication, privacy, and safety aspects of the information and the organizational decisions that make use of it. It does this by using a variety of assessment strategies and processes to relate risks to vulnerabilities, and vulnerabilities to effective control or mitigation approaches. At the heart of information security risk management is the prompt detection, characterization, and response to a potential security event. Setting the right alarm thresholds is as important as identifying the people who will receive those alarms and respond to them, as well as equipping those people with the tools and resources they’ll need to contain the incident and restore the organization’s IT systems back to working order. Those resources include timely senior leadership and management decisions to support the response, escalating it if need be. It’s often been said that the attackers have to get lucky only once, whereas the defenders have to be lucky every moment of every day. When it comes to advanced persistent threats (APTs), which pose potentially the most damaging attacks to our information systems, another, more operationally useful rule applies. Recall that APTs are threat actors who spend months, maybe even years, in their efforts to exploit target systems and organizations in the pursuit of the threat actors’ goals and objectives. APTs quite often use low and slow attack patterns that avoid detection by many network and systems security sensor technologies; once they gain access, they often live off the land and use the built-in capabilities of the target system’s OS and applications to achieve their purposes. APTs often subcontract out much of their work to other players to do for them, both as a layer of protection for their own identity and as a way of gaining additional attack capabilities. This means that APTs plan and conduct their attacks using what’s called a robust kill chain, the sequence of steps they go through as they discover, reconnoiter, characterize, infiltrate, gain control, and further identify resources to attack within the system; make their “target kill” by copying, exfiltrating, or destroying the data and systems of their choice; and then cover their tracks and leave. The bad news is that it’s more than likely that your organization or business is already under the baleful gaze of more than just one APT bad actor; you might also be subject to open source intelligence gathering, active reconnaissance probes, or social engineering attempts being conducted by some of the agents and subtier players in the threat space that APTS often use (much like subcontractors) to carry out steps in an APT’s kill chain. Crowded, busy marketplaces provide many targets for APTs; so, naturally, many such threat actors are probably considering some of the same targets as their next opportunities to strike. For you and your company, this means that on any given day, you’re probably in the crosshairs of multiple APTs and their teammates in various stages of their own unique kill chains, each pursuing their own, probably different, objectives. Taken together, there may be thousands, if not hundreds of thousands, of APTs out there in the wild, each seeking its own dominance, power, and gain. The millions of information systems owned and operated by businesses and organizations worldwide are their hunting grounds. Yours included. The good news is that there are many field-proven information risk management and mitigation strategies and tactics that you can use to help your company or organization adapt and survive in the face of such hostile action and continue to flourish despite the worst the APTs can do to you. These frameworks and the specific risk mitigation controls should be tailored to the confidentiality, integrity, availability, nonrepudiation, and authentication (CIANA) needs of your specific organization. With them, you can first deter, prevent, and avoid attacks. Then you can detect the ones that get past that first set of barriers and characterize them in terms of real-time risks to your systems. You then take steps to contain the damage they’re capable of causing and help the organization recover from the attack and get back up on its feet. You probably will not do battle with an APT directly; you and your team won’t have the luxury (if we can call it that!) of trying to design to defend against a particular APT and thwart their attempts to seek their objectives at your expense. Instead, you’ll wage your defensive campaign one skirmish at a time, never knowing who the ultimate attacker is or what their objectives are vis-à-vis your systems and your information. You’ll deflect or defeat one scouting party as you strengthen one perimeter; you’ll detect and block a probe from somewhere else that is attempting to gain entry into and persistent access to your systems. You’ll find where an illicit user ID has made itself part of your system, and you’ll contain it, quarantine it, and ultimately block its attempts to expand its presence inside your operations. As you continually work with your systems’ designers and maintainers, you’ll help them find ways to tighten down a barrier here or mitigate a vulnerability there. Step by step, you strengthen your information security posture—and, if you’re lucky, all without knowing that one or many APTs have had you in their sights. But in order to have such good luck, you’ve got to have a layered, integrated, and proactive information systems defense in place and operating; and your best approach for establishing such a security posture is to consciously choose which information and decision risks to manage, which ones to mitigate, and what to do when a risk starts to become an event. That’s what this chapter is all about. Since 2011, energy production and distribution systems in North America and Western Europe have been under attack from what can only be described as a large, sophisticated, advanced persistent threat actor team. Known as Dragonfly 2.0, this attack depended heavily on fraudulent IDs and misuse of legitimate IDs created in systems owned and operated by utility companies, engineering and machinery support contractors, and the fuels industries that provide the feedstocks for the nuclear, petroleum, coal, and gas-fired generation of electricity. The Dragonfly 2.0 team wove a complex web of attacks against multiple private and public organizations as they gathered information, obtained access, and created fake IDs as precursor steps to gaining more access and control. For example, reports issued by NIST and Symantec make mention of “hostile email campaigns” that attempted to lure legitimate email subscribers in these organizations to respond to fictitious holiday parties. Blackouts and brownouts in various energy distribution systems, such as those suffered in Ukraine in 2015 and 2016, have been traced to cyberattacks linked to Dragonfly 2.0 and its teams of attackers. Data losses to various companies and organizations in the energy sector are still being assessed. You can read Symantec’s report at www.symantec.com/blogs/threat-intelligence/dragonfly-energy-sector-cyber-attacks. Why should security practitioners put so much emphasis on APTs and their use of the kill chain? In virtually every major data breach in the past decade, the attack pattern was low and slow: sequences of small-scale efforts designed not to cause alarm, each of which gathered information or enabled the attacker to take control of a target system. More low and slow attacks launched from that first target against other target systems. This springboard or stepping-stone attack pattern is both a deliberate strategy to further obscure the ultimate source of the attack and an opportunistic effort to find more exploitable, useful information assets along the way. They continually conduct reconnaissance efforts and continually grow their sense of the exploitable nature of their chosen targets. Then they gain access, typically creating false identities in the target systems. Finally, with all command, control, and hacking capabilities in place, the attack begins in earnest to exfiltrate sensitive, private, or otherwise valuable data out of the target’s systems. This same pattern of events shows itself, with many variations, in ransom attacks, in sabotage, and in disruption of business systems. It’s a part of attacks seen in late 2018 and early 2019 against newspaper publishers and nickel ore refining and processing industries. In any of these attacks, detecting, disrupting, or blocking any step along the attacker’s kill chain might very well have been enough to derail that kill chain and motivate the attacker to move on to another, less well-protected and potentially more lucrative target. Preparation and planning are your keys to survival; without them, you cannot operationally defeat your attacker’s kill chain. Previous chapters examined the day-to-day operational details of information security operations and administration; now, let’s step back and see how to organize, plan, and implement risk management and mitigation programs that deliver what your people need day-to-day to keep their information and information systems safe, secure, reliable, and resilient. We’ll also look in some depth at what information to gather and analyze so as to inform management and leadership when critical, urgent decisions about information security must be made, especially if indicators of an incident must be escalated for immediate, business-saving actions to take place. Many businesses, nonprofits, and even government agencies use the concept of the value chain, which models how they create value in the products or services they provide to their customers (whether internal or external). The value chain brings together the sequence of major activities, the infrastructures that support them, and the key resources that those activities need to transform each input into an output. The value chain focuses the attention of process designers, managers, and workers alike on the outputs and the outcomes that result from each activity. Critical to thinking about the value chain is that each major step provides the organization with a chance to improve the end-to-end experience by reducing costs, reducing waste, scrap, or rework, and by improving the quality of each output and outcome along the way. Value chains extend well beyond the walls of the organization itself, as they take in the efforts of suppliers, vendors, partners, and even the actions and intentions of regulators and other government activities. Even when a company’s value chain is extended beyond its own boundaries, the company owns that value chain—in the sense that they are completely responsible for the outcomes of the decisions they make and the actions they take. The company has end-to-end due care and due diligence responsibility for each value chain they operate. They have to appreciate that every step along each of their value chains is an opportunity for something to go wrong, as much as it is an opportunity to achieve greatness. A key input could be delayed or fail to meet the required specifications for quality or quantity. Skilled labor might not be available when we need it; critical information might be missing, incomplete, or inaccurate. Ten years ago, you didn’t hear too many information systems security practitioners talking about the kill chain. In 2014, the U.S. Congress was briefed on the kill chain involved in the Target data breach of 2013, which you can (and should!) read about at https://www.commerce.senate.gov/public/_cache/files/24d3c229-4f2f-405d-b8db-a3a67f183883/23E30AA955B5C00FE57CFD709621592C.2014-0325-target-kill-chain-analysis.pdf. Today, it’s almost a survival necessity that you know about kill chains, how they function, and what you can do about them. Whether we’re talking about military action or cybersecurity, the “kill chain” as a plan of action is in effect choosing to be the sum total of all of those things that can go wrong in the target’s value chain systems. Using a kill chain does not defeat the target with overwhelming force. Instead, the attacker out-thinks the target by meticulously studying the target as a system of systems and by spotting its inherent vulnerabilities and its critical dependencies on inputs or intermediate results; then it finds the shortest, simplest, lowest-cost pathway to triggering those vulnerabilities and letting them help defeat the target. Much like any military planner, an APT plans their attack with an eye to defeating, degrading, distracting, or denying the effectiveness of the target’s intrusion deterrence, prevention, detection, and containment systems. They’ve further got to plan to apply those same “four Ds” to get around, over, or through any other security features, procedures, and policies that the target is counting on as part of its defenses. The cybersecurity defensive value chain must effectively combine physical, logical, and administrative security and vulnerability mitigation controls; in similar fashion, the APT actor considers which of these controls must be sidestepped, misdirected, spoofed, or ignored, as they pursue their plans to gain entry, attain the command and control of portions of the target’s systems that will serve their needs, and carry out their attack. (In that regard, it might be said that APT actors and security professionals are both following a similar risk management framework.) Figure 3.1 shows a generalized model of an APT’s kill chain, which is derived in part from the previously mentioned Senate committee report. FIGURE 3.1 Kill chain conceptual model One important distinction should be obvious: as a defender, you help own, operate, and protect your value chains, while all of those would-be attackers own their kill chains. With that as a starting point, you can see that an information systems kill chain is the total set of actions, plans, tasks, and resources used by an advanced persistent threat to do the following:

How do APTs apply this kill chain in practice? In broad general terms, APT actors may take many actions as part of their kill chains, as they:

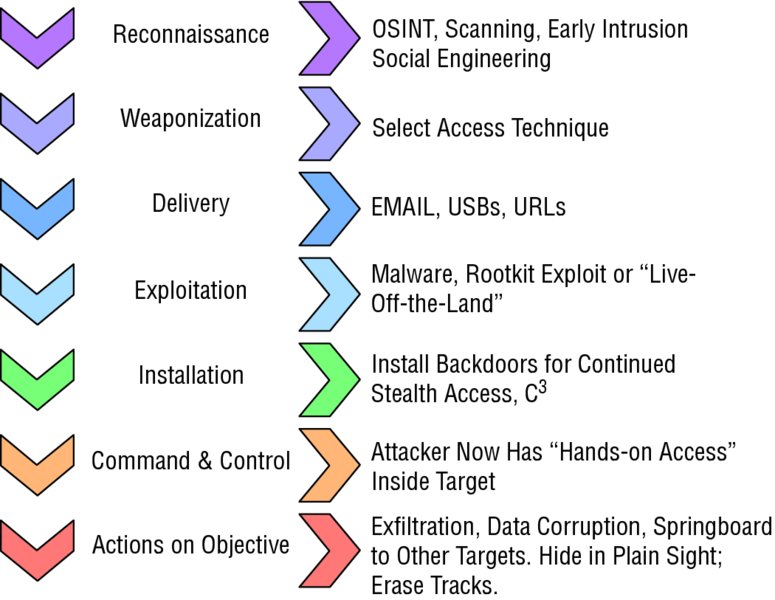

The more complex, pernicious APTs will use multiple target systems as proxies in their kill chains, using one target’s systems to become a platform from which they can run reconnaissance and exploitation against other targets. In the Target breach, the attackers entered Target’s payment processing systems utilizing a maintenance back door left in place by Target’s heating, ventilation, and air conditioning (HVAC) contractors, as shown in Figure 3.2, which is from the previously mentioned Senate report. Defeating an APT’s kill chain requires you to think about breaking each step in the process, in as many ways as you can—and in ways that you can continually monitor, detect, and recognize as being part of an attack in progress. (Target’s information security team, by contrast, failed to heed and understand the alarms that their own network security systems were generating; and Target’s mid-level managers also failed to escalate these alarms to senior management, thus further delaying their ability to respond.) FIGURE 3.2 Target 2013 data breach kill chain APTs can be almost any kind of organized effort to achieve some set of objectives by means of extracting value from your information systems. That value might come from the information they can access, exfiltrate, and sell or trade to other threat actors, or it might come from disrupting your business processes or the work of key people on your team. APTs have been seen as parts of campaigns waged by organized crime, terrorist organizations, political and social activist campaigners, national governments, and even private businesses. The APT threat actors, or the people whom they work with or for, have motives that range from purely mercenary to ideological, from seeking power to seeking revenge. APT threat actors and the campaigns that they attempt to run may be of almost any size, scale, and complexity. And they’re quite willing to use any system, no matter how small, personal or business, if it can be a stepping-stone to completing a step in their kill chain. Including yours. A typical business information system, such as a corporate data center and the networks that make it available to authorized users, might suffer millions of hits each day from unknown and unauthorized Internet addresses. Some of these are merely events—something routine that has happened, such as an innocent ping or ICMP packet attempting to make contact. Others might be part of information security incidents. You obviously cannot spend human security analyst time on each of those events; you’ve got to filter it down to perhaps a few dozen or more each day that might be something worthy of further analysis and investigation. This brings us to define an event of interest as something that happens that might be an indicator (or a precursor) of one or more events that might impact your information’s systems security. An event of interest may or may not be a warning of a computer security incident in the making, or even the first stages of such an incident. But what is a computer security incident? Several definitions by NIST, ITIL, and the IETF1 suggest that computer security incidents are events involving a target information system in ways that:

The unplanned shutdown of your on-premises mail server, as an example, might have been caused by a thunderstorm-induced electrical power transient or by an accidental unplugging of its power supply and conditioning equipment. Your vulnerability assessment might have discovered these vulnerabilities and made recommendations as to how to reduce their potential for disruption. But if neither weather nor hardware-level accident caused the shutdown, you have to determine whether it was a software or systems design problem that caused the crash or a vulnerability that was exploited by a person or persons unknown. Another challenge that is becoming more acute is to differentiate phishing attacks from innocent requests for information. An individual caller to your main business phone number, seeking contact information in your IT team, might be an honest and innocent inquiry (perhaps from an SSCP looking for a job!). However, if a number of such innocent inquiries across many days have attempted to map out your entire organization’s structure, complete with individual names, phone numbers, and email addresses, it’s quite likely that your organization is the object of some hostile party’s reconnaissance efforts. It’s at this point that you realize that you need some information systems risk management. Risk is about a possible occurrence of an event that leads to loss, harm, or disruption. Individuals and organizations face risk and are confronted by its possibilities of impact, in four basic ways, as Figure 3.2 illustrates. Three observations are important here—so important that they are worth considering as rules in and of themselves.

One of the difficulties we face as information systems security professionals is that simple terms, such as risk, have so many different “official” definitions, which seem to vary in degree more than they do in substance. For example:

NIST’s Computer Security Resource Center online glossary offers 29 different definitions of risk, many of which have significant overlap with each other, while pointing at various other NIST, ISO, IEC, and FIPS publications as their sources. It’s worthwhile to note the unique contributions of some of these definitions. Much like the debate over C-I-A versus CIANA, there is probably only one way to decide which definition of risk is right for you: what works best for your organization, its objectives, and its tolerance for disruption? As you might imagine, risk management has at least as many hotly debated definitions as does risk itself. For our purposes, let’s focus on the common denominator and define risk management as the set of decision processes used to identify and assess risks; make plans to treat, control, or mitigate risks; and exercise continuous due care and due diligence over the chosen risk treatment, control, and mitigation approaches. This definition attempts to embrace all risks facing modern organizations—not just IT infrastructure risks—and focuses on how you monitor, measure, or assess how good (or bad) a job you’re doing at dealing with the risks you’ve identified. As a definition, it goes well with our working definition of risk as the possibility of a disruptive event’s occurrence and the expected measure of the impacts that event could have upon you if the event in question actually takes place. For almost all organizations, the most senior leaders and managers are the ones who have full responsibility for all risk management and risk mitigation plans, programs, and activities that the organization carries out. These people have the ultimate due care and due diligence responsibility. In law and in practice, they can be held liable for damages and may even be found criminally negligent or in violation of the law, and face personal consequences for the mistakes in information security that happened on their watch. Clearly, those senior leaders (be they board members or C-suite office holders) need the technically sound, business-based insights, analysis, and advice from everyone in the organization. Organizations depend upon their chains of supervision, control, and management to provide a broad base of support to important decisions, such as those involving risk management. But it is these senior leaders who make the final decisions. Your organization will have its own culture and practices for delegating responsibility and authority, which may change over time. As an information security professional, you may have some of that delegated to you. Nonetheless, it’s not until you’re sitting in one of those “chief’s” offices—as the chief risk manager, chief information security officer, or chief information officer—that you have the responsibility to lead and direct risk management for the organization. Risk management consists of a number of overlapping and mutually reinforcing processes, which can and should be iteratively and continuously applied. We’ll look at each of these in greater detail throughout this chapter. It’s worth noting that because of the iterative, cross-fertilizing, and mutually supportive nature of every aspect of risk management, mitigation, monitoring, and assessment, there’s probably no one right best order to do these steps in. Risk management frameworks (see the “Risk Management Frameworks” section) do offer some great “clean-slate” ways to start your organization’s journey toward robust and effective management of its information and decision risks, and sometimes a clean start (or restart) of such planning and management activities makes sense. You’re just as likely to find that bits and pieces of your organization already have some individual risk management projects underway; if so, don’t sweat getting things done in the right order. Just go find these islands of risk management, get to know the people working on them, and help orchestrate their efforts into greater harmony and greater security. Fortunately, you and your organization are not alone in this. National governments, international agencies, industry associations, and academic and research institutes have developed considerable reference libraries regarding observed vulnerabilities, exploits against them, and defensive techniques. Many local or regional areas or specific marketplaces have a variety of communities of practitioners who share insight, advice, and current threat intelligence. Public-private partnership groups, such as InfraGard (https://infragard.org) and the Electronic Crimes Task Force (ECTF), provide “safe harbor” meeting venues for private industry, law enforcement, and national security to learn with each other. (In the United States, InfraGard is sponsored by the Federal Bureau of Investigation, and the U.S. Secret Service hosts the ECTF; multinational ECTF chapters, such as the European ECTF, operate in similar ways.) Let’s look in some detail at the various processes involved in risk management; then you’ll see how different risk management frameworks can give your organization an overall policy, process, and accountability structure to make risk manageable. In any risk management situation, risks need to be visible to analysts and managers in order to make them manageable. This means that as the process of risk and vulnerability assessment identifies a risk, characterizes it, and assesses it, all of this information about the risk is made available in useful, actionable ways. As risks are identified (or demonstrated to exist because a security incident or compromise occurs), the right levels of management need to be informed. Incident response procedures are put into action because of such risk reporting. Risk reporting mechanisms must provide an accountable, verifiable set of procedures and channels by which the right information about risks is provided to the right people, inside and outside of the organization, as a part of making management and mitigation decisions about it. Risk reporting to those outside of the organization is usually confined to trusted channels and is anonymized to a significant degree. Businesses have long shared information about workplace health and safety risks—and their associated risk reduction and hazard control practices—with their insurers and with industry or trade associations so that others in that group can learn from the shared experience, without disclosing to the world that any given company is exposed to a specific vulnerability. In the same vein, companies share information pertaining to known or suspected threats and threat actors. Businesses that operate in high-risk neighborhoods or regions, for example, share threat and risk insights with local law enforcement, with other government agencies, and of course with their insurance carriers. Such information sharing helps all parties take more informed actions to cope with potential or ongoing threat activities from organized crime, gang activities, or civil unrest. These threats could manifest themselves in physical or Internet-based attempts to intrude into business operations, to disrupt IT systems, or as concerted social engineering and propaganda campaigns. Let’s look at each of these processes in some detail. Every organization or business needs to be building a risk register, a central repository or knowledge bank of the risks that have been identified in their business and business process systems. This register should be a living document, constantly refreshed as the company moves from risk identification through mitigation to the “new normal” of operations after instituting risk controls or countermeasures. It should be routinely updated with each newly discovered risk or vulnerability and certainly as part of the lessons-learned process after an incident response. For each risk in your risk register, it can be valuable to keep track of information regarding the following:

Your organization’s risk register should be treated as a highly confidential, closely held, or proprietary set of information. In the wrong hands, it provides a ready-made targeting plan that an APT or other hacker can use to intrude into your systems, disrupt your operations, hold your data for ransom, or exfiltrate it and sell it on to others. Even your more unscrupulous business competitors (or a disgruntled employee) could use it to cripple or kill your business, or at least drive it out of their marketplaces. As you grow the risk register by linking vulnerabilities, root-cause analyses, CVE data, and risk mitigation plans together, your need to protect this information becomes even more acute. There are probably as many formats and structures for a risk register as there are organizations creating them. Numerous spreadsheet templates can be found on the Internet, some of which attempt to guide users in meeting the expectations of various national, state, or local government risk management frameworks, standards, or best practices. If your organization doesn’t have a risk register now, start creating one! You can always add more fields to each risk (more columns to the spreadsheet) as your understanding of your systems and their risks grows in both breadth and depth. It’s also a matter of experience and judgment to decide how deeply to decompose higher-level risks into finer and finer detail. One rule of thumb might be that if an entry in your risk register doesn’t tell you how to fix it, prevent it, or control it, maybe it’s in need of further analysis. The risk register is an example of making tacit knowledge explicit; it captures in tangible form the observations your team members and other co-workers make during the discovery of or confrontation with a risk. It can be a significant effort to gather this knowledge and transform it into an explicit, reusable form, whether before, during, or after an information security incident. Failing to do so leaves your organization held hostage to human memory. Sadly, any number of organizations fail to document these painful lessons learned in any practical, useful way, and as a result, they keep re-learning them with each new incident. Threat intelligence is both a set of information and the processes by which that information is obtained. Threat intelligence describes the nature of a particular category or instance of a threat actor and characterizes its potential capabilities, motives, and means while assessing the likelihood of action by the threat in the near term. Current threat intelligence, focused on your organization and the marketplaces it operates in, should play an important part in your efforts to keep the organization and its information systems safe and secure. Information security threat intelligence is gathered by national security and law enforcement agencies, by cybersecurity and information security researchers, and by countless practitioners around the world. In most cases, the data that is gathered is reported and shared in a variety of databases, websites, journals, blogs, conferences, and symposia. (Obviously, data gathered during an investigation that may lead to criminal prosecution or national security actions is kept secret for as long as necessary.) As the nature of advanced persistent threat actors continues to evolve, much of the intelligence data we have available to us comes from efforts to explore sanctuary areas, such as the Dark Web, in which these actors can share information, find resources, contract with others to perform services, and profit from their activities. These websites and servers are in areas of the IP address space not indexed by search engines and usually available on an invitation-only basis, depending upon referrals from others already known in these spaces. For most of us cyber-defenders, it’s too great a personal and professional risk to enter into these areas and seek intelligence information; it usually requires purchasing contraband or agreeing to provide illegal services to gain entry. However, many law enforcement, national security, and researchers recognized by such authorities do surf these dark pages. They share what they can in a variety of channels, such as InfraGard and ECTF meetings; it gets digested and made available to the rest of us in various blogs, postings, symposia, and conference workshops. Another great resource is the Computer Society of the Institute of Electrical and Electronics Engineers, which sponsors many activities, such as their Center for Secure Design. See https://cybersecurity.ieee.org/center-for-secure-design/ for ideas and information that might help your business or organization. Many local universities and community colleges work hand in hand with government and industry to achieve excellence in cybersecurity education and training for people of all ages, backgrounds, and professions. Threat intelligence regarding your local community (physically local or virtually/Internet local) is often available in these or similar communities of practice, focused or drawing upon companies and like-minded groups working in those areas. Be advised, too, that a growing number of social activist groups have been adding elements of hacking and related means to their bags of disruptive tactics. You might not be able to imagine how their cause might be furthered by penetrating into the systems you defend; others in your local threat and vulnerability intelligence sharing community of practice, however, might offer you some tangible, actionable insights. Most newly discovered vulnerabilities in operating systems, firmware, applications, or networking and communications systems are quickly but confidentially reported to the vendors or manufacturers of such systems; they are reported to various national and international vulnerabilities and exposures database systems after the vendors or manufacturers have had an opportunity to resolve them or patch around them. Systems such as Mitre’s common vulnerabilities and exposures (CVE) system or NIST’s National Vulnerability Database are valuable, publicly available resources that you can draw upon as you assess the vulnerabilities in your organization’s systems and processes. Many of these make use of the Common Vulnerability Scoring System (CVSS), which is an open industry standard for assessing a wide variety of vulnerabilities in information and communications systems. CVSS makes use of the CIA triad of security needs by providing guidelines for making quantitative assessments of a particular vulnerability’s overall score. (Its data model does not directly reflect nonrepudiation, authentication, privacy, or safety.) Scores run from 0 to 10, with 10 being the most severe of the CVSS scores. Although the details are beyond the scope of this book, it’s good to be familiar with the approach CVSS uses—you may find it useful in planning and conducting your own vulnerability assessments. As you can see at https://nvd.nist.gov/vuln-metrics/cvss, CVSS consists of three areas of concern.

Each of these uses a simple scoring process—impact assessment, for example, defines four values from Low to High (and “not applicable or not defined”). Using CVSS is as simple as making these assessments and totaling up the values. Many nations conduct or sponsor similar efforts to collect and publish information about system vulnerabilities that are commonly found in commercial-off-the-shelf (COTS) IT systems and elements or that result from common design or system production weaknesses. In the United Kingdom, common vulnerabilities information and reporting are provided by the Government Communications Headquarters (GCHQ, which is roughly equivalent to the U.S. National Security Agency); find this and more at the National Cyber Security Centre at www.ncsc.gov.uk. Note that during reconnaissance, hostile threat actors use CVE and CVSS information to help them find, characterize, and then plan their attacks. (And there is growing evidence that the black hats exploit this public domain, open source treasure trove of information to a far greater extent than we white hats do.) The benefits we gain as a community of practice by sharing such information outweighs the risks that threat actors can be successful in exploiting it against our systems if we do the rest of our jobs with due care and due diligence. That said, do not add insult to injury by not looking at CVE or CVSS data as part of your own vulnerability efforts! Given the incredible number of new businesses and organizations that start operating each year, it’s probably no surprise that many of them open their doors, their Internet connection, and their web presence without first having done a thorough information security analysis and vulnerabilities assessment. In this case, the chances are good that their information architecture grows and changes almost daily. Starting with CVE data for the commercial off-the-shelf systems they’re using may be a prudent risk mitigation first step. One risk in this strategy (or, perhaps, more fairly a lack of a strategy) is that the organization can get complacent; it can grow to ignore the vulnerabilities it has already built into its business logic and processes, particularly via its locally grown glueware or people-centric processes. As another risk, that may encourage putting off any real data quality analysis efforts, which increase the likelihood of a self-inflicted garbage-in, garbage-out series of wasted efforts, lost work, and lost opportunities. It’s also worth reminding the owner-operators of a startup business that CVE data cannot protect them against the zero-day exploit; making extra effort to institute more effective access control using multifactor authentication and a data classification guide for its people to use is worth doing early and reviewing often. If you’re part of a larger, more established organization that does not have a solid information security risk management and mitigation posture, starting with the CVE data and hardening what you can find is still a prudent thing to do—while you’re also gathering the data and the management and leadership support to a proper information security risk assessment. Risk management is a decision-making process that must fit within the culture and context of the organization. The context includes the realities of the marketplaces that the business operates in, as well as the legal, regulatory, and financial constraints and characteristics of those marketplaces. Context also includes the perceptions that senior leaders and decision-makers in the organization may hold about those realities; as in all things human, perception is often more powerful and more real than on-the-ground reality. The culture of the organization includes its formal and informal decision-making styles, lines of communication, and lines of power and influence. Culture is often determined by the personalities and preferences of the organization’s founders or its current key stakeholders. While much of the context is written down in tangible form, that is not true of the cultural setting for risk management. Both culture and context determine the risk tolerance or risk appetite of the organization, which attempts to express or sum up the degree of willingness of the organization to maintain business operations in the face of certain types of risks. One organization might have near-zero tolerance for politically or ethically corrupt situations or for operating in jurisdictions where worker health and safety protections are nonexistent or ineffective; another, competing organization, might believe that the business gains are worth the risk to their reputation or that by being a part of such a marketplace they can exert effort to improve these conditions. Perhaps a simpler example of risk tolerance is seen in many small businesses whose stakeholders simply do not believe that their company or their information systems offer an attractive target to any serious hackers—much less to advanced persistent threats, organized crime, or terrorists. With such beliefs in place, management might have a low tolerance for business disruptions caused by power outages or unreliable systems but be totally accepting (or willing to completely ignore) the risks of intrusion, data breach, ransom attacks, or of their own systems being hijacked and used as stepping-stones in attacks on other target systems. In such circumstances, you may have to translate those loftier-sounding, international security–related threat cases into more local, tangible terms—such as the threat of a disgruntled employee or dissatisfied customer—to make a cost-effective argument to invest in additional information security measures. As the on-site information security specialist, you may often have the opportunity to help make the business case for an increased investment in information security systems, risk controls, training, or other process improvements. This can sometimes be an uphill battle. In many businesses, there’s an old-fashioned idea about viewing departments, processes, and activities as either cost centers or profit centers—either they add value to the company’s bottom line, via its products and services, or they add costs. As an example, consider insurance policies (you have them on your car, perhaps your home or furnishings, and maybe even upon your life), which cost you a pre-established premium each year while paying off in case an insured risk event occurs. Information security investments are often viewed as an insurance-like cost activity—they don’t return any value each day or each month, right? Let’s challenge that assertion. Information security investments, I assert, add value to the company (or the nonprofit organization) in many ways, no matter what sector your business operates in, its size, or its maturity as an organization. They bring a net positive return on their investment, when you consider how they enhance revenues, strengthen reputation, and avoid, contain, or eliminate losses. First, let’s consider how good information security hygiene and practice adds value during routine, ongoing business operations.

That list (which is not complete) shows just some of the positive contributions that effective information security programs and systems can make, as they enhance ongoing revenues and reduce or avoid ongoing costs. Information security adds value. Over time it pays its own way. If your organization cannot see it as a profit center, it’s at least a cost containment center. Next, think about the unthinkable: What happens if a major information security incident occurs? What are the worst-case outcomes that can happen if your customers’ data is stolen and sold on the Dark Web or your proprietary business process and intellectual property information is leaked? What happens if ransom attacks freeze your ongoing manufacturing, materials management, sales, and service processes, and your company is dead in the water until you pay up?

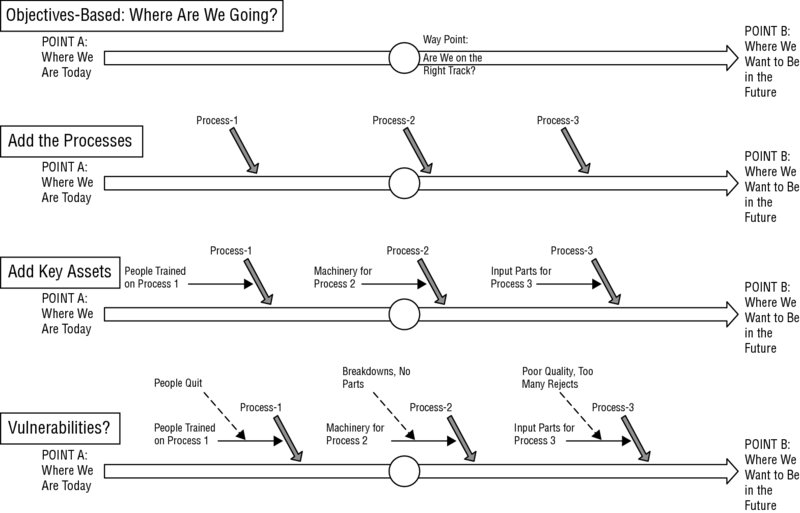

In short, a major information security incident can completely reverse the cost savings and value enhancements shown in that first list. Security professionals often have problems persuading mid-level and senior management to increase spending on information security—and this uphill battle is halfway lost by calling it spending, rather than cost avoidance. Nobody expects an information security department to show a profit; after all, it has no direct revenues of its own to offset its expenses. Even in nonprofit or government organizations, having your work unit or department characterized as a cost center frames your work as a necessary evil—it’s unavoidable, like paying taxes, but should be kept to an absolute minimum. You need to turn this business paradigm around by showing how your risk mitigation recommendations defer or avoid costs. For example, take all of your quantitative risk assessment information and project a five-year budget of losses incurred if all of those risks occur at their worst-case frequency of occurrence. That total impact is the cost that your mitigation efforts, your investments in safeguards, needs to whittle down to a tolerable size. Putting the organization’s information security needs into business terms is crucial to gaining the leadership’s understanding and support of them. Next, let’s consider what happens when the stakeholders or shareholders want to sell the business (perhaps to fund their retirement or to provide the cash to pursue other goals). Strong market value and the systems that protect and enhance it reduce the merger and acquisition risk, the risk that prospective buyers of the company will perceive it as having fragile, risky processes or systems or that its assets are in much worse shape than the owners claim they are. Due diligence is fundamentally about protecting and enhancing the value of the organization, which has a tangible or book value component and an intangible or good will component. Book value reflects the organization’s assets (including its intellectual property), its market share and position, its revenues, its costs, and its portfolio of risks and risk management processes. The intangible components of value reflect all of the many elements that go into reputation, which can range from credit scores, compliance audit reports, and perceptions in the marketplace. The maturity of its business logic and processes also plays an important part in this valuation of the organization (these may show up in both the tangible and intangible valuations). If you’re working in a small business, its creator and owner may already be working toward an exit strategy, their plan for how they reap their ultimate reward for many years of work, sweat, toil, and tears; or, they might be working just to make this month’s bottom line better and better and have no time or energy to worry about the future of the business. Either way, the owner’s duties of due care and due diligence—and your own—demand that you work to protect and enhance the value of the company, its business processes, and its business relationships, by means of the money, time, and effort you invest in sound information security practices. Many of the ways that we look at risk are conditioned by other business or personal choices that have already been made. Financially, we’re preconditioned to think about what we spend or invest as we purchase, lease, or create an asset, such as an assembly line, a data center, or a data warehouse full of customer transactions, product sales, marketing, and related information. Operationally, we think in terms of processes that we use to accomplish work-related tasks. Strategically, we think in terms of goals and objectives we want to achieve—but ideally we remember the reasons that we thought those goals and objectives were worth attaining, which means we think about the outcomes we achieve by accomplishing a goal. (When you’re up to your armpits in alligators, as the old saying goes, do you remember why draining the swamp was an important thing to do?) And if we are cybersecurity professionals or insurance providers or risk managers, we think about the dangers or risks that threaten to disrupt our plans, reduce the effectiveness (and value) of our assets, and derail our best-laid processes; in other words, we think about the threats to our business or the places our business is vulnerable to attack or accident. These four perspectives, based on assets, processes, outcomes, or vulnerabilities and threats, are all correct and useful; no one is the best to use in addressing your risk management and risk mitigation needs. Some textbooks, risk management frameworks, and organizations emphasize one over the others (and may even not realize that the others exist); don’t let this deceive you. As a risk management professional, you need to be comfortable perceiving the risks to your organization and its systems from all four perspectives. Note, too, that on the one hand, these are perspectives or points of view; on the other hand, they each can be the basis of estimate, the foundation or starting point of a chain of decisions and calculations about the value of an asset. All quantitative estimates of risk or value have to start somewhere, as a simple example will illustrate. Suppose your company decides to purchase a new engineering workstation computer for your product designer to use. The purchase price, including all accessories, shipping, installation, and so on, establishes its initial acquisition cost to the company; that is its basis in accounting terms. This number can be the starting point for spreading the cost of the asset over its economically useful life (that is, depreciating it) or as part of break-even calculations. Incidentally, accountants and risk managers pronounce bases, the plural of basis, as “bay-seez,” with both vowels long, rather than “bay-sez,” short “e,” as in “the bases are loaded” in baseball. All estimates are predictions about the various possible outcomes resulting from a set of choices. In the previous example, the purchase price of that computer established its cost basis; now, you’ve got to start making assumptions about the future choices that could affect its economically useful life. You’ll have to make assumptions about how intensely it is used throughout a typical day, across each week; you’ll estimate (that is, guess) how frequently power surges or other environmental changes might contribute to wear and tear and about how well (or how poorly) it will be maintained. Sometimes, modeling all of these possible decisions on a decision tree, with each branch weighted with your guesses of the likelihood (or probability) of choosing that path, provides a way of calculating a probability-weighted expected value. This, too, is just another prediction. But as long as you make similar predictions, using similar weighting factors and rules about how to choose probabilities among various outcomes, your overall estimates will be comparable with each other. That’s no guarantee that they are more correct, just that you’ve been fair and consistent in making these estimates. (Many organizations look to standardized cost modeling rules, which may vary industry by industry, as a way of applying one simple set of factors to a class of assets: All office computers might be assumed to have a three-year useful life, with their cost basis declining to zero at the end of that three years. That’s neither more right nor more wrong than any other set of assumptions to use when making such estimates; it’s just different.) One way to consider these four perspectives on risk is to build up an Ishikawa or fishbone diagram, which shows the journey from start to desired finish as supported by the assets, processes, resources, or systems needed for that journey, and how risks, threats, or problems can disrupt your use of those vital inputs or degrade their contributions to that journey. Figure 3.3 illustrates this concept. FIGURE 3.3 Four bases of risk, viewed together Let’s look in more detail at each of these perspectives or bases of risk. This perspective on risk focuses on the overarching objective—the purpose or intent behind the actions that organizations take. Outcomes are the goal-serving conditions that are created or maintained as a result of accomplishing a series of tasks to fulfill those objectives. Your income tax return, along with all of the payments that you might owe your government, is a set of outputs produced by you and your tax accountant; the act of filing it and paying on time achieves an outcome, a goal that is important or valuable to you in fulfilling an objective. This might at a minimum be because it will keep you from being investigated or prosecuted for tax evasion; it might also be because you believe in doing your civic duty, which includes paying your taxes. The alarms generated by your intrusion detection or prevention systems (IDSs or IPSs) are the outputs of these systems; your decision to escalate to management and activate the incident response procedures is an outcome that logically followed from that set of outputs (and many other preceding outputs that in part preconditioned and prepared you to interpret the IDS and IPS alarms and make your decision). Outcomes-based risk assessment looks at the ultimate value to be gained by the organization when it achieves that outcome. Investing in a new product line to be sold in a newly developed market has, as its outcome, an estimated financial return on investment (or RIO). Risks associated with the plans for developing that product, promoting and positioning it within that market, selling it, and providing after-sales customer service and support are all factors that inject uncertainty into that estimate—risks decrease the anticipated ROI, in effect multiplying it by a decreased probability of success. Organizations with strongly held senses of purpose, mission, or goals, or that have invested significant time, money, and effort in attempting to achieve new objectives, may prefer to use outcomes-based risk assessment. Organizations describe, model, and control the ways they get work done by defining their business logic as sequences of tasks, including conditional logic that enforces constraints, chooses options, or carries out other decision-making actions based on all of the factors specified by that business logic. Many organizations model this business logic in data models and data dictionaries and use systems such as workflow control and management or enterprise resource management (ERP) to implement and manage the use of that business logic. Individually and collectively these sequences or sets of business processes are what transform inputs—materials, information, labor, and energy—into outputs such as products, subassemblies, proposals, or software source code files. Business processes usually are instrumented to produce auxiliary outputs, such as key performance indicators, alarms, or other measurements, which users and managers can use to confirm correct operation of the process or to identify and resolve problems that arise. The absence of required inputs or the failure of a process step along a critical path within the overall process can cause the whole process to fail; this failure can cascade throughout the organization, and in the absence of appropriate backups, safeguards or fail-safes, the entire organization can grind to a halt. Organizations that focus on continually refining and improving their business processes, perhaps even by using a capabilities maturity modeling methodology, may emphasize the use of process-based risk assessment. The failure of critical processes to achieve overall levels of product quality, for example, might be the reason that customers are no longer staying with the organization. While this negative outcome (and the associated outcome of declining revenues and profits) is of course important, the process-oriented focus can more immediately associate cause with effect in decision-makers’ minds. Broadly speaking, an asset is anything that the organization (or the individual) has, owns, uses, or produces as part of its efforts to achieve some of its goals and objectives. Buildings, machinery, or money on deposit in a bank are examples of hard, tangible assets. The people in your organization (including you!) are also tangible assets (you can be counted, touched, moved, or even fired). The knowledge that is recorded in the business logic of your business processes, your reputation in the marketplace, the intellectual property that you own as patents or trade secrets, and every bit of information that you own or use are examples of soft, intangible assets. (Intellectual property is the idea, not the paper it is written on.) Assets are the tools you use to perform the steps in your business processes; without these tools, without assets, the best business logic by itself cannot accomplish anything. It needs a place to work, inputs to work on, and people, energy, and information to achieve its required outputs. Many textbooks on information risk management start with information assets—the information you gather, process and use, and the business logic or systems you use in doing that—and information technology assets—the computers, networks, servers, and cloud services in which that information moves, resides, and is used. The unstated assumption is that if the information asset or IT asset exists, it must therefore be important to the company or organization, and therefore, the possibility of loss or damage to that asset is a risk worth managing. This assumption may or may not still hold true. Assets also lose value over time, reflecting their decreasing usefulness, ongoing wear and tear, obsolescence, or increasing costs of maintenance and ownership. A good example of an obsolete IT asset would be a mainframe computer purchased by a university in the early 1970s for its campus computer center, perhaps at a cost of over a million dollars. By the 1990s, the growth in personal computing and network capabilities meant that students, faculty, and staff needed far more capabilities than that mainframe computer center could provide, and by 2015, it was probably far outpaced by the capabilities in a single smartphone connected to the World Wide Web and its cloud-based service provider systems. Similarly, an obsolete information asset might be the paper records of business transactions regarding products the company no longer sells, services, or supports. At some point, the law of diminishing returns says that it costs more to keep it and use it than the value you receive or generate in doing so. It’s also worth noting that many risk management frameworks seem to favor using information assets or IT assets as the basis of risk assessment; look carefully, and you may find that they actually suggest that this is just one important and useful way to manage information systems risk, but not the only one. Assets, after all, should be kept and protected because they are useful, not just because you’ve spent a lot of money to acquire and keep them. These are two sides of the same coin really. Threat actors (natural or human) are things that can cause damage, disruption, and lead to loss. Vulnerabilities are weaknesses within systems, processes, assets, and so forth, that are points of potential failure. When (not if) they fail, they result in damage, disruption, and loss. Typically, threats or threat actors exploit (make use of) vulnerabilities. Threats can be natural (such as storms or earthquakes), accidental (failures of processes or systems due to unintentional actions or normal wear and tear, causing a component to fail), or deliberate actions taken by humans or instigated by humans. Such intentional attackers have purposes, goals, or objectives they seek to accomplish; Mother Nature or a careless worker do not intend to cause disruption, damage, or loss. Ransom attacks are an important, urgent, and compelling case in point. Unlike ransomware attacks, which require injection of malware into the target system to install and activate software to encrypt files or entire storage subsystems, a ransom attack “lives off the land” by taking advantage of inherent system weaknesses to gain access; the attacker then uses built-in systems capabilities to schedule the encryption of files or storage subsystems, all without the use of any malware. This threat is real, and it’s a rare organization that can prove it is invulnerable to it. Prudent threat-based risk assessment, therefore, starts with this attack plan and assesses how your systems are configured, managed, used, and monitored as part of determining just how exposed your company is to this risk. It’s perhaps natural to combine the threat-based and vulnerability-based views into one perspective, since they both end up looking at vulnerabilities to see what impacts can disrupt an organization’s information systems. The key question that the threat-based perspective asks, at least for human threat actors, is why. What is the motive? What’s the possible advantage the attacker can gain if they exploit this vulnerability? What overall gains might an attacker achieve by an attack on our information systems at all? Many small businesses (and some quite large ones) do not realize that a successful incursion into their systems by an attacker may only be a step in that attacker’s larger plan for disruption, damage, or harm to others. By thinking for a moment like the attacker, you might identify critical assets that the attacker might really be seeking to attack; or, you might identify critical outcomes that an attacker might want to disrupt for ideological, political, emotional, or business reasons. Note that whether you call this a threat-based or vulnerability-based approach or perspective, you end up taking much the same action: You identify the vulnerabilities on the critical path to your high-priority objectives and then decide what to do about them in the face of a possible threat becoming a reality and turning into an incident. Your organization will make choices as to whether to pursue an outcomes-based, asset-based, process-based, or threat-based assessment process, or to blend them all together in one way or another. Some of these choices might already have been made if your organization has chosen (and perhaps tailored) a formal risk management framework to guide its processes with. (You’ll look at these in greater depth in the “Risk Management Frameworks” section.) The priorities of what to examine for potential impact, and in what order, should be set for you by senior leadership and management, which is expressed in the business impact analysis (BIA). One more choice remains, and that is whether to do a quantitative assessment, a qualitative assessment, or a mix of both. Impact assessments for information systems must be guided by some kind of information security classification guide, something that associates broad categories of information types with an expectation of the degree of damage the organization would suffer if that information’s confidentiality, integrity, or availability were compromised. For some types or categories of information, the other CIANA attributes of nonrepudiation and authentication are also part of establishing the security classification (the specific information as to how payments on invoices are approved, and which officials use what processes to approve high-value or special invoices or payments, is an example of the need to protect authentication-related information. False invoicing scams, by the way, are amounting to billions of dollars in losses to businesses worldwide, as of 2018). The process of making an impact assessment seems simple enough.

Now you have a better picture of what can be lost or damaged because of the occurrence of a risk event. This might mean that critical decision support information is destroyed, degraded, or delayed, or that a competitive advantage is degraded or lost because of disclosure of proprietary data. It might mean that a process or system is rendered inoperable and that repairs or other actions are needed to get it back into normal operating condition. Risk analysis is a complex undertaking and often involves trying to sort out what can cause a risk (which is a statement of probability about an event) to become an incident. Root-cause analysis looks to find what the underlying vulnerability or mechanism of failure is that leads to the incident. By contrast, proximate cause analysis asks, “What was the last thing that happened that caused the risk to occur?” (This is sometimes called the “last clear opportunity to prevent” the incident, a term that insurance underwriters and their lawyers often use.) Proximate cause can reveal opportunities to put in back-stops or safety controls, which are additional features that reduce the impact of the risk from spreading to other downstream elements in the chain of processes. Commercial airlines, for example, scrupulously check passenger manifests and baggage check-in manifests; they will remove baggage from the aircraft if they cannot validate that the passenger who checked it in actually boarded, and is still on board, the aircraft, as a last clear opportunity to thwart an attempt to place a bomb onboard the aircraft. Multifactor user authentication and repeated authorization checks on actions that users attempt to take demonstrate the same layered implementation of last clear opportunity to prevent. You’ve learned about a number of examples of risks becoming incidents; for each you’ve identified an outcome of that risk, which describes what might happen. This forms part of the basis of estimate with which you can make two kinds of risk assessments: quantitative and qualitative. Quantitative assessments use simple techniques (such as counting possible occurrences or estimating how often they might occur) along with estimates of the typical cost of each loss.

Other numbers associated with risk assessment relate to how the business or organization deals with time when its systems, processes, and people are not available to do business. This “downtime” can often be expressed as a mean (or average) allowable downtime or a maximum downtime. Times to repair or restore minimum functionality and times to get everything back to normal are also some of the numbers the SSCP will need to deal with. Other commonly used quantitative assessments are:

These types of quantitative assessments help the organization understand what a risk can do when it occurs (and becomes an incident) and what it will take to get back to normal operations and clean up the mess it caused. One more important question remains: How long to repair and restore is too long? Two more “magic numbers” shed light on this question.

Figure 3.4 illustrates a typical risk event occurrence. It shows how the ebb and flow of normal work can get corrupted, then lost completely, and then must be re-accomplished, as you detect and recover from an incident. You’ll note that it shows an undetermined time period elapsing between the actual damage inflicted by an incident—the intruder has started exfiltrating data, corrupting transactions, or introducing false data into your systems, possibly installing more trapdoors—and the time you actually detect the incident has occurred or is still ongoing. The major stages of risk response are shown as overlapping processes. Note that MTTR, RTO, and MAO are not necessarily equal. (They’d hardly ever be in the real world.) FIGURE 3.4 Risk timeline Where is the RPO? Take a look “left of bang,” left of the incident detection time (sometimes called t0, or the reference time from which you measure durations), and look at the database update and transaction in the “possible data recovery points” group. Management needs to determine how far back to go to reload an incremental database update, then reaccomplish known good transactions, and then reaccomplish suspect transactions from independent, verifiably good transaction logs. This logic establishes the RPO. The further back in time you have to fall back, the more work you reaccomplish. It used to be that we thought that RPO times close to the incident reference time were sufficient; and for non-APT-induced incidents, such as systems crashes, power outages, etc., this may be sound reasoning. But if that intruder has been in your systems for weeks or months, you’ll probably need a different strategy to approach your RPO. Chapter 4, “Incident Response and Recovery,” goes into greater depth on the end-to-end process of business continuity planning. It’s important that you realize these numbers play three critical roles in your integrated, proactive information defense efforts. All of these quantitative assessments (plus the qualitative ones as well) help you:

One final thought about the “magic numbers” is worth considering. The organization’s leadership has its stakeholders’ personal and professional fortunes and futures in their hands. Exercising due diligence requires that management and leadership be able to show, by the numbers, that they’ve fulfilled that obligation and brought it back from the brink of irreparable harm when disaster strikes. Those stakeholders—the organization’s investors, customers, neighbors, and workers—need to trust in the leadership and management team’s ability to meet the bottom line every day. Solid, well-substantiated numbers like these help the stakeholders trust, but verify, that their team is doing their job. Qualitative assessments focus on an inherent quality, aspect, or characteristic of the risk as it relates to the outcome(s) of a risk occurrence. “Loss of business” could be losing a few customers, losing many customers, or closing the doors and going out of business entirely! So, which assessment strategy works best? The answer is both. Some risk situations may present us with things we can count, measure, or make educated guesses about in numerical terms, but many do not. Some situations clearly identify existential threats to the organization (the occurrence of the threat puts the organization completely out of business); again, many situations are not as clear-cut. Senior leadership and organizational stakeholders find both qualitative and quantitative assessments useful and revealing. Qualitative assessment of information is most often used as the basis of an information classification system, which labels broad categories of data to indicate the range of possible harm or impact. Most of us are familiar with such systems through their use by military and national security communities. Such simple hierarchical information classification systems often start with “Unclassified” and move up through “For Official Use Only,” “Confidential,” “Secret,” and “Top Secret” as their way of broadly outlining how severely the nation would be impacted if the information was disclosed, stolen, or otherwise compromised. Yet even these cannot stay simple for long. Business and the military have another aspect of data categorization in common: the concept of need to know. Need to know limits who has access to read, use, or modify data based on whether their job functions require them to do so. Thus, a school’s purchasing department staff members have a need to know about suppliers, prices, specific purchases, and so forth, but they do not need to know any of the PII pertaining to students, faculty, or other staff members. Need-to-know leads to compartmentalization of information approaches, which create procedural boundaries (administrative controls) around such sets of information. Threat modeling provides an overall process and management approach organizations can use as they identify possible threats, categorize them in various ways, and analyze and assess both these categories and specific threats. This analysis should shed light on the nature and severity of the threat; the systems, assets, processes, or outcomes it endangers; and offer insights into ways to deter, detect, defeat, or degrade the effectiveness of the threat. While primarily focused on the IT infrastructure and information systems aspects of overall risk management, it has great potential payoff for all aspects of threat-based risk assessment and management. Its roots, in fact, can be found in centuries of physical systems and architecture design practices developed to protect physical plant, high-value assets, and personnel from loss, harm, or injury due to deliberate actions (which are still very much a part of overall risk management today!). Threat modeling can be proactive or reactive; it can be done as a key part of the analysis and design phase of a new systems project’s lifecycle, or it can be done (long) after the systems, products, or infrastructures are in place and have become central to an organization’s business logic and life. Since it’s impossible to identify all threats and vulnerabilities early in the lifecycle of a new system, threat modeling is (or should be) a major component of ongoing systems security support. As the nature, pervasiveness and impact of the APT threat continues to evolve, many organizations are finding that their initial focus on confidentiality, integrity, and availability may not go far enough to meet their business risk management needs. Increasingly, they are placing greater importance on their needs for nonrepudiation, authentication, and privacy as part of their information security posture. Data quality, too, is becoming much more important, as businesses come to grips with the near-runaway costs of rework, lost opportunity, and compliance failures caused by poor data quality control. Your organization should always tailor its use of frameworks and methodologies, such as threat modeling, with its overall and current information security needs in mind. In recent years, greater emphasis has been placed on the need for an overall secure software development lifecycle approach; many of these methodologies integrate threat modeling and risk mitigation controls into different aspects of their end-to-end approach. Additionally, specific industries are paying greater attention to threat modeling in specific and overall secure systems development methodologies in general. If your organization operates in one of these markets, these may have a bearing on how your organization can benefit from (or must use) threat modeling as a part of its risk management and mitigation processes. Chapter 7, “Systems and Application Security” will look at secure software development lifecycle approaches in greater depth; for now, let’s focus on the three main threat modeling approaches commonly in use.

It’s possible that the greatest risk that small and medium-sized businesses and nonprofit organizations face, as they attempt to apply threat modeling to their information architectures, is that of the failure of imagination. It can be quite hard for people in such organizations to imagine that their data or business processes are worthy of an attacker’s time or attention; it’s hard for them to see what an attacker might have to gain by copying or corrupting their data. It’s at this point that your awareness of many different attack methodologies may help inform (and inspire) the threat modeling process. There are many different threat modeling methodologies. Some of the most widely used are SDL, STRIDE, NIST 800-154, PASTA, and OCTAVE, each of which is explored next. Over the years, Microsoft has evolved its thinking and its processes for developing software in ways that make for more secure, reliable, and resilient applications and systems by design, rather than being overly dependent upon seemingly never-ending “testing” being done by customers, users, and hackers after the product has been deployed. Various methodologies, such as STRIDE and SD3+C, have been published by Microsoft as their concepts have grown. Although the names have changed, the original motto of “secure by design, secure by default, and secure in deployment and communication” (SD3+C) continue to be the unifying strategies in Microsoft’s approach and methods. STRIDE, or spoofing, tampering, repudiation, information disclosure, denial of service, and elevation of privilege, provides a checklist and a set of touchpoints by which security analysts can characterize threats and vulnerabilities. As a methodology, it can be applied to applications, operating systems, networks and communications systems, and even human-intensive business processes. These are still at the core of Microsoft’s current secure development lifecycle (SDL) thinking, but it must be noted that SDL focuses intensely on the roles of managers and decision-makers in planning, supporting, and carrying out an end-to-end secure software and systems development and deployment lifecycle, rather than just considering specific classes of threats or controls. Check out https://www.microsoft.com/en-us/securityengineering/sdl/practices for current information, ideas, and tools on SDL. In 2016, NIST placed for public comment a threat modeling approach centered on protecting high-value data. This approach is known as NIST 800-154, “Data-Centric Threat Modeling.” It explicitly rejects that best-practice approaches are sufficient to protect sensitive information, as best practice is too general and would overlook controls specifically tailored to meet the protection of the sensitive asset. In this model, the analysis of the risk proceeds through four major steps.

The Process for Attack Simulation and Threat Analysis (PASTA), as the full name implies, is an attacker-centric modeling approach, but the outputs of the model are focused on protecting the organization’s assets. Its seven-step process aligns business objectives, technical requirements, and compliance expectations to identify threats and attack patterns. These are then prioritized through a scoring system. The results can then be analyzed to determine which security controls can be applied to reduce the risk to an acceptable level. Advocates for this approach argue that the integration of business concerns in the process takes the threat modeling activity from a technical exercise to a process more suited to assessing business risk. Operationally Critical Threat, Asset, and Vulnerability Evaluation (OCTAVE) is an approach for managing information security risks developed at the Software Engineering Institute (SEI). While the overall OCTAVE approach encompasses more than threat modeling, asset-based threat modeling is at the core of the process. In its current form, OCTAVE Allegro breaks down into a set of four phases.