Chapter 4. Antivirus Software

“Let me put it another way. You have a computer with an auto-dial phone link. You put the VIRUS program into it and it starts dialing phone numbers at random until it connects to another computer with an auto-dial. The VIRUS program then injects itself into the new computer. Or rather, it reprograms the new computer with a VIRUS program of its own and erases itself from the first computer. The second machine then begins to dial phone numbers at random until it connects with a third machine. You get the picture?

. . .

“It’s fun to think about, but it was hell to get out of the system. The guy who wrote it had a few little extra goodies tacked onto it—well, I won’t go into any detail. I’ll just tell you that he also wrote a second program, only this one would cost you—it was called VACCINE.”

Don Handley in When Harlie Was One

—DAVID GERROLD

4.1 Characteristics

Antivirus software is one of those things that drives traditional security people crazy. It shouldn’t be necessary, it shouldn’t work, and it should never be listed as a security essential. However, it is needed, it often works, and sometimes—though not always—it really is important.

Viruses were once seen as an artifact and a consequence of the “primitive” operational and security environment of personal computers. After all, in the early Apple and Microsoft worlds, programs ran with full hardware permission; the operating system was more a program loader and a set of utility routines than a full-fledged OS. A real OS had protected mode and access controls on files; people said that viruses simply couldn’t happen in such a world.

To some extent, of course, that was true. Boot sector viruses “couldn’t” exist because no virus would have access rights to the boot sector of any drive. Similarly, no virus could infect any system file because those were all write-protected against any ordinary users’ programs.

Two things shook that belief. First, the Internet (“Morris”) worm of 1988 [Eichin and Rochlis 1989; Spafford 1989] spread almost entirely without privileges; instead, it exploited buggy code and user-specified patterns of trust. Second, Tom Duff showed that it was possible to write viruses for Unix systems, even viruses that could infect shell scripts [Duff 1989a; Duff 1989b]. To understand why these could happen, we need to take a deeper look at the environment in which viruses exist.

It is a truism in the operating system community that an OS presents user programs with a virtual instruction set, an instruction set that consists of the unprivileged subset of the underlying hardware’s op codes combined with OS-specific “virtual instructions”; these latter (better known as system calls) do things like create sockets, write to files, change permissions, and so on. This virtual machine (not to be confused with virtualization of the underlying hardware à la VMware) does not have things like Ethernet adapters or hard drives; instead, it has TCP/IP and file systems. Early DOS and Mac OS programs had full access to the underlying hardware (hence the ability to overwrite the boot sector) as well as to the virtual instructions provided by the operating systems of the day. Morris and Duff (and of course Fred Cohen in his dissertation [1986], which—aside from being the first academic document to use the term “virus”—presented a theoretical model of their existence and spread) showed that access to the privileged instructions was unnecessary, that the OS’s virtualized instruction set was sufficient. We can take that two steps further.

First (and this is graphically illustrated by Duff, and formally modeled by Cohen), the effective target environment of a virus is limited to those files (or other resources) writable by the user context in which it executes. The effect of file permissions, then, is to limit the size of the effective target environment, rather than eliminating it; as such, file permissions slow virus propagation but do not prevent it. In other words, this virtual instruction set is just as capable of hosting viruses as the real+virtual instruction set; it’s just that these viruses won’t multiply as quickly.
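To make the notion concrete, here is a minimal Python sketch that enumerates one user's effective target environment under a directory tree. The function name `effective_target_environment` is my own label, not Duff's or Cohen's, and the sketch assumes that the ordinary permission check performed by `os.access` is a fair proxy for what a program running as that user could modify.

```python
import os


def effective_target_environment(root):
    """Return the regular files under `root` that the current user may write.

    Illustrative only: a measure of how far file permissions shrink the
    set of files a program running as this user could alter.
    """
    writable = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            # os.access answers "may the calling user write this file?"
            if os.path.isfile(path) and os.access(path, os.W_OK):
                writable.append(path)
    return sorted(writable)
```

Run as an ordinary user against a system directory, the list is typically short; run against that user's home directory, it is typically long. Permissions shrink the list, but they rarely empty it.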

Other than effective target environment, the main parameter in modeling virus propagation is the spread rate, i.e., the rate at which the virus is invoked with a different target environment. While that latter could mean the creation of new, infectable files, more likely it means being executed by a different user, who would have a different set of write permissions and hence a very different effective target environment. (For a more detailed and formal model of propagation, see [Staniford, Paxson, and Weaver 2002].)
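The interaction between the two parameters can be illustrated with a toy simulation. This is a sketch under loud assumptions: it is not the model of Staniford, Paxson, and Weaver, the users and file names are invented, and every user is assumed to run the same fixed set of files once per round. When a user runs an infected file, everything that user can write becomes infected.

```python
def simulate(writable, executes, seed_file, max_rounds=50):
    """Return the number of infected files after each round.

    writable[user] -- set of files that user may modify (hypothetical data)
    executes[user] -- set of files that user runs every round (hypothetical)
    """
    infected = {seed_file}
    history = [len(infected)]
    for _ in range(max_rounds):
        newly = set()
        for user, runs in executes.items():
            # Running any infected file exposes this user's whole
            # effective target environment.
            if runs & infected:
                newly |= writable[user]
        if newly <= infected:
            break  # nothing new was reachable this round
        infected |= newly
        history.append(len(infected))
    return history


# Invented example: three users who each write only their own files.
WRITABLE = {
    "alice": {"a1", "a2"},
    "bob": {"b1", "b2"},
    "carol": {"c1", "c2"},
}
# The only cross-user links are Bob running a2 and Carol running b2.
EXECUTES = {
    "alice": {"a1"},
    "bob": {"a2", "b1"},
    "carol": {"b2", "c1"},
}
```

With these inputs, `simulate(WRITABLE, EXECUTES, "a1")` returns `[1, 2, 4, 6]`: no user can write another user's files, yet the infection crosses both boundaries because files are shared for execution. Remove `b2` from Carol's set and the spread stops at four files.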

The second generalization of a suitable virus environment is even more important than the role of file permissions: any sufficiently powerful execution environment can host a virus; it does not have to be an operating system, let alone bare hardware. It is easy to define “sufficient power”: all it has to be able to do is to copy itself to some other location from which it can execute with a different effective target environment. That might be a different user on the same computer, or it might be a different computer; for the latter, the transport can be automatic (as is the case for worms) or aided by a human transmitting the file. Going back to our virtual instruction set model, any programmable environment will suffice, if the I/O capabilities are powerful enough. Microsoft Office documents, with their access to Visual Basic for Applications (VBA), are the classic example, but many others exist. LaTeX (the system with which this book was written) comes very close; it has the ability to write files, but many years ago a restriction was imposed that only let documents write to files in the current directory. This effectively froze the effective target environment, since very few directories contain more than one LaTeX document. PostScript is another example, though I’ll leave the design (and limitations) of a PostScript virus as an exercise for the reader. Even LISP viruses have appeared in the wild [Zwienenberg 2012].

There’s one more aspect of the virtual instruction set that bears mentioning: what matters is the actual instruction set, not just the one intended by the system designers. Just as on the IBM 7090 computer a STORE ZERO instruction existed by accident [Koenig 2008], a virus writer can and will exploit bugs in the underlying platform to gain abilities beyond those anticipated by the programmers. Bugs are not necessary (the IBM “Christmas Card” virus¹ spread because of the credulity of users), but they do complicate life for the defender.

1. “The Christmas Card Caper, (hopefully) concluded,” http://catless.ncl.ac.uk/Risks/5.81.html#subj1.

Collectively, these points explain why the classic protection model of operating systems does not prevent viruses: they can thrive within a single protection domain and spread by ordinary collaborative work. Duff’s experiments relied on the prevalence of world-writable files and directories, but such are not necessary. His design, involving an infected Unix executable, would not work well without such artifacts of a loosely administered system, since Unix users rarely shared executable files, but higher-level environments—Word, PostScript, etc.—could and did host their own forms of malware.

A corollary to this is that the classic operating system protection model—an isolated trusted computing base (TCB)—does not protect the users of the system from themselves. It also explains why, say, the Orange Book [DoD 1985a], the US Department of Defense’s 1980s-vintage specification for secure computer systems, is quite inadequate as a defense against viruses: they do not violate its security model and hence are not impeded by it.

In short, classic operating system design paradigms do not and cannot stop viruses. That is why antivirus programs have been needed.

To understand why virus checkers “shouldn’t” work, it is necessary to understand how they function. It would be nice, of course, if they could analyze a file and know a priori that that file is or isn’t evil [Bellovin 2003]. Even apart from the difficulty of defining “evil,” the problem can’t be solved; it runs afoul of Turing and the Halting Problem [Cohen 1987]. Accordingly, a heuristic solution is necessary.

Today’s virus checkers rely primarily on signatures: they match files against a database of code snippets of known viruses. The code matched can be part of the virus’ replication mechanism or its payload.
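In its simplest form, signature matching is little more than a substring search. The sketch below assumes a tiny in-memory database; the signature names and byte strings are invented placeholders, not real virus signatures, and a production scanner would use a multi-pattern matching algorithm rather than one search per signature.

```python
# Hypothetical signature database: name -> byte snippet.
# These values are placeholders made up for illustration.
SIGNATURES = {
    "Example.A": bytes.fromhex("deadbeef0102"),
    "Example.B": b"HYPOTHETICAL-PAYLOAD",
}


def scan(data, signatures=SIGNATURES):
    """Return the sorted names of all signatures found in `data`."""
    return sorted(
        name for name, pattern in signatures.items() if pattern in data
    )
```

A file containing the first snippet anywhere in its body would be reported as `["Example.A"]`; a file containing neither snippet yields an empty list, whether or not it is actually benign.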

The disadvantages of this sort of pattern-matching are obvious. One, of course, is that its success is crucially dependent on having a complete, up-to-date signature database. The antivirus companies love that, since it means that customers have to buy subscriptions rather than make a one-time purchase, but it’s entirely legitimate; by definition a new virus won’t be matched by anything in an older database.

Virus writers have used the obvious counter to signature databases; they’ve employed various forms of obfuscation and transformation of the actual virus. Some viruses encrypt most of the body, in which case the antivirus software has to recognize the decryptor. Other viruses do things like inserting NOPs, rearranging code fragments, replacing instruction sequences with equivalent ones, and so on.

Naturally, the antivirus vendors haven’t stayed idle. The obvious defense is to look for the invariant code, such as the aforementioned decryptor; other techniques include looking for multiple sequences of short patterns, deleting NOPs, and so on. Ultimately, though, there seems to be a limit on how good a job a static signature analyzer can do; in fact, experiments have shown that “the challenge of modeling self-modifying shellcode by signature-based methods, and certain classes of statistical models, is likely an intractable problem” [Song et al. 2010]. Fundamentally different approaches are needed.
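Deleting NOPs before matching can be sketched in a few lines. This is a deliberately naive illustration: it assumes x86 code in which the single byte 0x90 is the only NOP form, and it strips that byte everywhere, even where it is really an operand or data. A real scanner would have to disassemble the code first.

```python
NOP = 0x90  # single-byte x86 NOP opcode (assumed target architecture)


def strip_nops(code):
    """Remove every 0x90 byte; naive, since 0x90 can also be data."""
    return bytes(b for b in code if b != NOP)


def matches_after_normalizing(code, pattern):
    """True if `pattern` occurs in `code` once NOP padding is ignored."""
    return strip_nops(pattern) in strip_nops(code)
```

A four-byte pattern that has been padded with NOPs between its instructions no longer appears verbatim in the file, but it reappears after normalization. The same idea fails against the other transformations mentioned above, such as instruction substitution, which is part of why static analysis runs into limits.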

One such approach, widely used today by antivirus programs, employs sandboxed or otherwise controlled execution. It is based on two premises: first, virus code is generally executed at or very near the start of a program to make sure it does get control; second, the behavior of a virus is fundamentally different from that of a normal, benign program. Normal programs do not try to open the boot block, nor do they scan for other executable files and try to modify them. If such behavior patterns are detected (and there are behavior patterns (see, e.g., [Hofmeyr, Somayaji, and Forrest 1998]) as well as byte patterns in a signature database), the program is probably malware.

Note, though, the word “probably.” Antivirus programs are not guaranteed to produce the correct results; not only can they miss viruses (false negatives), as discussed above, they can also produce false positives and claim that perfectly innocent files are in fact malicious.
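Behavior patterns can be checked in much the same way as byte patterns. The sketch below is in the spirit of sequence-based monitoring of system calls, but it is my own simplification rather than a reproduction of any published method: the window length of 3 and the call names are assumptions chosen for illustration.

```python
def windows(trace, k):
    """All length-k sliding windows over a list of system call names."""
    return [tuple(trace[i:i + k]) for i in range(len(trace) - k + 1)]


def train(normal_traces, k=3):
    """Collect every window seen in traces of known-good behavior."""
    profile = set()
    for trace in normal_traces:
        profile.update(windows(trace, k))
    return profile


def mismatch_rate(trace, profile, k=3):
    """Fraction of windows in `trace` never seen during training."""
    seen = windows(trace, k)
    if not seen:
        return 0.0
    unseen = sum(1 for w in seen if w not in profile)
    return unseen / len(seen)
```

Trained on a trace that repeats open, read, close, a new trace of open, read, close scores 0.0, while open, read, write, close scores 1.0. Whether 1.0 means "malware" or merely "a program doing something new" is exactly the false positive problem.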

A second approach, used somewhat today but likely to be a mainstay in the future, is anomaly detection. It relies on statistics: the properties of normal programs and documents are different from those of malware; the trick is avoiding false positives.

One challenge is how to identify useful features that distinguish malware from normal files. Data mining is the approach of choice [Lee and Stolfo 1998] in many of today’s products, but it’s not the only one. Another study attempted to detect shellcode—actually, machine code, especially machine code intended to invoke a shell—in Word documents by looking at n-gram frequencies [W.J. Li et al. 2007]. While in theory more or less anything can appear in a Microsoft Office document—“modern document formats are essentially object-containers. . . of any executable object”—some sections of legitimate documents are much less likely than others to contain shellcode. Li and company found that by counting the frequency of various n-grams, they could detect infected documents quite successfully.

Anomaly detectors require training; that is, they need to “know” what is normal. Training is commonly done by feeding large quantities of uninfected files to an analyzer; the analyzer builds some sort of statistical profile based on them. This is then used to catch new malware.
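Training and scoring can be sketched with byte n-gram frequencies. This is a minimal illustration, not the method of Li et al.: the choice of 2-grams, the pooled profile, and the L1 distance are all assumptions of mine, and any threshold on the score would have to be chosen empirically.

```python
from collections import Counter


def ngram_counts(data, n=2):
    """Count each length-n byte sequence occurring in `data`."""
    return Counter(data[i:i + n] for i in range(len(data) - n + 1))


def frequencies(counts):
    """Convert raw counts to relative frequencies."""
    total = sum(counts.values())
    return {g: c / total for g, c in counts.items()} if total else {}


def train_profile(clean_samples, n=2):
    """Build a frequency profile from files believed to be uninfected."""
    pooled = Counter()
    for sample in clean_samples:
        pooled.update(ngram_counts(sample, n))
    return frequencies(pooled)


def distance(data, profile, n=2):
    """L1 distance between a file's n-gram frequencies and the profile.

    Ranges from 0.0 (identical distribution) to 2.0 (no n-grams shared).
    """
    observed = frequencies(ngram_counts(data, n))
    grams = set(observed) | set(profile)
    return sum(abs(observed.get(g, 0.0) - profile.get(g, 0.0)) for g in grams)
```

A file resembling the training data scores near 0.0; a file whose byte pairs never appeared in training scores near 2.0. Everything in between is a judgment call.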

Naturally, what is “normal” changes over time and by location. A site that tends to include pictures in its Word documents will look quite different from a site that does not. Similarly, as new features are added, statistical values will change. Thus, even anomaly detection requires frequently updated databases.

The problem with anomaly detection is, as noted, false positives. While signature schemes can have such issues, the rate is very much higher with anomaly schemes. Users doing different things, either on their own or because the applications that they use have changed, can appear just as anomalous as malware. A partial solution is to correlate anomaly information from different sites [Debar and Wespi 2001; Valdes and Skinner 2001]. If a file seems somewhat odd but not quite odd enough to flag it definitively, it can be uploaded to your antivirus vendor and matched against similar files from other sites.

The final piece of the puzzle is how to find new malware. Sometimes, machines or files believed to be infected are sent to the antivirus companies; indeed, that is how Stuxnet and Flame were found [Falliere, Murchu, and Chien 2011; Zetter 2012; Zetter 2014]. More often, the vendors go looking for infections. They deploy machines that aren’t patched, get on spam mailing lists and open—execute—the attachments, etc. They also haunt locales known to be infested. “Adult” sites are notorious for hosting malware; before suitable scripting technologies were developed, some vendors had employees whose job responsibilities were to spend all day viewing porn. (Anecdotal evidence suggests that yes, it is possible to get bored with such a job, even in the demographics believed to be most interested in such material.)

4.2 The Care and Feeding of Antivirus Software

Antivirus software is not fire-and-forget technology. It needs constant attention, both because of the changing threat environment and the changing computing environment. Other operational considerations include handling false negatives, handling false positives, efficiency, where and when scanning should happen, and user training.

The need for up-to-date signature and anomaly databases is quite clear. What is less obvious is how the operational environment interacts with virus scanning. To pick a trivial example, if you don’t run Microsoft Office you don’t need a scanner that can cope with Word documents; if the former changes, you do.

Sometimes, the environmental influences can be more subtle and serious. Once, three separate versions of a program’s installer were falsely flagged as viruses by a particular scanner.² It seems likely that the installer had an unusual code sequence that happened to match a virus’s signature.

2. “Pegasus Mail v4.5x Released,” http://www.pmail.com/v45x.htm.

Several of the other factors interact as well. Consider the false-negative problem, often due to an inadequate or antiquated database. Many people suggest running two different brands of antivirus software to take advantage of different collections of viruses. That would be reasonable enough if you didn’t have to worry about cost (of course), performance, and an increased rate of false positives. Assuming that the false positive rate is low enough (it generally is), one solution is to use one technology at network entry points (mail gateways, web proxies, and perhaps file servers) while using a different technology on end systems. That also helps deal with the cost issue; you don’t have to buy two different packages for each of your many desktops and laptops.
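The tradeoff in running two scanners can be put into rough numbers. The sketch below assumes that a file is flagged if either scanner flags it and that the two scanners err independently. That second assumption is optimistic, since different products often miss the same brand-new malware, and the rates in the usage note are invented for illustration.

```python
def combined_rates(miss_a, miss_b, fp_a, fp_b):
    """Error rates when a file is flagged if EITHER scanner flags it.

    Assumes the scanners' errors are statistically independent, which
    real products only approximate. All inputs are probabilities.
    """
    # Malware slips through only if both scanners miss it.
    miss = miss_a * miss_b
    # A clean file passes only if neither scanner raises a false alarm.
    false_positive = 1 - (1 - fp_a) * (1 - fp_b)
    return miss, false_positive
```

With hypothetical miss rates of 20% and 30% and false positive rates of 1% and 2%, the combined miss rate falls to about 6% while the combined false positive rate rises to about 3%. False negatives multiply; false positives very nearly add.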

Splitting the scanning between network entry points and end systems has its own flaw, though: network-based scanners can’t cope with encrypted content. Encrypted email is rare today; encrypted web traffic is common, and web proxies pass HTTPS through unexamined. Furthermore, there is one form of encryption that has been used by malware: encrypted .zip files, with the password given in the body of the message.
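A gateway cannot look inside an encrypted .zip member, but it can at least notice that one is present. The sketch below uses Python's standard `zipfile` module and relies on bit 0 of each member's general-purpose flag field, which the ZIP format uses to mark an encrypted entry. The function name is mine, and what to do with a positive result (quarantine, warn, or pass) is a policy decision the code does not address.

```python
import io
import zipfile


def encrypted_members(zip_bytes):
    """Return the names of archive members marked as encrypted.

    Only the archive's directory is read; no member is decrypted
    or extracted.
    """
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        return [
            info.filename
            for info in archive.infolist()
            if info.flag_bits & 0x1  # bit 0: member is encrypted
        ]
```

An ordinary archive yields an empty list. Note that this inspects file content rather than the file name, so renaming the attachment does not evade it.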

In most situations, the proper response to the encryption problem is to ignore it. If you have antivirus software on your end systems, it will probably catch the malware. Most of what’s missed by major scanners is the rarer viruses; they all handle the common ones.

You might be inclined to worry more if you’re being targeted by the Andromedans; after all, they can find 0-days to use against you. However, by definition 0-days aren’t known and hence won’t be in anyone’s signature files; besides, MI-31 can run their code through many different scanners and make sure it gets through unmolested. However, you might find, after someone else has noticed and analyzed the malware, that signatures are developed (as has happened with Stuxnet); in that case, your end system scanners will pick up any previous infections. Anomaly detectors are another solution. (There’s an interesting duality here. As mentioned earlier, anomaly detectors need to be trained on uninfected files. An exploit for a 0-day by definition won’t be in the training data and is therefore more likely to be caught later on. By contrast, the lack of prior instances of the 0-day means that it won’t be in a signature file. The world is thus neatly bifurcated; the two different technologies match the two different time spans. One caveat: just because something hasn’t been noticed doesn’t mean it doesn’t exist. Sophisticated attacks, especially by our friends the Andromedans, may not be noticed for quite a while. Again, this happened with both Stuxnet and Flame.)

Using multiple antivirus scanners is a classic example of when one should not treat insecurity as a sin. It’s an economic issue; gaining this small extra measure of protection isn’t worth it if it costs you too much. If your end systems are well managed—that is, if they’re up to date on patches and have current antivirus software—infrastructure-based scanning is an extra layer. It’s a useful extra layer and shouldn’t be neglected if feasible; the question is what it costs, not in dollars but in lost functionality and perhaps user miseducation. Consider: if you’re in an environment where sending around .zip files is common, barring them costs productivity. Users rapidly learn to evade this, by changing the extension—and teaching users to evade security mechanisms is never a good idea.

Don’t neglect the opportunity to detect viruses after they’ve infected a machine. For the most part, today’s viruses aren’t designed for random malicious mischief; rather, they have very specific goals. Catching that sort of behavior is a good way to find infected machines.

The form of detection to use, of course, depends on the goal of the virus; since you can’t know that, you’ll have to employ several. One form is extrusion detection, as discussed in Section 5.5: looking at outbound traffic for theft of data. This is especially useful if you’re the victim of a targeted attack (especially an Andromedan attack), since exfiltration of proprietary data is a frequent goal of such attackers. Another good approach is to look for command and control traffic; infected machines are frequently part of a botnet managed via a peer-to-peer network. This isn’t easy to spot, though there are some fruitful approaches. Traffic flow visualization [D. Best et al. 2011; T. Taylor et al. 2009] is one. If you know your machine population well, you can look for client-to-client traffic; such behavior is uncommon except in peer-to-peer networks.
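Looking for client-to-client traffic is easy to sketch if you already have flow records and an inventory of your servers. Both are assumptions here: the flow format (source, destination address pairs), the network range, and the server list are invented, and a real deployment would draw them from NetFlow or similar data and an asset database.

```python
import ipaddress


def client_to_client_flows(flows, internal_net, servers):
    """Return flows where both endpoints are internal non-server hosts.

    flows        -- iterable of (src, dst) address strings (assumed format)
    internal_net -- CIDR string for the site's address range
    servers      -- addresses of machines expected to accept connections
    """
    net = ipaddress.ip_network(internal_net)
    server_addrs = {ipaddress.ip_address(s) for s in servers}
    suspicious = []
    for src, dst in flows:
        s = ipaddress.ip_address(src)
        d = ipaddress.ip_address(dst)
        both_internal = s in net and d in net
        if both_internal and s not in server_addrs and d not in server_addrs:
            suspicious.append((src, dst))
    return suspicious
```

A desktop talking to the file server or to an outside web site passes unremarked; a desktop talking to another desktop is reported. The result is a list of things to investigate, not a list of infections.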

4.3 Is Antivirus Always Needed?

One of the most controversial issues surrounding antivirus software is on which machines it should be used. Some people say it needs to be used everywhere; others say it should never be used. Since absolute statements are always wrong, let’s approach the question analytically.

An antivirus package is another layer of defense. Per the analysis above, it protects against threats that an OS cannot catch; it’s also capable of blocking attacks that somehow managed to get through some other layer. On occasion, this is a matter of timing; a new security hole may be difficult to fix. Or it might be that the vendor quite rightly wants—needs, actually—to put the fix through testing and quality assurance before shipping it. Antivirus firms can ship signature updates much more quickly, because they’re advisory. In a very strong sense, the division of responsibilities between the antivirus package and the OS is like the split between the C compiler and lint [S. C. Johnson 1978]:

In conclusion, it appears that the general notion of having two programs is a good one. The compiler concentrates on quickly and accurately turning the program text into bits which can be run; lint concentrates on issues of portability, style, and efficiency. Lint can afford to be wrong, since incorrectness and over-conservatism are merely annoying, not fatal. The compiler can be fast since it knows that lint will cover its flanks.

Just so. There is, however, one extremely crucial difference: unlike a programmer deciding to ignore a lint warning, deciding to turn off virus-checking is a very difficult decision, and well beyond the pay grade of most users. It’s tempting to say that people know when they’re doing dangerous things, such as downloading programs from random Internet sites, and when they’re doing something that should be safe, such as installing software from a major vendor’s official distribution page. Indeed, many very legitimate packages caution you to turn off your virus scanner prior to running the installer. It’s not that simple. Even apart from timing coincidences and malware that waits for a software installation to fire up, out-of-the-box products have been infected with viruses, including Microsoft software CDs [Barnett 2009], IBM desktops [Weil 1999], Dell server motherboards [Oates 2010], and even digital picture frames [Gage 2008].

One consideration that often leads people to omit antivirus technology is a system’s usage and/or connectivity. An ordinary end-user’s desktop machine is the environment for which, it would seem, antivirus packages were developed; users, after all, are constantly visiting sketchy web sites, downloading dubious files, and receiving all manner of enticing (albeit utterly fraudulent) email. But what about servers? Embedded systems? Systems behind an airgap? Embedded systems behind an airgap? All of these can be vulnerable, but in different ways.

Servers can be infected precisely because they’re servers: they’re listening for certain requests, and if the serving applications are buggy they can be vulnerable to malware. This is, after all, what leaves them very vulnerable to many of the usual attacks: stack-smashing, SQL injections, and more. It is important to realize that nowhere in the operational definition of an antivirus program is any requirement that the signatures only match self-replicating programs. Anything can be matched, including garden-variety persistent malware. In fact, the word “antivirus” is a misnomer; it is, rather, antifile software, and can flag any file that matches certain patterns, as long as the file is of a type that it knows to scan.

Embedded devices—the small computers that run our cars, printers, toasters, DVD players, televisions, and more—are often quite vulnerable [Cui and Stolfo 2010]. Perhaps surprisingly, they’re frequently controlled by general-purpose operating systems (often, though not always, Windows), and they’re very rarely patched. An address space-scanning virus isn’t particular; it doesn’t know if it’s probing your colleague’s desktop or the office thermostat; if it answers in the right way, it can be infected. Furthermore, since the software powering such devices is very rarely updated, they’re generally susceptible to very old hacks. Unfortunately, they also never have antivirus software, and if they did the signature database would be out of date. (What release of what OS is your car’s tire pressure monitor running? It might be vulnerable [Rouf et al. 2010]. Note, too, that although there is local wireless connectivity to your wheels, there is no Internet connectivity and hence no way to automatically download signature updates. Perhaps your mechanic is regularly updating the base software—but perhaps not.) Even nuclear power plants have been infected [Poulsen 2003; Wuokko 2003].

How was the Davis-Besse nuclear power plant infected? There was a firewall that was properly configured to block the attack, but it only protected the direct link to the Internet. The Slammer worm, though, infected a machine at a contractor’s facility, and that contractor had a direct link to the operator of the plant. This link was not protected by the firewall, which permitted the worm to attack an unpatched server within the nuclear plant operator’s network. In other words, there were several different ways the problem could have been prevented—but it wasn’t.

Airgapped systems—ones with no network connections, direct or indirect, to the outside world—are sometimes seen as the ultimate in secure, protected machines. Grampp and Morris wrote [1984], “It is easy to run a secure computer system. You merely have to disconnect all dial-up connections and permit only direct-wired terminals, put the machine and its terminals in a shielded room, and post a guard at the door.” Unfortunately, in many ways such systems are less secure.

How can attacks enter? The easiest way is via USB flash disk. Indeed, Stuxnet is believed to have been introduced into the Iranian centrifuge plant in exactly that fashion, since one of the 0-days it used caused autoexecution of code on a flash disk [Falliere, Murchu, and Chien 2011]. For a while, the US military banned such devices because of a very serious network penetration via that mechanism [Lynn III 2010]. Who provided the infected flash disks in these cases? It isn’t known (at least not publicly), but it could have been a legitimate user who found it in a parking lot; in at least one test, most users fell for that trick [Kenyon 2011]. Alternately, a machine legitimately intended to communicate across the airgap, perhaps to provide new software for it or to receive outbound reports, might have been infected; when the communications flash drive was inserted into it, the drive (or the legitimate files on it) could have been infected, to the detriment of its communicants beyond the airgap. Add to that the difficulty of installing patches and signature file updates on machines that can’t talk to the Internet and you see the problem: the administrators were lulled into a false sense of security by the topological separation, and they didn’t do the hard work of otherwise protecting the machines.

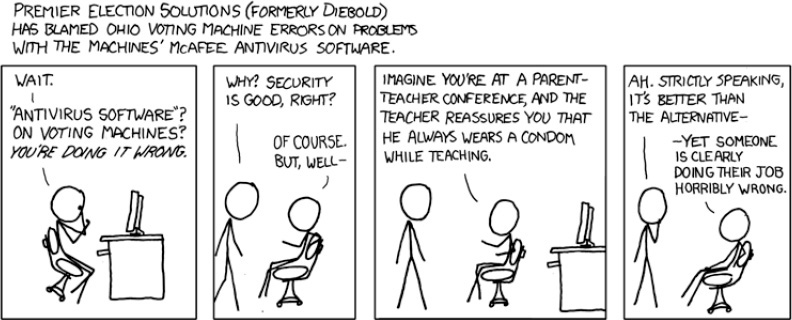

Is the xkcd cartoon (Figure 4.1) wrong? Is it necessary and proper to have antivirus software on voting machines? As in so many other situations, it depends. There can be false positives; indeed, antivirus software was (incorrectly, it turned out) blamed for problems with a voting system [Flaherty 2008]. But voting systems are supposed to be carefully controlled, running only certified software [Flaherty 2008]:

Unlike other software, the problem acknowledged by Premier cannot be fixed by sending out a coding fix to its customers because of federal rules for certifying election systems, Rigall said. Changes to systems must go through the Election Assistance Commission, he said, and take two years on average for certification and approval—and that is apart from whatever approvals and reviews would be needed by each elections board throughout the country.

If the machine is only running certified software, and if proper procedures are followed, there should be no chance of any attacks, and hence no need for protective add-ons.

Note, though, that second “if” (and remember the Davis-Besse nuclear power plant). In practice, voting machines are often not properly protected. Ed Felten, a prominent computer science professor at Princeton, has made a habit of touring his town and photographing unguarded machines [2009]. Nor are the systems secure against someone who has physical access; reports on this are legion, but the summary Red Team report in the California “Top to Bottom” review makes it clear just how bad the situation can be [Bishop 2007]. Similarly, the protective seals are not effective defenses against tampering [Appel 2011]. In short, though in theory antivirus software isn’t needed, in practice it might help—except that the vulnerabilities are so pervasive the attackers could disable any protective mechanisms at the same time as they replaced all of the other software on the machine. Besides, how, when, and by whom would the signature databases be updated?

(The larger question of electronic voting machine security and accuracy is quite interesting. Fortunately, however, most of it is well outside the scope of this book. Let it suffice to say that most computer scientists are very uneasy about direct recording electronic (DRE) systems. For further details, see [Rubin 2006] or [D. W. Jones and Simons 2012].)

There’s one more situation where antivirus software isn’t needed: when the incidence of viruses is so low as to render the protection questionable. In that case, you pay the price—the cost of the software, hits to system efficiency, possible false positives—without reaping much benefit. Mac OS X currently (September 2015) falls into that category, though with the growing popularity of the platform that probably won’t be true for very much longer. Again, antivirus software is not an abstract technology; it can only protect against real, past threats. If there are no threats, there’s nothing to put into the signature database. The most serious attacks on Macs have involved either social engineering or a Java hole; the former can be avoided with education (but see Chapter 14) and the latter by upgrading to the latest release of Mac OS X, which does not include Java. (Microsoft Office macro viruses could, in theory, affect Macs as well, but they seemed extinct because of long-ago fixes by Microsoft. Some people say they’re starting to come back [Ducklin 2015].) This might, though, be a great time to start collecting Mac OS X files for baseline data for anomaly detectors. After all, given the extremely low rate of infection, you’re virtually guaranteed that you won’t inadvertently train your model on unsuspected malware.

One last word of warning: just because your attention is focused on the Andromedans, don’t neglect antivirus protection. Sure, it won’t stop 0-days, but MI-31 will happily use older vulnerabilities if they’ll suffice. Why use an expensive weapon when a cheap one will do the job?

4.4 Analysis

Antivirus—more properly, antimalware—software is a mainstay of today’s security environment. Unfortunately, it is losing its efficacy. One recent article [Krebs 2012] noted that of a recent sample of nasty malware—programs aimed at stealing banking credentials from small businesses—run through multiple scanners via https://www.virustotal.com/, most were not detected. A mean of just 24.47% and a median of 19% of scanners caught these files. This means that the most relied-upon defense usually fails. If people blithely click on attachments under the assumption that they’re protected, they’re in for a very rude shock. The other defenses here—getting people to stop clicking on phishing messages, and either bug-free or quickly patched software—seem even more dubious. Will there be technical changes that can help?

The two areas most likely to change are the efficacies of signature-based detection and anomaly-based detection. A decline in the former would spur greater reliance on the latter; however, it is unclear whether the false positive rate of anomaly detection is low enough at this point.

The death of signature-based scanning has been bruited about for quite some time; thus far, it has not quite come to pass. The virus writers may improve their technology, but the antivirus companies have been around for a long time and have invested a lot in their technology; its death will most likely manifest itself as a long decline rather than as a sudden cessation. To be sure, many of the rapid updates are possible only because anomaly detectors have flagged something as suspicious enough to merit analysis by humans.

Anomaly detection is at its best on systems that do the same sort of thing. An embedded device is a better setting for it than, say, a shared computer in a library or Internet cafe. Variations in usage patterns can trigger an upsurge in false positives; this is not a current technological limit but an issue inherent to the technology: by definition, anomaly detectors look for behavior that doesn’t match what has been happening. (Normal variations do happen. Consider the previous paragraph, where I quite unintentionally used the words “bruited” and “cessation.” Searching my system reveals that I virtually never use either of those words in my writing, but they both showed up here. An anomaly detector might conclude—incorrectly!—that I did not write those sentences. No, I didn’t do it consciously, either; those words just happened to jump out of my brain at the right time.)
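The word-usage example can be made concrete with a deliberately naive detector. This is a minimal sketch, not a description of any real product: the function names, the tiny baseline text, and the `min_count` threshold are all assumptions made for illustration. It builds a baseline of words seen in prior writing and flags anything in a new sample that falls outside it.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z']+", text.lower())

def build_baseline(corpus):
    """Count how often each word appears in the known-good writing."""
    return Counter(tokenize(corpus))

def anomalous_words(baseline, sample, min_count=1):
    """Return sample words seen fewer than min_count times in the baseline."""
    return sorted({w for w in tokenize(sample) if baseline[w] < min_count})

# Hypothetical baseline: a stand-in for an author's prior writing.
baseline = build_baseline(
    "the death of signature scanning has been talked about for some time "
    "and it will most likely be a long decline rather than a sudden end"
)

# A new sample that uses two words the baseline has never seen.
sample = "the death of scanning has been bruited about; a sudden cessation"
flagged = anomalous_words(baseline, sample)
print(flagged)
```

Both flagged words are false positives in the sense described above: they are unusual relative to the baseline, yet entirely legitimate. The more varied the normal behavior, the more often a detector of this kind will raise such alarms.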

Another technology that is coming into use is digitally signed files. Just how this is implemented matters a lot; thus far, performance has been mixed.

The basic notion is that an executable file can be digitally signed; this is intended to give the user assurance that it hasn’t been tampered with by a virus. Issues include who signs it, protection of the private signing keys (see Chapter 8 for more discussion of this), and when and how checking is done. Note that the limitations I describe are properties of the concepts themselves, not of their implementation.

In what I will call the “Microsoft device driver model,” many different developers have keys and certificates signed by Microsoft. (Apple’s Gatekeeper system for Mac OS X uses the same model.) Such a design offers some protection against low-end virus authors, but not against the Andromedans; there are too many trusted parties, and experience has shown that at least a few will fail to take adequate care. For that matter, MI-31 is quite capable of setting up a fake development shop and acquiring its own, very legitimate certificate. (The CIA has run its own covert airlines [M. Best 2011]; software development shops are much cheaper.)

The iOS signing model, used for iPhones, iPads, and other iToys, is very different: Apple is the sole signing party, and it nominally scrutinizes programs before approving them. There is only one private key to guard—but of course, if it’s ever compromised a tremendous amount of damage can be done. There is thus less risk from low-end attackers but more risk from the very high end. One can also question just how good a job Apple or anyone else can do at finding cleverly hidden nastiness.

Android has an interesting variant: applications have public keys; these are used to verify updates to those applications. Thus, whoever has obtained the private key for, say, Furious Avians could create fake, malicious updates to it, but not to the Nerds with Fiends game; users who had only the latter and not the former would not be at risk.
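The per-application key model can be sketched as a key-continuity check. This is a toy model under stated assumptions, not Android's actual implementation: the `UpdateChecker` class, the byte strings standing in for public keys, and the use of a SHA-256 fingerprint are all hypothetical, and the cryptographic verification of the update's signature is assumed to have happened elsewhere.

```python
import hashlib

def fingerprint(public_key: bytes) -> str:
    """Short identifier for a public key (SHA-256 of its bytes)."""
    return hashlib.sha256(public_key).hexdigest()

class UpdateChecker:
    """Toy model: each app is pinned to the key seen at first install."""

    def __init__(self):
        self.pinned = {}  # app name -> fingerprint of its signing key

    def install(self, app: str, signer_key: bytes) -> None:
        self.pinned[app] = fingerprint(signer_key)

    def accept_update(self, app: str, signer_key: bytes) -> bool:
        # Assumption: the update's signature has already been verified
        # against signer_key; only the key-continuity check is modeled.
        return self.pinned.get(app) == fingerprint(signer_key)

# Hypothetical keys; real keys would be public-key material.
avians_key = b"hypothetical Furious Avians public key"
nerds_key = b"hypothetical Nerds with Fiends public key"

# A user who has installed only Nerds with Fiends.
phone = UpdateChecker()
phone.install("Nerds with Fiends", nerds_key)

# An attacker holding the Furious Avians private key can produce updates
# that verify under avians_key, but not under nerds_key.
fake_nerds_update = phone.accept_update("Nerds with Fiends", avians_key)
fake_avians_update = phone.accept_update("Furious Avians", avians_key)
real_nerds_update = phone.accept_update("Nerds with Fiends", nerds_key)
```

In this sketch the stolen key is useless against a phone that never installed the compromised application, which is the containment property the paragraph describes.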

When the signature is checked matters a great deal. If it’s checked each time the file is loaded (e.g., on iOS), you are protected against on-disk modification by some currently running nastyware; the risk, though, is that the checking code itself might be subverted. You are still protected if the malware has achieved penetration with user privileges rather than root privileges; a variant scheme would have signature checking done at a lower layer still, perhaps by the hypervisor or what a Multics aficionado would call “Ring 0” [Organick 1972].

There is an interaction here with the different signing paradigms. In the iOS model (and assuming that the One True Key hasn’t been stolen), the attacker must either disable all checking or install a substitute verification key and re-sign all executables on the machine, a task that is quite expensive, probably prohibitively so. With the device driver model, the virus can include a signing key and use it to revalidate only those files it modifies.
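The difference between the two paradigms can be illustrated with a toy verifier. This is a sketch under loud assumptions: HMAC stands in for a real public-key signature purely so that the example runs with the standard library, and the key values and signer names are hypothetical. The point is only that a verifier trusting many signers will accept a file re-signed by any one of them.

```python
import hashlib
import hmac

def sign(key: bytes, data: bytes) -> bytes:
    # Stand-in for a public-key signature. HMAC is symmetric and is used
    # here only so the sketch needs nothing beyond the standard library.
    return hmac.new(key, data, hashlib.sha256).digest()

def verify(trusted_keys: dict, signer: str, data: bytes, sig: bytes) -> bool:
    """Accept the file only if a trusted signer's key validates it."""
    key = trusted_keys.get(signer)
    return key is not None and hmac.compare_digest(sign(key, data), sig)

# Hypothetical keys and signer names.
platform_key = b"hypothetical platform key"
attacker_key = b"hypothetical key obtained via a front company"

# Single-signer model: only the platform vendor is trusted.
single_signer = {"platform": platform_key}
# Many-signer model: every certified developer is trusted, including
# one that turns out to be hostile.
many_signers = {"platform": platform_key, "front-company": attacker_key}

# The virus modifies a program and re-signs just that file with its key.
infected = b"original program + virus"
resigned = sign(attacker_key, infected)

accepted_many = verify(many_signers, "front-company", infected, resigned)
accepted_single = verify(single_signer, "front-company", infected, resigned)
print(accepted_many, accepted_single)
```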

Some systems check signatures at installation time. This provides no protection against changes to already-installed programs. It does ensure that what the vendor shipped is what you get and protects you from infection en route. That happened with some copies of the SiN game, where some secondary download servers were apparently infected [Lemos 1998] by the CIH virus; quite possibly, it was also the root cause of the infected CDs that Microsoft shipped [Barnett 2009]. Perhaps more importantly, you’re protected against drive-by downloads, though there a great deal does depend on the implementation.
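The trade-off between install-time and load-time checking can be sketched in a few lines. As a simplifying assumption, the vendor's signature is reduced here to a trusted SHA-256 digest, and the file contents are hypothetical byte strings; a real system would verify a signature over the file rather than compare digests.

```python
import hashlib

def digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

# Assumption: the vendor's signature is modeled as a trusted digest.
shipped = b"program as shipped by the vendor"
trusted_digest = digest(shipped)

def check(data: bytes) -> bool:
    """Return True if the data matches what the vendor shipped."""
    return digest(data) == trusted_digest

# Infection en route: caught by an install-time check.
tampered_download = shipped + b" + virus added on a mirror"
install_rejected = not check(tampered_download)

# A clean copy passes the install-time check.
on_disk = shipped
installed_ok = check(on_disk)

# Later, malware already on the machine modifies the installed file.
on_disk = shipped + b" + virus added after installation"

# An install-time-only system never looks again, so the file still runs;
# a load-time check re-verifies and refuses.
runs_with_install_time_only = installed_ok
runs_with_load_time_check = check(on_disk)
```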

The ultimate utility of signed code as an antivirus defense remains to be seen. Furthermore, the benefits need to be weighed against the social costs of giving too much power to a very few—two or three—major vendors.

The other technical trend in this space is the increased use of sandboxing, running applications with fewer privileges. The notion has been around in the research community for many years. Long ago, Multics supported multiple protection rings even for user programs [Organick 1972], though few if any made effective use of it. Other, more recent work includes my own design for “sub-operating systems” [S. Ioannidis and Bellovin 2001; S. Ioannidis, Bellovin, and J. Smith 2002], a design that permitted any user to create a very large number of subusers with fewer permissions. In the commercial world, both Windows and Mac OS X use sandboxing for web browsers and some risky applications. Quite notably, Adobe has modified its popular but troubled PDF viewer to run in a sandbox on Windows Vista and later.

The benefit of a sandbox, though, is crucially dependent on two things: how much the isolated application needs to interact with the outside world, and how effectively those interactions can be policed. Consider, for example, an email message with an attached file, handled by a sandboxed mailer. If the file contains a virus, at best I might be barred from opening it—the mail sandbox should disallow execution—but at the least, should the file be executed, it would run with fewer permissions than even the mailer itself. On the other hand, if the attachment is a document I’m expected to edit I want to be able to open it normally; then, however, I take the risk that that document wasn’t really sent by my colleague but was in fact generated by a virus on her machine.

The ultimate in sandboxing is the virtual machine (VM) (Section 10.2). As I’ve noted elsewhere [Bellovin 2006b], assuming that you’re safe because the malware is running in its own VM is like letting your enemy put a 1U-height server into racks in your data center. Would you trust such a machine on your LAN? Even without the intended and expected interactions, you would probably (and rightly) consider that to be a serious risk. A VM is no better—and applications generally do need to interact with other parts of your system.

In essence, a sandbox can do two things: it can reduce both the effective instruction set and the effective target environment of programs executed within it. Taken together, these properties can drastically reduce or even stop the spread of viruses and worms.
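These two properties can be expressed as a toy policy check. This is a sketch only, with hypothetical operation names and paths; a real sandbox enforces such rules in the operating system or hypervisor rather than in application code, and this version does not resolve `..` components or symbolic links.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath

@dataclass(frozen=True)
class Sandbox:
    allowed_ops: frozenset   # the reduced "effective instruction set"
    visible_roots: tuple     # the reduced "effective target environment"

    def permits(self, op: str, path: str) -> bool:
        """Allow op on path only if both restrictions are satisfied."""
        p = PurePosixPath(path)
        # Note: no normalization of ".." or symlinks is attempted here.
        visible = any(
            PurePosixPath(root) == p or PurePosixPath(root) in p.parents
            for root in self.visible_roots
        )
        return op in self.allowed_ops and visible

# Hypothetical policy for a sandboxed mailer: it may read and write its
# own mail store, but it may not execute anything or see other files.
mailer = Sandbox(frozenset({"read", "write"}), ("/home/user/Mail",))

can_read_mail = mailer.permits("read", "/home/user/Mail/inbox/msg1")
can_run_attachment = mailer.permits("execute", "/home/user/Mail/inbox/a.exe")
can_touch_system = mailer.permits("write", "/usr/bin/ls")
```

Removing `execute` narrows what code inside the sandbox can do; restricting the visible roots narrows what it can do it to.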

Should you run antivirus software? For generic desktop systems, the answer is probably yes. It’s relatively cheap protection and is usually trouble free. Similarly, server or firewall-resident scanners can block inbound malware before it reaches your users. Be sure, though, that your environment and policies are such that definitions are regularly updated. As a corollary, it’s wasteful on most embedded systems, simply because of the lack of any regular update mechanism. If an attacker can persuade such a device to download and run some file, you probably have bigger architectural problems.