Chapter 2. Thinking About Security

“Think, youth, think! For know, Lensman, that upon the clarity of your thought and upon the trueness of your perception depends the whole future of your Patrol and of your Civilization; more so now by far than at any time in the past.”

Mentor of Arisia in Second Stage Lensman

—E. E. “DOC” SMITH

2.1 The Security Mindset

I’ve often remarked that the best thing about my job is that I get to think evil thoughts and still feel virtuous about it. It isn’t as easy as it sounds; for most people, it’s remarkably hard to think like a bad guy. Nevertheless, the ability to do so is at the heart of what security people have to do.

Bruce Schneier explained it very well in an essay a few years back [Schneier 2008]:

Uncle Milton Industries has been selling ant farms to children since 1956. Some years ago, I remember opening one up with a friend. There were no actual ants included in the box. Instead, there was a card that you filled in with your address, and the company would mail you some ants. My friend expressed surprise that you could get ants sent to you in the mail.

I replied: “What’s really interesting is that these people will send a tube of live ants to anyone you tell them to.”

In other words, a security person will look at a mechanism and think, “What else can this do? What can it do that will serve my needs rather than the intended purpose?”

One way to “think sideways” is to consider every process as a series of steps. Each step, in turn, depends on a person or gadget accepting a series of inputs. Ask yourself this: what if one of those inputs was wrong or corrupted? Would something useful happen? Do I have the ability to cause that input to be wrong? Can the defender spot the error and do something about it?

Look again at Bruce’s story. Someone at the company will read the card and send a tube of ants somewhere. However, one of the inputs—the address to which to send the bugs—is under the control of the person who filled out the card, and thus can trivially be forged.

There’s another input here, though, one Bruce didn’t address. Can you spot it? I’ll wait. . .

Most of you got it; that’s great. For the rest: how does the company validate that the card is legitimate? That is, how do they ensure that they only send the ants to people who have actually purchased the product?

Once, that would have been easy; the card was undoubtedly a printed form, very easily distinguished from something produced by even the best typewriters. Forging a printed document would require the connivance of a print shop; that’s too much trouble for the rather trivial benefit. Today, though, just about everyone has very easy access to word processors, lots of fonts, high-quality printers, and so on. If you put in a very modest amount of effort, you can reproduce the form pretty easily. For that matter, a decent scanner can do the job. (Note that easy forgery is a problem for other important pieces of paper, too; airplane boarding passes have been forged [Soghoian 2007].)

The next barrier is probably the cardboard the card is printed on. This isn’t as trivial, but something close enough is not very hard to obtain.

To a computer pro, the fixes are obvious: put a serial number on each card and look it up in a database of valid, unused ant requests. Add some sort of security seal, such as a hologram, on the card [Simske et al. 2008]. Ask for a copy of the purchase receipt, and perhaps the UPC bar code from the box. For that matter, creating hard-to-forge pieces of paper is an old problem: we call it paper money. Problem solved?
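
The serial-number fix can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical table `ant_cards` and hypothetical helpers `issue_cards` and `redeem_card`; it is not how any real company handles its cards. The one design point worth noting is that a single conditional UPDATE both checks and consumes the serial, so the same card cannot be redeemed twice.

```python
import sqlite3


def issue_cards(serials):
    """Create an in-memory table of issued (and still unused) card serials."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE ant_cards (serial TEXT PRIMARY KEY, used INTEGER NOT NULL DEFAULT 0)"
    )
    conn.executemany("INSERT INTO ant_cards (serial) VALUES (?)", [(s,) for s in serials])
    conn.commit()
    return conn


def redeem_card(conn, serial):
    """Return True only if the serial was issued and has not been redeemed yet.

    The WHERE clause checks validity and unused status, and the SET marks the
    card used, in one statement; rowcount tells us whether a row matched.
    """
    cur = conn.execute(
        "UPDATE ant_cards SET used = 1 WHERE serial = ? AND used = 0", (serial,)
    )
    conn.commit()
    return cur.rowcount == 1
```

With `conn = issue_cards(["A1001", "A1002"])`, the first `redeem_card(conn, "A1001")` succeeds and a second attempt with the same serial fails, as does an unissued serial. Sequential serials like these would be easy to guess; a real scheme would presumably use random ones.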

Not quite—we don’t even know if there was a problem. The defensive technologies cost money and time; are they worth it? What is the rate of forged requests for ants? Is the population of immature forgers—who else would be motivated to send fake ant delivery requests?—large enough to matter? More precisely, is the cost of the defense more or less than the losses from this attack? This is a crucial point that every security person should memorize and repeat daily: the purpose of security is not to increase security; rather, its purpose is to prevent losses. Any unnecessary expenditure on security is itself a net loss.
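
The comparison can be made concrete as expected annual loss. The sketch below is only an illustration of the arithmetic; the function name and every figure in the example are hypothetical, not data about ant farms.

```python
def defense_worthwhile(defense_cost, attacks_per_year, loss_per_attack, fraction_stopped):
    """Return True if a defense costs less per year than the losses it prevents.

    defense_cost      -- annual cost of the defense
    attacks_per_year  -- estimated number of attacks per year
    loss_per_attack   -- estimated loss from each successful attack
    fraction_stopped  -- fraction of those attacks the defense would block (0..1)
    """
    expected_loss_prevented = attacks_per_year * loss_per_attack * fraction_stopped
    return defense_cost < expected_loss_prevented
```

For example, a hypothetical hologram costing $5,000 a year that stops 90% of 200 forged requests, each costing $3 in ants and postage, prevents only $540 of loss, so `defense_worthwhile(5000, 200, 3.0, 0.9)` is `False`.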

There’s one more lesson to take from this example: was the old security measure—the difficulty of forgery—rendered obsolete by improvements in technology? The computer field isn’t static; giving yesterday’s answers to today’s questions is often a recipe for disaster.

2.2 Know Your Goals

What are you trying to do? What are your security goals? The questions may seem trite, but they’re not. Answering them incorrectly will lead you to spend too much, and on the wrong things. (Spending too little generally results from being in denial about the threat.)

All too often, insecurity is treated as the equivalent of being in a state of sin. Being hacked is not perceived as the result of a misjudgment or of being outsmarted by an adversary; rather, it’s seen as divine punishment for a grievous moral failing. The people responsible didn’t just err; they’re fallen souls to be pitied and/or ostracized. And of course that sort of thing can’t happen to us, because we’re fine, upstanding folk who have the blessing of the computer deity—$DEITY, in the old Unix-style joke—of our choice.

Needless to say, I don’t buy any of that. First and foremost, security is an economic decision. You are trying to protect certain assets, tangible or not; these have a certain monetary value. Your goal is to spend less protecting them than they’re worth. If you’re uncomfortable trying to attach a cost to things like national honor or human lives, look at it this way: what is the best protection you can get for those by spending a given amount of money? Can you save more lives by spending the money on, say, antivirus software or by increasing the rate of automotive seat belt use? A hack may be a more spectacular failure (and you’re entitled to take bad publicity into account in calculating your damages), but it’s not a sin, and you are not condemned to eternal damnation for getting it wrong.

What should you protect? Not only is there no single answer, but if you’re reading this book you’re probably not the right person to answer it, though you can and should contribute. The proper answer for any given system revolves around the worst possible damage that could occur if your worst enemy had control of that computer. That, in turn, is very rarely limited to the street price of a replacement computer. Consider what supposedly happened in 1982: the “most massive non-nuclear explosion ever recorded,” because a Soviet pipeline was controlled by software the CIA had sabotaged before it was stolen from a Canadian company [R. A. Clarke and Knake 2010; Hollis 2011; Reed 2004]. That’s an extreme case, of course, but it doesn’t take a Hollywood scriptwriter’s imagination to come up with equally crazy scenarios. (This story may also be the result of someone’s imagination. Most published reports seem to derive from Reed’s original account [2004]. Reed was an insider and may have had access to the full story, but it’s hard to understand why anything was declassified unless it was deliberately leaked—or fabricated—to warn potential enemies about the US government’s prowess in cyberweaponry. The details and quote given here are from [R. A. Clarke and Knake 2010, pp. 92–93]. Zetter, who has done a lot of research on the topic, doubts that it happened [Zetter 2014].) Malware designed to steal confidential business documents, to aid a rival? It’s happened [Harper 2013; B. Sullivan 2005]. A worm that blocks electronic funds transfers, so that your company appears to be a deadbeat that doesn’t pay its bills? Why not? (If you think it sounds far-fetched, see [Markoff and Shanker 2009]: the United States seriously contemplated hacking the Iraqi banking system to deny Hussein funds to pay his troops, buy munitions, and so on. “‘We knew we could pull it off—we had the tools,’ said one senior official who worked at the Pentagon when the highly classified plan was developed.”) All of these could happen. However. . .

The roof of your factory is almost certainly not armored to resist a meteor strike. When selecting the site for your office building, you probably didn’t worry about the proximity of a natural gas pipeline that a backhoe might happen to puncture. Most likely, you’re not even expecting spies to hide in the false ceiling above the reception area, so they can sneak past a locked door in the dead of night. All of these threats, cyber and otherwise, are possible; in general, though, they’re so improbable that they’re not worth worrying about—which is precisely my point.

In most situations, the proper defense posture is an economic question. There are no passages in Leviticus prescribing exile from your family because your system was hacked. You are not a lesser human being if your laptop suffers a virus infestation. You may, however, be a bad system administrator if you don’t have good backups of that laptop. Not using encryption when connecting from a public hotspot is negligent. Ignoring critical vendor patches is foolish. And of course, you should know how to recover from the loss of any computer system, whether due to hackers or because a wandering cosmic ray has fried its disk drive controller.

In general, one should eschew paranoia and embrace professionalism. If you run the network for an X-ray laser battery used for anti-UFO defense, perhaps you should worry about Andromedan-instigated meteor strikes aimed at your router complex. On the other hand, if the network you’re trying to protect controls the cash registers for a large chain of jewelry stores, MI-31 isn’t a big concern, but high-end cyberthieves might be. Regardless, you need to decide: what are you trying to protect, what are you leaving to insurance, and what is too improbable to care about? You cannot deploy proper defenses without going through this exercise. Brainstorming to come up with possible threats is the easy part; deciding which ones are realistic requires expertise.

Here are two rules of thumb: first, distinguish between menaces and nuisances; second, realize the scope of some protection efforts. Let’s consider some concrete examples.

Imagine a piece of sophisticated malware that increases the salary reported to the tax authorities for top executives, while leaving intact the amounts printed on their paystubs. When the executives file their tax returns, the numbers they report will be significantly lower than what the authorities were told, triggering an audit. What will happen? Most likely, it will be a non-event. A passel of lawyers and accountants will spend some time explaining the situation; in the end, there will be too much other evidence—contracts, annual reports, bank records, other computer systems, minutes of the Board of Directors’ compensation committee, perhaps discussions with an outside consulting firm that knows the competitive landscape—to make any charges stick. In other words, this is a nuisance attack. By contrast, malware that damages expensive, hard-to-replace equipment—think Stuxnet or the “Aurora” test [Meserve 2007; Zetter 2014] that destroyed an electrical generator—is a serious threat.

A more plausible scenario is malware that tries to steal important corporate secrets. There are enough apparent cases of this occurring that it’s a plausible threat, with attackers ranging from random virus writers to national intelligence services and victims ranging from paint manufacturers to defense contractors [NCIX 2011]. Protecting important secrets is hard, though; they’re rarely in a single (and hence easy to isolate) location. Nevertheless, it’s often worth the effort. Even here, some analysis is necessary: can your enemies actually use your secrets? Unless your enemy has at least the tacit support of a foreign government or a very large company, efforts that require a large capital outlay may be beyond them—and support from competitors isn’t always forthcoming, much to the dismay of some bad guys [Domin 2007].

There is no substitute here for careful analysis. You need to evaluate your assets, estimate what it will take to protect them, guess at the likelihood and cost of a penetration, and allocate your resources accordingly.

One special subcase of the protection question deserves a more careful look: should you protect the network or the hosts? We are often misled when we call the field “network security”; more often, saying that network security is about protecting the network is like saying that highway robbery means that a piece of pavement has been stolen. The network, like the highway, is simply the conduit the attackers use.

With a couple of exceptions, attackers are uninterested in the network itself. Connectivity is ubiquitous today; few attackers need more per se. They often want hosts with good connectivity, especially for spamming and launching DDoS attacks, but the pipe itself doesn’t need special protection; protect the hosts and the pipe will be fine.

There are some important special cases. The most obvious is an ISP: its purpose is to provide connectivity, so attacks on its network cut at the heart of its business. The main threat is DDoS attacks; note carefully that an ISP has to mitigate attacks coming from hosts it has no control over and which may not even be directly connected to its own network.

There are two other special cases worth mentioning; both affect ISPs and customers. The first is access networks, those used to connect a site to an ISP; the second is networks that have some unusual characteristic, such as very low bandwidth where every extra byte can cause pain. In these situations, even modest attacks on the infrastructure can lead to a complete denial of service. As before, the network operator will have little or no direct control over the hosts that are causing trouble; consequently, its responses (and its response plans) have to be in terms of network operations.

Don’t confuse the question of what to protect with where the defenses should be. Protecting hosts might best be done with network firewalls or network-resident intrusion prevention systems; conversely, the best way to deal with insecure networks is often to install encryption software on the hosts.

There’s an important special case that’s almost a hybrid: the “cloud.” What special precautions should you take to protect your cloud resources? The issue is discussed in more detail in Chapter 10; for now, I’ll note that “Is the cloud secure?” is the wrong question; more precisely, it’s a question that has a trivial but useless answer: “No, of course not.” A better question is whether the cloud is secure enough, whether using the cloud is better or worse than doing it yourself.

2.3 Security as a Systems Problem

Changing one physical law is like trying to eat one peanut.

“The Theory and Practice of Teleportation”

—LARRY NIVEN

Here’s a quick security quiz. Suppose you want to steal some information from a particular laptop computer belonging to the CEO of a competitor. Would you:

(a) Find a new 0-day bug in JPG processing and mail an infected picture to the CEO?

(b) Find an old bug in JPG processing and mail a picture that exploits it to the CEO?

(c) Lure the user to a virus-infected web site that you control (a so-called “watering hole” attack)?

(d) Visit the person’s office on some pretext, and slip a booby-trapped USB stick into the machine?

(e) Wait until her laptop is brought across a national border, and bribe or otherwise induce the customs officer to “inspect” it for you?

(f) Bribe her secretary to do the same thing without waiting for an international trip?

(g) Bribe the janitor?

(h) Wait till she takes it home, and attack it via her less-protected home network? The NSA considers that a threat to its own employees.1

1. “Best Practices for Keeping Your Home Network Secure,” https://www.nsa.gov/ia/_files/factsheets/I43V_Slick_Sheets/Slicksheet_BestPracticesForKeepingYourHomeNetworkSecure.pdf.

(i) Penetrate the file server from which the systems administrator distributes patches and antivirus updates to the corporation, and plant your malware there?

(j) Install a rogue DHCP server or do ARP-spoofing to divert traffic from that machine through one of yours, so you can tamper with downloaded content?

(k) Follow her to the airport, and create a rogue access point to capture her traffic?

(l) Cryptanalyze the encrypted connection to the corporate VPN from her hotel?

(m) Infect someone else in the company with a tailored virus, and hope that it spreads to the executive offices?

(n) Send her a spear-phishing email to lure her to a bogus corporate web site; under the assumption that she uses the same password for it and for her laptop, connect to its file-sharing service?

(o) Wait until she’s giving a talk, complete with slides, and when she’s distracted talking to attendees after the talk steal the laptop?

Obviously, some of these are far-fetched. Equally obviously, all of them can work under certain circumstances. (That last one appears to have happened to the CEO of Qualcomm.2) In fact, I’ve omitted some attacks that have worked in practice. How should you prepare your defenses?

2. “Qualcomm Secrets Vanish with Laptop,” http://www.infosyssec.com/securitynews/0009/2776.html.

The point is that you can’t have blinders on, even when you’re only trying to protect a single asset. There are many avenues for attack; you have to watch them all. Furthermore, when you have a complex system, the real risk can come from a sequence of failures. We told one such story in Firewalls [Cheswick and Bellovin 1994, pp. 8–9]: the production gateway had failed during a holiday weekend, the operator added a guest account to facilitate diagnosis by a backup expert, the account was neither protected nor deleted—and a joy hacker found it before the weekend was over. In another incident, I was part of a security audit for a product when we learned that one of the developers had been arrested for hacking. We wondered whether a back door had been inserted into the code base; when we looked, we found two holes. One, I learned, was an error by another developer (ironically, she was part of the audit team). The other, though, gave us pause: it took a common configuration error and two independent bugs—for one of which the comments didn’t agree with the code—to create the problem. To this day, I do not know whether we spotted deliberate sabotage; on odd-numbered days, I think so, but I wrote this on an even-numbered day.

Complex systems fail in complex ways! More or less by definition, it isn’t possible to be aware of all of the possible interactions. Worse yet, the problem is dynamic; a change in software or configurations in one part of a network can lead to a security problem elsewhere. Consider the case of an ordinary, simple-minded firewall of the type that might have existed around 1995. It may have been secure, but two unrelated technological developments, each harmless by itself, combined to cause a problem [D. M. Martin, Rajagopalan, and Rubin 1997].

The first was the development of a transparent proxy for the File Transfer Protocol (FTP) [Postel and Reynolds 1985]. By default, FTP requires an inbound call from the server to the client to transmit the actual data; the client tells the server which host and port to contact by sending a PORT command over the control channel. Normally, of course, a firewall won’t permit inbound calls, even though these particular ones are harmless (or as harmless as sending or receiving data ever is). The solution was a smarter firewall, one that examined the command stream, learned which port was to be used for the FTP transfer, and temporarily created a rule to permit it. This solved the functionality problem without creating any security holes.
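
A minimal sketch of that "smarter firewall" logic may make the mechanism concrete. It assumes the RFC 959 form of the command, `PORT h1,h2,h3,h4,p1,p2`, where the data port is p1 × 256 + p2; the `TempRule` type and both function names are hypothetical, and real firewalls of the era were of course not written this way.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TempRule:
    """Hypothetical one-shot rule admitting a single inbound data connection."""
    src_ip: str    # the FTP server expected to call back
    dst_ip: str    # the inside host named in the PORT command
    dst_port: int  # the port named in the PORT command


def parse_port_command(line):
    """Parse 'PORT h1,h2,h3,h4,p1,p2' (RFC 959); return (host, port) or None."""
    verb, _, arg = line.strip().partition(" ")
    if verb.upper() != "PORT":
        return None
    fields = [int(x) for x in arg.split(",")]
    if len(fields) != 6 or not all(0 <= f <= 255 for f in fields):
        raise ValueError("malformed PORT argument: " + arg)
    host = ".".join(str(f) for f in fields[:4])
    port = fields[4] * 256 + fields[5]  # p1 is the high byte, p2 the low byte
    return host, port


def inspect_control_line(line, server_ip):
    """Naive stateful filtering of an outbound FTP control-channel line.

    Any PORT command opens a temporary inbound pinhole. Nothing here checks
    which program generated the command or whether the named port is really
    an FTP data port.
    """
    parsed = parse_port_command(line)
    if parsed is None:
        return None
    host, port = parsed
    return TempRule(src_ip=server_ip, dst_ip=host, dst_port=port)
```

For instance, `PORT 10,0,0,5,4,1` yields a rule admitting the server to 10.0.0.5 on port 1025, while `PORT 10,0,0,5,0,23` would just as readily open port 23. The design trusts whoever speaks FTP from the inside, which is exactly the assumption that matters here.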

The second technological development was the deployment of Java in web browsers [Arnold and Gosling 1996; Lindholm and Yellin 1996]. This was also supposed to be safe; a variety of restrictions were imposed to protect users [McGraw and Felten 1999]. One such restriction was on networking: a Java applet could do network I/O, but only back to the host from which it was downloaded. Of course, that included networking using FTP. The problem is now clear: a malicious applet could speak FTP to its home server and, with a suitably crafted PORT command, cause the firewall to allow inbound connections to arbitrary ports on protected hosts.

The philosophical failure that gave rise to this situation requires deeper thought. First, FTP handling in the firewall was based on the assumption, common to all firewall schemes, that only good guys are on the inside; thus, malicious FTP requests could not occur. A hostile applet, however, was a malicious non-human actor, a scenario unanticipated by the firewall designers. Conversely, the designers of the Java security model wanted to permit as much functionality as they could without endangering users; firewalls were not common in 1995, let alone ones that handled FTP that way, so it’s not surprising that Java didn’t handle this scenario.

Amusingly enough, the problem may now be moot. The rise of the web has made FTP servers far less common; disabling support for it on a firewall is often reasonable. Furthermore, if users encrypt their FTP command channels [Ford-Hutchinson 2005], the firewall won’t be able to see the port numbers and hence won’t be able to open ports, even for benign reasons. Four separate developments—the creation of smarter firewalls, the deployment of Java, the rise of the web and the decline of FTP, and the standardization of encrypted FTP—combined to first cause and then defang a security threat.

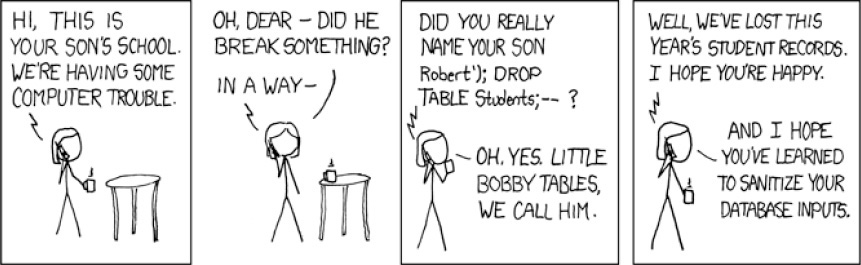

Although protecting an enterprise network is complicated, there’s a very important special case that is itself almost as complicated: protecting a “single” function that is actually implemented as many interconnected computers. Consider a typical e-commerce web site. To the customer, it appears to be a single machine; those who’ve worked for such companies are already laughing at that notion. At a minimum, the web server is just the front end for a large, back-end database; more commonly, there are multiple back-end databases (inventory, customer profiles, order status, sales tax rates, gift cards, and more), links to customer care (itself a complex setup, especially if it’s outsourced), the network operations group, external content providers, developer sites, system administration links, and more. Any one of these can be the conduit for a break-in. SQL injection attacks (Figure 2.1) occur when the web server is incautious about what it passes to a database. Customer care personnel have—and must have—vast powers to correct apparently erroneous entries in assorted databases; penetration of their machines can have very serious consequences. System administrators can do more or less anything; it doesn’t take much imagination to see the threats there. Note carefully that firewalls are of minimal benefit in such a setup; the biggest risks are often from protocols that the firewall has to allow. SQL injection attacks are a good example; they’re passed to the web server via HTTP, and thence on to the database as part of a standard, apparently normal query.

Figure 2.1: SQL injection attacks.
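
To make the database side of an SQL injection attack concrete, here is a minimal sketch using Python’s built-in `sqlite3` module. The `orders` table and both functions are hypothetical. The unsafe version pastes the customer name into the SQL text, so a name containing quotes rewrites the query; the safer version passes it as a bound parameter, which the database treats purely as data. Parameterized queries are a standard mitigation, though not a complete defense on their own.

```python
import sqlite3


def setup():
    """Build a tiny in-memory orders table for the demonstration."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer TEXT, item TEXT)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [("alice", "ant farm"), ("bob", "telescope")],
    )
    return conn


def orders_unsafe(conn, customer):
    # BAD: user input becomes part of the SQL text itself.
    query = "SELECT item FROM orders WHERE customer = '" + customer + "'"
    return [row[0] for row in conn.execute(query)]


def orders_safe(conn, customer):
    # Better: the ? placeholder keeps the input as data; it is never parsed as SQL.
    return [row[0] for row in conn.execute(
        "SELECT item FROM orders WHERE customer = ?", (customer,))]
```

Given the input `x' OR '1'='1`, the unsafe query becomes `... WHERE customer = 'x' OR '1'='1'` and returns every customer’s orders; the safe version simply finds no customer by that odd name and returns an empty list. Note that both requests look like perfectly ordinary HTTP traffic to a firewall.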

2.4 Thinking Like the Enemy

One of the hardest things about practicing security is that you have to discard all of your childhood training. You were, most likely, taught to flee from evil thoughts; there’s no point to planning how to do something wrong unless you’re actually intending to do it, and of course you wouldn’t ever want to do something you shouldn’t.

Being a security professional turns that around. I noted at the start of this chapter that my job entails thinking evil thoughts, albeit in the service of good; however, it’s not an easy thing to do. It is, nevertheless, essential. How does a bad guy think?

I’ve already discussed the obvious starting point: identifying your assets. Identifying weak points, beyond the checklist that every security book gives—buffer overflows, SQL injection and other unchecked inputs, password guessing, and more—is harder. How can you spot a new flaw in a new setup? If you’re developing a completely different kind of Internet behavior (Peer of the Realm to Peer of the Realm bilesharing?), how can you figure out where the risks are?

By definition, there’s no cookbook recipe. To a first approximation, though, the process is straightforward: attackers violate boundaries, so defenses have to enforce them. For example, suppose that a particular module requires that its input messages contain only the letters A, D, F, G, V, and X. It’s easy to guess that trouble could result if different characters appeared; the question is how to prevent that. The two obvious fixes are input filtering in the receiving module or output filtering in the sending one. The right answer, though, is both, plus logging. Output filtering, of course, ensures that the upstream module is doing the right thing. Indeed, it may be a natural code path: call the communications routine, and let it encode the message using the six permissible characters. Nothing can go wrong—except that the bad guy will look at other ways to send Q, Z, and even nastier letters like J and þ. Can a random Internet node send a message to the picky module? What about a subverted node on your intranet? Another module in the same system? Can the attacker get access to some LAN that can reach the right point? Is there some minor, non-sensitive module that can be subverted and tricked into sending garbage? None of these things should happen, of course, but a defensive analyst has to spot them all—and since spotting them all may be impossible, you want input filtering, plus logging to let you know that somewhere, your other protections have fallen short.
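
The "both, plus logging" advice can be sketched as follows. This is a minimal illustration under stated assumptions: the function names, the logger name `picky`, and the source labels are all hypothetical. The sender refuses to emit disallowed characters; the receiver independently rejects them and logs each rejection, because a rejection at that point means some upstream protection has already failed.

```python
import logging

ALLOWED = frozenset("ADFGVX")  # the picky module's permitted characters
log = logging.getLogger("picky")


def check_outgoing(message):
    """Sender side (output filtering): refuse to emit disallowed characters."""
    bad = set(message) - ALLOWED
    if bad:
        raise ValueError("refusing to send characters: " + "".join(sorted(bad)))
    return message


def accept_message(message, source):
    """Receiver side (input filtering plus logging).

    Returns False for any message containing a disallowed character and
    records where it came from, since it should never have arrived at all.
    """
    bad = set(message) - ALLOWED
    if bad:
        log.warning("rejected message from %s: bad chars %r", source, "".join(sorted(bad)))
        return False
    return True
```

The receiver’s check does not care whether the offending message came from the Internet, a subverted intranet node, or a sibling module; that independence is the point of filtering on both sides.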

There’s another lesson we can learn from that scenario: the more moving parts a system has, the harder it is to analyze. Quite simply, if code doesn’t exist, it can’t be subverted. As always, complexity is your enemy. This applies within modules, too, of course; a web server is vastly more complex, and hence more likely to contain security holes, than a simple authentication server. Thus, even though an authentication server contains more sensitive data, it is less likely to be the weak point in your architecture.

When trying to emulate the enemy, the most important single question is where the weak points are, that is, which system components are most likely to be penetrated. There is no absolute way to measure this, but there are a few rules of thumb:

• A module that processes input from the outside is more vulnerable.

• A privileged module is more likely to be targeted.

• All other things being equal, a more complex module or system is more likely to have security flaws.

• The richer the input language a module accepts, the more likely it is that there are parsing problems.

The question is addressed more fully in Chapter 11.

Don’t neglect the human element. True, only MI-31 is likely to suborn an employee, but someone with a grudge, real or imagined, can do a lot of harm. What would you do if your boss came to you and said, “We’re about to fire Chris,” when Chris is a system administrator who knows all of the passwords and vulnerabilities? How about “The union is about to go on strike; can we prevent them from creating an electronic picket line?” “There’s going to be a large layoff announced tomorrow; can we protect our systems?” All of these are real questions (I’ve been asked all three). Distrusting everyone you work with is a sure route to low morale (yours and theirs); trusting everyone is an equally sure route to trouble, especially when the stakes are high or when people are stressed. Put yourself in a given role—again, you’re emulating a role, not a person—and ask what damage you can do and how you can prevent it. The answer, by the way, may be procedural rather than technical. For example, if your analysis indicates that bad things could happen if a fake user were added to the system, have a separate group audit the new user list against, say, a database of new employees.
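
The procedural audit suggested above reduces to a set difference. A minimal sketch, assuming both lists are available as plain user IDs; the function name and example IDs are hypothetical, and in practice the lists would come from the directory service and the HR database, pulled by a group independent of the administrators who create accounts.

```python
def unexplained_accounts(new_accounts, new_hires):
    """Return accounts created this period that match no new-employee record.

    Anything returned is not necessarily malicious (service accounts, for
    instance), but each one needs an explanation.
    """
    return sorted(set(new_accounts) - set(new_hires))
```

For example, `unexplained_accounts(["jsmith", "mlee", "svc-backup2"], ["jsmith", "mlee"])` flags `svc-backup2` for follow-up.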

Conti and Caroland have another good pedagogical idea [2011]: teach students to cheat. They gave a deliberately unfair exam, which elicited the predictable (and justifiable) complaints. They told the students they were allowed to cheat, but the usual penalty—failing—would apply if and only if they were caught. The response was magnificently creative, which is what they wanted. Breaking security is a matter of not following the rules; people who don’t know how to do that can’t anticipate the enemy properly. Their conclusion is quite correct:

Teach yourself and your students to cheat. We’ve always been taught to color inside the lines, stick to the rules, and never, ever, cheat. In seeking cyber security, we must drop that mindset. It is difficult to defeat a creative and determined adversary who must find only a single flaw among myriad defensive measures to be successful. We must not tie our hands, and our intellects, at the same time. If we truly wish to create the best possible information security professionals, being able to think like an adversary is an essential skill. Cheating exercises provide long term remembrance, teach students how to effectively evaluate a system, and motivate them to think imaginatively. Cheating will challenge students’ assumptions about security and the trust models they envision. Some will find the process uncomfortable. That is OK and by design. For it is only by learning the thought processes of our adversaries that we can hope to unleash the creative thinking needed to build the best secure systems, become effective at red teaming and penetration testing, defend against attacks, and conduct ethical hacking activities.

It pays to keep up with reporting on what the bad guys are doing. The trade press is useful but tends to be late to the game; you want to be ahead of the curve. Focus first on the specific things bad guys want. If you think you’d be targeted by the Andromedans, you should probably talk to your country’s counterintelligence service. (Don’t skip this step because you’re a defense contractor and you know what MI-31 wants; their methods—and those include compromise of intermediate targets—are of interest as well; the counterintelligence folks want to know what’s going on.)

The changes in target selection over the years have been quite striking. In general, the hackers have been ahead of the bulk of the security community, spotting opportunities well before most defenders realized there was a problem. Mind you, the possibility of these attacks was always acknowledged, and there were always some people warning of them, but too many experts assumed a static threat model.

The first notable shift from pure joy hacking happened in late 1993; advisories described it as “ongoing network monitoring attacks” on plaintext passwords.3 What was more interesting, if less reported, was that a number of ISPs had workstations directly connected to backbone links. Given the LAN technology of the time—unswitched Ethernet—any such machine, if penetrated, could be turned into an eavesdropping station. It remains unclear how the attackers knew of the opportunity; the existence of such well-located machines was not widely known. The essence of the incident, though, is that the good guys did not understand the security implications of the placement of those machines; the bad guys did.

3. “CERT Advisory CA-1994-01 Ongoing Network Monitoring Attacks,” http://www.cert.org/advisories/CA-1994-01.html.

Attackers’ target selection improved over the years, notably in the attention paid to DNS servers, but the next big change happened around 2003. Suddenly, splashy worms that clogged the Internet for no particular reason more or less stopped happening; instead, starting with the Sobig virus [Roberts 2003], the malware acquired a pecuniary motive: sending spam. The virus writers had formed an alliance with the spammers; the latter paid the former for an enhanced ability to clog our inboxes with their lovely missives. It took quite a while for the good guys to understand what had happened, that infecting random PCs was no longer just to allow the perpetrators to cut another notch into their monitors. Again, the bad guys “thought differently.” This was followed by the widespread appearance of phishing web sites and keystroke loggers. Financial gain had become the overwhelming driver for attacks on the Internet.

The next change occurred shortly afterwards, with the Titan Rain [Thornburgh 2005] attacks. Widely attributed to China, these appeared to be targeted espionage attacks against US government and defense-contractor sites. The concept of cyberespionage isn’t new; indeed, a real attempt goes back to the early days of the Internet [Stoll 1988; Stoll 1989]. But its use on a large scale was still a surprise. Today, we may have moved into another era, of high-quality, militarized malware (Stuxnet, Duqu, and Flame) generally attributed to major governments’ cyberwarfare units [Goodin 2012b; Markoff 2011b; Sanger 2012; Zetter 2014].

What happened? What is the common thread in all of these incidents? With the exception of the cryptanalytic element in Flame [Fillinger 2013; Goodin 2012b; Zetter 2014], there was nothing particularly original or brilliant about the attacks; they’re more the product of hard work. Their import is that the attackers realized the significance of changes in what was on the Internet. It wasn’t possible to attack, say, the US military in 1990, because except for a very few research labs, it wasn’t online. When it was online, the hackers struck.

The essence of these incidents was that the attackers thought more clearly about targets and opportunities than the defenders did. In some sense, that’s understandable: it’s hard to get management buy-in (and funding) to defend against attacks that have never before been a real threat. That doesn’t excuse it, though. There’s an old Navy saying that there are two types of ships, submarines and targets. The same can be said of cyberspace: there are two types of software, malware and targets, and the good guys are blithely building more targets. Will the next unexpected step be cyberwarfare? That’s a very complex topic, well beyond the scope of this book. While I don’t think that the worst scenarios one sees in the press are credible, I do think there’s significantly more potential risk than some would have you believe. The important point here comes from the title of this section: one has to think “outside the box” about next year’s targets. If you deploy a brand-new service, what are the non-obvious ways to pervert it?

Beyond target selection, defenders have to think about how their systems are exploited. At the architectural stage, pay more attention to longer-term trends than to the bug du jour. Eavesdropping, SQL injection attacks, buffer overflows, and the like have been with us for decades; they’ll always need to be guarded against. A specific vulnerability in version e^(πi) of some module may be of interest operationally, but dealing with it poses a complex problem: there’s often little you can do save to monitor more intensively until you get a patch from your vendor. Is availability of your service important enough that you should take the risk of leaving it up? Only you can answer that question, but be sure you know there’s a question that needs answering.

Consider it from the bad guy’s perspective, though. Suppose you’re a hacker, and you’ve obtained or written some code to exploit a new vulnerability. What do you do with it? Attack one target? Many targets? Wait a while and see if it really works for others? Some attackers want instant gratification: what can I steal now? Others, especially the more sophisticated ones, take a longer-range view: they’ll use their new code to make initial penetrations of interesting targets, even if they can’t do anything useful immediately, and plant a back door so they can return later.