Chapter 3. Threat Models

“And by the way, we are belittling our opponents and building up a disastrous overconfidence in ourselves by calling them pirates. They are not—they can’t be. Boskonia must be more than a race or a system—it is very probably a galaxy-wide culture. It is an absolute despotism, holding its authority by means of a rigid system of rewards and punishments. In our eyes it is fundamentally wrong, but it works—how it works! It is organized just as we are, and is apparently as strong in bases, vessels, and personnel.”

Kimball Kinnison in Galactic Patrol

—E. E. “DOC” SMITH

3.1 Who’s Your Enemy?

The correct answer to most simple security questions is “it depends.” Security isn’t a matter of absolutes; it’s a matter of picking the best set of strategies given assorted constraints and objectives.

Suppose you’re a security consultant. You visit some client and are asked whether you can secure their systems. The first question you should ask is always the same: “What are you trying to protect, and against whom?” The defenses that suffice against the CEO’s third grader’s best frenemy will likely be insufficient against a spammer; the defenses that suffice against a spammer won’t keep out the Andromedans.

A threat is defined as “an adversary that is motivated and capable of exploiting a vulnerability” [Schneider 1999]. Defining a threat model involves figuring out what you have that someone might want, and then identifying the capabilities of those potential attackers. That, in turn, will tell you how strong your defenses have to be, and which classes of vulnerabilities you have to close. If you run the academic computing center for a university, you probably don’t have to deal with extraordinary threats (though login credentials for university libraries are eagerly sought); on the other hand, if your company’s products are so highly classified that even the company doesn’t know what it makes, things like physical security and extortion against employees become major concerns. An accurate understanding of your risk is essential to picking the right security posture—and hence expense profile—for your site.

In the 1990s, most hackers were so-called joy hackers, typically teenage or 20-something males (and they were almost all male) who wanted to prove their cleverness by showing how many supposedly tough systems they could break into. It’s not that there weren’t intruders motivated by espionage—there were, as Cliff Stoll demonstrated [Stoll 1988; Stoll 1989]—and Donn Parker noted the existence of financially motivated computer crimes as long ago as 1976 [Parker 1976], but the large majority of the early Internet break-ins were done for no better motive than “because it’s there.”

The world has changed, though, and today most of the problems are caused by people pursuing specific goals. There are some hacktivists—people who attack systems to pursue ideological goals—but the large majority of problems are caused by criminals who, when not maturing other felonious little plans, are breaking into computer systems for the money they can make that way. They use a variety of different schemes, most notably spamming and credential-stealing. In fact, most of the spam you receive is sent from hacked personal computers. This has an important consequence: any Internet-connected computer—that is to say, most of them—is of value to many attackers. Maybe your computer doesn’t have defense secrets and maybe you never log on to your bank from it, but if it can send email, it’s useful to the bad guys and thus has to be protected.

We thus have a floor on the threat model; it extends all the way up to Andromedan attacks on national security systems. There is, though, a large middle ground. The rough metric of how much risk your systems are subject to is simple: how much money can a criminal make by subverting them? For example, computers used for banking by small businesses are major targets: large companies use much more complex payment schemes and consumers typically don’t have that much money in their bank accounts, but small businesses will typically have a fair amount. It’s thus worth an attacker’s while to take over such machines and either use them directly or install keystroke loggers to collect login credentials.

One thing they don’t seem to go after very often is credit card numbers entered on individual computers—they’re not worth the trouble. Stolen credit card numbers are in sufficiently large supply that their market value is low, only a few dollars apiece [Krebs 2008; Riley 2011], so collecting them one or two at a time isn’t very cost-effective. (Enough information for easy identity theft is even cheaper: $.25/person for small volumes; $.16/person in bulk [Krebs 2011a].) A large database of card numbers is a much more interesting target, though, and some very large ones have been penetrated. Alberto Gonzalez is probably the most successful such criminal. As The New York Times Magazine put it [Verini 2010],

Over the course of several years, during much of which he worked for the government, Gonzalez and his crew of hackers and other affiliates gained access to roughly 180 million payment-card accounts from the customer databases of some of the most well known corporations in America: OfficeMax, BJ’s Wholesale Club, Dave & Buster’s restaurants, the T. J. Maxx and Marshalls clothing chains. They hacked into Target, Barnes & Noble, JCPenney, Sports Authority, Boston Market, and 7-Eleven’s bank-machine network. In the words of the chief prosecutor in Gonzalez’s case, “The sheer extent of the human victimization caused by Gonzalez and his organization is unparalleled.”

The cost to the victims was put in excess of $400 million; Gonzalez himself made millions [Meyers 2009].

The most interesting corporation Gonzalez hit was Heartland Payment Systems, one of the biggest payment-card processors in the country. The Times noted that “by the time Heartland realized something was wrong, the heist was too immense to be believed: data from 130 million transactions had been exposed,” probably affecting more than 250,000 businesses. What’s especially interesting is his target selection: most people are unaware that such a role exists; Gonzalez researched the payment systems enough to learn of it and to hack one of the major players.

Most computers, of course, don’t store or process 180 million card numbers; the few that do obviously merit extremely strong protection. Somewhat less obviously, there are a fair number of systems that connect to the large database machines: administrators’ computers, individual stores in a chain, even the point-of-sale systems. All of these need to be added to the threat model; control of these computers can and will be monetized by attackers, and money is the root of most Internet evil.

There is, in fact, a burgeoning “Underground Economy” centered on the Internet. It includes the hackers, the spammers who pay them, the DDoS extortionists, the folks who run the phishing scams and the advance fee (AKA “419”) frauds, and more. Someone in one part of the world will order something via a stolen credit card and have it delivered to a vacant house. Someone else will pick it up and reship it, or return it for cash that is then wired to the perpetrator. Hardware-oriented folks build ATM skimmers, complete with cameras that catch the PIN as it is entered.

A lot of these folk are engaged in other sorts of criminal activity as well. To them, the Internet is just one more place to make money illegally. A 2012 takedown of an international Internet crime ring included seizure of a web site that featured “financial data, hacking tips, malware, spyware and access to stolen goods, like iPads and iPhones”; some of those arrested allegedly “offered to ship stolen merchandise and arrange drop services so items like sunglasses, air purifiers and synthetic marijuana could be picked up” [N. D. Schwartz 2012]. Information may want to be free, but sunglasses?

It is also worth remembering that employees are not always trustworthy, even if they are employed by major companies. One of Gonzalez’s accomplices, Stephen Watt, worked for Morgan Stanley at the time of the hacks [Zetter 2009c], though as far as is known he never engaged in criminal activity connected with his employment. Insider fraud is a very serious problem, one that is rarely discussed in public.

3.2 Classes of Attackers

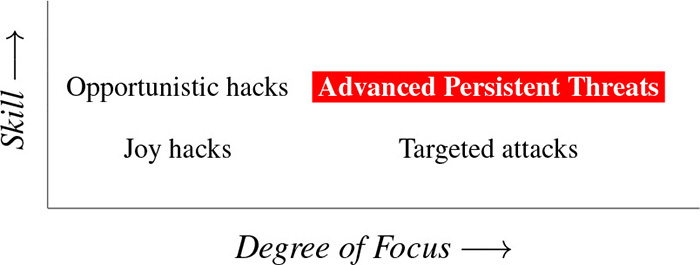

The easiest way to visualize threats is to consider two axes: the skill of the attacker, and whether the attacker is trying to get you in particular or someone at random. The result is the threat matrix shown in Figure 3.1.

Figure 3.1: The threat matrix.

What I dub the joy hacker is close to the Hollywood stereotype: the teenage or 20-something nerdy male who lives in his parents’ basement, surrounded by crumpled soft drink cans and empty pizza boxes, and whose only contact with the outside world is via his computer, with nary a “girl” in sight [M. J. Schwartz 2012]. He’ll have a certain amount of cleverness and can do some damage, but the effect is random and limited because his hacking is pointless; there’s no goal but the hack itself, and perhaps the knowledge gained. It’s youthful experimentation, minus any sense of morals.

The joy hacker exists, though much of the stereotypical picture is (and was) wrong. From a security perspective, though, gender and the presence or absence of parents, basements, pizza, and romantic interests are irrelevant. What matters is the unfocused nature of the threat—and the potential danger if you’re careless. Make no mistake; joy hackers can hurt you. However, ordinary care will suffice; if you’re too hard a target, they’ll move on to someone simpler to attack. After all, by definition they’re not very good.

A related (but lesser) threat is the script kiddie. Script kiddies have little real understanding of what they’re doing. They may try a wider variety of attacks, but they’re limited to using canned exploits created and packaged by others.

As joy hackers progress (I hesitate to use the phrase “grow up”), they can move along either axis. If they simply develop more skills, the attacks are still random but more sophisticated. They don’t want your machine in particular; they want someone’s. They may care about the type of machine (perhaps their exploits only work against a certain version of Windows) or its bandwidth, but exactly whose machine they attack is irrelevant. Most worms are like this, as are many types of malware—they spread, and if they happen to catch something useful, the hacker behind it will benefit and probably profit.

Opportunistic hackers are considerably more dangerous than joy hackers, precisely because they’re more skilled. They’ll know of many different vulnerabilities and attack techniques; they’ll likely have a large and varied arsenal. They may even have some 0-days to bring to bear. Still, their attacks are random and opportunistic; again, they’re not after you in particular. They may briefly switch their attention to you if you annoy them (see the box on page 172 for one such incident) or if they’re paid to harass you, but on the whole they don’t engage in much target selection.

Their lack of selectivity doesn’t make them harmless. It is likely that this class of malefactor is responsible for many of the botnets that infest the Internet today; these botnets, in turn, send out spam, launch DDoS attacks, and so on. For many sites, the opportunistic hacker is the threat to defend against.

Targetiers aim specifically at a particular person or organization. Here, to reverse the old joke, it’s not enough to outrun the other guy; you have to outrun the bear. Depending on how good the bear—the attacker—is, this might be quite a struggle. Targetiers will perform various sorts of reconnaissance and may engage in physical world activities like dumpster-diving.

These people are quite dangerous. Even an unskilled attacker can easily buy DDoS attacks; more skilled ones can purchase 0-days. If they’re good enough, they can ascend to the upper right of our chart and be labeled advanced persistent threats (APTs).

One particularly pernicious breed of targetier is the insider who has turned to the Dark Side. They may have been paid, or they may be seeking revenge; regardless, they’re inside most of your defensive mechanisms and they know your systems. Worse yet, human nature is such that we’re reluctant to suspect one of our own; this was one factor that hindered the FBI in the investigation that eventually snagged Robert Hanssen [Fine 2003; Wise 2002].
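The four classes described in this section can be sketched as a lookup on the two axes of the threat matrix. What follows is only an illustration: the names `Attacker`, `AttackerClass`, and `classify` are mine, and reducing each axis to a yes/no value is a simplifying assumption, since real attackers sit somewhere along a continuum of skill and focus.

```python
from dataclasses import dataclass
from enum import Enum


class AttackerClass(Enum):
    JOY_HACKER = "joy hacker"
    OPPORTUNISTIC = "opportunistic hacker"
    TARGETIER = "targetier"
    APT = "advanced persistent threat"


@dataclass(frozen=True)
class Attacker:
    # Assumption: each axis is coarsened to two levels for illustration.
    skilled: bool   # position on the skill axis
    targeted: bool  # True if the attacker is after you in particular


def classify(attacker: Attacker) -> AttackerClass:
    """Map a point on the two axes to one of the four quadrants."""
    if attacker.targeted:
        return AttackerClass.APT if attacker.skilled else AttackerClass.TARGETIER
    return AttackerClass.OPPORTUNISTIC if attacker.skilled else AttackerClass.JOY_HACKER


if __name__ == "__main__":
    # Walk all four quadrants of the matrix.
    for skilled in (False, True):
        for targeted in (False, True):
            label = classify(Attacker(skilled=skilled, targeted=targeted))
            print(f"skilled={skilled!s:5} targeted={targeted!s:5} -> {label.value}")
```

The useful part of the exercise is less the code than the question it forces: for each adversary you worry about, which of the two axes are you actually sure of?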

3.3 Advanced Persistent Threats

Apt: An Arctic monster. A huge, white-furred creature with six limbs, four of which, short and heavy, carry it over the snow and ice; the other two, which grow forward from its shoulders on either side of its long, powerful neck, terminate in white, hairless hands with which it seizes and holds its prey. Its head and mouth are similar in appearance to those of a hippopotamus, except that from the sides of the lower jawbone two mighty horns curve slightly downward toward the front. Its two huge eyes extend in two vast oval patches from the centre of the top of the cranium down either side of the head to below the roots of the horns, so that these weapons really grow out from the lower part of the eyes, which are composed of several thousand ocelli each. Each ocellus is furnished with its own lid, and the apt can, at will, close as many of the facets of his huge eyes as he chooses.

Thuvia, Maid of Mars

—EDGAR RICE BURROUGHS

APTs are, of course, the big enchilada of threat models, the kind of attack we typically attribute to the Andromedans. There’s no one definition of APT; generally speaking, though, an APT involves good target intelligence and a technical attack that isn’t easily deflected. Also note that there are levels of APT; Gonzalez et al. are arguably in that quadrant, but they did not have the capabilities of a major country’s intelligence service.

The best-documented example of a real APT attack is Stuxnet [Falliere, Murchu, and Chien 2011; Zetter 2014], a piece of malware apparently aimed at the Iranian uranium centrifuge enrichment plant. The source is unclear, though press reports have blamed (credited?) Israel and the United States [Broad, Markoff, and Sanger 2011; Williams 2011]. Stuxnet meets anyone’s definition of an APT.

The actual penetration code used four different 0-days, vulnerabilities that were unknown to the vendors or the community. (Actually, two of them had been reported, but the reports were either unnoticed or ignored by the community—but perhaps not by the people behind Stuxnet; it is unknown whether they read of these exploits or rediscovered them.) Stuxnet did not use the Internet or Iranian intranets to spread; rather, it traveled over LANs or moved from site to site via USB flash disks. The attackers apparently charted its spread [Markoff 2011a], possibly to learn a good path to the target [Falliere, Murchu, and Chien 2011; Zetter 2014]. Once it got there, special modules infected the programmable logic controllers (PLCs) that control the centrifuge motors. The motor speeds were varied in a pattern that would cause maximum damage, the monitoring displays showed what the plant operators expected to see, and the emergency stop button did nothing.

There were other interesting things about Stuxnet. It would only attack specific models of PLC, the ones used in the centrifuge plant. It installed device drivers signed with private keys belonging to legitimate Taiwanese companies; somehow, these keys were compromised, too. It also used rootkits (software to conceal its existence) on both Windows and the PLCs.

We can deduce several things about Stuxnet. First, whoever launched it believed they were aiming at a high-value target: 0-days, while hardly unknown, are rather rare. Perhaps significantly, APTs appear to be the primary users of 0-days [Batchelder et al. 2013, p. 9]. Furthermore, once 0-days have been detected, they’re useless; vendors will patch the holes and anti-malware software will recognize the exploits. That someone was willing to spend four of them in a single attack strongly suggests that they really wanted to take out the target.

The next really interesting thing is how much intelligence the attackers had about their target. Not only did they know a set of organizational links by which a flash drive attack might spread, they also knew precisely what type of PLCs were in use and what motor speeds would do the most damage. Did they learn this from on-site spies? Other traditional forms of intelligence? Another worm designed to learn such things and exfiltrate the information? A Stuxnet variant intended for the latter has been spotted [Markoff 2011b]; some sources think that that was the goal of Flame [Nakashima, G. Miller, and Tate 2012; Zetter 2014], too. The first two options strongly suggest an intelligence agency working with something like the Andromedans’ MI-31 (or, if the press reports are accurate, the NSA and/or Unit 8200).

The last really interesting thing about Stuxnet is the resources it took to create it. Symantec estimates that it took at least five to ten “core developers” half a year to create it, plus additional resources for testing, management, intelligence-gathering, etc. Again, this points to a high-end adversary willing to spend a lot on the attack. Stuxnet was indeed, as I noted in my blog, “weaponized software” [Bellovin 2010].

Stuxnet is a classic example of an APT attack, but there have been others. The penetration of Google in late 2009, allegedly by attackers from China [Jacobs and Helft 2010], is often described as one; another well-known example is the attack on RSA [Markoff 2011c], though that claim has been challenged [Richmond 2011].

What makes an attack an APT? Uri Rivner, the author of RSA’s blog posting on what happened to them, has it right [Rivner 2011]:

The first thing actors like those behind the APT do is seek publicly available information about specific employees—social media sites are always a favorite. With that in hand they then send that user a Spear Phishing email. Often the email uses target-relevant content; for instance, if you’re in the finance department, it may talk about some advice on regulatory controls.

He goes on to talk about how a 0-day exploit was used, but often that’s less important; no one will spend more on an attack than they have to, be it in 0-days or dollars. All attackers are limited; people who can carry out these attacks are the scarcest resource of all, even for the Andromedans.

It’s an interesting question why so many companies seem proud to announce that they’ve been the victim of an advanced persistent threat (APT). Are they saying that their internal security is so good that nothing less could have penetrated it? Or are they bragging that they’re important enough to warrant that sort of attention?

Recall the definition of threat: “an adversary that is motivated and capable of exploiting a vulnerability.” Capabilities are often the easiest part; plenty of garden-variety opportunistic hackers can find 0-days, and even script kiddies can send phishing emails. What’s really important is the motivation: who wants to get you? Someone who’s interested in something ordinary, like a corporate bank account, may come after you with a 0-day, but probably not. If they fail at getting you, though, they’ll probably move on; there are plenty of other targets. However, if you manufacture anti-Andromedan weaponry, MI-31 may try something newer or more clever, because they really want what you’ve got. The most important question, then, when determining whether you may be the target of an APT is whether you have something that an enemy of that caliber might want.

It is important to remember that not all advanced attackers will want the same things. Weaponry might be of interest to the Andromedan military, but that isn’t all that’s at risk. Some countries’ intelligence agencies may work on behalf of national economic interests; as long ago as 1991, first-class seats on Air France were reportedly bugged to pick up conversations by business executives [Rawnsley 2013]. Enterprises that might be military targets in the event of a shooting war may be penetrated to “prepare the battlefield” [National Research Council 2010].

An APT attacker won’t be stopped by the strongest cryptography, either. There are probably other weaknesses in the victim’s defenses; why bother going through a strong defense when you can go around it? It’s easier to plant some malware on the endpoint; such code can read the plaintext before it’s encrypted or after it’s decrypted. Better yet, the malware can include a keystroke logger, which can capture the passwords used to encrypt files.¹

1. See United States v. Scarfo, Criminal No. 00-404 (D.N.J.) (2001), http://epic.org/crypto/scarfo.html, for an example of a keystroke logger planted by law enforcement. Governments do indeed do that sort of thing [Paul 2011].

Andromeda’s MI-31 won’t restrict itself to online means. Finding a clever hole in software is a great academic game; pros, though, are interested in results. If the easiest way to break in to an important computer is via surreptitious entry into someone’s house, they’ll do that. As Robert Morris once noted when talking about a supposedly secure cryptosystem: “You can still get the message, but maybe not by cryptanalysis. If you’re in this business, you go after a reasonably cheap, reliable method. It may be one of the three Bs: burglary, bribery or blackmail. Those are right up there along with cryptanalysis in their importance” [Kolata 2001].

What should you do if you think you are a potential target for an APT? While you should certainly take all of the usual technical precautions, more or less by definition they alone will not suffice. Two more things are essential: good user training (see Chapter 14) and proper processes (Chapter 16). Finally, you should talk to your own country’s counterintelligence service—but at this point, they may already have contacted you.

A caveat: There’s a piece of advice given to every beginning medical student: when you hear galloping hoofbeats, don’t think of zebras. Yes, zebras exist, but most likely, you’re hearing horses. The same is true of attacks. Many things that look like attacks are just normal errors or misconfigurations; many attacks that appear to be advanced and targeted are ordinary, opportunistic, garden-variety malware. If you are hit by an APT, don’t assume you know who did it.

In fact, don’t even assume it was really an APT. When JPMorgan Chase was hacked in 2014, news reports suggested foreign government involvement [Perlroth and Goldstein 2014]:

Given the level of sophistication of the attack, investigators say they believe it was planned for months and may have involved some coordination or assistance from a foreign government. The working theory is that the hackers most likely live in Russia, the people briefed said.

They said the fact that no money was taken did not necessarily mean it was a case of state-sponsored espionage, only that the bank was able to stop the hackers before they could siphon customer accounts.

Further investigation told a different story [Goldstein 2015]: “Soon after the hacking was discovered at JPMorgan, agents with the Federal Bureau of Investigation determined the attack was not particularly sophisticated even though the bank’s security people had argued otherwise. The hacking succeeded largely because the bank failed to properly put updates on a remote server that was part of its vast digital network.”

Attribution is one of the toughest parts of the business. A National Academies report quoted a former Justice Department official as saying, “I have seen too many situations where government officials claimed a high degree of confidence as to the source, intent, and scope of an attack, and it turned out they were wrong on every aspect of it. That is, they were often wrong, but never in doubt” [Owens, Dam, and Lin 2009, p. 142].

3.4 What’s at Risk?

What do you have that’s worthwhile? A better way to ask that question is to wonder, “What do I have that an attacker—any attacker—might want?”

Generically speaking, every computer has certain things: an identity, bandwidth, and credentials. All of these are valuable to some attackers, though of course which attackers will value a particular computer will depend on just what access that machine grants them. An old, slow computer that is nevertheless used for online banking would indeed be attractive to some people. Furthermore, the more or less random pattern of non-APT attacks means that it may indeed be hit. To a first approximation, every computer is at risk; the excuse that “there’s nothing interesting on this machine” is exactly that: an excuse. This fact is the base level from which all risk assessments must start.

For generic machines, the next level up depends on particular characteristics of the machine. Computers with good bandwidth and static IP addresses can be used to host illicit servers of various types: bogus web servers for phishing scams, archives of stolen data, and so on. They’re also more valuable as DDoS botnet nodes because their fast links let them fire more garbage at the victim. (Aside: some sources say that in the Underground Economy bots are called boats, because they can carry anything—their payload modules are easily updatable and are quite flexible. The same machine that is a DDoS engine today might host a “warez” archive tomorrow and a phishing site the day after.)

The more interesting—and higher-risk—category involves those computers that have specific, monetizable assets. A large password file? That’s salable. A customer database? Salable; the price will depend on what fields are included. Credit card numbers in bulk are lucrative, especially if they’re linked to names, addresses, expiration dates, card verification values (CVV2s), etc. Lists of email addresses? Spammers like them, both for their own use and for resale to other spammers. Real web servers can be converted into malware dispensers.

Particular organizations often have data useful to people in that field. A school’s administrative computers are valuable to people who want to sell improved grades. Business executives have been accused of hacking in to rivals’ systems [Harper 2013; B. Sullivan 2005]. Corporations have been accused of hacking into environmental groups’ computers [Jolly 2011].

A closely related set of at-risk machines are client machines that connect to actual target machines. Login credentials can be stolen or the entire machine hijacked to get at the data of interest.
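One way to find such indirectly at-risk machines is to treat "can connect to" as a directed graph and walk it backward from the real targets. The sketch below assumes you already have a map of which machine can reach which; the function name `exposed_machines` and the host names are hypothetical, and a real network map would be far messier.

```python
from collections import deque


def exposed_machines(connections, targets):
    """Return every machine from which some target can be reached.

    connections maps each machine name to the machines it can connect to.
    targets is the collection of machines holding the data of interest.
    """
    # Reverse the edges so we can walk outward from the targets.
    reverse = {}
    for src, dsts in connections.items():
        for dst in dsts:
            reverse.setdefault(dst, set()).add(src)

    seen = set(targets)
    queue = deque(targets)
    while queue:
        machine = queue.popleft()
        for client in reverse.get(machine, ()):
            if client not in seen:
                seen.add(client)
                queue.append(client)
    # The targets themselves are already in the threat model.
    return seen - set(targets)


if __name__ == "__main__":
    # Hypothetical network: names are illustrative only.
    connections = {
        "pos-terminal": ["store-server"],
        "store-server": ["card-db"],
        "admin-laptop": ["card-db"],
        "lobby-kiosk": ["print-server"],
    }
    print(sorted(exposed_machines(connections, ["card-db"])))
    # prints ['admin-laptop', 'pos-terminal', 'store-server']
```

Note that the point-of-sale terminal shows up even though it never talks to the database directly; that transitive exposure is exactly what is easy to overlook.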

If you work for a large company, you have a special problem: you almost certainly do not know of everything that’s valuable. Indeed, some of the most valuable data may be very closely held; you may never have heard of the unit that has it. The attackers might know, either through blind chance or because they have other useful intelligence. For example, major corporate hires are sometimes mentioned in the trade press or in business publications. If a new executive is hired with a particular specialty not related to what your company is currently doing, it’s news—but it’s news that you, as a computer security specialist, may not have seen. Someone tracking that technology or your company might spot it, though, and target this new business unit.

The solution (and it’s easier said than done) is, of course, cooperation within the company. Every business unit with valuable data needs to tell the corporate security group that it exists. They don’t have to say just what it is; they do need to know and report its value, and to what class of rival it is valuable. Another company? A company with a reputation for lax corporate ethics? A company headquartered in a country that doesn’t respect intellectual property rights? A minor foreign intelligence agency? The Andromedans themselves? The hard part is not just the intracompany cooperation, though that can be challenging enough; rather, it’s getting non-security people to understand the nature of the threat. There’s a lot of hype out there and noise about threats that aren’t real; consequently, there are a lot of skeptics. (Tell them they need to buy their own copy of this book...) Again: the issue of what defenses to deploy is a separate question from ascertaining which assets are at risk.
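The report a business unit files need not be elaborate. As a rough sketch, it could be a record with three fields: who holds the asset, roughly what it is worth, and the most capable class of rival likely to want it. Everything here is illustrative: the names `RivalClass`, `AssetReport`, and `triage` are mine, the value is in whatever units your company prefers, and treating the list of rivals above as an ordering from least to most capable is my assumption.

```python
from dataclasses import dataclass
from enum import IntEnum


class RivalClass(IntEnum):
    # Assumption: listed in increasing order of capability.
    OTHER_COMPANY = 1
    LAX_ETHICS_COMPANY = 2
    WEAK_IP_COUNTRY_COMPANY = 3
    MINOR_INTELLIGENCE_AGENCY = 4
    ANDROMEDANS = 5


@dataclass(frozen=True)
class AssetReport:
    business_unit: str    # who holds the asset; not what the asset is
    estimated_value: int  # hypothetical units, e.g. dollars
    rival: RivalClass     # most capable rival likely to want it


def triage(reports):
    """Order reports by rival capability, then by value, highest first."""
    return sorted(reports, key=lambda r: (r.rival, r.estimated_value), reverse=True)


if __name__ == "__main__":
    # Hypothetical reports from three business units.
    reports = [
        AssetReport("payroll", 500_000, RivalClass.OTHER_COMPANY),
        AssetReport("skunkworks", 100_000, RivalClass.ANDROMEDANS),
        AssetReport("research", 900_000, RivalClass.OTHER_COMPANY),
    ]
    for report in triage(reports):
        print(report.business_unit, report.rival.name, report.estimated_value)
```

Sorting by rival before value reflects the argument of this chapter: who wants the asset tells you more about the defenses you will need than the price tag does. Whether that ordering suits your organization is a judgment call.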

3.5 The Legacy Problem

When identifying what’s at risk, don’t neglect legacy systems. Greenfield development is very rare; virtually all non-trivial software development projects are based on ancient code and/or have to talk to ancient database systems running on obsolete hardware with an obsolete OS. It’s probably economically infeasible to do anything about it, either. The application may be tied to a particular release and patch level of its OS [Chen et al. 2005]; fixing that would cost a significant amount of money that isn’t in the budget because the code currently (mostly) works. Besides, organizationally it’s someone else’s system, and you haven’t the remit to do anything about it. But if a penetration happens because your new application opened up a channel to someone else’s ancient, crufty code, you know whose problem that is.

All that said, the asset still exists; protection of it still needs to be part of your analysis. The solutions won’t be as elegant, as complete, or in most cases as cheap as they would be had things been designed properly; as noted, perfect foresight is impossible. I return to this issue in Section 11.5.