Chapter 15. System Administration

“He kept asking me stupid questions, was too dumb to learn from his own mistakes, made work for other people to mop up after him, and held a number of opinions too tiresome to list. He shouldn’t have been in the course and I told him to tell Dr. Vohlman, but he didn’t listen. Fred was a waste of airspace and one of the most powerful bogon emitters in the Laundry.”

“Bogon?”

“Hypothetical particles of cluelessness. Idiots emit bogons, causing machinery to malfunction in their presence. System administrators absorb bogons, letting the machinery work again. Hacker folklore—”

The Atrocity Archives

—CHARLES STROSS

15.1 Sysadmins: Your Most Important Security Resource

<RANT>

A good system administrator’s value, to misquote a line from Proverbs 31, “is far beyond that of rubies.” Proper system administration can avert far more security problems than any other single measure. Your sysadmins apply patches, configure firewalls, investigate incidents, and more. Being a system administrator is a high-stress but often low-status position. The job is interrupt-driven; there are generally far too many alligators for them to even think of draining the swamp, even when they know exactly how to do it. Sysadmins typically have too few resources to do the job properly, but are blamed when the inevitable failures occur. They’re often on call 24 × 7, but frequently report to someone who equates running Windows Update on a single computer with keeping a modern data center on the air. Of course, all of these issues are magnified when it comes to security, since it’s often very hard to tell whether a given preventive measure actually accomplished anything. But management wants things to work perfectly, even though the people responsible for that aren’t given the resources—or respect—necessary.

</RANT>

Rants aside, I’m 100% serious about the importance of system administration. If nothing else, most security problems are due not just to buggy code, but to buggy code for which patches already exist. Sysadmins are, of course, the people who install such patches; per Chapter 13, it’s not a simple process in a mission-critical environment. To people who have had hands-on involvement in operations, whether of data centers, networks, or services—and I’ve done all of these—the importance of doing this well is self-evident. How to accomplish it is a trickier question.

I do not claim to be a management expert. That said, the single biggest complaint I’ve heard from sysadmins is lack of respect from their user community and by extension from upper management. Some of this is inevitable. When something almost always works, it’s natural to ignore it most of the time, and only pay attention when there’s a failure—and at that point, people are generally looking for someone to blame. In the same vein, many users perceive that a similar-seeming service at another location or company works better; again, the sysadmins play the role of goats, even if the difference is either not present or is due to factors beyond their control. Strong leadership, including managers who can act as buffers and shields, is vital, as (of course) are more tangible tokens of recognition such as good salaries.

Why is this relevant to this book? It’s quite simple: system administrators are the front-line soldiers in the fight for security. They implement many of the security mechanisms selected by system architects, and of course take care of patch installation and system upgrades. They’re also the people most likely to notice security problems, whether from looking at logs, noticing performance anomalies, or fielding user complaints. Furthermore (and of necessity), a good system administrator has to know a fair amount about real-world security issues and threats. (A remarkable number of really good security people drifted into the field from the sysadmin world.) The question this chapter is tackling is how to apply this book’s principles to system administration, and vice versa.

15.2 Steering the Right Path

Aside from setting security policies—the subject of most of the rest of this book—one of the most crucial decisions a system administrator has to make is how much control to exercise. Both extremes, the Scylla of complete anarchy and the Charybdis of totalitarianism, have disadvantages, including security weaknesses.

The security risks of anarchy—of not setting policies, of too easily acceding to requests for variances, of not enforcing policies—are fairly obvious. In a world where something as simple as a flash drive can wreak havoc [Falliere, Murchu, and Chien 2011; Kenyon 2011; Mills 2010], it is clear that first, there must be policies, and sometimes stringent ones, and second, that these really need to be enforced.

On the other hand, too much stridency about minor matters also has to be avoided. Sysadmins are (generally speaking) human, too; Lord Acton’s dictum about the corrupting influence of power is applicable. Far too often, the real need for security is instantiated as inflexibility, pointless rules, and deafness to legitimate needs. This stereotypical administrator, depicted by Scott Adams in his Dilbert cartoon strips as “Mordac, the Preventer of Information Services,” has its roots in the real world, too.

The problem with rules that are too strict is, as I’ve noted before, that people will evade them. The story on page xiii about the modems is true (yes, I know at which company it happened, and I know people who were there at the time); it’s an example of what happens when a security policy is too strict for the culture. A security policy has to represent a balance between four different interests: the security folk, who understand the threat models; the system administrators, who know what’s feasible to implement (and what it will cost); the business managers, who understand what the organization is supposed to be doing; and the line managers, who understand what employees will and won’t put up with. (It would be better to ask the employees themselves, but that’s often infeasible.)

Deciding on the policy is an iterative process, where several people play more than one role. A sysadmin might say, “Yes, I can enable that function, but only if we upgrade the desktops to a newer release of the OS, because that version implements it securely.” Both sets of managers will object to the budget hit for the upgrade (though the line managers may, on behalf of the employees they manage, endorse bright, shiny new hardware as long as they don’t have to pay for it), but everyone is in trouble if there’s a major penetration. Too often, the argument will come down to a culture war—the geeks against the bean counters, the technical folk against the suits—which helps no one, especially since it’s the sysadmins who take the opprobrium for “needless” restrictions on what people can do.

There’s no deterministic algorithm for settling this, of course. The best approach is for all sides to come equipped with numbers and alternatives. What are the benefits—in dollars, euros, zorkmids, what have you—to the company’s business of the managers’ preferred strategy? What are the costs and risks of the (nominally more secure) alternatives? What assets are at risk if there is a penetration? Who are the possible enemies, and which of those assets would each group be interested in? What are the odds on one of those groups attacking, and via which paths? How strong are the defenses? How bad will the morale hit be if certain measures are implemented? Will this manifest itself in lowered productivity and/or higher turnover?

As I’m sure you realize, few of these questions can be answered with any degree of accuracy or confidence. The business folk—yes, the much-reviled suits and bean counters—are likely to have the best numbers, where “best” includes “most accurate.” (There’s a certain irony in them, and not the geeks, being the most quantitative.) I’m not saying that their market or development cost projections are always correct—I’m talking about profits, not prophets—but on balance, they do far, far better than security people can on their projections. Besides, they’re much less likely to view a failure as a sin, rather than as a simple economic misjudgment. The essence of what they do is taking calculated risks; security folk, on the other hand, generally live for risk avoidance.

Implicit in the previous paragraphs is that the system administrators are more or less neutral parties. It’s not their product that will be affected by security-imposed delays, nor are they responsible for the overall security architecture of the product. Of course, they’ll get the blame if the upgrade goes badly wrong or if there is a penetration.

A recent issue that illustrates this nicely is the so-called Bring Your Own Device (BYOD) movement: employees purchase and use their own equipment rather than using company-issued phones, laptops, etc. There may be financial advantages to the company (employees will often foot much of the bill) and perhaps morale benefits (Mac aficionados tend to be unhappy when forced to use Windows machines and vice versa). There are also functionality challenges (Does vital corporate software work on random computers running random releases of random operating systems? How is it to be maintained?). In this book, though, let’s restrict our attention to a much simpler question: is BYOD secure? Let’s look at it.

The first big issue is system administration: who is ensuring that user-owned devices are administered properly? Penetration by 0-days is rare; the overwhelming majority of attacks use vulnerabilities for which patches exist. As we’ve seen, patching isn’t easy, but with company-administered machines there is someone responsible for dealing with the trade-off. Does the corporate sysadmin group have the right to install patches on employee machines? Does it even have the ability to do so, especially if it has no competence in or infrastructure for some kinds of devices? What about antivirus software? Is it installed? Is it up to date? Is it even necessary or possible? (At the moment, at least, it isn’t possible for third parties to write functional antivirus software for Apple’s iOS: there are no kernel or application hooks for it, and the same mechanisms that are intended to prevent installation of unapproved applications also block AV software. That may or may not play well with corporate policies that demand the installation of such packages.)

Naturally, threat models have to be considered. If you’re dealing with the Andromedans, the precise device may not matter as much. A government-controlled mobile phone company in the United Arab Emirates pushed an update containing spyware out to its BlackBerry customers; presumably, this was intended to work around the strong encryption used by Research In Motion’s (RIM) devices [Zetter 2009b]. Travelers to China often eschew laptops and smart phones [Perlroth 2012]. It is hard to see how corporate system administration would be an adequate counter to this class of attack. It isn’t even clear that other countries’ equivalents of MI-31 can mount a credible defense, as the Iranians have learned.

Against lesser threats, though, good administration does make a difference. Most users do not upgrade their software [Skype 2012]. More subtly, users are not prepared to assess the comparative risks and benefits of different software packages. Which browser is most secure: Internet Explorer, Firefox, Safari, Chrome, or Opera? Suppose the comparison were between Internet Explorer 6 and Firefox 14? Internet Explorer 9 and Firefox 3? With what patches or service packs installed? On what releases of what operating systems?

Even assuming that all of the administration is done as well by the user as a pro would manage, there’s another issue: what else is installed, and what web sites does the user visit? It’s one thing for corporate policy to say “thou shalt not” for company-owned machines; it’s quite another to insist on it for employee-owned devices that may be shared by other family members. Don’t get me wrong; there are legitimate concerns here. It is not an exaggeration to say that “adult” web sites often host the computer equivalent of sexually transmitted diseases [Wondracek et al. 2010]; religious and ideological sites are even worse [P. Wood 2012].

From the above analysis, it would seem clear that BYOD is a bad idea. It’s not so simple. Again, recall the distinction between insecurity as a sin and insecurity as a financial risk. There are monetary and morale benefits to BYOD; these benefits must be weighed against the potential costs. How to choose?

As always, we cannot do a strict quantitative analysis; the risks are too uncertain. Still, there are guidelines that are helpful. Start by making two assessments: how strict is your security policy, and how much knowledge, overall, does your sysadmin group have? Sites with loose policies, for example, many universities, take relatively little additional risk by permitting BYOD; by contrast, defense contractors probably should not. Most sites, though, are somewhere in the middle. That’s where the sysadmin group comes in. Can you buy or build a tool to do a minimal evaluation of a user’s system, for all variants of major interest? (This seems daunting, but in reality there are only a handful of choices for which there will be very much employee demand.) Insist that this tool be installed as a minimum precondition for connecting to the network, and use it to assess the essential security state of these devices. (I should note that it is vitally important to be honest about what such a tool does and to be scrupulously careful to limit its abilities; betraying employee trust is a surefire route to all sorts of bad outcomes.)

Developing and using such a tool properly isn’t easy. In general, large organizations will have large-enough sysadmin groups to manage it. Small organizations, unless they’re supported by honest and reasonably priced consultants, probably cannot. If you can’t, consider treating employee-owned devices as semitrusted, per the analysis in Section 9.4. The same suggestion applies to devices where an assessment tool is difficult, such as many smart phones.
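To make the idea of a minimal evaluation concrete, here is a rough Python sketch of the decision logic such a tool might apply. Everything in it is a hypothetical illustration: the `DeviceReport` fields, the `MIN_OS_VERSION` table and its version numbers, the 30-day patch window, and the three outcomes are assumptions, not a description of any real product. Note that the report is deliberately tiny; it carries only the handful of facts needed for the decision, in keeping with the point about limiting what the tool can do.

```python
from dataclasses import dataclass

# Hypothetical baseline: minimum acceptable OS release per platform.
# The platforms and version numbers are illustrative assumptions only.
MIN_OS_VERSION = {
    "windows": (10, 0),
    "macos": (13, 0),
    "linux": (5, 15),
}


@dataclass(frozen=True)
class DeviceReport:
    """The only facts the (hypothetical) agent reports about a device."""
    platform: str
    os_version: tuple
    days_since_last_patch: int
    disk_encrypted: bool


def evaluate(report: DeviceReport, max_patch_age_days: int = 30) -> str:
    """Return 'admit', 'semitrusted', or 'deny' for a user-owned device."""
    baseline = MIN_OS_VERSION.get(report.platform)
    if baseline is None:
        # No assessment is possible for this kind of device,
        # so fall back to treating it as semitrusted.
        return "semitrusted"
    if report.os_version < baseline:
        return "deny"
    if report.days_since_last_patch > max_patch_age_days or not report.disk_encrypted:
        return "semitrusted"
    return "admit"
```

A device on a platform the tool does not understand is not rejected outright; it simply lands in the semitrusted category, which mirrors the suggestion above for smart phones and similar devices.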

Again, though, remember the threat model. The more serious the threat, the less safe BYOD is. This should be used as a discount factor to the sysadmin clue quotient. In particular, an evaluation tool is precisely the sort of atom blaster that can point both ways [Asimov 1951]; by definition, it detects certain security problems on a computer. This is wonderfully useful information to an attacker.

15.3 System Administration Tools and Infrastructure

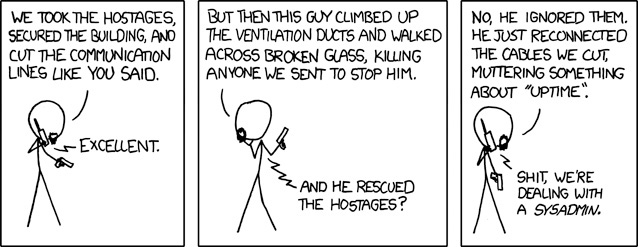

There’s a diagram floating around the net (Figure 15.1) that shows a lot of the trouble with system administration. Much of it is routine but interrupt-driven: a disk has crashed, or the print spooler isn’t working right, or some vendor has released an urgent security patch. At the same time, the background work of upgrading the LAN switch to gigabit Ethernet, moving Legal to its own VLAN, and installing the new 32-terapixel display in the CEO’s conference room has to continue.

While there are many facets of system administration that can benefit from automation, this is a security book, so I’ll restrict my focus to security issues. The importance of all of these is proportional to the size of the organization; spending the time to write scripts and build infrastructure is less cost-effective for smaller sites. There are, broadly speaking, three relevant activities: shipping out patches and software upgrades; routine log collection and analysis; and sending specific queries to many machines while investigating or analyzing a (suspected) incident. (More generally, there are a number of good books on system administration, such as [Limoncelli, Hogan, and Chalup 2007].)
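The third activity, sending one query to many machines during an investigation, might be sketched in Python roughly as follows. This is an illustration under stated assumptions: `query_many` is a hypothetical helper, and the `query_host` callable it takes stands in for whatever transport a site really uses (an agent, a remote shell, a management API), which the sketch does not implement.

```python
from concurrent.futures import ThreadPoolExecutor


def query_many(hosts, query_host, max_workers=16):
    """Run query_host(host) against every host.

    Returns two dicts: answers keyed by host, and failures keyed by host.
    """
    answers, failures = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {host: pool.submit(query_host, host) for host in hosts}
        for host, future in futures.items():
            try:
                answers[host] = future.result()
            except Exception as exc:
                # One unreachable host must not abort the whole sweep.
                failures[host] = repr(exc)
    return answers, failures
```

Keeping the failures is deliberate: during an incident, a machine that does not answer can be as interesting as one that does.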

The starting point for all of these efforts is a good database of computers, not just the ordinary one of machines and IP addresses, but also hardware information and which versions of which software packages are installed on each. Furthermore, you want each machine marked by role; you want to be able to differentiate, say, mission-critical machines from less vital ones. Suppose some vendor releases a critical patch to some package. On which machines do you install it? You don’t necessarily want to install it everywhere immediately; as noted earlier, patches carry their own risks (Chapter 13). Instead, your patch distribution system should do a database dip and select the machines that are at serious risk. Those machines that are up and report successful installation should then be marked as updated. That last item is important not just to avoid duplicate installation (quite likely, the machines would reject that themselves) but to make it easy to install an updated version of the patch should the vendor ship one out.
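A minimal sketch of such a database, using Python's built-in `sqlite3` module, might look like the following. The table layout, the column names, the role labels, and the two helper functions are all assumptions made for illustration; a production inventory would certainly hold more. The sketch also assumes that "at serious risk" can be approximated as "runs a vulnerable version and has one of the listed roles."

```python
import sqlite3

SCHEMA = """
CREATE TABLE machines (
    hostname TEXT PRIMARY KEY,
    ip       TEXT NOT NULL,
    hardware TEXT NOT NULL,
    role     TEXT NOT NULL          -- e.g. 'mission-critical', 'desktop'
);
CREATE TABLE installed (
    hostname TEXT NOT NULL REFERENCES machines(hostname),
    package  TEXT NOT NULL,
    version  TEXT NOT NULL,
    PRIMARY KEY (hostname, package)
);
"""


def machines_needing_patch(db, package, vulnerable_versions, roles):
    """Hosts in the given roles that run a vulnerable version of the package."""
    v_marks = ",".join("?" * len(vulnerable_versions))
    r_marks = ",".join("?" * len(roles))
    rows = db.execute(
        f"""SELECT m.hostname FROM machines m
            JOIN installed i ON i.hostname = m.hostname
            WHERE i.package = ? AND i.version IN ({v_marks})
              AND m.role IN ({r_marks})
            ORDER BY m.hostname""",
        [package, *vulnerable_versions, *roles],
    )
    return [hostname for (hostname,) in rows]


def mark_updated(db, hostname, package, new_version):
    """Record a successful installation reported by the machine itself."""
    db.execute(
        "UPDATE installed SET version = ? WHERE hostname = ? AND package = ?",
        (new_version, hostname, package),
    )
```

Because the installed version is recorded rather than a simple "patched" flag, a later, corrected release of the same patch is just another query against the same tables.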

Perhaps the patch is to a device driver and only affects certain hardware versions. You don’t want to install it on unaffected machines; you never know when some “harmless” update will have bad side effects. (Many, many years ago, when reading the release notes for an update to a system I was administering—not “administrating”; that’s a dreadful neologism—I realized that the previous version of one device driver had never been tested. It couldn’t have been; for complicated reasons not worth explaining in this book, it couldn’t even have been loaded into memory; the linker would have rejected it.)

Configuration files are more difficult to handle than are simple software upgrades. For the latter, the vendor has (probably) done what’s needed to delete the old code and install the new. Configuration files, though, are per-machine; you cannot just overwrite them. With a suitable database, though [Bellovin and Bush 2009; Finke 1997a; Finke 2000; Finke 2003], you can build new config files on your master machine and ship them out to the right places.
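As a small illustration of building per-machine config files from database records, here is a Python sketch using the standard library's `string.Template`. The template text, the field names (`hostname`, `ip`, `ntp_server`), and both helper functions are hypothetical; the cited papers describe far more capable systems, and this is not a rendering of any of them.

```python
from string import Template

# Hypothetical template for one service's config file; the placeholders
# are filled from the per-machine record in the inventory database.
NTP_TEMPLATE = Template(
    "# Generated on the master machine; do not edit on $hostname\n"
    "server $ntp_server\n"
    "interface listen $ip\n"
)


def render_config(template, machine):
    """Build one machine's config; raises KeyError if the record lacks a field."""
    return template.substitute(machine)


def render_all(template, machines):
    """Map hostname -> rendered config text for every machine record."""
    return {m["hostname"]: render_config(template, m) for m in machines}
```

Using `substitute` rather than `safe_substitute` is a deliberate choice in this sketch: an incomplete database record then fails loudly on the master machine instead of shipping a config file with a hole in it.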

There are many more scenarios possible, but it should be clear by now that you need a real database as the foundation for your automation system. I’ve described a large, complex system, but even a much simpler version will go a long way towards simplifying the task. However, do not confuse a simple GUI form with a database; they’re not the same. (More generally, there is a persistent but erroneous notion that anything with a full-screen interface instead of a nasty command line is a priori and ipso facto user friendly. Good sysadmins are pickier about their friends; they’ll gladly trade a bit more time up front learning things and in exchange get a lot more power to do what they really want to do.) When you use a GUI, your options are limited to those anticipated by the forms’ designer. Your configurations and needs may not match.

Building and maintaining the proper database is neither easy nor cheap. Operations that were once simple, such as installing a single package on a single machine, are now more complicated and more expensive. You can’t just double-click on the distribution file; instead, you have to copy the file to your master sysadmin box, update the database to say where you want it, create templates for the configuration files, and then click the “Make it so” button. This is clearly not worthwhile if you’re running just a few computers, but if you’ve got a few hundred to deal with it’s another story.

There are, of course, plenty of large installations where this kind of setup makes sense. It’s done all too rarely, though, because of the aforementioned interrupts and alligators. Put another way, the sysadmin group rarely has the resources—people, money, and time—to build the infrastructure necessary to operate efficiently. Given that “efficiently” often translates to “securely,” this is a crucial deficiency. Foresighted management realizes this.

Alas, even if you get to build the necessary pieces, short-sightedness in the executive suite can doom the effort. I know of one group that did such a good job on their database-driven administration that the sysadmins appeared to be underemployed. The group was pared back sharply, the database moldered into uselessness—and suddenly, the sysadmins were overloaded and they had to ramp up again. By this time, though, the really competent people with vision were long gone. (The company itself? It’s now out of business, though not because of system administration woes.)

There’s a more subtle advantage to database-driven sysadmin tools: ultimately, you’ll need less code. Code is bad: it’s buggy, needs maintenance, etc. The more things you do via special-purpose tools, the more programs you’ll need to maintain. A new kind of device, or even a new version of an existing device, can require an entirely new program. By contrast, a database-driven design may require only new templates. If new code is needed, it’s likely to be small snippets in well-defined places; much of the complex, controlling logic is in the driver program that can remain unchanged.

As discussed in Section 16.3, automated log file analysis tools are necessary, too. I won’t belabor the point here, save to note that sysadmins are, as always, on the front lines when it comes to using these tools and analyzing their output.

15.4 Outsourcing System Administration

Given how vital system administration is to security, and given how difficult it can be to do it well, the question naturally arises of outsourcing the function. Is it a good idea? Often, the answer is “yes,” but there are some caveats.

As noted, system administration isn’t easy. If, however, you have the resources to build the necessary tools and databases, it can be done effectively and efficiently. Note, though, the conditional: if you have the resources, you can do it well. Note also what I said at the start of this chapter about the status of system administration in typical organizations: do you have the resources?

It would seem, then, that for most organizations it would make sense to outsource system administration. The issue, though, is not so simple, for one reason: policy. What are the policies of the providing organization? This includes not just security policies, though those are obviously crucial. The other (and often more important) issue is which versions of which operating systems and applications are supported, and with which configurations.

Consider an absurd generalization: a Linux-oriented site will almost certainly be unhappy with a provider that specializes in Windows. More plausible cases are both more likely and more problematic. Suppose, for example, that you have a crucial application that only runs on a particular release of a particular OS. Will the providing company support that version for long enough to permit you to migrate safely? This is the canonical example, but it’s almost too easy; you can always repatriate a single machine and run it yourself. Too much of that, though, and you have the worst of both worlds: expensively outsourced administration of desktop machines—the ones that are easy to handle with vendor tools or simple scripts—and homegrown (and probably manual) handling of the more complex servers. Alternately, consider conundrums like BYOD or telecommuting, where part of the trade-off is employee productivity or morale versus (perhaps notional) security: is your evaluation the same as some provider’s?

The trade-offs here are inherent in the problem. System administration at scale requires automated tools; automation, in turn, thrives on uniformity. This is, of course, as true for home-built automation as for a provider’s. The provider, operating at larger scale, may even support more flexibility than you can—but is it the right flexibility? Can they support the trade-offs and compromises that are right for your organization and your goals?

The decision is even more complex when one looks ahead. For the foreseeable future, there will be more heterogeneity, not less, in both device choice and configuration options. Which solution will be better able to adapt? Will you want to move ahead more slowly or more quickly than the provider?

There cannot be a single answer to any of these questions. What is essential is to understand the choices. Almost certainly, a good provider of system administration services can operate more efficiently than all but the largest, most sophisticated organizations can on their own. On the other hand, that efficiency can come at a serious price in your necessary functionality.

15.5 The Dark Side Is Powerful

No discussion of system administration is complete without a mention of the potential dangers posed by rogue system administrators. Sysadmins are generally all-powerful; that means that they’re all-powerful for evil as well as for good. On systems with all-powerful administrative logins, such as root on Unix-like machines, a sysadmin can access every single file, no matter the file permissions. Nor will encryption help if the rogue sysadmin also controls the machine on which decryption will take place; installing a keystroke logger or other form of key-stealing software is child’s play for any decent superuser. The threat is exactly the same as described in Section 6.4, only here the enemy is a highly privileged insider.

In the absence of other controls, then, one must assume that a system administrator has access to every file and resource accessible (or potentially accessible) to any member of the organization. The usual organizational solution to protecting high-value resources is some form of two-person control: make sure that another system administrator approves any changes. There have been research approaches to this problem [Potter, Bellovin, and Nieh 2009], though commercial solutions are few and far between. However, even if they do exist they may be only a theoretical fix; the realities of human behavior may vitiate the protection, especially in a small organization. Consider: one administrator, a person who, per the above, is probably overworked and underappreciated, is being asked to assume that a close colleague (who is suffering from the same slings and arrows of outrageous management) isn’t trustworthy. Will the (potential) rogue’s changes be checked carefully, with all the insult that that implies, or will the checker simply click “OK” without reading them? Nor is handing off just the double-checking to another organization’s administrators likely to work; too many routine changes require too much context for easy evaluation by an outsider.
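The mechanical part of two-person control is simple, as the following Python sketch suggests. The `ChangeRequest` class and its fields are hypothetical, and the sketch is not drawn from the cited research. It enforces only that someone other than the author signed off; it can do nothing about an approver who clicks “OK” without reading, which is exactly the human problem described above.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeRequest:
    """A proposed privileged change awaiting a second administrator."""
    author: str
    description: str
    approvals: set = field(default_factory=set)

    def approve(self, admin: str) -> None:
        """Record an approval; the author may not approve their own change."""
        if admin == self.author:
            raise PermissionError("author cannot approve their own change")
        self.approvals.add(admin)

    def may_apply(self) -> bool:
        """True once at least one other administrator has approved."""
        return len(self.approvals) >= 1
```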

The NSA realized the danger posed by rogue or subverted system administrators long ago [[Redacted] 1996]:

In their quest to benefit from the great advantages of networked computer systems, the U.S. military and intelligence communities have put almost all of their classified information “eggs” into one very precarious basket: computer system administrators. A relatively small number of system administrators are able to read, copy, move, alter, and destroy almost every piece of classified information handled by a given agency or organization. An insider-gone-bad with enough hacking skills to gain root privileges might acquire similar capabilities. It seems amazing that so few are allowed to control so much—apparently with little or no supervision or security audits. The system administrators might audit users, but who audits them?

This is not meant as an attack on the integrity of system administrators as a whole, nor is it an attempt to blame anyone for this gaping vulnerability. It is, rather, a warning that system administrators are likely to be targeted—increasingly targeted—by foreign intelligence services because of their special access to information. . .

. . . if the next Aldrich Ames turns out to be a system administrator who steals and sells classified reports stored on-line by analysts or other users, will the users be liable in any way? Clearly, steps must be taken to counter the threat to system administrators and to ensure individual accountability for classified information that is created, processed, or stored electronically.

Note carefully the part about targeting, and consider which class of enemies might do this. The NSA, of course, has always worried about APTs.

If you can’t check the work, can you check the people? That is, will employee background checks weed out the bad apples? While they may identify the obvious misfits, they’re by no means a guarantee; even the NSA has had its failures [Drew and Sengupta 2013]. Heath offers an interesting analysis [2005, p. 76], by comparing the societal rate of disqualifying factors with the rate found by investigators. In the 1960s, the NSA felt that it had to extirpate homosexuality from its ranks, since such “deviance” was an “obvious” security weakness. Despite an intensive effort, they found about 1% of the people they should have found. Some of that could, perhaps, be explained by self-selection bias, but probably not all of it. Furthermore, she notes that there is no good data showing the predictive value of any of the standard issues of concern such as heavy alcohol use.

The best answer is a combination of remedies. Certainly, for high-security enterprises some level of background checking is a good idea; banks, for example, are generally well advised to avoid hiring people with a lengthy criminal record for embezzlement. Similarly, some amount of two-person control can help, even if only done randomly. Finally, and perhaps most important, there should be some amount of auditing: what changes were made, and why? This in turn requires that all privileged operations be done in response to trouble tickets. These may be self-created (a good sysadmin will frequently detect problems that have not, or not yet, been noticed, or at least identified, by ordinary users; it’s preposterous to insist that such problems not be fixed), but the entry must exist nevertheless. (Should you insist that another sysadmin fix such problems? Perhaps, but the learning curve to understand the details of the issue may be steep.) In addition, all privileged operations must be carried out via an interface that logs precisely what was done. If a script is run, a copy of the script should be filed away. If new files are used to replace old ones, save both copies. This sort of structure will permit later analysis by an auditor.
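The record-keeping half of such an interface could be sketched in Python along these lines. The function name, the directory layout, the `.sh` suffix, and the record fields are all assumptions for illustration; the sketch only files the script and writes the log entry, and leaves actually running the script to the caller.

```python
import hashlib
import json
import time
from pathlib import Path


def record_privileged_run(audit_dir, ticket, admin, script_text):
    """Archive the script and append a log record; return the record."""
    if not ticket:
        raise ValueError("every privileged operation needs a trouble ticket")
    audit_dir = Path(audit_dir)
    (audit_dir / "scripts").mkdir(parents=True, exist_ok=True)
    digest = hashlib.sha256(script_text.encode("utf-8")).hexdigest()
    # File the exact script away under its hash, so that a changed
    # script is stored as a separate file rather than replacing this one.
    (audit_dir / "scripts" / f"{digest}.sh").write_text(script_text, encoding="utf-8")
    record = {
        "time": time.time(),
        "ticket": ticket,
        "admin": admin,
        "script_sha256": digest,
    }
    with open(audit_dir / "audit.log", "a", encoding="utf-8") as log:
        log.write(json.dumps(record, sort_keys=True) + "\n")
    return record
```

One caveat: a log kept on a machine where the administrator being audited has root can be edited by that administrator, so in practice the records would presumably need to be shipped somewhere that person cannot alter.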

No discussion of rogue system administrators would be complete without mentioning the most infamous one of all, Edward Snowden. The story isn’t complete; a good summary up to a certain point can be found in [Landau 2013; Landau 2014]. Without going into the larger issues raised by Snowden’s activities, there are some purely technical ones: how did he accomplish what he did, what safeguards should have been in place, and what could or should have been done by the NSA to prevent or detect such behavior, or at least figure out after the fact what was taken? None of these are easy. Here, though, it was a perfect storm of threat models: very sensitive data, a system administrator who turned to the Dark Side and impersonated other users [Esposito, Cole, and Windrem 2013], and more.