Chapter 1

Introduction to UTM (Unified Threat Management)

Information in this chapter:

• Basic Network Security Concepts

• Computer and Network Security Concepts and Principles

• Computer and Network Security Technology Concepts

• Network Security Technology Concepts

• Commonly used Computer and Network Security Terms

• Unified Threat Management (UTM) Foundations

• The History of the Unified Threat Management (UTM) Concept

• UTM vs other Security Architectures

- UTM vs Next-Generation Firewalls

- Protecting against Blended Threats

• Enhancing Operational Response Times (Meeting and Enhancing SLAs)

• Getting a Better Support Experience

- Increasing Network Availability

- Easier Investment Justification

• Current UTM Market Landscape

Introduction

Internet and Security

It’s 4 PM and you realize you forgot today was your wedding anniversary. Some years ago, this would have meant trouble at home with your spouse. Today, you can simply go to a site like Google or Bing and look for a way to make up for missing the occasion: find recommendations for a good restaurant, book seats for a nice show, send flowers, or even buy a gift you can pick up on your way home. You don’t even need to be at your office: you can do it from a cybercafe, a public kiosk, or conveniently from your smartphone while on the train or bus (never while driving your car!). This wouldn’t have been possible back in 1999.

Today we do many activities with computers connected to the Internet, and as new users and generations are brought online, many rely on the fact that computers and the Internet are there and will stay there. We go to school, shop, bank from home, chat, and interact on social networks every day, and people assume these services must be there: they take them for granted. However, the effort, technology, and skill required to keep all these services running on the Internet would surprise many. Worse, many of these newcomers begin their online life with little or no education on how to be a good Internet citizen (or netizen), which also means they don’t know the minimum measures they need to take to make their online experience a safe and pleasant one.

Among all the disciplines used to keep the Internet up and running, Internet Security is especially relevant: once we began trading over the Internet and money began to be represented by bits flowing on wires, being online became attractive to professional attackers and criminals as well. Internet Security is what helps keep the infrastructure up and running, and it is also the discipline that can keep the Internet a safe place for us, our kids, and future generations.

Basic Network Security Concepts

Many network security books, especially those dedicated to firewalls, begin explaining technical concepts right in the first chapter. This book is no exception. The material below may be too basic if you are already a computer security master looking to get directly into how Fortinet does things differently with FortiGates. If that is the case, it might be a good idea to jump to Chapter 2, FortiGate hardware platform overview. Otherwise, if you are relatively new to computer security or would like to review a different point of view on how to approach the computer and network security challenge, please keep reading: the author of this chapter enjoyed writing it and tried his best to explain everything in a fun way, whenever possible :-)

But before getting deeper into security, I would like to mention some areas where you will need expertise if you really want to be a network security star. If you are already seasoned, this may be a good reminder of areas you should keep up to date on. If you are new, it could provide a nice road map for going deeper into the field after you finish reading this book:

• Programming: Know at least one third-generation programming language, one fourth-generation programming language, and one scripting language. The distinction matters because each one will help you understand different concepts and will teach you to think in different ways when you analyze problems. Some options are C, SQL, and Korn shell scripting, but it could also be C#, Ruby or Python, and Oracle SQL. If you want to become a pen-tester, you might want to learn a bit of assembly language as well. Please note I said “know,” which is different from “master.” This is important because you probably don’t want to become a professional programmer, but you need to be fluent enough in the language to understand the code you read (exploit code or the source code of Web applications, for example), modify it to suit your needs, or automate tasks.

• Operating System: An operating system is the program loaded on a device that is responsible for managing its hardware and programs. Every device, from a cell phone to a game console, a tablet, or a personal computer, has an operating system. You need to understand how it works: memory management; I/O management in general; allocation of processor, disk, and other hardware resources; network interface management; and process management. As with programming, you probably don’t need to know how to tune kernel parameters or how to tune a server for maximum performance. However, you need to understand how the operating system works so you can identify and troubleshoot issues faster and secure an environment more effectively. It might not be a must, but experience with at least one of the following operating systems is highly desirable and will always come in handy: Microsoft Windows (any version) or a Un*x flavor such as HP-UX, IBM AIX, FreeBSD, OpenBSD, or GNU/Linux.

• Networking: One of the reasons organizations need security is the open nature of the Internet, which was designed to provide robust connectivity using a range of open protocols so problems could be solved through collaboration. Almost no computer works alone these days, so it’s important to know as much as you can about networking. One example of why: in the experience of this book’s authors, at least eighty percent (80%) of the issues typically faced with network security devices (especially devices with a firewall component like the FortiGate) are related to network issues rather than to product issues. Because of this, it’s important to know how switching technologies work, how ARP maps IP addresses to MAC addresses, how STP builds “paths” on a switching topology, 802.3ad and link aggregation, 802.1X and authentication, TCP and its connection states, and how static routing and dynamic routing with RIP, OSPF, and BGP work. All of these are important and, I would dare say, almost critical. And in networking you will need a bit more than just “understanding”: real-world experience configuring switches, routers, and other network devices will save your neck more than once while configuring network security devices.

Yes, as you can see, being a security professional requires a lot of knowledge on the technical side, but it is rewarding in the sense that you always get to look at the bigger picture and then, by analysis, cover all the parts to ensure everything works smoothly and securely.

Computer and Network Security Concepts and Principles

Having covered all that, we will now review security concepts. We won’t explain all their details here, since they are better illustrated in the chapters to come, where the concepts, technologies, and features mentioned are put to practical use. The definitions offered here are meant to hold throughout this book and may not necessarily match those commonly used by other vendors.

Computer and Network Security is a complex discipline. To become well versed in it, you need to truly understand how many things work: from programming to hardware architectures, networks, and even psychology. Going through the details of each field needed to consider yourself a security professional is far beyond the scope of a single book, let alone a section of a book chapter. If you are interested in knowing more about this field, there are many references out there. In general, the Common Body of Knowledge (CBK)1 proposed by organizations like (ISC)² or ISACA, and certifications like Certified Information Systems Security Professional (CISSP),2 Certified Information Systems Auditor (CISA),3 or Certified Information Security Manager (CISM)4 have a good reputation in the industry and are considered to cover a minimum body of knowledge that puts you on track to become a security professional.

This book also assumes that you already have some experience with operating systems, computers, and networks. We won’t explain basic concepts and technologies like netmasks, network segments, switches, or routers here. We will cover such concepts in context only when they matter to the explanation at hand.

Admittedly, even though an effort has been made to keep this book fun, the paragraphs below could be a bit boring if you have already worked with computer security for a while. That said, we will discuss some general security concepts here in an attempt to standardize their meaning in the context of this book.

The first concepts we need to review are probably those related directly to the Computer, Network, or Information Security fields. It’s very hard to say these days whether we should be talking about “Internet Security,” “Data Security,” “Information Security,” “Computer Security,” or “Network Security” when discussing this subject. However, for the purpose of this book we will use the terms “Network Security,” “Computer Security,” and “Information Security” more than the others, since this book discusses a technology whose purpose is to protect computer networks and digital information assets.

The order in which the concepts are presented matters, because we try to go from the most basic to the most complete and specific.

• Security: This is perhaps the very first term we need to define. For the purposes of this book, Security refers to a set of disciplines, processes, and mechanisms oriented to protect assets and add certainty to the behavior of those protected assets, so you can have confidence that your operations and processes will be deterministic. That is, you can predict the results by knowing the actions taken on an asset. It is commonly accepted that Security is an ongoing process, that it consists of Processes, People, and Mechanisms (Technology), and that it should be integrated into business processes. In that regard, the concept of Security is similar to the concept of Quality.

• Information Security or Network Security: The concept of security applied to computer networks. In other words, the set of disciplines, processes, and mechanisms oriented to protect computer network assets, such as PCs, servers, routers, and mobile devices. This includes the intangible parts that keep these physical components operating, such as programs, operating systems, configuration tables, databases, and data. The protection should be against threats and vulnerabilities, such as unauthorized access or modification, disruption, destruction, or disclosure.

• Confidentiality: A security property of information; it mandates that information should be known only by the authorized entities it is intended for. So, if a letter or e-mail should be known only by its author and recipient, ensuring confidentiality means nobody else should be able to read it.

• Integrity: A security property of information; data should always accurately model the objects or reality it represents, and should not be modified without the knowledge of the data owners and custodians. So, if an electronic spreadsheet holds a set of values that represent the money in a bank account or the inventory of a warehouse, those values should match what you would find if you counted every coin or bill in the bank deposit or every item in the warehouse.

• Availability: A security property of information; data and systems should be ready to be used when authorized users need them. If I need to print a letter from my computer, availability means the PC, the network, and the printer are all working for me: there is electricity to power the devices, the network is properly configured to carry data from my PC to the printer, and the printer has enough ink and paper. Availability should not be confused with High Availability, which is a related but different concept.

• Authentication: The process of identifying an entity and making that entity prove it is who it claims to be. One of the most common and simple authentication requests occurs when someone knocks at your door. Before opening the door you may ask “who is this?” The person behind the door then claims an identity (“It’s Joe”), and you can verify it by the voice you hear. If you are unsure, you will ask for a second round of authentication: “Joe who?” “Joe the plumber” might ring a bell this time and confirm the identity of the person you were waiting for.

• Authorization: The process of granting an entity access to resources or assets. So, Joe the plumber is at your home to help you fix a faucet in the bathroom. You will authorize him to be in the bathroom and perhaps allow him to use your wrench or screwdriver. But you won’t let him into the master bedroom, nor allow him to use your computer.

• Auditing: The process of ensuring every activity leaves a trace in such a way that someone can reconstruct what actually happened: what was done, by whom, when, and how should be recorded.

• Threat: An inherent danger to an object that, due to its nature, remains constant throughout the life of that object. For example, a car could be stolen regardless of whether it is well guarded and taken care of, brand new or old. You can increase or decrease the likelihood of the danger materializing, but the danger will always be there.

• Vulnerability: A specific weakness of an object due to a certain condition of the environment where the object or asset is placed, stored, used, or needed. For example, a car can be stolen more easily if the doors are not locked, it has no alarm, the windows are open, or it is parked in a bad neighborhood. All these are vulnerabilities that increase the likelihood that the threat (the car being stolen) is realized.

• Attack: An action, intentional or incidental, successful or not, performed against an object or asset that exploits a given vulnerability in an attempt to realize a threat. An attack is when a thief actually uses an open window to open the car’s door and try to take the car away.
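To make Authentication, Authorization, and Auditing more concrete, here is a minimal Python sketch of the Joe-the-plumber example. It is an illustration under stated assumptions, not how FortiGate implements these functions: the credential store, the permission table, the work factor, and the names `authenticate`, `authorize`, and `audit` are all hypothetical. Passwords are stored as salted PBKDF2 digests and compared in constant time, which are common practices for password-based authentication.

```python
import hashlib
import hmac
import os
from datetime import datetime, timezone
from typing import Dict, List, Set, Tuple

ITERATIONS = 100_000  # PBKDF2 work factor (assumed value for this sketch)

def make_credential(password: str) -> Tuple[bytes, bytes]:
    """Store a random salt and a salted hash, never the password itself."""
    salt = os.urandom(16)
    return salt, hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)

# Hypothetical identity store and authorization table for the plumber example.
CREDENTIALS: Dict[str, Tuple[bytes, bytes]] = {"joe": make_credential("plumber-secret")}
PERMISSIONS: Dict[str, Set[Tuple[str, str]]] = {
    "joe": {("enter", "bathroom"), ("use", "wrench"), ("use", "screwdriver")},
}
AUDIT_LOG: List[dict] = []

def audit(who: str, what: str, how: str, result: str) -> None:
    """Auditing: record what was done, by whom, when, and how."""
    AUDIT_LOG.append({"when": datetime.now(timezone.utc).isoformat(),
                      "who": who, "what": what, "how": how, "result": result})

def authenticate(user: str, password: str) -> bool:
    """Authentication: the user claims an identity and proves it with a secret."""
    record = CREDENTIALS.get(user)
    ok = False
    if record is not None:
        salt, expected = record
        candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
        ok = hmac.compare_digest(candidate, expected)  # constant-time comparison
    audit(user, "log in", "password", "success" if ok else "failure")
    return ok

def authorize(user: str, action: str, resource: str) -> bool:
    """Authorization: is this (already authenticated) user allowed to do this?"""
    allowed = (action, resource) in PERMISSIONS.get(user, set())
    audit(user, f"{action} {resource}", "permission table", "allowed" if allowed else "denied")
    return allowed
```

Every decision, successful or not, leaves an audit record; that is what allows someone to later reconstruct what was done, by whom, when, and how.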

Computer and Network Security Technology Concepts

Having outlined several important network security concepts, we should define several Computer and Network Security Technologies, for three reasons: (1) we will be talking about them throughout the book, since Fortinet products, especially FortiGate, the subject of this book, implement these technologies. (2) Since many vendors nowadays offer overlapping features, often using different terms to mean the same thing and sometimes working against each other, it is good to set expectations: to define what can be expected of a feature, how it complements others, and what you shouldn’t expect of it (and why). (3) All of these are typically network border protection technologies, meaning they are typically deployed between the internal (protected) network and the outside network, where we would expect the attackers to sit. This is no longer strictly the case, as we will review in more detail later; in the meantime, we will treat the technologies below as border technologies to make the explanation easier to follow.

Again, the order in which the concepts appear is meaningful in the sense of going from the fundamental to the most specific.

Firewall: The firewall is probably the most basic, necessary, and widely deployed network security technology. Its basic responsibility is to allow or deny communications entering or leaving a host, a network, or a group of networks. Communications are allowed or denied based on a set of policies or rules. These rules can be simple (similar to Access Control Lists on a switch or router) or quite complex, specifying times, users, network segments, and much richer connection-context information. The firewall is also responsible for checking the integrity of some communications, ensuring received connections adhere to network standards and are not performing suspicious or obviously dangerous activity. The most common firewall technology used worldwide is Stateful Inspection, which tracks connections by state, recorded in a session table. Firewalls are also frequently tasked with authentication duties, since authentication is a way to track the origin of a connection. Since the firewall is usually the foundation for other inspections, it must be as fast as possible, preferably running at wire speed across a broad range of packet sizes; otherwise the firewall becomes a network bottleneck, raising the temptation to remove it.
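The following minimal Python sketch illustrates the session-table idea behind Stateful Inspection, assuming a hypothetical first-match rule list with an implicit deny at the end. Once an outbound connection is accepted by a rule, the reply traffic is accepted because it matches an existing session, not because a rule allows it. The classes `Packet`, `Rule`, and `StatefulFirewall` are illustrative names; real firewalls also track protocol state (for example TCP handshakes and teardown) and expire idle sessions, which this sketch omits.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional, Set, Tuple

@dataclass(frozen=True)
class Packet:
    src: str
    sport: int
    dst: str
    dport: int
    proto: str = "tcp"

@dataclass(frozen=True)
class Rule:
    src_net: str
    dst_net: str
    dport: Optional[int]  # None matches any destination port
    action: str           # "accept" or "deny"

    def matches(self, p: Packet) -> bool:
        return (ip_address(p.src) in ip_network(self.src_net)
                and ip_address(p.dst) in ip_network(self.dst_net)
                and (self.dport is None or self.dport == p.dport))

FlowKey = Tuple[str, str, int, str, int]

class StatefulFirewall:
    def __init__(self, rules: List[Rule]):
        self.rules = rules
        self.sessions: Set[FlowKey] = set()  # the session table

    def process(self, p: Packet) -> str:
        key = (p.proto, p.src, p.sport, p.dst, p.dport)
        reply_of = (p.proto, p.dst, p.dport, p.src, p.sport)
        # 1. Packets belonging to a known session skip the rule base.
        if key in self.sessions or reply_of in self.sessions:
            return "accept (session)"
        # 2. New connections are checked against the rules; first match wins.
        for rule in self.rules:
            if rule.matches(p):
                if rule.action == "accept":
                    self.sessions.add(key)
                return rule.action
        # 3. Nothing matched: implicit deny.
        return "deny"

# Internal hosts may open HTTPS connections to anywhere; nothing else is allowed.
fw = StatefulFirewall([Rule("10.0.0.0/24", "0.0.0.0/0", 443, "accept")])
```

An unsolicited connection from the outside is denied even though it uses the same addresses as an allowed session, because it does not belong to any entry in the session table.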

VPN (Virtual Private Network): Allows communications between two or more given points to be private. Such points could be a host, a network, or a group of networks. The communications are secured using one of a range of available cryptographic algorithms that obscure the information being transferred, providing privacy, and also ensure it is not modified while in transit. The most common VPN technologies are IPSec VPN and SSL VPN. When the information exchange occurs only between specific parties, the VPN could carry most of the traffic, so it is highly important to have the best possible performance, and wire speed is preferred. A VPN is a perfect addition to a firewall device, since that way the firewall can inspect the traffic going inside the VPN, and the VPN can protect the traffic allowed by the firewall.
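Encryption provides the privacy part of a VPN; the “not modified while in transit” part is usually provided by a keyed message authentication code such as HMAC (or by an authenticated encryption mode). The Python sketch below shows only that integrity check, since Python’s standard library has no symmetric cipher; the key and messages are hypothetical. It also shows why a plain hash is not enough in transit: an attacker who modifies a message can simply recompute its SHA-256 digest, but cannot compute a valid HMAC tag without the shared key.

```python
import hashlib
import hmac

def sha256_digest(data: bytes) -> bytes:
    """Unkeyed hash: anyone, including an attacker, can compute it."""
    return hashlib.sha256(data).digest()

def hmac_tag(key: bytes, data: bytes) -> bytes:
    """Keyed integrity tag: only holders of `key` can compute it."""
    return hmac.new(key, data, hashlib.sha256).digest()

def verify(key: bytes, data: bytes, tag: bytes) -> bool:
    # compare_digest avoids leaking timing information about where the tags differ
    return hmac.compare_digest(hmac_tag(key, data), tag)

# Hypothetical shared key negotiated by the two VPN endpoints.
KEY = b"example-shared-key"
original = b"transfer 100 to account 42"
tag = hmac_tag(KEY, original)

# An attacker in the path alters the message and recomputes the unkeyed digest.
forged = b"transfer 900 to account 66"
forged_digest = sha256_digest(forged)

digest_check_passes = sha256_digest(forged) == forged_digest  # True: a plain hash proves nothing here
hmac_check_passes = verify(KEY, forged, tag)                 # False: tampering is detected
```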

Traffic Shaping: A module that regulates the way network resources are assigned to the entities requesting them. Once bandwidth-intensive applications such as file sharing or Peer-to-Peer (P2P) applications had to compete with bandwidth-sensitive or latency-sensitive applications such as Voice over IP (VoIP) on a network with limited capacity, it became necessary to have a way to regulate how bandwidth was assigned. Traffic Shaping mechanisms do this by delaying packets belonging to applications, users, or IP addresses labeled with low priority. In a security environment, traffic found “clean” by security mechanisms can then be prioritized according to business rules, which can help maintain availability for critical services. For this reason, it makes sense to deploy a Traffic Shaper on the same physical device that runs the firewall.
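One common way to implement “delay packets labeled with low priority” is a token bucket: credit (in bytes) accumulates at a configured rate up to a burst size, and a packet that finds too little credit must wait. The sketch below is a hypothetical Python illustration of that technique, not FortiGate’s shaping algorithm; `rate` and `capacity` are assumed parameters, and the caller supplies the current time so the behavior is deterministic.

```python
from dataclasses import dataclass, field

@dataclass
class TokenBucket:
    """Token-bucket shaper: returns how long each packet must be delayed."""
    rate: float                       # bytes of credit added per second (the shaped bandwidth)
    capacity: float                   # largest burst, in bytes, sent without delay
    tokens: float = field(init=False)
    last: float = 0.0                 # time of the previous refill, in seconds

    def __post_init__(self) -> None:
        self.tokens = self.capacity   # start with a full bucket

    def delay_for(self, size: int, now: float) -> float:
        # Refill for the time elapsed since the last packet, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        # Charge the packet; a negative balance is credit borrowed from the future,
        # and the time needed to repay it at `rate` is how long the packet must wait.
        self.tokens -= size
        return 0.0 if self.tokens >= 0 else -self.tokens / self.rate

# Illustrative numbers only: 1,000 bytes per second with a 1,000-byte burst.
bucket = TokenBucket(rate=1000.0, capacity=1000.0)
```

In a shaping policy, a bucket like this would be attached only to the low-priority class (for example P2P traffic), leaving latency-sensitive traffic such as VoIP unshaped or on a much larger bucket.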

IPS (Intrusion Protection System): Also known as Intrusion Prevention System (IPS) or Intrusion Detection and Protection (IDP) System. An IPS should not be confused with an IDS (Intrusion Detection System): an IDS can only detect, not react, while an IPS can both detect and react to an event. A network-based IPS performs deep analysis of traffic so network-based attacks can be detected. These attacks often try to take advantage of a known vulnerability in the operating system or application software. One typical IPS technique is recognizing patterns of known bad behavior, so when an attack is performed it can be caught simply by identifying its “signature” (the known pattern). This is known as misuse detection. Another common technique involves learning or pre-configuring what normal behavior looks like and then detecting deviations from it, such as deviations from a protocol standard or from known environment statistics. This is known as anomaly detection. Robust Intrusion Protection Systems use both techniques to increase effectiveness. An IPS is a great companion to a firewall because allowed traffic can then be further inspected, and attacks (intentional or accidental) generated by trusted sources can be detected and stopped. An IPS is sometimes used to measure firewall effectiveness, by measuring attacks before and after the firewall blocks traffic.
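The two detection techniques described above can be sketched in a few lines of Python. Both the signatures and the baseline are hypothetical: misuse detection looks for known byte patterns in a payload, while anomaly detection flags an observation (for example, connections per minute from one host) that lies more than a chosen number of standard deviations from a learned baseline. Production IPS engines use far richer protocol decoding and statistics; this only shows the shape of each approach.

```python
import statistics
from typing import Dict, List

# Misuse detection: hypothetical signatures of known attacks (byte patterns).
SIGNATURES: Dict[str, bytes] = {
    "path-traversal": b"../../",
    "sql-injection": b"' OR '1'='1",
}

def misuse_detect(payload: bytes) -> List[str]:
    """Return the names of all signatures found in the payload."""
    return [name for name, pattern in SIGNATURES.items() if pattern in payload]

def anomaly_detect(baseline: List[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag `observed` if it lies more than `threshold` standard deviations from the baseline mean."""
    mean = statistics.mean(baseline)
    spread = statistics.pstdev(baseline)
    if spread == 0:
        return observed != mean
    return abs(observed - mean) / spread > threshold
```

For example, with a learned baseline of 90 to 110 connections per minute, an observation of 104 is normal, while 500 is flagged even though no signature matched.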

Application Control: A module that allows or blocks traffic from a given application, regardless of the port or protocol used to transfer that traffic. Since applications increasingly run their traffic over the same network ports to ensure they work, it is important to have a mechanism that can effectively identify and control them. Application Control was created to meet this need, and it does so in a very similar way to how an IPS recognizes attacks: by creating “signatures” of the traffic generated by applications and then recognizing those signatures in the traffic flow. Application Control is a great companion to a firewall because it allows deeper enforcement, extending the criteria for allowing or denying traffic to the application level.
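The Python sketch below contrasts a port-based rule with signature-based application identification. The signatures are simplified versions of well-known protocol openings (the BitTorrent handshake begins with the byte 19 followed by “BitTorrent protocol”, and SSH peers announce themselves with an “SSH-” identification string); the function names and the blocked-application set are hypothetical, and real Application Control databases are far larger and more precise.

```python
from typing import Callable, List, Tuple

# Hypothetical, simplified application signatures based on how each protocol opens a flow.
APP_SIGNATURES: List[Tuple[str, Callable[[bytes], bool]]] = [
    ("BitTorrent", lambda data: data.startswith(b"\x13BitTorrent protocol")),
    ("SSH", lambda data: data.startswith(b"SSH-")),
    ("HTTP", lambda data: data.split(b" ", 1)[0] in {b"GET", b"POST", b"HEAD", b"PUT"}),
]
BLOCKED_APPS = {"BitTorrent"}

def port_rule(dport: int) -> str:
    """Traditional port-based decision: web ports are open, everything else is closed."""
    return "accept" if dport in (80, 443) else "deny"

def identify(first_bytes: bytes) -> str:
    """Name the application from the first bytes of the flow, ignoring the port."""
    for name, matches in APP_SIGNATURES:
        if matches(first_bytes):
            return name
    return "unknown"

def app_rule(first_bytes: bytes) -> str:
    return "deny" if identify(first_bytes) in BLOCKED_APPS else "accept"

# A BitTorrent handshake sent to port 80 passes the port rule but not Application Control.
handshake = b"\x13BitTorrent protocol" + bytes(8)
```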

AntiSpam: A module that detects and removes unwanted e-mail (spam) messages. It applies verification mechanisms to determine whether an e-mail is spam. Some of these mechanisms are quite simple, like rejecting messages from a list of known offenders. Others involve comparing the message received against a database of known bad messages, or checking the sender against a centralized list of mail servers known to send spam. Since spam typically comes from outside the organization, it makes sense to scan mail traffic at the network border right after it has been authorized by the firewall. Preventing spam from being sent from your own network also matters when considered in the wider context of your Internet reputation.
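The following hypothetical Python sketch combines the three checks mentioned above: a local list of known offenders, a database of known bad messages (here, exact SHA-256 digests of message bodies, the simplest possible fingerprint, which a one-byte change defeats), and a centralized DNS-based blocklist (DNSBL). By DNSBL convention, the sender’s IPv4 octets are reversed and prepended to the list’s zone, and the resulting name is looked up in DNS. The zone `dnsbl.example.org` and the injected `is_listed` lookup function are assumptions so the example runs without network access.

```python
import hashlib
from ipaddress import ip_address
from typing import Callable

# Hypothetical local data; real deployments get these from regularly updated databases.
KNOWN_OFFENDERS = {"198.51.100.7"}
KNOWN_SPAM_DIGESTS = {hashlib.sha256(b"You have won a prize! Click here.").hexdigest()}
DNSBL_ZONE = "dnsbl.example.org"  # placeholder zone, not a real blocklist

def dnsbl_query_name(ip: str, zone: str = DNSBL_ZONE) -> str:
    """Reverse the IPv4 octets and append the blocklist zone (DNSBL convention)."""
    addr = ip_address(ip)
    if addr.version != 4:
        raise ValueError("this sketch only handles IPv4")
    return ".".join(reversed(str(addr).split("."))) + "." + zone

def classify(sender_ip: str, body: bytes, is_listed: Callable[[str], bool]) -> str:
    """`is_listed` stands in for the DNS lookup: a listed address resolves, an unlisted one does not."""
    if sender_ip in KNOWN_OFFENDERS:
        return "spam (known offender)"
    if hashlib.sha256(body).hexdigest() in KNOWN_SPAM_DIGESTS:
        return "spam (known message)"
    if is_listed(dnsbl_query_name(sender_ip)):
        return "spam (DNS blocklist)"
    return "clean"
```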

Antivirus: Viruses were probably one of the first problems computer users faced once computers became personal. As a result, viruses are probably the most diverse form of security-related computer problem, and Antivirus is probably the most widely known protection mechanism. Basically, an Antivirus is responsible for detecting, removing, and reporting on malicious code. Malicious code (malware) can be self-replicating code that attaches itself to valid programs (viruses), programs that appear to be valid applications so users execute them (Trojan horses), or other types of malware such as spyware or adware. While Antivirus is typically deployed at the host level, a network Antivirus, a mechanism that detects and stops malicious code at the point where content leaves or enters a network, becomes important when it is necessary to ensure all the computers on that network have the same level of protection, likely in addition to the protection already deployed on the hosts. Antivirus is a great companion to a firewall because it can look for malicious code in very specific ways in traffic that has been allowed. To do so effectively, however, it needs high performance so it doesn’t become a bottleneck: this is why it needs to be accelerated, and why it is more effective if it only inspects traffic already approved by a faster security mechanism, such as a firewall.
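A network Antivirus sees content as a stream of packets or chunks rather than as a complete file, so a signature can be split across two chunks. The hypothetical Python sketch below shows one simple way to handle this: it keeps the last few bytes of each chunk and prepends them to the next one, so a pattern straddling the boundary is still found. The signatures are made-up byte strings, not real malware patterns, and real engines combine signatures with many other techniques.

```python
from typing import Dict, List, Set

# Made-up signatures for illustration only; they are not real malware patterns.
SIGNATURES: Dict[bytes, str] = {
    b"EVIL_PAYLOAD_V1": "Demo.Virus.A",
    b"\xde\xad\xbe\xef\x90\x90": "Demo.Trojan.B",
}

class StreamScanner:
    """Scan a byte stream chunk by chunk without missing signatures split across chunks."""

    def __init__(self, signatures: Dict[bytes, str]):
        self.signatures = signatures
        # Carry over one byte less than the longest signature: enough to complete any split match.
        self.overlap = max(len(sig) for sig in signatures) - 1
        self.tail = b""
        self.reported: Set[str] = set()

    def feed(self, chunk: bytes) -> List[str]:
        window = self.tail + chunk
        new_hits = [name for sig, name in self.signatures.items()
                    if sig in window and name not in self.reported]
        self.reported.update(new_hits)  # report each detection once per stream
        self.tail = window[-self.overlap:] if self.overlap > 0 else b""
        return new_hits

scanner = StreamScanner(SIGNATURES)
hits_first = scanner.feed(b"harmless data EVIL_PAY")   # the signature starts here...
hits_second = scanner.feed(b"LOAD_V1 more data")       # ...and ends here
```

Carrying state between chunks is cheap, but every byte still has to be examined, which is one reason network Antivirus performance matters so much.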

DLP (Data Leak Prevention, Data Leak Protection): A module that helps track specific content entering or leaving the network. It can look for very specific content, such as words inside an e-mail message or phrases within a PDF file. As regulation increased, DLP became more important, since it can help distinguish legitimate from illegitimate behavior. A typical example is an employee who sends classified information, such as customer lists, checking account balances, or credit card numbers, from his corporate e-mail: it is not a virus and it is certainly not a network attack. However, if this is done by someone from the technical department rather than the accounting department, you might have reason to be concerned. This is the generic problem DLP tries to solve: detecting bad human behavior that does not necessarily break a rule from a technical standpoint. For this reason, DLP is a great addition to firewall, IPS, and Antivirus devices, because it complements them by detecting things those mechanisms, by their nature, simply can’t.
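Detecting credit card numbers is a classic DLP task. A common approach, sketched below in Python under our own assumptions (the regular expression and the function names are hypothetical), is to find runs of 13 to 19 digits, optionally separated by spaces or dashes, and keep only those that pass the Luhn checksum used by payment card numbers. The checksum filters out most random digit sequences, such as order numbers, that a pattern alone would flag.

```python
import re
from typing import List

# 13 to 19 digits, each optionally followed by a space or dash.
CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")

def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right, sum, check divisibility by 10."""
    total = 0
    for position, ch in enumerate(reversed(digits)):
        value = int(ch)
        if position % 2 == 1:
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0

def find_card_numbers(text: str) -> List[str]:
    """Return candidate card numbers (separators removed) that pass the Luhn check."""
    hits = []
    for match in CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits
```

The number 4111 1111 1111 1111 used in the checks is a widely published test card number, not a real account.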

Web Content Filter (URL Filter): A mechanism that allows or blocks Web traffic based on the type of content. The most common method is classifying web pages into categories. These categories are usually broad, such as Games, Finance and Banking, File Sharing, Storage, or Phishing websites. It makes sense to deploy a Web Content Filter on the same device where a firewall is running, because the Web Content Filter can greatly enhance the granularity of the web-surfing policy. Likewise, it makes a lot of sense to deploy a network Antivirus and an IPS together with the web content filter, because together they help ensure users get clean traffic.
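The Python sketch below shows category-based filtering in its simplest form. The rating table, the policy, and the function names are hypothetical and held locally; commercial filters typically query a vendor’s rating service instead. The lookup walks from the full host name up to its parent domains, so `www.bank.example` inherits the rating of `bank.example`, and unrated sites fall back to a configurable default action.

```python
from typing import Dict

# Hypothetical local rating table and policy.
CATEGORIES: Dict[str, str] = {
    "games.example": "Games",
    "bank.example": "Finance and Banking",
    "phish.example": "Phishing",
}
POLICY: Dict[str, str] = {"Games": "block", "Phishing": "block", "Finance and Banking": "allow"}

def categorize(host: str) -> str:
    """Try the full host name first, then each parent domain."""
    labels = host.lower().rstrip(".").split(".")
    for i in range(len(labels)):
        candidate = ".".join(labels[i:])
        if candidate in CATEGORIES:
            return CATEGORIES[candidate]
    return "Unrated"

def filter_request(host: str, unrated_action: str = "allow") -> str:
    category = categorize(host)
    if category == "Unrated":
        return unrated_action
    return POLICY.get(category, "allow")
```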

Cache: A cache system stores a local copy of content that might be requested by more than one user, so the next time a user requests it, the content doesn’t have to be downloaded from the remote site, saving time and bandwidth. The most common type of cache is the Web Cache, but it is not the only one. It makes sense to deploy a cache system along with other security technologies like a firewall, an Antivirus, and a web content filter, because it is more efficient to store locally content that has already been authorized and inspected.
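A minimal Web cache keyed by URL can be sketched in Python as follows. The fixed time-to-live, the injected `fetch` function, and the class name `WebCache` are assumptions for illustration; real Web caches follow HTTP caching rules (such as `Cache-Control` headers) to decide what may be stored and for how long.

```python
import time
from typing import Callable, Dict, Tuple

class WebCache:
    """Minimal URL-keyed cache with a fixed time-to-live (hypothetical sketch)."""

    def __init__(self, fetch: Callable[[str], bytes], ttl: float = 300.0,
                 clock: Callable[[], float] = time.monotonic):
        self.fetch = fetch      # function that downloads content from the remote site
        self.ttl = ttl          # seconds a stored copy is considered fresh
        self.clock = clock
        self.store: Dict[str, Tuple[float, bytes]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> bytes:
        now = self.clock()
        entry = self.store.get(url)
        if entry is not None and now - entry[0] < self.ttl:
            self.hits += 1           # served locally: no download over the WAN
            return entry[1]
        self.misses += 1
        body = self.fetch(url)       # in a UTM, only content already allowed and inspected gets here
        self.store[url] = (now, body)
        return body

# Usage with a fake clock and a fake origin server so the behavior is deterministic.
fake_time = [0.0]
downloads = []

def origin(url: str) -> bytes:
    downloads.append(url)
    return b"<html>content of " + url.encode() + b"</html>"

cache = WebCache(origin, ttl=60.0, clock=lambda: fake_time[0])
cache.get("http://example.com/")
cache.get("http://example.com/")   # second request is a cache hit
fake_time[0] = 61.0
cache.get("http://example.com/")   # the copy expired, so it is fetched again
```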

WAN Optimization: A series of mechanisms that help reduce the amount of traffic passing through WAN links, reducing the use of expensive bandwidth. Caching is one of the mechanisms used for WAN Optimization, but not the only one; other techniques include TCP optimization, deduplication, and byte-caching. While this is not necessarily a security technology per se, WAN optimization techniques can help reduce the possibility of certain attacks, such as Denial of Service. The real benefit of this technology, however, is the possibility of reducing WAN link costs and, for certain applications such as Web applications, giving users the “illusion” that the application is faster than it really is. Since WAN Optimization technologies assume the traffic they process is valid, it is a good idea to ensure that such traffic is, in effect, clean. After all, it makes no sense to “optimize” an attack or a spreading virus so it gets better network response times. Because of this, and because WAN Optimization is typically deployed at the network border, it makes sense to integrate WAN Optimization into a network security device, especially one that can provide Firewall, Network Antivirus, and IPS.
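Deduplication and byte-caching work by sending a short reference instead of data the other end of the WAN link has already seen. The hypothetical Python sketch below splits data into fixed-size chunks, sends each new chunk once, and afterwards sends only its 32-byte SHA-256 digest; it assumes both WAN optimizers keep their chunk stores in sync. Fixed-size chunking is a simplification: a single inserted byte shifts every later chunk, which is why content-defined chunking is often preferred.

```python
import hashlib
from typing import Dict, List, Set, Tuple

CHUNK_SIZE = 256  # fixed-size chunks keep the sketch simple

Token = Tuple[str, bytes]  # ("raw", chunk bytes) or ("ref", 32-byte digest)

def encode(data: bytes, sent: Set[bytes]) -> List[Token]:
    """Sender side: replace chunks the peer has already received with their digest."""
    tokens: List[Token] = []
    for i in range(0, len(data), CHUNK_SIZE):
        chunk = data[i:i + CHUNK_SIZE]
        digest = hashlib.sha256(chunk).digest()
        if digest in sent:
            tokens.append(("ref", digest))
        else:
            sent.add(digest)
            tokens.append(("raw", chunk))
    return tokens

def decode(tokens: List[Token], store: Dict[bytes, bytes]) -> bytes:
    """Receiver side: rebuild the data, remembering every raw chunk for later references."""
    parts = []
    for kind, value in tokens:
        if kind == "raw":
            store[hashlib.sha256(value).digest()] = value
            parts.append(value)
        else:
            parts.append(store[value])
    return b"".join(parts)

def wire_size(tokens: List[Token]) -> int:
    """Bytes sent over the WAN, ignoring framing overhead."""
    return sum(len(value) for _, value in tokens)

# The same 1 KiB document crosses the WAN link twice.
document = b"".join(hashlib.sha256(str(i).encode()).digest() for i in range(32))
sender_state: Set[bytes] = set()
receiver_state: Dict[bytes, bytes] = {}
first_transfer = encode(document, sender_state)
received_first = decode(first_transfer, receiver_state)
second_transfer = encode(document, sender_state)
received_second = decode(second_transfer, receiver_state)
```

In this example the first transfer sends all 1,024 bytes, while the repeated transfer sends only four 32-byte references (128 bytes).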

SSL Inspection: Provides the ability to inspect content encrypted by applications using the Secure Sockets Layer (SSL) protocol. To perform this function, the communication must flow through the inspecting device, which performs a man-in-the-middle interception of the SSL session: it terminates the client’s encrypted session, inspects the decrypted content, and establishes a separate encrypted session with the server. With this ability, various security inspection features can be applied to the content, such as DLP, Web content filtering, and Antivirus. Examples of SSL-based encrypted applications are Web browsing using HTTPS, file transfers with FTPS, and encrypted e-mail with SMTPS, POP3S, and IMAPS.

If you look back to, say, the mid-1990s or early 2000s, you would probably discover that many of the technologies mentioned existed as independent products. At some point, these technologies began to merge, and since then an understanding of the security value and efficiencies achieved means that today it is not unusual to find them all integrated into a single device and a single product. But these are not the only ones, as we will see now…

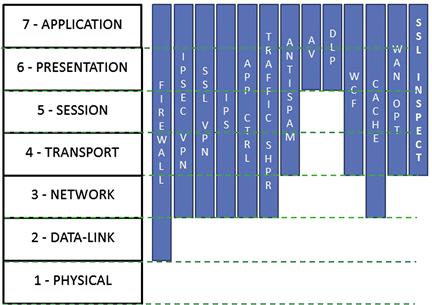

All the above-described mechanisms work on different layers of the ISO model, shown in Figure 1.1.

Figure 1.1 OSI Model and Security Features Positioning

Network Security Technology Concepts

The network security technologies mentioned in the section above are commonly deployed at the network’s border, mainly for historical reasons: the first approach to network security was to define an internal network, considered protected and secure, and an evil external network where all the bad guys were waiting for an opportunity to attack. This way of thinking meant the natural place for a device securing the defended network was the network border or frontier. But nowadays this is no longer the case. Several factors have forced the deployment of network-border technologies within what was traditionally considered an internal network: increases in the performance of security inspection devices, which allow protecting ever-higher bandwidths; the need to segment networks that grow in complexity as new services are added; the difficulty of defining where the network starts and ends; more users and additional applications; the difficulty of telling good from evil in all scenarios; and the always-evolving nature of threats, which increases potential attack vectors.

In addition to the technologies discussed previously, there are others that are better suited to internal security deployments:

• Wireless Controller: Wireless networks are popular, and the ability to centrally manage the wireless infrastructure, providing services such as user roaming and avoiding signal conflicts or interference, becomes more and more important for organizations. Managing all these wireless network functions is the job of a Wireless Controller. The importance of security in such environments increases as well: wireless networks can be an easy target because they don’t require potential attackers to be on the physical premises where the network is; being within signal coverage is enough. For all these reasons, putting a Wireless Controller on a device that also does security makes sense: the secure wireless controller ensures the wireless network is available with good service quality, while allowed traffic is cleaned to ensure no attack, misuse, or abuse is conducted, purposely or inadvertently.

• Network Access Control (NAC) or Network Access Protection (NAP): This concept was popular some years ago but was abandoned due to implementation complexities. However, its ideas remain valid today in many ways. The idea of NAC or NAP is to evaluate each device joining the network to decide whether it is compliant with a set of requirements, such as operating system version, a running Antivirus, and an enabled host firewall. Once a device is evaluated, compliant devices are allowed and non-compliant devices are quarantined. It is a good idea to have, on a local security device, a way to ensure that internal devices meet a minimum security posture, so that the internal segment does not become a problem for the rest of the network and, if a security problem is detected, the threat can be contained.

• Vulnerability Management (VM) or Vulnerability Assessment System (VAS): This technology identifies known vulnerabilities in a set of devices so they can be remediated. Typically, these systems keep a periodically updated list of known vulnerabilities along with their impact ratings (how dangerous each problem is). VAS systems are typically used to analyze internal networks, and it makes sense to have one alongside your internal network security device.
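The NAC and VAS ideas above can be combined in a short Python sketch: each device joining the network is checked against a minimum posture (operating system version, running Antivirus, enabled host firewall) and against a vulnerability list with impact ratings, and is then either allowed or quarantined. The posture thresholds, device data, and vulnerability entries are all hypothetical (the IDs are placeholders, not real CVE numbers), and matching on exact versions is a simplification of what real assessment tools do.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

@dataclass
class Device:
    name: str
    os_version: Tuple[int, int]
    antivirus_running: bool
    host_firewall_enabled: bool
    software: Dict[str, str] = field(default_factory=dict)  # product -> installed version

# Hypothetical policy and vulnerability feed (placeholder IDs, not real CVEs).
MINIMUM_OS = (10, 0)
VULNERABILITY_FEED = [
    {"id": "VULN-0001", "product": "webserver", "version": "2.2", "impact": "critical"},
    {"id": "VULN-0002", "product": "pdfreader", "version": "9.1", "impact": "medium"},
]
IMPACT_ORDER = {"low": 1, "medium": 2, "high": 3, "critical": 4}

def assess(device: Device) -> List[dict]:
    """VAS step: list known vulnerabilities on the device, most dangerous first."""
    found = [v for v in VULNERABILITY_FEED
             if device.software.get(v["product"]) == v["version"]]
    return sorted(found, key=lambda v: IMPACT_ORDER[v["impact"]], reverse=True)

def admit(device: Device) -> Tuple[str, List[str]]:
    """NAC step: allow compliant devices, quarantine the rest and say why."""
    reasons = []
    if device.os_version < MINIMUM_OS:
        reasons.append("outdated operating system")
    if not device.antivirus_running:
        reasons.append("antivirus not running")
    if not device.host_firewall_enabled:
        reasons.append("host firewall disabled")
    if any(v["impact"] == "critical" for v in assess(device)):
        reasons.append("critical vulnerability present")
    return ("quarantine" if reasons else "allow"), reasons

laptop = Device("laptop-01", (10, 0), True, True, {"pdfreader": "9.1"})
kiosk = Device("kiosk-07", (6, 1), False, True, {"webserver": "2.2"})
```

Returning the reasons along with the decision lets the quarantined user, or the administrator, see exactly what must be fixed before the device is admitted.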

As you have probably noticed while reading, we mention the above technologies because all of them are implemented on Fortinet’s FortiGate systems. We will study all these technologies in detail in the following chapters of this book, but it is important to have an overall, introductory view of them first. Let’s now review other concepts that network and computer security professionals also commonly use.

Commonly used Computer and Network Security Terms

Some other common concepts are not necessarily related to products or technologies, but they are important throughout this book because they will be used frequently. The list leaves out the more obvious concepts and is by no means exhaustive. Instead, it contains the concepts that are more likely to be misunderstood and that you will probably use in conversations with customers, suppliers, and colleagues. This is why we believe it is important to standardize them, at least for the scope of this book. To review the list of terms, please refer to the glossary section of this book.

Unified Threat Management (UTM) Foundations

The World before UTM

Think about the last years of the past century (1998, 1999). The technology world was different from today in several ways: the Internet had grown steadily as a new economic platform. Many companies were growing, and a lot of people were getting rich just by stating they were doing something new over the Internet. In this Internet-driven economy, many things fostered the growth of IT departments, including budget. Many people hoped that gaining market share and a strong brand name would eventually lure customers to buy, and that hope led them to invest in companies that worked with the Internet in some way.

When the year 2000 arrived, many people had a lot of hopes, expectations, and fears. In the end, the world didn’t end as some religious leaders preached, and computers and computer networks didn’t collapse due to the famous Y2K bug, as many thought they might. But then the so-called dot-com bubble did burst. The companies hit were precisely those trying to make money with the Internet, so the collapse lessened people’s faith in IT. We won’t go deeper here into how or why things went bad after a period of fast growth; many books cover that phenomenon from several perspectives. What matters is that things were never the same again. People and organizations became more cautious and conservative when investing money in online initiatives, and IT budgets began shrinking.

At the same time, another interesting thing happened: Internet Security became more and more important. There were many reasons for this, among them:

• Organizations realized that, despite the dot-com bubble burst, it actually made sense to go online to develop new products and services, reach new customers and markets, or simply keep a stronger relationship with existing ones.

• It was important for companies to build and keep a good reputation. E-Commerce, especially business-to-consumer, was still in its early days, and potential customers needed to be given the confidence to buy.

• A larger number of people went online, and most of them were not technically educated. This gave criminals an opportunity to take advantage of them.

Due to the above, the Internet Security market began to mature. Many considered Firewalls and Intrusion Detection Systems the minimum security technologies an organization should have. The companies that sold them became more specialized: they added robustness, speed, and ease of use to their technologies, but didn’t think of adding new features or protections. So, other technologies were soon created to protect other aspects of the online corporate environment. Solutions like AntiSpam gateways were born to “clean” the e-mail flow. We also saw AntiSpyware tools offered to get rid of programs that informed attackers of our online activities or private information. At this point, it seemed that every time attackers found a new way to break things, a new Anti-X technology had to be developed. Very few thought about building more protections into existing technologies and combining several of them.

The History of the Unified Threat Management (UTM) Concept

The term Unified Threat Management was first used in a report[5] issued by IDC in 2004, titled “Worldwide Threat Management Security Appliances 2004–2008 Forecast and 2003 Vendor Shares: The Rise of the Unified Threat Management Security Appliance.” The report, authored by Charles J. Kolodgy, described UTM as a new category of security appliances. To belong to this category, an appliance needed at least the functionality of a firewall, a network intrusion prevention system, and a gateway Antivirus. The term was new, but it described what some companies were already doing. Fortinet in particular was already shipping a firewall that included IPS and Antivirus, alongside other functionality.

Fortinet was founded in 2000[6] by Ken and Michael Xie, two brothers who already had a history of innovation. Ken Xie had been the Founder, President, and CEO of NetScreen. Under his leadership, NetScreen pioneered the ASIC-accelerated security concept, overcoming the performance issues that software-based solutions showed at the time. Michael Xie is a former Vice President of Engineering for ServGate and a former Software Director and Architect for NetScreen,[7] and also holds several US patents[8] in the fields of Network and Computer Security. The company was founded in November 2000 as “Appligation, Inc,” and was renamed “ApSecure” in December of the same year. Later, the name was changed once again (this time for good) to Fortinet.[9] The name combines two words that symbolize what the company delivers with its technologies: Fortified Networks. It was chosen in an internal company competition.

From its inception, Fortinet had a vision to deliver enhanced performance and drive consolidation in the Content Security market. It set out to develop products and services that provide broad, integrated, and high-performance protection against dynamic security threats while simplifying the IT security infrastructure. In short, the idea was to provide high-performance technology that was secure, consolidated, and simple to deploy and manage. The concept was relatively easy to understand and a powerful business and technology proposition. However, it ran contrary to what everybody else was preaching at the time, and this posed some difficulties, since the concept was not truly understood at first. Let’s see why.

Around the year 2000, when Fortinet was born, the biggest organizations with a presence on the Internet already had some security solutions deployed: firewalls, IPSec VPNs, Intrusion Detection Systems, and Web Content Filters. Over the next few years, the same organizations were pushed to purchase new security elements such as SSL VPNs, AntiSpam, Intrusion Prevention Systems, AntiSpyware, and a whole set of additional solutions. The complexity and cost of this approach grew, but it was the accepted status quo. Most (if not all) organizations had all these technologies and operated that way, so other approaches were assumed to be risky. The UTM concept was born when people had already purchased network security components that were apparently working fine, so it faced fierce opposition. We say “apparently fine” because organizations kept having issues despite all these security components. Security technology wasn’t making things any easier, and it wasn’t meeting the challenge of bringing more security to the environments where it was deployed.

UTM vs Other Security Architectures

All of the above led to some “religious wars” between competing “technology fiefdoms” over whether this was the right way to do security. The old security architecture, where point products did specific things, already had a large installed base, a large amount of support, and a long history of working fine (or almost fine). People with a vested interest in the status quo were not going to accept an innovative security architecture easily. In the end, though, the clear advantages of the UTM security architecture kept winning it market share.

We will now look at some of those “religious or philosophical wars” that might interest the reader of this book. They also help explain why UTM is still a great technology and business proposition.

UTM vs Best-of-Breed

At first, consolidation and convergence in security were not well regarded, even though consolidation was already present in other areas of computing. The PC is probably the most convergent device, since it can be used as a telephone, radio, DVD player, or typewriter, just to give some examples. Multifunction printers can also be used as fax machines, copiers, and scanners. Today there are convergent networks that carry voice, data, and multimedia at the same time, which wasn’t possible in 1990. This convergence took time, but it happened and brought value to our lives. In 2002, when Fortinet launched its first product,[10] convergence in security was clearly not the main trend for existing vendors. They had specific products to solve specific problems and post-dot-com-bubble revenue streams to protect.

So, when Fortinet showed up in the arena with a device called a “Secure Content Processing Gateway,”[11] it was clearly disruptive. The device could simultaneously perform Firewall, IPsec VPN, and Traffic Shaping, all “standard” functionality in regular firewalls at the time. It could also deliver Antivirus and Worm protection for e-mail and Web traffic, as well as Intrusion Detection and Web Content Filtering, which were traditionally delivered by separate devices. Several questions were raised: Can this device deliver the same quality as a separate device? What happens with performance? How does it compare with what I have today? Is it OK to “put all our eggs into one basket”?

Many opponents of this idea were companies that had been marketing point solutions: Web Filters, Antivirus, Intrusion Prevention systems. But without a doubt, the strongest opponents were traditional Firewall companies, which saw a direct threat here. The firewall had not changed much in about a decade. So, when a new company announced a lot more functionality on the same firewall device, it was immediately perceived as a direct threat.

The questions mentioned above are still asked today, as these lines are being written, nine years after the launch of the “Secure Content Processing Gateway.” But now, with more than 100,000 customers and 750,000 devices shipped, it is possible to say that:

• Fortinet can deliver all the security functionalities with no real performance compromise, given proper sizing.

• Fortinet can deliver functionalities with the same quality as equivalent separate devices. Moreover, vendors of such separate functionalities are beginning to integrate additional capabilities, further validating Fortinet’s approach.

• Fortinet’s FortiGuard Labs are capable of providing comprehensive intelligence that keeps Fortinet’s customers’ security updated and state-of-the-art.

• Fortinet has a strong value proposition on both the technical and the business side, and it simplifies keeping a highly available environment. It therefore makes sense to switch from a “best-of-breed” environment to a UTM environment with Fortinet.

Not only that, but UTM can also solve issues that a point-product architecture introduces. The section “Solving Problems with UTM” below explains this further.

UTM vs Next-Generation Firewalls

Another concept recently put forward as a contender to UTM is the “Next-Generation Firewall” (NGFW), promoted mainly by the research and consultancy firm Gartner, Inc. To understand the roots of this “conflict,” we need to take a look at history.

One of the first times Gartner mentioned the term “Next-Generation Firewall” was in a 2004 document titled “Next-Generation Firewalls Will Include Intrusion-Prevention.”[12] The document stressed coupling Deep-Packet Inspection, IPS, and application-inspection capabilities in general with a firewall. The goal was to stop threats like worms and viruses and to extend protection to the application layer, so that packets with malicious payloads could be stopped. So far, the UTM definition seemed to fit what Gartner called a Next-Generation Firewall. However, UTM was a concept introduced by IDC, a Gartner competitor, so it is unsurprising that Gartner didn’t adopt the term. That is probably why it came up with the Next-Generation Firewall (NGFW) concept instead. Later, in the “Magic Quadrant for Enterprise Network Firewalls”[13] published in November 2008, Gartner described “Next-Generation Firewalls” as security devices with several components. These were an enterprise-grade firewall plus integrated Deep-Packet Inspection or IPS, Application Identification, and integrated extra-firewall intelligence such as Web Content Filtering. Such devices also had to allow interoperability with third-party rule management technologies such as Algosec or Tufin. The UTM definition still applied, and Gartner had not yet expressly stated that a UTM was not a Next-Generation Firewall.

Later, in October 2009, Gartner published a note called “Defining the Next-Generation Firewall.”[14] The note listed the criteria for choosing an NGFW as standard firewall capabilities (this time including VPN), integrated IPS functions with tight interoperability between the IPS and firewall components, application awareness, and extra-firewall intelligence. As you can see, the UTM definition still applied, since these criteria were not very different from what Gartner had said before. Remember that IDC’s 2004 definition described a UTM as a security device containing, at a minimum, a firewall, an IPS, and an Antivirus. By then Fortinet was considered a UTM vendor, even though it also offered application awareness (Application Control) and Web Content Filtering. By Gartner’s definition at this point, it could also have been considered a Next-Generation Firewall vendor. Then it happened: in this same document, Gartner expressly stated that a UTM was NOT an NGFW. Since Fortinet was by then the recognized leader in UTM, Fortinet was automatically not an NGFW. This, of course, caused a lot of confusion.

Having said all that, it seems that Fortinet’s flagship product, FortiGate, could be considered an NGFW by the definitions Gartner proposed. Of course, only Gartner can officially include or exclude a product, brand, or technology in its reports. On the other hand, the NGFW definition is close enough to the UTM definition to make one wonder whether NGFW is simply Gartner’s name for UTM. The two terms appeared around the same time, and UTM initially gained more traction. So, what is the answer? The authors of this book all consider the FortiGate to be both an NGFW and a UTM solution. Bottom line: the features and functionality offered on the FortiGate meet both the NGFW and the UTM definitions.

UTM vs XTM

Charles Kolodgy, who coined the term UTM, also coined the term XTM (eXtensible Threat Management) in an article for SC Magazine.[15] According to Mr. Kolodgy, UTM devices will evolve into XTM platforms, which will add even more features, such as reputation-based protection, logging, NAC, Vulnerability Management, and Network Bandwidth Management. FortiGate already delivers all of these features. So, if the XTM concept becomes as strong as the UTM concept, it is natural to think Fortinet should be considered one of the brands delivering on it.

Solving Problems with UTM

Before UTM, the security world seemed to be evolving so that every new threat needed a new protection technology. This led to scenarios that were more complex, more expensive, less effective to manage, and more difficult to operate. Once this trend became clear, Fortinet’s founders decided it was time to do something about it. The success UTM has enjoyed since then, and the way it has evolved, seem to confirm that it is a very valid proposition in the security world. Let’s see why.

Unified Threat Management (UTM) exists because it addresses three critical needs: the need for better security, the need for more efficient security (from both the engineering and the cost standpoints), and the need for cost effectiveness.

Better Security

The first problem UTM solves really well is the need to increase security. Let’s review a couple of examples of how this is achieved.

Consistent Security Policy

Let’s face it: many organizations have a security policy, but many of them keep it only on paper and never enforce it with technology. One reason is that deploying and configuring the technology is complex. Why? Consider a very basic directive: to avoid infection risks, an organization wants to block Windows executable files from entering the network. To keep it simple, let’s say this means files with the extensions .exe and .com. Where do you do it? Your firewall, IPS, network Antivirus, AntiSpam, and web content filter can probably all block files. If you configure blocking on only one of them, you will probably miss some coverage. If you configure it on all of them, it becomes harder to maintain, because the configuration steps probably differ on each. Configuring file blocking on every device also adds latency and reduces performance, which you never want. Now assume you have different Firewall, IPS, or Network Antivirus brands in different locations, or even in different segments of the same network. The complexity grows quickly.

Now, suppose you have only one place to configure file blocking. Once you configure it there, it applies to Web, Mail, IM, or any other traffic that traverses the network. Not only that, the check is also performed only once instead of once per device, which makes security more efficient. Even if you have to apply this policy in several network segments and several branches, it is still the same policy.

UTM enables consistent security policy deployments.
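A minimal Python sketch illustrates the benefit of defining the rule once. A single extension-based blocking rule is consulted for every protocol. The function names, protocol labels, and extension-only matching are simplifying assumptions. Real file filtering usually also checks the actual file type rather than trusting the file name.

```python
from pathlib import PurePath

# The single place where the policy is defined (extension-based, as in the text).
BLOCKED_EXTENSIONS = frozenset({".exe", ".com"})


def is_blocked(filename: str) -> bool:
    """True if the file's last extension is on the blocklist, ignoring case."""
    return PurePath(filename).suffix.lower() in BLOCKED_EXTENSIONS


def check_transfer(protocol: str, filename: str) -> str:
    # The same rule is consulted for Web, Mail, IM, or any other protocol,
    # so there is no per-device copy of the policy to keep in sync.
    verdict = "block" if is_blocked(filename) else "pass"
    return f"{protocol}: {filename} -> {verdict}"


if __name__ == "__main__":
    for protocol, filename in [("HTTP", "setup.EXE"), ("SMTP", "invoice.pdf"), ("IM", "game.com")]:
        print(check_transfer(protocol, filename))
```

To add a new extension, you change `BLOCKED_EXTENSIONS` once, and every protocol picks up the change.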

Protecting against Blended Threats

Today, very few threats consist of a single attack vector: the majority use at least two or three. Consider, for example, recent worms that replicate by exploiting a known vulnerability on a Web server, a web browser, or possibly a file server. They also spread through e-mail messages sent to your whole address book, through messages on social networks like Facebook or Twitter, and finally through Instant Messaging applications like Microsoft MSN Messenger. Yes, these things exist, and they are called Blended Threats.

From what we’ve seen in recent years, blended threats will only get worse. Conficker, Zeus, and Stuxnet garnered media attention not long ago and proved that blending attack vectors is one of the most effective ways to make an attack succeed. The reason is that blended threats are hard to detect under the old security architecture, where point products solve point problems with little or no cooperation between them. Stuxnet is especially interesting. It was a blended threat that could spread in different ways but targeted SCADA environments (infrastructure and grid systems), which made it potentially dangerous even to human lives.

Today, point products that don’t cooperate to share information will be defeated by a blended threat. The Antivirus could stop some malicious code coming from an IP address, and the IPS could detect and stop a network port scan from the same source. Yet there is no easy way to correlate the information from these two technologies. You can use a Security Event Management product, but that is complex and expensive. You can also build scripts to do it for you, but this is difficult, and not everybody has the skills. Imagine trying to integrate your AntiSpam system with your Network Data Leak Prevention system while also enforcing Application Control. You might have the money and the time to hire the skills, and you might even find someone willing to do it. The result still likely wouldn’t be what you are looking for. Think about it: even with all the security products deployed today, security issues still happen. This is not because the products are bad. These products don’t help build an overall picture and don’t communicate with each other, because they were not built to protect against attacks that use more than a single attack vector.

Imagine you had an easy way to tell whether a certain IP address is launching viruses and port scans, visiting web pages classified as potentially dangerous or hacker sites, and using network control applications over HTTP (TCP port 80). You can accomplish this with a FortiGate, which is why Fortinet can help protect an infrastructure against blended threats. Of course, having a UTM is not the Holy Grail of security, but it makes it a lot easier to implement an effective defense.

UTM makes protection against blended threats possible.
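When every engine writes its detections to the same log, correlating them by source address becomes a simple grouping operation. The Python sketch below assumes a hypothetical unified event format of (source IP, engine, detail) tuples. It flags any address reported by at least two distinct engines. The sketch illustrates the idea and is not FortiGate’s log format or correlation logic. The example addresses come from the IPv4 documentation ranges.

```python
from collections import defaultdict

# Hypothetical unified detection log: (source_ip, engine, detail).
EVENTS = [
    ("203.0.113.10", "antivirus", "virus blocked in HTTP download"),
    ("203.0.113.10", "ips", "TCP port scan detected"),
    ("203.0.113.10", "webfilter", "visited site in category: hacking"),
    ("198.51.100.7", "webfilter", "visited site in category: hacking"),
]


def correlate(events, min_engines=2):
    """Map each source IP reported by at least `min_engines` distinct engines
    to the sorted list of those engines."""
    engines_by_ip = defaultdict(set)
    for source_ip, engine, _detail in events:
        engines_by_ip[source_ip].add(engine)
    return {
        ip: sorted(engines)
        for ip, engines in engines_by_ip.items()
        if len(engines) >= min_engines
    }


if __name__ == "__main__":
    print(correlate(EVENTS))  # {'203.0.113.10': ['antivirus', 'ips', 'webfilter']}
```

With separate point products, each of these events would sit in a different system and log format. The grouping step would first require collecting and normalizing them, which is the integration effort described above.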

Implementing Clean Pipes

Today, in many countries, it is common to filter water before drinking it. People who use a filter usually ask which model purifies water best, and then install it. Some people ask how filters work, but few understand why carbon filters and reverse osmosis do a good job of purifying water. And very few people care whether their own filter is catching mold, bacteria, fungi, or something else. All they care about is that the water they drink is clean so they don’t get sick.

The Clean Pipes concept works the same way. The outside network, which is typically the Internet, carries all kinds of bad traffic today: viruses, spam, attacks, bad applications, phishing pages, and a long list of others. The reality is that an average user doesn’t care whether an attack is a worm, a browser exploit, or ransomware: she just wants clean traffic she can trust.

UTM can offer the necessary characteristics for effective Clean Pipes:

a. Security comprehensiveness: To make the approach effective it is necessary to ensure as many security components as possible are enabled, pretty much like having several water filtering methods together in the same filter. You will need at least Firewall, VPN, IPS, Antivirus, AntiSpam, Web Content Filter, Application Control and DLP features to some degree, in order to have good security to offer.

b. Optimized analysis: The analysis also needs a pre-arranged order. It would probably not be good to filter out mold after disinfecting the water with chlorine. Likewise, it makes no sense to scan traffic for viruses if a firewall policy will drop that traffic anyway.

c. Holistic solution: Protection has to be deployed everywhere you have Internet access, or it will not be effective, and that requires a cost-effective solution. Suppose you have a distributed organization with two or three big locations but dozens or even thousands of branches. Think about the money, time, and resources required to deploy a network Antivirus, an IPS, an AntiSpam box, a URL Filtering box, and a Firewall/VPN device in each branch. That is not even counting a WAN Optimization device and a Vulnerability scanner. If you only need to place one device per branch, things become much simpler.

d. Scalability: You might need to add more users or more sites to your network tomorrow. You may need vertical scalability, meaning more processing power to handle a growing number of users, more bandwidth, or more services. You may need horizontal scalability, meaning more places where you need inspection. Either way, the architecture you choose needs to grow with your needs.

UTM can cover all of the above, enabling a Clean Pipes service.
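The “optimized analysis” point can be sketched as an ordered pipeline. The cheap firewall check runs first, and the pipeline stops at the first drop verdict, so the expensive content scan never sees traffic the policy would reject anyway. The stage functions, the allowed port list, and the marker-based “virus” check are hypothetical stand-ins chosen only to show the ordering.

```python
from typing import Callable, List, Optional, Tuple

# A stage returns a drop reason, or None to pass the traffic to the next stage.
Stage = Callable[[dict], Optional[str]]


def firewall(conn: dict) -> Optional[str]:
    # Hypothetical policy: only mail and web ports are allowed.
    return None if conn["dst_port"] in (25, 80, 443) else "denied by firewall policy"


def antivirus(conn: dict) -> Optional[str]:
    # Stand-in for an expensive content scan (a simple marker check).
    return "virus found" if b"EICAR" in conn["payload"] else None


def run_pipeline(conn: dict, stages: List[Stage]) -> Tuple[str, List[str]]:
    """Run stages in order, stopping at the first drop verdict.

    Returns the final verdict and the names of the stages that actually ran.
    """
    executed = []
    for stage in stages:
        executed.append(stage.__name__)
        reason = stage(conn)
        if reason is not None:
            return reason, executed
    return "clean", executed


if __name__ == "__main__":
    telnet = {"dst_port": 23, "payload": b"login: root"}
    web = {"dst_port": 80, "payload": b"GET /index.html"}
    print(run_pipeline(telnet, [firewall, antivirus]))  # antivirus never runs
    print(run_pipeline(web, [firewall, antivirus]))
```

Swapping the order to `[antivirus, firewall]` would still produce the same verdicts. However, every connection would then pay for the content scan, including connections the firewall was always going to drop.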

More Efficient Security

Overall efficiency is almost never considered when an organization is looking for security technology. Security projects generally focus on the performance, cost, and operation of a specific device, not on the organization as a whole. Why is that? Because that is how things have been done for a good part of the last decade. However, if you look deeper, a consolidated security approach like the one UTM proposes could achieve real efficiencies. Let’s see.

Higher Performance

Network bandwidth is growing all the time, and it’s getting cheaper too. Applications, on the other hand, demand more and more bandwidth to work well, and many tolerate little or no latency. Because of this, any device placed in the network needs as much performance as possible so that it doesn’t become a bottleneck.

The above is especially relevant when border security technologies are used for internal network segmentation: typically the speed required on an Internet connection is significantly lower than the speed on internal networks. It is highly important to achieve as much performance as possible on a security solution, since otherwise users would reject it: whenever a user notices additional delay or additional steps to do something, complaints arise.

One way UTM increases performance is through inspection efficiencies. Think, for example, about an e-mail message leaving a corporate network. It will probably traverse at least an AntiSpam, a Network Antivirus, an IPS, and a Firewall system. The same connection is therefore analyzed four times, and three of those passes (AntiSpam, Antivirus, and IPS) inspect it at the application level. Now imagine a user downloading a file from a web server. A Web Content Filter, a Web Cache, a Network Antivirus, an IPS, and a Firewall will probably analyze that connection. That means at least four devices (or five if you add a DLP system) inspect it at the application level, which is known to cause delays and to be resource intensive.

So far we have assumed the functions are complementary, but they often overlap, which adds even more inefficiency. Think about our “blocking executable files” example above: several devices trying to block the same files is a clear waste of resources and degrades performance.

What if a connection were opened only once, instead of three or four times? That would be more efficient. Even if the analysis itself takes the same time, opening and closing the connection only once saves enough time and resources to be worth the effort. Now imagine that some of the analysis could be done in parallel. Assuming enough resources are available (that is, the device was sized properly), processing would be even faster, right?

High performance is something UTM architectures can achieve.
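The “open once, analyze many times” idea can be sketched in Python. The connection data is reassembled a single time, and the resulting bytes go to several inspection engines that run concurrently. The engine functions and their marker strings are hypothetical placeholders. Python threads only illustrate the structure here: in CPython, CPU-bound scanning would not actually run in parallel across threads.

```python
from concurrent.futures import ThreadPoolExecutor


def reassemble(chunks):
    # Done once per connection and shared by every engine, instead of
    # each separate device rebuilding the stream on its own.
    return b"".join(chunks)


# Hypothetical inspection engines: each returns a finding or None.
def av_scan(data):
    return "virus" if b"EICAR" in data else None


def dlp_scan(data):
    return "data leak" if b"CONFIDENTIAL" in data else None


def ips_scan(data):
    return "exploit attempt" if b"/etc/passwd" in data else None


ENGINES = (av_scan, dlp_scan, ips_scan)


def single_pass_inspect(chunks):
    """Reassemble once, run all engines concurrently, return their findings."""
    data = reassemble(chunks)
    with ThreadPoolExecutor(max_workers=len(ENGINES)) as pool:
        # pool.map returns results in the same order as ENGINES.
        results = list(pool.map(lambda engine: engine(data), ENGINES))
    return [finding for finding in results if finding is not None]


if __name__ == "__main__":
    print(single_pass_inspect([b"GET /", b"etc/passwd HTTP/1.1"]))  # ['exploit attempt']
```

In the usage example, the suspicious path is split across two chunks, so it only becomes visible after reassembly. That reassembly work is exactly what each separate device would otherwise repeat.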

Enhancing Operational Response Times (Meeting and Enhancing SLAs)

Growing dependence on Internet services raises customer expectations. People trust that the e-mail they send will reach its destination, if not immediately, then within the next minute. Have you ever sent someone a document by e-mail while on the phone with them, planning to discuss it right away? People trust web services for online payments, and students use Google to find information for their homework faster. If something fails, everybody wants quick answers, and even better, quick solutions, right?

Now, imagine an organization with the old network security architecture. To keep it simple, let’s say it has three separate systems: a Firewall, an IPS, and a Web Content Filter. A real organization today would probably also have a Network Antivirus, a Web Cache Proxy, and a Network Data Leak Prevention system as additional boxes. Now imagine a couple of users call the helpdesk with a simple complaint: “I don’t have Internet access because I can’t browse Google or Yahoo!” Has this ever happened to you? The network engineers, the security department, or both (depending on how the organization structures the IT function) would need to troubleshoot three different systems. Even if they are trained and proficient in these technologies, reviewing the logs on three boxes takes time. The logs are probably in different formats, too, which makes it harder to determine where the problem is. And none of this includes troubleshooting the infrastructure itself (switches, routers, servers, and client systems).

Now imagine a modern infrastructure, where a single device performs the Firewall, IPS, and Web Content Filter functions. When a user calls with a connectivity problem, there is one console to review, with a consistent log format and everything in a single place. And if changes are needed, there is one device to reconfigure. Reviewing the issue takes only N minutes instead of 3 × N minutes, which cuts the response time by roughly 67% (two-thirds). Even a 50% reduction would already be something, and either way users get better service.

UTM makes it possible to enhance operational response times.
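The response-time estimate follows from a simple proportion. It relies on the text’s simplifying assumption that reviewing each box takes the same N minutes:

```latex
T_{\text{point}} = 3N, \qquad T_{\text{UTM}} = N, \qquad
\frac{T_{\text{point}} - T_{\text{UTM}}}{T_{\text{point}}} = \frac{3N - N}{3N} = \frac{2}{3} \approx 66.7\%
```

In practice, review time per box is unlikely to be identical, so the two-thirds figure is best read as an upper-bound illustration rather than a measured result.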

Getting a Better Support Experience

If you ever had an environment where three (or more) different products from different vendors were interoperating and you had an issue where it wasn’t clear exactly on which device the problem was, probably you were already a victim of the finger-pointing syndrome.

Imagine a web connection is being blocked in a setup where your firewall, web content filter, Antivirus, Application Control, and IPS all interoperate. You suspect a product malfunction, because the configuration was working fine and the problem appeared out of nowhere. Or think of an e-mail environment where outgoing messages are analyzed by AntiSpam, Antivirus, Data Leak Prevention, IPS, and Firewall systems. A user reports that her e-mails are not reaching their destination after several tries. The configuration seems to be OK, so once again everything points to a product malfunction.

In either of the cases above, which product’s problem is it? Who is going to help you troubleshoot it? Who should fix it? And that is before you troubleshoot the underlying infrastructure, which has to be reviewed anyway: switches, routers, servers, and the PCs, laptops, tablets, or whatever client systems are in use. Wouldn’t you like fewer potential failure points, and less finger-pointing over where the problem might sit?

With UTM, all the functions reside on a single box, so there is only one support organization to call and only one entity that should give you the answer.

UTM helps you reduce the stress of technical support finger-pointing.

Increasing Network Availability

One of the recurrent arguments against UTM is the fact that it might decrease availability because it’s like “putting all your eggs in one basket.” The assumption here is that if one component fails, the whole solution will be down. But let’s take a closer look.

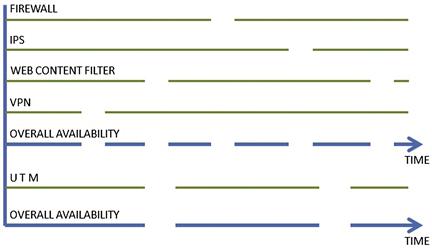

Once again, let’s say an organization has a firewall, an IPS, and a Web Content Filter system. The general assumption is that a separate-product architecture is better from the availability standpoint because if one device goes down, the others are still active. This is only partially true: since these devices sit in the traffic path, a failure of any one of them means a network outage, and even a planned maintenance window causes some service outage. Now, let’s assume each device fails or is brought down for maintenance twice per year. Because they are separate devices, they probably won’t fail or be maintained at the same time, but at different times. That is a total of six network service disruptions per year.

Now suppose you have a single UTM device that covers the Firewall, IPS, and Web Content Filter functions. Even if we assume this device fails three times a year instead of twice, that is still 50% fewer outages than with the three separate products. Even with four or five outages, the UTM would still deliver more availability than the point products, assuming each outage lasts about as long (Figure 1.2).

Figure 1.2 Best-of-Breed vs UTM Availability Comparison
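The outage arithmetic above can be checked with a short Python sketch. It assumes, as the scenario does, that every device sits inline (so any outage interrupts service) and that outages on different devices never overlap; the per-device outage counts are the illustrative figures from the text, not measured data.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    outages_per_year: int  # failures plus maintenance windows (illustrative)


def yearly_disruptions(inline_devices: list[Device]) -> int:
    """Total service disruptions per year for devices chained inline.

    Assumes any single device outage interrupts service and that outages
    on different devices never coincide, so the counts simply add up.
    """
    return sum(device.outages_per_year for device in inline_devices)


point_products = [
    Device("Firewall", 2),
    Device("IPS", 2),
    Device("Web Content Filter", 2),
]
utm = [Device("UTM", 3)]  # pessimistic: one more outage than each point product

separate = yearly_disruptions(point_products)
single = yearly_disruptions(utm)
reduction = 1 - single / separate

print(f"Point products: {separate} disruptions/year")
print(f"UTM: {single} disruptions/year ({reduction:.0%} fewer)")
```

Raising the UTM count to four or five still keeps it below six; in this simple model the two architectures only tie at six outages.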

All of the above, of course, does not consider redundancy. Remember that UTM solutions can be deployed in High Availability like any other network security solution. With the same three point products mentioned above, you would need to deploy six boxes to achieve high availability, and probably more if you wanted five nines of availability. This means not only a higher cost but also more complexity and additional maintenance overhead, which grows further because you might need additional infrastructure elements such as load balancers or extra switches; configured in a fully meshed topology (where all network elements are connected to each other), these could make the design unnecessarily complex. With UTM you would need only two boxes to cover your availability needs, resulting in a simpler, cleaner, and more elegant network design while helping increase network uptime. If you need additional redundancy, in most cases one more box (for a total of three) will cover your needs.
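The box counts above follow from multiplying the number of separate products by the number of HA members per cluster. The sketch below also counts links in a full mesh, n(n - 1)/2, as a deliberately simplified proxy for cabling complexity; treating every security box as a mesh node is an assumption made for illustration, not a recommended topology.

```python
def ha_box_count(separate_products: int, members_per_cluster: int = 2) -> int:
    """Boxes needed when every separate product is deployed as an HA cluster."""
    return separate_products * members_per_cluster


def full_mesh_links(nodes: int) -> int:
    """Links needed to connect every node to every other node: n(n - 1) / 2."""
    return nodes * (nodes - 1) // 2


for label, products in (("Point products", 3), ("UTM", 1)):
    boxes = ha_box_count(products)
    print(f"{label}: {boxes} boxes, {full_mesh_links(boxes)} full-mesh links")
```

Passing `members_per_cluster=3` models the three-box UTM option mentioned above.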

UTM helps you increase your network availability while keeping your security.

Cost Effectiveness

There is a famous Spanish saying, “nobody fights with their own wallet,” meaning that most people try to save money whenever they can. In times of crisis especially, when IT budgets are not as abundant as they used to be and we need to squeeze as much value as we can out of the money we have, this is always wise.

Granted, since this is a (mainly) technical book, you, dear reader, probably have little or nothing to do with the way budgets are planned and spent in your organization. But you probably have to convince someone that the technology product you are recommending is the best one for your organization, or help your boss support the purchase decision for a UTM product. This is where this information comes in handy. And if you are a reader with direct budget authority, this information will only help further justify your UTM purchasing decision.

Easier Investment Justification

So, your organization needs a firewall. Someone needs to ask for money to buy it. You could probably mention “network security” as one of the reasons to buy it, and it will be approved. Then an IPS is required, and the same person might go a second time to request money to enhance “network security.” By the time money is requested to purchase AntiSpam, DLP, Network Antivirus, or some other network security component, someone might wonder why so much money is being invested in network security: “Didn’t we just buy something to do network security? Why do we need to keep buying more of these things?” Explaining the difference between network-based attacks and application-level attacks, or between HTTP and SMTP, to somebody on the business side might not be an easy conversation, and with UTM it isn’t a necessary one.

This is a completely different situation from needing to ask for network security money only once, or maybe twice if you deploy High Availability (HA) in two phases, and that’s pretty much it. It is an easier conversation to have with people who don’t understand bits and bytes (and probably don’t need to, and thus don’t care).

Oh, and finally, imagine how much easier the security purchase justification would be if you could print a single executive report showing how many security policy violations the firewall stopped, how many attacks the IPS prevented, how many viruses the Network Antivirus blocked, and how many threats the other security systems protected against. Imagine that: a single two-page executive report you can hand to someone to show the value behind your security system. UTM can definitely do this.

Licensing Simplicity

When security functions are in different boxes, they are quite often licensed in different ways: firewalls by performance or protected IP addresses, Antivirus by performance or users, AntiSpam by mailboxes, and Web Content Filters by number of seats. This makes purchasing security solutions complex and expensive. What happens if you need High Availability? What happens to the renewal price if the number of users or services increases next year? Exactly how much money do you need to allocate in next year’s budget to pay for the renewal?

When all the security functions are on the same box it makes sense to have a single licensing model. Most UTM vendors follow this simplified model. Some of them charge separately for different security functions, but it is still simpler than dealing with different vendors and different licensing schemes.

By the way, licensing is never an issue with Fortinet, since all features are included in the price when a Bundle is purchased, and there is no limit on the number of users, connections, or other criteria. So, as long as the UTM solution is sized appropriately for today’s environment and tomorrow’s potential growth, deciding what to use and what not to use is only a matter of technical configuration, not licensing.

Lowering Operational Costs

You have probably heard of the concept of Total Cost of Ownership (TCO), perhaps from vendors claiming their products have a cost advantage in the long term, and you may not have been fully convinced by their arguments.

TCO is what you pay for a product: not only the purchase price, but also the money you need to spend to maintain it. For network devices, that includes electricity and cooling on the physical side, the maintenance fees you pay to be entitled to support and upgrades, and the money you invest in people so they can keep the products running well, meaning the salary and training an organization pays its engineers or the fees paid to a consultant. All this adds up.

Simple math says it will be cheaper to power and cool one box than three (or more). It will be easier to pay for support and upgrades on a single box than on three. And of course, it is cheaper to send an engineer to training for a single technology than to pay for three courses. Moreover, with a single technology an engineer has the chance to become an expert, which allows him to solve issues more quickly and to tune the configuration proficiently to extract the maximum value out of the product, avoiding the common case of a product that does 100 things of which you only use 20. Besides, with IT budgets shrinking it is quite common to find IT engineers tasked with several responsibilities, and having fewer technologies to worry about frees up time for them to focus on other equally important things.
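The TCO components described above can be added up with a small Python sketch. Every figure in it is a made-up placeholder, chosen only to show the structure of the calculation (purchase price plus yearly support, power and cooling, and people costs over the ownership period). To keep the comparison neutral on price, the sketch assumes the UTM costs the same up front as the three products combined, so any difference comes from the assumed operational figures; substitute your own quotes and salaries.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    purchase: float              # one-time price of the box
    yearly_support: float        # maintenance contract (support and upgrades)
    yearly_power_cooling: float  # electricity and cooling
    yearly_people: float         # training, staff time, or consultant fees


def tco(items: list[CostInputs], years: int) -> float:
    """Total cost of ownership of all items over the given number of years."""
    total = 0.0
    for item in items:
        yearly = item.yearly_support + item.yearly_power_cooling + item.yearly_people
        total += item.purchase + yearly * years
    return total


# Hypothetical placeholder figures, not vendor pricing.
point_products = [CostInputs(10_000, 2_000, 500, 1_500) for _ in range(3)]
utm = [CostInputs(30_000, 3_000, 600, 1_500)]

print(f"Point products, 3 years: {tco(point_products, 3):,.0f}")
print(f"UTM, 3 years: {tco(utm, 3):,.0f}")
```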

UTM allows an organization to lower operational costs.

Current UTM Market Landscape

The Unified Threat Management (UTM) market has matured in the last couple of years, with new players entering the arena to capture market share and Fortinet continuously leading in innovation.

Below you will find some notes about how different UTM implementations work and how Fortinet’s offering compares against them. We must include a disclaimer here: the authors of this book are Fortinet employees at the time of writing, so there is a potential conflict of interest in that regard. However, we are sticking strictly to points that can be verified using public sources, so the reader can confirm everything mentioned here.

UTM à la Fortinet

Fortinet was named UTM market leader for the 25th consecutive quarter by analyst firm IDC¹⁶ in July 2012. This is an important accolade for the company and its flagship product, FortiGate, because it recognizes Fortinet’s continued efforts in innovation, service, and a strong value proposition. It also shows that the market has been recognizing this for more than six years (25 quarters) in a row, which further validates the previous statement.

Fortinet’s UTM implementation is called FortiGate. It is actually the product this book is about. Let’s analyze some of the main features that make it unique and valuable when it comes to UTM.

Reliable Performance

Security processing is resource intensive. This is one of the reasons why Fortinet chose to create a security architecture that would use Hardware Acceleration as an integral component.

Application Specific Integrated Circuits (ASICs) make it possible to achieve wire-speed performance in some of the underlying functions like Firewall or VPN. This is important because if the foundation technologies are slow, everything else will be slow. As will be discussed in Chapters 2 and 3, FortiGate uses ASICs both for Network Processing (Layers 1–4 of the OSI model) and Content Processing (Layers 4–7 of the OSI model), which results in hardware acceleration of pretty much all the security functions FortiGate delivers. With Fortinet, the Firewall and VPN components (essential components that must be present in any network security solution) will never be the bottleneck.

ASICs bring another benefit: predictable performance. Since these processors are 100% dedicated to their task, they are not “distracted” by anything else. Security technologies running on general-purpose CPUs, by contrast, must use the main CPUs for everything, from security inspection functions to administrative and resource management functions. So, if an administrative function takes some resources, those resources are not available to the security inspection functions; and since the administrative load typically changes over time, there is no reliable way to guarantee the inspection function the same resources, and thus its performance will never be exactly the same. ASICs, on the other hand, do only one thing: security inspection. To give an example with the firewall function: even if the main (general-purpose) CPU of the FortiGate is busy adding users, showing statistics, sending SNMP traps, or browsing logs for the administrator, the Network Processor ASIC (the one used to accelerate firewall functions) is always doing firewalling and only firewalling, so you can be certain that firewall performance will remain the same regardless of the administrative load on the device.
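The difference between shared and dedicated processing can be illustrated with a toy capacity model in Python. The capacity units and administrative-load samples are invented for illustration, and the model ignores real-world effects such as memory bandwidth or traffic mix; it only shows why a shared CPU’s inspection capacity tracks administrative load while a dedicated processor’s does not.

```python
def shared_cpu_capacity(total: float, admin_load: float) -> float:
    """Inspection capacity left on a general-purpose CPU after admin work."""
    return max(total - admin_load, 0.0)


def dedicated_capacity(asic_capacity: float, admin_load: float) -> float:
    """Admin work runs on the main CPU, so the ASIC's capacity is unchanged.

    admin_load is accepted only to mirror shared_cpu_capacity's signature;
    it is intentionally ignored.
    """
    return asic_capacity


# Hypothetical admin load over time (same units as capacity): idle,
# adding users, browsing logs, showing statistics.
admin_samples = [0, 10, 35, 20]

shared = [shared_cpu_capacity(100, load) for load in admin_samples]
dedicated = [dedicated_capacity(100, load) for load in admin_samples]

print("Shared CPU inspection capacity:", shared)
print("Dedicated ASIC capacity:       ", dedicated)
```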

Selective Functionality

Security policies often discriminate between different user or application categories. This is especially necessary when enforcing a well-known security principle: need-to-know. Users or applications will often be allowed to flow in certain network segments, but the inspection applied to them may differ depending on their purpose and target audience. You already know this: marketing needs access to social networking sites, but with games blocked there and with appropriate IPS and Antivirus inspection applied to the web traffic passing through. Web applications need access to backend databases, but only on certain ports, and this traffic needs protection from an IPS that covers threats against the operating system and the database. And the list goes on…

As discussed previously, UTM implies that several functions that previously ran separately now run together. One concern administrators have is that if they deploy a FortiGate, they will need to use all the features, and those features will be enabled for all users. This is not true at all.

FortiGate is flexible enough to allow an administrator to enable or disable any feature in the box at will. The Protection Profile concept, which will be explained in more detail in following chapters, allows a security administrator to define criteria for where, when, and how a security feature like Antivirus, IPS, Web Content Filter, DLP, or others will be used. All the features can be enabled or disabled at will, and they can also be customized: for example, in some segments the Antivirus might inspect only web traffic, while in others it inspects Web, FTP, and Mail traffic. The IPS can be customized to protect against HTTP browser attacks in one segment, while protecting GNU/Linux and Windows operating systems and database traffic in another. You can be as granular as saying, for example, that Antivirus is applied only when a user connects via VPN, or you can have different Web Content Filtering settings depending on whether the user is on a wired or a wireless network. The range of possibilities is as big as your imagination (or your security policy) allows.
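To make selective functionality concrete, here is a minimal Python sketch of first-match policy selection, in which each policy ties a network segment and access method to a bundle of inspection settings. The class names, segment names, and profile contents are hypothetical, and this is not FortiOS configuration syntax; it only models the idea of applying different inspections to different traffic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Profile:
    """Hypothetical bundle of inspection settings applied to matching traffic."""
    name: str
    antivirus_protocols: frozenset = frozenset()  # protocols scanned by Antivirus
    ips_targets: frozenset = frozenset()          # what the IPS signatures protect
    web_filter: Optional[str] = None              # Web Content Filter setting


@dataclass(frozen=True)
class Policy:
    segment: str  # source segment, or "any"
    access: str   # "wired", "wireless", "vpn", or "any"
    profile: Profile


def select_profile(policies: list[Policy], segment: str, access: str) -> Optional[Profile]:
    """Return the profile of the first policy matching the segment and access."""
    for policy in policies:
        if policy.segment in (segment, "any") and policy.access in (access, "any"):
            return policy.profile
    return None  # no match: in this sketch the traffic gets no profile


vpn = Profile("vpn", antivirus_protocols=frozenset({"HTTP", "FTP", "SMTP"}))
marketing_wireless = Profile(
    "marketing-wireless",
    antivirus_protocols=frozenset({"HTTP"}),
    ips_targets=frozenset({"browser"}),
    web_filter="block-games-strict",
)
marketing = Profile(
    "marketing",
    antivirus_protocols=frozenset({"HTTP"}),
    ips_targets=frozenset({"browser"}),
    web_filter="block-games",
)
servers = Profile(
    "servers",
    antivirus_protocols=frozenset({"HTTP", "FTP", "SMTP"}),
    ips_targets=frozenset({"linux", "windows", "database"}),
)

policies = [
    Policy("any", "vpn", vpn),  # VPN users get their own profile in any segment
    Policy("marketing", "wireless", marketing_wireless),
    Policy("marketing", "any", marketing),
    Policy("dmz", "any", servers),
]

print(select_profile(policies, "marketing", "wireless").name)
```

Because the first match wins, more specific policies (such as wireless marketing users) must be listed before broader ones, much like ordering rules in a firewall policy table.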

Some other vendors might use an all-or-nothing approach limiting the options you have to enforce your security policy, but certainly not Fortinet.

Homegrown Technology

A common question customers ask is whether Fortinet licenses technology from, or has OEM agreements with, other security vendors in order to provide some of the security functions, like Antivirus or Web Content Filtering. The answer is no.

Fortinet has developed in-house all the security technologies included in its FortiGate product line. This means the IPS, Antivirus, Web Content Filter, Data Leak Prevention, and other technologies were created from the ground up at Fortinet. All of them were created with the premise that they would be part of a device that was already performing other functions, so they were not “glued” on at some point, but rather architected as part of an overall solution.