C H A P T E R 8

SQL Server Relational Database

In this chapter, I’ll cover a few areas that can have a large impact on the performance of your data tier, even if you have already optimized your queries fully.

For example, our principle of minimizing round-trips also applies to round-trips between the web tier and the database. You can do that using change notifications, multiple result sets, and command batching.

The topics that I’ll cover include the following:

- How SQL Server manages memory

- Stored procedures

- Command batching

- Transactions

- Table-valued parameters

- Multiple result sets

- Data precaching

- Data access layer

- Query and schema optimization

- Data paging

- Object relational models

- XML columns

- Data partitioning

- Full-text search

- Service Broker

- Data change notifications

- Resource Governor

- Scaling up vs. scaling out

- High availability

- Miscellaneous performance tips

How SQL Server Manages Memory

Similar to ASP.NET, it’s possible to have a fast query (or page) but a slow database (or site). One of the keys to resolving this and to architecting your database for speed is to understand how SQL Server manages memory.

Memory Organization

On 32-bit systems, SQL Server divides RAM into an area that can hold only data pages and a shared area that holds everything else, such as indexes, compiled queries, results of joins and sorts, client connection state, and so on. 32 bits is enough to address up to 4GB at a time. The default configuration is that 2GB of address space is reserved for the operating system, leaving 2GB for the application. When running under one of the 32-bit versions of Windows Server, SQL Server can use Address Windowing Extensions (AWE) to map views of up to 64GB dynamically into its address space. (AWE isn’t available for the desktop versions of Windows.) However, it can use memory addressed with AWE only for the data area, not for the shared area. This means that even if you have 64GB of RAM, with a 32-bit system you might have only 1GB available for shared information.

You can increase the memory available to 32-bit versions of SQL Server on machines with 16GB or less by adding the /3GB flag in the operating system’s boot.ini file. That reduces the address space allocated to the operating system from 2GB to 1GB, leaving 3GB for user applications such as SQL Server. However, since limiting the RAM available to the OS can have an adverse effect on system performance, you should definitely test your system under load before using that switch in production.

On 64-bit systems, the division between data pages and shared information goes away. SQL Server can use all memory for any type of object. In addition, the memory usage limit increases to 2TB. For those reasons, combined with the fact that nearly all CPUs used in servers for quite a few years now are capable of supporting 64-bit operating systems (so there shouldn’t be any additional hardware or software cost), I highly recommend using 64-bit systems whenever possible.

Reads and Writes

SQL Server uses three different kinds of files to store your data. The primary data store, or MDF file, holds tables, indexes, and other database objects. You can also have zero or more secondary data stores, or NDF files, which hold the same type of content in separate filegroups. The LDF file holds the database log, which is a list of changes to the data file.

The MDF and NDF files are organized as 64KB extents, which consist of eight physically contiguous 8KB pages. Table or index rows are stored on a page serially, with a header at the beginning of the page and a list of row offsets at the end that indicate where each row starts in the page. Rows can’t span multiple pages. Large columns are moved to special “overflow” pages.

When SQL Server first reads data from disk, such as with a SELECT query, it reads pages from the data files into a pool of 8KB buffers, which it also uses as a cache. When the pool is full, the least-recently used buffers are dropped first to make room for new data.

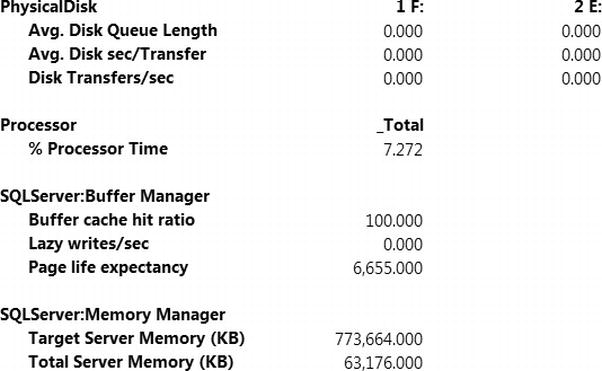

Since SQL Server can use all available memory as a large cache, making sure your server has plenty of RAM is an important step when it comes to maximizing performance. It would be ideal if you have enough room to fit the entire database in RAM. See the “Scaling Up vs. Scaling Out” section in this chapter for some tips on how to determine whether your server needs more memory. Based on my experience, it’s common in high-performance sites to see database servers with 32GB of RAM or more.

When you modify data, such as with an INSERT, UPDATE, or DELETE, SQL Server makes the requested changes to the data pages in memory, marks the associated data buffers as modified, writes the changes to the database log file (LDF), and then returns to the caller after the log write completes. A dedicated “lazy writer” thread periodically scans the buffer pool looking for modified buffers, which it writes to the data file (MDF). Modified buffers are also written to the MDF file if they need to be dropped to make room for new data or during periodic checkpoints.

Writes to the log file are always sequential. When properly configured on a disk volume by itself, the disk heads shouldn’t have to move very much when writing to the log file, and write throughput can be very high.

Writes to the data file will generally be at random locations in the MDF file, so the disk heads will move around a lot; throughput is normally a small fraction of what’s possible with an equivalently configured log drive. In fact, I’ve seen a factor of 50-to-1 performance difference, or more, between random and sequential writes on similar drives. See Chapter 10 for details.

To avoid seeks and thereby maximize write throughput, it’s especially important to have the database log file on a set of dedicated spindles, separate from the data file.

Performance Impact

Understanding the way that SQL manages memory leads to several important conclusions:

- The first time you access data will be much slower than subsequent accesses, since data has to be read from disk into the buffer cache. This can be very important during system startup and during a database cluster failover, since those servers will start with an empty cache. It also leads to the beneficial concepts of database warm-up and precaching of database content.

- Aggregation queries (sum, count, and so on) and other queries that scan large tables or indexes can require a large number of buffers and have a very adverse effect on performance if they cause SQL Server to drop other data from the cache.

- With careful design, it’s possible to use SQL Server as an in-memory cache.

- Write performance is determined largely by how fast SQL Server can sequentially write to the log file, while read performance is mostly determined by a combination of the amount of RAM available and how fast it can do random reads from the data file.

- When writes to the database log start happening simultaneously with the lazy writer thread writing modified pages to the data file, or simultaneously with data reads hitting the disk, the resulting disk seeks can cause the speed of access to the log file to decrease dramatically if the log and data files are on the same disk volume. For that reason, it’s important to keep the log and data files on separate disk spindles.

Stored Procedures

Using stored procedures as your primary interface to the database has a number of advantages:

- Stored procedures allow easy grouping and execution of multiple T-SQL statements, which can help reduce the number of round-trips that the web server requires to perform its tasks.

- They allow you to make changes on the data tier without requiring changes on the web tier. This helps facilitate easy and fast application evolution and iterative improvement and tuning of your schema, indexes, queries, and so on.

- They more easily support a comprehensive security framework than dynamic SQL. You can configure access to your underlying tables and other objects so that your web tier can access them only through a specific set of procedures.

Another way to think of it is that stored procedures are a best practice for the same reason that accessors are a best practice in object-oriented code: they provide a layer of abstraction that allows you to modify easily all references to a certain object or set of objects.

When you submit a command to SQL Server, it needs to be compiled into a query plan before it can be executed. Those plans can be cached. The caching mechanism uses the command string as the key for the plan cache; commands that are exactly the same as one another, including whitespace and embedded arguments, are mapped to the same cached plan.

In dynamic ad hoc T-SQL, where parameters are embedded in the command, SQL Server performs an optimization that automatically identifies up to one parameter. This allows some commands to be considered the same so they can use the same query plan. However, if the command varies by more than one parameter, the extra differences are still part of the string that’s used as the key to the plan cache, so the command will be recompiled for each variation. Using stored procedures and parameterized queries can help minimize the time SQL Server spends performing compilations, while also minimizing plan cache pollution (filling the cache with many plans that are rarely reused).

When you’re writing stored procedures, one of your goals should be to minimize the number of database round-trips. It’s much better to call one stored procedure that invokes ten queries than ten separate procedures that invoke one query each. I generally don’t like to get too much business logic in them, but using things like conditionals is normally fine. Also, keep in mind that, as with subroutines or even user controls, it’s perfectly acceptable for one stored procedure to call another one.

I suggest using dynamic T-SQL only when you can’t create the queries you need with static T-SQL. For those times when it’s unavoidable, be sure to use parameterized queries for best performance and security. Forming queries by simply concatenating strings has a very good chance of introducing SQL injection attack vulnerabilities into your application.

Here’s an example of creating a table and a stored procedure to access it. I’m also using a SCHEMA, which is a security-related best practice:

create schema [Traffic] authorization [dbo]

CREATE TABLE [Traffic].[PageViews] (

[PvId] BIGINT IDENTITY NOT NULL,

[PvDate] DATETIME NOT NULL,

[UserId] UNIQUEIDENTIFIER NULL,

[PvUrl] VARCHAR(256) NOT NULL

)

ALTER TABLE [Traffic].[PageViews]

ADD CONSTRAINT [PageViewIdPK]

PRIMARY KEY CLUSTERED ([PvId] ASC)

CREATE PROCEDURE [Traffic].[AddPageView]

@pvid BIGINT OUT,

@userid UNIQUEIDENTIFIER,

@pvurl VARCHAR (256)

AS

BEGIN

SET NOCOUNT ON

DECLARE @trandate DATETIME

SET @trandate = GETUTCDATE()

INSERT INTO [Traffic].[PageViews]

(PvDate, UserId, PvUrl)

VALUES

(@trandate, @userid, @pvurl)

SET @pvid = SCOPE_IDENTITY()

end

The stored procedure gets the current date, inserts a row into the [Traffic].[PageViews] table, and returns the resulting primary key as an output variable.

You will be using these objects in examples later in the chapter.

Command Batching

Another way to reduce the number of database round-trips is to batch several commands together and send them all to the server at the same time.

Using SqlDataAdapter

A typical application of command batching is to INSERT many rows. As an example, let’s create a test harness that you can use to evaluate the effect of using different batch sizes. Create a new web form called sql-batch1.aspx, and edit the markup to include the following:

<form id="form1" runat="server">

<div>

Record count: <asp:TextBox runat="server" ID="cnt" /><br />

Batch size: <asp:TextBox runat="server" ID="sz" /><br />

<asp:Button runat="server" Text="Submit" /><br />

<asp:Literal runat="server" ID="info" />

</div>

</form>

You will use the two text boxes to set the record count and the batch size and an <asp:Literal> to display the results.

The conventional way to do command batching for INSERTs, UPDATEs, and DELETEs with ADO.NET is to use the SqlDataAdapter class. Edit the code-behind as follows:

using System;

using System.Collections;

using System.Data;

using System.Data.SqlClient;

using System.Diagnostics;

using System.Text;

using System.Web.UI;

public partial class sql_batch1 : Page

{

public const string ConnString =

"Data Source=server;Initial Catalog=Sample;Integrated Security=True";

protected void Page_Load(object sender, EventArgs e)

{

if (this.IsPostBack)

{

int numRecords = Convert.ToInt32(this.cnt.Text);

int batchSize = Convert.ToInt32(this.sz.Text);

int numBatches = numRecords / batchSize;

long pvid = -1;

using (SqlConnection conn = new SqlConnection(ConnString))

{

conn.Open();

conn.StatisticsEnabled = true;

for (int j = 0; j < numBatches; j++)

{

DataTable table = new DataTable();

table.Columns.Add("pvid", typeof(long));

table.Columns.Add("userid", typeof(Guid));

table.Columns.Add("pvurl", typeof(string));

After parsing the input parameters and creating a SqlConnection, in a loop that’s executed once for each batch, create a new DataTable with three columns that correspond to the database table.

using (SqlCommand cmd =

new SqlCommand("[Traffic].[AddPageView]", conn))

{

cmd.CommandType = CommandType.StoredProcedure;

SqlParameterCollection p = cmd.Parameters;

p.Add("@pvid", SqlDbType.BigInt, 0, "pvid").Direction =

ParameterDirection.Output;

p.Add("@userid", SqlDbType.UniqueIdentifier, 0, "userid");

p.Add("@pvurl", SqlDbType.VarChar, 256, "pvurl");

Next, create a SqlCommand object that references the stored procedure, and define its parameters, including their data types and the names of the columns that correspond to each one. Notice that the first parameter has its Direction property set to ParameterDirection.Output to indicate that it’s an output parameter.

using (SqlDataAdapter adapter = new SqlDataAdapter())

{

cmd.UpdatedRowSource = UpdateRowSource.OutputParameters;

adapter.InsertCommand = cmd;

adapter.UpdateBatchSize = batchSize;

Guid userId = Guid.NewGuid();

for (int i = 0; i < batchSize; i++)

{

table.Rows.Add(0, userId,

"http://www.12titans.net/test.aspx");

}

try

{

adapter.Update(table);

pvid = (long)table.Rows[batchSize - 1]["pvid"];

}

catch (SqlException ex)

{

EventLog.WriteEntry("Application",

"Error in WritePageView: " + ex.Message + "

",

EventLogEntryType.Error, 101);

break;

}

}

}

}

Next, set UpdatedRowSource to UpdateRowSource.OutputParameters to indicate that the runtime should map the pvid output parameter of the stored procedure back into the DataTable. Set UpdateBatchSize to the size of the batch, and add rows to the DataTable with the data. Then call adapter.Update() to synchronously send the batch to the server, and get the pvid response from the last row. In the event of an exception, write an entry in the operating system Application log.

StringBuilder result = new StringBuilder();

result.Append("Last pvid = ");

result.Append(pvid.ToString());

result.Append("<br/>");

IDictionary dict = conn.RetrieveStatistics();

foreach (string key in dict.Keys)

{

result.Append(key);

result.Append(" = ");

result.Append(dict[key]);

result.Append("<br/>");

}

this.info.Text = result.ToString();

}

}

}

}

Then you display the pvid of the last record along with the connection statistics, using the <asp:Literal> control. Each time you submit the page, it will add the requested number of rows to the PageViews table.

Results

The client machine I used for the examples in this chapter had a 6-core 2.67GHz Xeon X5650 CPU with 24GB of RAM running 64-bit Windows 7 Ultimate. SQL Server 2012 RC0 Enterprise was running under 64-bit Windows Server 2008 R2 Enterprise as a virtual machine on the same physical box, configured as a single CPU with 4-cores and 4GB RAM. The database data file was on an 8-drive RAID-50 volume, and the log file was on a 2-drive RAID-0. Both volumes used MLC SSD drives.

Here are the results after adding 20,000 rows on my test server, with a batch size of 50:

Last pvid = 20000

IduRows = 0

Prepares = 0

PreparedExecs = 0

ConnectionTime = 7937

SelectCount = 0

Transactions = 0

BytesSent = 3171600

NetworkServerTime = 7636

SumResultSets = 0

BuffersReceived = 400

BytesReceived = 1003206

UnpreparedExecs = 400

ServerRoundtrips = 400

IduCount = 0

BuffersSent = 400

ExecutionTime = 7734

SelectRows = 0

CursorOpens = 0

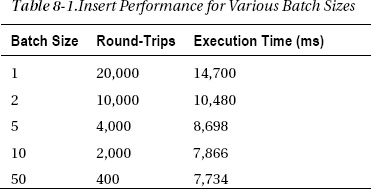

The test took 400 round-trips and about 7.7 seconds to execute. In Table 8-1, I’ve shown the results of running the test for various batch sizes, while maintaining the number of rows at 20,000.

You can see that throughput roughly doubles as you increase the batch size from 1 to 10 and that larger batch sizes don’t show any additional improvement (or they might even be a little slower). At that point, you are limited by disk speed rather than round-trips.

Limitations

Although this technique works reasonably well for INSERTs, it’s not as good for UPDATEs and DELETEs, unless you already happen to have populated a DataTable for other reasons. Even then, SqlDataAdapter will send one T-SQL command for each modified row. In most real-life applications, a single statement with a WHERE clause that specifies multiple rows will be much more efficient, when possible.

This highlights a limitation of this approach, which is that it’s not general purpose. If you want to do something other than reflect changes to a single DataTable or DataSet, you can’t use the command batching that SqlDataAdapter provides.

Another issue with SqlDataAdapter.Update() is that it doesn’t have a native async interface. Recall from earlier chapters that the general-purpose async mechanisms in .NET use threads from the ASP.NET thread pool, and therefore have an adverse impact on scalability. Since large batches tend to take a long time to run, not being able to call them asynchronously from a native async interface can cause or significantly compound scalability problems, as described earlier.

Building Parameterized Command Strings

The alternative approach is to build a parameterized command string yourself, separating commands from one another with semicolons. As crude as it might sound, it’s very effective, and it addresses the problems with the standard approach in that it will allow you to send arbitrary commands in a single batch.

As an example, copy the code and markup from sql-batch1.aspx into a new web form called sql-batch2.aspx, and edit the code-behind, as follows:

public const string ConnString =

"Data Source=server;Initial Catalog=Sample;Integrated Security=True;Async=True";

protected async void Page_Load(object sender, EventArgs e)

{

Add Async=True to the connection string and to the Page directive in the .aspx file. Add the async keyword to the declaration for the Page_Load() method.

if (this.IsPostBack)

{

int numRecords = Convert.ToInt32(this.cnt.Text);

int batchSize = Convert.ToInt32(this.sz.Text);

int numBatches = numRecords / batchSize;

StringBuilder sb = new StringBuilder();

string sql =

"EXEC [Traffic].[AddPageView] @pvid{0} out, @userid{0}, @pvurl{0};";

for (int i = 0; i < batchSize; i++)

{

sb.AppendFormat(sql, i);

}

string query = sb.ToString();

You construct the batch command by using EXEC to call your stored procedure, appending a number to the end of each parameter name to make them unique, and using a semicolon to separate each command.

using (SqlConnection conn = new SqlConnection(ConnString))

{

await conn.OpenAsync();

conn.StatisticsEnabled = true;

SqlParameterCollection p = null;

for (int j = 0; j < numBatches; j++)

{

using (SqlCommand cmd = new SqlCommand(query, conn))

{

p = cmd.Parameters;

Guid userId = Guid.NewGuid();

for (int i = 0; i < batchSize; i++)

{

p.Add("pvid" + i, SqlDbType.BigInt).Direction =

ParameterDirection.Output;

p.Add("userid" + i, SqlDbType.UniqueIdentifier).Value = userId;

p.Add("pvurl" + i, SqlDbType.VarChar, 256).Value =

"http://www.12titans.net/test.aspx";

}

To finish building the batch command, assign a value to each numbered parameter. As in the previous example, pvid is an output parameter, userid is set to a new GUID, and pvurl is a string.

try

{

await cmd.ExecuteNonQueryAsync();

}

catch (SqlException ex)

{

EventLog.WriteEntry("Application",

"Error in WritePageView: " + ex.Message + "

",

EventLogEntryType.Error, 101);

}

}

}

StringBuilder result = new StringBuilder();

result.Append("Last pvid = ");

result.Append(p["pvid" + (batchSize - 1)].Value);

result.Append("<br/>");

IDictionary dict = conn.RetrieveStatistics();

foreach (string key in dict.Keys)

{

result.Append(key);

result.Append(" = ");

result.Append(dict[key]);

result.Append("<br/>");

}

this.info.Text = result.ToString();

}

}

}

Next, asynchronously execute and await all the batches you need to reach the total number of records requested and collect and display the resulting statistics.

The reported ExecutionTime statistic is much lower than with the previous example (about 265ms for a batch size of 50), which shows that ExecuteNonQueryAsync() is no longer waiting for the command to complete. However, from the perspective of total elapsed time, the performance of this approach is about the same. The advantages of this approach are that you can include arbitrary commands and that it runs asynchronously.

Transactions

As I mentioned earlier, each time SQL Server makes any changes to your data, it writes a record to the database log. Each of those writes requires a round-trip to the disk subsystem, which you should try to minimize. Each write also includes some overhead. Therefore, you can improve performance by writing multiple changes at once. The way to do that is by executing multiple writes within one transaction. If you don’t explicitly specify a transaction, SQL Server transacts each change separately.

There is a point of diminishing returns with regard to transaction size. Although larger transactions can help improve disk throughput, they can also block other threads if the commands acquire any database locks. For that reason, it’s a good idea to adopt a middle ground when it comes to transaction length – not too short and not too long – to give other threads a chance to run in between the transactions.

Copy the code and markup from sql-batch2.aspx into a new web form called sql-batch3.aspx, and edit the inner loop, as follows:

for (int j = 0; j < numBatches; j++)

{

using (SqlTransaction trans = conn.BeginTransaction())

{

using (SqlCommand cmd = new SqlCommand(query, conn))

{

cmd.Transaction = trans;

p = cmd.Parameters;

Guid userId = Guid.NewGuid();

for (int i = 0; i < batchSize; i++)

{

p.Add("pvid" + i, SqlDbType.BigInt).Direction =

ParameterDirection.Output;

p.Add("userid" + i, SqlDbType.UniqueIdentifier).Value = userId;

p.Add("pvurl" + i, SqlDbType.VarChar, 256).Value =

"http://www.12titans.net/test.aspx";

}

try

{

await cmd.ExecuteNonQueryAsync();

trans.Commit();

}

catch (SqlException ex)

{

trans.Rollback();

EventLog.WriteEntry("Application",

"Error in WritePageView: " + ex.Message + "

",

EventLogEntryType.Error, 101);

}

}

}

}

Call conn.BeginTransaction() to start a new transaction. Associate the transaction with the SqlCommand object by setting cmd.Transaction.

After the query is executed, call trans.Commit() to commit the transaction. If the query throws an exception, then call trans.Rollback() to roll back the transaction (in a production environment, you may want to wrap that call in a separate try / catch block, in case it fails).

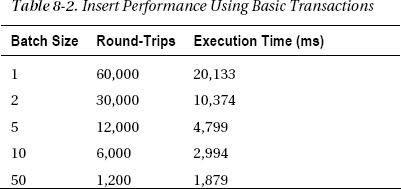

Table 8-2 shows the results of the performance tests, after truncating the table first to make sure you’re starting from the same point.

Notice that the number of round-trips has tripled in each case. That’s because ADO.NET sends the BEGIN TRANSACTION and COMMIT TRANSACTION commands in separate round-trips. That, in turn, causes the performance for the first case to be worse than without transactions, since network overhead dominates. However, as the batch size increases, network overhead becomes less significant, and the improved speed with which SQL Server can write to the log disk becomes apparent. With a batch size of 50, inserting 20,000 records takes only 24 percent as long as it did without explicit transactions.

Using Explicit BEGIN and COMMIT TRANSACTION Statements

Partly for fun and partly because the theme of this book is, after all, ultra-fast ASP.NET, you can eliminate the extra round-trips by including the transaction commands in the text of the command string. To illustrate, make a copy of sql-batch2.aspx (the version without transaction support), call it sql-batch4.aspx, and edit the part of the code-behind that builds the command string, as follows:

StringBuilder sb = new StringBuilder();

string sql = "EXEC [Traffic].[AddPageView] @pvid{0} out, @userid{0}, @pvurl{0};";

sb.Append("BEGIN TRY; BEGIN TRANSACTION;");

for (int i = 0; i < batchSize; i++)

{

sb.AppendFormat(sql, i);

}

sb.Append(

"COMMIT TRANSACTION;END TRY

BEGIN CATCH

ROLLBACK TRANSACTION

END CATCH");

string query = sb.ToString();

The T-SQL syntax allows you to use semicolons to separate all the commands except BEGIN CATCH and END CATCH. For those, you should use newlines instead.

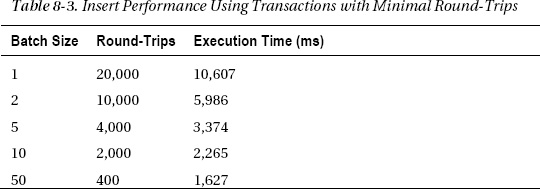

Table 8-3 shows the test results. Notice that the difference from the previous example is greatest for the smaller batch sizes and diminishes for the larger batch sizes. Even so, the largest batch size is about 14 percent faster, although the code definitely isn’t as clean as with BeginTransaction().

Table-Valued Parameters

T-SQL doesn’t support arrays. In the past, developers have often resorted to things like comma-separated strings or XML as workarounds. SQL Server 2008 introduced table-valued parameters. The idea is that since tables are somewhat analogous to an array, you can now pass them as arguments to stored procedures. This not only provides a cleaner way to do a type of command batching, but it also performs well, assuming that the stored procedure itself uses set-based commands and avoids cursors.

To extend the previous examples, first use SQL Server Management Studio (SSMS) to add a new TABLE TYPE and a new stored procedure.

create type PageViewType as table (

[UserId] UNIQUEIDENTIFIER NULL,

[PvUrl] VARCHAR(256) NOT NULL

)

CREATE PROCEDURE [Traffic].[AddPageViewTVP]

@pvid BIGINT OUT,

@rows PageViewType READONLY

AS

BEGIN

SET NOCOUNT ON

DECLARE @trandate DATETIME

SET @trandate = GETUTCDATE()

INSERT INTO [Traffic].[PageViews]

SELECT @trandate, UserId, PvUrl

FROM @rows

SET @pvid = SCOPE_IDENTITY()

END

You use the TABLE TYPE as the type of one of the arguments to the stored procedure. T-SQL requires that you mark the parameter READONLY. The body of the stored procedure uses a single insert statement to insert all the rows of the input table into the destination table. It also returns the last identity value that was generated.

To use this procedure, copy the code and markup from sql-batch1.aspx to sql-batch5.aspx, add the async keywords to the Page directive, connection string and method declaration as in the previous examples, and edit the main loop, as follows:

for (int j = 0; j < numBatches; j++)

{

DataTable table = new DataTable();

table.Columns.Add("userid", typeof(Guid));

table.Columns.Add("pvurl", typeof(string));

using (SqlCommand cmd = new SqlCommand("[Traffic].[AddPageViewTVP]", conn))

{

cmd.CommandType = CommandType.StoredProcedure;

Guid userId = Guid.NewGuid();

for (int i = 0; i < batchSize; i++)

{

table.Rows.Add(userId, "http://www.12titans.net/test.aspx");

}

SqlParameterCollection p = cmd.Parameters;

p.Add("pvid", SqlDbType.BigInt).Direction = ParameterDirection.Output;

SqlParameter rt = p.AddWithValue("rows", table);

rt.SqlDbType = SqlDbType.Structured;

rt.TypeName = "PageViewType";

try

{

await cmd.ExecuteNonQueryAsync();

pvid = (long)p["pvid"].Value;

}

catch (SqlException ex)

{

EventLog.WriteEntry("Application",

"Error in WritePageView: " + ex.Message + "

",

EventLogEntryType.Error, 101);

break;

}

}

}

Here’s what the code does:

- Creates a

DataTablewith the two columns that you want to use for the database inserts.- Adds

batchSizerows to theDataTablefor each batch, with your values for the two columns- Configures the

SqlParametersfor the command, including settingpvidas an output value and adding theDataTableas the value of the rows table-valued parameter. ADO.NET automatically transforms theDataTableinto a table-valued parameter.- Asynchronously executes the command and retrieves the value of the output parameter.

- Catches database exceptions and writes a corresponding message to the Windows error log.

In addition to providing a form of command batching, this version also has the advantage of executing each batch in a separate transaction, since the single insert statement uses one transaction to do its work.

It’s worthwhile to look at the command that goes across the wire, using SQL Profiler. Here’s a single batch, with a batch size of 2:

declare @p1 bigint

SET @p1=0

DECLARE @p2 dbo.PageViewType

INSERT INTO @p2 VALUES

('AD08202A-5CE9-475B-AD7D-581B1AE6F5D1',N'http://www.12titans.net/test.aspx')

INSERT INTO @p2 VALUES

('AD08202A-5CE9-475B-AD7D-581B1AE6F5D1',N'http://www.12titans.net/test.aspx')

EXEC [Traffic].[AddPageViewTVP] @pvid=@p1 OUTPUT,@rows=@p2

SELECT @p1

Notice that the DataTable rows are inserted into an in-memory table variable, which is then passed to the stored procedure.

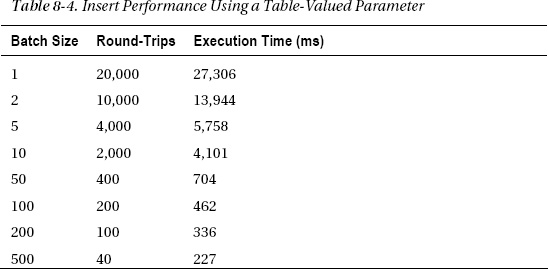

Table 8-4 shows the performance of this approach.

The performance isn’t as good as the previous example (sql-batch4.aspx) until the batch size reaches 50. However, unlike with the previous examples, in this case write performance continues to improve even if you increase the batch size to 500. The best performance here has more than 64 times the throughput of the original one-row-at-a-time example. A single row takes only about 11 microseconds to insert, which results in a rate of more than 88,000 rows per second.

Multiple Result Sets

If you need to process a number of queries at a time, each of which produces a separate result set, you can have SQL Server process them in a single round-trip. When executed, the command will return multiple result sets. This provides a mechanism to avoid issuing back-to-back queries separately; you should combine them into a single round-trip whenever possible.

You might do this by having a stored procedure that issues more than one SELECT statement that returns rows, or perhaps by executing more than one stored procedure in a batch, using the command batching techniques described earlier.

As an example, first create a stored procedure:

CREATE PROCEDURE [Traffic].[GetFirstLastPageViews]

@count INT

AS

BEGIN

SET NOCOUNT ON

SELECT TOP (@count) PvId, PvDate, UserId, PvUrl

FROM [Traffic].[PageViews]

ORDER BY Pvid ASC

SELECT TOP (@count) PvId, PvDate, UserId, PvUrl

FROM [Traffic].[PageViews]

ORDER BY Pvid DESC

END

The procedure returns the first and last rows in the PageViews table, in two result sets, using a parameterized count.

Using SqlDataReader.NextResult()

Create a web form called sql-result1.aspx, add Async="True" to the Page directive, and edit the <form> part of the markup as follows:

<form id="form1" runat="server">

<div>

Count: <asp:TextBox runat="server" ID="cnt" /><br />

<asp:Button runat="server" Text="Submit" /><br />

<asp:GridView runat="server" ID="first" />

<br />

<asp:GridView runat="server" ID="last" />

</div>

</form>

The form has one text box for a count parameter, a submit button, and two data GridView controls.

Next, edit the code-behind, as follows:

using System;

using System.Collections;

using System.Data;

using System.Data.SqlClient;

using System.Diagnostics;

using System.Text;

using System.Web.UI;

public partial class sql_result1 : Page

{

public const string ConnString =

"Data Source=server;Initial Catalog=Sample;Integrated Security=True;Async=True";

protected async void Page_Load(object sender, EventArgs e)

{

if (this.IsPostBack)

{

int numRecords = Convert.ToInt32(this.cnt.Text);

using (SqlConnection conn = new SqlConnection(ConnString))

{

await conn.OpenAsync();

using (SqlCommand cmd =

new SqlCommand("[Traffic].[GetFirstLastPageViews]", conn))

{

cmd.CommandType = CommandType.StoredProcedure;

SqlParameterCollection p = cmd.Parameters;

p.Add("count", SqlDbType.Int).Value = numRecords;

try

{

SqlDataReader reader = await cmd.ExecuteReaderAsync();

this.first.DataSource = reader;

this.first.DataBind();

await reader.NextResultAsync();

this.last.DataSource = reader;

this.last.DataBind();

}

catch (SqlException ex)

{

EventLog.WriteEntry("Application",

"Error in GetFirstLastPageView: " + ex.Message + "

",

EventLogEntryType.Error, 102);

throw;

}

}

}

}

}

}

The code executes the stored procedure and then binds each result set to a GridView control. Calling reader.NextResultAsync() after binding the first result set causes the reader to asynchronously advance to the next set of rows. This approach allows you to use a single round-trip to retrieve the two sets of rows generated by the stored procedure.

Using SqlDataAdapter and a DataSet

You can also use SqlDataAdapter to load more than one result set into multiple DataTables in a DataSet.

For example, make a copy of sql-result1.aspx called sql-result2.aspx, and edit the code-behind, as follows:

using (SqlCommand cmd = new SqlCommand("[Traffic].[GetFirstLastPageViews]", conn))

{

cmd.CommandType = CommandType.StoredProcedure;

SqlParameterCollection p = cmd.Parameters;

p.Add("count", SqlDbType.Int).Value = numRecords;

using (SqlDataAdapter adapter = new SqlDataAdapter(cmd))

{

try

{

DataSet results = new DataSet();

adapter.Fill(results);

this.first.DataSource = results.Tables[0];

this.first.DataBind();

this.last.DataSource = results.Tables[1];

this.last.DataBind();

}

catch (SqlException ex)

{

EventLog.WriteEntry("Application",

"Error in GetFirstLastPageView: " + ex.Message + "

",

EventLogEntryType.Error, 102);

throw;

}

}

}

The call to adapter.Fill() will check to see whether more than one result set is available. For each result set, it will create and load one DataTable in the destination DataSet. However, this approach doesn’t work with asynchronous database calls, so it’s only suitable for background threads or perhaps infrequently used pages where synchronous calls are acceptable.

Data Precaching

As I mentioned earlier, after SQL Server reads data from disk into memory, the data stays in memory for a while; exactly how long depends on how much RAM is available and the nature of subsequent commands. This aspect of the database points the way to a powerful and yet rarely used performance optimization technique: precaching at the data tier.

Approach

In the cases where you can reasonably predict the next action of your users and where that action involves database access with predictable parameters, you can issue a query to the database that will read the relevant data into RAM before it’s actually needed. The goal is to precache the data at the data tier, so that when you issue the “real” query, you avoid the initial disk access. This can also work when the future command will be an UPDATE or a DELETE, since those commands need to read the associated rows before they can be changed.

Here are a few tips to increase the effectiveness of database precaching with a multiserver load-balanced web tier:

- You should issue the precache command either from a background thread or from an asynchronous Ajax call. You should not issue it in-line with the original page, even if the page is asynchronous.

- You should limit (throttle) the number precaching queries per web server to avoid unduly loading the server based solely on anticipated future work.

- Avoid issuing duplicate precaching queries.

- Don’t bother precaching objects that will probably already be in database memory, such as frequently used data.

- You should discard precaching queries if they are too old, since there’s no need to execute them after the target page has run.

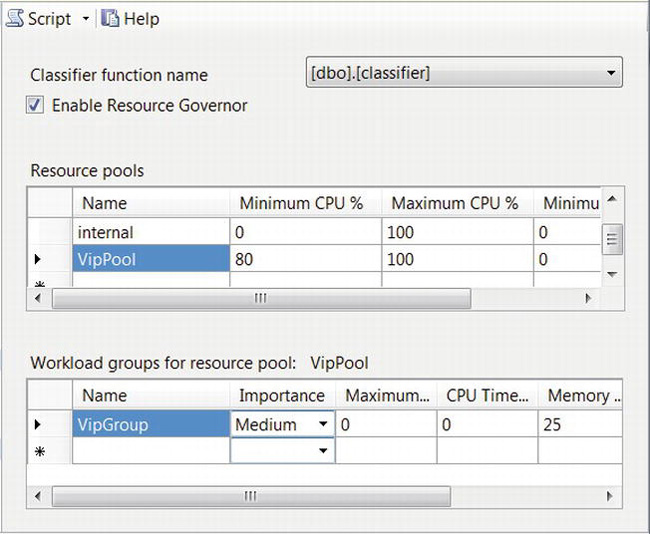

- Execute precaching commands with a lower priority, using Resource Governor, so that they don’t slow down “real” commands.

Precaching Forms-Based Data

You may be able to use database precaching with some forms-based data. For example, consider a login page. After viewing that page, it’s likely that the next step for a user will be to log in, using their username and password. To validate the login, your application will need to read the row in the Users table that contains that user’s information. Since the index of the row you need is the user’s name and since you don’t know what that is until after they’ve started to fill in the form, Ajax can provide the first part of a solution here for precaching.

When the user exits the username box in the web form, you can issue an async Ajax command to the server that contains the user’s name. For precaching, you don’t care about the password, since the name alone will be enough to find the right row.

On the web server, the other side of the Ajax call would queue a request to a background thread to issue a SELECT to read the row of interest. The server will process that request while the user is typing their password. Although you might be tempted to return a flag from the Ajax call to indicate that the username is valid, that’s usually not recommended, for security reasons. In addition, the Ajax call can return more quickly if it just queues the request and doesn’t wait for a result.

When the user clicks the Login button on the web form, the code on the server will validate the username and password by reissuing a similar query. At that point, the data will already be in memory on the database server, so the query will complete quickly.

Precaching Page-at-a-Time Data

Another example would be paging through data, such as in a catalog. In that case, there may be a good chance that the user will advance to the next page after they finish viewing the current one. To make sure that the data for the next page are in memory on the database server, you could do something like this:

- Queue a work item to a background thread that describes the parameters to read the next page of data from the catalog and a timestamp to indicate when you placed the query in the queue.

- When the background thread starts to process the work item, it should discard the request if it’s more than a certain age (perhaps one to three seconds), since the user may have already advanced to the next page by then.

- Check the work item against a list of queries that the background thread has recently processed. If the query is on that list, then discard the request. This may be of limited utility in a load-balanced environment, but it can still help in the event of attacks or heavy burst-oriented traffic.

- Have the background thread use a connection to the server that’s managed by Resource Governor (see later in this chapter), so that the precaching queries from all web servers together don’t overwhelm database resources. That can also help from a security perspective by minimizing the impact of a denial-of-service attack.

- Cache the results of the query at the web tier, if appropriate.

- After issuing the query, the background thread might sleep for a short time before retrieving another work item from the queue, which will throttle the number of read-ahead requests that the web server can process.

The performance difference between using data that’s already in memory and having to read it from disk first can be very significant – as much as a factor of ten or even much more, depending on the size of the data, the details of the query and the associated schema, and the speed of your disk subsystem.

Data Access Layer

An often-cited best practice for data-oriented applications is to provide a layer of abstraction on top of your data access routines. That’s usually done by encapsulating them in a data access layer (DAL), which can be a class or perhaps one or more assemblies, depending on the complexity of your application.

The motivations for grouping the data access code in one place include easing maintenance, database independence (simplifying future migration to other data platforms), encouraging consistent patterns, maximizing code reuse, and simplifying management of command batches, connections, transactions, and multiple result sets.

With synchronous database commands, the DAL methods would typically execute the command and return the result. If you use asynchronous commands everywhere you can, as I suggested in earlier chapters, you will need to modify your DAL accordingly.

For the Asynchronous Programming Model (APM), in the same style as the ADO.NET libraries, you should divide your code into one method that begins a query and another that ends it and collects the results.

Here’s an example:

public static class DAL

{

public static IAsyncResult AddBrowserBegin(RequestInfo info,

AsyncCallback callback)

{

SqlConnection conn =

new SqlConnection(ConfigData.TrafficConnectionStringAsync);

SqlCommand cmd = new SqlCommand("[Traffic].[AddBrowser]", conn);

cmd.CommandType = CommandType.StoredProcedure;

cmd.Parameters.Add("id", SqlDbType.UniqueIdentifier).Value = info.BrowserId;

cmd.Parameters.Add("agent", SqlDbType.VarChar, 256).Value = info.Agent;

conn.Open();

return cmd.BeginExecuteNonQuery(callback, cmd);

}

public static void AddBrowserEnd(IAsyncResult ar)

{

using (SqlCommand cmd = ar.AsyncState as SqlCommand)

{

if (cmd != null)

{

try

{

cmd.EndExecuteNonQuery(ar);

}

catch (SqlException e)

{

EventLog.WriteEntry("Application",

"Error in AddBrowser: " + e.Message,

EventLogEntryType.Error, 103);

throw;

}

finally

{

cmd.Connection.Dispose();

}

}

}

}

}

The AddBrowserBegin() method creates a SqlConnection from an async connection string, along with an associated SqlCommand. After filling in the parameters, it opens the connection, begins the command, and returns the resulting IAsyncResult.

The AddBrowserEnd() method ends the command and calls Dispose() on the SqlConnection and SqlCommand objects (implicitly via a using statement for SqlCommand and explicitly for SqlConnection).

For the Task-based Asynchronous Pattern (TAP), it only takes a single method; you can simplify the code considerably:

public static async void AddBrowser(RequestInfo info)

{

using (SqlConnection conn =

new SqlConnection(ConfigData.TrafficConnectionStringAsync))

{

using (SqlCommand cmd = new SqlCommand("[Traffic].[AddBrowser]", conn))

{

cmd.CommandType = CommandType.StoredProcedure;

cmd.Parameters.Add("id", SqlDbType.UniqueIdentifier).Value = info.BrowserId;

cmd.Parameters.Add("agent", SqlDbType.VarChar, 256).Value = info.Agent;

await conn.OpenAsync();

await cmd.ExecuteNonQueryAsync();

}

}

}

You will probably also want to include connection and transaction management in your DAL. ADO.NET uses connection pools to reuse connections as much as it can. Connections are pooled based entirely on a byte-for-byte comparison of the connection strings; different connection strings will not use connections from the same pool. However, even with identical connection strings, if you execute a command within the scope of one SqlConnection object and then execute another command within the scope of a different SqlConnection, the framework will treat that as a distributed transaction. To avoid the associated performance impact and complexity, it’s better to execute both commands within the same SqlConnection. In fact, it would be better still to batch the commands together, as described earlier. Command batching and caching are also good things to include in your DAL.

Query and Schema Optimization

There’s a definite art to query and schema optimization. It’s a large subject worthy of a book of its own, so I’d like to cover just a few potentially high-impact areas.

Clustered and Nonclustered Indexes

Proper design of indexes, and in particular the choice between clustered and nonclustered indexes and their associated keys, is critical for optimal performance of your database.

As I mentioned earlier, SQL Server manages disk space in terms of 8KB pages and 64KB extents. When a clustered index is present, table rows within a page and the pages within an extent are ordered based on that index. Since a clustered index determines the physical ordering of rows within a table, each table can have only one clustered index.

A table can also have secondary, or nonclustered, indexes. You can think of a nonclustered index as a separate table that only has a subset of the columns from the original table. You specify one or more columns as the index key and they will determine the physical order of the rows in the index. By default, the rows in a nonclustered index only contain the key and the clustered index key, if there is one and if it’s unique. However, you can also include other columns from the table.

A table without any indexes is known as a heap and is unordered.

Neither a clustered nor a nonclustered index has to be unique or non-null, though both can be. Of course, both types of indexes can also include multiple columns, and you can specify an ascending or descending sort order. If a clustered index is not unique, then all nonclustered indexes include a 4-byte pointer back to the original row, instead of the clustered index.

Including the clustered index key in the nonclustered index allows SQL Server to quickly find the rest of the columns associated with a particular row, through a process known as a key lookup. SQL Server may also use the columns in the nonclustered index to satisfy the query; if all the columns you need to satisfy your query are present in the nonclustered index, then the key lookup can be skipped. Such a query is covered. You can help create covered queries and eliminate key lookups by adding the needed columns to a nonclustered index, assuming the additional overhead is warranted.

Index Performance Issues

Since SQL Server physically orders rows by the keys in your indexes, consider what happens when you insert a new row. For an ascending index, if the value of the index for the new row is greater than that for any previous row, then it is inserted at the end of the table. In that case, the table grows smoothly, and the physical ordering is easily maintained. However, if the key value places the new row in the middle of an existing page that’s already full of other rows, then that page will be split by creating a new one and moving half of the existing rows into it. The result is fragmentation of the table; its physical order on disk is no longer the same as its logical order. The process of splitting the page also means that it is no longer completely full of data. Both of those changes will significantly decrease the speed with which you will be able to read the full table.

The fastest way for SQL Server to deliver a group of rows from disk is when they are physically next to each other. A query that requires key lookups for each row or that even has to directly seek to each different row will be much slower than one that can deliver a number of contiguous rows. You can take advantage of this in your index and query design by preferring indexes for columns that are commonly used in range-based WHERE clauses, such as BETWEEN.

When there are indexes on a table, every time you modify the table, the indexes may also need to be modified. Therefore, there’s a trade-off between the cost of maintaining indexes and their use in allowing queries to execute faster. If you have a table where you are mostly doing heavy INSERTs and only very rarely do a SELECT, then it may not be worth the performance cost of maintaining an extra index (or any index at all).

If you issue a query with a column in the WHERE clause that doesn’t have an index on it, the result is usually either a table scan or an index scan. SQL Server reads every row of the table or index. In addition to the direct performance cost of reading every row, there can also be an indirect cost. If your server doesn’t have enough free memory to hold the table being scanned, then buffers in the cache will be dropped to make room, which might negatively affect the performance of other queries. Aggregation queries, such as COUNT and SUM, by their nature often involve table scans, and for that reason, you should avoid them as much as you can on large tables. See the next chapter for alternatives.

Index Guidelines

With these concepts in mind, here are some guidelines for creating indexes:

- Prefer narrow index keys that always increase in value, such as an integer

IDENTITY. Keeping them narrow means that more rows will fit on a page, and having them increase means that new rows will be added at the end of the table, avoiding fragmentation.- Avoid near-random index keys such as strings or

UNIQUEIDENTIFIERs. Their randomness will cause a large number of page splits, resulting in fragmentation and associated poor performance.- Although exact index definitions may evolve over time, begin by making sure that the columns you use in your

WHEREandJOINclauses have indexes assigned to them.- Consider assigning the clustered index to a column that you often use to select a range of rows or that usually needs to be sorted. It should be unique for best performance.

- In cases where you have mostly

INSERTs and almost noSELECTs (such as for logs), you might choose to use a heap and have no indexes. In that case, SQL Server will insert new rows at the end of the table, which prevents fragmentation. That allowsINSERTs to execute very quickly.- Avoid table or index scans. Some query constructs can force a scan even when an index is available, such as a

LIKEclause with a wildcard at the beginning.

Although you can use NEWSEQUENTIALID() to generate sequential GUIDs, that approach has some significant limitations:

- It can be used only on the server, as the

DEFAULTvalue for a column. One of the more useful aspects of GUIDs as keys is being able to create them from a load-balanced web tier without requiring a database round-trip, which doesn’t work with this approach.- The generated GUIDs are only guaranteed to be increasing for “a while.” In particular, things like a server reboot can cause newly generated GUIDs to have values less than older ones. That means new rows aren’t guaranteed to always go at the end of tables; page splits can still happen.

- Another reason for using GUIDs as keys is to have user-visible, non-guessable values. When the values are sequential, they become guessable.

Example with No Indexes

Let’s start with a table by itself with no indexes:

create table ind (

v1 INT IDENTITY,

v2 INT,

v3 VARCHAR(64)

)

The table has three columns: an integer IDENTITY, another integer, and a string. Since it doesn’t have any indexes on it yet, this table is a heap. INSERTs will be fast, since they won’t require any validations for uniqueness and since the sort order and indexes don’t have to be maintained.

Next, let’s add a half million rows to the table, so you’ll have a decent amount of data to run test queries against:

declare @i int

SET @i = 0

WHILE (@i < 500000)

BEGIN

INSERT INTO ind

(v2, v3)

VALUES

(@i * 2, 'test')

SET @i = @i + 1

END

The v1 IDENTITY column will automatically be filled with integers from 1 to 500,000. The v2 column will contain even integers from zero to one million, and the v3 column will contain a fixed string. Of course, this would be faster if you did multiple inserts in a single transaction, as discussed previously, but that’s overkill for a one-time-only script like this.

You can have a look at the table’s physical characteristics on disk by running the following command:

dbcc showcontig (ind) with all_indexes

Since there are no indexes yet, information is displayed for the table only:

Table: 'ind' (101575400); index ID: 0, database ID: 23

TABLE level scan performed.

- Pages Scanned................................: 1624

- Extents Scanned..............................: 204

- Extent Switches..............................: 203

- Avg. Pages per Extent........................: 8.0

- Scan Density [Best Count:Actual Count].......: 99.51% [203:204]

- Extent Scan Fragmentation ...................: 0.98%

- Avg. Bytes Free per Page.....................: 399.0

- Avg. Page Density (full).....................: 95.07%

You can see from this that the table occupies 1,624 pages and 204 extents and that the pages are 95.07 percent full on average.

Adding a Clustered Index

Here’s the first query that you’re going to optimize:

SELECT v1, v2, v3

FROM ind

WHERE v1 BETWEEN 1001 AND 1100

You’re retrieving all three columns from a range of rows, based on the v1 IDENTITY column.

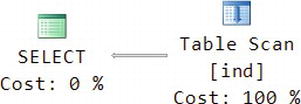

Before running the query, let’s look at how SQL Server will execute it. Do that by selecting it in SSMS, right-clicking, and selecting Display Estimated Execution Plan. Here’s the result:

This shows that the query will be executed using a table scan; since the table doesn’t have an index yet, the only way to find any specific values in it is to look at each and every row.

Before executing the query, let’s flush all buffers from memory:

checkpoint

DBCC DROPCLEANBUFFERS

The CHECKPOINT command tells SQL Server to write all the dirty buffers it has in memory out to disk. Afterward, all buffers will be clean. The DBCC DROPCLEANBUFFERS command then tells it to let go of all the clean buffers. The two commands together ensure that you’re starting from the same place each time: an empty buffer cache.

Next, enable reporting of some performance-related statistics after running each command:

set statistics io on

SET STATISTICS TIME ON

STATISTICS IO will tell you how much physical disk I/O was needed, and STATISTICS TIME will tell you how much CPU time was used.

Run the query, and click the Messages tab to see the reported statistics:

Table 'ind'. Scan count 1, logical reads 1624,

physical reads 29, read-ahead reads 1624,

lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

SQL Server Execution Times:

CPU time = 141 ms, elapsed time = 348 ms.

The values you’re interested in are 29 physical reads, 1,624 read-ahead reads, 141ms of CPU time, and 348ms of elapsed time. Notice that the number of read-ahead reads is the same as the total size of the table, as reported by DBCC SHOWCONTIG in the previous code listing. Also notice the difference between the CPU time and the elapsed time, which shows that the query spent about half of the total time waiting for the disk reads.

![]() Note I don’t use logical reads as my preferred performance metric because they don’t accurately reflect the load that the command generates on the server, so tuning only to reduce that number may not produce any visible performance improvements. CPU time and physical reads are much more useful in that way.

Note I don’t use logical reads as my preferred performance metric because they don’t accurately reflect the load that the command generates on the server, so tuning only to reduce that number may not produce any visible performance improvements. CPU time and physical reads are much more useful in that way.

The fact that the query is looking for the values of all columns over a range of the values of one of them is an ideal indication for the use of a clustered index on the row that’s used in the WHERE clause:

CREATE UNIQUE CLUSTERED INDEX IndIX ON ind(v1)

Since the v1 column is an IDENTITY, that means it’s also UNIQUE, so you include that information in the index. This is almost the same as SQL Server’s default definition of a primary key from an index perspective, so you can accomplish nearly the same thing as follows:

ALTER TABLE ind ADD CONSTRAINT IndIX PRIMARY KEY (v1)

The difference between the two is that a primary key does not allow NULLs, where the unique clustered index does, although there can be only one row with a NULL when the index is unique.

Repeating the DBCC SHOWCONTIG command now shows the following relevant information:

- Pages Scanned................................: 1548

- Extents Scanned..............................: 194

- Avg. Page Density (full).....................: 99.74%

There are a few less pages and extents, with a corresponding increase in page density.

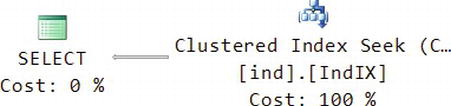

After adding the clustered index, here’s the resulting query plan:

The table scan has become a clustered index seek, using the newly created index.

After flushing the buffers again and executing the query, here are the relevant statistics:

physical reads 3, read-ahead reads 0

CPU time = 0 ms, elapsed time = 33 ms.

The total number of disk reads has dropped from 1,624 to just 3, CPU time has decreased from 141ms to less than 1ms, and elapsed time has decreased from 348ms to 33ms. At this point, our first query is fully optimized.

Adding a Nonclustered Index

Here’s the next query:

SELECT v1, v2, v3

FROM ind

WHERE v2 BETWEEN 1001 AND 1100

This is similar to the previous query, except it’s using v2 in the WHERE clause instead of v1.

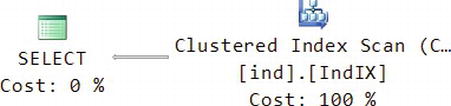

Here’s the initial query plan:

This time, instead of scanning the table, SQL Server will scan the clustered index. However, since each row of the clustered index contains all three columns, this is really the same as scanning the whole table.

Next, flush the buffers again, and execute the query. Here are the results:

physical reads 24, read-ahead reads 1549

CPU time = 204 ms, elapsed time = 334 ms.

Sure enough, the total number of disk reads still corresponds to the size of the full table. It’s a tiny bit faster than the first query without an index, but only because the number of pages decreased after you added the clustered index.

To speed up this query, add a nonclustered index:

CREATE UNIQUE NONCLUSTERED INDEX IndV2IX ON ind(v2)

As with the first column, this column is also unique, so you include that information when you create the index.

Running DBCC SHOWCONTIG now includes information about the new index:

- Pages Scanned................................: 866

- Extents Scanned..............................: 109

- Avg. Page Density (full).....................: 99.84%

You can see that it’s a little more than half the size of the clustered index. It’s somewhat smaller since it only includes the integer v1 and v2 columns, not the four-character-long strings you put in v3.

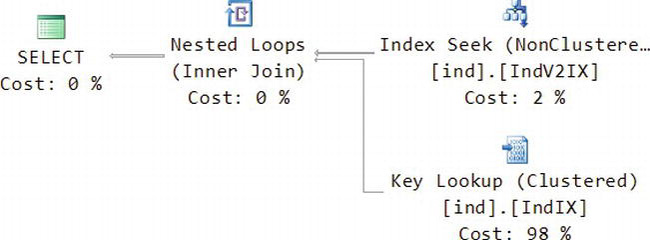

Here’s the new query plan:

This time, SQL Server will do an inexpensive index seek on the IndV2IX nonclustered index you just created. That will allow it to find all the rows with the range of v2 values that you specified. It can also retrieve the value of v1 directly from that index, since the clustered index column is included with all nonclustered indexes. However, to get the value of v3, it needs to execute a key lookup, which finds the matching row using the clustered index. Notice too that the key lookup is 98 percent of the cost of the query.

The two indexes amount to two physically separate tables on disk. The clustered index contains v1, v2, and v3 and is sorted and indexed by v1. The nonclustered index contains only v1 and v2 and is sorted and indexed by v2. After retrieving the desired rows from each index, the inner join in the query plan combines them to form the result.

After flushing the buffers again and executing the query, here are the results:

physical reads 4, read-ahead reads 2

CPU time = 0 ms, elapsed time = 35 ms.

The CPU time and elapsed time are comparable to the first query when it was using the clustered index. However, there are more disk reads because of the key lookups.

Creating a Covered Index

Let’s see what happens if you don’t include v3 in the query:

SELECT v1, v2

FROM ind

WHERE v2 BETWEEN 1001 AND 1100

Here’s the query plan:

Since you don’t need v3 any more, now SQL Server can just use an index seek on the nonclustered index.

After flushing the buffers again, here are the results:

physical reads 3, read-ahead reads 0

CPU time = 0 ms, elapsed time = 12 ms.

You’ve eliminated the extra disk reads, and the elapsed time has dropped significantly too.

You should be able to achieve a similar speedup for the query that uses v3 by adding v3 to the nonclustered index to create a covered index and avoid the key lookups:

create unique nonclustered index IndV2IX on ind(v2)

INCLUDE (v3)

WITH (DROP_EXISTING = ON)

This command will include v3 in the existing index, without having to separately drop it first.

Here’s the updated DBCC SHOWCONTIG for the nonclustered index:

- Pages Scanned................................: 1359

- Extents Scanned..............................: 170

- Avg. Page Density (full).....................: 99.98%

The index has grown from 866 pages and 109 extents to 1,359 pages and 170 extents, while the page density remains close to 100 percent. It’s still a little smaller than the clustered index because of some extra information that is stored in the clustered index other than just the column data.

The new query plan for the original query with v3 that you’re optimizing is exactly the same as the plan shown earlier for the query without v3:

Here are the statistics:

physical reads 3, read-ahead reads 0

CPU time = 0 ms, elapsed time = 12 ms.

The results are also the same as the previous test. However, the price for this performance is that you now have two complete copies of the table: one with v1 as the index, and one with v2 as the index. Therefore, while SELECTs of the type you used in the examples here will be fast, INSERTs, UPDATEs, and DELETEs will be slower, because they now have to change two physical tables instead of just one.

Index Fragmentation

Now let’s see what happens if you insert 5,000 rows of data that has a random value for v2 that’s in the range of the existing values, which is between zero and one million. The initial values were all even numbers, so you can avoid uniqueness collisions by using odd numbers. Here’s the T-SQL:

declare @i int

SET @i = 0

WHILE (@i < 5000)

BEGIN

INSERT INTO ind

(v2, v3)

VALUES

((CONVERT(INT, RAND() * 500000) * 2) + 1, 'test')

SET @i = @i + 1

END

Those 5,000 rows are 1 percent of the 500,000 rows already in the table. After running the script, here’s what DBCC SHOWCONTIG reports for the clustered index:

- Pages Scanned................................: 1564

- Extents Scanned..............................: 199

- Avg. Page Density (full).....................: 99.70%

For the nonclustered index, it reports the following:

- Pages Scanned................................: 2680

- Extents Scanned..............................: 339

- Avg. Page Density (full).....................: 51.19%

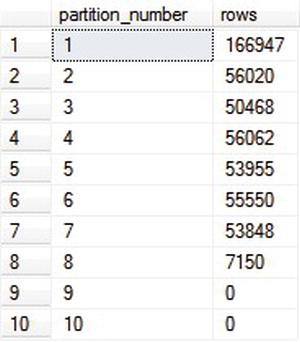

Notice that the clustered index has just a few more pages and extents, and it remains at close to 100 percent page density. However, the nonclustered index has gone from 1,359 pages and 170 extents at close to 100 percent density to 2,680 pages, 339 extents, and about 50 percent density. Since the clustered index doesn’t depend on the value of v2 and since v1 is an IDENTITY value that steadily increases, the new rows can just go at the end of the table.

Rows in the nonclustered index are ordered based on v2. When a new row is inserted, SQL Server places it in the correct page and position to maintain the sort order on v2. If that results in more rows than will fit on the page, then the page is split in two, and half the rows are placed in each page. That’s why you see the average page density at close to 50 percent.

Excessive page splits can have a negative impact on performance, since they can mean that many more pages have to be read to access the same amount of data.

You can explicitly defragment the indexes for the ind table with the following command:

DBCC INDEXDEFRAG(SampleDB, 'ind')

COLUMNSTORE Index

SQL Server 2012 introduced a new, special purpose type of index called COLUMNSTORE, available only in the Enterprise and Developer editions. COLUMNSTORE indexes improve the efficiency of index scans. They’re useful in scenarios where you have very large tables (generally multiple millions of rows) that you often query using joins and aggregates, such as with a fact table in a data warehouse.

One downside of COLUMNSTORE indexes is that they cause your table to become read-only. Limited modification is possible without dropping and re-creating the index on the entire table, but it requires using data partitioning (covered later in this chapter) to switch out part of the table.

The upside of COLUMNSTORE indexes is that they can provide a very significant performance boost for certain types of queries. Scans of even billions of rows can complete in a few seconds or less.

As the name implies, COLUMNSTORE indexes organize data by columns, rather than by rows as in a conventional index. The indexes don’t have a particular order. SQL Server compresses the rows within each column after first re-ordering them to optimize the amount of compression that’s possible.

As an example, let’s optimize the following query, which uses a table scan:

SELECT COUNT(*), v3

FROM ind

GROUP BY v3

Here are the initial statistics:

Scan count 1, logical reads 1557, physical reads 2, read-ahead reads 1595

CPU time = 219 ms, elapsed time = 408 ms.

Create the index:

CREATE NONCLUSTERED COLUMNSTORE INDEX indcolIX

ON ind (v1, v2, v3)

It’s a sound practice to include all of the eligible columns of your table in the index. There are several data types you can’t use in a COLUMNSTORE index: BINARY, VARBINARY, IMAGE, TEXT, NTEXT, VARCHAR(MAX), CURSOR, HIERARCHYID, TIMESTAMP, UNIQUEIDENTIFIER, SQLVARIANT, XML, DECIMAL or NUMERIC with precision larger than 18, and DATETIMEOFFSET with precision greater than 2.

Here are the statistics after repeating the query with the index in place:

Scan count 1, logical reads 10, physical reads 1, read-ahead reads 2

CPU time = 125 ms, elapsed time = 140 ms.

Due to the high compressibility of the data (all rows contain the same value of v3), the query only requires three physical disk reads for the index, compared to 1,597 reads without the index. CPU time and elapsed time are also much lower.

Miscellaneous Query Optimization Guidelines

Here are a few high-level guidelines for optimizing your queries:

- If you’re writing new queries or code that invokes queries, you should make frequent and regular use of the SQL Profiler to get a feeling for how many round-trips your code is making and how long the queries are taking. For me, it plays an irreplaceable role when I’m doing that kind of work.

- Avoid cursors. Processing data a row-at-a-time in T-SQL is very expensive. Although there are exceptions, 99 percent of the time, it’s worth the effort to rewrite your queries to use set-based semantics, if possible. Alternatives include things like table variables, temporary tables, and so on. I’ve rewritten some cursor-based queries that ran 1,000 times faster as set-based operations. If you can’t avoid cursors, identify all read-only queries, and mark the associated cursors as

FAST_FORWARD.- Avoid triggers. Triggers are a powerful tool, but you should think of them as a last resort; use them only if there is no other way. They can introduce massive amounts of overhead, which tends to be of the slow, row-at-a-time type. Because triggers are nominally hidden from the view of developers, what’s worse is that the extra overhead is hidden too.

- To avoid performance problems because of deadlocks, make a list of all the stored procedures in your system and the order in which they modify tables, and work to ensure that order is consistent from one stored procedure to another. In cases where consistent order isn’t possible, either use an increased transaction isolation level or use locking hints or increased lock granularity.

- Use

SET NOCOUNT ONat the top of most of your stored procedures to avoid the extra overhead of returning a result row count. However, when you want to register the results of a stored procedure withSqlDependencyorSqlCacheDependency, then you must not useSET NOCOUNT ON. Similarly, some of the logic that synchronizesDataSets uses the reported count to check for concurrency collisions.

Data Paging

If you have a large database table and you need to present all or part of it to your users, it can be painfully slow to present a large number of rows on a single page. Imagine a user trying to scroll through a web page with a million rows on it. Not a good idea. A better approach is to display part of the table. While you’re doing so, it’s also important to avoid reading the entire table at both the web tier and the database tier.

Common Table Expressions

You can use common table expressions (CTEs) to address this issue (among many other cool things). Using the PageViews table from the beginning of the chapter as an example, here’s a stored procedure that returns only the rows you request, based on a starting row and a page size:

CREATE PROC [Traffic].[PageViewRows]

@startrow INT,

@pagesize INT

AS

BEGIN

SET NOCOUNT ON

;WITH ViewList ([row], [date], [user], [url]) AS (

SELECT ROW_NUMBER() OVER (ORDER BY PvId) [row], PvDate, UserId, PvUrl

FROM [Traffic].[PageViews]

)

SELECT [row], [date], [user], [url]

FROM ViewList

WHERE [row] BETWEEN @startrow AND @startrow + @pagesize - 1

END

The query works by first declaring an outer frame, including a name and an optional list of column names, in this case, ViewList ([row], [date], [user], [url]).

Next, you have a query that appears to retrieve all the rows in the table, while also applying a row number, using the ROW_NUMBER() function, with which you need to specify the column you want to use as the basis for numbering the rows. In this case, you’re using OVER (ORDER BY PvId). The columns returned by this query are the same ones listed in the outer frame. It might be helpful to think of this query as returning a temporary result set.

Although the ROW_NUMBER() function is very handy, unfortunately you can’t use it directly in a WHERE clause. This is what drives you to using a CTE in the first place, along with the fact that you can’t guarantee that the PvId column will always start from one and will never have gaps.

Finally, at the end of the CTE, you have a query that references the outer frame and uses a WHERE clause against the row numbers generated by the initial query to limit the results to the rows that you want to display. SQL Server only reads as many rows as it needs to satisfy the WHERE clause; it doesn’t have to read the entire table.

![]() Note The

Note The WITH clause in a CTE should be preceded by a semicolon to ensure that SQL Server sees it as the beginning of a new statement.

OFFSET

SQL Server 2012 introduced an alternative approach to CTEs for data paging that’s much easier to use: OFFSET.

For example:

SELECT PvId [row], PvDate, UserId, PvUrl

FROM [Traffic].[PageViews]

ORDER BY [row]

OFFSET 10 ROWS FETCH NEXT 5 ROWS ONLY

OFFSET requires an ORDER BY clause. An OFFSET of zero produces the same results as TOP.

Detailed Example of Data Paging

To demonstrate data paging in action, let’s build an example that allows you to page through a table and display it in a GridView control.

Markup

First, add a new web form to your web site, called paging.aspx, and edit the markup as follows:

<%@ Page Language="C#" EnableViewState="false" AutoEventWireup="false"

CodeFile="paging.aspx.cs" Inherits="paging" %>

Here you’re using several of the best practices discussed earlier: both ViewState and AutoEventWireup are disabled.

<!DOCTYPE html>

<html>

<head runat="server">

<title></title>

</head>

<body>

<form id="form1" runat="server">

<div>

<asp:GridView ID="pvgrid" runat="server" AllowPaging="true"

PageSize="5" DataSourceID="PageViewSource">

<PagerSettings FirstPageText="First" LastPageText="Last"

Mode="NumericFirstLast" />

</asp:GridView>

In the GridView control, enable AllowPaging, set the PageSize, and associate the control with a data source. Since you want to use the control’s paging mode, using a data source is required. Use the <PagerSettings> tag to customize the page navigation controls a bit.

<asp:ObjectDataSource ID="PageViewSource" runat="server"

EnablePaging="True" TypeName="Samples.PageViews"

SelectMethod="GetRows" SelectCountMethod="GetCount"

OnObjectCreated="PageViewSource_ObjectCreated"

OnObjectDisposing="PageViewSource_ObjectDisposing">

</asp:ObjectDataSource>

</div>

</form>

</body>

</html>

Use an ObjectDataSource as the data source, since you want to have programmatic control over the details. Set EnablePaging here, associate the control with what will be your new class using TypeName, and set a SelectMethod and a SelectCountMethod, both of which will exist in the new class. The control uses SelectMethod to obtain the desired rows and SelectCountMethod to determine how many total rows there are so that the GridView can correctly render the paging navigation controls. Also, set OnObjectCreated and OnObjectDisposing event handlers.

Stored Procedure

Next, use SSMS to modify the stored procedure that you used in the prior example as follows:

ALTER PROC [Traffic].[PageViewRows]

@startrow INT,

@pagesize INT,

@getcount BIT,

@count INT OUT

AS

BEGIN

SET NOCOUNT ON

SET @count = -1;

IF @getcount = 1

BEGIN

SELECT @count = count(*) FROM [Traffic].[PageViews]

END

SELECT PvId [row], PvDate, UserId, PvUrl

FROM [Traffic].[PageViews]

ORDER BY [row]

OFFSET @startrow - 1 ROWS FETCH NEXT @pagesize ROWS ONLY

END

Rather than requiring a separate round trip to determine the number of rows in the table, what the example does instead is to add a flag to the stored procedure, along with an output parameter. The T-SQL incurs the overhead of running the SELECT COUNT(*) query (which requires a table scan) only if the flag is set, and returns the result in the output parameter.

Code-Behind

Next, edit the code-behind as follows:

using System;

using System.Web.UI;

using System.Web.UI.WebControls;

using Samples;

public partial class paging : Page

{

protected override void OnInit(EventArgs e)

{

base.OnInit(e);

this.RegisterRequiresControlState(this);

}

Since AutoEventWireup is disabled, override the OnEvent-style methods from the base class.

The ObjectDataSource control needs to know how many rows there are in the target table. Obtaining the row count is expensive; counting rows is a form of aggregation query that requires reading every row in the table. Since the count might be large and doesn’t change often, you should cache the result after you get it the first time to avoid having to repeat that expensive query.