VMware was founded in 1998 to provide x86 virtualization solutions. Virtualization was introduced in the 1970s to allow applications to share and fully utilize centralized computing resources on mainframe systems. Through the 1980s and 1990s, virtualization fell out of favor as low-cost x86 desktops and servers established a model of distributed computing. The broad use of Linux and Windows solidified x86 as the standard architecture for server computing. This model of computing introduced new management challenges, including the following:

Lower server utilization. As x86 server use spread through organizations, studies began to find that the average physical utilization of servers ranged between 10 and 15 percent. Organizations typically installed only one application per server to minimize the impact of updates and vulnerabilities rather than installing multiple applications per physical host to drive up overall utilization.

Increased infrastructure and management costs. As x86 servers proliferated through information technology (IT) organizations, the operational costs—including power, cooling, and facilities—increased dramatically for servers that were not being fully utilized. The increase in server counts also added management complexity that required additional staff and management applications.

Higher maintenance load for end-user desktops. Although the move to a distributed computing model provided freedom and flexibility to end users and the applications they use, this model increased the management and security load on IT departments. IT staff faced numerous challenges, including conforming desktops to corporate security policies, installing more patches, and dealing with the increased risk of security vulnerabilities.

In 1999, VMware released VMware Workstation, which was designed to run multiple operating systems (OSs) at the same time on desktop systems. A person in a support or development role might require access to multiple OSs or application versions, and prior to VMware Workstation, this would require using multiple desktop systems or constantly restaging a single system to meet immediate needs. Workstation significantly reduced the hardware and management costs in such a scenario, as those environments could be hosted on a single workstation. With snapshot technology, it was simple to return the virtual machines to a known good configuration after testing or development, and as the virtual machine configuration was stored in a distinct set of files, it was easy to share gold virtual machine images among users.

In 2001, VMware released both VMware GSX Server and ESX Server. GSX Server was similar to Workstation in that a host OS, either Linux or Windows, was required on the host prior to the installation of GSX Server. With GSX Server, users could create and manage virtual machines in the same manner as with Workstation, but the virtual machines were now hosted on a server rather than a user’s desktop. GSX Server would later be renamed VMware Server. VMware ESX Server was also released as a centralized solution to host virtual machines, but its architecture was significantly different from that of GSX Server. Rather than requiring a host OS, ESX was installed directly onto the server hardware, eliminating the performance overhead, potential security vulnerabilities, and increased management required for a general server OS such as Linux or Windows. The hypervisor of ESX, known as the VMkernel, was designed specifically to host virtual machines, eliminating significant overhead and potential security issues.

VMware ESX also introduced the VMware Virtual Machine File System (VMFS) partition format. The original version released with ESX 1.0 was a simple flat file system designed for optimal virtual machine operations. VMFS version 2 was released with ESX Server 2.0 and implemented clustering capabilities. The clustering capabilities added to VMFS allowed access to the same storage by multiple ESX hosts by implementing per-file locking. The capabilities of VMFS and features in ESX opened the door in 2003 for the release of VMware VirtualCenter Server (now known as vCenter Server). VirtualCenter Server provided centralized management for ESX hosts and included innovative features such as vMotion, which allowed for the migration of virtual machines between ESX hosts without interruption, and High Availability clusters.

In 2007, VMware publicly released its second-generation bare-metal hypervisor, VMware ESXi (ESX integrated) 3.5. VMware ESX 3 Server ESXi Edition was in production prior to this, but this release was never made public. ESXi 3.5 first appeared at VMworld in 2007, when it was distributed to attendees on a 1GB universal serial bus (USB) flash device. The project to design ESXi began around 2001 with a desire to remove the console operating system (COS) from ESX. This would reduce the attack surface of the hypervisor layer, make patching less frequent, and potentially decrease power requirements if ESXi could be run in an embedded form. ESXi was initially planned to be stored in the host’s read-only memory (ROM), but the design team found that this would not provide sufficient storage, so early versions were developed to boot via the Preboot Execution Environment (PXE). Concerns about the security of PXE led to a search for another solution, which was eventually determined to be the use of a flash device embedded within the host. VMware worked with original equipment manufacturer (OEM) vendors to provide servers with embedded flash, and such servers were used to demonstrate ESXi at VMworld 2007.

The release of VMware ESXi generated a lot of interest, especially due to the lack of the COS. For seasoned ESX administrators, the COS provided an important avenue for executing management scripts and troubleshooting commands. The COS also provided the mechanism for third-party applications such as backup and hardware monitoring to operate. These challenges provided some significant hurdles for administrators planning their migration from ESX to ESXi. VMware released the Remote Command-Line Interface (RCLI) to provide access to the commands that were available in the ESX COS, but there were gaps in functionality that made a migration from ESX to ESXi challenging.

With the release of vSphere 4.0 and, in 2010, of vSphere 4.1, VMware has made significant progress toward alleviating the management challenges caused by the removal of the COS. Improvements have been made in the RCLI (now known as the vSphere Command-Line Interface [vCLI]), and the release of PowerCLI, based on Windows PowerShell, has provided another scripting option. Third-party vendors have also updated applications to work with the vSphere application programming interface (API) that ESXi exposes for management purposes.

VMware has also stated that vSphere 4.1 is the last release that includes VMware ESX and its COS. For existing vSphere environments, this signals the inevitable migration from VMware ESX to ESXi. The purpose of this book is to facilitate your migration from ESX to ESXi. With ESXi, you have a product that supports the same great feature set you find with VMware ESX. This chapter discusses the similarity of features and highlights some of the differences in configuring and using ESXi due to its architecture. The chapters in this book review the aspects of installation, configuration, management, and security that are different with ESXi than they are when you manage your infrastructure with ESX.

In this chapter, you shall examine the following items:

Understanding the architecture of ESXi

Managing VMware ESXi

Comparing ESXi and ESX

Exploring what’s new in vSphere 4.1

The technology behind VMware ESXi represents VMware’s next-generation hypervisor, which will provide the foundation of VMware virtual infrastructure products for years to come. Although functionally equivalent to ESX, ESXi eliminates the Linux-based service console that is required for management of ESX. Removing the service console from the architecture results in a hypervisor without any general operating system dependencies, which improves reliability and security. The result is a footprint of less than 90MB, allowing ESXi to be embedded onto a host’s flash device and eliminating the need for a local boot disk.

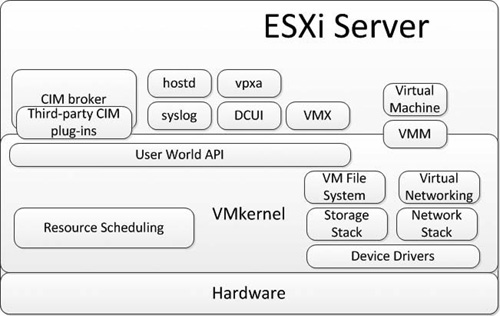

The heart of ESXi is the VMkernel shown in Figure 1.1. All other processes run on top of the VMkernel, which controls all access to the hardware in the ESXi host. The VMkernel is a POSIX-like OS developed by VMware and is similar to other OSs in that it uses process creation, file systems, and process threads. Unlike a general OS, the VMkernel is designed exclusively around running virtual machines, thus the hypervisor focuses on resource scheduling, device drivers, and input/output (I/O) stacks. Communication for management with the VMkernel is made via the vSphere API. Management can be accomplished using the vSphere client, vCenter Server, the COS replacement vCLI, or any other application that can communicate with the API.

Executing above the VMkernel are numerous processes that provide management access, hardware monitoring, as well as an execution compartment in which a virtual machine operates. These processes are known as “user world” processes, as they operate similarly to applications running on a general OS, except that they are designed to provide specific management functions for the hypervisor layer.

The virtual machine monitor (VMM) process is responsible for providing an execution environment in which the guest OS operates and interacts with the set of virtual hardware that is presented to it. Each VMM process has a corresponding helper process known as VMX and each virtual machine has one of each process.

The hostd process provides a programmatic interface to the VMkernel. It is used by the vSphere API and by the vSphere client when making a direct management connection to the host. The hostd process manages local users and groups and evaluates the privileges of users who are interacting with the host. The hostd process also functions as a reverse proxy for all communications to the ESXi host.

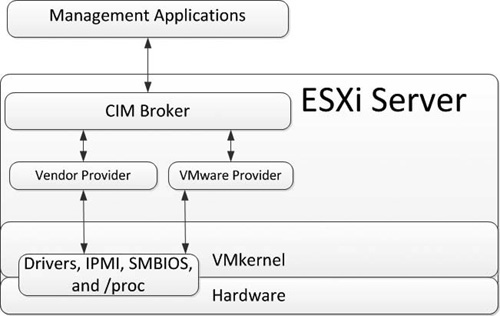

VMware ESXi relies on the Common Information Model (CIM) system for hardware monitoring and health status. The CIM broker provides a set of standard APIs that remote management applications can use to query the hardware status of the ESXi host. Third-party hardware vendors are able to develop their own hardware-specific CIM plug-ins to augment the hardware information that can be obtained from the host.

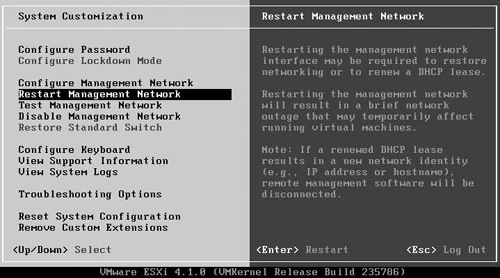

The Direct Console User Interface (DCUI) process provides a local management console for ESXi. The DCUI appears as a BIOS-like, menu-driven interface, as shown in Figure 1.2, for initial configuration and troubleshooting. To access the DCUI, a user must provide an administrative account such as root, but the privilege can be granted to other users, as discussed in Chapter 11, “Under the Hood with the ESXi Tech Support Mode.” Using the DCUI is discussed in Chapter 3, “Management Tools.”

The vpxa process is responsible for vCenter Server communications. This process runs under the security context of the vpxuser. Commands and queries from vCenter Server are received by this process before being forwarded to the hostd process for processing. The High Availability (HA) agent process is installed and executes when the ESXi host is joined to an HA cluster. The syslog daemon is responsible for forwarding logging data to a remote syslog receiver. The steps to configure the syslog daemon are discussed in Chapter 6, “System Monitoring and Management.” ESXi also includes processes for Network Time Protocol (NTP)–based time synchronization and for Internet Small Computer System Interface (iSCSI) target discovery.

To enable management communication, ESXi opens a limited number of network ports. As mentioned previously, all network communication with the management interfaces is proxied via the hostd process. All unrecognized network traffic is discarded and is thus not able to reach other system processes. The common ports include the following:

80. This port provides access to display only the static Welcome page. All other traffic is redirected to port 443.

443. This port acts as a reverse proxy to a number of services to allow for Secure Sockets Layer (SSL) encrypted communication. One of these services is the vSphere API, which provides communication for the vSphere client, vCenter Server, and vCLI.

902. Remote console communication between the vSphere client and ESXi host is made over this port.

5989. This port is open to allow communication with the CIM broker to obtain hardware health data for the ESXi host.
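The port list above can be turned into a quick reachability check from a management workstation. The following is a minimal sketch in Python using only the standard library; the function names and the port descriptions are illustrative assumptions rather than part of any VMware tool, and a successful TCP connection only shows that something is listening, not that the service behind it is healthy.

```python
import socket

# Ports taken from the list above; descriptions paraphrase the chapter text.
ESXI_MANAGEMENT_PORTS = {
    80: "static Welcome page; other traffic is redirected to 443",
    443: "SSL reverse proxy, including the vSphere API",
    902: "remote console traffic from the vSphere client",
    5989: "CIM broker (hardware health data)",
}


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


def probe_management_ports(host: str, timeout: float = 2.0) -> dict:
    """Map each listed management port to True/False for TCP reachability."""
    return {
        port: is_port_open(host, port, timeout)
        for port in ESXI_MANAGEMENT_PORTS
    }
```

Calling `probe_management_ports("esxi01.example.com")`, where the hostname is a placeholder, returns a dictionary keyed by port number that can be compared against the list above.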

Rather than relying on COS agents to provide management functionality, as is the case with ESX, ESXi exposes a set of APIs that enable you to manage your ESXi hosts. This agentless approach simplifies deployments and management upkeep. To fill the management gap left by the removal of the COS, VMware has provided two remote command-line options: the vCLI and PowerCLI. These provide a CLI and scripting capabilities in a more secure manner than accessing the console of a vSphere host. For last-resort troubleshooting, ESXi includes both a menu-driven interface with the DCUI and a command-line interface at the host console with Tech Support Mode.

ESXi can be deployed in the following two formats: Embedded and Installable. With ESXi Embedded, your server comes preloaded with ESXi on a flash device. You simply need to power on the host and configure it as appropriate for your environment. The DCUI can be used to set the IP configuration for the management interface, to set a hostname and DNS configuration, and to set a password for the root account. The host is then ready to join your virtual infrastructure for further configuration such as networking and storage. This configuration can be accomplished remotely with a configuration script or with features within vCenter Server such as Host Profiles or vNetwork Distributed Switches. With ESXi Embedded, a new host can be ready to begin hosting virtual machines within a very short time frame.

ESXi Installable is intended for installation on a host’s boot disk. New to ESXi 4.1 is support for Boot from storage area network (SAN), which provides the capability to function with diskless servers. ESXi 4.1 also introduces scripted installations for ESXi Installable. The ESXi installer can be started from either a CD or PXE source, and the installation file can be accessed via a number of protocols, including HyperText Transfer Protocol (HTTP), File Transfer Protocol (FTP), and Network File System (NFS). The installation file permits scripts to be run pre-install, post-install, and on first boot. This enables advanced configuration, such as the creation of the host’s virtual networking, to be performed as a completely automated function. Scripted installations are discussed further in Chapter 4, “Installation Options.”

For post-installation management, VMware provides a number of options, both graphical and scripted. The vSphere client can be used to manage an ESXi host directly or to manage a host via vCenter Server. To provide functionality that was previously available only in the COS, the vSphere client has been enhanced to allow configuration of such items as the following:

Time configuration. Your ESXi host can be set to synchronize time with an NTP server.

Datastore file management. You can browse your datastores and manage files, including moving files between datastores and copying files from and to your management computer.

Management of users. You can create users and groups to be used to assign privileges directly to your ESXi host.

Exporting of diagnostic data. The client option exports all system logs from ESXi for further analysis.

For scripting and command-line–based configuration, VMware provides the following two management options: the vCLI and PowerCLI. The vCLI was developed as a replacement for the esxcfg commands found in the service console of ESX. The commands use the same syntax, with additional options for authentication and for specifying the host to run the commands against. The vCLI is available for both Linux and Windows, as well as in a virtual appliance format known as the vSphere Management Assistant (vMA). The vCLI includes commands such as vmkfstools, vmware-cmd, and resxtop, which is the vCLI equivalent of esxtop. PowerCLI extends Microsoft PowerShell to allow for the management of vCenter Server objects such as hosts and virtual machines. PowerShell is an object-oriented scripting language designed to replace the traditional Windows command prompt and Windows Scripting Host. With relatively simple PowerCLI scripts, it is possible to run complex tasks on any number of ESXi hosts or virtual machines. These scripting options are discussed further in Chapter 8, “Scripting and Automation with the vCLI,” and Chapter 9, “Scripting and Automation with PowerCLI.”
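To illustrate the point that a vCLI command keeps the COS syntax and only adds connection options, the sketch below builds the argument list for a remote invocation from a command as it would be typed in the service console. It is a hedged example in Python: the helper name `to_vcli_command` and the sample hostname are assumptions, and `esxcfg-nics -l` is used purely as an illustrative command. The password is deliberately left out of the argument list so that it is not embedded in scripts.

```python
from typing import List, Optional


def to_vcli_command(
    cos_command: List[str],
    server: str,
    username: Optional[str] = None,
) -> List[str]:
    """Append vCLI connection options to a command written for the COS.

    The original command and its options are left untouched; only the
    --server and (optionally) --username options are added.
    """
    if not cos_command:
        raise ValueError("cos_command must contain at least the command name")
    argv = list(cos_command)          # copy so the caller's list is not modified
    argv += ["--server", server]      # host to run the command against
    if username is not None:
        argv += ["--username", username]
    return argv


# Example: the COS command "esxcfg-nics -l" pointed at a placeholder host.
example = to_vcli_command(["esxcfg-nics", "-l"], "esxi01.example.com", "root")
```

The resulting list can be passed to a process launcher such as Python's `subprocess.run` on a workstation where the vCLI is installed.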

If you want to enforce central, audited access to your ESXi hosts through vCenter Server, ESXi includes Lockdown Mode. This can be used to disable all access via the vSphere API except for vpxuser, which is the account used by vCenter Server to communicate with your ESXi host. This security feature ensures that the critical root account is not used for direct ESXi host configuration. Lockdown Mode affects connections made with the vSphere client and any other application using the API, such as the vCLI. Other options for securing your ESXi hosts are discussed in Chapter 7, “Securing ESXi.”
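The access rule that Lockdown Mode imposes on the vSphere API can be summarized as a small decision function. This is a conceptual sketch in Python, not VMware code: the function name is hypothetical, and the model ignores the normal permission checks that still apply when Lockdown Mode is off.

```python
# Account used by vCenter Server to communicate with the host (from the text).
VCENTER_SERVICE_ACCOUNT = "vpxuser"


def api_access_allowed(user: str, lockdown_enabled: bool) -> bool:
    """Model whether a direct vSphere API connection is accepted.

    Simplification: with Lockdown Mode off, this returns True for every
    user, although a real host would still evaluate that user's privileges.
    """
    if not lockdown_enabled:
        return True
    # With Lockdown Mode on, only the vCenter Server account gets through.
    return user == VCENTER_SERVICE_ACCOUNT
```

Under this model, a direct connection as root is refused once Lockdown Mode is enabled, while requests relayed by vCenter Server as vpxuser continue to work.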

For third-party systems management and backup products that have relied on a COS agent, VMware has been working with its partners to ensure that these products are compatible with the vSphere API and thus compatible with ESXi. The API integration model significantly reduces management overhead by eliminating the need to install and maintain software agents on your vSphere host.

The Common Information Model is an open standard that provides monitoring of the hardware resources in ESXi without the dependence on COS agents. The CIM implementation in ESXi consists of a CIM object manager (the CIM broker) and a number of CIM providers, as shown in Figure 1.3. The CIM providers are developed by VMware and hardware partners and function to provide management and monitoring access to the device drivers and hardware in the ESXi host. The CIM broker collects all the information provided by the various CIM providers and makes this information available to management applications via a standard API.

Due to the firmware-like architecture of ESXi, keeping your systems up to date with patches and upgrades is far simpler than with ESX. With ESXi, you no longer need to review a number of patches and decide which is applicable to your ESX host; now each patch is a complete system image and contains all previously released bug fixes and enhancements. ESXi hosts can be patched with vCenter Update Manager or the vCLI. As the ESXi system partitions contain both the new system image and the previously installed system image, it is a very simple and quick process to revert to the prepatched system image.
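The patching behavior described above, where each patch is a complete image and the previous image is kept for rollback, can be modeled with two slots. The following Python sketch is a conceptual illustration only: the class name, method names, and build labels are hypothetical and do not correspond to ESXi partition names or tools.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SystemImages:
    """Two-slot model: the running image plus the one it replaced."""
    active: str
    previous: Optional[str] = None

    def apply_patch(self, new_image: str) -> None:
        """Install a complete replacement image, keeping the old one."""
        self.previous = self.active
        self.active = new_image

    def revert(self) -> None:
        """Return to the image that was active before the last patch."""
        if self.previous is None:
            raise RuntimeError("no previous system image to revert to")
        self.active = self.previous
        self.previous = None  # simplification: the patched image is discarded


# Example with hypothetical build labels.
host = SystemImages(active="build-A")
host.apply_patch("build-B")   # active is now build-B, build-A is retained
host.revert()                 # active is build-A again
```

Because each patch replaces the whole image, there is no per-patch dependency tracking in the model, which mirrors why the text describes reverting as a simple and quick process.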

Discussions of ESXi and ESX most often focus on the differences in architecture and management due to the removal of the COS. The availability of ESXi as a free product also leads some to believe that ESXi may be inferior or not as feature-rich as ESX. As discussed in the previous sections, the architecture of ESXi is superior and represents the future of VMware’s hypervisor design. The following section explores the features of vSphere 4.1 that are available and identical with both ESXi and ESX.

The main feature set for vSphere 4.1 is summarized in Table 1.1. Items listed in this table are available in both ESXi and ESX. The vSphere Hypervisor product is the free offering of ESXi. This edition can be run only as a standalone host, and the API for this edition limits scripts to read-only functions. With the other license editions, you have the option of running ESXi or ESX. This allows you to run a mixed environment if you plan to make a gradual migration to ESXi. The Essentials and Essentials Plus license editions are available in license kits that include vCenter Server Foundation; they are limited to three physical hosts.

Table 1.1. vSphere Feature List

| | vSphere Hypervisor | Essentials | Essentials Plus | Standard | Advanced | Enterprise | Enterprise Plus |
|---|---|---|---|---|---|---|---|
| Host Capabilities | | | | | | | |
| Memory per Host | 256GB | 256GB | 256GB | 256GB | 256GB | 256GB | Unlimited |
| Cores per Processor | 6 | 6 | 6 | 6 | 12 | 6 | 12 |
| vCenter Agent License | Not Included | X | X | X | X | X | X |
| Product Features | | | | | | | |
| Thin Provisioning | X | X | X | X | X | X | X |
| Update Manager | | X | X | X | X | X | X |
| vStorage APIs for Data Protection | | X | X | X | X | X | X |
| Data Recovery | | | X | Sold separately | X | X | X |
| High Availability | | | X | X | X | X | X |
| vMotion | | | X | X | X | X | X |
| Virtual Serial Port Concentrator | | | | | X | X | X |
| Hot Add Memory or CPU | | | | | X | X | X |
| vShield Zones | | | | | X | X | X |
| Fault Tolerance | | | | | X | X | X |
| vStorage APIs for Array Integration | | | | | | X | X |
| vStorage APIs for Multipathing | | | | | | X | X |
| Storage vMotion | | | | | | X | X |
| Distributed Resource Scheduler | | | | | | X | X |
| Distributed Power Management | | | | | | X | X |
| Storage I/O Control | | | | | | | X |
| Network I/O Control | | | | | | | X |
| Distributed Switch | | | | | | | X |
| Host Profiles | | | | | | | X |

Beginning with host capabilities, both ESXi and ESX support up to 256GB of host memory for most licensed editions and an unlimited amount of memory when either is licensed at the Enterprise Plus level. Both products support either 6 or 12 cores per physical processor, depending on the license edition that you choose. As support for ESXi has increased, hardware vendors have improved certification testing for ESXi, and you’ll find that support for ESXi and ESX is nearly identical. With the exception of the free vSphere Hypervisor offering, all license editions include a vCenter Server Agent license. The process of adding a host to or removing a host from vCenter Server is identical between ESXi and ESX, as is the process of assigning licenses to specific hosts in your datacenter.
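The host capability limits can be expressed as a small lookup to see which editions cover a given server. The sketch below is in Python and takes its memory and core values from the Memory per Host and Cores per Processor rows of Table 1.1; the type and function names are illustrative assumptions, and the check covers only these two limits, not feature availability or pricing.

```python
from typing import List, NamedTuple, Optional


class EditionLimits(NamedTuple):
    max_memory_gb: Optional[int]   # None means unlimited
    max_cores_per_cpu: int


# Values from the "Memory per Host" and "Cores per Processor" rows of Table 1.1.
VSPHERE_41_LIMITS = {
    "vSphere Hypervisor": EditionLimits(256, 6),
    "Essentials": EditionLimits(256, 6),
    "Essentials Plus": EditionLimits(256, 6),
    "Standard": EditionLimits(256, 6),
    "Advanced": EditionLimits(256, 12),
    "Enterprise": EditionLimits(256, 6),
    "Enterprise Plus": EditionLimits(None, 12),
}


def editions_covering(memory_gb: int, cores_per_cpu: int) -> List[str]:
    """Return the editions whose listed limits cover the given host hardware."""
    matches = []
    for name, limits in VSPHERE_41_LIMITS.items():
        memory_ok = limits.max_memory_gb is None or memory_gb <= limits.max_memory_gb
        cores_ok = cores_per_cpu <= limits.max_cores_per_cpu
        if memory_ok and cores_ok:
            matches.append(name)
    return matches
```

For example, a host with 512GB of memory and 12-core processors is covered only by Enterprise Plus, while a 128GB host with 12-core processors is covered by Advanced and Enterprise Plus.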

Tip

If you plan to install ESXi with hardware components such as storage controllers or network cards that are not on VMware’s Hardware Compatibility List (HCL), you should check with the vendor for specific installation instructions. ESXi does not enable you to add device drivers manually during the installation process as you can with ESX.

The following are some of the common features worth mentioning. When you are configuring these features with the vSphere client, in almost all cases you won’t see any distinctions between working with ESXi and ESX. Thin Provisioning is a feature designed to provide a higher level of storage utilization. Prior to vSphere, when a virtual machine was created the entire space for the virtual disk was allocated on your storage datastore. This could lead to a waste of space when the virtual machines did not use the storage allocated. With Thin Provisioning, storage used by virtual disks is dynamically allocated, allowing for the overallocation of storage to achieve higher utilization. Improvements in vCenter Server alerts allow for the monitoring of datastore usage to ensure that datastores retain sufficient free space for snapshot and other management files. vSphere also introduced the ability to grow datastores dynamically. With ESXi and ESX, if a datastore is running low on space, you no longer have to rely on using extents or migrating virtual machines to another datastore. Rather, the array storing the Virtual Machine File System (VMFS) datastore can be expanded using the management software for your storage system and then the datastore can be extended using the vSphere client or the vCLI.
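The effect of Thin Provisioning on a datastore comes down to simple arithmetic: provisioned space can exceed capacity as long as the space actually used does not. The following Python sketch works through that calculation; the function name and the sample figures are made up for illustration and are not taken from the text.

```python
from typing import Dict, Iterable, Tuple


def thin_datastore_summary(
    capacity_gb: float,
    disks: Iterable[Tuple[float, float]],
) -> Dict[str, float]:
    """Summarize a datastore holding thin-provisioned virtual disks.

    Each disk is a (provisioned_gb, used_gb) pair. An overallocation ratio
    above 1.0 means more space has been promised than physically exists.
    """
    if capacity_gb <= 0:
        raise ValueError("capacity_gb must be positive")
    disk_list = list(disks)
    provisioned = sum(p for p, _ in disk_list)
    used = sum(u for _, u in disk_list)
    return {
        "provisioned_gb": provisioned,
        "used_gb": used,
        "free_gb": capacity_gb - used,
        "overallocation_ratio": provisioned / capacity_gb,
    }


# Illustrative figures: fifteen 100GB thin disks, each using 40GB, on 1000GB.
summary = thin_datastore_summary(1000, [(100, 40)] * 15)
```

In this example 1500GB has been provisioned against 1000GB of capacity, a ratio of 1.5, while 600GB is in use and 400GB remains free. The free figure is the one that datastore usage alerts need to watch, since it shrinks as thin disks grow.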

Update Manager is a feature to simplify the management of patches for ESXi, ESX, and virtual machines within your infrastructure. Use of this feature is covered in Chapter 10, “Patching and Updating ESXi.” While the patching processes for ESXi and ESX are significantly different, Update Manager provides a unified view to keeping both flavors of vSphere up to date.

vMotion, High Availability (HA), Distributed Resource Scheduler (DRS), Storage vMotion, and Fault Tolerance (FT) are some of the features included with vSphere to ensure a high level of availability for your virtual machines. Configuration of HA and DRS clusters is the same regardless of whether you choose ESXi or ESX, and you can run mixed clusters to allow for a gradual migration to ESXi from ESX.

The VMware vNetwork Distributed Switch (dvSwitch) provides centralized configuration of networking for hosts within your vCenter Server datacenter. Rather than configuring networking on a host-by-host level, you can centralize configuration and monitoring of your virtual networking to eliminate the risk of a configuration mistake at the host level, which could lead to downtime or a security compromise of a virtual machine.

The last feature this section highlights is Host Profiles. This feature is discussed in subsequent chapters. Host Profiles are used to standardize and simplify how you manage your vSphere host configurations. With Host Profiles, you capture a policy that contains the configuration of networking, storage, security settings, and other features from a properly configured host. That policy can then be applied to other hosts to check configuration compliance and, if necessary, a noncompliant host can be updated with the policy so that all your hosts maintain a proper configuration. This is one of the features that, although available with both ESXi and ESX, reflects the architectural differences between the two products. A Host Profile that you create for your ESX host may include settings for the COS. Such settings do not apply to ESXi. Likewise, with ESXi, you can configure the service settings for the DCUI, but these settings are not applicable to ESX.
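The capture, check, and apply cycle of Host Profiles can be sketched with plain dictionaries. This Python example is a conceptual model under stated assumptions: the function names and the setting keys (`ntp_server`, `ssh_enabled`, `hostname`) are hypothetical and do not reflect the actual Host Profiles data model or the vSphere API.

```python
import copy
from typing import Any, Dict, Tuple

Config = Dict[str, Any]


def capture_profile(reference_host: Config) -> Config:
    """Capture a policy from a properly configured reference host."""
    return copy.deepcopy(reference_host)


def compliance_failures(profile: Config, host: Config) -> Dict[str, Tuple[Any, Any]]:
    """Return {setting: (expected, actual)} for every setting that differs."""
    return {
        key: (expected, host.get(key))
        for key, expected in profile.items()
        if host.get(key) != expected
    }


def remediate(profile: Config, host: Config) -> Config:
    """Return a copy of the host configuration with the policy applied.

    Settings that the profile does not mention are left as they were.
    """
    updated = copy.deepcopy(host)
    updated.update(copy.deepcopy(profile))
    return updated


# Hypothetical settings for illustration only.
profile = capture_profile({"ntp_server": "ntp.example.com", "ssh_enabled": False})
drifted = {"ntp_server": "ntp.example.com", "ssh_enabled": True, "hostname": "esxi02"}
failures = compliance_failures(profile, drifted)
fixed = remediate(profile, drifted)
```

Here `failures` reports the single drifted setting, and `fixed` passes the compliance check while keeping its host-specific `hostname` value.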

When ESXi was first released, VMware documented a comparison between the two hypervisors that highlighted some of the differences between the products. New Knowledge Base (KB) articles were published as subsequent versions of ESXi were released. The following list documents the Knowledge Base articles for each release:

ESXi 3.5: http://kb.vmware.com/kb/1006543

ESXi 4.0: http://kb.vmware.com/kb/1015000

ESXi 4.1: http://kb.vmware.com/kb/1023990

These KB articles make worthwhile reading, as they highlight the work that VMware has done to bring management parity to VMware ESXi. The significant differences are summarized in Table 1.2.

Table 1.2. ESXi and ESX Differences

| Capability | ESX 3.5 | ESX 4.0 | ESX 4.1 | ESXi 3.5 | ESXi 4.0 | ESXi 4.1 |
|---|---|---|---|---|---|---|
| Service Console (COS) | Present | Present | Present | Removed | Removed | Removed |
| Command-Line Interface | COS | COS + vCLI | COS + vCLI | RCLI | PowerCLI + vCLI | PowerCLI + vCLI |
| Advanced Troubleshooting | COS | COS | COS | Tech Support Mode | Tech Support Mode | Tech Support Mode |
| Scripted Installations | X | X | X | | | X |
| Boot from SAN | X | X | X | | | X |
| SNMP | X | X | X | Limited | Limited | Limited |
| Active Directory Integration | 3rd Party in COS | 3rd Party in COS | X | | | X |
| Hardware Monitoring | 3rd Party COS Agents | 3rd Party COS Agents | 3rd Party COS Agents | CIM Providers | CIM Providers | CIM Providers |
| Web Access | X | X | | | | |
| Host Serial Port Connectivity | X | X | X | | | X |
| Jumbo Frames | X | X | X | | X | X |

The significant architectural difference between ESX and ESXi is the removal of the Linux COS. This change has an impact on a number of related aspects, including installation, CLI configuration, hardware monitoring, and advanced troubleshooting. With ESX, the COS is a Linux environment that provides privileged access to the ESX VMkernel. Within the COS, you can manage your host by executing commands and scripts, adding device drivers, and installing Linux-based management agents. As seen previously in this chapter, ESXi was designed to make a server a virtualization appliance. Thus, ESXi behaves more like a firmware-based device than a traditional OS. ESXi includes the vSphere API, which is used to access the VMkernel by management or monitoring applications.

CLI configuration for ESX is accomplished via the COS. Common tasks involve items such as managing users, managing virtual machines, and configuring networking. With ESXi 3.5, the RCLI was provided as an installation package for Linux and Windows, as well as in the form of a virtual management appliance. Some COS commands such as esxcfg-info, esxcfg-resgrp, and esxcfg-swiscsi were not available in the initial RCLI, making a wholesale migration to ESXi difficult for diehard COS users. Subsequent releases of the vCLI have closed those gaps, and VMware introduced PowerCLI, which provides another scripting option for managing ESXi. The COS on ESX has also provided a valuable troubleshooting avenue that allows administrators to issue commands to diagnose and report support issues. With the removal of the COS, ESXi offers several alternatives for this type of access. First, the DCUI enables the user to repair or reset the system configuration as well as to restart management agents and to view system logs. Second, the vCLI provides a number of commands, such as vmware-cmd and resxtop, which can be used to remotely diagnose issues. The vCLI is explored further in Chapter 8, and relevant examples are posted throughout the other chapters in this book. Last, ESXi provides Tech Support Mode (TSM), which allows low-level access to the VMkernel so that you can run diagnostic commands. TSM can be accessed at the console of ESXi or remotely via Secure Shell (SSH). TSM is not intended for production use, but it provides an environment similar to the COS for advanced troubleshooting and configuration.

Two gaps between ESXi and ESX when ESXi was first released were scripted installs and Boot from SAN. ESX supports KickStart, which can be used to fully automate installations. As you will see in subsequent chapters, ESXi is extremely easy and fast to install, but it was initially released without the ability to automate installations, making deployment in large environments more tedious. While the vCLI could be used to provide post-installation configuration, there was not an automated method to deploy ESXi until support for scriptable installations was added in ESXi 4.1. With ESXi 4.1, scripted installations are supported using a mechanism similar to KickStart, including the ability to run pre- and post-installation scripts. VMware ESX also supports Boot from SAN. With this model, a dedicated logical unit number (LUN) must be configured for each host. With the capability to run as an embedded hypervisor, prior versions of ESXi were able to operate in a similar manner without the need for local storage. With the release of ESXi 4.1, Boot from SAN is now supported as an option for ESXi Installable.

ESX supports Simple Network Management Protocol (SNMP) for both get and trap operations. SNMP is further discussed in Chapter 6. Configuration of SNMP on ESX is accomplished in the COS and it is possible to add additional SNMP agents within the COS to provide hardware monitoring. ESXi offers only limited SNMP support. Only SNMP trap operations are supported and it is not possible to install additional SNMP agents.

It has always been possible to integrate ESX with Active Directory (AD) through the use of third-party agents, allowing administrators to log in directly to ESX with an AD account and eliminating the need to use the root account for administrative tasks. Configuration of this feature was accomplished within the COS. With vSphere 4.1, both editions now support AD integration and configuration can be accomplished via the vSphere client, Host Profiles, or with the vCLI. This is demonstrated in Chapter 6.

Hardware monitoring of ESX has been accomplished via agent software installed within the COS. Monitoring software can communicate directly with the agents to ascertain hardware health and other hardware statistics. This is not an option with the firmware model employed with ESXi, and as discussed earlier, hardware health is provided by standards-based CIM providers. VMware partners are able to develop their own proprietary CIM providers to augment the basic health information that is reported by the ESXi standard providers.

The initial version of ESX was configured and managed via a Web-based interface with only a simple Windows application required on a management computer to access the console of a virtual machine. This feature was available in later versions of ESX, and via Web browser plug-ins, it was possible to provide a basic management interface to ESX without the need for a client installation on the management computer. Due to the lean nature of the ESXi system image, this option is not available. It is possible to provide this functionality to ESXi hosts that are managed with vCenter Server.

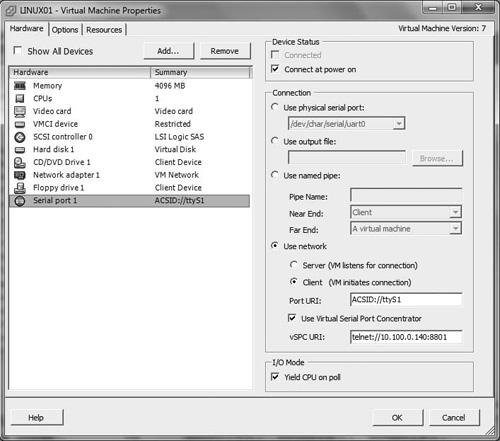

Via the COS, ESX has supported connecting a host’s serial ports to a virtual machine. This capability provided the option to virtualize servers that required physical connectivity to a serial port–based device connected to the host. This option was not available with ESXi until the release of ESXi 4.1. When configuring a serial port on a virtual machine, you can select from the options Use Physical Serial Port on the Host, Output to File, Connect to Named Pipe, and Connect via Network. The Connect via Network option refers to the Virtual Serial Port Concentrator feature that is discussed in the “What’s New with ESXi 4.1” section. If you require connectivity to serial port–based devices for your virtual machines and the ability to migrate virtual machines, you should investigate using a serial-over-IP device. With such a device, a virtual machine is no longer tethered to a specific ESXi host and can be migrated with vMotion between hosts, as connectivity between the serial device and the virtual machine occurs over the network.

The last item mentioned in Table 1.2 is support for jumbo frames. The initial release of ESXi supported jumbo frames only within virtual machines and not for VMkernel traffic. Other minor network features, such as support for NetQueue and the Cisco Discovery Protocol, were also not available. These gaps in functionality were closed with ESXi 4.0.

As you have seen in the preceding section, in terms of function, there is no difference between ESXi and ESX, and the great features such as vMotion and HA that you’ve used function the same as you migrate to ESXi. The removal of the COS could pose a significant challenge in your migration. Over the last few releases of ESXi, VMware has made significant progress to provide tools that replicate the tasks that you have performed with the COS. If you have made heavy use of the COS, you should carefully plan how those scripts will be executed with ESXi. Subsequent chapters look more closely at using the vCLI and PowerCLI to perform some of the tasks that you have performed in the COS. You should also review any COS agents and third-party tools that you may utilize to ensure that you have a supported equivalent with ESXi. Lastly, because of the removal of the COS, ESXi does not have a Service Console port. Rather, the functionality provided to ESX through the Service Console port is handled by the VMkernel port in ESXi.

Each new release of VMware’s virtual infrastructure suite has included innovative new features and improvements to make management of your infrastructure easier. The release of vSphere 4.1 is no different and includes over 150 improvements and new features. These range from improvements to vCenter Server and ESXi to new virtual machine capabilities. Comprehensive documentation can be found at http://www.vmware.com/products/vsphere/mid-size-and-enterprise-business/resources.html.

One significant change is that vCenter Server ships only as a 64-bit version. This reflects a common migration of enterprise applications to 64-bit only. This change also removes the performance limitations of running on a 32-bit OS. Along with other performance and scalability enhancements, this change allows vCenter to respond more quickly, perform more concurrent tasks, and manage more virtual machines per datacenter. Concurrent vMotion operations per 1 Gigabit Ethernet (GbE) link have been increased to 4 and up to 8 for 10GbE links. If your existing vCenter installation is on a 32-bit server and you want to update your deployment to vCenter 4.1, you have to install vCenter 4.1 on a new 64-bit server and migrate the existing vCenter database. This process is documented in Chapter 5, “Migrating from ESX.” For existing vCenter 4.0 installations running on a 64-bit OS, an in-place upgrade may be performed.

vSphere 4.1 now includes integration with AD to allow seamless authentication when connecting directly to VMware ESXi. vCenter Server has always provided integration with AD, but now with AD integration you no longer have to maintain local user accounts on your ESXi host or use the root account for direct host configuration. AD integration is enabled on the Authentication Services screen as shown in Figure 1.4. Once your ESXi host has been joined to your domain, you may assign privileges to users or groups that are applied when a user connects directly to ESXi using the vSphere client, vCLI, or other application that communicates with ESXi via the vSphere API.

A number of enhancements have been added to Host Profiles since the feature was introduced in vSphere 4.0. These include the following additional configuration settings:

With support for configuration of the root password, users can easily update this account password on the vSphere 4.1 hosts in their environment.

User privileges that you can configure from the vSphere client on a host can now be configured through Host Profiles.

Configuration of physical network interface cards (NICs) can now be accomplished using the device’s Peripheral Component Interconnect (PCI) ID. This aids in network configuration in your environment if you employ separate physical NICs for different types of traffic such as management, storage, or virtual machine traffic.

Host Profiles can be used to configure AD integration. When the profile is applied to a new host, you only have to supply credentials with the appropriate rights to join a computer to the domain.

A number of new features and enhancements have been made that impact virtual machine operation. Memory overhead has been reduced, especially for large virtual machines running on systems that provide hardware memory management unit (MMU) support. Memory Compression provides a new layer to enhance memory overcommit technology. This layer exists between the use of ballooning and disk swapping and is discussed further in Chapter 6.

It is now possible to pass through USB devices connected to a host into a virtual machine. This could include devices such as security dongles and mass storage devices. When a USB device is connected to an ESXi host, that device is made available to virtual machines running on that host. The USB Arbitrator host component manages USB connection requests and routes USB device traffic to the appropriate virtual machine. A USB device can be used in only a single virtual machine at a time. Certain features such as Fault Tolerance and Distributed Power Management are not compatible with virtual machines using USB device passthrough, but a virtual machine can be migrated using vMotion and the USB connection will persist after the migration. After a virtual machine with a USB device is migrated using vMotion, the USB devices remain connected to the original host and continue to function until the virtual machine is suspended or powered down. At that point, the virtual machine would need to be migrated back to the original host to reconnect to the USB device.

Some environments use serial port console connections to manage physical hosts, as these connections provide a low-bandwidth option to connect to servers. vSphere 4.1 offers the Virtual Serial Port Concentrator (vSPC) to enable this management option for virtual machines. The vSPC feature allows redirection of a virtual machine’s serial ports over the network using telnet or SSH. With the use of third-party virtual serial port concentrators, virtual machines can be managed in the same convenient and secure manner as physical hosts. The vSPC settings are enabled on a virtual machine as shown in Figure 1.5.

vSphere 4.1 includes a number of storage-related enhancements to improve performance, monitoring, and troubleshooting. ESXi 4.1 supports Boot from SAN for iSCSI, Fibre Channel, and Fibre Channel over Ethernet (FCoE). Boot from SAN provides a number of benefits, including cheaper servers, which can be denser and require less cooling; easier host replacement, as there is no local storage; and centralized storage management. ESXi Boot from iSCSI SAN is supported on network adapters capable of using the iSCSI Boot Firmware Table (iBFT) format. Consult the HCL at http://www.vmware.com/go/hcl for a list of adapters that are supported for booting ESXi from an iSCSI SAN. vSphere 4.1 also adds support for 8Gb Fibre Channel Host Bus Adapters (HBAs). With 8Gb HBAs, throughput to Fibre Channel SANs is effectively doubled compared with 4Gb HBAs. For improved iSCSI performance, ESXi enables 10Gb iSCSI hardware offloads (Broadcom 57711) and 1Gb iSCSI hardware offloads (Broadcom 5709). Broadcom iSCSI offload technology enables on-chip processing of iSCSI traffic, freeing up host central processing unit (CPU) resources for virtual machine usage.

Storage I/O Control enables storage prioritization across a cluster of ESXi hosts that access the same datastore. This feature extends the familiar concept of shares and limits that is available for the CPU and memory on a host. Configuration of shares and limits is handled on a per-virtual-machine basis, but Storage I/O Control enforces storage access by evaluating the total share allocation for all virtual machines regardless of the host that the virtual machine is running on. This ensures that low-priority virtual machines running on one host do not have the equivalent I/O slots that are being allocated to high-priority virtual machines on another host. Should Storage I/O Control detect that the average I/O latency for a datastore has exceeded a configured threshold, it begins to allocate I/O slots according to the shares allocated to the virtual machines that access the datastore. Configuration of Storage I/O Control is discussed further in Chapter 3.
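The share-based behavior described above can be illustrated with a small sketch. This is not VMware's scheduler; it is a minimal model that assumes a fixed pool of I/O slots per datastore and divides it in proportion to shares once a latency threshold is exceeded. The function names, host and virtual machine names, slot count, and threshold values are all hypothetical.

```python
from typing import Dict, Optional, Tuple

# A virtual machine is identified by (host, vm) to stress that shares are
# evaluated across the whole cluster, not per host. All names are hypothetical.
VmKey = Tuple[str, str]


def allocate_io_slots(vm_shares: Dict[VmKey, int], total_slots: int) -> Dict[VmKey, float]:
    """Divide a datastore's I/O slots in proportion to each VM's shares."""
    total_shares = sum(vm_shares.values())
    return {vm: total_slots * shares / total_shares for vm, shares in vm_shares.items()}


def slots_under_latency(
    vm_shares: Dict[VmKey, int],
    total_slots: int,
    observed_latency_ms: float,
    threshold_ms: float,
) -> Optional[Dict[VmKey, float]]:
    """Return a share-based allocation only when latency exceeds the threshold.

    Returns None when the datastore is below the threshold, meaning that
    no share-based throttling is applied in this simplified model.
    """
    if observed_latency_ms <= threshold_ms:
        return None
    return allocate_io_slots(vm_shares, total_slots)


# Hypothetical cluster: a high-priority VM on one host, two others on another.
shares = {
    ("esxi01", "db"): 2000,
    ("esxi02", "web"): 1000,
    ("esxi02", "test"): 500,
}
allocation = slots_under_latency(shares, total_slots=70,
                                 observed_latency_ms=45.0, threshold_ms=30.0)
```

In this model the low-priority virtual machine on esxi02 receives a quarter of the slots given to the high-priority virtual machine on esxi01, even though the two run on different hosts.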

The vStorage API for Array Integration (VAAI) is a new API available for storage partners to use as a means of offloading specific storage functions in order to improve performance. With the 4.1 release of vSphere, the VAAI offload capability supports the following three capabilities:

Full copy. This enables the array to make full copies of data within the array without requiring the ESXi host to read or write the data.

Block zeroing. The storage array handles zeroing out blocks during the provisioning of virtual machines.

Hardware-assisted locking. This provides an alternative to small computer systems interface (SCSI) reservations as a means to protect VMFS metadata.

The full-copy aspect of VAAI provides significant performance benefits when deploying new virtual machines, especially in a virtual desktop environment where hundreds of new virtual machines may be deployed in a short period. Without the full copy option, the ESXi host is responsible for the read-and-write operations required to deploy a new virtual machine. With full copy, these operations are offloaded to the array, which significantly reduces the time required as well as reducing CPU and storage network load on the ESXi host. Full copy can also reduce the time required to perform a Storage vMotion operation, as the copy of the virtual disk data is handled by the array on VAAI-capable hardware and does not need to pass to and from the ESXi host.

Block zeroing also improves the performance of allocating new virtual disks, as the array is able to report to ESXi that the process is complete immediately while in reality it is being completed as a background process. Without VAAI, the ESXi host must wait until the array has completed the zeroing process to complete the task of creating a virtual disk, which can be time-consuming for large virtual disks.

The third enhancement for VAAI is hardware-assisted locking. This provides a more granular option to protect VMFS metadata than SCSI reservations. Hardware-assisted locking uses a storage array’s atomic test-and-set capability to enable a fine-grained block-level locking mechanism. Any VMFS operation that allocates space, such as starting or creating a virtual machine, has in the past required a SCSI reservation to ensure the integrity of the VMFS metadata on datastores shared by many ESXi hosts. Hardware-assisted locking provides a more efficient way to protect the metadata.
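To make the idea of an atomic test-and-set concrete, the following sketch simulates block-level locking in plain Python. It does not reflect how VMFS or any array implements the feature; the `SimulatedArray` class, the block numbers, and the host names are hypothetical, and a `threading.Lock` merely stands in for the atomicity that the array hardware would provide.

```python
import threading
from typing import Dict, Optional


class SimulatedArray:
    """Stand-in for a storage array that offers an atomic test-and-set primitive."""

    def __init__(self) -> None:
        self._owners: Dict[int, Optional[str]] = {}
        self._mutex = threading.Lock()  # models atomicity inside the array

    def atomic_test_and_set(self, block: int, expected: Optional[str],
                            new: Optional[str]) -> bool:
        """Write `new` to `block` only if it currently holds `expected`."""
        with self._mutex:
            if self._owners.get(block) == expected:
                self._owners[block] = new
                return True
            return False


def try_lock(array: SimulatedArray, block: int, host: str) -> bool:
    """Claim a single metadata block; succeeds only if the block is unowned."""
    return array.atomic_test_and_set(block, None, host)


def unlock(array: SimulatedArray, block: int, host: str) -> bool:
    """Release a block; succeeds only if `host` is the current owner."""
    return array.atomic_test_and_set(block, host, None)


array = SimulatedArray()
first = try_lock(array, 7, "esxi01")    # esxi01 claims block 7
second = try_lock(array, 7, "esxi02")   # esxi02 is refused on block 7
other = try_lock(array, 8, "esxi02")    # but can lock block 8 at the same time
```

The point of the sketch is the granularity: a host that holds block 7 does not prevent another host from working on block 8, whereas a SCSI reservation locks out other hosts from the entire LUN for the duration of the operation.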

You can consult the vSphere HCL to see whether your storage array supports any of these VAAI features. It is likely that your array would require a firmware update to enable support. You would also have to enable one of the advanced settings shown in Table 1.3, as these features are not enabled by default.

Table 1.3. Advanced Settings to Enable VAAI

| VAAI Feature | Advanced Configuration Setting |
|---|---|
| Full Copy | DataMover.HardwareAcceleratedMove |
| Block Zeroing | DataMover.HardwareAcceleratedInit |
| Hardware-Assisted Locking | VMFS3.HardwareAcceleratedLocking |

The storage enhancements in vSphere 4.1 also include new performance metrics to expand troubleshooting and monitoring capabilities for both the vSphere client and the vCLI command resxtop. These include new metrics for NFS devices to close the gap in metrics that existed between NFS storage and block-based storage. Additional throughput and latency statistics are available for viewing all datastore activity from an ESXi host, as well as for a specific storage adapter and path. At the virtual machine level, it is also possible to view throughput and latency statistics for virtual disks or for the datastores used by the virtual machine.

vSphere 4.1 also includes a number of innovative networking features. ESXi now supports Internet Protocol Security (IPSec) for communication coming from and arriving at an ESXi host for IPv6 traffic. When you configure IPSec on your ESXi host, you are able to authenticate and encrypt incoming and outgoing packets according to the security associations and policies that you configure. Configuration of IPSec is discussed in Chapter 7. With ESXi 4.1, IPSec is supported for the following traffic types:

Virtual machine

vSphere client and vCenter Server

vMotion

ESXi management

IP storage (iSCSI, NFS)—this is experimentally supported

IPSec for ESXi is not supported for use with the vCLI, for VMware HA, or for VMware FT logging.

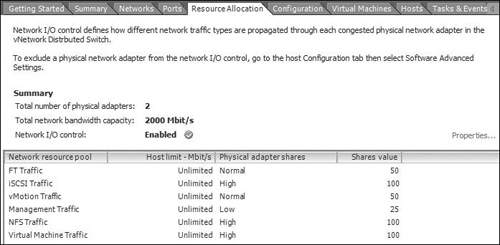

Network I/O Control is a new network traffic management feature for dvSwitches. Network I/O Control implements a software scheduler within the dvSwitch to isolate and prioritize traffic types on the links that connect your ESXi host to your physical network. This feature is especially helpful if you plan to run multiple traffic types over a paired set of 10GbE interfaces, as might be the case with blade servers. In such a case, Network I/O Control would ensure that virtual machine network traffic, for example, would not interfere with the performance of IP-based storage traffic.

Network I/O Control is able to recognize the following traffic types leaving a dvSwitch on ESXi:

Virtual machine

Management

iSCSI

NFS

Fault Tolerance logging

vMotion

Network I/O Control uses shares and limits to control traffic leaving the dvSwitch. These values are configured on the Resource Allocation tab as shown in Figure 1.6. Shares specify the relative importance of a traffic type being transmitted to the host’s physical NICs. The share settings work the same as for CPU and memory resources on an ESXi host. If there is no resource contention, a traffic type could consume the entire network link for the dvSwitch. However, if two traffic types begin to saturate a network link, shares come into play in determining how much bandwidth is to be allocated for each traffic type.

Limit values specify the maximum bandwidth that a traffic type can use. If you configure limits, the values are specified in megabits per second (Mbps). Limits are imposed before shares, and they apply over a team of NICs. Shares, on the other hand, schedule and prioritize traffic for each physical NIC in a team.
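The interaction between shares and limits can be sketched as follows. This is a simplified model, not the dvSwitch scheduler: it assumes a single physical link, applies each limit as a cap on demand before shares are considered, and then divides the link in proportion to shares among the traffic types that still want more bandwidth. The function name, traffic type names, and all numeric values are hypothetical.

```python
from typing import Dict, Optional


def allocate_bandwidth(
    link_mbps: float,
    demand: Dict[str, float],
    shares: Dict[str, int],
    limits: Optional[Dict[str, float]] = None,
) -> Dict[str, float]:
    """Split one link among traffic types using limits first, then shares."""
    limits = limits or {}
    # Limits are imposed before shares: cap each demand by its limit, if any.
    cap = {t: min(d, limits.get(t, float("inf"))) for t, d in demand.items()}
    alloc = {t: 0.0 for t in demand}
    active = {t for t in demand if cap[t] > 0}
    remaining = float(link_mbps)

    while active and remaining > 1e-9:
        total_shares = sum(shares[t] for t in active)
        offers = {t: remaining * shares[t] / total_shares for t in active}
        # Traffic types whose remaining need fits inside their offer are done.
        satisfied = {t for t in active if cap[t] - alloc[t] <= offers[t]}
        if not satisfied:
            # Contention: every remaining type takes exactly its share.
            for t in active:
                alloc[t] += offers[t]
            break
        for t in satisfied:
            remaining -= cap[t] - alloc[t]
            alloc[t] = cap[t]
        active -= satisfied  # unused bandwidth is redistributed next round
    return alloc


# Hypothetical 10GbE link where virtual machine and iSCSI traffic contend.
result = allocate_bandwidth(
    link_mbps=10000,
    demand={"vm": 8000, "iscsi": 8000, "vmotion": 1000},
    shares={"vm": 50, "iscsi": 100, "vmotion": 50},
)
```

In this example vMotion needs less than its share and is fully served, and the remaining 9,000 Mbps is split 1:2 between virtual machine and iSCSI traffic according to their shares. Without contention, each traffic type would simply receive its demand, up to its limit.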

Note

Configuration of iSCSI traffic resource pool shares does not apply to iSCSI traffic generated by iSCSI HBAs in your host.

The last new networking feature that this section highlights is Load-Based Teaming (LBT). This is another management feature for dvSwitches designed to avoid network congestion on ESXi physical NICs caused by imbalances in the mapping of traffic to those uplinks. LBT is an additional load-balancing policy available in the Teaming and Failover policy for a port group on a dvSwitch. This option appears in the list as “Route Based on Physical NIC Load.” LBT dynamically adjusts the mapping of virtual ports to physical NICs to balance network load leaving and entering the dvSwitch. If ESXi detects congestion on a network link, signified by 75 percent or more utilization over a 30-second period, LBT attempts to move one or more virtual ports to a less utilized link within the dvSwitch.
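The congestion rule can be modeled in a few lines. The sketch below is an illustration only and makes several assumptions that the text does not specify: utilization is sampled once per second as a fraction of link capacity, congestion means the mean of the last 30 samples is at least 0.75, and one arbitrarily chosen virtual port is moved per congested uplink. The function names, port names, and uplink names are hypothetical.

```python
from statistics import mean
from typing import Dict, List

CONGESTION_THRESHOLD = 0.75  # 75 percent utilization
WINDOW_SECONDS = 30          # evaluation period, assuming one sample per second


def recent_mean(samples: List[float]) -> float:
    """Mean utilization over the most recent window (0.0 if no samples)."""
    window = samples[-WINDOW_SECONDS:]
    return mean(window) if window else 0.0


def is_congested(samples: List[float]) -> bool:
    """True when a full window of samples averages at or above the threshold."""
    return len(samples) >= WINDOW_SECONDS and recent_mean(samples) >= CONGESTION_THRESHOLD


def rebalance(port_map: Dict[str, str], utilization: Dict[str, List[float]]) -> Dict[str, str]:
    """Move one virtual port off each congested uplink to the least used uplink."""
    new_map = dict(port_map)
    for uplink, samples in utilization.items():
        if not is_congested(samples):
            continue
        target = min(utilization, key=lambda u: recent_mean(utilization[u]))
        if target == uplink:
            continue  # no less-utilized uplink is available
        ports = sorted(p for p, u in new_map.items() if u == uplink)
        if ports:
            new_map[ports[0]] = target  # arbitrary choice of port in this sketch
    return new_map


# Hypothetical team of two uplinks: vmnic0 is saturated, vmnic1 is mostly idle.
ports = {"vm-a": "vmnic0", "vm-b": "vmnic0", "vm-c": "vmnic1"}
load = {"vmnic0": [0.9] * 30, "vmnic1": [0.2] * 30}
rebalanced = rebalance(ports, load)
```

A real implementation would also need to account for the load that each virtual port contributes, so that moving a port does not simply shift the congestion to the target uplink.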

Note

The vSphere client is no longer bundled with ESXi and ESX builds. Once you have completed an installation of either product, the link on the Welcome page redirects you to a download of the vSphere client from VMware’s Web site. The vSphere client is still available for download from the Welcome page for vCenter Server.

VMware ESXi represents a significant step forward in hypervisor design and provides an efficient manner for turning servers into virtualization appliances. With ESXi, you have the same great features that you’ve been using with ESX. Both can be run side by side in the same clusters to allow you to perform a gradual migration to ESXi. With the removal of the COS, ESXi does not have any dependencies on a general operating system, which improves security and reliability. For seasoned COS administrators, VMware has provided two feature-rich alternatives with the vCLI and PowerCLI. ESXi includes the vSphere API, which eliminates the need for COS agents for management and backup systems. ESXi also leverages the CIM model to provide agentless hardware monitoring.