Chapter 11

Bringing a vSphere Design Together

In this chapter, we'll pull together the topics covered so far in this book and put them to use in a high-level walkthrough of a VMware vSphere design. Along the way, we hope you'll gain a better understanding of VMware vSphere design and the intricacies involved in creating one.

This chapter will cover the following topics:

- Examining the decisions made in a design

- Considering the reasons behind design decisions

- Exploring the impact on the design of changes to a decision

- Mitigating the impact of design changes

Sample Design

For the next few pages, we'll walk you, at a high level, through a simple VMware vSphere design for a fictional company called XYZ Widgets. We'll first provide a business overview, followed by an overview of the major areas of the design, organized in the same fashion as the chapters in the book. Because VMware vSphere design documentation can be rather lengthy, we'll include only relevant details and explanations. A real-world design will almost certainly need to be more complete, more detailed, and more in-depth than what is presented in this chapter. Our purpose here is not to provide a full and comprehensive vSphere design but rather to provide a framework in which to think about how the various vSphere design points fit together and interact with each other and to help promote a holistic view of the design.

We'll start with a quick business overview and a review of the virtualization goals for XYZ Widgets.

Business Overview for XYZ Widgets

XYZ Widgets is a small manufacturing company. XYZ currently has about 60 physical servers, many of which are older and soon to be out of warranty and no longer under support. XYZ is also in the process of implementing a new ERP system. To help reduce the cost of refreshing the hardware, gain increased flexibility with IT resources, and reduce the hardware acquisition costs for the new ERP implementation, XYZ has decided to deploy VMware vSphere in its environment. As is the case with many smaller organizations, XYZ has a very limited IT staff, and the staff is responsible for all aspects of IT; there are no dedicated networking staff and no dedicated storage administrators.

XYZ has the following goals in mind:

- XYZ would like to convert 60 existing workloads into VMs via a physical-to-virtual (P2V) process. These workloads should be able to run unmodified in the new vSphere environment, so they need connectivity to the same VLANs and subnets as the current physical servers.

- Partly due to the ERP implementation and partly due to business growth, XYZ needs the environment to be able to hold up to 200 VMs in the first year. This works out to more than three times the current number of workloads, or growth of roughly 230% over the next year.

- The ERP environment is really important to XYZ's operations, so the environment should provide high availability for the ERP applications.

- XYZ wants to streamline its day-to-day IT operations, so the design should incorporate that theme. XYZ management feels the IT staff should be able to “do more with less.”

These other requirements and constraints also affected XYZ's vSphere design:

- XYZ has an existing Fibre Channel (FC) storage area network (SAN) and an existing storage array that it wants to reuse. An analysis of the array shows that adding drives and drive shelves to the array will allow it to handle the storage requirements (both capacity and performance) that are anticipated. Because this design decision is already made, it can be considered a design constraint.

- There are a variety of workloads on XYZ's existing physical servers, including Microsoft Exchange 2007, DHCP, Active Directory domain controllers, web servers, file servers, print servers, some database servers, and a collection of application servers. Most of these workloads are running on Microsoft Windows Server 2003, but some are Windows Server 2008 and some are running on Red Hat Enterprise Linux.

- A separate network infrastructure refresh project determined that XYZ should adopt 10 Gigabit Ethernet and Fibre Channel over Ethernet (FCoE) for network and storage connectivity. Accordingly, Cisco Nexus 5548 switches will be the new standard access-layer switch moving forward (replacing older 1 Gbps access-layer switches), so this is what XYZ must use in its design. This is another design constraint.

- XYZ would like to use Active Directory as its single authentication point, as it currently does today.

- XYZ doesn't have an existing monitoring or management framework in place today.

- XYZ has sufficient power and cooling in its datacenter to accommodate new hardware (especially as older hardware is removed due to the virtualization initiative), but it could have problems supporting high-density power or cooling requirements. The new hardware must take this into consideration.

Now that you have a rough idea of the goals behind XYZ's virtualization initiative, let's review its design, organized topically according to the chapters in this book.

Hypervisor Design

XYZ's vSphere design calls for the use of VMware vSphere 5.1, which, like vSphere 5.0, offers only the ESXi hypervisor, not the older ESX hypervisor with the RHEL-based Service Console. Because this is its first vSphere deployment, XYZ has opted to keep the design as simple as possible and to go with a local install of ESXi, instead of using boot from SAN or Auto Deploy.

vSphere Management Layer

XYZ purchased licensing for VMware vSphere Enterprise Plus and will deploy VMware vCenter Server 5.1 to manage its virtualization environment. To help reduce the overall footprint of physical servers, XYZ has opted to run vCenter Server as a VM. To accommodate the projected size and growth of the environment, XYZ won't use the virtual appliance version of vCenter Server, but will use the Windows Server–based version instead. The vCenter Server VM will run vCenter Server 5.1, and XYZ will use separate VMs to run vCenter Single Sign-On and vCenter Inventory Service. The databases for the various vCenter services will be provided by a clustered instance of Microsoft SQL Server 2008 running on Windows Server 2008 R2 64-bit. Another VM will run vSphere Update Manager to manage updates for the VMware ESXi hosts. No other VMware management products are planned for deployment in XYZ's environment at this time.

Server Hardware

XYZ Widgets has historically deployed HP ProLiant rack-mount servers in its datacenter. In order to avoid retraining the staff on a new hardware platform or new operational procedures, XYZ opted to continue to use HP ProLiant rack-mount servers for its new VMware vSphere environment. It selected the HP DL380 G8, picking a configuration using a pair of Intel Xeon E5-2660 CPUs and 128 GB RAM. The servers will have a pair of 146 GB hot-plug hard drives configured as a RAID 1 mirror for protection against drive failure.

Network connectivity is provided by a total of four on-board Gigabit Ethernet (GbE) network ports and a pair of 10 GbE ports on a converged network adapter (CNA) that provides FCoE support. (More information about the specific networking and shared storage configurations is provided in an upcoming section.) Previrtualization capacity planning indicates that XYZ will need 10 servers to virtualize its target of 200 workloads (a 20:1 consolidation ratio). This consolidation ratio provides an (estimated) VM-to-core ratio of about 2:1. This VM-to-core ratio depends on the number of VMs that XYZ runs with only a single vCPU versus multiple vCPUs. Older workloads will likely have only a single vCPU, whereas some of the VMs that will handle XYZ's new ERP implementation are likely to have more vCPUs.
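The consolidation arithmetic above can be sketched in a few lines of Python. The host and workload counts come from XYZ's design; the figure of 8 cores per Xeon E5-2660 and the average vCPU counts per VM are assumptions added for illustration, not numbers taken from XYZ's capacity-planning data.

```python
# Rough sizing arithmetic for XYZ's cluster (illustrative sketch only).
HOSTS = 10             # from the design
TARGET_VMS = 200       # from the design
SOCKETS_PER_HOST = 2   # from the design (a pair of Xeon E5-2660 CPUs)
CORES_PER_SOCKET = 8   # assumption: core count of the E5-2660


def consolidation_ratio(vms: int, hosts: int) -> float:
    """Average number of VMs per host."""
    return vms / hosts


def vcpu_to_core_ratio(vms: int, avg_vcpus_per_vm: float, hosts: int) -> float:
    """Allocated vCPUs divided by physical cores across the cluster."""
    total_cores = hosts * SOCKETS_PER_HOST * CORES_PER_SOCKET
    return (vms * avg_vcpus_per_vm) / total_cores


if __name__ == "__main__":
    print(f"VMs per host: {consolidation_ratio(TARGET_VMS, HOSTS):.0f}")
    # Hypothetical vCPU mixes: all single-vCPU VMs versus heavier mixes.
    for avg in (1.0, 1.6, 2.0):
        ratio = vcpu_to_core_ratio(TARGET_VMS, avg, HOSTS)
        print(f"avg {avg} vCPUs per VM -> {ratio:.2f} vCPUs per core")
```

Under these assumptions, the ratio moves with the share of multi-vCPU VMs, which is why the sizing of the ERP workloads matters to the estimate.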

Networking Configuration

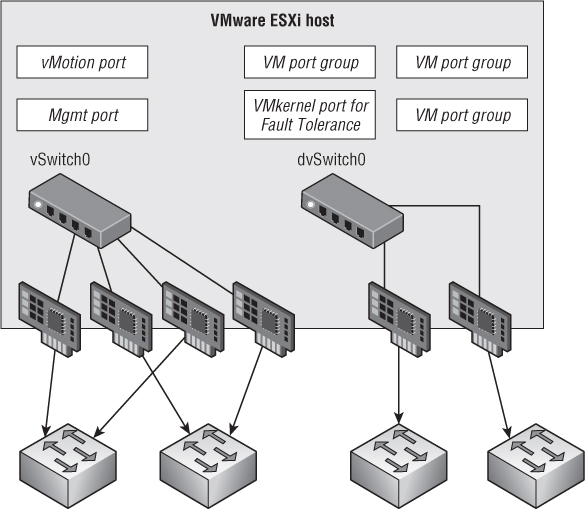

As we mentioned, each of the proposed VMware vSphere hosts has a total of four 1 GbE and two 10 GbE network ports. XYZ Widgets proposes to use a hybrid network configuration that uses both vSphere Standard Switches and a vSphere Distributed Switch.

Each ESXi host will have a single vSwitch (vSphere Standard Switch) that contains all four on-board 1 GbE ports. This vSwitch will handle the management and vMotion traffic, and vMotion will be configured to use multiple NICs to reduce live migration times. XYZ elected not to have vMotion run across the 10 GbE ports because these ports are also carrying storage traffic via FCoE.

The vSphere Distributed Switch (VDS, or dvSwitch) will be uplinked to the two 10 GbE ports and will contain distributed port groups for the following traffic types:

- Fault tolerance (FT)

- VM traffic spanning three different VLANs

A group of Cisco Nexus 5548 switches provides upstream network connectivity, and every server will be connected to two switches for redundancy. Although the Nexus 5548 switches support multichassis link aggregation, the VDS won't be configured with the “Route based on IP hash” load-balancing policy; instead, it will use the default “Route based on originating virtual port ID.” XYZ may evaluate the use of load-based teaming (LBT) on the dvSwitch at a later date. Each Nexus 5548 switch has redundant connections to XYZ's network core as well as FC connections to the SAN fabric.

Shared Storage Configuration

XYZ Widgets already owned a FC-based SAN that was installed for a previous project. The determination was made, based on previrtualization capacity planning, that the SAN needed to be able to support an additional 15,000 I/O operations per second (IOPS) in order to virtualize XYZ's workloads. To support this workload, XYZ has added four 200 GB enterprise flash drives (EFDs), forty-five 600 GB 15K SAS drives, and eleven 1 TB SATA drives. These additional drives support an additional 38.8 TB of raw storage capacity and approximately 19,000 IOPS (without considering RAID overhead).
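The raw totals quoted above can be reproduced with a short calculation. The drive counts and sizes come from the design; the per-drive IOPS values are assumed rules of thumb chosen for illustration, not vendor specifications or XYZ's measured figures, and RAID overhead is deliberately ignored, as in the text.

```python
# Raw capacity and raw IOPS for the drives XYZ added (no RAID overhead).
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class DriveTier:
    name: str
    count: int            # from the design
    size_gb: int          # from the design
    iops_per_drive: int   # assumption: rule-of-thumb value


TIERS = [
    DriveTier("EFD", 4, 200, 2500),
    DriveTier("15K SAS", 45, 600, 180),
    DriveTier("SATA", 11, 1000, 80),
]


def raw_capacity_tb(tiers: Iterable[DriveTier]) -> float:
    """Total raw capacity in decimal terabytes."""
    return sum(t.count * t.size_gb for t in tiers) / 1000


def raw_iops(tiers: Iterable[DriveTier]) -> int:
    """Sum of per-drive IOPS, before any RAID write penalty."""
    return sum(t.count * t.iops_per_drive for t in tiers)


if __name__ == "__main__":
    print(f"Raw capacity: {raw_capacity_tb(TIERS):.1f} TB")
    print(f"Raw IOPS:     {raw_iops(TIERS)}")
```

With these assumed per-drive values, the totals land at 38.8 TB and 18,980 IOPS, in line with the figures in the design. Usable numbers would be lower once RAID overhead is applied.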

The EFDs and the SAS drives will be placed into a single storage pool from which multiple LUNs will be created. In addition to supporting vSphere APIs for Array Integration (VAAI) and vSphere APIs for Storage Awareness (VASA), the array has the ability to automatically tier data based on usage. XYZ will configure the array so that the most frequently used data is placed on the EFDs, and the data that is least frequently used will be placed on the SATA drives. The EFDs will be configured as RAID 1 (mirror) groups, the SAS drives as RAID 5 groups, and the SATA drives as a RAID 6 group. The storage pool will be carved into 1 TB LUNs and presented to the VMware ESXi hosts.

As described earlier, the VMware ESXi hosts are attached via FCoE CNAs to redundant Nexus 5548 FCoE switches. The Nexus 5548 switches have redundant uplinks to the FC directors in the SAN core, and the storage controllers of XYZ's storage array—an active/passive array according to VMware's definitions—have multiple ports that are also attached to the redundant SAN fabrics. The storage array is Asymmetric Logical Unit Access (ALUA) compliant.

VM Design

XYZ has a number of physical workloads that will be migrated into its VMware vSphere environment via a P2V migration. These workloads consist of various applications running on Windows Server 2003 and Windows Server 2008. During the P2V process, XYZ will right-size the resulting VM to ensure that it isn't oversized. The right-sizing will be based on information gathered during the previrtualization capacity-planning process.

For all new VMs moving forward, the guest OS will be Windows Server 2008 R2. XYZ will use a standard of 8 GB RAM per VM and a single vCPU. The vCPU count can be increased later if performance needs warrant doing so. A thick-provisioned 40 GB Virtual Machine Disk Format (VMDK) disk will be used for the system disk, attached to the LSI Logic SAS adapter. XYZ chose the LSI Logic SAS adapter for the system disk because it's the default adapter for this guest OS and because support for the adapter is provided out of the box with Windows Server 2008. XYZ felt that using the paravirtual SCSI adapter for the system disk added unnecessary complexity. Additional VMDKs will be added on a per-VM basis as needed and will use the paravirtual SCSI adapter. Because these data drives are added after the installation of Windows into the VM, XYZ felt that the use of the paravirtual SCSI adapter was acceptable for these virtual disks.

Given the relative newness of Windows Server 2012, XYZ decided to hold off on migrating workloads to this new server OS as part of this project.

VMware Datacenter Design

XYZ will configure vCenter Server to support only a single datacenter and a single cluster containing all 10 of its VMware ESXi hosts. The cluster will be enabled for vSphere High Availability (HA) and vSphere Distributed Resource Scheduling (DRS). Because the cluster is homogeneous with regard to CPU type and family, XYZ has elected not to enable vSphere Enhanced vMotion Compatibility (EVC) at this time. vSphere HA will be configured to perform host monitoring but not VM monitoring, and vSphere DRS will be configured as Fully Automated and set to act on recommendations of three stars or greater.

Security Architecture

XYZ will ensure that the firewall on the VMware ESXi hosts is configured and enabled, and only essential services will be allowed through the firewall. Because XYZ doesn't initially envision using any management tools other than vCenter Server and the vSphere Web Client, the ESXi hosts will be configured with Lockdown Mode enabled. Should the use of the vSphere command-line interface (vCLI) or other management tools prove necessary later, XYZ will revisit this decision.

To further secure the vSphere environment, XYZ will place all management traffic on a separate VLAN and will tightly control access to that VLAN. vMotion traffic and FT logging traffic will be placed on separate, nonroutable VLANs to prevent any sort of data leakage.

vCenter Server will be a member of XYZ's Active Directory domain and will use default permissions. XYZ's VMware administrative staff is fairly small and doesn't see a need for a wide number of highly differentiated roles within vCenter Server. vCenter Single Sign-On will use XYZ's existing Active Directory deployment as an identity source.

Monitoring and Capacity Planning

XYZ performed previrtualization capacity planning. The results indicated that 10 physical hosts with the proposed specifications would provide enough resources to virtualize the existing workloads and provide sufficient room for initial anticipated growth. XYZ's VMware vSphere administrators plan to use vCenter Server's performance graphs to do both real-time monitoring and basic historical analysis and trending.

vCenter Server's default alerts will be used initially and then customized as needed after the environment has been populated and a better idea exists of what normal utilization will look like. vCenter Server will send email via XYZ's existing email system in the event a staff member needs to be alerted regarding a threshold or other alarm. After the completion of the first phase of the project, which involves the conversion of the physical workloads to VMs, XYZ will evaluate whether additional monitoring and management tools are necessary. Should additional monitoring and capacity-planning tools prove necessary, XYZ is leaning toward the use of vCenter Operations to provide additional insight into the performance and utilization of the vSphere environment.

Examining the Design

Now that you've seen an overview of XYZ's VMware vSphere design, we'd like to explore the design in a bit more detail through a series of questions. The purpose of these questions is to get you thinking about how the various aspects of a design integrate with and depend on each other. You may find it helpful to grab a blank sheet of paper and start writing down your thoughts as you work through these questions.

These questions have no right answers, and the responses that we provide here are simply to guide your thoughts—they don't necessarily reflect any concrete or specific recommendations. There are multiple ways to fulfill the functional requirements of any given design, so keep that in mind! Once again, we'll organize the questions topically according to the chapters in this book; this will also make it easier for you to refer back to the appropriate chapter where applicable.

Hypervisor Design

As you saw in Chapter 2, “The ESXi Hypervisor,” the decisions about how to install and deploy VMware ESXi are key decision points in vSphere designs and will affect other design decisions:

vSphere Management Layer

We discussed design decisions concerning the vSphere management layer in Chapter 3, “The Management Layer.” In this section, we'll examine some of the design decisions XYZ made regarding its vSphere management layer:

Are there other disadvantages that you see to running vCenter Server as a VM? What about other advantages of this configuration?

Figure 11.1 A hybrid network configuration for XYZ's vSphere environment

Server Hardware

Server hardware and the design decisions around server hardware were the focus of our discussion in Chapter 4, “Server Hardware.” In this section we ask you a few questions about XYZ's hardware decisions and the impact on the company's design:

- The design description indicates that XYZ will use 10 physical servers. They will fit into a single physical chassis but may be better spread across two physical chassis to protect against the failure of a chassis. This increases the cost of the solution.

- Depending on the specific type of blade selected, the number and/or type of NICs might change. If the number of NICs were reduced too far, this would have an impact on the networking configuration. Changes to the network configuration (for example, having to cut out NFS traffic due to limited NICs) could then affect the storage configuration. The storage configuration might also need to change, depending on the availability of CNAs for the server blades and FCoE-capable switches for the back of the blade chassis.

In all likelihood, CPU utilization wouldn't be a bottleneck; memory usually runs out before CPU capacity, but it depends on the workload characteristics. Without additional details, it's almost impossible to say for certain if an increase in CPU capacity would help improve the consolidation ratio. However, based on our experience, XYZ is probably better served by increasing the amount of memory in its servers instead of increasing CPU capacity.

Keep in mind that there are some potential benefits to the “scale-up” model, which uses larger servers like quad-socket servers instead of smaller dual-socket servers. This approach can yield higher consolidation ratios, but you'll need to consider the impacts on the rest of the design. One such potential effect on the design is the escalated risk of and impact from a server failure in a scale-up model with high consolidation ratios. How many workloads will be affected? What will an outage to that many workloads do to the business? What is the financial impact of this sort of outage? What is the risk of such an outage? These are important questions to ask and answer in this sort of situation.

Networking Configuration

The networking configuration of any vSphere design is a critical piece, and we discussed networking design in detail in Chapter 5, “Designing Your Network.” XYZ's networking design is examined in greater detail in this section.

As a side note regarding link aggregation, the number of links in a link aggregate is important to keep in mind. Most networking vendors recommend the use of one, two, four, or eight uplinks due to the algorithms used to place traffic on the individual members of the link-aggregation group. Using other numbers of uplinks will most likely result in an unequal distribution of traffic across those uplinks.
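To see why uplink counts that aren't a power of two tend to load links unevenly, consider a simplified model in which the switch hashes each flow into one of eight buckets and then maps those buckets onto the member links. The eight-bucket figure and the round-robin mapping are assumptions for illustration; actual hashing algorithms vary by vendor and platform.

```python
# Simplified model of how hash buckets spread across link-aggregate members.
from typing import List


def buckets_per_link(n_links: int, n_buckets: int = 8) -> List[int]:
    """Return how many hash buckets land on each member link.

    Assumes buckets are dealt out round-robin, so the first
    (n_buckets % n_links) links each receive one extra bucket.
    """
    if n_links < 1:
        raise ValueError("a link aggregate needs at least one link")
    base, extra = divmod(n_buckets, n_links)
    return [base + (1 if i < extra else 0) for i in range(n_links)]


if __name__ == "__main__":
    for links in range(1, 9):
        shares = buckets_per_link(links)
        label = "even" if len(set(shares)) == 1 else "uneven"
        print(f"{links} links: {shares} ({label})")
```

In this model, only one, two, four, and eight links divide the eight buckets evenly; with three links, for example, two links each carry three buckets while the third carries two.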

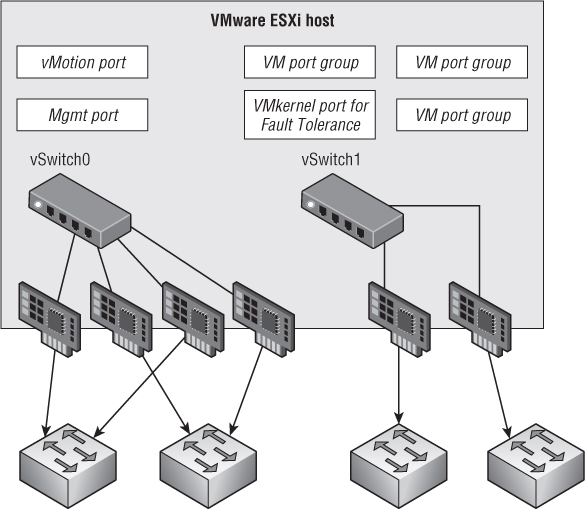

As the result of using only vSwitches, the administrative overhead is potentially increased because changes to the network configuration of the ESXi hosts must be performed on each individual host, instead of being centrally managed like the VDS. The fact that vSwitches are managed per host introduces the possibility of a configuration mismatch between hosts, and configuration mismatches could result in VMs being inaccessible from the network after a vMotion (either a manual vMotion or an automated move initiated by vSphere DRS).

On the flip side, given that XYZ is using Enterprise Plus licensing, it could opt to use host profiles to help automate the management of the vSwitches and help reduce the likelihood of configuration mismatches between servers.

There is also a loss of functionality, because a distributed switch supports features that a standard vSwitch doesn't support, such as Switched Port Analyzer (SPAN), inbound and outbound traffic shaping, and private VLANs. It's also important to understand that some additional VMware products, such as vCloud Director, require a VDS for full functionality. Although XYZ doesn't need vCloud Director today, switching to vSwitches might limit future growth opportunities, and this consideration must be included in the design analysis.

Figure 11.2 A potential configuration for XYZ using vSphere standard switches instead of a VDS

Shared Storage Configuration

Because so many of vSphere's most useful features, like vMotion, rely on shared storage, shared storage design is a correspondingly important part of your overall design. Refer back to Chapter 6, “Storage,” if you need more information as we take a closer look at XYZ's shared storage design:

- The amount of I/O being generated by the VMs placed on that NFS datastore, because network throughput would likely be the bottleneck in this instance.

- The amount of time it took to back up or restore an entire datastore. XYZ would need to ensure that these times fell within its agreed recovery time objective (RTO) for the business.
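A rough way to test the second point is to divide the datastore size by the sustained restore throughput and compare the result with the RTO. The throughput and RTO values in this sketch are hypothetical placeholders; XYZ would substitute figures measured in its own environment.

```python
# Back-of-the-envelope restore-time check against an RTO.

def restore_hours(datastore_gb: float, throughput_mb_per_s: float) -> float:
    """Hours needed to restore a full datastore at a sustained throughput."""
    if throughput_mb_per_s <= 0:
        raise ValueError("throughput must be positive")
    seconds = (datastore_gb * 1024) / throughput_mb_per_s
    return seconds / 3600


def meets_rto(datastore_gb: float, throughput_mb_per_s: float,
              rto_hours: float) -> bool:
    """True if a full restore would finish within the agreed RTO."""
    return restore_hours(datastore_gb, throughput_mb_per_s) <= rto_hours


if __name__ == "__main__":
    size_gb = 1024       # hypothetical: a 1 TB datastore
    throughput = 100.0   # hypothetical: 100 MB/s sustained restore rate
    rto = 4.0            # hypothetical: 4-hour RTO
    hours = restore_hours(size_gb, throughput)
    print(f"Estimated restore time: {hours:.2f} h; "
          f"within RTO: {meets_rto(size_gb, throughput, rto)}")
```

The same calculation can be turned around to find the largest datastore that still fits within the RTO at a given throughput.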

Historically speaking, VMware has had a tendency to support new features and functionality on block storage platforms first, followed by NFS support later. Although this trend isn't guaranteed to continue in the future, it would be an additional factor XYZ would need to take into account when considering a migration to NFS.

Finally, a move to only NFS would prevent the use of raw device mappings (RDMs) for any applications in the environment, because RDMs aren't possible on NFS.

The storage configuration might also need to change, depending on the I/O patterns and amount of I/O generated by the VMs.

Finally, using the software iSCSI initiator would affect CPU utilization by requiring additional CPU cycles to process storage traffic. This could have a negative impact on the consolidation ratio and require XYZ to purchase more servers than originally planned.

VM Design

As we described in Chapter 7, “Virtual Machines,” VM design also needs to be considered with your vSphere design. Here are some questions and thoughts on XYZ's VM design:

Many variations of Linux are also affected, so XYZ should ensure that it corrects the file-system alignment on any Linux-based VMs as well.

Note that both Windows Server 2008 and Windows Server 2012 properly align partitions by default.

VMware Datacenter Design

The logical design of the VMware vSphere datacenter and clusters was discussed at length in Chapter 8, “Datacenter Design.” Here, we'll apply the considerations mentioned in that chapter to XYZ's design:

Reducing cluster size means you reduce the ability of DRS to balance workloads across the entire environment, and you limit the ability of vSphere HA to sustain host failures. A cluster of 10 nodes might be able to support the failure of 2 nodes, but can a cluster of 5 nodes support the loss of 2 nodes? Or is the overhead to support that ability too great with a smaller cluster?
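The overhead question can be made concrete with a simple model. This sketch assumes identically sized hosts and a reserve-whole-hosts approach to failover capacity; it doesn't reproduce vSphere HA's actual admission-control calculations.

```python
# Fraction of cluster capacity held back to tolerate host failures.

def reserved_fraction(hosts: int, failures_to_tolerate: int) -> float:
    """Share of total capacity reserved, assuming identical hosts."""
    if hosts < 1 or not 0 <= failures_to_tolerate < hosts:
        raise ValueError("need at least one surviving host")
    return failures_to_tolerate / hosts


def usable_hosts(hosts: int, failures_to_tolerate: int) -> int:
    """Hosts' worth of capacity left for running workloads."""
    if hosts < 1 or not 0 <= failures_to_tolerate < hosts:
        raise ValueError("need at least one surviving host")
    return hosts - failures_to_tolerate


if __name__ == "__main__":
    for size in (10, 5):
        share = reserved_fraction(size, 2)
        print(f"{size}-node cluster, 2 failures tolerated: "
              f"{share:.0%} reserved, {usable_hosts(size, 2)} hosts usable")
```

In this model, tolerating two host failures reserves 20% of a 10-node cluster but 40% of a 5-node cluster, which illustrates why the same availability target costs proportionally more in a smaller cluster.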

However, the use of vCenter Server as a VM introduces some potential operational complexity around the use of EVC. VMware has a Knowledge Base article that outlines the process required to enable EVC when vCenter Server is running as a VM; see kb.vmware.com/kb/1013111. To avoid this procedure, XYZ might want to consider enabling EVC in the first phase of its virtualization project.

Security Architecture

We focused on the security of vSphere designs in Chapter 9, “Designing with Security in Mind.” As we review XYZ's design in the light of security, feel free to refer back to our security discussions from Chapter 9 for more information:

Monitoring and Capacity Planning

Chapter 10, “Monitoring and Capacity Planning,” centered on the use and incorporation of monitoring and capacity planning in your vSphere design. Here, we examine XYZ's design in this specific area:

If XYZ needed application-level awareness, it would need to deploy an additional solution to provide that functionality. That additional solution would increase the cost of the project, would potentially consume resources on the virtualization layer and affect the overall consolidation ratio, and could require additional training for the XYZ staff.

Summary

In this chapter, we've used a sample design for a fictional company to illustrate the information presented throughout the previous chapters. You've seen how functional requirements drive design decisions and how different decisions affect various parts of the design. We've also shown examples of both intended and unintended impacts of design decisions, and we've discussed how you might mitigate some of these unintended impacts. We hope the information we've shared in this chapter has helped provide a better understanding of what's involved in crafting a VMware vSphere design.