Chapter 6

Storage

The storage component of any design of a vSphere environment is commonly regarded as one of the most crucial to overall success. It's fundamental to the capabilities of virtualization. Many of the benefits provided by VMware's vSphere wouldn't be possible without the technologies and features offered by today's storage equipment.

Storage technologies are advancing at a tremendous pace, particularly notable for such a traditionally staunch market. This innovation is being fueled by new hardware capabilities, particularly with widespread adoption of flash-based devices. Pioneering software advancements are being made possible via the commoditization of many storage array technologies that were previously only available to top-tier enterprise storage companies. When VMware launched vSphere 5, it was colloquially referred to as the storage release, due to the sheer number of new storage features and the significant storage improvements it brought.

The storage design topics discussed in this chapter are as follows:

- Primary storage design factors to consider

- What makes for an efficient storage solution

- How to design your storage with sufficient capacity

- How to design your storage to perform appropriately

- Whether local storage is still a viable option

- Which storage protocol you should use

- Using multipathing with your storage choice

- How to implement vSphere 5 storage features in your design

Dimensions of Storage Design

In the past, those who designed, built, configured, and most importantly, paid for server storage were predominantly interested in how much space they could get for their dollar. Servers used local direct attached storage (DAS), with the occasional foray into two node clusters, where performance was limited to the local bus, the speed of the disks, and the RAID configuration chosen. These configurations could be tweaked to suit all but the most demanding of server-room requirements. If greater performance was required, companies scaled out with multiple servers; or if they had the need (and the cash!), they invested in expensive dedicated Fibre Channel storage area network devices (SANs) with powerful array technologies. Times were relatively simple. CIOs cared about getting the most bang for their buck, and $/GB (cost per gigabyte) was what was on the storage planning table.

With the advent of virtualization, storage is now much more than just capacity. Arguably, the number of terabytes (TBs) that your new whizzy storage array can provide is one of the lesser interests when you're investigating requirements. Most shared storage units, even the more basic ones, can scale to hundreds of TBs.

Some of the intrinsic vSphere capabilities mean that storage is significantly more mobile than it was previously. Features such as Storage vMotion help abstract not just the server hardware but also the storage. Upgrading or replacing storage arrays isn't the thing of nightmares anymore; and the flexibility to switch in newer arrays makes the situation far more dynamic. Recent vSphere additions, such as Storage Distributed Resource Scheduler (Storage DRS) and Profile-Driven Storage, allow you to eke out even more value from your capital expenditure. Some of the innovative solutions around flash storage that are now available provide many options to quench virtualization's thirst for more input/output operations per second (IOPS).

Rather than intimidating or constraining the vSphere architect in you, this should open your mind to a world of new possibilities. Yes, there are more things to understand and digest, but they're all definable. Like any good design, storage requirements can still be planned and decisions made using justifiable, measurable analysis. Just be aware that counting the estimated number and size of all your projected VMs won't cut the mustard for a virtualized storage design anymore.

Storage Design Factors

Storage design comes down to three principle factors:

- Availability

- Performance

- Capacity

These must all be finely balanced with an ever-present fourth factor:

- Cost

Availability

Availability of your vSphere storage is crucial. Performance and capacity issues aren't usually disruptive and can be dealt with without downtime if properly planned and monitored. However, nothing is more noticeable than a complete outage. You can (and absolutely should) build redundancy in to every aspect of a vSphere design, and storage is cardinal in this equation. In a highly available environment, you wouldn't have servers with one power supply unit (PSU), standalone switches, or single Ethernet connections. Shared storage in its very nature is centralized and often solitary in the datacenter. Your entire cluster of servers will connect to this one piece of hardware. Wherever possible, this means every component and connection must have sufficient levels of redundancy to ensure that there are no single points of failure.

Different types of storage are discussed in this chapter, and as greater levels of availability are factored in, the cost obviously rises. However, the importance of availability should be overriding in almost any storage design.

Performance

Performance is generally less well understood than capacity or availability, but in a virtualized environment where there is significant scope for consolidation, it has a much greater impact. You can use several metrics, such as IOPS, throughput (measured in MBps), and latency, to accurately measure performance. These will be explained in greater depth later in the chapter.

This doesn't have to be the black art that many think it is—when you understand how to measure performance, you can use it effectively to underpin a successful storage design.

Capacity

Traditionally, capacity is what everyone thinks of as the focus for a storage array's principal specification. It's a tangible (as much as ones and zeros on a rusty-colored spinning disk can be), easily describable, quantitative figure that salesmen and management teams love. Don't misunderstand: it's a relevant design factor. You need space to stick stuff. No space, no more VMs. Capacity needs to be managed on an ongoing basis, and predicted and provisioned as required. However, unlike availability and performance, it can normally be augmented as requirements grow.

It's a relatively straightforward procedure to add disks and enclosures to most storage arrays without incurring downtime. As long as you initially scoped the fundamental parts of the storage design properly, you can normally solve capacity issues relatively easily.

Cost

Costs can be easy or difficult to factor in, depending on the situation. You may be faced with a set amount of money that you can spend. This is a hard number, and you can think of it as one of your constraints in the design.

Alternatively, the design may need such careful attention to availability, performance, and/or capacity that money isn't an issue to the business. You must design the best solution you can, regardless of the expense.

Although you may feel that you're in one camp or the other, cost is normally somewhat flexible. Businesses don't have a bottomless pit of cash to indulge infrastructure architects (unfortunately); nor are there many managers who won't listen to reasoned, articulate explanations as to why they need to adjust either their budget or their expectations of what can be delivered.

Generally, the task of a good design is to take in the requirements and provide the best solution for the lowest possible cost. Even if you aren't responsible for the financial aspects of the design, it's important to have an idea of how much money is available.

Storage Efficiency

Storage efficiency is a term used to compare cost against each of the primary design factors. Because everything relates to how much it costs and what a business can afford, you should juxtapose solutions on that basis.

Availability Efficiency

You can analyze availability in a number of ways. Most common service-level agreements (SLAs) use the term 9s. The 9s refers to the amount of availability as a percentage of uptime in a year, as shown in Table 6.1.

Table 6.1 The 9s

| Availability % | Downtime per year |

| 90% | 36.5 days |

| 99% | 3.65 days |

| 99.5% | 1.83 days |

| 99.9% | 8.76 hours |

| 99.99% | 52.6 minutes |

| 99.999% (“5 nines”) | 5.26 minutes |

Using a measurement such as the 9s can give you a quantitative level of desired availability; however, the 9s can be open to interpretation. Often used as marketing terminology, you can use the 9s to understand what makes a highly available system. The concept is fairly simple.

If you have a single item for which you can estimate how frequently it will fail (mean time between failures [MTBF]) and how quickly it can be brought back online after a failure (mean time to recover [MTTR]), then you can calculate the applicable 9s value:

Availability = ((minutes in a year – average annual downtime in minutes) / minutes in a year) × 100

For example, a router that on average fails once every 3 years (MTBF) and that takes 4 hours to replace (MTTR) can be said to have on average an annual downtime of 75 minutes. This equates to

Availability = ((525600 − 75) / 525600) × 100 = 99.986%

As soon as you introduce a second item into the mix, the risk of failure is multiplied by the two percentages. Unless you're adding a 100 percent rock-solid, non-fallible piece of equipment (very unlikely, especially because faults are frequently caused by the operator), the percentage drops, and your solution can be considered less available.

As an example, if you have a firewall in front of the router, with the same chance of failure, then a failure in either will create an outage. The availability of that solution is halved: it's 99.972 percent, which means an average downtime of 150 minutes every year.

However, if you can add additional failover items in the design, then you can reverse the trend and increase the percentage for that piece. If you have two, then the risk may be halved. Add three, and the risk drops to one-third. In the example, adding a second failover router (ignoring the firewall) reduces the annual downtime to 37.5 minutes; a third reduces it to 25 minutes.

As you add more levels of redundancy to each area, the law of diminishing returns sets in, and it becomes less economical to add more. The greatest benefit is adding a second item, which is why most designs require at least one failover item at each level. If each router costs $5,000, the second one reduces downtime from a 1-router solution by 37.5 minutes (75 – 37.5). The third will only reduce it by a further 12.5 minutes (37.5 – 25), even though it costs as much as the second. As you can see, highly available solutions can be very expensive. Less reliable parts tend to need even more redundancy.

During the design, you should be aware of any items that increase the possibility of failure. If you need multiple items to handle load, but any one of them failing creates an outage, then you increase the potential for failure as you add more nodes. Inversely, if the load is spread across multiple items, then this spreads the risk; therefore, any failures have a direct impact on performance.

Paradoxically, massively increasing the redundancy to increase availability to the magic “five 9s” often introduces so much complexity that things take a turn south. No one said design was easy!

You can also use other techniques to calculate high availability, such as MTBF by itself.

Also worthy of note is the ability to take scheduled outages for maintenance. Does this solution really need a 24/7 answer? Although a scheduled outage is likely to affect the SLAs, are there provisions for accepted regular maintenance, or are all outages unacceptable? Ordinarily, scheduled maintenance isn't considered against availability figures; but when absolute availability is needed, things tend to get very costly.

This is where availability efficiency is crucial to a good design. Often, there is a requirement to propose different solutions based on prices. Availability efficiency usually revolves around showing how much the required solution will cost at different levels. The 9s can easily demonstrate how much availability costs, when a customer needs defined levels of performance and capacity.

Performance Efficiency

You can measure performance in several ways. These will be explained further in this chapter; but the most common are IOPS, MBps, and latency in milliseconds (ms).

Performance efficiency is the cost per IOPS, per MBps, or per ms latency. IOPS is generally the most useful of the three; most architects and storage companies refer to it as $/IOPS. The problem is, despite IOPS being a measureable test of a disk, many factors in today's advanced storage solutions—such as RAID type, read and write cache, and tiering—skew the figures so much that it can be difficult to predict and attribute a value to a whole storage device.

This is where lab testing is essential for a good design. To understand how suitable a design is, you can use appropriate testing to determine the performance efficiency of different storage solutions. Measuring the performance of each option with I/O loads comparable to the business's requirements, and comparing that to cost, gives the performance efficiency.

Capacity Efficiency

Capacity efficiency is the easiest to design for. $/GB is a relatively simple calculation, given the sales listings for different vendors and units. Or so you may think.

The “Designing for Capacity” section of this chapter will discuss some of the many factors that affect the actual usable space available. Just because you have fifteen 1 TB disks doesn't mean you can store exactly 15 TB of data. As you'll see, several factors eat into that total significantly; but perhaps more surprising is that several technologies now allow you to get more for less.

Despite the somewhat nebulous answer, you can still design capacity efficiency. Although it may not necessarily be a linear calculation, if you can estimate your storage usage, you can predict your capacity efficiency. Based on the cost of purchasing disks, if you know how much usable space you have per disk, then it's relatively straightforward to determine $/GB.

Other Efficiencies

Before moving on, it's worth quickly explaining that other factors are involved in storage efficiencies. Availability, performance, and capacity features can all be regarded as capital expenditure (CAPEX) costs; but as the price of storage continues to drop, it's increasingly important to understand the operational expenditure (OPEX) costs. For example, in your design, you may wish to consider these points:

vSphere Storage Features

As vSphere has evolved, VMware has continued to introduce features in the platform to make the most of its available storage. Advancements in redundancy, management, performance control, and capacity usage all make vSphere an altogether more powerful virtualization stage. These technologies, explained later in the chapter, allow you to take your design beyond the capabilities of your storage array. They can complement and work with some available array features, making it easier to manage, augment some array features to increase that performance or capacity further, or simply replace the need to pay the storage vendor's premium for an array-based feature.

Despite the stunning new possibilities introduced with flash storage hardware, the most dramatic changes in storage are software based. Not only are storage vendors introducing great new options with every new piece of equipment, but VMware reinvents the storage landscape for your VMs with every release.

Designing for Capacity

An important aspect of any storage design involves ensuring that it has sufficient capacity not just for the initial deployment but also to scale up for future requirements. Before discussing what you should consider in capacity planning, let's review the basics behind the current storage options. What decisions are made when combining raw storage into usable space?

RAID Options

Modern servers and storage arrays use Redundant Array of Independent/Inexpensive Disks (RAID) technologies to combine disks into logical unit numbers (LUNs) on which you can store data. Regardless of whether we're discussing local storage; a cheap, dumb network-attached storage (NAS) or SAN device; or a high-end enterprise array, the principles of RAID and their usage still apply. Even arrays that abstract their storage presentation in pools, groups, and volumes use some type of hidden RAID technique.

The choice of which RAID type to use, like most storage decisions, comes down to availability, performance, capacity, and cost. In this section, the primary concerns are both availability and capacity. Later in the chapter, in the “Designing for Performance” section, we discuss RAID to evaluate its impact on storage performance.

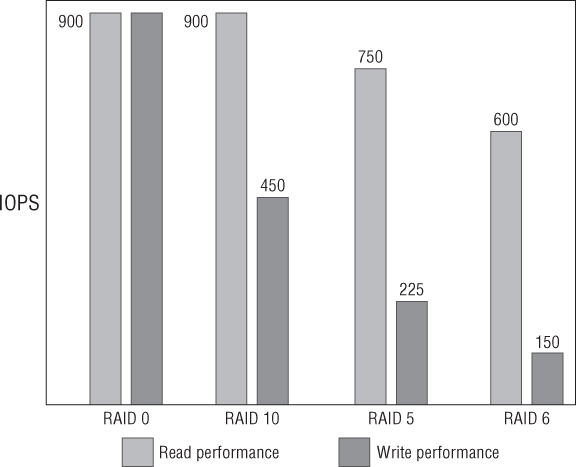

Many different types of RAID (and non-RAID) solutions are available, but these examples cover the majority of cases that are used in VMware solutions. Figure 6.1 compares how different RAID types mix the data-to-redundancy ratio.

Figure 6.1 Capacity versus redundancy

RAID 0

RAID 0 stripes all the disks together without any parity or mirroring. Because no disks are lost to redundancy, this approach maximizes the capacity and performance of the RAID set. However, with no redundancy, just one failed disk will destroy all of your data. For this reason, RAID 0 isn't suitable for a VMware (or almost any other) production setting.

RAID 10

RAID 10 describes a pair of disks, or multiples thereof, that mirror each other. From an availability perspective, this approach gives an excellent level of redundancy, because every block of data is written to a second disk. Multiple disks can fail as long as one copy of each pair remains available. Rebuild times are also short in comparison to other RAID types. However, capacity is effectively halved; in every pair of disks, exactly one is a parity disk. So, RAID 10 is the most expensive solution.

Without considering performance, RAID 10 is useful in a couple of vSphere circumstances. It's often used in situations where high availability is crucial. If the physical environment is particularly volatile—for example, remote sites with extremes of temperature or humidity, ground tremors, or poor electrical supply—or if more redundancy is a requirement due to the importance of the data, then RAID 10 always provides a more robust solution. RAID 1 (two mirrored disks) is often used on local disks for ESXi's OS, because local disks are relatively cheap and capacity isn't normally a requirement when shared storage is available.

RAID 5

RAID 5 is a set of disks that stripes parity across the entire group using the equivalent of one disk (as opposed to RAID 4, which assigns a single specific disk for parity). Aside from performance differences, RAID 5 is a very good option to maximize capacity. Only one disk is lost for parity, so you can use n – 1 for data.

However, this has an impact on availability, because the loss of more than one disk at a time will cause a complete loss of data. It's important to consider the importance of your data and the reliability of the disks before selecting RAID 5. The MTBFs, rebuild times, and availability of spares/replacements are significant factors.

RAID 5 is a very popular choice for SCSI/SAS disks that are viewed as fairly reliable options. After a disk failure, RAID 5 must be rebuilt onto a replacement before a second failure. SCSI/SAS disks tend to be smaller in capacity and faster, so they rebuild much more quickly. Because SCSI/SAS disks also tend to be more expensive than their SATA counterparts, it's important to get a good level of capacity return from them.

With SAN arrays, it's common practice to allocate one or more spare disks. These spare disks are used in the event of a failure and are immediately moved in as replacements when needed. An advantage from a capacity perspective is that one spare can provide additional redundancy to multiple RAID sets.

If you consider your disks reliable, and you feel that two simultaneous failures are unlikely, then RAID 5 is often the best choice. After all, RAID redundancy should never be your last line of defense against data loss. RAID 5 provides the best capacity, with acceptable availability given the right disks and hot spares.

RAID 6

An increasingly popular choice among modern storage designs is RAID 6. It's similar in nature to RAID 5, in that the parity data is distributed across all member disks, but it uses the equivalent of two disks. This means it loses some capacity compared to RAID 5 but can withstand two disks failing in quick succession. This is particularly useful when you're creating larger RAID groups.

RAID 6 is becoming more popular as drive sizes increase (therefore increasing rebuild times), because MTBF drops as physical tolerances on disks become tighter, and as SATA drives become more pervasive in enterprise storage.

Other Vendor-Specific RAID Options

The basic RAID types mentioned cover most scenarios for vSphere deployments, but you'll encounter many other options when talking to different storage vendors. Many of these are technically very similar to the basic types, such as RAID-DP from NetApp. RAID-DP is similar to a RAID 6 group, but rather than the parity being distributed across all disks, RAID-DP uses two specific disks for parity (like RAID 4). The ZFS file system designed by Sun Microsystems (now Oracle), which includes many innovative storage technologies on top, uses a self-allocating disk mechanism not dissimilar to RAID 5, called RAID-Z. Although it differs in the way it writes data to disks, it uses the premise of a disk's worth of parity across the group like RAID 5. ZFS is used in many Solaris and BSD-based storage systems. Linux has a great deal of RAID, logical volume manager (LVM), and file system options, but due to licensing incompatibilities it has never adopted ZFS. Linux has a new file system called BTRFS that is set to compete directly with ZFS and is likely to be the basis of many new storage solutions in the near future as it stabilizes and features are quickly added.

Some storage arrays effectively make the RAID choices for you, by hiding the details and describing disks in terms of pools, volumes, aggregates, and so on. They abstract the physical layer and present the storage in a different way. This allows you to select disks on more user-friendly terms, while hiding the technical details. This approach may reduce the level of granularity that storage administrators are used to, but it also reduces complexity and arguably makes default decisions that are optimized for their purpose.

Basic RAID Storage Rules

The following are some additional basic rules you should follow in vSphere environments:

- Ensure that spares are configured to automatically replace failed disks.

- Consider the physical location of the hardware and the warranty agreement on replacements, because they will affect your RAID choices and spares policy.

- Follow the vendor's advice regarding RAID group sizes and spares.

- Consider the importance of the data, because the RAID type is the first defense against disk failures (but definitely shouldn't be the only defense). Remember that availability is always of paramount importance when you're designing any aspect of storage solutions.

- Replace failed disks immediately, and configure any phone-home options to alert you (or the vendor directly) as soon as possible.

- Use predictive failure detection if available, to proactively replace disks before they fail.

Estimating Capacity Requirements

Making initial estimates for your storage requirements can be one of the easier design decisions. Calculating how much space you really need depends on the tasks ahead of you. If you're looking at a full virtualization implementation, converting physical servers to VMs, there are various capacity planners available to analyze the existing environment. If the storage design is to replace an existing solution that you've outgrown, then the capacity needs will be even more apparent. If you're starting anew, then you need to estimate the average VM, with the flexibility to account for unusually large servers (file servers, mailbox servers, databases, and so on).

In addition to the VMDK disk files, several additional pieces need space on the datastores:

VMFS Capacity Limits

VMFS isn't the only storage option available for VMs, but it's by far the most popular. You can make different assumptions when using Network File System (NFS) datastores, and they will be discussed later in the chapter in “Choosing a Protocol.” Using raw device mapping disks (RDMs) to store VM data is another option, but this is out of the scope of this chapter. Chapter 7 will look at RDMs in more detail; for the purposes of capacity planning for a storage array, consider their use frugally and note the requirement for separate LUNs for each RDM disk where needed.

VMFS itself is described in a little more detail in the “vSphere Storage Features” section later in this chapter, but it's worth detailing the impact that it can have on the LUN sizing at this point. VMFS-5 volumes can be up to 64 TB in size (as opposed to their predecessor, which was ≈ 2 TB), which allows for very high consolidation ratios. Whereas previously, anyone who wanted very large datastores looked to NFS options (although concatenating VMFS extents was technically possible), now block storage can have massive datastores, removing another potential constraint from your design. In reality, other performance factors will likely mean that most datastores should be created much smaller than this.

With VMFS-3, extents could be used to effectively grow the smaller datastores up to 64 TB. Extents are a concatenation of additional partitions to the first VMFS partition. This is no longer required for this purpose, but extents still exist as a useful tool. With VMFS-5, 32 extents are possible. The primary use case for extents today is to nondisruptively grow a volume. This can be a lifesaver if your storage array doesn't support growing LUNs online, so you can expand the VMFS volume. Instead, you can create additional LUNs, present them to the same hosts that can see the first VMFS partition, and add them as extents.

There are technical arguments why extents can be part of a design. The stigma regarding extents arose partially because they were used in cases where planning didn't happen properly, and somewhat from the belief that they would cause performance issues. In reality, extents can improve performance when each extent is created on a new physical LUN, thereby reducing LUN queues, aiding multipathing, and increasing throughput. Any additional LUNs should have the same RAIDing and use similar disk types (same speed and IOPS capability).

However, despite any potential performance benefits, there are still pitfalls involving extents that make them difficult to recommend. You must take care when managing the LUNs on the array, because taking just one of the extent LUNs offline is likely to affect many (if not all) of the VMs on the datastore. When you add LUNs to the VMFS volume, data from VMs can be written across all the extents. Taking one LUN offline can crash all the VMs stored on the volume—and pray that you don't delete the LUN as well. Most midrange SANs can group LUNs into logical sets to prevent this situation, but it still remains a risk that a single corrupt LUN can affect more VMs than normal. The head LUN (the first LUN) contains the metadata for all the extents. This one is particularly important, because a loss of the head LUN corrupts the entire datastore. This LUN attracts all the SCSI reservation locks on a non-VAAI-backed LUN.

Datastore clusters are almost the evolution of the extent, without the associated risks. If you have the licensing for datastore clusters and Storage DRS, you shouldn't even consider using extents. You still get the large single storage management point (with individual datastores up to 64 TB) and multiple LUN queues, paths, and throughput.

The most tangible limit on datastores currently is the size of the VMDK disks, which can only be up to 2 TB (2 TB minus 512 KB, to be exact). VMDKs on NFS datastore are limited in the same way. If you absolutely must have individual disks larger than 2 TB, some workarounds are as follows:

- Use the VM's guest OS volume management, such as Linux LVM or Windows dynamic disks, to combine multiple VMDK disks to make larger guest volumes.

- Use physical RDMs, which can be up to 64 TB (virtual RDMs are still limited to 2 TB).

- Use in-guest mounting of remote IP storage, such as an iSCSI LUN via a guest initiator or a mounted NFS export. This technique isn't recommended because the storage I/O is considered regular network traffic by the hypervisor and so isn't protected and managed in the same way.

Large or Small Datastores?

Just how big should you make your datastores? There are no hard-and-fast rules, but your decision relies on several key points. Let's see why you would choose one or the other:

- You have fewer datastores and array LUNs to manage.

- You can create more VMs without having to make frequent visits to the array to provision new LUNs.

- Large VMDK disk files can be created.

- It allows more space for snapshots and future expansion.

- Having fewer VMs in each datastore means you have more granularity when it comes to RAID type.

- Disk shares can be apportioned more appropriately.

- ESXi hosts can use the additional LUNs to make better use of multipathing.

- Storage DRS can more efficiently balance the disk usage and performance across more datastores.

In reality, like most design decisions, the final solution is likely to be a sensible compromise of both extremes. Having one massive datastore would likely cause performance issues, whereas having a single datastore per VM would be too large an administrative overhead for most, and you'd soon reach the upper limit of 256 LUNs on a host.

The introduction of datastore clusters and Storage DRS helps to solve some of the conundrum regarding big or small datastores. These features can give many of the performance benefits of the smaller datastores while still having the reduced management overheads associated with larger datastores. We delve into datastore clusters and Storage DRS later in the chapter.

The size of your datastores will ultimately be impacted primarily by two elements:

vSphere 5 limits your datastores to a voluminous 2,048 VMs, but consider that more a theoretical upper limit and not the number of VMs around which to create an initial design. Look at your VMs, taking into account the previous two factors, and estimate a number of VMs per datastore that you're comfortable with. Then, multiply that number by your average estimated VM size. Finally, add a fudge factor of 25 percent to account for short-term growth, snapshots, and VM swap files, and you should have an average datastore size that will be appropriate for the majority of your VMs. Remember, you may need to create additional datastores that are specially provisioned for VMs that are larger, are more I/O intensive, need different RAID requirements, or need increased levels of protection.

Fortunately, with the advent of Storage vMotion, moving your VMs to different-sized datastores no longer requires an outage.

Thin Provisioning

The ability to thin-provision new VM disks from the vSphere client GUI was introduced in vSphere 4. You can convert existing VMs to thinly provisioned ones during Storage vMotions. Chapter 7 explains in more depth the practicalities of thin-provisioning VMs, but you need to make a couple of important design decisions when considering your storage as a whole.

Thin provisioning has been available on some storage arrays for years. It's one of the ways to do more for less, and it increases the amount of usable space from disks. Since the support of NFS volumes, thin provisioning has been available to ESXi servers. Basically, despite the guest operating system (OS) seeing its full allocation of space, the space is actually doled out only as required. This allows all the spare (wasted) space within VMs to be grouped together and used for other things (such as more VMs).

The biggest problem with any form of storage thin-provisioning is the potential for overcommitment. It's possible—and desirable, as long as it's controlled properly—to allocate more storage than is physically available (otherwise, what's the point?). Banks have operated on this premise for years. They loan out far more money than they have in their vaults. As long as everyone doesn't turn up at the same time wanting their savings back, everything is okay. If all the VMs in a datastore want the space owed to them at once, then you run into overcommitment problems. You've effectively promised more than is available.

To help mitigate the risk of overcommiting the datastores, you can use both the Datastore Disk Usage % and Datastore Disk Overallocation % alarm triggers in vSphere. Doing so helps proactively monitor the remaining space and ensures that you're aware of potential issues before they become a crisis. In the vSphere Client, you can at a glance compare the amounts provisioned against the amounts utilized and get an idea of how thinly provisioned your VMs are.

Many common storage arrays now support VMware's vStorage APIs for Array Integration (VAAI). This VAAI support provides several enhancements and additional capabilities, which are explained later in the chapter. But pertinent to the thin-provisioning discussion is the ability of VAAI-capable arrays to allow vSphere to handle thin provisioning more elegantly.

With VAAI arrays, vSphere 5 can also:

- Tell the array to reclaim dead space created when files are deleted from a datastore. Ordinarily, the array wouldn't be aware of VMs you deleted or migrated off a datastore via Storage vMotion (including Storage DRS). VAAI informs the array that those blocks are no longer needed and can be safely reclaimed. This feature must be manually invoked from the command line and is discussed later in the VAAI section.

- Provide better reporting and deal with situations where thin provisioning causes a datastore to run out of space. Additional advanced warnings are available by default (when they hit 75 percent full), and VMs are automatically paused when space is no longer available due to overcommitment.

The take-home message is, when planning to use thin provisioning on the SAN, look to see if your storage arrays are VAAI capable. Older arrays may be compatible but require a firmware upgrade to the controllers to make this available. When you're in the market for a new array, you should check to see if this VAAI primitive is available (some arrays offer compatibility with only a subset of the VAAI primitives).

Why do this? At any one time, much of the space allocated to VMs is sitting empty. You can save space, and therefore money, on expensive disks by not providing all the space at once. It's perfectly reasonable to expect disk capacity and performance to increase in the future and become less expensive, so thin provisioning is a good way to hold off purchases as long as possible. As VMs need more capacity, you can add it as required. But doing so needs careful monitoring to prevent problems.

Should You Thin-Provision Your VMs?

Sure, there are very few reasons not to do this, and one big, fat, money-saving reason to do it. As we said earlier, thin provisioning requires careful monitoring to prevent out-of-space issues on the datastores. vCenter has built-in alarms that you can easily configure to alert you of impending problems. The trick is to make sure you'll have enough warning to create more datastores or move VMs around to avert anything untoward. If that means purchasing and fitting more disks, then you'd better set the threshold suitably low.

As we've stated, there are a few reasons not to use vSphere thin provisioning:

- It can cause overcommitment.

- It prevents the use of eager-zeroed thick VMDK disks, which can increase write performance (Chapter 7 explains the types of VM disks in more depth).

- It creates higher levels of consolidation on the array, increasing the I/O demands on the SPs, LUNs, paths, and so on.

- Converting existing VMs to thin-provisioned ones can be time-consuming.

- You prefer to use your storage array's thin provisioning instead.

There are also some situations where it isn't possible to use thin-provisioned VMDK files:

- Fault-tolerant (FT) VMs

- Microsoft clustering shared disks

Does Thin Provisioning Affect the VM's Performance?

vSphere's thin provisioning of VM disks has been shown to make no appreciable difference to their performance, over default VMDK files (zeroed thick). It's also known that thin provisioning has little impact on file fragmentation of either the VMDK files or their contents. The concern primarily focused around the frequent SCSI locking required as the thin disk expanded; but this has been negated through the use of the new Atomic Test & Set (ATS) VAAI primitive, which dramatically reduces the occasions that LUN is locked.

If Your Storage Array Can Thin-Provision, Should You Do It on the Array, in vSphere, or Both?

Both array and vSphere thin provisioning should have similar results, but doing so on the array can be more efficient. Thin provisioning on both is likely to garner little additional saving (a few percent, probably), but you double the management costs by having to babysit two sets of storage pools. By thin-provisioning on both, you expedite the rate at which you can become oversubscribed.

The final decision on where to thin-provision disks often comes down to who manages your vSphere and storage environment. If both are operationally supported by the same team, the choice is normally swayed by the familiarity of the team with both tools. Array thin-provisioning is more mature, and arguably a better place to start; but if your team is predominantly vSphere focused and the majority of your shared storage is used by VMs, then perhaps this is where you should manage it. Who do you trust the most with operational capacity management issues—the management tools and processes of your storage team, or those of your vSphere team?

Data Deduplication

Some midrange and enterprise storage arrays offer what is known as data deduplication, often shortened to dedupe. This feature looks for common elements that are identical and records one set of them. The duplicates can be safely removed and thus save space. This is roughly analogous to the way VMware removes identical memory blocks in its transparent page sharing (TPS) technique.

The most common types of deduplication are as follows:

Deduplication can be done inline or post-process. Inline means the data is checked for duplicates as it's being written (synchronously). This creates the best levels of space reduction; but because it has a significant impact in I/O performance, it's normally used only in backup and archiving tools. Storage arrays tend to use post-process deduplication, which runs as a scheduled task against the data (asynchronously). Windows Server 2012's built-in deduplication is run as a scheduled task. Even post-process deduplication can tax the arrays' CPUs and affect performance, so you should take care to schedule these jobs only during times of lighter I/O.

It's also worth noting that thin provisioning can negate some of the benefits you see with block-level deduplication, because one of the big wins normally is deduplicating all the empty zeros in a file system. It isn't that you don't see additional benefits from using both together; just don't expect the same savings as you do on a thickly provisioned LUN or VMDK file.

Array Compression

Another technique to get more capacity for less on storage arrays is compression. This involves algorithms that take objects (normally files) and compress them to squash out the repeating patterns. Anyone who has used WinZip or uncompressed a tarball will be familiar with the concept of compression.

Compression can be efficient in capacity reduction, but it does have an impact on an array's CPU usage during compression, and it can affect the disk-read performance depending on the efficiency of the on-the-fly decompression. Traditionally the process doesn't affect the disk writes, because compression is normally done as a post process. Due to the performance cost, the best candidates for compression are usually low I/O sources such as home folders and archives of older files.

With the ever-increasing capabilities of array's CPUs, more efficient compression algorithms, and larger write caches, some new innovative vendors can now compress their data inline. Interestingly, this can improve write performance, because only compressed data is written to the slower tiers of storage. The performance bottleneck on disk writes is usually the point when the data has to be written to disk. By reducing the amount of writes to the spinning disks, the effective efficiency can be increased, as long as the CPUs can keep up with processing the ingress of data.

Downside of Saving Space

There is a downside to saving space and increasing your usable capacity? You may think this is crazy talk; but as with most design decisions, you must always consider the practical impacts. Using these newfangled technological advances will save you precious GB of space, but remember that what you're really doing is consolidating the same data but using fewer spindles to do it. Although that will stave off the need for more capacity, you must realize the potential performance repercussions. Squeezing more and more VMs onto a SAN puts further demands on limited I/O.

Designing for Performance

Often, in a heavily virtualized environment, particularly one that uses some of the space-reduction techniques just discussed, a SAN will hit performance bottlenecks long before it runs out of space. If capacity becomes a problem, then you can attach extra disks and shelves. However, not designing a SAN for the correct performance requirements can be much more difficult to rectify. Upgrades are usually prohibitively expensive, often come with outages, and always create an element of risk. And that is assuming the SAN can be upgraded.

Just as with capacity, performance needs are a critical part of any well-crafted storage design.

Measuring Storage Performance

All the physical components in a storage system plus data characteristics combine to provide the resulting performance. You can use many different metrics to judge the performance levels of a disk and the storage array, but the three most relevant and commonly used are as follows:

How to Calculate a Disk's IOPS

To calculate the potential IOPS from a single disk, use the following equation:

IOPS = 1 / (rotational latency + average read/write seek time)

For example, suppose a disk has the following characteristics:

If you expect the usage to be around 75 percent reads and 25 percent writes, then you can expect the disk to provide an IOPS value of

1 / (0.002 + 0.00425) = 160 IOPS

What Can Affect a Storage Array's IOPS?

Looking at single-disk IOPS is relatively straightforward. However, in a vSphere environment, single disks don't normally provide the performance (or capacity or redundancy) required. So, whether the disks are local DAS storage or part of a NAS/SAN device, they will undoubtedly be aggregated together. Storage performance involves many variables. Understanding all the elements and how they affect the resulting IOPS available should clarify how an entire system will perform.

Disks

The biggest single effect on an array's IOPS performance comes from the disks themselves. They're the slowest component in the mix, with most disks still made from mechanical moving parts. Each disk has its own physical properties, based on the number of platters, the rotational speed (RPMs), the interface, and so on; but disks are predicable, and you can estimate any disk's IOPS.

The sort of IOPS you can expect from a single disk is shown in Table 6.2.

Table 6.2 Average IOPS per disk type

| RPM | IOPS |

| SSD (SLC) | 6,000–50,000 |

| SSD (MLC) | 1,000+ (modern MLC disks vary widely; check the disk specifications and test yourself) |

| 15 K (normally FC/SAS) | 180 |

| 10 K (normally FC/SAS) | 130 |

| 7.2 K (normally SATA) | 80 |

| 5.4 K (normally SATA) | 50 |

Solid-state drive (SSD) disks, sometimes referred to as flash drives, are viable options in storage arrays. Prices have dropped rapidly, and most vendors provide hybrid solutions that include them in modern arrays. The IOPS value can vary dramatically based on the generation and underlying technology such as multi-level cell (MLC) or the faster, more reliable single-level cell (SLC). If you're including them in a design, check carefully what sort of IOPS you'll get. The numbers in Table 6.2 highlight the massive differential available.

Despite the fact that flash drives are approximately 10 times the price of regular hard disk drives, they can be around 50 times faster. So, for the correct usage, flash disks can provide increased efficiency with more IOPS/$. Later in this section, we'll explore some innovative solutions using flash drives and these efficiencies.

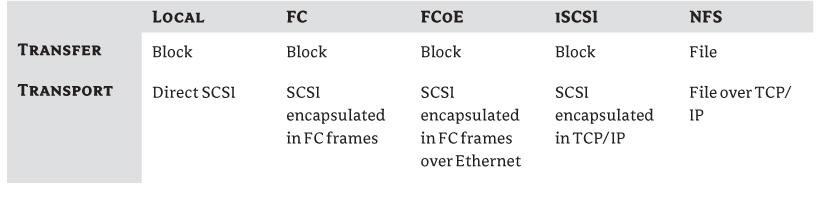

RAID Configuration

Creating RAID sets not only aggregates the disks' capacity and provides redundancy, but also fundamentally changes their performance characteristics (see Figure 6.2):

Figure 6.2 Performance examples

Interfaces

The interface is the physical connection from the disks. The disks may be connected to a RAID controller in a server, a storage controller, or an enclosure's backplane. Several different types are in use, such as IDE, SATA, SCSI, SAS, and FC, and each has its own standards with different recognized speeds. For example, SATA throughput is 1.5 Gbps, SATA II is backward compatible but qualified for 3 Gbps, and SATA III ups this to 6 Gbps.

Controllers

Controllers sit between the disks and servers, connected via the disk (and enclosure) interfaces on one side and the connectors to the server on the other. Manufacturers may refer to them as controllers, but the terms SPs and heads are often used in SAN hardware. Redundancy is often provided by having two or more controllers in an array.

Controllers are really mini-computers in themselves, running a customized OS. They're responsible for most of the special features available today, such as deduplication, failover, multipathing, snapshots, replication, and so on. Onboard server controllers and SAN controllers present their storage as block storage (raw LUNs), whereas NAS devices present their storage as a usable file system such as NFS. However, the waters become a little murky as vendors build NAS facilities into their SANs and vice versa.

Controllers almost always use an amount of non-volatile memory to cache the data before destaging it to disk. This memory is orders of magnitude faster than disks and can significantly improve IOPS. The cache can be used for writes and reads, although write cache normally has the most significance. Write cache allows the incoming data to be absorbed very quickly and then written to the slower disks in the background. However, the size of the cache limits its usefulness, because it can quickly fill up. At that point, the IOPS are again brought down to the speed of the disks, and the cache needs to wait to write the data out before it can empty itself and be ready for new data.

Controller cache helps to alleviate some of the effect of RAID write penalties mentioned earlier. It can collect large blocks of contiguous data and write them to disk in single operation. The earlier RAID calculations are often changed substantially by controllers; they can have a significant effect on overall performance.

Transport

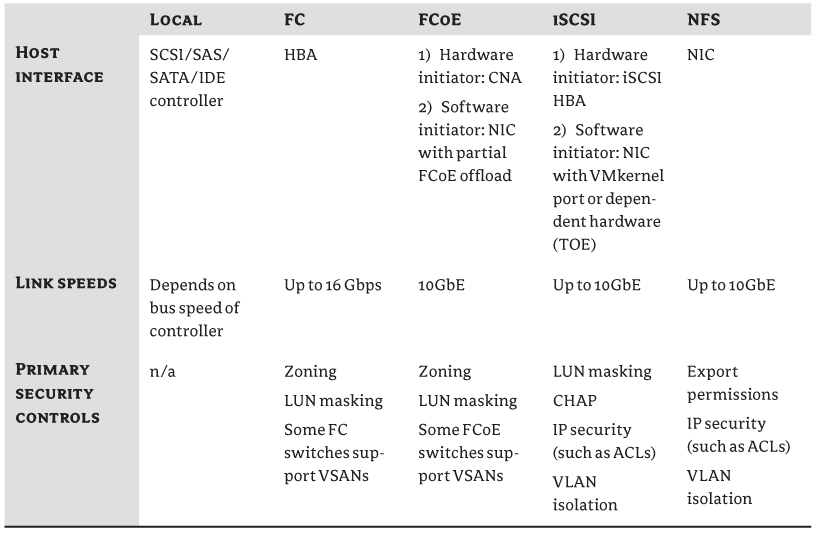

The term transport in this instance describes how data gets from the servers to the arrays. If you're using a DAS solution, this isn't applicable, because the RAID controller is normally mounted directly to the motherboard. For shared storage, however, a wide variety of technologies (and therefore design decisions) are available. Transport includes the protocol, the topology, and the physical cables/connectors and any switching equipment used. The protocol you select determines the physical aspects, and you can use a dizzying array of methods to get ones and zeros from one rack to another.

Later in the chapter in “Choosing a Protocol,” we'll examine the types of protocols in more depth, because it's an important factor to consider when you're designing a storage architecture. Each protocol has an impact on how to provide the required redundancy, multipathing options, throughput, latency, and so on. But suffice it to say, the potential storage protocols that are used in a vSphere deployment are Fibre Channel (FC), FCoE, iSCSI, and NFS.

Other Performance Factors to Consider

In addition to the standard storage components we've mentioned, you can customize other aspects to improve performance.

Queuing

Although block storage, array controllers, LUNs, and host bus adapters (HBAs) can queue data, there can still be a bottleneck from outstanding I/O. If the array can't handle the level of IOPS, the queue fills faster than it can drain. This queuing causes latency, and excessive amounts can be very detrimental to overall performance. When the queue is full, the array sends I/O-throttling commands back to the host's HBAs to slow down the traffic. The amount of queuing, or queue depth, is usually configurable on devices and can be optimized for your requirements. The QUED column in esxtop shows the queuing levels in real time.

Each LUN gets its own queue, so changes to HBA queue depths can affect multiple LUN queues. If multiple VMs are active on a LUN, you also need to update the Disk.SchedNumReqOutstanding value. This is the level of active disk requests being sent to the LUN by the VMs. Normally, that value should equal the queue-depth number. (VMware's Knowledge Base article 1267 explains how to change these values: http://kb.vmware.com/kb/1267.)

The default queue-depth settings are sufficient for most use cases. However, if you have a small number of very I/O-intensive VMs, you may benefit from increasing the queue depth. Take care before you decide to change these values; it's a complex area where good intentions can lead to bigger performance issues. Increasing queue depth on the hosts unnecessarily can create more latency than needed. Often, a more balanced design, where VM loads are spread evenly across HBAs, SPs, and LUNs, is a better approach than adjusting queue-depth values. You should check the array and the HBA manufacturer's documentation for their recommendations.

Partition Alignment

Aligning disk partitions can make a substantial difference—up to 30 percent in the performance of some operations. When partitions are aligned properly, it increases the likelihood that the SAN controller can write a full stripe. This reduces the RAID write penalty that costs so much in terms of IOPS.

You need to address partition alignment on vSphere in two areas: the VMFS volume and the guest OS file system. When you create VMFS datastores from within the vSphere client, it aligns them automatically for you. In most cases, local VMFS isn't used for performance-sensitive VMs; but if you're planning to use this storage for such tasks, you should create the partition in the client.

The most likely place where partitions aren't aligned properly is in the guest OSes of the VMs. Chapter 7 will have a more in-depth examination of this topic and how to align or realign a VM's partitions.

Workload

Every environment is different, and planning the storage depends on what workloads are being generated. You can optimize storage for different types of storage needs: the ratio of reads to writes, the size of the I/O, and how sequential or random the I/O is.

Writes always take longer than reads. Individual disks are slower to write data than to read them. But more important, the RAID configurations that have some sort of redundancy always penalize writes. As you've seen, some RAID types suffer from write penalties significantly more than others. If you determine that you have a lot of writes in your workloads, you may attempt to offset this with a larger controller cache. If, however, you have a negligible number of writes, you may choose to place more importance on faster disks or allocate more cache to reads.

The size of I/O requests varies. Generally speaking, larger requests are dealt with more quickly than small ones. You may be able to optimize certain RAID settings on the array or use different file-system properties.

Sequential data can be transferred to disk more quickly than random data because the disk heads don't need to move around as much. If you know certain workloads are very random, you can place them on the faster disks. Alternatively, most controller software attempts to derandomize the data before it's destaged from cache, so your results may vary depending on the vendor's ability to perform this efficiently.

VMs

Another extremely important aspect of your design that impacts your storage performance is the VMs. Not only are they the customers for the storage performance, but they also have a role to play in overall speed.

Naturally, this will be discussed in more depth in Chapter 7, but it's worth noting the effect it can have on your storage design. How you configure a VM affects its storage performance but can also affect the other VMs around it. Particularly I/O-intensive VMs can affect other VMs on the same host, datastore (LUN), path, RAID set, or controller. If you need to avoid IOPS contention for a particular VM, you can isolate it, thus guaranteeing it IOPS. Alternatively, if you wish to reduce the impact of I/O from VMs on others, you can spread the heavy hitters around, balancing the load. Chapter 8, “Datacenter Design,” also looks at how disk shares can spread I/O availability.

We've already mentioned guest OS alignment, but you can often tune the guest OS to the environment for your storage array. The VM's hardware and drivers also have an impact on how it utilizes the available storage. How the data is split across VMDKs, whether its swapfile is segregated to a separate VMDK, and how the balance of different SAN drive types and RAIDing are used for different VM disks all affect the overall storage design.

vSphere Storage Performance Enhancements

Later in the chapter, we look at the result of several vSphere technologies that can have an impact on the performance of your VMs. Features such as Storage I/O Control (SIOC), VAAI, and Storage DRS can all improve the VMs' storage performance. Although these don't directly affect the array's performance per se, by optimizing the VMs' use of the array, they provide a more efficient and overall performant system.

Newer Technologies to Increase Effective IOPS

Recently, many SAN vendors have been looking at ways to improve the performance of their arrays. This is becoming important as the density of IOPS required per disk has risen sharply. This jump in the need for IOPS is partly because of the consolidation that vSphere lends itself to, and partly due to advancements in capacity optimizations, such as deduplication.

Write Coalescing

Coalescing is a function of most SPs to improve the effective IOPS. It attempts to take randomized I/O in the write cache and reorganize it quickly into more sequential data. This allows it to be more efficiently striped across the disks and cuts down on write latency. By its very nature, coalescing doesn't help optimize disk reads, so it can only help with certain types of I/O.

Large Cache

Today's controller cache can vary from around 256 MB on a server's RAID controller to hundreds of gigabytes on larger enterprise SANs.

Some SAN vendors have started to sell add-on cards packed with terabytes of nonvolatile memory. These massive cache cards are particularly helpful in situations where the data is being compressed heavily and IOPS/TB are very high. A good example is virtual desktop infrastructure (VDI) workloads such as VMware View deployments.

Another approach is to augment the existing controller cache with one or more flash drives. These aren't as responsive as the onboard memory cache, but they're much less expensive and can still provide speeds that are exponentially (at least 50 times) more than the SAS/SATA disks they're cache for. This relatively economical option means you can add terabytes of cache to SANs.

These very large caches are making massive improvements to storage arrays' IOPS. But these improvements can only be realized in certain circumstances, and it's important that you consider your own workload requirements.

The one criticism of this technique is that it can't preemptively deal with large I/O requests. Large cache needs a period of time to warm up when it's empty, because although you don't want to run out of cache, it isn't very useful if it doesn't hold the data you're requesting. After being emptied, it takes time to fill with suitable requested data. So, for example, even though SP failover shouldn't affect availability of your storage, you may find that performance is heavily degraded for several hours afterward as the cache refills.

Cache Prefetch

Some controllers can attempt to prefetch data in their read caches. They look at the blocks that are being requested and try to anticipate what the next set of blocks might be, so they're ready if a host subsequently requests it. Vendors use various algorithms, and cache prefetch relies on the sort of workloads presented to it. Some read the next set of blocks; others do it based on previous reads. This helps to deliver the data directly from the cache instead of having to wait for slower disks, thus potentially improving response time.

Cache Deduplication

Cache deduplication does something very similar to disk deduplication, in that it takes the contents of the cache's data and removes identical blocks. It effectively increases the cache size and allows more things to be held in cache. Because cache is such a critical performance enhancement, this extra cache undoubtedly helps improve the array's performance. Cache deduplication can be particularly effective when very similar requests for data are being made, such as VDI boot storms or desktop recomposes.

Tiering

Another relatively new innovation on midrange and enterprise SANs is the tiering of disks. Until recently, SANs came with 10 K or 15 K drives. This was the only choice, along with whatever RAIDing you wanted to create, to divide the workload and create different levels of performance. However, SATA disks are used increasingly, because they have large capacity and are much less expensive. Add to that the dramatic drop in prices for flash drives, which although smaller provide insane levels of performance, and you have a real spread of options. All of these can be mixed in different quantities to provide both the capacity and the performance required.

Initially, only manual tiering was available: SAN administrators created disk sets for different workloads. This was similar to what they did with drive speeds and different types of RAID. But now you have a much more flexible set of options with diverse characteristics.

Some storage arrays have the ability to automate this tiering, either at the LUN level or down to the block level. They can monitor the different requests and automatically move the more frequently requested data to the faster flash disks and the less requested to the slower but cheaper SATA disks. You can create rules to ensure that certain VMs are always kept on a certain type of disk, or you can create schedules to be sure VMs that need greater performance at set times are moved into fast areas in advance.

Automatic tiering can be very effective at providing extra IOPS to the VMs that really need it, and only when they need it. Flash disks help to absorb the increase in I/O density caused by capacity-reduction techniques. Flash disks reduce the cost of IOPS, and the SATA disks help bring down the cost of the capacity.

Host-Based Flash Cache

An increasingly popular performance option is host-based caching cards. These are PCIe-based flash storage, which due to the greater throughput available on the PCIe bus are many times faster than SATA- or SAS-based SSD flash drives. At the time of writing, the cards offer hundreds of GBs of storage but are largely read cache. Current market examples of this technology are the Fusion-io cards and EMC's VFCache line.

Host-based flash cache is similar to the large read cache options that are available on many of the mainstream storage arrays, but being host-based the latency is extremely low (measured in microseconds instead of milliseconds). The latency is minimal because once the cache is filled, the requests don't need to traverse the storage network back to the SAN. However, instead of centralizing your large read cache in front of your array, you're dispersing it across multiple servers. This clearly has scalability concerns, so you need to identify the top-tier workloads to run on a high performance cluster of servers. Currently the majority of the PCIe cards sold are only for rack servers; blade-server mezzanine cards aren't generally available, so if an organization is standardizing on blades, it needs to make exceptions to introduce this technology or wait until appropriate cards become available.

Most PCIe flash-based options are focused as read-cache devices. Many offer write-through caching; but because most use nonpersistent storage, it's advisable to only use this write cache for ephemeral data such as OS swap space or temporary files. Even if you trust this for write caching, or the device has nonvolatile storage, it can only ever act as a write buffer to your back-end storage array. This is useful in reducing latency and absorbing peaks but won't help with sustained throughput. Buffered writes eventually need to be drained into the SAN; and if you saturate the write cache, your performance becomes limited by the underlying ingest rate of the storage array.

PCIe flash-based cache is another option in the growing storage tier mix. It has the potential to be very influential if the forthcoming solutions can remain array agnostic. If it's deeply tied to a vendor's own back-end SAN, then it will merely be another tiered option. But if it can be used as a read cache for any array, then this could be a boon for customers who want to add performance at levels normally available only in the biggest, most expensive enterprise arrays. Eventually, PCIe flash read caches are likely to be overtaken by the faster commodity RAM-based software options, but it will be several years before those are large enough to be beneficial for wide-scale uptake. In the meantime, as prices drop, PCIe cards and their driver and integration software will mature, and the write-buffering options will allow them to develop into new market segments.

RAM-Based Storage Cache

A new option for very high I/O requirements is appliances that use server RAM to create a very fast read cache. This is particularly suitable for VDI workloads where the desktop images are relatively small in size, are good candidates for in-cache deduplication, but generate a lot of I/O. The entire desktop image can be cached in RAM (perhaps only 15 GB worth for a Windows 7 image), and all the read requests can be served directly from this tier. This can be a dedicated server for caching or a virtual appliance that grabs a chunk of the ESXi server's RAM. RAM is orders of magnitude faster than local SAS/SATA SSD or even PCIe flash, so performance is extremely impressive and helps to reduce the high IOPS required of the shared storage. Atlantis's ILIO software is an example of such a product.

With vSphere 5.0, VMware introduced a host capability called content based read cache (CBRC) but opted to keep it disabled by default. When VMware View 5.1 was released about six months later, View had a feature called View Storage Accelerator that enabled and utilized the CBRC. CBRC is VMware's answer to RAM-based storage cache. It keeps a deduplicated read cache in the host server's RAM, helping to deliver a faster storage response and absorbing the peaks associated with VDI workloads.

Server memory cache will only ever provide a read-cache option due to the volatile nature of RAM. Read caches for VDI are useful in reducing peaks, particular in boot-storm type scenarios, but VDI is an inherently write-intensive workload. The argument goes that if you're offloading huge chunks of reads, the back-end storage arrays can concentrate on write workloads. VDI is becoming the poster child of read-cache options, because of the relatively small capacity requirements but high IOPS required. Anyone who has tried to recompose hundreds of desktop VMs on an underscaled array knows how painful a lack of horsepower can be.

Although RAM continues to drop in price and grow in capacity, it will always be an expensive GB/$ proposition compared to other local flash-based storage. It will be interesting to see how valuable RAM cache becomes for more generalized server workloads as it becomes feasible to allocate more sizable amounts of RAM as a read-cache option. The ability of centralized storage arrays to deal more efficiently with heavy write loads will become increasingly crucial.

Measuring Your Existing IOPS Usage

When you know what affects the performance of your storage and how you can improve the design to suit your environment, you should be able to measure your current servers and estimate your requirements.

Various tools exist to measure performance:

- Iometer (www.iometer.org/) is an open source tool that can generate different workloads on your storage device. It lets you test your existing storage or generate suitable workloads to test new potential solutions.

- To monitor existing VMs, start with the statistics available in the vSphere client. You can also use esxtop to look at the following statistics:

- DAVG—Disk latency at the array

- KAVG and QUED—Queue-depth statistics showing latency at the VMkernel

- For very in-depth VM monitoring, the vscsiStats tool provides a comprehensive toolset for storage analysis.

- Windows VMs and physical servers can monitor IOPS with the perfmon tool. Just add the counters Disk Reads/sec and Disk Writes/sec from the Physical Disk performance objects to view the IOPS in real time. These can then be captured to a CSV file so you can analyze typical loads over time.

- Linux/Unix VMs and physical servers can use a combination of top and iostat to perform similar recordings of storage usage.

When you're testing VMs, it's worth noting that the hypervisor can create some inaccuracies in guest-based performance tools such as perfmon due to timing issues, especially when the CPU is under strain. Remember to take into account the requirements of nonvirtual servers that may use the same storage, because they may affect the performance of the VMs.

vSphere has added new host and VM performance metrics in both the vSphere Client and in esxtop/resxtop. These additional statistics cover both real-time and trending in vCenter and bring the NFS data on par with the existing block-based support. To make the most of the tools, use the latest host software available.

Local Storage vs. Shared Storage

Shared storage, aka SANs or NAS devices, have become so commonplace in vSphere deployments that local storage is often disregarded as an option. It's certainly true that each new release of VMware's datacenter hypervisor layers on more great functionality that takes advantage of shared storage. But local storage has its place and can offer tangible advantages. Each design is different and needs to be approached with an open mind. Don't dismiss local storage before you identify the real needs of your company.

Local Storage

Local storage, or DAS, can come in several forms. Predominantly, we mean the disks from which you intend to run the VMs, mounted as VMFS datastores. These disks can be physically inside or attached to the host's disk bays. The disks can also be in a separate enclosure connected via a SCSI cable to an external-facing SCSI card's connector. Even if externally mounted, it's logically still local host storage. With local storage, you can mount a reasonable amount of capacity via local SCSI.

You can install vSphere 5 locally on SCSI, SAS, and SATA disks or USB flash drives (including SD cards), although your mileage may vary if the disk controller isn't listed on VMware's approved HCL. Theoretically you can use any of them for local storage for VMs, but clearly USB/SD flash storage was only meant to load the ESXi OS and not to run VMs.

First, let's identify more clearly when you don't want to deploy VMs on local storage. Certain features need storage that multiple hosts can access; if these will be part of your solution, you'll need at least some shared storage. Make no mistake, there are definite advantages to using shared storage (hence its overwhelming popularity):

- Local storage can't take advantage of DRS. Although enhancements in vSphere 5.1 mean that shared storage is no longer a requirement for vMotion, DRS still won't move VMs on local storage.

- High availability (HA) hosts need to be able to see the same VMs to recover them when a protected host fails.

- FT hosts need a second host to access the same VM, so it can step in if the first host fails.

- You can manage storage capacity and performance as a pool of resources across hosts, the same way host clusters can pool compute resources. SIOC, Storage DRS, and Policy-Driven Storage features discussed later in the chapter demonstrate many of the ways that storage can be pooled this way.

- RDM disks can't use local storage. In turn, this excludes the use of Microsoft clustering across multiple hosts.

- When you use shared storage, you can recover from host failures far more easily. If you're using local storage and a server fails for whatever reason, the VMs will be offline until the server can be repaired. This often means time-consuming backup restores. With shared storage, even without the use of HA, you can manually restart the VMs on another cluster host.

- Local storage capacity is limited by several factors, including the size of the SCSI enclosures, the number of SCSI connectors, and the number of datastores. Generally, only so many VMs can be run on a single host. As the number of hosts grows, the administrative overhead soon outweighs the cost savings.

- Performance may also be limited by the number of spindles available in constrained local storage. If performance is an issue, then SSD can be adopted locally, but it's expensive to use in more than a handful of servers and capacity becomes a problem again.

- With shared storage, it's possible to have a common store for templates and ISOs. With local storage, each host needs to have a copy of each template.

- It's possible with shared storage to run ESXi from diskless servers and have them boot from SAN. This effectively makes the hosts stateless, further reducing deployment and administrative overheads.

With all that said, local storage has some advantages of its own. If you have situations where these features or benefits aren't a necessity, then you may find that these positives create an interesting new solution:

- Far and away the greatest advantage of local storage is the potential cost savings. Not only are local disks often cheaper, but an entire shared-storage infrastructure is expensive to purchase and maintain. When a business is considering shared storage for a vSphere setup, this may be the first time it has ventured into the area. The company will have the initial outlay for all the pieces that make up a SAN or NAS solution, will probably have training expenses for staff, and may even need additional staff to maintain the equipment. This incipient cost can be prohibitive for many smaller companies.

- Local storage is usually already in place for the ESXi installable OS. This means there is often local space available for VMFS datastores, or it's relatively trivial to add extra disks to the purchase order for new servers.

- The technical bar for implementing local storage is very low in comparison to the challenges of configuring a new SAN fabric or a NAS device/server.

- Local storage can provide good levels of performance for certain applications. Although the controller cache is likely to be very limited in comparison to modern SANs, latency will be extremely low for obvious reasons.

- You can use local storage to provide VMFS space for templates and ISO files. Using local storage does have an impact. Localized templates and ISOs are available only to that host, which means all VMs need to be built on the designated host and then cold-migrated to the intended host.

- You can also use local storage for VM's swap files. This can save space on the more expensive shared storage for VMs. However, this approach can have an effect on DRS and HA if there isn't enough space on the destination hosts to receive migrated VMs.

- Many of the advanced features that make shared storage such an advantage, such as DRS and HA, aren't available on the more basic licensing terms. In a smaller environment, these licenses and hence the features may not be available anyway.

- Local storage can be ideal in test lab situations, or for running development and staging VMs, where redundancy isn't a key requirement. Many companies aren't willing to pay the additional costs for these VMs if they're considered nonessential or don't have SLAs around their availability.

vSphere 5.1's vMotion enhancements, which allow VMs on local disks to be hot migrated, reaffirm that local VMFS storage can be a valid choice in certain circumstances. Now hosts with only local storage can be patched and have scheduled hardware outages with no downtime using vMotion techniques. Local storage still has significant limitations such as no HA or DRS support, but if the budget is small or the requirements are low then this may still be a potential design option.

What about Local Shared Storage?

Another storage possibility is becoming increasing popular in some environments. There are several different incarnations, but they're often referred to as virtual SANs, virtual NAS, or virtual storage devices. They use storage (normally local) and present it as a logical FC, iSCSI, or NFS storage device. Current marketplace solutions include VMware's own VSA (see sidebar), HP's LeftHand, StarWind Software, and NexentaVSA.

VSA Appliances

VSA Requirements

- Minimum 6 GB of RAM (24 GB is recommended)

- At least four network adapters (no jumbo frames)

VSA Performance

- Speed of the disks

- Number of disks

- RAID type

VSA Appliances

VSA Requirements

- Minimum 6 GB of RAM (24 GB is recommended)

- At least four network adapters (no jumbo frames)

VSA Performance

- Speed of the disks

- Number of disks

- RAID type

- Local RAID controller (the size of the onboard write cache is particularly relevant)

- Speed/quality of the replication network (VSA's internode host-mirrored disk replication is synchronous, which means VM disk writes aren't acknowledged until they're written to both copies)

VSA Design Considerations

- Eight 3 TB disks in RAID 6 with no hot spare. This provides 18 TB of usable space on a two host cluster or 27 TB across three hosts (each datastore must have room to be mirrored once).

- Twenty-eight 2 TB disks in RAID 6 (sixteen of them in an external enclosure in their own RAID 6 group) with no hot spare. This provides 24 TB of usable space on a two host cluster or 36 TB across three hosts.

Virtual arrays allow you to take advantage of many of the benefits of shared-storage devices with increased VMware functionality but without the cost overheads of a full shared-storage environment. Multiple hosts can mount the same LUNs or NFS exports, so the VMs appear on shared storage and can be vMotioned and shared among the hosts. Templates can be seen by all the hosts, even if they're stored locally.

But remember that these solutions normally still suffer from the same single-point-of-failure downsides of local storage. There are products with increasing levels of sophistication that allow you to pool several local-storage sources together and even cluster local LUNs into replica failover copies across multiple locations.

Several storage vendors also produce cut-down versions of their SAN array software installed within virtual appliances, which allow you to use any storage to mimic their paid-for storage devices. These often have restrictions and are principally created so that customers can test and become familiar with a vendor's products. However, they can be very useful for small lab environments, allowing you to save on shared storage but still letting you manage it the same way as your primary storage.

Additionally, it's feasible to use any server storage as shared resources. Most popular OSes can create NFS exports, which can be used for vSphere VMs. In fact, several OSes are designed specifically for this purpose, such as the popular Openfiler project (www.openfiler.com) and the FreeNAS project (http://freenas.org). These sorts of home-grown shared-storage solutions certainly can't be classed as enterprise-grade solutions, but they may give you an extra option for adding shared features when you have no budget. If your plan includes regular local storage, then some virtualized shared storage can enhance your capabilities, often for little or no extra cost.

Shared Storage