Chapter 9

Designing with Security in Mind

In this chapter, we'll change our point of view and look at a design from the perspective of a malicious user. We won't say hacker, because typically your virtual infrastructure isn't exposed to the outside world. Because this book isn't about how to protect your perimeter network from the outside world, but about vSphere design, we'll assume the security risks that need to be mitigated come primarily from the inside. We'll discuss these topics:

- The importance of security in every aspect of your environment

- Potential security risks and their mitigating factors

Why Is Security Important?

We're sure you don't need someone to explain the answer to this question. Your personal information is important to you. Your company's information is no less important to your company. In some lines of business, the thought of having information leak out into the public is devastating. Consider the following theoretical example. Your company is developing a product and has a number of direct competitors on the market. Due to a security slip, the schematics for a new prototype of your product have found their way out of the company. Having the schematics in the open can and will cause huge damage to the company's reputation and revenue. There's a reason that security is one of the five principles of design that we've discussed throughout this book.

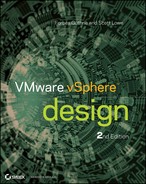

At VMworld 2010, the slide in Figure 9.1 was presented, showing that the number of virtual OS instances is larger than the number deployed on physical hardware—and that number is only expected to rise.

Figure 9.1 The number of VMs deployed will only increase in the future.

Your corporate databases will run as VMs, your application servers will be virtual, and your messaging servers will also run as VMs. Perhaps all of these are already running as VMs in your environment. There is also a good chance that in the not-too-distant future, your desktop and perhaps even your mobile phone will be VMs (or, at the very least, will host one or more VMs). You need to ensure that the data stored on all these entities is as safe and secure as it can possibly be.

Because this chapter focuses specifically on security, we'll discuss these top-level security-related topics:

- Separation of duties

- vCenter Server permissions

- Security in vCenter Linked Mode

- Command-line access to ESXi hosts

- Managing network access

- The demilitarized zone (DMZ)

- Protecting the VMs

- Change management

- Protecting your data

- Auditing and compliance

In each section, we'll provide an example risk scenario followed by recommendations on mitigating that potential risk. Let's start with a discussion on the separation of duties.

Separation of Duties

A centralized model of administration can be a good thing but can also be a major security risk.

Risk Scenario

John, your VI Admin, has all the keys to your kingdom. In Active Directory, John has Domain Admin credentials. He also has access to all the network components in your enterprise. He has access to the physical datacenter where all the ESXi hosts are located. He has access to the backend storage where all your VMs are located and to all of the organization's Common Internet File System (CIFS) and Network File System (NFS) shares that store the company data.

And now John finds out that he will be replaced/retired. You can imagine what a huge security risk this may turn out to be. John could tamper with network settings on the physical switches. He could remove all access control lists (ACLs) on the network components and cause damage by compromising the corporate firewall, exposing information to the outside, or perhaps even opening a hole to allow access into the network at a later date.

John could also tamper with domain permissions. He could change passwords on privileged accounts and delete or tamper with critical resources in the domain.

John could access confidential information stored on the storage array, copy it to an external device, and sell it to the competitors.

John could then access the vCenter Server, power off several VMs (including the corporate mail server and domain controllers), and delete them. Before virtualization, John would have had to go into the datacenter and start a fire to destroy multiple servers and OSes. In the virtual infrastructure, it's as simple as marking all the VMs and then pressing Delete: there go 200 servers. Just like that. Clearly, this isn't a pretty situation.

Risk Mitigation

Nightmare scenarios like the one we just described are the reason not to give the keys to the kingdom to one person or group. As we've discussed elsewhere, part of a comprehensive vSphere design is a consideration of the operational issues and concerns, including separation of duties and responsibilities.

Ironically, the larger the environment, the easier it becomes to separate duties across different functions. You reach a stage where one person can't manage storage, the domain, the network, and the virtual infrastructure on their own. The sheer volume of time needed for all the different roles makes it impossible.

Setting up dedicated teams for each function has certain benefits, but also some drawbacks:

- Each team manages its own realm and can access other realms but doesn't have super-user rights in areas not under its control. Active Directory admins don't have full storage rights, network admins don't have Domain Admin privileges, and so on.

- Having only one person dealing with the infrastructure doesn't allow for out-of-the-box thinking. The same person could be the one who designed and implemented the entire infrastructure and has been supporting it since its inception. Therefore, this person could be deep in a certain trail of thought, making it extremely difficult to think in new ways.

- Structuring your IT group according to different technology areas furthers the IT silos that are common in many organizations. This can inhibit teamwork and cooperation between the different groups and impair the IT department's ability to respond quickly and flexibly to changing business needs.

Although ensuring a proper balance in the separation of duties is primarily an operational issue, there are technical aspects to this discussion as well. For example, vCenter Server offers role-based access controls that help with the proper separation of duties. Some of the potential security concerns—and mitigations—for the use of vCenter Server's role-based access controls are described in the next section.

vCenter Server Permissions

vCenter Server's role-based access controls give you extensive command over the actions you can perform on almost every part of your infrastructure. This presents some challenges if these access controls aren't designed and implemented carefully.

Risk Scenario

Bill is a power user. He has a decent amount of technical knowledge, knows what VMware is, and knows what benefits can be reaped with virtualization. Unfortunately, with knowledge come pitfalls. Bill was allocated a VM with one vCPU and 2 GB RAM. The VM was allocated a 50 GB disk on Tier-2 storage. You delegated the Administrator role to Bill on the VM because he asked to be able to restart the machine if needed.

Everything runs fine until one day you notice that the performance of one of your ESXi hosts has degraded drastically. After investigating, you find that one VM has been allocated four vCPUs, 16 GB RAM, and three additional disks on Tier-1 storage. The storage allocated for this VM has tripled in size because of snapshots taken on the VM. As a result, you're low on space on your Tier-1 storage. This VM has been assigned higher shares than all others (even though it's a test machine). Because of the contention this VM caused, your other production VMs suffered a performance hit and were unavailable for a period of time.

Risk Mitigation

This example could have been a lot worse. The obvious reason this happened was bad planning and a poor use of vCenter Server's access controls.

Let's make an analogy to a physical datacenter packed with hundreds of servers. Here are some problems you might encounter. Walking around the room, you can do the following in front of a server:

- Open a CD drive and put in a malicious CD.

- Plug in a USB device.

- Pull out a hard disk (or two or three).

- Connect to the VGA port, and see what's happening on the screen.

- Switch the hard disk order.

- Reset the server.

- Power off the server.

Going around to the back of the server, you can do the following:

- Connect or disconnect a power cable.

- Connect or disconnect a network port.

- Attach a serial device.

- Attach a USB device.

- Connect to the VGA port, and see what's happening on the screen.

As you can see from this list, a lot of bad things could happen. Just as you wouldn't allow users to walk into your datacenter, open your keyboard/video/mouse (KVM) switch, and log in to your servers, you shouldn't allow users access to your vSphere environment unless they absolutely need that privilege. When they do have access, they should only have the rights to do what they need to do, and no more.

Thankfully, vSphere has a large number of privileges that can be assigned at almost any level of the infrastructure: storage, VM, network, and cluster. Control can be extremely granular for any part of the infrastructure.

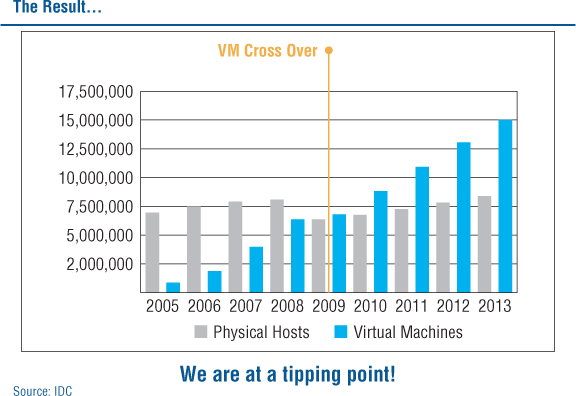

Let's get back to our analogy of the physical server room. In theory, you could create a server rack that was completely secure so the only thing a specific user with access could do is reset a physical server. Granted, creating such a server rack would be complicated, but with vSphere, you can create a role that allows the user to do only this task. Figure 9.2 shows such a role.

Figure 9.2 vCenter Server allows you to create a role that can only reset a VM.

With vSphere, you can assign practically any role you'd like. For example, you can allow a user to only create a screenshot of the VM, install VMware Tools on a VM, or deploy a VM from a template, but not create their own new VM. You should identify the minimum tasks your user needs to perform to fulfill the job and allocate only the necessary privileges. This is commonly known as the principle of least privilege. For example, a help-desk user doesn't need administrative permissions to add hosts to the cluster but may need permission to deploy or restart VMs. This is also clearly related to the idea of separation of duties: it allows you to clearly define the appropriate roles and assign the correct privileges to each role.

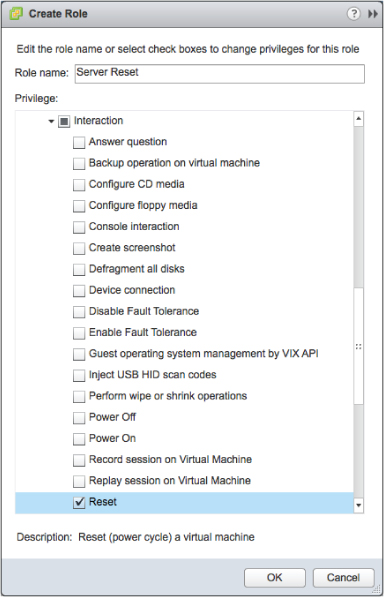

To return to the risk example, if Bill had been allocated the privileges shown in Figure 9.3, your production VMs wouldn't have suffered an outage.

Figure 9.3 Very granular permissions can be combined to create a limited user role.

If Bill only had the options shown, he wouldn't have been able to

- Create a snapshot.

- Add more hard disks.

- Change his CPU/RAM allocation.

- Increase his VM shares.
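The effect of such a limited role can be sketched in a few lines of Python. This is an illustrative model only, not the vCenter Server API: the `Role` class, the `is_allowed` helper, and the "VM Operator" role are hypothetical, and the privilege identifiers are assumed to follow vSphere's naming, so verify them against the privilege list in your own environment.

```python
from dataclasses import dataclass

# Privilege identifiers modeled on vSphere's naming (assumption: verify
# the exact names against the privilege list in your vCenter Server).
RESET = "VirtualMachine.Interact.Reset"
POWER_ON = "VirtualMachine.Interact.PowerOn"
POWER_OFF = "VirtualMachine.Interact.PowerOff"
SNAPSHOT = "VirtualMachine.State.CreateSnapshot"
ADD_DISK = "VirtualMachine.Config.AddNewDisk"
CPU_COUNT = "VirtualMachine.Config.CPUCount"
MEMORY = "VirtualMachine.Config.Memory"
SHARES = "VirtualMachine.Config.Resource"


@dataclass(frozen=True)
class Role:
    """Hypothetical role: a name plus the privileges it grants."""
    name: str
    privileges: frozenset


def is_allowed(role: Role, privilege: str) -> bool:
    """Least privilege: anything not explicitly granted is denied."""
    return privilege in role.privileges


# A limited role in the spirit of Figure 9.3 (the exact set is assumed).
vm_operator = Role("VM Operator", frozenset({RESET, POWER_ON, POWER_OFF}))

for action in (RESET, SNAPSHOT, ADD_DISK, CPU_COUNT, MEMORY, SHARES):
    verdict = "allowed" if is_allowed(vm_operator, action) else "denied"
    print(f"{action}: {verdict}")
```

The point of the sketch is the default: the role lists what is permitted, and every other action is refused without needing an explicit deny.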

With the proper design strategy, you can accommodate your users' needs and delegate permissions and roles to your staff that let them do their tasks, but that also make sure their environment is stable and limited to only what they should use. Be sure your vSphere design takes this key operational aspect into consideration and supplies the necessary technical controls on the backend.

The use of Linked Mode with multiple vCenter Server instances is another area of potential concern, which we explore in the next section.

Security in vCenter Linked Mode

Linked Mode allows you to connect different vCenter Server instances in your organization. We discussed the design considerations in Chapter 3, “The Management Layer,” but let's take a closer look at security.

Risk Scenario

XYZ.com is a multinational company. It has a forest divided into child domains per country: US, UK, FR, HK, and AU. At each site, the IT staff's level of expertise differs—some are more experienced than others.

Gertrude, the VI Admin, joined the vCenter Servers together in Linked Mode. Suddenly, things are happening in her vCenter environment that shouldn't be. Machine settings are being changed, VMs are being powered off, and datastores are being added and removed.

After investigating, Gertrude finds that a user in one of the child domains is doing this. This user is part of the Administrators group at one of the smaller sites and shouldn't have these permissions.

Risk Mitigation

Proper planning is the main way to mitigate risk here. When you connect multiple vCenter Servers, they become one entity. If a user/group has Administrator privileges on the top level and these permissions are propagated down the tree, then that user/group will have full Administrator permissions on every single item in the infrastructure!

In a multidomain structure with vCenter Servers in different child and parent domains, there are two basic ways to divide the permissions: per-site permissions and global permissions.

Per-Site Permissions

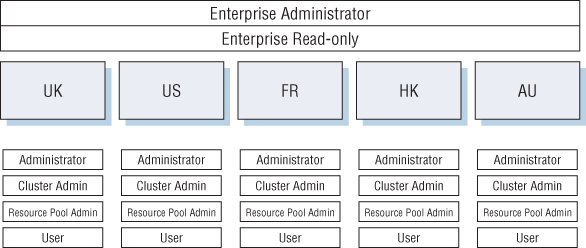

With per-site permissions, each site maintains its administrative roles but isn't given full administrative rights to the other sites. Figure 9.4 shows such a structure.

Figure 9.4 The per-site permission structure is one way to handle permissions in Linked Mode environments.

You can see that each site has its own permissions. The top level has two groups: Enterprise Administrators and Enterprise Read-only. The need for the Enterprise Administrators group is obvious: you'll have a group that has permissions on the entire structure. But what is the need for the second group?

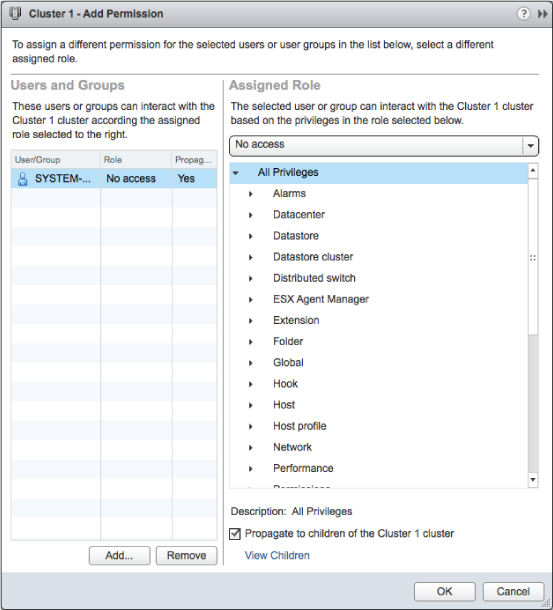

Giving one site insight into the other sites requires a certain level of permissions. For most organizations, this isn't a security risk because the permission is read-only; but in some cases it's unacceptable to allow a view into certain parts of the infrastructure (financial servers and domain controllers, for example). In these cases, you can block propagation at that level. Figure 9.5 depicts such a configuration, where a specific user is blocked from accessing a cluster called Cluster 1.

Figure 9.5 To protect specific areas within linked vCenter Server instances, use the No Access permission.
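How a propagated permission interacts with a No Access assignment lower in the tree can be illustrated with a small Python model. It is a simplified sketch, not vCenter Server's actual evaluation logic: the inventory names other than Cluster 1, the `PERMISSIONS` table, and the `effective_role` function are all hypothetical, and real vCenter Server also has to reconcile user and group assignments, which is ignored here.

```python
from typing import Optional

# Hypothetical inventory: child -> parent (None marks the root).
PARENT = {
    "Root": None,
    "Datacenter-US": "Root",
    "Cluster 1": "Datacenter-US",
    "Cluster 2": "Datacenter-US",
}

# (object, principal) -> (role, propagate to children?)
PERMISSIONS = {
    ("Root", "Enterprise Read-only"): ("Read-only", True),
    ("Cluster 1", "Enterprise Read-only"): ("No Access", True),
}


def effective_role(obj: str, principal: str) -> Optional[str]:
    """Walk up from obj; the nearest applicable assignment wins."""
    node: Optional[str] = obj
    while node is not None:
        entry = PERMISSIONS.get((node, principal))
        if entry is not None:
            role, propagate = entry
            # A permission set directly on obj always applies; one set on
            # an ancestor applies only if it propagates.
            if node == obj or propagate:
                return role
        node = PARENT[node]
    return None  # no assignment anywhere: the principal has no access


print(effective_role("Cluster 2", "Enterprise Read-only"))
print(effective_role("Cluster 1", "Enterprise Read-only"))
```

In this model the read-only permission flows down from the root to Cluster 2, while the closer No Access assignment takes precedence on Cluster 1.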

Global Permissions

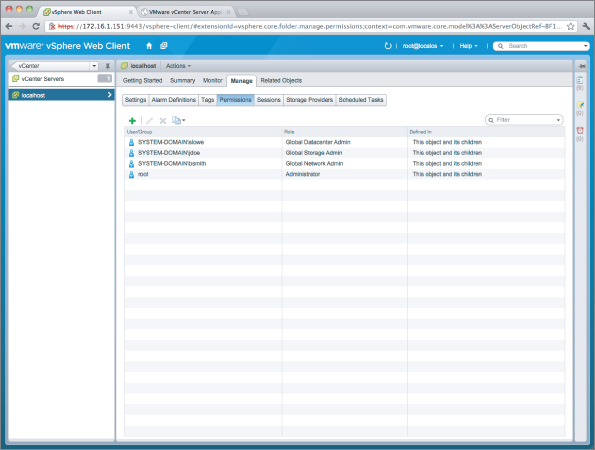

In the global permissions model, roles are created for different tasks, but these roles are global. They must be applied at every vCenter tree, but this only needs to be done once. Figure 9.6 shows such a structure.

Figure 9.6 The global permission structure uses broad roles that are applicable across multiple Linked Mode instances.

Which is the preferred structure? That depends on your specific environment and needs, and is determined by the design factors (functional requirements, constraints, risks, and assumptions). When we said the functional requirements were key to the entire design process, we were serious.

In the risk scenario, Gertrude should have completed her homework before allowing people she didn't trust to access the vCenter environment. If there were areas that people shouldn't access, she should have denied access using the No Access role.

Access control in all its forms—including the role-based access controls that vCenter Server uses—is a key component in ensuring a secure environment. Let's look at another form of access control: controlling access to command-line interfaces for managing ESXi hosts.

Command-Line Access to ESXi Hosts

If only everything could be done from the vSphere Client (some administrators cringe at the thought) … but it can't. The same is true for vSphere 5.1's Web Client. Although both the original Windows-based client and the new Web Client are powerful administrative tools, certain functions still need to be performed from a command line. This carries security risks, as you'll see.

Risk Scenario

Mary, your VI Admin, was with the company for many years. But her relationship with the company went downhill, and her contract ended—not on a good note, unfortunately.

One morning, you come into the office, and several critical servers are blue-screening. After investigation, you find that several ESXi hosts and VM settings have been altered, and these changes caused the outages.

Who changed the settings? You examine the logs from vCenter Server, but there's no record of changes being made. The trail seems to have ended.

Risk Mitigation

How could the changes have been made without vCenter Server having a record of them? Simple: Someone bypassed vCenter Server and went directly to the hosts, most likely via a command-line interface.

In older versions of vSphere that shipped with both ESX and ESXi, vSphere architects and administrators had to lock down and secure ESX's Red Hat Enterprise Linux (RHEL)-based Service Console. Prior to vSphere 4.1, ESXi had an unsupported command-line environment, but it didn't really present a security risk; in vSphere 4.1 and later, the ESXi Shell could be activated (but it issued an alert about this configuration change). In all cases, root SSH access to ESX hosts (and ESXi hosts with the shell enabled) was disabled by default (and rightfully so). Denying root access is also part of VMware's security best practices.

But as we all know, in certain cases it's necessary, or just plain easier, to perform actions on the host from the command line. How do we mitigate this potential security risk?

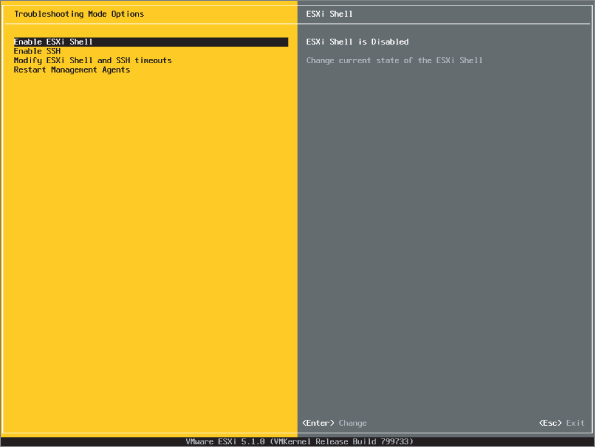

Disabling ESXi Shell and SSH Access

This might be a bit of overkill, but one way to mitigate the risk of users bypassing vCenter Server and performing tasks directly on the ESXi hosts is to simply turn off the ESXi Shell (referred to as Tech Support Mode [TSM] in earlier versions of vSphere) and SSH access to ESXi hosts. Both of these options are available from the Direct Console User Interface (DCUI), as you can see in Figure 9.7.

Figure 9.7 By default, the ESXi Shell and SSH access are disabled.

Although these settings aren't a panacea, they're a good first step toward mitigating the risk of direct command-line access. However, keeping the ESXi Shell and SSH disabled still doesn't address access to the ESXi hosts via other CLI methods, such as the vSphere Management Assistant (vMA). Let's look at how to secure the vMA.

vMA Remote Administration

VMware usually releases a version of the vMA with the relevant releases of vSphere. As we discussed in Chapter 3, the vMA is a Linux-based virtual appliance. Whereas ESXi uses a limited BusyBox shell environment (and thus has limited controls), the vMA is a full Linux environment, and you can apply the full set of security controls to what can—or more appropriately, can't—be done by users using the vMA.

You can use a number of methods to tighten the security of the vMA:

- You can use sudo to control which users are allowed to run which commands. For example, using sudo you could let some users run the vicfg-vswitch command but not the vicfg-vmknic command. This technique almost demands an entire section just for itself; sudo is an incredibly flexible and powerful tool. For example, you can configure sudo to log all commands (that would have been handy in this scenario). It's beyond the scope of this book to provide a comprehensive guide to sudo, so we recommend you utilize any of the numerous “how to” guides available on the Internet.

- You can integrate the vMA into your Active Directory domain, which means one less set of credentials to manage. (Note that in vSphere 5.1 the vMA doesn't take advantage of vCenter's new Single Sign-On [SSO] functionality; however, SSO integrates into Active Directory so the end result is much the same.) You can use Active Directory credentials with sudo as well.

- You can tightly control who is allowed to access the vMA via SSH, using either the built-in controls in the SSH daemon or the network-based control (ACLs and/or firewalls).
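The first technique, limiting which commands each user may run and logging every attempt, can be sketched in Python. This is only a model of the idea and not a replacement for sudo itself: the user names, the `ALLOWED` policy, and the `authorize` function are hypothetical, and a real deployment would express the same policy in the sudoers file.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
audit = logging.getLogger("vma.audit")

# Hypothetical policy: which vCLI commands each user may run.
ALLOWED = {
    "alice": {"vicfg-vswitch", "vicfg-vmknic"},
    "bob": {"vicfg-vswitch"},
}


def authorize(user: str, command_line: str) -> bool:
    """Check the first word of the command line and record the attempt."""
    words = command_line.split()
    command = words[0] if words else ""
    allowed = command in ALLOWED.get(user, set())
    # Every attempt is logged, whether or not it is permitted.
    audit.info("user=%s command=%r allowed=%s", user, command_line, allowed)
    return allowed


authorize("bob", "vicfg-vswitch -l")   # permitted for bob
authorize("bob", "vicfg-vmknic -l")    # refused, but still recorded
```

The audit trail is the part that would have mattered in Mary's case: even refused attempts leave a record of who tried to run what.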

Keeping the ESXi Shell disabled, leaving SSH disabled, and locking down permissions in the vMA are all good methods. However, it's still possible to bypass vCenter Server and go directly to the hosts. To close all possible avenues, you'll need Lockdown Mode.

Enable Lockdown Mode

Lockdown Mode provides a mechanism whereby ESXi hosts can only be managed via vCenter Server. In vSphere 4.1 and later, when Lockdown Mode is enabled, access to ESXi hosts via the vSphere API, the vSphere command-line interface (vCLI, including the vMA), and PowerCLI is restricted. This provides an outstanding way to ensure that only vCenter Server can manage the ESXi hosts.

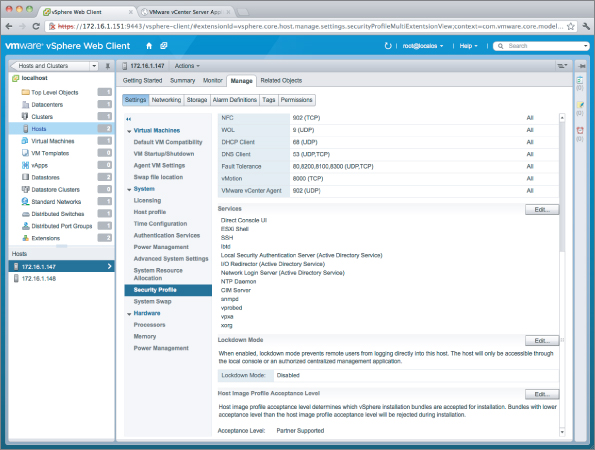

Lockdown Mode can be enabled via the vSphere Client, via the vSphere Web Client, or via the DCUI. Figure 9.8 shows a screenshot of the option to enable Lockdown Mode in the vSphere Web Client.

Figure 9.8 Lockdown Mode can be enabled using the vSphere Web Client.

Note that it's generally recommended to enable or disable Lockdown Mode via the vSphere Client or the vSphere Web Client; disabling Lockdown Mode via the DCUI can undo certain permission settings. We recommend using the DCUI to disable Lockdown Mode only as a last resort if vCenter Server is unavailable.

In this scenario, keeping the ESXi Shell and SSH disabled on the ESXi hosts would have forced users through other channels, like the vMA. The use of sudo on the vMA would have provided the logging you needed to track down what commands were run and by whom. Further, if you really wanted to prevent any form of access to the ESXi hosts except through vCenter Server, Lockdown Mode would satisfy that need.
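The combined effect of these three settings can be summarized in a short Python sketch. It is a deliberately simplified model built on the description in this section, not an authoritative statement of ESXi behavior: the `HostConfig` class and `open_channels` function are hypothetical, the model assumes the DCUI and vCenter Server remain available in every case, and it assumes Lockdown Mode closes every other path.

```python
from dataclasses import dataclass


@dataclass
class HostConfig:
    """Hypothetical summary of the settings discussed in this section."""
    esxi_shell_enabled: bool = False
    ssh_enabled: bool = False
    lockdown_mode: bool = False


def open_channels(host: HostConfig) -> set:
    """Return the management paths that remain usable (simplified)."""
    channels = {"vcenter", "dcui"}  # assumed always available here
    if not host.lockdown_mode:
        # Direct API clients: vCLI, the vMA, and PowerCLI against the host.
        channels.add("direct-api")
        if host.esxi_shell_enabled:
            channels.add("esxi-shell")
        if host.ssh_enabled:
            channels.add("ssh")
    return channels


print(sorted(open_channels(HostConfig())))
print(sorted(open_channels(HostConfig(ssh_enabled=True, lockdown_mode=True))))
```

Even with the shell and SSH disabled, the default configuration in this model still leaves the direct API path open, which is the gap Lockdown Mode is meant to close.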

In the next section, we discuss how to use the network to manage access to your vSphere environment.

Managing Network Access

We touched on this topic in Chapter 5, “Designing Your Network,” when we discussed planning your network architecture and design. Let's go into a bit more detail here.

Risk Scenario

Rachel, a hacker from the outside world, has somehow hacked into your network. Now that Rachel has control of a machine, she starts to fish around for information of value. Using a network sniffer, she discovers that there is a vCenter Server on the same subnet as the machine she controls. Rachel manages to access the vCenter Server, creates a local administrative user on the server, and then logs in to the vCenter with full Admin privileges.

She sees where all the ESXi hosts are and where all the storage is, and she performs a vMotion of a confidential Finance server from one host to another. She captures the traffic of the VM while in transit and is able to extract sensitive financial information. The potential damage is catastrophic.

Risk Mitigation

We won't get into how Rachel managed to gain control of a computer in your corporate network. Instead we'll focus on the fact that all the machines were on the same network.

Separate Management Network

Your servers shouldn't be on the same subnets as your users' computers. These are two completely different security zones. They probably have different security measures installed on them to protect them from the outside world.

You can provide extra security by separating your server farm onto separate subnets from your user computers. These could even be on separate physical switches if you want to provide an additional layer of segregation, although most organizations find that the use of VLANs is a sufficient barrier in this case. The question you may ask is, “How will this help me?”

This will help in a number of ways:

- If you suffer an intrusion such as the one described, malicious attackers like Rachel can't use a network sniffer to see most of the traffic from the separate management network. Because your servers reside on a different subnet, they must pass through a router—and that router will shield a great deal of traffic from other subnets. (Speaking in more technical terms, the dedicated management network will be a separate broadcast domain.)

- You can deploy a security device (an intrusion-protection system, for example) that will protect your server farm from a malicious attack from somewhere else on the network. Such appliances (they're usually physical appliances) examine the traffic going into the device and out to the server. With the assistance of advanced heuristics and technologies, the appliance detects suspicious activity and, if necessary, blocks the traffic from reaching the destination address.

- You can deploy a management proxy appliance, such as that provided by HyTrust, to proxy all management traffic to and from the virtual infrastructure. These devices can provide additional levels of authentication, logging, and more granular controls over the actions that can be performed.

- You can utilize a firewall (or simple router-based ACLs) to control the traffic moving into or out of the separate management network. This gives you the ability to tightly control which systems communicate and the types of communication they're permitted.

Fair enough, so using a separate management network is a good idea. But what traffic needs to be exposed to users, and what traffic should be separate? Typically, the only traffic that should be exposed to your users is your VM traffic. Your end users shouldn't have any interaction with management ports, the VMkernel interfaces you use for IP storage, or out-of-band management ports such as Integrated Lights-Out (iLO), Integrated Management Module (IMM), Dell Remote Access Controllers (DRACs), or Remote Supervisor Adapter (RSA). From a security point of view, your users shouldn't even be able to reach these IP addresses.

But what does need to interact with these interfaces? All the virtual infrastructure components need to talk to each other:

- ESXi management interfaces need to be available for vSphere High Availability (HA) heartbeats between the hosts in the cluster.

- The vCenter Server needs to communicate with your ESXi hosts.

- ESXi hosts need to communicate with IP storage.

- Users with vSphere permissions need to communicate with the vCenter Server.

- Monitoring systems need to poll the infrastructure for statistics and also receive alerts when necessary.

- Designated management stations or systems running PowerCLI scripts and/or using the remote CLI (such as the vMA) to perform remote management tasks on your hosts will need access to vCenter Server, if not the ESXi hosts as well.

Depending on your corporate policy and security requirements, you may decide to put your entire virtual infrastructure behind a firewall. In this case, only traffic defined in the appropriate rules will pass through the firewall; otherwise, it will be dropped (and should be logged as well). This way, you can define a very specific number of machines that are allowed to interact with your infrastructure.
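The default-deny behavior described here can be sketched with Python's standard `ipaddress` module. The rule set is hypothetical: the addresses come from the ranges reserved for documentation, the port is only an example, and the `Rule` type and `permits` function are illustrative names rather than any firewall product's syntax.

```python
import ipaddress
from typing import NamedTuple


class Rule(NamedTuple):
    source: str   # CIDR block allowed to initiate traffic
    dest: str     # CIDR block being protected
    port: int     # destination TCP port


# Hypothetical rules; addresses use documentation ranges, ports are examples.
RULES = [
    Rule("192.0.2.10/32", "198.51.100.0/24", 443),   # management station
    Rule("192.0.2.20/32", "198.51.100.0/24", 443),   # monitoring system
]


def permits(src_ip: str, dst_ip: str, port: int) -> bool:
    """Allow only traffic matching a rule; everything else is dropped."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    for rule in RULES:
        if (src in ipaddress.ip_network(rule.source)
                and dst in ipaddress.ip_network(rule.dest)
                and port == rule.port):
            return True
    print(f"DROP {src_ip} -> {dst_ip}:{port}")  # dropped traffic is logged
    return False


permits("192.0.2.10", "198.51.100.5", 443)   # matches the first rule
permits("192.0.2.99", "198.51.100.5", 443)   # unknown source, dropped
```

Because nothing passes unless a rule names it, the list of rules doubles as the list of machines allowed to interact with the infrastructure.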

Designated Management Stations

A related approach is to use designated management stations that are permitted access to managing the virtual infrastructure. First, you'll need to determine what kind of remote management you need:

- vSphere Client

- vCLI

- PowerShell

From this list, you can see that you'll need at least one management station, and perhaps two. The vSphere Client and PowerShell need a Windows machine. The vCLI can run on either Windows or Linux, and the vMA can provide the vCLI via a prepackaged Linux virtual appliance. This might make it reasonably easy, especially in smaller environments, to lock down which systems are allowed to manage the virtual infrastructure by simply controlling where the appropriate management software is installed. Further, with these management stations, you can provide a central point of access to your infrastructure, and you don't have to define a large number of firewall rules for multiple users who need to perform their daily duties. (You will, of course, have to provide the correct security for these management stations.)

However, with the introduction of the vSphere Web Client in vSphere 5.1, this model begins to break down. Now, any supported browser can potentially become a management station, and the idea of designated management stations might not be a good fit. At this point, you might have to look at other options, such as controls that reside at the network layer.

Network Port-Based Access

The Cisco term for this is port security. You define at the physical switch level which MAC addresses are allowed to interact with a particular port in the switch. Utilizing this approach, you can define a very specific list of hosts that interact with your infrastructure and which ports they're allowed to access. You can define settings that allow certain users to access the management IP but not the VMkernel address. Other users can access your storage-management IPs but not the ESXi hosts. You can achieve significant granularity with this method, but there is a trade-off—solutions like this can sometimes create additional management overhead. The potential additional management overhead should be evaluated against the operational impact it might have.
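The core of the idea, a per-port list of permitted MAC addresses, can be modeled in a few lines of Python. This is a conceptual sketch rather than switch configuration: the port names, MAC addresses, and the `frame_accepted` function are hypothetical.

```python
# Hypothetical switch configuration: port name -> MAC addresses allowed on it.
ALLOWED_MACS = {
    "Gi1/0/1": {"00:50:56:aa:bb:01"},
    "Gi1/0/2": {"00:50:56:aa:bb:02", "00:50:56:aa:bb:03"},
}


def normalize(mac: str) -> str:
    """Lowercase and use colons so different notations compare equal."""
    return mac.strip().lower().replace("-", ":")


def frame_accepted(port: str, source_mac: str) -> bool:
    """Accept a frame only if its source MAC is listed for that port."""
    return normalize(source_mac) in ALLOWED_MACS.get(port, set())


print(frame_accepted("Gi1/0/1", "00-50-56-AA-BB-01"))
print(frame_accepted("Gi1/0/1", "00:50:56:aa:bb:02"))
```

The management overhead mentioned above shows up directly in this model: every new or replaced network card means another entry to maintain in the table.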

Separate vMotion Network

Traffic between hosts during a vMotion operation isn't encrypted at all. This means the only interfaces that should be able to access this information are the vMotion interfaces themselves, and nothing else.

One way you can achieve this is to put this traffic on a separate, nonroutable network/VLAN. By doing so, you ensure that nothing outside of this subnet can access the traffic, and you prevent the potential risk that unauthorized users could somehow gain access to confidential information during a vMotion operation or interfere with vMotion operations.
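A design review can check these properties mechanically. The following Python sketch uses the standard `ipaddress` module to verify an addressing plan; the subnets, the `check_vmotion_design` function, and the rule that a configured gateway means the network is routable are all assumptions made for illustration.

```python
import ipaddress

# Hypothetical addressing plan (private ranges chosen for illustration).
VMOTION_NET = ipaddress.ip_network("192.168.50.0/24")
ROUTED_NETS = [
    ipaddress.ip_network("10.0.0.0/16"),
    ipaddress.ip_network("10.1.0.0/16"),
]


def check_vmotion_design(vmknic_ips, gateway=None):
    """Return a list of findings; an empty list means the checks passed."""
    findings = []
    if gateway is not None:
        findings.append("vMotion network has a gateway, so it is routable")
    for net in ROUTED_NETS:
        if VMOTION_NET.overlaps(net):
            findings.append(f"vMotion network overlaps routed network {net}")
    for ip in vmknic_ips:
        if ipaddress.ip_address(ip) not in VMOTION_NET:
            findings.append(f"{ip} is outside the vMotion network")
    return findings


print(check_vmotion_design(["192.168.50.11", "192.168.50.12"]))
print(check_vmotion_design(["10.0.0.5"], gateway="192.168.50.1"))
```

A check like this only validates the plan on paper; the VLAN and switch configuration still have to enforce the isolation.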

Note that this does create certain serious challenges related to long-distance vMotion. However, long-distance vMotion isn't a reality for most organizations, because it requires considerable additional resources (storage and/or network connectivity between sites). Latency between different sites and, of course, stretching the network or the VLANs between the sites can be quite a challenge. Comprehensive network design is beyond the scope of this book.

Going back to the earlier example, if you had segregated the infrastructure network from the corporate traffic, your dear hacker friend Rachel wouldn't have discovered that there was a vCenter Server. She wouldn't have been able to access the vCenter Server because her IP wouldn't have been authorized for access, and she wouldn't have been able to sniff the vMotion traffic. Even though she could still have compromised some users' computers, this wouldn't have led to the compromise of the entire virtual infrastructure.

In addition to planning how you can segregate network traffic types to enhance security, you also need to think carefully about how you consolidate network traffic and what the impact on security is as a result. The use of a demilitarized zone (DMZ) is one such example and is the topic of our next section.

The DMZ

Your infrastructure will sooner or later contain machines that have external-facing interfaces. It's best to plan that design properly to avoid mistakes like the one we'll describe next.

Risk Scenario

Harry, the sysadmin in your organization, wanted to use your virtualization infrastructure for a few extra VMs that were needed (yesterday, as always) in the DMZ. So, he connected an additional management port to the DMZ and exposed it to the outside world. Little did he know that doing so was a big mistake.

The ESXi host was compromised. Not only that, but because the host was using IP storage (NFS in this case), the intruder managed to extract information from the central storage and the network as well.

Risk Mitigation

In the previous section, we mentioned that the end user shouldn't have any interaction with the management interfaces of your ESXi hosts. The only things that should be exposed are the VM networks. The primary mitigation of risk when using vSphere with (or in) a DMZ is proper design: ensuring that the proper interfaces are connected to the proper segments and that proper operational procedures are in place to prevent accidental exposure of data and/or systems through human error or misconfiguration.

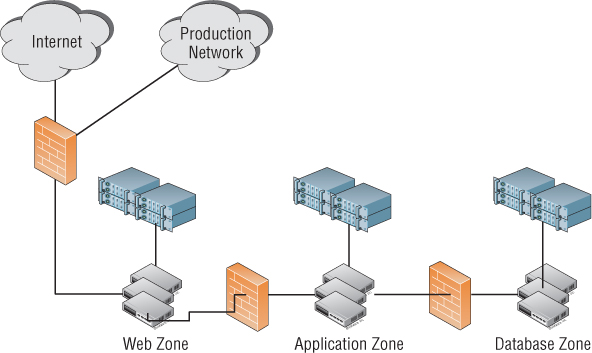

With that in mind, let's take a look at some DMZ architectures. A traditional DMZ environment that uses physical hosts and physical firewalls would look similar to the diagram in Figure 9.9.

Figure 9.9 This traditional DMZ environment uses separate physical hosts and physical firewalls.

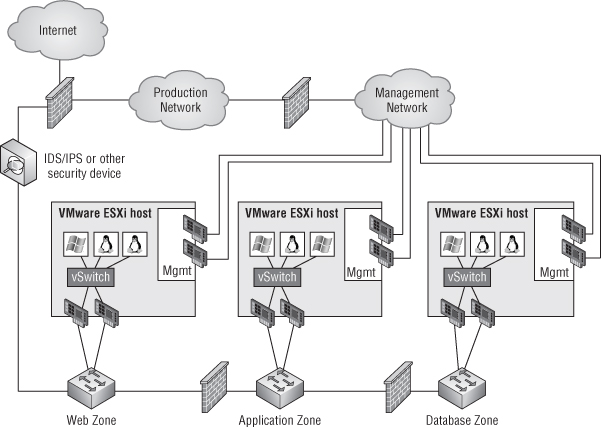

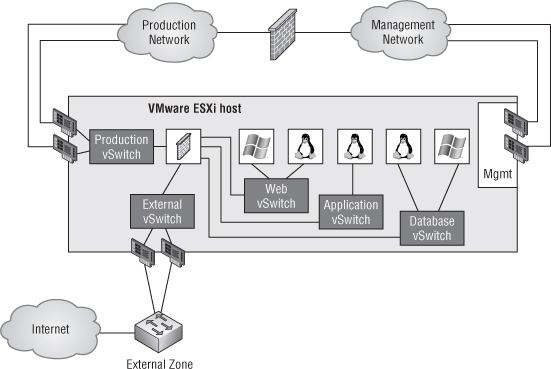

A virtual DMZ will look similar to Figure 9.10.

Figure 9.10 This virtualized DMZ uses VMs and physical firewalls.

Notice that the management ports aren't exposed to the DMZ, but the VM network cards are exposed—just like their physical counterparts from Figure 9.9.

DMZ configurations can be divided into three categories:

- Partially collapsed with separate physical zones

- Partially collapsed with separate virtual zones

- Fully collapsed

Partially Collapsed DMZ with Separate Physical Zones

Figure 9.10, shown earlier, graphically describes this configuration. In this configuration, you need a separate ESXi host (or cluster of hosts) for each and every one of your zones. You have complete separation of the different application types and security risks. Of course, this isn't an optimal configuration, because you can end up putting in a huge number of hosts for each separate zone and then having resources stranded in one zone or another—which is the opposite of the whole idea of virtualization.

Partially Collapsed DMZ with Separate Virtual Zones

Figure 9.11 depicts a partially collapsed DMZ with separate virtual zones.

Figure 9.11 In this configuration, a single host (or cluster) spans multiple security zones.

In this configuration, you use different zones in a single ESXi host (or a cluster of hosts). The separation is done only on the network connections of each zone, with a firewall separating the different network zones on the network level. You have to plan accordingly to allow for a sufficient number of network cards for your hosts to provide connectivity to every zone.

This configuration makes much better use of your virtualization resources, but it's more complex and error prone. A key risk is the accidental connection of a VM to the wrong security zone. Using Figure 9.11 as an example, the risk is accidentally connecting an application server to the Web vSwitch instead of the Application vSwitch, thereby exposing the workload to a different security zone than what was intended. To mitigate this potential risk, you should apply the same change-control and change-management procedures to the virtual DMZ as those you have in place for your physical DMZ.
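A periodic automated check of VM-to-network assignments can back up those change-control procedures. The following is a minimal sketch, assuming you can export an inventory listing each VM, its intended security zone, and the port group it's connected to. The inventory format, the zone names, and the port group names are all hypothetical; nothing here calls a vSphere API.

```python
from dataclasses import dataclass

# Hypothetical mapping of security zones to the port groups that belong to them.
ZONE_PORT_GROUPS = {
    "web": {"Web-PG"},
    "application": {"App-PG"},
    "database": {"DB-PG"},
}


@dataclass(frozen=True)
class VmNic:
    vm_name: str
    intended_zone: str  # zone recorded in the approved change request
    port_group: str     # port group the vNIC is actually connected to


def find_misplaced(nics):
    """Return the NICs connected to a port group outside their intended zone."""
    misplaced = []
    for nic in nics:
        # An unknown zone has no allowed port groups, so it is always flagged.
        allowed = ZONE_PORT_GROUPS.get(nic.intended_zone, set())
        if nic.port_group not in allowed:
            misplaced.append(nic)
    return misplaced


if __name__ == "__main__":
    inventory = [
        VmNic("web01", "web", "Web-PG"),
        VmNic("app01", "application", "Web-PG"),  # application server on the Web port group
        VmNic("db01", "database", "DB-PG"),
    ]
    for nic in find_misplaced(inventory):
        print(f"{nic.vm_name}: connected to {nic.port_group}, intended zone is {nic.intended_zone}")
```

A check like this doesn't replace change management; it only catches a mistake after it has been made, so the value lies in running it often enough to keep the exposure window short.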

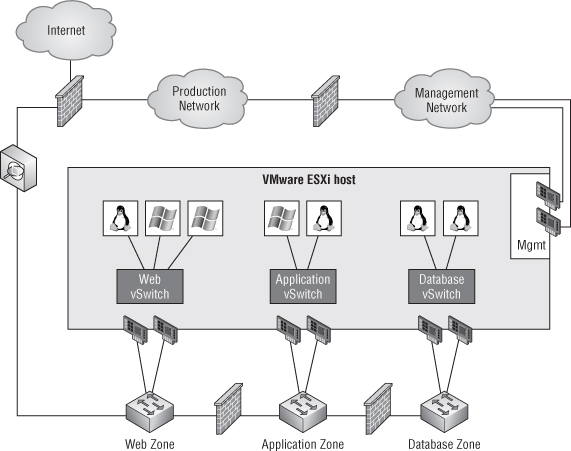

Fully Collapsed DMZ

This is by far the most complex configuration of the three, as you can see in Figure 9.12.

Figure 9.12 A fully collapsed DMZ employs both VMs and virtual security appliances to provide separate security zones.

In this configuration, there are no physical firewalls between the different security zones: the firewall is a virtual appliance (running as a VM on the ESXi cluster) that handles network segregation. This virtual appliance could be VMware's vShield Edge, Cisco's ASA 1000v, or some other third-party virtual firewall or virtual security appliance. As noted in the previous configuration, you should make sure your processes are in effect and audited regularly for compliance.

In all three DMZ configurations we've reviewed—partially collapsed with separate physical zones, partially collapsed with separate virtual zones, and fully collapsed—you'll note that management traffic is kept isolated from the VM traffic, which exposes VMs to a DMZ while preventing the ESXi hosts from being exposed to potentially malicious traffic.
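You can verify that isolation with a simple address check. The sketch below assumes you maintain a list of each host's management IP address and a list of the subnets that make up your DMZ; the function name, host names, and addresses are illustrative only.

```python
import ipaddress


def exposed_management_interfaces(mgmt_interfaces, dmz_networks):
    """Return (host, ip) pairs whose management IP lies inside a DMZ network.

    mgmt_interfaces: iterable of (host_name, ip_string) pairs.
    dmz_networks: iterable of CIDR strings, such as "192.0.2.0/24".
    """
    dmz = [ipaddress.ip_network(net) for net in dmz_networks]
    exposed = []
    for host, ip in mgmt_interfaces:
        addr = ipaddress.ip_address(ip)
        if any(addr in net for net in dmz):
            exposed.append((host, ip))
    return exposed


if __name__ == "__main__":
    hosts = [("esx01", "10.0.10.11"), ("esx02", "192.0.2.25")]
    for host, ip in exposed_management_interfaces(hosts, ["192.0.2.0/24"]):
        print(f"WARNING: {host} management interface {ip} is in a DMZ subnet")
```

An address check catches only one kind of exposure. It says nothing about VLAN or physical cabling mistakes, which still need to be reviewed against the design.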

However, protecting management traffic is only part of the picture. You also need to protect the storage that is backing the vSphere environment.

Separation of Storage

How possible is it for someone to break out of a compromised ESXi host onto a shared network resource? That depends on whom you ask. Has it been done? Will it be done? These are questions that have yet to be answered. Is it worth protecting against this possibility? Many organizations would say yes.

With that in mind, you can use the following methods to help protect your storage resources in DMZ environments:

- Use Fibre Channel (FC) host bus adapters (HBAs) to eliminate the possibility of an IP-based attack (because there is no IP in a typical FC environment). It might be possible for an attack to be crafted from within the SCSI stack to escape onto the network, but this would be a difficult task to accomplish.

- Theoretically, the same is true for Fibre Channel over Ethernet (FCoE), although the commonality of Ethernet between the FCoE and IP environments might lessen the strong separation that FC offers.

- Use separate physical switches for your storage network, providing an air gap between the storage network and the rest of the network. That air gap is bridged only by the ESXi hosts' VMkernel interfaces and the storage array's interfaces.

- Never expose the storage array to VM-facing traffic without a firewall between them. If the VMs must have access to storage array resources via IP-based storage, tread very carefully.

The theory behind all these approaches is that there is as little network connectivity as possible between the corporate network (the storage array) and the DMZ, thus reducing the network exposure.

Going back to the example, the situation with Harry and the exposed management port on the external-facing network should never have happened. Keeping these ports on a dedicated segment that isn't exposed to the outside world minimizes the chance of compromising the server. In addition, using the FC infrastructure that is currently in place minimizes the possible attack surface into your network from the DMZ.

You should take into consideration two more important things regarding the DMZ. First, it's absolutely possible to set up a secure DMZ solution based completely on your vSphere infrastructure. Doing so depends on proper planning and adhering to the same principles you would apply to your physical DMZ; the components are just in a slightly different location in your design. Second, a breach is more likely to occur due to misconfiguration of your DMZ than because the technology can't provide the proper level of security.

Firewalls in the Virtual Infrastructure

VMs are replacing physical machines everywhere, and certain use cases call for providing security even in your virtual environment. You saw this in the previous section when we discussed fully collapsed DMZ architectures (see the section “Fully Collapsed DMZ”).

What are the security implications of using virtual firewalls? That's our focus in this section. Note that we won't be talking about risk and risk mitigation because you can provide a secure firewall in your environment by continuing to use your current corporate firewall. Doing so, however, presents certain challenges, as you'll see.

The Problem

Herbert has a development environment in which he has to secure a certain section behind a firewall. All the machines are sitting on the same vSwitch on the same host. Herbert can't pass the traffic through his corporate firewall because all traffic that travels on the same vSwitch never leaves the host.

He deploys a Linux machine with iptables as a firewall, which provides a good solution, but the management overhead makes this too much of a headache. In addition, in order for this to work, he has to keep all the machines on the same host, which brings the risk of losing the entire environment if the host goes down.

The Solution

There are two ways to get around this problem. One is to use the physical firewall, and the second is to use virtual firewalls that have built-in management tools to allow for central management and control.

Physical Firewall

The main point to consider is that in order to pass traffic through the physical firewall, the traffic must go out of the VM, out through the VM port group and vSwitch, out through the physical NIC, and from there onto the firewall. If the traffic is destined for a VM on the same host, then the traffic does a hairpin turn at the firewall (assuming the firewall even supports such a configuration; many firewalls don't) and traverses the exact same path back to its destination. Traffic on that network segment is doubled (out and then back again), when ideally it should have been self-contained in the host. Although the vast majority of workloads won't be network constrained, it's important to note that network throughput VM-to-VM across a vSwitch is much higher than it would be if the traffic had to go out onto the physical network and back again.

Virtual Firewall

A virtual firewall is a VM with one or more vNICs. It sits as a buffer between the physical layer and the VMs. After the VMs are connected to a virtual firewall, they're no longer connected to the external network; all traffic flows through the virtual firewall.

You might think that this sort of configuration is exactly what Herbert built earlier using Linux and iptables. You're correct—sort of. To avoid the issues that normally come with this type of configuration, some virtual firewalls operate as a bump in the wire, meaning they function as transparent Layer 2 bridges (as opposed to routed Layer 3 firewalls). This allows them to bypass issues with IP addresses, but it does introduce some complexities (like the VMs needing to be connected to an isolated vSwitch with no uplinks—a configuration that doesn't normally support vMotion).

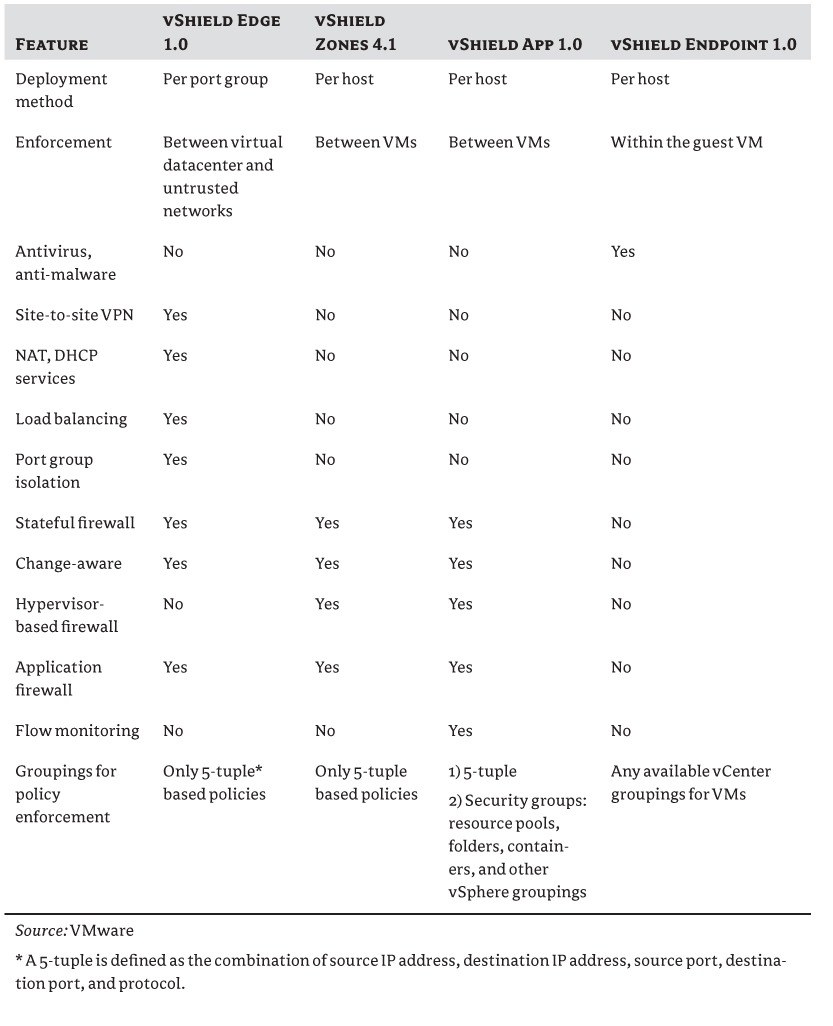

VMware provides a suite of applications called VMware vShield that can protect your virtual environment at different levels. Table 9.1 describes some of the differences between the products in the vShield product family. Note that as of vSphere 5.1, vShield Endpoint is now included with all editions of vSphere and no longer needs to be purchased separately (although the purchase of a supported antivirus engine to use Endpoint is still necessary).

Table 9.1 VMware vShield product comparisons

Other third-party vApps provide similar functionality. This is an emerging market, so you should perform a full evaluation of which product will fit best into your organization's current infrastructure and best meet your specific security requirements.

Change Management

As we mentioned in the section “The DMZ,” a security breach is more likely to occur due to misconfiguration (or human error) than because the software can't provide the appropriate level of security. This is why we want to discuss change management in the context of security. Although this isn't really a security feature, it's still something that should be implemented in every organization.

Risk Scenario

Barry, your junior virtualization administrator, downloaded a new vApp from the Internet: an evaluation version of a firewall appliance. He deployed the appliance, started the software setup, answered a few simple questions, and filled in a few fields (including his administrative credentials to the virtual infrastructure). All of a sudden, a new vSwitch was created on all ESXi hosts, and all traffic was routed through the appliance.

You start to receive calls saying that some applications aren't working correctly. Web traffic isn't getting to where it should go. You begin to troubleshoot the problem from the OS side. After a long analysis, you discover the change that Barry made.

The result is far too much downtime for the applications and far too much time spent looking in the wrong direction.

Risk Mitigation

You don't want changes made to your infrastructure without documenting and testing them before the fact. Bad things are sure to happen otherwise. To mitigate the risk of untested and undocumented changes, organizations implement change control or change management.

The problem with discussing change management with companies is that the idea of change management is different for every organization. Different companies have different procedures, different regulations, different compliance requirements, and different levels of aversion to risk (and different risks). Is there, then, a way to discuss change management in some sort of standardized way? That's the attempted goal behind the Information Technology Infrastructure Library (ITIL).

ITIL attempts to provide a baseline set of processes, tasks, procedures, and checklists that a company can use to help align IT services with the needs of the organization. ITIL is very broad, but for the purposes of this discussion we'll focus on the Service Transition portion where change management is discussed. The purpose of change management in ITIL is to ensure that changes are properly approved (through some sort of approval/review chain), tested before implementation so as to minimize risk to the organization, and aligned to the needs of the business (such as providing improved performance, more reliable service, additional revenue, or lower costs).

There are those who hate ITIL with a passion and those who swear by it. Our purpose here isn't to say that ITIL is perfect and every organization should adopt it, but rather to emphasize that proper change management is an important part of every IT organization's operational procedures. Let's take a look at a couple of items you might want to include in your design to help emphasize the importance of change management as a way to mitigate risk.

Test Environment

To mitigate problems, prepare a test environment that is as close as possible to your production environment. We say “as close as possible” because you can't always have another SAN, an entire network stack, or a blade chassis. You need a vCenter Server, central storage, and some ESXi hosts. The beauty of this is that today, all of these components can be VMs. Numerous sessions at the VMworld conference and a large number of blogs explain how to set up such a home lab for testing purposes. To lower the licensing cost for this lab, you can purchase low-end bundles (Essentials and Essentials Plus) for a minimal cost.

Play around in your test environment before implementing anything on your production systems. Document the changes so they can be reproduced when needed when you implement for real.

Before you make the changes, answer these questions:

- What are the implications of this change?

- Who or what will be affected?

- How much of a risk is this change?

- Can the change be reversed?

- How long will it take to roll back?
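If your change requests live in a ticketing system or even a spreadsheet, the questions above can be enforced as required fields. Here's a minimal sketch, assuming a hypothetical `ChangeRequest` record with one field per question; the field names are our own invention and don't come from ITIL or any particular tool.

```python
from dataclasses import dataclass, fields


@dataclass
class ChangeRequest:
    summary: str
    implications: str = ""      # What are the implications of this change?
    affected_parties: str = ""  # Who or what will be affected?
    risk_level: str = ""        # How much of a risk is this change?
    reversible: str = ""        # Can the change be reversed?
    rollback_time: str = ""     # How long will it take to roll back?


def unanswered(request):
    """Return the names of the checklist fields that are still blank."""
    return [f.name for f in fields(request) if not getattr(request, f.name).strip()]


if __name__ == "__main__":
    request = ChangeRequest(
        summary="Deploy evaluation firewall appliance",
        implications="Creates a new vSwitch on every ESXi host",
    )
    missing = unanswered(request)
    if missing:
        print("Not ready for approval; unanswered:", ", ".join(missing))
```

The point of the design is that a request with blank answers never reaches the approval step, which forces the conversation to happen before the change rather than after the outage.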

Change Process

All the questions in the previous list should be answered by all the relevant parties involved. This way, you can identify all the angles, not only those visible from your own position.

We aren't always aware of the full picture, because in most cases it isn't possible to be. In most organizations, the storage person, the network person, the help-desk team, and the hardware person aren't the same person. They aren't even on the same team. You can benefit from the different perspectives of other teams to get a fuller picture of how changes will impact your users. Having a process in place for involving all stakeholders in change decisions will also ensure that you're involved in changes initiated by other groups.

In the previous risk example, Barry should never have installed this appliance on the production system. He should have deployed it in the test environment, and he would have seen that changes were made in the virtual network switches that could cause issues. Barry also should have brought this change through a proper change-management process.

Not every organization implements ITIL, not every organization wants to, and not every organization needs to. You should find the process that works for you: one that will make your job easier and keep your environment stable and functioning in the best fashion.

Protecting the VMs

You can secure the infrastructure to your heart's content, but without taking certain measures to secure the VMs in this infrastructure, you'll be exposed to risk.

Risk Scenario

Larry just installed a new VM—Windows 8, from an ISO he got from the help desk. What Larry did not do was install an antivirus client in the VM; he also didn't update the OS with the latest service pack and security patches. This left a vulnerable OS sitting in your infrastructure.

Then, someone exploited the OS to perform malicious activity. They installed a rootkit on the machine, which stole information and finally launched a denial-of-service (DoS) attack against your corporate web server.

Risk Mitigation

The OS installed into a VM is no different than the OS installed onto a physical server. Thus, you need to ensure that a VM is always treated like any other OS on your network. It should go without saying that you shouldn't allow vulnerable machines in your datacenter.

In order to control what OSes are deployed in your environment, you should have a sound base of standardized templates from which you deploy your OSes. Doing so provides standardization in your company and also ensures that you have a secure baseline for all VMs deployed in the infrastructure, whatever flavor of OS they may be.

Regarding the patching of VMs, you should treat them like any other machine on the network. Prior to vSphere 5.1, VMware Update Manager (VUM) could provide patching for certain guest OSes, but this functionality was discontinued in vSphere 5.1. VMware has decided that it should patch only the vSphere environment and leave the guest OSes to the third-party vendors. This is actually a good thing. Most of the large OS vendors already provided patch-management functionality (think of Microsoft and Software Update Services/Windows Server Update Services [SUS/WSUS]) that offered benefits over VUM with regard to features, reliability, and OS integration. It didn't make sense for VMware to try to continue to push this functionality, in our opinion.

You don't only need to patch the VMs—you also need to patch the templates from which those VMs are deployed! Ensure that you have operational procedures in place to regularly update the templates with the latest security patches and fixes. You'll also want to test the changes to those templates before rolling them into production!
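One way to keep that operational procedure honest is to track when each template was last patched and flag the ones that have gone stale. The following sketch assumes you record a last-patched date per template yourself (for example, in a notes field or a small inventory file); the template names and the 30-day threshold are arbitrary examples.

```python
from datetime import date, timedelta


def stale_templates(templates, today, max_age_days=30):
    """Return the names of templates last patched more than max_age_days ago.

    templates: dict mapping template name -> date of the last patch cycle.
    """
    cutoff = today - timedelta(days=max_age_days)
    return sorted(name for name, last_patched in templates.items() if last_patched < cutoff)


if __name__ == "__main__":
    inventory = {
        "tmpl-windows": date(2013, 1, 1),
        "tmpl-linux": date(2013, 3, 20),
    }
    for name in stale_templates(inventory, today=date(2013, 4, 1)):
        print(f"{name} needs a patch cycle before its next deployment")
```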

Don't forget about providing endpoint protection for your VMs. You can deploy antivirus/anti-malware directly into the guest OS in every VM, but a more efficient solution might be to look at hypervisor-based protection. We mentioned vShield Endpoint in the earlier section “Firewalls in the Virtual Infrastructure,” but let's discuss it in a bit more detail here.

What is vShield Endpoint? The technology adds a layer to the hypervisor that allows you to offload antivirus and anti-malware functions to a hardened, tamper-proof security VM, thereby eliminating the need for an agent in every VM. vShield Endpoint plugs directly into vSphere and consists of a hardened security VM (delivered by VMware partners), a driver for VMs to offload file events, and the VMware Endpoint security (EPSEC) loadable kernel module (LKM) to link the first two components at the hypervisor layer.

There are multiple benefits to handling protection this way:

- You deploy an antivirus engine and signature files to a single security VM instead of every individual VM on your host.

- You free up resources on each of your VMs. Instead of having the additional CPU and RAM resources used on the VM for protecting the OS, the resource usage is offloaded to the host. This means you can raise the consolidation ratios on your hosts.

- You eliminate the occurrence of antivirus storms and bottlenecks that occur when all the VMs on the host (and the network) start their malware and antivirus scan or updates at the same time.

- You obscure the antivirus and anti-malware client software from the guest OS, thus making it harder for malware to enter the system and attack the antivirus software.

As of the writing of this book, only a few vendors offer solutions that integrate with vShield Endpoint: Kaspersky, McAfee, and Trend Micro. If you use a vendor other than one of these three, you'll have to protect your VMs the old way. And you'll have to plan accordingly for certain possibilities.

Antivirus Storms

Antivirus storms happen all the time in the physical infrastructure, but they're much more of an issue when the infrastructure is virtual. When each OS is running on its own computer, each computer endures higher CPU/RAM usage and increased disk I/O during the scan/update. But when all the OSes on a host scan/update at the same time, it can cripple a host or a storage array.

You'll have to plan how to stagger these scans over the day or week in order to spread out the load on the hosts. You can do so by creating several groups of VMs and spreading them out over a schedule or assigning them to different management servers that schedule scans/updates at different times. We won't go into the details of how to configure these settings, because they vary with each vendor's products.
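Although the vendor-specific settings differ, the grouping logic itself is simple. Here's a minimal sketch of one possible approach: a per-host round-robin that spreads the VMs on each host across the available scan windows. The VM names, host names, and time slots are hypothetical, and the sketch assumes VM placement is known and stable.

```python
from collections import defaultdict


def stagger_scans(vm_hosts, slots):
    """Assign each VM a scan slot so that VMs on the same host are spread evenly.

    vm_hosts: dict mapping VM name -> name of the host it runs on.
    slots: list of slot labels, such as start times.
    """
    if not slots:
        raise ValueError("at least one scan slot is required")
    next_index = defaultdict(int)  # per-host round-robin counter
    schedule = {}
    for vm in sorted(vm_hosts):  # sorted so the result is repeatable
        host = vm_hosts[vm]
        schedule[vm] = slots[next_index[host] % len(slots)]
        next_index[host] += 1
    return schedule


if __name__ == "__main__":
    placement = {"vm1": "esx01", "vm2": "esx01", "vm3": "esx01", "vm4": "esx02"}
    for vm, slot in stagger_scans(placement, ["01:00", "02:00"]).items():
        print(vm, slot)
```

Keep in mind that vMotion and DRS move VMs between hosts, so a schedule built from a single snapshot of placement will drift over time. Grouping by cluster or by datastore instead of by host may hold up better in a dynamic environment.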

Ensuring That Machines Are Up to Date

Each organization has its own corporate policies in place to make sure machines have the correct software and patches installed. There are several methods of achieving this.

One option is checking the OSes with a script at user login for antivirus software and up-to-date patches. If the computer isn't compliant, then the script logs off the user and alerts the help desk.

A more robust solution is Microsoft's Network Access Protection (NAP). Here, only computers that comply with a certain set of rules are allowed to access the appropriate resources. Think of it as a DMZ in your corporate network. You connect to the network, and your computer is scanned for compliance. If it isn't compliant, you're kept in the DMZ until your computer is updated; then you're allowed full access to the network. If for some reason the computer can't be made compliant, you aren't allowed out of the DMZ. This of course is only one of the solutions available today; there are other, similar ones.
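Whichever product you choose, the underlying decision is the same: evaluate the machine against a policy and grant either full or restricted access. The sketch below illustrates only that decision logic. It isn't NAP's API or any vendor's API, and the state fields and the seven-day signature threshold are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class MachineState:
    antivirus_installed: bool
    signatures_age_days: int  # days since the antivirus signatures were updated
    missing_patches: int      # count of required patches not yet installed


def access_level(state, max_signature_age_days=7):
    """Return 'full' for a compliant machine, otherwise 'quarantine'."""
    compliant = (
        state.antivirus_installed
        and state.signatures_age_days <= max_signature_age_days
        and state.missing_patches == 0
    )
    return "full" if compliant else "quarantine"


if __name__ == "__main__":
    print(access_level(MachineState(True, 2, 0)))
    print(access_level(MachineState(True, 2, 5)))
```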

Looking back at the risk example from the beginning of the section, if you had only allowed the deployment of VMs from predefined templates, Larry wouldn't have been able to create a system that was vulnerable in the first place:

- The VM would have been installed with the latest patch level.

- Antivirus software would have been deployed (automatically) to the VM, or the VM would have been protected by software at the hypervisor level.

- No rootkit could have been installed, and there would have been no loss of information.

- Your corporate web server would never have been attacked, and there would have been no outage.

And you would sleep better at night.

Protecting the Data

What would you do if someone stole a server or a desktop in your organization? How much of a security breach would it be? How would you restore the data that was lost?

Risk Scenario

Gary is a member of the IT department. He was presented with an offer he couldn't refuse, albeit an illegal one: he was approached by a competitor of yours to “retrieve” certain information from your company.

Gary knew the information was stored on a particular VM in your infrastructure. So, Gary cloned this VM—not through vCenter, but through the storage (from a snapshot), and he tried to cover his tracks. He then copied the VM off the storage to an external device and sneaked it out of the company.

Three months down the road, you find out that your competitor has somehow managed to release a product amazingly similar to the one you're planning to release this month. Investigations, audit trails, and many, many hours and dollars later, you find the culprit and begin damage-control and cleanup.

Risk Mitigation

This example may be apocryphal, and you can say that you trust your employees and team members, but things like this happen in the real world. There are people who will pay a lot of money for corporate espionage, and they have a lot to gain from it.

In this case, put on your paranoid security person hat, and think about the entry points where you could lose data.

A Complete VM

Walking out past the security guard with a 2U server and a shelf of storage under your coat or in your pocket is pretty much impossible. You'd be noticed immediately. But with virtualization, you can take a full server with up to 2 TB of data on one 3.5” hard disk, put it in your bag or in your coat pocket, and walk out the door, and no one will be any the wiser.

One of the great benefits of virtualization is the encapsulation of the server into a group of files that can be moved from one storage device to another. VMs can be taken from one platform and moved to another. This can also be a major security risk.

How do you go about protecting the data? First and foremost, trust. You have to trust the staff who have access to your sensitive data. Trust can be established via corporate procedures and guidelines. Who is allowed to access what? Where? And how?

At the beginning of the chapter, we talked about separation of duties. Giving one person too much power or too much access can leave you vulnerable. So, separation of duties here has clear benefits.

In the risk example, the clone was performed on the storage, not from the vCenter Server. vCenter provides a certain level of security and an audit trail of who did what and when, which you can use to your advantage.

You should limit access to the VM on the backend storage. The most vulnerable piece here is NFS, because the only mechanism that protects the data is the export file that defines who has what kind of access to the data. With iSCSI, the information is still accessible over the wire, but it's slightly more secure. In order to expose a logical unit number (LUN) to a host, you define the explicit LUN mask allowing the iSCSI initiator access to the LUN. And last but not least, don't forget FC. Everything we mentioned for iSCSI is the same, but you'll need a dedicated HBA to connect to a port in the fabric switch as well. This requires physical access to the datacenter, which is more difficult than connecting to a network port on a LAN and accessing the IP storage.
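Because the export definition is the only gatekeeper for NFS, it's worth auditing regularly. The following sketch assumes a Linux-style exports file, where each line holds a path followed by `client(options)` entries; storage arrays often express exports differently, so treat the parsing as an illustration. The function name, paths, and addresses are hypothetical.

```python
def risky_exports(exports_text, approved_clients):
    """Return (path, client) pairs for export entries that grant access too broadly.

    An entry is flagged when its client is a wildcard or isn't in approved_clients.
    """
    findings = []
    for line in exports_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        path, *entries = line.split()
        if not entries:
            # Assumption: an export with no client list is treated as unrestricted.
            findings.append((path, "*"))
        for entry in entries:
            # "host(rw)" -> "host"; a bare "(rw)" has no host and is treated as a wildcard.
            client = entry.split("(", 1)[0] or "*"
            if client == "*" or client not in approved_clients:
                findings.append((path, client))
    return findings


if __name__ == "__main__":
    sample = """
    # datastore exports
    /vol/datastore1 10.0.20.11(rw) 10.0.20.99(rw)
    /vol/datastore2 *(ro)
    """
    for path, client in risky_exports(sample, approved_clients={"10.0.20.11"}):
        print(f"{path}: unexpected client {client}")
```

The approved list should contain only the VMkernel addresses of the ESXi hosts that mount the datastore. This simple version compares client strings literally, so subnet and netgroup entries would need extra handling.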

How can the data be exported out of your datacenter? Possibly over the network. If that is the case, you should have measures in place alerting you to abnormal activity on the physical switches. You can use the corporate firewall to block external uploads of data.
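What counts as abnormal depends on your environment, but the idea can be illustrated with a simple statistical baseline. This sketch assumes you already collect a per-interval byte count for a switch port or host; it flags a sample that sits far above the historical average. The threshold of three standard deviations is an arbitrary starting point, not a recommendation, and real monitoring products use more sophisticated methods.

```python
from statistics import mean, stdev


def is_abnormal(history, latest, threshold=3.0):
    """Return True if latest exceeds the historical mean by more than
    threshold sample standard deviations."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    spread = stdev(history)
    if spread == 0:
        # Perfectly flat history: any increase is a deviation from the baseline.
        return latest > mean(history)
    return (latest - mean(history)) / spread > threshold


if __name__ == "__main__":
    nightly_megabytes = [100, 110, 90, 105, 95]
    print(is_abnormal(nightly_megabytes, 500))
    print(is_abnormal(nightly_megabytes, 110))
```

Copying a full VM off the storage array, as in the risk scenario, would typically show up as exactly this kind of spike, provided the baseline is measured on the storage network and not only on the VM networks.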

If this data has to be copied to physical media, you can limit which devices are able to connect to USB ports on which computers and with which credentials. A multitude of security companies thrive on security paranoia (which is a good thing).

Backup Sets

What about the backup set of your data? Most organizations have more than one backup set. What good are your backups if they're stored in the same location as your production environment and the building explodes? To be safe, you need a business continuity planning/disaster recovery (BCP/DR) site.

And here comes the security concern: how is data transported to this site? Trucking the data may be an option, but you'll have to ensure the safety and integrity of the transport each time the backups are moved offsite, whether you use sealed envelopes, armed guards, a member of your staff, or the network. When sending data over the network, you should ensure that your traffic is secured and encrypted. You should choose which solution is suited for you and take the measures to protect the transport.
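Whatever the transport, you can confirm integrity on arrival by comparing a cryptographic digest computed before the backup left the building with one computed at the destination. The sketch below uses SHA-256 from Python's standard library; the function names and the file path are illustrative.

```python
import hashlib


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_backup(path, expected_digest):
    """Return True if the file at path still matches the digest recorded at the source."""
    return sha256_of_file(path) == expected_digest


if __name__ == "__main__":
    import sys

    # Usage: python verify_backup.py <backup file> <digest recorded at the source>
    backup_path, recorded = sys.argv[1], sys.argv[2]
    print("intact" if verify_backup(backup_path, recorded) else "MISMATCH")
```

A digest proves only that the data hasn't changed; it does nothing for confidentiality, so it complements encryption and doesn't replace it. The recorded digest also needs to reach the DR site by a separate, trusted channel, or anyone who tampers with the backup can simply replace the digest as well.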

Virtual Machine Data

In this case, there are no significant differences between a VM that is accessible from the network and its counterpart, the physical server. You should limit network access to your servers to those who need and have the correct authority to access these files. The ways of exporting the files from the VM are the same as with a full VM, as we discussed earlier.

Back to your “faithful” employee, Gary. Gary shouldn't have been granted the same level of access to all the components, the virtual infrastructure, and the storage backend. Your security measures should have detected the large amount of traffic that was being migrated out of the storage array to a non-authorized host. In addition, Gary shouldn't have been working in your organization in the first place. Some organizations periodically conduct compulsory polygraph tests for IT personnel who have access to confidential information.

And last but not least, you should protect your information. If data is sensitive and potentially damaging if it falls into the wrong hands, then you should take every measure possible to secure it. Be alert to every abnormal (or even normal) attempt to access the data in any way or form.

Cloud Computing

Any virtualization book published in this day and age that doesn't mention cloud computing is pretty bizarre, so let's not deviate from this norm.

Risk Scenario

Carrie set up the internal cloud in your organization. She also contracted the services of a provider to supply virtual computing infrastructure somewhere else, to supplement the internal cloud's capacity when needed and in a cost-effective manner.

Due to a security issue at the cloud provider, there was a security breach, and your VMs were compromised. In addition, because the VMs were connected to your organization, an attack was launched from these hosts into your corporate network, which caused additional damage.

Risk Mitigation

VMware announced its private/public cloud solution at VMworld 2010. Since then, several large providers have begun to supply cloud computing services to the public using VMware vCloud. In addition, Amazon's Elastic Compute Cloud (EC2) has seen tremendous uptake, and many organizations—both large and small—are supplementing their compute capacity with instances running in Amazon's datacenters.

Again, put on your paranoid thinking cap. What information do you have in your organization? HR records, intellectual property, financial information—the list can get very long. For each type of information, you'll have restrictions.

But how safe is it to have your data up in the cloud? That depends on your answers to a few questions.

Control

Do you have control over what is happening on the infrastructure that isn't in your location? What level of control? Who else can access the VMs? These are questions you should ask yourself; then, think very carefully about the answers. Would you like someone to access the data you have stored at a provider? What measures can be taken to be sure this won't happen? How can you ensure that no one can access the VMs from the ESXi hosts on which the VMs are stored?

These are all possible scenarios and security vulnerabilities that exist in your organization as well—but in this case, the machines are located outside your network, outside your company, outside your city, and possibly in a different country.

Using the cloud may also present several different legal issues. Here's an example. You have a customer-facing application on a server somewhere in a cloud provider's datacenter. The same provider also served a client that turned out to be performing illegal activities. The authorities issued a court order allowing law-enforcement agencies to seize the offender's data, but instead the agencies took a full rack of servers from the datacenter. What if you had a VM on one of the servers in that rack? This is a true story that happened in Dallas in 2009. It may be pessimistic, but it's better to be safe than sorry.

Here's something else to consider: will you ever have the same level of control over a server in the cloud that you have in your local datacenter, and what do you need to do to reach that same level? If you'll never have the same level of control, what amount of exposure are you willing to risk by using an external provider?

Data Transfer

Suppose you've succeeded in acquiring the correct amount of control over your server in the cloud. Now you have to think about how to transfer the data back and forth between your corporate network and the cloud.

You'll have to ensure the integrity and security of the transfer, which means a secure tunnel between your network and the cloud. This isn't a task to be taken lightly; it will require proper and thorough planning. You also need to decide whether the rules governing data that flows from your organization out to the cloud should be the same as the rules governing data that flows from the cloud back into your corporate network.

Back to the risk example. Carrie should have found a sound and secure provider, one with a solid reputation and good security. In addition, she should have set up the correct firewall rules to allow only certain kinds of traffic back into the corporate network, and made sure that only the information that absolutely needed to be located in the cloud was there.
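The idea of keeping separate rule sets for each direction can be sketched in a few lines of Python. This is only an illustration, not a firewall configuration: the `Flow` class, the `ALLOWED` table, and the ports listed in it are hypothetical names and values chosen for the example. The point it shows is that anything not explicitly allowed is denied, and that the inbound list is shorter than the outbound one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """One traffic flow between the corporate network and the cloud."""
    direction: str  # "outbound" (corporate -> cloud) or "inbound" (cloud -> corporate)
    protocol: str
    port: int


# Hypothetical policy: the ports are placeholders, not a recommendation.
ALLOWED = {
    "outbound": {("tcp", 443), ("tcp", 22)},
    "inbound": {("tcp", 443)},
}


def is_allowed(flow, rules=ALLOWED):
    """Default deny: a flow passes only if its direction lists it explicitly."""
    return (flow.protocol, flow.port) in rules.get(flow.direction, set())
```

With this table, `is_allowed(Flow("outbound", "tcp", 22))` passes, whereas the same protocol and port arriving from the cloud is rejected.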

The future will bring many different solutions to provide these kinds of services and to secure them as well. Because this technology is only starting to become a reality, the dangers we can plan for are only the ones known today. Who knows what the future will hold?

Auditing and Compliance

Many companies have to comply with certain standards and regulatory requirements, such as HIPAA, the Sarbanes-Oxley Act, the Data Privacy and Protection Act, ISO 17799, the PCI Data Security Standard, and so on. As in the section “Virtual Firewall,” this isn't so much a question of risk as it is a problem and solution.

The Problem

You've put your virtual infrastructure in place, and you continue to use it and deploy it further. You add hosts, import some VMs, add new storage, deploy a new cluster—things change, and things grow. And with a virtual infrastructure, the setup is more dynamic than before. How do you make sure everything is the way it should be? Are all your settings correct? How do you track all these changes?

The Solution

There are several ways you can ease your way into creating a compliant and standardized environment, including using host profiles, collecting centralized logs, and performing security audits.

Host Profiles

VMware has incorporated a feature called Host Profiles that does exactly what its name says. You can define a profile for a host and then apply the profile to a host, to a cluster, and even to all the hosts in your environment.

A profile is a collection of settings that you configure on a host. For example:

- vSwitch creation

- VMkernel creation

- Virtual machine port group creation

- NTP settings

- Local users and groups

This is just a short list of what you can do with host profiles. Beyond configuring the hosts to which a profile is attached, Host Profiles also alerts you when a change is made to a host that causes it to no longer be compliant.

For example, someone may add a new datastore to the ESXi host or change the name of a port group. Why are these changes important? Because in both cases, the changes weren't made across all the hosts in the cluster. vMotion may fail; or it may succeed, but if the destination host doesn't have the port group, the VM will be disconnected from the network after the migration.

Using Host Profiles can ease your deployment of hosts and keep them in compliance with a standard configuration.
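To make the idea of a compliance check concrete, here is a minimal sketch in Python of what comparing hosts against a reference profile amounts to. It doesn't use the Host Profiles feature or any VMware API; the `compliance_report` function, the setting names, and the host names are all hypothetical, and the host settings are assumed to have been collected already into plain dictionaries.

```python
def compliance_report(profile, hosts):
    """Return {host: {setting: (expected, actual)}} for every host that drifted."""
    report = {}
    for name, settings in hosts.items():
        drift = {
            key: (expected, settings.get(key))
            for key, expected in profile.items()
            if settings.get(key) != expected
        }
        if drift:
            report[name] = drift
    return report


# Hypothetical reference profile and host snapshots.
PROFILE = {
    "ntp_servers": ("ntp1.example.com",),
    "port_groups": frozenset({"Production", "vMotion"}),
    "datastores": frozenset({"ds01", "ds02"}),
}

HOSTS = {
    "esx01": dict(PROFILE),
    # Someone renamed a port group on this host only.
    "esx02": {**PROFILE, "port_groups": frozenset({"Prod", "vMotion"})},
    # Someone added a datastore on this host only.
    "esx03": {**PROFILE, "datastores": frozenset({"ds01", "ds02", "ds03"})},
}

REPORT = compliance_report(PROFILE, HOSTS)
```

Here `REPORT` contains entries for `esx02` and `esx03` but not for `esx01`, which mirrors the two examples above: a renamed port group and a datastore added to a single host.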

Centralized Log Collection

Each ESXi host is capable of sending its logs to a syslog server. The benefit of doing so is to have a central location with the logs of all the hosts in your environment. It's easier to archive these logs from one location, easier to analyze them, and easier to perform root-cause analysis, instead of having to go to every host in the environment. The use of a centralized logging facility is even more critical when you move to vSphere 5.0 or later, where only ESXi is present. Depending on how the host is installed, ESXi may keep its logs only in memory, in which case they don't survive a system reboot or failure. This can be particularly problematic if an outage was unexpected and you have no logs from before the outage. A syslog server will be a critical element in your design, now that ESXi is the default hypervisor.
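As a rough illustration of what the central side can do with the collected messages, the following Python sketch splits each line into its parts and groups the lines by sending host. The line layout (`<PRI>TIMESTAMP HOST MESSAGE`) is a simplified assumption made for this example, and `parse_line` and `group_by_host` are hypothetical helper names. The only part taken from the syslog standard is that the priority value encodes the facility and the severity as facility × 8 + severity.

```python
import re
from collections import defaultdict

# Assumed, simplified layout: "<PRI>TIMESTAMP HOST MESSAGE"
LINE = re.compile(r"^<(\d{1,3})>(\S+) (\S+) (.*)$")

# Standard syslog severity names, indexed by severity code 0-7.
SEVERITIES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]


def parse_line(line):
    """Split one syslog line into its fields, or return None if it doesn't match."""
    match = LINE.match(line)
    if match is None:
        return None
    pri = int(match.group(1))
    return {
        "facility": pri // 8,
        "severity": SEVERITIES[pri % 8],
        "timestamp": match.group(2),
        "host": match.group(3),
        "message": match.group(4),
    }


def group_by_host(lines):
    """Group parsed lines by sending host; keep unparsed lines instead of dropping them."""
    by_host = defaultdict(list)
    unparsed = []
    for line in lines:
        record = parse_line(line)
        if record is None:
            unparsed.append(line)
        else:
            by_host[record["host"]].append(record)
    return dict(by_host), unparsed
```

Grouping by host in one place is what makes it practical to pull up the last messages a host sent before an unexpected outage.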

Security Audits

You should conduct regular audits of your environment. They should include all components your environment uses: storage, network, vCenter, ESXi, and VMs. You shouldn't rely only on the external audit you undergo once a year. If something has changed and the change is a security risk, you don't want to wait until it's discovered in the external audit (if you're lucky) or is exploited (in which case you aren't so lucky anymore).

As we said earlier, environments aren't static: they evolve, and at a faster pace than you may think. The more hands that delve into the environment, the more changes are made, and the bigger the chance of something falling out of compliance with your company policy.

Create a checklist of things to check. For example:

- Are all the hosts using the correct credentials?

- Are the network settings on each host the same?

- Are the LUN masks/NFS export permissions correct?

- Which users have permissions to the vCenter Server, and what permissions do they have?

- Are there dormant machines that are no longer in use?

Your list should be composed of the important issues in your environment. It shouldn't be used in place of other monitoring or compliance tools that you already have in place, but as an additional measure to secure your environment.
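Two of the checklist items above lend themselves to a simple automated check. The following Python sketch is one possible way to express them, assuming you have already exported an inventory of VMs and a list of vCenter permissions into plain Python data. The function names, the dictionary fields, and the 90-day threshold are hypothetical choices for this example and don't correspond to any VMware API.

```python
from datetime import date, timedelta


def dormant_vms(vms, today, max_idle_days=90):
    """Names of powered-off VMs that were last powered on before the cutoff date."""
    cutoff = today - timedelta(days=max_idle_days)
    return sorted(
        vm["name"]
        for vm in vms
        if not vm["powered_on"] and vm["last_powered_on"] < cutoff
    )


def unexpected_admins(permissions, approved):
    """Users holding the Administrator role who aren't on the approved list."""
    return sorted(
        entry["user"]
        for entry in permissions
        if entry["role"] == "Administrator" and entry["user"] not in approved
    )


# Hypothetical exported data.
VMS = [
    {"name": "db01", "powered_on": True, "last_powered_on": date(2013, 5, 30)},
    {"name": "test-old", "powered_on": False, "last_powered_on": date(2013, 1, 15)},
    {"name": "web-spare", "powered_on": False, "last_powered_on": date(2013, 5, 20)},
]
PERMISSIONS = [
    {"user": "alice", "role": "Administrator"},
    {"user": "bob", "role": "Read-only"},
    {"user": "temp-contractor", "role": "Administrator"},
]

FINDINGS = {
    "dormant_vms": dormant_vms(VMS, today=date(2013, 6, 1)),
    "unexpected_admins": unexpected_admins(PERMISSIONS, approved={"alice"}),
}
```

Running checks like these on a schedule, rather than once a year, is what turns the checklist into an ongoing audit.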

Summary

Throughout this chapter, you've seen different aspects of security and why it's important to keep your environment up to date and secure. You must protect your environment at every level: guest, host, storage, network, vCenter, and even outside your organization up in the cloud. Identifying your weakest link or softest spot and addressing the issue is your way to provide the proper security for your virtual infrastructure.

In the next chapter, we'll go into monitoring and capacity management.