CHAPTER 12 Sparsity in Redundant Dictionaries

Complex signals such as audio recordings or images often include structures that are not well represented by few vectors in any single basis. Indeed, small dictionaries such as bases have a limited capability of sparse expression. Natural languages build sparsity from large redundant dictionaries of words, which evolve in time. Biological perception systems also seem to incorporate robust and redundant representations that generate sparse encodings at later stages. Larger dictionaries incorporating more patterns can increase sparsity and thus improve applications to compression, denoising, inverse problems, and pattern recognition.

Finding the set of M dictionary vectors that approximate a signal with a minimum error is NP-hard in redundant dictionaries. Thus, it is necessary to rely on “good” but nonoptimal approximations, obtained with computational algorithms. Several strategies and algorithms are investigated. Best-basis algorithms restrict the approximations to families of orthogonal vectors selected in dictionaries of orthonormal bases. They lead to fast algorithms, illustrated with wavelet packets, local cosine, and bandlet orthonormal bases. To avoid the rigidity of orthogonality, matching pursuits find freedom in greediness. One by one they select the best approximation vectors in the dictionary. But greediness has it own pitfalls. A basis pursuit implements more global optimizations, which enforce sparsity by minimizing the l1 norm of decomposition coefficients.

Sparse signal decompositions in redundant dictionaries are applied to noise removal, signal compression, and pattern recognition, and multichannel signals such as color images are studied. Pursuit algorithms can nearly reach optimal M-term approximations in incoherent dictionaries that include vectors that are sufficiently different. Learning and updating dictionaries are studied by optimizing the approximation of signal examples.

12.1 IDEAL SPARSE PROCESSING IN DICTIONARIES

Computing an optimal M-term approximation in redundant dictionaries is computationally intractable, but it sets a goal that will guide most of the following sections and algorithms. The resulting compression algorithms and denoising estimators are described in Sections 12.1.2 and 12.1.3.

12.1.1 Best M-Term Approximations

Let ![]() be a dictionary of P unit norm vectors

be a dictionary of P unit norm vectors ![]() in a signal space

in a signal space ![]() N. We study sparse approximations of f ∈

N. We study sparse approximations of f ∈ ![]() N with vectors selected in D. Let

N with vectors selected in D. Let ![]() be a subset of vectors in D. We denote by |Λ| the cardinal of the index set Λ. The orthogonal projection of f on the space VΛ; generated by these vectors is

be a subset of vectors in D. We denote by |Λ| the cardinal of the index set Λ. The orthogonal projection of f on the space VΛ; generated by these vectors is

The set ![]() is called the support of the approximation coefficients a[p]. Its cardinal

is called the support of the approximation coefficients a[p]. Its cardinal ![]() is the 10 pseudo-norm giving the number of nonzero coefficients of a. This support carries geometrical information about f relative to D. In a wavelet basis, it gives the multiscale location of singularities and edges. In a time-frequency dictionary, it provides the location of transients and time-frequency evolution of harmonics.

is the 10 pseudo-norm giving the number of nonzero coefficients of a. This support carries geometrical information about f relative to D. In a wavelet basis, it gives the multiscale location of singularities and edges. In a time-frequency dictionary, it provides the location of transients and time-frequency evolution of harmonics.

The best M-term approximation fΛ; minimizes the approximation error ‖f–fΛ‖ with |Λ| = M dictionary vectors. If D is an orthonormal basis, then Section 9.2.1 proves that the best approximation vectors are obtained by thresholding the orthogonal signal coefficients at some level T. This is not valid if D is redundant, but Theorem 12.1 proves that a best approximation is still obtained by minimizing an 10 Lagrangian where T appears as a Lagrange multiplier:

This Lagrangian penalizes the approximation error ‖f–fΛ‖2 by the number of approximation vectors.

Theorem 12.1.

is a best approximation support, which satisfies for all ![]() ,

,

Proof.

The minimization (12.3) implies that any ![]() satisfies

satisfies

Therefore, if ![]() , then

, then ![]() , which proves (12.4).

, which proves (12.4).

This theorem proves in (12.4) that minimizing the 10 Lagrangian yields a best approximation fM = fΛT of f with M = |ΛT| terms in D. The decay of the approximation error is controlled in (12.5) by the Lagrangian decay as a function of T. If D is an orthonormal basis, Theorem 12.2 derives that the resulting approximation is a thresholding at T.

NP-Hard Support Covering

In general, computing the approximation support (12.3) which minimizes the 10 Lagrangian is proved by Davis, Mallat, and Avellaneda [201] to be an NP-hard problem. This means that there exists dictionaries where finding this solution belongs to a class of NP-complete problems, for which it has been conjectured for the last 40 years that the solution cannot be found with algorithms of polynomial complexity.

The proof [201] shows that for particular dictionaries, finding a best approximation is equivalent to a set-covering problem, which is known to be NP-hard. Let us consider a simple dictionary ![]() with vectors having exactly three nonzero coordinates in an orthonormal basis

with vectors having exactly three nonzero coordinates in an orthonormal basis ![]() ,

,

If the sets ![]() define an exact partition of a subset Ω of {0,…, N – 1}, then

define an exact partition of a subset Ω of {0,…, N – 1}, then ![]() has an exact and optimal dictionary decomposition:

has an exact and optimal dictionary decomposition:

Finding such an exact decomposition for any Ω, if it exists, is an NP-hard three-sets covering problem. Indeed, the choice of one element in the solution influences the choice of all others, which essentially requires us to try all possibilities. This argument shows that redundancy makes the approximation problem much more complex. In some dictionaries such as orthonormal bases, it is possible to find optimal M-term approximations with fast algorithms, but these are particular cases.

Since optimal solutions cannot be calculated exactly, it is necessary to find algorithms of reasonable complexity that find “good” if not optimal solutions. Section 12.2 describes the search for optimal solutions restricted to sets of orthogonal vectors in well-structured tree dictionaries. Sections 12.3 and 12.4 study pursuit algorithms that search for more flexible and thus nonorthogonal sets of vectors, but that are not always optimal. Pursuit algorithms may yield optimal solutions, if the optimal support Λ satisfies exact recovery properties (studied in Section 12.5).

12.1.2 Compression by Support Coding

Chapter 10 describes transform code algorithms that quantize and code signal coefficients in an orthonormal basis. Increasing the dictionary size can reduce the approximation error by offering more choices. However, it also increases the number of bits needed to code which approximation vectors compress a signal. Optimizing the distortion rate is a trade-off between both effects.

We consider a transform code that approximates f by its orthogonal projection fΛ on the space VΛ generated by the dictionary vectors ![]() , and that quantizes the resulting coefficients. The quantization error is reduced by orthogonalizing the family

, and that quantizes the resulting coefficients. The quantization error is reduced by orthogonalizing the family ![]() , for example, with a Gram-Schmidt algorithm, which yields an orthonormal basis

, for example, with a Gram-Schmidt algorithm, which yields an orthonormal basis ![]() of VΛ. The orthogonal projection on VΛ can then be written as

of VΛ. The orthogonal projection on VΛ can then be written as

These coefficients are uniformly quantized with

and the signal recovered from quantized coefficients is

The set Λ is further restricted to coefficients ![]() , and thus

, and thus ![]() , and thus, which has no impact on

, and thus, which has no impact on ![]() .

.

Distortion Rate

Let us compute the distortion rate as a function of the dictionary size P. The compression distortion is decomposed in an approximation error plus a quantization error:

With (12.11), we derive that the coding distortion is smaller than the 10 Lagrangian (12.2):

This result shows that minimizing the 10 Lagrangian reduces the compression distortion.

Having a larger dictionary offers more possibilities to choose A and further reduce the Lagrangian. Suppose that some optimization process finds an approximation support ΛT such that

where C and s depend on the dictionary design and size. The number M of nonzero quantized coefficients is ![]() . Thus, the distortion rate satisfies

. Thus, the distortion rate satisfies

where R is the total number of bits required to code the quantized coefficients of ![]() with a variable-length code.

with a variable-length code.

As in Section 10.4.1, the bit budget R is decomposed into R0 bits that code the support set ![]() , plus R1 bits to code the M nonzero quantized values Q({f, gp)) for p ∈ Λ. Let P be the dictionary size. We first code M = |ΛT| ≤ P with log2 P bits. There are

, plus R1 bits to code the M nonzero quantized values Q({f, gp)) for p ∈ Λ. Let P be the dictionary size. We first code M = |ΛT| ≤ P with log2 P bits. There are ![]() subsets of size M in a set of size P. Coding ΛT without any other prior geometric information thus requires

subsets of size M in a set of size P. Coding ΛT without any other prior geometric information thus requires ![]() bits. As in (10.48), this can be implemented with an entropy coding of the binary significance map

bits. As in (10.48), this can be implemented with an entropy coding of the binary significance map

The proportion pk of quantized coefficients of amplitude |QΔ(f, gp))| = kΔ typically has a decay of pk = (k−1+ε) for ε > 0, as in (10.57). We saw in (10.58) that coding the amplitude of the M nonzero coefficients with a logarithmic variable length lk = log2 (π2/6) + 2 log2 k, and coding their sign, requires a total number of bits R1 ~ M bits. For M ![]() P, it results that the total bit budget is dominated by the number of bits R0 to code the approximation support ΛT,

P, it results that the total bit budget is dominated by the number of bits R0 to code the approximation support ΛT,

and hence that

For a distortion satisfying (12.15), we get

When coding the approximation support ΛT in a large dictionary of size P as opposed to an orthonormal basis of size N, it introduces a factor log2 P in the distortion rate (12.17) instead of the log2N factor in (10.8). This is worth it only if it is compensated by a reduction of the approximaton constant C or an increase of the decay exponent s.

Distortion Rate for Analog Signals

A discrete signal f [n] is most often obtained with a linear discretization that projects an analog signal ![]() (x) on an approximation space UN of size N. This linear approximation error typically decays like O(N−β). From the discrete compressed signal

(x) on an approximation space UN of size N. This linear approximation error typically decays like O(N−β). From the discrete compressed signal ![]() [n], a discrete-to-analog conversion restores an analog approximation fN(x) ∈UN of

[n], a discrete-to-analog conversion restores an analog approximation fN(x) ∈UN of ![]() (x).

(x).

Let us choose a discrete resolution N~R(2s−1)/β. If the dictionary has a polynomial size P=O(Nγ), then similar to (10.62), we derive from (12.17) that

Thus, the distortion rate in a dictionary of polynomial size essentially depends on the constant C and the exponent s of the 10 Lagrangian decay ![]() in (12.14). To optimize the asymptotic distortion rate decay, one must find dictionaries of polynomial sizes that maximize s. Section 12.2.4 gives an example of a bandlet dictionary providing such optimal approximations for piecewise regular images.

in (12.14). To optimize the asymptotic distortion rate decay, one must find dictionaries of polynomial sizes that maximize s. Section 12.2.4 gives an example of a bandlet dictionary providing such optimal approximations for piecewise regular images.

12.1.3 Denoising by Support Selection in a Dictionary

A hard thresholding in an orthonormal basis is an efficient nonlinear projection estimator, if the basis defines a sparse signal approximation. Such estimators can be improved by increasing the dictionary size. A denoising estimator in a redundant dictionary also projects the observed data on a space generated by an optimized set Λ of vectors. Selecting this support is more difficult than for signal approximation or compression because the noise impacts the choice of Λ. The model selection theory proves that a nearly optimal set is estimated by minimizing the 10 Lagrangian, with an appropriate multiplier T.

Noisy signal observations are written as

where W[n] is a Gaussian white noise of variance σ2. Let ![]() be a dictionary of P-unit norm vectors. To any subfamily of vectors,

be a dictionary of P-unit norm vectors. To any subfamily of vectors, ![]() corresponds an orthogonal projection estimator on the space VΛ generated by these vectors:

corresponds an orthogonal projection estimator on the space VΛ generated by these vectors:

The orthogonal projection in VΛ satisfies ![]() , so

, so

The bias term ‖f–f‖2 is the signal approximation error, which decreases when |Λ| increases. On the contrary, the noise energy ‖WΛ‖2 in VΛ increases when |Λ| increases. Reducing the risk amounts to finding a projection support A that balances these two terms to minimize their sum.

Since VΛ is a space of dimension |Λ|, the projection of a white noise of variance σ2 satisfies E{‖WΛ‖2} = |Λ| σ2. However, there are 2P possible subsets Λ in Γ, and ‖WΛ;‖2 may potentially take much larger values than |Λ|σ2 for some particular sets Λ. A concentration inequality proved in Lemma 12.1 of Theorem 12.3 shows that for any subset Λ of Γ,

with a probability that tends to 1 as P increases for Λ large enough. It results that the estimation error is bounded by the approximation Lagrangian:

However, the set ΛT that minimizes this Lagrangian,

can only be found by an oracle because it depends on f, which is unknown. Thus, we need to find an estimator that is nearly as efficient as the oracle projector on this subset ΛT of vectors.

Penalized Empirical Error

Estimating the oracle set ΛT in (12.21) requires us to estimate ‖f−fΛ‖2 for any Λ ⊂ Γ. A crude estimator is given by the empirical norm

This may seem naive because it yields a large error,

Since X = f + W and ![]() , the expected error is

, the expected error is

which is of the order of Nσ2 if |Λ| ![]() N. However, the first large term does not influence the choice of Λ. The component that depends on Λ is the smaller term

N. However, the first large term does not influence the choice of Λ. The component that depends on Λ is the smaller term ![]() , which is only of the order |Λ|σ2.

, which is only of the order |Λ|σ2.

Thus, we estimate ![]() with

with ![]() in the oracle formula (12.21), and define the best empirical estimation

in the oracle formula (12.21), and define the best empirical estimation ![]() as the orthogonal projection on a space

as the orthogonal projection on a space ![]() , where

, where ![]() minimizes the penalized empirical risk:

minimizes the penalized empirical risk:

Theorem 12.3 proves that this estimated set ![]() yields a risk that is within a factor of 4 of the risk obtained by the oracle set ΛT in (12.21). This theorem is a consequence of the more general model selection theory of Barron, Birge, and Massart [97], where the optimization of Λ is interpreted as a model selection. Theorem 12.3 was also proved by Donoho and Johnstone [220] for estimating a best basis in a dictionary of orthonormal bases.

yields a risk that is within a factor of 4 of the risk obtained by the oracle set ΛT in (12.21). This theorem is a consequence of the more general model selection theory of Barron, Birge, and Massart [97], where the optimization of Λ is interpreted as a model selection. Theorem 12.3 was also proved by Donoho and Johnstone [220] for estimating a best basis in a dictionary of orthonormal bases.

Theorem 12.3: Barron, Birgé, Massart, Donoho, Johnstone. Let σ2 be the noise variance and ![]() with

with ![]() . For any f ∈

. For any f ∈ ![]() N, the best empirical set

N, the best empirical set

yields a projection estimator ![]() of f, which satisfies

of f, which satisfies

Proof.

Concentration inequalities are at the core of this result. Indeed, the penalty T2 |Λ| must dominate the random fluctuations of the projected noise. We give a simplified proof provided in [233]. Lemma 12.1 uses a concentration inequality for Gaussian variables to ensure with high probability that the noise energy is simultaneously small in all the subspaces VΛ spanned by subsets of vectors in D.

Lemma 12.1.

with a probability greater than 1−2e−u/P.

This lemma is based on Tsirelson’s lemma which proves that for any function L from ![]() N to

N to ![]() that is 1-Lipschitz (|L(f)−L(g)| ≤ ‖f−g‖), and for any normalized Gaussian white noise vector W of variance σ2 = 1,

that is 1-Lipschitz (|L(f)−L(g)| ≤ ‖f−g‖), and for any normalized Gaussian white noise vector W of variance σ2 = 1,

The orthogonal projection’s norm L(W) = ‖WΛ;‖ is 1-Lipschitz. Applying Tsirelson’s lemma to ![]() for

for ![]() yields

yields

Let us now compute the probability of the existence of a set ![]() that does not satisfy the lemma condition (12.25), by considering each subset of Γ:

that does not satisfy the lemma condition (12.25), by considering each subset of Γ:

from which we get (12.25) by observing that ![]() because

because ![]() . This finishes the proof of Lemma 12.1.

. This finishes the proof of Lemma 12.1.

By construction, the best empirical set ![]() compared to the oracle set ΛT in (12.21) satisfies

compared to the oracle set ΛT in (12.21) satisfies

By using ![]() and a similar equality for

and a similar equality for ![]() together with the equalities

together with the equalities ![]() and

and ![]() , we derive that

, we derive that

The vectors ![]() generate a space

generate a space ![]() of dimension smaller or equal to

of dimension smaller or equal to ![]() . We denote by

. We denote by ![]() the orthogonal projection of the noise W on this space. The inner product is bounded by writing

the orthogonal projection of the noise W on this space. The inner product is bounded by writing

Lemma 12.1 implies

with a probability greater than ![]() . Applying

. Applying ![]() successively with β = 1/2 and β = 1 gives

successively with β = 1/2 and β = 1 gives

Inserting this bound in (12.26) yields

So that if ![]() ,

,

which implies for ![]() that

that

where this result holds with probability greater than ![]() .

.

Since this is valid for all u ≤ 0, one has

which implies by integration over u that

which proves the theorem result (12.24).

This theorem proves that the selection of a best-penalized empirical projection produces a risk that is within a factor of 4 of the minimal oracle risk obtained by selecting the best dictionary vectors that approximate f. Birge and Massart [114] obtain a better lower bound for Λ (roughly ![]() and thus

and thus ![]() ) and a multiplicative factor smaller than 4 with a more complex proof using Talgrand’s concentration inequalities.

) and a multiplicative factor smaller than 4 with a more complex proof using Talgrand’s concentration inequalities.

If D is an orthonormal basis, then Theorem 12.2 proves that the optimal estimator ![]() is a hard-thresholding estimator at T. Thus, this theorem generalizes the thresholding estimation theorem (11.7) of Donoho and Johnstone that computes an upper bound of the thresholding risk in an orthonormal basis with P = N.

is a hard-thresholding estimator at T. Thus, this theorem generalizes the thresholding estimation theorem (11.7) of Donoho and Johnstone that computes an upper bound of the thresholding risk in an orthonormal basis with P = N.

The minimum Lagrangian value L0(T, f, ΛT) is reduced by increasing the size of the dictionary D. However, this is paid by also increasing T proportionally to logeP, so that the penalization term T2|Λ| is big enough to dominate the impact of the noise on the selection of dictionary vectors. Increasing D is thus worth it only if the decay of L0(T, f, ΛT) compensates the increase of T, as in the compression application of Section 12.1.2.

Estimation Risk for Analog Signals

Discrete signals are most often obtained by discretizing analog signals, and the estimation risk can also be computed on the input analog signal, as in Section 11.5.3. Let f[n] be the discrete signal obtained by approximating an analog signal f(x) in an approximation space UN of size N. An estimator ![]() of f[n] is converted into an analog estimation F(x) ∈ UN of f(x), with a discrete-to-analog conversion. We verify as in (11.141) that the total risk is the sum of the discrete estimation risk plus a linear approximaton error:

of f[n] is converted into an analog estimation F(x) ∈ UN of f(x), with a discrete-to-analog conversion. We verify as in (11.141) that the total risk is the sum of the discrete estimation risk plus a linear approximaton error:

Suppose that the dictionary has a polynomial size P = O(Nγ) and that the 10 Lagrangian decay satisfies

If the linear approximation error satisfies ![]() , then by choosing

, then by choosing ![]() , we derive from (12.24) in Theorem 12.3 that

, we derive from (12.24) in Theorem 12.3 that

When the noise variance σ2 decreases, the risk decay depends on the decay exponent s of the 10 Lagrangian. Optimized dictionaries should thus increase s as much as possible for any given class Θ of signals.

12.2 DICTIONARIES OF ORTHONORMAL BASES

To reduce the complexity of sparse approximations selected in a redundant dictionary, this section restricts such approximations to families of orthogonal vectors. Eliminating approximations from nonorthogonal vectors reduces the number of possible approximation sets Λ in D, which simplifies the optimization. In an orthonormal basis, an optimal nonlinear approximation selects the largest-amplitude coefficients. Dictionaries of orthonormal bases take advantage of this property by regrouping orthogonal dictionary vectors in a multitude of orthonormal bases.

Definition 12.1. A dictionary D is said to be a dictionary of orthonormal bases of ![]() N if any family of orthogonal vectors in D also belongs to an orthonormal basis B of

N if any family of orthogonal vectors in D also belongs to an orthonormal basis B of ![]() N included in D.

N included in D.

A dictionary of orthonormal bases ![]() is thus a family of P>N vectors that can also be viewed as a union of orthonormal bases, many of which share common vectors. Wavelet packets and local cosine bases in Chapter 8 define dictionaries of orthonormal bases. In Section 12.2.1 we prove that finding a best signal approximation with orthogonal dictionary vectors can be casted as a search for a best orthonormal basis in which orthogonal vectors are selected by a thresholding. Compression and denoising algorithms are implemented in such a best basis. Tree-structured dictionaries are introduced in Section 12.2.2, in order to compute best bases with a fast dynamic programming algorithm.

is thus a family of P>N vectors that can also be viewed as a union of orthonormal bases, many of which share common vectors. Wavelet packets and local cosine bases in Chapter 8 define dictionaries of orthonormal bases. In Section 12.2.1 we prove that finding a best signal approximation with orthogonal dictionary vectors can be casted as a search for a best orthonormal basis in which orthogonal vectors are selected by a thresholding. Compression and denoising algorithms are implemented in such a best basis. Tree-structured dictionaries are introduced in Section 12.2.2, in order to compute best bases with a fast dynamic programming algorithm.

12.2.1 Approximation, Compression, and Denoising in a Best Basis

Sparse approximations of signals f ∈ ![]() N are constructed with orthogonal vectors selected from a dictionary

N are constructed with orthogonal vectors selected from a dictionary ![]() of orthonormal bases with compression and denoising applications.

of orthonormal bases with compression and denoising applications.

Best Basis

We denote by Λo ⊂ γ a collection of orthonormal vectors in D. Sets of nonorthogonal vectors are not considered. The orthogonal projection of f on the space generated by these vectors is then ![]() .

.

In an orthonormal basis ![]() , Theorem 12.2 proves that the Lagrangian

, Theorem 12.2 proves that the Lagrangian ![]() is minimized by selecting coefficients above T. The resulting minimum is

is minimized by selecting coefficients above T. The resulting minimum is

Theorem 12.4 derives that a best approximation from orthogonal vectors in D is obtained by thresholding coefficients in a best basis that minimizes this 10 Lagrangian.

Theorem 12.4.

the thresholded set ![]() satisfies

satisfies

and for all Λo ⊂ γ,

Proof.

Since any vector in D belongs to an orthonormal basis B ⊂ D, we can write ![]() , so (12.28) with (12.29) implies (12.30). The optimal approximation result (12.31), like (12.4), comes from the fact that

, so (12.28) with (12.29) implies (12.30). The optimal approximation result (12.31), like (12.4), comes from the fact that ![]() .

.

This theorem proves in (12.31) that the thresholding approximation ![]() of f in the best orthonormal basis BT is the best approximation of f from M = |ΛT| orthogonal vectors in D.

of f in the best orthonormal basis BT is the best approximation of f from M = |ΛT| orthogonal vectors in D.

Compression in a Best Orthonormal Basis

Section 12.1.2 shows that quantizing signal coefficients over orthogonal dictionary vectors ![]() yields a distortion

yields a distortion

where 2T = Δ is the quantization step. A best transform code that minimizes this Lagrangian upper bound is thus implemented in the best orthonormal basis ![]() T in (12.29).

T in (12.29).

With an entropy coding of the significance map (12.16), the number of bits R0 to code the indexes of the M nonzero quantized coefficients among P dictionary elements is ![]() . However, the number of sets Λo of orthogonal vectors in D is typically much smaller than the number 2P of subsets Λ in γ and the resulting number R0 of bits is thus smaller.

. However, the number of sets Λo of orthogonal vectors in D is typically much smaller than the number 2P of subsets Λ in γ and the resulting number R0 of bits is thus smaller.

A best-basis search improves the distortion rate d(R, f) if the Lagrangian approximation reduction is not compensated by the increase of R0 due to the increase of the number of orthogonal vector sets. For example, if the original signal is piecewise smooth, then a best wavelet packet basis does not concentrate the signal energy much more efficiently than a wavelet basis. Despite the fact that a wavelet packet dictionary includes a wavelet basis, the distortion rate in a best wavelet packet basis is then larger than in a single wavelet basis. For geometrically regular images, Section 12.2.4 shows that a dictionary of bandlet orthonormal bases reduces the distortion rate of a wavelet basis.

Denoising in a Best Orthonormal Basis

To estimate a signal f from noisy signal observations

where W[n] is a Gaussian white noise, Theorem 12.3 proves that a nearly optimal estimator is obtained by minimizing a penalized empirical Lagrangian,

Restricting Λ to be a set Λo of orthogonal vectors in ![]() reduces the set of possible signal models. As a consequence, Theorem 12.3 remains valid for this subfamily of models. Theorem 12.4 proves in (12.30) that the Lagrangian (12.32) is minimized by thresholding coefficients in a best basis. A best-basis thresholding thus yields a risk that is within a factor of 4 of the best estimation obtained by an oracle. This best basis can be calculated with a fast algorithm described in Section 12.2.2.

reduces the set of possible signal models. As a consequence, Theorem 12.3 remains valid for this subfamily of models. Theorem 12.4 proves in (12.30) that the Lagrangian (12.32) is minimized by thresholding coefficients in a best basis. A best-basis thresholding thus yields a risk that is within a factor of 4 of the best estimation obtained by an oracle. This best basis can be calculated with a fast algorithm described in Section 12.2.2.

12.2.2 Fast Best-Basis Search in Tree Dictionaries

Tree dictionaries of orthonormal bases are constructed with a recursive split of orthogonal vector spaces and by defining specific orthonormal bases in each subspace. For any additive cost function such as the 10 Lagrangian (12.2), a fast dynamic programming algorithm finds a best basis with a number of operations proportional to the size P of the dictionary.

Recursive Split of Vectors Spaces

A tree dictionary ![]() is obtained by recursively dividing vector spaces into q orthogonal subspaces, up to a maximum recursive depth. This recursive split is represented by a tree. A vector space Wld is associated to each tree node at a depth d and position l. The q children of this node correspond to an orthogonal partition of Wld into q orthogonal subspaces

is obtained by recursively dividing vector spaces into q orthogonal subspaces, up to a maximum recursive depth. This recursive split is represented by a tree. A vector space Wld is associated to each tree node at a depth d and position l. The q children of this node correspond to an orthogonal partition of Wld into q orthogonal subspaces ![]() at depth d + 1, located at the positions ql+iO≤i<q:

at depth d + 1, located at the positions ql+iO≤i<q:

Space ![]() at the root of the tree is the full signal space

at the root of the tree is the full signal space ![]() N. One or several specific orthonormal bases are constructed for each space Wld. The dictionary D is the union of all these specific orthonormal bases for all the spaces Wld of the tree.

N. One or several specific orthonormal bases are constructed for each space Wld. The dictionary D is the union of all these specific orthonormal bases for all the spaces Wld of the tree.

Chapter 8 defines dictionaries of wavelet packet and local cosine bases along binary trees (q = 2) for one-dimensional signals and along quad-trees (q = 4) for images. These dictionaries are constructed with a single basis for each space Wld. For signals of size N, they have P = N log2 N vectors. The bandlet dictionary in Section 12.2.4 is also defined along a quad-tree, but each space Wld has several specific orthonormal bases corresponding to different image geometries.

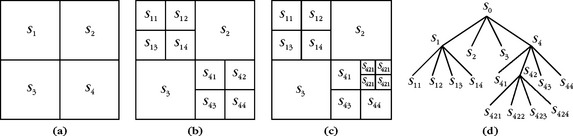

An admissible subtree of a full dictionary tree is a subtree where each node is either a leaf or has its q children. Figure 12.1(b) gives an example of a binary admissible tree. We verify by induction that the vector spaces at the leaves of an admissible tree define an orthogonal partition of W00 = ![]() N into orthogonal subspaces. The union of orthonormal bases of these spaces is therefore an orthonormal basis of

N into orthogonal subspaces. The union of orthonormal bases of these spaces is therefore an orthonormal basis of ![]() N.

N.

Additive Costs

A best basis can be defined with a cost function that is not necessarily the 10 Lagrangian (12.2). An additive cost function of a signal f in a basis ![]() is defined as a sum of independent contributions from each coefficient in

is defined as a sum of independent contributions from each coefficient in ![]() :

:

A best basis of ![]() N in

N in ![]() minimizes the resulting cost,

minimizes the resulting cost,

The 10 Lagrangian (12.2) is an example of additive cost function,

The minimization of an 11 norm is obtained with

In Section 12.4.1 we introduce a basis pursuit algorithm that also minimizes the 11 norm of signal coefficients in a redundant dictionary. A basis pursuit selects a best basis but without imposing any orthogonal constraint.

Fast Best-Basis Selection

The fast best-basis search algorithm, introduced by Coifman and Wickerhauser [180], relies on the dictionary tree structure and on the cost additivity. This algorithm is a particular instance of the Classification and Regression Tree (CART) algorithm by Breiman et al. [9]. It explores all tree nodes, from bottom to top, and at each node it computes the best basis ![]() of the corresponding space Wld.

of the corresponding space Wld.

The cost additivity property (12.34) implies that an orthonormal basis ![]() , which is a union of q orthonormal families Bi, has a cost equal to the sum of their cost:

, which is a union of q orthonormal families Bi, has a cost equal to the sum of their cost:

As a result, the best basis ![]() , which minimizes this cost among all bases of

, which minimizes this cost among all bases of ![]() , is either one of the specific bases of

, is either one of the specific bases of ![]() or a union of the best bases

or a union of the best bases ![]() that were previously calculated for each of its subspace

that were previously calculated for each of its subspace ![]() for 0 ≤ i<q. The decision is thus performed by minimizing the resulting cost, as described in Algorithm 12.1.

for 0 ≤ i<q. The decision is thus performed by minimizing the resulting cost, as described in Algorithm 12.1.

ALGORITHM 12.1

![]() Compute all dictionary coefficients

Compute all dictionary coefficients ![]() .

.

![]() Initialize the cost of each tree space

Initialize the cost of each tree space ![]() by finding the basis

by finding the basis ![]() of minimum cost among all specific bases

of minimum cost among all specific bases ![]() of

of ![]() :

:

![]() For each tree node (d,l), visited from the bottom to the top (d decreasing), if we are not at the bottom and if

For each tree node (d,l), visited from the bottom to the top (d decreasing), if we are not at the bottom and if

then set ![]() ; otherwise set

; otherwise set ![]() .

.

This algorithm outputs the best basis ![]() that has a minimum cost among all bases of the dictionary. For wavelet packet and local cosine dictionaries, there is a single specific basis per space

that has a minimum cost among all bases of the dictionary. For wavelet packet and local cosine dictionaries, there is a single specific basis per space ![]() , so (12.38) is reduced to computing the cost in this basis. In a bandlet dictionary there are many specific bases for each

, so (12.38) is reduced to computing the cost in this basis. In a bandlet dictionary there are many specific bases for each ![]() corresponding to different geometric image models.

corresponding to different geometric image models.

For a dictionary of size P, the number of comparisons and additions to construct this best basis is O(P). The algorithmic complexity is thus dominated by the computation of the P dictionary coefficients ![]() . If implemented with O(P) operations with a fast transform, then the overall computational algorithmic complexity is O(P). This is the case for wavelet packet, local cosine, and bandlet dictionaries.

. If implemented with O(P) operations with a fast transform, then the overall computational algorithmic complexity is O(P). This is the case for wavelet packet, local cosine, and bandlet dictionaries.

12.2.3 Wavelet Packet and Local Cosine Best Bases

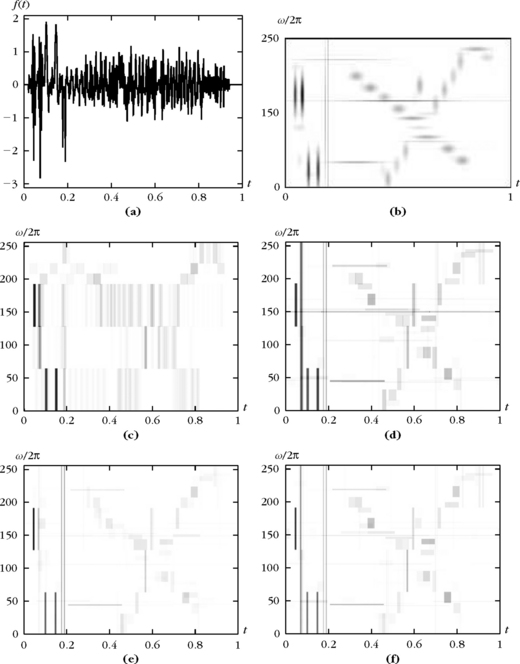

A best wavelet packet or local cosine basis selects time-frequency atoms that match the time-frequency resolution of signal structures. Therefore, it adapts the time-frequency geometry of the approximation support ΛT. Wavelet packet and local cosine dictionaries are constructed in Chapter 8. We evaluate these approximations through examples that also reveal their limitations.

Best Orthogonal Wavelet Packet Approximations

A wavelet packet orthogonal basis divides the frequency axis into intervals of varying dyadic sizes 2. Each frequency interval is covered by a wavelet packet function that is uniformly translated in time. A best wavelet packet basis can thus be interpreted as a “best” segmentation of the frequency axis in dyadic sizes intervals.

A signal is well approximated by a best wavelet packet basis, if in any frequency interval, the high-energy structures have a similar time-frequency spread. The time translation of the wavelet packet that covers this frequency interval is then well adapted to approximating all the signal structures in this frequency range that appear at different times. In the best basis computed by minimizing the 10 Lagrangian in (12.36), Theorem 12.4 proves that the set of ΛT of wavelet packet coefficients above T correspond to the orthogonal wavelet packet vectors that best approximate f in the whole wavelet packet dictionary. These wavelet packets are represented by Heisenberg boxes, as explained in Section 8.1.2.

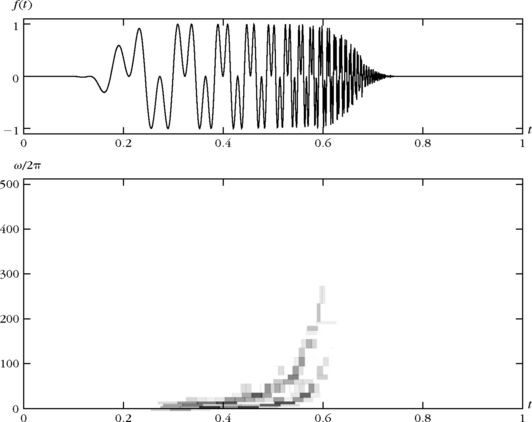

Figure 12.2 gives the best wavelet packet approximation set ΛT of a signal composed of two hyperbolic chirps. The proportion of wavelet packet coefficients that are retained is M/N = |ΛT|/N = 8%. The resulting best M-term orthogonal approximaton ![]() has a relative error

has a relative error ![]() . The wavelet packet tree was calculated with the symmlet 8 conjugate mirror filter. The time support of chosen wavelet packets is reduced at high frequencies to adapt itself to the chirps’ rapid modification of frequency content. The energy distribution revealed by the wavelet packet Heisenberg boxes in ΛT is similar to the scalogram calculated in Figure 4.17.

. The wavelet packet tree was calculated with the symmlet 8 conjugate mirror filter. The time support of chosen wavelet packets is reduced at high frequencies to adapt itself to the chirps’ rapid modification of frequency content. The energy distribution revealed by the wavelet packet Heisenberg boxes in ΛT is similar to the scalogram calculated in Figure 4.17.

FIGURE 12.2 The top signal includes two hyperbolic chirps. The Heisenberg boxes of the best orthogonal wavelet packets in ΛT are shown in the bottom image. The darkness of each rectangle is proportional to the amplitude of the corresponding wavelet packet coefficient.

Figure 8.6 gives another example of a best wavelet packet basis for a different multichirp signal, calculated with the entropy cost C(x) = |x| loge |x| in (12.34).

Let us mention that the application of best wavelet packet bases to pattern recognition is difficult because these dictionaries are not translation invariant. If the signal is translated, its wavelet packet coefficients are severely modified and the Lagrangian minimization may yield a different basis. This remark applies to local cosine bases as well.

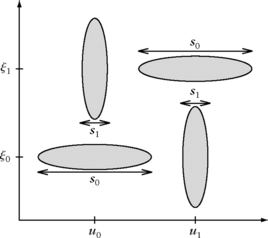

If the signal includes different types of high-energy structures, located at different times but in the same frequency interval, there is no wavelet packet basis that is well adapted to all of them. Consider, for example, a sum of four transients centered, respectively, at u0 and u1 at two different frequencies ζ0 and ζ1:

The smooth window g has a Fourier transform g whose energy is concentrated at low frequencies. The Fourier transform of the four transients have their energy concentrated in frequency bands centered, respectively, at ζ0 and ζ1:

If s0 and s1 have different values, the time and frequency spread of these transients is different, which is illustrated in Figure 12.3. In the best wavelet packet basis selection, the first transient ![]() “votes” for a wavelet packet basis with a scale 2j that is of the order s0 at the frequency ζ0 whereas

“votes” for a wavelet packet basis with a scale 2j that is of the order s0 at the frequency ζ0 whereas ![]() “votes” for a wavelet packet basis with a scale 2j that is close to s1 at the same frequency. The “best” wavelet packet is adapted to the transient of highest energy. The energy of the smaller transient is then spread across many “best” wavelet packets. The same thing happens for the second pair of transients located in the frequency neighborhood of ξ1.

“votes” for a wavelet packet basis with a scale 2j that is close to s1 at the same frequency. The “best” wavelet packet is adapted to the transient of highest energy. The energy of the smaller transient is then spread across many “best” wavelet packets. The same thing happens for the second pair of transients located in the frequency neighborhood of ξ1.

Speech recordings are examples of signals that have properties that rapidly change in time. At two different instants in the same frequency neighborhood, the signal may have totally different energy distributions. A best orthogonal wavelet packet basis is not adapted to this time variation and gives poor nonlinear approximations. Sections 12.3 and 12.4 show that a more flexible nonorthogonal approximation with wavelet packets, computed with a pursuit algorithm, can have the required flexibility.

As in one dimension, an image is well approximated in a best wavelet packet basis if its structures within a given frequency band have similar properties across the whole image. For natural scene images, a best wavelet packet often does not provide much better nonlinear approximations than the wavelet basis included in this wavelet packet dictionary. However, for specific classes of images such as fingerprints, one may find wavelet packet bases that significantly outperform the wavelet basis [122].

Best Orthogonal Local Cosine Representations

Tree dictionaries of local cosine bases are constructed in Section 8.5 with P = N log2 N local cosine vectors. They divide the time axis into intervals of varying dyadic sizes. A best local cosine basis adapts the time segmentation to the variations of the signal time-frequency structures. It is computed with O(Nlog2N) operations with the best-basis search algorithm from Section 12.2.2.

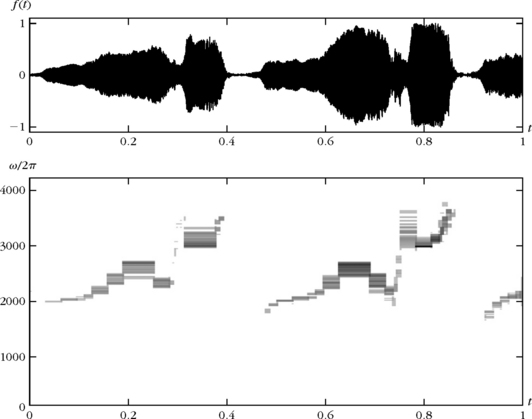

In comparison with wavelet packets, we gain time adaptation but we lose frequency flexibility. A best local cosine basis is therefore well adapted to approximating signals with properties that may vary in time, but that do not include structures of very different time and frequency spreads at any given time. Figure 12.4 shows the Heisenberg boxes of the set ΛT of orthogonal local cosine vectors that best approximate the recording of a bird song, computed by minimizing the 10 Lagrangian (12.36). The chosen threshold T yields a relative approximation error ![]() with |ΛT|/N = 11% coefficients. The selected local cosine vectors have a time and frequency resolution adapted to the transients and harmonic structures of the signal. Figure 8.19 shows a best local cosine basis that is calculated with an entropy cost function for a speech recording.

with |ΛT|/N = 11% coefficients. The selected local cosine vectors have a time and frequency resolution adapted to the transients and harmonic structures of the signal. Figure 8.19 shows a best local cosine basis that is calculated with an entropy cost function for a speech recording.

FIGURE 12.4 Recording of a bird song (top). The Heisenberg boxes of the best orthogonal local cosine vectors in ΛT are shown in the bottom image. The darkness of each rectangle is proportional to the amplitude of the local cosine coefficient.

The sum of four transients (12.39) is not efficiently represented in a wavelet packet basis but neither is it well approximated in a best local cosine basis. Indeed, if the scales s0 and s1 are very different, at u0 and u1 this signal includes two transients at the frequency ζ0 and ζ1, respectively, that have a very different time-frequency spread. In each time neighborhood, the size of the window is adapted to the transient of highest energy. The energy of the second transient is spread across many local cosine vectors. Efficient approximations of such signals require more flexibility, which is provided by the pursuit algorithms from Sections 12.3 and 12.4. Figure 12.5 gives a denoising example with a best local cosine estimator. The signal in Figure 12.5(b) is the bird song contaminated by an additive Gaussian white noise of variance σ2 with an SNR of 12 db. According to Theorem 12.3, a best orthogonal projection estimator is computed by selecting a set ![]() of best orthogonal local cosine dictionary vectors, which minimizes an empirical penalized risk. This penalized risk corresponds to the empirical 10 Lagrangian (12.23), which is minimized by the best-basis algorithm. The chosen threshold of T = 3.5 σ is well below the theoretical universal threshold of

of best orthogonal local cosine dictionary vectors, which minimizes an empirical penalized risk. This penalized risk corresponds to the empirical 10 Lagrangian (12.23), which is minimized by the best-basis algorithm. The chosen threshold of T = 3.5 σ is well below the theoretical universal threshold of ![]() , which improves the SNR. The Heisenberg boxes of local cosine vectors indexed by

, which improves the SNR. The Heisenberg boxes of local cosine vectors indexed by ![]() are shown in boxes of the remaining coefficients in Figure 12.5(c). The orthogonal projection

are shown in boxes of the remaining coefficients in Figure 12.5(c). The orthogonal projection ![]() is shown in Figure 12.5(d).

is shown in Figure 12.5(d).

FIGURE 12.5 (a) Original bird song. (b) Noisy signal (SNR = 12 db). (c) Heisenberg boxes of the set ![]() of estimated best orthogonal local cosine vectors. (d) Estimation reconstructed from noisy local cosine coefficients in

of estimated best orthogonal local cosine vectors. (d) Estimation reconstructed from noisy local cosine coefficients in ![]() (SNR = 15.5db).

(SNR = 15.5db).

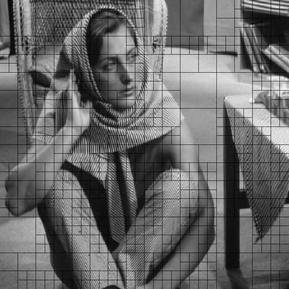

In two dimensions, a best local cosine basis divides an image into square windows that have a size adapted to the spatial variations of local image structures. Figure 12.6 shows the best-basis segmentation of the Barbara image, computed by minimizing the 11 norm of its coefficients, with the 11 cost function (12.37). The squares are bigger in regions where the image structures remain nearly the same. Figure 8.22 shows another example of image segmentation with a best local cosine basis, also computed with an 11 norm.

12.2.4 Bandlets for Geometric Image Regularity

Bandlet dictionaries are constructed to improve image representations by taking advantage of their geometric regularity. Wavelet coefficients are not optimally sparse but inherit geometric image regularity. A bandlet transform applies a directional wavelet transform over wavelet coefficients to reduce the number of large coefficients. This directional transformation depends on a geometric approximation model calculated from the image. Le Pennec, Mallat, and Peyre [342, 365, 396] introduced dictionaries of orthogonal bandlet bases, where the best-basis selection optimizes the geometric approximation model. For piecewise Cα images, the resulting M-term bandlet approximations have an optimal asymptotic decay in O(M−α).

Approximation of Piecewise Cα Images

Definition 9.1 defines a piecewise Cα image f as a function that is uniformly Lipschitz α everywhere outside a set of edge curves, which are also uniformly Lipschitz α. This image may also be blurred by an unknown convolution kernel. If f is uniformly Lipschitz α without edges, then Theorem 9.16 proves that a linear wavelet approximation has an optimal error decay ![]() . Edges produce a larger linear approximation error

. Edges produce a larger linear approximation error ![]() , which is improved by a nonlinear wavelet approximation

, which is improved by a nonlinear wavelet approximation ![]() , but without recovering the O(M−α) decay. For α = 2, Section 93 shows that a piecewise linear approximation over an optimized adaptive triangulation with M triangles reaches the error decay O(M−2). Thresholding curvelet frame coefficients also yields a nonlinear approximation error

, but without recovering the O(M−α) decay. For α = 2, Section 93 shows that a piecewise linear approximation over an optimized adaptive triangulation with M triangles reaches the error decay O(M−2). Thresholding curvelet frame coefficients also yields a nonlinear approximation error ![]() that is nearly optimal. However, curvelet approximations are not as efficient as wavelets for less regular functions such as bounded variation images. If f is piecewise Cα with α > 2, curvelets cannot improve the M−2 decay either.

that is nearly optimal. However, curvelet approximations are not as efficient as wavelets for less regular functions such as bounded variation images. If f is piecewise Cα with α > 2, curvelets cannot improve the M−2 decay either.

The beauty of wavelet and curvelet approximation comes from their simplicity. A simple thresholding directly selects the signal approximation support. However, for images with geometric structures of various regularity, these approximations do not remain optimal when the regularity exponent α changes. It does not seem possible to achieve this result without using a redundant dictionary, which requires a more sophisticated approximation scheme.

Elegant adaptive approximation schemes in redundant dictionaries have been developed for images having some geometric regularity. Several algorithms are based on the lifting technique described in Section 7.8, with lifting coefficients that depend on the estimated image regularity [155, 234, 296, 373, 477]. The image can also be segmented adaptively in dyadic squares of various sizes, and approximated on each square by a finite element such as a wedglet, which is a step edge along a straight line with an orientation that is adjusted [216]. Refinements with polynomial edges have also been studied [436], but these algorithms do not provide M-term approximation errors that decay like O(M−α) for all piecewise regular Cα images.

Bandletization of Wavelet Coefficients

A bandlet transform takes advantage of the geometric regularity captured by a wavelet transform. The decomposition coefficients of f in an orthogonal wavelet basis can be written as

for x = (x1, x2) and n = (n1, n2). The function ![]() has the directional regularity of f, for example along an edge, and it is regularized by the convolution with

has the directional regularity of f, for example along an edge, and it is regularized by the convolution with ![]() . Figure 12.7 shows a zoom on wavelet coefficients near an edge.

. Figure 12.7 shows a zoom on wavelet coefficients near an edge.

FIGURE 12.7 Orthogonal wavelet coefficients at a scale 2j are samples of a function ![]() , shown in (a). The filtered image

, shown in (a). The filtered image ![]() varies regularly when moving along an edge γ (b).

varies regularly when moving along an edge γ (b).

Bandlets retransform wavelet coefficients to take advantage of their directional regularity. This is implemented with a directional wavelet transform applied over wavelet coefficients, which creates new vanishing moments in appropriate directions. The resulting bandlets are written as

where ![]() is a directional wavelet of length 2i, of width 2l, and that has a position indexed by m in the wavelet coefficient array. The bandlet function

is a directional wavelet of length 2i, of width 2l, and that has a position indexed by m in the wavelet coefficient array. The bandlet function ![]() is a finite linear combination of wavelets

is a finite linear combination of wavelets ![]() and thus has the same regularity as these wavelets. Since

and thus has the same regularity as these wavelets. Since ![]() has a square support of width proportional to 2j, the bandlet φp has a support length proportional to 2j+i and a support width proportional to 2j.

has a square support of width proportional to 2j, the bandlet φp has a support length proportional to 2j+i and a support width proportional to 2j.

If the regularity exponent α is known then in a neighborhood of an edge, one would like to have elongated bandlets with an aspect ratio defined by 2l+j = (2i+j)α, and thus l = αi + (a − 1)j. Curvelets satisfy this property for α = 2. However, when α is not known in advance and may change, the scale parameters i and l must be adjusted adaptively.

As a result of (12.41), the bandlet coefficients of a signal ![]() can be written as

can be written as

They are computed by applying a discrete directional wavelet tranform on the signal wavelet coefficients ![]() for each k and 2j. This is also a called a bandletization of wavelet coefficients.

for each k and 2j. This is also a called a bandletization of wavelet coefficients.

Geometric Approximation Model

The discrete directional wavelets ![]() are defined with a geometric approximation model providing information about the directional image regularity. Many constructions are possible [359]. We describe here a geometric approximation model that is piecewise parallel and yields orthogonal bandlet bases.

are defined with a geometric approximation model providing information about the directional image regularity. Many constructions are possible [359]. We describe here a geometric approximation model that is piecewise parallel and yields orthogonal bandlet bases.

For each scale 2j and direction k, the array of wavelet transform coefficients ![]() is divided into squares of various sizes, as shown in Figure 12.8(b). In regular image regions, wavelet coefficients are small and do not need to be retransformed. Near junctions, the image is irregular in all directions and these few wavelet coefficients are not retransformed either. It is in the neighborhood of edges and directional image structures that an appropriate retransformation can improve the wavelet sparsity.

is divided into squares of various sizes, as shown in Figure 12.8(b). In regular image regions, wavelet coefficients are small and do not need to be retransformed. Near junctions, the image is irregular in all directions and these few wavelet coefficients are not retransformed either. It is in the neighborhood of edges and directional image structures that an appropriate retransformation can improve the wavelet sparsity.

FIGURE 12.8 (a) Wavelet coefficients of the image. (b) Example of segmentation of an array of wavelet coefficients ![]() for a particular direction k and scale 2j.

for a particular direction k and scale 2j.

A geometric flow is defined over each edge square. It provides the direction along which the discrete bandlets ![]() are constructed. It is a vector field, which is parallel horizontally or vertically and points in local directions in which

are constructed. It is a vector field, which is parallel horizontally or vertically and points in local directions in which ![]() is the most regular. Figure 12.9(a) gives an example. The segmentation of wavelet coefficients in squares and the specification of a geometric flow in each square defines a geometric approximation model that is used to construct a bandlet basis.

is the most regular. Figure 12.9(a) gives an example. The segmentation of wavelet coefficients in squares and the specification of a geometric flow in each square defines a geometric approximation model that is used to construct a bandlet basis.

FIGURE 12.9 (a) Square of wavelet coefficients including an edge. A geometric flow nearly parallel to the edge is shown with arrows. (b) A vertical warping w maps the flow onto a horizontal flow. (c) Support of directional wavelets ![]() of length 2i and width 2l in the warped domain. (d) Directional wavelets

of length 2i and width 2l in the warped domain. (d) Directional wavelets ![]() in the square of wavelet coefficients.

in the square of wavelet coefficients.

Bandlets with Alpert Wavelets

Let us consider a square of wavelet coefficients where a geometric flow is defined. We suppose that the flow is parallel vertically. Its vectors can thus be written ![]() . Let

. Let ![]() be a primitive of

be a primitive of ![]() . Wavelet coefficients are translated vertically with a warping operator

. Wavelet coefficients are translated vertically with a warping operator ![]() so that the resulting geometric flow becomes horizontal, as shown in Figure 12.9(b). In the warped domain, the regularity of

so that the resulting geometric flow becomes horizontal, as shown in Figure 12.9(b). In the warped domain, the regularity of ![]() is now horizontal.

is now horizontal.

Warped directional wavelets ![]() are defined to take advantage of this horizontal regularity over the translated orthogonal wavelet coefficients, which are not located on a square grid anymore. Directional wavelets can be constructed with piecewise polynomial Alpert wavelets [84], which are adapted to nonuniform sampling grids [365] and have q vanishing moments. Over a square of width 2i, a discrete Alpert wavelet

are defined to take advantage of this horizontal regularity over the translated orthogonal wavelet coefficients, which are not located on a square grid anymore. Directional wavelets can be constructed with piecewise polynomial Alpert wavelets [84], which are adapted to nonuniform sampling grids [365] and have q vanishing moments. Over a square of width 2i, a discrete Alpert wavelet ![]() has a length 2i, a total of 2i × 2l coefficients on its support, and thus a width of the order of 2l and a position m2l. These directional wavelets are horizontal in the warped domain, as shown in Figure 12.9(c). After inverse warping,

has a length 2i, a total of 2i × 2l coefficients on its support, and thus a width of the order of 2l and a position m2l. These directional wavelets are horizontal in the warped domain, as shown in Figure 12.9(c). After inverse warping, ![]() is parallel to the geometric flow in the original wavelet square, and

is parallel to the geometric flow in the original wavelet square, and ![]() is an orthonormal basis over the square of 22i wavelet coefficients. The fast Alpert wavelet transform computes 22i bandlet coefficients in a square of 22i coefficients with O(22i) operations.

is an orthonormal basis over the square of 22i wavelet coefficients. The fast Alpert wavelet transform computes 22i bandlet coefficients in a square of 22i coefficients with O(22i) operations.

Figure 12.10 shows in (b), (c), and (d) several directional Alpert wavelets ![]() on squares of different lengths 2i, and for different width 2l. The corresponding bandlet functions φp(x) are computed in (b′), (c′), and (d′), with the wavelets

on squares of different lengths 2i, and for different width 2l. The corresponding bandlet functions φp(x) are computed in (b′), (c′), and (d′), with the wavelets ![]() corresponding to the squares shown in Figure 12.10(a).

corresponding to the squares shown in Figure 12.10(a).

Dictionary of Bandlet Orthonormal Bases

A bandlet orthonormal basis is defined by segmenting each array of wavelet coefficients ![]() in squares of various sizes, and by applying an Alpert wavelet transform along the geometric flow defined in each square. A dictionary of bandlet orthonormal bases is associated to a family of geometric approximation models corresponding to different segmentations and different geometric flows. Choosing a best basis is equivalent to finding an image’s best geometric approximation model.

in squares of various sizes, and by applying an Alpert wavelet transform along the geometric flow defined in each square. A dictionary of bandlet orthonormal bases is associated to a family of geometric approximation models corresponding to different segmentations and different geometric flows. Choosing a best basis is equivalent to finding an image’s best geometric approximation model.

To compute a best basis with the fast algorithm in Section 12.2.2, a tree-structured dictionary is constructed. Each array of wavelet coefficients is divided in squares obtained with a dyadic segmentation. Figure 12.11 illustrates such a segmentation. Each square is recursively subdivided into four squares of the same size until the appropriate size is reached. This subdivision is represented by a quad-tree, where the division of a square appears as the subdivision of a node in four children nodes. The leafs of the quad-tree correspond to the squares defining the dyadic segmentation, as shown in Figure 12.11. At a scale 2j, the size 2i of a square defines the length 2j+i of its bandlets. Optimizing this segmentation is equivalent to locally adjusting this length. The resulting bandlet dictionary has a tree structure. Each node of this tree corresponds to a space ![]() generated by a square of 22i orthogonal wavelets at a given wavelet scale 2j and orientation k. A bandlet orthonormal basis of

generated by a square of 22i orthogonal wavelets at a given wavelet scale 2j and orientation k. A bandlet orthonormal basis of ![]() is associated to each geometric flow.

is associated to each geometric flow.

FIGURE 12.11 (a–c) Construction of a dyadic segmentation by successive subdivisions of squares. (d) Quad-tree representation of the segmentation. Each leaf of the tree corresponds to a square in the final segmentation.

The number of different geometric flows depends on the geometry’s required precision. Suppose that the edge curve in the square is parametrized horizontally and defined by (x1, γ(x1)). For a piecewise Cα image, γ(x1) is uniformly Lipschitz α. Tangent vectors to the edge are (1, γ′(x1)) and γ′(x1) is uniformly Lipschitz α – 1. If α ≤ q, then it can be approximated by a polynomial γ′ (x1) of degree q − 2 with

The polynomial γ′(x1) is specified by q − 1 parameters that must be quantized to limit the number of possible flows. To satisfy (12.42), these parameters are quantized with a precision 2−i. The total number of possible flows in the square of width 2i is thus O(2i(q−1)). A bandlet dictionary ![]() of order q is constructed with Cq wavelets having q vanishing moments, and with polynomial flows of degree q − 2.

of order q is constructed with Cq wavelets having q vanishing moments, and with polynomial flows of degree q − 2.

Bandlet Approximation

A best M-term bandlet signal approximation is computed by finding a best basis BT and the corresponding best approximation support ΛT, which minimize the 10 Lagrangian

This minimization chooses a best dyadic square segmentation of each wavelet coefficient array, and a best geometric flow in each square. It is implemented with the best-basis algorithm from Section 12.2.2.

An image ![]() is first approximated by its orthogonal projection in an approximation space VL of dimension N = 2−2L. The resulting discrete signal

is first approximated by its orthogonal projection in an approximation space VL of dimension N = 2−2L. The resulting discrete signal ![]() has the same wavelet coefficients as

has the same wavelet coefficients as ![]() at scales 2j > 2L, and thus the same bandlet coefficients at these scales. A best approximation support ΛT calculated from f yields an M = |ΛT| term approximation of

at scales 2j > 2L, and thus the same bandlet coefficients at these scales. A best approximation support ΛT calculated from f yields an M = |ΛT| term approximation of ![]() :

:

Theorem 12.5, proved in [342, 365], computes the nonlinear approximation error ![]() for piecewise regular images.

for piecewise regular images.

Theorem 12.5: Le Pennec, Mallat, Peyre. Let ![]() ∈ L2[0, 1]2 be a piecewise Cα regular image. In a bandlet dictionary of order q ≥ α, for T > 0 and 2L = N−1/2 ∼ T2,

∈ L2[0, 1]2 be a piecewise Cα regular image. In a bandlet dictionary of order q ≥ α, for T > 0 and 2L = N−1/2 ∼ T2,

For M = |ΛT|, the resulting best bandlet approximation ![]() M has an error

M has an error

Proof.

The proof finds a bandlet orthogonal basis ![]() such that

such that

Since ![]() , it implies (12.44). Theorem 12.1 derives in (12.5) that

, it implies (12.44). Theorem 12.1 derives in (12.5) that ![]() with

with ![]() . A piecewise regular image has a bounded total variation, so Theorem 9.18 proves that a linear approximation error with N larger-scale wavelets has an error

. A piecewise regular image has a bounded total variation, so Theorem 9.18 proves that a linear approximation error with N larger-scale wavelets has an error ![]() . Since

. Since ![]() , it results that

, it results that

which proves (12.45).

We give the main ideas for constructing a bandlet basis B that satisfies (12.46). Detailed derivations can be found in [365]. Following Definition 9.1, a function ![]() is piecewise Cα with a blurring scale

is piecewise Cα with a blurring scale ![]() where

where ![]() is uniformly Lipschitz α on

is uniformly Lipschitz α on ![]() , where the edge curves er are uniformly Lipschitz α and do not intersect tangentially. Since hs is a regular kernel of size s, the wavelet coefficients of

, where the edge curves er are uniformly Lipschitz α and do not intersect tangentially. Since hs is a regular kernel of size s, the wavelet coefficients of ![]() at a scale 2j behave as the wavelet coefficients of

at a scale 2j behave as the wavelet coefficients of ![]() at a scale 2j′ ∼ 2j + s multiplied by s−1. Thus, it has a marginal impact on the proof. We suppose that s = 0 and consider a signal

at a scale 2j′ ∼ 2j + s multiplied by s−1. Thus, it has a marginal impact on the proof. We suppose that s = 0 and consider a signal ![]() that is not blurred.

that is not blurred.

Wavelet coefficients ![]() are computed at scales 2j > 2L = T2. A dyadic segmentation of each wavelet coefficient array

are computed at scales 2j > 2L = T2. A dyadic segmentation of each wavelet coefficient array ![]() is computed according to Figure 12.8, at each scale 2j > 2L and orientation k = 1, 2, 3. Wavelet arrays are divided into three types of squares. In each type of square a geometric flow is specified, so that the resulting bandlet basis B has a Lagrangian that satisfies

is computed according to Figure 12.8, at each scale 2j > 2L and orientation k = 1, 2, 3. Wavelet arrays are divided into three types of squares. In each type of square a geometric flow is specified, so that the resulting bandlet basis B has a Lagrangian that satisfies ![]() . This is proved by verifying that the number of coefficients above T is O(T−2/(α + 1)) and that the energy of coefficients below T is O(T2−2/(α + 1)).

. This is proved by verifying that the number of coefficients above T is O(T−2/(α + 1)) and that the energy of coefficients below T is O(T2−2/(α + 1)).

![]() Regular squares correspond to coefficients

Regular squares correspond to coefficients ![]() , such that f is uniformly Lipschitz α over the support of all

, such that f is uniformly Lipschitz α over the support of all ![]() .

.

![]() Edge squares include coefficients corresponding to wavelets with support that intersects a single edge curve. This edge curve can be parametrized horizontally or vertically in each square.

Edge squares include coefficients corresponding to wavelets with support that intersects a single edge curve. This edge curve can be parametrized horizontally or vertically in each square.

![]() Junction squares include coefficients corresponding to wavelets with support that intersects at least two different edge curves.

Junction squares include coefficients corresponding to wavelets with support that intersects at least two different edge curves.

Over regular squares, since ![]() is uniformly Lipschitz α, Theorem 915 proves in (9.15) that

is uniformly Lipschitz α, Theorem 915 proves in (9.15) that ![]() . These small wavelet coefficients do not need to be retransformed and no geometric flow is defined over these squares. The number of coefficients above T in such squares is indeed O(T−2/(α + 1)) and the energy of coefficients below T is O(T2−2/(α + 1)).

. These small wavelet coefficients do not need to be retransformed and no geometric flow is defined over these squares. The number of coefficients above T in such squares is indeed O(T−2/(α + 1)) and the energy of coefficients below T is O(T2−2/(α + 1)).

Since edges do not intersect tangentially, one can construct junction squares of width 2i ≤ C where C does not depend on 2j. As a result, over the |log2 T2| scales 2j ≥ T2, there are only O(|log2T|) wavelet coefficients in these junction squares, which thus have a marginal impact on the approximation.

At a scale 2j, an edge square S of width 2i has O(2i2−j) large coefficients having an amplitude O(2j) along the edge. Bandlets are created to reduce the number of these large coefficients that dominate the approximation error. Suppose that the edge curve in S is parametrized horizontally and defined by (x1, y(x1)). Following (12.42), a geometric flow of vectors (1, γ′(x1)) is defined over the square, where γ′(x1) is a polynomial of degree q − 2, which satisfies

Let ![]() be the warping that maps this flow to a horizontal flow, as illustrated in Figure 12.9. One can prove [365] that the warped wavelet transform satisfies

be the warping that maps this flow to a horizontal flow, as illustrated in Figure 12.9. One can prove [365] that the warped wavelet transform satisfies

The bandlet transform takes advantage of the regularity of ![]() along x1 with Alpert directional wavelets having q vanishing moments along x1. Computing the amplitude of the resulting bandlet coefficients shows that there are

along x1 with Alpert directional wavelets having q vanishing moments along x1. Computing the amplitude of the resulting bandlet coefficients shows that there are ![]() bandlet coefficients of amplitude larger than T and the error of all coefficients below T is

bandlet coefficients of amplitude larger than T and the error of all coefficients below T is ![]() . The total length of edge squares is proportional to the total length of edges in the image, which is O(1). Summing the errors over all squares gives a total number of bandlet coefficients, which is

. The total length of edge squares is proportional to the total length of edges in the image, which is O(1). Summing the errors over all squares gives a total number of bandlet coefficients, which is ![]() , and a total error, which is

, and a total error, which is ![]() .

.

As a result, the bandlet basis B defined over the three types of squares satisfies ![]() , which finishes the proof.

, which finishes the proof.

The best-basis algorithm finds a best geometry to approximate each image. This theorem proves that the resulting approximation error decays as quickly as if the image was uniformly Lipschitz α over its whole support [0, 1]2. Moreover, this result is adaptive in the sense that it is valid for all α ≤ q.

The downside of bandlet approximations is the dictionary size. In a square of width 2i, we need ![]() polynomial flows, each of which defines a new bandlet family. As a result, a bandlet dictionary of order q includes

polynomial flows, each of which defines a new bandlet family. As a result, a bandlet dictionary of order q includes ![]() different bandlets. The total number of operations to compute a best bandlet approximation is O(P), which becomes very large for q > 2. A fast implementation is described in [398] for q = 2 where P = (N3/2). Theorem 12.5 is then reduced to α ≤ 2. It still recovers the O(M−2) decay for C2 images obtained in Theorem 9.19 with piecewise linear approximations over an adaptive triangulation.

different bandlets. The total number of operations to compute a best bandlet approximation is O(P), which becomes very large for q > 2. A fast implementation is described in [398] for q = 2 where P = (N3/2). Theorem 12.5 is then reduced to α ≤ 2. It still recovers the O(M−2) decay for C2 images obtained in Theorem 9.19 with piecewise linear approximations over an adaptive triangulation.

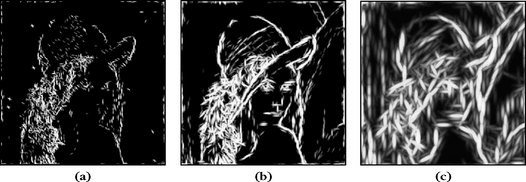

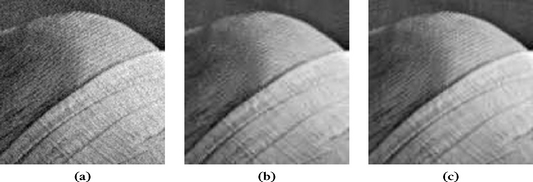

Figure 12.12 shows a comparison of the approximation of a piecewise regular image with M largest orthogonal wavelet coefficients and the best M orthogonal bandlet coefficients. Wavelet approximations exhibit more ringing artifacts along edges because they do not capture the anisotropic regularity of edges.

Bandlet Compression

Following the results of Section 12.1.2, a bandlet compression algorithm is implemented by quantizing the best bandlet coefficients of f with a bin size Δ = 2T. According to Section 12.1.2, the approximation support ΛT is coded on Ro = M log2(P/N) bits and the amplitude of nonzero quantized coefficients with R1 ˜ M bits [365]. If f is the discretization of apiecewise Cα image ![]() , since Lo(T,f,ΛT)=O(T2–2/(α+1)), we derive from (12.17) with s = (α+1)/2 that the distortion rate satisfies

, since Lo(T,f,ΛT)=O(T2–2/(α+1)), we derive from (12.17) with s = (α+1)/2 that the distortion rate satisfies

Analog piecewise Cα images are linearly approximated in a multiresolution space of dimension N with an error ![]() . Taking this into account, we verify that the analog distortion rate satisfies the asymptotic decay rate (12.18)

. Taking this into account, we verify that the analog distortion rate satisfies the asymptotic decay rate (12.18)

Although bandlet compression improves the asymptotic decay of wavelet compression, such coders are not competitive with a JPEG-2000 wavelet image coder, which requires less computations. Moreover, when images have no geometric regularity, despite the fact that the decay rate is the same as with wavelets, bandlets introduce an overhead because of the large dictionary size.

Bandlet Denoising

Let W be a Gaussian white noise of variance σ2. To estimate f from X = f + W, abest bandlet estimator ![]() is computed according to Section 12.2.1 by projecting X on an optimized family of orthogonal bandlets indexed by

is computed according to Section 12.2.1 by projecting X on an optimized family of orthogonal bandlets indexed by ![]() . It is obtained by thresholding at T the bandlet coefficients of X in the best bandlet basis

. It is obtained by thresholding at T the bandlet coefficients of X in the best bandlet basis ![]() , which minimizes Lo(T,X,B),for

, which minimizes Lo(T,X,B),for![]() .

.

An analog estimator ![]() of

of ![]() is reconstructed from the noisy signal coefficients in

is reconstructed from the noisy signal coefficients in ![]() with the analog bandlets

with the analog bandlets ![]() . If

. If ![]() is a piecewise Cα image

is a piecewise Cα image ![]() , then Theorem 12.5 proves that

, then Theorem 12.5 proves that ![]() . The computed risk decay (12.27) thus applies for s = (α+ 1)/2:

. The computed risk decay (12.27) thus applies for s = (α+ 1)/2:

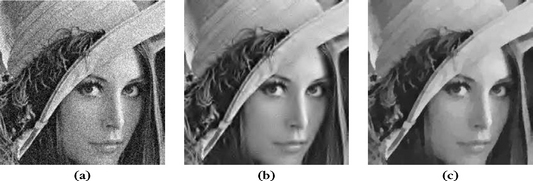

This decay rate [233] shows that a bandlet estimation over piecewise Cα images nearly reaches the minimax risk ![]() calculated in (11.152) for uniformly Cα images. Figure 12.13 gives a numerical example comparing a best bandlet estimation and a translation-invariant wavelet thresholding estimator for an image including regular geometric structures. The threshold is T = 3σ instead of

calculated in (11.152) for uniformly Cα images. Figure 12.13 gives a numerical example comparing a best bandlet estimation and a translation-invariant wavelet thresholding estimator for an image including regular geometric structures. The threshold is T = 3σ instead of ![]() , because it improves the SNR.

, because it improves the SNR.

12.3 GREEDY MATCHING PURSUITS

Computing an optimal M-term approximation fM of a signal f with M vectors selected in a redundant dictionary ![]() is NP-hard. Pursuit strategies construct nonoptimal yet efficient approximations with computational algorithms. Matching pursuits are greedy algorithms that select the dictionary vectors one by one, with applications to compression, denoising, and pattern recognition.

is NP-hard. Pursuit strategies construct nonoptimal yet efficient approximations with computational algorithms. Matching pursuits are greedy algorithms that select the dictionary vectors one by one, with applications to compression, denoising, and pattern recognition.

12.3.1 Matching Pursuit

Matching pursuit introduced by Mallat and Zhang [366] computes signal approximations from a redundant dictionary, by iteratively selecting one vector at a time. It is related to projection pursuit algorithms used in statistics [263] and to shape-gain vector quantizations [27].

Let ![]() be a dictionary of P > N vectors having a unit norm. This dictionary is supposed to be complete, which means that it includes N linearly independent vectors that define a basis of the signal space

be a dictionary of P > N vectors having a unit norm. This dictionary is supposed to be complete, which means that it includes N linearly independent vectors that define a basis of the signal space ![]() . A matching pursuit begins by projecting f on a vector

. A matching pursuit begins by projecting f on a vector ![]() and by computing the residue Rf:

and by computing the residue Rf:

To minimize ![]() , we must choose

, we must choose ![]() such that

such that ![]() is maximum. In some cases it is computationally more efficient to find a vector φpo that is almost optimal:

is maximum. In some cases it is computationally more efficient to find a vector φpo that is almost optimal: