Adaptive Filters

Vítor H. Nascimento and Magno T.M. Silva, Department of Electronic Systems Engineering, University of São Paulo, São Paulo, SP, Brazil, [email protected]

Abstract

This chapter provides an introduction to adaptive signal processing, covering basic principles through the most important recent developments. After a brief example for, we present an overview of how adaptive filters work, in which we use only deterministic arguments and concepts from basic linear systems theory, followed by a description of a few common applications of adaptive filters. Later, we turn to a more general model for adaptive filters based on stochastic processes and optimum estimation. Then three of the most important adaptive algorithms—LMS, NLMS, and RLS—are derived and analyzed in detail. The chapter closes with a brief description of some important extensions to the basic adaptive filtering algorithms and some promising current research topics. Since adaptive filter theory brings together results from several fields, short reviews are provided for most of the necessary material in separate sections, that the reader may consult if needed.

Keywords

Adaptive signal processing; Digital filters; Parameter estimation; Adaptive equalizers; Blind equalizers; Deconvolution; System identification; Echo cancellers

Acknowledgment

The authors thank Prof. Wallace A. Martins (Federal University of Rio de Janeiro) for his careful reading and comments on the text.

1.12.1 Introduction

Adaptive filters are employed in situations in which the environment is constantly changing, so that a fixed system would not have adequate performance. As they are usually applied in real-time applications, they must be based on algorithms that require a small number of computations per input sample.

These algorithms can be understood in two complementary ways. The most straightforward way follows directly from their name: an adaptive filter uses information from the environment and from the very signal it is processing to change itself, so as to optimally perform its task. The information from the environment may be sensed in real time (in the form of a so-called desired signal), or may be provided a priori, in the form of previous knowledge of the statistical properties of the input signal (as in blind equalization).

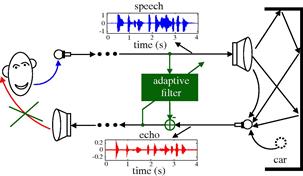

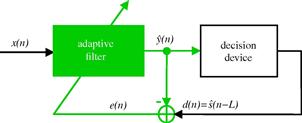

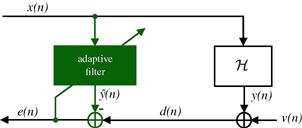

On the other hand, we can think of an adaptive filter also as an algorithm to separate a mixture of two signals. The filter must have some information about the signals to be able to separate them; usually this is given in the form of a reference signal, related to only one of the two terms in the mixture. The filter has then two outputs, corresponding to each signal in the mixture (see Figure 12.1). As a byproduct of separating the signals, the reference signal is processed in useful ways. This way of viewing an adaptive filter is very useful, particularly when one is learning the subject.

Figure 12.1 Inputs and outputs of an adaptive filter. Left: detailed diagram showing inputs, outputs and internal variable. Right: simplified diagram.

In the following sections we give an introduction to adaptive filters, covering from basic principles to the most important recent developments. Since the adaptive literature is vast, and the topic is still an active area of research, we were not able to treat all interesting topics. Along the text, we reference important textbooks, classic papers, and the most promising recent literature. Among the many very good textbooks on the subject, four of the most popular are [1–4].

We start with an application example, in the next section. In it, we show how an adaptive filter is applied to cancel acoustic echo in hands-free telephony. Along the text we return frequently to this example, to illustrate new concepts and methods. The example is completed by an overview of how adaptive filters work, in which we use only deterministic arguments and concepts from basic linear systems theory, in Section 1.12.1.2. This overview shows many of the compromises that arise in adaptive filter design, without the more complicated math. Section 1.12.1.3 gives a small glimpse of the wide variety of applications of adaptive filters, describing a few other examples. Of course, in order to fully understand the behavior of adaptive filters, one must use estimation theory and stochastic processes, which is the subject of Sections 1.12.2 and 1.12.3. The remaining sections are devoted to extensions to the basic algorithms and recent results.

Adaptive filter theory brings together results from several fields: signal processing, matrix analysis, control and systems theory, stochastic processes, and optimization. We tried to provide short reviews for most of the necessary material in separate links (“boxes”), that the reader may follow if needed.

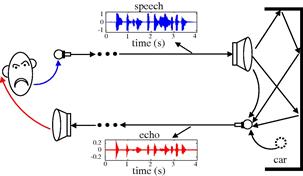

1.12.1.1 Motivation—acoustic echo cancellation

Suppose that you are talking with a person who uses a hands-free telephone inside a car. Let us assume that the telephone does not have any acoustic echo canceler. In this case, the sound of your voice will reach the loudspeaker, propagate inside the car, and suffer reflections every time it encounters the walls of the car. Part of the sound can be absorbed by the walls, but part of it is reflected, resulting in an echo signal. This signal is fed back to the microphone and you will hear your own voice with delay and attenuation, as shown in Figure 12.2.

Figure 12.2 Echo in hands-free telephony. Click speech.wav to listen to a speech signal and echo.wav to listen to the corresponding echo signal.

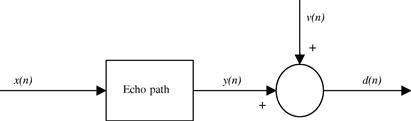

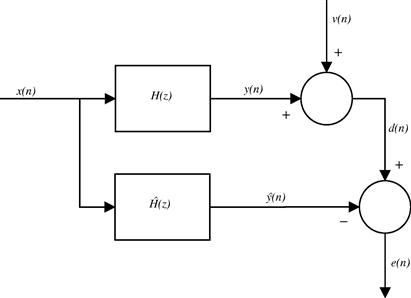

The echo signal depends on the acoustic characteristics of each car and also on the location of the loudspeaker and microphone. Furthermore, the acoustic characteristics can change during a phone call, since the driver may open or close a window, for example. In order to follow the variations of the environment, the acoustic echo must be canceled by an adaptive filter, which performs on-line updating of its parameters through an algorithm. Figure 12.3 shows the insertion of an adaptive filter in the system of Figure 12.2 to cancel the echo signal. In this configuration, the adaptive filter must identify the echo path and provide at its output an estimate of the echo signal. Thus, a synthesized version of the echo is subtracted from the signal picked up by the car microphone. In an ideal scenario, the resulting signal will be free of echo and will contain only the signal of interest. The general set-up of an echo canceler is shown in Figure 12.4. The near-end signal (near with respect to the echo canceler) may be simply noise when the person inside the car is in silence, as considered in Figures 12.2 and 12.3, or it can be a signal of interest, that is, the voice of the user in the car.

You most likely have already heard the output of an adaptive echo canceler. However, you probably did not pay attention to the initial period, when you can hear the adaptive filter learning the echo impulse response, if you listen carefully. We illustrate this through a simulation, using a measured impulse response of 256 samples (sampled at 8 kHz), and an echo signal produced from a recorded voice signal. The measured impulse response is shown in Figure 12.5. In the first example, the near-end signal is low-power noise (more specifically, white Gaussian noise with zero mean and variance ![]() ). Listen to e_nlms.wav, and pay attention to the beginning. You will notice that the voice (the echo) is fading away, while the adaptive filter is learning the echo impulse response. This file is the output of an echo canceler adapted using the normalized least-mean squares algorithm (NLMS), which is described in Section 1.12.3.2.

). Listen to e_nlms.wav, and pay attention to the beginning. You will notice that the voice (the echo) is fading away, while the adaptive filter is learning the echo impulse response. This file is the output of an echo canceler adapted using the normalized least-mean squares algorithm (NLMS), which is described in Section 1.12.3.2.

Listen now to e_lsl.wav in this case the filter was adapted using the modified error-feedback least-squares lattice algorithm (EF-LSL) [5], which is a low-cost and stable version of the recursive least-squares algorithm (RLS) described in Section 1.12.3.3. Now you will notice that the echo fades away much faster. This fast convergence is characteristic of the EF-LSL algorithm, and is of course a very desirable property. On the other hand, the NLMS algorithm is simpler to implement and more robust against imperfections in the implementation. An adaptive filtering algorithm must satisfy several requirements, such as convergence rate, tracking capability, noise rejection, computational complexity, and robustness. These requirements are conflicting, so that there is no best algorithm that will outperform all the others in every situation. The many algorithms available in the literature are different compromises between these requirements [1–3].

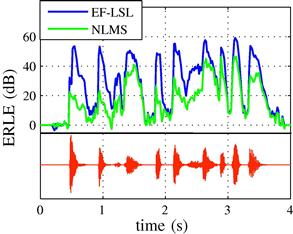

Figure 12.6 shows 4 s of the echo signal, prior to cancellation (bottom) and a measure of the quality of echo cancellation, the so-called echo return loss enhancement (ERLE) (top). The ERLE is defined as the ratio

![]()

The higher the ERLE, the better the performance of the canceler. The ERLE drops when the original echo is small (there is nothing to cancel!), and increases again when the echo is strong. The figure shows that EF-LSL performs better than NLMS (but at a higher computational cost).

Figure 12.6 Echo return loss enhancement for NLMS ![]() and EF-LSL

and EF-LSL ![]() algorithms. Bottom trace: echo signal prior to cancellation. The meaning of the several parameters is explained in Sections 1.12.3.1, 1.12.3.2, and 1.12.3.3.

algorithms. Bottom trace: echo signal prior to cancellation. The meaning of the several parameters is explained in Sections 1.12.3.1, 1.12.3.2, and 1.12.3.3.

Assuming now that the near-end signal is another recorded voice signal (Click DT_echo.wav to listen to this signal), we applied again the EF-LSL algorithm to cancel the echo. This case simulates a person talking inside the car. Since the speech of this person is not correlated to the far-end signal, the output of the adaptive filter converges again to an estimate of the echo signal. Thus, a synthesized version of the echo is subtracted from the signal picked up by the car microphone and the resulting signal converges to the speech of the person inside the car. This result can be verified by listening to the file e_lslDT.wav.

This is a typical example of use of an adaptive filter. The environment (the echo impulse response) changes constantly, requiring a constant re-tuning of the canceling filter. Although the echo itself is not known, we have access to a reference signal (the clean speech of the far-end user). This is the signal that is processed to obtain an estimate of the echo. Finally, the signal captured by the near-end microphone (the so-called desired signal) is a mixture of two signals: the echo, plus the near-end speech (or ambient noise, when the near-end user is silent). The task of the adaptive filter can be seen as to separate these two signals.

We should mention that the name desired signal is somewhat misleading, although widespread in the literature. Usually we are interested in separating the “desired” signal into two parts, one of which is of no interest at all!

We proceed now to show how an algorithm can perform this task, modeling all signals as periodic to simplify the arguments.

1.12.1.2 A quick tour of adaptive filtering

Before embarking in a detailed explanation of adaptive filtering and its theory, it is a good idea to present the main ideas in a simple setting, to help the reader create intuition. In this section, we introduce the least-mean squares (LMS) algorithm and some of its properties using only deterministic arguments and basic tools from linear systems theory.

1.12.1.2.1 Posing the problem

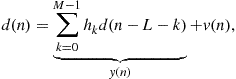

Consider again the echo-cancellation problem, seen in the previous section (see Figure 12.7). Let ![]() represent the echo,

represent the echo, ![]() represent the voice of the near-end user, and

represent the voice of the near-end user, and ![]() the voice of the far-end user (the reference).

the voice of the far-end user (the reference). ![]() represents the signal that would be received by the far-end user without an echo canceler (the mixture, or desired signal).

represents the signal that would be received by the far-end user without an echo canceler (the mixture, or desired signal).

The echo path represents the changes that the far-end signal suffers when it goes through the digital-to-analog (D/A) converter, the loudspeaker, the air path between the loudspeaker and the microphone (including all reflections), the microphone itself and its amplifier, and the analog-to-digital (A/D) converter. The microphone signal ![]() will always be a mixture of the echo

will always be a mixture of the echo ![]() and an unrelated signal

and an unrelated signal ![]() , which is composed of the near-end speech plus noise. Our goal is to remove

, which is composed of the near-end speech plus noise. Our goal is to remove ![]() from

from ![]() . The challenge is that we have no means to measure

. The challenge is that we have no means to measure ![]() directly, and the echo path is constantly changing.

directly, and the echo path is constantly changing.

1.12.1.2.2 Measuring how far we are from the solution

How is the problem solved, then? Since we cannot measure ![]() , the solution is to estimate it from the measurable signals

, the solution is to estimate it from the measurable signals ![]() and

and ![]() . This is done as follows: imagine for now that all signals are periodic, so they can be decomposed as Fourier series

. This is done as follows: imagine for now that all signals are periodic, so they can be decomposed as Fourier series

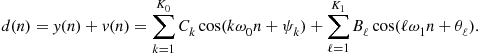

(12.1)

(12.1)

for certain amplitudes ![]() and

and ![]() , phases

, phases ![]() and

and ![]() , and frequencies

, and frequencies ![]() and

and ![]() . The highest frequencies appearing in

. The highest frequencies appearing in ![]() and

and ![]() must satisfy

must satisfy ![]() ,

, ![]() , since we are dealing with sampled signals (Nyquist criterion). We assume for now that the echo path is fixed (not changing), and can be modeled by an unknown linear transfer function

, since we are dealing with sampled signals (Nyquist criterion). We assume for now that the echo path is fixed (not changing), and can be modeled by an unknown linear transfer function ![]() . In this case,

. In this case, ![]() would also be a periodic sequence with fundamental frequency

would also be a periodic sequence with fundamental frequency ![]() ,

,

with ![]() . The signal picked by the microphone is then

. The signal picked by the microphone is then

Since we know ![]() , if we had a good approximation

, if we had a good approximation ![]() to

to ![]() , we could easily obtain an approximation

, we could easily obtain an approximation ![]() to

to ![]() and subtract it from

and subtract it from ![]() , as in Figure 12.8.

, as in Figure 12.8.

How could we find ![]() in real time? Recall that only

in real time? Recall that only ![]() , and

, and ![]() can be observed. For example, how could we know that we have the exact filter, that is, that

can be observed. For example, how could we know that we have the exact filter, that is, that ![]() , by looking only at these signals? To answer this question, take a closer look at

, by looking only at these signals? To answer this question, take a closer look at ![]() . Let the output of

. Let the output of ![]() be

be

(12.2)

(12.2)

Assuming that the cosines in ![]() and

and ![]() have no frequencies in common, the simplest approach is to measure the average power of

have no frequencies in common, the simplest approach is to measure the average power of ![]() . Indeed, if all frequencies

. Indeed, if all frequencies ![]() and

and ![]() appearing in

appearing in ![]() are different from one another, then the average power of

are different from one another, then the average power of ![]() is

is

(12.3)

(12.3)

If ![]() , then

, then ![]() is at its minimum,

is at its minimum,

which is the average power of ![]() . In this case,

. In this case, ![]() is at its optimum:

is at its optimum: ![]() , and the echo is completely canceled.

, and the echo is completely canceled.

It is important to see that this works because the two signals we want to separate, ![]() and

and ![]() , are uncorrelated, that is, they are such that

, are uncorrelated, that is, they are such that

(12.4)

(12.4)

This property is known as the orthogonality condition. A form of orthogonality condition will appear in general whenever one tries to minimize a quadratic function, as is the case here. This is discussed in more detail in Section 1.12.2.

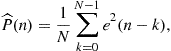

The average power ![]() could then be used as a measure of how good is our approximation

could then be used as a measure of how good is our approximation ![]() . The important point is that it is very easy to find a good approximation to

. The important point is that it is very easy to find a good approximation to ![]() . It suffices to low-pass filter

. It suffices to low-pass filter ![]() , for example,

, for example,

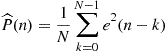

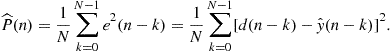

(12.5)

(12.5)

is a good approximation if the window length ![]() is large enough.

is large enough.

The adaptive filtering problem then becomes an optimization problem, with the particularity that the cost function that is being minimized is not known exactly, but only through an approximation, as in (12.5). In the following sections, we discuss in detail the consequences of this fact.

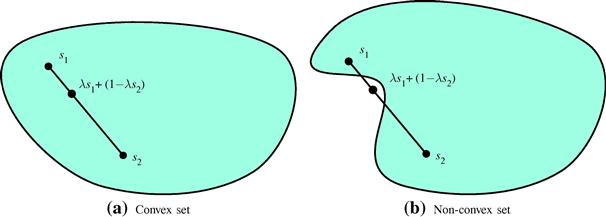

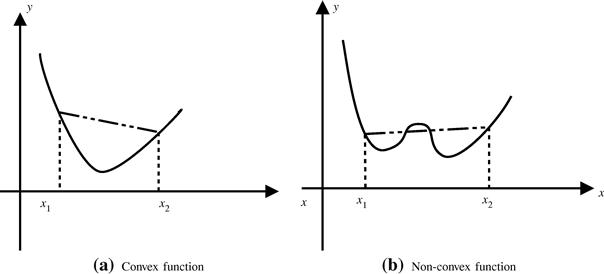

It is also important to remark that the average error power is not the only possible choice for cost function. Although it is the most popular choice for a number of reasons, in some applications other choices are more adequate. Even posing the adaptive filtering problem as an optimization problem is not the only alternative, as shown by recent methods based on projections on convex sets [6].

1.12.1.2.3 Choosing a structure for the filter

Our approximation ![]() will be built by minimizing the estimated average error power (12.5) as a function of the echo path model

will be built by minimizing the estimated average error power (12.5) as a function of the echo path model ![]() , that is, our estimated echo path will be the solution of

, that is, our estimated echo path will be the solution of

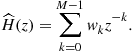

![]() (12.6)

(12.6)

Keep in mind that we are looking for a real-time way of solving (12.6), since ![]() can only be obtained by measuring

can only be obtained by measuring ![]() for a certain amount of time. We must then be able to implement the current approximation

for a certain amount of time. We must then be able to implement the current approximation ![]() , so that

, so that ![]() and

and ![]() may be computed.

may be computed.

This will impose practical restrictions on the kind of function that ![]() may be: since memory and processing power are limited, we must choose beforehand a structure for

may be: since memory and processing power are limited, we must choose beforehand a structure for ![]() . Memory and processing power will impose a constraint on the maximum order the filter may have. In addition, in order to write the code for the filter, we must decide if it will depend on past outputs (IIR filters) or only on past inputs (FIR filters). These choices must be made based on some knowledge of the kind of echo path that our system is likely to encounter.

. Memory and processing power will impose a constraint on the maximum order the filter may have. In addition, in order to write the code for the filter, we must decide if it will depend on past outputs (IIR filters) or only on past inputs (FIR filters). These choices must be made based on some knowledge of the kind of echo path that our system is likely to encounter.

To explain how these choices can be made, let us simplify the problem a little more and restrict the far-end signal ![]() to a simple sinusoid (i.e., assume that

to a simple sinusoid (i.e., assume that ![]() ). In this case,

). In this case,

![]()

with ![]() . Therefore,

. Therefore, ![]() must satisfy

must satisfy

![]() (12.7)

(12.7)

only for ![]() —the value of

—the value of ![]() for other frequencies is irrelevant, since the input

for other frequencies is irrelevant, since the input ![]() has only one frequency. Expanding (12.7), we obtain

has only one frequency. Expanding (12.7), we obtain

![]() (12.8)

(12.8)

The optimum ![]() is defined through two conditions. Therefore an FIR filter with just two coefficients would be able to satisfy both conditions for any value of

is defined through two conditions. Therefore an FIR filter with just two coefficients would be able to satisfy both conditions for any value of ![]() , so we could define

, so we could define

![]()

and the values of ![]() and

and ![]() would be chosen to solve (12.6). Note that the argument generalizes for

would be chosen to solve (12.6). Note that the argument generalizes for ![]() : in general, if

: in general, if ![]() has

has ![]() harmonics, we could choose

harmonics, we could choose ![]() as an FIR filter with length

as an FIR filter with length ![]() , two coefficients per input harmonic.

, two coefficients per input harmonic.

This choice is usually not so simple: in practice, we would not know ![]() , and in the presence of noise (as we shall see later), a filter with fewer coefficients might give better performance, even though it would not completely cancel the echo. The structure of the filter also affects the dynamic behavior of the adaptive filter, so simply looking at equations such as (12.7) does not tell the whole story. Choosing the structure for

, and in the presence of noise (as we shall see later), a filter with fewer coefficients might give better performance, even though it would not completely cancel the echo. The structure of the filter also affects the dynamic behavior of the adaptive filter, so simply looking at equations such as (12.7) does not tell the whole story. Choosing the structure for ![]() is one of the most difficult steps in adaptive filter design. In general, the designer must test different options, initially perhaps using recorded signals, but ultimately building a prototype and performing some tests.

is one of the most difficult steps in adaptive filter design. In general, the designer must test different options, initially perhaps using recorded signals, but ultimately building a prototype and performing some tests.

1.12.1.2.4 Searching for the solution

Returning to our problem, assume then that we have decided that an FIR filter with length ![]() is adequate to model the echo path, that is,

is adequate to model the echo path, that is,

Our minimization problem (12.6) now reduces to finding the coefficients ![]() that solve

that solve

![]() (12.9)

(12.9)

Since ![]() must be computed from measurements, we must use an iterative method to solve (12.9). Many algorithms could be used, as long as they depend only on measurable quantities (

must be computed from measurements, we must use an iterative method to solve (12.9). Many algorithms could be used, as long as they depend only on measurable quantities (![]() , and

, and ![]() ). We will use the steepest descent algorithm (also known as gradient algorithm) as an example now. Later we show other methods that also could be used. If you are not familiar with the gradient algorithm, see Box 1 for an introduction.

). We will use the steepest descent algorithm (also known as gradient algorithm) as an example now. Later we show other methods that also could be used. If you are not familiar with the gradient algorithm, see Box 1 for an introduction.

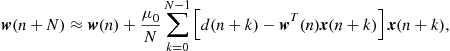

The cost function in (12.9) is

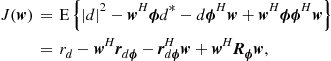

We need to rewrite this equation so that the steepest descent algorithm can be applied. Recall also that now the filter coefficients will change, so define the vectors

![]()

and

![]()

where ![]() denotes transposition. At each instant, we have

denotes transposition. At each instant, we have ![]() , and our cost function becomes

, and our cost function becomes

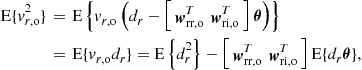

(12.10)

(12.10)

which depends on ![]() . In order to apply the steepest descent algorithm, let us keep the coefficient vector

. In order to apply the steepest descent algorithm, let us keep the coefficient vector ![]() constant during the evaluation of

constant during the evaluation of ![]() , as follows. Starting from an initial condition

, as follows. Starting from an initial condition ![]() , compute for

, compute for ![]() (The notation is explained in Boxes 2 and 6.)

(The notation is explained in Boxes 2 and 6.)

![]() (12.11)

(12.11)

where ![]() is a positive constant, and

is a positive constant, and ![]() . Our cost function now depends on only one

. Our cost function now depends on only one ![]() (compare with (12.10)):

(compare with (12.10)):

(12.12)

(12.12)

We now need to evaluate the gradient of ![]() . Expanding the expression above, we obtain

. Expanding the expression above, we obtain

and

(12.13)

(12.13)

As we needed, this gradient depends only on measurable quantities, ![]() and

and ![]() , for

, for ![]() . Our algorithm for updating the filter coefficients then becomes

. Our algorithm for updating the filter coefficients then becomes

(12.14)

(12.14)

where we introduced the overall step-size ![]() .

.

We still must choose ![]() and

and ![]() . The choice of

. The choice of ![]() is more complicated and will be treated in Section 1.12.1.2.5. The value usually employed for

is more complicated and will be treated in Section 1.12.1.2.5. The value usually employed for ![]() may be a surprise: in almost all cases, one uses

may be a surprise: in almost all cases, one uses ![]() , resulting in the so-called least-mean squares (LMS) algorithm

, resulting in the so-called least-mean squares (LMS) algorithm

![]() (12.15)

(12.15)

proposed initially by Widrow and Hoff in 1960 [7] (Widrow [8] describes the history of the creation of the LMS algorithm). The question is, how can this work, if no average is being used for estimating the error power? An intuitive answer is not complicated: assume that ![]() is a very small number so that

is a very small number so that ![]() for a large

for a large ![]() . In this case, we can approximate

. In this case, we can approximate ![]() from (12.15) as follows.

from (12.15) as follows.

![]()

Since ![]() , we have

, we have ![]() . Therefore, we could approximate

. Therefore, we could approximate

![]()

so ![]() steps of the LMS recursion (12.15) would result

steps of the LMS recursion (12.15) would result

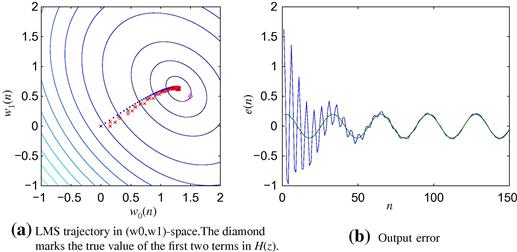

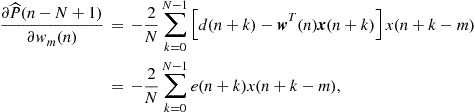

just what would be obtained from (12.14). The conclusion is that, although there is no explicit average being taken in the LMS algorithm (12.15), the algorithm in fact computes an implicit, approximate average if the step-size is small. This is exactly what happens, as can be seen in the animations in Figure 12.9. These simulations were prepared with

![]()

where ![]() and

and ![]() were chosen randomly in the interval

were chosen randomly in the interval ![]() . Several step-sizes were used. The estimates computed with the LMS algorithm are marked by the crosses (red in the web version). The initial condition is at the left, and the theoretical optimum is at the right end of each figure. In the simulations, the true echo path was modeled by the fixed filter

. Several step-sizes were used. The estimates computed with the LMS algorithm are marked by the crosses (red in the web version). The initial condition is at the left, and the theoretical optimum is at the right end of each figure. In the simulations, the true echo path was modeled by the fixed filter

![]()

For comparison, we also plotted the estimates that would be obtained by the gradient algorithm using the exact value of the error power at each instant. These estimates are marked with small dots (blue in the web version).

Figure 12.9 LMS algorithm for echo cancelation with sinusoidal input. Click LMS video files to see animations on your browser.

Note that when a small step-size is used, such as ![]() in Figure 12.9a, the LMS filter stays close to the dots obtained assuming exact knowledge of the error power. However, as the step-size increases, the LMS estimates move farther away from the dots. Although convergence is faster, the LMS estimates do not reach the optimum and stay there: instead, they hover around the optimum. For larger step-sizes, the LMS estimates can go quite far from the optimum (Figure 12.9b and c). Finally, if the step-size is too large, the algorithm will diverge, that is, the filter coefficients grow without bounds (Figure 12.9d).

in Figure 12.9a, the LMS filter stays close to the dots obtained assuming exact knowledge of the error power. However, as the step-size increases, the LMS estimates move farther away from the dots. Although convergence is faster, the LMS estimates do not reach the optimum and stay there: instead, they hover around the optimum. For larger step-sizes, the LMS estimates can go quite far from the optimum (Figure 12.9b and c). Finally, if the step-size is too large, the algorithm will diverge, that is, the filter coefficients grow without bounds (Figure 12.9d).

1.12.1.2.5 Tradeoff between speed and precision

The animations in Figure 12.9 illustrate an important problem in adaptive filters: even if you could magically chose as initial conditions the exact optimum solutions, the adaptive filter coefficients would not stay there! This happens because the filter does not know the exact value of the cost function it is trying to minimize (in this case, the error power): since ![]() is an approximation, noise (and the near-end signal in our echo cancellation example) would make the estimated gradient non-zero, and the filter would wander away from the optimum solution. This effect keeps the minimum error power obtainable using the adaptive filter always a little higher than the optimum value. The difference between the (theoretical, unattainable without perfect information) optimum and the actual error power is known as excess mean-square error (EMSE), and the ratio between the EMSE and the optimum error is known as misadjustment (see Eqs. (12.171) and (12.174)).

is an approximation, noise (and the near-end signal in our echo cancellation example) would make the estimated gradient non-zero, and the filter would wander away from the optimum solution. This effect keeps the minimum error power obtainable using the adaptive filter always a little higher than the optimum value. The difference between the (theoretical, unattainable without perfect information) optimum and the actual error power is known as excess mean-square error (EMSE), and the ratio between the EMSE and the optimum error is known as misadjustment (see Eqs. (12.171) and (12.174)).

For small step-sizes the misadjustment is small, since the LMS estimates stay close to the estimates that would be obtained with exact knowledge of the error power. However, a small step-size also means slow convergence. This trade-off exists in all adaptive filter algorithms, and has been an intense topic of research: many algorithms have been proposed to allow faster convergence without increasing the misadjustment. We will see some of them in the next sections.

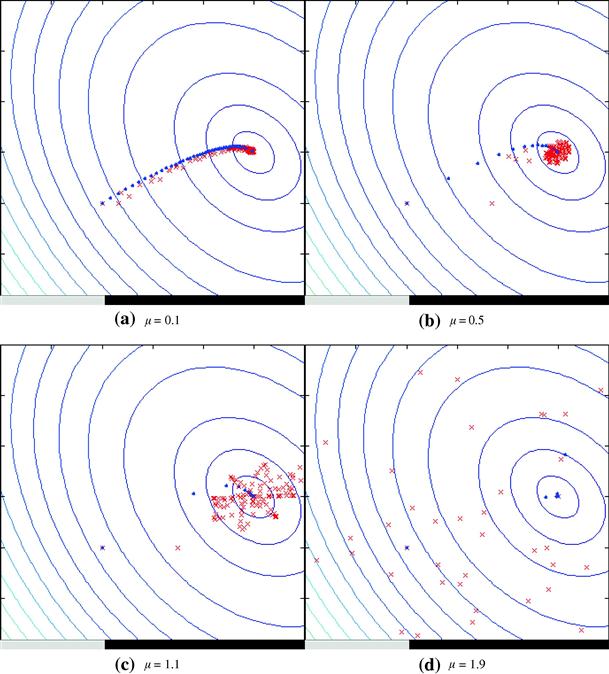

The misadjustment is central to evaluate the performance of an adaptive filter. In fact, if we want to eliminate the echo from the near-end signal, we would like that after convergence, ![]() . This is indeed what happens when the step-size is small (see Figure 12.10a). However, when the step-size is increased, although the algorithm converges more quickly, the performance after convergence is not very good, because of the wandering of the filter weights around the optimum (Figure 12.10b–d—in the last case, for

. This is indeed what happens when the step-size is small (see Figure 12.10a). However, when the step-size is increased, although the algorithm converges more quickly, the performance after convergence is not very good, because of the wandering of the filter weights around the optimum (Figure 12.10b–d—in the last case, for ![]() , the algorithm is diverging.).

, the algorithm is diverging.).

Figure 12.10 Compromise between convergence rate and misadjustment in LMS. Plots of ![]() for several values of

for several values of ![]() .

.

We will stop this example here for the moment, and move forward to a description of adaptive filters using probability theory and stochastic processes. We will return to it during discussions of stability and model order selection.

The important message from this section is that an adaptive filter is able to separate two signals in a mixture by minimizing a well-chosen cost function. The minimization must be made iteratively, since the true value of the cost function is not known: only an approximation to it may be computed at each step. When the cost function is quadratic, as in the example shown in this section, the components of the mixture are separated such that they are in some sense orthogonal to each other.

1.12.1.3 Applications

Due to the ability to adjust themselves to different environments, adaptive filters can be used in different signal processing and control applications. Thus, they have been used as a powerful device in several fields, such as communications, radar, sonar, biomedical engineering, active noise control, modeling, etc. It is common to divide these applications into four groups:

In the first three cases, the goal of the adaptive filter is to find an approximation ![]() for the signal

for the signal ![]() , which is contained in the signal

, which is contained in the signal ![]() . Thus, as

. Thus, as ![]() approaches

approaches ![]() , the signal

, the signal ![]() approaches

approaches ![]() . The difference between these applications is in what we are interested. In interference cancellation, we are interested in the signal

. The difference between these applications is in what we are interested. In interference cancellation, we are interested in the signal ![]() , as is the case of acoustic echo cancellation, where

, as is the case of acoustic echo cancellation, where ![]() is the speech of the person using the hands-free telephone (go back to Figures 12.4 and 12.7). In system identification, we are interested in the filter parameters, and in prediction, we may be interested in the signal

is the speech of the person using the hands-free telephone (go back to Figures 12.4 and 12.7). In system identification, we are interested in the filter parameters, and in prediction, we may be interested in the signal ![]() and/or in the filter parameters. Note that in all these applications (interference cancellation, system identification and prediction), the signal

and/or in the filter parameters. Note that in all these applications (interference cancellation, system identification and prediction), the signal ![]() is an approximation for

is an approximation for ![]() and should not converge to zero, except when

and should not converge to zero, except when ![]() . In inverse system identification, differently from the other applications, the signal at the output of the adaptive filter must be as close as possible to the signal

. In inverse system identification, differently from the other applications, the signal at the output of the adaptive filter must be as close as possible to the signal ![]() and thus, ideally, the signal

and thus, ideally, the signal ![]() should be zeroed. In the sequel, we give an overview of these four groups in order to arrive at a common formulation that will simplify our analysis of adaptive filtering algorithms in further sections.

should be zeroed. In the sequel, we give an overview of these four groups in order to arrive at a common formulation that will simplify our analysis of adaptive filtering algorithms in further sections.

1.12.1.3.1 Interference cancellation

In a general interference cancellation problem, we have access to a signal ![]() , which is a mixture of two other signals,

, which is a mixture of two other signals, ![]() and

and ![]() . We are interested in one of these signals, and want to separate it from the other (the interference) (see Figure 12.13). Even though we do not know

. We are interested in one of these signals, and want to separate it from the other (the interference) (see Figure 12.13). Even though we do not know ![]() or

or ![]() , we have some information about

, we have some information about ![]() , usually in the form of a reference signal

, usually in the form of a reference signal ![]() that is related to

that is related to ![]() through a filtering operation

through a filtering operation ![]() . We may know the general form of this operation, for example, we may know that the relation is linear and well approximated by an FIR filter with 200 coefficients. However, we do not know the parameters (the filter coefficients) necessary to reproduce it. The goal of the adaptive filter is to find an approximation

. We may know the general form of this operation, for example, we may know that the relation is linear and well approximated by an FIR filter with 200 coefficients. However, we do not know the parameters (the filter coefficients) necessary to reproduce it. The goal of the adaptive filter is to find an approximation ![]() to

to ![]() , given only

, given only ![]() and

and ![]() . In the process of finding this

. In the process of finding this ![]() , the adaptive filter will construct an approximation for the relation

, the adaptive filter will construct an approximation for the relation ![]() . A typical example of an interference cancellation problem is the echo cancellation example we gave in Section 1.12.1.1. In that example,

. A typical example of an interference cancellation problem is the echo cancellation example we gave in Section 1.12.1.1. In that example, ![]() is the far-end voice signal,

is the far-end voice signal, ![]() is the near-end voice signal, and

is the near-end voice signal, and ![]() is the echo. The relation

is the echo. The relation ![]() is usually well approximated by a linear FIR filter with a few hundred taps.

is usually well approximated by a linear FIR filter with a few hundred taps.

Figure 12.13 Scheme for interference cancellation and system identification. ![]() and

and ![]() are correlated to each other.

are correlated to each other.

The approximation for ![]() is a by-product, that does not need to be very accurate as long as it leads to a

is a by-product, that does not need to be very accurate as long as it leads to a ![]() close to

close to ![]() . This configuration is shown in Figure 12.13 and is called interference cancellation, since the interference

. This configuration is shown in Figure 12.13 and is called interference cancellation, since the interference ![]() should be canceled. Note that we do not want to make

should be canceled. Note that we do not want to make ![]() , otherwise we would not only be killing the interference

, otherwise we would not only be killing the interference ![]() , but also the signal of interest

, but also the signal of interest ![]() .

.

There are many applications of interference cancellation. In addition to acoustic and line echo cancellation, we can mention, for example, adaptive notch filters for cancellation of sinusoidal interference (a common application is removal of 50 or 60 Hz interference from the mains line), cancellation of the maternal electrocardiography in fetal electrocardiography, cancellation of echoes in long distance telephone circuits, active noise control (as in noise-canceling headphones), and active vibration control.

1.12.1.3.2 System identification

In interference cancellation, the coefficients of the adaptive filter converge to an approximation for the true relation ![]() , but as mentioned before, this approximation is a by-product that does not need to be very accurate. However, there are some applications in which the goal is to construct an approximation as accurate as possible for the unknown relation

, but as mentioned before, this approximation is a by-product that does not need to be very accurate. However, there are some applications in which the goal is to construct an approximation as accurate as possible for the unknown relation ![]() between

between ![]() and

and ![]() , thus obtaining a model for the unknown system. This is called system identification or modeling (the diagram in Figure 12.13 applies also for this case). The signal

, thus obtaining a model for the unknown system. This is called system identification or modeling (the diagram in Figure 12.13 applies also for this case). The signal ![]() is now composed of the output

is now composed of the output ![]() of an unknown system

of an unknown system ![]() , plus noise

, plus noise ![]() . The reference signal

. The reference signal ![]() is the input to the system which, when possible, is chosen to be white noise. In general, this problem is harder than interference cancellation, as we shall see in Section 1.12.2.1.5, due to some conditions that the reference signal

is the input to the system which, when possible, is chosen to be white noise. In general, this problem is harder than interference cancellation, as we shall see in Section 1.12.2.1.5, due to some conditions that the reference signal ![]() must satisfy. However, one point does not change: in the ideal case, the signal

must satisfy. However, one point does not change: in the ideal case, the signal ![]() will be equal to the noise

will be equal to the noise ![]() . Again, we do not want to make

. Again, we do not want to make ![]() , otherwise we would be trying to model the noise (however, the smaller the noise the easier the task, as one would expect).

, otherwise we would be trying to model the noise (however, the smaller the noise the easier the task, as one would expect).

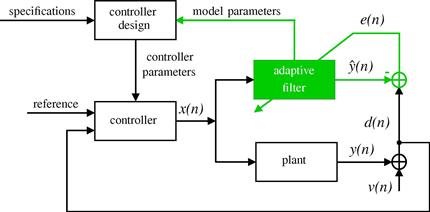

In many control systems, an unknown dynamic system (also called as plant in control system terminology) is identified online and the result is used in a self-tuning controller, as depicted in Figure 12.14. Both the plant and the adaptive filter have the same input ![]() . In practical situations, the plant to be modeled is noisy, which is represented by the signal

. In practical situations, the plant to be modeled is noisy, which is represented by the signal ![]() added to the plant output. The noise

added to the plant output. The noise ![]() is generally uncorrelated with the plant input. The task of the adaptive filter is to minimize the error model

is generally uncorrelated with the plant input. The task of the adaptive filter is to minimize the error model ![]() and track the time variations in the dynamics of the plant. The model parameters are continually fed back to the controller to obtain the controller parameters used in the self-tuning regulator loop [15].

and track the time variations in the dynamics of the plant. The model parameters are continually fed back to the controller to obtain the controller parameters used in the self-tuning regulator loop [15].

There are many applications that require the identification of an unknown system. In addition to control systems, we can mention, for example, the identification of the room impulse response, used to study the sound quality in concert rooms, and the estimation of the communication channel impulse response, required by maximum likelihood detectors and blind equalization techniques based on second-order statistics.

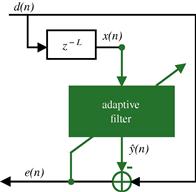

1.12.1.3.3 Prediction

In prediction, the goal is to find a relation between the current sample ![]() and previous samples

and previous samples ![]() , as shown in Figure 12.15. Therefore, we want to model

, as shown in Figure 12.15. Therefore, we want to model ![]() as a part

as a part ![]() that depends only on

that depends only on ![]() , and a part

, and a part ![]() that represents new information. For example, for a linear model, we would have

that represents new information. For example, for a linear model, we would have

Thus, we in fact have again the same problem as in Figure 12.13. The difference is that now the reference signal is a delayed version of ![]() , and the adaptive filter will try to find an approximation

, and the adaptive filter will try to find an approximation ![]() for

for ![]() , thereby separating the “predictable” part

, thereby separating the “predictable” part ![]() of

of ![]() from the “new information”

from the “new information” ![]() , to which the signal

, to which the signal ![]() should converge. The system

should converge. The system ![]() in Figure 12.13 now represents the relation between

in Figure 12.13 now represents the relation between ![]() and the predictable part of

and the predictable part of ![]() .

.

Prediction finds application in many fields. We can mention, for example, linear predictive coding (LPC) and adaptive differential pulse code modulation (ADPCM) used in speech coding, adaptive line enhancement, autoregressive spectral analysis, etc.

For example, adaptive line enhancement (ALE) seeks the solution for a classical detection problem, whose objective is to separate a narrowband signal ![]() from a wideband signal

from a wideband signal ![]() . This is the case, for example, of finding a low-level sine wave (predictable narrowband signal) in noise (non-predictable wideband signal). The signal

. This is the case, for example, of finding a low-level sine wave (predictable narrowband signal) in noise (non-predictable wideband signal). The signal ![]() is constituted by the sum of these two signals. Using

is constituted by the sum of these two signals. Using ![]() as the reference signal and a delayed replica of it, i.e.,

as the reference signal and a delayed replica of it, i.e., ![]() , as input of the adaptive filter as in Figure 12.15, the output

, as input of the adaptive filter as in Figure 12.15, the output ![]() provides an estimate of the (predictable) narrowband signal

provides an estimate of the (predictable) narrowband signal ![]() , while the error signal

, while the error signal ![]() provides an estimate of the wideband signal

provides an estimate of the wideband signal ![]() . The delay

. The delay ![]() is also known as decorrelation parameter of the ALE, since its main function is to remove the correlation between the wideband signal

is also known as decorrelation parameter of the ALE, since its main function is to remove the correlation between the wideband signal ![]() present in the reference

present in the reference ![]() and in the delayed predictor input

and in the delayed predictor input ![]() .

.

1.12.1.3.4 Inverse system identification

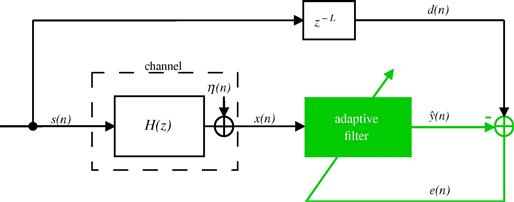

Inverse system identification, also known as deconvolution, has been widely used in different fields as communications, acoustics, optics, image processing, control, among others. In communications, it is also known as channel equalization and the adaptive filter is commonly called as equalizer. Adaptive equalizers play an important role in digital communications systems, since they are used to mitigate the inter-symbol interference (ISI) introduced by dispersive channels. Due to its importance, we focus on the equalization application, which is explained in the sequel.

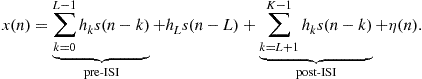

A simplified baseband communications system is depicted in Figure 12.16. The signal ![]() is transmitted through an unknown channel, whose model is constituted by an FIR filter with transfer function

is transmitted through an unknown channel, whose model is constituted by an FIR filter with transfer function ![]() and additive noise

and additive noise ![]() . Due to the channel memory, the signal at the receiver contains contributions not only from

. Due to the channel memory, the signal at the receiver contains contributions not only from ![]() , but also from the previous symbols

, but also from the previous symbols ![]() , i.e.,

, i.e.,

(12.38)

(12.38)

Assuming that the overall channel-equalizer system imposes a delay of ![]() samples, the adaptive filter will try to find an approximation

samples, the adaptive filter will try to find an approximation ![]() for

for ![]() and for this purpose, the two summations in (12.38), which constitute the inter-symbol interference, must be mitigated. Of course, when you are transmitting information the receiver will not have access to

and for this purpose, the two summations in (12.38), which constitute the inter-symbol interference, must be mitigated. Of course, when you are transmitting information the receiver will not have access to ![]() . The filter will adapt during a training phase, in which the transmitter sends a pre-agreed signal. You can see that in this case, the role of

. The filter will adapt during a training phase, in which the transmitter sends a pre-agreed signal. You can see that in this case, the role of ![]() and

and ![]() is the reverse of that in system identification. In this case, you would indeed like to have

is the reverse of that in system identification. In this case, you would indeed like to have ![]() . The problem is that this is not possible, given the presence of noise in

. The problem is that this is not possible, given the presence of noise in ![]() . The role of the adaptive filter is to approximately invert the effect of the channel, at the same time trying to suppress the noise (or at least, not to amplify it too much). In one sense, we are back to the problem of separating two signals, but now the mixture is in the reference signal

. The role of the adaptive filter is to approximately invert the effect of the channel, at the same time trying to suppress the noise (or at least, not to amplify it too much). In one sense, we are back to the problem of separating two signals, but now the mixture is in the reference signal ![]() , so what can and what cannot be done is considerably different from the other cases.

, so what can and what cannot be done is considerably different from the other cases.

The scheme of Figure 12.16 is also called training mode, since the delayed version of the transmitted sequence ![]() (training sequence) is known at the receiver. After the convergence of the filter, the signal

(training sequence) is known at the receiver. After the convergence of the filter, the signal ![]() is changed to the estimate

is changed to the estimate ![]() obtained at the output of a decision device, as shown in Figure 12.17. In this case, the equalizer works in the so-called decision-directed mode. The decision device depends on the signal constellation—for example, if

obtained at the output of a decision device, as shown in Figure 12.17. In this case, the equalizer works in the so-called decision-directed mode. The decision device depends on the signal constellation—for example, if ![]() , the decision device returns

, the decision device returns ![]() for

for ![]() , and

, and ![]() for

for ![]() .

.

1.12.1.3.5 A common formulation

In all the four groups of applications, the inputs of the adaptive filter are given by the signals ![]() and

and ![]() and the output by

and the output by ![]() . Note that the input

. Note that the input ![]() appears effectively in the signal

appears effectively in the signal ![]() , which is computed and fed back at each time instant

, which is computed and fed back at each time instant ![]() , as shown in Figure 12.18. In the literature, the signal

, as shown in Figure 12.18. In the literature, the signal ![]() is referred to as desired signal,

is referred to as desired signal, ![]() as reference signal and

as reference signal and ![]() as error signal. These names unfortunately are somewhat misleading, since they give the impression that our goal is to recover exactly

as error signal. These names unfortunately are somewhat misleading, since they give the impression that our goal is to recover exactly ![]() by filtering (possibly in a nonlinear way) the reference

by filtering (possibly in a nonlinear way) the reference ![]() . In almost all applications, this is far from the truth, as previously discussed. In fact, except in the case of channel equalization, exactly zeroing the error would result in very poor performance.

. In almost all applications, this is far from the truth, as previously discussed. In fact, except in the case of channel equalization, exactly zeroing the error would result in very poor performance.

The only application in which ![]() is indeed a “desired signal” is channel equalization, in which

is indeed a “desired signal” is channel equalization, in which ![]() . Despite this particularity in channel equalization, the common feature in all adaptive filtering applications is that the filter must learn a relation between the reference

. Despite this particularity in channel equalization, the common feature in all adaptive filtering applications is that the filter must learn a relation between the reference ![]() and the desired signal

and the desired signal ![]() (we will use this name to be consistent with the literature.) In the process of building this relation, the adaptive filter is able to perform useful tasks, such as separating two mixed signals; or recovering a distorted signal. Section 1.12.2 explains this idea in more detail.

(we will use this name to be consistent with the literature.) In the process of building this relation, the adaptive filter is able to perform useful tasks, such as separating two mixed signals; or recovering a distorted signal. Section 1.12.2 explains this idea in more detail.

1.12.2 Optimum filtering

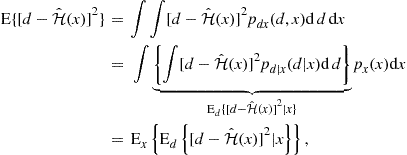

We now need to extend the ideas of Section 1.12.1.2, that applied only to periodic (deterministic) signals, to more general classes of signals. For this, we will use tools from the theory of stochastic processes. The main ideas are very similar: as before, we need to choose a structure for our filter and a means of measuring how far or how near we are to the solution. This is done by choosing a convenient cost function, whose minimum we must search iteratively, based only on measurable signals.

In the case of periodic signals, we saw that minimizing the error power (12.3), repeated below,

would be equivalent to zeroing the echo. However, we were not able to compute the error power exactly—we used an approximation through a time-average, as in (12.5), repeated here:

where ![]() is a convenient window length (in Section 1.12.1.2, we saw that choosing a large window length is approximately equivalent to choosing

is a convenient window length (in Section 1.12.1.2, we saw that choosing a large window length is approximately equivalent to choosing ![]() and a small step-size). Since we used an approximated estimate of the cost, our solution was also an approximation: the estimated coefficients did not converge exactly to their optimum values, but instead hovered around the optimum (Figures 12.9 and 12.10).

and a small step-size). Since we used an approximated estimate of the cost, our solution was also an approximation: the estimated coefficients did not converge exactly to their optimum values, but instead hovered around the optimum (Figures 12.9 and 12.10).

The minimization of the time-average ![]() also works in the more general case in which the echo is modeled as a random signal—but what is the corresponding exact cost function? The answer is given by the property of ergodicity that some random signals possess. If a random signal

also works in the more general case in which the echo is modeled as a random signal—but what is the corresponding exact cost function? The answer is given by the property of ergodicity that some random signals possess. If a random signal ![]() is such that its mean

is such that its mean ![]() does not depend on

does not depend on ![]() and its autocorrelation

and its autocorrelation ![]() depends only on

depends only on ![]() , it is called wide-sense stationary (WSS) [16]. If

, it is called wide-sense stationary (WSS) [16]. If ![]() is also (mean-square) ergodic, then

is also (mean-square) ergodic, then

(12.39)

(12.39)

Note that ![]() is an ensemble average, that is, the expected value is computed over all possible realizations of the random signal. Relation (12.39) means that for an ergodic signal, the time average of a single realization of the process is equal to the ensemble-average of all possible realizations of the process. This ensemble average is the exact cost function that we would like to minimize.

is an ensemble average, that is, the expected value is computed over all possible realizations of the random signal. Relation (12.39) means that for an ergodic signal, the time average of a single realization of the process is equal to the ensemble-average of all possible realizations of the process. This ensemble average is the exact cost function that we would like to minimize.

In the case of adaptive filters, (12.39) holds approximately for ![]() for a finite value of

for a finite value of ![]() if the environment does not change too fast, and if the filter adapts slowly. Therefore, for random variables we will still use the average error power as a measure of how well the adaptive filter is doing. The difference is that in the case of periodic signals, we could understand the effect of minimizing the average error power in terms of the amplitudes of each harmonic in the signal, but now the interpretation will be in terms of ensemble averages (variances).

if the environment does not change too fast, and if the filter adapts slowly. Therefore, for random variables we will still use the average error power as a measure of how well the adaptive filter is doing. The difference is that in the case of periodic signals, we could understand the effect of minimizing the average error power in terms of the amplitudes of each harmonic in the signal, but now the interpretation will be in terms of ensemble averages (variances).

Although the error power is not the only possible choice for cost function, it is useful to study this choice in detail. Quadratic cost functions such as the error power have a number of properties that make them popular. For example, they are differentiable, and so it is relatively easy to find a closed-form solution for the optimum, and in the important case where all signals are Gaussian, the optimum filters are linear. Of course, quadratic cost functions are not the best option in all situations: the use of other cost functions, or even of adaptive filters not based on the minimization of a cost function, is becoming more common. We will talk about some of the most important alternatives in Sections 1.12.5.2 and 1.12.5.4.

Given the importance of quadratic cost functions, in this section we study them in detail. Our focus is on what could and what could not be done if the filter were able to measure perfectly ![]() at each instant. This discussion has two main goals: the first is to learn what is feasible, so we do not expect more from a filter than it can deliver. The second is to enable us to make more knowledgeable choices when designing a filter—for example, which filter order should be used, and for system identification, which input signal would result in better performance. In Section 1.12.4.4.3 we will study the performance of adaptive filters taking into consideration their imperfect knowledge of the environment.

at each instant. This discussion has two main goals: the first is to learn what is feasible, so we do not expect more from a filter than it can deliver. The second is to enable us to make more knowledgeable choices when designing a filter—for example, which filter order should be used, and for system identification, which input signal would result in better performance. In Section 1.12.4.4.3 we will study the performance of adaptive filters taking into consideration their imperfect knowledge of the environment.

1.12.2.1 Linear least-mean squares estimation

In the examples we gave in previous sections, a generic adaptive filtering problem resumed to this: we are given two sequences, ![]() and

and ![]() , as depicted in Figure 12.18. It is known, from physical arguments, that

, as depicted in Figure 12.18. It is known, from physical arguments, that ![]() has two parts, one that is in some sense related to

has two parts, one that is in some sense related to ![]() , and another that is not, and that both parts are combined additively, that is,

, and another that is not, and that both parts are combined additively, that is,

![]() (12.40)

(12.40)

where ![]() is an unknown relation, and

is an unknown relation, and ![]() is the part “unrelated” to

is the part “unrelated” to ![]() . In our examples so far,

. In our examples so far, ![]() was linear, but we do not need to restrict ourselves to this case. Our objective is to extract

was linear, but we do not need to restrict ourselves to this case. Our objective is to extract ![]() and

and ![]() from

from ![]() , based only on observations of

, based only on observations of ![]() and

and ![]() . We saw in Section 1.12.1.2 that the average power of the difference

. We saw in Section 1.12.1.2 that the average power of the difference ![]() between

between ![]() and our current approximation

and our current approximation ![]() could be used as a measure of how close we are to the solution. In general,

could be used as a measure of how close we are to the solution. In general, ![]() is time-variant, i.e., depends directly on

is time-variant, i.e., depends directly on ![]() . We will not write this dependence explicitly to simplify the notation.

. We will not write this dependence explicitly to simplify the notation.

In this section we ask in some sense the inverse problem, that is: given two sequences ![]() and

and ![]() , what sort of relation will be found between them if we use

, what sort of relation will be found between them if we use ![]() as a standard? The difference is that we now do not assume a model such as (12.40); instead, we want to know what kind of model results from the exact minimization of

as a standard? The difference is that we now do not assume a model such as (12.40); instead, we want to know what kind of model results from the exact minimization of ![]() , where

, where ![]() is defined as the difference between

is defined as the difference between ![]() and a function of

and a function of ![]()

We can answer this question in an entirely general way, without specifying beforehand the form of ![]() . This is done in Box 4. However, if we have information about the physics of a problem and know that the relation

. This is done in Box 4. However, if we have information about the physics of a problem and know that the relation ![]() is of a certain kind, we can restrict the search for the solution

is of a certain kind, we can restrict the search for the solution ![]() to this class. This usually reduces the complexity of the filter and increases its convergence rate (because less data is necessary to estimate a model with less unknowns, as we will see in Section 1.12.3). In this section we focus on this second option.

to this class. This usually reduces the complexity of the filter and increases its convergence rate (because less data is necessary to estimate a model with less unknowns, as we will see in Section 1.12.3). In this section we focus on this second option.

The first task is to describe the class ![]() of allowed functions

of allowed functions ![]() . This may be done by choosing a relation that depends on a few parameters, such as (recall that the adaptive filter output is

. This may be done by choosing a relation that depends on a few parameters, such as (recall that the adaptive filter output is ![]() )

)

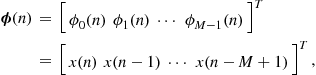

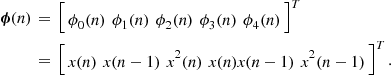

![]() (12.41)

(12.41)

![]() (12.42)

(12.42)

(12.43)

(12.43)

![]() (12.44)

(12.44)

In each of these cases, the relation between the input sequence ![]() and

and ![]() is constrained to a certain class, for example, linear length-

is constrained to a certain class, for example, linear length-![]() FIR filters in (12.41), first-order IIR filters in (12.42), and second-order Volterra filters in (12.43). Each class is described by a certain number of parameters: the filter coefficients

FIR filters in (12.41), first-order IIR filters in (12.42), and second-order Volterra filters in (12.43). Each class is described by a certain number of parameters: the filter coefficients ![]() in the case of FIR filters,

in the case of FIR filters, ![]() and

and ![]() in the case of IIR filters, and so on. The task of the adaptive filter will then be to choose the values of the parameters that best fit the data.

in the case of IIR filters, and so on. The task of the adaptive filter will then be to choose the values of the parameters that best fit the data.

For several practical reasons, it is convenient if we make the filter output depend linearly on the parameters, as happens in (12.41) and in (12.43). It is important to distinguish linear in the parameters from input-output linear: (12.43) is linear in the parameters, but the relation between the input sequence ![]() and the output

and the output ![]() is nonlinear. What may come as a surprise is that the IIR filter of Eq. (12.42) is not linear in the parameters: in fact,

is nonlinear. What may come as a surprise is that the IIR filter of Eq. (12.42) is not linear in the parameters: in fact, ![]() — you can see that

— you can see that ![]() depends nonlinearly on

depends nonlinearly on ![]() and

and ![]() .

.

Linearly parametrized classes ![]() , such as

, such as ![]() (12.41) and

(12.41) and ![]() (12.43) are popular because in general it is easier to find the optimum parameters, both theoretically and in real time. In fact, when the filter output depends linearly on the parameters and the cost function is a convex function of the error, it can be shown that the optimal solution is unique (see Box 5). This is a very desirable property, since it simplifies the search for the optimal solution.

(12.43) are popular because in general it is easier to find the optimum parameters, both theoretically and in real time. In fact, when the filter output depends linearly on the parameters and the cost function is a convex function of the error, it can be shown that the optimal solution is unique (see Box 5). This is a very desirable property, since it simplifies the search for the optimal solution.

In the remainder of this section we will concentrate on classes of relations that are linear in the parameters. As ![]() shows, this does not imply that we are restricting ourselves to linear models. There are, however, adaptive filtering algorithms that use classes of relations that are not linear in the parameters, such as IIR adaptive filters. On the other hand, blind equalization algorithms are based on non-convex cost functions.

shows, this does not imply that we are restricting ourselves to linear models. There are, however, adaptive filtering algorithms that use classes of relations that are not linear in the parameters, such as IIR adaptive filters. On the other hand, blind equalization algorithms are based on non-convex cost functions.

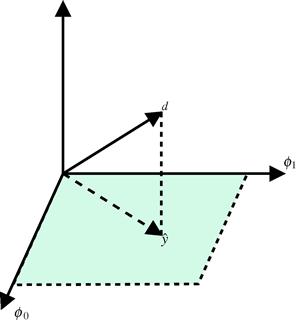

Assume then that we have chosen a convenient class of relations ![]() that depends linearly on its parameters. That is, we want to solve the problem

that depends linearly on its parameters. That is, we want to solve the problem

![]() (12.45)

(12.45)

We want to know which properties the solution to this problem will have when ![]() depends linearly on a finite number of parameters. In this case,

depends linearly on a finite number of parameters. In this case, ![]() is a linear combinations of certain functions

is a linear combinations of certain functions ![]() of

of ![]()

![]() (12.46)

(12.46)

where in general ![]() ,

, ![]() . The vector

. The vector ![]() is known as regressor. In the case of length-

is known as regressor. In the case of length-![]() FIR filters, we would have

FIR filters, we would have

![]()

whereas in the case of second-order Volterra filters with memory 1, we would have

![]()

Our problem can then be written in general terms as: find ![]() such that

such that

![]() (12.47)

(12.47)

We omitted the dependence of the variables on ![]() to lighten the notation. Note that, in general,

to lighten the notation. Note that, in general, ![]() will also depend on

will also depend on ![]() .

.

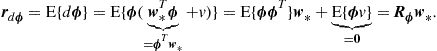

To solve this problem, we use the facts that ![]() to expand the expected value

to expand the expected value

![]()

Recall that the weight vector ![]() is not random, so we can take it out of the expectations.

is not random, so we can take it out of the expectations.

Define the autocorrelation of ![]() , and also the cross-correlation vector and autocorrelation matrix

, and also the cross-correlation vector and autocorrelation matrix

![]() (12.48)

(12.48)

The cost function then becomes

![]() (12.49)

(12.49)

Differentiating ![]() with respect to

with respect to ![]() , we obtain

, we obtain

![]()

and equating the result to zero, we see that the optimal solution must satisfy

![]() (12.50)

(12.50)

These are known as the normal, or Wiener-Hopf, equations. The solution, ![]() , is known as the Wiener solution. A note of caution: the Wiener solution is not the same thing as the Wiener filter. The Wiener filter is the linear filter that minimizes the mean-square error, without restriction of filter order [17]. The difference is that the Wiener solution has the filter order pre-specified (and is not restricted to linear filters, as we saw).

, is known as the Wiener solution. A note of caution: the Wiener solution is not the same thing as the Wiener filter. The Wiener filter is the linear filter that minimizes the mean-square error, without restriction of filter order [17]. The difference is that the Wiener solution has the filter order pre-specified (and is not restricted to linear filters, as we saw).

When the autocorrelation matrix is non-singular (which is usually the case), the Wiener solution is

![]() (12.51)

(12.51)

Given ![]() , the optimum error will be

, the optimum error will be

![]() (12.52)

(12.52)

Note that the expected value of ![]() is not necessarily zero:

is not necessarily zero:

![]() (12.53)

(12.53)

If, for example, ![]() and

and ![]() , then

, then ![]() . In practice, it is good to keep

. In practice, it is good to keep ![]() , because we usually know that

, because we usually know that ![]() should approximate a zero-mean signal, such as speech or noise. We will show shortly, in Section 1.12.2.1.4, how to guarantee that

should approximate a zero-mean signal, such as speech or noise. We will show shortly, in Section 1.12.2.1.4, how to guarantee that ![]() has zero mean.

has zero mean.

1.12.2.1.1 Orthogonality condition

A key property of the Wiener solution is that the optimum error is orthogonal to the regressor ![]() , that is,

, that is,

(12.54)

(12.54)

We saw a similar condition in Section 1.12.1.2. It is very useful to remember this result, known as the orthogonality condition: from it, we will find when to apply the cost function (12.47) to design an adaptive filter. Note that when ![]() has zero mean, (12.54) also implies that

has zero mean, (12.54) also implies that ![]() and

and ![]() are uncorrelated.

are uncorrelated.

Now that we have the general solution to (12.47), we turn to the question: for which class of problems is the quadratic cost function adequate?

1.12.2.1.2 Implicit vs. physical models

From (12.54), we see that the fact that we are minimizing the mean-square error between ![]() and a linear combination of the regressor

and a linear combination of the regressor ![]() induces a model

induces a model

![]() (12.56)

(12.56)

in which ![]() is orthogonal to

is orthogonal to ![]() . This is not an assumption, as we sometimes see in the literature, but a consequence of the quadratic cost function we chose. In other words, there is always a relation such as (12.56) between any pair

. This is not an assumption, as we sometimes see in the literature, but a consequence of the quadratic cost function we chose. In other words, there is always a relation such as (12.56) between any pair ![]() , as long as both have finite second-order moments. This relation may make sense from a physical analysis of the problem (as we saw in the echo cancellation example), or be simply a consequence of solving (12.47) (see Section 1.12.2.1.3 for examples).

, as long as both have finite second-order moments. This relation may make sense from a physical analysis of the problem (as we saw in the echo cancellation example), or be simply a consequence of solving (12.47) (see Section 1.12.2.1.3 for examples).

On the other hand, the orthogonality condition allows us to find out when the solution of (12.47) will be able to successfully solve a problem: assume now that we know beforehand, by physical arguments about the problem, that ![]() and

and ![]() must be related through

must be related through

![]() (12.57)

(12.57)

where ![]() is a certain coefficient vector and

is a certain coefficient vector and ![]() is orthogonal to

is orthogonal to ![]() . Will the solution to (12.47) be such that

. Will the solution to (12.47) be such that ![]() and

and ![]() ?

?

To check if this is the case, we only need to evaluate the cross-correlation vector ![]() assuming the model (12.57):

assuming the model (12.57):

(12.58)

(12.58)

Therefore, ![]() also obeys the normal equations, and we can conclude that

also obeys the normal equations, and we can conclude that ![]() , as long as

, as long as ![]() is nonsingular.

is nonsingular.

Even if ![]() is singular, the optimal error

is singular, the optimal error ![]() will always be equal to

will always be equal to ![]() (in a certain sense). In fact, if

(in a certain sense). In fact, if ![]() is singular, then there exists a vector

is singular, then there exists a vector ![]() such that

such that ![]() (see Fact 2 in Box 3), and thus any solution

(see Fact 2 in Box 3), and thus any solution ![]() of the normal equations (we know from (12.58) that there is at least one,

of the normal equations (we know from (12.58) that there is at least one, ![]() ) will have the form

) will have the form

![]() (12.59)

(12.59)

where ![]() is the null-space of

is the null-space of ![]() . In addition,

. In addition,

![]()

Therefore, ![]() with probability one. Then,

with probability one. Then,

![]()

with probability one. Therefore, although we cannot say that ![]() always, we can say that they are equal with probability one. In other words, when

always, we can say that they are equal with probability one. In other words, when ![]() is singular we may not be able to identify

is singular we may not be able to identify ![]() , but (with probability one) we are still able to separate

, but (with probability one) we are still able to separate ![]() into its two components. More about this in Section 1.12.2.1.5.

into its two components. More about this in Section 1.12.2.1.5.

1.12.2.1.3 Undermodeling

We just saw that the solution to the quadratic cost function (12.47) is indeed able to separate the two components of ![]() when the regressor that appears in physical model (12.57) is the same

when the regressor that appears in physical model (12.57) is the same ![]() that we used to describe our class

that we used to describe our class ![]() , that is, when our choice of

, that is, when our choice of ![]() includes the general solution from Box 4. Let us check now what happens when this is not the case.

includes the general solution from Box 4. Let us check now what happens when this is not the case.

Assume there is a relation

![]() (12.60)

(12.60)

in which ![]() is orthogonal to

is orthogonal to ![]() , but our class

, but our class ![]() is defined through a regressor

is defined through a regressor ![]() that is a subset of the “correct” one,

that is a subset of the “correct” one, ![]() . This situation is called undermodeling: our class is not rich enough to describe the true relation between

. This situation is called undermodeling: our class is not rich enough to describe the true relation between ![]() and

and ![]() .

.

Assume then that