Learning Theory

Ambuj Tewari* and Peter L. Bartlett†, *439 West Hall 1085 South University Ann Arbor, MI, USA, †387 Soda Hall #1776 Berkeley, CA, USA, [email protected], [email protected]

Abstract

We present an overview of learning theory including its statistical and computational aspects. We start by giving a probabilistic formulation of learning problems including classification and regression where the learner knows nothing about the probability distribution generating the data. We then consider the principle of empirical risk minimization (ERM) that chooses a function from a given class based on its performance on the observed data. Learning guarantees for ERM are shown to be intimately connected with the uniform law of large numbers. A uniform law of large numbers ensures that empirical means converge to true expectations uniformly over a function class. Tools such as Rademacher complexity, covering numbers, and combinatorial dimensions known as the Vapnik-Chervonenkis (VC) and fat shattering dimensions are introduced. After considering the case of learning using a fixed function class, we turn to the problem of model selection: how to choose a function class from a family based on available data? We also survey alternative techniques for studying generalization ability of learning algorithms including sample compression, algorithmic stability, and the PAC-Bayesian theorem. After dealing with statistical issues, we study computational models of learning such the basic and agnostic PAC learning models, the statistical query, and the mistake bound model. Finally, we point out extensions of the basic theory beyond the probabilistic setting of a learner passively learning a single task from independent and identically distributed samples.

1.14.1 Introduction

In a section on Learning Machines in one of his most famous papers [1], Turing wrote:

Instead of trying to produce a programme to simulate the adult mind, why not rather try to produce one which simulates the child’s? If this were then subjected to an appropriate course of education one would obtain the adult brain. Presumably the child brain is something like a notebook as one buys it from the stationer’s. Rather little mechanism, and lots of blank sheets. (Mechanism and writing are from our point of view almost synonymous.) Our hope is that there is so little mechanism in the child brain that something like it can be easily programmed. The amount of work in the education we can assume, as a first approximation, to be much the same as for the human child.

The year was 1950. Earlier, in 1943, McCulloch and Pitts [2] had already hit upon the idea that mind-like machines could be built by studying how biological nerve cells behave. This directly lead to neural networks. By the late 1950s, Samuels [3] had programmed a machine to play checkers and Rosenblatt [4] had proposed one of earliest models of a learning machine, the perceptron. The importance of the perceptron in the history of learning theory cannot be overemphasized. Vapnik says that the appearance of the perceptron was “when the mathematical analysis of learning processes truly began.” Novikoff’s perceptron theorem (we will prove it in Section 1.14.6.5.2) was also proved in 1962 [5].

Vapnik [6] points out the interesting fact that the 1960s saw four major developments that were to have lasting influence on learning theory. First, Tikhonov and other developed regularization theory for the solution of ill posed inverse problems. Regularization theory has had and continues to have tremendous impact on learning theory. Second, Rosenblatt, Parzen, and Chentsov pioneered nonparametric methods for density estimation. These beginnings were crucial for a distribution free theory of non-parametric classification and regression to emerge later. Third, Vapnik and Chervonenkis proved the uniform law of large numbers for indicators of sets. Fourth, Solomonoff, Kolmogorov, and Chaitin all independently discovered algorithmic complexity: the idea that the complexity of a string of 0’s and 1’s can be defined by the length of the shortest program that can generate that string. This later led Rissanen to propose his Minimum Description Length (MDL) principle.

In 1986, a major technique to learn weights in neural networks was discovered: backpropagation [7]. Around the same time, Valiant [8] published his paper introducing a computational model of learning that was to be called the PAC (probably approximately correct) model later. The 1990s saw the appearance of support vector machines [9] and AdaBoost [10]. This brings us to the brink of the second millenium which is where we end our short history tour.

As we can see, learning theory has absorbed influences from a variety of sources: cybernetics, neuroscience, nonparametric statistics, regularization theory of inverse problems, and the theory of computation and algorithms. Ongoing research is not only trying to overcome known limitations of classic models but is also grappling with new issues such as dealing with privacy and web-scale data.

Learning theory is a formal mathematical theory. Assumptions are made, beautiful lemmas are proved, and deep theorems are discovered. But developments in learning theory also lead to practical algorithms. SVMs and AdaBoost are prime examples of high impact learning algorithms evolving out of a principled and well-founded approach to learning. We remain hopeful that learning theory will help us discover even more exciting learning algorithms in the future.

Finally, a word about the mathematical background needed to read this overview of learning theory. Previous exposure to mathematical reasoning and proofs is a must. So is familiarity with probability theory. But measure theory is not needed as we will avoid all measure-theoretic details. It will also be helpful, but not essential, to be aware of the basics of computational complexity such as the notion of polynomial time computation and NP-completeness. Similarly, knowing a little bit of functional analysis will help.

1.14.2 Probabilistic formulation of learning problems

A basic problem in learning theory is that of learning a functional dependence ![]() from some input space

from some input space![]() to some output or label space

to some output or label space ![]() . Some special choices for the output space deserve particular attention since they arise often in applications. In multiclass classification, we have

. Some special choices for the output space deserve particular attention since they arise often in applications. In multiclass classification, we have ![]() and the goal is to classify any input

and the goal is to classify any input ![]() into one of K given classes. A special case of this problem is when

into one of K given classes. A special case of this problem is when ![]() . Then, the problem is called binary classification and it is mathematically convenient to think of the output space as

. Then, the problem is called binary classification and it is mathematically convenient to think of the output space as ![]() rather than

rather than ![]() . In regression, the output space

. In regression, the output space ![]() is some subset of the set

is some subset of the set ![]() of real numbers. Usually

of real numbers. Usually ![]() is assumed to be a bounded interval, say

is assumed to be a bounded interval, say ![]() .

.

We assume that there is an underlying distribution P over ![]() and that the learner has access to n samples

and that the learner has access to n samples

![]()

where the ![]() are independent and identically distributed (or iid) random variables with the same distribution P. Note that P itself is assumed to be unknown to the learner. A learning rule or learning algorithm

are independent and identically distributed (or iid) random variables with the same distribution P. Note that P itself is assumed to be unknown to the learner. A learning rule or learning algorithm![]() is simply a mapping

is simply a mapping

![]()

That is, a learning rule maps the n samples to a function from ![]() to

to ![]() . When the sample is clear from context, we will denote the function returned by a learning rule itself by

. When the sample is clear from context, we will denote the function returned by a learning rule itself by ![]() .

.

1.14.2.1 Loss function and risk

In order to measure the predictive performance of a function ![]() , we use a loss function. A loss function

, we use a loss function. A loss function ![]() is a non-negative function that quantifies how bad the prediction

is a non-negative function that quantifies how bad the prediction ![]() is if the true label is y. We say that

is if the true label is y. We say that ![]() is the loss incurred by f on the pair

is the loss incurred by f on the pair ![]() . In the classification case, binary or otherwise, a natural loss function is the 0–1 loss:

. In the classification case, binary or otherwise, a natural loss function is the 0–1 loss:

![]()

The 0–1 loss function, as the name suggests, is 1 if a misclassification happens and is 0 otherwise. For regression problems, some natural choices for the loss function are the squared loss:

![]()

or the absolute loss:

![]()

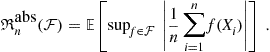

Given a loss function, we can define the risk of a function ![]() as its expected loss under the true underlying distribution:

as its expected loss under the true underlying distribution:

![]()

Note that the risk of any function f is not directly accessible to the learner who only sees the samples. But the samples can be used to calculate the empirical risk of f:

![]()

Minimizing the empirical risk over a fixed class ![]() of functions leads to a very important learning rule, namely empirical risk minimization (ERM):

of functions leads to a very important learning rule, namely empirical risk minimization (ERM):

![]()

Under appropriate conditions on ![]() ,

, ![]() , and

, and ![]() , an empirical risk minimizer is guaranteed to exist though it will not be unique in general. If we knew the distribution P then the best function from

, an empirical risk minimizer is guaranteed to exist though it will not be unique in general. If we knew the distribution P then the best function from ![]() would be

would be

![]()

as ![]() minimizes the expected loss on a random

minimizes the expected loss on a random ![]() pair drawn from P. Without restricting ourselves to the class

pair drawn from P. Without restricting ourselves to the class ![]() , the best possible function to use is

, the best possible function to use is

![]()

where the infimum is with respect to all3 functions.

For classification with 0–1 loss, ![]() is called the Bayes classifier and is given simply by

is called the Bayes classifier and is given simply by

![]()

Note that ![]() depends on the underlying distribution P. If we knew P, we could achieve minimum misclassification probability by always predicting, for a given x, the label y with the highest conditional probability (ties can be broken arbitrarily). The following result bounds the excess risk

depends on the underlying distribution P. If we knew P, we could achieve minimum misclassification probability by always predicting, for a given x, the label y with the highest conditional probability (ties can be broken arbitrarily). The following result bounds the excess risk![]() of any function f in the binary classification case.

of any function f in the binary classification case.

For the regression case with squared loss, ![]() is called the regression function and is given simply by the conditional expectation of the label given x:

is called the regression function and is given simply by the conditional expectation of the label given x:

![]()

In this case, the excess risk takes a particularly nice form.

1.14.2.2 Universal consistency

A particularly nice property for a learning rule ![]() to have is that its (expected) excess risk converges to zero as the sample size n goes to infinity. That is,

to have is that its (expected) excess risk converges to zero as the sample size n goes to infinity. That is,

![]()

Note ![]() is a random variable since

is a random variable since ![]() depends on a randomly drawn sample. A rule

depends on a randomly drawn sample. A rule ![]() is said to be universally consistent if the above convergence holds irrespective of the true underlying distribution P. This notion of consistency is also sometimes called weak universal consistency in order to distinguish it from strong universal consistency that demands

is said to be universally consistent if the above convergence holds irrespective of the true underlying distribution P. This notion of consistency is also sometimes called weak universal consistency in order to distinguish it from strong universal consistency that demands

![]()

with probability 1.

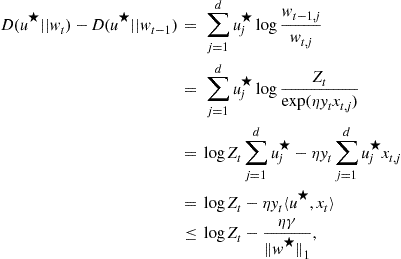

The existence of universally consistent learning rules for classification and regression is a highly non-trivial fact which has been known since Stone’s work in the 1970s [11]. Proving universal consistency typically requires addressing the estimation error versus approximation error trade-off. For instance, often the function ![]() is guaranteed to lie in a function class

is guaranteed to lie in a function class ![]() that does not depend on the sample. The classes

that does not depend on the sample. The classes ![]() typically grow with n. Then we can decompose the excess risk of

typically grow with n. Then we can decompose the excess risk of ![]() as

as

This decomposition is useful as the estimation error is the only random part. The approximation error depends on how rich the function class ![]() is but does not depend on the sample. The reason we have to trade these two errors off is that the richer the function class

is but does not depend on the sample. The reason we have to trade these two errors off is that the richer the function class ![]() the smaller the approximation error will be. However, that leads to a large estimation error. This trade-off is also known as the bias-variance trade-off. The bias-variance terminology comes from the regression with squared error case but tends to be used in the general loss setting as well.

the smaller the approximation error will be. However, that leads to a large estimation error. This trade-off is also known as the bias-variance trade-off. The bias-variance terminology comes from the regression with squared error case but tends to be used in the general loss setting as well.

1.14.2.3 Learnability with respect to a fixed class

Unlike universal consistency, which requires convergence to the minimum possible risk over all functions, learnability with respect to a fixed function class ![]() only demands that

only demands that

![]()

We can additionally require quantitative bounds on the number of samples needed to reach, with high probability, a given upper bound on excess risk relative to ![]() . The sample complexity of a learning rule

. The sample complexity of a learning rule ![]() is the minimum number

is the minimum number ![]() such that for all

such that for all ![]() , we have

, we have

![]()

with probability at least ![]() . We now see how we can arrive at sample complexity bounds for ERM via uniform convergence of empirical means to true expectations.

. We now see how we can arrive at sample complexity bounds for ERM via uniform convergence of empirical means to true expectations.

We mention some examples of functions classes that arise often in practice.

Linear Functions: This is one of the simplest functions classes. In any finite dimensional Euclidean space ![]() , define the class of real valued functions

, define the class of real valued functions

![]()

where ![]() denotes the standard inner product. The weight vector might be additionally constrained. For instance, we may require

denotes the standard inner product. The weight vector might be additionally constrained. For instance, we may require ![]() for some norm

for some norm ![]() . The set

. The set ![]() takes care of such constraints.

takes care of such constraints.

Linear Threshold Functions or Halfspaces: This is class of binary valued function obtained by thresholding linear functions at zero. This gives us the class

![]()

Reproducing Kernel Hilbert Spaces: Linear functions can be quite restricted in their approximation ability. However, we can get a significant improvement by first mapping the inputs ![]() into a feature space through a feature mapping

into a feature space through a feature mapping![]() where

where ![]() is a very high dimensional Euclidean space (or an infinite dimensional Hilbert space) and then considering linear functions in the feature space:

is a very high dimensional Euclidean space (or an infinite dimensional Hilbert space) and then considering linear functions in the feature space:

![]()

where the inner product now carries the subscript ![]() to emphasize the space where it operates. Many algorithms access the inputs only through pairwise inner products

to emphasize the space where it operates. Many algorithms access the inputs only through pairwise inner products

![]()

In such cases, we do not care what the feature mapping is or how complicated it is to evaluate, provided we can compute these inner products efficiently.

This idea of using only inner products leads us to reproducing kernel Hilbert space. We say that a function ![]() is symmetric positive semidefinite (psd) if it satisfies the following two properties.

is symmetric positive semidefinite (psd) if it satisfies the following two properties.

Positive Semidefiniteness: For any ![]() , the symmetric matrix

, the symmetric matrix

![]()

is psd.4 The matrix K is often called the Gram matrix.

Note that, given a feature mapping ![]() , it is trivial to verify that

, it is trivial to verify that

![]()

is a symmetric psd function. However, the much less trivial converse is also true. Every symmetric psd function arises out of a feature mapping. This is a consequence of the Moore-Aronszajn theorem. To state the theorem, we first need a definition. A reproducing kernel Hilbert space or RKHS![]() is a Hilbert space of functions

is a Hilbert space of functions ![]() such that there exists a kernel

such that there exists a kernel ![]() satisfying the properties:

satisfying the properties:

This theorem gives us the feature mapping easily. Starting from a symmetric psd function K, we use the Moore-Aronszajn theorem to get an RKHS ![]() whose reproducing kernel is K. Now, by the reproducing property, for any

whose reproducing kernel is K. Now, by the reproducing property, for any ![]() ,

,

![]()

which means that ![]() given by

given by ![]() is a feature mapping we were looking for. Given this equivalence between symmetric psd functions and RKHSes, we will use the word kernel for a symmetric psd function itself.

is a feature mapping we were looking for. Given this equivalence between symmetric psd functions and RKHSes, we will use the word kernel for a symmetric psd function itself.

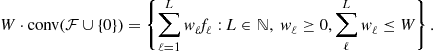

Convex Hulls: This example is not about a function class but rather about a means of obtaining a new function class from an old one. Say, we have class ![]() of binary valued functions

of binary valued functions ![]() . We can consider functions that take a majority vote over a subset of functions in

. We can consider functions that take a majority vote over a subset of functions in ![]() . That is,

. That is,

More generally, we can assign weights ![]() to

to ![]() and consider a weighted majority

and consider a weighted majority

Constraining the weights to sum to no more than W gives us the scaled convex hull

The class above can, of course, be thresholded to produce binary valued functions.

1.14.2.4 ERM and uniform convergence

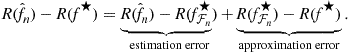

The excess risk of ERM relative to ![]() can be decomposed as

can be decomposed as

![]()

By the definition of ERM, the difference ![]() is non-positive. The difference

is non-positive. The difference ![]() is also easy to deal with since it deals with a fixed (i.e. non-random) function

is also easy to deal with since it deals with a fixed (i.e. non-random) function ![]() . Using Hoeffding’s inequality (see Section 1.14.3.1), we have, with probability at least

. Using Hoeffding’s inequality (see Section 1.14.3.1), we have, with probability at least ![]() ,

,

![]()

This simply reflects the fact that as the sample size n grows, we expect the empirical average of ![]() to converge to its true expectation.

to converge to its true expectation.

The matter with the difference ![]() is not so simple since

is not so simple since ![]() is a random function. But we do know

is a random function. But we do know ![]() lies in

lies in ![]() . So we can clearly bound

. So we can clearly bound

![]() (14.1)

(14.1)

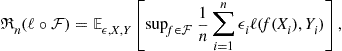

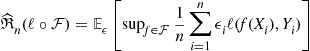

This provides a generalization bound (or, more properly, a generalization error bound) for ERM. A generalization bound is an upper bound on the true expectation ![]() of a learning rule in terms of its empirical performance

of a learning rule in terms of its empirical performance ![]() (or some other performance measure computed from data). A generalization bound typically involves additional terms that measure the statistical complexity of the class of functions that the learning rule uses. Note the important fact that the bound above is valid not just for ERM but for any learning rule that outputs a function

(or some other performance measure computed from data). A generalization bound typically involves additional terms that measure the statistical complexity of the class of functions that the learning rule uses. Note the important fact that the bound above is valid not just for ERM but for any learning rule that outputs a function ![]() .

.

The measure of complexity in the bound (14.1) above is the maximum deviation between true expectation and empirical average over functions in ![]() . For each fixed

. For each fixed![]() , we clearly have

, we clearly have

![]()

both in probability (weak law of large numbers) and almost surely (strong law of large numbers). But in order for

![]()

the law of large numbers has to hold uniformly over the function class ![]() .

.

To summarize, we have shown in this section that, with probability at least ![]() ,

,

![]() (14.2)

(14.2)

Thus, we can bound the excess risk of ERM if we can prove uniform convergence of empirical means to true expectations for the function class ![]() . This excess risk bound, unlike the generalization error bound (14.1), is applicable only to ERM.

. This excess risk bound, unlike the generalization error bound (14.1), is applicable only to ERM.

1.14.2.5 Bibliographic note

There are several book length treatments of learning theory that emphasize different aspects of the subject. The reader may wish to consult Anthony and Biggs [12], Anthony and Bartlett [13], Devroye et al. [14], Vapnik [15], Vapnik [6], Vapnik [16], Vidyasagar [17], Natarajan [18], Cucker and Zhou [19], Kearns and Vazirani [20], Cesa-Bianchi and Lugosi [21], Györfi et al. [22], and Hastie et al. [23].

1.14.3 Uniform convergence of empirical means

Let us consider the simplest case of a finite function class ![]() . In this case, Hoeffding’s inequality (see Theorem 5) gives us for any

. In this case, Hoeffding’s inequality (see Theorem 5) gives us for any ![]() , with probability at least

, with probability at least ![]() ,

,

![]()

Therefore, denoting the event that the above inequality fails to hold for function f by ![]() , we have

, we have

![]()

The first inequality is called a union bound in probability theory. It is exact if and only if all the events it is applied to are pairwise disjoint, i.e., ![]() for

for ![]() . Clearly, if there are functions that are “similar” to each other, the bound above will be loose. Nevertheless, the union bound is an important starting point for the analysis of uniform convergence of empirical means and for a finite class, it gives us the following result.

. Clearly, if there are functions that are “similar” to each other, the bound above will be loose. Nevertheless, the union bound is an important starting point for the analysis of uniform convergence of empirical means and for a finite class, it gives us the following result.

It is instructive to focus on the first term on the right hand side. First, it decreases as n increases. This means that ERM gets better as it works with more data. Second, it increases as the function class ![]() grows in size. But the dependence is logarithmic and hence mild.

grows in size. But the dependence is logarithmic and hence mild.

However, the bound above is useless if ![]() is not finite. The goal of the theory we will build below (often called “VC theory” after its architects Vapnik and Chervonenkis) will be to replace

is not finite. The goal of the theory we will build below (often called “VC theory” after its architects Vapnik and Chervonenkis) will be to replace ![]() with an appropriate measure of the capacity of an infinite function class

with an appropriate measure of the capacity of an infinite function class ![]() .

.

1.14.3.1 Concentration inequalities

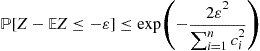

Learning theory uses concentration inequalities heavily. A typical concentration inequality will state that a certain random variable is tightly concentrated around its mean (or in some cases median). That is, the probability that they deviate far enough from the mean is small.

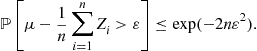

The following concentration inequality, called Hoeffding’s inequality, applies to sums of iid random variables.

A couple of remarks are in order. First, even though we have stated Hoeffding’s inequality as applying to bounded iid random variables, only the boundedness and independence are really needed. It is possible to let the ![]() ’s have different distributions. Second, the above result provides probability upper bounds for deviations below the mean

’s have different distributions. Second, the above result provides probability upper bounds for deviations below the mean ![]() but a similar result holds for deviations of

but a similar result holds for deviations of ![]() above

above ![]() as well.

as well.

In fact, Hoeffding’s inequality can be considered a special case of the following inequality that is often called McDiarmid’s inequality or the bounded differences inequality.

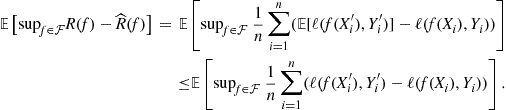

1.14.3.2 Rademacher complexity

Let us recall that we need to control the following quantity:

![]()

Since this satisfies the conditions of McDiarmid’s inequality with ![]() , we have, with probability at least

, we have, with probability at least ![]() ,

,

![]()

Now, we can appeal to a powerful idea known as symmetrization. The basic idea is to replace

![]()

with

![]()

where ![]() are samples drawn from the distribution P that are independent of each other and of the original sample. The new sample is often referred to as the ghost sample. The point of introducing the ghost sample is that, by an application of Jensen’s inequality, we get

are samples drawn from the distribution P that are independent of each other and of the original sample. The new sample is often referred to as the ghost sample. The point of introducing the ghost sample is that, by an application of Jensen’s inequality, we get

Now, the distribution of

![]()

is invariant under exchanging any ![]() with an

with an ![]() . That is, for any fixed choice of

. That is, for any fixed choice of ![]() signs

signs ![]() , the above quantity has the same distribution as

, the above quantity has the same distribution as

![]()

This allows us introduce even more randomization by making the ![]() ’s themselves random. This gives,

’s themselves random. This gives,

This directly yields a bound in terms of the Rademacher complexity:

where ![]() is a Rademacher random variable that is either -1 or +1, each with probability

is a Rademacher random variable that is either -1 or +1, each with probability ![]() . The subscript below the expectation serves as a reminder that the expectation is being taken with respect to the randomness in

. The subscript below the expectation serves as a reminder that the expectation is being taken with respect to the randomness in ![]() ’s, and

’s, and ![]() ’s. We have thus proved the following theorem.

’s. We have thus proved the following theorem.

An interesting observation is that the sample dependent quantity

also satisfies the bounded differences condition with ![]() . This is the empirical Rademacher average and the expectation in its definition is only over the Rademacher random variables. Applying McDiarmid’s inequality, we have,

. This is the empirical Rademacher average and the expectation in its definition is only over the Rademacher random variables. Applying McDiarmid’s inequality, we have,

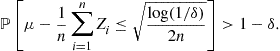

![]()

with probability at least ![]() .

.

To prove a bound on the Rademacher complexity of a finite class, the following lemma, due to [24], is useful.

The Rademacher complexity, and its empirical counterpart, satisfy a number of interesting structural properties.

For proofs of these properties, see [25]. However, note that the Rademacher complexity is defined there such that an extra absolute value is taken before the supremum over ![]() . That is,

. That is,

Nevertheless, the proofs given there generalize quite easily to definition of Rademacher complexity that we are using. For example, see [26], where the Rademacher complexity as we have defined it here (without the absolute value) is called free Rademacher complexity.

Using these properties, it is possible to directly bound the Rademacher complexity of several interesting functions classes. See [25] for examples. The contraction property is a very useful one. It allows us to transition from the Rademacher complexity of the loss class to the Rademacher complexity of the function class itself (for Lipschitz losses).

The Rademacher complexity can also be bounded in terms of another measure of complexity of a function class: namely covering numbers.

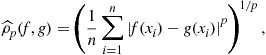

1.14.3.3 Covering numbers

The idea underlying covering numbers is very intuitive. Let ![]() be any (pseudo-) metric space and fix a subset

be any (pseudo-) metric space and fix a subset ![]() . A set

. A set ![]() is said to be an

is said to be an ![]() -cover of T if

-cover of T if

![]()

In other words “balls” of radius ![]() placed at elements of

placed at elements of ![]() “cover” the set T entirely. The covering number (at scale

“cover” the set T entirely. The covering number (at scale ![]() ) of T is defined as the size of the smallest cover of T (at scale

) of T is defined as the size of the smallest cover of T (at scale ![]() ).

).

![]()

The logarithm of covering number is called entropy.

Given a sample ![]() , define the data dependent metric on

, define the data dependent metric on ![]() as

as

where ![]() . Taking the limit

. Taking the limit ![]() suggests the metric

suggests the metric

![]()

These metrics gives us the p-norm covering numbers

![]()

These covering numbers increase with p. That is, for ![]() ,

,

![]()

Finally, we can also define the worst case (over samples) p-norm covering number

![]()

A major result connecting Rademacher complexity with covering numbers is due to [27].

1.14.3.4 Binary valued functions: VC dimension

For binary function classes ![]() , we can provide distribution free upper bounds on the Rademacher complexity or covering numbers in terms of a combinatorial parameter called the Vapnik-Chervonenkis (VC) dimension.

, we can provide distribution free upper bounds on the Rademacher complexity or covering numbers in terms of a combinatorial parameter called the Vapnik-Chervonenkis (VC) dimension.

To define it, consider a sequence ![]() . We say that

. We say that ![]() is shattered by

is shattered by ![]() if each of the

if each of the ![]() possible

possible ![]() labelings of these n points are realizable using some

labelings of these n points are realizable using some ![]() . That is,

. That is, ![]() is shattered by

is shattered by ![]() iff

iff

![]()

The VC dimension of ![]() is the length of the longest sequence that can shattered by

is the length of the longest sequence that can shattered by ![]() :

:

![]()

The size of the restriction of ![]() to

to ![]() is called the shatter coefficient:

is called the shatter coefficient:

![]()

Considering the worst sequence gives us the growth function

![]()

If ![]() , then we know that

, then we know that ![]() for all

for all ![]() . One can ask: what is the behavior of the growth function for

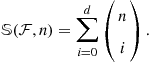

. One can ask: what is the behavior of the growth function for ![]() ? The following combinatorial lemma proved independently by Vapnik and Chervonenkis [28], Sauer [29], and Shelah [30], gives the answer to this question.

? The following combinatorial lemma proved independently by Vapnik and Chervonenkis [28], Sauer [29], and Shelah [30], gives the answer to this question.

Thus, for a class with finite VC dimension, the Rademacher complexity can be bounded directly using Massart’s lemma.

It is possible to shave off the ![]() factor in the bound above plugging an estimate of the entropy

factor in the bound above plugging an estimate of the entropy ![]() for VC classes due to [31] into Theorem 10.

for VC classes due to [31] into Theorem 10.

These results show that for a class ![]() with finite VC dimension d, the sample complexity required by ERM to achieve

with finite VC dimension d, the sample complexity required by ERM to achieve ![]() excess risk relative to

excess risk relative to ![]() is, with probability at least

is, with probability at least ![]() . Therefore, a finite VC dimension is sufficient for learnability with respect to a fixed functions class. In fact, a finite VC dimension also turns out to be necessary (see, for instance, [14, Chapter 14]). Thus, we have the following characterization.

. Therefore, a finite VC dimension is sufficient for learnability with respect to a fixed functions class. In fact, a finite VC dimension also turns out to be necessary (see, for instance, [14, Chapter 14]). Thus, we have the following characterization.

Note that, when ![]() , the dependence of the sample complexity on

, the dependence of the sample complexity on ![]() is polynomial and on

is polynomial and on ![]() is logarithmic. Moreover, such a sample complexity is achieved by ERM. On the other hand, when

is logarithmic. Moreover, such a sample complexity is achieved by ERM. On the other hand, when ![]() , then no learning algorithm, including ERM, can have bounded sample complexity for arbitrarily small

, then no learning algorithm, including ERM, can have bounded sample complexity for arbitrarily small ![]() .

.

1.14.3.5 Real valued functions: fat shattering dimension

For real valued functions, getting distribution free upper bounds on Rademacher complexity or covering numbers involves combinatorial parameters similar to the VC dimension. The appropriate notion for determining learnability using a finite number of samples turns out to be a scale sensitive combinatorial dimension known as the fat shattering dimension.

Fix a class ![]() consisting of bounded real valued functions

consisting of bounded real valued functions ![]() and a scale

and a scale ![]() . We say that a sequence

. We say that a sequence ![]() is

is ![]() -shattered by

-shattered by ![]() if there exists a witness sequence

if there exists a witness sequence ![]() such that, for every

such that, for every ![]() , there is an

, there is an ![]() such that

such that

![]()

In words, this means that, for any of the ![]() possibilities for the sign pattern

possibilities for the sign pattern ![]() , we have a function

, we have a function ![]() whose values at the points

whose values at the points ![]() is above or below

is above or below ![]() depending on whether

depending on whether ![]() is

is ![]() or

or ![]() . Moreover, the gap between

. Moreover, the gap between ![]() and

and ![]() is required to be at least

is required to be at least ![]() .

.

The fat-shattering dimension of ![]() at scale

at scale ![]() is the size of the longest sequence that can be

is the size of the longest sequence that can be ![]() -shattered by

-shattered by ![]() :

:

![]()

A bound on ![]() -norm covering numbers in terms of the fat shattering dimension were first given by Alon et al. [32].

-norm covering numbers in terms of the fat shattering dimension were first given by Alon et al. [32].

Using this bound, the sample complexity of learning a real valued function class of bounded range using a Lipschitz loss5![]() is

is ![]() where

where ![]() for a universal constant

for a universal constant ![]() . The importance of the fat shattering dimension lies in the fact that if

. The importance of the fat shattering dimension lies in the fact that if ![]() for some

for some ![]() , then no learning algorithm can have bounded sample complexity for learning the functions for arbitrarily small values of

, then no learning algorithm can have bounded sample complexity for learning the functions for arbitrarily small values of ![]() .

.

Note that here, unlike the binary classification case, the dependence of the sample complexity on ![]() is not fixed. It depends on how fast

is not fixed. It depends on how fast ![]() grows as

grows as ![]() . In particular, if

. In particular, if ![]() grows as

grows as ![]() then the sample complexity is polynomial in

then the sample complexity is polynomial in ![]() (and logarithmic in

(and logarithmic in ![]() ).

).

1.14.4 Model selection

In general, model selection refers to the task of selecting an appropriate model from a given family based on available data. For ERM, the “model” is the class ![]() of functions

of functions ![]() that are intended to capture the functional dependence between the input variable X and the output Y. If we choose too small an

that are intended to capture the functional dependence between the input variable X and the output Y. If we choose too small an ![]() then ERM will not have good performance if

then ERM will not have good performance if ![]() is not even well approximated by any member of

is not even well approximated by any member of ![]() . One the other hand, if

. One the other hand, if ![]() is too large (say all functions) then the estimation error will be so large that ERM will not perform well even if

is too large (say all functions) then the estimation error will be so large that ERM will not perform well even if ![]() .

.

1.14.4.1 Structural risk minimization

Consider the classification setting with 0–1 loss. Suppose we have a countable sequence of nested function classes:

![]()

where ![]() has VC dimension

has VC dimension ![]() . The function

. The function ![]() chosen by ERM over

chosen by ERM over ![]() satisfies the bound

satisfies the bound

with high probability. As k increases, the classes become more complex. So, the empirical risk term will decrease with k whereas the VC dimension term will increase. The principle of structural risk minimization (SRM) chooses a value of k by minimizing the right hand side above.

Namely, we choose

![]() (14.3)

(14.3)

for some universal constant C.

It can be shown (see, for example, [14, Chapter 18]) that SRM leads to universally consistent rules provided appropriate conditions are placed on the functions classes ![]() .

.

The penalty term in (14.3) depends on the VC dimension of ![]() . It turns out that it is not essential. All we need is an upper bound on the risk of ERM in terms of the empirical risk and some measure of the complexity of the function class. One could also imagine proving results for regression problems by replacing the VC dimension with fat-shattering dimension. We can think of SRM as penalizing larger classes by their capacity to overfit the data. However, the penalty in SRM is fixed in advance and is not data dependent. We will now look into more general penalties.

. It turns out that it is not essential. All we need is an upper bound on the risk of ERM in terms of the empirical risk and some measure of the complexity of the function class. One could also imagine proving results for regression problems by replacing the VC dimension with fat-shattering dimension. We can think of SRM as penalizing larger classes by their capacity to overfit the data. However, the penalty in SRM is fixed in advance and is not data dependent. We will now look into more general penalties.

1.14.4.2 Model selection via penalization

Given a (not necessarily nested) countable or finite collection of models ![]() and a penalty function

and a penalty function ![]() , define

, define ![]() as the minimizer of

as the minimizer of

![]()

Recall that ![]() is the result of ERM over

is the result of ERM over ![]() . Also note that the penalty can additionally depend on the data. Now define the penalized estimator as

. Also note that the penalty can additionally depend on the data. Now define the penalized estimator as

![]()

The following theorem shows that any probabilistic upper bound ![]() on the risk

on the risk ![]() can be used to design a penalty for which a performance bound can be given.

can be used to design a penalty for which a performance bound can be given.

Note that the result says that the penalized estimator achieves an almost optimal trade-off between the approximation error ![]() and the expected complexity

and the expected complexity ![]() . For a good upper bound

. For a good upper bound ![]() , the expected complexity will be a good upper bound on the (expected) estimation error. Therefore, the model selection properties of the penalized estimator depend on how good an upper bound

, the expected complexity will be a good upper bound on the (expected) estimation error. Therefore, the model selection properties of the penalized estimator depend on how good an upper bound ![]() we can find.

we can find.

Note that structural risk minimization corresponds to using a distribution free upper bound

The looseness of these distribution free upper bounds has prompted researchers to design data dependent penalties. We shall see an examples based on empirical Rademacher averages in the next section.

1.14.4.3 Data driven penalties using Rademacher averages

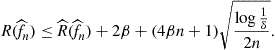

The results of Section 1.14.3.2 tell us that there exists universal constants ![]() such that

such that

![]()

Thus, we can use the data dependent penalty

![]()

in Theorem 18 to get the bound

Note that the penalty here is data dependent. Moreover, except for the ![]() term, the penalized estimator makes the optimal trade-off between the Rademacher complexity, an upper bound on the estimation error, and the approximation error.

term, the penalized estimator makes the optimal trade-off between the Rademacher complexity, an upper bound on the estimation error, and the approximation error.

1.14.4.4 Hold-out estimates

Suppose out of ![]() iid samples we set aside m samples where m is much smaller than n. Since the hold-out set of size m is not used in the computation of

iid samples we set aside m samples where m is much smaller than n. Since the hold-out set of size m is not used in the computation of ![]() , we can estimate

, we can estimate ![]() by the hold-out estimate

by the hold-out estimate

![]()

By Hoeffding’s inequality, the assumption of Theorem 18 holds with ![]() . Moreover

. Moreover ![]() . Therefore, we have

. Therefore, we have

1.14.5 Alternatives to uniform convergence

The approach of deriving generalization bounds using uniform convergence arguments is not the only one possible. In this section, we review three techniques for proving generalization bounds based on sample compression, algorithmic stability, and PAC-Bayesian analysis respectively.

1.14.5.1 Sample compression

The sample compression approach to proving generalization bounds was introduced by [33]. It applies to algorithms whose output ![]() (that is learned using some training set S) can be reconstructed from a compressed subset

(that is learned using some training set S) can be reconstructed from a compressed subset ![]() . Here

. Here ![]() is a sequence of indices

is a sequence of indices

![]()

and ![]() is simply the subsequence of S indexed by

is simply the subsequence of S indexed by ![]() . For the index sequence

. For the index sequence ![]() above, we say that its length

above, we say that its length ![]() is k. To formalize the idea that the algorithms output can be reconstructed from a small number of examples, we will define a compression function

is k. To formalize the idea that the algorithms output can be reconstructed from a small number of examples, we will define a compression function

![]()

where ![]() is the set of all possible index sequences of finite length. It is assumed that

is the set of all possible index sequences of finite length. It is assumed that ![]() for an S is of size n. We also define a reconstruction function

for an S is of size n. We also define a reconstruction function

![]()

We assume that the learning rule satisfies, for any S,

![]() (14.5)

(14.5)

This formalizes our intuition that to reconstruct ![]() it suffices to remember only those examples whose indices are given by

it suffices to remember only those examples whose indices are given by ![]() .

.

The following result gives a generalization bound for such an algorithm.

1.14.5.2 Stability

Stability is property of a learning algorithm that ensures that the output, or some aspect of the output, of the learning algorithm does not change much if the training data set changes very slightly. This is an intuitive notion whose roots in learning theory go back to the work of Tikhonov on regularization of inverse problems. To see how stability can be used to give generalization bounds, let us consider a result of Bousquet and Elisseeff [34] that avoids going through uniform convergence arguments by directly showing that any learning algorithm with uniform stability enjoys exponential tail generalization bounds in terms of both the training error and the leave-one-out error of the algorithm.

Let ![]() denote the sample of size

denote the sample of size ![]() obtained by removing a particular input

obtained by removing a particular input ![]() from a sample S of size n. A learning algorithm is said to have uniform stability

from a sample S of size n. A learning algorithm is said to have uniform stability![]() if for any

if for any ![]() and any

and any ![]() , we have,

, we have,

![]()

We have the following generalization bound for a uniformly stable learning algorithm.

Note that this theorem gives tight bound when ![]() scales as

scales as ![]() . As such it cannot be applied directly to the classification setting with 0–1 loss where

. As such it cannot be applied directly to the classification setting with 0–1 loss where ![]() can only be 0 or 1. A good example of a learning algorithm that exhibits uniform stability is regularized ERM over a reproducing kernel Hilbert space using a convex loss function that has Lipschitz constant

can only be 0 or 1. A good example of a learning algorithm that exhibits uniform stability is regularized ERM over a reproducing kernel Hilbert space using a convex loss function that has Lipschitz constant ![]() :

:

![]()

A regularized ERM using kernel K is defined by

![]()

where ![]() is a regularization parameter. Regularized ERM has uniform stability

is a regularization parameter. Regularized ERM has uniform stability ![]() for

for ![]() , where

, where

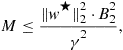

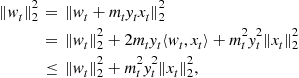

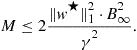

![]()

The literature on stability of learning algorithms is unfortunately thick with definitions. For instance, Kutin and Niyogi [35] alone consider over a dozen variants! We chose a simple (but quite strong) definition from Bousquet and Elisseeff above to illustrate the general point that stability can be used to derive generalization bounds. But we like to point the reader to Mukherjee et al. [36] where a notion of leave-one-out (LOO) stability is defined and shown sufficient for generalization for any learning algorithm. Moreover, for ERM they show it is necessary and sufficient for both generalization and learnability with respect to a fixed class. Shalev-Shwartz et al. [37] further examine the connections between stability, generalization and learnability in Vapnik’s general learning setting that includes supervised learning (learning from example, label pairs) as a special case.

1.14.5.3 PAC-Bayesian analysis

PAC-Bayesian analysis refers to a style of analysis of learning algorithms that output not just a single function ![]() but rather a distribution (called a “posterior distribution”) over

but rather a distribution (called a “posterior distribution”) over ![]() . Moreover, the complexity of the data-dependent posterior distribution is measured relative to a fixed distribution chosen in advance (that is, in a data independent way). The fixed distribution is called a “prior distribution.” Note that the terms “prior” and “posterior” are borrowed from the analysis of Bayesian algorithms that start with a prior distribution on some parameter space and, on seeing data, make updates to the distribution using Bayes’ rule to obtain a posterior distribution. Even though the terms are borrowed, their use is not required to be similar to their use in Bayesian analysis. In particular, the prior used in the PAC-Bayes theorem need not be “true” in any sense and the posterior need not be obtained using Bayesian updates: the bound holds for any choice of the prior and posterior.

. Moreover, the complexity of the data-dependent posterior distribution is measured relative to a fixed distribution chosen in advance (that is, in a data independent way). The fixed distribution is called a “prior distribution.” Note that the terms “prior” and “posterior” are borrowed from the analysis of Bayesian algorithms that start with a prior distribution on some parameter space and, on seeing data, make updates to the distribution using Bayes’ rule to obtain a posterior distribution. Even though the terms are borrowed, their use is not required to be similar to their use in Bayesian analysis. In particular, the prior used in the PAC-Bayes theorem need not be “true” in any sense and the posterior need not be obtained using Bayesian updates: the bound holds for any choice of the prior and posterior.

Denote the space of probability distributions over ![]() by

by ![]() . There might be measurability issues in defining this for general function classes. So, we can assume that

. There might be measurability issues in defining this for general function classes. So, we can assume that ![]() is a countable class for the purpose of the theorem below. Note, however, that the cardinality of the class

is a countable class for the purpose of the theorem below. Note, however, that the cardinality of the class ![]() never enters the bound explicitly anywhere. For any

never enters the bound explicitly anywhere. For any ![]() , define

, define

![]()

In the context of classification, a randomized classifier that, when asked for a prediction, samples a function from a distribution ![]() and uses if for classification, is called a Gibbs classifier. Note that

and uses if for classification, is called a Gibbs classifier. Note that ![]() is the risk of such a Gibbs classifier.

is the risk of such a Gibbs classifier.

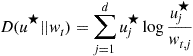

Also recall the definition of Kullback-Leibler divergence or relative entropy:

![]()

For real numbers ![]() we define (with some abuse of notation):

we define (with some abuse of notation):

![]()

With these definitions, we can now state the PAC Bayesian theorem [38].

To get more interpretable bounds from this statement, the following inequality is useful for ![]() :

:

![]()

This implies that if ![]() then two inequalities hold:

then two inequalities hold:

![]()

The first gives us a version of the PAC-Bayesian bound that is often presented:

The second gives us the interesting bound

If the loss ![]() is close to zero, then the dominating term is the second term that scales as

is close to zero, then the dominating term is the second term that scales as ![]() . If not, then the first term, which scales as

. If not, then the first term, which scales as ![]() , dominates.

, dominates.

1.14.6 Computational aspects

So far we have only talked about sample complexity issues ignoring considerations of computational complexity. To properly address the latter, we need a formal model of computation and need to talk about how functions are represented by the learning algorithm. The branch of machine learning that studies these questions is called computational learning theory. We will now provide a brief introduction to this area.

1.14.6.1 The PAC model

The basic model in computational learning theory is the Probably Approximately Correct (PAC) model. It applies to learning binary valued functions and uses the 0–1 loss. Since a ![]() valued function is specified unambiguously be specifying the subset of

valued function is specified unambiguously be specifying the subset of ![]() where it is one, we have a bijection between binary valued functions and concepts, which are simply subsets of

where it is one, we have a bijection between binary valued functions and concepts, which are simply subsets of ![]() . Thus we will use (binary valued) function and concept interchangeably in this section. Moreover, the basic PAC model considers what is sometimes called the realizable case. That is, there is a “true” concept in

. Thus we will use (binary valued) function and concept interchangeably in this section. Moreover, the basic PAC model considers what is sometimes called the realizable case. That is, there is a “true” concept in ![]() generating the data. This means that

generating the data. This means that ![]() for some

for some ![]() . Hence the minimal risk in the class is zero:

. Hence the minimal risk in the class is zero: ![]() . Note, however, that the distribution over

. Note, however, that the distribution over ![]() is still assumed to be arbitrary.

is still assumed to be arbitrary.

To define the computational complexity of a learning algorithm, we assume that ![]() is either

is either ![]() or

or ![]() . We assume a model of computation that can store a single real number in a memory location and can perform any basic arithmetic operation (additional, subtraction, multiplication, division) on two real numbers in one unit of computation time.

. We assume a model of computation that can store a single real number in a memory location and can perform any basic arithmetic operation (additional, subtraction, multiplication, division) on two real numbers in one unit of computation time.

The distinction between a concept and its representation is also an important one when we study computational complexity. For instance, if our concepts are convex polytopes in ![]() , we may choose a representation scheme that specifies the vertices of the polytope. Alternatively, we may choose to specify the linear equalities describing the faces of the polytope. Their vertex-based and face-based representations can differ exponentially in size. Formally, a representation scheme is a mapping

, we may choose a representation scheme that specifies the vertices of the polytope. Alternatively, we may choose to specify the linear equalities describing the faces of the polytope. Their vertex-based and face-based representations can differ exponentially in size. Formally, a representation scheme is a mapping ![]() that maps a representation (consisting of symbols from a finite alphabet

that maps a representation (consisting of symbols from a finite alphabet ![]() and real numbers) to a concept. As noted above, there could be many

and real numbers) to a concept. As noted above, there could be many ![]() ’s such that

’s such that ![]() for a given concept f. The size of representation is assumed to be given by a function

for a given concept f. The size of representation is assumed to be given by a function ![]() . A simple choice of size could be simply the number of symbols and real numbers that appear in the representation. Finally, the size of a concept f is simply the size of its smallest representation:

. A simple choice of size could be simply the number of symbols and real numbers that appear in the representation. Finally, the size of a concept f is simply the size of its smallest representation:

![]()

The last ingredient we need before can give the definition of efficient learnability in the PAC model is the representation class ![]() used by the algorithm for producing its output. We call

used by the algorithm for producing its output. We call ![]() the hypothesis class and it is assumed that there is a representation in

the hypothesis class and it is assumed that there is a representation in ![]() for every function in

for every function in ![]() . Moreover, it is required that for every

. Moreover, it is required that for every ![]() and any

and any ![]() , we can evaluate

, we can evaluate ![]()

![]() in time polynomial in d and size

in time polynomial in d and size![]() .

.

Recall that we have assumed ![]() or

or ![]() . We say that an algorithm efficiently PAC learns

. We say that an algorithm efficiently PAC learns![]() using hypothesis class

using hypothesis class ![]() if, for any

if, for any ![]() , given access to iid examples

, given access to iid examples ![]() , inputs

, inputs ![]() (accuracy),

(accuracy), ![]() (confidence), and

(confidence), and ![]() , the algorithm satisfies the following conditions:

, the algorithm satisfies the following conditions:

For examples of classes ![]() that are efficiently PAC learnable (using some

that are efficiently PAC learnable (using some ![]() ), see the texts [12,18,20].

), see the texts [12,18,20].

1.14.6.2 Weak and strong learning

In the PAC learning definition, the algorithm must be able to achieve arbitrarily small values of ![]() and

and ![]() . A weaker definition would require the learning algorithm to work only for fixed choices of

. A weaker definition would require the learning algorithm to work only for fixed choices of ![]() and

and ![]() . We can modify the definition of PAC learning by removing

. We can modify the definition of PAC learning by removing ![]() and

and ![]() from the input to the algorithm. Instead, we assume that there are fixed polynomials

from the input to the algorithm. Instead, we assume that there are fixed polynomials ![]() and

and ![]() such that the algorithm outputs

such that the algorithm outputs ![]() that satisfies

that satisfies

![]()

with probability at least

![]()

Such an algorithm is called a weak PAC learning algorithm for ![]() . The original definition, in contrast, can be called a definition of strong PAC learning. The definition of weak PAC learning does appear quite weak. Recall that an accuracy of

. The original definition, in contrast, can be called a definition of strong PAC learning. The definition of weak PAC learning does appear quite weak. Recall that an accuracy of ![]() can be trivially achieved by an algorithm that does not even look at the samples but simply outputs a hypothesis that outputs a random

can be trivially achieved by an algorithm that does not even look at the samples but simply outputs a hypothesis that outputs a random ![]() sign (each with probability a half) for any input. The desired accuracy above is just an inverse polynomial away from the trivial accuracy guarantee of

sign (each with probability a half) for any input. The desired accuracy above is just an inverse polynomial away from the trivial accuracy guarantee of ![]() . Moreover, the probability with which the weak accuracy is achieved is not required to be arbitrarily close to 1.

. Moreover, the probability with which the weak accuracy is achieved is not required to be arbitrarily close to 1.

A natural question to ask is whether a class that is weakly PAC learnable using ![]() is also strongly learnable using

is also strongly learnable using ![]() ? The answer, somewhat surprisingly, is “Yes.” So, the weak PAC learning definition only appears to be a relaxed version of strong PAC learning. Moreover, a weak PAC learning algorithm, given as a subroutine or blackbox, can be converted into a strong PAC learning algorithm. Such a conversion is called boosting.

? The answer, somewhat surprisingly, is “Yes.” So, the weak PAC learning definition only appears to be a relaxed version of strong PAC learning. Moreover, a weak PAC learning algorithm, given as a subroutine or blackbox, can be converted into a strong PAC learning algorithm. Such a conversion is called boosting.

A boosting procedure has to start with a weak learning algorithm and boost two things: the confidence and the accuracy. It turns out that boosting the confidence is easier. The basic idea is to run the weak learning algorithm several times on independent samples. Therefore, the crux of the boosting procedure lies in boosting the accuracy. Boosting the accuracy seems hard until one realizes that the weak learning algorithm does have one strong property: it is guaranteed to work no matter what the underlying distribution of the examples is. Thus, if a first run of the weak learning only produces a hypothesis ![]() with slightly better accuracy than

with slightly better accuracy than ![]() , we can make the second run focus only on those examples where

, we can make the second run focus only on those examples where ![]() makes a mistake. Thus, we will focus the weak learning algorithm on “harder portions” of the input distribution. While this simple idea does not directly work, a variation on the same theme was shown to work by Schapire [39]. Freund [40] then presented a simpler boosting algorithm. Finally, a much more practical boosting procedure, called AdaBoost, was discovered by them jointly [10]. AdaBoost counts as one of the great practical success stories of computational learning theory. For more details on boosting, AdaBoost, and the intriguing connections of these ideas to game theory and statistics, we refer the reader to [41].

makes a mistake. Thus, we will focus the weak learning algorithm on “harder portions” of the input distribution. While this simple idea does not directly work, a variation on the same theme was shown to work by Schapire [39]. Freund [40] then presented a simpler boosting algorithm. Finally, a much more practical boosting procedure, called AdaBoost, was discovered by them jointly [10]. AdaBoost counts as one of the great practical success stories of computational learning theory. For more details on boosting, AdaBoost, and the intriguing connections of these ideas to game theory and statistics, we refer the reader to [41].

1.14.6.3 Random classification noise and the SQ model

Recall that the basic PAC model assumes that the labels are generated using a function f belonging to the class ![]() under consideration. Our earlier development of statistical tools for obtaining sampling complexity estimates did not require such an assumptions (though the rates did depend on whether

under consideration. Our earlier development of statistical tools for obtaining sampling complexity estimates did not require such an assumptions (though the rates did depend on whether ![]() or not).

or not).

There are various noise models that extend the PAC model by relaxing the noise-free assumption and thus making the problem of learning potentially harder. Perhaps the simplest is the random classification noise model [42]. In this model, when the learning algorithm queries for the next labeled example of the form ![]() , it receives it with probability

, it receives it with probability ![]() but with probability

but with probability ![]() , it receives

, it receives ![]() . That is, with some probability

. That is, with some probability ![]() , the label is flipped. The definition of efficient learnability remains the same except that now the learning algorithm has to run in time polynomial in

, the label is flipped. The definition of efficient learnability remains the same except that now the learning algorithm has to run in time polynomial in ![]() and

and ![]() where

where ![]() is an input to the algorithm and is an upper bound on

is an input to the algorithm and is an upper bound on ![]() .

.

Kearns [43] introduced the statistical query (SQ) model where learning algorithms do not directly access the labeled example but only make statistical queries about the underlying distribution. The queries take the form ![]() where

where ![]() is a binary valued function of the labeled examples and

is a binary valued function of the labeled examples and ![]() is a tolerance parameter. Given such a query, the oracle in the SQ model responds with an estimate of

is a tolerance parameter. Given such a query, the oracle in the SQ model responds with an estimate of ![]() that is accurate to within an additive error of

that is accurate to within an additive error of ![]() . It is easy to see, using Hoeffding’s inequality, that given access to the usual PAC oracle that provides iid examples of the form

. It is easy to see, using Hoeffding’s inequality, that given access to the usual PAC oracle that provides iid examples of the form ![]() , we can simulate the SQ oracle by just drawing a sample of size polynomial in

, we can simulate the SQ oracle by just drawing a sample of size polynomial in ![]() and

and ![]() and estimating the probability

and estimating the probability ![]() based on it. The simulation with succeed with probability

based on it. The simulation with succeed with probability ![]() . Kearns gave a more complicated simulation that mimics the SQ oracle given access to only a PAC oracle with random misclassification noise.

. Kearns gave a more complicated simulation that mimics the SQ oracle given access to only a PAC oracle with random misclassification noise.

This lemma means that learning algorithm that learns a concept class ![]() in the SQ model can also learn

in the SQ model can also learn ![]() in the random classification noise model.

in the random classification noise model.

1.14.6.4 The agnostic PAC model

The agnostic PAC model uses the same definition of learning as the one we used in Section 1.14.2.3). That is, the distribution of examples ![]() is arbitrary. In particular, it is not assumed that

is arbitrary. In particular, it is not assumed that ![]() for any f. The goal of the learning algorithm is to output a hypothesis

for any f. The goal of the learning algorithm is to output a hypothesis ![]() with

with ![]() with probability at least

with probability at least ![]() . We say that a learning algorithm efficiently learns

. We say that a learning algorithm efficiently learns ![]() using

using ![]() in the agnostic PAC model if the algorithm not only outputs a probably approximately correct hypothesis h but also runs in time that is polynomial in

in the agnostic PAC model if the algorithm not only outputs a probably approximately correct hypothesis h but also runs in time that is polynomial in ![]() , and d.

, and d.

The agnostic model has proved to be a very difficult one for exhibiting efficient learning algorithms. Note that the statistical issue is completely resolved: a class ![]() is learnable iff it has finite VC dimension. However, the “algorithm” implicit in this statement is ERM which, even for the class of halfspaces

is learnable iff it has finite VC dimension. However, the “algorithm” implicit in this statement is ERM which, even for the class of halfspaces

![]()

leads to NP-hard problems. For instance, see [44] for very strong negative results about agnostic learning of halfspaces. However, these results apply to proper agnostic learning only. That is, the algorithm outputs a hypothesis that is also a halfspace. An efficient algorithm for learning halfspaces in the agnostic PAC model using a general hypothesis class would be a major breakthrough.

1.14.6.5 The mistake bound model

The mistake bound model is quite different from the PAC model. Unlike the PAC model, no assumption is made on the distribution of the inputs. There is also no distinction between a training phase where the learner uses a batch of examples to learn a hypothesis and a test phase where the risk of the learned hypothesis determines the learner’s expected loss. Instead, learning happens in a series of trials (or rounds) in an online fashion. At any given round ![]() , the order of events is as follows:

, the order of events is as follows:

Note that the basic model assumes that is a true function f generating the labels (the case when such an f is assumed to exist is often called the realizable case). The identity of this function is, of course, hidden from the learner. The goal of the learner is to minimize the total number of mistakes it makes:

![]()

where ![]() is the 0–1 loss. If a learning algorithm can guarantee, for any choice

is the 0–1 loss. If a learning algorithm can guarantee, for any choice ![]() of the true concept, that the total mistake bound will be a polynomial function of d and

of the true concept, that the total mistake bound will be a polynomial function of d and ![]() as well as the computation per round is polynomial in the same parameters, then we say that the algorithm efficiently learns

as well as the computation per round is polynomial in the same parameters, then we say that the algorithm efficiently learns![]() in the mistake bound model.

in the mistake bound model.

Recall that, in the PAC model, VC dimension of ![]() characterizes learnability of

characterizes learnability of ![]() if we ignore computational considerations. Moreover, VC dimension characterizes learnability in the agnostic PAC model as well. In the mistake bound model, it is the Littlestone dimension [45] whose finiteness provides a necessary and sufficient condition for learnability. There is also an agnostic version of the mistake bound model where we drop the assumption that

if we ignore computational considerations. Moreover, VC dimension characterizes learnability in the agnostic PAC model as well. In the mistake bound model, it is the Littlestone dimension [45] whose finiteness provides a necessary and sufficient condition for learnability. There is also an agnostic version of the mistake bound model where we drop the assumption that ![]() for some

for some ![]() . It is also known that the Littlestone dimension characterizes learnability (ignoring efficiency) even in the agnostic online mistake bound model [46].

. It is also known that the Littlestone dimension characterizes learnability (ignoring efficiency) even in the agnostic online mistake bound model [46].

The Littlestone dimension of a class ![]() is defined as follows. A

is defined as follows. A ![]() -valued tree (or simply an

-valued tree (or simply an ![]() -tree) of height t is a complete binary tree of height t whose internal nodes are labeled with instances from

-tree) of height t is a complete binary tree of height t whose internal nodes are labeled with instances from ![]() . A (complete binary) tree of height t has exactly

. A (complete binary) tree of height t has exactly ![]() leaves which we identify, from left to right, with the

leaves which we identify, from left to right, with the ![]() sequences length t

sequences length t![]() sequences ordered lexicographically (with

sequences ordered lexicographically (with ![]() taking precedence over

taking precedence over ![]() in determining the lexicographic order). For example, the leftmost leaf corresponds to all

in determining the lexicographic order). For example, the leftmost leaf corresponds to all ![]() ’s sequence whereas the rightmost leaf corresponds to the all

’s sequence whereas the rightmost leaf corresponds to the all ![]() ’s sequences. If

’s sequences. If ![]() is a leaf, we denote its associated

is a leaf, we denote its associated ![]() sequence by

sequence by ![]() .

.

An ![]() -tree of height t is said to be shattered by

-tree of height t is said to be shattered by ![]() if for each of the

if for each of the ![]() leaves the following is true: there is a function

leaves the following is true: there is a function ![]() such that the sequence of values taken by f on the internal nodes on the path from the root to the leaf

such that the sequence of values taken by f on the internal nodes on the path from the root to the leaf ![]() is exactly

is exactly ![]() . The Littlestone dimension of

. The Littlestone dimension of ![]() is the height of the tallest tree that is shattered by

is the height of the tallest tree that is shattered by ![]() .

.

It is easy to see that if an ![]() -valued sequence

-valued sequence ![]() is shattered by

is shattered by ![]() then the

then the ![]() -tree of height t obtained by repeating

-tree of height t obtained by repeating ![]() across level i for

across level i for ![]() is also shattered by

is also shattered by ![]() . This proves the following relationship between

. This proves the following relationship between ![]() and

and ![]() .

.

A converse to the above theorem is not possible. Indeed, the class of threshold functions on ![]() :

:

![]()

has ![]() but

but ![]() . A finite class

. A finite class ![]() has a finite Littlestone dimension satisfying the upper bound

has a finite Littlestone dimension satisfying the upper bound ![]() .

.

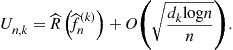

1.14.6.5.1 Halving and weighted majority

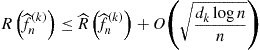

Note that we proved risk bounds for a finite class in the probabilistic framework using Hoeffding’s inequality along with a simple union bound. Here we show how to deal with a finite class in the online mistake bound model. In the realizable case, a simple algorithm called halving is guaranteed to make no more than ![]() mistakes.

mistakes.

At the beginning of round t, the halving algorithm considers a subset ![]() of the original function class. Predictions are made by taking a majority vote over

of the original function class. Predictions are made by taking a majority vote over ![]() :

:

Therefore, if a mistake is made, then ![]() reduces by at least a factor of 2. Moreover, the true function is never eliminated since it will always satisfy

reduces by at least a factor of 2. Moreover, the true function is never eliminated since it will always satisfy ![]() . Therefore, if

. Therefore, if ![]() is the number of mistakes made by the halving algorithm in t rounds, then we have

is the number of mistakes made by the halving algorithm in t rounds, then we have

![]()

This implies that ![]() . Thus we have proved a mistake bound for halving.

. Thus we have proved a mistake bound for halving.

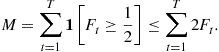

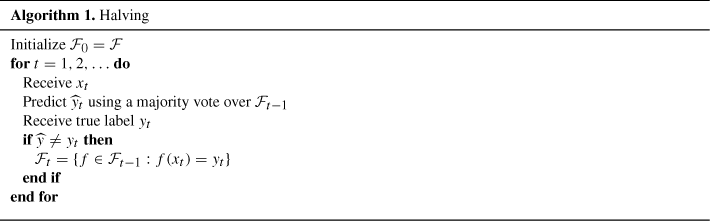

In the non-realizable case, halving does not work directly but a suitable modification does. Since no function in the class can be guaranteed to be correct all the time, it does not make sense to eliminate functions that make mistakes. The crucial idea here is to keep weights for different functions in the class. Then, when a functions makes a mistake, it is not eliminated but its weight is multiplied by a factor of ![]() where

where ![]() is a parameter. The prediction are now taken using a weighted majority vote over all

is a parameter. The prediction are now taken using a weighted majority vote over all ![]() :

:

(14.6)

(14.6)

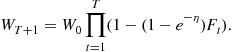

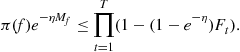

It turns out we can shave off a factor of 2 in the above mistake bound by using a randomized version of the weighted majority algorithm. Instead of taking the majority vote with the weights ![]() , we can consider a Gibbs classifier that chooses a function

, we can consider a Gibbs classifier that chooses a function ![]() randomly from

randomly from ![]() with probability proportional to

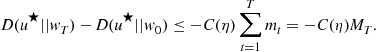

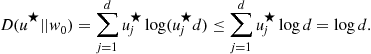

with probability proportional to ![]() and then outputs