Chapter 9. Tomorrow’s Security Cogs and Levers

Without changing our patterns of thought, we will not be able to solve the problems that we created with our current patterns of thought.

Information security is not just about technology. It is about people, processes, and technology, in that order—or more accurately, about connecting people, processes, and technology together so that humans and entire systems can make informed decisions. It may at first seem rather odd to start a chapter in a book about the future of security management technology with a statement that puts the role of technology firmly in third place, but I felt it was important to put that stake in the ground to provide context for the rest of this chapter.

This doesn’t mean that I belittle the role of technology in security. I firmly believe that we are at the very beginnings of an information technology revolution that will affect our lives in ways few of us can imagine, let alone predict. It’s easy to dismiss futuristic ideas; many of us still laugh at historical predictions from the 1970s and 1980s portraying a future where self-guided hovercars will whisk us to the office in the mornings and where clunky humanoid robots will mix us cocktails when we get home from work, yet fundamental technological breakthroughs are emerging before our eyes that will spark tomorrow’s technological advances.

One such spark, which feeds my conviction that we are on the cusp of an exponential technology curve, is the development of programming languages and artificial intelligence technology that will improve itself at rates humans can’t match. Think about that for a moment: programming languages that can themselves create better languages, which in turn can create better languages, and so on. Software that can reprogram itself to be better based on what it learns about itself, devising solutions to problems that we didn’t even imagine were solvable (assuming we even knew about them in the first place). Many people debate the ethics of medical research and cry foul about how human cloning could change the planet, but they may well be focused on the wrong problem.

Information security and its relationship with technology, of course, dates back through history. The Egyptians carved obfuscated hieroglyphs into monuments; the Spartans wound messages around wooden staffs called scytales to exchange military plans; and the Romans’ Caesar ciphers are well documented in school textbooks. Many historians credit the code breakers at Bletchley Park, who deciphered messages encrypted with the famous Enigma machine, with shortening the Second World War, yet even this monumental technological achievement, which helped end the war and changed history forever, may pale into insignificance next to the changes to come.

The packet switching network invented by Donald Davies in 1970 also changed the world forever: suddenly computers could talk to other computers with which they previously had no relationship, opening up new possibilities for previously isolated computing power. Although the telegraph networks of more than a century earlier may have aroused the dream of an electronically connected planet, it was only in the 1970s, 1980s, and 1990s that we started to wire the world together definitively with copper cables and later with fiber-optic technology. Today that evolution is entering a new phase. Instead of wiring physical locations together with twisted copper cables, we are wiring together software applications and data with service-oriented architectures (SOAs) and, equally importantly, wiring people into complex social networks with new types of human relationships.

While I would be foolish to think I could predict the future, I often find myself thinking about future trends in information security and about the potential effect of information security on the future—two very distinct but interrelated things. Simply put, I believe that information security in the future will be very different from the relatively crude ways in which we operate today.

The security tools and technology available to the masses today can only be described as primitive in comparison to electronic gaming, financial investment, or medical research software. Modern massively multiplayer games are built on complex physics engines that mimic real-world movement, leverage sophisticated artificial intelligence engines that provide human-like interactions, and connect hundreds of thousands of players at a time in massively complex virtual worlds. The financial management software underpinning investment banks performs “supercrunching” calculations on data sets pulled from public and private sources and builds sophisticated prediction models from petabytes of data.[80] Medical research systems analyze DNA for complex patterns of hereditary diseases, predicting the probability that entire populations will inherit particular genetic traits.

In stark contrast, the information security management programs that are supposed to protect trillions of dollars of assets, keep trade secrets safe from corporate espionage, and hide military plans from the mucky paws of global terrorists are often powered by little more than Rube Goldberg machines (Heath Robinson machines if you are British) fabricated from Excel spreadsheets, Word documents, homegrown scripts, Post-it notes, email systems, notes on the backs of Starbucks cups, and hallway conversations. Is it any wonder we continue to see unprecedented security risk management failures and that most security officers feel they are operating in the dark? If information security is to keep pace (and it will), people, processes, and (the focus of this chapter) information security technology will need to evolve. The Hollywood security that security professionals snigger at today needs to become a reality tomorrow.

I am passionate about playing a part in shaping the security technology of the future, which to me involves defining and creating what I call the “security cogs of tomorrow.” This chapter discusses technology trends that I believe will have a significant influence over the security industry and explores how they can be embraced to build information security risk management systems that will help us to do things faster, better, and more cheaply than we can today. We can slice and dice technology a million ways, but advances usually boil down to those three things: faster, better, and cheaper.

I have arranged this chapter into a few core topics:

Cloud Computing and Web Services: The Single Machine Is Here

Connecting People, Process, and Technology: The Potential for Business Process Management

Social Networking: When People Start Communicating, Big Things Change

Information Security Economics: Supercrunching and the New Rules of the Grid

Platforms of the Long-Tail Variety: Why the Future Will Be Different for Us All

Before I get into my narrative, let me share a few quick words said by Upton Sinclair and quoted effectively by Al Gore in his awareness campaign for climate change, An Inconvenient Truth, and which I put on a slide to start my public speaking events:

It’s difficult to get a man to understand something when his salary depends on him not understanding it.

Challenging listeners to question why they are being presented with particular ideas serves as a timely reminder of the common, subtle bias behind thoughts and ideas presented as fact. For transparency: at the time of writing, I work for Microsoft. My team, the Connected Information Security Group, has a long-term focus of advancing many of the themes discussed here. This chapter represents just my perspective (maybe my bias), but my team’s performance depends on how closely the future measures up to the thoughts in this chapter!

Cloud Computing and Web Services: The Single Machine Is Here

Civilization advances by extending the number of important operations which we can perform without thinking of them.

Today, much is being made of “cloud computing” in the press. For at least the past five years, the computer industry has also expressed a lot of excitement about web services, which can range from Software as a Service (SaaS) to various web-based APIs and service-oriented architecture (SOA, pronounced “so-ah”).

Cloud computing is really nothing more than the abstraction of computing infrastructure (be it storage, processing power, or application hosting) from the hardware system or users. Just as you don’t know where your photo is stored physically after you upload it to Flickr, you can run an entire business on a service that is free to run it on any system it chooses. Thus, part or all of the software runs somewhere “in the cloud.” The system components and the system users don’t need to know and frankly don’t care where the actual machines are located or where the data physically resides. They care about the functionality of the system instead of the infrastructure that makes it possible, in the same way that average telephone users don’t care which exchanges they are routed through or what type of cable the signal travels over in order to talk to their nanas.

But even though cloud computing is a natural extension of other kinds of online services and hosting services, it’s an extremely important development in the history of the global network. Cloud computing democratizes the availability of computing power to software creators from virtually all backgrounds, giving them supercomputers on-demand that can power ideas into reality. Some may say this is a return to the old days when all users could schedule time on the mainframe and that cloud computing is nothing new, but that’s hardly the point. The point is that this very day, supercomputers are available to anyone who has access to the Internet.

Web services are standards-based architectures that expose resources (typically discrete pieces of application functionality) independently of the infrastructure that powers them. Web services allow many sites to integrate their applications and data economically by exposing functionality in standards-based formats and public APIs. SOAs are sets of web services woven together to provide sets of functionality and are the catalyst that is allowing us to connect (in the old days we may have said “wire together”) powerful computing infrastructure with software functionality, data, and users.

I’m not among the skeptics who minimize the impact of cloud computing or web services. I believe they will serve up a paradigm shift that will fundamentally change the way we all think about and use the Internet as we know it today. In no area will that have effects more profound than in security—and it is producing a contentious split in the security community.

Builders Versus Breakers

Security people fall into two main categories:

Builders usually represent the glass as half full. While recognizing the seriousness of vulnerabilities and dangers in current practice, they are generally optimistic people who believe that by advancing the state of the art they can change the world for the better.

Breakers usually represent the glass as half empty, and are often so pessimistic that you wonder, when listening to some of them, why the Internet hasn’t totally collapsed already and why any of us have money left unpilfered in our bank accounts. Their pessimism leads them to apply the current state of the art to exposing weaknesses and failures in current approaches.

Every few years the next big thing comes along and polarizes security people into these two philosophical camps. I think I hardly need to state that I consider myself a builder.

Virtual digital clouds of massive computing power, along with virtual pipes to suck it down and spit it back out (web services), trigger suspicions that breakers have built up through decades of experience. Hover around the water coolers of the security “old school,” and you will likely see smug grins and knowing winks as they utter pat phrases such as, “You can’t secure what you don’t control,” “You can’t patch a data center you don’t own,” and the ultimate in cynicism, “Why would you trust something as important as security to someone else?”

I’ve heard it all, and of course it’s all hard to argue against. There are many valid arguments against hosting and processing data in the cloud, but by applying standard arguments for older technologies, breakers forget a critical human trait that has been present throughout history: when benefits outweigh drawbacks, things almost always succeed. With the economic advantages of scalable resources on demand, the technological advantages of access to almost unlimited computing resources, and the well-documented growth of service industries, from restaurants to banking, that provide commodity goods, the benefits of cloud computing simply far outweigh the drawbacks.

One reason I deeply understand the breaker mentality springs from a section of my own career. In 2002, I joined a vulnerability management firm named Foundstone (now owned by McAfee) that sold a network vulnerability scanner. It ran as a client in the traditional model, storing all data locally on the customer’s system. Our main competitor, a company called Qualys, offered a network scanner as a service on their own systems with data stored centrally at their facilities. We won customers to our product by positioning hosted security data as an outrageous risk. Frankly, we promoted FUD (Fear, Uncertainty, and Doubt). Most customers at the time agreed, and it became a key differentiator that drove revenue and helped us sell the company to McAfee. My time at Foundstone was among the most rewarding I have had, but I also feel, looking back, that our timing was incredibly fortunate. Those inside the dust storm watched the cultural sands shift in a few short years, and we found more and more customers not only accepting an online model but demanding it.

The same is true of general consumers, of course. Over five million WordPress blog users have already voted with their virtual feet, hosting their blogs online. And an estimated 10% of the world’s end-user Internet traffic comes from hosted, web-based email, such as Yahoo! Mail, Gmail, and Live Mail. Google is renowned for building megalithic data centers across the world; Microsoft is investing heavily in a cloud operating system called Azure, along with gigantic data center infrastructures to host software and services; and Amazon has started renting out parts of the infrastructure that they built as part of their own bid to dominate the online retailing space.

Clouds and Web Services to the Rescue

The question security professionals should be asking is not “Can cloud computing and web services be made secure?” but “How can we apply security to this new approach?” Even more cleverly, we should think: “How can we embrace this paradigm to our advantage?”

The good news is that applying security to web services and cloud computing is not as hard as people may think. What at first seems like a daunting task just requires a change of paradigm. The assumption that the company providing you with a service also has to guarantee your security is just not valid.

To show you how readily you can see the new services as a boon to security instead of a threat, let me focus on a real-world scenario. Over Christmas I installed a nice new Windows Home Server in our house. Suddenly, we are immersed in the digital world: our thousands of photos and videos of the kids can be watched on the TV in the living room via the Xbox, the six computers scattered around the house all get backed up to a central server, and the family at home once again feels connected. Backing up to a central server is all well and good, but what happens if we get robbed and someone steals the PCs and the server?

Enter the new world of web services and cloud computing. To mitigate the risk of catastrophic system loss, I wrote a simple plug-in (see the later section Platforms of the Long-Tail Variety: Why the Future Will Be Different for Us All) to the home server that makes use of the Amazon Web Services platform. At set intervals, the system copies the directories I have chosen onto the server and connects via web services to Amazon’s S3 (Simple Storage Service) cloud infrastructure. The server sends a backup copy of the data I choose to the cloud. I make use of the WS-Security specification (and a few others) for web services, ensuring the data is encrypted and not tampered with in transport, and I make use of an X.509 digital certificate to ensure I am communicating with Amazon. To further protect the data, I encrypt it locally before it is sent to Amazon, ensuring that if Amazon is hacked, my data will not be exposed or altered without my knowing. The whole solution took 30 minutes to knock up, thanks to some reusable open source code on the Internet.
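Protecting data locally before it leaves the house is what lets the provider remain untrusted. A minimal, standard-library-only sketch of the tamper-evidence half of that idea follows, assuming Python 3.9+. The in-memory `cloud_store` dictionary and the `seal`, `unseal`, `backup`, and `restore` helpers are hypothetical stand-ins, not Amazon’s API. Confidentiality would additionally require encrypting with a vetted authenticated cipher such as AES-GCM (for example, from the third-party `cryptography` package), which is left out here to keep the sketch dependency-free.

```python
import hashlib
import hmac
import os

# Hypothetical stand-in for a cloud object store such as S3. A real plug-in
# would call the provider's SDK or REST API instead of writing to a dict.
cloud_store: dict[str, bytes] = {}

TAG_SIZE = hashlib.sha256().digest_size  # 32 bytes for HMAC-SHA256


def seal(key: bytes, data: bytes) -> bytes:
    """Append an HMAC-SHA256 tag computed locally, before data leaves the house."""
    return data + hmac.new(key, data, hashlib.sha256).digest()


def unseal(key: bytes, blob: bytes) -> bytes:
    """Check the tag after download and refuse data that was altered in storage."""
    data, tag = blob[:-TAG_SIZE], blob[-TAG_SIZE:]
    expected = hmac.new(key, data, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):  # constant-time comparison
        raise ValueError("backup failed integrity check")
    return data


def backup(key: bytes, name: str, data: bytes) -> None:
    cloud_store[name] = seal(key, data)


def restore(key: bytes, name: str) -> bytes:
    return unseal(key, cloud_store[name])


if __name__ == "__main__":
    key = os.urandom(32)  # stays on the home server; never sent to the provider
    backup(key, "photos/kids.jpg", b"photo bytes")
    print(restore(key, "photos/kids.jpg"))  # b'photo bytes'

    # Simulate someone at the provider flipping a single bit.
    blob = bytearray(cloud_store["photos/kids.jpg"])
    blob[0] ^= 0x01
    cloud_store["photos/kids.jpg"] = bytes(blob)
    try:
        restore(key, "photos/kids.jpg")
    except ValueError as err:
        print("restore refused:", err)
```

The key never leaves the home server, so a compromise at the provider can destroy a backup but cannot silently substitute a modified one.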

So, storing your personal data on someone else’s server seems scary at first, but when you think it through and apply well-known practices to new patterns, you realize that the change is not as radical as you first thought. The water cooler conversations about not being able to control security on physical servers located outside your control may be correct, but the solution is to apply security at a different point in the system.

It is also worth pointing out that the notion of using services from other computers is hardly new. We all use DNS services from someone else’s servers every day. When we get over the initial shock of disruptive technologies (and leave the breaker’s pessimism behind), we can move on to the important discussions about cloud computing and web services, and embrace what we can now do with these technologies.

A New Dawn

In a later section of this chapter, I discuss supercrunching, a term used to describe massive analysis of large sets of data to derive meaning. I think supercrunching has a significant part to play in tomorrow’s systems. Today we are bound by the accepted limitations of local storage and local processing, often more than we think; if we can learn to attack our problems on the fantastically larger scale allowed by Internet-connected services, we can achieve new successes.

This principle can reap benefits in security monitoring, taking us beyond the question of how to preserve the security we had outside the cloud and turning the cloud into a source of innovation for security.

Event logs can provide an incredible amount of forensic information, allowing us to reconstruct an event. The question may be as simple as which user reset a specific account password or as complex as which system process read a user’s token. Today there are, of course, log analysis tools and even a whole category of security tools called Security Event Managers (SEMs), but these don’t even begin to approach the capabilities of supercrunching. Current tools run on standard servers with pretty much standard hardware performing relatively crude analysis.

A few short years ago I remember being proud of a system I helped build while working for a big financial services company in San Francisco; the system had a terabyte of data storage and some beefy Sun hardware. We thought we were cutting-edge, and at the time we were! But the power and storage that is now available to us all if we embrace the new connected computing model will let us store vast amounts of security monitoring data for analysis and use the vast amounts of processing power to perform complex analysis.

We will then be able to look for patterns and derive meaning from large data sets to predict security events rather than react to them. You read that correctly: we will be able to predict, from a certain event, the probability of a subsequent event taking place. This will allow us to provide context-sensitive security or make informed decisions about measures to head off trouble.
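At its simplest, predicting from one event the probability of another is an empirical conditional probability counted over historical logs. The Python sketch below assumes a hypothetical log format of (timestamp, host, event type) tuples and made-up event names. At supercrunching scale the same counting would be distributed across many machines and combined with richer models; this shows only the principle.

```python
from collections import defaultdict


def follow_probability(events, trigger, follow_up, window):
    """Estimate how often `follow_up` occurs on the same host within `window`
    seconds after `trigger`, i.e. an empirical P(follow_up | trigger).

    `events` is an iterable of (timestamp_seconds, host, event_type) tuples.
    """
    timelines = defaultdict(list)
    for ts, host, kind in sorted(events):
        timelines[host].append((ts, kind))

    triggers = hits = 0
    for timeline in timelines.values():
        for i, (ts, kind) in enumerate(timeline):
            if kind != trigger:
                continue
            triggers += 1
            # Scan forward in time for the follow-up inside the window.
            if any(k == follow_up and ts < t <= ts + window
                   for t, k in timeline[i + 1:]):
                hits += 1
    return hits / triggers if triggers else 0.0


events = [
    (0, "web01", "failed_login"), (30, "web01", "password_reset"),
    (100, "web02", "failed_login"), (5000, "web02", "password_reset"),
    (200, "db01", "failed_login"),
]
print(follow_probability(events, "failed_login", "password_reset", window=300))
# 1 of 3 failed logins was followed by a reset within 5 minutes -> 0.333...
```

Pooling logs from more sources simply means more tuples in `events`, which tightens the estimate.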

In a later section of this chapter (Social Networking: When People Start Communicating, Big Things Change), I discuss social networking. Social networking will have a profound impact on security when people start to cooperate efficiently. Sharing the logfiles I mentioned earlier with peers will enable larger data sets to be analyzed and more accurate predictions to be made.

It’s also worth noting that a cloud service is independent from any of the participating parties, and therefore can be a neutral and disinterested facilitator. For a long time, companies have been able to partition their network to allow limited access to trusted third parties, or provide a proxy facility accessible to both parties. This practice was not lost on the plethora of folks trying to compete for the lucrative and crucial identity management area. Identity management services such as OpenID and Windows Live ID operate in the cloud, allowing them to bind users together across domains.

Connecting People, Process, and Technology: The Potential for Business Process Management

Virtually every company will be going out and empowering their workers with a certain set of tools, and the big difference in how much value is received from that will be how much the company steps back and really thinks through their business processes, thinking through how their business can change, how their project management, their customer feedback, their planning cycles can be quite different than they ever were before.

New York Times columnist Thomas Friedman wrote an excellent book in 2005 called The World Is Flat (Farrar, Straus and Giroux) in which he explored the outsourcing revolution, from call centers in India and tax form processing in China to radiography analysis in Australia. I live Friedman’s flat world today; in fact, I am sitting on a plane to Hyderabad to visit part of my development team as I write this text. My current team is based in the United States (Redmond), Europe (London and Munich), India (Hyderabad), and China (Beijing). There’s a lot of media attention today on the rise of skilled labor in China and India providing goods and services to the Western world, but when you look back at history, the phenomenon is really nothing new. Friedman’s book reveals that there has been a shift in world economic power about every 500 years throughout history, and that shift has always been catalyzed by an increase in trading.

What has stimulated that increase in trading? It’s simple: connectivity and communication. From the Silk Road across China to the dark fiber heading out of Silicon Valley, the fundamental principle of connecting supply and demand and exchanging goods and services continues to flourish. What’s interesting (Friedman goes on to say) is that in today’s world workflow software has been a key “flattener,” meaning that the ability to route electronic data across the Internet has enabled and accelerated these particular global market shifts (in this case, in services). Workflow software, or more accurately Business Process Management (BPM) software (a combination of workflow design, orchestration, business rules engines, and business activity monitoring tools), will dramatically change both the ways we need to view the security of modern business software and how we approach information security management itself.

Diffuse Security in a Diffuse World

In a flat world, workforces are decentralized. Instead of being physically connected in offices or factories as in the industrial revolution, teams are combined onto projects, and in many cases individuals combined into teams, over the Internet.

Many security principles are based on the notion of a physical office or a physical or logical network. Some technologies, such as popular file-sharing protocols like Common Internet File System (CIFS) and the LAN-bound Address Resolution Protocol (ARP), take this local environment for granted. But those foundations become irrelevant as tasks, messages, and data travel a mesh of loosely coupled nodes.

The effect is similar to the effects of global commerce, which takes away the advantage of renting storefront property on your town’s busy Main Street or opening a bank office near a busy seaport or railway station. Tasks are routed by sophisticated business rules engines that determine whether a call center message should be routed to India or China, or whether the cheapest supplier for a particular good has the inventory in stock.

BPM software changes the very composition of supply chains, providing the ability to reconfigure a supply chain dynamically as business conditions change. Business transactions take place across many companies over timescales ranging from microseconds to many years. Business processes are commonly dehydrated and rehydrated as technologies evolve to automatically discover new services. The complexity and impact of this way of working will only increase.
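In workflow engines, “dehydrating” a long-running process instance generally means persisting its state to storage while it waits for the next message, and “rehydrating” means loading it back into memory to continue, possibly on a different server. A minimal Python sketch, assuming JSON serialization and a hypothetical `ProcessInstance` class; production BPM engines use their own persistence formats.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ProcessInstance:
    """One running instance of a long-lived business process (hypothetical model)."""
    process_id: str
    steps: list[str]
    current: int = 0
    data: dict[str, str] = field(default_factory=dict)

    def complete_step(self, result: str) -> None:
        """Record the result of the current step and move to the next one."""
        self.data[self.steps[self.current]] = result
        self.current += 1

    @property
    def finished(self) -> bool:
        return self.current >= len(self.steps)


def dehydrate(instance: ProcessInstance) -> str:
    """Serialize the instance so it can wait in storage instead of in memory."""
    return json.dumps(asdict(instance))


def rehydrate(blob: str) -> ProcessInstance:
    """Rebuild the in-memory instance when the next message for it arrives."""
    return ProcessInstance(**json.loads(blob))


order = ProcessInstance("PO-1001", ["approve", "ship", "invoice"])
order.complete_step("approved by supplier A")
stored = dehydrate(order)    # the process now sleeps for days, months, or years
resumed = rehydrate(stored)  # possibly on a different server
resumed.complete_step("shipped")
print(resumed.current, resumed.finished)  # 2 False
```

While dehydrated, the process state is simply business data at rest, held outside any running application, which is one reason these processes complicate the traditional security picture.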

For information security, of course, this brings significant new challenges. Over thousands of years, humans have associated security with physical location. They have climbed hills, built castles with big walls, and surrounded themselves with moats. They have worked in office buildings where there are physical controls on doors and filing cabinets, put their money in bank vaults (that seems so quaint nowadays), and locked their dossiers (including the ones on computers) in their offices or data centers. Internet security carried over this notion with firewalls and packet filters inspecting traffic as it crossed a common gateway.

Today, groups such as the Jericho Forum are championing the idea of “deperimeterization” as companies struggle to deal with evolving business models. A company today is rarely made up of full-time employees who sit in the same physical location and are bound by the same rules. Companies today are collaborations of employees, business partners, outsourcing companies, temporary contractors (sometimes called “perma-vendors”), and any number of other unique arrangements you can think of. They’re in Beijing, Bangalore, Manhattan, and the Philippines. They are bound by different laws, cultures, politics, and, of course, real and perceived security exigencies. The corporate firewall no longer necessarily protects the PC that’s logged into the corporate network and also, incidentally, playing World of Warcraft 24/7. Indeed, the notion of a corporate network itself is being eroded by applications that are forging their own application networks connected via web services and messaging systems through service-oriented architectures.

When a company’s intellectual property and business data flow across such diverse boundaries and through systems that are beyond its own security control, a whole new world of problems and complexity opens up. We can no longer have any degree of confidence that the security controls we apply in our own security program are effective for the agent in a remote Indian village. Paper contracts requiring vendors to install the latest anti-malware software are of little comfort after a botnet controller in Bulgaria has activated a key logger and altered the integrity of the system’s processing. Never has the phrase “security is only as good as the weakest link” been more apt, and systems architects are being forced to operate on the premise that the weakest link can be very weak indeed.

BPM As a Guide to Multisite Security

Despite these obvious concerns, I believe the same technologies and business techniques encompassed by the term BPM will play a critical role in managing information security in the future.

For example, if we examine today’s common information security process of vulnerability management, we can easily imagine a world where a scalable system defines the business process and parcels various parts of it off to the person or company that can do it faster, better, or more cheaply. If we break a typical vulnerability management process down, we can imagine it as a sequence of steps (viewed simplistically here for illustrative purposes, of course), such as the analysis of vulnerability research, the analysis of a company’s own data and systems to determine risk, and eventual management actions, such as remediation.

Already today, many companies outsource the vulnerability research to the likes of iDefense (now a VeriSign company) or Secunia, who provide a data feed via XML that can be used by corporate analysts. When security BPM software (and a global network to support it) emerges, companies will be able to outsource this step not just to a single company, in the hope that it has the necessary skills to provide the appropriate analysis, but to a global network of analysts. The BPM software will be able to route a task to an analyst who has a track record in a specific obscure technology (the best guy in the world at hacking system X or understanding language Y) or a company that can return an analysis within a specific time period. The analysts may be in a shack on a beach in the Maldives or in an office in London; it’s largely irrelevant, unless working hours and time zones are decision criteria.
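A business rule like “the best analyst in the world at system X who can answer within a day” reduces to a filter followed by a ranking. A Python sketch under hypothetical assumptions: the `Analyst` record, its accuracy scores, and the `route` function are illustrative only and do not describe any product or vendor named in the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Analyst:
    name: str
    location: str
    track_record: dict[str, float]  # technology -> share of past analyses rated accurate
    turnaround_hours: float          # typical time to return an analysis


def route(technology: str, deadline_hours: float,
          pool: list[Analyst]) -> Optional[Analyst]:
    """Send the task to the analyst with the best record in `technology`
    among those who can return it before the deadline; location is ignored."""
    eligible = [a for a in pool
                if technology in a.track_record and a.turnaround_hours <= deadline_hours]
    return max(eligible, key=lambda a: a.track_record[technology], default=None)


pool = [
    Analyst("beach-shack", "Maldives", {"system-x": 0.97}, turnaround_hours=12),
    Analyst("city-office", "London", {"system-x": 0.90, "language-y": 0.95}, turnaround_hours=4),
]
print(route("system-x", 24, pool).name)  # beach-shack
print(route("system-x", 6, pool).name)   # city-office
print(route("language-y", 1, pool))      # None
```

Location appears in the record but plays no part in the decision, mirroring the point that the shack in the Maldives and the London office compete on equal terms.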

Business rules engines may analyze asset management systems and decide to take an analysis query that comes in from San Francisco and route it to China so it can be processed overnight and produce an answer for the corporate analysts first thing in the morning.
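The overnight routing rule can be sketched as a check of local working hours at each site. The centers, their fixed UTC offsets (daylight saving is ignored), and the 09:00 to 17:00 working day below are hypothetical assumptions chosen for illustration.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical analysis centers with fixed UTC offsets (daylight saving ignored).
CENTERS = {
    "San Francisco": timezone(timedelta(hours=-8)),
    "London": timezone.utc,
    "Beijing": timezone(timedelta(hours=8)),
}
WORKING_HOURS = range(9, 17)  # local 09:00 to 16:59


def open_centers(now_utc: datetime) -> list[str]:
    """Centers where it is currently a working hour, in CENTERS order."""
    return [name for name, tz in CENTERS.items()
            if now_utc.astimezone(tz).hour in WORKING_HOURS]


def route_query(origin: str, now_utc: datetime) -> str:
    """Process locally during the origin's working day; otherwise hand the
    query to a center that is open now, so the answer is ready by morning."""
    open_now = open_centers(now_utc)
    if origin in open_now or not open_now:  # nobody open: queue at the origin
        return origin
    return open_now[0]


# 6 p.m. in San Francisco is 02:00 UTC the next day, 10:00 in Beijing.
evening_sf = datetime(2009, 1, 16, 2, 0, tzinfo=timezone.utc)
print(route_query("San Francisco", evening_sf))  # Beijing
```

A real rules engine would weigh cost, skills, and capacity as well; time zone is just the simplest decision criterion to show.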

This same fundamental change to the business process of security research will likely be extended to the intelligence feeds powering security technology, such as anti-virus engines, intrusion detection systems, and code review scanners. BPM software will be able to facilitate new business models, microchunking business processes to deliver the end solution faster, better, or more cheaply. This is potentially a major paradigm shift in many of the security technologies we have come to accept, decoupling the content from the delivery mechanism. In the future, thanks to BPM software, security analysts will be able to select the best anti-virus engine and the best analysis feed to fuel it, but the two will probably not come from the same vendor.
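Decoupling content from the delivery mechanism amounts to an interface boundary between an engine and the feeds that fuel it. A Python sketch, assuming a hypothetical `SignatureFeed` protocol and made-up signatures; plain substring matching stands in for real detection logic, which is far more sophisticated.

```python
from typing import Iterable, Protocol


class SignatureFeed(Protocol):
    """Anything that can supply detection content, from any vendor."""
    def signatures(self) -> Iterable[bytes]: ...


class ListFeed:
    """Hypothetical feed backed by a fixed list of byte patterns."""
    def __init__(self, patterns: Iterable[bytes]) -> None:
        self._patterns = list(patterns)

    def signatures(self) -> Iterable[bytes]:
        return iter(self._patterns)


class PatternEngine:
    """Hypothetical scanning engine: it applies whatever content it is given
    and has no built-in knowledge of where that content came from."""
    def __init__(self, *feeds: SignatureFeed) -> None:
        self._feeds = feeds

    def scan(self, sample: bytes) -> list[bytes]:
        """Return every signature from every feed that appears in `sample`."""
        return [sig for feed in self._feeds for sig in feed.signatures()
                if sig in sample]


vendor_a = ListFeed([b"evil.exe", b"worm-sig-123"])
vendor_b = ListFeed([b"keylogger"])
engine = PatternEngine(vendor_a, vendor_b)  # engine and feeds chosen independently
print(engine.scan(b"dropper fetches evil.exe and a keylogger"))
# [b'evil.exe', b'keylogger']
```

Because the engine depends only on the protocol, either side can be replaced by a different supplier without touching the other.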

When Nicholas Carr wrote Does IT Matter?,[81] he argued that technology needs to be realigned to support business instead of driving it. BPM is not rocket science (although it may include rocket science in its future), but it’s doing just that: realigning technology to support the business. In addition to offering radical improvements to information security by opening new markets, BPM can deliver even more powerful changes through its effects on the evolution of the science behind security. The Business Process Management Initiative (BPMI) has defined five tenets of effective BPM programs. These tenets were not written with information security in mind, but they read as a powerful catalyst for it. I’ll list each tenet along with what I see as its most important potential effects on security:

Understand and Document the Process

Security effect: Implement a structured and effective information security program

Understand Metrics and Objectives

Security effect: Understand success criteria and track their effectiveness

Model and Automate Process

Security effect: Improve efficiency and reduce cost

Understand Operations and Implement Controls

Security effect: Improve efficiency and reduce cost

Security effect: Fast and accurate compliance and audit data (visibility)

Optimize and Improve

Security effect: Do more with less

Security effect: Reduce cost

Put another way: if you understand and document your process, metrics, and objectives; model and automate your process; understand your operations and implement controls; and optimize and improve the process, you will implement a structured and effective information security program, understand the success criteria and track effectiveness, improve efficiency and reduce cost, produce fast and accurate compliance and audit data, and ultimately do more with less and reduce the cost of security. This is significant!

While the topic of BPM for information security could of course fill a whole book (when you consider business process modeling, orchestration, business rules design, and business activity monitoring), it would be remiss to leave the subject without touching upon the potential BPM technologies have for simulation. When you understand a process, including its activities, process flows, and business rules, you have a powerful blueprint describing how something should work. When you capture business activity data from real-world orchestrations of this process, you have powerful data about how it actually works.

Simulation offers us the ability to alter constraints and simulate results before we commit significant time and resources. Take for example a security incident response process in which simulation software predicts the results of a certain number or type of incidents. We could predict failure or success based on facts about processes, and then change the constraints of the actual business to obtain better results. Simulation can take place in real time, helping us avoid situations that a human would be unlikely to predict. I believe that business process simulation will emerge as a powerful technique to allow companies to do things better, faster, and more cheaply. Now think about the possibilities of BPM when connected to social networks, and read on!
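To make the incident response example concrete, here is a minimal Monte Carlo sketch in Python. It assumes a deliberately toy process: incidents arrive at random each day (Poisson-distributed), each analyst can close a fixed number per day, and anything unresolved carries over. The arrival rate, analyst capacity, and the names `poisson` and `simulate_backlog` are all hypothetical; a real BPM simulation would draw these constraints from captured business activity data rather than guesses.

```python
import math
import random
import statistics


def poisson(rng, lam):
    """Draw a Poisson-distributed count (Knuth's method; fine for small lam)."""
    threshold = math.exp(-lam)
    count, product = 0, 1.0
    while True:
        product *= rng.random()
        if product <= threshold:
            return count
        count += 1


def simulate_backlog(analysts, incidents_per_day, per_analyst_capacity=3,
                     days=30, runs=1000, seed=42):
    """Mean number of unresolved incidents after `days`, over many runs.

    Toy model (all parameters hypothetical): each day a random number of
    incidents arrives, each analyst closes up to per_analyst_capacity of
    them, and anything left over carries into the next day.
    """
    rng = random.Random(seed)
    capacity = analysts * per_analyst_capacity
    final_backlogs = []
    for _ in range(runs):
        backlog = 0
        for _ in range(days):
            backlog = max(0, backlog + poisson(rng, incidents_per_day) - capacity)
        final_backlogs.append(backlog)
    return statistics.mean(final_backlogs)


# Try different staffing constraints before changing the real process.
for team_size in (3, 4, 5):
    print(team_size, "analysts -> mean backlog after 30 days:",
          round(simulate_backlog(team_size, incidents_per_day=12), 1))
```

Changing one constraint (team size) and rerunning is the essence of the idea: the model predicts a growing backlog when capacity falls below the arrival rate, before anyone commits real staff or budget.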

Social Networking: When People Start Communicating, Big Things Change

Human beings, who are almost unique (among animals) in having the ability to learn from the experience of others, are also remarkable for their apparent disinclination to do so.

One night at sea, Horatio Hornblower, the fictional character from C. S. Forester’s series of novels, is woken by his first officer, who is alarmed to see a ship’s light about 20 miles away in their sea lane, refusing to move. Hornblower quickly goes up on deck and, via radio, orders the other vessel to turn 20 degrees to starboard at once. The operator refuses and indignantly tells Hornblower that it is he who should turn his ship 20 degrees to starboard at once. Incensed and enraged, Hornblower pulls rank and size on the other ship, stating that he is a captain and that he commands a large battleship. Quietly and calmly, the operator replies that his is in fact the bigger vessel, being a lighthouse on a cliff above treacherous rocks.

Each time I tell this story, it reminds me just how badly humans communicate. We are all guilty, yet communication is crucial to everything we do. When people communicate, they find common interests and form relationships.

Social networking is considered to be at the heart of Web 2.0 (a nebulous term that describes the next generation of Internet applications), yet it is really nothing new. Throughout history people have lived in tribes, clans, and communities that share a bond of race, religion, and social or economic values, and at every point, when social groups have been able to connect more easily, big things have happened. Trading increases, ideas spread, and new social, political, and economic models form.

I started a social network accidentally in 2001 called the Open Web Application Security Project (OWASP). We initially used an email distribution list and a static website to communicate and collaborate. In the early days, the project grew at a steady rate. But it was only late in 2003, when a wiki was introduced and everyone could easily collaborate, that things really took off. Today the work of OWASP is recommended by the Federal Trade Commission, the National Institute of Standards and Technology, and the hotly debated Payment Card Industry Data Security Standard, or PCI-DSS. I learned many valuable life lessons starting OWASP, but none bigger than the importance of the type of social networking technology you use.[82]

The State of the Art and the Potential in Social Networking

Today Facebook and MySpace are often held up as the leading edge of social networking software that brings together people who share a personal bond. People across the world can supposedly keep in touch better than they could before these sites existed. The sites are certainly prospering, with megalevels of subscribers (250 million+ users each) and their media-darling status. But in my observations, the vast majority of their users spend their time digitally “poking” their friends or sending them fish for their digital aquariums. To older kids like me, this is a bit like what rock ’n’ roll was to our parents’ parents (we just don’t get it), but I am ready to accept that I am just getting old.

Equally intriguing are social networks forming in virtual worlds such as Second Life. With its own virtual economy, including inflation-capped monetary systems, the ability to exchange real-world money for virtual money, and a thriving virtual real estate market, Second Life has attracted a lot of interest from big companies like IBM. Recently, researchers were able to teleport an avatar from one virtual world to another, and initiatives such as the OpenSocial API indicate that interoperability between social networks is evolving. Networks of networks are soon to emerge!

Social networking platforms like these really offer little for corporations today, let alone for security professionals, but this will change. And when it changes, the implications for information security could be significant.

If social networking today is about people-to-people networking, social networking tomorrow may well be about business-to-business. For several years, investment banks in New York, government departments in the U.S., and other industry groups have shared statistical data about security among themselves in small private social networks. The intelligence community and various police forces make sensitive data available to those who “need to know,” and ad hoc networks have formed all over the world to serve more specific purposes. But in the grand scheme of things, business-to-business social networking is very limited. Information security is rarely a competitive advantage (in fact, studies of stock price trends after companies have suffered serious data breaches indicate a surprisingly low correlation, so one could argue that it’s not a disadvantage at all), and most businesses recognize that the advantages in collaborating far outweigh the perceived or real disadvantages.

This still raises the question, “Why isn’t social networking more prevalent?” I would argue that it’s largely because the right software is lacking. We know that humans don’t communicate well online without help, and, citing cases such as the catalytic point when OWASP moved to a wiki, we can theorize that when the right type of social networking technology is developed and introduced, behavior similar to what we have seen throughout history will follow.

Social Networking for the Security Industry

What this may look like for the security industry is hard to predict, but if we extrapolate today’s successful social networking phenomenon to the security industry, some useful scenarios emerge.

Start with the principle of reliability behind eBay, for instance, a highly successful auction site connecting individual sellers to individual buyers across the globe. Its success is based partially on a reputation economy, where community feedback about sellers largely dictates the level of trust a buyer has in connecting.

Now imagine a business-to-business eBay-type site that deals in information security. It could list “security services wanted” ads, allowing companies to offer up service contracts and the criteria for a match, and allow service providers to bid for work based on public ratings and credentials earned from performing similar work for similar clients.
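One way such a site might rank bids is by a smoothed reputation score, so that a provider with two perfect ratings does not automatically outrank one with hundreds of mostly positive ratings. The sketch below uses a simple Bayesian average that pulls each provider’s observed rating toward a prior. The `Bid` fields, the prior values, the provider names, and the tie-break on price are all assumptions for illustration; this is not how eBay or any real marketplace computes trust.

```python
from dataclasses import dataclass


@dataclass
class Bid:
    provider: str   # hypothetical service provider name
    price: float    # quoted price for the contract
    positive: int   # positive feedback from past clients
    total: int      # total feedback received


def smoothed_reputation(bid, prior_mean=0.5, prior_weight=10):
    """Bayesian average: blend the observed positive rate with a prior.

    Providers with little history stay close to prior_mean; those with a
    long history converge toward their observed rate.
    """
    return (bid.positive + prior_mean * prior_weight) / (bid.total + prior_weight)


def rank_bids(bids, min_reputation=0.0):
    """Order eligible bids by reputation (highest first), then price (lowest first)."""
    eligible = [b for b in bids if smoothed_reputation(b) >= min_reputation]
    return sorted(eligible, key=lambda b: (-smoothed_reputation(b), b.price))


bids = [
    Bid("NewcomerSec", 9000, positive=2, total=2),
    Bid("VeteranAudit", 12000, positive=190, total=200),
    Bid("MidTierPen", 10000, positive=40, total=50),
]
for b in rank_bids(bids):
    print(f"{b.provider:14} rep={smoothed_reputation(b):.2f} price={b.price}")
```

The `prior_weight` parameter controls how much history a newcomer needs before its own record dominates; choosing it is a policy decision for the community, not a technical one.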

eBay-type systems not only act as conduits to connect buyers and sellers directly, but potentially can provide a central store of market information on which others can trade. Take, for example, security software. How much should you pay for a license for the next big security source code scanning technology to check your 12 million lines of code? In the future, you may be able to consult the global database and discover that other companies like you paid an average of X.
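Answering “what did companies like me pay?” is essentially a filtered aggregate over shared market data. A minimal sketch, assuming a hypothetical list of anonymized license records tagged with industry and codebase size; the records, prices, and the plus-or-minus 50% size band are invented for illustration, not real market figures.

```python
from statistics import mean

# Hypothetical anonymized records contributed to a shared market database.
records = [
    {"industry": "finance", "loc_millions": 10, "license_price": 200_000},
    {"industry": "finance", "loc_millions": 14, "license_price": 260_000},
    {"industry": "finance", "loc_millions": 2, "license_price": 60_000},
    {"industry": "retail", "loc_millions": 11, "license_price": 150_000},
]


def average_price_for_peers(records, industry, loc_millions, band=0.5):
    """Mean price paid by companies in the same industry whose codebase size
    is within +/- band (as a fraction) of ours; None if there are no peers."""
    low, high = loc_millions * (1 - band), loc_millions * (1 + band)
    peers = [r["license_price"] for r in records
             if r["industry"] == industry and low <= r["loc_millions"] <= high]
    return mean(peers) if peers else None


# "Companies like me": finance, roughly 12 million lines of code.
print(average_price_for_peers(records, "finance", 12))
```

Defining who counts as a peer (industry, size band, region) is where most of the real design effort would go; the arithmetic itself is trivial.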

In order for these types of social networks to succeed, they will likely have to operate on a “pay to play” model. It will be a challenge to seed them with enough data to make them enticing enough to play at the beginning, but I have no doubt the networks will emerge. Virus writers in Eastern Europe and China have long traded techniques via old-school bulletin boards, and we are starting to see the first stages of exploit exchanges and even an exploit auction site. Why shouldn’t the white hats take advantage of the same powerful networks?

Actually, large social networks of security geeks already exist. They typically use email distribution lists, a surprisingly old-school, yet effective, means of communication.

Before the 2008 Black Hat Conference, a U.S.-based “researcher” named Dan Kaminsky announced he was going to discuss details of a serious vulnerability in DNS. Within days, mailing lists such as “Daily Dave” were collaborating to speculate about what the exploit was and even share code. Imagine the effectiveness of a more professional social network where security engineers could share empirical data and results from tests and experiments.

Security in Numbers

Social networking isn’t just about connecting people into groups to trade. It’s also a medium for crowdsourcing, which harnesses the wisdom of crowds to solve problems and make predictions. This could also play an interesting role in the future security market.

To give you a simple example of crowdsourcing, one Friday at work someone on my team sent out a simple spreadsheet containing a quiz. It was late morning on the East Coast, and therefore late on Friday afternoon in Europe and late evening in our Hyderabad office. Most people on the team were in Redmond and so were sitting in traffic; most Brits were getting ready to drink beer and eat curry on Friday evening; and most Indians were out celebrating life. Here are the stats of what happened in the next 50 minutes:

Request started at 11:07 AM EST

Crowd size of 70+ across multiple time zones

Active listening crowd of probably less than 30 due to time zone differences

Active participants = 8

Total puzzles = 30

Initial unsolved puzzles = 21

Total responses = 35

Total time taken to complete all puzzles = 50 minutes

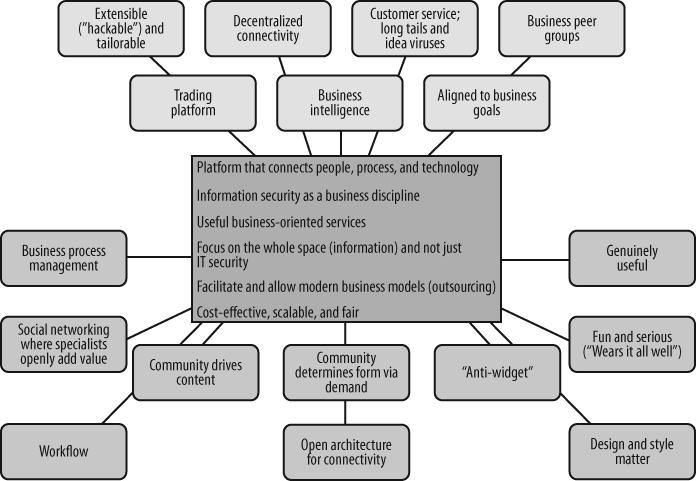

The brain is still the most powerful computer created (as of today), and when we build distributed networks of such powerful computers, we can expect great results. Networks can support evolving peer groups who wish to share noncompetitive information in a sheltered network. For social networking to be truly useful to the security industry, it will likely need to develop a few killer applications. My money is on the mass collection, sharing, and analysis of information and benchmarking. Participants can run benchmarks of service quality in relation to software cost, corporate vulnerabilities, and threat profiles. The potential scope is enormous, as shown through the MeMe map in Figure 9-1, taken from a security industry source.
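Benchmarking against a peer group often reduces to asking where your own number falls in the shared distribution. A minimal sketch, assuming peers share a single metric where lower is better (here a hypothetical count of open high-severity vulnerabilities per application); the peer figures are made up for illustration.

```python
from bisect import bisect_right


def percentile_rank(peer_values, ours):
    """Percentage of peers with a strictly worse (higher) value than ours,
    for a metric where lower is better."""
    ordered = sorted(peer_values)
    worse = len(ordered) - bisect_right(ordered, ours)
    return 100.0 * worse / len(ordered)


# Hypothetical shared benchmark: open high-severity vulns per application.
peers = [2, 4, 4, 5, 7, 8, 9, 12, 15, 20]
print(f"We do better than {percentile_rank(peers, 5):.0f}% of our peers")
```

Ties count as neither better nor worse here; other tie-handling conventions are equally defensible, and a shared network would need to agree on one.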

Information Security Economics: Supercrunching and the New Rules of the Grid

Curphey turned to his friend Jeff Cave in an investment bank server room in London in the late ’90s and said, “What’s the time?” Cave replied, “Quite simply the measurement of distance in space, dear chap.”

Scene 1: Imagine you are picnicking by a river and you notice someone in distress in the water. You jump in and pull the person out. The mayor is nearby and pins a medal on you. You return to your picnic. A few minutes later, you spy a second person in the water. You perform a second rescue and receive a second medal. A few minutes later, a third person, a third rescue, and a third medal, and so on through the day. By sunset, you are weighed down with medals and honors. You are a local hero! Of course, somewhere in the back of your mind there is a sneaking suspicion that you should have walked upriver to find out why people were falling in all day—but then again, that wouldn’t have earned you as many awards.

Scene 2: Imagine you are a software tester. You find a bug. Your manager is nearby and pins a “bug-finder” award on you. A few minutes later, you find a second bug, and so on. By the end of the day, you are weighed down with “bug-finder” awards and all your colleagues are congratulating you. You are a hero! Of course, the thought enters your mind that maybe you should help prevent those bugs from getting into the system—but you squash it. After all, bug prevention doesn’t win nearly as many awards as bug hunting.

Simply put: what you measure is what you get. B. F. Skinner told us 50 years ago that rats and people tend to perform those actions for which they are rewarded. It’s still true today. As soon as developers find out that a metric is being used to evaluate them, they strive mightily to improve their performance relative to that metric—even if their actions don’t actually help the project. If your testers find out that you value finding bugs, you will end up with a team of bug-finders. If prevention is not valued, prevention will not be practiced. The same is of course true of many other security disciplines, such as tracking incidents, vulnerabilities, and intrusions.

Metrics and measurement for information security have become a trendy topic in recent years, although hardly a new one. Peter Drucker’s famous dictum, “If you can’t measure it, you can’t manage it,” sounds like common sense and has been touted by good security managers for as long as I can remember.

Determining the Return on Investment (ROI) for security practices has become something of a Holy Grail, sought by security experts in an attempt to appeal to the managers of firms (all of whom have read Drucker or absorbed similar ideas through exposure to their peers). The problem with ROI is that security is in one respect like gold mining. You find an enormous variance in the success rates of different miners (one could finish a season as a millionaire while another is still living in a shack 20 years later), but their successes cannot be attributed to their tools. Metrics about shovels and sifters will only confuse you. In short, ROI measures have trouble computing the return on these kinds of high-risk investments. In my opinion, security ROI today is touted by shovel salesmen.
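The gold-mining problem can be illustrated with a quick simulation. A frequently cited formulation of return on security investment (ROSI) is (loss avoided minus cost) divided by cost; it is used here only as an illustration, not as the author’s method. The sketch assumes, purely hypothetically, a rare but large loss (a 5% annual chance of a $2 million breach) that a $50,000 control would fully prevent. The expected ROSI looks healthy, but for any single firm in a single year the realized return is either a total loss of the investment or a large windfall.

```python
import random


def rosi(loss_avoided, cost):
    """Return on security investment: (loss avoided - cost) / cost."""
    return (loss_avoided - cost) / cost


COST = 50_000            # hypothetical annual cost of the control
BREACH_LOSS = 2_000_000  # hypothetical loss if a breach occurs
P_BREACH = 0.05          # hypothetical annual breach probability

expected = rosi(P_BREACH * BREACH_LOSS, COST)
print(f"expected ROSI: {expected:.0%}")

rng = random.Random(7)
# Each simulated firm either would have been breached this year (the control
# saves the full loss) or would not (the control saves nothing).
outcomes = [rosi(BREACH_LOSS if rng.random() < P_BREACH else 0, COST)
            for _ in range(1000)]
print(f"realized ROSI range: {min(outcomes):.0%} to {max(outcomes):.0%}")
print(f"firms that 'lost' money: {sum(o < 0 for o in outcomes) / len(outcomes):.0%}")
```

The all-or-nothing prevention assumption exaggerates the effect, but the shape of the result is the point: a single average hides a distribution in which most investors appear to lose and a few appear to strike gold.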

I have high hopes for metrics, but I think the current mania fails to appreciate the subtleties we need to understand. I prefer the term Economics to Metrics or Measurement, and I think that Information Security Economics will emerge as a discipline that could have a profound impact on how we manage risk.

Information Security Economics is about understanding the factors and relationships that affect security at a micro and macro level, a topic that has tremendous depth and could have a tremendous impact. If we compare the best efforts happening in the security industry today against the complex supercrunching in financial or insurance markets, we may be somewhat disillusioned, yet the same advanced techniques and technology can probably be applied to the new security discipline with equally effective results.

I recently read a wonderful story in the book Super Crunchers: Why Thinking-by-Numbers Is the New Way to Be Smart by Ian Ayres (Bantam Press) about a wine economist called Orley Ashenfelter. Ashenfelter is a statistician at Princeton who loves wine but is perplexed by the pomp and circumstance around valuing and rating wine in much the same way I am perplexed by the pomp and circumstance surrounding risk management today. In the 1980s, wine critics dominated the market with predictions based on their own reputations, palate, and frankly very little more. Ashenfelter, in contrast, studied the Bordeaux region of France and developed a statistical model to predict the quality of wine.

His model was based on the average rainfall in the winter before the growing season (the rain that makes the grapes plump), the average temperature during the growing season (the warmth that makes the grapes ripe), and the rainfall at harvest time (which lowers quality), resulting in a simple formula:

$$\text{quality} = 12.145 + 0.00117 \times \text{winter rainfall} + 0.0614 \times \text{average growing-season temperature} - 0.00386 \times \text{harvest rainfall}$$
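The formula is simple enough to evaluate on harvest day. A minimal Python rendering follows, using the coefficients in the formula above, with harvest rainfall entering negatively. The units (millimetres of rain, degrees Celsius) and the two sample vintages are assumptions for illustration, not figures from a real Bordeaux harvest.

```python
def wine_quality(winter_rainfall, growing_season_temp, harvest_rainfall):
    """Ashenfelter-style quality index using the coefficients quoted above.

    Units are assumed to be millimetres of rain and degrees Celsius.
    More winter rain and a warmer growing season raise the score;
    rain at harvest lowers it.
    """
    return (12.145
            + 0.00117 * winter_rainfall
            + 0.0614 * growing_season_temp
            - 0.00386 * harvest_rainfall)


# Two hypothetical vintages that differ only in harvest rainfall.
dry_harvest = wine_quality(600, 17.5, 50)
wet_harvest = wine_quality(600, 17.5, 250)
print(f"dry harvest: {dry_harvest:.2f}, wet harvest: {wet_harvest:.2f}")
```

Nothing here requires tasting the wine: every input is known by the end of the harvest, which is exactly the timing advantage discussed below.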

Of course he was chastised and lampooned by the stuffy wine critics who dominated the industry, but after several years of producing valuable results, his methods are now widely accepted as providing important valuation criteria for wine. In fact, as it turned out, the same techniques were used by the French in the late 19th century during a wine census.

It’s clear that by understanding the factors that affect an outcome, we can build economic models. And the same principle (applying sound economic models based on science) can be applied to many information security areas.

For the field of security, the most salient aspect of Orley Ashenfelter’s work is the timing of his information. Most wine has to age for at least 18 months before you can taste it to ascertain its quality. This is of course a problem for both vineyards and investors. But with Ashenfelter’s formula, you can predict the wine’s quality on the day the grapes are harvested. This approach could be applied in security to answer simple questions such as, “If I train the software developers by X amount, how will that affect the security of the resulting system?” or “If we deploy this system in the country Hackistanovia with the following factors, what will be the resulting system characteristics?”

Of course, we can use this sort of security economics only if we have a suitably large set of prior data to crunch in the first place, as Ashenfelter did. The current phase, in which the field is adopting metrics and measurement, may be generating the data, and the upcoming supercrunching phase will be able to analyze it, but social networking will probably be the catalyst that prompts practitioners to share resources and create vast data warehouses from which we can analyze information and build models. Security economics may well provide a platform on which companies can demonstrate the cost-benefit ratios of various kinds of security and derive a competitive advantage from implementing them.
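Once such data exists, the first supercrunching step is often nothing more exotic than a least-squares regression. The sketch below fits a one-variable linear model to a small, entirely hypothetical dataset relating developer security-training hours to defects per thousand lines of code, then uses the fit to answer the “if I train the developers by X” question. Real models would need far more data, more variables, and proper validation; this only shows the mechanics.

```python
from statistics import mean

# Hypothetical shared data: training hours per developer vs. defects per KLOC.
training_hours = [0, 4, 8, 12, 16, 20]
defects_per_kloc = [5.1, 4.6, 4.3, 3.7, 3.5, 2.9]


def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


intercept, slope = fit_line(training_hours, defects_per_kloc)


def predict_defects(hours):
    """Predicted defects per KLOC for a given amount of training."""
    return intercept + slope * hours


print(f"each training hour changes defects/KLOC by {slope:+.3f}")
print(f"predicted at 10 hours: {predict_defects(10):.2f}")
```

A fitted slope describes correlation in the shared data, not proof that training caused the improvement; separating the two is where the statistical subtlety lies.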

Platforms of the Long-Tail Variety: Why the Future Will Be Different for Us All

A “platform” is a system that can be programmed and therefore customized by outside developers—users—and in that way, adapted to countless needs and niches that the platform’s original developers could not have possibly contemplated, much less had time to accommodate.

In October 2004, Chris Anderson, the editor in chief of the popular Wired Magazine, wrote an article about technology economics.[83] The article spawned a book called The Long Tail (Hyperion) that attempts to explain economic phenomena in the digital age and provide insight into opportunities for future product and service strategies.

The theory suggests that the distribution curve for products and services is shifting toward a skewed shape that concentrates a large portion of the demand in a “long tail”: many different products or service offerings that each enjoy a small consumer base. In many industries, there is a greater total demand for products and services the industries consider to be “niches” than for the products and services considered to be mainstays. Examples of businesses that exploit niche products and services previously thought to be uneconomical include iTunes, WordPress, YouTube, and Facebook, along with many other Internet-economy ventures.
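A quick way to see how niches can collectively outweigh the hits is to assume demand follows a Zipf-like power law, in which the k-th most popular item sells in proportion to 1/k^s. The catalogue size, head size, and exponent below are illustrative assumptions, not measurements of any real market; with them, the thousands of niche items together account for more demand than the top 20 best-sellers.

```python
def tail_share(n_items, head_size, exponent=1.0):
    """Fraction of total demand outside the top head_size items, assuming
    demand for the k-th ranked item is proportional to 1 / k**exponent."""
    demand = [1 / k ** exponent for k in range(1, n_items + 1)]
    total = sum(demand)
    return sum(demand[head_size:]) / total


print(f"{tail_share(10_000, 20):.0%} of demand lies outside the top 20 items")
```

The result is sensitive to the exponent: a steeper curve (a larger `exponent`) shrinks the tail, which is why the long-tail argument depends on the shape of demand in a given market.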

I fundamentally believe that information security is a long-tail market, and I offer three criteria to support this statement:

Every business has multiple processes.

Processes that are similar in name between businesses are actually highly customized (i.e., no two businesses are the same).

Many processes are unique to small clusters of users.

To understand the possible implications of the long-tail theory for the information security industry, we can look to other long-tail markets and three key forces that drive change.

Democratization of Tools for Production

A long time ago, I stopped reading articles in the popular technology press (especially the security press). I sense that these journals generally write articles with the goal of selling more advertising, while bloggers generally write articles so people will read them. That is a subtle but important difference. If I read an article in the press, chances are that it includes commentary from a so-called “industry insider.” Usually, these are people who tell the reporter what the reporter wants to hear in order to get their names in print, and they’re rarely the people I trust and want to hear from. I read blogs because I listen to individuals with honest opinions. This trend is, of course, prevalent throughout the new economy and will become more and more important to information security. A practitioner at the heart of the industry is better at reporting (more knowledgeable and more in tune) than an observer.

Much as blogging tools have democratized publishing and GarageBand has democratized music production, tools will democratize information security. In fact, blogging has already had a significant effect, allowing thousands of security professionals to offer opinions and data.

The most far-reaching change will be the evolution of tools into platforms. In software terms, a platform is a system that can be reprogrammed and therefore customized by outside developers and users for countless needs and niches that the platform’s original developers could not have possibly contemplated, much less had time to accommodate. (This is the point behind the Andreessen quote that started this section.) When Google offered an API to access its search and mapping capabilities, it drove the service to a new level of use; the same occurred when Facebook offered a plug-in facility for applications.
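In code, the difference between a tool and a platform is often just an extension point. The minimal Python sketch below uses a hypothetical `register_check` decorator and `run_all` function (not any real product’s API) to let outside developers add their own checks without touching the core that runs them. The password-length rule and its threshold of 12 are invented examples of a niche need.

```python
from typing import Callable, Dict, List

# Registry of checks contributed by outside developers.
CHECKS: Dict[str, Callable[[dict], List[str]]] = {}


def register_check(name: str):
    """Decorator that adds a check function to the platform's registry."""
    def decorator(func: Callable[[dict], List[str]]):
        CHECKS[name] = func
        return func
    return decorator


def run_all(config: dict) -> Dict[str, List[str]]:
    """Run every registered check against a system description."""
    return {name: check(config) for name, check in CHECKS.items()}


# A third party adds a check the core authors never anticipated.
# (The threshold of 12 is a hypothetical local policy.)
@register_check("password-length")
def check_password_length(config: dict) -> List[str]:
    minimum = config.get("password_min_length", 0)
    return [] if minimum >= 12 else [f"minimum password length {minimum} < 12"]


print(run_all({"password_min_length": 8}))
```

The core never changes when a new check is added; that inversion, where users extend the system rather than wait for the vendor, is what separates a platform from a product.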

As I’ll describe in the following section, a security platform will allow people to build the tools they want to solve the problems they are facing.

When we talk about platforms, we of course need to be careful. Any term that has the potential to sell more technology is hijacked by the media and its essence often becomes diluted. Quite a few tools are already advertised as security platforms, but few really are.

Democratization of Channels for Distribution

There’s no shortage of security information. Mailing lists, BBSs (yes, I am old), blogs, and community sites abound, along with professionally authored content. There’s also no shortage of technology, both open source and commercial. But in today’s economy, making information relevant is paramount, and is one of the key reasons for the success of Google, iTunes, and Amazon.com. Their rise has been attributed largely to their ability to aggregate massive amounts of data and filter it to make it relevant to the user. Filtering and ordering become especially critical in a world that blurs the distinction between what was traditionally called “professionally authored” and “amateur created.” This, in essence, is a better information distribution model.

Another characteristic that has democratized distribution in other long-tail markets is microchunking, a marketing strategy for delivering to each user exactly the product she wants—and no more. Microchunking also facilitates the use of new channels to reach customers.

The Long Tail uses the example of music, which for a couple of decades was delivered in CD form only. These days, delivery options also include online downloads, cell phone ringtones, and materials for remix.

The security field, like much of the rest of the software industry, already offers one type of flexibility: you can install monitoring tools on your own systems or outsource them. In tomorrow’s world, security users will also want to remix offerings. They may want the best scanning engine from vendor A combined with the best set of signatures from vendor B. In Boolean terminology, customers are looking for “And,” not “Or.”
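The “And, not Or” idea amounts to decoupling the detection content from the engine that applies it. A minimal sketch using Python’s `typing.Protocol` (Python 3.8+): `SignatureFeed`, `ScanEngine`, `VendorAEngine`, and `VendorBFeed` are hypothetical stand-ins rather than real products, and naive substring matching stands in for a real detection engine.

```python
from typing import Iterable, List, Protocol


class SignatureFeed(Protocol):
    def signatures(self) -> Iterable[str]: ...


class ScanEngine(Protocol):
    def scan(self, data: bytes, feed: SignatureFeed) -> List[str]: ...


class VendorAEngine:
    """Hypothetical engine: naive substring matching over whatever feed it is given."""
    def scan(self, data: bytes, feed: SignatureFeed) -> List[str]:
        return [sig for sig in feed.signatures() if sig.encode() in data]


class VendorBFeed:
    """Hypothetical signature feed sold separately by a different vendor."""
    def signatures(self) -> Iterable[str]:
        return ["dropper_stub", "evil_macro"]


def scan_with(engine: ScanEngine, feed: SignatureFeed, data: bytes) -> List[str]:
    # Any engine can be combined with any feed that satisfies the protocol.
    return engine.scan(data, feed)


print(scan_with(VendorAEngine(), VendorBFeed(), b"...evil_macro()..."))
```

Structural typing means neither vendor has to import the other’s code; each only has to honor the agreed interface, which is what lets a customer mix and match.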

The underlying consideration for security tools is that one size doesn’t fit all. Almost all corporate security people I talk to repeat this theme, sharing their own version of the 80/20 rule: 80% of the tool’s behavior meets your requirements, and you live with the 20% that doesn’t, but that 20% causes you 80% of your pain!

Let’s take threat-modeling tools for software. The key to mass appeal in the future will be to support all types of threat-modeling methodologies, including the users’ own twists and tweaks. Overlaying geodata on your own vulnerability data and processing the mix with someone else’s visualization tools might help you spot hotspots in a complex virtual world and see the wood for the trees. These types of overlays may help us make better risk decisions based on business performance data. In an industry with a notoriously high noise-to-signal ratio, we will likely see tools emerge that produce a higher-quality signal faster, cheaper, and more efficiently than ever before.
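A toy version of such an overlay joins two datasets on a shared key and weights one by the other. The sketch assumes hypothetical per-region open-vulnerability counts and per-region revenue figures (both invented), and flags as hotspots the regions where exposure weighted by business value meets a chosen threshold. Real geodata and visualization would come from dedicated tools; only the join-and-weight step is shown.

```python
# Hypothetical open-vulnerability counts by region (e.g., from a scanner).
vulns_by_region = {"EMEA": 40, "NA": 12, "APAC": 25, "LATAM": 3}
# Hypothetical annual revenue processed in each region, in $ millions.
revenue_by_region = {"EMEA": 300, "NA": 500, "APAC": 50, "LATAM": 80}


def hotspots(vulns, revenue, threshold):
    """Regions whose vulns * revenue score meets threshold, highest first."""
    scores = {region: count * revenue.get(region, 0)
              for region, count in vulns.items()}
    hot = [(region, score) for region, score in scores.items() if score >= threshold]
    return sorted(hot, key=lambda pair: pair[1], reverse=True)


print(hotspots(vulns_by_region, revenue_by_region, threshold=5000))
```

Note that the region with the second-fewest vulnerabilities still shows up as a hotspot once business value is overlaid, which is the kind of insight a single data source would miss.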

Connection of Supply and Demand

Perhaps the biggest changes will take place in how the next generation connects people, process, and technology. Search, ontology (information architecture), and communities will all play important roles.

The advice from The Long Tail is this: people will tell you what they like and don’t like, so don’t try to predict—just measure and respond. Recommendations, reviews, and rankings are key components of what is called the reputation economy. These filters help people find things and present them in a contextually useful way.

Few information security tools today attempt to provide contextually useful information. What we will likely see are tools that merge their particular contributions with reputation mechanisms. A code review tool that finds a potential vulnerability may match it to crowdsourced advice that is itself ranked by the crowd, and then provide contextual information such as “50% of people who found this vulnerability also had vulnerability X.” Ratings and rankings will help connect the mass supply of information with the demand.
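The “50% of people who found this vulnerability also had vulnerability X” statistic is a conditional co-occurrence rate over shared results. A minimal sketch, assuming each contributing organization reports the set of vulnerability classes it found; the function name `also_found` and the reports below are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Set


def also_found(reports: List[Set[str]], finding: str) -> Dict[str, float]:
    """Among organizations that reported `finding`, the fraction that also
    reported each other vulnerability class (most common first)."""
    matching = [r for r in reports if finding in r]
    if not matching:
        return {}
    counts = Counter(v for r in matching for v in r if v != finding)
    return {v: n / len(matching) for v, n in counts.most_common()}


# Hypothetical crowdsourced reports, one set of findings per organization.
reports = [
    {"sql-injection", "xss"},
    {"sql-injection", "weak-crypto"},
    {"xss", "csrf"},
    {"sql-injection", "xss", "csrf"},
]
for vuln, rate in also_found(reports, "sql-injection").items():
    print(f"{rate:.0%} of those who found sql-injection also had {vuln}")
```

With only a handful of reports these percentages are noise; the value appears only when many organizations contribute, which is the social-networking argument made earlier in the chapter.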

To summarize the three trends in the democratization of security tools: I believe that real platforms will emerge in the security field that connect people, processes, and technology. They will be driven by the democratization of tools for production, the democratization of channels for distribution, and the connection of supply and demand. No two businesses are the same, and a true security platform will adapt to solve problems its original designers could never have anticipated.

Conclusion

I was fortunate enough to have been educated by the Information Security Group of Royal Holloway, University of London. They are the best in the business, period. Readers of The Da Vinci Code will recognize the name as the school where Sophie Neveu, the French cryptographer in the book, was educated.

Several years before I worked for Microsoft, Professor Fred Piper at the Information Security Group approached me for an opinion on the day that he was to speak at the British Computer Society. He posed to me a straightforward question: “Would Microsoft have been so successful if security was prominent in Windows from day one?” At this point, I should refer you back to my Upton Sinclair quote earlier in this chapter, but the question does raise an interesting point about the role security will play in the overall landscape of information technology evolution.

I was once accused of trivializing the importance of security when I put up a slide at a conference with the text “Security is less important than performance, which is less important than functionality,” followed by a slide with the text “Operational security is a business support function; get over your ego and accept it.” As a security expert, of course, I would never diminish the importance of security; rather, I create better systems by understanding the pressures that other user requirements place on experts and how we have to fit our solutions into place.

I started the chapter by saying that anyone would be foolish to predict the future, but I hope you will agree with me that the next several years in security are an interesting time to think about, and an even more interesting time to influence and shape. I hope that when I look back on this text and my blog in years to come, I’ll cringe at their resemblance to the cocktail-mixing house robots from movies of the 1970s. I believe the right elements are coming together so that technology can create better technology.

Advances in technology have been used to both arm and disarm the planet, to empower and oppress populations, and to attack and defend the global community and all it will have become. The areas I’ve pulled together in this chapter (business process management, number crunching and statistical modeling, visualization, and long-tail technology) provide fertile ground for future security management systems that will consign today’s best efforts to the annals of history. At least I hope so, for I hate mediocrity with a passion and I think security management systems today are mediocre at best!

Acknowledgments

This chapter is dedicated to my mother, Margaret Curphey, who passed away after an epileptic fit in 2004 at her house in the south of France. When I was growing up (frankly, off the rails a lot of the time), she always encouraged me to think big and helped me understand that there is nothing in life you can’t achieve if you put your mind to it. She made many personal sacrifices that led me to eventually find my calling (albeit later in life than she would have hoped) in the field of information security. She always used to say that it’s all well and good thinking big, but you have to do something about it as well. I am on the case, dear. I also, of course, owe a debt of gratitude for the continued support and patience of my wife, Cara, and the young hackers, Jack, Hana, and Gabe.

[80] One could, of course, argue that the 2008 credit crunch should have been predicted. The lapse may be the fault of prejudices fed to the programmers, rather than the sophistication of the programs.

[81] Does IT Matter?, Nicholas Carr, Harvard Business School Press, 2004.

[82] I can’t take credit for starting the wiki (Jeff Williams can); on the contrary, I actually opposed it because I thought it was unstructured and would lead to a deterioration in content quality. I learned a good lesson.