16

A Test Environment for Studying the Human-Likeness of Robotic Eye Movements*

Stefan Kohlbecher, Klaus Bartl, and Erich Schneider

University of Munich Hospital, Munich, Germany

Jürgen Blume, Alexander Bannat, Stefan Sosnowski, Kolja Kühnlenz, and Gerhard Rigoll

Technische Universität München, Munich, Germany

Frank Wallhoff

Jade University of Applied Sciences, Oldenburg, Germany

Portions reprinted, with permission, from "Experimental Platform for Wizard-of-Oz Evaluations of Biomimetic Active Vision in Robots," 2009 IEEE International Conference on Robotics and Biomimetics (ROBIO), © 2009 IEEE.

Abstract

A novel paradigm for the evaluation of human-robot interaction is proposed, with special focus on the importance of natural eye and head movements in nonverbal human-machine communication scenarios. We present an experimental platform that will enable Wizard-of-Oz experiments in which a human experimenter (wizard) teleoperates a robotic head and eyes with his own head and eyes. Because the robot is animated based on the nonverbal behaviors of the human experimenter, the whole range of human eye movements can be presented without having to implement a complete gaze behavior model first. The experimenter watches and reacts to the video stream of the participant, who interacts directly with the robot. Results are presented that focus on those technical aspects of the experimental platform that enable real-time, human-like interaction. In particular, the tracking of ocular motor dynamics, its replication in a robotic active vision system, and the teleoperation delays involved are evaluated. This setup will help to answer the question of which aspects of human gaze and head movement behavior have to be implemented to achieve humanness in the active vision systems of robots.

16.1 Introduction

How important is it to resemble human performance in artificial eyes, and which aspects of ocular and neck motor functionality are key to people's attribution of humanness to robot heads? As a first step toward answering this question, we have implemented an experimental platform that facilitates evaluations of human-like robotic eye and head movements in human-machine interactions. It is centered around a pair of artificial eyes that exceed human performance in terms of acceleration and angular velocity. Owing to their modular design and small form factor, they have already been integrated into two different robots.

We also provide two distinct ways to control the robotic eye and head movements. A simple MATLAB (The MathWorks, Natick, MA, USA) interface allows easy implementation of experiments in controlled environments. However, we also wanted to be able to examine eye and head movements in the context of natural conversation. Rather than implementing a complicated conversation flow model involving speech recognition, speech synthesis, and semantic processing, we took a shortcut and put a real human "inside" the robot, who not only speaks and listens through the robot but also naturally controls the robot's eyes and head with his own eyes and head.

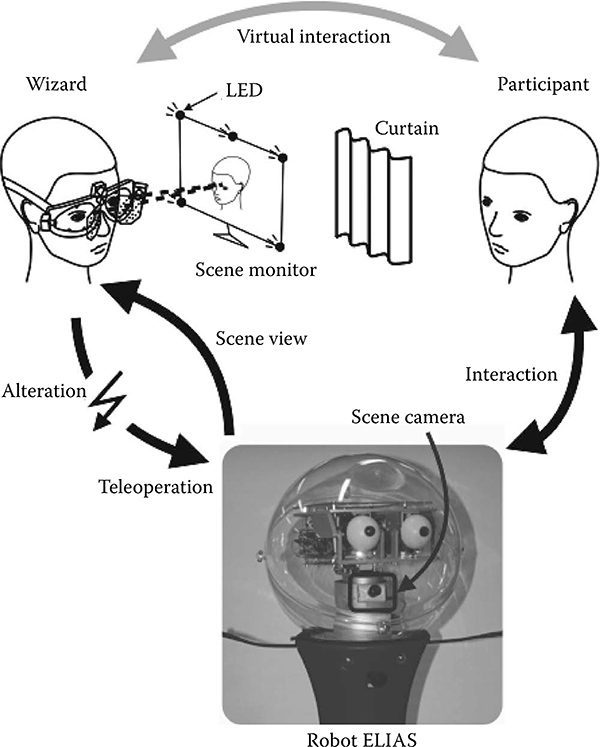

This novel Wizard-of-Oz scenario, in which the experimenter (wizard) teleoperates the head and eye movements of a robot (see Figure 16.1), ensures that the robot is equipped with natural, human-like eye movements from the beginning, without the need to implement a gaze model first.

FIGURE 16.1

Experimental platform for Wizard-of-Oz evaluations of gaze-based human-robot interaction. The hidden experimenter (wizard, left) teleoperates a robot (bottom) with his head and eyes. Equipped with a head-mounted eye tracker and an LED-based head tracker, the wizard sits in front of a calibrated computer screen that displays the video stream of the robot's scene camera. Thereby, the wizard, and hence the robot, can observe and react to the actions of the human participant (right). With this setup, natural gaze-based, nonverbal interaction can be established between the robot and a human participant. In future experiments, the teleoperation channel will be systematically altered (denoted by a flash) in order to examine the effects of artificial changes in ocular motor functionality on, for example, participant-robot interaction performance. © 2009 IEEE.

Direct communication between humans normally occurs through speech. But there are other, nonverbal channels, too, such as body language, gestures, facial expressions, and eye contact. Eye movements are a vital component of communication (Argyle, Cook, and Argyle 1976; Emery 2000; Langton, Watt, and Bruce 2000). An overview of the different roles of gaze in human communication can be found in Argyle et al. (1973).

The basis for verbal communication has already been implemented in robotic systems. Speech recognition and synthesis are an accepted means of human-machine interaction, used, for example, in call centers. For eye contact, however, no comparably sophisticated infrastructure exists yet.

In our work, we focus on the nonverbal part, in particular on eye and head movements. Because a number of robotic vision systems already exist in the literature, we give a short overview of the state of the art in this field.

16.1.1 State of the Art

The most obvious purpose of a vision system is to serve as a sensor system for visual perception. For this purpose, simple systems with fixed stereo cameras can be used. Humanoid robots in this category include HRP-2 (Kaneko et al. 2004), Johnnie (Cupec, Schmidt, and Lorch 2005), and Justin (Ott et al. n.d.). Robots can also use their eyes as a display for natural human-robot interaction. Eye movements are understood by any human, and their meaning does not have to be learned. The eyes must be movable but do not have to provide actual vision for the robot. Androids such as ROMAN (Berns and Hirth 2006), Geminoid HI-1 (Ishiguro and Nishio 2007), and Repliee R1/Q2 (Matsui et al. 2005; Minato et al. 2004) belong to this category.

Ideally, the vision system of the robot serves both purposes. This is demanding, because the eyes have to be fast and precise to minimize motion blur, and their motions have to be independent of each other to support vergence movements, even when the head rotates around the line of sight (torsional movement). For human-like foveated vision, peripheral cameras are often used, which are either moved together with the foveated cameras or fixed. Cog (Brooks et al. 1999) and Kismet (Breazeal 2003; Breazeal et al. 2000, 2001) from the Massachusetts Institute of Technology (MIT) both support a movable head with eyes that can rotate around two independent vertical axes but share only one coupled horizontal axis, which limits vergence movements (as long as the head is not rolled). Whereas Cog has two cameras per eye for both foveal and peripheral vision, Kismet has only movable foveal vision, with two head-fixed peripheral cameras. The SARCOS robots DB and CB at the Advanced Telecommunications Research Institute International (ATR) have biomimetic oculomotor control (Shibata and Schaal 2001; Shibata et al. 2001; Ude, Gaskett, and Cheng 2006). Domo (Edsinger-Gonzales and Weber 2004) uses only wide-angle cameras, whereas ARMAR has foveal/peripheral vision. Other robots with active vision are iCub (Beira et al. 2006) and WE-4RII (Miwa et al. 2004). Stand-alone systems have been developed as well (Samson et al. 2006). There have also been efforts to move from the foveal/peripheral camera pair to a more anthropomorphic eye (Samson et al. 2006). Apart from DB/CB, none of these robots implements two fully independent eyes; they all share at least one common rotation axis. With the exception of stand-alone vision systems (Samson et al. 2006), no system so far has considered ocular torsion.

16.1.2 Motivation

In human-robot interaction, gaze-based communication with human performance on the robot side has a great advantage: humans do not have to learn how to use an artificial interface such as a mouse or a keyboard; they can communicate immediately and naturally, just as with other humans. This is especially important for service robots that interact directly with people, some of whom might be elderly or disabled.

To our knowledge, the human likeness of robotic gaze behavior has not yet been examined with a gaze-based telepresence setup. In contrast to the few studies that have applied only simple and stereotyped eye movements, either in virtual reality environments (Garau et al. 2001; Lee, Badler, and Badler 2002) or with real robots (MacDorman et al. 2005; Sakamoto et al. 2007), our approach has the potential to open up a new field by providing the whole range of coordinated eye and head movements. Although in this work we present only the technical aspects of our experimental platform, our long-term aim is not a telepresence system per se but rather to use it as a tool to identify which aspects of gaze a social robot should implement. Restricting the implementation to the critical aspects and omitting unimportant functionalities will eventually simplify the final goal of an autonomous active vision system.

Instead of building a model for robotic interaction from scratch, as has been done, for example, in Breazeal (2003), Lee, Badler, and Badler (2002), and Sidner et al. (2004), we use a top-down approach, in which we start with a complete cognitive model of a robotic communication partner (controlled by a human). We can then restrict or alter its abilities bit by bit (see flash in Figure 16.1) to elucidate the key factors of gaze and head movement behavior in human-robot interactions.

16.1.3 Objectives

We provide two different modes for controlling the eye and head movements. For scripted experiments, three-dimensional coordinates can be fed directly to the robot. In addition, laser pointers can be attached to the robotic eyes, thus visualizing the lines of sight both in the real world and in the scene camera image. By moving the eyes manually with the mouse, target points can be selected and later played back by a script.
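
For the scripted mode, each three-dimensional target has to be converted into a pair of angles per eye. The following is a minimal sketch, assuming a head-fixed frame with x pointing forward, y to the left, and z up, pan applied before tilt, and hypothetical eye positions 65 mm apart; the actual robot kinematics and the MATLAB interface are not reproduced here.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def pan_tilt_to_target(eye: Vec3, target: Vec3) -> Tuple[float, float]:
    """Return (pan, tilt) in degrees that point an eye at `target`.

    Assumed head-fixed frame (hypothetical): x forward, y left, z up.
    Pan rotates about the vertical axis (positive = toward the left),
    tilt is the elevation above the horizontal plane (positive = up).
    """
    dx, dy, dz = (t - e for t, e in zip(target, eye))
    pan = math.degrees(math.atan2(dy, dx))
    tilt = math.degrees(math.atan2(dz, math.hypot(dx, dy)))
    return pan, tilt


# Hypothetical eye positions: 65 mm apart, symmetric about the head origin.
LEFT_EYE: Vec3 = (0.0, 0.0325, 0.0)
RIGHT_EYE: Vec3 = (0.0, -0.0325, 0.0)

if __name__ == "__main__":
    target = (1.0, 0.0, 0.2)  # 1 m ahead, 20 cm above eye level
    for name, eye in (("left", LEFT_EYE), ("right", RIGHT_EYE)):
        pan, tilt = pan_tilt_to_target(eye, target)
        print(f"{name}: pan={pan:+.2f} deg, tilt={tilt:+.2f} deg")
```

Because each eye receives its own angles, the two lines of sight converge on the target, which is why the handling of vergence discussed in Section 16.3.1 matters.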

In the experimental Wizard-of-Oz setup outlined in Figure 16.1, the ability to replicate the whole range of ocular motor functionality and to manipulate different aspects of it is mandatory for enabling examinations of gaze-based human-robot interactions. The platform needs to provide human-like interaction capabilities; in particular, the dynamics of the motion devices need to at least match human performance in terms of velocity, acceleration, and bandwidth. Most importantly, the critical delays introduced by the eye tracker and the motion devices, as well as by the teleoperation and video channels, need to be short enough to operate the platform in real time. To quantify these requirements: human eye movements are characterized by rotational speeds of up to 700 deg/s and accelerations of up to 25,000 deg/s² (Leigh and Zee 1999), with saccades, the fastest of all eye movements, occurring up to five times per second (Schneider et al. 2003). The cutoff frequency of the eye plant is in the range of 1 Hz (Glasauer 2007), and the latency of the fastest (vestibulo-ocular) reflex is on the order of 10 ms (Leigh and Zee 1999). In addition, the total delay in the virtual interaction loop of Figure 16.1 must not exceed typical human reaction times of about 150 ms. Results are presented that focus on those technical aspects that help to fulfill these requirements, thereby providing human-like interaction capabilities.

16.2 Methods

The experimental Wizard-of-Oz platform (see Figure 16.1) mainly consists of an eye and head tracker, a robot head, a pair of camera motion devices (robot eyes), and a teleoperation link that connects the motion tracker with the motion devices. In addition, a video channel feeds back a view of the participant in the experimental scene. In view of the required dynamics and real-time capabilities, the motion trackers and the robot eyes, as well as the bidirectional data transmission channels, were identified as the critical parts of this chain. We therefore present implementation details of our eye and head tracker and of our novel camera motion devices in the following sections.

16.2.1 Robots

We have integrated the artificial eyes into two different robotic platforms, each with its own specific field of use.

16.2.1.1 ELIAS

ELIAS (see Figure 16.1) is based on the commercially available robotic platform SCITOS G5 (MetraLabs GmbH, Ilmenau, Germany) and serves as a research platform for human-robot interaction. It consists of a mobile base with a differential drive, a laser scanner, and sonar sensors used for navigation. The robotic platform is certified for use in public environments. The built-in industrial PC hosts the robot-related services, such as moving the platform. Furthermore, the base is extended with a human-machine interface (HMI) featuring a robotic head, a touch screen, cameras, speakers, and microphones. The touch screen is used for direct haptic user input, the cameras can provide visual saliency information, and the microphones are used for speech recognition and audio-based saliency information. The modules for the interaction between the human and the robot run on an additional PC, which is powered by the on-board batteries (providing enough power for the robot to operate for approximately 8 hours). For communication between and within these computing nodes, two middleware frameworks are used, and the services can be orchestrated by a knowledge-based controller as in Wallhoff et al. (2010). In addition, the services can be advertised using the Bonjour protocol, thereby allowing other modules, such as the Wizard-of-Oz module, to request information (e.g., the current head position) or send control commands.

The robotic head itself has two degrees of freedom (DOF; pan/tilt) and was originally equipped with 2-DOF pivotable eyes, which, however, did not meet our requirements and were therefore replaced. We chose this particular robot head because, in contrast to a more human-like android platform, it has stylized facial features. This allowed us to avoid uncanny valley effects, which are likely to appear as soon as a robot looks almost human (Mori 1970). Furthermore, this choice narrowed the assessment of human likeness down to eye and head movement behavior, as opposed to the overall appearance.

16.2.1.2 EDDIE

EDDIE (see Figure 16.2) is a robot head that was developed at the Institute of Automatic Control Engineering to investigate the effect of nonverbal communication on human-robot interaction, with a focus on emotional expressions (Sosnowski, Kühnlenz, and Buss 2006). A distinctive feature of this robot head is that it includes not only human-like facial features but also animal-like features (Kühnlenz, Sosnowski, and Buss 2010). The ears can be tilted as well as folded and unfolded, and a crown with four feathers is included. To account for the uncanny valley effect, the head is designed to retain a reasonable degree of familiarity with a human face while remaining clearly machine-like. EDDIE has a total of 28 degrees of freedom: 23 in the face and 5 in the neck. The 5 degrees of freedom in the neck provide the redundancy needed to approximate the flexibility of the human neck when looking at given coordinates. The head uses an experimentally derived humanoid motion model, which resolves this redundancy and models the joint trajectories and dependencies. For the Wizard-of-Oz scenario, the head tracker data can be mapped directly to the joints of the neck, bypassing the motion model.

FIGURE 16.2

Robot EDDIE.

Both robots have their unique advantages. ELIAS is a mobile robot that can be operated safely even in large crowds. For this robot, we added the option of wireless control for scenarios in which a certain latency is tolerable.

EDDIE is currently better suited for static experiments, but it has more human-like features, and its neck is able to reproduce human head movements faithfully.

16.2.2 Camera Motion Device

The most critical parts of the proposed Wizard-of-Oz platform are the camera motion devices that are used as robotic eyes. On the basis of earlier work (Schneider et al. 2005, 2009; Villgrattner and Ulbrich 2008a, 2008b; Wagner, Günthner, and Ulbrich 2006), we have developed a new 2-DOF camera motion device that is considerably smaller and lighter (see Figure 16.3). At the center of its design is a parallel kinematics setup with two piezo actuators (Physik Instrumente, Karlsruhe, Germany) that rotate the camera platform around a cardanic gimbal joint (Mädler, Stuttgart, Germany) by means of two push rods with spherical joints (Conrad, Hirschau, Germany). The push rods are attached to linear slide units (THK, Tokyo, Japan), each of which is driven by its own piezo actuator. The position of each slide unit is measured by a combination of a magnetic position sensor (Sensitec, Lahnau, Germany) and a linear magnetic encoder scale with a resolution of 1 µm (Sensitec) that is glued to the slide unit. The microprocessor (PIC, Microchip, Chandler, AZ) on the printed circuit board (PCB) implements two separate proportional-integral-derivative (PID) controllers, one for each actuator-sensor unit. The controller outputs are fed into analog driver stages (Physik Instrumente), which deliver the appropriate signals to drive the piezo actuators. Desired angular orientations are transformed into linear slide unit positions by means of an inverse kinematics model, which has been presented before (Villgrattner and Ulbrich 2008b).
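
Each slide unit is closed by its own position loop. The sketch below shows a generic discrete PID controller of the kind described, assuming hypothetical gains, a 0.1 ms sample period, a normalized output limit, and a simple anti-windup rule; the firmware's actual parameters are not given in this chapter, and the toy plant (slide speed proportional to the command) merely stands in for the piezo actuator and driver stage.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Discrete PID controller for one actuator-sensor unit (hypothetical tuning)."""
    kp: float
    ki: float
    kd: float
    dt: float         # sample period in seconds (assumed)
    out_limit: float  # symmetric saturation of the command to the driver stage
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, setpoint: float, measured: float) -> float:
        error = setpoint - measured
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        candidate = self.integral + error * self.dt
        u = self.kp * error + self.ki * candidate + self.kd * derivative
        # Conditional integration as a simple anti-windup measure:
        # keep the new integral only while the output is not saturated.
        if abs(u) <= self.out_limit:
            self.integral = candidate
        return max(-self.out_limit, min(self.out_limit, u))


def simulate(setpoint_um: float, steps: int = 2000) -> float:
    """Toy closed loop in which the slide speed is proportional to the command.

    The plant gain of 1000 um/s per unit command is a hypothetical stand-in
    for the piezo actuator and analog driver stage.
    """
    pid = PID(kp=2.0, ki=20.0, kd=0.0, dt=1e-4, out_limit=1.0)
    position_um = 0.0
    for _ in range(steps):
        command = pid.update(setpoint_um, position_um)
        position_um += 1000.0 * command * pid.dt
    return position_um


if __name__ == "__main__":
    print(f"final slide position: {simulate(100.0):.3f} um")
```

One `PID` instance would run per actuator-sensor unit, with its setpoint supplied by the inverse kinematics.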

FIGURE 16.3

Camera motion device (robot eye) with the most important parts and dimensions annotated (top). The camera is shown in different orientations: straight ahead (top), up (bottom left), and to the right (bottom right). © 2009 IEEE.

In the Results section, we report on the dynamic properties of the new camera motion device, with special focus on the delay between the movement of the wizard's eye and its replication in the artificial robot eye.

16.2.3 Eye and Head Tracker

The wizard sits in front of a video monitor that displays the image of the scene camera attached to the robot's neck (see Figure 16.1). A head-mounted eye and head tracker with sampling rates of up to 500 Hz was implemented and used to calculate the intersection of the wizard's gaze with the video monitor plane (see Figures 16.1 and 16.4). Details of the eye-tracking part have been presented before (Boening et al. 2006; Schneider et al. 2009). For head tracking, the eye tracker was extended by a forward-facing, wide-angle scene camera operating in the near-infrared spectrum. Five infrared light-emitting diodes (LEDs) are mounted on the wizard's screen in a predefined geometrical arrangement. Because these LEDs are detected by the eye tracker's calibrated scene camera, the position of the goggles, and with it the head pose, can be calculated.
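
Once the head pose is known, the eyeball center and the gaze direction can be expressed in screen coordinates, and the point of gaze is the intersection of that ray with the monitor plane. A minimal sketch of this ray-plane intersection follows; it assumes the head-pose transformation has already been applied and uses hypothetical coordinates (screen in the plane z = 0, eye 0.6 m in front of it).

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def intersect_ray_plane(origin: Vec3, direction: Vec3,
                        plane_point: Vec3, plane_normal: Vec3) -> Optional[Vec3]:
    """Point where origin + s * direction (s >= 0) meets the plane, or None."""
    denom = dot(direction, plane_normal)
    if abs(denom) < 1e-12:
        return None  # gaze runs parallel to the screen
    diff = tuple(p - o for p, o in zip(plane_point, origin))
    s = dot(diff, plane_normal) / denom
    if s < 0:
        return None  # screen lies behind the eye
    return tuple(o + s * d for o, d in zip(origin, direction))


if __name__ == "__main__":
    # Hypothetical setup: screen is the plane z = 0, eye 0.6 m in front of it.
    eye = (0.0, 0.0, 0.6)
    gaze = (0.1, -0.05, -1.0)  # slightly to one side and down, toward the screen
    print(intersect_ray_plane(eye, gaze, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)))
```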

FIGURE 16.4

Eye and head tracker. Eye positions are measured by laterally attached FireWire (IEEE 1394) cameras. The eyes are illuminated with infrared light (850 nm) that is reflected by the translucent hot mirrors in front of the eyes. Another wide-angle camera above the forehead is used to determine head pose with respect to bright infrared LEDs on the video monitor (see Figure 16.1). A calibration laser with a diffraction grating is mounted beneath this camera. All cameras are synchronized to the same frame rate, which can be up to 500 Hz.

After a simple calibration procedure, which requires the subject to fixate five different calibration targets projected by a head-fixed laser, the eye tracker yields eye directions in degrees. The fixation points are generated by a diffraction grating at equidistant angles, regardless of the distance to the projection surface. Because the offset between eye and calibration laser would cause a parallax error at small distances, the laser dots are projected onto a distant wall. At a typical distance of 5 m, the error decreases to 0.4 degrees. Given the refixation accuracy of the human eye of about 1 degree (Eggert 2007), this is a tolerable value.
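
The size of the parallax error can be estimated from simple trigonometry: for a lateral offset d between eye and laser and a projection distance D, a straight-ahead dot is seen at an angle of about atan(d/D). The offset is not stated here, so the sketch back-computes the value implied by the quoted 0.4 degrees at 5 m (roughly 3.5 cm) and treats it as an assumption.

```python
import math


def parallax_error_deg(offset_m: float, distance_m: float) -> float:
    """Angle (deg) between eye and laser lines of sight for a straight-ahead dot."""
    return math.degrees(math.atan2(offset_m, distance_m))


def implied_offset_m(error_deg: float, distance_m: float) -> float:
    """Inverse of parallax_error_deg: the offset that yields `error_deg` at `distance_m`."""
    return distance_m * math.tan(math.radians(error_deg))


if __name__ == "__main__":
    d = implied_offset_m(0.4, 5.0)  # about 0.035 m, inferred rather than measured
    for distance in (0.6, 1.0, 2.0, 5.0, 10.0):
        print(f"{distance:5.1f} m -> {parallax_error_deg(d, distance):.2f} deg")
```

With that offset, projecting onto a surface at a hypothetical 0.6 m would produce an error of more than 3 degrees, which illustrates why a distant wall is used.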

In an additional step, the wizard's origin of gaze, that is, the center of each eyeball, has to be calculated with respect to the head coordinate system. This is done by having the wizard fixate two known points on the screen without moving his head. The result is two gaze vectors per eye, from which the eyeball center can be calculated in head coordinates. After complete calibration, the point of gaze in the screen plane can be determined together with the head pose.
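
Geometrically, each fixation defines a line through a known screen point along the measured gaze direction, and the eyeball center is where the two lines meet. With real measurements the lines will not intersect exactly. A common remedy, assumed here rather than taken from the authors' implementation, is to use the midpoint of the shortest segment connecting the two lines.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def closest_point_between_lines(p1: Vec3, d1: Vec3, p2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the common perpendicular of lines p1 + s*d1 and p2 + t*d2."""
    w0 = _sub(p1, p2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("gaze directions are parallel; use targets further apart")
    s = (b * e - c * d) / denom  # parameter of the closest point on line 1
    t = (a * e - b * d) / denom  # parameter of the closest point on line 2
    q1 = tuple(p + s * u for p, u in zip(p1, d1))
    q2 = tuple(p + t * u for p, u in zip(p2, d2))
    return tuple((x + y) / 2.0 for x, y in zip(q1, q2))


if __name__ == "__main__":
    # Hypothetical head-frame data: two screen targets and the gaze directions
    # toward them from an eyeball center at (0.03, 0, 0).
    t1, t2 = (0.2, 0.1, 0.6), (-0.2, -0.1, 0.6)
    g1, g2 = (0.17, 0.1, 0.6), (-0.23, -0.1, 0.6)
    print(closest_point_between_lines(t1, g1, t2, g2))
```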

During normal operation, two markers are shown on the wizard's screen as feedback: the calculated eye position and the intersection of the head direction vector with the screen plane. These are used to validate the calibration during the experiment. Furthermore, the wizard has better control over the robot, because he sees where the robot's head is pointing.

Depending on the robot's capabilities, two (ELIAS) or five (EDDIE) head angles plus two eye angles for each eye are sent to the robot.

In the Results section, we report the accuracy, resolution, and real-time-capable delays achieved with this eye tracker.

16.2.4 Teleoperation Channel

The robot head (see Figure 16.1) is equipped with a forward-facing, wide-angle camera mounted on the neck. The camera is tilted slightly upward to point at the face level of humans in front of the robot. The image of this scene camera is displayed on the wizard's video monitor.

To keep the latency low, we decided to use two different transmission channels: a USB connection for the eye and head position signals, and standard analog video equipment for the scene view, consisting of an analog video camera and a CRT video monitor, both operating at a 50 Hz PAL frame rate.

This setup is well suited for static experiments, but if the robot is mobile, a wireless link is needed. We therefore added the option of transmitting the scene view video as well as the motor commands over a WiFi connection, at the cost of a higher overall latency.

In the Results section, we report the measured latencies for each method. A short total delay, preferably only a fraction of human reaction times, is of particular importance for future experiments, because we intend to manipulate the teleoperation channel by introducing, for example, additional systematic delays (see flash in Figure 16.1) and to test their effects on interaction performance. Therefore, the baseline delay needs to be as close to zero as possible.

16.3 Discussion and Results

We have developed a novel experimental platform for Wizard-of-Oz evaluations of biomimetic properties of active vision systems for robots. The main question was how well this platform is suited for the envisioned types of experiments. Evidently, human eye movements have to be replicated with sufficient fidelity, and the combination of an eye tracker with camera motion devices has to fulfill strict real-time requirements. In this section we quantify how well the overall design meets these requirements.

16.3.1 Handling of Eye Vergence

One fundamental challenge was how to handle vergence eye movements, which occur when fixating points at different depths. Given the geometry of the robot, in particular the eye positions and the kinematics of the head, it is possible to direct both lines of sight at one specific point in three-dimensional space. This poses a problem in the Wizard-of-Oz scenario, where the wizard can only look at a flat surface (i.e., his screen). If the distance to the fixated object is sufficiently large (>6 m), the lines of sight are nearly parallel, but the nearer the fixation point, the more noticeable eye vergence becomes. For now, we have resolved this problem by preselecting the distance at which the wizard fixates. This is an adequate solution for dialog scenarios, in which the robot interacts with one or a few persons at roughly the same distance. If the scene is static and more control is needed, regions of interest on the wizard's monitor could be mapped to distinct distances. To reproduce eye vergence in its entirety, however, a binocular eye tracker combined with a stereoscopic monitor or head-mounted display would be needed.
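
How quickly vergence becomes noticeable can be estimated from the geometry. For two eyes separated by a baseline b fixating a point straight ahead at distance D, the total vergence angle is 2·atan(b/(2D)). The sketch assumes a hypothetical 65 mm baseline (a typical human interpupillary distance; the robots' eye spacing is not given here) and also shows how a preselected fixation distance could turn a single gaze direction from the wizard's screen into separate pan angles for the two eyes.

```python
import math
from typing import Tuple

BASELINE_M = 0.065  # hypothetical distance between the robot's eyes


def vergence_deg(distance_m: float, baseline_m: float = BASELINE_M) -> float:
    """Total vergence angle (deg) for symmetric fixation at `distance_m`."""
    return math.degrees(2.0 * math.atan(baseline_m / (2.0 * distance_m)))


def eye_pans_deg(cyclopean_pan_deg: float, distance_m: float,
                 baseline_m: float = BASELINE_M) -> Tuple[float, float]:
    """Per-eye pan (left, right) in degrees, positive = toward the left.

    The gaze direction is taken from the midpoint between the eyes, and the
    fixation point is placed at the preselected distance along it.
    """
    a = math.radians(cyclopean_pan_deg)
    # Top-down frame: x forward, y left, origin midway between the eyes.
    fx, fy = distance_m * math.cos(a), distance_m * math.sin(a)
    half = baseline_m / 2.0
    left = math.degrees(math.atan2(fy - half, fx))   # left eye sits at y = +half
    right = math.degrees(math.atan2(fy + half, fx))  # right eye sits at y = -half
    return left, right


if __name__ == "__main__":
    for d in (0.5, 1.0, 2.0, 6.0):
        print(f"{d:4.1f} m: vergence {vergence_deg(d):.2f} deg")
    print(eye_pans_deg(10.0, 1.5))
```

With this baseline, the vergence angle is about 0.6 degrees at 6 m but exceeds 3.5 degrees at 1 m, in line with the observation that vergence matters mainly for near fixations.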

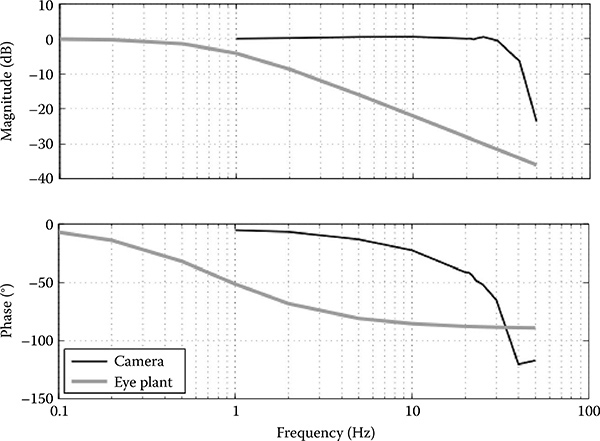

16.3.2 Camera Motion Device

The piezo actuators chosen for the new camera motion device proved particularly beneficial to the overall design. They are free of backlash, and each can generate a force of up to 2 N, which is sufficient for rotating the cameras with the required dynamics. We measured the frequency response in a range of 1 to 30 Hz at a deflection amplitude of 2 degrees. The results are plotted in Figure 16.5. The bandwidth is characterized by a corner frequency of about 20 Hz, at which the phase shift reaches 45 degrees. This is more than an order of magnitude above the corner frequency of the human eye mechanics, which is on the order of 1 Hz (Glasauer 2007).
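
For an idealized first-order low-pass system, the phase lag reaches exactly 45 degrees at the corner frequency, where the gain has dropped by about 3 dB. The sketch compares two such idealized systems with corner frequencies of 20 Hz and 1 Hz; modeling the device and the eye plant as first-order systems is a simplifying assumption, and the measured curves in Figure 16.5 need not be first order.

```python
import cmath
import math
from typing import Tuple


def first_order_response(f_hz: float, fc_hz: float) -> Tuple[float, float]:
    """Gain (dB) and phase (deg) of H(s) = 1 / (1 + s / (2*pi*fc)) at frequency f."""
    h = 1.0 / (1.0 + 1j * f_hz / fc_hz)  # s / (2*pi*fc) = j * f / fc
    return 20.0 * math.log10(abs(h)), math.degrees(cmath.phase(h))


if __name__ == "__main__":
    for f in (1.0, 5.0, 10.0, 20.0, 30.0):
        dev_db, dev_ph = first_order_response(f, 20.0)  # idealized device
        eye_db, eye_ph = first_order_response(f, 1.0)   # idealized eye plant
        print(f"{f:5.1f} Hz  device {dev_db:6.2f} dB {dev_ph:7.2f} deg"
              f"   eye {eye_db:6.2f} dB {eye_ph:7.2f} deg")
```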

FIGURE 16.5

Bode plot of the frequency response of the camera motion device (black). For comparison, the frequency response of a human eye plant is also plotted (gray). © 2009 IEEE.

Compared with our previous designs, we were able to significantly reduce the dimensions of the camera motion device. This was mainly due to replacing the optical encoders of our previous design (Villgrattner and Ulbrich 2008b) with new magnetic sensors, which were not only more accurate but also allowed a more compact arrangement of all parts (see Figure 16.3). We also gave up the previous symmetrical design in favor of an asymmetrical one, which helped to further reduce the device footprint to 30 mm × 37 mm. This reduction in size also reduced the weight to 72 g, and both improved the dynamic properties in terms of achievable velocity and acceleration. The maximal angular velocity measured around the primary position was 3,400 deg/s in both the horizontal and vertical directions. Accelerations were on the order of 170,000 deg/s². These values exceed those of the human ocular motor system by factors of roughly five (velocity) and seven (acceleration).
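
The comparison above can be reproduced by dividing the measured device values by the human reference values given in Section 16.1.3. The sketch restates only numbers from this chapter; the corner-frequency ratio uses the approximate 20 Hz and 1 Hz values.

```python
from typing import Dict

# Human reference values and measured device values, as stated in this chapter.
HUMAN = {"velocity_deg_s": 700.0, "acceleration_deg_s2": 25_000.0, "corner_hz": 1.0}
DEVICE = {"velocity_deg_s": 3_400.0, "acceleration_deg_s2": 170_000.0, "corner_hz": 20.0}


def performance_ratios(device: Dict[str, float], human: Dict[str, float]) -> Dict[str, float]:
    """Ratio of device capability to human capability for each shared quantity."""
    return {key: device[key] / human[key] for key in human if key in device}


if __name__ == "__main__":
    for key, ratio in performance_ratios(DEVICE, HUMAN).items():
        print(f"{key:22s} x{ratio:.1f}")
```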

In the mechanical configuration of Figure 16.3, the sensor resolution of 1 µm yields an angular resolution of 0.01 degrees in the primary position. The workspace is characterized by maximal deflections of 40 and 35 degrees in the horizontal and vertical directions, respectively.
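
For small deflections, the angular resolution is roughly the linear sensor resolution divided by the effective lever arm between the push-rod attachment and the gimbal center. That lever arm is not given in the text; the sketch back-computes about 5.7 mm from the quoted 1 µm and 0.01 degrees, so this value is an inference rather than a published dimension. Because the parallel kinematics is nonlinear, the resolution away from the primary position will differ.

```python
import math


def angular_resolution_deg(linear_res_m: float, lever_arm_m: float) -> float:
    """Rotation (deg) caused by one encoder step acting on the given lever arm."""
    return math.degrees(math.atan2(linear_res_m, lever_arm_m))


def implied_lever_arm_m(linear_res_m: float, angular_res_deg: float) -> float:
    """Lever arm that turns `linear_res_m` into `angular_res_deg` (primary position)."""
    return linear_res_m / math.tan(math.radians(angular_res_deg))


if __name__ == "__main__":
    arm = implied_lever_arm_m(1e-6, 0.01)  # inferred, not a published dimension
    print(f"implied lever arm: {arm * 1e3:.2f} mm")
    print(f"resolution: {angular_resolution_deg(1e-6, arm):.4f} deg")
```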

16.3.3 Eye and Head Tracker

Eye trackers are usually characterized by their accuracy as well as their spatial and temporal resolution. The accuracy of an eye tracker cannot exceed the accuracy with which the human eye is positioned on visual targets. Human refixations usually have a standard deviation on the order of 1 degree (Eggert 2007). With our eye and head tracker, we measured differences of 0.6 ± 0.27 degrees between visual targets on the screen and the corresponding intersections of subjects' gaze vectors with the screen plane (Schneider et al. 2005). The spatial resolution, given as the standard deviation of eye position values during a fixation, is 0.02 degrees. The temporal resolution is determined by the high-speed FireWire cameras, which can be operated at frame rates of up to 600 Hz. Such a sampling rate allows the measurement of saccades, the fastest human eye movements.

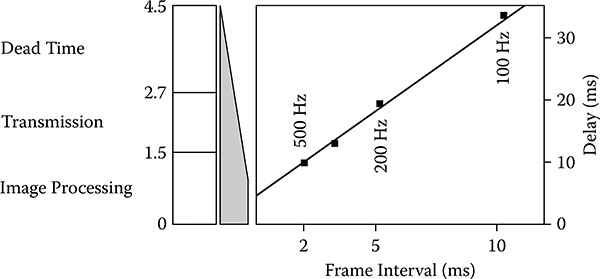

We used a USB-to-RS232 converter to connect the eye tracker with the camera motion device (see Figure 16.1). We then operated the eye tracker at frame rates of 100, 200, and 500 Hz and measured the delay between an artificial eye moved in front of an eye-tracker camera and the corresponding movement replicated by the robot eye. As expected, the total delay depended linearly on the time interval between two frames, that is, on the inverse frame rate (see Figure 16.6). The delay at 500 Hz was 10 ms, which is on the order of the fastest ocular motor reflex latency in humans (Leigh and Zee 1999). We also measured the delays introduced by image processing, signal transmission, and dead times, which amounted to 1.5, 1.2, and 1.8 ms, respectively (see Figure 16.6).

FIGURE 16.6

Delays between eye and corresponding camera movements at different eye-tracker frame rates. The system delay depends linearly on the frame interval of the camera. At 500 and 100 Hz, the delays are 10 and 32 ms, respectively. The intercept at 4.5 ms is the sum of the delays caused by image processing, signal transmission, and mechanical dead times. © 2009 IEEE.
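
Because the delay is linear in the frame interval, two operating points suffice to recover the model. Using 10 ms at 500 Hz and 32 ms at 100 Hz from Figure 16.6, a straight-line fit reproduces the 4.5 ms intercept (1.5 + 1.2 + 1.8 ms) and yields a slope of 2.75 frame intervals. The 200 Hz value printed below is a prediction from this two-point model, not a measurement.

```python
from typing import Sequence, Tuple


def fit_delay_model(points: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Fit delay_ms = intercept + slope * frame_interval_ms through two (rate_hz, delay_ms) points."""
    (r1, d1), (r2, d2) = points
    t1, t2 = 1000.0 / r1, 1000.0 / r2  # frame intervals in ms
    slope = (d2 - d1) / (t2 - t1)
    intercept = d1 - slope * t1
    return intercept, slope


if __name__ == "__main__":
    intercept, slope = fit_delay_model([(500.0, 10.0), (100.0, 32.0)])
    print(f"intercept {intercept:.2f} ms, slope {slope:.2f} frame intervals")
    print(f"predicted at 200 Hz: {intercept + slope * 1000.0 / 200.0:.2f} ms")
```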

In addition to the eye-tracker delay of 10 ms, the delay of the analog video transmission for the scene view (see Figure 16.1) needs to be considered. We measured this delay using a flashing LED in front of ELIAS's scene camera and a photodiode on the wizard's video monitor. This delay amounted to 20 ms. The total delay in the virtual interaction loop was therefore 30 ms, just a fraction of the typical human reaction time of 150 ms.

For the wireless link, we measured a latency of between 50 and 80 ms, depending on the synchronization of camera and monitor, which led to an overall mean latency of 75 ms.
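
The loop-delay budget is a simple sum compared against the 150 ms reaction-time bound from Section 16.1.3. The sketch uses only values reported in this section; interpreting the remaining headroom as room for artificially added delays (see flash in Figure 16.1) is an illustration, not a stated design limit.

```python
from typing import Dict

REACTION_TIME_MS = 150.0  # typical human reaction time (Section 16.1.3)


def loop_delay_ms(components: Dict[str, float]) -> float:
    """Total delay of the virtual interaction loop as the sum of its parts."""
    return sum(components.values())


def headroom_ms(total_ms: float) -> float:
    """Delay that could still be added before reaching the reaction-time bound."""
    return REACTION_TIME_MS - total_ms


WIRED = {"eye tracker at 500 Hz": 10.0, "analog video link": 20.0}
WIRELESS_TOTAL_MS = 75.0  # reported overall mean for the WiFi option

if __name__ == "__main__":
    total = loop_delay_ms(WIRED)
    print(f"wired: {total:.0f} ms, {total / REACTION_TIME_MS:.0%} of reaction time, "
          f"{headroom_ms(total):.0f} ms headroom")
    print(f"wireless: {WIRELESS_TOTAL_MS:.0f} ms, "
          f"{headroom_ms(WIRELESS_TOTAL_MS):.0f} ms headroom")
```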

16.4 Conclusion and Future Work

In this work we proposed a novel paradigm for the evaluation of human-robot interaction, with special focus on the importance of natural eye and head movements in human-machine communication scenarios. We also presented a unique experimental platform that will enable Wizard-of-Oz experiments in which a human experimenter (wizard) teleoperates a robotic head and eyes with his own head and eyes. The platform mainly consists of an eye and head tracker and motion devices for the robot's head and eyes, as well as a feedback video transmission link for the view of the experimental scene. We demonstrated that the eye tracker can measure the whole dynamic range of eye movements and that these movements can be replicated by the novel robotic eyes with more than sufficient fidelity. The camera motion device combines small dimensions with dynamic properties that exceed the requirements posed by the human ocular motor system. In addition, by reducing all critical delays to a minimum, we met the strict real-time requirements of an experimental platform that is designed to enable seamless human-machine interactions.

Preliminary evaluation with human subjects showed a high acceptance of the system. In future experiments, we intend to answer the question of how important it is to resemble human performance in artificial eyes and which aspects of ocular and neck motor functionality are key to people's attribution of humanness to robotic active vision systems. Possible experiments might examine the effects on human-robot interaction performance when the teleoperation channel is manipulated, for example, with artificial delays.

We also plan to let the wizard control even more aspects of the robot, for example, the eyelids (which map directly to the wizard's eyelids) or mouth movements, which would require an additional camera that tracks the wizard's mouth area.

Acknowledgments

This work was supported in part within the DFG excellence initiative research cluster Cognition for Technical Systems (CoTeSys), by the Deutsche Forschungsgemeinschaft (DFG: GL 342/1-3), by funds from the German Federal Ministry of Education and Research under grant code 01 EO 9091, and by the Institute for Advanced Study (IAS), Technische Universität München; see also http://www.tum-ias.de.

References

Argyle, M., Cook, M., and Argyle, M. 1976. Gaze and Mutual Gaze. Cambridge: Cambridge University Press.

Argyle, M., Ingham, R., Alkema, F., and McCallin, M. 1973. The different functions of gaze. Semiotica, 7: 10-32.

Beira, R., Lopes, M., Praça, M., Santos-Victor, J., Bernardino, A., Metta, G., Becchi, F., and Saltaren, R. 2006. Design of the Robot-Cub (iCub) head. Paper read at the IEEE International Conference on Robotics and Automation, Orlando, FL, May 15-19, 2006.

Berns, K., and Hirth, J. 2006. Control of facial expressions of the humanoid robot head ROMAN. Paper read at the IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, October 9-15, 2006.

Boening, G., Bartl, K., Dera, T., Bardins, S., Schneider, E., and Brandt, T. 2006. Mobile eye tracking as a basis for real-time control of a gaze driven head-mounted video camera. In Eye Tracking Research and Applications. New York: ACM.

Breazeal, C. 2003. Toward sociable robots. Robotics and Autonomous Systems, 42(3-4): 167-175.

Breazeal, C., Edsinger, A., Fitzpatrick, P., and Scassellati, B. 2001. Active vision for sociable robots. IEEE Transactions on Systems, Man and Cybernetics, Part A, 31(5): 443-453.

Breazeal, C., Edsinger, A., Fitzpatrick, P., Scassellati, B., and Varchavskaia, P. 2000. Social constraints on animate vision. IEEE Intelligent Systems, 15: 32-37.

Brooks, R., Breazeal, C., Marjanovic, M., Scassellati, B., and Williamson, M. 1999. The Cog Project: Building a humanoid robot. Lecture Notes in Computer Science, 52-87.

Cupec, R., Schmidt, G., and Lorch, O. 2005. Vision-guided walking in a structured indoor scenario. Automatika-Zagreb, 46(1/2): 49.

Edsinger-Gonzales, A., and Weber, J. 2004. Domo: A force sensing humanoid robot for manipulation research. Paper read at the 4th IEEE/RAS International Conference on Humanoid Robots, Santa Monica, CA, November 10-12, 2004.

Eggert, T. 2007. Eye movement recordings: Methods. Developments in Ophthalmology, 40: 15-34.

Emery, N. 2000. The eyes have it: The neuroethology, function and evolution of social gaze. Neuroscience and Biobehavioral Reviews, 24(6): 581-604.

Garau, M., Slater, M., Bee, S., and Sasse, M. 2001. The impact of eye gaze on communication using humanoid avatars. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. New York: ACM.

Glasauer, S. 2007. Current models of the ocular motor system. Developments in Ophthalmology, 40: 158-174.

Ishiguro, H., and Nishio, S. 2007. Building artificial humans to understand humans. Journal of Artificial Organs, 10(3): 133-142.

Kaneko, K., Kanehiro, F., Kajita, S., Hirukawa, H., Kawasaki, T., Hirata, M., Akachi, K., and Isozumi, T. 2004. Humanoid robot HRP-2. Paper read at the IEEE International Conference on Robotics and Automation, New Orleans, LA, April 26-May 1, 2004.

Kühnlenz, K., Sosnowski, S., and Buss, M. 2010. Impact of animal-like features on emotion expression of robot head EDDIE. Advanced Robotics, 24(8-9): 1239-1255.

Langton, S., Watt, R., and Bruce, V. 2000. Do the eyes have it? Cues to the direction of social attention. Trends in Cognitive Sciences, 4(2): 50-59.

Lee, S., Badler, J., and Badler, N. 2002. Eyes alive. ACM Transactions on Graphics, 21(3): 637-644.

Leigh, R. J., and Zee, D. S. 1999. The Neurology of Eye Movements. New York: Oxford University Press.

MacDorman, K., Minato, T., Shimada, M., Itakura, S., Cowley, S., and Ishiguro, H. 2005. Assessing human likeness by eye contact in an android testbed. Paper read at the XXVII Annual Meeting of the Cognitive Science Society, Stresa, Italy, July 21-23, 2005.

Matsui, D., Minato, T., MacDorman, K., and Ishiguro, H. 2005. Generating natural motion in an android by mapping human motion. Paper read at the IEEE/RSJ International Conference on Intelligent Robots and Systems.

Minato, T., Shimada, M., Ishiguro, H., and Itakura, S. 2004. Development of an android robot for studying human-robot interaction. Lecture Notes in Computer Science, 424-434.

Miwa, H., Itoh, K., Matsumoto, M., Zecca, M., Takanobu, H., Roccella, S., Carrozza, M., Dario, P., and Takanishi, A. 2004. Effective emotional expressions with emotion expression humanoid robot WE-4RII. Paper read at the International Conference on Intelligent Robots and Systems (IROS), Sendai, Japan, October 2, 2004.

Mori, M. 1970. The uncanny valley. Energy, 7(4): 33-35.

Ott, C., Eiberger, O., Friedl, W., Bäuml, B., Hillenbrand, U., Borst, C., Albu-Schäffer, A., Brunner, B., Hirschmüller, H., Kielhöfer, S., Konietschke, R., Suppa, M., Wimböck, T., Zacharias, F., and Hirzinger, G. n.d. A humanoid two-arm system for dexterous manipulation. Head and Neck 2: 43.

Sakamoto, D., Kanda, T., Ono, T., Ishiguro, H., and Hagita, N. 2007. Android as a telecommunication medium with a human-like presence. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction. New York: ACM.

Samson, E., Laurendeau, D., Parizeau, M., Comtois, S., Allan, J., and Gosselin, C. 2006. The agile stereo pair for active vision. Machine Vision and Applications, 17(1): 32-50.

Schneider, E., Bartl, K., Bardins, S., Dera, T., Boening, G., and Brandt, T. 2005. Eye movement driven head-mounted camera: It looks where the eyes look. Paper read at the IEEE International Conference on Systems, Man and Cybernetics.

Schneider, E., Glasauer, S., Brandt, T., and Dieterich, M. 2003. Nonlinear nystagmus processing causes torsional VOR nonlinearity. Annals of the New York Academy of Sciences, 1004: 500-505.

Schneider, E., Villgrattner, T., Vockeroth, J., Bartl, K., Kohlbecher, S., Bardins, S., Ulbrich, H., and Brandt, T. 2009. EyeSeeCam: An eye movement-driven head camera for the examination of natural visual exploration. Annals of the New York Academy of Sciences, 1164: 461-467.

Shibata, T., and Schaal, S. 2001. Biomimetic gaze stabilization based on feedback-error-learning with nonparametric regression networks. Neural Networks, 14(2): 201-216.

Shibata, T., Vijayakumar, S., Conradt, J., and Schaal, S. 2001. Biomimetic oculomotor control. Journal of Computer Vision, 1(4): 333-356.

Sidner, C., Kidd, C., Lee, C., and Lesh, N. 2004. Where to look: A study of human-robot engagement. In Proceedings of the 9th International Conference on Intelligent User Interfaces. New York: ACM.

Sosnowski, S., Kühnlenz, K., and Buss, M. 2006. EDDIE: An emotion-display with dynamic intuitive expressions. Paper read at the 15th IEEE International Symposium on Robot and Human Interactive Communication.

Ude, A., Gaskett, C., and Cheng, G. 2006. Foveated vision systems with two cameras per eye. Paper read at the IEEE International Conference on Robotics and Automation.

Villgrattner, T., and Ulbrich, H. 2008a. Control of a piezo-actuated pan/tilt camera motion device. Paper read at the 11th International Conference on New Actuators, Bremen, Germany.

Villgrattner, T., and Ulbrich, H. 2008b. Piezo-driven two-degree-of-freedom camera orientation system. Paper presented at the IEEE International Conference on Industrial Technology.

Wagner, P., Günthner, W., and Ulbrich, H. 2006. Design and implementation of a parallel three-degree-of-freedom camera motion device. Paper read at the Joint Conference of the 37th International Symposium on Robotics (ISR 2006) and the German Conference on Robotics.

Wallhoff, F., Blume, J., Bannat, A., Rösel, W., Lenz, C., and Knoll, A. 2010. A skill-based approach towards hybrid assembly. Advanced Engineering Informatics, 24(3): 329-339.