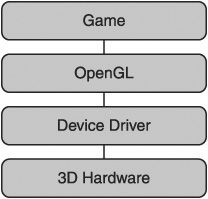

Every computer has special graphics hardware that controls what you see on the screen. OpenGL tells this hardware what to do. Figure 2.1 shows how OpenGL is used by a computer game, or any other piece of software, to issue commands to the graphics hardware using the device drivers supplied by the manufacturer.

The Open Graphics Library is one of the oldest and most popular graphics libraries available to game creators. It was developed in 1992 by Silicon Graphics Inc. (SGI) but only really got interesting for game players when it was used for GLQuake in 1997. The GameCube, Wii, PlayStation 3, and the iPhone all base their graphics libraries on OpenGL.

The alternative to OpenGL is Microsoft’s DirectX. DirectX encompasses a larger number of libraries, including sound and input. It is more accurate to compare OpenGL to the Direct3D library in DirectX. The latest version of DirectX is DirectX 11. The Xbox 360 uses a version of DirectX 9.0. DirectX 10 and 11 will only work on computers with the Windows Vista or Windows 7 operating systems installed.

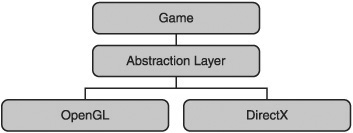

The feature sets of Direct3D and OpenGL are pretty much equivalent. Modern game engines—for example, Unreal—usually build in a layer of abstraction that allows the user to switch between OpenGL and Direct3D as they desire, as shown in Figure 2.2. This abstraction is required when producing multiplatform games that will be released for both the PlayStation 3 and Xbox 360. The Xbox 360 must use Direct3D calls, whereas the PS3 uses OpenGL calls.

DirectX and OpenGL are both excellent graphics libraries. DirectX works on Microsoft platforms, whereas OpenGL is available on a much wider range of platforms. DirectX tends to be updated more often, which means it has access to the very latest graphics features. OpenGL moves more slowly, and the latest graphics features can only be accessed through a rather unfriendly extension mechanism. OpenGL’s slow movement can be quite advantageous: the interface rarely changes, so code written years ago will still work with the latest OpenGL versions. Each new version of DirectX changes the interface, which breaks compatibility and requires older code to be updated.

DirectX can be used with C# using the Managed DirectX libraries; unfortunately, these libraries are no longer officially supported or updated. Managed DirectX has been superseded by Microsoft’s XNA game creation library. XNA uses DirectX, but it is a much higher level framework for quickly creating and prototyping games. SlimDX is an independent C# API for DirectX, which is a good alternative to Managed DirectX.

OpenGL is a C-style graphics library. C style means there are no classes or objects; instead, OpenGL is a large collection of functions. Internally, OpenGL is a state machine. Function calls alter the internal state of OpenGL, which then affects how it behaves and how it renders polygons to the screen. The fact that it is a state machine can cause some issues; a state accidentally set in one area of your code can cause a bug in some far-flung region. For this reason, it is very important to carefully note which states are being changed.
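To make the state-machine pitfall concrete, here is a minimal sketch in plain Python rather than real OpenGL. The `TinyStateMachine` class and its methods are hypothetical stand-ins invented for illustration; they only mimic the idea of a "current color" state that persists between calls.

```python
class TinyStateMachine:
    """Hypothetical stand-in for a state-machine graphics API (not real OpenGL)."""

    def __init__(self):
        self.color = (1.0, 1.0, 1.0)  # current draw color, white by default
        self.drawn = []               # record of (shape, color) draw calls

    def set_color(self, r, g, b):
        # Changes global state; every later draw call is affected.
        self.color = (r, g, b)

    def draw(self, shape):
        self.drawn.append((shape, self.color))


def draw_warning_icon(gl):
    gl.set_color(1.0, 0.0, 0.0)
    gl.draw("warning icon")
    # Bug: the color state is never restored to white.


def draw_scene():
    gl = TinyStateMachine()
    draw_warning_icon(gl)
    gl.draw("player")  # intended to be white, but inherits the red state
    return gl.drawn


print(draw_scene())
```

The player ends up red even though the code that draws the player never mentions a color. One common defensive habit is to set every state a draw routine depends on at the start of that routine.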

The basic unit in OpenGL is the vertex. A vertex is, at its simplest, a point in space. Extra information can be attached to a vertex (how it maps to a texture, or whether it has a certain weight or color), but the most important piece of information is its position.

Games spend a lot of their time sending OpenGL vertices or telling OpenGL to move vertices in certain ways. The game may first tell OpenGL that all the vertices it’s going to send are to be made into triangles. In this case, for every three vertices OpenGL receives, it will attach them together with lines to create a polygon, and it may then fill in the surface with a texture or color.
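The grouping of a vertex stream into triangles can be sketched in a few lines of plain Python. This is only an illustration of the idea; `assemble_triangles` is a made-up helper, and dropping leftover vertices that do not complete a triangle is an assumption of this sketch.

```python
def assemble_triangles(vertices):
    """Group a flat vertex stream into triangles, three vertices at a time."""
    usable = len(vertices) - len(vertices) % 3  # ignore an incomplete trailing triangle
    return [tuple(vertices[i:i + 3]) for i in range(0, usable, 3)]


# Seven vertices: two complete triangles plus one leftover vertex.
stream = [(0, 0, 0), (1, 0, 0), (0, 1, 0),
          (1, 0, 0), (1, 1, 0), (0, 1, 0),
          (5, 5, 5)]
triangles = assemble_triangles(stream)
print(len(triangles))
```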

Modern graphics hardware is very good at processing vast numbers of vertices, making polygons from them and rendering them to the screen. This process of going from vertex to screen is called the pipeline. The pipeline is responsible for positioning and lighting the vertices, as well as the projection transformation. The projection transformation takes the 3D data and transforms it to 2D data so that it can be displayed on your screen. A projection transformation may sound a little complicated, but the world’s painters and artists have been doing these transformations for centuries, painting and drawing the world around them on to a flat canvas.
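A perspective projection can be sketched very simply: divide a point's x and y by its distance from the viewer, so that distant things appear smaller. The function below is a simplified illustration in plain Python, not how OpenGL implements it (OpenGL uses 4x4 matrices and its own axis conventions). The name `project_point`, the `focal_length` parameter, and the choice of positive z pointing away from the camera are all assumptions of this sketch.

```python
def project_point(x, y, z, focal_length=1.0):
    """Perspective-project a camera-space point onto the plane z = focal_length."""
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    scale = focal_length / z  # farther points get a smaller scale
    return (x * scale, y * scale)


near = project_point(2.0, 1.0, 2.0)
far = project_point(2.0, 1.0, 4.0)
print(near, far)
```

The same point pushed twice as far away lands half as far from the center of the image, which is the effect painters capture with a vanishing point.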

Even modern 2D games are made using vertices. The 2D sprites are made up of two triangles to form a square. This is often referred to as a quad. The quad is given a texture and it becomes a sprite. Two-dimensional games use a special projection transformation that ignores all the 3D data in the vertices, as it’s not required for a 2D game.
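As a rough sketch of the quad idea, the plain-Python snippet below builds a rectangle from two triangles that share two corners, and shows a 2D projection that simply drops the z value. The helper names `make_quad` and `project_2d` are hypothetical and chosen only for this illustration.

```python
def make_quad(x, y, width, height):
    """Return two triangles that together cover an axis-aligned rectangle."""
    bottom_left = (x, y, 0.0)
    bottom_right = (x + width, y, 0.0)
    top_left = (x, y + height, 0.0)
    top_right = (x + width, y + height, 0.0)
    return [(bottom_left, bottom_right, top_left),
            (bottom_right, top_right, top_left)]


def project_2d(vertex):
    """A 2D projection for sprites: keep x and y, ignore z."""
    return vertex[:2]


quad = make_quad(0.0, 0.0, 2.0, 1.0)
corners = {vertex for triangle in quad for vertex in triangle}
print(len(quad), len(corners))
```

Six vertices are sent in total, but only four of them are distinct; the two triangles share the diagonal of the rectangle.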

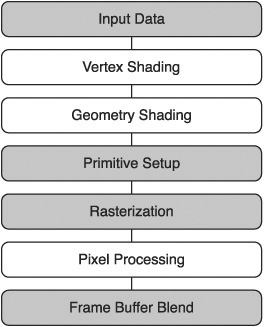

The pipeline flow is shown in Figure 2.3. This pipeline is known as the fixed function pipeline. This means once the programmer has given the input to the pipeline, all the functionality is set and cannot be modified—for instance, adding a blue tint to the screen could easily be done at the pixel processing stage, but in the fixed function pipeline the programmer has no direct control over this stage.

There are six main stages in the fixed function pipeline. Nearly all these stages can be applied in parallel. Each vertex can pass through a particular stage at the same time, provided the hardware supports it. This is what makes graphics cards so much faster than CPU processing.

Input stage. This input is given in the form of vertices, and all the vertices’ properties including position, color, and texture data.

Transform and lighting. The vertices are transformed according to the current view. This involves changing the vertex positions from 3D space, also known as world space, to 2D space, also known as screen space. Some vertices can be discarded at this stage if they are not going to affect the final view. Lighting calculations may also be performed for each vertex.

Primitive setup. This is the process of creating the polygons from the vertex information. This involves connecting the vertices together according to the OpenGL states. Games most commonly use triangles or triangle strips as their primitives.

Rasterization. This is the process of converting the polygons to pixels (also called fragments).

Pixel processing. Pixel processing may also be referred to as fragment processing. The pixels are tested to determine if they will be drawn to the frame buffer at this stage.

Frame buffer. The frame buffer is a piece of memory that represents what will be displayed to the screen for this particular frame. Blend settings decide how the pixels will be blended with what has already been drawn.
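The blending step in the final stage can be illustrated with a small plain-Python sketch. It uses the common "source alpha" rule, where the new color is weighted by alpha and the existing color by one minus alpha. The tiny 2x2 frame buffer and the helper names `blend` and `write_pixel` are assumptions made for this example; real frame buffers live in graphics memory and other blend settings are possible.

```python
def blend(src, dst, alpha):
    """Blend src over dst: src * alpha + dst * (1 - alpha), per color channel."""
    return tuple(s * alpha + d * (1.0 - alpha) for s, d in zip(src, dst))


def write_pixel(frame_buffer, x, y, color, alpha):
    """Blend a new pixel with whatever has already been drawn at (x, y)."""
    frame_buffer[y][x] = blend(color, frame_buffer[y][x], alpha)


# A 2x2 frame buffer that starts out entirely blue.
frame_buffer = [[(0.0, 0.0, 1.0) for _ in range(2)] for _ in range(2)]

# Draw a half-transparent red pixel in the top-left corner.
write_pixel(frame_buffer, 0, 0, (1.0, 0.0, 0.0), 0.5)
print(frame_buffer[0][0])
```

With an alpha of 1.0 the new pixel completely replaces the old one; with 0.0 the frame buffer is left unchanged.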

In the last few years, the pipeline has become programmable. Programs can be uploaded to the graphics card and replace the default stages of the fixed function pipeline. You have probably heard of shaders; this is the name for the small programs that overwrite certain parts of the pipeline.

Figure 2.4 shows the stages of the programmable pipeline. Transform and lighting has been removed; instead, there are vertex and geometry shaders. The pixel processing stage has also become programmable, as a pixel shader. The vertex shader will be run on every vertex that passes through the pipeline. Vertex shaders cannot add vertices, but the properties such as position, color, and texture coordinates can be modified.
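Conceptually, a vertex shader is a small function that is applied to every vertex independently. The plain-Python sketch below imitates that idea; it is not GLSL, and the vertex layout (a position tuple paired with a color tuple) and the function names are assumptions made for illustration.

```python
def run_vertex_stage(vertices, vertex_shader):
    """Apply the shader to each vertex; one vertex in, exactly one vertex out."""
    return [vertex_shader(vertex) for vertex in vertices]


def shift_right_and_tint_blue(vertex):
    """Example 'shader': move the vertex along x and force full blue."""
    (x, y, z), (r, g, b) = vertex
    return ((x + 1.0, y, z), (r, g, 1.0))


triangle = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0)),
            ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0)),
            ((0.0, 1.0, 0.0), (0.0, 0.0, 0.0))]
shaded = run_vertex_stage(triangle, shift_right_and_tint_blue)
print(shaded[0])
```

Because each call sees only one vertex, the function can change properties but has no way to add or remove vertices, and the hardware is free to run many calls at once.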

Geometry shaders were added a little more recently than pixel and vertex shaders. Geometry shaders take a whole primitive (such as a strip of lines, points, or triangles) as input, and the geometry shader is run on every primitive. Geometry shaders can create new vertex information, points, lines, and even primitives. Geometry shaders are used to create point sprites and dynamic tessellation, among other effects. Point sprites are a way of quickly rendering a lot of sprites; it’s a technique that is often used for particle systems to create effects like fire and smoke. Dynamic tessellation is a way to add more polygons to a piece of geometry. This can be used to increase the smoothness of low polygon game models or add more detail as the camera zooms in on the model.
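The point sprite idea can be sketched as a function that takes a single point and emits a small quad around it, which is the kind of "one primitive in, new geometry out" work a geometry shader does. This is a plain-Python illustration, not shader code; `expand_point_to_sprite` is a hypothetical name, and the sketch assumes the sprite lies flat in the xy plane rather than turning to face the camera.

```python
def expand_point_to_sprite(center, size):
    """Turn one point into two triangles forming a square of the given size."""
    cx, cy, cz = center
    half = size / 2.0
    bottom_left = (cx - half, cy - half, cz)
    bottom_right = (cx + half, cy - half, cz)
    top_left = (cx - half, cy + half, cz)
    top_right = (cx + half, cy + half, cz)
    return [(bottom_left, bottom_right, top_left),
            (bottom_right, top_right, top_left)]


# A tiny "particle system": three points become six triangles.
particles = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (0.0, 4.0, 0.0)]
sprite_triangles = [tri for p in particles for tri in expand_point_to_sprite(p, 2.0)]
print(len(sprite_triangles))
```

The game only has to send one vertex per particle, and the extra vertices are generated later in the pipeline.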

Pixel shaders are applied to each pixel that is sent to the frame buffer. Pixel shaders are used to create bump mapping effects, specular highlights, and per-pixel lighting. Bump mapping is a method of giving a surface some extra height information. Bump maps usually consist of an image where each pixel represents a normal vector that describes how the surface is to be perturbed. The pixel shader can use a bump map to give a model a more interesting texture that appears to have some depth. Per-pixel lighting is a replacement for OpenGL’s default lighting equations, which work out the light for each vertex and then give that vertex an appropriate color. Per-pixel lighting uses a more accurate lighting model in which each pixel is lit independently. Specular highlights are areas on the model that are very shiny and reflect a lot of light.
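As an illustration of per-pixel lighting, the plain-Python sketch below computes a simple diffuse term for one pixel: the more directly the surface normal faces the light, the brighter the pixel. This is one basic lighting model chosen for the example, not OpenGL's own equations, and the function names are hypothetical. Both vectors are assumed to be non-zero.

```python
import math


def normalize(v):
    """Scale a non-zero vector to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def diffuse_intensity(normal, to_light):
    """Brightness from 0.0 to 1.0 based on the angle between normal and light."""
    n = normalize(normal)
    light_dir = normalize(to_light)
    return max(0.0, sum(a * b for a, b in zip(n, light_dir)))


def shade_pixel(base_color, normal, to_light):
    """Scale the pixel's base color by how much light reaches it."""
    k = diffuse_intensity(normal, to_light)
    return tuple(c * k for c in base_color)


facing = diffuse_intensity((0, 0, 1), (0, 0, 5))    # light straight ahead
behind = diffuse_intensity((0, 0, 1), (0, 0, -1))   # light behind the surface
print(facing, behind)
```

In a bump mapping effect, the `normal` passed in for each pixel would come from the bump map image instead of from the flat polygon, which is what makes a flat surface look bumpy.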

This is a great time to learn OpenGL. The current version of OpenGL is very friendly to learners of the library. There are many easy-to-use functions and a lot of the graphics work can be done for you. Think of the current version of OpenGL as a bike with training wheels that help you learn and can be removed when no longer needed. The next version of OpenGL is more like a performance motorbike: everything superfluous has been removed and all that remains is raw power. This is excellent for an experienced OpenGL programmer but a little intimidating for the beginner.

The versions of OpenGL available depend on the graphics cards drivers installed on your system. Nearly every computer supports OpenGL 2.1 and a reasonably recent graphics card will support OpenGL 3.x.

OpenGL ES is a modern version of OpenGL for embedded systems. It is quite similar to recent versions of OpenGL but with a more restricted set of features. It is used on high-end mobile phones such as Android, BlackBerry, and iPhone devices. It is also used in military hardware for heads-up displays on things like warplanes.

OpenGL ES supports the programmable pipeline and has support for shaders.

WebGL is currently still in development, but it is a version of OpenGL specifically made for use over the web. Using it will require a browser that supports the HTML 5 canvas tag. It’s hard to know at this stage if it is going to be something that will take off. 3D for the web has been tried before and failed. For instance, VRML (Virtual Reality Modeling Language) was similar to HTML but allowed users to create 3D worlds; it had some academic popularity but never gained traction with general users.

WebGL has some promising backers; a number of big name companies such as Google, Apple, and Mozilla are in the WebGL working group and there are some impressive demos. Currently the performance is very reasonable and it is likely to compete with Flash if it matures.

OpenGL is a library that allows the programmer to send instructions to the graphics card. The graphics card is a piece of specialized hardware to display 3D data. Graphics cards are made up of a number of standard components including the frame buffer, texture memory, and the GPU. The GPU, graphics processing unit, controls how the vertices are processed and displayed on screen.

The CPU sends instructions and data to the GPU, describing how each frame should appear on the screen. The texture memory is usually a large store of memory that is used for storing the vast amount of texture data that games require. The frame buffer is an area of memory that stores the image that will be displayed on the screen in the next frame. Modern cards may have more than one GPU, and on each GPU are many shader processing units for doing massively parallel shader operations. This has been exploited by distributed applications such as Folding@home, which runs protein folding simulations on hundreds of thousands of computers all over the world.

The first popular 3D graphics card was the 3dfx Voodoo 1. This was one of the very early graphics cards; it had 2 megabytes of texture memory and 2 megabytes for the frame buffer, and a clock speed of 135MHz. It accelerated early games such as Tomb Raider, Descent II, Quake, and the demo of Quake II, allowing the games to run much smoother and with greater detail. The Voodoo 1 used a standard PCI bus, which allows the CPU to send data to the graphics card at an upper limit of 533MB/s. Later cards moved on from the PCI bus to AGP (Accelerated Graphics Port), which achieved a maximum of 2GB/s, and then on again to PCI Express. Current generations of PCI Express can send up to 8GB/s.

New graphics cards seem to come out monthly, each faster than the last. At the time of this writing, the fastest card is probably the ATI Radeon HD 5970. It has two GPUs and each GPU has 1,600 shader processors. It has a clock speed of 725MHz and a total computing performance of 4.64 teraflops.

Most modern cards have unique specialized hardware for new operations. This hardware is exposed to OpenGL through the use of extensions. OpenGL is capable of exposing new functionality present in the driver and graphics card when given a string identifying the new extension. For example, the ATI Radeon HD 5970 has two GPUs; this is rather unusual, and to make full use of both GPUs, there are some new extensions such as AMD_gpu_association. This extension allows the user to split tasks between the two GPUs. If a number of vendors implement the same extension, then it has the letters “EXT” somewhere in the extension string. In some cases, it is possible that the Architecture Review Board, which controls the OpenGL specification, will elevate an extension to official status. In that case, the extension gets the letters “ARB” included in the string and all vendors are required to support it.
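The naming convention can be explored with a short plain-Python sketch that splits a list of extension names and groups them by the vendor tag in each name. The sample string below is hand-written for illustration; in a real program the list would come from the driver (in older OpenGL versions, as one space-separated string). The helper names are hypothetical.

```python
# Hand-written sample; a real list comes from the graphics driver.
SAMPLE_EXTENSIONS = ("GL_ARB_vertex_buffer_object "
                     "GL_EXT_framebuffer_object "
                     "WGL_AMD_gpu_association")


def classify_extensions(extension_string):
    """Group extension names by the tag after the first underscore (ARB, EXT, ...)."""
    groups = {}
    for name in extension_string.split():
        parts = name.split("_")
        tag = parts[1] if len(parts) > 2 else "UNKNOWN"
        groups.setdefault(tag, []).append(name)
    return groups


def has_extension(extension_string, name):
    """Check for an exact name; a substring search could match the wrong extension."""
    return name in extension_string.split()


groups = classify_extensions(SAMPLE_EXTENSIONS)
print(sorted(groups))
```

Comparing whole names matters: searching the raw string for `GL_EXT_framebuffer` would wrongly succeed because it is a prefix of a longer extension name.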

The term shader is a bit misleading. Initially, shader programs were primarily used to shade models by operating on the pixels that make up the surface of each polygon. Over time the abilities of shader programs have expanded; they can now change vertex properties, create new vertices, or even complete general operations.

Shaders operate differently from normal programs that run on the CPU. Shader programs are executed on a large number of elements all at the same time. This means that shader programs are massively parallel, whereas programs on the CPU are generally run serially and only one instance at a time. Shader programs are excellent for doing operations on the mass of pixels and vertices that are the building blocks of a 3D world.

Until recently there were three kinds of shaders—vertex, geometry, and pixel—and each could do only certain operations. Vertex shaders operated on vertices; pixel shaders on pixels; and geometry shaders on primitives. To reduce complexity and allow hardware makers to optimize more efficiently, all these types of shaders were replaced with one called the unified shader.

Shaders are programmed in special languages that run on the graphics card. At the moment, these languages are a lot lower level than C++ (never mind C#). OpenGL has a shading language called GLSL, or OpenGL Shading Language. It’s a little like C with lots of special operations for dealing with vectors and matrices. DirectX also has its own shading language called HLSL, or High Level Shading Language. The two languages are quite similar. To add to the confusion, there is a third language called Cg, which was developed by Microsoft and Nvidia; it is quite similar to HLSL.

Shaders in games are excellent for creating computationally heavy special effects like lighting or parallax mapping. The current technology makes shader programs useful for little else. This book will concentrate on game programming; therefore, the programmable pipeline won’t be covered as it’s a rather large topic. If you are interested, check the recommended reading section for several excellent books.

Shaders are on the cusp of becoming used for general parallel programming tasks rather than just graphics. For instance, the Nvidia PhysX library allows physics calculations to be done on the GPU rather than the CPU, resulting in much better performance. PhysX is written using yet another shader language called CUDA, but CUDA is a little different. It is a language that is less concerned about pixels and vertices and more concerned with general purpose parallel programming. If in your game you were simulating a city and came up with a novel parallel algorithm to update all the residents of the city, then this could be performed much faster on the GPU, and free the CPU for other tasks. CUDA is often used for scientific research projects as it is a cheap way to harness massive computing power. Some of the applications using CUDA include quantum chemistry calculations, simulating heart muscles, and modeling black holes.

The Tao Framework is a way for C# to use the OpenGL library. Tao wraps up a number of C libraries (shown in Table 2.1) and makes them easy to use from C#. Tao has bindings to Mono, so there’s support for Linux and Macs too.

Table 2.1. The Libraries in the Tao Framework

| Library | Use |
|---|---|
| Tao.OpenAl | OpenAL is a powerful sound library. |
| Tao.OpenGl | OpenGL is the graphics library we’ll be using. |
| Tao.Sdl | SDL (Simple DirectMedia Layer) is a cross-platform library for input, sound, and 2D graphics. |
| Tao.Platform.Windows | Support for using OpenGL with Windows.Forms. |
| Tao.PhysFs | A wrapper for I/O; supports archives like .zips for game assets. |
| Tao.FreeGlut | OpenGL Utility Toolkit is a set of wrappers for setting up an OpenGL program as well as some draw routines. |
| Tao.Ode | Open Dynamics Engine is a real-time physics engine for games. |
| Tao.Glfw | OpenGL Framework is a lightweight multiplatform wrapper. |
| Tao.DevIl | DevIL is an excellent package for loading various image types into OpenGL (bmp, tif, gif, png, etc.). |
| Tao.Cg | Cg is a high-level shading language. |
| Tao.Lua | Lua is one of the most common scripting languages used in the game industry. |
| Tao.FreeType | A font package. |
| Tao.FFmpeg | Mainly used for playing video. |

Tao gives C# access not only to OpenGL but also to a selection of other useful libraries.

OpenAL is short for Open Audio Library, and is a powerful open source library. It was the sound library used in BioShock, Quake 4, Doom III, and the recent Unreal games. It is modeled on OpenGL and has the same state machine style design and extension methods.

SDL, Simple DirectMedia Layer, is a cross-platform library that supports input, sound, and graphics. It’s quite a popular library for game makers, especially for independent and open source games. One of the more famous open source games made with SDL is FreeCiv, a multiplayer Civilization clone. It’s also used in most Linux game ports.

PhysFs initially might sound like some kind of physics library, but it’s a small IO library. It allows all the game assets to be packaged in one big binary file or several small ones. Many commercial games have similar systems; for example, Doom’s wad system or Quake’s pak system. It allows games to be easily modified and updated once released.
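The idea of shipping game assets inside a single archive can be sketched with Python's standard `zipfile` module. This is not the PhysFs API, only an illustration of the concept; the helper names and the asset paths are made up for the example, and the archive is kept in memory so the sketch does not touch the disk.

```python
import io
import zipfile


def pack_assets(assets):
    """Pack a {path: bytes} dictionary into one in-memory zip archive."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        for path, data in assets.items():
            archive.writestr(path, data)
    return buffer.getvalue()


def load_asset(archive_bytes, path):
    """Read a single asset back out of the archive by its path."""
    with zipfile.ZipFile(io.BytesIO(archive_bytes)) as archive:
        return archive.read(path)


packed = pack_assets({
    "levels/level1.txt": b"####\n#..#\n####",
    "config/settings.txt": b"volume=7",
})
print(load_asset(packed, "config/settings.txt"))
```

Because the game asks for assets by path, a patch or mod can replace one file in the archive without the game code changing at all.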

FreeGLUT is a free version of the OpenGL Utility Toolkit. This is a library that has functions to get the user up and running with OpenGL right away. It has methods for receiving input from the keyboard and mouse. It also has methods for drawing various basic shapes, such as spheres, cubes, and even a teapot. This teapot is quite famous in computer graphics and was modeled by Martin Newell while at the University of Utah. The teapot is quite a complicated surface, so it’s useful when testing new graphical techniques. The Toy Story animated movie features the teapot model, and DirectX even has its own teapot creation method, D3DXCreateTeapot(). FreeGLUT is often used when teaching OpenGL, but it is quite restrictive and rarely used for serious projects.

ODE, Open Dynamics Engine, is a multiplatform physics engine that does collision detection and rigid body simulation. It was used in the PC first-person shooter game S.T.A.L.K.E.R.

Glfw is the third portable OpenGL wrapper that Tao provides access to. Glfw stands for OpenGL framework, and it looks to expand on the functionality provided by GLUT. If you don’t want to use SDL but do want to use a framework to access OpenGL, this may be worth looking at.

DevIL, Developer’s Image Library, is a library that loads textures from disk into OpenGL. It’s similar to OpenGL in that it’s a state machine and it has similar method names. It’s cross-platform and supports a wide range of different image formats (43!).

Cg is one of the shader languages mentioned earlier in the chapter. Using Tao.Cg, shader programs can be loaded from a text file or string, processed, and used in OpenGL.

Lua is probably the most popular scripting language for game development. It’s a small, easily embedded language that’s very expressive. Tao.Lua lets functions and data pass between the script and C# program.

Tao.FreeType is a basic font package that will convert a font file (such as a TrueType font) to a bitmap. It has a very simple, usable interface.

The final library Tao provides is FFmpeg. The name comes from MPEG, a video standard, and FF, which means Fast Forward. It provides a way to play video. If you wanted to have a cutscene in your game, then this would be a good first stop.

Everything in Tao is totally open source. Most libraries are free to use in a commercial project, but it’s worth checking the license for the specifics. Tao is an excellent package for the budding game creator to get started with. It’s not in the scope of this book to investigate all these libraries; instead, we’ll just concentrate on the most important ones. The OpenGL and Tao.Platform.Windows libraries are used from Chapter 5 onwards. DevIL is covered in Chapter 6. Chapter 9 covers playing sound with OpenAL and handling gamepad input with SDL. All the libraries are useful so it’s worth taking the time to investigate any of the ones that sound interesting.

OpenGL and Direct3D are the two major industrial graphics libraries in use today. These graphics libraries are a standardized way to talk to the underlying graphics hardware. Graphics hardware is usually made from several standard pieces and is extremely efficient at transforming 3D vertex information to a 2D frame on the screen. This transformation from 3D vertices to a 2D frame is known as the graphics pipeline. There are two types of graphics pipeline: the fixed pipeline, which cannot be programmed, and the programmable pipeline, which allows certain stages of the pipeline to be programmed using shaders.

The Tao Framework is a collection of useful libraries including OpenGL. C# can make use of the Tao Framework to write games using OpenGL. The Tao Framework also includes several other libraries useful for game development. OpenAL, DevIL, and SDL will all be used in this book to develop a simple side-scrolling shooter game.