Chapter 8

Binary Trees

In this chapter we switch from algorithms, the focus of Chapter 7, “Advanced Sorting,” to data structures. Binary trees are one of the fundamental data storage structures used in programming. They provide advantages that the data structures you’ve seen so far cannot. In this chapter you learn why you would want to use trees, how they work, and how to go about creating them.

Why Use Binary Trees?

Why might you want to use a tree? Usually, because it combines the advantages of two other structures: an ordered array and a linked list. You can search a tree quickly, as you can an ordered array, and you can also insert and delete items quickly, as you can with a linked list. Let’s explore these topics a bit before delving into the details of trees.

Slow Insertion in an Ordered Array

Imagine an array in which all the elements are arranged in order—that is, an ordered array—such as you saw in Chapter 2, “Arrays.” As you learned, you can quickly search such an array for a particular value, using a binary search. You check in the center of the array; if the object you’re looking for is greater than what you find there, you narrow your search to the top half of the array; if it’s less, you narrow your search to the bottom half. Applying this process repeatedly finds the object in O(log N) time. You can also quickly traverse an ordered array, visiting each object in sorted order.
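The halving process described above can be sketched in a few lines of Python. This is a minimal illustration, not the code from Chapter 2; the function name binary_search and the sample keys are assumptions made for the example.

```python
def binary_search(items, goal):
    """Return the index of goal in the sorted list items, or None."""
    lo, hi = 0, len(items) - 1
    while lo <= hi:                 # While some range is left to explore
        mid = (lo + hi) // 2        # Check the center of the range
        if items[mid] == goal:
            return mid
        if goal > items[mid]:
            lo = mid + 1            # Goal is greater: keep the top half
        else:
            hi = mid - 1            # Goal is less: keep the bottom half
    return None                     # Range is empty, goal is not present

ordered = [11, 18, 33, 42, 65, 77, 83]   # Hypothetical sample keys
print(binary_search(ordered, 42))        # 3
print(binary_search(ordered, 50))        # None
```

Each pass through the loop discards half of the remaining range, which is where the O(log N) behavior comes from.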

On the other hand, if you want to insert a new object into an ordered array, you first need to find where the object will go and then move all the objects with greater keys up one space in the array to make room for it. These multiple moves are time-consuming, requiring, on average, moving half the items (N/2 moves). Deletion involves the same multiple moves and is thus equally slow.
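To make the cost of those moves concrete, here is a small sketch that inserts a key into a sorted Python list by shifting items one at a time and counts the shifts. The function name insert_ordered and the sample keys are hypothetical, chosen only for illustration.

```python
def insert_ordered(items, key):
    """Insert key into the sorted list items; return how many items moved."""
    items.append(None)              # Make room for one more item at the end
    i = len(items) - 1
    moves = 0
    while i > 0 and items[i - 1] > key:
        items[i] = items[i - 1]     # Move a greater key up one space
        i -= 1
        moves += 1
    items[i] = key                  # Drop the new key into the gap
    return moves

ordered = [11, 18, 33, 42, 65]      # Hypothetical sample keys
moved = insert_ordered(ordered, 20)
print(ordered)                      # [11, 18, 20, 33, 42, 65]
print(moved)                        # 3
```

Inserting a key smaller than every existing key would move all N items; inserting the largest key moves none, so the average is about N/2.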

If you’re going to be doing a lot of insertions and deletions, an ordered array is a bad choice.

Slow Searching in a Linked List

As you saw in Chapter 5, “Linked Lists,” you can quickly perform insertions and deletions on a linked list. You can accomplish these operations simply by changing a few references. These two operations require O(1) time (the fastest Big O time).

Unfortunately, finding a specified element in a linked list is not as fast. You must start at the beginning of the list and visit each element until you find the one you’re looking for. Thus, you need to visit an average of N/2 objects, comparing each one’s key with the desired value. This process is slow, requiring O(N) time. (Notice that times considered fast for a sort are slow for the basic data structure operations of insertion, deletion, and search.)
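The following sketch counts how many links a search must visit. It uses a bare-bones Link class invented for this example rather than the LinkedList class from Chapter 5, so treat the names as assumptions.

```python
class Link:
    """A minimal singly linked list node (hypothetical, for illustration)."""
    def __init__(self, key, next_link=None):
        self.key = key
        self.next = next_link

def find_in_list(head, goal):
    """Return (link, visits): the matching link or None, and links visited."""
    visits = 0
    link = head
    while link is not None:         # Follow the chain from the beginning
        visits += 1
        if link.key == goal:
            return link, visits
        link = link.next
    return None, visits             # Ran off the end without a match

head = None
for key in [65, 42, 33, 18, 11]:    # Inserting at the front is O(1)
    head = Link(key, head)          # List is now 11, 18, 33, 42, 65

link, visits = find_in_list(head, 42)
print(link.key, visits)             # 42 4
print(find_in_list(head, 50))       # (None, 5)
```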

You might think you could speed things up by using an ordered linked list, in which the elements are arranged in order, but this doesn’t help. You still must start at the beginning and visit the elements in order because there’s no way to access a given element without following the chain of references to it. You could abandon the search for an element after finding a gap in the ordered sequence where it should have been, so it would save a little time in identifying missing items. Using an ordered list only helps make traversing the nodes in order quicker and doesn’t help in finding an arbitrary object.

Trees to the Rescue

It would be nice if there were a data structure with the quick insertion and deletion of a linked list, along with the quick searching of an ordered array. Trees provide both of these characteristics and are also one of the most interesting data structures.

What Is a Tree?

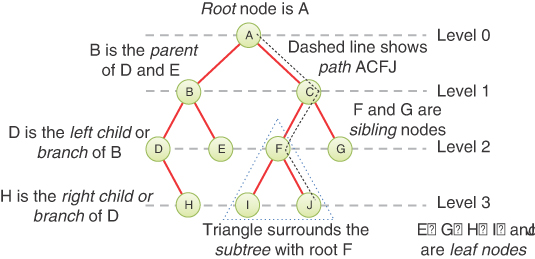

A tree consists of nodes connected by edges. Figure 8-1 shows a tree. In such a picture of a tree the nodes are represented as circles, and the edges as lines connecting the circles.

FIGURE 8-1 A general (nonbinary) tree

Trees have been studied extensively as abstract mathematical entities, so there’s a large amount of theoretical knowledge about them. A tree is actually an instance of a more general category called a graph. The types and arrangement of edges connecting the nodes distinguish trees and graphs, but you don’t need to worry about the extra issues graphs present. We discuss graphs in Chapter 14, “Graphs,” and Chapter 15, “Weighted Graphs.”

In computer programs, nodes often represent entities such as file folders, files, departments, people, and so on—in other words, the typical records and items stored in any kind of data structure. In an object-oriented programming language, the nodes are objects that represent entities, sometimes in the real world.

The lines (edges) between the nodes represent the way the nodes are related. Roughly speaking, the lines represent convenience: it’s easy (and fast) for a program to get from one node to another if a line connects them. In fact, the only way to get from node to node is to follow a path along the lines. These are essentially the same as the references you saw in linked lists; each node can have some references to other nodes. Algorithms are restricted to going in one direction along edges: from the node with the reference to some other node. Doubly linked nodes are sometimes used to go both directions.

Typically, one node is designated as the root of the tree. Just like the head of a linked list, all the other nodes are reached by following edges from the root. The root node is typically drawn at the top of a diagram, like the one in Figure 8-1. The other nodes are shown below it, and the further down in the diagram, the more edges need to be followed to get to another node. Thus, tree diagrams are small on the top and large on the bottom. This configuration may seem upside-down compared with real trees, at least compared to the parts of real trees above ground; the diagrams are more like tree root systems in a visual sense. This arrangement makes them more like charts used to show family trees with ancestors at the top and descendants below. Generally, programs start an operation at the small part of the tree, the root, and follow the edges out to the broader fringe. It’s (arguably) more natural to think about going from top to bottom, as in reading text, so having the other nodes below the root helps indicate the relative order of the nodes.

There are different kinds of trees, distinguished by the number and type of edges. The tree shown in Figure 8-1 has nodes with more than two children. (We explain what “children” means in a moment.) In this chapter we discuss a specialized form of tree called a binary tree. Each node in a binary tree has a maximum of two children. More general trees, in which nodes can have more than two children, are called multiway trees. We show examples of multiway trees in Chapter 9, “2-3-4 Trees and External Storage.”

Tree Terminology

Many terms are used to describe particular aspects of trees. You need to know them so that this discussion is comprehensible. Fortunately, most of these terms are related to real-world trees or to family relationships, so they’re not hard to remember. Figure 8-2 shows many of these terms applied to a binary tree.

FIGURE 8-2 Tree terms

Root

The node at the top of the tree is called the root. There is only one root in a tree, labeled A in the figure.

Path

Think of someone walking from node to node along the edges that connect them. The resulting sequence of nodes is called a path. For a collection of nodes and edges to be defined as a tree, there must be one (and only one!) path from the root to any other node. Figure 8-3 shows a nontree. You can see that it violates this rule because there are multiple paths from A to nodes E and F. This is an example of a graph that is not a tree.

FIGURE 8-3 A nontree

Parent

Any node (except the root) has exactly one edge connecting it to a node above it. The node above it is called the parent of the node. The root node must not have a parent.

Child

Any node may have one or more edges connecting it to nodes below. These nodes below a given node are called its children, or sometimes, branches.

Sibling

Any node other than the root node may have sibling nodes. These nodes have a common parent node.

Leaf

A node that has no children is called a leaf node or simply a leaf. There can be only one root in a tree, but there can be many leaves. In contrast, a node that has children is an internal node.

Subtree

Any node (other than the root) may be considered to be the root of a subtree, which also includes its children, and its children’s children, and so on. If you think in terms of families, a node’s subtree contains all its descendants.

Visiting

A node is visited when program control arrives at the node, usually for the purpose of carrying out some operation on the node, such as checking the value of one of its data fields or displaying it. Merely passing over a node on the path from one node to another is not considered to be visiting the node.

Traversing

To traverse a tree means to visit all the nodes in some specified order. For example, you might visit all the nodes in order of ascending key value. There are other ways to traverse a tree, as we’ll describe later.

Levels

The level of a particular node refers to how many generations the node is from the root. If you assume the root is Level 0, then its children are at Level 1, its grandchildren are at Level 2, and so on. This is also sometimes called the depth of a node.
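As a rough sketch of how levels can be computed, the code below walks a small binary tree and reports the level of each node, counting the root as Level 0. The Node class, the levels() generator, and the five-node tree are all hypothetical; the tree is not a copy of Figure 8-2.

```python
class Node:
    """A minimal binary tree node (hypothetical, for illustration)."""
    def __init__(self, key, left=None, right=None):
        self.key = key
        self.left = left
        self.right = right

def levels(node, level=0):
    """Yield (key, level) for every node in the subtree rooted at node."""
    if node is None:
        return
    yield node.key, level                       # This node's own level
    yield from levels(node.left, level + 1)     # Children are one level down
    yield from levels(node.right, level + 1)

# A is the root, B and C are its children, D and E are children of B
tree = Node('A', Node('B', Node('D'), Node('E')), Node('C'))
print(dict(levels(tree)))   # {'A': 0, 'B': 1, 'D': 2, 'E': 2, 'C': 1}
```

In this small tree, C, D, and E are leaves, and B is the root of a subtree containing B, D, and E.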

Keys

You’ve seen that one data field in an object is usually designated as a key value, or simply a key. This value is used to search for the item or perform other operations on it. In tree diagrams, when a circle represents a node holding a data item, the key value of the item is typically shown in the circle.

Binary Trees

If every node in a tree has at most two children, the tree is called a binary tree. In this chapter we focus on binary trees because they are the simplest and the most common.

The two children of each node in a binary tree are called the left child and the right child, corresponding to their positions when you draw a picture of a tree, as shown in Figure 8-2. A node in a binary tree doesn’t necessarily have the maximum of two children; it may have only a left child or only a right child, or it can have no children at all (in which case it’s a leaf).

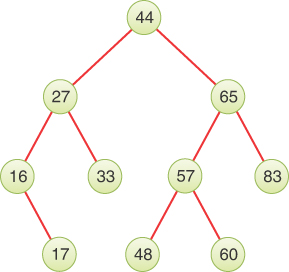

Binary Search Trees

The kind of binary tree we discuss at the beginning of this chapter is technically called a binary search tree. The keys of the nodes have a particular ordering in search trees. Figure 8-4 shows a binary search tree.

FIGURE 8-4 A binary search tree

Note

The defining characteristic of a binary search tree is this: a node’s left child must have a key less than its parent’s key, and a node’s right child must have a key greater than or equal to that of its parent.
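One way to make the ordering rule concrete is a small checker. The sketch below applies the rule to whole subtrees rather than only to immediate children, which is the usual reading of the binary search tree property: every key in a node's left subtree is less than the node's key, and every key in its right subtree is greater than or equal to it. The Node class, the is_bst() function, and the sample keys are assumptions for illustration.

```python
class Node:
    """A minimal binary tree node (hypothetical, for illustration)."""
    def __init__(self, key, left=None, right=None):
        self.key = key
        self.left = left
        self.right = right

def is_bst(node, low=None, high=None):
    """True if every key k in the subtree satisfies low <= k < high."""
    if node is None:
        return True                              # Empty subtrees are fine
    if low is not None and node.key < low:
        return False                             # Too small for this side
    if high is not None and node.key >= high:
        return False                             # Too large for this side
    return (is_bst(node.left, low, node.key) and     # Left keys < node key
            is_bst(node.right, node.key, high))      # Right keys >= node key

good = Node(44, Node(27, Node(16), Node(33)), Node(65, None, Node(83)))
bad = Node(44, Node(27, None, Node(50)), Node(65))   # 50 is left of 44
print(is_bst(good))   # True
print(is_bst(bad))    # False
```

In the second tree, 50 is a valid right child of 27 when viewed in isolation, but it sits in the left subtree of 44, so a search for 50 starting at the root would never reach it.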

An Analogy

One commonly encountered tree is the hierarchical file system on desktop computers. This system was modeled on the prevailing document storage technology used by businesses in the twentieth century: filing cabinets containing folders that in turn contained subfolders, down to individual documents. Computer operating systems mimic that by having files stored in a hierarchy. At the top of the hierarchy is the root directory. That directory contains “folders,” which are subdirectories, and files, which are like the paper documents. Each subdirectory can have subdirectories of its own and more files. These all have analogies in the tree: the root directory is the root node, subdirectories are nodes with children, and files are leaf nodes.

To specify a particular file in a file system, you use the full path from the root directory down to the file. This is the same as the path to a node of a tree. Uniform resource locators (URLs) use a similar construction to show a path to a resource on the Internet. Both file system pathnames and URLs allow for many levels of subdirectories. The last name in a file system path is either a subdirectory or a file. Files represent leaves; they have no children of their own.

Clearly, a hierarchical file system is not a binary tree because a directory may have many children. A hierarchical file system differs in another significant way from the trees that we discuss here. In the file system, subdirectories contain no data other than attributes like their name; they contain only references to other subdirectories or to files. Only files contain data. In a tree, every node contains data. The exact type of data depends on what’s being represented: records about personnel, records about components used to construct a vehicle, and so forth. In addition to the data, all nodes except leaves contain references to other nodes.

Hierarchical file systems differ from binary search trees in other aspects, too. The purpose of the file system is to organize files; the purpose of a binary search tree is more general and abstract. It’s a data structure that provides the common operations of insertion, deletion, search, and traversal on a collection of items, organizing them by their keys to speed up the operations. The analogy between the two is meant to show another familiar system that shares some important characteristics, but not all.

How Do Binary Search Trees Work?

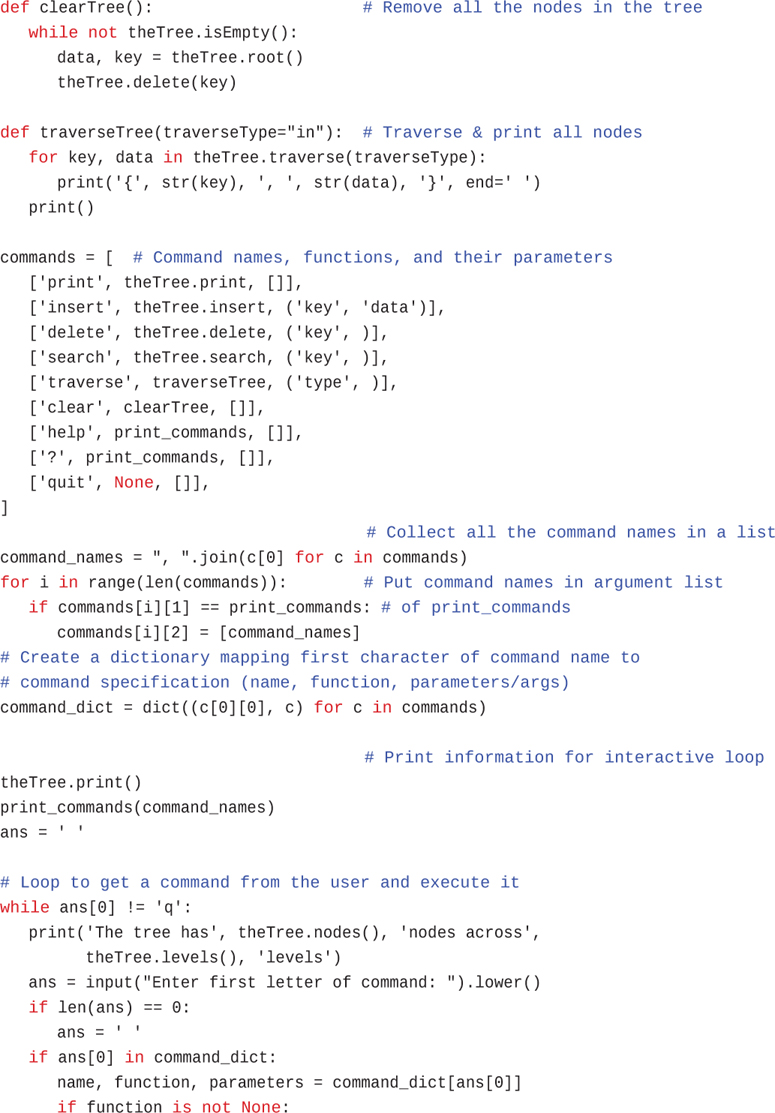

Let’s see how to carry out the common binary tree operations of finding a node with a given key, inserting a new node, traversing the tree, and deleting a node. For each of these operations, we first show how to use the Binary Search Tree Visualization tool to carry it out; then we look at the corresponding Python code.

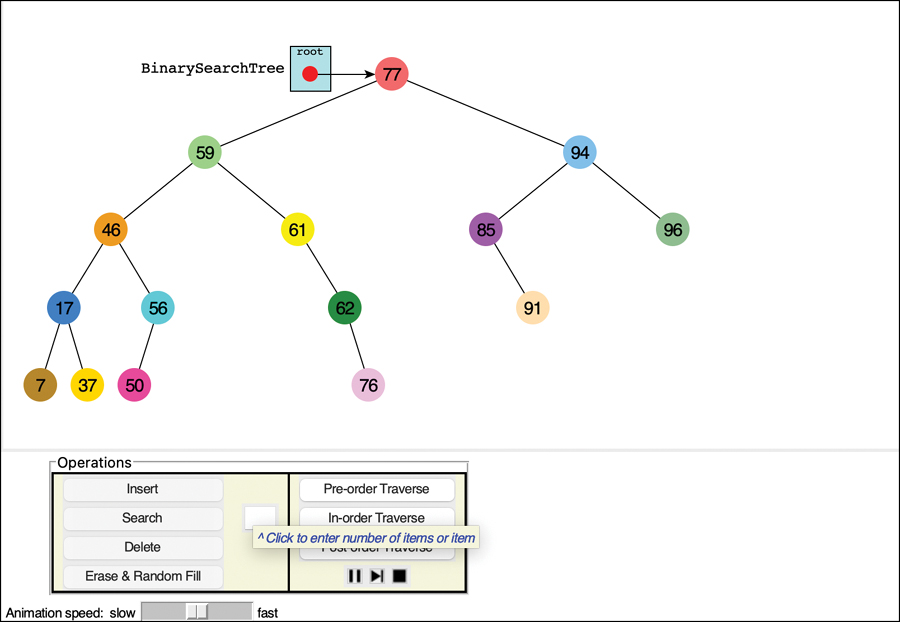

The Binary Search Tree Visualization Tool

For this example, start the Binary Search Tree Visualization tool (the program is called BinaryTree.py). You should see a screen something like that shown in Figure 8-5.

FIGURE 8-5 The Binary Search Tree Visualization tool

Using the Visualization Tool

The key values shown in the nodes range from 0 to 99. Of course, in a real tree, there would probably be a larger range of key values. For example, if telephone numbers were used for key values, they could range up to 999,999,999,999,999 (15 digits including country codes in the International Telecommunication Union standard). We focus on a simpler set of possible keys.

Another difference between the Visualization tool and a real tree is that the tool limits its tree to a depth of five; that is, there can be no more than five levels from the root to the bottom (level 0 through level 4). This restriction ensures that all the nodes in the tree will be visible on the screen. In a real tree the number of levels is unlimited (until the computer runs out of memory).

Using the Visualization tool, you can create a new tree whenever you want. To do this, enter a number of items and click the Erase & Random Fill button. You can ask to fill with 0 to 99 items. If you choose 0, you will create an empty tree. Using larger numbers will fill in more nodes, but some of the requested nodes may not appear. That’s due to the limit on the depth of the tree and the random order the items are inserted. You can experiment by creating trees with different numbers of nodes to see the variety of trees that come out of the random sequencing.

The nodes are created with different colors. The color represents the data stored with the key. We show a little later how that data is updated in some operations.

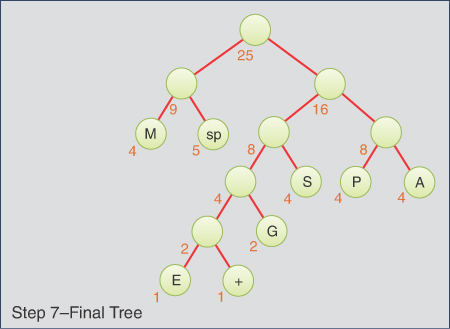

Constructing Trees

As shown in the Visualization tool, the tree’s shape depends on both the items it contains and the order in which the items are inserted into the tree. That might seem strange at first. If items are inserted into a sorted array, they always end up in the same order, regardless of their sequencing. Why are binary search trees different?

One of the key features of the binary search tree is that it does not have to fully order the items as they are inserted. When it adds a new item to an existing tree, it decides where to place the new leaf node by comparing its key with that of the nodes already stored in the tree. It follows a path from the root down to a missing child where the new node “belongs.” By choosing the left child when the new node’s key is less than the key of an internal node and the right child for other values, there will always be a unique path for the new node. That unique path means you can easily find that node by its key later, but it also means that the previously inserted items affect the path to any new item.

For example, if you start with an empty binary search tree and insert nodes in increasing key order, the unique path for each one will always be the rightmost path. Each insertion adds one more node at the bottom right. If you reverse the order of the nodes and insert them into an empty tree, each insertion will add the node at the bottom left because the key is lower than any other in the tree so far. Figure 8-6 shows what happens if you insert nodes with keys 44, 65, 83, and 87 in forward or reverse order.
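The effect of insertion order can be reproduced with a few lines of code. This is a simplified sketch that stores only keys and uses a recursive insert() helper invented for the example; it is not the insertion method developed later in this chapter. The shape() helper prints each node as a nested tuple (left, key, right).

```python
class Node:
    """A minimal binary search tree node (hypothetical, for illustration)."""
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None

def insert(root, key):
    """Insert key below root and return the (possibly new) root."""
    if root is None:
        return Node(key)                    # Found the missing child spot
    if key < root.key:
        root.left = insert(root.left, key)  # Smaller keys go left
    else:
        root.right = insert(root.right, key)  # Other keys go right
    return root

def shape(node):
    """Describe a tree as nested tuples of (left, key, right)."""
    if node is None:
        return None
    return (shape(node.left), node.key, shape(node.right))

forward = None
for key in [44, 65, 83, 87]:                # Increasing key order
    forward = insert(forward, key)
backward = None
for key in [87, 83, 65, 44]:                # Decreasing key order
    backward = insert(backward, key)

print(shape(forward))   # (None, 44, (None, 65, (None, 83, (None, 87, None))))
print(shape(backward))  # ((((None, 44, None), 65, None), 83, None), 87, None)
```

Both trees hold the same four keys, yet one leans entirely to the right and the other entirely to the left.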

FIGURE 8-6 Trees made by inserting nodes in sorted order

Unbalanced Trees

The trees shown in Figure 8-6 don’t look like trees. In fact, they look more like linked lists. One of the goals for a binary search tree is to speed up the search for a particular node, so having to step through a linked list to find the node would not be an improvement. To get the benefit of the tree, the nodes should be roughly balanced on both sides of the root. Ideally, each step along the path to find a node should cut the number of nodes to search in half, just like in binary searches of arrays and the guess-a-number game described in Chapter 2.

We can call some trees unbalanced; that is, they have most of their nodes on one side of the root or the other, as shown in Figure 8-7. Any subtree may also be unbalanced like the outlined one on the lower left of the figure. Of course, only a fully balanced tree will have equal numbers of nodes on the left and right subtrees (and being fully balanced, every node’s subtree will be fully balanced too). Inserting randomly chosen items one node at a time means most trees will be unbalanced by one or more nodes as they are constructed, so you typically cannot expect to find fully balanced trees. In the following chapters, we look more carefully at ways to balance them as nodes are inserted and deleted.

FIGURE 8-7 An unbalanced tree (with an unbalanced subtree)

Trees become unbalanced because of the order in which the data items are inserted. If these key values are inserted randomly, the tree will be more or less balanced. When an ascending sequence (like 11, 18, 33, 42, 65) or a descending sequence is encountered, all the values will be right children (if ascending) or left children (if descending), and the tree will be unbalanced. The key values in the Visualization tool are generated randomly, but of course some short ascending or descending sequences will be created anyway, which will lead to local imbalances.

If a tree is created from data items whose key values arrive in random order, unbalanced trees may not be much of a problem for larger trees because the chance of a long run of keys arriving in sequence is small. Sometimes, however, key values will arrive in strict sequence; for example, when someone doing data entry arranges a stack of forms into alphabetical order by name before entering the data. When this happens, tree efficiency can be seriously degraded. We discuss unbalanced trees and what to do about them in Chapters 9 and 10.
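A quick experiment can show the difference between sorted and shuffled arrival order. The sketch below builds two trees from the same 100 keys and compares how many levels each one needs. The helper names, the key range, and the random seed are arbitrary choices for this illustration, and the shuffled result will vary with the seed.

```python
import random

class Node:
    """A minimal binary search tree node (hypothetical, for illustration)."""
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None

def insert(root, key):
    """Insert key below root and return the (possibly new) root."""
    if root is None:
        return Node(key)
    if key < root.key:
        root.left = insert(root.left, key)
    else:
        root.right = insert(root.right, key)
    return root

def build(keys):
    """Build a tree by inserting keys in the order given."""
    root = None
    for key in keys:
        root = insert(root, key)
    return root

def height(node):
    """Number of levels in the subtree rooted at node (0 if empty)."""
    if node is None:
        return 0
    return 1 + max(height(node.left), height(node.right))

keys = list(range(100))
sorted_height = height(build(keys))      # Strictly ascending arrival order
random.seed(8)                           # Arbitrary seed for repeatability
random.shuffle(keys)
shuffled_height = height(build(keys))    # Random arrival order

print(sorted_height)                     # 100: one node per level
print(shuffled_height)                   # Far fewer levels than 100
```

A tree holding 100 nodes needs at least 7 levels, so the shuffled tree lands somewhere between that minimum and the 100 levels of the sorted case.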

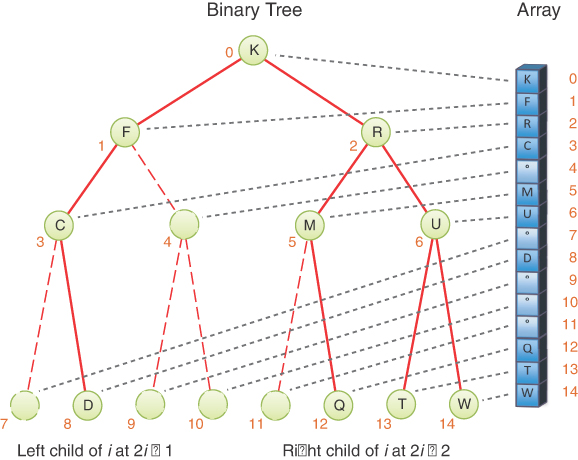

Representing the Tree in Python Code

Let’s start implementing a binary search tree in Python. As with other data structures, there are several approaches to representing a tree in the computer’s memory. The most common is to store the nodes at (unrelated) locations in memory and connect them using references in each node that point to its children.

You can also represent a tree in memory as an array, with nodes in specific positions stored in corresponding positions in the array. We return to this possibility at the end of this chapter. For our sample Python code we’ll use the approach of connecting the nodes using references, similar to the way linked lists were implemented in Chapter 5.

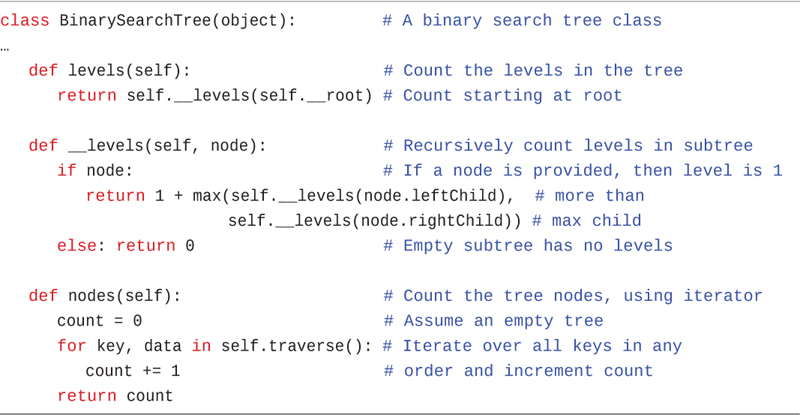

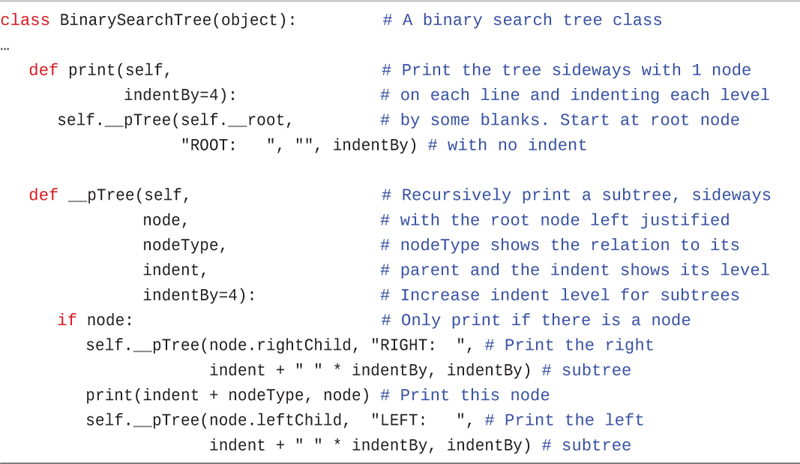

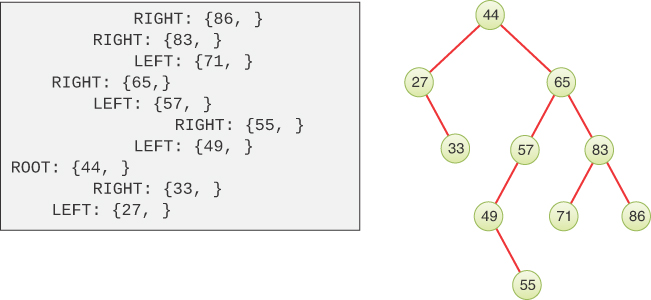

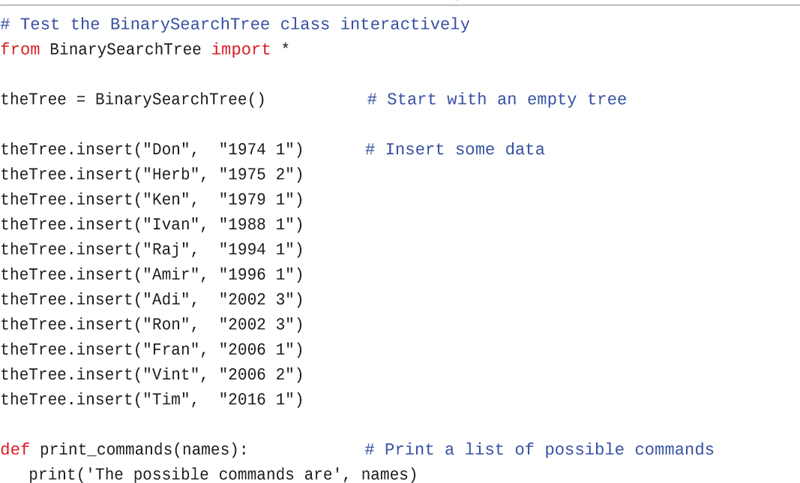

The BinarySearchTree Class

We need a class for the overall tree object: the object that holds, or at least leads to, all the nodes. We’ll call this class BinarySearchTree. It has only one field, __root, that holds the reference to the root node, as shown in Listing 8-1. This is very similar to the LinkedList class that was used in Chapter 5 to represent linked lists. The BinarySearchTree class doesn’t need fields for the other nodes because they are all accessed from the root node by following other references.

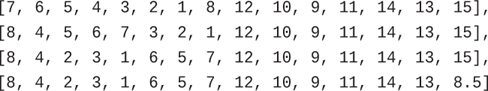

LISTING 8-1 The Constructor for the BinarySearchTree Class

    class BinarySearchTree(object):     # A binary search tree class

        def __init__(self):             # The tree organizes nodes by their
            self.__root = None          # keys. Initially, it is empty.

The constructor initializes the reference to the root node as None to start with an empty tree. When the first node is inserted, __root will point to it as shown in the Visualization tool example of Figure 8-5. There are, of course, many methods that operate on BinarySearchTree objects, but first, you need to define the nodes inside them.

The __Node Class

The nodes of the tree contain the data representing the objects being stored (contact information in an address book, for example), a key to identify those objects (and to order them), and the references to each of the node’s two children. To remind us that callers that create BinarySearchTree objects should not be allowed to directly alter the nodes, we make a private __Node class inside that class. Listing 8-2 shows how an internal class can be defined inside the BinarySearchTree class.

LISTING 8-2 The Constructors for the __Node and BinarySearchTree Classes

    class BinarySearchTree(object):     # A binary search tree class
        …
        class __Node(object):           # A node in a binary search tree
            def __init__(               # Constructor takes a key that is
                    self,               # used to determine the position
                    key,                # inside the search tree,
                    data,               # the data associated with the key
                    left=None,          # and a left and right child node
                    right=None):        # if known
                self.key = key          # Copy parameters to instance
                self.data = data        # attributes of the object
                self.leftChild = left
                self.rightChild = right

            def __str__(self):          # Represent a node as a string
                return "{" + str(self.key) + ", " + str(self.data) + "}"

        def __init__(self):             # The tree organizes nodes by their
            self.__root = None          # keys. Initially, it is empty.

        def isEmpty(self):              # Check for empty tree
            return self.__root is None

        def root(self):                 # Get the data and key of the root node
            if self.isEmpty():          # If the tree is empty, raise exception
                raise Exception("No root node in empty tree")
            return (                    # Otherwise return root data and its key
                self.__root.data, self.__root.key)

The __Node objects are created and manipulated by the BinarySearchTree’s methods. The fields inside __Node can be initialized as public attributes because the BinarySearchTree’s methods take care never to return a __Node object. This declaration allows for direct reading and writing without making accessor methods like getKey() or setData(). The __Node constructor simply populates the fields from the arguments provided. If the child nodes are not provided, the fields for their references are filled with None.

We add a __str__() method for __Node objects to aid in displaying the contents while debugging. Notably, it does not show the child nodes. We discuss how to display full trees a little later. That’s all the methods needed for __Node objects; all the rest of the methods you define are for BinarySearchTree objects.

Listing 8-2 shows an isEmpty() method for BinarySearchTree objects that checks whether the tree has any nodes in it. The root() method extracts the root node’s data and key. It’s like peek() for a queue and raises an exception if the tree is empty.
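A short usage sketch shows how these two methods behave on a newly created tree. The class below is a trimmed copy of Listing 8-2 (the __str__() method is omitted), and because no insertion method has been defined yet, the example exercises only the empty-tree case.

```python
class BinarySearchTree(object):         # Trimmed copy of Listing 8-2

    class __Node(object):               # A node in a binary search tree
        def __init__(self, key, data, left=None, right=None):
            self.key = key
            self.data = data
            self.leftChild = left
            self.rightChild = right

    def __init__(self):                 # Initially, the tree is empty
        self.__root = None

    def isEmpty(self):                  # Check for empty tree
        return self.__root is None

    def root(self):                     # Get the data and key of the root
        if self.isEmpty():
            raise Exception("No root node in empty tree")
        return (self.__root.data, self.__root.key)

tree = BinarySearchTree()
print(tree.isEmpty())                   # True

try:
    tree.root()                         # No root yet, so this raises
except Exception as error:
    print(error)                        # No root node in empty tree
```

Callers that want to avoid the exception can test isEmpty() before calling root().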

Some programmers also include a reference to a node’s parent in the __Node class. Doing so simplifies some operations but complicates others, so we don’t include it here. Adding a parent reference achieves something similar to the DoublyLinkedList class described in Chapter 5, “Linked Lists”; it’s useful in certain contexts but adds complexity.

We’ve made another design choice by storing the key for each node in its own field. For the data structures based on arrays, we chose to use a key function that extracts the key from each array item. That approach was more convenient for arrays because storing the keys separately from the data would require the equivalent of a key array along with the data array. In the case of a node class with named fields, adding a key field makes the code perhaps more readable and somewhat more efficient by avoiding some function calls. It also makes the key more independent of the data, which adds flexibility and can be used to enforce constraints like immutable keys even when data changes.

The BinarySearchTree class has several methods. They are used for finding, inserting, deleting, and traversing nodes; and for displaying the tree. We investigate them each separately.

Finding a Node

Finding a node with a specific key is the simplest of the major tree operations. It’s also the most important because it is essential to the binary search tree’s purpose.

The Visualization tool shows only the key for each node and a color for its data. Keep in mind that the purpose of the data structure is to store a collection of records, not just the key or a simple color. The keys can be more than simple integers; any data type that can be ordered could be used. The Visualization and examples shown here use integers for brevity. After a node is discovered by its key, it’s the data that’s returned to the caller, not the node itself.

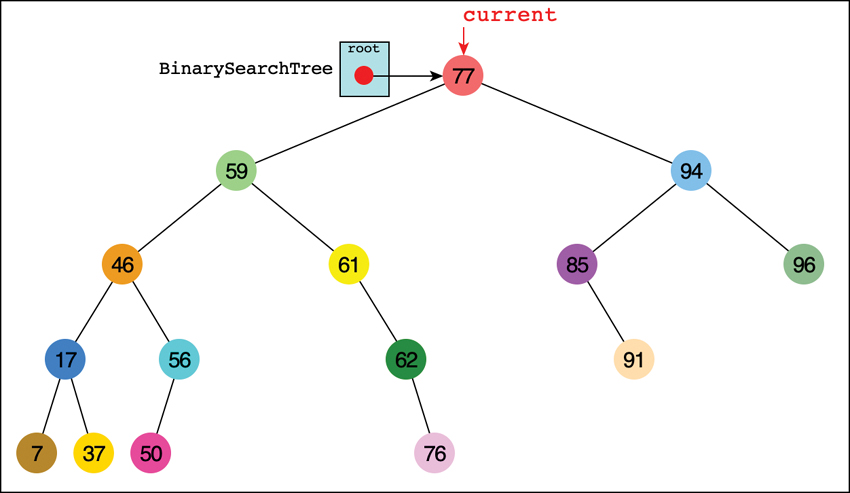

Using the Visualization Tool to Find a Node

Look at the Visualization tool and pick a node, preferably one near the bottom of the tree (as far from the root as possible). The number shown in this node is its key value. We’re going to demonstrate how the Visualization tool finds the node, given the key value.

For purposes of this discussion, we choose to find the node holding the item with key value 50, as shown in Figure 8-8. Of course, when you run the Visualization tool, you may get a different tree and may need to pick a different key value.

FIGURE 8-8 Finding the node with key 50

Enter the key value in the text entry box, hold down the Shift key, and select the Search button, and then the Step button. By repeatedly pressing the Step button, you can see all the individual steps taken to find key 50. On the second press, the current pointer shows up at the root of the tree, as seen in Figure 8-8. On the next click, a parent pointer shows up that will follow the current pointer. Ignore that pointer and the code display for a moment; we describe them in detail shortly.

As the Visualization tool looks for the specified node, it makes a decision at the current node. It compares the desired key with the one found at the current node. If it’s the same, it’s found the desired node and can quit. If not, it must decide where to look next.

In Figure 8-8 the current arrow starts at the root. The program compares the goal key value 50 with the value at the root, which is 77. The goal key is less, so the program knows the desired node must be on the left side of the tree—either the root’s left child or one of that child’s descendants. The left child of the root has the value 59, so the comparison of 50 and 59 will show that the desired node is in the left subtree of 59. The current arrow goes to 46, the root of that subtree. This time, 50 is greater than the 46 node, so it goes to the right, to node 56, as shown in Figure 8-9. A few steps later, comparing 50 with 56 leads the program to the left child. The comparison at that leaf node shows that 50 equals the node’s key value, so it has found the node we sought.

FIGURE 8-9 The second to last step in finding key 50

The Visualization tool changes a little after it finds the desired node. The current arrow changes into the node arrow (and parent changes into p). That’s because of the way variables are named in the code, which we show in the next section. The tool doesn’t do anything with the node after finding it, except to encircle it and display a message saying it has been found. A serious program would perform some operation on the found node, such as displaying its contents or changing one of its fields.

Python Code for Finding a Node

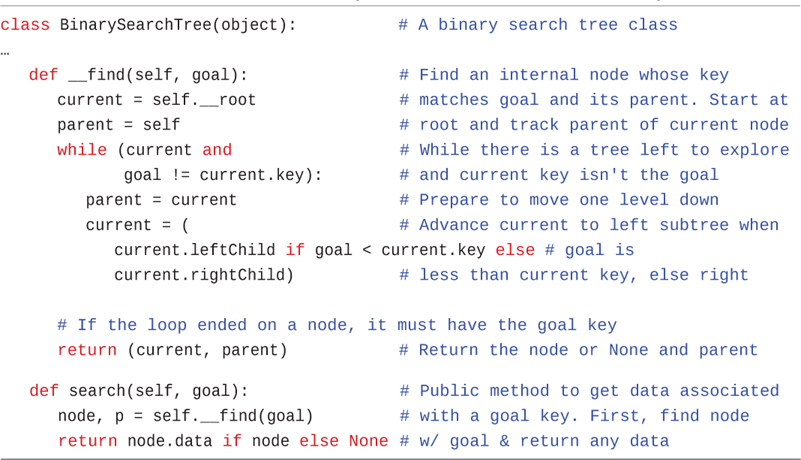

Listing 8-3 shows the code for the __find() and search() methods. The __find() method is private because it can return a node object. Callers of the BinarySearchTree class use the search() method to get the data stored in a node.

LISTING 8-3 The Methods to Find a Binary Search Tree Node Based on Its Key

    class BinarySearchTree(object):     # A binary search tree class
        …
        def __find(self, goal):         # Find an internal node whose key
            current = self.__root       # matches goal and its parent. Start at
            parent = self               # root and track parent of current node
            while (current and          # While there is a tree left to explore
                   goal != current.key):  # and current key isn't the goal
                parent = current        # Prepare to move one level down
                current = (             # Advance current to left subtree when
                    current.leftChild if goal < current.key else  # goal is
                    current.rightChild)  # less than current key, else right

            # If the loop ended on a node, it must have the goal key
            return (current, parent)    # Return the node or None and parent

        def search(self, goal):         # Public method to get data associated
            node, p = self.__find(goal)  # with a goal key. First, find node
            return node.data if node else None  # w/ goal & return any data

The only argument to __find() is goal, the key value to be found. This routine creates the variable current to hold the node currently being examined. The routine starts at the root – the only node it can access directly. That is, it sets current to the root. It also sets a parent variable to self, which is the tree object. In the Visualization tool, parent starts off pointing at the tree object. Because parent links are not stored in the nodes, the __find() method tracks the parent node of current so that it can return it to the caller along with the goal node. This capability will be very useful in other methods. The parent variable is always either the BinarySearchTree being searched or one of its __Node objects.

In the while loop, __find() first confirms that current is not None and references some existing node. If it doesn’t, the search has gone beyond a leaf node (or started with an empty tree), and the goal node isn’t in the tree. The second part of the while test compares the value to be found, goal, with the value of the current node’s key field. If the key matches, then the loop is done. If it doesn’t, then current needs to advance to the appropriate subtree. First, it updates parent to be the current node and then updates current. If goal is less than current’s key, current advances to its left child. If goal is greater than current’s key, current advances to its right child.

Can't Find the Node

If current becomes equal to None, you’ve reached the end of the line without finding the node you were looking for, so it can’t be in the tree. That could happen if the root node was None or if following the child links led to a node without a child (on the side where the goal key would go). Both the current node (None) and its parent are returned to the caller to indicate the result. In the Visualization tool, try entering a key that doesn’t appear in the tree and select Search. You then see the current pointer descend through the existing nodes and land on a spot where the key should be found but no node exists. Pointing to “empty space” indicates that the variable’s value is None.

Found the Node

If the condition of the while loop is not satisfied while current references some node in the tree, then the loop exits, and the current key must be the goal. That is, it has found the node being sought and current references it. It returns the node reference along with the parent reference so that the routine that called __find() can access any of the node’s (or its parent’s) data. Note that it returns the value of current for both success and failure of finding the key; it is None when the goal isn’t found.

The search() method calls the __find() method to set its node and parent (p) variables. That’s what you see in the Visualization tool after the __find() method returns. If a non-None reference was found, search() returns the data for that node. The method therefore assumes that data items stored in the nodes can never be None; if they could be, the caller would not be able to distinguish a stored None from the result of an unsuccessful search.
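The two outcomes described above can be seen in a stripped-down stand-in for the tree class. This is only a sketch under stated assumptions: the names TinyBST, Node, and _find, the three sample keys, and the use of a single leading underscore instead of the double-underscore private names are all hypothetical choices made to keep the example short.

```python
class TinyBST(object):                 # Hypothetical, simplified tree class
    class Node(object):                # A node holds a key, data, and
        def __init__(self, key, data, left=None, right=None):
            self.key, self.data = key, data          # two child links
            self.leftChild, self.rightChild = left, right

    def __init__(self):
        self.root = None               # The tree starts out empty

    def _find(self, goal):             # Return (node, parent) for goal key
        current, parent = self.root, self
        while current and goal != current.key:
            parent = current           # Remember the node being left
            current = (current.leftChild if goal < current.key
                       else current.rightChild)
        return current, parent         # current is None if goal is absent

    def search(self, goal):            # Return data for goal key, if found
        node, parent = self._find(goal)
        return node.data if node else None

tree = TinyBST()                       # Build a three-node tree by hand
tree.root = TinyBST.Node(44, 'root',
                         left=TinyBST.Node(27, 'left'),
                         right=TinyBST.Node(65, 'right'))

found, parent = tree._find(27)         # Key present: node and its parent
missing, last = tree._find(30)         # Key absent: None and last node seen
print(found.key, parent.key)
print(missing, last.key)
print(tree.search(65), tree.search(99))
```

Searching for 27 returns that node with node 44 as its parent. Searching for 30 returns None along with node 27, the node whose empty right child link is where key 30 would go.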

Tree Efficiency

As you can see, the time required to find a node depends on its depth in the tree, the number of levels below the root. If the tree is balanced, this is O(log N) time, or more specifically O(log2 N) time, the logarithm to base 2, where N is the number of nodes. It’s just like the binary search done in arrays where half the nodes were eliminated after each comparison. A fully balanced tree is the best case. In the worst case, the tree is completely unbalanced, like the examples shown in Figure 8-6, and the time required is O(N). We discuss the efficiency of __find() and other operations toward the end of this chapter.
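To make the difference between the best and worst cases concrete, the following sketch builds two trees from the same 127 keys and reports how many levels each one has. The Node class, the insert() and build() helpers, and the balanced_order() key ordering are assumptions made for this illustration; they are not the chapter’s BinarySearchTree code.

```python
class Node(object):                    # Hypothetical bare-bones node
    def __init__(self, key):
        self.key, self.leftChild, self.rightChild = key, None, None

def insert(root, key):                 # Insert key; return (root, level)
    if root is None:                   # where level counts the root as 1
        return Node(key), 1
    current, level = root, 1
    while True:
        level += 1                     # The new node goes one level lower
        if key < current.key:
            if current.leftChild is None:
                current.leftChild = Node(key)
                return root, level
            current = current.leftChild
        else:
            if current.rightChild is None:
                current.rightChild = Node(key)
                return root, level
            current = current.rightChild

def build(keys):                       # Insert keys in the order given and
    root, deepest = None, 0            # return the number of levels
    for key in keys:
        root, level = insert(root, key)
        deepest = max(deepest, level)
    return deepest

def balanced_order(lo, hi):            # Middle key first, then each half,
    if lo > hi:                        # which produces a balanced tree
        return []
    mid = (lo + hi) // 2
    return [mid] + balanced_order(lo, mid - 1) + balanced_order(mid + 1, hi)

N = 127
sorted_levels = build(range(1, N + 1))           # Keys arrive in order
balanced_levels = build(balanced_order(1, N))    # Keys arrive middle-first
print(sorted_levels, balanced_levels)
```

Inserting the keys in ascending order produces a chain 127 levels deep, so a search may need up to N comparisons. Inserting the middle key of each range first produces a tree with only 7 levels, which is log2(N + 1) for N = 127.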

Inserting a Node

To insert a node, you must first find the place to insert it. This is the same process as trying to find a node that turns out not to exist, as described in the earlier “Can’t Find the Node” section. You follow the path from the root toward the appropriate node. This is either a node with the same key as the node to be inserted or None, if this is a new key. If it’s the same key, you could try to insert it in the right subtree, but doing so adds some complexity. Another option is to replace the data for that node with the new data. For now, we allow only unique keys to be inserted; we discuss duplicate keys later.

If the key to insert is not in the tree, then __find() returns None for the reference to the node along with a parent reference. The new node is connected as the parent’s left or right child, depending on whether the new node’s key is less or greater than that of the parent. If the parent reference returned by __find() is self, the BinarySearchTree itself, then the node becomes the root node.

Figure 8-10 illustrates the process of inserting a node, with key 31, into a tree. The __find(31) method starts walking the path from the root node. Because 31 is less than the root node key, 44, it follows the left child link. That child’s key is 27, so it follows that child’s right child link. There it encounters key 33, so it again follows the left child link. That is None, so __find(31) stops with the parent pointing at the leaf node with key 33. The new leaf node with key 31 is created, and the parent’s left child link is set to reference it.

FIGURE 8-10 Inserting a node in binary search tree

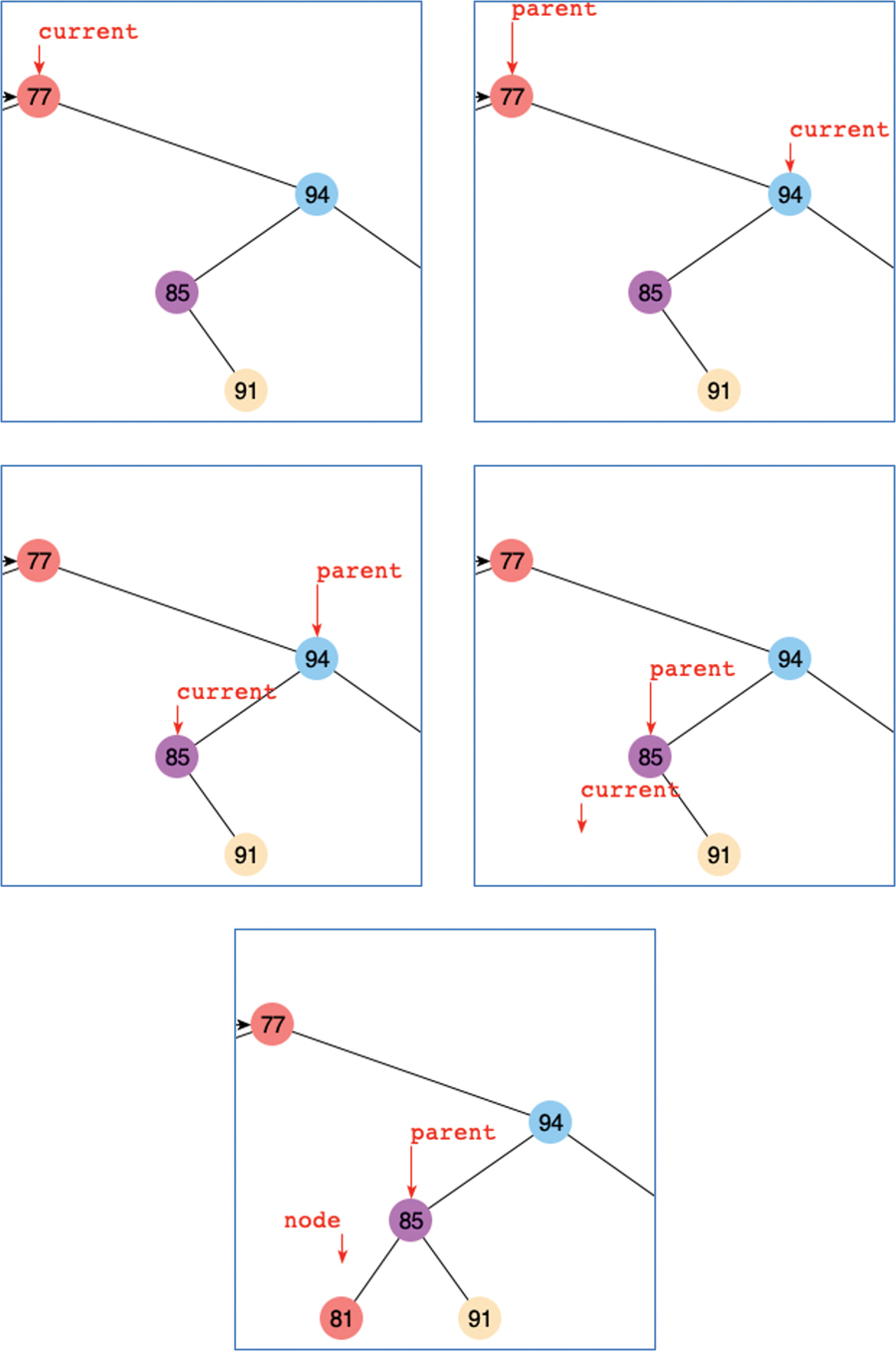

Using the Visualization Tool to Insert a Node

To insert a new node with the Visualization tool, enter a key value that’s not in the tree and select the Insert button. The first step for the program is to find where it should be inserted. For example, inserting 81 into the tree from an earlier example calls the __find() method of Listing 8-3, which causes the search to follow the path shown in Figure 8-11.

FIGURE 8-11 Steps for inserting a node with key 81 using the Visualization tool

The current pointer starts at the root node with key 77. Finding 81 to be larger, it goes to the right child, node 94. Now the key to insert is less than the current key, so it descends to node 85. The parent pointer follows the current pointer at each of these steps. When current reaches node 85, it goes to its left child and finds it missing. The call to __find() returns None and the parent pointer.

After locating the parent node with the empty child where the new key should go, the Visualization tool creates a new node with the key 81, some data represented by a color, and sets the left child pointer of node 85, the parent, to point at it. The node pointer returned by __find() is moved away because it still is None.

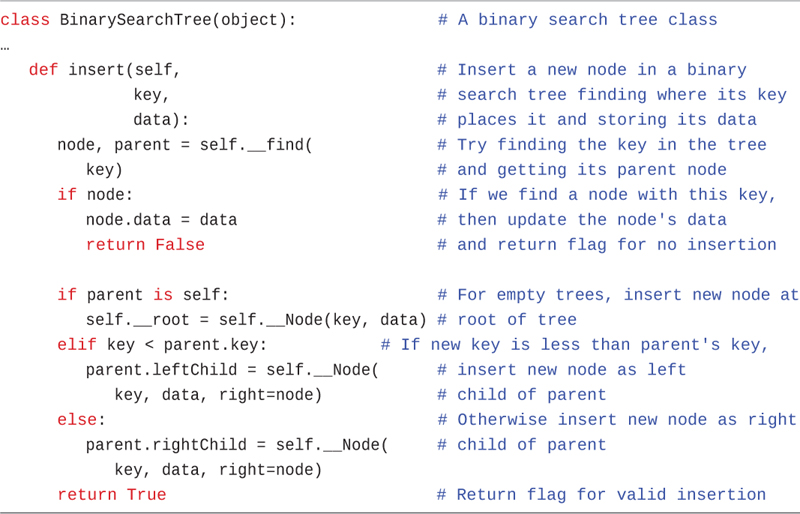

Python Code for Inserting a Node

The insert() method takes parameters for the key and data to insert, as shown in Listing 8-4. It calls the __find() method with the new node’s key to determine whether that key already exists and where its parent node should be. This implementation allows only unique keys in the tree, so if it finds a node with the same key, insert() updates the data for that key and returns False to indicate that no new node was created.

LISTING 8-4 The insert() Method of BinarySearchTree

    class BinarySearchTree(object):    # A binary search tree class
    …
       def insert(self,                # Insert a new node in a binary
                  key,                 # search tree finding where its key
                  data):               # places it and storing its data
          node, parent = self.__find(  # Try finding the key in the tree
             key)                      # and getting its parent node
          if node:                     # If we find a node with this key,
             node.data = data          # then update the node's data
             return False              # and return flag for no insertion

          if parent is self:           # For empty trees, insert new node at
             self.__root = self.__Node(key, data) # root of tree
          elif key < parent.key:       # If new key is less than parent's key,
             parent.leftChild = self.__Node( # insert new node as left
                key, data, right=node) # child of parent
          else:                        # Otherwise insert new node as right
             parent.rightChild = self.__Node( # child of parent
                key, data, right=node)
          return True                  # Return flag for valid insertion

If a matching node was not found, then insertion depends on what kind of parent reference was returned from __find(). If it’s self, the BinarySearchTree must be empty, so the new node becomes the root node of the tree. Otherwise, the parent is a node, so insert() decides which child will get the new node by comparing the new node’s key with that of the parent. If the new key is lower, then the new node becomes the left child; otherwise, it becomes the right child. Finally, insert() returns True to indicate the insertion succeeded.

When insert() creates the new node, it sets the new node’s right child to the node returned from __find(). You might wonder why that’s there, especially because node can only be None at that point (if it were not None, insert() would have returned False before reaching that point). The reason goes back to what to do with duplicate keys. If you allow nodes with duplicate keys, then you must put them somewhere. The binary search tree definition says that a node’s key is less than or equal to that of its right child. So, if you allow duplicate keys, the duplicate node cannot go in the left child. By specifying something other than None as the right child of the new node, other nodes with the same key can be retained. We leave as an exercise how to insert (and delete) nodes with duplicate keys.
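As a quick check of the behavior described above, here is a hedged, self-contained sketch that follows the path from Figure 8-10. It assumes a simplified class (TinyBST, with single-underscore names) and builds only the nodes on that path: 44, 27, and 33. It also leaves out the right=node argument, because node is always None at that point when keys are unique.

```python
class TinyBST(object):                 # Hypothetical, simplified tree class
    class Node(object):
        def __init__(self, key, data, left=None, right=None):
            self.key, self.data = key, data
            self.leftChild, self.rightChild = left, right

    def __init__(self):
        self.root = None

    def _find(self, goal):             # Return (node, parent) for goal key
        current, parent = self.root, self
        while current and goal != current.key:
            parent = current
            current = (current.leftChild if goal < current.key
                       else current.rightChild)
        return current, parent

    def insert(self, key, data):       # Insert or update; True if inserted
        node, parent = self._find(key)
        if node:                       # Existing key: only update the data
            node.data = data
            return False
        if parent is self:             # Empty tree: new node is the root
            self.root = self.Node(key, data)
        elif key < parent.key:         # Smaller key: becomes the left child
            parent.leftChild = self.Node(key, data)
        else:                          # Larger key: becomes the right child
            parent.rightChild = self.Node(key, data)
        return True

tree = TinyBST()
results = [tree.insert(key, data) for key, data in
           [(44, 'a'), (27, 'b'), (33, 'c'), (31, 'd'), (31, 'e')]]
node33 = tree.root.leftChild.rightChild          # Path: 44, left, right
print(results)
print(node33.key, node33.leftChild.key, node33.leftChild.data)
```

The first four calls return True because each key is new. The second insertion of key 31 returns False and only replaces the data, so the left child of node 33 ends up holding key 31 with data 'e'.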

Traversing the Tree

Traversing a tree means visiting each node in a specified order. Traversing a tree is useful in some circumstances such as going through all the records to look for ones that need some action (for example, parts of a vehicle that are sourced from a particular country). This process may not be as commonly used as finding, inserting, and deleting nodes but it is important nevertheless.

You can traverse a tree in three simple ways. They’re called pre-order, in-order, and post-order. The most commonly used order for binary search trees is in-order, so let’s look at that first and then return briefly to the other two.

In-order Traversal

An in-order traversal of a binary search tree causes all the nodes to be visited in ascending order of their key values. If you want to create a list of the data in a binary tree sorted by their keys, this is one way to do it.

The simplest way to carry out a traversal is the use of recursion (discussed in Chapter 6). A recursive method to traverse the entire tree is called with a node as an argument. Initially, this node is the root. The method needs to do only three things:

1. Call itself to traverse the node’s left subtree.

2. Visit the node.

3. Call itself to traverse the node’s right subtree.

Remember that visiting a node means doing something to it: displaying it, updating a field, adding it to a queue, writing it to a file, or whatever.

The three traversal orders work with any binary tree, not just with binary search trees. The traversal mechanism doesn’t pay any attention to the key values of the nodes; it only concerns itself with the node’s children and data. In other words, in-order traversal means “in order of increasing key values” only when the binary search tree criteria are used to place the nodes in the tree. The in of in-order refers to a node being visited in between the left and right subtrees. The pre of pre-order means visiting the node before visiting its children, and post-order visits the node after visiting the children. This distinction is like the differences between infix and postfix notation for arithmetic expressions described in Chapter 4, “Stacks and Queues.”

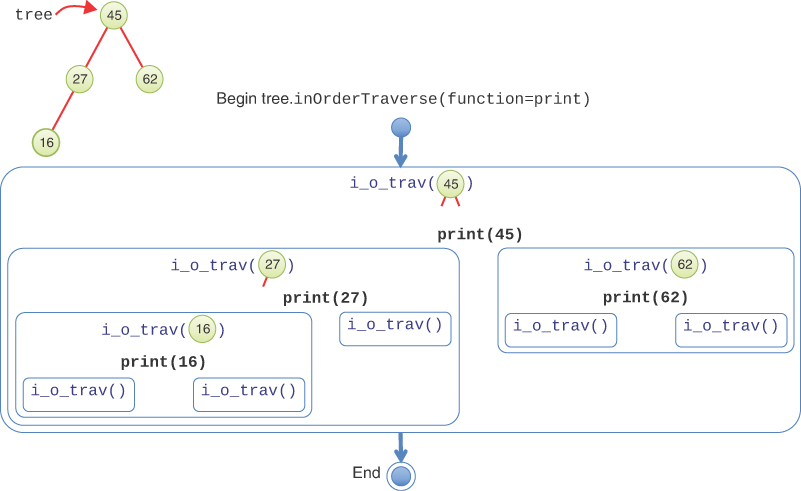

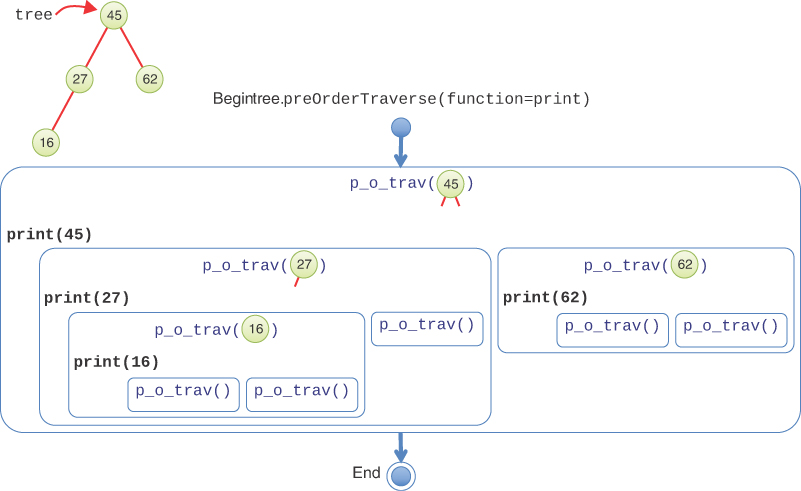

To see how this recursive traversal works, Figure 8-12 shows the calls that happen during an in-order traversal of a small binary tree. The tree variable references a four-node binary search tree. The figure shows the invocation of an inOrderTraverse() method on the tree that will call the print function on each of its nodes.

FIGURE 8-12 In-order traversal of a small tree

The blue rounded rectangles show the recursive calls on each subtree. The name of the recursive method has been abbreviated as i_o_trav() to fit all the calls in the figure. The first (outermost) call is on the root node (key 45). Each recursive call performs the three steps outlined previously. First, it makes a recursive call on the left subtree, rooted at key 27. That shows up as another blue rounded rectangle on the left of the figure.

Processing the subtree rooted at key 27 starts by making a recursive call on its left subtree, rooted at key 16. Another rectangle shows that call in the lower left. As before, its first task is to make a recursive call on its left subtree. That subtree is empty because node 16 is a leaf node; the figure shows it as a call to i_o_trav() with no arguments. Because the subtree is empty, nothing happens and the recursive call returns.

Back in the call to i_o_trav(16), it now reaches step 2 and “visits” the node by executing the function, print, on the node itself. This is shown in the figure as print(16) in black. In general, visiting a node would do more than just print the node’s key; it would take some action on the data stored at the node. The figure doesn’t show that action, but it would occur when the print(16) is executed.

After visiting the node with key 16, it’s time for step 3: call itself on the right subtree. The node with key 16 has no right child, which shows up as the smallest-sized rectangle because it is a call on an empty subtree. That completes the execution for the subtree rooted at key 16. Control passes back to the caller, the call on the subtree rooted at key 27.

The rest of the processing progresses similarly. The visit to the node with key 27 executes print(27) and then makes a call on its empty right subtree. That finishes the call on node 27 and control passes back to the call on the root of the tree, node 45. After executing print(45), it makes a call to traverse its right (nonempty) subtree. This is the fourth and final node in the tree, node 62. It makes a call on its empty left subtree, executes print(62), and finishes with a call on its empty right subtree. Control passes back up through the call on the root node, 45, and that ends the full tree traversal.
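The sequence of calls just described can be reproduced with a small tracing function. This sketch is an illustration only: it represents each node as a hypothetical (key, left, right) tuple rather than a __Node object, and in_order_trace() is an invented name. It records one line per call, indented by the depth of the recursion, using the abbreviated i_o_trav() name from the figure.

```python
def in_order_trace(node, depth=0, log=None):
    """Return a list of lines tracing an in-order traversal of node."""
    log = [] if log is None else log
    indent = '  ' * depth
    if node is None:                   # Call on an empty subtree
        log.append(indent + 'i_o_trav()')
        return log
    key, left, right = node
    log.append(indent + 'i_o_trav(%d)' % key)
    in_order_trace(left, depth + 1, log)         # Step 1: left subtree
    log.append(indent + '  print(%d)' % key)     # Step 2: visit the node
    in_order_trace(right, depth + 1, log)        # Step 3: right subtree
    return log

# The four-node tree: 45 at the root, 27 and 62 below it, 16 below 27
tree = (45, (27, (16, None, None), None), (62, None, None))
trace = in_order_trace(tree)
for line in trace:
    print(line)
```

The output contains nine calls (four on nodes and five on empty subtrees), and the print lines appear in the order 16, 27, 45, 62.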

Pre-order and Post-order Traversals

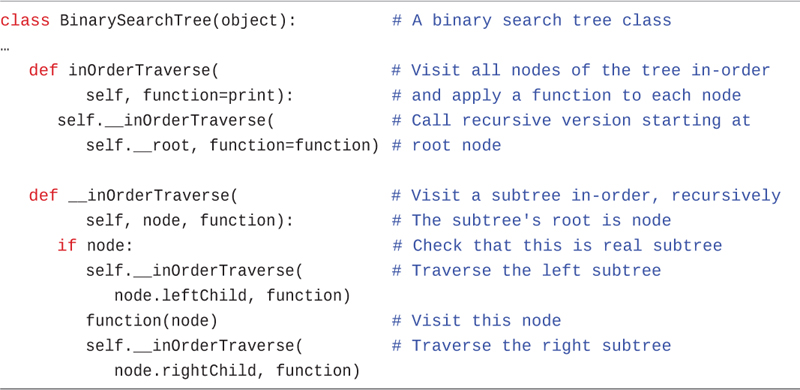

The other two traversal orders are similar: only the sequence of visiting the node changes. For pre-order traversal, the node is visited first, and for post-order, it’s visited last. The two subtrees are always visited in the same order: left and then right. Figure 8-13 shows the execution of a pre-order traversal on the same four-node tree as in Figure 8-12. The execution of the print() function happens before visiting the two subtrees. That means that the pre-order traversal would print 45, 27, 16, 62 compared to the in-order traversal’s 16, 27, 45, 62. As the figures show, the differences between the orders are small, but the overall effect is large.
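A compact way to compare the three orders is to collect the keys in a list instead of printing them. The following is a minimal sketch that assumes nodes are hypothetical (key, left, right) tuples; keys_in() is an invented helper, not one of the chapter’s methods.

```python
def keys_in(node, order):
    """Return the keys of the subtree at node in 'pre', 'in', or 'post' order."""
    if node is None:                   # Empty subtree contributes nothing
        return []
    key, left, right = node
    left_keys = keys_in(left, order)   # Left subtree always comes before
    right_keys = keys_in(right, order) # the right subtree
    if order == 'pre':                 # Node first
        return [key] + left_keys + right_keys
    if order == 'in':                  # Node in between
        return left_keys + [key] + right_keys
    return left_keys + right_keys + [key]        # Post-order: node last

# The same four-node tree used in Figures 8-12 and 8-13
tree = (45, (27, (16, None, None), None), (62, None, None))
pre, ino, post = (keys_in(tree, order) for order in ('pre', 'in', 'post'))
print(pre)
print(ino)
print(post)
```

The pre-order and in-order results match the sequences given above, and the post-order result is 16, 27, 62, 45, with the root visited last.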

FIGURE 8-13 Pre-order traversal of a small tree

Python Code for Traversing

Let’s look at a simple way of implementing the in-order traversal now. As you saw in stacks, queues, linked lists, and other data structures, it’s straightforward to define the traversal using a function passed as an argument that gets applied to each item stored in the structure. The interesting difference with trees is that recursion makes it very compact.

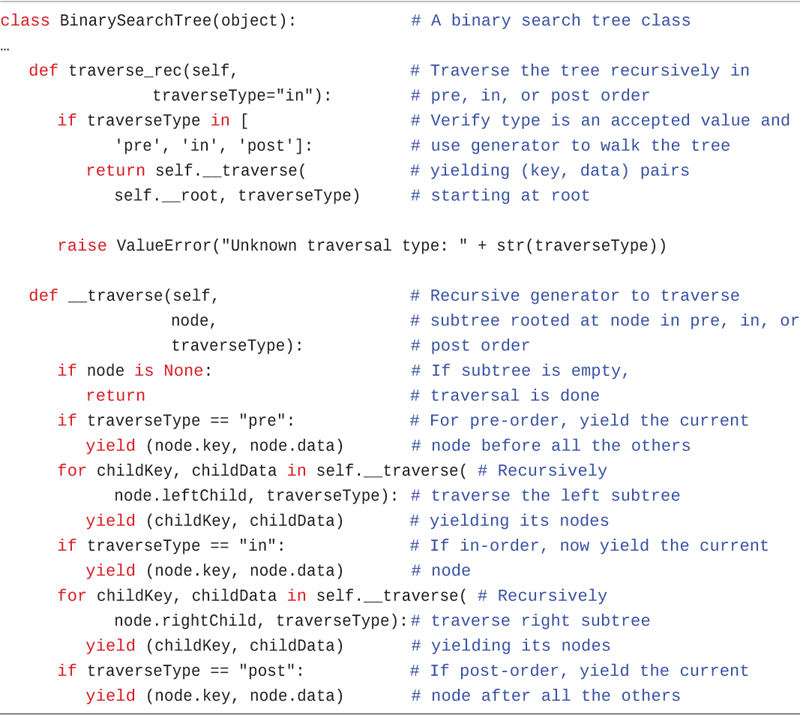

Because these trees are represented using two classes, BinarySearchTree and __Node, you need methods that can operate on both types of objects. In Listing 8-5, the inOrderTraverse() method handles the traversal on BinarySearchTree objects. It serves as the public interface to the traversal and calls a private method __inOrderTraverse() to do the actual work on subtrees. It passes the root node to the private method and returns.

LISTING 8-5 Recursive Implementation of inOrderTraverse()

    class BinarySearchTree(object):    # A binary search tree class
    …
       def inOrderTraverse(            # Visit all nodes of the tree in-order
             self, function=print):    # and apply a function to each node
          self.__inOrderTraverse(      # Call recursive version starting at
             self.__root, function=function) # root node

       def __inOrderTraverse(          # Visit a subtree in-order, recursively
             self, node, function):    # The subtree's root is node
          if node:                     # Check that this is real subtree
             self.__inOrderTraverse(   # Traverse the left subtree
                node.leftChild, function)
             function(node)            # Visit this node
             self.__inOrderTraverse(   # Traverse the right subtree
                node.rightChild, function)

The private method expects a __Node object (or None) for its node parameter and performs the three steps on the subtree rooted at the node. First, it checks node and returns immediately if it is None because that signifies an empty subtree. For legitimate nodes, it first makes a recursive call to itself to process the left child of the node. Second, it visits the node by invoking the function on it. Third, it makes a recursive call to process the node’s right child. That’s all there is to it.

Using a Generator for Traversal

The inOrderTraverse() method is straightforward, but it has at least three shortcomings. First, to implement the other orderings, you would either need to write more methods or add a parameter that specifies the ordering to perform.

Second, the function passed as an argument to “visit” each node needs to take a __Node object as argument. That’s a private class inside the BinarySearchTree that protects the nodes from being manipulated by the caller. One alternative that avoids passing a reference to a __Node object would be to pass in only the data field (and maybe the key field) of each node as arguments to the visit function. That approach would minimize what the caller could do to the node and prevent it from altering the other node references.

Third, using a function to describe the action to perform on each node has its limitations. Typically, functions perform the same operation each time they are invoked and don’t know about the history of previous calls. During the traversal of a data structure like a tree, being able to make use of the results of previous node visits dramatically expands the possible operations. Here are some examples that you might want to do:

Add up all the values in a particular field at every node.

Get a list of all the unique strings in a field from every node.

Add the node’s key to some list if none of the previously visited nodes have a bigger value in some field.

In all these traversals, it’s very convenient to be able to accumulate results somewhere during the traversal. That’s possible to do with functions, but generators make it easier. We introduced generators in Chapter 5, and because trees share many similarities with linked lists, generators are very useful for traversing trees too.
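To illustrate the difference, here is a hedged sketch that collects the unique strings stored in a tree in two ways. It assumes nodes are hypothetical (key, data, left, right) tuples, and the names in_order_apply(), in_order_items(), and remember() are invented for this comparison.

```python
def in_order_apply(node, function):    # Callback style: call
    if node is not None:               # function(key, data) at each node
        key, data, left, right = node
        in_order_apply(left, function)
        function(key, data)
        in_order_apply(right, function)

def in_order_items(node):              # Generator style: yield a
    if node is not None:               # (key, data) pair for each node
        key, data, left, right = node
        for item in in_order_items(left):
            yield item
        yield (key, data)
        for item in in_order_items(right):
            yield item

tree = (45, 'red',
        (27, 'blue', (16, 'red', None, None), None),
        (62, 'green', None, None))

seen = []                              # Callback style: the results must
def remember(key, data):               # live in a variable defined outside
    if data not in seen:               # the visit function
        seen.append(data)
in_order_apply(tree, remember)

unique = []                            # Generator style: the loop body uses
for key, data in in_order_items(tree): # ordinary variables of the caller
    if data not in unique:
        unique.append(data)

print(seen, unique)
```

Both versions produce the same list, but the generator version keeps the accumulating logic in the caller’s loop, next to the variables it updates.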

We address these shortcomings in a recursive generator version of the traverse method, traverse_rec(), shown in Listing 8-6. This version adds some complexity to the code but makes using traversal much easier. First, we add a parameter, traverseType, to the traverse_rec() method so that we don’t need three separate traverse routines. The first if statement verifies that this parameter is one of the three supported orderings: pre, in, and post. If not, it raises an exception. Otherwise, it launches the recursive private method, __traverse(), starting with the root node, just like inOrderTraverse() does.

There is an important but subtle point to note in calling the __traverse() method. The public traverse_rec() method returns the result of calling the private __traverse() method and does not simply call it as a subroutine. The reason is that the traverse_rec() method itself is not the generator; it has no yield statements. It must return the iterator produced by the call to __traverse(), which will be used by the traverse_rec() caller to iterate over the nodes.

Inside the __traverse() method, there are a series of if statements. The first one tests the base case. If node is None, then this is an empty tree (or subtree). It returns to indicate the iterator has hit the end (which Python converts to a StopIteration exception). The next if statement checks whether the traversal type is pre-order, and if it is, it yields the node’s key and data. Remember that the iterator will be paused at this point while control passes back to its caller. That is where the node will be “visited.” After the processing is done, the caller’s loop invokes this iterator to get the next node. The iterator resumes processing right after the yield statement, remembering all the context.

When the iterator resumes (or if the order was something other than pre-order), the next step is a for loop. This is a recursive generator to perform the traversal of the left subtree. It calls the __traverse() method on the node’s leftChild using the same traverseType. That creates its own iterator to process the nodes in that subtree. As nodes are yielded back as key, data pairs, this higher-level iterator yields them back to its caller. This loop construction produces a nested stack of iterators, similar to the nested invocations of i_o_trav() shown in Figure 8-12. When each iterator returns at the end of its work, it raises a StopIteration. The enclosing iterator catches each exception, so the various levels don’t interfere with one another.

LISTING 8-6 The Recursive Generator for Traversal

    class BinarySearchTree(object):    # A binary search tree class
    …
       def traverse_rec(self,          # Traverse the tree recursively in
                        traverseType="in"): # pre, in, or post order
          if traverseType in [         # Verify type is an accepted value and
                'pre', 'in', 'post']:  # use generator to walk the tree
             return self.__traverse(   # yielding (key, data) pairs
                self.__root, traverseType) # starting at root

          raise ValueError("Unknown traversal type: " + str(traverseType))

       def __traverse(self,            # Recursive generator to traverse
                      node,            # subtree rooted at node in pre, in, or
                      traverseType):   # post order
          if node is None:             # If subtree is empty,
             return                    # traversal is done

          if traverseType == "pre":    # For pre-order, yield the current
             yield (node.key, node.data) # node before all the others

          for childKey, childData in self.__traverse( # Recursively
                node.leftChild, traverseType): # traverse the left subtree
             yield (childKey, childData) # yielding its nodes

          if traverseType == "in":     # If in-order, now yield the current
             yield (node.key, node.data) # node

          for childKey, childData in self.__traverse( # Recursively
                node.rightChild, traverseType): # traverse right subtree
             yield (childKey, childData) # yielding its nodes

          if traverseType == "post":   # If post-order, yield the current
             yield (node.key, node.data) # node after all the others

The rest of the __traverse() method is straightforward. After finishing the loop over all the nodes in the left subtree, the next if statement checks for the in-order traversal type and yields the node’s key and data, if that’s the ordering. The node gets processed between the left and right subtrees for an in-order traversal. After that, the right subtree is processed in its own loop, yielding each of the visited nodes back to its caller. After the right subtree is done, a check for post-order traversal determines whether the node should be yielded at this stage or not. After that, the __traverse() generator is done, ending its caller’s loop.
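As an aside, Python 3.3 and later provide the yield from statement, which delegates to a sub-generator and passes each of its items up to the caller. The sketch below shows how the same recursive generator might look using it. It is a variation for comparison, not the listing’s code, and it assumes nodes are hypothetical (key, data, left, right) tuples and a standalone function instead of a method.

```python
def traverse(node, traverseType='in'):
    """Recursive generator yielding (key, data) pairs in the given order."""
    if node is None:                   # Empty subtree: nothing to yield
        return
    key, data, left, right = node
    if traverseType == 'pre':          # Pre-order: node before children
        yield (key, data)
    yield from traverse(left, traverseType)      # All of the left subtree
    if traverseType == 'in':           # In-order: node between children
        yield (key, data)
    yield from traverse(right, traverseType)     # All of the right subtree
    if traverseType == 'post':         # Post-order: node after children
        yield (key, data)

tree = (45, 'a',
        (27, 'b', (16, 'c', None, None), None),
        (62, 'd', None, None))
for order in ('pre', 'in', 'post'):
    print(order, [key for key, data in traverse(tree, order)])
```

The delegation shortens the code, but each item still passes through one generator per level of the tree, so it does not remove the layering of iterators.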

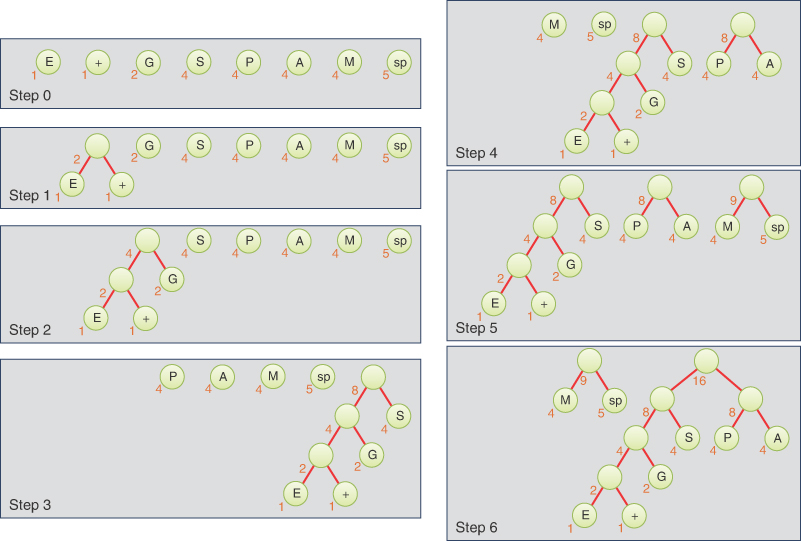

Making the Generator Efficient

The recursive generator has the advantage of structural simplicity. The base cases and recursive calls follow the node and child structure of the tree. Developing the prototype and proving its correct behavior flow naturally from this structure.

The generator does, however, suffer some inefficiency in execution. Each invocation of the __traverse() method invokes two loops: one for the left and one for the right child. Each of those loops creates a new iterator to yield the items from their subtrees back through the iterator created by this invocation of the __traverse() method itself. That layering of iterators extends from the root down to each leaf node.

Traversing the N items in the tree should take O(N) time, but creating a stack of iterators from the root down to each leaf adds work that’s proportional to the depth of each node, because every item is passed up through each iterator above it. The leaves are at O(log N) depth in the best case, a balanced tree, so the overall traversal of N items takes O(N×log N) time. In a completely unbalanced tree, the depth grows to O(N) and the traversal takes O(N²) time.
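One way to see this extra work is to count how many yield statements execute during a full traversal. The following sketch does that for a balanced tree and for a completely unbalanced one, each with 15 keys. The tuple representation of nodes, the one-element counter list, and the helper names balanced(), chain(), and count_yields() are assumptions made for this experiment.

```python
def traverse(node, counter):           # In-order recursive generator that
    if node is None:                   # counts every yield it executes
        return
    key, left, right = node
    for k in traverse(left, counter):
        counter[0] += 1                # Passing a left subtree key upward
        yield k
    counter[0] += 1                    # Yielding this node's own key
    yield key
    for k in traverse(right, counter):
        counter[0] += 1                # Passing a right subtree key upward
        yield k

def balanced(lo, hi):                  # Balanced tree holding keys lo..hi
    if lo > hi:
        return None
    mid = (lo + hi) // 2
    return (mid, balanced(lo, mid - 1), balanced(mid + 1, hi))

def chain(n):                          # Unbalanced tree: every node has
    node = None                        # only a right child
    for key in range(n, 0, -1):
        node = (key, None, node)
    return node

def count_yields(tree):                # Return (number of keys, yields run)
    counter = [0]
    keys = list(traverse(tree, counter))
    return len(keys), counter[0]

balanced_counts = count_yields(balanced(1, 15))
chain_counts = count_yields(chain(15))
print(balanced_counts, chain_counts)
```

Each key is yielded once for every level between its node and the root, so the 15 keys cost 49 yields in the balanced tree and 120 in the chain.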

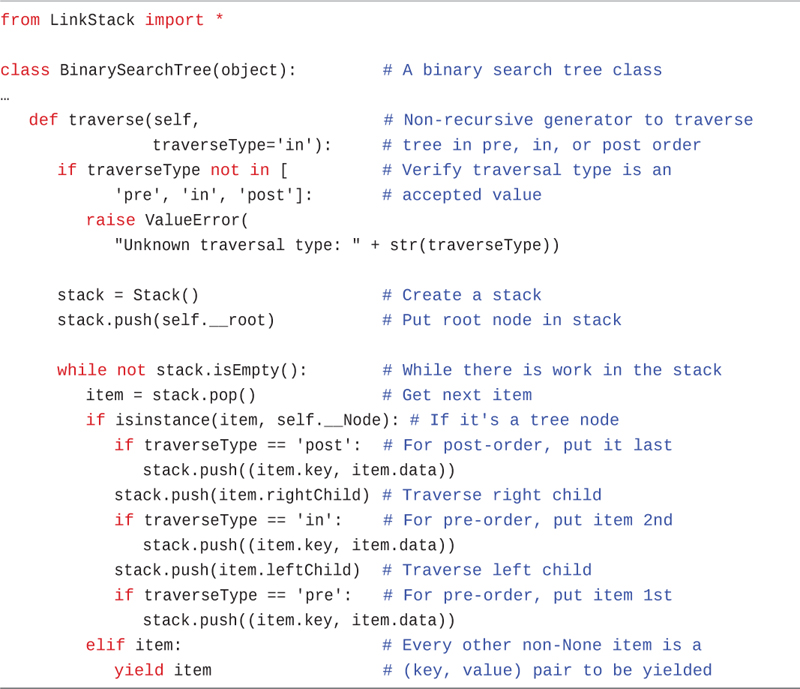

To achieve O(N) time, you need to apply the method discussed at the end of Chapter 6 and use a stack to hold the items being processed. The items include both Node structures and the (key, data) pairs stored at the nodes to be traversed in a particular order. Listing 8-7 shows the code.

The nonrecursive method combines the two parts of the recursive approach into a single traverse() method. The same check for the validity of the traversal type happens at the beginning. The next step creates a stack, using the Stack class built on a linked list from Chapter 5 (defined in the LinkStack module).

Initially, the method pushes the root node of the tree on the stack. That means the remaining work to do is the entire tree starting at the root. The while loop that follows works its way through the remaining work until the stack is empty.

At each pass through the while loop, the top item of the stack is popped off. Three kinds of items could be on the stack: a Node item, a (key, data) tuple, or None. The last of these appears when the tree is empty and when the method processes leaf nodes (and finds their children are None).

If the top of the stack is a Node item, the traverse() method determines how to process the node’s data and its children based on the requested traversal order. It pushes items onto the stack to be processed on subsequent passes through the while loop. Because the items will be popped off the stack in the reverse order from the way they were pushed onto it, it starts by handling the case for post-order traversal.

In post-order, the first item pushed is the node’s (key, data) tuple. Because it is pushed first, it will be processed last overall. The next item pushed is the node’s right child. In post-order, this is traversed just before processing the node’s data. For the other orders, the right child is always the last node processed.

After pushing on the right child, the next if statement checks whether the in-order traversal was requested. If so, it pushes the node’s (key, data) tuple on the stack to be processed in-between the two child nodes. That’s followed by pushing the left child on the stack for processing.

LISTING 8-7 The Nonrecursive traverse() Generator

    from LinkStack import *

    class BinarySearchTree(object):    # A binary search tree class
    …
       def traverse(self,              # Non-recursive generator to traverse
                    traverseType='in'): # tree in pre, in, or post order
          if traverseType not in [     # Verify traversal type is an
                'pre', 'in', 'post']:  # accepted value
             raise ValueError(
                "Unknown traversal type: " + str(traverseType))

          stack = Stack()              # Create a stack
          stack.push(self.__root)      # Put root node in stack

          while not stack.isEmpty():   # While there is work in the stack
             item = stack.pop()        # Get next item
             if isinstance(item, self.__Node): # If it's a tree node
                if traverseType == 'post': # For post-order, put it last
                   stack.push((item.key, item.data))
                stack.push(item.rightChild) # Traverse right child
                if traverseType == 'in': # For in-order, put item 2nd
                   stack.push((item.key, item.data))
                stack.push(item.leftChild) # Traverse left child
                if traverseType == 'pre': # For pre-order, put item 1st
                   stack.push((item.key, item.data))
             elif item:                # Every other non-None item is a
                yield item             # (key, data) pair to be yielded

Finally, the last if statement checks whether the pre-order traversal was requested and, if so, pushes the node’s (key, data) tuple on the stack for processing before the left and right children. It will be popped off during the next pass through the while loop. That completes all the work for a Node item.

The final elif statement checks for a non-None item on the stack, which must be a (key, data) tuple. When the loop finds such a tuple, it yields it back to the caller. The yield statement ensures that the traverse() method becomes a generator, not a function.

The loop doesn’t have any explicit handling of the None values that get pushed on the stack for empty root and child links. The reason is that there’s nothing to do for them: just pop them off the stack and continue on to the remaining work.

Using the stack, you have now made an O(N) generator. Each node of the tree is visited exactly once, pushed on the stack, and later popped off. Its key-data pairs and child links are also pushed on and popped off exactly once. The ordering of the node visits and child links follows the requested traversal ordering. Using the stack and carefully reversing the items pushed onto it make the code slightly more complex to understand but improve the performance.
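To experiment with the stack-based approach outside the BinarySearchTree class, you could try a standalone sketch like the one below. It makes two assumptions that differ from the listing: a plain Python list stands in for the Stack class of the LinkStack module, and a simple public Node class stands in for the private __Node class.

```python
class Node(object):                    # Hypothetical stand-in for __Node
    def __init__(self, key, data, left=None, right=None):
        self.key, self.data = key, data
        self.leftChild, self.rightChild = left, right

def traverse(root, traverseType='in'): # Non-recursive traversal generator
    if traverseType not in ['pre', 'in', 'post']:
        raise ValueError("Unknown traversal type: " + str(traverseType))
    stack = [root]                     # A list used as a stack of work
    while stack:                       # While there is work remaining
        item = stack.pop()             # Get the most recently pushed item
        if isinstance(item, Node):     # Nodes are expanded into more work
            if traverseType == 'post': # Pushed first, so processed last
                stack.append((item.key, item.data))
            stack.append(item.rightChild)
            if traverseType == 'in':   # Between the two children
                stack.append((item.key, item.data))
            stack.append(item.leftChild)
            if traverseType == 'pre':  # Pushed last, so processed first
                stack.append((item.key, item.data))
        elif item:                     # A (key, data) tuple is yielded;
            yield item                 # None items are simply dropped

root = Node(45, 'a', Node(27, 'b', Node(16, 'c')), Node(62, 'd'))
orders = {t: [key for key, data in traverse(root, t)]
          for t in ('pre', 'in', 'post')}
print(orders)
```

The three key sequences match those produced by the recursive versions for the same four-node tree, and traversing an empty tree (a root of None) yields nothing.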

Using the Generator for Traversing

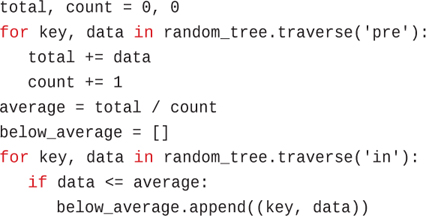

The generator approach (both recursive and stack-based) makes the caller’s loops easy. For example, if you want to collect all the items in a tree whose data is at or below the average data value, you could use two loops:

    total, count = 0, 0
    for key, data in random_tree.traverse('pre'):
       total += data
       count += 1
    average = total / count
    below_average = []
    for key, data in random_tree.traverse('in'):
       if data <= average:
          below_average.append((key, data))

The first loop counts the number of items in random_tree and sums up their data values. The second loop finds all the items whose data is less than or equal to the average and appends the key and data pair to the below_average list. Because the second loop is done in in-order, the keys in below_average are in ascending order. Being able to reference the variables that accumulate results (total, count, and below_average) without defining some global (or nonlocal) variables outside a function body makes using the generator very convenient for traversal.

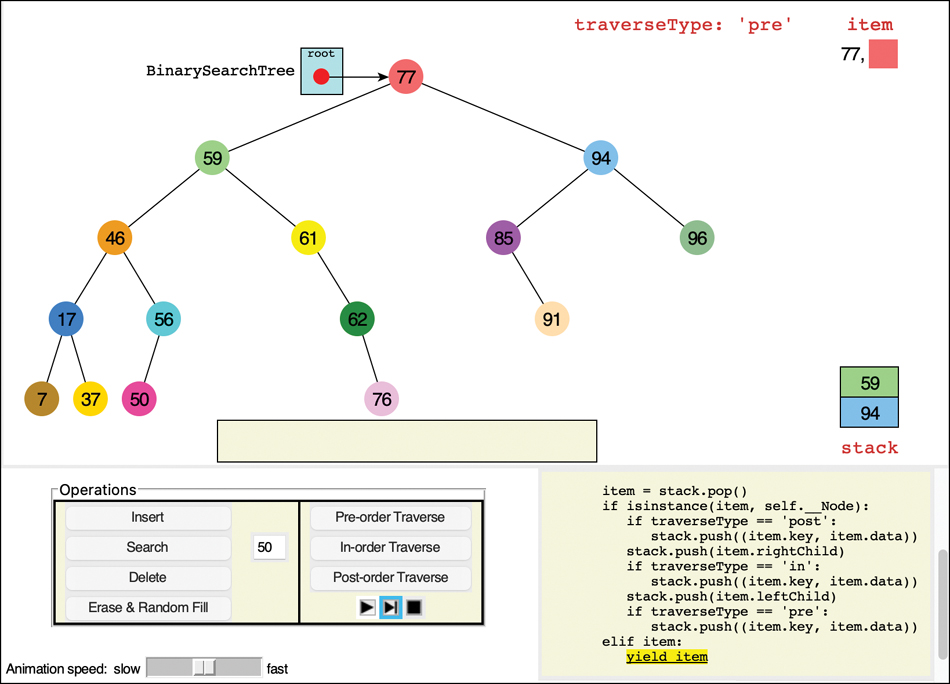

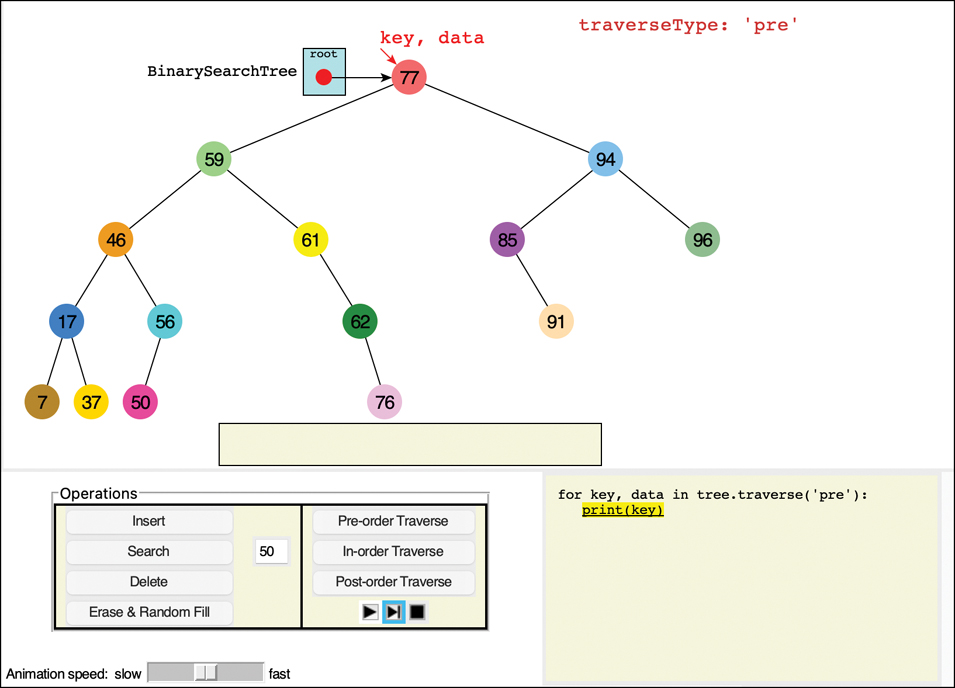

Traversing with the Visualization Tool

The Binary Search Tree Visualization tool allows you to explore the details of traversal using generators. You can launch any of the three kinds of traversals by selecting the Pre-order Traverse, In-order Traverse, or Post-order Traverse buttons. In each case, the tool executes a simple loop of the form

    for key, data in tree.traverse("pre"):
       print(key)

To see the details, use the Step button (you can launch an operation in step mode by holding down the Shift key when selecting the button). In the code window, you first see the short traversal loop. The example calls the traverse() method to visit all the keys and data in a loop using one of the orders such as pre.

Figure 8-14 shows a snapshot near the beginning of a pre-order traversal. The code for the traverse() method appears at the lower right. To the right of the tree above the code, the stack is shown. The nodes containing keys 59 and 94 are on the stack. The top of the stack was already popped off and moved to the top right under the item label. It shows the key, 77, with a comma separating it from its colored rectangle to represent the (key, data) tuple that was pushed on the stack. The yield statement is highlighted, showing that the traverse() iterator is about to yield the key and data back to the caller. The loop that called traverse() has scrolled off the code display but will be shown on the next step.

FIGURE 8-14 Traversing a tree in pre-order using the traverse() iterator

When control returns to the calling loop, the traverse() iterator disappears from the code window and so does the stack, as shown in Figure 8-15. The key and data variables are now bound to 77 and the root node’s data. The print statement is highlighted because the program is about to print the key in the output box along the bottom of the tree. The next step shows key 77 being copied to the output box.

FIGURE 8-15 The loop calling the traverse() iterator

After printing, control returns to the for key, data in tree.traverse('pre') loop. That pushes the traverse() iterator back on the code display, along with its stack, similar to Figure 8-14. The while loop in the iterator finds that the stack is not empty, so it pops off the top item. That item is node 59, the left child of node 77. The process repeats by pushing node 59’s children and the node’s (key, data) tuple on the stack. On the next loop iteration, that tuple is popped off the stack and yielded back to the print loop.

The processing of iterators is complex to describe, and the Visualization tool makes it easier to follow the different levels and steps than reading a written description. Try stepping through the processing of several nodes, including when the iterator reaches a leaf node and pushes None on the stack. The stack guides the iterator to return to nodes that remain to be processed.

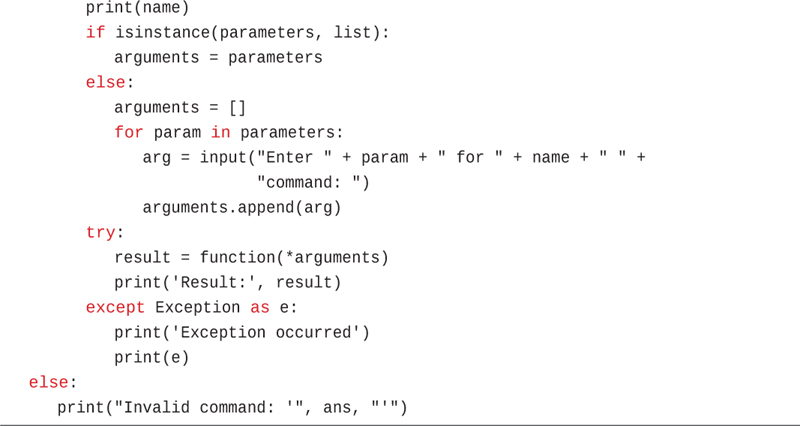

Traversal Order

What’s the point of having three traversal orders? One advantage is that in-order traversal guarantees an ascending order of the keys in binary search trees. There’s a separate motivation for pre- and post-order traversals. They are very useful if you’re writing programs that parse or analyze algebraic expressions. Let’s see why that is the case.

A binary tree (not a binary search tree) can be used to represent an algebraic expression that involves binary arithmetic operators such as +, –, /, and *. The root node and every nonleaf node hold an operator. The leaf nodes hold either a variable name (like A, B, or C) or a number. Each subtree is a valid algebraic expression.

For example, the binary tree shown in Figure 8-16 represents the algebraic expression

(A+B) * C – D / E

FIGURE 8-16 Binary tree representing an algebraic expression

This is called infix notation; it’s the notation normally used in algebra. (For more on infix and postfix, see the section “Parsing Arithmetic Expressions” in Chapter 4.) Traversing the tree in order generates the correct in-order sequence A+B*C–D/E, but you need to insert the parentheses yourself to get the expected order of operations. Note that subtrees form their own subexpressions like the (A+B) * C outlined in the figure.
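The need for parentheses can be seen in a short sketch. It assumes the expression tree is stored as hypothetical (value, left, right) tuples with single-character operators and operands; leaf(), in_order(), and infix() are invented helper names. The first function performs a plain in-order traversal, and the second wraps every operator’s subexpression in parentheses.

```python
def leaf(value):                       # A leaf holds a variable or number
    return (value, None, None)

def in_order(node):                    # Plain in-order traversal: the
    if node is None:                   # grouping of operations is lost
        return ''
    value, left, right = node
    return in_order(left) + value + in_order(right)

def infix(node):                       # In-order traversal that wraps each
    value, left, right = node          # operator's subtree in parentheses
    if left is None and right is None:
        return value                   # Leaves need no parentheses
    return '(' + infix(left) + value + infix(right) + ')'

# The tree for (A+B) * C - D / E, with the subtraction at the root
expr = ('-',
        ('*', ('+', leaf('A'), leaf('B')), leaf('C')),
        ('/', leaf('D'), leaf('E')))
plain = in_order(expr)
grouped = infix(expr)
print(plain)
print(grouped)
```

The plain traversal produces A+B*C-D/E, while the parenthesized version produces (((A+B)*C)-(D/E)). That version has more parentheses than strictly necessary, but it preserves the order of operations encoded in the tree.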

What does all this have to do with pre-order and post-order traversals? Let’s see what’s involved in performing a pre-order traversal. The steps are

Visit the node.

Call itself to traverse the node’s left subtree.

Call itself to traverse the node’s right subtree.

Traversing the tree shown in Figure 8-16 using pre-order and printing the node’s value would generate the expression

–*+ABC/DE

This is called prefix notation. It may look strange the first time you encounter it, but one of its nice features is that parentheses are never required; the expression is unambiguous without them. Starting on the left, each operator is applied to the next two things to its right in the expression, called the operands. For the first operator, –, these two things are a product expression, *+ABC, and a division expression, /DE. For the second operator, *, the two things are a sum expression, +AB, and a single variable, C. For the third operator, +, the two things it operates on are the variables, A and B, so this last expression would be A+B in in-order notation. Finally, the fourth operator, /, operates on the two variables D and E.
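The following sketch shows one way the pre-order steps and the left-to-right reading of prefix notation might look in code. ExprNode, preorder(), and read_prefix() are hypothetical names, every token is assumed to be a single character, and the ASCII hyphen stands in for the minus sign.

```python
class ExprNode(object):              # Hypothetical expression tree node
    def __init__(self, value, left=None, right=None):
        self.value = value
        self.left = left
        self.right = right

def preorder(node):                  # Pre-order traversal generator
    if node is None:                 # Nothing to visit in an empty subtree
        return
    yield node.value                 # Visit the node
    yield from preorder(node.left)   # Traverse the left subtree
    yield from preorder(node.right)  # Traverse the right subtree

def read_prefix(tokens, pos=0):      # Interpret prefix starting at pos
    token = tokens[pos]              # Return (infix string, next position)
    if token in '+-*/':              # An operator applies to the next
        left, pos = read_prefix(tokens, pos + 1)   # two things to
        right, pos = read_prefix(tokens, pos)      # its right
        return '(' + left + token + right + ')', pos
    return token, pos + 1            # A variable or number stands alone

figure_8_16 = ExprNode(
    '-',
    ExprNode('*',
             ExprNode('+', ExprNode('A'), ExprNode('B')),
             ExprNode('C')),
    ExprNode('/', ExprNode('D'), ExprNode('E')))

prefix = ''.join(preorder(figure_8_16))
print(prefix)                        # -*+ABC/DE
print(read_prefix(prefix)[0])        # (((A+B)*C)-(D/E))
```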

The third kind of traversal, post-order, contains the three steps arranged in yet another way:

Call itself to traverse the node’s left subtree.

Call itself to traverse the node’s right subtree.

Visit the node.

For the tree in Figure 8-16, visiting the nodes with a post-order traversal generates the expression

AB+C*DE/–

This is called postfix notation. It means “apply the last operator in the expression, –, to the two things immediately to the left of it.” The first thing is AB+C*, and the second thing is DE/. Analyzing the first thing, AB+C*, shows its meaning to be “apply the * operator to the two things immediately to the left of it, AB+ and C.” Analyzing the first thing of that expression, AB+, shows its meaning to be “apply the + operator to the two things immediately to the left of it, A and B.” It’s hard to see initially, but the “things” are always one of three kinds: a single variable, a single number, or an expression ending in a binary operator.

To process the meaning of a postfix expression, you start from the last character on the right and interpret it as follows. If it’s a binary operator, then you repeat the process to interpret two subexpressions on its left, which become the operands of the operator. If it’s a letter, then it’s a simple variable, and if it’s a number, then it’s a constant. For both variables and numbers, you “pop” them off the right side of the expression and return them to the process of the enclosing expression.
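The right-to-left interpretation just described can be sketched as a short recursive function. The name read_postfix() is hypothetical, tokens are assumed to be single characters held in a Python list, and the result is returned as a fully parenthesized infix string purely to make the grouping visible.

```python
def read_postfix(tokens):            # Interpret postfix from the right
    token = tokens.pop()             # Take the last item on the right
    if token in ('+', '-', '*', '/'):  # A binary operator needs two
        right = read_postfix(tokens)   # subexpressions; the nearer one
        left = read_postfix(tokens)    # is the right operand
        return '(' + left + token + right + ')'
    return token                     # A variable or a number

tokens = list('AB+C*DE/-')           # Postfix form of Figure 8-16
print(read_postfix(tokens))          # (((A+B)*C)-(D/E))
print(tokens)                        # [] since every token was consumed
```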

We don’t show the details here, but you can easily construct a tree like that in Figure 8-16 by using a postfix expression as input. The approach is analogous to that of evaluating a postfix expression, which you saw in the PostfixTranslate.py program in Chapter 4 and its corresponding InfixCalculator Visualization tool. Instead of storing operands on the stack, however, you store entire subtrees. You read along the postfix string from left to right as you did in the PostfixEvaluate() method. Here are the steps when you encounter an operand (a variable or a number):

Make a tree with one node that holds the operand.

Push this tree onto the stack.

Here are the steps when you encounter an operator, O:

Pop two operand trees R and L off the stack (the top of the stack has the rightmost operand, R).

Create a new tree T with the operator, O, in its root.

Attach R as the right child of T.

Attach L as the left child of T.

Push the resulting tree, T, back on the stack.

When you’re done evaluating the postfix string, you pop the one remaining item off the stack. Somewhat amazingly, this item is a complete tree depicting the algebraic expression. You can then see the prefix and infix representations of the original postfix notation (and recover the postfix expression) by traversing the tree in one of the three orderings we described. We leave an implementation of this process as an exercise.

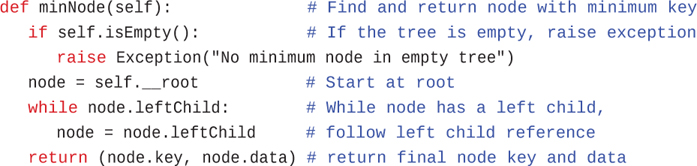

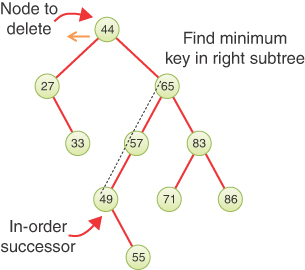

Finding Minimum and Maximum Key Values

Incidentally, you should note how easy it is to find the minimum and maximum key values in a binary search tree. In fact, this process is so easy that we don’t include it as an option in the Visualization tool. Still, understanding how it works is important.

For the minimum, go to the left child of the root; then go to the left child of that child, and so on, until you come to a node that has no left child. This node is the minimum. Similarly, for the maximum, start at the root and follow the right child links until they end. That will be the maximum key in the tree, as shown in Figure 8-17.

FIGURE 8-17 Minimum and maximum key values of a binary search tree

Here’s some code that returns the minimum node’s data and key values:

    def minNode(self):                   # Find and return node with minimum key
        if self.isEmpty():               # If the tree is empty, raise exception
            raise Exception("No minimum node in empty tree")
        node = self.__root               # Start at root
        while node.leftChild:            # While node has a left child,
            node = node.leftChild        # follow left child reference
        return (node.key, node.data)     # return final node key and data

Finding the maximum is similar; just swap the right for the left child. You learn about an important use of finding the minimum value in the next section about deleting nodes.
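A sketch of the mirrored method might look like the following. TinyTree and Node are hypothetical stand-ins that only mimic the field names used by minNode() (__root, leftChild, rightChild, key, data); they are not the chapter's BinarySearchTree class, and the small tree is linked by hand rather than built with insert().

```python
class Node(object):                  # Hypothetical stand-in node
    def __init__(self, key, data, left=None, right=None):
        self.key = key
        self.data = data
        self.leftChild = left
        self.rightChild = right

class TinyTree(object):              # Hypothetical stand-in tree
    def __init__(self, root=None):
        self.__root = root           # Root node or None if empty

    def isEmpty(self):
        return self.__root is None

    def maxNode(self):               # Find and return maximum key and data
        if self.isEmpty():           # If the tree is empty, raise exception
            raise Exception("No maximum node in empty tree")
        node = self.__root           # Start at root
        while node.rightChild:       # While node has a right child,
            node = node.rightChild   # follow right child reference
        return (node.key, node.data) # return final node key and data

tree = TinyTree(
    Node(44, 'forty-four',
         Node(27, 'twenty-seven'),
         Node(65, 'sixty-five', None, Node(83, 'eighty-three'))))
print(tree.maxNode())                # (83, 'eighty-three')
```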

Deleting a Node

Deleting a node is the most complicated common operation required for binary search trees. The fundamental operation of deletion can’t be ignored, however, and studying the details builds character. If you’re not in the mood for character building, feel free to skip to the “Efficiency of Binary Search Trees” section.

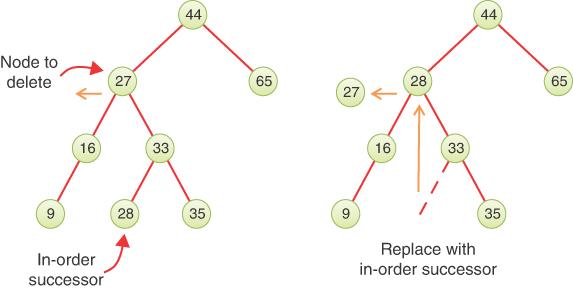

You start by verifying the tree isn’t empty and then finding the node you want to delete, using the same approach you saw in __find() and insert(). If the node isn’t found, then you’re done. When you’ve found the node and its parent, there are three cases to consider:

The node to be deleted is a leaf (has no children).

The node to be deleted has one child.

The node to be deleted has two children.

Let’s look at these three cases in turn. The first is easy; the second, almost as easy; and the third, quite complicated.

Case 1: The Node to Be Deleted Has No Children

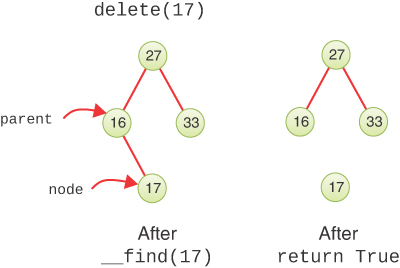

To delete a leaf node, you simply change the appropriate child field in the node’s parent to None instead of to the node. The node object still exists, but it is no longer part of the tree, as shown when deleting node 17 in Figure 8-18.

FIGURE 8-18 Deleting a node with no children

If you’re using a language like Python or Java that has garbage collection, the deleted node’s memory will eventually be reclaimed for other uses (if you eliminate all references to it in the program). In languages that require explicit allocation and deallocation of memory, the deleted node should be released for reuse.

Using the Visualization Tool to Delete a Node with No Children

Try deleting a leaf node using the Binary Search Tree Visualization tool. You can either type the key of a node in the text entry box or select a leaf with your pointer device and then select Delete. You see the program use __find() to locate the node by its key, copy it to a temporary variable, set the parent link to None, and then “return” the deleted key and data (in the form of its colored background).

Case 2: The Node to Be Deleted Has One Child

This second case isn’t very difficult either. The node has only two edges: one to its parent and one to its only child. You want to “cut” the node out of this sequence by connecting its parent directly to its child. This process involves changing the appropriate reference in the parent (leftChild or rightChild or __root) to point to the deleted node’s child. Figure 8-19 shows the deletion of node 16, which has only one child.

FIGURE 8-19 Deleting a node with one child

After finding the node and its parent, the delete method has to change only one reference. The deleted node, key 16 in the figure, becomes disconnected from the tree (although it may still have a child pointer to the node that was promoted up, key 20). Garbage collectors are sophisticated enough to know that they can reclaim the deleted node without following its links to other nodes that might still be needed.

Now let’s go back to the case of deleting a node with no children. In that case, the delete method also made a single change to replace one of the parent’s child pointers. That pointer was set to None because there was no replacement child node. That’s a similar operation to Case 2, so you can treat Case 1 and Case 2 together by saying, “If the node to be deleted, D, has 0 or 1 children, replace the appropriate link in its parent with either the left child of D, if it isn’t empty, or the right child of D.” If both child links from D are None, then you’ve covered Case 1. If only one of D’s child links is non-None, then the appropriate child will be selected as the parent’s new child, covering Case 2. You promote either the single child or None into the parent’s child (or possibly __root) reference.
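The combined rule for Cases 1 and 2 can be sketched in a few lines. The Node class and the promote_child() helper are hypothetical, the parent is assumed to be an ordinary node (the __root case is left out to keep the sketch short), and the parent key 27 is invented for the example.

```python
class Node(object):                  # Hypothetical node using the
    def __init__(self, key, left=None, right=None):  # chapter's field names
        self.key = key
        self.leftChild = left
        self.rightChild = right

def promote_child(parent, node):     # Remove node having 0 or 1 children
    # Pick the left child of node if it isn't empty, else the right child
    # (which is None when node is a leaf, covering Case 1)
    replacement = node.leftChild if node.leftChild else node.rightChild
    if parent.leftChild is node:     # Replace whichever parent link
        parent.leftChild = replacement   # referred to node
    else:
        parent.rightChild = replacement

n20 = Node(20)
n16 = Node(16, right=n20)            # Node 16 has only a right child
n27 = Node(27, left=n16)             # Invented parent for this example

promote_child(n27, n16)              # Case 2: node 20 moves up
print(n27.leftChild.key)             # 20
promote_child(n27, n20)              # Case 1: leaf 20 replaced by None
print(n27.leftChild)                 # None
```

Only one of the parent's references changes in either call; the removed node keeps whatever child reference it had.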

Using the Visualization Tool to Delete a Node with One Child

Let’s assume you’re using the Visualization tool on the tree in Figure 8-5 and deleting node 61, which has a right child but no left child. Click node 61 and the key should appear in the text entry area, enabling the Delete button. Selecting the button starts another call to __find() that stops with current pointing to the node and parent pointing to node 59.

After making a copy of node 61, the animation shows the right child link from node 59 being set to node 61’s right child, node 62. The original copy of node 61 goes away, and the tree is adjusted to put the subtree rooted at node 62 into its new position. Finally, the copy of node 61 is moved to the output box at the bottom.

Use the Visualization tool to generate new trees with single child nodes and see what happens when you delete them. Look for the subtree whose root is the deleted node’s child. No matter how complicated this subtree is, it’s simply moved up and plugged in as the new child of the deleted node’s parent.

Python Code to Delete a Node

Let’s now look at the code for at least Cases 1 and 2. Listing 8-8 shows the code for the delete() method, which takes one argument, the key of the node to delete. It returns either the data of the node that was deleted or None, to indicate the node was not found. That makes it behave somewhat like the methods for popping an item off a stack or deleting an item from a queue. The difference is that the node must be found inside the tree instead of being at a known position in the data structure.

LISTING 8-8 The delete() Method of BinarySearchTree

    class BinarySearchTree(object):          # A binary search tree class
    …
        def delete(self, goal):              # Delete a node whose key matches goal
            node, parent = self.__find(goal) # Find goal and its parent
            if node is not None:             # If node was found,
                return self.__delete(        # then perform deletion at node
                    parent, node)            # under the parent

        def __delete(self,                   # Delete the specified node in the tree
                     parent, node):          # modifying the parent node/tree
            deleted = node.data              # Save the data that's to be deleted
            if node.leftChild:               # Determine number of subtrees
                if node.rightChild:          # If both subtrees exist,
                    self.__promote_successor(  # Then promote successor to
                        node)                # replace deleted node
                else:                        # If no right child, move left child up
                    if parent is self:       # If parent is the whole tree,
                        self.__root = node.leftChild   # update root
                    elif parent.leftChild is node:     # If node is parent's left,
                        parent.leftChild = node.leftChild  # child, update left
                    else:                    # else update right child
                        parent.rightChild = node.leftChild
            else:                            # No left child; so promote right child
                if parent is self:           # If parent is the whole tree,
                    self.__root = node.rightChild      # update root
                elif parent.leftChild is node:         # If node is parent's left
                    parent.leftChild = node.rightChild # child, then update
                else:                        # left child link else update
                    parent.rightChild = node.rightChild  # right child
            return deleted                   # Return the deleted node's data

Just like for insertion, the first step is to find the node to delete and its parent. If that search does not find the goal node, then there’s nothing to delete from the tree, and delete() returns None. If the node to delete is found, the node and its parent are passed to the private __delete() method to modify the nodes in the tree.

Inside the __delete() method, the first step is to store a reference to the node data being deleted. This step enables retrieval of the node’s data after the references to it are removed from the tree. The next step checks how many subtrees the node has. That determines what case is being processed. If both a left and a right child are present, that’s Case 3, and it hands off the deletion to another private method, __promote_successor(), which we describe a little later.

If there is only a left subtree of the node to delete, then the next thing to look at is its parent node. If the parent is the BinarySearchTree object (self), then the node to delete must be the root node, so the left child is promoted into the root node slot. If the parent’s left child is the node to delete, then the parent’s left child link is replaced with the node’s left child to remove the node. Otherwise, the parent’s right child link is updated to remove the node.

Notice that working with references makes it easy to move an entire subtree. When the parent’s reference to the node is updated, the child that gets promoted could be a single node or an immense subtree. Only one reference needs to change. Although there may be lots of nodes in the subtree, you don’t need to worry about moving them individually. In fact, they “move” only in the sense of being conceptually in different positions relative to the other nodes. As far as the program is concerned, only the parent’s reference to the root of the subtree has changed, and the rest of the contents in memory remain the same.

The final else clause of the __delete() method deals with the case when the node has no left child. Whether or not the node has a right child, __delete() only needs to update the parent’s reference to point at the node’s right child. That handles both Case 1 and Case 2. It still must determine which field of the parent object gets the reference to the node’s right child, just as in the earlier lines when only the left child was present. It puts the node.rightChild in either the __root, leftChild, or rightChild field of the parent, accordingly. Finally, it returns the data of the node that was deleted.