The TensorFlow programming model describes how to structure your predictive models. Once you have imported the TensorFlow library, a TensorFlow program is generally divided into four phases:

- Construction of the computational graph, which consists of operations on tensors (we will see what a tensor is soon)

- Creation of a session

- Running the session to execute the operations defined in the graph

- Collection and analysis of the computed results
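To make the four phases concrete without depending on a particular TensorFlow version, here is a minimal pure-Python sketch of the same build-then-run pattern. Node, constant, multiply, and ToySession are hypothetical names invented for this illustration; they are not TensorFlow APIs, and a real TensorFlow graph does far more (type checking, device placement, optimization).

```python
# Toy model of the "build the graph first, run it later" workflow.
# Node, constant, multiply and ToySession are hypothetical, NOT TensorFlow APIs.

class Node:
    """A symbolic operation: it records what to compute, not a value."""
    def __init__(self, fn, inputs=()):
        self.fn = fn          # function applied to the input values
        self.inputs = inputs  # upstream Node objects


def constant(value):
    return Node(lambda: value)


def multiply(a, b):
    return Node(lambda u, v: u * v, (a, b))


class ToySession:
    """Evaluates a node by recursively evaluating its inputs first."""
    def run(self, node):
        args = [self.run(n) for n in node.inputs]
        return node.fn(*args)


# Phase 1: construct the graph (nothing is computed yet)
x = constant(8)
y = constant(9)
z = multiply(x, y)

# Phase 2: create a session
sess = ToySession()

# Phase 3: run the session on the node whose value we want
out_z = sess.run(z)

# Phase 4: use the result
print('The multiplication of x and y: %d' % out_z)  # The multiplication of x and y: 72
```

Note that z is just a Node until sess.run evaluates it; this separation between describing and executing a computation is what the four phases formalize.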

These main phases define the programming model in TensorFlow. Consider the following example, in which we want to multiply two numbers:

import tensorflow as tf # Import TensorFlow

x = tf.constant(8) # X op

y = tf.constant(9) # Y op

z = tf.multiply(x, y) # New op Z

sess = tf.Session() # Create TensorFlow session

out_z = sess.run(z) # execute Z op

sess.close() # Close TensorFlow session

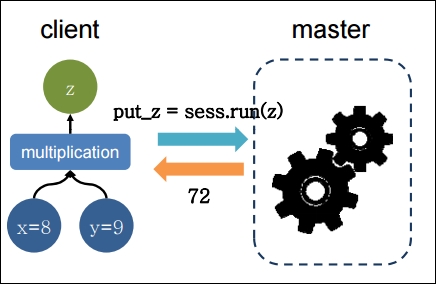

print('The multiplication of x and y: %d' % out_z)# print resultThe preceding code segment can be represented by the following figure:

Figure 5: A simple multiplication executed and returned on a client-master architecture

To make the preceding program more flexible, TensorFlow also lets you feed data into the graph at run time through placeholders (discussed later). The following code segment does the same thing, but the input values are supplied when the graph is executed instead of being hard-coded as constants:

import tensorflow as tf

# Create a session (it runs the default graph) and build the graph inside it
with tf.Session() as sess:
    x = tf.placeholder(tf.float32, name="x")
    y = tf.placeholder(tf.float32, name="y")
    z = tf.multiply(x, y)
    # Put the values 8, 9 on the placeholders x, y and execute the graph
    z_output = sess.run(z, feed_dict={x: 8, y: 9})
    print(z_output)

Of course, TensorFlow is not needed to multiply two numbers, and this is a lot of code for such a simple operation. The purpose of the example is to clarify how to structure code, from the simplest case (as here) to the most complex. Furthermore, the example contains some basic instructions that we will find in all the other examples in this book.

The import in the first line makes TensorFlow available under the alias tf, as stated earlier. Each TensorFlow operation is then referenced as tf followed by the name of the operation (for example, tf.multiply). In the next line, we construct the session object with the tf.Session() instruction:

with tf.Session() as sess:

A session encapsulates the environment in which the computation graph is executed; as we said earlier, the graph contains the calculations to be carried out. The following two lines define x and y as placeholders. A placeholder is an input to the graph whose value is supplied only when the graph is run, so here both x and y are inputs, and the output is the result of the operation defined on them:

x = tf.placeholder(tf.float32, name="x")
y = tf.placeholder(tf.float32, name="y")

The placeholder function, which defines such an input tensor (we will introduce the concept of a tensor soon), takes three arguments, only the first of which is required:

- Data type is the type of element in the tensor to be fed.

- Shape of the placeholder is the shape of the tensor to be fed (optional). If the shape is not specified, you can feed a tensor of any shape.

- Name is very useful for debugging and code analysis purposes, but it is optional.
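The shape rule in the second bullet can be sketched in plain Python. Here a declared shape of None means "any shape", and a None entry inside a shape means that dimension may have any size, which mirrors how partially known placeholder shapes such as [None, 2] behave. The helpers shape_of and shape_accepts are hypothetical and only model the check; TensorFlow performs its own validation when you feed a value.

```python
# Hypothetical helpers modeling placeholder shape compatibility (not TensorFlow APIs).

def shape_of(value):
    """Infer the shape of a (rectangular) nested list; scalars have shape ()."""
    shape = []
    while isinstance(value, list):
        shape.append(len(value))
        value = value[0] if value else None
    return tuple(shape)


def shape_accepts(declared, value):
    """Return True if `value` may be fed to a placeholder declared with `declared`.

    declared=None       -> shape unspecified, any value is accepted
    declared=[None, 2]  -> rank 2, first dimension of any size, second of size 2
    """
    if declared is None:
        return True
    actual = shape_of(value)
    if len(declared) != len(actual):
        return False  # the rank must match
    return all(d is None or d == a for d, a in zip(declared, actual))


print(shape_accepts(None, 8))                                    # True: no shape given
print(shape_accepts([1, 1], [[2.]]))                             # True: exact match
print(shape_accepts([None, 2], [[1., 2.], [3., 4.], [5., 6.]]))  # True: batch size free
print(shape_accepts([1, 1], [[2., 3.]]))                         # False: (1, 2) != (1, 1)
```

Leaving a dimension as None is the usual way to let the batch size vary between runs while still fixing the number of features.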

Note

For more on tensors, refer to https://www.tensorflow.org/api_docs/python/tf/Tensor.

With the two placeholders defined, we can now define the computational model that operates on them.

The following statement, inside the session block, adds an operation that computes the product of x and y to the graph and assigns the resulting tensor to z:

z = tf.multiply(x, y)

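The tensor z is only a symbolic handle to the result; no value has been computed yet. To obtain the value, we execute the graph through the sess.run statement. The feed_dict argument supplies values for the placeholders x and y: feeding a value patches a tensor into a graph node, temporarily replacing the output of that operation with the given value for the duration of this run: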

z_output = sess.run(z, feed_dict={x: 8, y: 9})

In the final instruction, we print the result:

print(z_output)

This prints the output, 72.0.
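The behavior of feed_dict described above, including the fact that a fed value temporarily replaces a node's own output, can be modeled with a small pure-Python evaluator. Node, placeholder, multiply, and run are hypothetical names for this sketch, not TensorFlow functions, and the error raised for an unfed placeholder is only loosely modeled on TensorFlow's behavior.

```python
# Toy evaluator modeling placeholders and feed_dict (hypothetical, NOT TensorFlow APIs).

class Node:
    """A graph node: either a placeholder (fn is None) or an operation."""
    def __init__(self, name, fn=None, inputs=()):
        self.name = name
        self.fn = fn
        self.inputs = inputs


def placeholder(name):
    return Node(name)  # no function: its value must be fed at run time


def multiply(a, b, name="mul"):
    return Node(name, lambda u, v: u * v, (a, b))


def run(node, feed_dict=None):
    """Evaluate `node`; feed_dict entries override node outputs for this call only."""
    feed_dict = feed_dict or {}
    if node in feed_dict:
        return feed_dict[node]  # the fed value replaces the node's output
    if node.fn is None:
        raise ValueError("You must feed a value for placeholder %r" % node.name)
    return node.fn(*(run(n, feed_dict) for n in node.inputs))


x = placeholder("x")
y = placeholder("y")
z = multiply(x, y, name="z")

print(run(z, feed_dict={x: 8.0, y: 9.0}))  # 72.0
print(run(z, feed_dict={x: 2.0, y: 4.0}))  # 8.0: same graph, new inputs
print(run(z, feed_dict={z: 100.0}))        # 100.0: feeding z itself bypasses the multiply
```

Because the graph is built once and only the feeds change, the same z can be evaluated for any number of input pairs.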

As described earlier, when eager execution is enabled, TensorFlow operations are executed immediately as they are called from Python, in an imperative style.

With eager execution enabled, TensorFlow functions execute operations immediately and return concrete values. This contrasts with graph mode, in which each call adds a node to a graph that is executed later in a tf.Session (https://www.tensorflow.org/versions/master/api_docs/python/tf/Session) and returns only a symbolic reference to that node.
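The contrast between the two modes can be illustrated with a pure-Python sketch: the eager version computes and returns a value immediately, while the deferred version returns a handle that records the computation and produces a value only when evaluated, much as a graph node does until a session runs it. The names eager_multiply, Deferred, and deferred_multiply are hypothetical and are not part of TensorFlow.

```python
# Eager vs deferred evaluation, modeled in plain Python (hypothetical names).

log = []  # records when a multiplication actually happens


def eager_multiply(a, b):
    log.append("eager multiply")
    return a * b  # a concrete value, available immediately


class Deferred:
    """A symbolic handle: holds the recipe for a value, not the value itself."""
    def __init__(self, thunk):
        self._thunk = thunk

    def evaluate(self):
        return self._thunk()


def deferred_multiply(a, b):
    def compute():
        log.append("deferred multiply")
        return a * b
    return Deferred(compute)  # nothing is computed yet


value = eager_multiply(8, 9)      # runs now
handle = deferred_multiply(8, 9)  # only records the operation
print(value, log)                 # 72 ['eager multiply']
print(handle.evaluate(), log)     # 72 ['eager multiply', 'deferred multiply']
```

The log shows that the deferred multiplication runs only when evaluate() is called, which is the role sess.run plays in graph mode.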

TensorFlow exposes eager execution through tf.enable_eager_execution, which is available under the following aliases:

- tf.contrib.eager.enable_eager_execution

- tf.enable_eager_execution

The tf.enable_eager_execution has the following signature:

tf.enable_eager_execution(
    config=None,
    device_policy=None
)

In the above signature, config is an optional tf.ConfigProto used to configure the environment in which operations are executed. device_policy is also optional; it controls how operations that require inputs on a specific device (for example, GPU 0) handle inputs that are on a different device (for example, GPU 1 or the CPU).

Calling this function enables eager execution for the lifetime of your program. To see the difference, first consider the following code, which performs a simple matrix multiplication in graph mode:

import tensorflow as tf

x = tf.placeholder(tf.float32, shape=[1, 1])  # a placeholder for variable x
y = tf.placeholder(tf.float32, shape=[1, 1])  # a placeholder for variable y
m = tf.matmul(x, y)

with tf.Session() as sess:
    print(sess.run(m, feed_dict={x: [[2.]], y: [[4.]]}))

The following is the output of the preceding code:

>>> [[8.]]

With eager execution enabled, however, the code becomes much simpler:

import tensorflow as tf

# Eager execution (from TF v1.7 onwards)
tf.enable_eager_execution()

x = [[2.]]
y = [[4.]]
m = tf.matmul(x, y)
print(m)

The following is the output of the preceding code:

>>> tf.Tensor([[8.]], shape=(1, 1), dtype=float32)

What happens when the preceding code block is executed? Once eager execution is enabled, operations are executed as they are called, and Tensor objects hold concrete values, which can be accessed as numpy.ndarray objects through the numpy() method (for example, m.numpy()).
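Both the graph-mode and the eager versions compute the same 1-by-1 matrix product. As a plain-Python cross-check of the [[8.]] result (a sketch of the arithmetic only, not of how tf.matmul is implemented), a naive matrix multiplication gives:

```python
# Naive matrix multiplication on nested lists, to check the arithmetic by hand.

def matmul(a, b):
    """Matrix product of nested lists: (n x k) times (k x m) gives (n x m)."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions must match")
    cols = len(b[0])
    return [[sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
            for row in a]


print(matmul([[2.]], [[4.]]))                      # [[8.0]]
print(matmul([[1., 2.], [3., 4.]], [[5.], [6.]]))  # [[17.0], [39.0]]
```

For 1-by-1 matrices the product reduces to a single multiplication, 2 * 4 = 8, which is why the result has shape (1, 1).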

Note that eager execution cannot be enabled after TensorFlow APIs have been used to create or execute graphs. It is typically recommended to invoke this function at program startup and not in a library. Although this sounds fascinating, we will not use this feature in the upcoming chapters, since it is a new feature and not yet well explored.
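The startup restriction can be pictured as a one-way switch in the runtime. The following pure-Python sketch models it with a hypothetical ToyRuntime class (not a TensorFlow API, and its error message is invented): once any graph has been built, trying to switch to eager mode raises an error, so the switch has to be flipped first, at program startup.

```python
# Hypothetical model of an execution mode that can only be chosen at startup.

class ToyRuntime:
    def __init__(self):
        self.eager = False
        self.graph_used = False

    def build_graph_op(self):
        if not self.eager:
            self.graph_used = True  # graph mode has now been used

    def enable_eager_execution(self):
        if self.graph_used:
            raise ValueError("eager execution must be enabled at program startup")
        self.eager = True


ok = ToyRuntime()
ok.enable_eager_execution()  # called first: allowed
print(ok.eager)              # True

late = ToyRuntime()
late.build_graph_op()        # graph APIs used first
try:
    late.enable_eager_execution()
except ValueError as err:
    print("error:", err)     # error: eager execution must be enabled at program startup
```

This is also why the call belongs in the program's entry point rather than in a library, which cannot know whether graphs have already been built.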