Chapter 8

Communications and Operations Security

Chapter Objectives

After reading this chapter and completing the exercises, you will be able to do the following:

Create useful and appropriate standard operating procedures.

Implement change control processes.

Understand the importance of patch management.

Protect information systems against malware.

Consider data backup and replication strategies.

Recognize the security requirements of email and email systems.

Appreciate the value of log data and analysis.

Evaluate service provider relationships.

Understand the importance of threat intelligence and information sharing.

Write policies and procedures to support operational and communications security.

Section 3.3 of the NIST Cybersecurity Framework, “Communicating Cybersecurity Requirements with Stakeholders,” provides guidance to organizations to learn how to communicate requirements among interdependent stakeholders responsible for the delivery of essential critical infrastructure services.

Section 12 of ISO 27002:2013, “Operations Security,” and Section 13 of ISO 27002:2013, “Communications Security,” focus on information technology (IT) and security functions, including standard operating procedures, change management, malware protection, data replication, secure messaging, and activity monitoring. These functions are primarily carried out by IT and information security data custodians, such as network administrators and security engineers. Many companies outsource some aspect of their operations. Section 15 of ISO 27002:2013, “Supplier Relationships,” focuses on service delivery and third-party security requirements.

The NICE Framework introduced in Chapter 6, “Human Resources Security,” is particularly appropriate for this domain. Data owners need to be educated on operational risk so they can make informed decisions. Data custodians should participate in training that focuses on operational security threats so that they understand the reason for implementing safeguards. Users should be surrounded by a security awareness program that fosters everyday best practices. Throughout the chapter, we cover policies, processes, and procedures recommended to create and maintain a secure operational environment.

FYI: NIST Cybersecurity Framework and ISO/IEC 27002:2013

As mentioned earlier in this chapter, Section 3.3 of the NIST Cybersecurity Framework, “Communicating Cybersecurity Requirements with Stakeholders,” provides guidance on communicating requirements among interdependent stakeholders. For example, an organization could use a Target Profile to express cybersecurity risk management requirements to an external service provider. That provider could be a cloud provider, such as Amazon Web Services (AWS), Google Cloud, or Microsoft Azure, or a cloud service, such as Box or Dropbox.

In addition, the NIST Framework suggests that an organization may express its cybersecurity state through a Current Profile to report results or to compare with acquisition requirements. Also, a critical infrastructure owner or operator may use a Target Profile to convey required Categories and Subcategories.

A critical infrastructure sector may establish a Target Profile that can be used among its constituents as an initial baseline Profile to build their tailored Target Profiles.

Section 12 of ISO 27002:2013, “Operations Security,” focuses on data center operations, integrity of operations, vulnerability management, protection against data loss, and evidence-based logging. Section 13 of ISO 27002:2013, “Communications Security,” focuses on protection of information in transit. Section 15 of ISO 27002:2013, “Supplier Relationships,” focuses on service delivery and third-party security requirements.

Additional NIST guidance is provided in the following documents:

“NIST Cybersecurity Framework” (covered in detail in Chapter 16)

SP 800-14: “Generally Accepted Principles and Practices for Securing Information Technology Systems”

SP 800-53: “Recommended Security Controls for Federal Information Systems and Organizations”

SP 800-100: “Information Security Handbook: A Guide for Managers”

SP 800-40: “Creating a Patch and Vulnerability Management Program”

SP 800-83: “Guide to Malware Incident Prevention and Handling for Desktops and Laptops”

SP 800-45: “Guidelines on Electronic Mail Security”

SP 800-92: “Guide to Computer Security Log Management”

SP 800-42: “Guideline on Network Security Testing”

Standard Operating Procedures

Standard operating procedures (SOPs) are detailed explanations of how to perform a task. The objective of an SOP is to provide standardized direction, improve communication, reduce training time, and improve work consistency. An alternate name for SOPs is standard operating protocols. An effective SOP communicates who will perform the task, what materials are necessary, where the task will take place, when the task will be performed, and how the person will execute the task.

Why Document SOPs?

The very process of creating SOPs requires us to evaluate what is being done, why it is being done that way, and perhaps how we could do it better. SOPs should be written by individuals knowledgeable about the activity and the organization’s internal structure. Once written, the details in an SOP standardize the target process and provide sufficient information that someone with limited experience or knowledge of the procedure, but with a basic understanding, can successfully perform the procedure unsupervised. Well-written SOPs reduce organizational dependence on individual and institutional knowledge.

It is not uncommon for an employee to become so important that losing that individual would be a huge blow to the company. Imagine that this person is the only one performing a critical task; no one has been cross-trained, and no documentation exists as to how the employee performs this task. The employee suddenly becoming unavailable could seriously injure the organization. Having proper documentation of operating procedures is not a luxury: It is a business requirement.

SOPs should be authorized and protected accordingly, as illustrated in Figure 8-1 and described in the following sections.

FIGURE 8-1 Authorizing and Protecting SOPs

Authorizing SOP Documentation

After a procedure has been documented, it should be reviewed, verified, and authorized before being published. The reviewer analyzes the document for clarity and readability. The verifier tests the procedure to make sure it is correct and not missing any steps. The process owner is responsible for authorization, publication, and distribution. Post-publication changes to the procedures must be authorized by the process owner.

Protecting SOP Documentation

Access and version controls should be put in place to protect the integrity of the document from both unintentional error and malicious insiders. Imagine a case where a disgruntled employee gets hold of a business-critical procedure document and changes key information. If the tampering is not discovered, it could lead to a disastrous situation for the company. The same holds true for revisions. If multiple revisions of the same procedure exist, there is a good chance someone is going to be using the wrong version.
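One way to make tampering detectable is to record a cryptographic digest of each SOP at the moment the process owner authorizes it, and to compare the published file against that digest later. The following is a minimal sketch under stated assumptions, not a complete document control system: the function names are hypothetical, and it assumes the approved digest is stored somewhere the document's editors cannot change.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def is_unmodified(path: Path, approved_digest: str) -> bool:
    """True if the file still matches the digest recorded at authorization time.

    Assumption: approved_digest was captured when the process owner
    authorized this version and is stored with restricted access.
    """
    return sha256_of(path) == approved_digest
```

A digest check of this kind detects changes but does not prevent them, so it complements access and version controls rather than replacing them.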

Developing SOPs

SOPs should be understandable to everyone who uses them. SOPs should be written in a concise, step-by-step, plain language format. If not well written, SOPs are of limited value. It is best to use short, direct sentences so that the reader can quickly understand and memorize the steps in the procedure. Information should be conveyed clearly and explicitly to remove any doubt as to what is required. The steps must be in logical order. Any exceptions must be noted and explained. Warnings must stand out.

The four common SOP formats are Simple Step, Hierarchical, Flowchart, and Graphic. As shown in Table 8-1, two factors determine what type of SOP to use: how many decisions the user will need to make and how many steps are in the procedure. Routine procedures that are short and require few decisions can be written using the simple step format. Long procedures consisting of more than 10 steps, with few decisions, should be written in a hierarchical format or in a graphic format. Procedures that require many decisions should be written in the form of a flowchart. It is important to choose the correct format. The best-written SOPs will fail if they cannot be followed.

| Many Decisions? | More Than Ten Steps? | Recommended SOP Format |
|---|---|---|
| No | No | Simple Step |
| No | Yes | Hierarchical or Graphic |
| Yes | No | Flowchart |
| Yes | Yes | Flowchart |
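The selection rule in Table 8-1 can be expressed as a small function. This is an illustrative sketch only; the function name and parameters are hypothetical, and deciding what counts as "many decisions" is left to the author of the procedure.

```python
def recommend_sop_format(many_decisions: bool, step_count: int) -> str:
    """Map the two factors from Table 8-1 to a recommended SOP format."""
    if many_decisions:
        # Decision-heavy procedures use a flowchart regardless of length.
        return "Flowchart"
    if step_count > 10:
        return "Hierarchical or Graphic"
    return "Simple Step"
```

For example, a 14-step procedure with few decisions maps to "Hierarchical or Graphic", while any procedure with many decisions maps to "Flowchart".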

As illustrated in Table 8-2, the simple step format uses sequential steps. Generally, these rote procedures do not require any decision making and do not have any substeps. The simple step format should be limited to 10 steps.

| Procedure | Completed |
|---|---|
| Note: These procedures are to be completed by the night operator by 6:00 a.m., Monday–Friday. Please initial each completed step. | |

As illustrated in the New User Account Creation Procedure example, shown in Table 8-3, the hierarchical format is used for tasks that require more detail or exactness. The hierarchical format allows the use of easy-to-read steps for experienced users while including substeps that are more detailed as well. Experienced users may refer to the substeps only when they need to, whereas beginners will use the detailed substeps to help them learn the procedure.

| New User Account Creation Procedure | |
|---|---|
| Note: You must have the HR New User Authorization Form before starting this process. | |
| Procedures | Detail |
| Launch Active Directory Users and Computers (ADUC). | |
| Create a new user. | |
| Enter the required user information. | |
| Create an Exchange mailbox. | |
| Verify account information. | |
| Complete demographic profile. | |
| Add users to groups. | |
| Set remote control permissions. | |
| Advise HR regarding account creation. | |

Pictures truly are worth a thousand words. The graphic format, shown in Figure 8-2, can use photographs, icons, illustrations, or screenshots to illustrate the procedure. This format is often used for configuration tasks, especially if various literacy levels or language barriers are involved.

FIGURE 8-2 Example of the Graphic Format

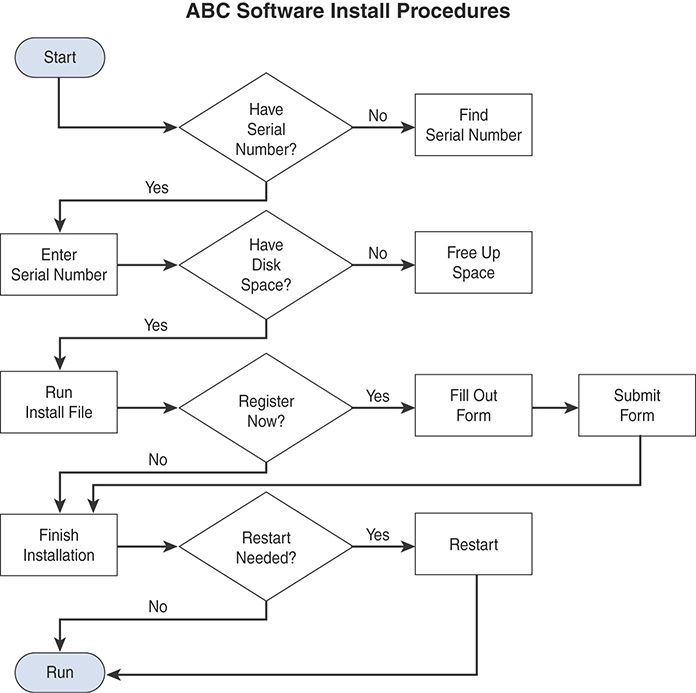

A flowchart, shown in Figure 8-3, is a diagrammatic representation of steps in a decision-making process. A flowchart provides an easy-to-follow mechanism for walking a worker through a series of logical decisions and the steps that should be taken as a result. When developing flowcharts, you should use the generally accepted flowchart symbols. ISO 5807:1985 defines symbols to be used in flowcharts and gives guidance for their use.

FIGURE 8-3 Flowchart Format

FYI: A Recommended Writing Resource

Several resources on writing procedures, although not specific to cybersecurity, are a useful starting point. One example is North Carolina State University’s Produce Safety SOP template at https://ncfreshproducesafety.ces.ncsu.edu/wp-content/uploads/2014/03/how-to-write-an-SOP.pdf.

Another example is the Cornell University “Developing Effective Standard Operating Procedures” by David Grusenmeyer.

In Practice

Standard Operating Procedures Documentation Policy

Synopsis: Standard operating procedures (SOPs) are required to ensure the consistent and secure operation of information systems.

Policy Statement:

SOPs for all critical information processing activities will be documented.

Information system custodians are responsible for developing and testing the procedures.

Information system owners are responsible for authorization and ongoing review.

The Office of Information Technology is responsible for the publication and distribution of information systems-related SOPs.

SOPs for all critical information security activities will be documented, tested, and maintained.

Information security custodians are responsible for developing and testing the procedures.

The Office of Information Security is responsible for authorization, publication, distribution, and review of information security–related SOPs.

Internal auditors will inspect actual practice against the requirements of the SOPs. Each auditor or an audit team creates audit checklists of the items to be covered. Corrective actions and suggestions for remediation may be raised following an internal or regulatory audit where discrepancies have been observed.

Operational Change Control

Operational change is inevitable. Change control is an internal procedure by which authorized changes are made to software, hardware, network access privileges, or business processes. The information security objective of change control is to ensure the stability of the network while maintaining the required levels of confidentiality, integrity, and availability (CIA). A change management process establishes an orderly and effective mechanism for submission, evaluation, approval, prioritization, scheduling, communication, implementation, monitoring, and organizational acceptance of change.

Why Manage Change?

The process of making changes to systems in production environments presents risks to ongoing operations and data that are effectively mitigated by consistent and careful management. Consider this scenario: Windows 8 is installed on a mission-critical workstation. The system administrator installs a service pack. A service pack often will make changes to system files. Now imagine that for a reason beyond the installer’s control, the process fails halfway through. What is the result? An operating system that is neither the original version, nor the updated version. In other words, there could be a mix of new and old system files, which would result in an unstable platform. The negative impact on the process that depends on the workstation would be significant. Take this example to the next level and imagine the impact if this machine were a network server used by all employees all day long. Consider the impact on the productivity of the entire company if this machine were to become unstable because of a failed update. What if the failed change impacted a customer-facing device? The entire business could come grinding to a halt. What if the failed change also introduced a new vulnerability? The result could be loss of confidentiality, integrity, and/or availability (CIA).

Change needs to be controlled. Organizations that take the time to assess and plan for change spend considerably less time in crisis mode. Typical change requests are a result of software or hardware defects or bugs that must be fixed, system enhancement requests, and changes in the underlying architecture such as a new operating system, virtualization hypervisor, or cloud provider.

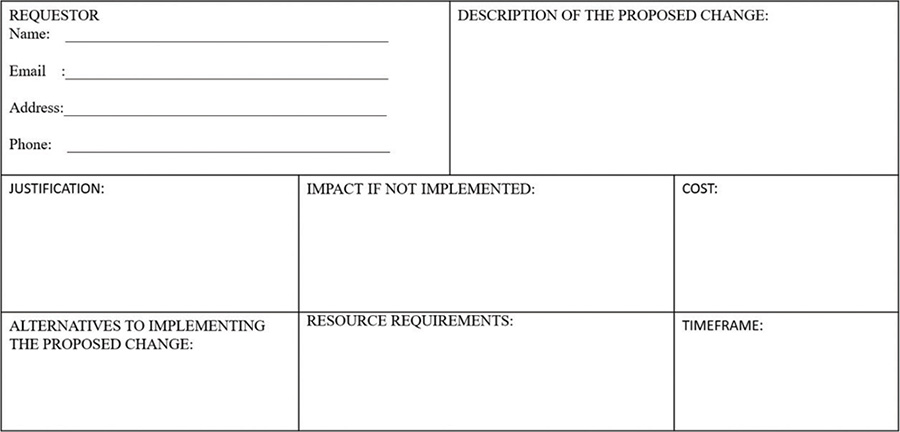

The change control process starts with an RFC (Request for Change). The RFC is submitted to a decision-making body (generally senior management). The change is then evaluated and, if approved, implemented. Each step must be documented. Not all changes should be subject to this process. In fact, doing so would negate the desired effect and in the end significantly impact operations. There should be an organization policy that clearly delineates the type(s) of change that the change control process applies to. Additionally, there needs to be a mechanism to implement “emergency” changes. Figure 8-4 illustrates the RFC process and divides it into three primary milestones or phases: evaluate, approve, and verify.

FIGURE 8-4 The RFC Process

Submitting an RFC

The first phase of the change control process is an RFC submission. The request should include the following items:

Requestor name and contact information

Description of the proposed change

Justification of why the proposed changes should be implemented

Impact of not implementing the proposed change

Alternatives to implementing the proposed change

Cost

Resource requirements

Time frame

Figure 8-5 shows a template of the aforementioned RFC.

FIGURE 8-5 RFC Template

Taking into consideration the preceding information as well as organizational resources, budget, and priorities, the decision makers can choose to continue to evaluate, approve, reject, or defer until a later date.
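The RFC items and the possible decisions listed above can be captured in a simple record so that incomplete requests are caught before they reach the decision makers. The sketch below is one possible representation; the class and field names are hypothetical and are not taken from the RFC template in Figure 8-5.

```python
from dataclasses import dataclass, fields
from enum import Enum


class Decision(Enum):
    """Outcomes available to the decision makers."""
    CONTINUE_EVALUATION = "continue to evaluate"
    APPROVE = "approve"
    REJECT = "reject"
    DEFER = "defer"


@dataclass
class ChangeRequest:
    """One RFC submission; every field is free text in this sketch."""
    requestor: str                   # name and contact information
    description: str
    justification: str
    impact_if_not_implemented: str
    alternatives: str
    cost: str
    resource_requirements: str
    time_frame: str

    def missing_fields(self) -> list[str]:
        """Return the names of fields that were left blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]
```

A submission tool could refuse to forward a request until `missing_fields()` returns an empty list, which keeps the evaluation phase from stalling on missing information.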

Developing a Change Control Plan

After a change is approved, the next step is for the requestor to develop a change control plan. The complexity of the change as well as the risk to the organization will influence the level of detail required. Standard components of a change control plan include a security review to ensure that new vulnerabilities are not being introduced.

Communicating Change

The need to communicate to all relevant parties that a change will be taking place cannot be overemphasized. Research studies have found that the reason for the change is the most important message to share with employees and the second most important message for managers and executives (for whom the most important message concerns their role and expectations). The messages to communicate to impacted employees fall into two categories: messages about the change and messages about how the change impacts them.

Messages about the change include the following:

The current situation and the rationale for the change

A vision of the organization after the change takes place

The basics of what is changing, how it will change, and when it will change

The expectation that change will happen and is not a choice

Status updates on the implementation of the change, including success stories

Messages about how the change will affect the employee include the following:

The impact of the change on the day-to-day activities of the employee

Implications of the change on job security

Specific behaviors and activities expected from the employee, including support of the change

Procedures for getting help and assistance during the change

Projects that fail to communicate are doomed to fail.

Implementing and Monitoring Change

After the change is approved, planned, and communicated, it is time to implement. Change can be unpredictable. If possible, the change should first be applied to a test environment and monitored for impact. Even minor changes can cause havoc. For example, a simple change in a shared database’s filename could cause all applications that use it to fail. For most environments, the primary implementation objective is to minimize stakeholder impact. This includes having a plan to roll back or recover from a failed implementation.

Throughout the implementation process, all actions should be documented. This includes actions taken before, during, and after the changes have been applied. Changes should not be “set and forget.” Even a change that appears to have been flawlessly implemented should be monitored for unexpected impact.
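The pattern described here (apply, verify, roll back on failure, and document every action) can be sketched as a small wrapper. This is an illustration under stated assumptions rather than a change management tool: `implement_change` and its callback parameters are hypothetical names, and a real implementation would write the log to durable storage.

```python
from datetime import datetime, timezone
from typing import Callable


def implement_change(
    name: str,
    apply: Callable[[], None],
    verify: Callable[[], bool],
    rollback: Callable[[], None],
) -> list[str]:
    """Apply a change, verify it, and roll back on failure.

    Returns a timestamped log of every action taken.
    """
    log: list[str] = []

    def record(action: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
        log.append(f"{stamp} {name}: {action}")

    record("starting")
    try:
        apply()
        record("applied")
        if verify():
            record("verified")
            return log
        record("verification failed")
    except Exception as exc:
        record(f"apply raised {exc!r}")
    # Reached only when apply failed or verification did not pass.
    rollback()
    record("rolled back")
    return log
```

The returned log covers actions before, during, and after the change, and the rollback callback forces the implementer to decide in advance how a failed change will be undone.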

Some emergency situations require organizations to bypass certain change controls to recover from an outage, incident, or unplanned event. Especially in these cases, it is important to document the change thoroughly, communicate the change as soon as possible, and have it approved post implementation.

In Practice

Operational Change Control Policy

Synopsis: To minimize harm and maximize success associated with making changes to information systems or processes.

Policy Statement:

The Office of Information Technology is responsible for maintaining a documented change control process that provides an orderly method in which changes to the information systems and processes are requested and approved prior to their installation and/or implementation. Changes to information systems include but are not limited to:

Vendor-released operating system, software application, and firmware patches, updates, and upgrades

Updates and changes to internally developed software applications

Hardware component repair/replacement

Implementations of security patches are exempt from this process as long as they follow the approved patch management process.

The change control process must take into consideration the criticality of the system and the risk associated with the change.

Changes to information systems and processes considered critical to the operation of the company must be subject to preproduction testing.

Changes to information systems and processes considered critical to the operation of the company must have an approved rollback and/or recovery plan.

Changes to information systems and processes considered critical to the operation of the company must be approved by the Change Management Committee. Other changes may be approved by the Director of Information Systems, Chief Technology Officer (CTO), or Chief Information Officer (CIO).

Changes must be communicated to all impacted stakeholders.

In an emergency scenario, changes may be made immediately (business system interruption, failed server, and so on) to the production environment. These changes will be verbally approved by a manager supervising the affected area at the time of change. After the changes are implemented, the change must be documented in writing and submitted to the CTO.
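The routing rules in this sample policy can be sketched as a function that returns the requirements applying to a given change. The names below are hypothetical, and the order in which the checks are made (security patch first, then emergency) is an assumption; the policy itself does not state a precedence.

```python
from dataclasses import dataclass


@dataclass
class Change:
    critical: bool = False        # critical to the operation of the company
    emergency: bool = False       # for example, a failed server
    security_patch: bool = False  # follows the approved patch management process


def change_requirements(change: Change) -> list[str]:
    """List the policy requirements that apply to a change."""
    if change.security_patch:
        # Exempt from change control per the policy statement.
        return ["follow the approved patch management process"]
    if change.emergency:
        return [
            "verbal approval from the manager supervising the affected area",
            "document in writing after implementation and submit to the CTO",
        ]
    steps = ["communicate to all impacted stakeholders"]
    if change.critical:
        steps += [
            "preproduction testing",
            "approved rollback and/or recovery plan",
            "approval by the Change Management Committee",
        ]
    else:
        steps.append("approval by the Director of Information Systems, CTO, or CIO")
    return steps
```

Encoding the policy this way makes gaps visible. For instance, the sketch has to choose what happens when a change is both an emergency and critical, a case an organization would want its written policy to address explicitly.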

Why Is Patching Handled Differently?

A patch is software or code designed to fix a problem. Applying security patches is the primary method of fixing security vulnerabilities in software. The vulnerabilities are often identified by researchers or ethical hackers who then notify the software company so that they can develop and distribute a patch. A function of change management, patching is distinct in how often and how quickly patches need to be applied. The moment a patch is released, attackers make a concerted effort to reverse engineer the patch swiftly (measured in days or even hours), identify the vulnerability, and develop and release exploit code. The time immediately after the release of a patch is ironically a particularly vulnerable moment for most organizations because of the time lag in obtaining, testing, and deploying a patch.

FYI: Patch Tuesday and Exploit Wednesday

Microsoft releases new security updates and their accompanying bulletins on the second Tuesday of every month at approximately 10 a.m. Pacific Time, hence the name Patch Tuesday. The following day is referred to as Exploit Wednesday, signifying the start of exploits appearing in the wild. Many security researchers and threat actors reverse engineer the fixes (patches) to create exploits, in some cases within hours after disclosure.

Cisco also releases bundles of Cisco IOS and IOS XE Software Security Advisories at 1600 GMT on the fourth Wednesday in March and September each year. Additional information can be found on Cisco’s Security Vulnerability Policy at: https://www.cisco.com/c/en/us/about/security-center/security-vulnerability-policy.html.
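Because these release dates follow a fixed rule, a patch team can compute them in advance for scheduling test windows. The following sketch uses only the Python standard library; the helper names are hypothetical, and it computes calendar dates only, not release times or time zones.

```python
import calendar
from datetime import date


def nth_weekday(year: int, month: int, weekday: int, n: int) -> date:
    """Return the n-th occurrence (1-based) of a weekday in a month."""
    # monthrange returns the weekday of the first day (Monday is 0).
    first_weekday, _ = calendar.monthrange(year, month)
    offset = (weekday - first_weekday) % 7
    return date(year, month, 1 + offset + 7 * (n - 1))


def patch_tuesday(year: int, month: int) -> date:
    """Second Tuesday of the month."""
    return nth_weekday(year, month, calendar.TUESDAY, 2)


def cisco_bundle_dates(year: int) -> tuple[date, date]:
    """Fourth Wednesday of March and of September."""
    return (
        nth_weekday(year, 3, calendar.WEDNESDAY, 4),
        nth_weekday(year, 9, calendar.WEDNESDAY, 4),
    )
```

For example, `patch_tuesday(2024, 1)` returns January 9, 2024, and `cisco_bundle_dates(2024)` returns March 27 and September 25, 2024.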

Understanding Patch Management

Timely patching of security issues is generally recognized as critical to maintaining the operational CIA of information systems. Patch management is the process of scheduling, testing, approving, and applying security patches. Vendors who maintain information systems within a company network should be required to adhere to the organizational patch management process.

The patching process can be unpredictable and disruptive. Users should be notified of potential downtime due to patch installation. Whenever possible, patches should be tested prior to enterprise deployment. However, there may be situations where it is prudent to waive testing based on the severity and applicability of the identified vulnerability. If a critical patch cannot be applied in a timely manner, senior management should be notified of the risk to the organization.

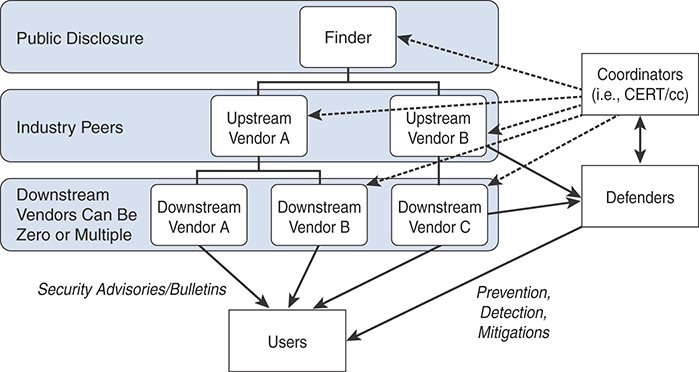

Today’s cybersecurity environment and patching dependencies call for substantial improvements in the area of vulnerability coordination. Open source software vulnerabilities like Heartbleed, protocol vulnerabilities like the WPA KRACK attacks, and others highlight coordination challenges among software and hardware providers.

The Industry Consortium for Advancement of Security on the Internet (ICASI) proposed to the FIRST Board of Directors that a Special Interest Group (SIG) be considered on vulnerability disclosure to review and update vulnerability coordination guidelines. Later, the National Telecommunications and Information Administration (NTIA) convened a multistakeholder process to investigate cybersecurity vulnerabilities. The NTIA multiparty effort joined the similar effort underway within the FIRST Vulnerability Coordination SIG. Stakeholders created a document that derives multiparty disclosure guidelines and practices from common coordination scenarios and variations. This document can be found at https://first.org/global/sigs/vulnerability-coordination/multiparty/guidelines-v1.0.

Figure 8-6 shows the FIRST Vulnerability Coordination stakeholder roles and communication paths.

FIGURE 8-6 FIRST Vulnerability Coordination Stakeholder Roles and Communication Paths

The definitions of the different stakeholders used in the FIRST “Guidelines and Practices for Multi-Party Vulnerability Coordination and Disclosure” document are based on the definitions available in ISO/IEC 29147:2014 and used with minimal modification.

NIST Special Publication 800-40 Revision 3, Guide to Enterprise Patch Management Technologies, published July 2013, is designed to assist organizations in understanding the basics of enterprise patch management technologies. It explains the importance of patch management and examines the challenges inherent in performing patch management. The publication also provides an overview of enterprise patch management technologies and discusses metrics for measuring the technologies’ effectiveness and for comparing the relative importance of patches.

In Practice

Security Patch Management Policy

Synopsis: The timely deployment of security patches will reduce or eliminate the potential for exploitation.

Policy Statement:

Implementations of security patches are exempt from the organizational change management process as long as they follow the approved patch management process.

The Office of Information Security is responsible for maintaining a documented patch management process.

The Office of Information Technology is responsible for the deployment of all operating system, application, and device security patches.

Security patches will be reviewed and deployed according to applicability of the security vulnerability and/or identified risk associated with the patch or hotfix.

Security patches will be tested prior to deployment in the production environment. The CIO and the CTO have authority to waive testing based on the severity and applicability of the identified vulnerability.

Vendors who maintain company systems are required to adhere to the company patch management process.

If a security patch cannot be successfully applied, the COO must be notified. Notification must detail the risk to the organization.

Malware Protection

Malware, short for “malicious software,” is software (or script or code) designed to disrupt computer operation, gather sensitive information, or gain unauthorized access to computer systems and mobile devices. Malware is operating-system agnostic. Malware can infect systems by being bundled with other programs or self-replicating; however, the vast majority of malware requires user interaction, such as clicking an email attachment or downloading a file from the Internet. It is critical that security awareness programs articulate individual responsibility in fighting malware.

Malware has become the tool of choice for cybercriminals, hackers, and hacktivists. It has become easy for attackers to create their own malware by acquiring malware toolkits, such as Zeus, Shadow Brokers leaked exploits, and many more, and then customizing the malware produced by those toolkits to meet their individual needs. Examples are ransomware such as WannaCry, Nyetya, Bad Rabbit, and many others. Many of these toolkits are available for purchase, whereas others are open source, and most have user-friendly interfaces that make it simple for unskilled attackers to create customized, high-capability malware. Unlike most malware several years ago, which tended to be easy to notice, much of today’s malware is specifically designed to quietly and slowly spread to other hosts, gathering information over extended periods of time and eventually leading to exfiltration of sensitive data and other negative impacts. The term advanced persistent threats (APTs) is generally used to refer to this approach.

NIST Special Publication 800-83, Revision 1, Guide to Malware Incident Prevention and Handling for Desktops and Laptops, published in July 2013, provides recommendations for improving an organization’s malware incident prevention measures. It also gives extensive recommendations for enhancing an organization’s existing incident response capability so that it is better prepared to handle malware incidents, particularly widespread ones.

Are There Different Types of Malware?

Malware categorization is based on infection and propagation characteristics. The categories of malware include viruses, worms, Trojans, bots, ransomware, rootkits, and spyware/adware. Hybrid malware is code that combines characteristics of multiple categories—for example, combining a virus’s ability to alter program code with a worm’s ability to reside in live memory and to propagate without any action on the part of the user.

A virus is malicious code that attaches to and becomes part of another program. Generally, viruses are destructive. Almost all viruses attach themselves to executable files. They then execute in tandem with the host file. Viruses spread when the software or document they are attached to is transferred from one computer to another using the network, a disk, file sharing, or infected email attachments.

A worm is a piece of malicious code that can spread from one computer to another without requiring a host file to infect. Worms are specifically designed to exploit known vulnerabilities, and they spread by taking advantage of network and Internet connections. An early example of a worm was W32/SQL Slammer (aka Slammer and Sapphire), which was one of the fastest spreading worms in history. It infected the process space of Microsoft SQL Server 2000 and Microsoft SQL Desktop Engine (MSDE) by exploiting an unpatched buffer overflow. Once running, the worm tried to send itself to as many other Internet-accessible SQL hosts as possible. Microsoft had released a patch six months prior to the Slammer outbreak. Another example of a “wormable” malware was the WannaCry ransomware, which is discussed later in this chapter.

A Trojan is malicious code that masquerades as a legitimate benign application. For example, when a user downloads a game, he may get more than he expected. The game may serve as a conduit for a malicious utility such as a keylogger or screen scraper. A keylogger is designed to capture and log keystrokes, mouse movements, Internet activity, and processes in memory such as print jobs. A screen scraper makes copies of what you see on your screen. A typical activity attributed to Trojans is to open connections to a command and control server (known as a C&C). Once the connection is made, the machine is said to be “owned.” The attacker takes control of the infected machine. In fact, cybercriminals will tell you that after they have successfully installed a Trojan on a target machine, they actually have more control over that machine than the very person seated in front of and interacting with it. Once “owned,” access to the infected device may be sold to other criminals. Trojans do not reproduce by infecting other files, nor do they self-replicate. Trojans must spread through user interaction, such as opening an email attachment or downloading and running a file from the Internet. Examples of Trojans include Zeus and SpyEye. Both Trojans are designed to capture financial website login credentials and other personal information.

Bots (also known as robots) are snippets of code designed to automate tasks and respond to instructions. Bots can self-replicate (like worms) or replicate via user action (like Trojans). A malicious bot is installed in a system without the user’s permission or knowledge. The bot connects back to a central server or command center. An entire network of compromised devices is known as a botnet. One of the most common uses of a botnet is to launch distributed denial of service (DDoS) attacks. An example of a botnet that caused major outages in the past is the Mirai botnet, which is often referred to as the IoT Botnet. Threat actors were able to successfully compromise IoT devices, including security cameras and consumer routing devices, to create one of the most devastating botnets in history, launching numerous DDoS attacks against very high-profile targets.

Ransomware is a type of malware that takes a computer or its data hostage in an effort to extort money from victims. There are two types of ransomware: Lockscreen ransomware displays a full-screen image or web page that prevents you from accessing anything in your computer. Encryption ransomware encrypts your files with a password, preventing you from opening them. The most common ransomware scheme is a notification that authorities have detected illegal activity on your computer and you must pay a “fine” to avoid prosecution and regain access to your system. Well-known examples of ransomware include WannaCry, Nyetya, and Bad Rabbit. Ransomware is typically delivered by malicious emails, malvertising (malicious advertisements), and other drive-by downloads. WannaCry, however, was the first ransomware to spread the way worms do (as defined earlier in this chapter). Specifically, it used the EternalBlue exploit.

EternalBlue is an SMB exploit affecting various Windows operating systems from XP to Windows 7 and various flavors of Windows Server 2003 and 2008. The exploit technique is known as heap spraying and is used to inject shellcode into vulnerable systems, allowing for the exploitation of the system. The code is capable of targeting vulnerable machines by IP address and attempting exploitation via SMB port 445. The EternalBlue code is closely tied with the DoublePulsar backdoor and even checks for the existence of the malware during the installation routine.

Cisco Talos has created numerous articles covering in-depth technical details of numerous types of ransomware at http://blog.talosintelligence.com/search/label/ransomware.

A rootkit is a set of software tools that hides its presence in the lower layers of the operating system’s application layer, the operating system kernel, or in the device basic input/output system (BIOS) with privileged access permissions. Root is a UNIX/Linux term that denotes administrator-level or privileged access permissions. The word “kit” denotes a program that allows someone to obtain root/admin-level access to the computer by executing the programs in the kit—all of which is done without end-user consent or knowledge. The intent is generally remote C&C. Rootkits cannot self-propagate or replicate; they must be installed on a device. Because of where they operate, they are very difficult to detect and even more difficult to remove.

Spyware is a general term used to describe software that without a user’s consent and/or knowledge tracks Internet activity, such as searches and web surfing, collects data on personal habits, and displays advertisements. Spyware sometimes affects the device configuration by changing the default browser, changing the browser home page, or installing “add-on” components. It is not unusual for an application or online service license agreement to contain a clause that allows for the installation of spyware.

A logic bomb is a type of malicious code that is injected into a legitimate application. An attacker can program a logic bomb to delete itself from the disk after it performs the malicious tasks on the system. Examples of these malicious tasks include deleting or corrupting files or databases and executing a specific instruction after certain system conditions are met.

A downloader is a piece of malware that downloads and installs other malicious content from the Internet to perform additional exploitation on an affected system.

A spammer is a piece of malware that sends spam, or unsolicited messages sent via email, instant messaging, newsgroups, or any other kind of computer or mobile device communications. Spammers send these unsolicited messages with the primary goal of fooling users into clicking malicious links, replying to emails or other messages with sensitive information, or performing different types of scams. The attacker’s main objective is to make money.

How Is Malware Controlled?

The IT department is generally tasked with the responsibility of employing a strong antimalware defense-in-depth strategy. In this case, defense-in-depth means implementing prevention, detection, and response controls, coupled with a security awareness campaign.

Using Prevention Controls

The goal of prevention control is to stop an attack before it even has a chance to start. This can be done in a number of ways:

Impact the distribution channel by training users not to click links embedded in email, open unexpected email attachments, irresponsibly surf the Web, download games or music, participate in peer-to-peer (P2P) networks, or allow remote access to their desktop.

Configure the firewall to restrict access.

Do not allow users to install software on company-provided devices.

Do not allow users to make changes to configuration settings.

Do not allow users to have administrative rights to their workstations. Malware runs in the security context of the logged-in user.

Do not allow users to disable (even temporarily) anti-malware software and controls.

Disable remote desktop connections.

Apply operating system and application security patches expediently.

Enable browser-based controls, including pop-up blocking, download screening, and automatic updates.

Implement an enterprise-wide antivirus/antimalware application. It is important that the antimalware solutions be configured to update as frequently as possible because many new pieces of malicious code are released daily.

You should also take advantage of sandbox-based solutions to provide a controlled set of resources for guest programs to run in. In a sandbox, network access is typically denied to avoid network-based infections.

Using Detection Controls

Detection controls should identify the presence of malware, alert the user (or network administrator), and in the best-case scenario stop the malware from carrying out its mission. Detection should occur at multiple levels—at the entry point of the network, on all hosts and devices, and at the file level. Detection controls include the following:

Real-time firewall detection of suspicious file downloads.

Real-time firewall detection of suspicious network connections.

Host and network-based intrusion detection systems (IDS) or intrusion prevention systems (IPS).

Review and analysis of firewall, IDS, operating system, and application logs for indicators of compromise.

User awareness to recognize and report suspicious activity.

Antimalware and antivirus logs.

Help desk (or equivalent) training to respond to malware incidents.
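The log review control listed above can be partly automated. The following is a minimal sketch, assuming that logs are plain-text lines and that the indicators of compromise come from a threat intelligence feed. The function name `find_ioc_hits`, the `KNOWN_BAD` set, and the log format are hypothetical, and the addresses are taken from documentation-only ranges.

```python
import re
from typing import Iterable

# Hypothetical indicators of compromise (documentation-range values only).
KNOWN_BAD = {"203.0.113.45", "198.51.100.7", "malware.example.net"}

def find_ioc_hits(lines: Iterable[str], indicators: set[str]) -> list[tuple[int, str, str]]:
    """Return (line_number, indicator, line) for every log line that mentions an indicator."""
    hits = []
    for number, line in enumerate(lines, start=1):
        # Split into tokens so that 198.51.100.7 does not match 198.51.100.70.
        tokens = set(re.split(r"[^\w.\-]+", line))
        for indicator in sorted(tokens & indicators):
            hits.append((number, indicator, line.rstrip()))
    return hits

# Hypothetical firewall and DNS log lines.
sample_log = [
    "2024-01-05 10:00:01 ALLOW src=10.0.0.12 dst=93.184.216.34 port=443",
    "2024-01-05 10:00:07 ALLOW src=10.0.0.15 dst=203.0.113.45 port=8080",
    "2024-01-05 10:01:30 DNS src=10.0.0.15 query=malware.example.net",
]

for number, indicator, line in find_ioc_hits(sample_log, KNOWN_BAD):
    print(f"line {number}: matched {indicator}")
```

Exact-token matching keeps the sketch simple. A production tool would more likely parse each log format into fields and correlate hits across sources.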

What Is Antivirus Software?

Antivirus (AV) software is used to detect, contain, and in some cases eliminate malicious software. Most AV software employs two techniques—signature-based recognition and behavior-based (heuristic) recognition. A common misconception is that AV software is 100% effective against malware intrusions. Unfortunately, that is not the case. Although AV applications are an essential control, they are increasingly limited in their effectiveness. This is due to three factors—the sheer volume of new malware, the phenomenon of “single-instance” malware, and the sophistication of blended threats.

The core of AV software is known as the “engine.” It is the basic program. The program relies on virus definition files (known as DAT files) to identify malware. The definition files must be continually updated by the software publisher and then distributed to every user. This was a reasonable task when the number and types of malware were limited. New versions of malware are increasing exponentially, thus making research, publication, and timely distribution a next-to-impossible task. Complicating this problem is the phenomenon of single-instance malware—that is, variants only used one time. The challenge here is that DAT files are developed using historical knowledge, and it is impossible to develop a corresponding DAT file for a single instance that has never been seen before. The third challenge is the sophistication of malware—specifically, blended threats. A blended threat occurs when multiple variants of malware (worms, viruses, bots, and so on) are used in concert to exploit system vulnerabilities. Blended threats are specifically designed to circumvent AV and behavioral-based defenses.
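To see why single-instance malware defeats definition files, consider the simplest possible form of signature: a cryptographic hash of a previously seen sample. The sketch below is an illustration under that assumption only. Real AV engines use far richer signatures and heuristics, and the names `DEFINITIONS` and `is_known_malware` are hypothetical.

```python
import hashlib

def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()

# Hypothetical "definition file": hashes of samples that have been seen before.
known_sample = b"example malicious payload v1"
DEFINITIONS = {sha256_hex(known_sample)}

def is_known_malware(data: bytes, definitions: set[str]) -> bool:
    """Signature check: flag the data only if its hash is already in the definitions."""
    return sha256_hex(data) in definitions

# A variant that differs by a single byte hashes to a completely different value,
# so a definition built from historical samples does not recognize it.
variant = known_sample + b" "

print(is_known_malware(known_sample, DEFINITIONS))
print(is_known_malware(variant, DEFINITIONS))
```

The first check succeeds because the sample is in the definitions. The second fails even though the payload is functionally the same, which is the gap that behavior-based recognition tries to close.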

Numerous antivirus and antimalware solutions on the market are designed to detect, analyze, and protect against both known and emerging endpoint threats. The following are examples of commonly used antivirus and antimalware software:

ZoneAlarm PRO Antivirus+, ZoneAlarm PRO Firewall, and ZoneAlarm Extreme Security

F-Secure Anti-Virus

Kaspersky Anti-Virus

McAfee AntiVirus

Panda Antivirus

Sophos Antivirus

Norton AntiVirus

ClamAV

Immunet AntiVirus

There are numerous other antivirus software companies and products.

ClamAV is an open source antivirus engine sponsored and maintained by Cisco and non-Cisco engineers. You can download ClamAV from www.clamav.net. Immunet is a free community-based antivirus software maintained by Cisco Sourcefire. You can download Immunet from www.immunet.com.

Personal firewalls and host intrusion prevention systems (HIPSs) are software applications that you can install on end-user machines or servers to protect them from external security threats and intrusions. The term personal firewall typically applies to basic software that can control Layer 3 and Layer 4 access to client machines. HIPS provide several features that offer more robust security than a traditional personal firewall, such as host intrusion prevention and protection against spyware, viruses, worms, Trojans, and other types of malware.

FYI: What Are the OSI and TCP/IP Models?

Two main models are currently used to explain the operation of an IP-based network. These are the TCP/IP model and the Open System Interconnection (OSI) model. The TCP/IP model is the foundation for most modern communication networks. Every day, each of us uses some application based on the TCP/IP model to communicate. Think, for example, about a task we consider simple: browsing a web page. That simple action would not be possible without the TCP/IP model.

The TCP/IP model’s name includes the two main protocols we discuss in the course of this chapter: Transmission Control Protocol (TCP) and Internet Protocol (IP). However, the model goes beyond these two protocols and defines a layered approach that can map nearly any protocol used in today’s communication.

In its original definition, the TCP/IP model included four layers, where each of the layers would provide transmission and other services for the level above it. These are the link layer, internet layer, transport layer, and application layer.

In its most modern definition, the link layer is split into two additional layers to clearly demark the physical and data link type of services and protocols included in this layer. The internet layer is also sometimes called the networking layer, which is based on another well-known model, the Open System Interconnection (OSI) model.

The OSI reference model is another model that uses abstraction layers to represent the operation of communication systems. The idea behind the design of the OSI model is to be comprehensive enough to take into account advancement in network communications and to be general enough to allow several existing models for communication systems to transition to the OSI model.

The OSI model presents several similarities with the TCP/IP model described above. One of the most important similarities is the use of abstraction layers. As with TCP/IP, each layer provides service for the layer above it within the same computing device while it interacts at the same layer with other computing devices. The OSI model includes seven abstract layers, each representing a different function and service within a communication network:

Physical layer—Layer 1 (L1): Provides services for the transmission of bits over the data link.

Data link layer—Layer 2 (L2): Includes protocols and functions to transmit information over a link between two connected devices. For example, it provides flow control and L1 error detection.

Network layer—Layer 3 (L3): This layer includes the function necessary to transmit information across a network and provides abstraction on the underlying means of connection. It defines L3 addressing, routing, and packet forwarding.

Transport layer—Layer 4 (L4): This layer includes services for end-to-end connection establishment and information delivery. For example, it includes error detection, retransmission capabilities, and multiplexing.

Session layer—Layer 5 (L5): This layer provides services to the presentation layer to establish a session and exchange presentation layer data.

Presentation layer—Layer 6 (L6): This layer provides services to the application layer to deal with specific syntax, which is how data is presented to the end user.

Application layer—Layer 7 (L7): This is the last (or first) layer of the OSI model (depending on how you see it). It includes all the services of a user application, including the interaction with the end user.

Figure 8-7 illustrates how each layer of the OSI model maps to the corresponding TCP/IP layer.

FIGURE 8-7 OSI and TCP/IP Models
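The mapping can also be written down as a small lookup table. This sketch assumes the conventional correspondence between the seven OSI layers and the original four-layer TCP/IP model described above. The dictionary and function names are illustrative only.

```python
# OSI layer number -> (OSI layer name, corresponding TCP/IP layer).
# Assumes the original four-layer TCP/IP model (link, internet, transport, application).
OSI_TO_TCPIP = {
    7: ("Application", "Application"),
    6: ("Presentation", "Application"),
    5: ("Session", "Application"),
    4: ("Transport", "Transport"),
    3: ("Network", "Internet"),
    2: ("Data link", "Link"),
    1: ("Physical", "Link"),
}

def tcpip_layer(osi_layer: int) -> str:
    """Return the TCP/IP layer that corresponds to an OSI layer number (1 to 7)."""
    return OSI_TO_TCPIP[osi_layer][1]

for number in sorted(OSI_TO_TCPIP, reverse=True):
    name, tcpip = OSI_TO_TCPIP[number]
    print(f"L{number} {name:<12} -> {tcpip}")
```

A personal firewall that controls Layer 3 and Layer 4 access, for example, is working at the internet and transport layers of the TCP/IP model.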

Attacks are becoming very sophisticated and can evade detection by traditional systems and endpoint protection. Today, attackers have the resources, knowledge, and persistence to beat point-in-time detection. Advanced malware protection solutions provide mitigation capabilities that go beyond point-in-time detection. They use threat intelligence to perform retrospective analysis and protection. These solutions also provide device and file trajectory capabilities to allow a security administrator to analyze the full spectrum of an attack.

FYI: CCleaner Supply Chain Backdoor

Security researchers at Cisco Talos found a backdoor that was included with version 5.33 of CCleaner, a system cleanup utility distributed by the antivirus vendor Avast. While investigating and analyzing the delivery code from the command and control server, they found references to several high-profile organizations, including Cisco, Intel, VMWare, Sony, Samsung, HTC, Linksys, Microsoft, and Google Gmail, that were specifically targeted through delivery of a second-stage loader. Based on a review of the command and control tracking database, they confirmed that at least 20 victims were served specialized secondary payloads. Notably, the target list in the code consists of domains belonging to high-profile technology companies, which suggests a very focused actor seeking valuable intellectual property.

Another example of a potential supply chain attack is the set of allegations against security products such as the Kaspersky antivirus. The United States Department of Homeland Security (DHS) issued Binding Operational Directive 17-01, calling on all U.S. government departments and agencies to identify any use or presence of Kaspersky products on their information systems and to develop detailed plans to remove and discontinue present and future use of these products. This directive can be found at https://www.dhs.gov/news/2017/09/13/dhs-statement-issuance-binding-operational-directive-17-01.

In Practice

Malicious Software Policy

Synopsis: To ensure a companywide effort to prevent, detect, and contain malicious software.

Policy Statement:

The Office of Information Technology is responsible for recommending and implementing prevention, detection, and containment controls. At a minimum, antimalware software will be installed on all computer workstations and servers to prevent, detect, and contain malicious software.

Any system found to be out of date with vendor-supplied virus definition and/or detection engines must be documented and remediated immediately or disconnected from the network until it can be updated.

The Office of Human Resources is responsible for developing and implementing malware awareness and incident reporting training.

All malware-related incidents must be reported to the Office of Information Security.

The Office of Information Security is responsible for incident management.

Data Replication

The impact of malware, computer hardware failure, accidental deletion of data by users, and other eventualities is reduced with an effective data backup or replication process that includes periodic testing to ensure the integrity of the data as well as the efficiency of the procedures to restore that data in the production environment. Having multiple copies of data is essential for both data integrity and availability. Data replication is the process of copying data to a second location that is available for immediate or near-time use. Data backup is the process of copying and storing data that can be restored to its original location. A company that exists without a tested backup-and-restore or data replication solution is like a flying acrobat working without a net.

When you perform data replication, you copy and then move data between different sites. Data replication is typically measured as follows:

Recovery Time Objective (RTO): The targeted time frame in which a business process must be restored after a disruption or a disaster.

Recovery Point Objective (RPO): The maximum period of time, counted back from a major incident, for which an organization can tolerate losing data.
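The two objectives translate into simple checks against a proposed design. The following sketch assumes that the worst-case data loss is roughly the interval between copies and that the restore time has been measured in a test. The function names and the sample figures are hypothetical.

```python
from datetime import timedelta

def meets_rpo(copy_interval: timedelta, rpo: timedelta) -> bool:
    """Worst-case data loss is about one copy interval, so it must not exceed the RPO."""
    return copy_interval <= rpo

def meets_rto(measured_restore_time: timedelta, rto: timedelta) -> bool:
    """The restore time measured during testing must not exceed the RTO."""
    return measured_restore_time <= rto

# Hypothetical business requirement: lose at most 4 hours of data, be back within 2 hours.
rpo = timedelta(hours=4)
rto = timedelta(hours=2)

nightly_backup = timedelta(hours=24)
five_minute_replication = timedelta(minutes=5)

print("Nightly backup meets RPO:", meets_rpo(nightly_backup, rpo))
print("5-minute replication meets RPO:", meets_rpo(five_minute_replication, rpo))
print("90-minute restore meets RTO:", meets_rto(timedelta(minutes=90), rto))
```

With these sample numbers, a nightly backup alone cannot satisfy a four-hour RPO, which is the kind of gap that leads organizations to replicate mission-critical systems.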

Is There a Recommended Backup or Replication Strategy?

Making the decision to back up or to replicate, and how often, should be based on the impact of not being able to access the data either temporarily or permanently. Strategic, operational, financial, transactional, and regulatory requirements must be considered. You should consider several factors when designing a replication or data backup strategy. Reliability is paramount; speed and efficiency are also very important, as are simplicity, ease of use, and, of course, cost. These factors will all define the criteria for the type and frequency of the process.

Data backup strategies primarily focus on compliance and granular recovery—for example, recovering a document created a few months ago or a user’s email a few years ago.

Data replication and recovery focus on business continuity and the quick or easy resumption of operations after a disaster or corruption. One of the key benefits of data replication is minimizing the recovery time objective (RTO). Additionally, data backup is typically used for everything in the organization, from critical production servers to desktops and mobile devices. On the other hand, data replication is often used for mission-critical applications that must always be available and fully operational.

Backed-up or replicated data should be stored at an off-site location, in an environment where it is secure from theft, the elements, and natural disasters such as floods and fires. The backup strategy and associated procedures must be documented.

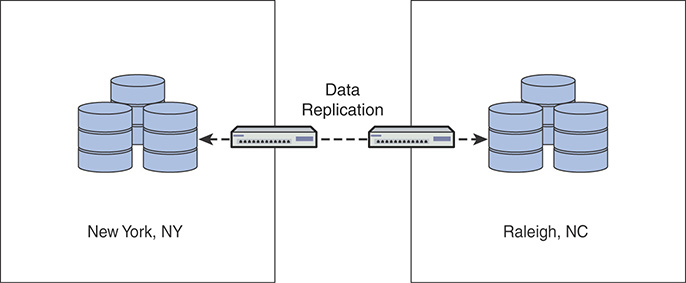

Figure 8-8 shows an example of data replication between two geographical locations. In this example, data stored at an office in New York, NY, is replicated to a site in Raleigh, North Carolina.

FIGURE 8-8 Data Replication Between Two Geographical Locations

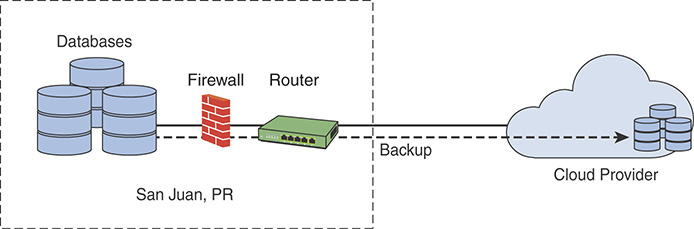

Organizations also can use data backups or replication to the cloud. Cloud storage refers to using Internet-based resources to store your data. A number of cloud-based providers, such as Google, Amazon, Microsoft Azure, Box, Dropbox, and others, offer scalable, affordable storage options that can be used in place of (or in addition to) local backup.

Figure 8-9 shows an example of an organization that has an office in San Juan, Puerto Rico, backing up its data in the cloud.

FIGURE 8-9 Cloud-Based Data Backup Example

Different data backup recovery types can be categorized as follows:

Traditional recovery

Enhanced recovery

Rapid recovery

Continuous availability

Figure 8-10 lists the benefits and elements of each data backup recovery type.

FIGURE 8-10 Data Backup Types

Understanding the Importance of Testing

The whole point of replicating or backing up data is that it can be accessed or restored if the data is lost or tampered with. In other words, the value of the backup or replication is the assurance that running a restore operation will yield success and that the data will again be available for production and business-critical application systems.

Just as proper attention must be paid to designing and testing the replication or backup solution, the accessibility or restore strategy must also be carefully designed and tested before being approved. Accessibility or restore procedures must be documented. The only way to know whether a replication or backup operation was successful and can be relied upon is to test it. It is recommended that testing access or restores of random files be conducted at least monthly.
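A restore test of random files can be scripted. The sketch below is one possible approach: it restores nothing itself, but compares a random sample of files in a source directory with their restored copies by SHA-256 checksum. The function names and directory layout are hypothetical. Comparing against the live source assumes the files have not changed since the backup, so in practice a checksum manifest recorded at backup time would be a sounder reference.

```python
import hashlib
import random
from pathlib import Path

def file_digest(path: Path) -> str:
    """Return the SHA-256 digest of a file, read in chunks to limit memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_restore(source_dir: Path, restored_dir: Path, sample_size: int = 5) -> list[str]:
    """Check a random sample of files; return relative paths that are missing or differ."""
    files = sorted(p.relative_to(source_dir) for p in source_dir.rglob("*") if p.is_file())
    sample = random.sample(files, min(sample_size, len(files)))
    failures = []
    for relative in sample:
        restored = restored_dir / relative
        if not restored.is_file() or file_digest(source_dir / relative) != file_digest(restored):
            failures.append(str(relative))
    return sorted(failures)
```

An empty result means every sampled file was restored intact. Any path in the list should be investigated and recorded as part of the documented test.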

In Practice

Data Replication Policy

Synopsis: Maintain the availability and integrity of data in the case of error, compromise, failure, or disaster.

Policy Statement:

The Office of Information Security is responsible for the design and oversight of the enterprise replication and backup strategy. Factors to be considered include but are not limited to impact, cost, and regulatory requirements.

Data contained on replicated or backup media will be protected at the same level of access control as the data on the originating system.

The Office of Information Technology is responsible for the implementation, maintenance, and ongoing monitoring of the replication and backup/restoration strategy.

The process must be documented.

The procedures must be tested on a scheduled basis.

Backup media no longer in rotation for any reason will be physically destroyed so that the data is unreadable by any means.

Secure Messaging

In 1971, Ray Tomlinson, a Department of Defense (DoD) researcher, sent the first ARPANET email message to himself. The ARPANET, the precursor to the Internet, was a United States (U.S.) Advanced Research Projects Agency (ARPA) project intended to develop a set of communications protocols to transparently connect computing resources in various geographical locations. Messaging applications were available on ARPANET systems; however, they could be used only for sending messages to users with local system accounts. Tomlinson modified the existing messaging system so that users could send messages to users on other ARPANET-connected systems. After Tomlinson’s modification was available to other researchers, email quickly became the most heavily used application on the ARPANET. Security was given little consideration because the ARPANET was viewed as a trusted community.

Current email architecture is strikingly similar to the original design. Consequently, email servers, email clients, and users are vulnerable to exploit and are frequent targets of attack. Organizations need to implement controls that safeguard the CIA of email hosts and email clients. NIST Special Publication 800-177, Trustworthy Email, recommends security practices for improving the trustworthiness of email. NIST’s recommendations are aimed to help you reduce the risk of spoofed email being used as an attack vector and the risk of email contents being disclosed to unauthorized parties. The recommendations in the special publication apply to both the email sender and receiver.

What Makes Email a Security Risk?

When you send an email, the route it takes in transit is complex, with processing and sorting occurring at several intermediary locations before arriving at the final destination. In its native form, email is transmitted using clear-text protocols. It is almost impossible to know if anyone has read or manipulated your email in transit. Forwarding, copying, storing, and retrieving email is easy (and commonplace); preserving confidentiality of the contents and metadata is difficult. Additionally, email can be used to distribute malware and to exfiltrate company data.

Understanding Clear Text Transmission

Simple Mail Transfer Protocol (SMTP) is the de facto message transport standard for sending email messages. Jon Postel of the University of Southern California developed SMTP in August 1982. At the most basic level, SMTP is a minimal language that defines a communications protocol for delivering email messages. After a message is delivered, users need to access the mail server to retrieve the message. The two most widely supported mailbox access protocols are Post Office Protocol (now POP3), developed in 1984, and Internet Message Access Protocol (IMAP), developed in 1988. The designers never envisioned that someday email would be ubiquitous, and as with the original ARPANET communications, reliable message delivery, rather than security, was the focus. SMTP, POP, and IMAP are all clear-text protocols. This means that the delivery instructions (including access passwords) and email contents are transmitted in a human readable form. Information sent in clear text may be captured and read by third parties, resulting in a breach of confidentiality. Information sent in clear text may be captured and manipulated by third parties, resulting in a breach of integrity.

Encryption protocols can be used to protect both authentication and contents. Encryption protects the privacy of the message by converting it from (readable) plain text into (scrambled) cipher text. Later implementations of POP and IMAP support encryption. RFC 2595, “Using TLS with IMAP, POP3 and ACAP,” introduces the use of encryption in these popular email standards.
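As an illustration of protecting mailbox access, the sketch below uses Python's standard `imaplib` and `ssl` modules to connect to an IMAP server over TLS so that the password and message contents are not sent in clear text. It is a sketch under stated assumptions: the helper names are hypothetical, and it uses implicit TLS (the `IMAP4_SSL` class) rather than the STARTTLS upgrade that RFC 2595 describes, which `imaplib` exposes separately as `IMAP4.starttls()`.

```python
import imaplib
import ssl

def strict_tls_context() -> ssl.SSLContext:
    """Build a TLS context that verifies the server certificate and host name."""
    context = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context

def open_mailbox(host: str, user: str, password: str) -> imaplib.IMAP4_SSL:
    """Connect with TLS from the first byte, then authenticate inside the encrypted session."""
    connection = imaplib.IMAP4_SSL(host, ssl_context=strict_tls_context())
    connection.login(user, password)
    return connection
```

A caller would use something like `open_mailbox("imap.example.com", "user", "secret")`, where the host name and credentials are placeholders. If certificate verification fails, the connection attempt raises an error instead of silently falling back to an unprotected session.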

We examine encryption protocols in depth in Chapter 10, “Information Systems Acquisition, Development, and Maintenance.” Encrypted email is often referred to as “secure email.” As we discussed in Chapter 5, “Asset Management and Data Loss Prevention,” email-handling standards should specify the email encryption requirements for each data classification. Most email encryption utilities can be configured to auto-encrypt based on preset criteria, including content, recipient, and email domain.

Understanding Metadata

Documents sent as email attachments or via any other communication or collaboration tools might contain more information than the sender intended to share. The files created by many office programs contain hidden information about the creator of the document, and may even include some content that has been reformatted, deleted, or hidden. This information is known as metadata.

Keep this in mind in the following situations:

If you recycle documents by making changes and sending them to new recipients (that is, using a boilerplate contract or a sales proposal).

If you use a document created by another person. In programs such as Microsoft Office, the document might list the original person as the author.

If you use a feature for tracking changes. Be sure to accept or reject changes, not just hide the revisions.
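The author information mentioned in the situations above is easy to inspect before a document is sent. A .docx file is a ZIP archive, and Word conventionally stores author details in a part named `docProps/core.xml`. The following minimal sketch reads two of those fields with the Python standard library. The function name is hypothetical, and the sketch assumes the conventional part location rather than resolving it through the package relationships.

```python
import zipfile
import xml.etree.ElementTree as ET
from typing import Optional

# XML namespaces used by the core properties part of an Office Open XML package.
NAMESPACES = {
    "cp": "http://schemas.openxmlformats.org/package/2006/metadata/core-properties",
    "dc": "http://purl.org/dc/elements/1.1/",
}

def read_docx_author_fields(path_or_file) -> dict[str, Optional[str]]:
    """Return the creator and last-modified-by values stored in a .docx file."""
    with zipfile.ZipFile(path_or_file) as archive:
        root = ET.fromstring(archive.read("docProps/core.xml"))
    creator = root.find("dc:creator", NAMESPACES)
    modifier = root.find("cp:lastModifiedBy", NAMESPACES)
    return {
        "creator": creator.text if creator is not None else None,
        "last_modified_by": modifier.text if modifier is not None else None,
    }
```

Running this against a recycled proposal will often show the original author's name rather than the sender's, which is exactly the kind of unintended disclosure described above.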

Understanding Embedded Malware

Email is an effective method to attack and ultimately infiltrate an organization. Common mechanisms include embedding malware in an attachment and directing the recipient to click a hyperlink that connects to a malware distribution site (unbeknownst to the user). Increasingly, attackers are using email to deliver zero-day attacks at targeted organizations. A zero-day exploit is one that takes advantage of a security vulnerability on the same day that the vulnerability becomes publicly or generally known.

Malware can easily be embedded in common attachments, such as PDF, Word, and Excel files, or even a picture. Not allowing any attachments would simplify email security; however, it would dramatically reduce the usefulness of email. Determining which types of attachments to allow and which to filter out must be an organizational decision. Filtering is a mail server function and is based on the file type. The effectiveness of filtering is limited because attackers can modify the file extension. In keeping with a defense-in-depth approach, allowed attachments should be scanned for malware at the mail gateway, email server, and email client.
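Because a file extension can be changed at will, a filter can compare the extension with the first bytes of the file, which identify many formats. The sketch below illustrates the idea with a handful of well-known signatures. The tables are deliberately tiny and the function names are hypothetical, so treat it as an illustration rather than a complete filter.

```python
import os
from typing import Optional

# Leading "magic" bytes of a few common formats.
MAGIC_SIGNATURES = [
    (b"%PDF", "pdf"),
    (b"PK\x03\x04", "zip"),   # ZIP containers, including .docx and .xlsx
    (b"MZ", "exe"),           # Windows executables
    (b"\x89PNG", "png"),
]

# What each allowed extension is expected to contain.
EXTENSION_TYPES = {
    ".pdf": "pdf", ".zip": "zip", ".docx": "zip",
    ".xlsx": "zip", ".exe": "exe", ".png": "png",
}

def sniff_type(content: bytes) -> Optional[str]:
    """Guess the file type from its leading bytes, or return None if unknown."""
    for magic, kind in MAGIC_SIGNATURES:
        if content.startswith(magic):
            return kind
    return None

def extension_matches_content(filename: str, content: bytes) -> bool:
    """Return True only if the extension is known and agrees with the sniffed type."""
    extension = os.path.splitext(filename)[1].lower()
    expected = EXTENSION_TYPES.get(extension)
    return expected is not None and expected == sniff_type(content)
```

An executable renamed to `invoice.pdf` begins with `MZ` rather than `%PDF`, so the check fails and the attachment can be quarantined. Content sniffing complements malware scanning at the gateway, server, and client and does not replace it.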

A hyperlink is a word, phrase, or image that is programmatically configured to connect to another document, bookmark, or location. Hyperlinks have two components—the text to display (such as www.goodplace.com) and the connection instructions. Genuine-looking hyperlinks are used to trick email recipients into connecting to malware distribution sites. Most email client applications have the option to disable active hyperlinks. The challenge here is that hyperlinks are often legitimately used to direct the recipient to additional information. In both cases, users need to be taught to not click on links or open any attachment associated with an unsolicited, unexpected, or even mildly suspicious email.
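The two components of a hyperlink can be compared automatically. The following sketch parses an HTML message body with the Python standard library and reports links whose display text looks like a host name but whose connection instructions point to a different host. The class and function names are hypothetical, and the heuristic is intentionally simple.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

class LinkCollector(HTMLParser):
    """Collect (href, display text) pairs for every anchor element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None

def host_of(value: str) -> str:
    """Extract the lowercase host name from a URL or a bare host such as www.goodplace.com."""
    parsed = urlparse(value if "://" in value else "//" + value)
    return (parsed.hostname or "").lower()

def suspicious_links(html: str) -> list[tuple[str, str]]:
    """Return (display text, href) pairs where the text names a different host than the target."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    result = []
    for href, text in collector.links:
        # Only treat the text as a host name if it looks like one.
        shown = host_of(text) if "." in text and " " not in text else ""
        if shown and shown != host_of(href):
            result.append((text, href))
    return result
```

A link that displays www.goodplace.com but connects elsewhere is flagged, while links with ordinary text such as "Click here" are ignored because there is nothing to compare. This does not replace user training, since a link with innocent-looking text can still lead to a malware distribution site.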

Controlling Access to Personal Email Applications

Access to personal email accounts should not be allowed from a corporate network. Email that is delivered via personal email applications such as Gmail bypasses all the controls that the company has invested in, such as email filtering and scanning. A fair comparison would be that you install a lock, lights, and an alarm system on the front door of your home but choose to leave the back door wide open all the time based on the assumption that the back door is really just used occasionally for friends and family.

In addition to outside threats, consideration needs to be given to both the malicious and unintentional insider threat. If an employee decides to correspond with a customer via personal email, or if an employee chooses to exfiltrate information and send it via personal email, there would be no record of the activity. From both an HR and a forensic perspective, this would hamper an investigation and subsequent response.

Understanding Hoaxes

Every year, a vast amount of money is lost, in the form of support costs and equipment workload, due to hoaxes sent by email. A hoax is a deliberately fabricated falsehood. An email hoax may be a fake virus warning or false information of a political or legal nature and often borders on criminal mischief. Some hoaxes ask recipients to take action that turns out to be damaging—deleting supposedly malicious files from their local computer, sending uninvited mail, randomly boycotting organizations for falsified reasons, or defaming an individual or group by forwarding the message.

Understanding the Risks Introduced by User Error

The three most common user errors that impact the confidentiality of email are sending email to the wrong person, choosing Reply All instead of Reply, and using Forward inappropriately.

It is easy to mistakenly send email to the wrong address. This is especially true with email clients that autocomplete addresses based on the first three or four characters entered. All users must be made aware of this and must pay strict attention to the email address entered in the To field, along with the CC and BCC fields when used.

The consequence of choosing Reply All instead of Reply can be significant. The best-case scenario is embarrassment. In the worst cases, confidentiality is violated by distributing information to those who do not have a “need to know.” In regulated sectors such as health care and banking, violating the privacy of patients and/or clients is against the law.

Forwarding has similar implications. Assume that two people have been emailing back and forth using the Reply function. Their entire conversation is contained in the message thread. Now suppose that one of them decides that something in the last email is of interest to a third person and forwards the email. In reality, what that person just did was forward the entire thread of emails that had been exchanged between the two original people. This may well not have been the person’s intent and may violate the privacy of the other original correspondent.

Are Email Servers at Risk?

Email servers are hosts that deliver, forward, and store email. Email servers are attractive targets because they are a conduit between the Internet and the internal network. Protecting an email server from compromise involves hardening the underlying operating system, the email server application, and the network to prevent malicious entities from directly attacking the mail server. Email servers should be single-purpose hosts, and all other services should be disabled or removed. Email server threats include relay abuse and DoS attacks.

Understanding Relay Abuse and Blacklisting

The role of an email server is to process and relay email. The default posture for many email servers is to process and relay any mail sent to the server. This is known as open mail relay. The ability to relay mail through a server can be (and often is) exploited by those who benefit from the illegal use of the resource. Criminals conduct Internet searches for email servers configured to allow relay. After they locate an open relay server, they use it for distributing spam and malware. The email appears to come from the company whose email server was misappropriated. Criminals use this technique to hide their identity. For the company, this is not only an embarrassment but can also result in legal and productivity ramifications.
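The difference between an open relay and a closed relay can be expressed as a simple decision rule: accept a message only when the client connects from a trusted network or the recipient belongs to a domain the server is responsible for. The following Python sketch illustrates that rule. The network range, domain name, and function name are hypothetical, and a production mail server would enforce the rule through its own configuration rather than through application code.

```python
import ipaddress

# Hypothetical values for illustration only.
TRUSTED_NETWORKS = [ipaddress.ip_network("10.0.0.0/8")]
LOCAL_DOMAINS = {"example.com"}


def should_accept(client_ip: str, recipient: str) -> bool:
    """Return True if a message may be accepted under a closed-relay rule."""
    addr = ipaddress.ip_address(client_ip)
    from_trusted_client = any(addr in net for net in TRUSTED_NETWORKS)

    recipient_domain = recipient.rsplit("@", 1)[-1].lower()
    for_local_domain = recipient_domain in LOCAL_DOMAINS

    # An open relay would effectively return True for every message.
    return from_trusted_client or for_local_domain
```

With these assumed values, an outside client sending to an outside recipient is refused, which is exactly the traffic a spammer would try to push through an open relay.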

In response to the deluge of spam and email malware distribution, blacklisting has become a standard practice. A blacklist is a list of email addresses, domain names, or IP addresses known to send unsolicited commercial email (spam) or email-embedded malware. The process of blacklisting is to use the blacklist as an email filter. The receiving email server checks the incoming emails against the blacklist, and when a match is found, the email is denied.
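The filtering step described above can be sketched in a few lines of Python. This is a minimal illustration, not a description of any particular product: the blacklist entries and the function name are hypothetical, and the entries use addresses and names reserved for documentation.

```python
import ipaddress

# Hypothetical blacklist entries for illustration only.
BLOCKED_NETWORKS = [ipaddress.ip_network("198.51.100.0/24")]
BLOCKED_DOMAINS = {"spam.example"}
BLOCKED_ADDRESSES = {"bulk@mailer.example"}


def is_blacklisted(sender: str, source_ip: str) -> bool:
    """Return True if the sender address, sender domain, or source IP is listed."""
    sender = sender.lower()
    domain = sender.rsplit("@", 1)[-1]
    addr = ipaddress.ip_address(source_ip)
    return (
        sender in BLOCKED_ADDRESSES
        or domain in BLOCKED_DOMAINS
        or any(addr in net for net in BLOCKED_NETWORKS)
    )
```

A receiving server that applied this check would deny the message whenever the function returns True and continue normal processing otherwise.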

Understanding Denial of Service Attacks

SMTP is especially vulnerable to DoS and DDoS attacks because, by design, it accepts and queues incoming emails. To mitigate the effects of email DoS attacks, the mail server can be configured to limit the amount of operating system resources it can consume. Some examples include configuring the mail server application so that it cannot consume all available space on its hard drives or partitions, limiting the size of attachments that are allowed, and ensuring log files are stored in a location that is sized appropriately.
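The resource limits described above amount to two checks made before a message is queued: is the message within the permitted size, and will queuing it leave enough free space on the partition? The Python sketch below illustrates the idea. The two thresholds and the function names are hypothetical; real mail servers expose comparable limits as configuration settings.

```python
import shutil

# Hypothetical limits for illustration only.
MAX_MESSAGE_BYTES = 25 * 1024 * 1024   # cap on message size, attachments included
MIN_FREE_BYTES = 1024 * 1024 * 1024    # free space that must remain on the queue partition


def can_queue(message_size: int, free_bytes: int) -> bool:
    """Accept a message only if it is within the size cap and
    queuing it would still leave the required free space."""
    if message_size > MAX_MESSAGE_BYTES:
        return False
    return free_bytes - message_size >= MIN_FREE_BYTES


def queue_free_bytes(queue_path: str) -> int:
    """Report the free space on the partition that holds the mail queue."""
    return shutil.disk_usage(queue_path).free
```

Keeping the decision in `can_queue` separate from the disk measurement makes the rule easy to test without filling a real partition.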

Other Collaboration and Communication Tools

Organizations now use more than just email. Many use Slack, Cisco Spark, WebEx, Telepresence, and other collaboration tools for internal communications. Most of these services or products provide encryption capabilities, which differ from one to another and can include encryption of data in transit and encryption of data at rest. Most are also cloud services. You must have a sound strategy for understanding and managing the risk of each of these solutions, including knowing which risks you can control and which you cannot.

Are Collaboration and Communication Services at Risk?

Absolutely! Just like email, collaboration tools like WebEx, Slack, and others need to be evaluated. This is why the United States Federal Government created the Federal Risk and Authorization Management Program, or FedRAMP. FedRAMP is a program that specifies a standardized approach to security assessment, authorization, and continuous monitoring for cloud products and services. This includes cloud services such as Cisco WebEx.

According to its website (https://www.fedramp.gov), the following are the goals of FedRAMP:

Accelerate the adoption of secure cloud solutions through reuse of assessments and authorizations

Increase confidence in security of cloud solutions

Achieve consistent security authorizations using a baseline set of agreed-upon standards to be used for cloud product approval in or outside of FedRAMP

Ensure consistent application of existing security practice

Increase confidence in security assessments

Increase automation and near real-time data for continuous monitoring

Also as defined on its website, the following are the benefits of FedRAMP:

Increase re-use of existing security assessments across agencies

Save significant cost, time, and resources—“do once, use many times”

Improve real-time security visibility

Provide a uniform approach to risk-based management

Enhance transparency between government and Cloud Service Providers (CSPs)

Improve the trustworthiness, reliability, consistency, and quality of the Federal security authorization process

In Practice

Email and Email Systems Security Policy

Synopsis: To recognize that email and messaging platforms are vulnerable to unauthorized disclosure and attack, and to assign responsibility for safeguarding said systems.

Policy Statement:

The Office of Information Security is responsible for assessing the risk associated with email and email systems. Risk assessments must be performed at a minimum biannually or whenever there is a change trigger.

The Office of Information Security is responsible for creating email security standards, including but not limited to attachment and content filtering, encryption, malware inspection, and DDoS mitigation.

External transmission of data classified as “protected” or “confidential” must be encrypted.

Remote access to company email must conform to the corporate remote access standards.

Access to personal web-based email from the corporate network is not allowed.

The Office of Information Technology is responsible for implementing, maintaining, and monitoring appropriate controls.

The Office of Human Resources is responsible for providing email security user training.

Activity Monitoring and Log Analysis

NIST defines a log as a record of the events occurring within an organization’s systems and networks. Logs are composed of log entries; each entry contains information related to a specific event that has occurred within a system or network. Security logs are generated by many sources, including security software, such as AV software, firewalls, and IDS/IPS systems; operating systems on servers, workstations, and networking equipment; and applications.

Another example of “records” from network activity is NetFlow. NetFlow was initially created for billing and accounting of network traffic and to measure other IP traffic characteristics, such as bandwidth utilization and application performance. NetFlow has also been used as a network-capacity planning tool and to monitor network availability. Today, NetFlow is also used as a network security tool because its reporting supports nonrepudiation, anomaly detection, and investigation. As network traffic traverses a NetFlow-enabled device, the device collects traffic flow information and provides a network administrator or security professional with detailed information about such flows.

Internet Protocol Flow Information Export (IPFIX) is a network flow standard led by the Internet Engineering Task Force (IETF). IPFIX was designed to provide a common, universal standard for exporting flow information from routers, switches, firewalls, and other infrastructure devices. IPFIX defines how flow information should be formatted and transferred from an exporter to a collector.
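To make the idea of a flow record concrete, the following Python sketch groups observed packets into flows and keeps a running packet and byte count for each. It is a simplification for illustration: it assumes a flow is identified by source address, destination address, source port, destination port, and protocol, whereas real exporters may use additional key fields and export formats. All class and function names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, NamedTuple


class FlowKey(NamedTuple):
    """Fields assumed here to identify a single flow."""
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str


@dataclass
class FlowStats:
    packets: int = 0
    byte_count: int = 0


def aggregate(packets: Iterable[tuple]) -> Dict[FlowKey, FlowStats]:
    """Group (src_ip, dst_ip, src_port, dst_port, protocol, size) tuples into flows."""
    flows: Dict[FlowKey, FlowStats] = defaultdict(FlowStats)
    for src_ip, dst_ip, src_port, dst_port, protocol, size in packets:
        stats = flows[FlowKey(src_ip, dst_ip, src_port, dst_port, protocol)]
        stats.packets += 1
        stats.byte_count += size
    return dict(flows)
```

An analyst reviewing the resulting records sees who talked to whom, over which service, and how much data moved, without needing the packet contents.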

Logs are a key resource when performing auditing and forensic analysis, supporting internal investigations, establishing baselines, and identifying operational trends and long-term problems. Routine log analysis is beneficial for identifying security incidents, policy violations, fraudulent activity, and operational problems. Third-party security specialists should be engaged for log analysis if in-house knowledge is not sufficient.

Big data analytics is the practice of studying large amounts of data of a variety of types and from a variety of sources to learn interesting patterns, unknown facts, and other useful information. Big data analytics can play a crucial role in cybersecurity. Many in the industry are changing the tone of their conversation: the question is no longer if or when your network will be compromised; the assumption is that your network has already been hacked or compromised. They suggest focusing on minimizing the damage and increasing visibility to aid in identification of the next hack or compromise.

Advanced analytics can be run against very large, diverse data sets to find indicators of compromise (IOCs). These data sets can include different types of structured and unstructured data processed in a “streaming” fashion or in batches. Any organization can collect data just for the sake of collecting data; however, the usefulness of that data depends on whether it is actionable enough to support decisions and whether it is regularly monitored and analyzed.

What Is Log Management?

Log management activities involve configuring the log sources, including log generation, storage, and security; performing analysis of log data; initiating appropriate responses to identified events; and managing the long-term storage of log data. Log management infrastructures are typically based on one of the two major categories of log management software: syslog-based centralized logging software and security information and event management (SIEM) software. Syslog provides an open framework based on message type and severity. SIEM software includes commercial applications and often uses proprietary processes. NIST Special Publication 800-92, Guide to Computer Security Log Management, published September 2006, provides practical, real-world guidance on developing, implementing, and maintaining effective log management practices throughout an enterprise. The guidance in SP 800-92 covers several topics, including establishing a log management infrastructure.
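In the syslog protocol as specified in RFC 5424, message type (the facility) and severity are carried together in a single priority value at the start of each message, calculated as the facility number multiplied by 8 plus the severity number. The following Python sketch decodes that value. The function name is hypothetical, and the sketch handles only the priority field, not the rest of the message.

```python
import re

# Severity names in numeric order (0 through 7), per RFC 5424.
SEVERITIES = [
    "Emergency", "Alert", "Critical", "Error",
    "Warning", "Notice", "Informational", "Debug",
]

PRI_PATTERN = re.compile(r"^<(\d{1,3})>")


def decode_priority(message: str):
    """Return (facility_number, severity_name) from a syslog message's <PRI> field."""
    match = PRI_PATTERN.match(message)
    if match is None:
        raise ValueError("message does not start with a <PRI> field")
    pri = int(match.group(1))
    if pri > 191:  # highest valid value: facility 23, severity 7
        raise ValueError("priority value out of range")
    facility, severity = divmod(pri, 8)
    return facility, SEVERITIES[severity]
```

For example, a message that begins with `<34>` decodes to facility 4 (security and authorization messages) with a severity of Critical, which a log management system can use to route or prioritize the entry.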

Prioritizing and Selecting Which Data to Log

Ideally, data would be collected from every significant device and application on the network. The challenge is that network devices and applications can generate hundreds of events per minute. A network with even a small number of devices can generate millions of events per day. The sheer volume can overwhelm a log management program. Prioritization and inclusion decisions should be based on system or device criticality, data protection requirements, vulnerability to exploit, and regulatory requirements. For example, websites and servers that serve as the public face of the company are vulnerable specifically because they are Internet accessible. E-commerce application and database servers may drive the company’s revenue and are targeted because they contain valuable information, such as credit card information. Internal devices are required for day-to-day productivity; access makes them vulnerable to insider attacks. In addition to identifying suspicious activity, attacks, and compromises, log data can be used to better understand normal activity, provide operational oversight, and provide a historical record of activity. The decision-making process should include information system owners as well as information security, compliance, legal, HR, and IT personnel.

Systems within an IT infrastructure are often configured to generate and send information every time a specific event happens. An event, as described in NIST SP 800-61 Revision 2, “Computer Security Incident Handling Guide,” is any observable occurrence in a system or network, whereas a security incident is an event that violates the security policy of an organization. One important task of a security operations center (SOC) analyst is to determine when an event constitutes a security incident. An event log (or simply a log) is a formal record of an event and includes information about the event itself. For example, a log may contain a timestamp, an IP address, an error code, and so on.
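The distinction between an event and an incident can be illustrated with a short Python sketch. It parses log entries that contain a timestamp, an IP address, and an error code, and then applies a policy rule to decide which events warrant attention as possible incidents. Everything specific here is an assumption made for illustration: the log line layout, the `E401` error code, the threshold of three failures, and the function names.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Set

FAILED_LOGIN = "E401"    # hypothetical error code for a failed login
INCIDENT_THRESHOLD = 3   # hypothetical policy: three or more failures from one address


@dataclass(frozen=True)
class LogEntry:
    timestamp: datetime
    source_ip: str
    error_code: str
    message: str


def parse_entry(line: str) -> LogEntry:
    """Parse a line laid out as: ISO-timestamp source-ip error-code free-text."""
    stamp, source_ip, error_code, message = line.split(" ", 3)
    return LogEntry(datetime.fromisoformat(stamp), source_ip, error_code, message)


def suspected_incident_sources(entries: Iterable[LogEntry]) -> Set[str]:
    """Each failed login is an event; repeated failures from one address
    are flagged as a possible incident under the assumed policy."""
    failures = Counter(e.source_ip for e in entries if e.error_code == FAILED_LOGIN)
    return {ip for ip, count in failures.items() if count >= INCIDENT_THRESHOLD}
```

A single failed login is recorded but not flagged, while the same address failing repeatedly crosses the policy threshold. In practice the analyst, not the script, makes the final determination.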

Event management includes administrative, physical, and technical controls that allow for the proper collection, storage, and analysis of events. Event management plays a key role in information security because it allows for the detection and investigation of a real-time attack, enables incident response, and allows for statistical and trending reporting. If an organization lacks information about past events and logs, this may reduce its ability to investigate incidents and perform a root-cause analysis.