Probably the thing I dislike most about some games is how the computer cheats. I'm playing my strategy game and I have to spend 10 minutes finding their units while they automatically know where mine are, which type they are, their energies, and so on. It's not the fact that they cheat to make the game harder, it's the fact that they cheat because the artificial intelligence is very weak. The computer adversary should know just about the same information as the player. If you look at a unit, you don't see their health, their weapons, and their bullets. You just see a unit and, depending on your units, you respond to it. That's what the computer should do; that's what artificial intelligence is all about.

In this chapter I will first give you a quick overview of several types of artificial intelligence, and then you will see how you can apply one or two to games. In this chapter, I'm going to go against the norm for this book and explain the concepts with little snippets of code instead of complete programs. The reason I'm doing this is because the implementation of each field of artificial intelligence is very specific, and where is the fun in watching a graph give you the percentage of the decisions if you can't actually see the bad guy hiding and cornering you? Complete examples would basically require a complete game! For this reason, I will go over several concrete artificial intelligence examples, giving only the theory and some basic code for the implementation, and it will be up to you to choose the best implementation for what you want to do.

Here is a breakdown of the major topics in this chapter:

There are many fields of artificial intelligence; some are more game-oriented and others are more academic. Although it is possible to use almost any of them in games, there are a few that stand out, and they will be introduced and explained in this section.

Expert systems solve problems that are usually solved by specialized humans. For example, if you go to a doctor, he will analyze you (either by asking you a set of questions or doing some analysis himself), and according to his knowledge, he will give you a diagnosis.

An expert system could be the doctor if it had a broad enough knowledge base. It would ask you a set of questions, and depending on your answers, it would consult its knowledge base and give you a diagnosis. The system checks each of your answers with the possible answers in its knowledge base, and depending on your answer, it asks you other questions until it can easily give you a diagnosis.

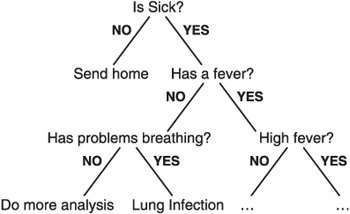

For a sample knowledge tree, take a look at Figure 20.1. As you can see, a few questions would be asked, and according to the answers, the system would follow the appropriate tree branch until it reached a leaf.

Very simple medical expert system this could be. Note that this is all just pseudocode, based on a fictional scripting language, and will not compile in a compiler like Dev-C++ or Visual C++. This is not intended to be a functional example, just a glimpse at what an expert system's scripting language might look like.

Answer = AskQuestion ("Do you have a fever?");

if (Answer == YES)

Answer = AskQuestion ("Is it a high fever (more than 105.8 F)?");

if (Answer == YES)

Solution = "Go to a hospital now!";

end if

Is Sick?

NO YES

Has a fever?

NO YES

Has problems breathing?

NO YES

High fever?

NO YES

Send home

. . . Lung Infection Do more analysis . . .

else

Answer = AskQuestion ("Do you feel tired?");

if (Answer == YES)

Solution = "You probably have a virus, rest a few days!";

else

Solution = "Knowledge base insufficient. Further diagnosis needed.";

end if

else

Answer = AskQuestion ("Do you have problems breathing?");

if (Answer == YES)

Solution = "Probably a lung infection, need to do exams."

else

Solution = "Knowledge base insufficient. Further diagnosis needed.";

end if

end ifAs you can see, the system follows a set of questions, and depending on the answers, either asks more questions or gives a solution.

Fuzzy logic expands on the concept of an expert system. While an expert system can give values of either true (1) or false (0) for the solution, a fuzzy logic system can give values in between. For example, to know whether a person is tall, an expert system would do the following (again, this is fictional script):

Answer = AskQuestion ("Is the person's height more than 50′ 7″?");

if (Answer == YES)

Solution = "The person is tall.";

else

Solution = "The person is not tall.";

end ifA fuzzy set would appear like so:

Answer = AskQuestion ("What is the person's height?");

if (Answer >= 5′ 7″)

Solution = "The person is tall.";

end if

if ((Answer < 5′ 7″) && (Answer < 5′ 3″))

Solution = "The person is almost tall.";

end if

if ((Answer < 5′ 3″) && (Answer < 4′ 11″))

Solution = "The person isn't

else

Solution = "The person isn't tall.";

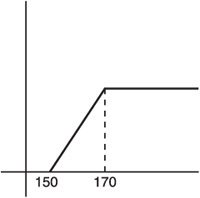

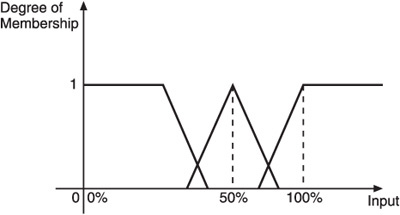

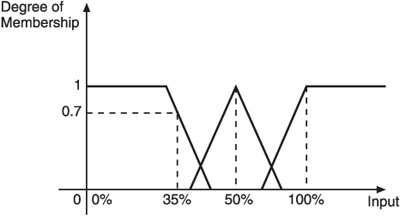

end ifThe result would be fuzzy. Usually a fuzzy set returns values from 0 (false) to 1 (true), representing the membership of the problem. In the last example, a more realistic fuzzy system would use the graph shown in Figure 20.2 to return a result.

As you can see from the graph, for heights greater than 5′ 7″, the function returns 1; for heights less than 4′ 11″, the function returns 0; and for values in between, it returns the corresponding value between 5′ 7″ and 4′ 11″. You could get this value by subtracting the height from 5′ 7″ (the true statement) and dividing by 20 (5′ 7″ –4′ 11″, which is the variance in the graph). In code, this would be something like the following:

Answer = AskQuestion ("What is the person's height?");

if (Answer >= 5′ 7″)

Solution = 1

end if

if (Answer <= 4′ 11″)

Solution = 0

else

Solution = (Answer - 5′ 7″) / (5′ 7′ - 4′ 11″)

end ifYou might be wondering why you don't simply use the equation only and discard the if clauses. The problem with doing so is that if the answer is more than 5′ 7″ or less than 4′ 11″, it will give values outside the 0 to 1 range, thus making the result invalid.

Fuzzy logic is extremely useful when you need reasoning in your game.

Using genetic algorithms is a method of computing solutions that relies on the concepts of real genetic concepts (such as evolution and hereditary logic). You might have had a biology class in high school that explained heredity, but in case you didn't, the field of biology studies the evolution of subjects when they reproduce. (Okay, maybe there is a little more to it than that, but we are only interested in this much.)

As you know, everyone has a blood type, with the possible types being A, B, AB, and O, and each of these types can be either positive or negative. When two people have a child, their types of blood will influence the type of blood the child has. All that you are is written in your DNA. Although the DNA is nothing more than a collection of bridges between four elements, it holds all the information about you, such as blood type, eye color, skin type, and so on. The little “creatures” that hold this information are called genes.

What you might not know is that although you have only one type of blood, you have two genes specifying which blood type you have. How can that be? If you have two genes describing two types of blood, how can you have only one type of blood?

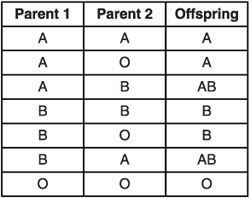

Predominance! Certain genes’ information is stronger (or more influential) than that of others, thus dictating the type of blood you have. What if the two genes’ information is equally strong? You get a hybrid of the two. For the blood type example, both type A and type B are equally strong, which makes the subject have a blood type AB. Figure 20.3 shows all the possible combinations of the blood types. From this table, you can see that both the A and B types are predominant, and the O type isn't. You can also see that positive is the predominant type.

So, how does this apply to the computer? There are various implementations that range from solving mathematical equations to fully generating artificial creatures for scientific research. Implementing a simple genetics algorithm in the computer isn't difficult. The necessary steps are described here:

Pick up a population and set up initial information values.

Order each of the information values to a flat bit vector.

Calculate the fitness of each member of the population.

Keep only the two with the highest fitness.

Mate the two to form a child.

And thus you will have a child that is the product of the two best subjects in the population. Of course, to make a nice simulator you wouldn't use only two of the subjects—you would group various subjects in groups of two and mate them to form various children, or offspring. Now I'll explain each of the steps.

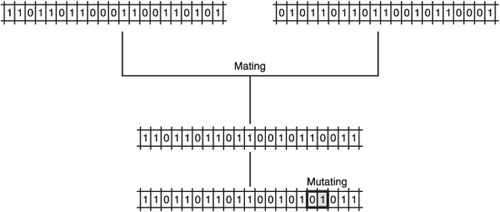

You first need to use the initial population (all the subjects, including creatures, structures, or mathematical variables) and set them up with their initial values. (These initial values can be universally known information, previous experiences of the subject, or completely random values.) Then you need to order the information to a bit vector, as shown in Figure 20.4.

Although some researchers say that an implementation of a genetic algorithm must be done with bit vectors, others say that the bit vectors can be replaced by a function or equation that will analyze each gene of the progenitors and generate the best one out of the two. To be consistent with the DNA discussion earlier, I will use bit vectors (see Figure 20.4).

You now have to calculate the fitness of each subject. The fitness value indicates whether you have a good subject (for a creature, this could be whether the creature was strong, smart, or fast, for example) or a bad subject. Calculating the fitness is completely dependent on the application, so you need to find some equation that will work for what you want to do.

After you calculate the fitness, get the two subjects with the highest fitness and mate them. You can do this by randomly selecting which gene comes from which progenitor or by intelligently selecting the best genes of each to form an even more perfect child. If you want to bring mutation to the game, you can switch a bit here and there after you get the final offspring. That's it—you have your artificial offspring ready to use. This entire process is shown in Figure 20.5.

A good use of this technology in games is to simulate artificial environments. Instead of keeping the same elements of the environment over and over, you could make elements (such as small programs) evolve to stronger, smarter, and faster elements (or objects) that can interact with the environment and you.

Neural networks attempt to solve problems by imitating the workings of a brain. Researchers started trying to mimic animal learning by using a collection of idealized neurons and applying stimuli to them to change their behavior. Neural networks have evolved much in the past few years, mostly due to the discovery of various new learning algorithms, which made it possible to implement the idea of neural networks with success. Unfortunately, there still aren't major discoveries in this field that make it possible to simulate the human brain efficiently.

The human brain is made of around 50 billion neurons (give or take a few billion). Each neuron can compute or process information and send this information to other neurons. Trying to simulate 50 billion neurons in a computer would be disastrous. Each neuron takes various calculations to be simulated, which would lead to around 200 billion calculations. You can forget about modeling the brain fully, but you can use a limited set of neurons (the human brain only uses around 10 percent of its capacity for conscious thought) to mimic basic actions of humans.

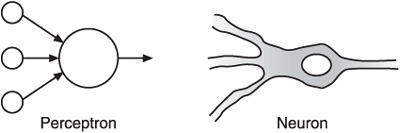

In 1962, Rosenblatt created something called a perceptron, one of the earliest neural network models. A perceptron is an attempt to simulate a neuron by using a series of inputs, weighted by some factor, which will output a value of 1 if the sum of all the weighted inputs is greater than a threshold, or 0 if it isn't. Figure 20.6 shows the idea of a perceptron and its resemblance to a neuron.

While a perceptron is just a simple way to model a neuron, many other ideas evolved from this, such as using the same values for various inputs, adding a bias or memory term, and mixing various perceptrons using the output of one as input for others. All of this together formed the current neural networks used in research today.

There are several ways to apply neural networks to games, but probably the most predominant is by using neural networks to simulate memory and learning. This field of artificial intelligence is probably one of its most interesting parts, but unfortunately, the topic is too vast to give a proper explanation of it here. Fortunately, neural networks are becoming more and more popular these days, and numerous publications are available about the subject.

Deterministic algorithms are more of a game technique than an artificial intelligence concept. Deterministic algorithms are predetermined behaviors of objects in relation to the universe problem. You will consider three deterministic algorithms in this section—random motion, tracking, and patterns. While some say that patterns aren't a deterministic algorithm, I've included them in this section because they are predefined behaviors.

Note

The universe (or universe problem) is the current state of the game that influences the subject, and it can range from the subject's health to the terrain slope, number of bullets, number of adversaries, and so on.

The first, and probably simplest, deterministic algorithm is random motion. Although random motion can't really be considered intelligence (because it's random), there are a few things you can make to simulate some simple intelligence.

As an example, suppose you are driving on a road, you reach a fork, and you really don't know your way home. You would usually take a random direction (unless you are superstitious and always take the right road). This isn't very intelligent, but you can simulate it in your games like so:

NewDirection = rand() % 2;

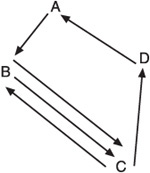

This will give a random value that is either 0 or 1, which would be exactly the same thing as if you were driving. You can use this kind of algorithm in your games, but it isn't very much fun. However, there are things to improve here. Another example? Okay. Suppose you are watching some guard patrolling an area. Two things might happen: The guard could move in a logical way, perhaps a circle or straight line, but most of the time he will move randomly. He will move from point A to B, then to C, then go to B, then C again, then D, then back to A, and repeat this in a totally different form. Take a look at Figure 20.7 to see this idea in action.

His movement can be described in code something like this:

Vector2D kGuardVelocity;

Vector2D kGuardPosition;

int kGuardCycles;

/* Initialize random velocity and cycles */

kGuardVelocity[0] = rand () % 10 - 5;

kGuardVelocity[1] = rand () % 10 - 5;

kGuardCycles = rand () % 20;

while (GameIsRunning)

{

// If we still have some cycles with the current movement

while (kGuardCycles- > 0)

{

A

D

C

B

kGuardPosition += kGuardVelocity;

}

// Change velocity and cycles

kGuardVelocity [0] = rand () % 10 - 5;

kGuardVelocity [1] = rand () % 10 - 5;

kGuardCycles = rand () % 20;

}And you have your guard. You might think this isn't very intelligent, but if you were only playing the game, you would simply see that the guard was patrolling the place, and you would think that he was being intelligent.

When you are trying to catch someone, there are a few things you must do. First, move faster than him, or else you will never catch him, and move in the direction he is from you. There is no logic in running south if he is north of you.

To solve this problem and add a little more intelligence to your games, you can use a tracking algorithm. Suppose the guard spots an intruder. He would probably start running toward him. If you wanted to do this in your game, you would use the following code:

Vector2D kGuardVelocity; Vector2D kGuardPosition; Vector2D kIntruderPosition; int iGuardSpeed; // Intruder was spotted, run to him Vector2D kDistance; kDistance = kIntruderPosition - kGuardPosition; kGuardVelocity = kDistance.Normalize(); kGuardVelocity *= iGuardSpeed; kGuardPosition += kGuardVelocity;

This code gets the direction from the intruder to the guard (the normalized distance) and moves the guard in that direction by a speed factor. Of course, there are several improvements you could make to this algorithm, such as taking into account the intruder's velocity and maybe doing some reasoning about the best route to take.

The last thing to learn with regard to tracking algorithms is about anti-tracking algorithms. An anti-tracking algorithm uses the same concepts as the tracking algorithm, but instead of moving toward the target, it runs away from the target. In the previous guard example, if you wanted the intruder to run away from the guard, you could do something like this:

mrVector2D kGuardVelocity; mrVector2D kGuardPosition; mrVector2D kIntruderPosition; mrUInt32 iGuardSpeed; // Guard has spotted the intruder, intruder run away from him mrVector2D kDistance; kDistance = kGuardPosition - kIntruderPosition; kGuardVelocity = -kDistance.Normalize(); kGuardVelocity *= iGuardSpeed; kGuardPosition += kGuardVelocity;

As you can see, the only thing you need to do is negate the distance to the target (the distance from the guard to the intruder). You could also use the distance from the intruder to the guard and not negate it, because it would produce the same final direction.

A pattern, as the name indicates, is a collection of actions. When those actions are performed in a determined sequence, a pattern (repetition) can be found. Take a look at my rice-cooking pattern, for example. There are several steps I take when I'm cooking rice:

Take the ingredients out of the cabinet.

Get the cooking pan from under the counter.

Add about two quarts of water to the pan.

Boil the water.

Add 250 grams of rice, a pinch of salt, and a little lemon juice.

Let the rice cook for 15 minutes.

And presto, I have rice ready to be eaten. (You don't mind if I eat while I write, do you?) Whenever I want to cook rice, I follow these steps or this pattern. In games, a pattern can be as simple as making an object move in a circle or as complicated as executing orders, such as attacking, defending, harvesting food, and so on. How is it possible to implement a pattern in a game? First you need to decide how a pattern is defined. For your small implementation, you can use a simple combination of two values—the action description and the action operator. The action description defines what the action does, and the action operator defines how it does it. The action operator can express the time to execute the action, how to execute it, or the target for the action, depending on what the action is.

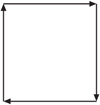

Of course, your game might need a few more arguments to an action than only these two; you can simply add the necessary parameters. Take another look at the guard example. Remember that there were two things the guard might be doing if he was patrolling the area—moving randomly (as you saw before) or in a logical way. For this example, assume the guard is moving in a logical way—that he is performing a square-styled movement, as shown in Figure 20.8.

As you can see, the guard moves around the area in a square-like pattern, which is more realistic than moving randomly. Now, doing this in code isn't difficult, but you first need to define how an action is represented. For simple systems like yours, you can define an action with a description and an operator. The description field describes the action (well, duh!), but the operator can have various meanings. It can be the time the action should be performed, the number of shots that should be fired, or anything else that relates to the action. For the guard example, the operator would be the number of feet to move. Although this system works for many actions, you might want to introduce more data to the pattern. Doing so is easy; you simply need to include more operators in the action definition. A simple example could be

class Action

{

public:

string Description;

string Operator;

};To make your guard pattern, you could do something like this:

Action GuardPattern [4]; GuardPattern[0].Description = “MoveUp"; GuardPattern[0].Operator = “10"; GuardPattern[1].Description = “MoveRight"; GuardPattern[1].Operator = “10"; GuardPattern[2].Description = “MoveDown"; GuardPattern[2].Operator = “10"; GuardPattern[3].Description = “MoveLeft"; GuardPattern[3].Operator = “10";

And your guard pattern would be defined. The last thing you need to do is the pattern processor. This isn't hard; you simply need to check the actual pattern description and, depending on the pattern description, do the action like so:

mrUInt32 iNumberOfActions = 4;

mrUInt32 iCurrentAction;

for (iCurrentAction = 0; iCurrentAction < iNumberOfActions;

iCurrentAction++)

{

if (GuardPattern [iCurrentAction].Description == "MoveUp";

{

kGuardPosition [1] += GuardPattern [iCurrentAction].Operator;

}

if (GuardPattern [iCurrentAction].Description == "MoveRight";

{

kGuardPosition [0] += GuardPattern [iCurrentAction].Operator;

}

if (GuardPattern [iCurrentAction].Description == "MoveDown";

{

kGuardPosition [1] -= GuardPattern [iCurrentAction].Operator;

}

if (GuardPattern [iCurrentAction].Description == "MoveUp";

{

kGuardPosition [0] -= GuardPattern [iCurrentAction].Operator;

}

}This would execute the pattern to make the guard move in a square. Of course, you might want to change this to only execute one action per frame or execute only part of the action per frame, but that's another story.

Random logic, tracking, and patterns should be enough to enable you to create some intelligent characters for your game, but they don't depend on the actual state of the problem to decide what to do. If for some reason a pattern tells the subject to fire the weapon and there isn't any enemy near, then the pattern doesn't seem very intelligent, does it? That's where finite state machines (or software) enter.

A finite state machine has a finite number of states that can be as simple as a light switch (either on or off) or as complicated as a VCR (idle, playing, pausing, recording, and more, depending on how much you spend on it).

A finite state software application has a finite number of states. These states can be represented as the state of the playing world. Of course, you won't create a state for each difference in an object's health. (If the object had a health ranging from 0 to 1,000, and you had 10 objects, that would mean 100,010 different states, and I don't even want to think about that case!) However, you can use ranges, such as whether an object's health is below a number, and only use the object's health for objects that are near the problem you are considering. This would reduce the states from 100,010 to about four or five.

Let's resume the guard example. If an intruder were approaching the area, until now you would only make your guard run to him. But what if the intruder is too far? Or too near? And what if the guard had no bullets in his gun? You might want to make the guard act differently. For example, consider the following cases:

Intruder is in a range of 1,000 feet: Just pay attention to the intruder.

Intruder is in a range of 500 feet: Run to him.

Intruder is in a range of 250 feet: Tell him to stop.

Intruder is in a range of 100 feet and has bullets: Shoot first, ask questions later.

Intruder is in a range of 100 feet and doesn't have bullets: Sound the alarm.

You have five scenarios, or more accurately, states. You could include more factors in the decision, such as whether there are any other guards in the vicinity, or you could get more complicated and use the guard's personality to decide. If the guard is too much of a coward, you probably never shoot, but just run away. The previous steps can be described in code like this:

// State 1

if ((DistanceToIntruder () > 500) && (DistanceToIntruder () < 1000))

{

Guard.TakeAttention ();

}

// State 2

if ((DistanceToIntruder () > 250) && (DistanceToIntruder () < 500))

{

Guard.RunToIntruder ();

}

// State 3

if ((DistanceToIntruder () > 100) && (DistanceToIntruder () < 250))

{

Guard.WarnIntruder ();

}

// State 4

if (DistanceToIntruder () < 100)

{

if (Guard.HasBullets ())

{

Guard.ShootIntruder();

}

// State 5

else

{

Guard.SoundAlarm();

}

}Not hard, was it? If you combine this with the deterministic algorithms you saw previously, you can make a very robust artificial intelligence system for your games.

I have already covered the basics of fuzzy logic, but this time I will go into several of the fuzzy logic techniques more deeply, and explain how to apply them to games.

Fuzzy logic uses some mathematical sets theory, called fuzzy set theory, to work. Fuzzy logic is based on the membership property of things. For example, while all drinks are included in the liquids group, they aren't the only things in the group; some detergents are liquids too, and you don't want to drink them, do you? The same way that drinks are a subgroup—or more accurately, a subset—of the liquids group, some drinks can also be subsets of other groups, such as wine and soft drinks. In the wine group, there are red and white varieties. In the soft drink group, there are carbonated and non-carbonated varieties.

All this talk about alcoholic and non-alcoholic drinks was for demonstration purposes only, so don't go out and drink alcohol just to see whether I'm right. Alcohol damages your brain and your capacity to code, so stay away from it (and drugs, too).

Okay, I'll stop being so paternal and get back to fuzzy logic. Grab a glass and fill it with some water (as much as you want). The glass can have various states—it can be empty, half full, or full (or anywhere in between). How do you know which state the glass is in? Take a look at Figure 20.9.

As you can see, when the glass has 0 percent water, it is totally empty; when it has 50 percent water, it is half full (or half empty, if you prefer). When it has 100 percent of its size in water, then it is full. What if you only poured 30 percent of the water? Or 10 percent? Or 99 percent? As you can see from the graph, the glass will have a membership value for each group. If you want to know the membership values of whatever percentage of water you have, you will have to see where the input (the percentage) meets the membership's graphs to get the degree of membership of each, as shown in Figure 20.10.

Membership graphs can be as simple as the one in Figure 20.10, or they can be trapezoids, exponentials, or other equation-derived functions. For the rest of this section, you will only use normal triangle shapes to define memberships. As in Figure 20.10, you can see that the same percentage of water can be part of two or more groups, where the greater membership value will determine the value's final membership.

You can also see that the final group memberships will range from zero to one. This is one of the requirements for a consistent system. To calculate the membership value on a triangle membership function, assuming that the value is inside the membership value (if it isn't, the membership is just zero), you can use the following code:

float fCenterOfTriangle = (fMaximumRange - fMinimumRange) / 2;

/* Value is in the center of the range */

if (fValue == fCenterTriangle)

{

fDegreeOfMembership = 1.0;

}

/* Value is in the first half of the range */

if (fValue < fCenterTriangle)

{

fDegreeOfMembership = (fValue - fMinimumRange) /

(fCenterTriangle - fMinimumRange);

}

/* Value is in the second half of the range */

if (fValue > fCenterTriangle)

{

fDegreeOfMembership = ((fMaximumRange - fCenterTriangle) - (fValue -

fCenterTriangle)) / (fMaximumRange - fCenterTriangle);

}And you have the degree of membership. If you paid close attention, what you did was use the appropriate line slope to check for the vertical intersection of fValue with the triangle.

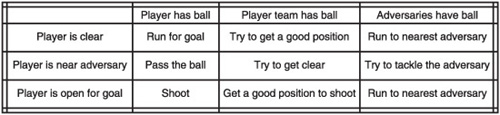

The last topic about fuzzy logic I want to cover is fuzzy matrices. This is what really makes you add intelligence to your games. First, I need to pick a game example to demonstrate this concept. Anyone like soccer?

You will be defining three states of the game.

The player has the ball.

The player's team has the ball.

The opposite team has the ball.

Although there are many other states, you will only be focusing on these three. For each of these states, there is a problem state for the player. You will be considering the following:

The player is clear.

The player is near an adversary.

The player is open for a goal.

Using these three states, as well as the previous three, you can define a matrix that will let you know which action the player should take when the two states overlap. Figure 20.11 shows the action matrix.

Using this matrix would make the player react like a normal player would. If he is clear and doesn't have the ball, he will try to get in a favorable position for a goal. If he has the ball at a shooting position, he will try to score. You get the idea.

But how do you calculate which state is active? It's easy—you use the group membership of each state for both inputs, and multiply the input row by the column row to get the final result for each cell. (It's not matrix multiplication; you simply multiply each row position by the column position to get the row column value.) This will give you the best values from which to choose. For example, if one cell has a value of 0.34 and the other cell has a value of 0.50, then the best choice is probably to do what the cell with 0.50 says. Although this isn't an exact action, it is the best you can take. There are several ways to improve this matrix, such as using randomness, evaluating the matrix with another matrix (such as the personality of the player), and many more.

Although programming a realistic model for memory and learning is hard, there is a method that I personally think is pretty simple to implement—you can store game states as memory patterns. This method will save the game state for each decision it makes and the outcome of that decision; it will store the decision result in a value from zero to one (with zero being a very bad result and one being a very good result).

For example, consider a fighting game. After every move the subject makes, the game logs the result (for example, whether the subject hit the target, missed the target, caused much damage, or was hurt after the attack). Calculate the result and adjust the memory result for that attack. This will make the computer learn what is good (or not) against a certain player, especially if the player likes to follow the same techniques over and over again.

You can use this method for almost any game, from Tic-Tac-Toe, for which you would store the player's moves and decide which would be the best counter-play using the current state of the game and the memory, to racing games, for which you would store the movement of the cars from point to point and, depending on the result, choose a new way to get to the path. The possibilities are infinite, of course. This only simulates memory, and using only memory isn't the best thing to do—but it is usually best to act based on memory instead of only pure logic.

There are various fields of artificial intelligence, and some are getting more advanced each day. The use of neural networks and genetic algorithms for learning is pretty normal in today's games. Even if all these techniques are being applied to games nowadays and all the hype is out, it doesn't mean you need to use it in your own games. If you need to model a fly, just make it move randomly. There is no need to apply the latest techniques in genetic algorithms to make the fly sound like a fly; random movement will do just as well (or better) than any other algorithm. There are a few rules I like to follow when I'm developing the artificial intelligence for a game.

If it looks intelligent, then your job is done.

Put yourself in the subject's place and code what you think you would do.

Sometimes the simpler technique is the needed one.

Always pre-design the artificial intelligence.

When nothing else works, use random logic.

This chapter has provided a small introduction to artificial intelligence. Such a broad topic could easily take a few sets of books to explain—and even then, many details would have to be left out. The use of artificial intelligence depends much on the type of game you are developing, so it is usually also very application-specific. While 3D engines can be used repeatedly, it is less likely that artificial intelligence code can. Although this chapter covered some of the basics of artificial intelligence, it was just a small subset of what you might use, so don't be afraid to experiment!

You can find the answers to this chapter quiz in Appendix A, “Chapter Quiz Answers.”