CHAPTER 13

Blind Waveform Estimation

Sensor arrays are often used in practice to separate and estimate the waveforms of superimposed signals that share similar frequency spectra but have different spatial structure. Source waveform recovery is traditionally a data communication problem, but the signal-processing techniques developed in this field also have a number of practical radar-related applications. For example, passive radar systems require a “clean copy” of the waveform transmitted by an uncooperative source to perform effective matched filtering. Such systems often receive signals from an emitter of opportunity that are contaminated by multipath arrivals and interference from independent sources. The ability to accurately estimate the signal emitted by an unknown source in the presence of multipath and other sources is not only relevant for passive radar, but is also of interest to active radar systems for interference cancelation.

In many problems of practical interest, the interfering signals do not originate from independent sources radiating on the same frequency channel, but rather arise from a single source due to multipath propagation. This gives rise to a resultant signal that is an additive mixture of amplitude-scaled, time-delayed, and possibly Doppler-shifted versions of the source waveform, which are typically incident from different directions of arrival (DOAs). In real-world environments, multipath propagation from source to receiver often occurs due to diffuse scattering from spatially extended regions of an irregular medium as opposed to ideal specular reflection. This scenario is often encountered in mobile communications, underwater acoustics, and radar systems, for example.

In particular, HF skywave signals usually consist of a relatively small number of dominant multipath components or “modes” that propagate from source to receiver along distinct paths. Each signal mode is in turn composed of many locally scattered “rays” that are clustered in some manner about the nominal mode propagation path. This phenomenon, often referred to as “micro-multipath,” gives each propagating mode its fine structure, and is a feature of so-called doubly-spread channels. The large-scale delay, Doppler, and DOA spread of the channel is due to the well-separated nominal paths of the dominant modes, while on a smaller scale, the spread is also due to the continuum of diffusely scattered rays distributed about each of these nominal paths.

Mutual interference among the different propagation modes may cause significant frequency-selective fading or signal envelope distortions at a single receiver output. This can significantly degrade or even impair the performance of systems that rely on accurate source-waveform estimation. The ability to separate the individual modes by spatial filtering in a narrowband single-input multiple-output (SIMO) system not only isolates the output of one or more usable paths for high fidelity waveform estimation, but may also enable the various signal modes to be combined constructively to benefit from the additional energy that each path provides. The waveform estimation problem may be generalized to multiple sources by considering a multiple-input multiple-output (MIMO) system. In this case, it is necessary to separate the different sources as well as the multipath components of each source for effective waveform estimation.

However, neither the propagation channel characteristics nor the signal properties may be known in practice. Moreover, parametric models that can accurately describe the received signal wavefronts may not be available due to environmental and instrumental uncertainties, including diffuse multipath scattering and array calibration errors, for example. The lack of a priori knowledge regarding both the source signal(s) and propagation channel(s) poses a major challenge for the task at hand. This situation calls for processing techniques that can recover the source waveforms from the received signal mixture in a strictly blind manner.

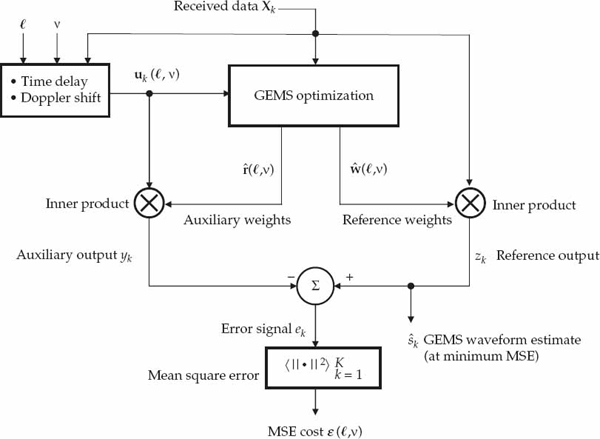

This chapter discusses blind waveform estimation techniques based on relatively mild assumptions regarding the source signal(s), propagation channel(s), and sensor array. Specifically, a new technique referred to as the Generalized Estimation of Multipath Signals (GEMS) algorithm is introduced. The ability of GEMS to estimate arbitrarily modulated source waveforms in narrowband finite-impulse-response (FIR) SIMO and MIMO systems is experimentally demonstrated and compared against benchmark approaches.

The first section formulates the problem by describing the data model, processing objectives, and main assumptions. The second section explains the relationship between existing blind signal-processing approaches and the specific problem considered to provide motivation for the GEMS technique. The third section introduces the GEMS algorithm and compares its computational complexity with a benchmark approach. The remaining sections present experimental results to illustrate the potential applications of GEMS for blind source waveform and propagation channel estimation in practical HF systems.

13.1 Problem Formulation

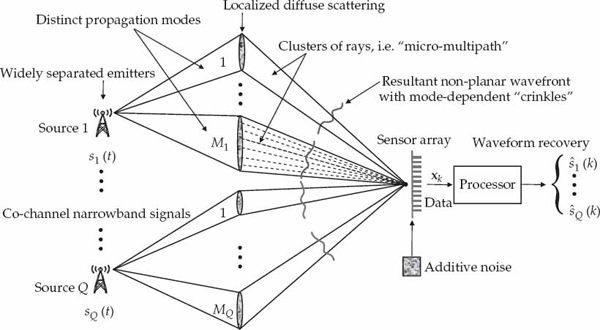

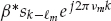

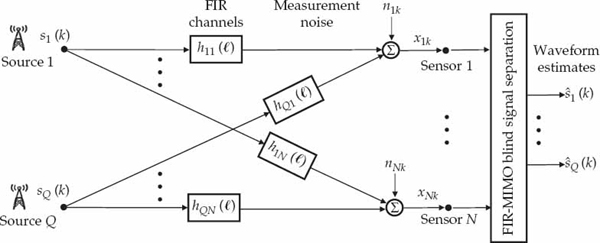

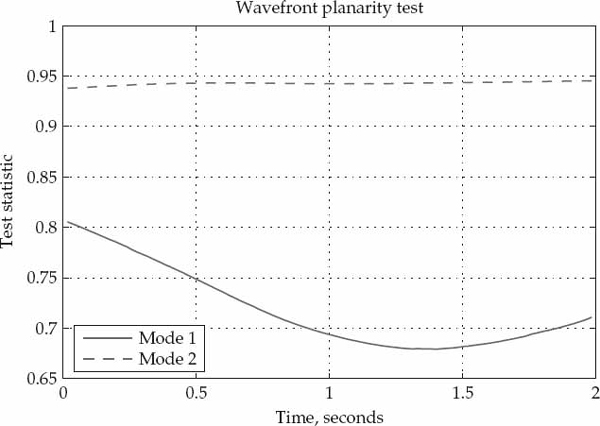

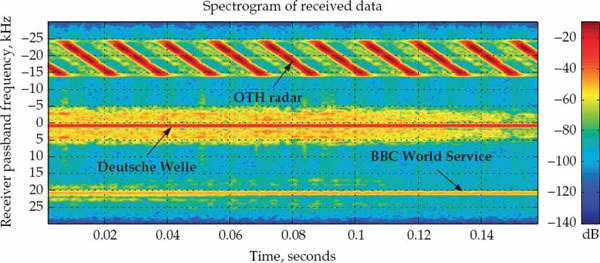

Figure 13.1 conceptually illustrates the problem considered. On the left, a number of independent sources are assumed to emit narrowband waveforms with overlapping power spectral densities. These waveforms propagate via different multipath channels before being received by a sensor array in the far-field. Multipath is due to a relatively small number of dominant signal modes, each being composed of possibly a large number of diffusely scattered rays that superimpose to produce distorted (non-planar) wavefronts with path-dependent “crinkles.”

FIGURE 13.1 Conceptual illustration of the blind waveform estimation problem. The illustration depicts a finite impulse response (FIR) multiple-input multiple-output (MIMO) system, which is the most general case considered. The FIR single-input multiple-output (SIMO) system, where there is only one source and multiple echoes, is also of interest in a number of practical applications. © Commonwealth of Australia 2011.

It is assumed that the sensor array is connected to a multi-channel digital receiver that samples the incident signal mixture and additive noise in space and time. The objective of the processor is to recover a clean copy of each transmitted source waveform. Ideally, each waveform estimate is as free as possible of contamination from multipath, other signals, and noise.

The first part of this section describes the physical significance of this problem to HF systems that receive signals via skywave propagation, and develops a mathematical model for the space-time samples acquired by the receiver array as inputs to the processor. The data model is described in relatively general terms, and may also be appropriate in other applications not restricted to HF systems or electromagnetic signals. The second part of this section defines the two main tasks of the processor, namely, source waveform recovery and channel parameter estimation. The main assumptions are also summarized for convenience. The final part of this section provides a simple motivating example to show that wavefront distortions caused by diffuse multipath scattering can be exploited to spatially resolve signal modes with closely spaced nominal DOAs.

13.1.1 Multipath Model

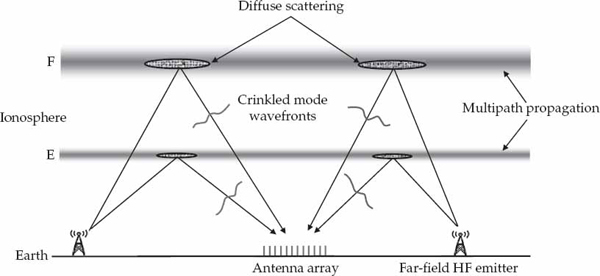

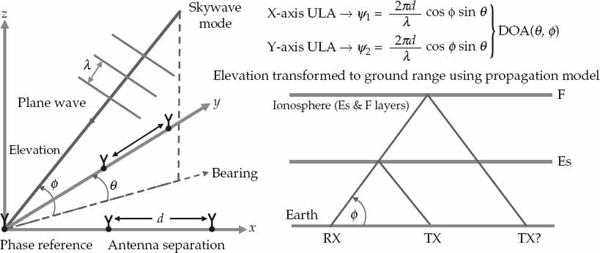

Figure 13.2 illustrates the reception of HF signals from distant sources on a ground-based antenna array via reflection from the ionosphere. Multipath arises due to a number of different “layers” or regions in the ionosphere that propagate the HF signal from source to receiver along well-separated (distinct) paths. However, each ionospheric layer does not act as a perfectly smooth specular reflection surface to the incident signal, but rather presents a spatially extended scattering region that transforms the far-field point source into a distributed signal mode at the receiver. The experimental analysis in Part II of this text confirms this characteristic of the ionospheric reflection process for individual HF signal modes.

FIGURE 13.2 Notional diagram showing the reception of HF signals via multiple skywave paths. The dominant modes arise from a relatively small number of localized scattering volumes in the E and F regions of the ionosphere. In practice, a layer may have one or more different scattering regions due to the presence of irregularities/disturbances, low- and high-angle modes, as well as magneto-ionic splitting, which produces ordinary and extraordinary waves. This simple diagram illustrates two dominant modes diffusely scattered by the E and F layers, for each source. © Commonwealth of Australia 2011.

Specifically, Figure 13.2 depicts two localized scattering regions at E and F layer heights in the ionosphere. For a particular HF source, each localized scattering region gives rise to an individual signal mode. The physical dimensions of these regions effectively depend on the "roughness" of the isoionic contours that scatter the source signal into the receiver. In practice, there is usually a relatively small number of dominant signal modes that are received from physically separated scattering regions. However, the sum of two or more propagation modes with comparable strength, but different time delays, Doppler shifts, and angles of arrival, can lead to significant frequency-selective fading of the signal received by the system.

The cone of diffusely scattered rays that emanate toward the receiver from each localized scattering region may combine coherently or incoherently with each other. The former case gives rise to a signal mode with a time-invariant crinkled wavefront that carries an amplitude-scaled, time-delayed, and possibly Doppler-shifted copy of the source waveform. In this case, the aim of signal processing at the receiver is to isolate a single ionospheric mode per source, as this recovers a suitable waveform estimate. For example, a spatial filter may be applied to preserve a selected mode from a certain source, while using spare degrees of freedom to null or attenuate other signals received by the array.

In a dynamic propagation medium, the magnitude and phase relationship between the rays diffusely scattered from a localized region will change over time. When the time scale of such changes is long compared to the observation interval, the signal mode impinges on the array as a crinkled wavefront that exhibits an effectively “frozen” spatial structure (approaching the coherently distributed case). However, if the time scale of such changes is short compared to the observation interval, the result is an incoherently distributed signal mode that is characterized by a time-varying crinkled wavefront.

The data model assumed for a multipath signal received by a sensor array will be described in four steps. The first derives a relatively general expression for the analytic continuous-time signal received from a single source. The second incorporates coherently distributed (CD) and incoherently distributed (ID) ray descriptions into this expression to derive a CD and ID signal model. The third converts the continuous-time model to a discrete-time model, which represents the digital samples input to the signal processor. The fourth generalizes this data model to the case of multiple sources. Although the end result is a signal-processing model that can be expressed in a relatively familiar mathematical form, several important steps in the derivation are included to highlight the underlying assumptions required for such a model to be valid in practice.

13.1.1.1 Received Signal

Let the scalar signal g(t) in Eqn. (13.1) be the analytic representation of the narrowband waveform emitted by a source of interest. Here, fc is the carrier frequency, and s(t) is a baseband complex envelope with effective bandwidth B. The narrowband assumption implies a small fractional bandwidth B/fc ≪ 1. An alternative definition of narrowband relevant to the development of the model is specified later. Attention is restricted to the single-source case first; the extension to multiple sources is considered later.


$$ g(t) = s(t)\, e^{j2\pi f_c t} \tag{13.1} $$

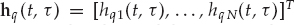

Define hn(t, τ) as the time-varying FIR function of the channel that links the source to receiving element n = 1, …, N of the sensor array at time t ∈ [0, To), where To is the observation interval. Note that the variable t denotes continuous time, while τ is the delay variable of the impulse response. For example, the impulse response at time t0 is hn(t0, τ) for τ ∈ [0, Tn), where Tn is the impulse response duration of channel n. The vector $\mathbf{h}(t,\tau) \in \mathbb{C}^N$ in Eqn. (13.2) represents the multi-channel FIR system function with support over the delay interval τ ∈ [0, Tc), where $T_c = \max_n T_n$ is the maximum impulse response duration over all N channels.

$$ \mathbf{h}(t,\tau) = \left[h_1(t,\tau), \ldots, h_N(t,\tau)\right]^T \in \mathbb{C}^N \tag{13.2} $$

The set of complex scalar signals $\{x_n(t)\}_{n=1}^{N}$ received by the N elements of the sensor array may be assembled into a spatial snapshot vector $\mathbf{x}(t) = [x_1(t), \ldots, x_N(t)]^T$. In Eqn. (13.3), this vector is defined as the convolution of the source signal with the multi-channel impulse response function, plus measurement noise $\mathbf{n}(t) \in \mathbb{C}^N$. The blind waveform estimation problem assumes that the observations x(t) are accessible, but the system function h(t, τ), source waveform g(t), and additive noise n(t) are not.

$$ \mathbf{x}(t) = \int_0^{T_c} \mathbf{h}(t,\tau)\, g(t-\tau)\, d\tau + \mathbf{n}(t) \tag{13.3} $$

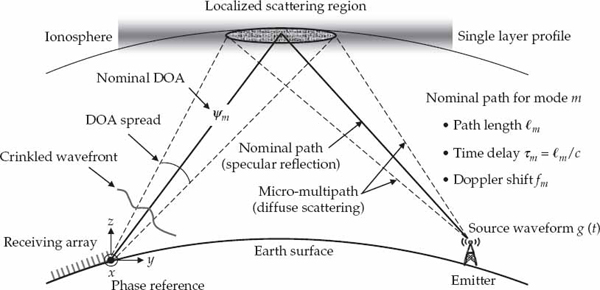

Figure 13.3 illustrates the nominal propagation path and cone of diffusely scattered rays for a single dominant mode referred to by the index m. The meaning of narrowband needs to be more carefully defined in two respects. First, it is assumed that the maximum separation between sensors in the array, Da, is such that the time-bandwidth product condition in Eqn. (13.4) is satisfied, where c is the speed of light in free space. In other words, the elements of the sensor array present a coherent aperture to the rays in a signal mode. Each of these rays is incident as a plane-wave component.

$$ \frac{D_a B}{c} \ll 1 \tag{13.4} $$

FIGURE 13.3 Schematic diagram showing the nominal path and cone of diffusely scattered rays for a single dominant propagation mode. The time delay, Doppler shift, and DOA of the nominal mode propagation path are indicated. A Doppler shift may arise due to the regular component of large-scale motion of the scattering region, and/or the movement of the source. © Commonwealth of Australia 2011.

Second, it is assumed that diffuse scattering for all dominant modes m = 1, …, M occurs within a localized region such that the maximum time-dispersion of the rays Δm relative to the nominal time delay τm of the mode propagation path is much less than the reciprocal of the signal bandwidth B. In other words, it is assumed that the condition in Eqn. (13.5) is also satisfied.

$$ \Delta_m \ll \frac{1}{B}, \qquad m = 1, \ldots, M \tag{13.5} $$
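As a rough numerical illustration of the narrowband conditions (the fractional-bandwidth requirement and Eqns. (13.4) and (13.5)), the Python sketch below evaluates the three dimensionless ratios that should each be much less than one. The function names, the parameter values, and the threshold tol used to interpret "much less than one" are illustrative assumptions rather than values taken from the text.

```python
SPEED_OF_LIGHT = 299_792_458.0  # speed of light in free space (m/s)


def narrowband_ratios(fc_hz, bandwidth_hz, aperture_m, max_ray_spread_s):
    """Dimensionless ratios that the narrowband model requires to be << 1."""
    return {
        "fractional_bandwidth": bandwidth_hz / fc_hz,                    # B / fc
        "aperture_transit": aperture_m * bandwidth_hz / SPEED_OF_LIGHT,  # Da * B / c
        "ray_time_dispersion": max_ray_spread_s * bandwidth_hz,          # Delta_m * B
    }


def is_narrowband(ratios, tol=0.1):
    """Hypothetical rule of thumb: treat '<< 1' as 'strictly below tol'."""
    return all(value < tol for value in ratios.values())


# Illustrative HF numbers: 10 MHz carrier, 10 kHz bandwidth,
# 1 km array aperture, 2 microseconds of ray time dispersion.
ratios = narrowband_ratios(10e6, 10e3, 1_000.0, 2e-6)
for name, value in ratios.items():
    print(f"{name:>22s}: {value:.4f}")
print("narrowband:", is_narrowband(ratios))
```

With these assumed numbers the aperture transit ratio is about 0.033, so a 1 km array satisfies Eqn. (13.4) comfortably for a 10 kHz signal; a tenfold wider bandwidth would raise it to about 0.33, which is no longer clearly small.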

Provided these conditions are met, h(t, τ) may be written in the form of Eqn. (13.6), where δ(·) is the Dirac delta function. Here, the impulse response is described as the sum of M dominant signal modes with distinct nominal time delays $\{\tau_m\}_{m=1}^{M}$, where M is assumed to be less than N. Each mode is transferred from source to receiver by its own time-varying channel vector cm(t). This vector represents the instantaneous summation of a large number of rays diffusely scattered from a localized region. In other words, cm(t) represents the time-varying crinkled wavefront of mode m.

$$ \mathbf{h}(t,\tau) = \sum_{m=1}^{M} \mathbf{c}_m(t)\, \delta(\tau - \tau_m) \tag{13.6} $$

Substituting Eqn. (13.6) into Eqn. (13.3) yields the received signal model of Eqn. (13.7). In this model, within-mode ray interference gives rise to relatively “slow” flat-fading. The flat-fading process is embodied in the time-variation of the channel vector cm(t). On the other hand, the summation of M modes with time-delay differences that may significantly exceed 1/B produces relatively “fast” frequency-selective fading. The latter has the potential to significantly distort the temporal signature of the source waveform. Hence, the main objective is to remove frequency-selective fading by isolating one of the dominant signal modes for waveform estimation at the processor output.

$$ \mathbf{x}(t) = \sum_{m=1}^{M} \mathbf{c}_m(t)\, g(t - \tau_m) + \mathbf{n}(t) \tag{13.7} $$
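To see why the sum of modes in Eqn. (13.7) produces frequency-selective fading, consider a single array element and hold the channel coefficients fixed over a short interval. The baseband transfer function is then a sum of complex exponentials in frequency, one per mode. The sketch below uses hypothetical gains and delays for two equal-strength modes separated by 0.5 ms: the first notch falls at 1/(2Δτ) = 1 kHz and the notches repeat every 1/Δτ = 2 kHz, so a signal several kilohertz wide sees deep spectral nulls within its band.

```python
import cmath
import math


def mode_sum_response(gains, delays_s, freq_hz):
    """Single-element baseband response sum_m c_m * exp(-j 2 pi f tau_m).

    gains:    complex coefficients c_m for one element, assumed constant (no flat fading)
    delays_s: nominal mode delays tau_m in seconds
    """
    return sum(c * cmath.exp(-2j * math.pi * freq_hz * tau)
               for c, tau in zip(gains, delays_s))


gains = [1.0, 1.0]       # two equal-strength modes (hypothetical)
delays = [0.0, 0.5e-3]   # delay difference of 0.5 ms
delta_tau = delays[1] - delays[0]
first_notch_hz = 1.0 / (2.0 * delta_tau)  # the two modes add in antiphase here

for f in (0.0, 500.0, first_notch_hz, 2.0 * first_notch_hz):
    mag = abs(mode_sum_response(gains, delays, f))
    print(f"f = {f:7.1f} Hz   |H(f)| = {mag:.3f}")
```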

For an infinite number (i.e., a continuum) of diffusely scattered rays, the channel vector may be expressed in the form of Eqn. (13.8). The complex scalar function fm(ψ, t) is the time-varying angular spectrum of mode m, where the DOA parameter vector ψ = [θ, φ] includes azimuth θ and elevation φ. The vector $\mathbf{v}(\boldsymbol{\psi}) \in \mathbb{C}^N$ denotes the plane-wave array steering vector. This vector represents the spatial response of the sensor array to a single ray that emanates from a far-field point and is incident as a plane wave with DOA ψ.

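$$ \mathbf{c}_m(t) = \int f_m(\boldsymbol{\psi}, t)\, \mathbf{v}(\boldsymbol{\psi})\, d\boldsymbol{\psi} \tag{13.8} $$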
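Eqn. (13.8) can be approximated numerically by replacing the angular-spectrum integral with a finite sum over discrete rays. The sketch below assumes a hypothetical uniform linear array with half-wavelength spacing and an azimuth-only DOA (so ψ reduces to a single angle θ); the ray angles and amplitudes are randomly generated for illustration only. A single ray yields unit-modulus elements (a plane wave), whereas a cluster of rays produces element-to-element magnitude variations, i.e., a crinkled wavefront.

```python
import cmath
import math
import random


def ula_steering(theta_rad, n_elements, spacing_wavelengths=0.5):
    """Plane-wave steering vector v(theta) of a hypothetical uniform linear array."""
    phase_step = 2.0 * math.pi * spacing_wavelengths * math.sin(theta_rad)
    return [cmath.exp(1j * phase_step * n) for n in range(n_elements)]


def channel_vector(ray_angles_rad, ray_amplitudes, n_elements):
    """Discrete version of Eqn. (13.8): c = sum_i f_i * v(theta_i)."""
    c = [0j] * n_elements
    for theta, amp in zip(ray_angles_rad, ray_amplitudes):
        v = ula_steering(theta, n_elements)
        c = [ci + amp * vi for ci, vi in zip(c, v)]
    return c


rng = random.Random(1)
n_elements = 8
nominal = math.radians(20.0)
n_rays = 50
# Rays spread uniformly within +/-5 degrees of the nominal DOA, random phases
angles = [nominal + math.radians(rng.uniform(-5.0, 5.0)) for _ in range(n_rays)]
amps = [cmath.rect(1.0, rng.uniform(0.0, 2.0 * math.pi)) / n_rays for _ in range(n_rays)]

c = channel_vector(angles, amps, n_elements)
print("plane wave |v|:", [round(abs(x), 3) for x in ula_steering(nominal, n_elements)])
print("crinkled   |c|:", [round(abs(x), 3) for x in c])
```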

In the presence of near-field scattering effects and/or array calibration errors, fm(ψ, t) may be interpreted as an equivalent angular spectrum which gives rise to the received channel vector cm(t). However, in this case, fm(ψ, t) loses its physical meaning as the angular spectrum of the scattered rays. Stated another way, this function would then no longer represent the received complex amplitudes of the downcoming rays that impinge on the sensor array from different DOAs.

13.1.1.2 Localized Scattering

A coherently distributed (CD) signal arises due to a scattering process that is effectively "frozen" or deterministic over the observation interval, such that fm(ψ, t) → fm(ψ) for t ∈ [0, To). A limitation of this description is that it does not capture Doppler frequency shifts, which are often significant in practice. Different mode Doppler shifts $\{f_m\}_{m=1}^{M}$ may be incorporated into the CD model by assuming the temporal variation of the ray angular spectrum is separable and can be modeled as in Eqn. (13.9).

$$ f_m(\boldsymbol{\psi}, t) = f_m(\boldsymbol{\psi})\, e^{j2\pi f_m t} \tag{13.9} $$

The presence of a Doppler shift captures the regular component of large-scale movement of the scattering region and source over the observation interval by introducing a linear phase-path variation common to all rays. From Eqn. (13.8), the channel vector cm(t) may then be expressed in terms of a time-invariant mode wavefront am, and nominal mode Doppler shift fm in Eqn. (13.10). The vector am may be interpreted as a crinkled wavefront that does not lie on the plane-wave array manifold in general.

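$$ \mathbf{c}_m(t) = \mathbf{a}_m\, e^{j2\pi f_m t}, \qquad \mathbf{a}_m = \int f_m(\boldsymbol{\psi})\, \mathbf{v}(\boldsymbol{\psi})\, d\boldsymbol{\psi} \tag{13.10} $$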

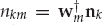

The mode wavefront may alternatively be written as am = αmvm, where αm is a complex amplitude, and $\mathbf{v}_m \in \mathbb{C}^N$ is a spatial signature vector with fixed squared L2-norm $\|\mathbf{v}_m\|^2 = N$. The spatial signature may in turn be expressed as the Hadamard (element-wise) product in Eqn. (13.11), where $\mathbf{d}_m \in \mathbb{C}^N$ is a multiplicative distortion vector that modulates an underlying plane wavefront v(ψm) parameterized by the nominal DOA ψm = [θm, φm] of mode m.

$$ \mathbf{v}_m = \mathbf{d}_m \odot \mathbf{v}(\boldsymbol{\psi}_m) \tag{13.11} $$
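The decomposition am = αmvm with vm = dm ⊙ v(ψm) can be sketched as follows. The same hypothetical half-wavelength uniform linear array is assumed, the distortion vector is an arbitrary illustrative example, and any overall scale of the distortion is absorbed by renormalizing the signature so that its squared norm equals N, leaving the mode strength entirely in αm.

```python
import cmath
import math


def ula_steering(theta_rad, n_elements, spacing_wavelengths=0.5):
    """Plane-wave steering vector v(theta) of a hypothetical uniform linear array."""
    phase_step = 2.0 * math.pi * spacing_wavelengths * math.sin(theta_rad)
    return [cmath.exp(1j * phase_step * n) for n in range(n_elements)]


def spatial_signature(distortion, theta_rad):
    """v_m = d_m (Hadamard) v(psi_m), rescaled so that ||v_m||^2 = N (cf. Eqn. (13.11))."""
    n = len(distortion)
    plane = ula_steering(theta_rad, n)
    v = [d * p for d, p in zip(distortion, plane)]  # element-wise product
    scale = math.sqrt(n / sum(abs(x) ** 2 for x in v))
    return [scale * x for x in v]


def mode_wavefront(alpha, distortion, theta_rad):
    """a_m = alpha_m * v_m."""
    return [alpha * x for x in spatial_signature(distortion, theta_rad)]


# Illustrative multiplicative distortion: small amplitude and phase ripples
d = [cmath.rect(1.0 + 0.1 * math.cos(n), 0.2 * math.sin(1.7 * n)) for n in range(6)]
v_m = spatial_signature(d, math.radians(15.0))
a_m = mode_wavefront(0.5 * cmath.exp(1j * 0.3), d, math.radians(15.0))
print("||v_m||^2 =", round(sum(abs(x) ** 2 for x in v_m), 6))
```

With an all-ones distortion vector the signature reduces to the plane wavefront v(ψm), which is a quick sanity check on the normalization.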

Substituting Eqn. (13.10) and Eqn. (13.11) into Eqn. (13.7) yields the CD diffuse multipath model in Eqn. (13.12), which has been extended to include mode Doppler shifts. The assumption of linearly independent mode wavefronts $\{\mathbf{a}_m\}_{m=1}^{M}$ is often justified for M < N dominant modes due to the different diffuse scattering processes involved, as well as the differences in the nominal mode DOAs.

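$$ \mathbf{x}(t) = \sum_{m=1}^{M} \alpha_m \left[\mathbf{d}_m \odot \mathbf{v}(\boldsymbol{\psi}_m)\right] e^{j2\pi f_m t}\, g(t - \tau_m) + \mathbf{n}(t) \tag{13.12} $$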

The incoherently distributed (ID) signal model gives rise to channel vector variations that are not separable in space and time. After the nominal mode Doppler shift is factored out, this leaves a time-varying mode wavefront, denoted by am(t). If the channel is assumed Gaussian and wide-sense stationary over the observation interval, for example, the mode wavefronts may be statistically described by a mean vector am and covariance matrix Rm, as in Eqn. (13.13). Recall that ∼ CN denotes the complex normal distribution.

$$ \mathbf{a}_m(t) \sim \mathcal{CN}\left(\mathbf{a}_m, \mathbf{R}_m\right) \tag{13.13} $$

Although Rm may have full rank N, most of the energy in the wavefront fluctuations is typically contained in a small number Im < N of eigenvalues. Defining the effective subspace as the column space of $\mathbf{Q}_m \in \mathbb{C}^{N \times I_m}$, where the columns of Qm are the Im dominant eigenvectors of Rm, the dynamic component of the mode wavefront is well-approximated by Qmςm(t), where $\boldsymbol{\varsigma}_m(t) \in \mathbb{C}^{I_m}$ is a time-varying coordinate vector. In this case, the channel vector takes the form in Eqn. (13.14).

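$$ \mathbf{c}_m(t) = \left[\mathbf{a}_m + \mathbf{Q}_m \boldsymbol{\varsigma}_m(t)\right] e^{j2\pi f_m t} \tag{13.14} $$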
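In practice Im must be chosen. One common heuristic, assumed here rather than prescribed by the text, is to retain the smallest number of dominant eigenvalues of Rm that captures a given fraction of the total wavefront-fluctuation energy (the trace). The sketch takes the eigenvalues as input; they could come, for example, from numpy.linalg.eigvalsh applied to a sample estimate of Rm. The 95% threshold and the eigenvalue profile are illustrative.

```python
def effective_dimension(eigenvalues, energy_fraction=0.95):
    """Smallest I such that the I largest eigenvalues hold >= energy_fraction of the total.

    eigenvalues: eigenvalues of a Hermitian covariance estimate R_m (any order);
    small negative values caused by estimation error are clipped to zero.
    """
    if not 0.0 < energy_fraction <= 1.0:
        raise ValueError("energy_fraction must lie in (0, 1]")
    lam = sorted((max(0.0, float(x)) for x in eigenvalues), reverse=True)
    total = sum(lam)
    if total == 0.0:
        return 0
    captured = 0.0
    for i, value in enumerate(lam, start=1):
        captured += value
        if captured >= energy_fraction * total:
            return i
    return len(lam)


# Hypothetical eigenvalue profile for N = 6 elements: energy concentrated in a few terms
eigs = [0.05, 5.0, 0.3, 2.0, 0.1, 0.05]
print("I_m =", effective_dimension(eigs))
```

For this assumed profile the three largest eigenvalues hold about 97% of the trace, so Im = 3.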

This description incorporates purely statistical ID signals with zero-mean (i.e., am = 0), and partially correlated distributed (PCD) signals, where ςm(t) changes smoothly over time as correlated (dependent) realizations. In the following, there is no requirement to invoke a specific model for am and Qm, such as the Gaussian model alluded to previously. The main assumption is that am is linearly independent of the columns of Qm. As stated earlier, linear independence is also required amongst the different modes. The ID signal model of x(t) is given by Eqn. (13.15).

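$$ \mathbf{x}(t) = \sum_{m=1}^{M} \left[\mathbf{a}_m + \mathbf{Q}_m \boldsymbol{\varsigma}_m(t)\right] e^{j2\pi f_m t}\, g(t - \tau_m) + \mathbf{n}(t) \tag{13.15} $$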

In this chapter, particular emphasis is on waveform estimation using the CD model that was extended to include Doppler shifts in Eqn. (13.12). The additive noise n(t) may be of ambient or thermal origin, and is notionally considered to be spatially and temporally white. However, arguments are made later to justify the robustness of the developed approach for signals described by the ID model of Eqn. (13.15), and additive noise that is structured or “colored.”

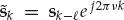

13.1.1.3 Acquired Data

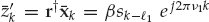

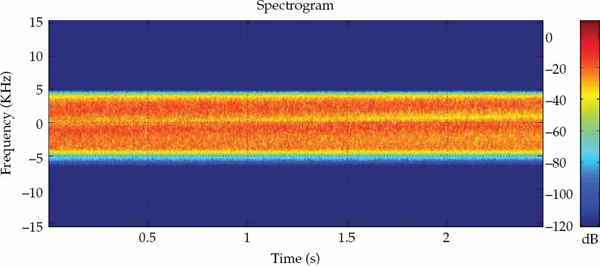

After down-conversion and baseband filtering of the received signal, the in-phase and quadrature (I/Q) outputs are uniformly sampled at time instants $t_k = kT_s$, k = 1, …, K. From Eqn. (13.1), it is straightforward to show that the samples of the source waveform are given by Eqn. (13.16). By ignoring the immaterial phase term $e^{j2\pi f_c k T_s}$, we may replace the continuous-time signal g(t) by the sampled baseband sequence s(kTs). For the single-source case, the contracted notation sk = s(kTs) will be used.

$$ g(kT_s) = s(kT_s)\, e^{j2\pi f_c k T_s} \tag{13.16} $$
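Eqn. (13.16) states that the samples of the analytic signal differ from the samples of its complex envelope only by the phase term exp(j2πfc kTs). The short Python check below, using hypothetical carrier and sampling values, forms g(kTs) from an arbitrary envelope and removes that phase term to recover s(kTs) to within floating-point error. It is a numerical identity check only, not a model of a practical receiver, which removes the carrier before sampling.

```python
import cmath
import math


def sample_analytic(envelope, fc_hz, ts_s):
    """g(k Ts) = s(k Ts) * exp(j 2 pi fc k Ts), Eqn. (13.16), for k = 0, 1, ..."""
    return [s * cmath.exp(2j * math.pi * fc_hz * k * ts_s) for k, s in enumerate(envelope)]


def remove_carrier_phase(samples, fc_hz, ts_s):
    """Multiply by exp(-j 2 pi fc k Ts) to return the baseband sequence s(k Ts)."""
    return [g * cmath.exp(-2j * math.pi * fc_hz * k * ts_s) for k, g in enumerate(samples)]


fc = 10.1e6   # hypothetical carrier frequency (Hz)
ts = 1e-4     # hypothetical sampling period (s), i.e. fs = 10 kHz
s = [1 + 0j, 0.5j, -1 + 0.25j, 0.3 - 0.7j]
g = sample_analytic(s, fc, ts)
recovered = remove_carrier_phase(g, fc, ts)
print("max reconstruction error:", max(abs(a - b) for a, b in zip(s, recovered)))
```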

Based on the CD model in Eqn. (13.12), the array snapshots xk are given by Eqn. (13.17), where νm = fm/fs is the Doppler shift normalized by the sampling frequency (fs = 1/Ts), $\ell_m = \tau_m/T_s$ is the time delay normalized by the sampling period, $\mathbf{a}_m$ is the received mode wavefront (which absorbs the constant carrier phase factor $e^{-j2\pi f_c \tau_m}$), and nk is additive noise. Provided the time-bandwidth product BTs is smaller than unity (i.e., the signal is not under-sampled), τm is not required to coincide exactly with a time-delay bin (i.e., ℓm need not be an integer). The integer case is adopted here only to simplify the description of the model.

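$$ \mathbf{x}_k = \sum_{m=1}^{M} \mathbf{a}_m\, e^{j2\pi \nu_m k}\, s_{k-\ell_m} + \mathbf{n}_k \tag{13.17} $$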
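A direct simulation of Eqn. (13.17) is a convenient way to generate test data. The Python sketch below is a minimal, unoptimized illustration under stated assumptions: sample indices start at k = 0 rather than 1, source samples before the start of the record are taken as zero, the normalized delays ℓm are integers (the simplifying case adopted in the text), and the noise is circular complex white Gaussian with a user-chosen standard deviation. All function and variable names are hypothetical.

```python
import cmath
import math
import random


def cd_snapshots(source, wavefronts, delays, dopplers, noise_std=0.0, seed=0):
    """Return K snapshots x_k = sum_m a_m exp(j 2 pi nu_m k) s_{k - l_m} + n_k.

    source:     complex baseband samples s_0 ... s_{K-1}
    wavefronts: M lists of N complex values (the mode wavefronts a_m)
    delays:     M non-negative integer normalized delays l_m
    dopplers:   M normalized Doppler shifts nu_m = f_m / fs
    """
    rng = random.Random(seed)
    n_el = len(wavefronts[0])
    snapshots = []
    for k in range(len(source)):
        x = [0j] * n_el
        for a, lag, nu in zip(wavefronts, delays, dopplers):
            if k - lag >= 0:  # samples before the record start are treated as zero
                coeff = cmath.exp(2j * math.pi * nu * k) * source[k - lag]
                x = [xi + ai * coeff for xi, ai in zip(x, a)]
        if noise_std > 0.0:
            # circular complex Gaussian noise with variance noise_std**2 per element
            x = [xi + complex(rng.gauss(0.0, noise_std), rng.gauss(0.0, noise_std)) / math.sqrt(2.0)
                 for xi in x]
        snapshots.append(x)
    return snapshots


# Two modes on a 3-element array: the second is delayed by 2 samples and Doppler shifted
src = [cmath.exp(1j * 0.4 * k) for k in range(8)]
A_cols = [[1.0, 0.8j, -0.5], [0.6, -0.3, 0.9j]]
X = cd_snapshots(src, A_cols, delays=[0, 2], dopplers=[0.0, 0.01], noise_std=0.05)
print(len(X), "snapshots of length", len(X[0]))
```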

The spatial snapshots xk may be described by the familiar array signal processing model in Eqn. (13.18), where the columns of the multipath mixing matrix contain the M mode wavefronts A = [a1, …, aM], and the M-variate multipath signal vector $\mathbf{s}_k = [e^{j2\pi\nu_1 k} s_{k-\ell_1}, \ldots, e^{j2\pi\nu_M k} s_{k-\ell_M}]^T$ contains the source waveform propagated by the different modes. Note that the definition of this vector incorporates the nominal mode time delays and Doppler shifts.

$$ \mathbf{x}_k = \mathbf{A}\mathbf{s}_k + \mathbf{n}_k \tag{13.18} $$

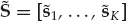

The overall data set consisting of K array snapshots acquired over a processing interval of To seconds may be represented using the matrix notation in Eqn. (13.19), where the N × K data matrix is X = [x1, …, xK], the M × K signal matrix is S = [s1, …, sK], and N = [n1, …, nK] is the noise matrix.

$$ \mathbf{X} = \mathbf{A}\mathbf{S} + \mathbf{N} \tag{13.19} $$
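The matrix form of Eqns. (13.18) and (13.19) simply moves the mode delays and Doppler shifts into the rows of S. The dependency-free sketch below (plain Python lists, hypothetical helper names, zero-valued samples before the record start, k indexed from 0) builds A from the wavefronts and S from the source sequence, then forms the noise-free product AS. For realistic array sizes a numerical library such as NumPy would normally be used instead.

```python
import cmath
import math


def mixing_matrix(wavefronts):
    """N x M matrix A = [a_1, ..., a_M], stored as a list of N rows."""
    return [list(row) for row in zip(*wavefronts)]


def multipath_signal_matrix(source, delays, dopplers):
    """M x K matrix S; row m holds exp(j 2 pi nu_m k) * s_{k - l_m} for k = 0..K-1."""
    K = len(source)
    return [[cmath.exp(2j * math.pi * nu * k) * (source[k - lag] if k >= lag else 0j)
             for k in range(K)]
            for lag, nu in zip(delays, dopplers)]


def matmul(left, right):
    """Plain-Python matrix product of two nested lists."""
    cols = list(zip(*right))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in left]


A = mixing_matrix([[1, 1], [1, -1]])  # two modes, two elements
S = multipath_signal_matrix([1, 2, 3], delays=[0, 1], dopplers=[0.0, 0.0])
X = matmul(A, S)                      # noise-free X = A S, Eqn. (13.19)
print([[round(abs(v), 6) for v in row] for row in X])
```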

By defining $\mathbf{h}(k,\ell) \in \mathbb{C}^N$ as the discrete-time multi-channel impulse response function, and L as the FIR model order determined by the maximum duration of the channel Tc, the array snapshots may be represented in the alternative form of Eqn. (13.20).

$$ \mathbf{x}_k = \sum_{\ell=0}^{L} \mathbf{h}(k,\ell)\, s_{k-\ell} + \mathbf{n}_k \tag{13.20} $$

It is readily shown that $\mathbf{h}(k,\ell) = [h_1(k,\ell), \ldots, h_N(k,\ell)]^T$ is given by Eqn. (13.21), where, by analogy with the continuous-time FIR channel function hn(t, τ), the complex scalar $h_n(k,\ell)$ denotes the impulse response that links the source to receiving element n at time k with relative delay ℓ. This model is representative of an FIR-SIMO system with time-varying channel coefficients.

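$$ \mathbf{h}(k,\ell) = \sum_{m=1}^{M} \mathbf{a}_m\, e^{j2\pi \nu_m k}\, \delta(\ell - \ell_m) \tag{13.21} $$

Here, δ(·) denotes the Kronecker delta.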
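The convolutive FIR form of Eqns. (13.20) and (13.21) can be checked numerically: each mode contributes a single nonzero tap, at delay ℓm, whose coefficient rotates with k because of the Doppler term. The sketch below builds the taps h(k, ℓ) for ℓ = 0, …, L and applies them to the source sequence. It assumes integer ℓm, L ≥ max ℓm, zero-valued samples before the record start, and uses hypothetical function names.

```python
import cmath
import math


def fir_taps(wavefronts, delays, dopplers, k, order):
    """Taps h(k, l), l = 0..order, of Eqn. (13.21); each is a length-N complex list."""
    if max(delays) > order:
        raise ValueError("FIR order L must be at least the largest normalized delay")
    n_el = len(wavefronts[0])
    taps = [[0j] * n_el for _ in range(order + 1)]
    for a, lag, nu in zip(wavefronts, delays, dopplers):
        rot = cmath.exp(2j * math.pi * nu * k)  # Doppler-induced time variation
        taps[lag] = [h + ai * rot for h, ai in zip(taps[lag], a)]
    return taps


def convolutive_snapshot(source, wavefronts, delays, dopplers, k, order):
    """Noise-free x_k = sum_{l=0}^{L} h(k, l) s_{k-l}, Eqn. (13.20)."""
    taps = fir_taps(wavefronts, delays, dopplers, k, order)
    x = [0j] * len(wavefronts[0])
    for lag, h in enumerate(taps):
        if k - lag >= 0:  # samples before the record start are treated as zero
            x = [xi + hi * source[k - lag] for xi, hi in zip(x, h)]
    return x


x2 = convolutive_snapshot([1, 2, 3], [[1, 1], [1, -1]], [0, 1], [0.0, 0.0], k=2, order=3)
print([round(v.real, 6) for v in x2])
```

Choosing an order L larger than the largest delay only adds zero taps, so the result is unchanged.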

For a total number of samples K, a time-invariant FIR-SIMO model arises only for an observation interval To = KTs that is sufficiently short to negate the effect of mode Doppler shifts. In other words, the condition $|\nu_m| K \ll 1$ is required for all modes m, such that $\mathbf{h}(k,\ell) \to \mathbf{h}(\ell)$. In this case, the time-invariant multi-channel FIR function is given by Eqn. (13.22).

$$ \mathbf{h}(\ell) = \sum_{m=1}^{M} \mathbf{a}_m\, \delta(\ell - \ell_m) \tag{13.22} $$
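The condition |νm|K ≪ 1 translates directly into a bound on the number of snapshots over which the FIR-SIMO channel may be treated as time invariant. The sketch below computes that bound from the Doppler shifts in hertz and the sampling rate. Interpreting "≪ 1" as "at most tol", with tol = 0.05, is an assumption made for illustration, as are the Doppler values. Passing the Doppler shifts of all modes of all sources gives the corresponding bound for the FIR-MIMO case.

```python
import math


def max_time_invariant_samples(doppler_hz, fs_hz, tol=0.05):
    """Largest K with max_m |nu_m| * K <= tol, where nu_m = f_m / fs.

    Returns math.inf when every Doppler shift is zero.
    """
    f_max = max(abs(f) for f in doppler_hz)
    if f_max == 0.0:
        return math.inf
    return math.floor(tol * fs_hz / f_max)


fs = 10e3                    # hypothetical sampling rate (Hz)
dopplers = [0.2, -0.5, 1.0]  # hypothetical mode Doppler shifts (Hz)
K_max = max_time_invariant_samples(dopplers, fs)
print(K_max, "samples, i.e.", K_max / fs, "s")
```

With a 1 Hz maximum Doppler shift at 10 kHz sampling the bound is 500 samples (50 ms), over which the largest mode accumulates a phase rotation of 2π × 0.05 ≈ 0.31 rad.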

Use of the CD model extended by Doppler shifts in Eqn. (13.18) suffices for describing the key elements of the waveform estimation problem in the following parts of this section. This model has been presented in the alternative mathematical forms of Eqns. (13.19) and (13.20) to explain its relationship to blind system identification (BSI) and blind signal separation (BSS) techniques described in Section 13.2. The ID version of this model will be considered in Section 13.3, where the GEMS algorithm is described.

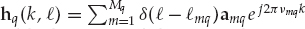

13.1.1.4 Multiple Sources

In the case of Q independent sources, the FIR-MIMO system data model of Eqn. (13.23) is a straightforward generalization of the FIR-SIMO model presented in Eqn. (13.3). The Q co-channel sources are assumed to emit different narrowband waveforms gq(t) for q = 1, …, Q. The multi-sensor channel impulse response function for source q is $\mathbf{h}_q(t,\tau) = [h_{q1}(t,\tau), \ldots, h_{qN}(t,\tau)]^T$ with maximum time duration Tq. The sources are assumed to be widely separated, such that the FIR channels hqn(t, τ) for all sources q and receivers n are sufficiently diverse to be identifiable. More will be said on the topic of identifiability in Section 13.2.

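$$ \mathbf{x}(t) = \sum_{q=1}^{Q} \int_0^{T_q} \mathbf{h}_q(t,\tau)\, g_q(t-\tau)\, d\tau + \mathbf{n}(t) \tag{13.23} $$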

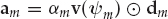

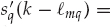

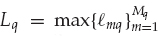

The same steps taken previously for the single-source case lead us to the discrete-time model of the received array data $\mathbf{x}_k \in \mathbb{C}^N$ in Eqn. (13.24). The scalar waveform sq(k) to be recovered is the baseband-sampled version of gq(t), where the sample index k is kept inside the brackets for the multiple-source case to avoid confusing notation. The number of dominant modes propagated along distinct paths for source q is denoted by Mq. The terms $\ell_{mq}$, νmq, and amq are the normalized mode time delays, normalized Doppler shifts, and crinkled wavefronts, respectively. The additive noise nk is assumed to be uncorrelated with all sources.

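$$ \mathbf{x}_k = \sum_{q=1}^{Q} \sum_{m=1}^{M_q} \mathbf{a}_{mq}\, e^{j2\pi \nu_{mq} k}\, s_q(k - \ell_{mq}) + \mathbf{n}_k \tag{13.24} $$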

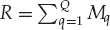

In the adopted FIR-MIMO model, the total number of signal components is $R = \sum_{q=1}^{Q} M_q$. It is further assumed that multipath is present for all sources, i.e., Mq > 1. With reference to Eqn. (13.18), the array snapshots xk resulting for Q sources may be expressed as in Eqn. (13.25). Here, $\mathbf{A}_q = [\mathbf{a}_{1q}, \ldots, \mathbf{a}_{M_q q}]$ and $\mathbf{s}_q(k) = [e^{j2\pi\nu_{1q}k} s_q(k-\ell_{1q}), \ldots, e^{j2\pi\nu_{M_q q}k} s_q(k-\ell_{M_q q})]^T$ are the multipath mixing matrix and multipath signal vector for source q, respectively, defined analogously to the single-source case.

$$ \mathbf{x}_k = \sum_{q=1}^{Q} \mathbf{A}_q \mathbf{s}_q(k) + \mathbf{n}_k \tag{13.25} $$

The data may be expressed as in Eqn. (13.26), where the N × R matrix H = [A1, …, AQ] contains the wavefronts of all R signal components, while the augmented (source and multipath) signal vector $\mathbf{p}_k = [\mathbf{s}_1^T(k), \ldots, \mathbf{s}_Q^T(k)]^T \in \mathbb{C}^R$ stacks the multipath signal vectors of all Q sources. Recall that the multipath signal vector sq(k) incorporates the time delays $\{\ell_{mq}\}$ and Doppler shifts {νmq} for all modes m = 1, …, Mq of source q.

$$ \mathbf{x}_k = \mathbf{H}\mathbf{p}_k + \mathbf{n}_k \tag{13.26} $$

In this case, the totality of the data acquired during the processing interval may be represented in the matrix form of Eqn. (13.27), where the R × K augmented signal matrix P = [p1, …, pK]. This multiple-source model will not be considered in the following parts of this section, but will be used in Sections 13.2 and 13.3.

$$ \mathbf{X} = \mathbf{H}\mathbf{P} + \mathbf{N} \tag{13.27} $$
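The stacking in Eqns. (13.26) and (13.27) can be made concrete: concatenating the per-source mixing matrices column-wise and the per-source multipath signal vectors end to end leaves the noise-free snapshot unchanged, because H pk = Σq Aq sq(k). The plain-Python sketch below, with hypothetical helper names and small illustrative matrices, assembles H and pk and checks that the stacked length equals R = Σq Mq.

```python
def augmented_model(mixing_matrices, signal_vectors):
    """Return H = [A_1, ..., A_Q] (N x R, list of rows) and p_k = [s_1(k); ...; s_Q(k)].

    mixing_matrices: Q matrices A_q, each a list of N rows with M_q entries
    signal_vectors:  Q vectors s_q(k), each with M_q entries
    """
    n_el = len(mixing_matrices[0])
    if any(len(A) != n_el for A in mixing_matrices):
        raise ValueError("all A_q must have the same number of rows N")
    H = [[entry for A in mixing_matrices for entry in A[i]] for i in range(n_el)]
    p = [entry for s in signal_vectors for entry in s]
    if len(p) != len(H[0]):
        raise ValueError("R = sum of M_q must match the stacked signal length")
    return H, p


def matvec(M, v):
    """Plain-Python matrix-vector product."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


A1 = [[1, 2], [3, 4]]    # source 1: M_1 = 2 modes, N = 2 elements
A2 = [[5, 6], [7, 8]]    # source 2: M_2 = 2 modes
s1, s2 = [1, 0], [0, 1]  # illustrative multipath signal vectors at one instant
H, p = augmented_model([A1, A2], [s1, s2])
print("R =", len(p), " H p =", matvec(H, p))
```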

In anticipation of the discussion in Section 13.2, the FIR-MIMO model is also presented here in an alternative mathematical form. By defining $\mathbf{h}_q(k,\ell) \in \mathbb{C}^N$ as the discrete-time multi-channel impulse response function for source q, and Lq as the associated FIR model order determined by Tq, the array snapshots may be represented by the convolutive mixture model in Eqn. (13.28).

(13.28)

It is readily shown that hq(k, ℓ) = [hq1(k, ℓ), …, hqN(k, ℓ)]T is given by Eqn. (13.29). By analogy with the time-continuous FIR channel function hqn(t, τ), the complex scalar hqn(k, ℓ) denotes the impulse response component that links source q to receiver n at time k with relative delay ℓ. This constitutes an FIR-MIMO system with time-varying channel coefficients.

(13.29)

The time-invariant FIR-MIMO model arises for observation intervals that are short enough for the effect of the largest signal Doppler shift over all sources and modes to be negligible. In other words, the condition K|νmq| ≪ 1 needs to be met for all (m, q), such that hq(k, ℓ) → hq(ℓ), given by Eqn. (13.30).

(13.30)
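The time-invariance condition can be checked with a short calculation. The sketch below evaluates K·max|νmq| over all modes and sources. It assumes each νmq is a normalized Doppler shift in cycles per sample, which keeps the product Kνmq dimensionless. The function name and the Doppler values are hypothetical, and how small the result must be in practice depends on the application.

```python
import numpy as np

def doppler_drift_cycles(dopplers, K):
    """K * max|nu| over all modes and sources, with each nu a normalized Doppler
    shift in cycles per sample (assumed). This is the largest number of phase
    cycles any mode accumulates over the K-sample processing interval."""
    nu = np.concatenate([np.ravel(d) for d in dopplers])
    return K * np.max(np.abs(nu))

# Hypothetical scenario: two sources, three modes in total
drift = doppler_drift_cycles([[0.0, 2e-4], [-5e-4]], K=200)
print(f"K*max|nu| = {drift:.3f} cycles ({360 * drift:.0f} degrees of phase rotation)")
```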

13.1.2 Processing Objectives

In the blind signal-processing problem considered, the objective of the processor is to estimate the source input sequences and propagation channel parameters from noisy measurements of the received signals. In general, the sensor array receives signals from a number of different sources where there are multiple propagation paths per source. To maintain simplicity in the first instance, the processing objectives are described for the case of a single source and multiple echoes (modes) in this section. The more general case involving multiple sources will be dealt with in subsequent sections of this chapter.

Depending on the type of system in question, the primary objective of the processor is often to estimate either the source waveform or the channel parameters, whichever is of more interest. However, once either has been estimated through the use of blind signal processing, it is usually straightforward to estimate the other in a subsequent (non-blind) processing step. The signal and channel estimation problems addressed in this chapter are described below. Several practical applications of blind signal and channel estimation not limited to radar are also described. Finally, the main assumptions related to the data model and processing task are summarized to complete the problem formulation.

13.1.2.1 Waveform Estimation

The waveform estimation task is to obtain a clean copy of the signal emitted from the source of interest, where this may be an amplitude-scaled, time-delayed, and possibly Doppler-shifted version of the transmitted baseband modulation envelope. Recovering the source waveform may be regarded as a complementary problem to those of signal detection and source localization. From an array processing perspective, the main aim of spatial filtering is to pass the dominant propagation mode for waveform estimation, and to reject all other interfering multipath signals based on differences in received wavefront structure. According to the signal model of Eqn. (13.18), waveform estimation is tantamount to a multipath separation problem for the single-source case.

Knowledge of the source waveform may be useful for several different reasons depending on the relationship between the signal of interest and system function. Examples of practical systems that can benefit from high-fidelity waveform estimation are mentioned below. Although the significance of the signal to the system is quite different in each case, a common thread is the underlying requirement for an accurate estimate of the source waveform. Fundamental techniques to address this problem therefore have a variety of practical applications, not limited to those described here.

• In communication systems, the primary interest is to extract the information that is encoded in the modulation of the transmitted signal. Minimizing frequency-selective fading caused by multipath at the receiver can reduce signal envelope distortions and significantly improve link performance.

• In passive radar systems, the source waveform is used as a reference signal for matched filtering. The data carried by a signal of opportunity is not of direct interest here, but a clean copy of the source waveform is required to effectively detect and localize echoes from man-made targets.

• In active radar systems, a co-channel source may represent an unwanted signal that can potentially mask useful signals. Knowledge of the source waveform can facilitate the mitigation of such interference, particularly when it is received through the main lobe of the antenna beam pattern.

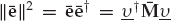

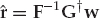

The clairvoyant1 signal-copy weight vector wm ∈ CN that perfectly isolates mode m from all other modes at the spatial processor output is given by the well-known minimum-norm solution in Eqn. (13.31). Recall that A is the multipath mixing matrix defined previously. The vector um ∈ CM has unity in position m and zeros elsewhere, while β is an arbitrary complex scalar that does not affect output signal-to-noise ratio (SNR). The symbol † denotes the Hermitian (conjugate-transpose) operator.

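$$\mathbf{w}_m = \beta\,\mathbf{A}\left(\mathbf{A}^{\dagger}\mathbf{A}\right)^{-1}\mathbf{u}_m \tag{13.31}$$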

From the model in Eqn. (13.18), the clairvoyant signal-copy weights wm yield the output zk in Eqn. (13.32). In that expression, the first term is the desired estimate of the source waveform and the second term is a residual noise contribution. This deterministic “null-steering” spatial filter estimates a copy of the source signal that is free of multipath contamination. Among all the linear combiners that produce a multipath-free estimate, wm maximizes the output SNR in the case of spatially white additive noise.

(13.32)
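The behaviour of the clairvoyant weights in Eqn. (13.31) can be checked numerically. The sketch below assumes the spatial filter output is formed as wm†xk and uses a random complex mixing matrix; `min_norm_weights` is a hypothetical helper. It verifies that wm passes mode m with a gain equal to the complex conjugate of β and nulls the other modes. It also illustrates the minimum-norm property. Adding any component orthogonal to the range of A leaves the multipath-free response unchanged but increases ||w||², which lowers the output SNR in white noise.

```python
import numpy as np

def min_norm_weights(A, m, beta=1.0):
    """w_m = beta * A (A^H A)^{-1} u_m, as in Eqn. (13.31)."""
    u = np.zeros(A.shape[1], dtype=complex)
    u[m] = 1.0
    return beta * A @ np.linalg.solve(A.conj().T @ A, u)

rng = np.random.default_rng(0)
N, M = 8, 3
A = rng.standard_normal((N, M)) + 1j * rng.standard_normal((N, M))
w = min_norm_weights(A, m=0)

# Response to each mode: w^H a_j = conj(beta) for j = m and 0 otherwise.
response = w.conj() @ A
print(np.round(response, 12))

# Another multipath-free combiner: add a component v orthogonal to range(A).
r = rng.standard_normal(N) + 1j * rng.standard_normal(N)
v = r - A @ np.linalg.lstsq(A, r, rcond=None)[0]     # part of r outside range(A)
w_alt = w + v                                        # same mode responses, larger norm
print(np.linalg.norm(w), np.linalg.norm(w_alt))
```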

The optimum filter maximizing the output signal-to-interference-plus-noise ratio (SINR) is given by Eqn. (13.33), where Qm is the statistically expected spatial covariance matrix of all the unwanted signal modes plus noise. The optimum filter is in general different from wm, but tends to the expression in Eqn. (13.31) when the interfering modes are not coherent and much more powerful than the additive noise.

(13.33)
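The limit stated above can be illustrated numerically. The sketch below takes the optimum weights to be proportional to Qm⁻¹am, the standard max-SINR form, which is assumed here. It compares their direction with the minimum-norm weights of Eqn. (13.31) as the interference-to-noise ratio (INR) grows. It assumes mutually uncorrelated (non-coherent) unwanted modes of equal power in spatially white noise. Function names and parameter values are illustrative.

```python
import numpy as np

def min_norm_weights(A, m):
    """Minimum-norm signal-copy weights of Eqn. (13.31) with beta = 1."""
    u = np.zeros(A.shape[1])
    u[m] = 1.0
    return A @ np.linalg.solve(A.conj().T @ A, u)

def max_sinr_weights(A, m, inr, noise_power=1.0):
    """w proportional to Q_m^{-1} a_m, assuming the unwanted modes are mutually
    uncorrelated, each with power inr * noise_power, in spatially white noise."""
    others = np.delete(A, m, axis=1)
    Q = inr * noise_power * others @ others.conj().T + noise_power * np.eye(A.shape[0])
    return np.linalg.solve(Q, A[:, m])

def alignment(w1, w2):
    """|cos| of the angle between two weight vectors (1 means collinear)."""
    return abs(np.vdot(w1, w2)) / (np.linalg.norm(w1) * np.linalg.norm(w2))

rng = np.random.default_rng(0)
A = rng.standard_normal((8, 3)) + 1j * rng.standard_normal((8, 3))
w_mn = min_norm_weights(A, 0)
for inr in (1.0, 1e2, 1e4, 1e6):
    print(f"INR = {inr:8.0e}: alignment = {alignment(max_sinr_weights(A, 0, inr), w_mn):.6f}")
```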

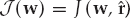

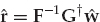

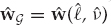

In the spatial processing context, the objective of blind source waveform recovery is to estimate either the weights wm, for a multipath-free output, or the corresponding optimum weights, for an output with minimum mean-square error, depending on which criterion is deemed most desirable. Application of the weight vector to the received array snapshots yields an amplitude-scaled, time-delayed, and possibly Doppler-shifted estimate of the source waveform at the spatial filter output, which fulfils the processing objective.

The key point is that the mixing matrix A = [a1, …, aM] is unknown a priori, and supervised training to estimate Qm is not possible in the considered problem. The model order M and wavefronts am can be estimated under certain conditions, as described in Section 13.2. However, errors in the reconstruction of A due to estimation uncertainty or model mismatch will leave residuals of the interfering modes in the output. These residuals can significantly reduce the SINR, and hence the quality of the waveform estimate.

13.1.2.2 Channel Estimation

The channel impulse response or system function is of more direct interest in certain applications than the source input sequence. In the model of Eqn. (13.20), the channel parameters are:
• the number of modes M;
• the nominal delay and Doppler shift of each mode {τm, fm};
• the mode wavefronts am, m = 1, …, M, from which the nominal mode DOAs ψm may be inferred.

In practice, it is not always possible to determine the absolute (as opposed to relative) values of certain channel parameters. This is due to the ambiguity in attributing absolute values uniquely to the channel or source. In addition to the obvious ambiguity in complex scale αm, the absolute time delays τm and Doppler shifts fm of the channel are unobservable from xk without further information about the source. Hence, the objective of channel parameter estimation is to estimate M and the relative mode complex-scales, time-delays, and Doppler-shifts, in addition to the wavefront structure and nominal DOA of each mode.

Knowledge of the propagation channel parameters can have a number of practical uses. In communication systems, traditional methods for multipath channel equalization require the transmission of training symbols or pilot sequences prior to the data frame. This enables the channel parameters to be estimated so that compensation for multipath can be applied to the information-carrying signals. However, training signals may not be available in some cases, for instance when the source is uncooperative or arises from natural phenomena. This has motivated the development of blind system identification techniques that aim to estimate the channel parameters without a requirement for training sequences. Such techniques will be discussed in Section 13.2.

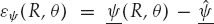

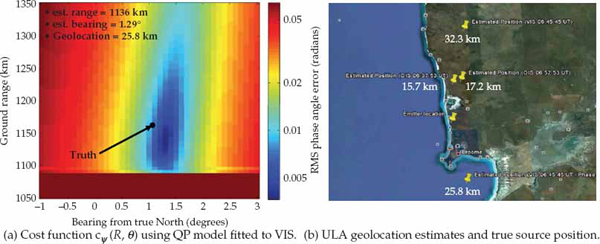

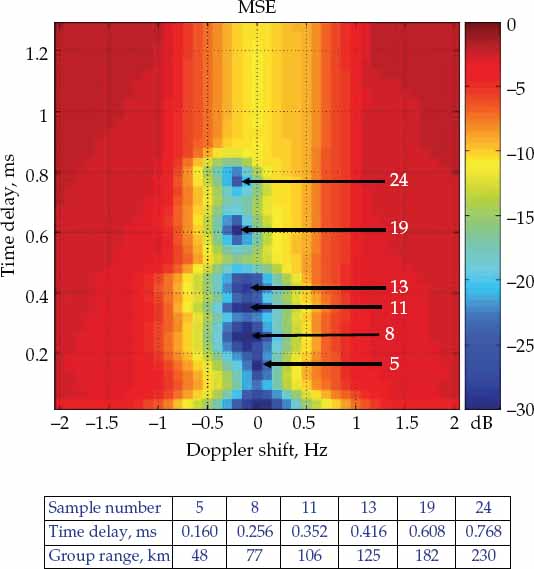

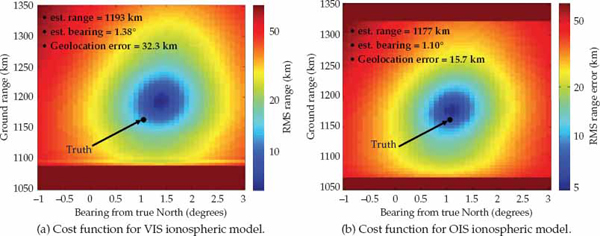

In the HF band, information about the nominal mode DOAs ψm and relative time delays τm may be used in conjunction with an ionospheric model to estimate the position of an uncooperative source. Geolocation of unknown HF sources from a single site is a problem of interest to the HF direction-finding community. The inverse problem of using uncooperative HF sources at known locations as reference points to estimate ionospheric reflection heights, and possibly tilts, is of significant interest for coordinate registration in OTH radar. Although the emphasis in this chapter is to address the blind source waveform recovery problem, blind estimation of the propagation channel parameters will be illustrated for an HF single-site location (SSL) application in Section 13.6.

13.1.2.3 Main Assumptions

The main assumptions related to the model of Eqn. (13.18) are summarized here to complete the problem formulation. With reference to Eqn. (13.18), these assumptions pertain to the source waveform sk, the mixing matrix A, the nominal mode delays τm, and Doppler shifts fm. The generalization of these assumptions for the multiple-source case will be described in Section 13.3.

1. Source Complexity: The narrowband assumption described earlier is a necessary but not sufficient condition as far as the source waveform is concerned. To ensure that the blind signal estimation problem is identifiable, the source waveform must have a nonzero (but finite) bandwidth, so that sk is not, for example, a constant or a sinusoid. Specifically, the input sequence is required to have a linear complexity P > 2L, where L is the maximum FIR model order over all N channels. The linear complexity of a finite-length deterministic sequence {sk} is defined as the smallest integer P for which there exist coefficients {cp}, p = 1, …, P, that satisfy Eqn. (13.34) for all k = 1, …, K.

$$s_k = \sum_{p=1}^{P} c_p\, s_{k-p} \tag{13.34}$$

As virtually all finite bandwidth signals of interest satisfy this linear complexity condition for realistic HF channels, the source waveform may be considered to have a practically arbitrary temporal signature. Importantly, no further information is assumed regarding the deterministic structure or statistical properties of the source waveform, which is therefore not restricted to having any particular modulation format. Clearly, the source is assumed to be uncooperative in the sense that training symbols or pilot signals are not available for estimation purposes.

2. Channel Diversity: The source waveform is assumed to be received as an unknown number M of diffusely scattered dominant modes, where 1 < M < N. In other words, multipath propagation is assumed to exist, but the number of dominant modes is less than the number of receiving elements. In addition, the mode wavefront vectors am ∈ CN for m = 1, …, M are assumed to form a linearly independent set, such that the multipath mixing matrix has full rank. These requirements are expressed in Eqn. (13.35), where the operator R{·} returns the rank of a matrix.

$$1 < M < N, \qquad \mathcal{R}\{\mathbf{A}\} = M \tag{13.35}$$

Apart from satisfying these conditions, which ensure the system is identifiable and that the problem is not ill-posed, no other information is assumed about the mixing matrix. This implies that {a1, …, aM} are otherwise arbitrary vectors, which are not confined to lie on or close to a parametrically defined spatial signature manifold, such as the plane-wave steering vector model. It follows that the sensor array is not restricted to a particular geometry, and that array manifold uncertainties due to nonidentical element gain and phase responses, sensor position errors, and mutual coupling can be tolerated.

3. Sample Support: This assumption relates to the acquisition of sufficient data in the processing interval, such that enough samples exist to determine the number of system unknowns. From Abed-Meraim, Qiu, and Hua (1997), the number of samples K required to ensure identifiability needs to satisfy the condition in Eqn. (13.36), where L is the maximum channel impulse response duration defined previously. More will be said on the subject of identifiability in Section 13.2.

$$K > 3L \tag{13.36}$$

4. Distinct Modes: Besides the quite mild conditions assumed for A, sk, and K above, the number of modes M and the associated delay-Doppler parameters {ℓm, νm}, m = 1, …, M, are also assumed to be unknown. The differences between the mode parameter tuples ρm = [ℓm, νm] are assumed to be distinct, as in Eqn. (13.37). Note that this condition applies to the relatively small number of dominant signal modes and not the diffusely scattered rays within each mode.

$$\boldsymbol{\rho}_i - \boldsymbol{\rho}_j \neq \boldsymbol{\rho}_n - \boldsymbol{\rho}_m \quad \text{for all } (i, j) \neq (n, m),\; i \neq j,\; n \neq m \tag{13.37}$$

Except for certain contrived scenarios, the differential delay and Doppler between two distinct modes (i ≠ j) will in general not be identical to that of another pair of modes (n ≠ m) when attention is restricted to the few dominant modes.
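The four assumptions can be screened numerically for simulated scenarios. The sketch below is one possible implementation, not a prescribed procedure. `linear_complexity` applies the definition in Eqn. (13.34) directly. It fits a linear recursion of increasing order P by least squares over the samples that have a full P-sample history, and stops at the first order whose residual falls below a relative tolerance. `channel_diverse`, `sample_support_ok`, and `modes_distinct` check Eqns. (13.35) to (13.37), the last for (delay, Doppler) tuples. The tolerances and function names are assumptions.

```python
import numpy as np
from itertools import permutations

def linear_complexity(s, tol=1e-8):
    """Smallest P such that s_k = sum_{p=1}^{P} c_p s_{k-p} (Eqn. 13.34) holds,
    to within a relative tolerance, for every sample with a full P-sample history."""
    s = np.asarray(s, dtype=complex)
    K = len(s)
    scale = max(np.linalg.norm(s), np.finfo(float).tiny)
    for P in range(K):
        target = s[P:]                               # s_k, k = P, ..., K-1 (0-based)
        if P == 0:
            resid = np.linalg.norm(target)           # P = 0 only fits the zero sequence
        else:
            # column p-1 holds the lagged samples s_{k-p}
            past = np.column_stack([s[P - p:K - p] for p in range(1, P + 1)])
            c = np.linalg.lstsq(past, target, rcond=None)[0]
            resid = np.linalg.norm(past @ c - target)
        if resid <= tol * scale:
            return P
    return K

def channel_diverse(A):
    """Eqn. (13.35): 1 < M < N and the N x M mixing matrix has full column rank."""
    N, M = A.shape
    return 1 < M < N and np.linalg.matrix_rank(A) == M

def sample_support_ok(K, L):
    """Eqn. (13.36): K > 3L."""
    return K > 3 * L

def modes_distinct(rho, tol=1e-12):
    """Eqn. (13.37): all differences rho_i - rho_j (i != j) of the (delay, Doppler)
    tuples in the (M, 2) array rho are distinct from one another."""
    rho = np.asarray(rho, dtype=float)
    diffs = [rho[i] - rho[j] for i, j in permutations(range(len(rho)), 2)]
    return all(np.max(np.abs(a - b)) > tol
               for idx, a in enumerate(diffs) for b in diffs[idx + 1:])

k = np.arange(30)
print(linear_complexity(np.ones(20)),            # constant
      linear_complexity(np.exp(0.7j * k)),       # complex sinusoid
      linear_complexity(np.cos(0.3 * k)))        # real sinusoid
print(modes_distinct([[0, 0.0], [3, 0.01], [7, -0.02]]),
      modes_distinct([[0, 0.0], [2, 0.0], [4, 0.0]]))  # equal delay spacing fails
```

Consistent with the text, constants and sinusoids have a very small linear complexity and therefore fail P > 2L for any L ≥ 1.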

13.1.3 Motivating Example

Diffusely scattered signals are present in a variety of fields. In wireless communications, they are produced by “local scattering” in the vicinity of mobile transmitters, particularly where there is no line-of-sight between the transmitter and receiving base station; see Zetterberg and Ottersten (1995); Pedersen, Mogensen, Fleury, Frederiksen, Olesen, and Larsen (1997); Adachi, Feeney, Williamson, and Parsons (1986); and Ertel, Cardieri, Sowerby, Rappaport, and Reed (1998) for example.

In sonar, large hydrophone arrays are used to localize spatially distributed acoustic sources that have distorted wavefronts due to propagation in the heterogeneous underwater channel (Owsley 1985). Distributed signals with non-stationary wavefront amplitude and phase “aberrations” are also observed in ultrasonics due to irregular propagation through tissue, where wavefronts received over different paths are noticed to experience different distortions; see Liu and Waag (1995, 1998) and Flax and O’Donnell (1988). A similar phenomenon is encountered in radio astronomy due to “scintillation” of signals as they pass through nonuniform plasma profiles (Yen 1985). Blind spatial processing techniques based on distributed multipath signal models may, therefore, find uses in diverse applications.

Before proceeding to a brief review of existing techniques for BSI and BSS, a simple example is used to illustrate how wavefront distortions may help to separate signal modes with closely spaced nominal DOAs. This is a common problem for three reasons: practical sensor arrays have limited spatial resolution, multipath components are often received from very similar directions, and super-resolution techniques for DOA estimation are highly sensitive to model mismatch. A notorious example in microwave radar is the low-elevation multipath encountered over seawater between an airborne source and the receiving antenna array on a surface vessel.

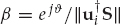

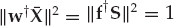

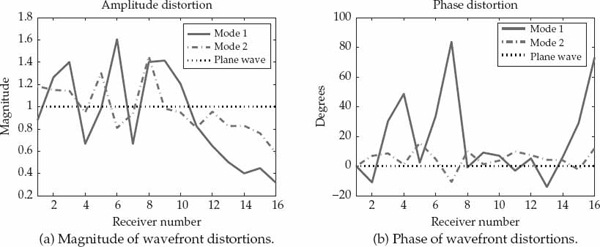

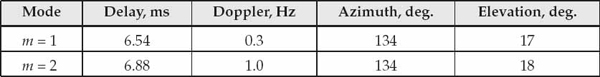

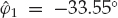

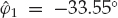

The motivating example described below is relevant to HF radar and is directly related to a real-world scenario for which recorded data are processed in Section 13.4. Suppose there are M = 2 signal modes, and the goal is to pass mode m = 1 and perfectly cancel mode m = 2. The clairvoyant minimum-norm signal-copy weight vector that accomplishes this task is given by w1 = βA(A†A)−1u1. Here, A = [v1, v2] may be defined in terms of the spatial signatures vm instead of the mode wavefronts am without loss of generality.

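The SNR gain in white noise is defined as the SNR of mode 1 at the linear combiner output, SNRo, relative to that in the reference (first) receiver of the array, SNRi. It is given by SNRg = SNRo/SNRi = |β|²/||w1||². The squared L2-norm ||w1||² may be evaluated by substituting A = [v1, v2] and u1 = [1, 0]T into Eqn. (13.38). Note that the determinant of A†A is given by ∇ = ad − bc, where a = ||v1||², b = v1†v2, c = v2†v1, and d = ||v2||².

$$\|\mathbf{w}_1\|^2 = |\beta|^2\,\mathbf{u}_1^{\dagger}\left(\mathbf{A}^{\dagger}\mathbf{A}\right)^{-1}\mathbf{u}_1 = \frac{|\beta|^2}{\nabla}\,\mathbf{u}_1^{\dagger}\begin{bmatrix} d & -b \\ -c & a \end{bmatrix}\mathbf{u}_1 = \frac{|\beta|^2\, d}{\nabla} \tag{13.38}$$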

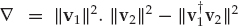

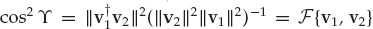

From Eqn. (13.38), SNRg = ∇/||v2||². Evaluating the determinant as ∇ = ||v1||²||v2||² − |v1†v2|², it is straightforward to show that SNRg is given by Eqn. (13.39). The maximum SNR gain with which mode 1 can be estimated with no contamination from mode 2 in white noise depends on the magnitude-squared coherence (MSC) between the spatial signatures, cos²ϒ = |v1†v2|²/(||v1||²||v2||²), where ϒ is the angle between v1 and v2 in N-dimensional space. The highest SNR gain subject to the constraint of no multipath interference at the output is ||v1||². This is precisely the matched filter gain, attainable only when the spatial signatures are orthogonal (v1†v2 = 0), such that cos²ϒ = 0. For all other cases, the SNR gain is smaller and tends to zero as the spatial signatures align, i.e., as cos²ϒ → 1.

$$\mathrm{SNR}_g = \|\mathbf{v}_1\|^2\left(1 - \cos^2\Upsilon\right) \tag{13.39}$$
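The closed form in Eqn. (13.39) can be cross-checked against a direct evaluation of |β|²/||w1||² using the minimum-norm weights. In this sketch the spatial signatures are arbitrary complex vectors chosen for illustration, and the helper names are hypothetical. The second call confirms that the value of β cancels.

```python
import numpy as np

def snr_gain_min_norm(v1, v2, beta=1.0):
    """|beta|^2 / ||w1||^2 with w1 = beta A (A^H A)^{-1} u1 and A = [v1, v2]."""
    A = np.column_stack([v1, v2])
    w1 = beta * A @ np.linalg.solve(A.conj().T @ A, np.array([1.0, 0.0]))
    return abs(beta) ** 2 / np.vdot(w1, w1).real

def snr_gain_closed_form(v1, v2):
    """||v1||^2 (1 - cos^2 Upsilon), with cos^2 Upsilon the MSC of v1 and v2."""
    n1, n2 = np.vdot(v1, v1).real, np.vdot(v2, v2).real
    msc = abs(np.vdot(v1, v2)) ** 2 / (n1 * n2)      # np.vdot conjugates its first argument
    return n1 * (1.0 - msc)

rng = np.random.default_rng(0)
v1 = rng.standard_normal(8) + 1j * rng.standard_normal(8)
v2 = rng.standard_normal(8) + 1j * rng.standard_normal(8)
print(snr_gain_min_norm(v1, v2), snr_gain_closed_form(v1, v2))
print(snr_gain_min_norm(v1, v2, beta=2 - 1j))        # independent of beta
```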

SNRIF in Eqn. (13.40) denotes the improvement in SNR gain due to the presence of “crinkled wavefronts.” The improvement is measured relative to the hypothetical case of specular reflection, which gives rise to plane waves with the same DOAs (nominal DOAs for diffuse scattering). It is simply the ratio of the SNR gains in Eqn. (13.39) for the two cases.

Here, cos²Φ = F{v(ψ1), v(ψ2)} is the MSC for plane waves at the nominal mode DOAs. Similarly, cos²ϒ is the MSC for the mode spatial signatures vm = v(ψm) ⊙ dm, with multiplicative distortions {dm}m=1,2, where ⊙ denotes the element-wise product.

When the nominal mode DOAs are closely spaced (ψ1 → ψ2), we have that cos²Φ → 1, and hence the denominator Δ = (1 − cos²Φ) → 0. As ψ1 → ψ2, cos²ϒ → cos²Θ, where cos²Θ = F{d1, d2} is the MSC of the multiplicative distortion vectors. Hence, SNRIF → sin²Θ/Δ, where Δ → 0 as ψ1 → ψ2. Large improvements in output SNR can result provided that the distortions {dm}m=1,2 are sufficiently different, so that sin²Θ ≫ Δ.

$$\mathrm{SNR}_{IF} = \frac{1 - \cos^2\Upsilon}{1 - \cos^2\Phi} = \frac{\sin^2\Upsilon}{\Delta} \tag{13.40}$$

Thus, when the mode nominal DOAs are closely spaced, different distortions caused by independent diffuse scattering processes can be exploited to estimate the waveform with no multipath contamination at higher output SNR.2 This situation is particularly relevant for an HF source located close to boresight of a linear array, where ionospheric modes reflected from different layers share similar nominal cone angles, but are likely to exhibit different wavefront distortions due to the independent diffuse scattering processes.
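The sketch below evaluates Eqn. (13.40) for two closely spaced modes. For the plane-wave signatures v(ψ), it assumes a uniform linear array with half-wavelength spacing and angles measured from broadside. The distortions dm are hypothetical phase-only vectors with independent Gaussian phases. The unit-modulus choice keeps ||vm|| equal to ||v(ψm)||, so the ratio of MSC terms equals the ratio of SNR gains. With no distortion (dm = 1), the improvement factor is exactly one.

```python
import numpy as np

def msc(x, y):
    """Magnitude-squared coherence |x^H y|^2 / (||x||^2 ||y||^2)."""
    return abs(np.vdot(x, y)) ** 2 / (np.vdot(x, x).real * np.vdot(y, y).real)

def ula_steering(psi_deg, N):
    """Assumed plane-wave model: uniform linear array, half-wavelength spacing,
    psi measured from broadside."""
    return np.exp(1j * np.pi * np.arange(N) * np.sin(np.deg2rad(psi_deg)))

def snr_improvement(psi1, psi2, d1, d2):
    """(1 - cos^2 Upsilon) / (1 - cos^2 Phi) for v_m = v(psi_m) * d_m (element-wise)."""
    p1, p2 = ula_steering(psi1, len(d1)), ula_steering(psi2, len(d1))
    return (1 - msc(p1 * d1, p2 * d2)) / (1 - msc(p1, p2))

rng = np.random.default_rng(0)
N = 16
d1 = np.exp(1j * rng.normal(0.0, 1.0, N))   # hypothetical phase-only distortions
d2 = np.exp(1j * rng.normal(0.0, 1.0, N))
for sep in (2.0, 0.5, 0.1):
    print(f"separation {sep:4.1f} deg: SNR_IF = {snr_improvement(0.0, sep, d1, d2):8.1f}")
```

As the nominal separation shrinks, Δ falls rapidly while sin²ϒ stays roughly fixed, so the improvement factor grows, in line with the limiting argument above.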

This illustrative example motivates the use of manifold-free procedures that can take advantage of wavefront distortions for separating signal modes with closely spaced nominal DOAs. Moreover, when distortions are actually present, a processor relying on the plane-wave model would also suffer from model mismatch. In practice, therefore, the SINR improvement of manifold-free procedures may be even higher than that predicted by Eqn. (13.40).

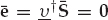

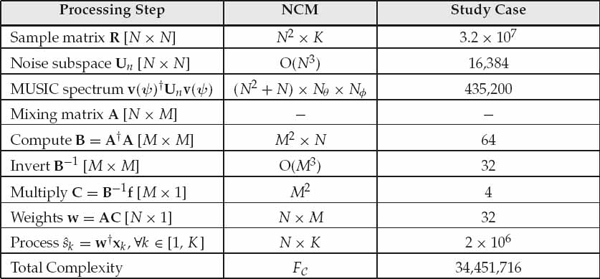

13.2 Standard Techniques

Standard techniques relevant to the formulated problem fall under two main classes, namely, multi-channel blind system identification (BSI) and blind signal separation (BSS). The former typically assumes the presence of a single source and models the propagation channel linking the source to each receiving element of the array by a different finite impulse response (FIR) function. In the standard multi-channel BSI problem, the source input sequence and FIR system function are both assumed to be unknown. At times, the main intent is to estimate the system function, as opposed to the input sequence, which may be viewed merely as a probing signal. However, the problem can be easily recast to estimate the input sequence directly. In any case, multi-channel BSI techniques often involve the joint processing of a block of space-time data to estimate the unknown parameters.

As its acronym suggests, BSS techniques assume the presence of multiple sources, where the emitted signals cannot be separated by simple operations, such as bandpass filtering. In the array processing context, many BSS techniques assume an instantaneous mixture model, wherein the signals propagate directly from source to receiver without multipath reflections. This is called an instantaneous multiple-input multiple-output (I-MIMO) system. Such a system is appropriate for applications that involve line-of-sight propagation, for example. Alternative BSS techniques address the problem of convolutive signal mixtures, where the presence of multipath gives rise to an FIR-MIMO system. In any case, the aim of multi-channel BSS techniques is to separate and estimate the different source signals from the received array data. This is commonly achieved by spatial-only processing, although space-time processing may be used.

The first purpose of this section is to provide background and reference material on the subjects of multi-channel BSI and BSS. This includes a description of the data models often adopted in standard BSI and BSS approaches, with both the I-MIMO and FIR-MIMO systems considered in the latter case. The second purpose is to provide an overview of the underlying assumptions upon which many existing BSI and BSS techniques are based. This is undertaken with a view to determining whether such techniques are applicable to the previously formulated problem. A discussion at the end of this section motivates the development of a new blind waveform estimation technique, referred to as Generalized Estimation of Multipath Signals (GEMS).

13.2.1 Blind System Identification

Traditional approaches for multipath equalization require the scheduling of training data sequences on transmit, which can consume a significant share of channel capacity and system resources in a time-varying environment (Paulraj and Papadias 1997). Moreover, training data is obviously unavailable when the signal of interest is transmitted by an uncooperative source. These factors have led to the development of BSI techniques, also known as blind channel equalization or blind deconvolution. A detailed description of BSI techniques is beyond the scope of this text, but comprehensive treatments can be found in the excellent review articles of Abed-Meraim et al. (1997) and Tong and Perreau (1998), as well as the text of Haykin (1994), for example.

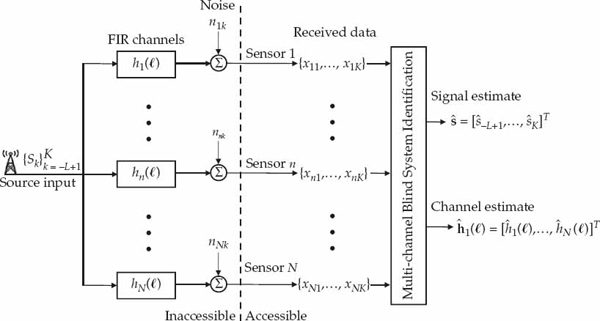

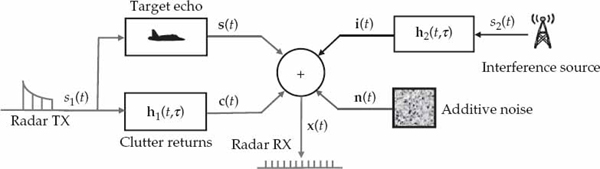

Figure 13.4 shows a discrete-time representation of the standard multi-channel BSI problem. The source produces a scalar input sequence sk, which is received at sensors n = 1, …, N after propagation through a linear channel with an impulse response function hn(ℓ). Multi-channel BSI is traditionally based on a time-invariant FIR model of each channel hn(ℓ) with support over a delay interval ℓ = 0, …, L. Recall that L is defined as the maximum FIR model order for the N-channel system. According to this model, the complex data sample xnk received by sensor n at time k is given by Eqn. (13.41), where nnk is additive noise independent of the signal.

FIGURE 13.4 Representation of the standard multi-channel BSI architecture as a time-invariant FIR-SIMO system. The FIR model order is L, the number of sensors is N, and the number of data samples received by each sensor is K. The source input sequence, channel impulse responses, and additive noise processes are assumed to be inaccessible. The objective of the BSI processor is to jointly identify the source signal and channel coefficients from the observed space-time data to within a complex scale ambiguity. © Commonwealth of Australia 2011.

$$x_{nk} = \sum_{\ell=0}^{L} h_n(\ell)\, s_{k-\ell} + n_{nk} \tag{13.41}$$

The source input sequence sk and multi-channel system function h(ℓ) = [h1(ℓ), …, hN(ℓ)]T are assumed to be inaccessible. Only the time series yn = [xn1, …, xnK]T, n = 1, …, N, received by the sensors of the array are deemed to be observable. Each vector yn ∈ CK may be expressed in the form of Eqn. (13.42).

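$$\mathbf{y}_n = \mathbf{H}_n\,\mathbf{s} + \boldsymbol{\varepsilon}_n \tag{13.42}$$

In Eqn. (13.42), Hn ∈ CK×(K+L) is the Sylvester matrix containing the impulse response of channel n, as defined in Eqn. (13.43). For K output samples in yn, it follows that s = [s−L+1, …, sK]T is the (K + L)-dimensional vector of input samples extended by the maximum FIR model order L, and εn = [nn1, …, nnK]T is the K-dimensional vector of additive noise.

$$\mathbf{H}_n = \begin{bmatrix} h_n(L) & h_n(L-1) & \cdots & h_n(0) & 0 & \cdots & 0 \\ 0 & h_n(L) & h_n(L-1) & \cdots & h_n(0) & \ddots & \vdots \\ \vdots & \ddots & \ddots & \ddots & & \ddots & 0 \\ 0 & \cdots & 0 & h_n(L) & h_n(L-1) & \cdots & h_n(0) \end{bmatrix} \tag{13.43}$$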

Alternatively, the data vector yn may be written in the form of Eqn. (13.44), where S ∈ CK×(L+1) is the Toeplitz matrix of the input sequence extended by the maximum FIR model order L, and hn = [hn(0), …, hn(L)]T is the (L + 1)-dimensional vector of the impulse response coefficients of channel n.

$$\mathbf{y}_n = \mathbf{S}\,\mathbf{h}_n + \boldsymbol{\varepsilon}_n \tag{13.44}$$

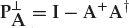

To be clear, S ∈ CK×(L+1) is defined in Eqn. (13.45). This representation is relevant to supervised channel estimation using a known input sequence. In this case, S is known during the training interval, and R{S} = L + 1 by design. The channel coefficients may be estimated from the received data as ĥn = S+yn = hn + S+εn, where S+ = (S†S)−1S†.

$$\mathbf{S} = \begin{bmatrix} s_1 & s_0 & \cdots & s_{1-L} \\ s_2 & s_1 & \cdots & s_{2-L} \\ \vdots & \vdots & \ddots & \vdots \\ s_K & s_{K-1} & \cdots & s_{K-L} \end{bmatrix} \tag{13.45}$$
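Eqns. (13.42) to (13.45) can be sketched in NumPy. The code below builds the Sylvester matrix Hn and the Toeplitz input matrix S, and confirms that Hns = Shn for s = [s−L+1, …, sK]T. It then forms the supervised least-squares estimate S+yn from a known training sequence. The QPSK training symbols, noise level, and helper names are assumptions for illustration.

```python
import numpy as np

def sylvester_matrix(h, K):
    """K x (K+L) matrix H_n of Eqn. (13.43) for h = [h(0), ..., h(L)]; row k
    holds h(L), ..., h(0) from column k on, so (H_n s)_k = sum_l h(l) s_{k-l}
    when s = [s_{-L+1}, ..., s_K]."""
    L = len(h) - 1
    Hn = np.zeros((K, K + L), dtype=complex)
    for k in range(K):
        Hn[k, k:k + L + 1] = h[::-1]
    return Hn

def toeplitz_input(s_ext, L):
    """K x (L+1) matrix S of Eqn. (13.45): row k is [s_k, s_{k-1}, ..., s_{k-L}]."""
    lags = np.arange(L + 1)
    return np.array([s_ext[k + L - lags] for k in range(len(s_ext) - L)])

rng = np.random.default_rng(0)
K, L = 50, 3
s_ext = rng.choice([1, -1, 1j, -1j], size=K + L)          # known training symbols (QPSK)
h_n = rng.standard_normal(L + 1) + 1j * rng.standard_normal(L + 1)

Hn, S = sylvester_matrix(h_n, K), toeplitz_input(s_ext, L)
print("H_n s == S h_n:", np.allclose(Hn @ s_ext, S @ h_n))   # Eqns. (13.42), (13.44)

noise = 0.05 * (rng.standard_normal(K) + 1j * rng.standard_normal(K))
y_n = S @ h_n + noise
h_hat = np.linalg.pinv(S) @ y_n                           # S^+ y_n, S^+ = (S^H S)^{-1} S^H
print(np.round(np.abs(h_hat - h_n), 3))
```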

The total data acquired by the time-invariant FIR-SIMO system over the processing interval may be written as Eqn. (13.46). Here, y = [y1T, …, yNT]T is the NK-dimensional stacked vector of space-time samples, H ∈ CNK×(K+L) is the generalized Sylvester matrix formed by stacking {H1, …, HN}, and ε ∈ CNK is the stacked vector of additive noise, constructed similarly to y.

$$\mathbf{y} = \mathbf{H}\,\mathbf{s} + \boldsymbol{\varepsilon} \tag{13.46}$$

Using Eqn. (13.44), it is also possible to write y in the form of Eqn. (13.47). Here, h = [h1T, …, hNT]T is the stacked vector of the N channel impulse response functions. The NK × N(L + 1) matrix SN = diag{S, …, S} is formed as N diagonal blocks, each containing the Toeplitz matrix of the input sequence S defined in Eqn. (13.45).

$$\mathbf{y} = \mathbf{S}_N\,\mathbf{h} + \boldsymbol{\varepsilon} \tag{13.47}$$

When training data is available to estimate the channel coefficients (e.g., as ĥn = S+yn for each channel), linear space-time equalization may be applied to estimate the input sequence from the received data as in Eqn. (13.48). Here, Ĥ denotes the generalized Sylvester matrix formed from the estimated coefficients. This assumes NK > (K + L) and that H has full column rank (K + L). However, when the channel and source are both unknown, the variables H and s need to be estimated jointly from the observations y (i.e., blindly).

$$\hat{\mathbf{s}} = \hat{\mathbf{H}}^{+}\mathbf{y} = \left(\hat{\mathbf{H}}^{\dagger}\hat{\mathbf{H}}\right)^{-1}\hat{\mathbf{H}}^{\dagger}\mathbf{y} \tag{13.48}$$
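Stacking the per-channel matrices gives the quantities in Eqns. (13.46) to (13.48). For simplicity, the sketch below assumes the channel coefficients are known exactly, i.e., perfect supervised estimation. It checks that Hs = SNh, confirms that H has full column rank K + L, and applies the zero-forcing estimate ŝ = H+y. Sizes, noise level, and helper names are illustrative assumptions.

```python
import numpy as np

def sylvester_matrix(h, K):
    """K x (K+L) Sylvester matrix of Eqn. (13.43) for h = [h(0), ..., h(L)]."""
    L = len(h) - 1
    Hn = np.zeros((K, K + L), dtype=complex)
    for k in range(K):
        Hn[k, k:k + L + 1] = h[::-1]
    return Hn

def toeplitz_input(s_ext, L):
    """K x (L+1) Toeplitz matrix of Eqn. (13.45); row k is [s_k, ..., s_{k-L}]."""
    lags = np.arange(L + 1)
    return np.array([s_ext[k + L - lags] for k in range(len(s_ext) - L)])

rng = np.random.default_rng(1)
N, K, L = 4, 40, 2
h = [rng.standard_normal(L + 1) + 1j * rng.standard_normal(L + 1) for _ in range(N)]
s = rng.choice([1, -1, 1j, -1j], size=K + L)             # s = [s_{-L+1}, ..., s_K]

H = np.vstack([sylvester_matrix(hn, K) for hn in h])     # NK x (K+L), Eqn. (13.46)
S_N = np.kron(np.eye(N), toeplitz_input(s, L))           # diag{S, ..., S}, Eqn. (13.47)
h_stacked = np.concatenate(h)                            # [h_1^T, ..., h_N^T]^T
print("H s == S_N h:", np.allclose(H @ s, S_N @ h_stacked))
print("rank(H) =", np.linalg.matrix_rank(H), "of K + L =", K + L)

# Zero-forcing space-time equalization, Eqn. (13.48), with the channel assumed known
H_pinv = np.linalg.pinv(H)
eps = 0.1 * (rng.standard_normal(N * K) + 1j * rng.standard_normal(N * K)) / np.sqrt(2)
s_hat_clean = H_pinv @ (H @ s)
s_hat = H_pinv @ (H @ s + eps)
print("max |s_hat - s| with noise:", np.max(np.abs(s_hat - s)).round(3))
```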

For additive white Gaussian noise, applying the maximum-likelihood (ML) criterion to jointly estimate the system matrix H and input sequence s, when neither is known, requires solving Eqn. (13.49). This is a nonlinear optimization problem, in which the minimization over H is restricted to matrices with the generalized Sylvester structure of Eqn. (13.46). An elegant two-step ML method for calculating (Ĥ, ŝ)ML is given in Hua (1996). If the FIR-SIMO system is time-varying, because of Doppler shifts for example, frequent updates of this procedure are needed to counter channel variations.

$$(\hat{\mathbf{H}}, \hat{\mathbf{s}})_{ML} = \arg\min_{\mathbf{H},\,\mathbf{s}} \left\|\mathbf{y} - \mathbf{H}\mathbf{s}\right\|^2 \tag{13.49}$$

An attractive feature of the BSI approach is that the source input sequence and polynomial channel coefficients can be estimated under relatively general identifiability conditions. The FIR-SIMO system described by Eqn. (13.46) is considered identifiable when, in the absence of noise, a given output y implies a unique solution for the system matrix H and the input sequence s, up to an unknown complex scalar. In accordance with Hua and Wax (1996), the following conditions are sufficient for identifiability of a time-invariant FIR-SIMO system of known model order L:

• The N polynomial sub-channels do not share a common zero. This condition reflects the need for coprime FIR sub-channels (i.e., sufficient channel diversity).

• The input sequence has linear complexity P > 2L. This rules out, for example, a constant or sinusoidal input (i.e., sufficient signal complexity).

• The total number of samples K > 3L. This reflects the need for enough data to determine all of the system unknowns (i.e., sufficient sample support).
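The three sufficient conditions can be checked numerically when the channel taps and input sequence are available, as in a simulation. The sketch below tests for a common zero by evaluating every sub-channel polynomial at the zeros of the first one. It uses the rank of a sliding-window data matrix as a sufficient test for linear complexity P > 2L. The function names and tolerances are illustrative choices, not part of the cited formulation.

```python
import numpy as np


def have_common_zero(h: np.ndarray, tol: float = 1e-6) -> bool:
    """True if all sub-channel polynomials (rows of h, shape (N, L+1)) share a zero.

    Row n holds h_n(0), ..., h_n(L). Multiplying H_n(z) = sum_l h_n(l) z^-l by z^L
    gives a polynomial whose coefficients, in descending powers of z, are exactly
    that row, which is the convention used by np.roots and np.polyval.
    """
    for z0 in np.roots(h[0]):
        if all(abs(np.polyval(row, z0)) <= tol * np.linalg.norm(row) for row in h[1:]):
            return True
    return False


def complexity_exceeds(s: np.ndarray, m: int, rtol: float = 1e-8) -> bool:
    """Sufficient test that the linear complexity of s exceeds m.

    A recurrence of order P <= m would impose one fixed linear relation on every
    window [s(k), ..., s(k-m)], so the window matrix would lose column rank.
    Full column rank therefore implies P > m.
    """
    lags = np.arange(m + 1)
    W = np.array([s[k - lags] for k in range(m, len(s))])
    if W.shape[0] < m + 1:
        return False                       # too few samples to decide
    sv = np.linalg.svd(W, compute_uv=False)
    return bool(sv[-1] > rtol * sv[0])


def bsi_conditions(h: np.ndarray, s: np.ndarray, K: int) -> dict:
    """Check the three sufficient conditions for a time-invariant FIR-SIMO model."""
    L = h.shape[1] - 1
    return {
        "no_common_zero": not have_common_zero(h),
        "complexity_gt_2L": complexity_exceeds(s, 2 * L),
        "K_gt_3L": K > 3 * L,
    }


rng = np.random.default_rng(4)
N, L, K = 3, 2, 60
h = rng.standard_normal((N, L + 1)) + 1j * rng.standard_normal((N, L + 1))
s_rand = rng.choice([-1.0, 1.0], size=K + L)
good = bsi_conditions(h, s_rand, K)
print(good)

# Counter-examples: a zero at z = 0.5 shared by all sub-channels, and a complex sinusoid (P = 1)
g = rng.standard_normal((N, L)) + 1j * rng.standard_normal((N, L))
h_bad = np.array([np.convolve(row, [1.0, -0.5]) for row in g])
s_sin = np.exp(1j * 0.3 * np.arange(K + L))
bad = bsi_conditions(h_bad, s_sin, K)
print(bad)
```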

However, standard BSI approaches also have some known limitations. For example, the FIR model order is often unknown in practice, and its estimation is a challenging problem (Abed-Meraim et al. 1997). BSI performance can be sensitive to poor estimation of L. Moreover, sparse channels that are highly time-dispersive may require large values of L for equalization, despite the possible presence of only a few dominant propagation modes, i.e., L ≫ M. This leads to multi-channel equalizers of large dimension N(L + 1), which increases the demands on finite sample support, not to mention computational load.

In addition, large Doppler shifts may necessitate the use of very short data frames to satisfy the time-invariant channel assumption. This restricts the observation interval that can be coherently processed (Hua 1996). The requirement for fast updates may also reduce sample support and increase computational load. These factors can limit the practical performance of standard BSI approaches. This motivates the search for alternative methods that are less prone to these drawbacks, yet strive to retain the general identifiability conditions of the BSI problem formulation.
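To illustrate why Doppler shifts force short data frames, the sketch below simulates two hypothetical modes with different delays, wavefronts, and normalized Doppler shifts. It then fits the best time-invariant FIR model, with the input known, over windows of increasing length. The mode parameters are assumptions chosen for illustration. The point is only that the relative fit residual grows with the window length.

```python
import numpy as np


def fit_residual(x: np.ndarray, s: np.ndarray, L: int) -> float:
    """Relative residual of the best time-invariant FIR fit x_k ~ sum_l h(l) s[k + L - l].

    x has shape (N, K); s holds the known input, with at least K + L samples.
    """
    K = x.shape[1]
    lags = np.arange(L + 1)
    S = np.array([s[k + L - lags] for k in range(K)])        # K x (L+1) input matrix
    h_hat, *_ = np.linalg.lstsq(S, x.T, rcond=None)          # (L+1) x N taps, one set per sensor
    return float(np.linalg.norm(x.T - S @ h_hat) / np.linalg.norm(x))


rng = np.random.default_rng(1)
N, L, K_max = 4, 3, 2000
s = (rng.standard_normal(K_max + L) + 1j * rng.standard_normal(K_max + L)) / np.sqrt(2)

# Two hypothetical modes: delay (samples), normalized Doppler (cycles/sample), wavefront
delays = [0, 2]
dopplers = [0.0005, -0.001]
wavefronts = rng.standard_normal((2, N)) + 1j * rng.standard_normal((2, N))

k = np.arange(K_max)
x = np.zeros((N, K_max), dtype=complex)
for d, f, a in zip(delays, dopplers, wavefronts):
    # Each mode: delayed input, rotated by its own Doppler phase, spread over the array
    x += np.outer(a, np.exp(2j * np.pi * f * k) * s[k + L - d])

residuals = {K: fit_residual(x[:, :K], s, L) for K in (20, 200, 2000)}
for K, r in residuals.items():
    print(f"K = {K:5d}: relative residual = {r:.3f}")
```

Over a short window, each Doppler phase rotation is nearly constant and is absorbed into the fixed taps. Once a mode completes a full cycle, no time-invariant tap can represent it.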

It is evident from Figure 13.4 that the spatial snapshot data vector xk = [x1k, …, xNk]T is given by Eqn. (13.50), where h(ℓ) = [h1(ℓ), …, hN(ℓ)]T. The standard BSI model may be reconciled with the CD data model in Eqn. (13.20) by substituting the impulse response function model of Eqn. (13.22) for h(ℓ) in Eqn. (13.50). That model was derived for the case of a narrowband source, local scattering, and M dominant modes received by a coherent sensor array, where the data record is short enough for the mode Doppler shifts to be neglected.

(13.50)

Two comparative remarks are made. First, the multi-channel impulse response function h(ℓ) is assumed to be time-invariant in the standard BSI model, whereas Doppler shifts give rise to a time-varying impulse response function h(k, ℓ). Importantly, standard BSI approaches are not designed to handle a time-varying impulse response function during the observation interval. Recall that the CD model was extended to include the effect of mode Doppler shifts, which may be significant in the problem considered.

Second, standard BSI approaches are not designed for the case of multiple sources, where it is required to estimate the waveform of each source. On the other hand, the FIR model of h(ℓ) in the BSI problem is general in the sense that it is also applicable to broadband signals, extended scattering, and receiving arrays with widely spaced sensors. In other words, the FIR structure of h(ℓ) in Figure 13.4 is not restricted to the form of Eqn. (13.22).

13.2.2 Blind Signal Separation

Multi-channel BSS methods are applied to separate and recover the waveforms of multiple sources received by a sensor array, where the different signals cannot be discriminated readily in time or frequency. In the spatial processing context, the vast majority of BSS techniques, also referred to as “unsupervised” or “self-recovering” methods, fall into two main categories: (1) those which make certain assumptions regarding the propagation channel and sensor array in order to characterize the spatial signatures of the signals to be estimated, i.e., manifold-based methods, and (2) those which are not based on a manifold model but instead utilize a priori information regarding some known deterministic or statistical properties of the waveforms to be separated, i.e., source-based methods. In-depth treatments of manifold- and source-based BSS methods can be found in Van Der Veen (1998) and Cardoso (1998), respectively.

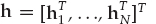

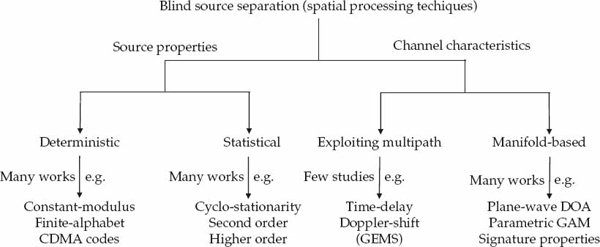

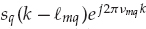

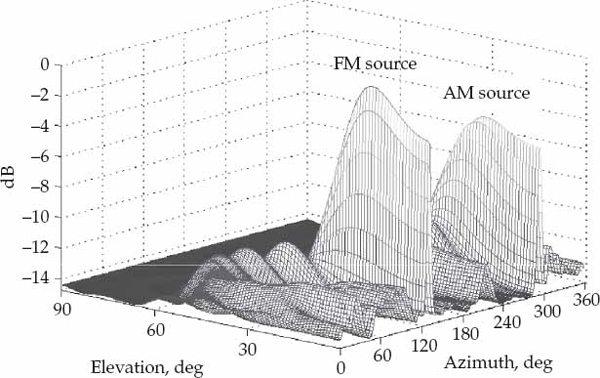

BSS using spatial processing is a topic that has received enormous attention in the literature. Figure 13.5 shows a top-level breakdown of BSS techniques, including source-based methods, manifold-based methods, and the alternative of exploiting multipath as the enabling physical mechanism. A brief overview of the many existing works on manifold- and source-based BSS methods is provided in this section. By comparison, relatively few works in the open literature have directly exploited multipath as the mechanism for enabling blind source separation.

FIGURE 13.5 Taxonomy of standard BSS approaches in terms of underlying assumptions used as the basis for separation. The lack of information about the source signals has in many works been remedied by assuming partial knowledge about the propagation channel and sensor array, such that parametric spatial signature models may be used for separation. On the other hand, alternative approaches assume that certain deterministic or statistical properties are known about the source waveforms in order to compensate for the lack of knowledge regarding the propagation channel and sensor array characteristics. In the spatial processing context, relatively few works have directly exploited multipath as the physical mechanism to enable BSS. The GEMS algorithm is based on the concept that this alternative approach may be used to relax the assumptions required for the source waveforms and array manifold, jointly, rather than separately. © Commonwealth of Australia 2011.

Importantly, it will be shown that this approach allows certain assumptions regarding both the source and manifold to be relaxed. The GEMS algorithm exploits multipath for signal separation under relatively mild assumptions regarding both the mode wavefront and source waveform properties. It is precisely the mildness of these assumptions that makes the GEMS approach noteworthy and robust.

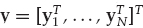

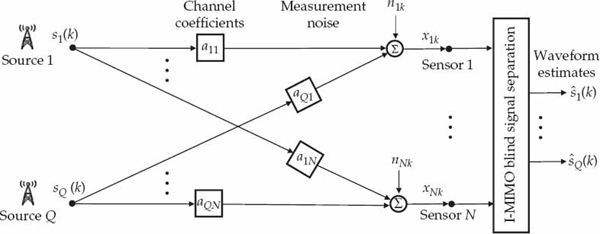

The I-MIMO system is schematically depicted in Figure 13.6, where source q is linked to channel n by a complex scalar transfer coefficient aqn. The received array snapshot xk = [x1k, …, xNk]T can be represented in the standard form of Eqn. (13.51) where the N × Q instantaneous source mixing matrix A = [a1, …, aQ] contains the Q channel vectors, denoted by aq = [aq1, …, aqN]T for q = 1, …, Q, while the Q-dimensional source signal vector s(k) = [s1(k), …, sQ(k)]T contains the different input sequences.

(13.51)

FIGURE 13.6 Illustration of the instantaneous multiple-input multiple-output (I-MIMO) system model. The source input sequences, complex-scalar channel transfer coefficients, and additive noise processes are inaccessible. The objective of the BSS processor is to jointly estimate all of the source waveforms to within an unknown complex scale by spatially weighting and combining the received array data. © Commonwealth of Australia 2011.

The source signal vector s(k) should not be confused with the multipath signal vector sk in Eqn. (13.18). The instantaneous source mixing matrix A also has a different physical interpretation from the multipath mixing matrix A in Eqn. (13.18). However, Eqn. (13.51) has the same mathematical form as Eqn. (13.18) in the special case where the Q sources are assumed to emit signals that are time-delayed and Doppler-shifted versions of a common input sequence, as in Eqn. (13.52). From the BSS viewpoint, there is clearly no distinction between Eqns. (13.18) and (13.51) in this special case.

(13.52)

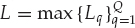

The FIR-MIMO system model illustrated in Figure 13.7 is of more direct interest for multiple sources propagated over multipath channels. Indeed, the FIR-MIMO framework generalizes the BSI problem in Figure 13.4 to the multiple-source case. In Figure 13.7, hqn(ℓ) for ℓ = 0, …, L denotes the impulse response of the FIR channel that links source q to sensor n. It follows from the single-source expression in Eqn. (13.50) that the FIR-MIMO spatial snapshots xk are given by Eqn. (13.53), where hq(ℓ) = [hq1(ℓ), …, hqN(ℓ)]T and L is the maximum FIR model order.

(13.53)

FIGURE 13.7 Illustration of the FIR multiple-input multiple-output (FIR-MIMO) system model. The source input sequences, channel impulse responses, and additive noise processes are inaccessible. The objective of the BSS processor is to jointly estimate all of the source waveforms to within an unknown complex scale by spatially weighting and combining the received array data. © Commonwealth of Australia 2011.

The connection between Eqn. (13.53) and the multiple-source model of Eqn. (13.51) developed in the previous subsection becomes evident if we substitute the time-varying (Doppler-shifted) impulse response for hq(ℓ) in Eqn. (13.53). Making this substitution yields the received data snapshots in Eqn. (13.54). This expression is consistent with the model of Eqn. (13.24). Note that the mode waveforms defined in Eqn. (13.54) incorporate the Doppler shift of each mode into the waveform to be estimated. This effectively accounts for channel variations by applying a different Doppler shift to the waveform of each mode.

(13.54)

The form of Eqn. (13.54) indicates that BSS techniques based on a time-invariant channel model are in principle applicable to the formulated problem, since each mode waveform may be considered as a different signal to be estimated. The critical issue here is whether the assumptions required by standard BSS techniques to recover suitable waveform estimates in such a situation are compatible with those previously set out in the problem formulation. This point will be considered in Section 13.2.3 with reference to the multiple-source model reproduced in Eqn. (13.55), and the single-source model X = AS + N described in the previous section.

(13.55)

In the single-source case, the identifiability condition relating to channel diversity implies that the mode wavefronts in the mixing matrix A are linearly independent so that A has full column-rank M, while the identifiability condition relating to input sequence linear complexity implies that the signal matrix S has full row-rank M. This leads to the fundamental property upon which nearly all BSS techniques are based, namely, that the column span of X provides a basis for the column span of A, and that the row span of X provides a basis for the row span of S. Similar concepts apply for the multiple-source case.
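The subspace property can be verified numerically with a minimal sketch. It assumes a random full-rank mixing matrix A, a random full-rank signal matrix S, and weak white noise. Under these assumptions, the M dominant left and right singular vectors of X nearly span the columns of A and the rows of S, respectively. The dimensions and noise level are illustrative.

```python
import numpy as np

rng = np.random.default_rng(5)
N, M, K = 6, 3, 500                        # sensors, modes/sources, snapshots (assumed)


def crandn(*shape):
    """Circular complex Gaussian samples with unit variance."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)


A = crandn(N, M)                           # full column rank mixing matrix
S = crandn(M, K)                           # full row rank signal matrix
X = A @ S + 0.01 * crandn(N, K)            # X = AS + N

U, sv, Vh = np.linalg.svd(X, full_matrices=False)
Us, Vs = U[:, :M], Vh[:M, :]               # dominant left / right singular subspaces

# Fraction of A (resp. S) lying outside the estimated column (resp. row) span
col_err = np.linalg.norm(A - Us @ (Us.conj().T @ A)) / np.linalg.norm(A)
row_err = np.linalg.norm(S - (S @ Vs.conj().T) @ Vs) / np.linalg.norm(S)
print("singular values:", np.round(sv, 2))
print("col-span error:", col_err, "row-span error:", row_err)
```

The subspaces determine A and S only up to an unknown invertible M × M matrix T, because AS = (AT)(T⁻¹S). Resolving T is precisely the role of the manifold-based or source-based assumptions discussed next.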

13.2.2.1 Manifold-Based Methods

A popular manifold-based method is to discriminate the signals on the basis of differences in DOA by assuming a plane-wave model. Such a model is valid for narrowband signals and point sources in the far-field of a well-calibrated sensor array. This approach may be used to resolve a number of independent sources, or multiple propagation modes from a single source, that impinge on the array as plane waves. In the latter case, specular reflection of the multipath components is often assumed.

Super-resolution techniques such as MUSIC (Schmidt 1981), ESPRIT (Roy and Kailath 1989), MODE (Stoica and Sharman 1990b), WSF (Viberg, Ottersten, and Kailath 1991), ML (Stoica and Sharman 1990a), and their variants described in Krim and Viberg (1996), may be used to resolve signals with closely spaced DOAs. These techniques may be considered “blind” in the sense that the source properties and mixing matrix are not known a priori. In this case, lack of knowledge regarding the source properties is compensated for by assuming the spatial signatures in the mixing matrix lie on a manifold with a known parametric form. Specifically, the plane-wave manifold is defined by the DOA parameter alone.
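As an example of a manifold-based method, here is a minimal MUSIC sketch. It assumes a uniform linear array (ULA) with half-wavelength spacing, which gives a plane-wave manifold parameterized by DOA alone. It also assumes uncorrelated sources and a known number of signals M. The helper names, search grid, and scenario are illustrative.

```python
import numpy as np


def ula_steering(theta_deg, N: int, d: float = 0.5) -> np.ndarray:
    """Plane-wave steering vectors (N x len(theta)) for an N-element ULA, spacing d wavelengths."""
    theta = np.deg2rad(np.atleast_1d(theta_deg))
    n = np.arange(N)[:, None]
    return np.exp(2j * np.pi * d * n * np.sin(theta)[None, :])


def music_doas(X: np.ndarray, M: int, grid_deg: np.ndarray) -> np.ndarray:
    """MUSIC DOA estimates (degrees) for M plane waves from array snapshots X (N x K)."""
    N, K = X.shape
    R = X @ X.conj().T / K                       # sample spatial covariance
    _, V = np.linalg.eigh(R)                     # eigenvalues in ascending order
    En = V[:, : N - M]                           # noise-subspace eigenvectors
    A = ula_steering(grid_deg, N)
    P = 1.0 / np.sum(np.abs(En.conj().T @ A) ** 2, axis=0)   # MUSIC pseudo-spectrum
    # Keep the M largest local maxima of the pseudo-spectrum
    peaks = [i for i in range(1, len(P) - 1) if P[i] > P[i - 1] and P[i] >= P[i + 1]]
    peaks = sorted(peaks, key=lambda i: P[i], reverse=True)[:M]
    return np.sort(grid_deg[peaks])


rng = np.random.default_rng(6)
N, K, M = 8, 400, 3
true_doas = np.array([-10.0, 5.0, 25.0])
S = (rng.standard_normal((M, K)) + 1j * rng.standard_normal((M, K))) / np.sqrt(2)
noise = 0.1 * (rng.standard_normal((N, K)) + 1j * rng.standard_normal((N, K))) / np.sqrt(2)
X = ula_steering(true_doas, N) @ S + noise        # 20 dB SNR per element
grid = np.arange(-90.0, 90.0 + 1e-9, 0.1)
doa_hat = music_doas(X, M, grid)
print("estimated DOAs (deg):", doa_hat)
```

Fully coherent multipath modes make the source covariance rank-deficient, and plain MUSIC then breaks down. A decorrelation step such as spatial smoothing is commonly applied in that case.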

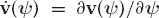

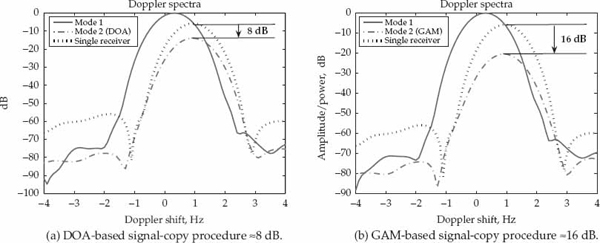

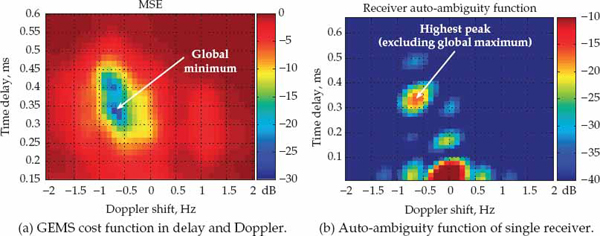

DOA estimates of the incident signals are used to reconstruct the mixing matrix, which allows a deterministic null-steering weight vector to estimate the individual source waveforms with reduced contamination from multipath components and other signal sources. This is the classic “signal-copy” procedure. Ideally, the first step estimates the exact DOAs of all signal components, while the second step adjusts the weights of the linear combiner to perfectly null all interfering signals, leaving only the desired source waveform and measurement noise at the output. This signal-copy procedure is effective provided that the number of plane-wave signals is less than the number of receivers, the signal DOAs are not too closely spaced, the model order is selected appropriately, and the SNR is adequate for the amount of training data available. In this event, performance is limited mainly by statistical errors.
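The signal-copy step can be sketched as follows. The DOAs are assumed to have been estimated already (here they are simply set to the values used to generate the data), and a half-wavelength ULA model is assumed. The rows of the pseudo-inverse of the reconstructed mixing matrix act as null-steering weight vectors: each passes one plane wave with unit gain and places nulls on the others.

```python
import numpy as np


def ula_steering(theta_deg, N: int, d: float = 0.5) -> np.ndarray:
    """Plane-wave steering vectors (N x len(theta)) for an N-element ULA, spacing d wavelengths."""
    theta = np.deg2rad(np.atleast_1d(theta_deg))
    n = np.arange(N)[:, None]
    return np.exp(2j * np.pi * d * n * np.sin(theta)[None, :])


rng = np.random.default_rng(7)
N, K = 8, 1000
doas_hat = np.array([-10.0, 5.0, 25.0])       # assumed output of the DOA estimation step
A_hat = ula_steering(doas_hat, N)             # reconstructed mixing matrix
W = np.linalg.pinv(A_hat)                     # row q passes source q and nulls the others

# Simulated data consistent with the plane-wave model (20 dB SNR per element)
S = (rng.standard_normal((3, K)) + 1j * rng.standard_normal((3, K))) / np.sqrt(2)
noise = 0.1 * (rng.standard_normal((N, K)) + 1j * rng.standard_normal((N, K))) / np.sqrt(2)
X = A_hat @ S + noise

S_hat = W @ X                                 # signal-copy waveform estimates
corr = np.abs(np.sum(S_hat * S.conj(), axis=1)) / (
    np.linalg.norm(S_hat, axis=1) * np.linalg.norm(S, axis=1))
print("W @ A_hat is identity (approx):", np.allclose(W @ A_hat, np.eye(3)))
print("waveform correlation per source:", np.round(corr, 4))
```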

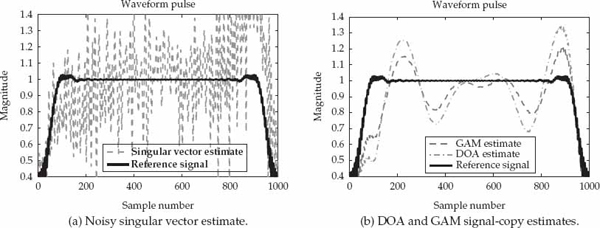

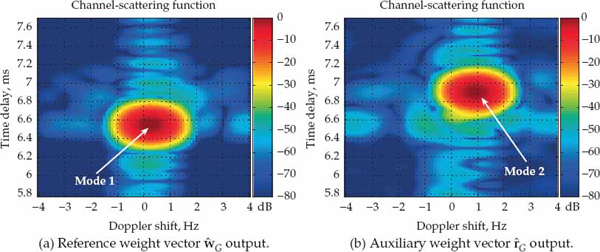

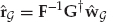

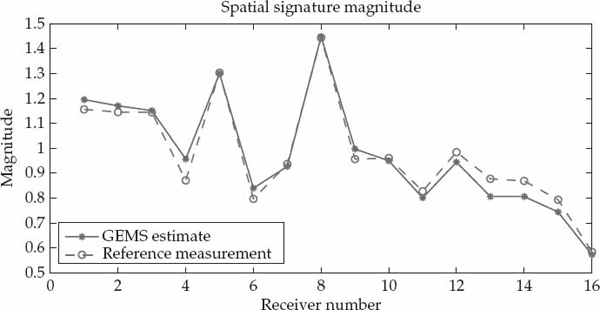

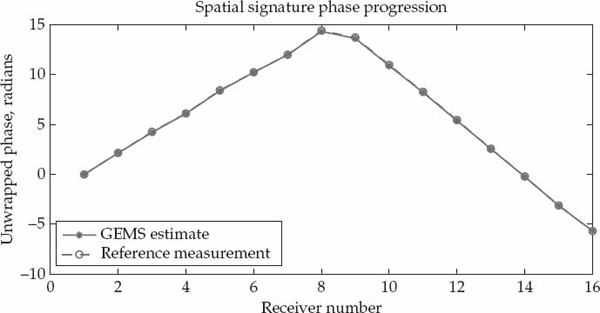

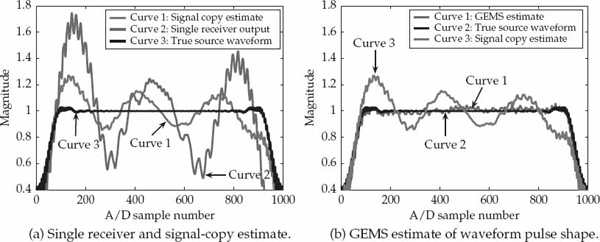

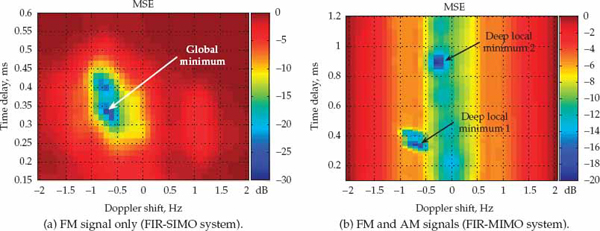

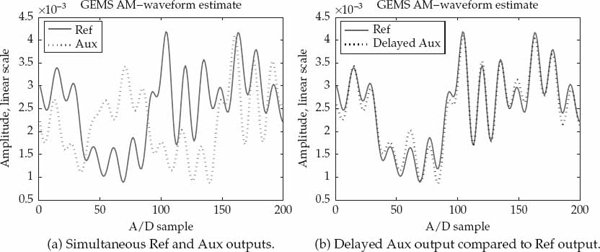

However, the plane-wave assumption is rather strong and seldom holds in practical scenarios. In particular, diffuse scattering caused by an irregular propagation medium, combined with the presence of array calibration errors, may lead to significant deviations between the spatial signatures of the signals received by the system and the presumed plane-wave manifold model. Diffuse scattering has been observed and analyzed in a number of fields, including wireless communications (Zetterberg and Ottersten 1995), radio astronomy (Yen 1985), underwater acoustics (Gershman, Turchin, and Zverev 1995), speech recognition (Juang, Perdue, and Thompson 1995), medical imaging (Flax and O’Donnell 1988), seismology (Wood and Treitel 1975), and radar (Barton 1974).
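The effect of manifold mismatch on signal copy can be illustrated with a hypothetical model. In it, each true spatial signature is the plane-wave steering vector multiplied element-wise by (1 + σe), with e a fixed random complex vector. This is only a stand-in for diffuse scattering and calibration errors, not a physical model of either. The plane-wave weights null the presumed signatures exactly, so any interference at the output comes from the mismatch, and the output signal-to-interference ratio (SIR) falls as σ grows.

```python
import numpy as np


def ula_steering(theta_deg, N: int, d: float = 0.5) -> np.ndarray:
    """Plane-wave steering vectors (N x len(theta)) for an N-element ULA, spacing d wavelengths."""
    theta = np.deg2rad(np.atleast_1d(theta_deg))
    n = np.arange(N)[:, None]
    return np.exp(2j * np.pi * d * n * np.sin(theta)[None, :])


def output_sir(W: np.ndarray, A_true: np.ndarray) -> np.ndarray:
    """Per-output signal-to-interference power ratio of copy weights W on signatures A_true."""
    G = np.abs(W @ A_true) ** 2               # G[q, p]: power gain from source p to output q
    signal = np.diag(G)
    interference = G.sum(axis=1) - signal
    return signal / np.maximum(interference, np.finfo(float).tiny)


rng = np.random.default_rng(8)
N = 8
A_model = ula_steering([-10.0, 5.0, 25.0], N)   # presumed plane-wave signatures
W = np.linalg.pinv(A_model)                     # plane-wave signal-copy weights
E = (rng.standard_normal(A_model.shape) + 1j * rng.standard_normal(A_model.shape)) / np.sqrt(2)

for sigma in (0.0, 0.01, 0.1, 0.3):
    A_true = A_model * (1.0 + sigma * E)        # hypothetical element-wise distortion
    sir_db = 10 * np.log10(output_sir(W, A_true))
    print(f"sigma = {sigma:4.2f}: output SIR (dB) = {np.round(sir_db, 1)}")
```

Because the weights null the presumed plane waves exactly, the interference leakage in this model grows in proportion to σ², which is why even modest signature errors can erode the null depth.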