Chapter 9. MDM Paradigms and Architectures

9.1. Introduction

The value of master data lies in the ability of multiple business applications to access a trusted data asset that can serve up the unique representation of any critical entity relevant within the organization. However, while we have focused on the concepts of a virtual means for managing that unique representation (the “what” of MDM), we have been relatively careful so far about asserting an architectural framework for implementing both the logical and the physical structure for managing master data (the “how” of MDM). This caution is driven by the challenges of not just designing and building the master data environment and its service layers, but also of corralling support from all potential stakeholders, determining the road map for implementation, and, most critically, managing the migration of applications to use the master data environment.

In fact, many organizations have already seen large-scale technical projects (e.g., Customer Relationship Management, data warehouses) that drain budgets, take longer than expected to bring into production, and do not live up to their expectations, often because the resulting technical solution does not appropriately address the business problems. To avoid this trap, it is useful to understand the ways that application architectures adapt to master data, to assess the specific application's requirements, and to characterize the usage paradigms. In turn, this will drive the determination of the operational service-level requirements for data availability and synchronization that will dictate the specifics of the underlying architecture.

In this chapter, we look at typical master data management usage scenarios as well as the conceptual architectural paradigms. We then look at the variable aspects of the architectural paradigms in the context of the usage scenarios and enumerate the criteria that can be used to determine the architectural framework that best suits the business needs.

9.2. MDM Usage Scenarios

The transition to using a master data environment will not hinge on an “all or nothing” approach; rather it will focus on particular usage situations or scenarios, and the services and architectures suitable to one scenario may not necessarily be the best suited to another scenario. Adapting MDM techniques to qualify data used for analytical purposes within a data warehouse or other business intelligence framework may inspire different needs than an MDM system designed to completely replace all transaction operations within the enterprise.

In Chapter 1, we started to look at some of the business benefits of MDM, such as comprehensive customer knowledge, consistent and accurate reporting, improved risk management, improved decision making, regulatory compliance, quicker results, and improved productivity, among others. A review of this list points out that the use of master data spans both the analytical and the operational contexts, and this is accurately reflected in the categorization of usage scenarios to which MDM is applied.

It is important to recognize that master data objects flow into the master environment through different work streams. However, business applications treat their use of what will become master data in ways that are particular to the business processes they support, and migrating to MDM should not impact the way those applications work. Therefore, although we anticipate that our operational processes will directly manipulate master data as those applications are linked into the master framework, there will always be a need for direct processes for creating, acquiring, importing, and maintaining master data. Reviewing the ways that data is brought into the master environment and then how it is used downstream provides input into the selection of underlying architectures and service models.

9.2.1. Reference Information Management

In this usage scenario, the focus is on the importation of data into the master data environment and the ways that the data is enhanced and modified to support the dependent downstream applications. The expectation is that as data is incorporated into the master environment, it is then available for “publication” to client applications, which in turn may provide feeds back into the master repository.

For example, consider a catalog merchant that resells product lines from numerous product suppliers. The merchant's catalog is actually composed of entries imported from the catalogs presented by each of the suppliers. The objective in this reference information usage scenario is to create the master catalog to be presented to the ultimate customers by combining the product information from the supplier catalogs. The challenges associated with this example are largely driven by unique identification: the same products may be provided by more than one supplier, whereas different suppliers may use the same product identifiers for different products within their own catalogs. The desired outcome is the creation of a master catalog that allows the merchant to successfully complete its order-to-cash business process.
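The identification problem in the catalog example can be illustrated with a minimal Python sketch. Everything here is hypothetical: the `MasterCatalog` class, the supplier names, and the choice of manufacturer plus model as identifying attributes are assumptions made for illustration, and exact matching on normalized strings stands in for the richer identity resolution techniques a real system would need.

```python
from dataclasses import dataclass, field


@dataclass
class MasterCatalog:
    """Toy master catalog keyed by a merchant-assigned master ID (hypothetical)."""
    # (supplier, supplier_sku) -> master product ID
    xref: dict = field(default_factory=dict)
    # normalized identifying attributes (manufacturer, model) -> master product ID
    by_identity: dict = field(default_factory=dict)
    next_id: int = 1

    def register(self, supplier, supplier_sku, manufacturer, model):
        """Return the master ID for a supplier catalog entry, creating one if needed."""
        # Assumption: manufacturer + model identify a product once normalized.
        identity = (manufacturer.strip().lower(), model.strip().lower())
        master_id = self.by_identity.get(identity)
        if master_id is None:
            master_id = self.next_id
            self.next_id += 1
            self.by_identity[identity] = master_id
        # Supplier SKUs are unique only within one supplier's catalog,
        # so the cross-reference key always includes the supplier.
        self.xref[(supplier, supplier_sku)] = master_id
        return master_id


catalog = MasterCatalog()
# Same product offered by two suppliers under different SKUs.
a = catalog.register("SupplierA", "1001", "Acme", "Widget 5")
b = catalog.register("SupplierB", "77-X", "ACME", "widget 5")
# Same SKU string as SupplierA's entry, but a different product.
c = catalog.register("SupplierB", "1001", "Bolt Co", "Hex Nut")
```

The two supplier entries for the same product resolve to one master ID, while the reused SKU "1001" maps to a different master ID because the cross-reference is scoped by supplier.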

In this scenario, data enter the master repository from multiple streams, both automated and manual, and the concentration is on ensuring the quality of the data on entry. Activities that may be performed within this scenario include the following:

Creation. Creating master records directly. This is an entry point for data analysts to input new data into the master repository, such as modifying enterprise metadata (definitions, reference tables, value formats) or introducing new master records into an existing master table (e.g., customer or product records).

Import. Preparing and migrating data sets. This allows the data analysts to convert existing records from suitable data sources into a table format that is acceptable for entry into the master environment. This incorporates the conversion process that assesses the mapping between the original source and the target model and determines what data values need to be input and integrated with the master environment. The consolidation process is discussed in detail in Chapter 10.

Categorization. Arranging the ways that master objects are organized. Objects are grouped based on their attributes, and this process allows the data analyst to specify the groupings along with their related metadata and taxonomies, as well as defining the rules by which records are grouped into their categories. For example, products are categorized in different ways, depending on their type, use, engineering standards, target audience, size, and so on.

Classification. Directly specifying the class to which a master record will be assigned. The data steward can apply the rules for categorization to each record and verify the assignment into the proper classes.

Quality validation. Ensuring that the data meet defined validity constraints. Based on data quality and validity rules managed within the metadata repository, both newly created master records and modifications to existing records are monitored to filter invalid states before committing the value to the master repository.

Modification. Directly adjusting master records. Changes to master data (e.g., customer address change, update to product characteristics) may be made. In addition, after being alerted to a flaw in the data, the data steward may modify the record to “correct” it or otherwise bring it into alignment with defined business data quality expectations.

Retirement/removal. Records that are no longer active may be assigned an inactive or retired status, or the record may be removed from the data set. This may also be done as part of a stewardship process to adjust a situation in which two records referring to the same object were not resolved into a single record and must be manually consolidated, with one record surviving and the other removed.

Synchronization. Managing currency between the master repository and replicas. In environments where applications still access their own data sets that are periodically copied back and synchronized with the master, the data steward may manually synchronize data values directly within the master environment.
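Of the activities listed above, quality validation lends itself to a short illustration. The following Python sketch assumes that validity rules can be expressed as named predicates and that the master repository is a simple dictionary; the rule names, the `status` domain, and the `commit` function are all hypothetical and are not drawn from any particular MDM product.

```python
def validate(record, rules):
    """Return the names of the rules that the record violates."""
    return [name for name, check in rules.items() if not check(record)]


# Hypothetical validity rules, standing in for rules that would be
# managed within the metadata repository.
RULES = {
    "name_present": lambda r: bool((r.get("name") or "").strip()),
    "status_in_domain": lambda r: r.get("status") in {"active", "inactive", "retired"},
}


def commit(repository, key, record, rules=RULES):
    """Write the record only if it passes every rule; return any violations."""
    violations = validate(record, rules)
    if violations:
        # Invalid state is filtered out before it reaches the repository.
        return violations
    repository[key] = dict(record)
    return []
```

A rejected record leaves the repository untouched, and the list of violated rule names gives the data steward a starting point for correction.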

9.2.2. Operational Usage

In contrast to the reference information scenario, some organizations endeavor to ultimately supplant all application data systems with the use of the master environment. This means that operational systems must execute their transactions against the master data environment instead of their own data systems, and as these transactional business applications migrate to relying on the master environment as the authoritative data resource, they will consume and produce master data as a by-product of their processing streams.

For example, an organization may want to consolidate its views of all customer transactions across multiple product lines to enable real-time up-sell and cross-sell recommendations. This desire translates into a need for two aspects of master data management: managing the results of customer analytics as master profile data and real-time monitoring of all customer transactions (including purchases, returns, and all inbound call center activities). Each customer transaction may trigger some event that requires action within a specified time period, and all results must be logged to the master repository in case adjustments to customer profile models must be made.

Some of the activities in this usage scenario are similar to those in Section 9.2.1, but here it is more likely to be an automated application facilitating the interaction between the business process and the master repository. Some activities include the following:

Access. The process of searching the master repository. This activity is likely to be initiated frequently, especially by automated systems validating a customer's credentials using identity resolution techniques, classifying and searching for similar matches for product records, or generally materializing a master view of any identified entity for programmatic manipulation.

Quality validation. The process of ensuring conformance to data quality business rules. Defined quality characteristics based on business expectations can be engineered into corresponding business data rules to be applied at various stages of the information processing streams. Quality validation may range from something as simple as verifying that a data element's value falls within a defined data domain to more complex consistency rules involving attributes of different master objects.

Access control. Monitoring individual and application access. As we describe in Chapter 6 on metadata, access to specific master objects can be limited via assignment of roles, even at a level as granular as particular attributes.

Publication and sharing. Making the master view available to client applications. In this scenario, there may be an expectation that as a transaction is committed, its results are made available to all other client applications. In reality, design constraints may drive the definition of service level agreements regarding the timeliness associated with publication of modifications to the master data asset.

Modification. Making changes to master data. In the transactional scenario, it is clear that master records will be modified. Coordinating multiple accesses (reads and writes) by different client applications is a must and should be managed within the master data service layer.

Synchronization. Managing coherence internally to the master repository. In this situation, many applications are touching the master repository in both querying and transactional modes, introducing the potential for consistency issues between reads and writes whose serialization has traditionally been managed within the database systems themselves. The approach to synchronizing any kinds of accesses to master data becomes a key activity to ensure a consistent view, and we address this issue in greater detail in Chapter 11.

Transactional data stewardship. Direct interaction of data stewards with data issues as they occur within the operational environment. Addressing data issues in real time supports operational demands by removing barriers to execution, and combining hands-on stewardship with the underlying master environment will lead to a better understanding of the ways that business rules can be incorporated to automatically mitigate issues when possible and highlight data issues when a data steward is necessary.

9.2.3. Analytical Usage

Analytical applications can interact with master data in two ways: as the dimensional data supporting data warehousing and business intelligence, and as input to embedded analytics for inline decision support. One of the consistent challenges of data warehousing is the combined need to do the following:

▪ Cleanse data supplied to the warehouse to establish trustworthiness for downstream reporting and analysis

▪ Manage the issues resulting from the desire to maintain consistency with upstream systems

Analytical applications are more likely to use rather than create master data, but these applications also must be able to contribute information to the master repository that can be derived as a result of analytical models. For example, customer data may be managed within a master model, but a customer profiling application may analyze customer transaction data to classify customers into threat profile categories. These profile categories, in turn, can be captured as master data objects for use by other analytical applications (fraud protection, bank secrecy act compliance, etc.) in an embedded or inlined manner. In other words, integrating the results of analytics within the master data environment supplements analytical applications with real-time characteristics.

Aside from the activities mentioned in previous sections, some typical activities within the analytical usage scenario include the following:

Access. The process of searching and extracting data from the master repository. This activity is invoked as part of the process for populating the analytical subsystems (e.g., the fact tables in a data warehouse).

Creation. The creation of master records. This may represent the processes invoked as a result of analytics so that the profiles and enhancements can be integrated as master data. Recognize that in the analytical scenario, the creation of master data is a less frequent activity.

Notification. Forwarding information to business end clients. Embedded analytical engines employ master data, and when significant events are generated, the corresponding master data are composed with the results of the analytics (customer profiles, product classifications, etc.) to inform the end client about actions to be taken.

Classification. Applying analytics to determine the class to which a master record will be assigned. Rules for classification and categorization are applied to each record, and data stewards can verify that the records were assigned to the proper classes.

Validation. Ensuring that the data meet defined validity constraints. Based on data quality and validity rules managed within the metadata repository, both newly created master records and modifications to existing records are monitored to filter invalid states before committing the value to the master repository.

Modification. The direct adjustment of master records. Similarly to the reference data scenario, changes to master data (e.g., customer address change, update to product characteristics) may be made. Data stewards may modify the record to bring it into alignment with defined business data quality expectations.

9.3. MDM Architectural Paradigms

All MDM architectures are intended to support transparent, shared access to a unique representation of master data, even if the situations for use differ. Therefore, all architecture paradigms share a fundamental characteristic: master data access is provided via a service layer that resolves identities by matching the core identifying data attributes used to distinguish one instance from all others.

However, there will be varying requirements for systems supporting different usage situations. For example, with an MDM system supporting the reference data scenario, the applications that are the sources of master data might operate at their own pace, creating records that will (at some point) be recognized as master data. If the reference MDM environment is intended to provide a common “system of record” that is not intricately integrated into real-time operation, the actual data comprising the “master record” may still be managed by the application systems, using the MDM environment solely as an index for searching and identification.

In this case, the identifying attributes can be rationalized on a periodic basis, but there is no need for real-time synchronization. An architecture to support this approach may require a master model that manages enough identifying information to provide the search/index/identification capabilities, as long as the remainder of the master data attributes can be composed via pointers from that master index.

In the fully operational usage scenario, all interactions and transactions are applied to the master version, and the consistency requirements must be enforced at a strict level. As opposed to the reference scenario, this situation is much less tolerant of replicating master data and suggests a single master repository accessed only through a service layer that manages serialization and synchronization.

The analytical scenario presents less stringent requirements for control over access. Much of the data that populates the “master record” are derived from source application data systems, but only data necessary for analysis might be required in the master repository. Because master records are created less frequently, the synchronization and consistency constraints are less stringent. In this environment, there may be a master index that is augmented with the extra attributes supporting real-time analytics or embedded monitoring and reporting. These concepts resolve into three basic architectural approaches for managing master data (see sidebar).

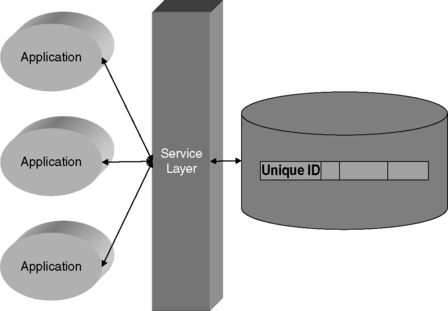

9.3.1. Virtual/Registry

In a registry-style architecture (as shown in Figure 9.1), a thin master data index is used that captures the minimal set of identifying attribute values. Any presented record's identifying values are indexed by identity search and match routines (which are discussed in greater detail in Chapter 10). The master index provided in a registry model contains pointers from each uniquely identified entity to the source systems maintaining the balance of the master data attributes. Accessing the “master record” essentially involves the following tasks:

▪ Evaluating similarity of candidates to the sought-after record to determine a close match

▪ Once a match has been found, accessing the registry entries for that entity to retrieve the pointers into the application data systems containing records for the found entity

▪ Accessing the application data systems to retrieve the collection of records associated with the entity

▪ Applying a set of survivorship rules to materialize a consolidated master record

Figure 9.1 A registry-style architecture provides a thin index that maps to data instances across the enterprise.

The basic characteristics of the registry style of MDM are as follows:

▪ A thin table is indexed by identifying attributes associated with each unique entity.

▪ Each represented entity is located in the registry's index by matching values of identifying attributes.

▪ The registry's index maps from each unique entity reference to one or more source data locations.

The following are some assumptions regarding the registry style:

▪ Data items forwarded into the master repository are linked to application data in a silo repository as a result of existing processing streams.

▪ All input records are cleansed and enhanced before persistence.

▪ The registry includes only attributes that contain the identifying information required to disambiguate entities.

▪ There is a unique instance within the registry for each master entity, and only identifying information is maintained within the master record.

▪ The persistent copies for all attribute values are maintained in (one or more) application data systems.

▪ Survivorship rules on consolidation are applied at time of data access, not time of data registration.
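The access tasks listed earlier in this section (match, follow pointers, retrieve source records, apply survivorship) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the registry and source systems are in-memory dictionaries, similarity is the fraction of identifying attributes that match exactly, and the survivorship rule ("most recently updated non-null value wins") is just one possible choice. All names and sample data are hypothetical.

```python
# Registry: one thin entry per entity, holding only identifying attributes
# and pointers (system name, local key) into the source systems.
REGISTRY = {
    "E1": {"identity": {"name": "ann lee", "birth_year": 1980},
           "pointers": [("crm", 17), ("billing", "B-9")]},
    "E2": {"identity": {"name": "raj patel", "birth_year": 1975},
           "pointers": [("crm", 18)]},
}

# Application data systems keep the balance of the attributes.
SOURCES = {
    "crm": {
        17: {"email": "ann@old.example", "phone": None, "updated": 2019},
        18: {"email": "raj@crm.example", "phone": "555-0199", "updated": 2021},
    },
    "billing": {
        "B-9": {"email": "ann@new.example", "phone": "555-0100", "updated": 2023},
    },
}


def similarity(candidate, identity):
    """Fraction of identifying attributes that match exactly (a simplification)."""
    matches = sum(1 for k, v in identity.items() if candidate.get(k) == v)
    return matches / len(identity)


def find_entity(candidate, threshold=1.0):
    """Return the ID of the best-matching registry entry, or None."""
    best_id, best_score = None, 0.0
    for entity_id, entry in REGISTRY.items():
        score = similarity(candidate, entry["identity"])
        if score > best_score:
            best_id, best_score = entity_id, score
    return best_id if best_score >= threshold else None


def materialize(candidate):
    """Build a consolidated master record at access time."""
    entity_id = find_entity(candidate)
    if entity_id is None:
        return None
    entry = REGISTRY[entity_id]
    records = [SOURCES[system][key] for system, key in entry["pointers"]]
    master = dict(entry["identity"])
    # Assumed survivorship rule: process oldest first so that the most
    # recently updated non-null value for each attribute survives.
    for rec in sorted(records, key=lambda r: r["updated"]):
        for attr, value in rec.items():
            if attr != "updated" and value is not None:
                master[attr] = value
    return master
```

Because consolidation happens inside `materialize`, the registry itself never stores the e-mail or phone values, which mirrors the assumption that survivorship is applied at time of access rather than at registration.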

9.3.2. Transaction Hub

Simply put, a transaction hub (see Figure 9.2) is a single repository used to manage all aspects of master data. No data items are published out to application systems, and because there is only one copy, all applications are modified to interact directly with the hub. Applications invoke access services to interact with the data system managed within the hub, and all data life cycle events are facilitated via services, including creation, modification, access, and retirement.

Figure 9.2 All accesses to a transaction hub are facilitated via a service layer.

Characteristics of the transaction hub include the following:

▪ All attributes are managed within the master repository.

▪ All access to master data is managed through the services layer.

▪ All aspects of the data instance life cycle are managed through transaction interfaces deployed within the services layer.

▪ The transaction hub functions as the only version of the data within the organization.

The following are some assumptions that can be made regarding the development of a repository-based hub:

▪ There is a unique record for each master entity.

▪ Data quality techniques (parsing, standardization, normalization, cleansing) are applied to all input records.

▪ Identifying information includes attributes required to disambiguate entities.

▪ Data quality rules and enhancements are applied to each attribute of any newly created master record.
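As a rough illustration of the hub's service layer, the Python sketch below exposes the four life cycle events named above (creation, modification, access, and retirement) as the only way to reach the single copy of the data. The `TransactionHub` class and its method names are hypothetical, and a single `threading.Lock` is used as the simplest possible way to serialize access, not as a recommended design.

```python
import threading


class TransactionHub:
    """Toy transaction hub: one copy of the data, reachable only via services."""

    def __init__(self):
        self._records = {}              # master_id -> attribute dict
        self._lock = threading.Lock()   # serializes every read and write
        self._next_id = 1

    def create(self, attributes):
        """Create a master record and return its identifier."""
        with self._lock:
            master_id = self._next_id
            self._next_id += 1
            self._records[master_id] = {"status": "active", **attributes}
            return master_id

    def access(self, master_id):
        """Return a copy so callers cannot change the hub's data directly."""
        with self._lock:
            return dict(self._records[master_id])

    def modify(self, master_id, changes):
        """Apply attribute changes to an existing master record."""
        with self._lock:
            self._records[master_id].update(changes)

    def retire(self, master_id):
        """Mark the record as retired rather than deleting it."""
        with self._lock:
            self._records[master_id]["status"] = "retired"
```

Holding one lock for every operation keeps the sketch consistent but also makes every client wait on every other client, which is the kind of serialization cost that the performance discussion in Section 9.5.6 is concerned with.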

9.3.3. Hybrid/Centralized Master

A happy medium between the “thin” model of the registry and the “thick” transaction hub provides a single model to manage the identifying attributes (to serve as the master data index) as well as common data attributes consolidated from the application data sets. In this approach, shown in Figure 9.3, a set of core attributes associated with each master data model is defined and managed within a single master system. The centralized master repository is the source for managing these core master data objects, which are subsequently published out to the application systems. In essence, the centralized master establishes the standard representation for each entity. Within each dependent system, application-specific attributes are managed locally but are linked back to the master instance via a shared global primary key.

Figure 9.3 Common attributes are managed together in a mapped master.

The integrated view of common attributes in the master repository provides the “source of truth,” with data elements absorbed into the master from the distributed business applications' local copies. In this style, new data instances may be created in each application, but those newly created records must be synchronized with the central system. Yet if the business applications continue to operate on their own local copies of data also managed in the master, there is no assurance that the values stored in the master repository are as current as those values in application data sets. Therefore, the hybrid or centralized approach is reasonable for environments that require harmonized views of unique master objects but do not require a high degree of synchronization across the application architecture.

Some basic characteristics of the hybrid or centralized master architecture include the following:

▪ Applications maintain their own full local copies of the master data.

▪ Instances in the centralized master are the standardized or harmonized representations, and their values are published out to the copies or replicas managed by each of the client applications.

▪ A unique identifier is used to map from the instance in the master repository to the replicas or instances stored locally by each application.

▪ Application-specific attributes are managed by the application's data system.

▪ Consolidation of shared attribute values is performed on a periodic basis.

▪ Rules may be applied when applications modify their local copies of shared master attributes to notify the master or other participating applications of the change.
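The split between core attributes held centrally and application-specific attributes held locally can be sketched as follows. This Python example is a simplification built on assumptions: the `CentralMaster` and `Application` classes, the choice of `name` and `address` as core attributes, and the push-style publication are all hypothetical, and the periodic consolidation of values flowing back from the applications is left out.

```python
# Assumed set of core (shared) attributes managed by the central master.
CORE_ATTRIBUTES = {"name", "address"}


class Application:
    """Client application holding a local replica plus its own attributes."""

    def __init__(self, name):
        self.name = name
        self.local = {}  # global_id -> core copy merged with local attributes

    def receive(self, global_id, core):
        """Accept published core values; local-only attributes are untouched."""
        self.local.setdefault(global_id, {}).update(core)

    def set_local(self, global_id, **attrs):
        """Manage application-specific attributes, linked by the global key."""
        self.local.setdefault(global_id, {}).update(attrs)


class CentralMaster:
    """Holds the harmonized core attributes and publishes them to replicas."""

    def __init__(self):
        self.records = {}      # global_id -> core attributes
        self.subscribers = []  # applications that keep replicas

    def publish(self, global_id, core):
        unknown = set(core) - CORE_ATTRIBUTES
        if unknown:
            raise ValueError(f"not core attributes: {sorted(unknown)}")
        self.records.setdefault(global_id, {}).update(core)
        for app in self.subscribers:
            app.receive(global_id, self.records[global_id])


master = CentralMaster()
billing = Application("billing")
master.subscribers.append(billing)

billing.set_local("G1", credit_limit=500)
master.publish("G1", {"name": "Ann Lee", "address": "1 Main St"})
master.publish("G1", {"address": "2 Oak Ave"})
```

After the second publication the billing replica carries the new address while its locally managed `credit_limit` is unchanged, and both records remain linked through the shared global key `G1`.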

9.4. Implementation Spectrum

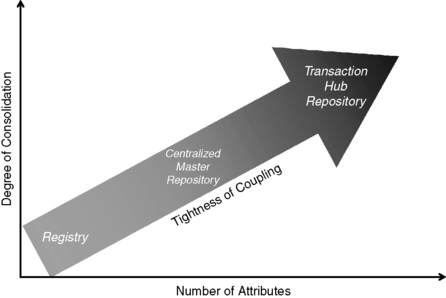

There may be differences between the ways that each of these architectures supports business process requirements, and that may suggest that the different architecture styles are particularly distinct in terms of design and implementation. In fact, a closer examination of the three approaches reveals that the styles represent points along a spectrum of design that ranges from thin index to fat repository. All of the approaches must maintain a registry of unique entities that is indexed and searchable by the set of identifying attributes, and each maintains a master version of master attribute values for each unique entity.

As Figure 9.4 shows, the styles are aligned along a spectrum that is largely dependent on three dimensions:

1. The number of attributes maintained within the master data system

2. The degree of consolidation applied as data are brought into the master repository

3. How tightly coupled applications are to the data persisted in the master data environment

Figure 9.4 MDM architectural styles along a spectrum.

At one end of the spectrum, the registry, which maintains only identifying attributes, is suited to those application environments that are loosely coupled and where the drivers for MDM are based more on harmonization of the unique representation of master objects on demand. The transaction hub, at the other end of the spectrum, is well suited to environments requiring tight coupling of application interaction and a high degree of data currency and synchronization. The central repository or hybrid approach can lie anywhere between these two ends, and the architecture can be adjusted in relation to the business requirements as necessary; it can be adjusted to the limitations of each application as well. Of course, these are not the only variables on which the styles are dependent; the complexity of the service layer, access mechanics, and performance also add to the reckoning, as well as other aspects that have been covered in this book.

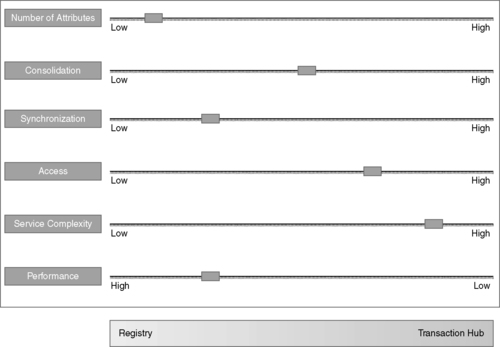

9.5. Applications Impacts and Architecture Selection

To some extent, this realization simplifies some of the considerations regarding selection of an underlying architecture. Instead of assessing the organization's business needs against three apparently “static” designs, one may look at the relative needs associated with these variables and use that assessment as selection criteria for the underlying MDM architecture. As an example, a template like the one shown in Figure 9.5 can be used to score the requirements associated with the selection criteria. The scores can be weighted based on identified key aspects, and the resulting assessment can be gauged to determine a reasonable point along the implementation spectrum that will support the MDM requirements.

Figure 9.5 Using the requirements variables as selection criteria.

This template can be adjusted depending on which characteristics emerge as most critical. Variables can be added or removed, and corresponding weights can be assigned based on each variable's relative importance.
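One way to make the weighted scoring concrete is the Python sketch below. It is not a reproduction of the template in Figure 9.5: the 0-to-10 scale (0 meaning registry-like needs, 10 meaning hub-like needs), the sample scores and weights, and the cutoffs in `suggest_style` are all assumptions chosen for illustration. The criterion names follow the subsections of this section.

```python
def spectrum_position(scores, weights):
    """Weighted average of criterion scores.

    Assumed scale: 0 = needs met by a thin registry, 10 = needs call for a hub.
    """
    total_weight = sum(weights[c] for c in scores)
    return sum(scores[c] * weights[c] for c in scores) / total_weight


def suggest_style(position, low=3.5, high=6.5):
    """Map a position to a style; the cutoffs are arbitrary illustrations."""
    if position < low:
        return "registry"
    if position > high:
        return "transaction hub"
    return "hybrid"


# Hypothetical assessment for one organization.
scores = {
    "master_attributes": 2,
    "consolidation": 4,
    "synchronization": 8,
    "access": 6,
    "service_complexity": 6,
    "performance": 4,
}
# Synchronization is treated as the key aspect and weighted more heavily.
weights = {
    "master_attributes": 1,
    "consolidation": 1,
    "synchronization": 3,
    "access": 1,
    "service_complexity": 1,
    "performance": 1,
}

position = spectrum_position(scores, weights)
style = suggest_style(position)
```

Adding or removing a variable only requires changing the two dictionaries, which reflects how the template is meant to be adjusted as different characteristics emerge as critical.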

9.5.1. Number of Master Attributes

The first question to ask focuses on the number of data attributes expected to be managed as master data. Depending on the expected business process uses, the master index may be used solely for unique identification, in which case the number of master attributes may be limited to the identifying attributes. Alternatively, if the master repository is used as a shared reference table, there may be an expectation that as soon as the appropriate entry in the registry is found, all other attributes are immediately available, suggesting that there be an expanded number of attributes. Another consideration is that there may be a limited set of shared attributes, even though each system may have a lot of attributes that it alone uses. In this case, you may decide to limit the number of attributes to only the shared ones.

9.5.2. Consolidation

The requirement for data consolidation depends on two aspects: the number of data sources that feed the master repository and the expectation for a “single view” of each master entity. From the data input perspective, with a greater number of sources to be fed into the master environment there is greater complexity in resolving the versions into the surviving data instance. From the data access perspective, one must consider whether or not there is a requirement for immediate access to all master records, as in an analytics application. Immediate access to all records would require a higher degree of record consolidation, which would take place before registration in the master environment, but the alternative would allow the survivorship and consolidation to be applied on a record-by-record basis upon request. Consolidation is discussed at great length in Chapter 10.

9.5.3. Synchronization

As we will explore in greater detail in Chapter 11, the necessary degrees of timeliness and coherence (among other aspects of what we will refer to as “synchronization”) of each application's view of master data are also critical factors in driving the decisions regarding underlying architectures. Tightly coupled environments, such as those that are intended to support transactional as well as analytical applications, may be expected to ensure that the master data, as seen from every angle, looks exactly the same. Environments intended to provide the “version of the truth” for reference data may not require that all applications be specifically aligned in their view simultaneously, as long as the values are predictably consistent within reason. In the former situation, controlling synchronization within a transaction hub may be the right answer. In the latter, a repository that is closer to the registry than the full-blown hub may suffice.

9.5.4. Access

This aspect is intended to convey more of the control of access rather than its universality. As mentioned in Chapter 6, master data management can be used to both consolidate and segregate information.

The creation of the master view must not bypass observing the policies associated with authorizing access, authenticating clients, managing and controlling security, and protecting private personal information. The system designers may prefer to engineer observance of policies restricting and authorizing access to protected information into the service layer. Controlling protected access through a single point may be more suited to a hub style. On the other hand, there are environments whose use of master data sets might not necessarily be subject to any of these policies. Therefore, not every business environment necessarily has as strict a requirement for managing access rights and observing access control. The absence of requirements for controlling access enables a more flexible and open design, such as the registry approach. In general, the hub-style architecture is better suited for environments with more constrained access control requirements.

9.5.5. Service Complexity

A reasonable assessment of the business functionality invoked by applications should result in a list of defined business services. A review of what is necessary to implement these services in the context of MDM suggests the ways that the underlying master objects are accessed and manipulated using core component services. However, the degree of complexity associated with developing business services changes depending on whether the MDM system is deployed as a thin repository or as a heavyweight hub. For example, complex services may be better suited to a hub, because the business logic can be organized within the services and imposed on the single view instead of attempting to oversee the application of business rules across the source data systems as implemented in a registry.

9.5.6. Performance

Applications that access and modify their own underlying data sets are able to process independently. As designers engineer the consolidation and integration of replicated data into a master view, though, they introduce a new data dependence across the enterprise application framework. An MDM program with a single consolidated data set can become a bottleneck, as accesses from multiple applications must be serialized to ensure consistency. This bottleneck can impact performance if the access patterns force the MDM services to continually (and iteratively) lock the master view, access or modify it, and then release the lock. A naively implemented transaction hub may therefore be subject to performance issues.

Of course, an application environment that does not execute many transactions touching the same master objects may not be affected by the need for transaction serialization. Even less constrained systems that use master data for reference (and not transactional) purposes may be best suited to the registry or central repository approaches.

9.6. Summary

There has been a deliberate delay in discussing implementation architectures for master data management because deciding on a proper approach depends on an assessment of the business requirements and the way these requirements are translated into characteristic variables that impact the use, sustainability, and performance of the system. Understanding how master data are expected to be used by either human or automated business clients in the environment is the first step in determining an architecture, especially when those uses are evaluated in relation to structure.

While there are some archetypical design styles ranging from a loosely coupled, thin registry, to a tightly coupled, thick hub, these deployments reflect a spectrum of designs and deployments providing some degree of flexibility over time. Fortunately, a reasonable approach to MDM design will allow for adjustments to be made to the system as more business applications are integrated with the master data environment.
