CHAPTER

5

Collaborative scripting for the Web

Allen Cypher,1 Clemens Drews,1 Eben Haber,1 Eser Kandogan,3 James Lin,4 Tessa Lau,1

Gilly Leshed,2 Tara Matthews,1 Eric Wilcox1

1IBM Research – Almaden

2Cornell University

3MIT CSAIL

4Google, Inc.

ABSTRACT

Today’s knowledge workers interact with a variety of Web-based tasks in the course of their jobs. We have found that two of the challenges faced by these workers are the automation of repetitive tasks and support for complex or hard-to-remember tasks. This chapter presents CoScripter, a system that enables users to capture, share, and automate tasks on the Web. CoScripter’s most notable features include ClearScript, a scripting language that is both human-readable and machine-understandable, and built-in support for sharing via a Web-based script repository. CoScripter has been used by tens of thousands of people on the Web. Our user studies show that CoScripter has helped people both automate repetitive Web tasks and share how-to knowledge inside the enterprise.

INTRODUCTION

Employees in modern enterprises engage in a wide variety of complex, multistep processes as part of their daily work. Some of these processes are routine and tedious, such as reserving conference rooms or monitoring call queues. Other processes are performed less frequently but involve intricate domain knowledge, such as making travel arrangements, ordering new equipment, or selecting insurance beneficiaries. Knowledge about how to complete these processes is distributed throughout the enterprise; for the more complex or obscure tasks, employees may spend more time discovering how to do a process than actually completing it.

However, what is complex or obscure for one employee may be routine and tedious for another. Someone who books conference rooms regularly should be able to share this knowledge with someone for whom reserving rooms is a rare event. The goal of our CoScripter project is to provide tools that facilitate the capture of how-to knowledge around enterprise processes, share this knowledge as human-readable scripts in a centralized repository, and make it easy for people to run these scripts to learn about and automate these processes.

Our “social scripting” approach has been inspired by the growing class of social bookmarking tools such as del.icio.us (Lee, 2006) and dogear (Millen, Feinberg, & Kerr, 2006). Social bookmarking systems began as personal information management tools to help users manage their bookmarks. However, they quickly demonstrated a social side effect: the shared repository of bookmarks became a useful resource for others to consult about interesting pages on the Web. In a similar vein, we anticipate CoScripter being used initially as a personal task automation tool, for early adopters to automate tasks they find repetitive and tedious. When shared in a central repository, these automation scripts become a shared resource that others can consult to learn how to accomplish common tasks in the enterprise.

RELATED WORK

CoScripter was inspired by Chickenfoot (Bolin et al., 2005), which enabled end users to customize Web pages by writing simplified JavaScript commands that used keyword pattern matching to identify Web page components. CoScripter uses similar heuristics to label targets on Web pages, but CoScripter’s natural language representation for scripts requires less programming ability than Chickenfoot’s JavaScript-based language.

Sloppy programming (Little & Miller, 2006) allowed users to enter unstructured text which the system would interpret as a command to take on the current Web page (by compiling it down to a Chickenfoot statement). Although a previous version of CoScripter used a similar approach to interpret script steps, feedback from users indicated that the unstructured approach produced too many false interpretations, leading to the current implementation that requires steps to obey a specific grammar.

Tools for automated capture and replay of Web tasks include iMacros1 and Selenium.2 Both tools function as full-featured macro recorder and playback systems. However, CoScripter differs in that it records scripts in natural language, so users can modify them without having to understand a programming language.

CoScripter’s playback functionality was inspired by interactive tutorial systems such as Eager (Cypher, 1991) and Stencils (Kelleher & Pausch, 2005a). Both systems used visual cues to direct user attention to the right place on the screen to complete their task. CoScripter expands upon both of those systems by providing a built-in sharing mechanism for people to create and reuse each other’s scripts.

THE COSCRIPTER SYSTEM

CoScripter interface

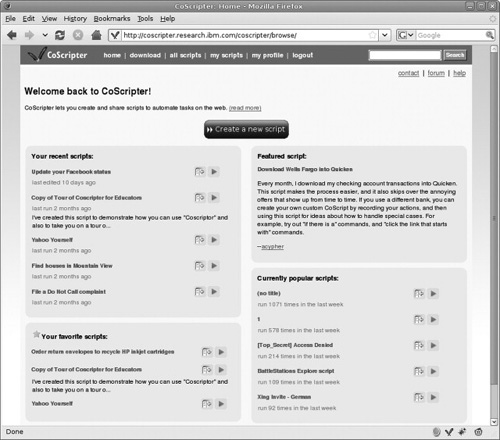

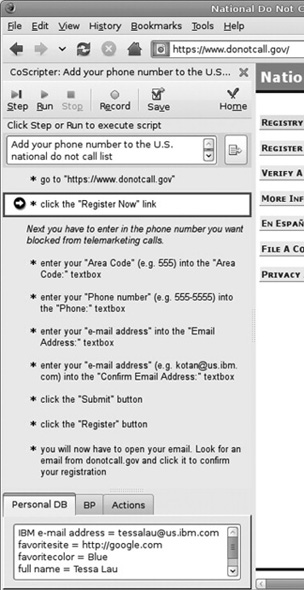

CoScripter consists of two main parts: a centralized, online repository of scripts (Figure 5.1), and a Firefox extension that facilitates creating and running scripts (Figure 5.2). The two work together to provide a seamless experience. Users start by browsing the repository to find interesting scripts; the site supports full-text search as well as user-contributed tags and various sort mechanisms to help users identify scripts of interest.

FIGURE 5.1

CoScripter script repository.

Once a script has been found, the user can click a button to load the script into the CoScripter sidebar. The sidebar provides an interface to step through the script, line by line. At each step, CoScripter highlights the step to be performed by drawing a green box around the target on the Web page. By clicking CoScripter’s “step” button, the user indicates that CoScripter should perform this step automatically and the script advances to the following step.

Scripts can also be run automatically to completion. The system iterates through the lines in the script, automatically performing each action until either a step is found that cannot be completed, or the script reaches the end. Steps that cannot be completed include steps that instruct the user to click a button that does not exist, or to fill in a field without providing sufficient information to do so.

FIGURE 5.2

CoScripter sidebar (Firefox extension).

CoScripter also provides the ability to record scripts by demonstration. Using the same sidebar interface, users can create a new blank script. The user may then start demonstrating a task by performing it directly in the Web browser. As the user performs actions on the Web such as clicking on links, filling out forms, and pushing buttons, CoScripter records a script describing the actions being taken. The resulting script can be saved directly to the repository. Scripts can be either public (visible to all) or private (visible only to the creator).

Scripting language

CoScripter’s script representation consists of a structured form of English which we call ClearScript. ClearScript is both human-readable and machine-interpretable. Because scripts are human-readable, they can double as written instructions for performing a task on the Web. Because they are machine-interpretable, we can provide tools that can automatically record and understand scripts in order to execute them on a user’s behalf.

For example, here is a script to conduct a Web search:

• go to “http://google.com”

• enter “sustainability” into the “Search” textbox

• click the “Google Search” button

Recording works by attaching event listeners for clicks and other events on the HTML DOM. When a user action is detected, we generate a step in the script that describes the user’s action. Each step is generated by filling out a template corresponding to the type of action being performed. For example, click actions always take the form “click the TargetLabel TargetType”. The TargetType is derived from the type of DOM node on which the event listener was triggered (e.g., an anchor tag has type “link”, whereas an <INPUT type="text"> has type “textbox”). The TargetLabel is extracted from the source of the HTML page using heuristics, as described later in the “Human-readable labels” section.
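The template-filling idea can be illustrated with a short Python sketch. This is not CoScripter’s implementation (which runs as a Firefox extension); the `DomEvent` record, the `target_type` and `record_step` functions, and the “button” and “item” type mappings are hypothetical names and assumptions introduced here. Only the “link” and “textbox” mappings and the click template come from the description above. The sketch uses straight quotes in place of the typographic quotes shown in the printed scripts.

```python
from dataclasses import dataclass


@dataclass
class DomEvent:
    """Hypothetical summary of a user action observed by an event listener."""
    kind: str             # "click" or "enter"
    tag: str              # tag name of the DOM node, e.g. "a" or "input"
    input_type: str = ""  # value of the type attribute for <input> nodes
    label: str = ""       # human-readable label found by the labeling heuristics
    value: str = ""       # text typed by the user, for "enter" actions


def target_type(tag: str, input_type: str = "") -> str:
    """Map a DOM node to a target type word used in a script step."""
    tag, input_type = tag.lower(), input_type.lower()
    if tag == "a":
        return "link"          # from the text: an anchor tag has type "link"
    if tag == "input" and input_type == "text":
        return "textbox"       # from the text: <INPUT type="text"> is a "textbox"
    if tag == "button" or (tag == "input" and input_type in ("submit", "button")):
        return "button"        # assumption: not spelled out in the text
    return "item"              # assumption: fallback for node types not covered here


def record_step(event: DomEvent) -> str:
    """Fill in the template that corresponds to the type of action."""
    ttype = target_type(event.tag, event.input_type)
    if event.kind == "click":
        return f'click the "{event.label}" {ttype}'
    if event.kind == "enter":
        return f'enter "{event.value}" into the "{event.label}" {ttype}'
    raise ValueError(f"no template for action kind {event.kind!r}")
```

For instance, a click on a submit input labeled “Google Search” would be recorded by this sketch as a step of the same shape as the last line of the Web search script above.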

Keyword-based interpreter

The first-generation CoScripter (previously known as Koala; described in Little et al., 2007) used a keyword-based algorithm to parse and interpret script steps within the context of the current Web page. The algorithm begins by enumerating all elements on the page with which users can interact (e.g., links, buttons, text fields). Each element is annotated with a set of keywords that describe its function (e.g., click and link for a hypertext link; enter and field for a textbox). It is also annotated with a set of words that describe the element, including its label, caption, accessibility text, or words nearby. A score is then assigned to each element by comparing the bag of words in the input step with the bag of words associated with the element; words that appear in both contribute to a higher score. The highest scoring element is output as the predicted target; if multiple elements have the same score, one is chosen at random.

Once an element has been found, its type dictates the action to be performed. For example, if the element is a link, the action will be to click on it; if the element is a textbox, the action will be to enter something into it. Certain actions, such as enter, also require a value. This value is extracted by removing words from the instruction that match the words associated with the element, along with common stopwords such as “the” and “in”; the remaining words are predicted to be the value.
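The scoring and value-extraction steps described above can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the Koala algorithm itself: the `Element` class, the `KEYWORDS` table (including the keywords chosen for buttons and the extra “textbox” keyword), the stopword list, and the one-point-per-shared-word score are all assumptions made for the example.

```python
import random
import re
from dataclasses import dataclass

# Assumed stopword list; the text names only "the" and "in" as examples.
STOPWORDS = {"the", "in", "into", "on", "a", "an"}

# Function keywords per element kind. The link and textbox entries follow the
# examples in the text; the button entry and "textbox" keyword are assumptions.
KEYWORDS = {
    "link": {"click", "link"},
    "button": {"click", "button"},
    "textbox": {"enter", "field", "textbox"},
}


@dataclass
class Element:
    kind: str          # "link", "button", or "textbox"
    description: set   # words from the label, caption, or nearby text


def words(text: str) -> list:
    """Split text into lowercase word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(step_words: list, element: Element) -> int:
    """Count the distinct step words that also appear in the element's bag."""
    bag = KEYWORDS[element.kind] | element.description
    return len(set(step_words) & bag)


def interpret(step: str, elements: list, rng=random):
    """Return (action, target, value); always predicts something if the page has elements."""
    step_words = words(step)
    best = max(score(step_words, e) for e in elements)
    # Ties are broken at random, as described in the text.
    target = rng.choice([e for e in elements if score(step_words, e) == best])
    action = "enter" if target.kind == "textbox" else "click"
    value = None
    if action == "enter":
        # Remove words that matched the element, plus stopwords; the rest is the value.
        known = KEYWORDS[target.kind] | target.description | STOPWORDS
        value = " ".join(w for w in step_words if w not in known)
    return action, target, value
```

Note that `interpret` never rejects a step: even an instruction that shares no words with any element still yields a (randomly chosen) target, which mirrors the always-predict behavior discussed next.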

The design of the keyword-based algorithm caused it to always predict some action, regardless of the input given. Although this approach worked surprisingly well at guessing which command to execute on each page, the user study results below demonstrate that it often produced unpredictable results.

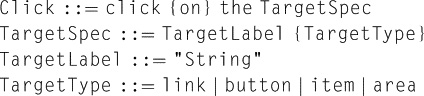

Grammar-based interpreter

The second-generation CoScripter used a grammar-based approach to parse script steps. Script steps are parsed using an LR(1) parser, which parses the textual representation of the step into an object representing a Web command, including the parameters necessary to interpret that command on a given Web page (such as the label and type of the interaction target).

The verb at the beginning of each step indicates the action to perform. Optionally a value can be specified, followed by a description of the target label and an optional target type. Figure 5.3 shows an excerpt of the BNF grammar recognized by this parser, for click actions.
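To make the shape of a parsed command concrete, here is a minimal Python sketch. It deliberately substitutes regular expressions for the LR(1) parser and covers only three step forms taken from the example script earlier in the chapter; the `Command` class, the `parse_step` function, and the exact patterns are assumptions for illustration and do not reproduce the grammar in Figure 5.3. Straight quotes stand in for the typographic quotes of the printed scripts.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Command:
    """A Web command: verb, optional value, target label, optional target type."""
    action: str
    value: Optional[str] = None
    label: Optional[str] = None
    target_type: Optional[str] = None


# One pattern per verb. Each step must match a pattern in full.
_GO = re.compile(r'^go to "(?P<value>[^"]+)"$', re.IGNORECASE)
_CLICK = re.compile(r'^click the "(?P<label>[^"]+)"(?: (?P<type>\w+))?$', re.IGNORECASE)
_ENTER = re.compile(
    r'^enter "(?P<value>[^"]*)" into the "(?P<label>[^"]+)"(?: (?P<type>\w+))?$',
    re.IGNORECASE,
)


def parse_step(step: str) -> Optional[Command]:
    """Parse one step; return None when the step does not obey the grammar."""
    step = step.strip()
    m = _GO.match(step)
    if m:
        return Command("go to", value=m["value"])
    m = _CLICK.match(step)
    if m:
        return Command("click", label=m["label"], target_type=m["type"])
    m = _ENTER.match(step)
    if m:
        return Command("enter", value=m["value"], label=m["label"], target_type=m["type"])
    return None
```

Unlike the keyword-based interpreter, a strict parse of this kind can refuse input it does not understand (here by returning `None`), so a malformed step is reported rather than silently mapped to some action.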

Human-readable labels

CoScripter’s natural language script representation requires specialized algorithms to be able to record user actions and play them back. These algorithms are based on a set of heuristics for accurately generating a human-readable label for interactive widgets on Web pages, and for finding such a widget on a page given the human-readable label.

CoScripter’s labeling heuristics take advantage of accessibility metadata, if available, to identify labels for textboxes and other fields on Web pages. However, many of today’s Web pages are not fully accessible. In those situations, CoScripter incorporates a large library of heuristics that traverse the DOM structure to guess labels for a variety of elements. For example, to find a label for a textbox, we might look for text to the left of the box (represented as children of the textbox’s parents that come before it in the DOM hierarchy), or we might use the textbox’s name or tooltip.

Our algorithm for interpreting a label relative to a given Web page consists of iterating through all candidate widgets of the desired type (e.g., textboxes) and generating the label for each widget. If the generated label matches the target label, we have found the target; otherwise, we keep searching until we have exhausted all the candidates on the current page.
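The lookup loop can be sketched in Python as follows. The `Widget` record and its fields are a hypothetical stand-in for DOM nodes, the fallback order in `generate_label` is only one plausible ordering of the heuristics mentioned above, and the case-insensitive comparison is an assumption; CoScripter’s actual heuristic library is considerably larger.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Widget:
    """Hypothetical flattened view of an interactive DOM node."""
    kind: str                 # e.g. "textbox" or "button"
    aria_label: str = ""      # accessibility metadata, when the page provides it
    preceding_text: str = ""  # text that comes just before the widget in the DOM
    name: str = ""            # the widget's name attribute
    tooltip: str = ""         # the widget's tooltip text


def generate_label(widget: Widget) -> str:
    """Prefer accessibility metadata, then fall back to DOM-based guesses."""
    for candidate in (widget.aria_label, widget.preceding_text, widget.name, widget.tooltip):
        if candidate.strip():
            return candidate.strip()
    return ""


def find_target(widgets: Iterable[Widget], kind: str, label: str) -> Optional[Widget]:
    """Return the first widget of the desired type whose generated label matches."""
    wanted = label.strip().lower()
    for widget in widgets:
        if widget.kind == kind and generate_label(widget).lower() == wanted:
            return widget
    return None  # all candidates on the page were exhausted
```

Because the same `generate_label` function serves both recording and playback in this sketch, a label produced while recording is found again at playback as long as the page has not changed.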

FIGURE 5.3

Excerpt from CoScripter’s grammar.

Some labels may not be unique in the context of a given page, for example, if a page has multiple buttons all labeled “Search”. Once a label has been generated, we check to see whether the same label can also refer to other elements on the page. If this is the case, then the system generates a disambiguation term to differentiate the desired element from the rest. The simplest form of disambiguator, which is implemented in the current version of CoScripter, is an ordinal (e.g., the second “Search” button). We imagine other forms of disambiguators could be implemented that leverage higher-level semantic features of the Web page, such as section headings (e.g., the “Search” button in the “Books” section); however, these more complex disambiguators are an item for future work.
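A minimal Python sketch of the ordinal disambiguator is shown below. The function names and the representation of a page as a list of labels in document order are assumptions made for the example.

```python
ORDINAL_WORDS = ["first", "second", "third", "fourth", "fifth"]


def ordinal(n: int) -> str:
    """Return an ordinal for a 1-based position, e.g. 2 -> "second", 11 -> "11th"."""
    if 1 <= n <= len(ORDINAL_WORDS):
        return ORDINAL_WORDS[n - 1]
    if 10 <= n % 100 <= 20:
        suffix = "th"
    else:
        suffix = {1: "st", 2: "nd", 3: "rd"}.get(n % 10, "th")
    return f"{n}{suffix}"


def describe_target(labels: list, index: int, target_type: str) -> str:
    """Describe the widget at `index`, adding an ordinal only if its label is ambiguous.

    `labels` holds the generated labels of all widgets of this type, in page order.
    """
    label = labels[index]
    same = [i for i, other in enumerate(labels) if other == label]
    if len(same) == 1:
        return f'the "{label}" {target_type}'
    position = same.index(index) + 1
    return f'the {ordinal(position)} "{label}" {target_type}'
```

With buttons labeled “Search”, “Help”, and “Search” in page order, the third button would be described as the second “Search” button, while the “Help” button needs no ordinal.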

Variables and the personal database

CoScripter provides a simple mechanism to generalize scripts, using a feature we call the “personal database.” Most scripts include some steps that enter data into forms. This data is often user-specific, such as a name or a phone number. A script that always entered “Joe Smith” when prompted for a name would not be useful to many people besides Joe. To make scripts reusable across users, we wanted to provide an easy mechanism for script authors to generalize their scripts and abstract out variables that should be user-dependent, such as names and phone numbers.

Variables are represented using a small extension to the ClearScript language. Anywhere a literal text string can appear, we also support the use of the keyword “your” followed by a variable name reference. For example:

• Enter your “full name” into the “Search” textbox

Steps that include variables are recorded at script creation time if the corresponding variable appears in the script author’s personal database. The personal database is a text file containing a list of name-value pairs (lower left corner of Figure 5.2). Each name–value pair is interpreted as the name of a variable and its value, so that, for example, the text “full name = Mary Jones” would be interpreted as a variable named “full name” with value “Mary Jones”. During recording, if the user enters into a form field a value that matches the value of one of the personal database variables, the system automatically records a step containing a variable as shown above, rather than recording the user’s literal action. Users can also add variables to steps post hoc by editing the text of the script and changing the literal string to a variable reference.

Variables are automatically dereferenced at runtime in order to insert each user’s personal information into the script during execution. When a variable reference is encountered, the personal database is queried for a variable with the specified name. If found, the variable’s value will be substituted as the desired value for that script step. If not found, script execution will pause and ask the user to manually enter the desired information (either directly into the Web form, or into the personal database, where it will be picked up during the next execution).
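The personal database mechanism can be sketched in a few lines of Python. The function names, the case-insensitive handling of variable names, and the choice to signal a missing variable by returning `None` are assumptions for illustration; the sketch uses straight quotes in place of typographic ones.

```python
import re
from typing import Dict, Optional

# Matches a variable reference such as: your "full name"
_VARIABLE = re.compile(r'your "([^"]+)"')


def parse_personal_db(text: str) -> Dict[str, str]:
    """Read "name = value" lines into a dictionary; other lines are ignored."""
    db = {}
    for line in text.splitlines():
        if "=" not in line:
            continue
        name, value = line.split("=", 1)
        db[name.strip().lower()] = value.strip()
    return db


def generalize(step: str, db: Dict[str, str]) -> str:
    """Recording time: replace an entered literal with a variable reference if it matches."""
    for name, value in db.items():
        prefix = f'enter "{value}" '
        if step.startswith(prefix):
            return f'enter your "{name}" ' + step[len(prefix):]
    return step


def dereference(step: str, db: Dict[str, str]) -> Optional[str]:
    """Run time: substitute values for variables, or return None if one is missing.

    A None result stands for "pause and ask the user for the information".
    """
    if any(name.lower() not in db for name in _VARIABLE.findall(step)):
        return None
    return _VARIABLE.sub(lambda m: '"' + db[m.group(1).lower()] + '"', step)
```

Run against a database containing the line `full name = Mary Jones`, `generalize` turns a recorded literal entry of that name into a `your "full name"` step, and `dereference` turns it back into a literal step for whichever user runs the script.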

Debugging and mixed-initiative interaction

CoScripter supports a variety of interaction styles for using scripts. The basic interaction is to step through a script, line by line. Before each step, CoScripter highlights what it is about to do; when the “Step” button is clicked, it takes that action and advances to the next step. Scripts can also run to completion; steps are automatically advanced until the end of the script is reached, or until a step is reached that either requires user input, or triggers an error.
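The run-to-completion behavior amounts to a loop with two early exits. The following Python sketch assumes a hypothetical `perform` callback that executes one step and signals problems through two illustrative exception types; none of these names come from CoScripter.

```python
from typing import Callable, List, Tuple


class NeedsUserInput(Exception):
    """Raised when a step cannot proceed without information from the user."""


class StepFailed(Exception):
    """Raised when a step triggers an error, e.g. its target is not on the page."""


def run_to_completion(steps: List[str], perform: Callable[[str], None]) -> Tuple[int, str]:
    """Advance through the script automatically.

    Returns the index of the step where execution stopped and the reason:
    "done", "needs input", or "error".
    """
    for index, step in enumerate(steps):
        try:
            perform(step)
        except NeedsUserInput:
            return index, "needs input"
        except StepFailed:
            return index, "error"
    return len(steps), "done"
```

Returning the index of the stopping point lets the caller resume from that step once the user has supplied the missing input or fixed the problem.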

In addition to these basic modes, CoScripter also supports a mixed-initiative mode of interaction. The mixed-initiative mode helps people learn to use scripts. Instead of allowing CoScripter to perform script steps on the user’s behalf, the user may perform actions herself. She may either deviate from the script entirely, or may choose to follow the instructions described by the script. If she follows the instructions and manually performs an action recommended by CoScripter as the next step (e.g., clicking on the button directly in the Web page, rather than on CoScripter’s “Step” button), then CoScripter will detect that she has performed the recommended script step and advance to the following step.

CoScripter can be used in this way as a teaching tool, to instruct users on how to operate a user interface. CoScripter’s green page highlighting helps users identify which targets should be clicked on, which can be extremely useful if a page is complex and targets are difficult to find in a visual scan. After the user interacts with the target, CoScripter advances to the next step and highlights the next target. In this way, a user can learn how to perform a task using CoScripter as an active guide.

Mixed-initiative interaction is also useful for debugging or on-the-fly customization of scripts. During script execution, a user may choose to deviate from the script by performing actions directly on the Web page itself. Users can use this feature to customize the script’s execution by overriding what the script says to do, and taking their own action instead. For example, if the script assumes the user is already logged in, but the user realizes that she needs to log in before proceeding with the script, she can deviate from the script by logging herself in, then resume the script where it left off.
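One way to picture mixed-initiative stepping is as a session object that advances either when the “Step” button is pressed or when the user’s own action matches the recommended step. The Python sketch below assumes that a user action is detected by describing it as a step (as the recorder would) and comparing that text with the current step; this matching strategy and all names are assumptions, since the text does not specify how the match is made.

```python
from typing import Callable, List, Optional


def _normalize(step: str) -> str:
    """Compare steps without regard to case or extra whitespace."""
    return " ".join(step.lower().split())


class ScriptSession:
    def __init__(self, steps: List[str]):
        self.steps = list(steps)
        self.position = 0  # index of the next step to perform

    def current_step(self) -> Optional[str]:
        if self.position < len(self.steps):
            return self.steps[self.position]
        return None  # the script has finished

    def press_step_button(self, perform: Callable[[str], None]) -> None:
        """System initiative: CoScripter performs the highlighted step."""
        step = self.current_step()
        if step is not None:
            perform(step)
            self.position += 1

    def observe_user_action(self, described_as: str) -> bool:
        """User initiative: advance only if the user did the recommended step.

        Returns False for a deviation, leaving the script where it was so the
        user can resume it later.
        """
        step = self.current_step()
        if step is not None and _normalize(described_as) == _normalize(step):
            self.position += 1
            return True
        return False
```

In the login example above, the user’s manual login would be observed as a deviation and leave the position unchanged, so the script resumes from the same step afterward.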

EVALUATING COSCRIPTER’S EFFECTIVENESS

We report on two studies that measure CoScripter’s effectiveness at facilitating knowledge sharing for Web-based tasks across the enterprise. For our first study, we interviewed 18 employees of a large corporation to understand what business processes were important in their jobs, and to identify existing practices and needs for learning and sharing these processes.

In a second study, we deployed CoScripter in a large organization for a period of several months. Based on usage log analysis and interviews with prominent users, we were able to determine how well CoScripter supported the needs discovered in the first study, and learn how users were adapting the tool to fit their usage patterns. We also identified opportunities for future development.

STUDY 1: UNDERSTANDING USER NEEDS

Participants

We recruited 18 employees of the large corporation in which CoScripter was deployed. Since our goal was to understand business process use and sharing, we contacted employees who were familiar with the organization’s business processes and for whom procedures were a substantial part of their everyday work. Our participants had worked at the corporation for an average of 19.4 years (ranging from a few weeks to 31 years, with a median of 24 years). Twelve participants were female. Seven participants served as assistants to managers, either administrative or technical; six held technical positions such as engineers and system administrators; three were managers; and two held human resource positions. Participants’ technical inclination ranged from engineers and system administrators on the high end to administrative assistants on the low end. All but two worked at the same site within the organization.

Method

We met our participants in their offices. Our interviews were semistructured; we asked participants about their daily jobs, directing them to discuss processes they do both frequently and infrequently. We prompted participants to demonstrate how they carry out these procedures, and probed to determine how they obtained the knowledge to perform them and their practices for sharing them. Sessions lasted approximately one hour and were video-recorded with participants’ permission (only one participant declined to be recorded).

Results

We analyzed data collected in the study by carefully examining the materials: video-recordings, their transcripts, and field notes. We coded the materials, marking points and themes that referred to our exploratory research goals: (1) common, important business processes; and (2) practices and needs for learning and sharing processes.

Note that with our study method, we did not examine the full spectrum of the interviewees’ practices, but only those that they chose to talk about and demonstrate. Nonetheless, for some of the findings we present quantified results based on the coded data. In the rest of this chapter, wherever a reference is made to a specific participant, they are identified by a code consisting of two letters and a number.

What processes do participants do?

We asked our participants to describe business processes they perform both frequently and infrequently. The tasks they described included Web-based processes, other online processes (e.g., processes involving company-specific tools, email, and calendars), and non-computer-based processes. Given that CoScripter is limited to a Firefox Web browser, we focused on the details of Web-based tasks, but it is clear that many business processes take place outside a browser.

We found that there was a significant amount of overlap in the processes participants followed. Seventeen participants discussed processes that were mentioned by at least one other participant. Further, we found that a core set of processes was performed by many participants. For example, 14 participants described their interactions with the online expense reimbursement system. Some other frequently mentioned processes include making travel arrangements in an online travel reservation system, searching for and reserving conference rooms, and purchasing items in the procurement system. These findings show that there exists a common base of processes that many employees are responsible for performing.

Despite a common base of processes, we observed considerable personal variation, both within a single process and across the processes participants performed. A common cause for variation within a single process was that the exact input values to online tools were often different for each person or situation. For example, DS1 typically travels to a specific destination, whereas LH2 flies to many different destinations. We observed variations like these for all participants. Secondly, there were a number of processes that were used by only a small number of people. Eleven participants used Web-based processes not mentioned by others. For instance, TD1, a human resource professional, was the only participant to mention using an online system for calculating salary base payments. These findings suggest that any process automation solution would need to enable personalization for each employee’s particular needs.

Our participants referred to their processes using various qualities, including familiarity, complexity, frequency, involvement, etc. Some of these qualities, such as complexity, were dependent on the task. For example, purchasing items on the company’s procurement system was a challenging task for most participants. Other qualities, such as familiarity and frequency, varied with the user. For example, when demonstrating the use of the online travel system, we saw CP1, a frequent user, going smoothly through steps which DM1 struggled with and could not remember well. We observed that tasks that were frequent or hard-to-remember for a user may be particularly amenable to automation.

Frequent processes. Participants talked about 26 different processes they performed frequently, considering them tedious and time-consuming. For example, JC1 said: “[I] pay my stupid networking bill through procurement, and it’s the same values every month, nothing ever changes.” Automation of frequent processes could benefit users by speeding up the execution of the task.

Hard-to-remember processes. At least eight participants mentioned processes they found hard to remember. We observed two factors that affected procedure recall: its complexity and its frequency (though this alignment was not absolute). In general, tasks completed infrequently, or which required the entry of arcane values, were often considered hard to remember. For example, this company’s procurement system required the entry of “major codes” and “minor codes” to complete a procurement request. One user of that system said, “It’s not so straightforward, so I always have to contact my assistant, who contacts someone in finance to tell me these are the right codes that you should be using.” Alternatively, although AB1 frequently needed to order business cards for new employees, it involved filling out multiple data fields she found hard to remember. Automation could benefit users of hard-to-remember tasks by relieving the need to memorize their steps.

How do participants share knowledge?

An important goal of our interviews was to develop an understanding of the sharing practices that exist for procedural knowledge. Thus, we asked participants how they learned to perform important processes, how they captured their procedural knowledge, and how and with whom they shared their own procedural knowledge.

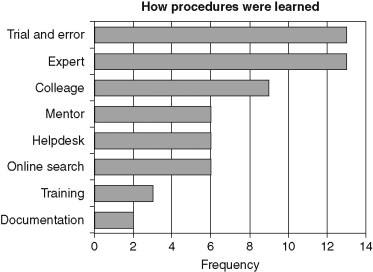

Learning. Participants listed a variety of ways by which they learned procedures, most of them listing more than one approach. Figure 5.4 shows the different ways people learned procedures. Note that the categories are not mutually exclusive; rather, the boundaries between contacting an expert, a colleague, and a mentor were often blurred. For example, KR1 needed help with a particular system and mentioned contacting her colleague from next door, who was also an expert with the system. Participants often said they would start with one learning approach and move to another if they were unsuccessful. Interestingly, although each participant had developed a network of contacts from which to learn, they still used trial and error as a primary way of getting through procedures: 13 out of 18 participants mentioned this approach for obtaining how-to knowledge. This finding indicates that learning new procedures can be difficult, and that people rely largely on trial and error.

FIGURE 5.4

Frequency of ways participants learned new processes.

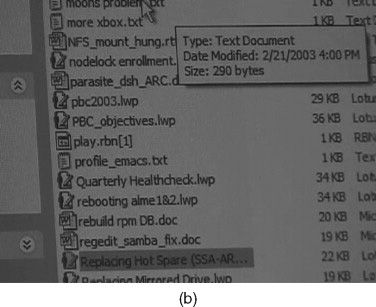

To maintain the knowledge they had acquired, 15 out of 18 participants kept or consulted private and/or public repositories. For the private repositories, participants kept bookmarks in their browsers as pointers for procedures, text files with “copy-paste” chunks of instructions on their computer desktops, emails with lists of instructions, as well as physical binders, folders, and cork-boards with printouts of how-to instructions. Figure 5.5 shows sample personal repositories that users created to remember how to perform tasks.

One participant noted an important problem with respect to capturing procedural knowledge: “Writing instructions can be pretty tedious. So, if you could automatically record a screen movie or something, that would make it easier to [capture] some of the instructions. It would be easy to get screenshots instead of having to type the stories.” This feedback indicates that an automatic procedure recording mechanism would ease the burden of capturing how-to knowledge (for personal or public use).

Sharing. Eleven participants reported that they maintain public repositories of processes and “best practices” they considered useful for their community of practice or department. However, though these repositories were often made available to others, others did not frequently consult them. DS2 said, “I developed this, and I sent a link to everyone. People still come to me, and so I tell them: well you know it is posted, but let me tell you.” Having knowledge spread out across multiple repositories also makes it harder to find. In fact, seven participants reported that they had resorted to more proactive sharing methods, such as sending colleagues useful procedures via email.

Our results show that experts clearly seek to share their how-to knowledge within the company. Nonetheless, we observe that sharing is time-consuming for experts and shared repositories are seldom used by learners, suggesting that sharing could be bolstered by new mechanisms for distributing procedural knowledge.

FIGURE 5.5

Ways participants maintain their procedural knowledge: a physical binder with printouts of emails (a); a folder named “Cookbook” with useful procedures and scripts (b).

SUMMARY

Our initial study has shown that there is a need for tools that support the automation and sharing of how-to knowledge in the enterprise. We observed a core set of processes used by many participants, as well as less-common processes. Processes that participants used frequently were considered routine and tedious, whereas others were considered hard to remember. Automating such procedures could accelerate frequent procedures and overcome the problem of recalling hard-to-remember tasks. Our data also suggest that existing solutions do not adequately support the needs of people learning new processes. Despite rich repositories and social ties with experts, mentors, and colleagues, people habitually apply trial and error in learning how to perform their tasks. New mechanisms are needed for collecting procedural knowledge to help people find and learn from it.

We also found that people who were sources of how-to knowledge needed better ways for capturing and sharing their knowledge. These people were overloaded by writing lengthy instructions, maintaining repositories with “best practices,” and responding to requests from others. Also, distribution of their knowledge was restricted due to limited use of repositories and bounds on the time they could spend helping others. As such, automating the creation and sharing of instructions could assist experts in providing their knowledge to their community and colleagues.

STUDY 2: REAL-WORLD USAGE

In the second study, we analyzed usage logs and interviewed users to determine how well CoScripter supported the needs and practices identified in the first study. The purpose of this study was threefold: (1) determine how well CoScripter supported the user needs discovered in Study 1, (2) learn how users had adapted CoScripter to their needs, and (3) uncover outstanding problems to guide future CoScripter development.

Log analysis: recorded user activity

CoScripter was available for use within the IBM corporation starting from November 2006 through the time of this research (September 2007). Usage logs provided a broad overview of how CoScripter had been used inside the company, while the interviews in the following section provided more in-depth examples of use. In this section we present an initial analysis of quantitative usage, with a content analysis left to future work. The data reported here excludes the activities of CoScripter developers.

Script usage patterns

Users were able to view scripts on the CoScripter repository anonymously. Registration was only required to create, modify, or run scripts. Of the 1200 users who registered, 601 went on to try out the system. A smaller subset of those became regular users.

We defined active users as people who have run scripts at least five times with CoScripter, used it for a week or more, and used it within the past two months. We identified 54 users (9% of 601 users) as active users. These users, on average, created 2.1 scripts, ran 5.4 distinct scripts, ran scripts 28.6 times total, and ran a script once every 4.5 days. Although 9% may seem to be a relatively low fraction, we were impressed by the fact that 54 people voluntarily adopted CoScripter and derived enough value from it to make it a part of their work practices.

We defined past users as those who were active users in the past, but had not used CoScripter in the past two months. This category included 43 users (7%). Finally, we defined experimenters as those who tried CoScripter without becoming active users, which included 504 users (84%). The logs suggested that automating frequent tasks was a common use, with 23 scripts run 10 or more times by single users at a moderate interval (ranging from every day to twice per month).
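The three user categories can be restated as a small classification function. The Python sketch below is an interpretation of the definitions above, not the analysis code used in the study: it assumes that “used it for a week or more” means at least seven days between a user’s first and last script run, and that “two months” means 60 days. The function name and the input format (a list of run dates per user) are likewise assumptions.

```python
from datetime import date, timedelta
from typing import List

MIN_RUNS = 5
MIN_SPAN = timedelta(days=7)        # assumption: "a week or more" of use
RECENT_WINDOW = timedelta(days=60)  # assumption: "the past two months"


def classify_user(run_dates: List[date], today: date) -> str:
    """Classify a user who has tried the system as active, past, or experimenter."""
    if not run_dates:
        return "experimenter"
    runs = sorted(run_dates)
    engaged = len(runs) >= MIN_RUNS and (runs[-1] - runs[0]) >= MIN_SPAN
    recent = (today - runs[-1]) <= RECENT_WINDOW
    if engaged and recent:
        return "active"
    if engaged:
        return "past"
    return "experimenter"


def share(count: int, total: int = 601) -> int:
    """Percentage of the users who tried the system, rounded to a whole number."""
    return round(100 * count / total)
```

As a check on the reported figures, the three groups sum to the 601 users who tried the system (54 + 43 + 504), and `share` reproduces the rounded percentages of 9%, 7%, and 84%.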

Collaborating over scripts

One of the goals of CoScripter is to support sharing of how-to knowledge. The logs implied that sharing was relatively common: 24% of 307 user-created scripts were run by two or more different users, and 5% were run by six or more users. People often ran scripts created by others: 465 (78%) of the user population ran scripts they did not create, running 2.3 scripts created by others on average. There is also evidence that users shared knowledge of infrequent processes: we found 16 scripts that automated known business processes within our company (e.g., updating emergency contact info), that were run once or twice each by more than ten different users.
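A sharing measure of this kind can be derived from a run log by counting distinct users per script. The sketch below assumes the log has been reduced to `(user_id, script_id)` pairs; that representation, the function name, and the toy data are illustrative assumptions rather than the format or results of the actual study.

```python
from collections import defaultdict


def fraction_run_by_at_least(runs, total_scripts, min_users):
    """Fraction of all scripts that were run by at least `min_users` distinct users.

    runs: iterable of (user_id, script_id) pairs, one per script run (assumed format).
    total_scripts: number of scripts in the repository, including never-run scripts.
    """
    users_per_script = defaultdict(set)
    for user_id, script_id in runs:
        users_per_script[script_id].add(user_id)
    widely_run = sum(1 for users in users_per_script.values()
                     if len(users) >= min_users)
    return widely_run / total_scripts


# Toy data: four scripts exist, one of which was never run.
toy_runs = [
    ("ann", "book-room"), ("ann", "book-room"), ("bob", "book-room"),
    ("ann", "check-voicemail"),
    ("cat", "update-contact"), ("dan", "update-contact"), ("bob", "update-contact"),
]
two_or_more = fraction_run_by_at_least(toy_runs, total_scripts=4, min_users=2)
three_or_more = fraction_run_by_at_least(toy_runs, total_scripts=4, min_users=3)
```

Repeated runs by the same user are counted once per script, so a script that one person runs daily does not register as shared.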

In addition to the ability to run others’ scripts, CoScripter supports four other collaborative features: editing others’ scripts, end-user rating of scripts, tagging of scripts, and adding freeform comments to scripts. We found little use of these collaborative features: fewer than 10% of the scripts were edited by others, rated, tagged, or commented on. Further research is needed to determine whether and how these features can be made more valuable in a business context.

Email survey of lapsed users

To learn why employees stopped using CoScripter, we sent a short email survey to all the experimenters and past users, noting their lack of recent use and asking for reasons that CoScripter might not have met their needs. Thirty people replied, and 23 gave one or more reasons related to CoScripter. Of the topics mentioned, ten people described reliability problems where CoScripter did not work consistently, or did not handle particular Web page features (e.g., popup windows and image buttons); five people said their tasks required advanced features not supported in CoScripter (most commonly requested were parameters, iteration, and branching); three people reported problems coordinating mixed-initiative scripts, where the user and CoScripter alternate actions; and two had privacy concerns (i.e., they did not like scripts being public by default). Finally, seven people reported that their jobs did not involve enough tasks suitable for automation using CoScripter.

INTERVIEWS WITH COSCRIPTER USERS

As part of Study 2, we explored the actual usage of CoScripter by conducting a set of interviews with users who had made CoScripter part of their work practices.

Participants

Based on usage log analysis, we chose people who had used one or more scripts at least 30 times. We also selected a few people who exhibited interesting behavior (e.g., editing other people’s scripts or sharing a script with others). We contacted 14 people who met these usage criteria; 8 agreed to an interview.

Seven interviewees were active CoScripter users; one had used the tool for 5 months and stopped 3.5 months before the interview. Participants had used CoScripter for an average of roughly 4 months (minimum of 1, maximum of 9). They had discovered the tool either via email from a co-worker or on an internal Web site promoting experimental tools for early adopters. Seven participants were male, and they worked in 8 sites across 4 countries, with an average of 10 years tenure at the company. We interviewed four managers, one communications manager, one IT specialist, one administrative services representative, and one technical assistant to a manager/software engineer. Overall, our participants were technology savvy, and five of them had created macros or scripts before CoScripter. However, only two of them claimed software development expertise.

Method

We conducted all but one of our interviews over the phone, because participants were geographically dispersed, using NetMeeting to view the participant’s Firefox browser. Interviews lasted between 30 and 60 minutes and were audio-recorded with participants’ permission in all cases but one.

We conducted a semistructured interview based on questions that were designed to gather data about how participants used CoScripter, why they used it, and what problems they had using the tool. In addition to the predetermined questions, we probed additional topics that arose during the interview, such as script privacy and usability. We also asked participants to run and edit their scripts so we could see how they interacted with CoScripter.

Results

Automating frequent or hard-to-remember tasks

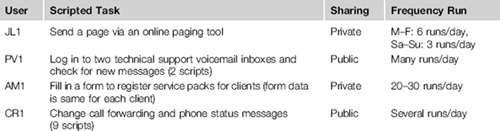

Four participants described CoScripter as very useful for automating one or more frequent, routine tasks. Each person had different tasks to automate, highlighting various benefits of CoScripter as an automation tool. Table 5.1 lists the most frequent routine tasks that were automated.

Those four participants described instances where CoScripter saved them time and reduced their effort. For example, CR1 said, “Two benefits: one, save me time – click on a button and it happens – two, I wouldn’t have to worry about remembering what the address is of the messaging system here.” PV1 also appreciated reduced effort to check voicemail inboxes: “I set up [CoScripter] to with one click get onto the message center.” AM1 used his service pack registration script for a similar reason, saying it was “really attractive not to have to [enter the details] for every single service pack I had to register.” JL1 runs his script many times during non-business hours. He would not be able to do this without some script (unless, as he said, “I didn’t want to sleep”).

These participants, none of whom have significant software development experience, also demonstrate an important benefit of CoScripter: it lowered the barrier for automation, requiring minimal programming language skills to use.

Though most participants interacted with CoScripter via the sidebar or the wiki, JL1 and PV1 invoked CoScripter in unexpected ways. JL1 used the Windows Task Scheduler to automatically run his script periodically in the background. Thus, after creating his script, JL1 had no contact with the CoScripter user interface. PV1 created a bookmark to automatically run each of his two scripts, and added them to his Firefox Bookmarks Toolbar. To run the scripts, he simply clicked on the bookmarks – within a few seconds he could check both voicemail inboxes.

In addition to automating frequent tasks, CoScripter acted as a memory aid for hard-to-remember tasks. For example, DG1 created scripts for two new processes he had to do for his job. Both scripts used an online tool to create and send reports related to customer care. Creating the report involved a number of complicated steps, and CoScripter made them easier to remember:

[CoScripter] meant I didn’t have to remember each step. There were probably six or seven distinct steps when you have to choose drop-downs or checkboxes. It meant I didn’t have to remember what to do on each step. Another benefit is I had to choose eight different checkboxes from a list of about forty. To always scan the list and find those eight that I need was a big pain, whereas [CoScripter] finds them in a second. It was very useful for that.

After using CoScripter to execute these scripts repeatedly using the step-by-step play mode (at least 28 times for one script, 9 times for the other) for five months, DG1 stopped using them. He explained, “It got to the point that I memorized the script, so I stopped using it.” This highlights an interesting use case for CoScripter: helping a user memorize a process that they want to eventually perform on their own.

Participant LH1 found CoScripter useful in supporting hard-to-remember tasks, by searching the wiki and finding scripts others had already recorded: “I found the voicemail one, it’s really useful because I received a long email instruction on how to check my voicemail. It was too long so I didn’t read it and after a while I had several voicemails and then I found the [CoScripter] script. It’s really useful.”

Automation limitations and issues. Despite generally positive feedback, participants cited two main issues that limited their use of CoScripter as an automation tool, both of which were named by lapsed users who were surveyed by email: reliability problems and a need for more advanced features.

Four out of eight participants noted experiencing problems with the reliability and robustness of CoScripter’s automation capability. All of these participants reported problems related to CoScripter misinterpreting instructions. Misinterpretation was a direct result of the original keyword-based interpreter (Little et al., 2007), which did not enforce any particular syntax, but instead did a best-effort interpretation of arbitrary text. For example, one user reported running a script that clicked the wrong links and navigated to unpredictable pages (e.g., an instruction to “click the Health link,” when interpreted on a page that does not have a link labeled “Health”, would instead click a random link on the page). Another user reported that his script could not find a textbox named in one of the instructions and paused execution. Misinterpretation problems were exacerbated by the dynamic and unpredictable nature of the Web, where links and interface elements can be renamed or moved around in the site.
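The failure mode described here can be illustrated by contrasting a best-effort matcher with a strict one. This is a deliberately simplified sketch, not the keyword-based algorithm of Little et al. (2007): the word-overlap scoring and both function names are assumptions made for illustration.

```python
from typing import List, Optional


def best_effort_link(instruction: str, link_labels: List[str]) -> str:
    """Always pick some link: the one sharing the most words with the instruction.

    Illustrative scoring only; it never refuses, even when nothing really matches.
    """
    words = set(instruction.lower().split())

    def overlap(label: str) -> int:
        return len(words & set(label.lower().split()))

    return max(link_labels, key=overlap)


def strict_link(target: str, link_labels: List[str]) -> Optional[str]:
    """Return the link whose label equals the target, or None if there is none."""
    wanted = target.strip().lower()
    for label in link_labels:
        if label.strip().lower() == wanted:
            return label
    return None


page_without_health = ["Benefits link", "Travel", "Payroll"]
guessed = best_effort_link("click the Health link", page_without_health)
refused = strict_link("Health", page_without_health)
```

On a page with no "Health" link, the best-effort matcher still returns a link (here the one that happens to contain the word "link"), whereas the strict matcher returns `None`, which gives the caller a chance to pause and ask the user instead of navigating somewhere unintended.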

Five users reported wanting more advanced programming language features, to properly automate their tasks. In particular, participants expressed a desire for automatic script restart after mixed-initiative user input, iteration, script debugging help, and conditionals.

Sharing how-to knowledge

Our interviews revealed some of the different ways CoScripter has been used to share how-to knowledge. These included teaching tasks to others, promoting information sources and teaching people how to access them, and learning how to use CoScripter itself by examining or repurposing other people’s scripts.

Participants used CoScripter to teach other people how to complete tasks, but in very different ways. The first person, LH1, recorded scripts to teach her manager how to do several tasks (e.g., creating a blog). LH1 then emailed her manager a link to the CoScripter script, and the manager used CoScripter to complete the task. The second person, DG1, managed support representatives and frequently sent them how-to information about various topics, from generating reports online to using an online vacation planner. DG1 used CoScripter to record how to do the tasks, and then copied and pasted CoScripter’s textual step-by-step instructions into emails to his colleagues.

DG1’s use of CoScripter highlights a benefit of its human-readable approach to scripting. The scripts recorded by CoScripter are clearly readable, since DG1’s colleagues were able to use them as textual instructions.

Another participant, MW1, a communications manager, used CoScripter to promote information that is relevant to employees in a large department and to teach them how to access that information. He created one script for adding a news feed to employees’ custom intranet profiles and a second script to make that feed accessible from their intranet homepage. He promoted these scripts to 7000 employees by posting a notice on a departmental homepage and by sending links to the scripts in an email. Before using CoScripter, MW1 said he did not have a good method for sharing this how-to information with a wide audience. With such a large audience, however, correctly generalizing the script was a challenge; MW1 said he spent 2 to 3 hours getting the scripts to work for others. Still, MW1 was so pleased that he evangelized the tool to one of his colleagues, who has since used CoScripter to share similar how-to knowledge with a department of 5000 employees.

Participants used CoScripter for a third sharing purpose: three people talked about using other people’s scripts to learn how to create their own scripts. For example, PV1 told us that his first bug-free script was created by duplicating and then editing someone else’s script.

Sharing limitations and issues. Though some participants found CoScripter a valuable tool for sharing, they noted limitations due to a lack of critical mass, and others raised issues about the sharing model, generalizability, and privacy, which were barriers to sharing.

Sharing has been limited by the narrow user base (so far) within the enterprise. As CR1 said, critical mass affected his sharing (“I haven’t shared [this script] with anyone else. But there are other people I would share it with if they were CoScripter users.”), and his learning (“I think I would get a lot more value out of it if other people were using it. More sharing, more ideas. Yet most of the people I work with are not early adopters. These people wouldn’t recognize the difference between Firefox and Internet Explorer.”). DG1, who sent CoScripter-generated instructions to his co-workers via email, could have more easily shared the scripts if his coworkers had been CoScripter users.

We also saw problems when users misunderstood the wiki-style sharing model. For example, PV1 edited and modified another person’s script to use a different online tool. He did not realize that his edits would affect the other person’s script until later: “I started out by editing someone else’s script and messing them up. So I had to modify them so they were back to what they were before they were messed up, and then I made copies.” A second participant, PC1, had deleted all the contents of a script and did not realize this until the script was discussed in the interview: “That was an accident. I didn’t know that [I deleted it]. When I look at those scripts, I don’t realize that they are public and that I can blow them away. They come up [on the sidebar] and they look like examples.”

Easy and effective ways to generalize scripts so that many people can use them are essential to sharing. Though users are able to generalize CoScripter scripts, participants told us it is not yet an easy process. For example, MW1 created a script for 7000 people, but spent a few hours getting it to work correctly for others. Also, although CoScripter’s personal database feature allows users to write scripts that substitute user-specified values at runtime, not all processes can be generalized using this mechanism. For example, PV1 copied another person’s script for logging into voicemail in one country and modified it to log in to voicemail in his country, because each country uses a different Web application.
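The idea of substituting user-specified values at runtime can be sketched as a lookup against a per-user key/value store. The `{key}` placeholder notation, the function name, and the sample steps below are hypothetical and chosen only for illustration; they are not CoScripter's actual script syntax or implementation.

```python
import re

# Hypothetical placeholder notation: {key} names an entry in the personal database.
PLACEHOLDER = re.compile(r"\{([^{}]+)\}")


def personalize(steps, personal_db):
    """Return a copy of the shared steps with placeholders filled from one user's data."""
    def lookup(match):
        key = match.group(1).strip().lower()
        if key not in personal_db:
            # Fail loudly rather than run a step with a missing value.
            raise KeyError(f"no personal database entry for {key!r}")
        return personal_db[key]

    return [PLACEHOLDER.sub(lookup, step) for step in steps]


shared_steps = [
    'go to "intranet.example.com/voicemail"',
    'enter {mailbox number} into the "Mailbox" textbox',
    'click the "Log in" button',
]
alice_db = {"mailbox number": "5550100"}
alice_steps = personalize(shared_steps, alice_db)
```

The shared script itself never contains the private value, which is why the same steps can be public. As the voicemail example shows, though, substitution only varies the values: when the Web application itself differs between users, a separate script is still needed.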

Finally, sharing was further limited when participants were concerned about revealing private information. Three participants created private scripts to protect private information they had embedded in the scripts. AC1 said: “There is some private information here – my team liaison’s telephone number, things like that. I don’t know, I just felt like making it private. It’s not really private, but I just didn’t feel like making it public.” Others used the personal database variables to store private information and made their scripts public. This privacy mechanism was important to them: “Without the personal variables, I would not be able to use the product [CoScripter]. I have all this confidential information like PIN numbers. It wouldn’t be very wise to put them in the scripts.” However, one participant was wary of the personal variables: “The issue I have with that is that I don’t know where that is stored…If I knew the data was encrypted, yeah.”

Summary

These findings show that, while not perfect, CoScripter is beginning to overcome some of the barriers to sharing procedural knowledge uncovered in Study 1. First, CoScripter provides a single public repository of procedural knowledge that our interviewees used (e.g., several participants used scripts created by other people). Second, CoScripter eliminates the tedious task of writing instructions (e.g., DG1 used it to create textual instructions for non-CoScripter users). Third, CoScripter provides mechanisms to generalize instructions to a broad audience so that experts can record their knowledge once for use by many learners (e.g., MW1 generalized his scripts to work for 7000 employees).

GENERAL ISSUES AND FUTURE WORK

By helping users automate and share how-to knowledge in a company, CoScripter is a good starting point for supporting the issues uncovered in Study 1. User feedback, however, highlights several opportunities for improvement. While some of the feedback pointed out usability flaws in our particular implementation, a significant number of comments addressed more general issues related to knowledge sharing systems based on end-user programming. Participants raised two issues that highlight general automation challenges – reliability challenges and the need for advanced features – and four collaboration issues that will need to be addressed by any knowledge sharing system – the sharing model, script generalization, privacy, and critical mass.

One of the most common complaints concerned the need for improved reliability and robustness of the system’s automation capability. These errors affected both users relying on the system to automate repetitive tasks, and those relying on the system to teach them how to complete infrequent tasks. Without correct and consistent execution, users fail to gain trust in the system and adoption is limited.

Users also reported wanting more advanced programming language features, such as iteration, conditionals, and script debugging help, to properly automate their tasks. These requests illustrate a tradeoff between simplicity – allowing novice users to learn the system easily – and a more complex set of features to support the needs of advanced users. For example, running a script that has iterations or conditionals might be akin to using a debugger, which requires significant programming expertise. A challenge for CoScripter or any similar system will be to support the needs of advanced users while enabling simple script creation for the broader user base.

Our studies also raise several collaboration issues that must be addressed by any PBD-based knowledge sharing tool: the sharing model, privacy, script generalization, and critical mass. The wiki-style sharing model was confusing to some users. Users should be able to easily tell who can see a script and who will be affected by their edits, especially given the common base of processes being performed by many employees. A more understandable sharing model could also help address privacy concerns, as would a more fine-grained access control mechanism that enables users to share scripts with an explicit list of authorized persons. Finer-grained privacy controls might encourage more users to share scripts with those who have a business need to view them. For generalization, we learned that personal database variables were a good start, but we are uncertain as to what degree this solution appropriately supports users with no familiarity with variables and other programming concepts. One way to better support generalization and personalization could be to enable users to record different versions of a script for use in different contexts, and automatically redirect potential users to the version targeted for their particular situation. Finally, a small user base limited further use of the system. We hope that solving all the issues above will lower the barriers to adoption and improve our chances of reaching critical mass.
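The proposal to redirect users to the script version targeted at their situation could work roughly as follows. This is a speculative sketch of one possible design, not a feature of CoScripter: the context keys, script names, and the "most specific match wins" rule are all assumptions.

```python
from typing import Dict, List, Optional, Tuple

# Each version pairs its requirements (context key -> required value) with a script name.
Version = Tuple[Dict[str, str], str]


def pick_version(versions: List[Version], context: Dict[str, str]) -> Optional[str]:
    """Pick the version whose requirements all hold in the user's context.

    Assumed rule: among matching versions, the one with the most requirements
    (the most specific) wins; an empty requirement set acts as a generic fallback.
    """
    matching = [(len(required), name) for required, name in versions
                if all(context.get(key) == value for key, value in required.items())]
    if not matching:
        return None
    return max(matching, key=lambda m: m[0])[1]


voicemail_versions = [
    ({}, "voicemail-generic"),
    ({"country": "US"}, "voicemail-us"),
    ({"country": "UK"}, "voicemail-uk"),
]
uk_choice = pick_version(voicemail_versions, {"country": "UK"})
fr_choice = pick_version(voicemail_versions, {"country": "FR"})
```

Under this scheme a user in a country with its own version is routed to it, and everyone else falls back to the generic script; whether such routing would be understandable to users unfamiliar with programming concepts is exactly the open question raised above.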

Finally, one important area for future work is to study the use of CoScripter outside the enterprise. While we conducted the studies in a very large organization with diverse employees and we believe their tasks are representative of knowledge workers as a whole, the results we have obtained may not generalize to users of CoScripter outside the enterprise. CoScripter was made available to the public in August 2007 (see http://coscripter.researchlabs.ibm.com/coscripter), and more research on its use is needed to examine automation and sharing practices in this larger setting.

SUMMARY

In summary, we have presented the CoScripter system, a platform for capturing, sharing, and automating how-to knowledge for Web-based tasks. We have described CoScripter’s user interface and some of its key technical features. The empirical studies we have presented show that CoScripter has made it easier for people to share how-to knowledge inside the enterprise.

We thank Greg Little for the initial inspiration for the CoScripter project and Jeffrey Nichols for invaluable discussions and architectural design.

COSCRIPTER

Intended users: All users

Domain: All Web sites

Description: CoScripter is a system for recording, automating, and sharing browser-based activities. Actions are recorded as easy-to-read text and stored on a public wiki so that anyone can use them.

Example: Look for houses for sale in your area. The user records visiting a real estate Web site and filling in number of bedrooms and maximum price. Then, every few days, the user can run the recorded script with a single click and see what houses are currently available.

Automation: Yes. Mainly used to automate repetitive activities.

Mashups: Possibly. Not its main use. Some support for copying and pasting information between Web sites.

Scripting: Users can record CoScript commands or write them manually in the CoScripter editor.

Natural language: No, although the scripting language is designed to be English-like, with commands such as click the “Submit” button.

Recordability: Yes. Most scripts are created by recording actions in the Web browser.

Inferencing: Heuristics are used to label Web page elements based on nearby text. This technique was invented in the Chickenfoot system.

Sharing: Yes. Scripts are shared on a public wiki, but they can be marked “private.” A personal database allows a shared script to use values particular to the individual running the script.

Comparison to other systems: The scripting language is based on Chickenfoot’s language. CoScripter excels at recording arbitrary actions in the browser in a way that is easily understood by people and is also executable by CoScripter.

Platform: Implemented as an extension to the Mozilla Firefox Web browser and a custom wiki built with Ruby on Rails.

Availability: Freely available at http://coscripter.researchlabs.ibm.com/coscripter. Code also available as open source under the Mozilla Public License.