A Tutorial on Model Selection

Enes Makalic*, Daniel Francis Schmidt* and Abd-Krim Seghouane†, *Centre for M.E.G.A. Epidemiology, The University of Melbourne, Australia, †Department of Electrical and Electronic Engineering, The University of Melbourne, Australia

Abstract

Model selection is a key problem in many areas of science and engineering. Given a data set, model selection involves finding the statistical model that best captures the properties and regularities present in the data. This chapter examines three broad approaches to model selection: (i) minimum distance estimation criteria, (ii) Bayesian statistics, and (iii) model selection by data compression. Model selection in the context of linear regression is used as a running example throughout the chapter

Keywords

Model selection; Maximum likelihood; Akaike information criterion (AIC); Bayesian information criterion (BIC); Minimum message length (MML); Minimum description length (MDL)

1.25.1 Introduction

A common and widespread problem in science and engineering is the determination of a suitable model to describe or characterize an experimental data set. This determination consists of two tasks: (i) the choice of an appropriate model structure, and (ii) the estimation of the associated model parameters. Given the structure or dimension of the model, the task of parameter estimation is generally done by maximum likelihood or least squares procedures. The choice of the dimension of a model is often facilitated by the use of a model selection criterion. The key idea underlying most model selection criteria is the parsimonity principle which says that there should be a tradeoff between data fit and complexity. This chapter examines three broad approaches to model selection commonly applied in the literature: (i) methods that attempt to estimate the distance between the fitted model and the unknown distribution that generated the data (see Section 1.25.2); (ii) Bayesian approaches which use the posterior distribution of the parameters to make probabilistic statements about the plausibility of the fitted models (see Section 1.25.3), and (iii) information theoretic model selection procedures that measure the quality of the fitted models by how well these models compress the data (see Section 1.25.4).

Formally, we observe a sample of n data points ![]() from an unknown probability distribution

from an unknown probability distribution ![]() . The model selection problem is to learn a good approximation to

. The model selection problem is to learn a good approximation to ![]() using only the observed data

using only the observed data ![]() . This problem is intractable unless some assumptions are made about the unknown distribution

. This problem is intractable unless some assumptions are made about the unknown distribution ![]() . The assumption made in this chapter is that

. The assumption made in this chapter is that ![]() can be adequately approximated by one of the distributions from a set of parametric models

can be adequately approximated by one of the distributions from a set of parametric models ![]() where

where ![]() defines the model structure and the parameter vector

defines the model structure and the parameter vector ![]() indexes a particular distribution from the model

indexes a particular distribution from the model ![]() . The dimensionality of

. The dimensionality of ![]() will always be clear from the context. As an example, if we are interested in inferring the order of a polynomial in a linear regression problem, the set

will always be clear from the context. As an example, if we are interested in inferring the order of a polynomial in a linear regression problem, the set ![]() may include first-order, second-order and third-order polynomials. The parameter vector

may include first-order, second-order and third-order polynomials. The parameter vector ![]() then represents the coefficients for a particular polynomial; for example, the intercept and linear term for the first-order model. The model selection problem is to infer an appropriate model structure

then represents the coefficients for a particular polynomial; for example, the intercept and linear term for the first-order model. The model selection problem is to infer an appropriate model structure ![]() and parameter vector

and parameter vector ![]() that provide a good approximation to the data generating distribution

that provide a good approximation to the data generating distribution ![]() .

.

This chapter largely focuses on the inference of the model structure ![]() . A common approach to estimating the parameters

. A common approach to estimating the parameters ![]() for a given model

for a given model ![]() is the method of maximum likelihood. Here, the parameter vector is chosen such that the probability of the observed data

is the method of maximum likelihood. Here, the parameter vector is chosen such that the probability of the observed data ![]() is maximized, that is

is maximized, that is

![]() (25.1)

(25.1)

where ![]() denotes the likelihood of the data under the distribution indexed by

denotes the likelihood of the data under the distribution indexed by ![]() . This is a powerful approach to parameter estimation that possesses many attractive statistical properties [1]. However, it is not without its flaws, and as part of this chapter we will examine an alternative method of parameter estimation that should sometimes be preferred to maximum likelihood (see Section 1.25.4.2). The statistical theory underlying model selection is often abstract and difficult to understand in isolation, and it is helpful to consider these concepts within the context of a practical example. To this end, we have chosen to illustrate the application of the model selection criteria discussed in this chapter to the important and commonly used linear regression model.

. This is a powerful approach to parameter estimation that possesses many attractive statistical properties [1]. However, it is not without its flaws, and as part of this chapter we will examine an alternative method of parameter estimation that should sometimes be preferred to maximum likelihood (see Section 1.25.4.2). The statistical theory underlying model selection is often abstract and difficult to understand in isolation, and it is helpful to consider these concepts within the context of a practical example. To this end, we have chosen to illustrate the application of the model selection criteria discussed in this chapter to the important and commonly used linear regression model.

1.25.1.1 Nested and non-nested sets of candidate models

The structure of the set of candidate models ![]() can have a significant effect on the performance of model selection criteria. There are two general types of candidate model sets: nested sets of candidate models, and non-nested sets of candidate models. Nested sets of candidate models have special properties that make model selection easier and this structure is often assumed in the derivation of model selection criteria, such as in the case of the distance based methods of Section 1.25.2. Let us partition the model set

can have a significant effect on the performance of model selection criteria. There are two general types of candidate model sets: nested sets of candidate models, and non-nested sets of candidate models. Nested sets of candidate models have special properties that make model selection easier and this structure is often assumed in the derivation of model selection criteria, such as in the case of the distance based methods of Section 1.25.2. Let us partition the model set ![]() , where the subsets

, where the subsets ![]() are the set of all candidate models with k free parameters. A candidate set

are the set of all candidate models with k free parameters. A candidate set ![]() is considered nested if: (1) there is only one candidate model with k parameters (

is considered nested if: (1) there is only one candidate model with k parameters (![]() for all k), and (2) models

for all k), and (2) models ![]() with k parameters can exactly represent all distributions indexed by models with less than k parameters. That is, if

with k parameters can exactly represent all distributions indexed by models with less than k parameters. That is, if ![]() is a model with k free parameters, and model

is a model with k free parameters, and model ![]() is a model with l free parameters, where

is a model with l free parameters, where ![]() , then

, then

![]()

A canonical example of a nested model structure is the polynomial order selection problem. Here, a second-order model can exactly represent any first-order model by setting the quadratic term to zero. Similarly, a third-order model can exactly represent any first-order or second-order model by setting the appropriate parameters to zero, and so on. In the non-nested case, there is not necessarily only a single model with k parameters, and there is no requirement that models with more free parameters can exactly represent all simpler models. This can introduce difficulties for some model selection criteria, and it is thus important to be aware of the structure of the set ![]() before choosing a particular criterion to apply. The concept of nested and non-nested model sets will be made clearer in the following linear regression example.

before choosing a particular criterion to apply. The concept of nested and non-nested model sets will be made clearer in the following linear regression example.

1.25.1.2 Example: the linear model

Linear regression is of great importance in engineering and signal processing as it appears in a wide range of problems such as smoothing a signal with polynomials, estimating the number of sinusoids in noisy data and modeling linear dynamic systems. The linear regression model for explaining data ![]() assumes that the means of the observations are a linear combination of

assumes that the means of the observations are a linear combination of ![]() measured variables, or covariates, that is,

measured variables, or covariates, that is,

![]() (25.2)

(25.2)

where ![]() is the full design matrix,

is the full design matrix, ![]() are the parameter coefficients and

are the parameter coefficients and ![]() are independent and identically distributed Gaussian variates

are independent and identically distributed Gaussian variates ![]() . Model selection in the linear regression context arises because it is common to assume that only a subset of the covariates is associated with the observations; that is, only a subset of the parameter vector is non-zero. Let

. Model selection in the linear regression context arises because it is common to assume that only a subset of the covariates is associated with the observations; that is, only a subset of the parameter vector is non-zero. Let ![]() denote an index set determining which of the covariates comprise the design submatrix

denote an index set determining which of the covariates comprise the design submatrix ![]() ; the set of all candidate subsets is denoted by

; the set of all candidate subsets is denoted by ![]() . The linear model indexed by

. The linear model indexed by ![]() is then

is then

![]() (25.3)

(25.3)

where ![]() is the parameter vector of size

is the parameter vector of size ![]() and

and

![]()

The total number of unknown parameters to be estimated for model ![]() , including the noise variance parameter, is

, including the noise variance parameter, is ![]() . The negative log-likelihood for the data

. The negative log-likelihood for the data ![]() is

is

![]() (25.4)

(25.4)

The maximum likelihood estimates for the coefficient ![]() and the noise variance

and the noise variance ![]() are

are

![]() (25.5)

(25.5)

![]() (25.6)

(25.6)

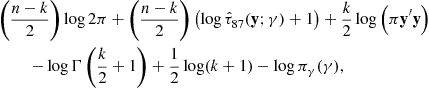

which coincide with the estimates obtained by minimising the sum of squared errors (the least squares estimates). The negative log-likelihood evaluated at the maximum likelihood estimates is given by

![]() (25.7)

(25.7)

The structure of the set ![]() in the context of the linear regression model depends on the nature and interpretation of the covariates. In the case that the covariates are polynomials of increasing order, or sinusoids of increasing frequency, it is often convenient to assume a nested structure on

in the context of the linear regression model depends on the nature and interpretation of the covariates. In the case that the covariates are polynomials of increasing order, or sinusoids of increasing frequency, it is often convenient to assume a nested structure on ![]() . For example, let us assume that the

. For example, let us assume that the ![]() covariates are polynomials such that

covariates are polynomials such that ![]() is the zero-order term (constant),

is the zero-order term (constant), ![]() the linear term,

the linear term, ![]() the quadratic term and

the quadratic term and ![]() the cubic term. To use a model selection criterion to select an appropriate order of polynomial, we can form the set of candidate models

the cubic term. To use a model selection criterion to select an appropriate order of polynomial, we can form the set of candidate models ![]() as

as

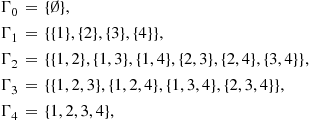

![]()

where

![]()

From this structure it is obvious that there is only one model with k free parameters, for all k, and that models with more parameters can exactly represent all models with less parameters by setting the appropriate coefficients to zero. In contrast, consider the situation in which the covariates have no natural ordering, or that we do not wish to impose an ordering; for example, in the case of polynomial regression, we may wish to determine which, if any, of the individual polynomial terms are associated with the observations. In this case there is no clear way of imposing a nested structure, as we cannot a priori rule out any particular combination of covariates being important. This is usually called the all-subsets regression problem, and the candidate model set then has the following structure

where ![]() denotes the empty set, that is, none of the covariates are to be used. It is clear that models with more parameters can not necessarily represent all models with less parameters; for example, the model

denotes the empty set, that is, none of the covariates are to be used. It is clear that models with more parameters can not necessarily represent all models with less parameters; for example, the model ![]() cannot represent the model

cannot represent the model ![]() as it is lacking covariate

as it is lacking covariate ![]() . Further, there is now no longer just a single model with k free parameters; in the above example, there are four models with one free parameter, and six models with two free parameters. In general, if there are q covariates in total, the number of models with k free parameters is given by

. Further, there is now no longer just a single model with k free parameters; in the above example, there are four models with one free parameter, and six models with two free parameters. In general, if there are q covariates in total, the number of models with k free parameters is given by ![]() , which may be substantially greater than one for moderate q.

, which may be substantially greater than one for moderate q.

Throughout the remainder of this chapter, the linear model example will be used to demonstrate the practical application of the model selection criteria that will be discussed.

1.25.2 Minimum distance estimation criteria

Intuitively, model selection criteria can be derived by quantifying “how close” the probability density of the fitted model is to the unknown, generating distribution. Many measures of distance between two probability distributions exist in the literature. Due to several theoretical properties, the Kullback-Leibler divergence [2], and its variants, is perhaps the most frequently used information theoretic measure of distance.

As the distribution ![]() that generated the observations

that generated the observations ![]() is unknown, the basic idea underlying the minimum distance estimation criteria is to construct an estimate of how close the fitted distributions are to the truth. In general, these estimators are constructed to satisfy the property of unbiasedness, at least for large sample sizes n. In particular, the distance-based methods we examine are the well known Akaike information criterion (AIC) (see Section 1.25.2.1) and symmetric Kullback information criterion (KIC) (see Section 1.25.2.2), as well as several corrected variants that improve the performance of these criteria when the sample size is small.

is unknown, the basic idea underlying the minimum distance estimation criteria is to construct an estimate of how close the fitted distributions are to the truth. In general, these estimators are constructed to satisfy the property of unbiasedness, at least for large sample sizes n. In particular, the distance-based methods we examine are the well known Akaike information criterion (AIC) (see Section 1.25.2.1) and symmetric Kullback information criterion (KIC) (see Section 1.25.2.2), as well as several corrected variants that improve the performance of these criteria when the sample size is small.

1.25.2.1 The Akaike information criterion

Recall the model selection problem as introduced in Section 1.25.1. Suppose a collection of n observations ![]() has been sampled from an unknown distribution

has been sampled from an unknown distribution ![]() having density function

having density function ![]() . Estimation of

. Estimation of ![]() is done within a set of nested candidate models

is done within a set of nested candidate models ![]() , characterized by probability densities

, characterized by probability densities ![]() , where

, where ![]() and

and ![]() is the number of free parameters possessed by model

is the number of free parameters possessed by model ![]() . Let

. Let ![]() denote the vector of estimated parameters obtained by maximizing the likelihood

denote the vector of estimated parameters obtained by maximizing the likelihood ![]() over

over ![]() , that is,

, that is,

![]()

and let ![]() denote the corresponding fitted model. To simplify notation, we introduce the shorthand notation

denote the corresponding fitted model. To simplify notation, we introduce the shorthand notation ![]() to denote the maximum likelihood estimator, and

to denote the maximum likelihood estimator, and ![]() to denote the maximized likelihood for model

to denote the maximized likelihood for model ![]() .

.

To determine which candidate density model best approximates the true unknown model ![]() , we require a measure which provides a suitable reflection of the separation between

, we require a measure which provides a suitable reflection of the separation between ![]() and an approximating model

and an approximating model ![]() . The Kullback-Leibler divergence, also known as the cross-entropy, is one such measure. For the two probability densities

. The Kullback-Leibler divergence, also known as the cross-entropy, is one such measure. For the two probability densities ![]() and

and ![]() , the Kullback-Leibler divergence,

, the Kullback-Leibler divergence, ![]() , between

, between ![]() and

and ![]() with respect to

with respect to ![]() is defined as

is defined as

where

![]() (25.8)

(25.8)

and ![]() represents the expectation with respect to

represents the expectation with respect to ![]() , that is,

, that is, ![]() . Ideally, the selection of the best model from the set of candidate models

. Ideally, the selection of the best model from the set of candidate models ![]() would be done by choosing the model with the minimum KL divergence from the data generating distribution

would be done by choosing the model with the minimum KL divergence from the data generating distribution ![]() , that is

, that is

![]()

where ![]() is the maximum likelihood estimator of the parameters of model

is the maximum likelihood estimator of the parameters of model ![]() based on the observed data

based on the observed data ![]() . Since

. Since ![]() does not depend on the fitted model

does not depend on the fitted model ![]() , any ranking of the candidate models according to

, any ranking of the candidate models according to ![]() is equivalent to ranking the models according to

is equivalent to ranking the models according to ![]() . Unfortunately, evaluating

. Unfortunately, evaluating ![]() is not possible since doing so requires the knowledge of

is not possible since doing so requires the knowledge of ![]() which is the aim of model selection in the first place.

which is the aim of model selection in the first place.

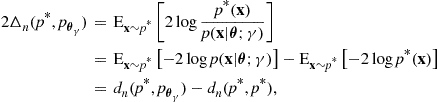

As noted by Takeuchi [3], twice the negative maximized log-likelihood, ![]() , acts as a downwardly biased estimator of

, acts as a downwardly biased estimator of ![]() and is therefore not suitable for model comparison by itself. An unbiased estimate of the KL divergence is clearly of interest and could be used as a tool for model selection. It can be shown that the bias in estimation is given by

and is therefore not suitable for model comparison by itself. An unbiased estimate of the KL divergence is clearly of interest and could be used as a tool for model selection. It can be shown that the bias in estimation is given by

![]() (25.9)

(25.9)

which, under suitable regularity conditions can be asymptotically estimated by [4,5]

![]() (25.10)

(25.10)

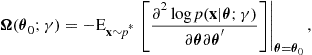

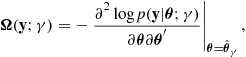

where

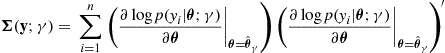

(25.11)

(25.11)

![]() (25.12)

(25.12)

and ![]() denotes the trace of a matrix. The parameter vector

denotes the trace of a matrix. The parameter vector ![]() defined by

defined by

![]() (25.13)

(25.13)

indexes the distribution in the model ![]() that is closest to the data generating distribution

that is closest to the data generating distribution ![]() in terms of KL divergence. Unfortunately, the parameter vector

in terms of KL divergence. Unfortunately, the parameter vector ![]() is unknown and so one cannot compute the required bias adjustment. However, Takeuchi [3] noted that under suitable regularity conditions, the maximum likelihood estimate can be used in place of

is unknown and so one cannot compute the required bias adjustment. However, Takeuchi [3] noted that under suitable regularity conditions, the maximum likelihood estimate can be used in place of ![]() to derive an approximation to (25.10), leading to the Takeuchi Information Criterion (TIC)

to derive an approximation to (25.10), leading to the Takeuchi Information Criterion (TIC)

![]() (25.14)

(25.14)

where

(25.15)

(25.15)

(25.16)

(25.16)

are empirical estimates of the matrices (25.11) and (25.12), respectively. To use the TIC for model selection, we choose the model ![]() with the smallest TIC score, that is

with the smallest TIC score, that is

![]()

The model with the smallest TIC score corresponds to the model we believe to be the closest in terms of KL divergence to the data generating distribution ![]() . Under suitable regularity conditions, the TIC is an unbiased estimate of the KL divergence between the fitted model

. Under suitable regularity conditions, the TIC is an unbiased estimate of the KL divergence between the fitted model ![]() and the data generating model

and the data generating model ![]() , that is

, that is

![]()

where ![]() is a quantity that vanishes as

is a quantity that vanishes as ![]() . The empirical matrices (25.15) and (25.16) are a good approximation to (25.11) and (25.12) only if the sample size n is very large. As this is often not the case in practice, the TIC is rarely used.

. The empirical matrices (25.15) and (25.16) are a good approximation to (25.11) and (25.12) only if the sample size n is very large. As this is often not the case in practice, the TIC is rarely used.

The dependence of the TIC on the empirical matrices can be avoided if one assumes that the data generating distribution ![]() is a distribution contained in the model

is a distribution contained in the model ![]() . In this case, the matrices (25.11) and (25.12) coincide. Thus,

. In this case, the matrices (25.11) and (25.12) coincide. Thus, ![]() reduces to the number of parameters k, and we obtain the widely known and extensively used Akaike’s Information Criterion (AIC) [6]

reduces to the number of parameters k, and we obtain the widely known and extensively used Akaike’s Information Criterion (AIC) [6]

![]() (25.17)

(25.17)

An interesting way to view the AIC (25.17) is as a penalized likelihood criterion. For a given model ![]() , the negative maximized log-likelihood measures how well the model fits the data, while the “

, the negative maximized log-likelihood measures how well the model fits the data, while the “![]() ” penalty measures the complexity of the model. Clearly, the complexity is an increasing function of the number of free parameters, and this acts to naturally balance the complexity of a model against its fit. Practically, this means that a complex model must fit the data well to be preferred to a simpler model with a similar quality of fit.

” penalty measures the complexity of the model. Clearly, the complexity is an increasing function of the number of free parameters, and this acts to naturally balance the complexity of a model against its fit. Practically, this means that a complex model must fit the data well to be preferred to a simpler model with a similar quality of fit.

Similar to the TIC, the AIC is an asymptotically unbiased estimator of the KL divergence between the generating distribution ![]() and the fitted distribution

and the fitted distribution ![]() , that is

, that is

![]()

When the sample size is large and the number of free parameters is small relative to the sample size, the ![]() term is approximately zero, and the AIC offers an excellent estimate of the Kullback-Leibler divergence. However, in the case that n is small, or k is large relative to n, the

term is approximately zero, and the AIC offers an excellent estimate of the Kullback-Leibler divergence. However, in the case that n is small, or k is large relative to n, the ![]() term may be quite large, and the AIC does not adequately correct the bias, making it unsuitable for model selection.

term may be quite large, and the AIC does not adequately correct the bias, making it unsuitable for model selection.

To address this issue, Hurvich and Tsai [7] proposed a small sample correction to the AIC in the linear regression setting. This small sample AIC, referred to as AICc, is given by

![]() (25.18)

(25.18)

for a model ![]() with k free parameters, and has subsequently been applied to models other than linear regressions. From (25.18) it is clear that the penalty term is substantially larger than

with k free parameters, and has subsequently been applied to models other than linear regressions. From (25.18) it is clear that the penalty term is substantially larger than ![]() when the sample size n is not significantly larger than k, and that as

when the sample size n is not significantly larger than k, and that as ![]() the AICc is equivalent to the regular AIC (25.17). Practically, a large body of empirical evidence strongly suggests that the AICc performs significantly better as a model selection tool than the AIC, largely irrespective of the problem. The approach taken by Hurvich and Tsai to derive their AICc criterion is not the only way of deriving small sample minimum distance estimators based on the KL divergence; for example, the work in [8] derives alternative AIC-like criteria based on variants of the KL divergence, while [9] offers an alternative small sample criterion obtained through bootstrap approximations.

the AICc is equivalent to the regular AIC (25.17). Practically, a large body of empirical evidence strongly suggests that the AICc performs significantly better as a model selection tool than the AIC, largely irrespective of the problem. The approach taken by Hurvich and Tsai to derive their AICc criterion is not the only way of deriving small sample minimum distance estimators based on the KL divergence; for example, the work in [8] derives alternative AIC-like criteria based on variants of the KL divergence, while [9] offers an alternative small sample criterion obtained through bootstrap approximations.

For the reader interested in the theoretical details and complete derivations of the AIC, including all regularity conditions, we highly recommend the excellent text by Linhart and Zucchini [5]. We also recommend the text by McQuarrie and Tsai [10] for a more practical treatment of AIC and AICc.

1.25.2.2 The Kullback information criterion

Since the Kullback-Leibler divergence is an asymmetric measure, an alternative directed divergence can be obtained by reversing the roles of the two models in the definition of the measure. A undirected measure of model dissimilarity can be obtained from the sum of the two directed divergences. This measure is known as Kullback’s symmetric divergence, or J-divergence [11]. Since the J-divergence combines information about model dissimilarity through two distinct measures, it functions as a gauge of model disparity which is arguably more sensitive than either of its individual components. The J-divergence, ![]() , between the true generating distribution

, between the true generating distribution ![]() and a distribution

and a distribution ![]() is given by

is given by

where

![]() (25.19)

(25.19)

![]() (25.20)

(25.20)

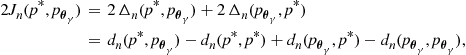

As in Section (1.25.2.1), we note that the term ![]() does not depend on the fitted model

does not depend on the fitted model ![]() and may be dropped when ranking models. The quantity

and may be dropped when ranking models. The quantity

![]()

may then be used as a surrogate for the J-divergence, and leads to an appealing measure of dissimilarity between the generating distribution and the fitted candidate model. In a similar fashion to the AIC, twice the negative maximized log-likelihood has been shown to be a downwardly biased estimate of the J-divergence. Cavanaugh [12] derives an estimate of this bias, and uses this correction to define a model selection criterion called KIC (symmetric Kullback information criterion), which is given by

![]() (25.21)

(25.21)

Comparing (25.21) to the AIC (25.17), we see that the KIC complexity penalty is slightly greater. This implies that the KIC tends to prefer simpler models (that is, those with less parameters) than those selected by minimising the AIC. Under suitable regularity conditions, the KIC satisfies

![]()

Empirically, the KIC has been shown to outperform AIC in large sample linear regression and autoregressive model selection, and tends to lead to less overfitting than AIC. Similarly to the AIC, when the sample size n is small the bias correction term ![]() is too small, and the KIC performs poorly as a model selection tool. Seghouane and Bekara [13] derived a corrected KIC, called KICc, in the context of linear regression that is suitable for use when the sample size is small relative to the total number of parameters k. The KICc is

is too small, and the KIC performs poorly as a model selection tool. Seghouane and Bekara [13] derived a corrected KIC, called KICc, in the context of linear regression that is suitable for use when the sample size is small relative to the total number of parameters k. The KICc is

![]() (25.22)

(25.22)

where ![]() denotes the digamma function. The digamma function can be difficult to compute, and approximating the digamma function by a second-order Taylor series expansion yields the so called AKICc, which is given by

denotes the digamma function. The digamma function can be difficult to compute, and approximating the digamma function by a second-order Taylor series expansion yields the so called AKICc, which is given by

![]() (25.23)

(25.23)

The second term in (25.22) appears as the complexity penalty in the AICc(25.18), which is not surprising given that the J-divergence is a sum of two KL divergence functions. Empirically, the KICc has been shown to outperform the KIC as a model selection tool when the sample size is small.

1.25.2.3 Example application: linear regression

We now demonstrate the application of the minimum distance estimation criteria to the problem of linear regression introduced in Section 1.25.1.2. Recall that in this setting, ![]() specifies which covariates from the full design matrix

specifies which covariates from the full design matrix ![]() should be used in the fitted model. The total number of parameters is therefore

should be used in the fitted model. The total number of parameters is therefore ![]() , where the additional parameter accounts for the fact that we must estimate the noise variance. The various model selection criteria are listed below:

, where the additional parameter accounts for the fact that we must estimate the noise variance. The various model selection criteria are listed below:

![]() (25.24)

(25.24)

![]() (25.25)

(25.25)

![]() (25.26)

(25.26)

![]() (25.27)

(25.27)

It is important to stress that all these criteria are derived within the context of a nested set of candidate models ![]() (see Section 1.25.1.1). In the next section we will briefly examine the problems that can arise if these criteria are used in situations where the candidates are not nested.

(see Section 1.25.1.1). In the next section we will briefly examine the problems that can arise if these criteria are used in situations where the candidates are not nested.

1.25.2.4 Consistency and efficiency

Let ![]() denote the model that generated the observed data

denote the model that generated the observed data ![]() and assume that

and assume that ![]() is a member of the set of candidate models

is a member of the set of candidate models ![]() which is fixed and does not grow with sample size n; recall that the model

which is fixed and does not grow with sample size n; recall that the model ![]() contains the data generating distribution

contains the data generating distribution ![]() as a particular distribution. Furthermore, of all the models that contain

as a particular distribution. Furthermore, of all the models that contain ![]() , the model

, the model ![]() has the least number of free parameters. A model selection criterion is consistent if the probability of selecting the true model

has the least number of free parameters. A model selection criterion is consistent if the probability of selecting the true model ![]() approaches one as the sample size

approaches one as the sample size ![]() . None of the distance based criteria examined in this chapter are consistent model selection procedures. This means that even for extremely large sample sizes, there is a non-zero probability that these distance based criteria will overfit and select a model with more free parameters than

. None of the distance based criteria examined in this chapter are consistent model selection procedures. This means that even for extremely large sample sizes, there is a non-zero probability that these distance based criteria will overfit and select a model with more free parameters than ![]() . Consequently, if the aim of the experiment is to discover the true model, the aforementioned distance based criteria are inappropriate. For example, in the linear regression setting, if the primary aim of the model selection exercise is to determine only those covariates that are associated with the observations, one should not use the AIC or KIC (and their variants).

. Consequently, if the aim of the experiment is to discover the true model, the aforementioned distance based criteria are inappropriate. For example, in the linear regression setting, if the primary aim of the model selection exercise is to determine only those covariates that are associated with the observations, one should not use the AIC or KIC (and their variants).

In contrast, if the true model is of infinite order, which in many settings may be deemed more realistic in practice, then the true model lies outside the class of candidate models. In this case, we cannot hope to discover the true model, and instead would like our model selection criterion to at least provide good predictions about future data arising from the same source. A criterion that minimizes the one-step mean squared error between the fitted model and the generating distribution ![]() with high probability as

with high probability as ![]() is termed efficient. The AIC and KIC, and their corrected variants are all asymptotically efficient [4]. This suggests that for large sample sizes, model selection by distance based criteria will lead to adequate prediction errors even if the true model lies outside the set of candidate models

is termed efficient. The AIC and KIC, and their corrected variants are all asymptotically efficient [4]. This suggests that for large sample sizes, model selection by distance based criteria will lead to adequate prediction errors even if the true model lies outside the set of candidate models ![]() .

.

1.25.2.5 The AIC and KIC for non-nested sets of candidate models

The derivations of the AIC and KIC, and their variants, depend on the assumption that the candidate model set ![]() is nested. We conclude this section by briefly examining the behavior of these criteria when they are used to select a model from a non-nested candidate model set. First, consider the case that

is nested. We conclude this section by briefly examining the behavior of these criteria when they are used to select a model from a non-nested candidate model set. First, consider the case that ![]() forms a nested set of models, and let

forms a nested set of models, and let ![]() be the true model, that is, the model that generated the data; this means that

be the true model, that is, the model that generated the data; this means that ![]() is the model in

is the model in ![]() with the least number of free parameters, say

with the least number of free parameters, say ![]() , that contains the data generating distribution

, that contains the data generating distribution ![]() as a particular distribution. If n is sufficiently large, the probability that the model selected by minimising the AIC will have

as a particular distribution. If n is sufficiently large, the probability that the model selected by minimising the AIC will have ![]() parameters is at least 16%, with the equivalent probability for KIC being approximately 8% [10].

parameters is at least 16%, with the equivalent probability for KIC being approximately 8% [10].

Consider now the case in which ![]() forms a non-nested set of candidate models. A recent paper by Schmidt and Makalic [14] has demonstrated that in this case, the AIC is a downwardly biased estimator of the Kullback-Leibler divergence even asymptotically in n, and this downward bias is determined by the size of the sets

forms a non-nested set of candidate models. A recent paper by Schmidt and Makalic [14] has demonstrated that in this case, the AIC is a downwardly biased estimator of the Kullback-Leibler divergence even asymptotically in n, and this downward bias is determined by the size of the sets ![]() . This implies, that in the case of all-subsets regression, the probability of overfitting is an increasing function of the number of covariates under consideration, q, and this probability cannot be reduced even by increasing the sample size.

. This implies, that in the case of all-subsets regression, the probability of overfitting is an increasing function of the number of covariates under consideration, q, and this probability cannot be reduced even by increasing the sample size.

As an example of how great this probability may be, we consider an all-subsets regression in which the generating distribution is the null model; that is, none of the measured covariates ![]() are associated with the observations

are associated with the observations ![]() . Even if there is only as few as

. Even if there is only as few as ![]() covariates available for selection, the probability of erroneously preferring a non-null model to the null model using the AIC is approximately

covariates available for selection, the probability of erroneously preferring a non-null model to the null model using the AIC is approximately ![]() , and by the time

, and by the time ![]() , this probability rises to

, this probability rises to ![]() . Although [14] examines only the AIC, similar arguments follow easily for the KIC. It is therefore recommended that while both criteria are appropriate for nested model selection, neither of these distance based criteria should be used for model selection in a non-nested situation, such as the all-subsets regression problem.

. Although [14] examines only the AIC, similar arguments follow easily for the KIC. It is therefore recommended that while both criteria are appropriate for nested model selection, neither of these distance based criteria should be used for model selection in a non-nested situation, such as the all-subsets regression problem.

1.25.2.6 Applications

Minimum distance estimation criteria have been widely applied in the literature, and we present below an inexhaustive list of successful applications:

• univariate linear regression models [7,13],

• multivariate linear regression models with arbitrary noise covariance matrices [15,16],

• autoregressive model selection [7,12,17],

• selection of smoothing parameters in nonparametric regression [18],

• selection of the size of a multilayer perceptron network [21].

• model selection in the presence of missing data [22].

For details of many of these applications the reader is referred to [10].

1.25.3 Bayesian approaches to model selection

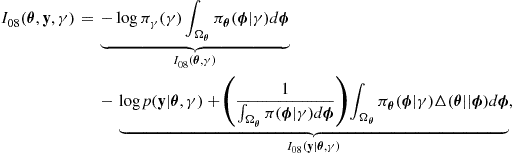

Section 1.25.2 has described model selection criteria that are designed to explicitly minimise the distance between the estimated models and the true model that generated the observed data ![]() . An alternative approach, based on Bayesian statistics [23], is now discussed. The Bayesian approach to statistical analysis differs from the “classical” approach through the introduction of a prior distribution that is used to quantify an experimenter’s a priori (that is, before the observation of data) beliefs about the source of the observed data. A central quantity in Bayesian analysis is the so-called posterior distribution; given a prior distribution,

. An alternative approach, based on Bayesian statistics [23], is now discussed. The Bayesian approach to statistical analysis differs from the “classical” approach through the introduction of a prior distribution that is used to quantify an experimenter’s a priori (that is, before the observation of data) beliefs about the source of the observed data. A central quantity in Bayesian analysis is the so-called posterior distribution; given a prior distribution, ![]() , over the parameter space

, over the parameter space ![]() , and the likelihood function,

, and the likelihood function, ![]() , the posterior distribution of the parameters given the data may be found by applying Bayes’ theorem, yielding

, the posterior distribution of the parameters given the data may be found by applying Bayes’ theorem, yielding

![]() (25.28)

(25.28)

where

![]() (25.29)

(25.29)

is known as the marginal probability of ![]() for model

for model ![]() , and is a normalization term required to render (25.28) a proper distribution. In general, the specification of the prior distribution may itself depend on further parameters, usually called hyperparameters, but for the purposes of the present discussion this possibility will not be considered. The main strength of the Bayesian paradigm is that it allows uncertainty about models and parameters to be defined directly in terms of probabilities; there is no need to appeal to more abstruse concepts, such as the “confidence interval” of classical statistics. However, there is in general no free lunch in statistics, and this strength comes at a price: the specification and origin of the prior distribution. There are essentially two main schools of thought on this topic: (i) subjective Bayesianism, where prior distributions are viewed as serious expressions of subjective belief about the process that generated the data, and (ii) objective Bayesianism [24], where one attempts to express prior ignorance through the use of uninformative, objective distributions, such as the Jeffreys’ prior [11], reference priors [25] and intrinsic priors [26]. The specification of the prior distribution is the basis of most criticism leveled at the Bayesian school, and an extensive discussion on the merits and drawbacks of Bayesian procedures, and the relative merits of subjective and objective approaches, is beyond the scope of this tutorial (there are many interesting discussions on this topic in the literature, see for example, [27,28]). However, in this section of the tutorial, an approach to the problem of prior distribution specification based on asymptotic arguments will be presented.

, and is a normalization term required to render (25.28) a proper distribution. In general, the specification of the prior distribution may itself depend on further parameters, usually called hyperparameters, but for the purposes of the present discussion this possibility will not be considered. The main strength of the Bayesian paradigm is that it allows uncertainty about models and parameters to be defined directly in terms of probabilities; there is no need to appeal to more abstruse concepts, such as the “confidence interval” of classical statistics. However, there is in general no free lunch in statistics, and this strength comes at a price: the specification and origin of the prior distribution. There are essentially two main schools of thought on this topic: (i) subjective Bayesianism, where prior distributions are viewed as serious expressions of subjective belief about the process that generated the data, and (ii) objective Bayesianism [24], where one attempts to express prior ignorance through the use of uninformative, objective distributions, such as the Jeffreys’ prior [11], reference priors [25] and intrinsic priors [26]. The specification of the prior distribution is the basis of most criticism leveled at the Bayesian school, and an extensive discussion on the merits and drawbacks of Bayesian procedures, and the relative merits of subjective and objective approaches, is beyond the scope of this tutorial (there are many interesting discussions on this topic in the literature, see for example, [27,28]). However, in this section of the tutorial, an approach to the problem of prior distribution specification based on asymptotic arguments will be presented.

While (25.28) specifies a probability distribution over the parameter space ![]() , conditional on the observed data, it gives no indication of the likelihood of the model

, conditional on the observed data, it gives no indication of the likelihood of the model ![]() given the observed data. A common approach is based on the marginal distribution (25.29). If a further prior distribution,

given the observed data. A common approach is based on the marginal distribution (25.29). If a further prior distribution, ![]() , over the set of candidate models

, over the set of candidate models ![]() is available, one may apply Bayes’ theorem to form a posterior distribution over the models themselves, given by

is available, one may apply Bayes’ theorem to form a posterior distribution over the models themselves, given by

![]() (25.30)

(25.30)

Model selection then proceeds by choosing the model ![]() that maximizes the probability (25.30), that is

that maximizes the probability (25.30), that is

![]() (25.31)

(25.31)

where the normalizing constant may be safely ignored. An interesting consequence of the posterior distribution (25.30) is that the posterior-odds in favor of some model, ![]() , over another model

, over another model ![]() , usually called the Bayes factor [29,30], can be found from the ratio of posterior probabilities, that is,

, usually called the Bayes factor [29,30], can be found from the ratio of posterior probabilities, that is,

![]() (25.32)

(25.32)

This allows for a straightforward and highly interpretable quantification of the uncertainty in the choice of any particular model. The most obvious weakness in the Bayesian approach, ignoring the origin of the prior distributions, is computational. As a general rule, the integral in the definition of the marginal distribution (25.29), does not admit a closed-form solution and one must resort to numerical approaches or approximations. The next section will discuss a criterion based on asymptotic arguments that circumvents both the requirement to specify an appropriate prior distribution as well as the issue of integration in the computation of the marginal distribution.

1.25.3.1 The Bayesian information criterion (BIC)

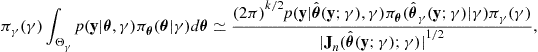

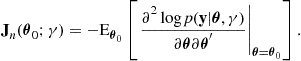

The Bayesian Information Criterion (BIC), also sometimes known as the Schwarz Information Criterion (SIC), was proposed by Schwarz [31]. However, the resulting criterion is not unique and was also derived from information theoretic considerations by Rissanen [32], as well as being implicit in the earlier work of Wallace and Boulton [33]; the information theoretic arguments for model selection are discussed in detail in the next section of this chapter. The BIC is based on the Laplace approximation for high dimensional integration [34]. Essentially, under certain regularity conditions, the Laplace approximation works by replacing the distribution to be integrated by a suitable multivariate normal distribution, which results in a straightforward integral. Making the assumptions that the prior distribution is such that its effects are “swamped” by the evidence in the data, and that the maximum likelihood estimator converges on the posterior mode as ![]() , one may apply the Laplace approximation to (25.29) yielding

, one may apply the Laplace approximation to (25.29) yielding

(25.33)

(25.33)

where ![]() denotes that the ratio of the left and right hand side approaches one as the sample size

denotes that the ratio of the left and right hand side approaches one as the sample size ![]() . In (25.33),

. In (25.33), ![]() is the total number of continuous, free parameters for model

is the total number of continuous, free parameters for model ![]() is the maximum likelihood estimate, and

is the maximum likelihood estimate, and ![]() is the

is the ![]() expected Fisher information matrix for n data points, given by

expected Fisher information matrix for n data points, given by

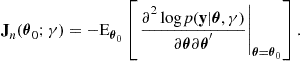

(25.34)

(25.34)

The technical conditions under which (25.33) holds are detailed in [31]; in general, if the maximum likelihood estimates are consistent, that is, they converge on the true, generating value of ![]() as

as ![]() , and also satisfy the central limit theorem, the approximation will be satisfactory, at least for large sample sizes. To find the BIC, begin by taking negative logarithms of the right hand side of (25.33)

, and also satisfy the central limit theorem, the approximation will be satisfactory, at least for large sample sizes. To find the BIC, begin by taking negative logarithms of the right hand side of (25.33)

![]() (25.35)

(25.35)

A crucial assumption made is that the Fisher information satisfies

![]() (25.36)

(25.36)

where ![]() is the asymptotic per sample Fisher information matrix satisfying

is the asymptotic per sample Fisher information matrix satisfying ![]() , and is free of dependency on n. This assumption is met by a large range of models, including linear regression models, autoregressive moving-average (ARMA) models and generalized linear models (GLMs) to name a few. This allows the determinant of the Fisher information matrix for n samples to be rewritten as

, and is free of dependency on n. This assumption is met by a large range of models, including linear regression models, autoregressive moving-average (ARMA) models and generalized linear models (GLMs) to name a few. This allows the determinant of the Fisher information matrix for n samples to be rewritten as

![]()

where ![]() denotes a quantity that is constant in n. Using this result, (25.35) may be dramatically simplified by assuming that n is large and discarding terms that are

denotes a quantity that is constant in n. Using this result, (25.35) may be dramatically simplified by assuming that n is large and discarding terms that are ![]() with respect to the sample size, yielding the BIC

with respect to the sample size, yielding the BIC

![]() (25.37)

(25.37)

Model selection using BIC is then done by finding the ![]() that minimises the BIC score (25.37), that is,

that minimises the BIC score (25.37), that is,

![]() (25.38)

(25.38)

It is immediately obvious from (25.37) that a happy consequence of the approximations that are employed is the removal of the dependence on the prior distribution ![]() . However, as usual, this comes at a price. The BIC satisfies

. However, as usual, this comes at a price. The BIC satisfies

![]() (25.39)

(25.39)

where the ![]() term is only guaranteed to be negligible for large n. For any finite n, this term may be arbitrarily large, and may have considerable effect on the behavior of BIC as a model selection tool. Thus, in general, BIC is only appropriate for use when the sample size is quite large relative to the number of parameters k. However, despite this drawback, when used correctly the BIC has several pleasant, and important, properties that are now discussed. The important point to bear in mind is that like all approximations, it can only be employed with confidence in situations that meet the conditions under which the approximation was derived.

term is only guaranteed to be negligible for large n. For any finite n, this term may be arbitrarily large, and may have considerable effect on the behavior of BIC as a model selection tool. Thus, in general, BIC is only appropriate for use when the sample size is quite large relative to the number of parameters k. However, despite this drawback, when used correctly the BIC has several pleasant, and important, properties that are now discussed. The important point to bear in mind is that like all approximations, it can only be employed with confidence in situations that meet the conditions under which the approximation was derived.

1.25.3.2 Properties of BIC

1.25.3.2.1 Consistency of BIC

A particularly important, and defining, property of BIC is the consistency of ![]() as

as ![]() . Let

. Let ![]() be the true model, that is, the model that generated the data; this means that

be the true model, that is, the model that generated the data; this means that ![]() is the model in

is the model in ![]() with the least number of free parameters that contains the data generating distribution

with the least number of free parameters that contains the data generating distribution ![]() as a particular distribution. Further, let the set of all models

as a particular distribution. Further, let the set of all models ![]() be independent of the sample size n; this means that the number of candidate models that are being considered does not increase with increasing sample size. Then, if all models in

be independent of the sample size n; this means that the number of candidate models that are being considered does not increase with increasing sample size. Then, if all models in ![]() satisfy the regularity conditions under which BIC was derived, and in particular (25.36), the BIC estimate satisfies

satisfy the regularity conditions under which BIC was derived, and in particular (25.36), the BIC estimate satisfies

![]() (25.40)

(25.40)

as ![]() [35,36]. In words, this says that the BIC estimate of

[35,36]. In words, this says that the BIC estimate of ![]() converges on the true, generating model with probability one in the limit as the sample size

converges on the true, generating model with probability one in the limit as the sample size ![]() , and this property holds irrespective of whether

, and this property holds irrespective of whether ![]() forms a nested or non-nested set of candidate models. Practically, this means that as the sample size grows the probability that the model selected by the minimising the BIC score overfits or underfits the true, generating model, tends to zero. This is an important property if the discovery of relevant parameters is of more interest than making good predictions, and is not shared by any of the distance-based criteria discussed in Section 1.25.2. An argument against the importance of model selection consistency stems from the fact that the statistical models under consideration are abstractions of reality, and that the true, generating model is not be a member of the set

forms a nested or non-nested set of candidate models. Practically, this means that as the sample size grows the probability that the model selected by the minimising the BIC score overfits or underfits the true, generating model, tends to zero. This is an important property if the discovery of relevant parameters is of more interest than making good predictions, and is not shared by any of the distance-based criteria discussed in Section 1.25.2. An argument against the importance of model selection consistency stems from the fact that the statistical models under consideration are abstractions of reality, and that the true, generating model is not be a member of the set ![]() . Regardless of this criticism, empirical studies suggest that the BIC tends to perform well in comparison to asymptotically efficient criteria such as AIC and KIC when the underlying generating process may be described by a small number of strong effects [10], and thus occupies a useful niche in the gallery of model selection techniques.

. Regardless of this criticism, empirical studies suggest that the BIC tends to perform well in comparison to asymptotically efficient criteria such as AIC and KIC when the underlying generating process may be described by a small number of strong effects [10], and thus occupies a useful niche in the gallery of model selection techniques.

1.25.3.2.2 Bayesian Interpretations of BIC

Due to the fact that the BIC is an approximation of the marginal probability of data ![]() (25.29), it admits the full range of Bayesian interpretations. For example, by computing the BIC scores for two models

(25.29), it admits the full range of Bayesian interpretations. For example, by computing the BIC scores for two models ![]() and

and ![]() , one may compute an approximate negative log-Bayes factor of the form

, one may compute an approximate negative log-Bayes factor of the form

![]()

Therefore, the exponential of the negative difference in BIC scores for ![]() and

and ![]() may be interpreted as an approximate posterior-odds in favor of

may be interpreted as an approximate posterior-odds in favor of ![]() . Further, the BIC scores may be used to perform model averaging. Selection of a single best model from a candidate set is known to be statistically unstable [37], particularly if the sample size is small and many models have similar criterion scores. Instability in this setting is defined as the variability in the choice of the single best model under minor perturbations of the observed sample. It is clear that if many models are given similar criterion scores, then changing a single data point in the sample can lead to significant changes in the ranking of the models. In contrast to model selection, model averaging aims to improve predictions by using a weighted mixture of all the estimated models, the weights being proportional to the strength of evidence for the particular model. By noting that the BIC score is approximately equal to the negative log-posterior probability of the model, a Bayesian predictive density for future data

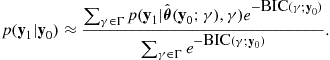

. Further, the BIC scores may be used to perform model averaging. Selection of a single best model from a candidate set is known to be statistically unstable [37], particularly if the sample size is small and many models have similar criterion scores. Instability in this setting is defined as the variability in the choice of the single best model under minor perturbations of the observed sample. It is clear that if many models are given similar criterion scores, then changing a single data point in the sample can lead to significant changes in the ranking of the models. In contrast to model selection, model averaging aims to improve predictions by using a weighted mixture of all the estimated models, the weights being proportional to the strength of evidence for the particular model. By noting that the BIC score is approximately equal to the negative log-posterior probability of the model, a Bayesian predictive density for future data ![]() , conditional on an observed sample

, conditional on an observed sample ![]() , may be found by marginalising out the model class indicator

, may be found by marginalising out the model class indicator ![]() , that is,

, that is,

(25.41)

(25.41)

Although such a mixture often outperforms a single best model when used to make predictions about future data [38], there are several drawbacks to the model averaging approach. The first is that the resulting density lacks the property of parsimonity, as no model is excluded from the mixture, and therefore all model parameters contribute to the mixture. In the case of linear regression, for example, this means the ability to decide whether particular covariates are “relevant” is lost. The second drawback is that the predictive density (25.41) is in general not of the same form as any its component models, which can lead to interpretability issues.

1.25.3.3 Example application: linear regression

We conclude our examination of the BIC with an example of an application to linear regression models. Recalling that in this context the model indicator ![]() specifies which covariates, if any, should be used from the full design matrix

specifies which covariates, if any, should be used from the full design matrix ![]() to explain the observed samples

to explain the observed samples ![]() . The BIC score for a particular covariate set

. The BIC score for a particular covariate set ![]() is given by

is given by

![]() (25.42)

(25.42)

where ![]() is the maximum likelihood estimator of the noise variance for model

is the maximum likelihood estimator of the noise variance for model ![]() , and the

, and the ![]() accounts for the fact that the variance must be estimated from the data along with the k regression parameters. The BIC score (25.42) contains the terms

accounts for the fact that the variance must be estimated from the data along with the k regression parameters. The BIC score (25.42) contains the terms ![]() which are constant with respect to

which are constant with respect to ![]() and may be omitted if the set of candidate models only consists of linear regressions; if the set

and may be omitted if the set of candidate models only consists of linear regressions; if the set ![]() also contains models from alternative classes, such as artificial neural networks, then these terms are required to fully characterize the marginal probability in comparison to this alternative class of models. The consistency property of BIC is particularly useful in this setting, as it guarantees that if the data were generated from (25.42) then as

also contains models from alternative classes, such as artificial neural networks, then these terms are required to fully characterize the marginal probability in comparison to this alternative class of models. The consistency property of BIC is particularly useful in this setting, as it guarantees that if the data were generated from (25.42) then as ![]() , minimising the BIC score will recover the true model, and thus determine exactly which covariates are associated with

, minimising the BIC score will recover the true model, and thus determine exactly which covariates are associated with ![]() .

.

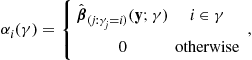

Given the assumption of Gaussian noise, the Bayesian mixture distribution is a weighted mixture of Gaussian distributions and thus has a particularly simple conditional mean. Let ![]() denote a

denote a ![]() vector with entries

vector with entries

that is, the vector ![]() contains the maximum likelihood estimates for the covariates in

contains the maximum likelihood estimates for the covariates in ![]() , and zeros for those covariates that are not in

, and zeros for those covariates that are not in ![]() . Then, the conditional mean of the predictive density for future data with design matrix

. Then, the conditional mean of the predictive density for future data with design matrix ![]() , conditional on an observed sample

, conditional on an observed sample ![]() , is simply a linear combination of the

, is simply a linear combination of the ![]()

![]()

which is essentially a linear regression with a special coefficient vector. While this coefficient vector will be dense, in the sense that none of its entries will be exactly zero, it retains the high degree of interpretibility that makes linear regression models so attractive.

1.25.3.4 Priors for the model structure

One of the primary assumptions made in the derivation of the BIC is that the effects of the prior distribution for the model, ![]() , can be safely neglected. However, in some cases the number of models contained in

, can be safely neglected. However, in some cases the number of models contained in ![]() is very large relative to the sample size, or may even grow with growing sample size, and this assumption may no longer be valid. An important example is that of regression models in the context of genetic datasets; in this case, the number of covariates is generally several orders of magnitude greater than the number of samples. In this case, it is possible to use a modified BIC of the form

is very large relative to the sample size, or may even grow with growing sample size, and this assumption may no longer be valid. An important example is that of regression models in the context of genetic datasets; in this case, the number of covariates is generally several orders of magnitude greater than the number of samples. In this case, it is possible to use a modified BIC of the form

![]() (25.43)

(25.43)

which requires specification of the prior distribution ![]() . In the setting of regression models, there are two general flavors of prior distributions for

. In the setting of regression models, there are two general flavors of prior distributions for ![]() depending on the structure represented by

depending on the structure represented by ![]() . The first case is that of nested model selection. A nested model class has the important property that all models with k parameters contain all models with less than k parameters as special cases. A standard example of this is polynomial regression in which the model selection criterion must choose the order of the polynomial. A uniform prior over

. The first case is that of nested model selection. A nested model class has the important property that all models with k parameters contain all models with less than k parameters as special cases. A standard example of this is polynomial regression in which the model selection criterion must choose the order of the polynomial. A uniform prior over ![]() is an appropriate, and standard, choice of prior for nested model classes. Use of such a prior in (25.43) inflates the BIC score by a term of

is an appropriate, and standard, choice of prior for nested model classes. Use of such a prior in (25.43) inflates the BIC score by a term of ![]() ; this term is constant for all

; this term is constant for all ![]() , and may consequently be ignored when using the BIC scores to rank the models.

, and may consequently be ignored when using the BIC scores to rank the models.

In contrast, the second general case, known as all-subsets regression, is less straightforward. In this setting, there are q covariates, and ![]() contains all possible subsets of

contains all possible subsets of ![]() . This implies that the model class is no longer nested. It is tempting to assume a uniform prior over the members of

. This implies that the model class is no longer nested. It is tempting to assume a uniform prior over the members of ![]() , as this would appear to be an uninformative choice. Unfortunately, such a prior is heavily biased towards subsets containing approximately half of the covariates. To see this, note that

, as this would appear to be an uninformative choice. Unfortunately, such a prior is heavily biased towards subsets containing approximately half of the covariates. To see this, note that ![]() contains a total of

contains a total of ![]() subsets that include k covariates. This number may be extremely large when k is close to

subsets that include k covariates. This number may be extremely large when k is close to ![]() , even for moderate q, and thus these subsets will be given a large proportion of the prior probability. For example, if

, even for moderate q, and thus these subsets will be given a large proportion of the prior probability. For example, if ![]() then

then ![]() and

and ![]() . Practically, this means that subsets with

. Practically, this means that subsets with ![]() covariates are given approximately one-fifth of the total prior probability; in contrast, subsets with a single covariate receive less than one percent of the prior probability. To address this issue, an alternative approach is to use a prior which assigns equal prior probability to each set of subsets of k covariates, for all k, that is,

covariates are given approximately one-fifth of the total prior probability; in contrast, subsets with a single covariate receive less than one percent of the prior probability. To address this issue, an alternative approach is to use a prior which assigns equal prior probability to each set of subsets of k covariates, for all k, that is,

![]() (25.44)

(25.44)

This prior (or ones very similar) also arise from both empirical Bayes [39] and information theoretic arguments [27,40], and have been extensively studied by Scott and Berger [41]. This work has demonstrated that priors of the form (25.44) help Bayesian procedures overcome the difficulties associated with all-subsets model selection that adversely affect distance based criteria such as AIC and KIC [14].

In fact, Chen and Chen [42] have proposed a generalization of this prior as part of what they call the “extended BIC.” An important (specific) result of this work is the proof that using the prior (25.44) relaxes conditions required for the consistency of the BIC. Essentially, the total number of covariates may now be allowed to depend on the sample size n in the sense that ![]() , where

, where ![]() is a constant satisfying

is a constant satisfying ![]() , implying that

, implying that ![]() grows with increasing n. Then, under certain identifiability conditions on the complete design matrix

grows with increasing n. Then, under certain identifiability conditions on the complete design matrix ![]() detailed in [42], and assuming that

detailed in [42], and assuming that ![]() , the BIC given by (25.43) using the prior (25.44) almost surely selects the true model

, the BIC given by (25.43) using the prior (25.44) almost surely selects the true model ![]() as

as ![]() .

.

1.25.3.5 Markov-Chain Monte-Carlo Bayesian methods

We conclude this section by briefly discussing several alternative approaches to Bayesian model selection based on random sampling from the posterior distribution, ![]() , which fall under the general umbrella of Markov Chain Monte Carlo (MCMC) methods [43]. The basic idea is to draw a sample of parameter values from the posterior distribution, using a technique such as the Metropolis-Hastings algorithm [44,45] or the Gibbs sampler [46,47]. These techniques are in general substantially more complex than the information criteria based approaches, both computationally and in terms of implementation,and this complexity generally brings with it greater flexibility in the specification of prior distributions as well as less stringent operating assumptions.

, which fall under the general umbrella of Markov Chain Monte Carlo (MCMC) methods [43]. The basic idea is to draw a sample of parameter values from the posterior distribution, using a technique such as the Metropolis-Hastings algorithm [44,45] or the Gibbs sampler [46,47]. These techniques are in general substantially more complex than the information criteria based approaches, both computationally and in terms of implementation,and this complexity generally brings with it greater flexibility in the specification of prior distributions as well as less stringent operating assumptions.

The most obvious way to apply MCMC methods to Bayesian model selection is through direct evaluation of the marginal (25.29). Unfortunately, this is a very difficult problem in general, and the most obvious approach, the so called harmonic mean estimator, suffers from statistical instability and convergence problems and should be avoided. In the particular case that Gibbs sampling is possible, and that the posterior is unimodal, Chib [48] has provided an algorithm to compute the approximate marginal probability from posterior samples.

An alternative to attempting to directly compute the marginal probability is to view the model indicator ![]() as the parameter of interest and randomly sample from the posterior

as the parameter of interest and randomly sample from the posterior ![]() . This allows for the space of models

. This allows for the space of models ![]() to be explored by simulation, and approximate posterior probabilities for each of the models to be determined by the frequency at which a particular model appears in the sample. There have been a large number of papers published that discuss this type of approach, and important contributions include the reversible jump MCMC algorithm of Green [49], the stochastic search variable selection algorithm [50], the shotgun stochastic search algorithm [51], and an interesting paper from Casella and Moreno [28] that combines objective Bayesian priors with a stochastic search procedure for regression models.

to be explored by simulation, and approximate posterior probabilities for each of the models to be determined by the frequency at which a particular model appears in the sample. There have been a large number of papers published that discuss this type of approach, and important contributions include the reversible jump MCMC algorithm of Green [49], the stochastic search variable selection algorithm [50], the shotgun stochastic search algorithm [51], and an interesting paper from Casella and Moreno [28] that combines objective Bayesian priors with a stochastic search procedure for regression models.

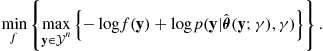

Finally, there has been a large amount of recent interest in using regularisation and “sparsity inducing” priors to combine Bayesian model selection with parameter estimation. In this approach, special priors over the model parameters are chosen that concentrate prior probability on “sparse” parameter vectors, that is, parameter vectors in which some of the elements are exactly zero. These methods have been motivated by the Bayesian connections with non-Bayesian penalized regression procedures such as the non-negative garotte [52] and the LASSO [53]. A significant advantage of this approach is that one needs only to sample from, or maximize over, the posterior of a single model containing all parameters of interest, and there is no requirement to compute marginal probabilities or to explore discrete model spaces. This is a rapidly growing area of research, and some important contributions of note include the relevance vector machine [54], Bayesian artificial neural networks [55] and the Bayesian LASSO [56,57].

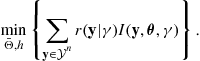

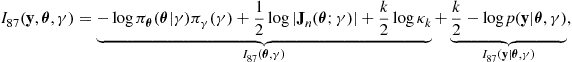

1.25.4 Model selection by compression