Chapter 6. Essays About CMMI for Services

This chapter consists of essays written by invited contributing authors. All of these essays are related to CMMI for Services. Some of them are straightforward applications of CMMI-SVC to a particular field; for example, reporting a pilot use of the model in an IT organization or in education. In addition, we sought some unusual treatments of CMMI-SVC: How can CMMI-SVC be used in development contexts? Can CMMI-SVC be used with Agile methods—or are services already agile? Finally, several essayists describe how to approach known challenges using CMMI-SVC: adding security concerns to an appraisal, using the practices of CMMI-SVC to capitalize on what existing research tells us about superior IT service, and reminding those who buy services to be responsible consumers, even when buying from users of CMMI-SVC.

In this way, we have sought to introduce ideas about using CMMI-SVC both for those who are new to CMMI and for those who are very experienced with prior CMMI models. These essays demonstrate the promise, applicability, and versatility of CMMI for Services.

A Changing Landscape

By Peter Flower

Book authors’ comments: During the past three years, Trinity, where Peter Flower is a founder and managing director, has been working with a major aerospace and defense contractor that is transforming its business from one that develops and sells products to one that provides the services necessary to keep the products operational and to ensure that they are available when and where customers want them. This new form of “availability contracting” supports the company’s strategic move to grow its readiness and sustainment capabilities and support its customers through partnering agreements.

During Peter’s work to help make this transformation happen, he has become aware that this is not just a strategic change in direction by one organization, but is part of a global phenomenon that is becoming known as the “servitization” of products. With this realization, Peter sets the larger stage, or rather, paints the landscape, in which process improvement for service fits.

“It is not the strongest of the species that survives, nor the most intelligent, but the one most responsive to change.”

—Charles Darwin

Changing Paradigms

Services are becoming increasingly important in the global economy; indeed, the majority of current business activity is in the service sector. Services constitute more than 50 percent of the GDP in low-income countries, and as the economies of these countries continue to develop, the importance of services continues to grow. In the United States, the current list of Fortune 500 companies contains more service companies and fewer manufacturers than in previous decades.

At the same time, many products are being transformed into services, with today’s products containing a higher service component than in previous decades. In management literature, this is referred to as the “servitization” of products. Virtually every product today has a service component to it; the old dichotomy between product and service has been replaced with a service-product continuum.

When rates of change were limited, it was sufficient for management to seek incremental improvements in performance for existing products and processes. This is generally the domain in which benchmarking has been most effective. However, change in the business, social, and natural environments has been accelerating. A major concern for top management, especially in large and established companies, is the need to expand the company’s scope, not only to ensure survival and success in the present competitive arena, but also to make an effective transition to a turbulent future environment. The transition of an established organization from the present to the future competitive environment is often described in terms of a “paradigm shift.” Think of a paradigm shift as a change from one way of thinking to another. It’s a revolution, a transformation, a sort of metamorphosis. It does not just happen, but rather is driven by agents of change. To adopt a new paradigm, management needs to start thinking differently, to start thinking “outside the box.”

Throughout history, significant paradigm shifts have almost always been led by those at the fringes of a paradigm, not those with a vested interest (intellectual, financial, or otherwise) in maintaining the current paradigm, regardless of its obvious shortcomings. Paradigm shifts do not involve simply slight modifications to the existing model. Instead, they replace the old model and render it obsolete.

The current change that is engulfing every industry is unprecedented, and many organizations, even in the Fortune 500, may not survive the next five years. This shift is not to be mistaken for a passing storm, but rather is a permanent change in the weather. Understanding the reasons behind this change and managing them has become one of the primary tasks of management if a business is to survive.

The Principal Agents of Change

Globalization or global sourcing is an irreversible trend in delivery models, not a cyclical shift. Its potential benefits and risks require enterprise leaders to examine what roles and functions in a given business model can be delivered or distributed remotely, whether from nearby or from across the globe. The political, economic, and social ramifications of increased global sourcing are enormous. With China and India taking a much larger slice of the world economy, and with labor-intensive production processes continuing to shift to lower-cost economies, traditional professional IT services jobs are now being delivered by people based in emerging markets. India continues to play a leading role in this regard, but significant additional labor is now being provided by China and Russia, among other countries.

IP telephony and VoIP communications technologies, made possible by the Internet, are driving voice and data convergence activity in most major companies. In turn, this is leading to a demand for new classes of business applications. Developers are finally shifting their focus toward business processes and away from software functionality. As a result, software has become a facilitator of rapid business change, not an inhibitor. The value creation in software has shifted toward subscription services and composite applications, and away from monolithic suites of packaged software. With increased globalization and continued improvements in networking technologies, enterprises will be able to use the world as their supply base for talent and materials. The distinction among different functions, organizations, software integrators and vendors, and even industries will be increasingly blurred as packaged applications are deconstructed and delivered as service-oriented business applications.

Open source software (OSS) and service-oriented architecture (SOA) are revolutionizing software markets by moving revenue streams from license fees to services and support. In doing so, they are a catalyst for restructuring the IT industry. OSS refers to software whose code is open and can be extended and freely distributed. In contrast to proprietary software, OSS allows for collaborative development and a continuous cycle of development, review, and testing. Most global organizations now have formal open source acquisition and management strategies, and OSS applications are directly competing with closed-source products in every software infrastructure market. The SOA mode of computing, based on real-time infrastructure (RTI) architecture, enables companies to increase their flexibility in delivering IT business services. Instead of having to dedicate resources to specific roles and processes, companies can rely more on pools of multifaceted resources. These improvements in efficiency have enabled large organizations to reduce their IT hardware costs by 10 percent to 30 percent and their labor costs by 30 percent to 60 percent as service quality improves and agility increases. Large companies are now looking to fulfill their application demands through shared, rather than dedicated, sources, and to have their software delivered by external pay-as-you-go providers. For example, Amazon.com now sells its back-office functions to other online businesses, allowing its IT processes to be made available to other companies at a price, as a separate business from that of selling goods to consumers.

A problem for those moving from one paradigm to another is their inability to see what is before them. The current, but altering, paradigm consists of rules and expectations, many of which have been in place for decades. Any new data or phenomena that fail to fit those assumptions and expectations are often rejected as errors or impossibilities. Therefore, the focus of management’s attention must shift to innovation and customer service, where personal chemistry or creative insight matters more than rules and processes. Improving the productivity of knowledge workers through technology, training, and organizational change needs to be at the top of the agenda in most boardrooms in the forthcoming years. Indeed, a large percentage of senior executives think that knowledge management will offer the greatest potential for productivity gains in the next 15 years and that knowledge workers will be their most valuable source of competitive advantage. We are experiencing a transition from an era of competitive advantage gained through information to one gained from knowledge creation. Investment in information technology may yield information, but the interpretation of the information and the value added to it by the human mind is knowledge. Knowledge is the prerogative of the human mind, and not of the machines.

Unlike the quality of a tangible product, which can be measured in many ways, the quality of a service is only apparent at the point of delivery and is dependent on the customer’s point of view. There is no absolute measure of quality. Quality of service is mostly intangible, and may be perceived from differing viewpoints as the timely delivery of a service, delivery of the right service, convenient ordering and receipt of the service, and providing value for money. This intangibility requires organizations to adopt a new level of communication with their customers about the services they can order, ensuring that customers know how to request services and report incidents and that they are able to do so. They need to define and formally agree on the exact service parameters, ensure that their customers understand the pricing of the service, ensure the security and integrity of their customers’ data, and provide agreed-upon information regarding service performance in a timely and meaningful manner. Information, therefore, is not only a key input in services production, but also a key output.

In addition, an organization’s “online customers” are literally invisible, and this lack of visual and tactile presence makes it even more crucial to create a sense of personal, human-to-human connection in the online arena. While price and quality will continue to matter, personalization will increasingly play a role in how people buy products and services, and many executives believe that customer service and support will offer the greatest potential for competitive advantage in the new economy.

Management’s Challenge

As a result of this paradigm shift, a number of key challenges face many of today’s senior business managers. The major challenges include management’s visibility of actual performance and control of the activities that deliver the service, to ensure that customers get what they want. Controlling costs by utilizing the opportunities presented by the globalization of IT resources; compliance with internal processes and rules, applicable standards, regulatory requirements, and local laws; and continuous service improvement must become the order of the day.

Operational processes need to be managed in a way that provides transparency of performance, issues, and risks. Strategic plans must integrate and align IT and business goals while allowing for constant business and IT change. Business and IT partnerships and relationships must be developed that demonstrate appropriate IT governance and deliver the required, business-justified IT services (i.e., what is required, when required, and at an agreed cost). Service processes must work smoothly together to meet the business requirements and must provide for the optimization of costs and total cost of ownership (TCO) while achieving and demonstrating an appropriate return on investment (ROI). A balance must be established between outsourcing, insourcing, and smart sourcing.

Following the economic crisis, the social environment is considerably less trusting and less secure, and the public is wary of cascading risks and seems to be supportive of legislation and litigation aimed at reducing those risks, including those posed by IT. As a result, it is very likely that IT products and services will soon be subject to regulation, and organizations must be prepared to meet the requirements that regulated IT will impose on their processes, procedures, and performance.

Organizations will need to measure their effectiveness and efficiency, demonstrate to senior management that they are improving delivery success and the business value of IT, and show how they are using IT to gain a competitive advantage. Finally, organizations must understand how they are performing in comparison to a meaningful peer group, and why.

Benchmarking: A Management Tool for Change

Benchmarking is the continuous search for, and adaptation of, significantly better practices that lead to superior performance, conducted by investigating the performance and practices of other organizations (benchmark partners). In addition, benchmarking can create a crisis to facilitate the change process.

The term benchmark refers to the reference point against which performance is measured. It is the indicator of what can be, and is being, achieved. The term benchmarking refers to the actual activity of establishing benchmarks.

Most of the early work in the area of benchmarking was done in manufacturing. However, benchmarking is now a management tool that is being applied almost everywhere. Benefits of benchmarking include providing realistic and achievable targets, preventing companies from being industry-led, challenging operational complacency, creating an atmosphere conducive to continuous improvement, allowing employees to visualize the improvement (which can, in itself, be a strong motivator for change), creating a sense of urgency for improvement, confirming the belief that there is a need for change, and helping to identify weak areas and indicating what needs to be done to improve. As an example, quality performance in the 96 percent to 98 percent range was considered excellent in the early 1980s. However, Japanese companies, in the meantime, were measuring quality by a few hundred parts per million, by focusing on process control to ensure quality consistency. Thus, benchmarking is the only real way to assess industrial competitiveness and to determine how one company’s process performance compares to that of other companies.
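The gap in the quality example above is easier to see when both measures are put on the same scale. The following is a minimal sketch of that arithmetic, assuming that the percentage figures describe the share of defect-free output; the 300 parts per million value is only an assumed stand-in for “a few hundred,” and the function names are illustrative.

```python
def defects_ppm(quality_percent: float) -> float:
    """Convert a quality percentage into defective parts per million."""
    # 1 percent of one million parts is 10,000 parts.
    return (100.0 - quality_percent) * 10_000


def quality_percent(ppm: float) -> float:
    """Convert defective parts per million back into a quality percentage."""
    return 100.0 - ppm / 10_000


# "Excellent" quality in the early 1980s, expressed in parts per million.
low_end = defects_ppm(96.0)   # 40000.0 defective parts per million
high_end = defects_ppm(98.0)  # 20000.0 defective parts per million

# Assumed value for "a few hundred parts per million".
assumed_ppm = 300
equivalent_quality = quality_percent(assumed_ppm)

print(f"96% quality = {low_end:,.0f} ppm defective")
print(f"98% quality = {high_end:,.0f} ppm defective")
print(f"{assumed_ppm} ppm defective = {equivalent_quality:.2f}% quality")
```

On this scale, a company satisfied with 98 percent quality was tolerating tens of thousands of defects per million parts, which is the kind of gap that benchmarking is meant to expose.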

Benchmarking goes beyond comparisons with competitors to understanding the practices that lie behind performance gaps. It is not a method for copying the practices of competitors, but a way of seeking superior process performance by looking outside the industry. Benchmarking makes it possible to gain competitive superiority rather than competitive parity.

With the CMMI for Services reference model, the SCAMPI appraisal method, and the Partner Network of certified individuals such as instructors, appraisers, and evaluators, the Software Engineering Institute (SEI) provides a set of tools and a cadre of appropriately experienced and qualified people that organizations can use to benchmark their own business processes against widely established best practices. It must be noted, however, that there will undoubtedly be difficulties encountered when benchmarking. Significant effort and attention to detail are required to ensure that problems are minimized.

The SEI has developed a document to assist in identifying or developing appraisal methods that are compatible with the CMMI Product Suite. This document is the Appraisal Requirements for CMMI (ARC). The ARC describes a full benchmarking class of appraisals as class A. However, other CMMI-based appraisal methods might be more appropriate for a given set of needs, including self-assessments, initial appraisals, quick-look or mini-appraisals, incremental appraisals, and external appraisals. Of course, this has always been true, but the ARC formalizes these appraisals into three classes by mapping requirements to them, providing a consistency and standardization rarely seen in other appraisal methods. This is important because it recognizes that an organization can get benefits from internal appraisals with various levels of effort.

A CMMI appraisal is the process of obtaining, analyzing, evaluating, and recording information about the strengths and weaknesses of an organization’s processes and the successes and failures of its delivery. The objective is to define the problems, find solutions that offer the best value for the money, and produce a formal recommendation for action. A CMMI appraisal breaks the organization down into discrete areas that are the targets for benchmarking, and it is therefore a more focused study than other benchmarking methods as it attempts to benchmark not only business processes but also the management practices behind them. Some business processes are the same, regardless of the type of industry.

The Standard CMMI Appraisal Method for Process Improvement (SCAMPI) is the official SEI method to provide a benchmarking appraisal. Its objectives are to understand the implemented processes, identify process weaknesses (called “findings”), determine the level of satisfaction against the CMMI model (“gap analysis”), reveal development, acquisition, and service risks, and (if requested) assign ratings. Ratings are the extent to which the corresponding practices are present in the planned and implemented processes of the organization and are made based on the aggregate of evidence available to the appraisal team.

SCAMPI appraisals help in prioritizing areas of improvement and facilitate the development of a strategy for consolidating process improvements on a sustainable basis; hence SCAMPI appraisals are predominantly used as part of a process improvement program. Unless an organization measures its process strengths and weaknesses, it will not know where to focus its process improvement efforts. By knowing its actual strengths and weaknesses, it is easier for an organization to establish an effective and more focused action plan.

Summary

Driven by unprecedented technological and economic change, a paradigm shift is underway in the servitization of products. The greatest barrier in some cases is an inability or refusal to see beyond the current models of thinking; however, for enterprises to survive this shift, they must be at the forefront of change and understand how they perform within the new paradigm.

Benchmarking is the process of determining who is the very best, who sets the standard, and what that standard is. If an organization doesn’t know what the standard is, how can it compare itself against it?

One way to achieve this knowledge and to establish the necessary continuous improvement that is becoming a prerequisite for survival is to adopt CMMI for Services.

“To change is difficult. Not to change is fatal.”

—Ed Allen

Expanding Capabilities across the “Constellations”

By Mike Phillips

Book authors’ comments: We frequently hear from users who are concerned about the three separate CMMI models and looking for advice on which is the best for them to use and how to use them together. In this essay, Mike Phillips, who is the program manager for CMMI, considers the myriad ways to get value from the multiple constellations.

As we are finishing the details of the current collection of process areas that span three CMMI constellations, this essay is my opportunity to encourage “continuous thinking.” My esteemed mentor as we began and then evolved the CMMI Product Suite was our chief architect, Dr. Roger Bate. Roger left us with an amazing legacy. He imagined that organizations could look at a collection of “process areas” and choose ones they might wish to use to facilitate their process improvement journey.

Maturity levels for organizations were all right, but not as interesting to him as being able to focus attention on a collection of process areas for business benefit. Small businesses have been the first to see the advantage of this approach, as they often find the full collection of process areas in any constellation daunting. An SEI report, “CMMI Roadmaps,” describes some ways to construct thematic approaches to effective use of process areas from the CMMI for Development constellation. This report can be found on the SEI website at www.sei.cmu.edu/library/abstracts/reports/08tn010.cfm.

As we created the two new constellations, we took care to refer back to the predecessor collection of process areas in CMMI for Development. For example, in CMMI for Acquisition, we note that some acquisition organizations might need more technical detail in the requirements development effort than what we provided in Acquisition Requirements Development (ARD), and could “reach back” to CMMI-DEV’s Requirements Development (RD) process area for more assistance.

In CMMI for Services, we suggest that the Service System Development (SSD) process area is useful when the development efforts are appropriately scoped, but the full Engineering process area category in CMMI-DEV may be useful if sufficiently complex service systems are being created and delivered.

Now, with three full constellations to consider when addressing the complex organizations many of you have as your process improvement opportunities, many additional “refer to” possibilities exist. With the release of the V1.3 Product Suite, we will offer the option to declare satisfaction of any of the process areas in the CMMI portfolio. What are some of the more obvious expansions?

We have already mentioned two expansions—ARD using RD, and SSD expanded to capture RD, TS, PI, VER, and VAL. What about situations in which most of the development is done outside the organization, but final responsibility for effective systems integration remains with your organization? Perhaps a few of the acquisition process areas would be useful beyond SAM. A simple start would be to investigate using SSAD and AM as a replacement for SAM to get the additional detailed help. And ATM might give some good technical assistance in monitoring the technical progress of the elements being developed by specific partners.

As we add the contributions of CMMI-SVC to the mix, several process areas offer more ways to expand. In V1.2 of CMMI-DEV, for example, we added informative material in Risk Management to begin to address beforehand concerns about continuity of operations after some significant disruption occurs. Now, with CMMI-SVC, we have a full process area, Service Continuity (SCON), to provide robust coverage of continuity concerns. (And for those who need even more coverage, the SEI now has the Resilience Management Model [RMM] to give the greater attention that some financial institutions and similar organizations have expressed as necessary for their process improvement endeavors. For more, see www.cert.org/resilience/rmm.html.)

Another expansion worthy of consideration is to include the Service System Transition (SST) process area. Organizations that are responsible for development of new systems—and maintenance of existing systems until the new system can be brought to full capability—may find the practices contained in SST to be a useful expansion since the transition part of the lifecycle has limited coverage in CMMI-DEV. (See the essay by Lynn Penn and Suzanne Garcia Miller for more information on this approach.) In addition, CMMI-ACQ added two practices to PP and PMC to address planning for and monitoring transition into use, so the CMMI-ACQ versions of these two core process areas might couple nicely with SST.

A topic that challenged the development team for V1.3 was improved coverage of “strategy.” Those of us with acquisition experience knew the criticality of an effective acquisition strategy to program success, so the practice was added to the CMMI-ACQ version of PP. In the CMMI-SVC constellation, Strategic Service Management (STSM) has as its objective “to get the information needed to make effective strategic decisions about the set of standard services the organization maintains.” With minor interpretation, this process area could assist a development organization in determining what types of development projects should be in its product development line. The SVC constellation authors also added a robust strategy establishment practice in the CMMI-SVC version of PP (Work Planning) to “provide the business framework for planning and managing the work.”

Two process areas essential for service work were seriously considered for insertion into CMMI-DEV, V1.3: Capacity and Availability Management (CAM) and Incident Resolution and Prevention (IRP). In the end, expansion of the CMMI-DEV constellation from 22 to 24 process areas was determined to be less valuable than continuing our efforts to streamline coverage. In any case, these two process areas offer another opportunity for the type of expansion I am exploring in this essay.

Those of you who have experienced appraisals have likely seen the use of target profiles that gather the collection of process areas to be examined. Often these profiles specifically address the necessary collections of process areas associated with maturity levels, but this need not be the case. With the release of V1.3, we have ensured that the reporting system (SEI Appraisal System or SAS) is robust enough to allow depiction of process areas from multiple CMMI constellations. As use of other architecturally similar SEI models, such as the RMM mentioned earlier as well as the People CMM, grows, we will be able to depict profiles using mixtures of process areas or even practices from multiple models, giving greater value to the process improvement efforts of a growing range of complex organizations.
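To make the idea of a mixed target profile concrete, here is a minimal sketch of how such a profile might be written down and grouped by constellation. It is an illustration only and does not represent the format used by the appraisal reporting system; the mapping covers only process areas named in this essay, and the sample profile and function name are assumptions.

```python
from collections import defaultdict

# Partial, illustrative mapping of process area abbreviations mentioned in
# this essay to the constellation each one comes from.
CONSTELLATION_OF = {
    "RD": "CMMI-DEV", "TS": "CMMI-DEV", "PI": "CMMI-DEV",
    "VER": "CMMI-DEV", "VAL": "CMMI-DEV",
    "ARD": "CMMI-ACQ", "SSAD": "CMMI-ACQ", "AM": "CMMI-ACQ", "ATM": "CMMI-ACQ",
    "SSD": "CMMI-SVC", "SCON": "CMMI-SVC", "SST": "CMMI-SVC",
    "STSM": "CMMI-SVC", "CAM": "CMMI-SVC", "IRP": "CMMI-SVC",
}


def group_profile(profile):
    """Group the process areas in a target profile by source constellation."""
    grouped = defaultdict(list)
    for process_area in profile:
        grouped[CONSTELLATION_OF[process_area]].append(process_area)
    return dict(grouped)


# Hypothetical profile for a development organization that also monitors
# suppliers and must plan for continuity and transition.
profile = ["RD", "TS", "ATM", "SCON", "SST"]

for constellation, process_areas in group_profile(profile).items():
    print(f"{constellation}: {', '.join(process_areas)}")
```

Writing a profile down this way shows at a glance how far an organization is reaching beyond its home constellation.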

CMMI for Services, with a Dash of CMMI for Development

By Maggie Pabustan and Mary Jenifer

Book authors’ comments: Maggie Pabustan and Mary Jenifer implement process improvements at a Washington-area organization, AEM, that many would consider a development house, given that its mission is applied engineering. They have been strong users of the CMMI-DEV model, yet they have found that CMMI-SVC works admirably in their setting for some contracts. Here they provide an early-adopter account of how to use both models and how to switch from one to the other to best advantage.

How do you apply CMMI best practices to a call center that provides a variety of services and develops software? This essay describes the adaptation of the CMMI for Services model to a call center that exists within a larger organization having extensive experience in using the CMMI for Development model. The call center provides a mix of services, from more traditional software support services to more technical software development expertise. Since software development is not the primary work of the call center, however, applying the CMMI for Development model to its processes was insufficient. The CMMI for Services framework helped to provide all of the elements needed for standardized service delivery and software development processes.

The Development Environment

Applied Engineering Management (AEM) Corporation is a 100 percent woman-owned company, founded by Sharon deMonsabert, Ph.D., in 1986. It is located in the Washington Metropolitan Area and historically has focused on engineering, business, and software solutions. Over the years, AEM has contributed significant effort toward implementing processes based on the CMMI for Development model. Its corporate culture of process improvement is one that values the CMMI model and the benefits of using it. Two of AEM’s federal contracts have been appraised and rated as maturity level 3 using the CMMI for Development model. Both of these contracts focus on software development and maintenance. When considering the feasibility of an appraisal for a third federal contract that focuses on customer support, AEM management determined that the nature of the work was a closer match to the CMMI for Services model, so it proceeded with plans for a CMMI for Services appraisal. After considerable preparation, the organization participated in a Standard CMMI Appraisal Method for Process Improvement (SCAMPI) appraisal in 2010.

The Services Environment

This third contract provides 24/7 customer support to the worldwide users of a software application for a federal client. The customer support team, known as the Support Office, is a staff of 16 people who are tasked with six main areas of customer support.

• Site visits: These site visits are in the form of site surveys, software installation and training, and revisits/refresher training. Tasks may include user training, data analysis, data migration preparation and verification, report creation, and bar coding equipment setup.

• Support requests: Requests for assistance are received from users primarily via e-mail and telephone.

• Data analysis: Data analysis occurs in relation to the conduct of site visits and in the resolution of requests. Support Office team members provide data migration assistance and verification during site visits and identify and correct the source of data problems received via requests.

• Reporting: The Support Office creates and maintains custom reports for each site. In addition, the Support Office provides software development expertise for the more robust reporting features in the application, including universe creation and ad hoc functionality.

• Configuration Change Board (CCB) participation: Team members participate in CCB meetings. Tasks associated with these meetings include clarifying requirements with users and supporting change request analysis.

• Testing: As new versions of the software are released, the Support Office provides testing expertise to ensure correct implementation of functionality.

The Support Office has been in existence in various forms for more than 20 years. It has been a mainstay of customer service and product support for both AEM and its federal clients, and has been recognized for its dedication. The work has expanded in the past several years to include two more federal clients, and is set to expand to include additional federal clients in the coming months.

Implementing CMMI for Services

The CMMI for Services model has proven to be an excellent choice of a process framework for the Support Office. Its inclusion of core CMMI process areas and its emphasis on product and service delivery allow the Support Office to focus on its standard operating procedures, project management efforts, product development, product quality, and customer satisfaction.

Work Management Processes

Since the services provided by the Support Office are so varied, the project planning and management efforts have had to mature to cover all of these services in detail. Even though the Project Planning and Project Monitoring and Control process areas (Work Planning and Work Monitoring and Control in CMMI-SVC, V1.3) exist in both the CMMI for Development and CMMI for Services models, the maintenance of project or work strategy continues to be a key practice for Support Office management. Support Office management continuously identifies constraints and approaches to handling them, plans for resources and changes to resource allocation, and manages all associated risks. The project or work strategy drives the day-to-day management activities. Work activities within the CMMI for Services realm typically do not have fixed end dates. This is very much the case with the work performed by the Support Office. When the process implementers were reviewing the Support Office processes, much thought was given to the nature and workflow of the services they provide. As a result, the group’s processes support the continuous occurrence of work management tasks and resource scheduling. Additionally, Support Office management not only answers to multiple federal clients, but in this capacity also has to manage the site visits, support operations, data tasks, product development, and product testing for these clients. As such, the planning and monitoring of these efforts are performed with active client involvement, usually on a daily basis.

During the SCAMPI appraisal, Project Planning (in CMMI-SVC, V1.3, this becomes Work Planning) was rated at capability level 3, which was higher than the capability levels achieved by the organization for any of the other process areas in the appraisal scope. In addition, the extensive involvement of the clients was noted as a significant strength for the group.

Measurement and Analysis Processes

Over the years, the measurements collected by Support Office management have evolved significantly in both quantity and quality. These measures contribute to the business decisions made by AEM and its clients. Measures are tracked for all of the customer support areas. Examples include travel costs, user training evaluation results, request volume, request completion rate, request type, application memory usage, application availability, change request volume, and defects from testing. Although the Measurement and Analysis process area exists in both the CMMI for Development and CMMI for Services models, of particular use to the Support Office is the relationship between Measurement and Analysis and Capacity and Availability Management in the CMMI for Services model. Because the Support Office provides 24/7 customer support, both capacity and availability are highly important. The measures selected and tracked enable Support Office management and its clients to monitor status and proactively address potential incidents.

Quality Assurance Processes

Quality assurance checkpoints are found throughout the processes used by the Support Office. Each type of service includes internal review and approval, at both a process level and a work product level. Process implementers found that in the CMMI for Services environment, as in the CMMI for Development environment, the quality of the work products is highly visible to clients and users. They implemented processes to ensure high-quality work products. For example, the successful completion of site visits is of high importance to clients, in terms of both cost and customer satisfaction. Because of this, the work involved with planning for site visits is meticulously tracked. The processes for planning site visits are based on a 16-week cycle and occur in phases, with each phase having a formal checkpoint. At any point in any phase, if the plans and preparations have encountered obstacles and the checkpoint cannot be completed, then management works with the client to make a “go/no-go” decision. As another quality assurance task, process reviews are conducted by members of AEM’s corporate-level Process Improvement Team. Members of this team are external to the Support Office and conduct the reviews using their knowledge and experience with CMMI for Development process reviews and SCAMPI appraisals. They review the work products and discuss processes with members of the Support Office regularly.

Software Development Processes

A benefit of using the CMMI for Services model is that even though the model focuses on service establishment and delivery, it is still broad enough to apply to the software development work performed by the Support Office. The model allowed the Support Office to ensure that they have managed and controlled processes in place to successfully and consistently develop quality software that meets their clients’ needs. Using the CMMI for Development model, AEM has been able to address software development activities in a very detailed manner for its other contracts. In applying the CMMI for Services model to the Support Office contract, AEM’s process implementers found that the essential elements of software development still existed in the Services model. They found that these elements, including Configuration Management, Requirements Management and Development, Technical Solution, Product Integration, Verification, and Validation, are addressed sufficiently in the Services model, though not necessarily as separate process areas. Given that the scope of the Support Office’s SCAMPI appraisal was focused on maturity level 2 process areas, Service System Development was not included. As the Support Office improves its processes and strives to achieve higher maturity, Service System Development will come into play.

Organizational Processes

In the past, one of the Support Office’s weaknesses arguably could have been its employee training processes. An individual team member’s knowledge was gained primarily through his or her experiences with handling user requests and working on-site with users. The experiences and relationships built with other team members in the office were also a great source of knowledge. This caused some problems for newer team members who did not have the same exposure and learning experiences as the other team members. The process of applying the CMMI for Services model helped the Support Office focus on documenting standard processes and making them available to all team members. All team members have access to the same repository of information. Lessons learned from site visits are now shared with all members of the team, and lessons learned by one team member more quickly and systematically become lessons learned by all team members. This focus has allowed the Support Office to turn a weakness into a strength. This strength was noted during the SCAMPI appraisal.

Implementation Results

Using continuous representation, the Support Office received a maturity level 2 rating with the CMMI for Services model. One of the greatest challenges the Support Office faces in its attempt to achieve advanced process improvement and higher capability and maturity ratings is an organizational issue. While the nature of the Support Office work is services rather than development, the team must integrate itself with AEM’s overall process improvement efforts, which are more in line with development. The Support Office, AEM’s CMMI for Development groups, and its Process Improvement Team will have to work together to achieve greater cohesiveness and synergy. Interestingly, the Support Office may become the first AEM group to measure its processes against higher maturity levels. Its processes as a service organization, rather than as a development organization, lead it closer to achieving quantitative management and optimization of its processes.

In summary, the CMMI for Services model was an excellent fit for the Support Office. It provides a framework for work management, including capacity and availability management, as well as measurement and analysis opportunities. Its emphasis on service delivery matches the customer support services provided by the group, and helps the group to provide higher-quality and more consistent customer service. The CMMI for Services model is flexible enough to encompass the work involved with developing software products, and also ensures that the processes to produce software and services sufficiently correspond to those used by AEM’s software development contracts.

Enhancing Advanced Use of CMMI-DEV with CMMI-SVC Process Areas for SoS

By Suzanne Miller and Lynn Penn

Book authors’ comments: Suzanne Miller has been working for several years with process improvement and governance for systems of systems. Lynn Penn works at Lockheed Martin, where she leads the process improvement work in a setting with a long history of successful improvement for its development work. Suzanne posited in the last edition of this book that systems engineering can be usefully conceived of as a service. Lynn most recently led a team to improve service processes at Lockheed Martin. Once her team had learned about the CMMI-SVC model, they recognized that service process areas could also provide a next level of capability to already high-performing development teams. In other words, Lynn proved in the field what Suzanne had conceptualized.

The term system of systems (SoS) has long been a privileged term to define development programs that are a collection of task-oriented systems that, when combined, produce a system whose functionality surpasses the sum of its constituent systems. The developers of these “mega-systems” require discipline beyond the engineering development cycle, extending throughout the production and operations lifecycles. In the U.S. Department of Defense context, many programs like these were the early adopters of CMMI-DEV. The engineering process areas found within the CMMI-DEV model reflect a consistently useful roadmap for developing the independent “subsystems.” Given a shared understanding of the larger vision, CMMI-DEV can also help to maintain a focus on the ultimate functionality and quality attributes of the larger system of systems.

However, the operators of these complex systems of systems are more likely to see the deployment and evolution of these SoS as a service that includes products, people to train the operators on the use of the SoS components, procedures for technology refresh, and other elements of a typical service system. From their viewpoint, an engineering organization that doesn’t have a service mindset is only giving them one component of the service system they need. From the development organization’s viewpoint, participating in a system of systems context also feels more like providing a service that contains a product than like the single-product developments of the past. They are expected to provide much more skill and knowledge about their product to other constituents in the system of systems; they have much more responsibility for coordinating updates and upgrades than was typical in single product deliveries; and they are expected to understand the operational context deeply enough to help their customers make best use of their products within the end-user context, including adapting their product to changing needs. Changing the organizational mindset to include a services perspective can make it easier for a traditional development organization to transition to being an effective system of systems service provider.

With the introduction of CMMI-SVC, development programs involved in the system of systems effort are able to further enhance their product development discipline even within the context of their existing engineering lifecycle. As an example, the engineering process area of Product Integration (PI) has always been a cornerstone in effecting the combination of subsystems and the production of the final system. However, as the understanding of the operational context of a system of systems matures, it is also necessary to have the ability to add, modify, or delete subsystems while maintaining the enhanced functionality of the larger system. The Service System Transition (SST) process area provides a useful answer to this need. This CMMI-SVC process area now gives the system of systems developer guidance on methodically improving the product with new or improved functionality, while considering and managing the impact. Impact awareness is important internally to management, test and integration organization, configuration management, and quality, as well as externally to the ultimate users. All stakeholders share in the benefits of effective transition of new capabilities into the operational context. The planning of the transition coupled with product integration engineering provides both the producer and the ultimate customer with the confidence that a trusted system will continue to operate effectively as the context changes.

Another obvious gap in the development of these systems of systems is the absence of a system continuity plan. At Lockheed Martin, we have found that the CMMI-SVC process area of Service Continuity (SCON) can be effectively translated to “System” Continuity. The practices remain the same, but the object of continuity is the system or subsystem as well as the services that are typical in a sustainment activity. The ability of the producer to plan for subsystem failure, while maintaining the critical functions of the ultimate system, is a focus that can easily be missed during the SoS lifecycle. The prioritization of critical functionality (a practice within SCON) emphasizes the need for the customer and users to focus on the requirements that must not fail even under critical circumstances. Test engineers have always identified and run scenarios to ensure that the system can continue to function under adverse conditions. However, the Service Continuity guidance looks at the entire operations context, which focuses test engineering on the larger system of systems context and the potential for failure there, rather than just the adverse conditions of a particular subsystem.

Capacity and Availability Management (CAM) provides guidance to ensure that all subsystems, as well as deployment and sustainment services, meet their expected functionality and usage. When we apply CAM and translate the resources it calls for into subsystems and functionality, it becomes a valuable tool in system development. Management can use CAM practices to make sure costs that are associated with subsystem development and maintenance are within budget. Developers can use CAM practices to monitor whether the required subsystems are sufficient and available when needed. Risk Management (RSKM), a core process area, can be used to build on CAM to ensure that subsystem failures are minimized. Understanding capacity and availability is critical during SoS stress and endurance testing, since this kind of testing requires an accurate representation of the system within its intended operational environment, as called for in CAM.

Although these examples of translating CMMI-SVC process areas into the engineering context are specific to large systems of systems, they can be adapted to any development environment. Looking at the CMMI-SVC process areas as an extension to CMMI-DEV should be encouraged, especially for organizations whose product commitments extend into the sustainment and operations portions of the lifecycle. The ability of one constellation to enhance the guidance from other constellations makes CMMI even more versatile as a process improvement tool than when it is operating as a single constellation. Proactively selecting process areas to meet a specific customer need (for a product, a service, or both) demonstrates, both internally and externally, a true understanding of the processes necessary to solve customer problems, not just to provide a product that customers have to adapt to obtain optimal performance. Shifting our mental model from pure product development to product development within the service of supporting an operational need demonstrates a multidimensional commitment to quality throughout the development and operations lifecycle that end users particularly value.

Multiple Paths to Service Maturity

By Gary Coleman

Book authors’ comments: Gary Coleman provides insight from CACI, an organization that has faced a challenge shared by many: which of the CMMI models to use, and also whether CMMI and other frameworks, such as ISO, can be used together, or whether an organization must choose one framework and adapt it to all circumstances. Gary demonstrates how CACI has adapted to using the various models and frameworks based on the context of the line of business, following multiple paths to maturity.

CACI is a 13,000-person company that provides professional services and IT solutions in the defense, intelligence, homeland security, and federal civilian government arenas. One of our greatest distinctions is the process improvement program we began more than 15 years ago to achieve industry-recognized credentials that will bring the greatest value and innovation to our clients. This has resulted in CACI earning CMM-SW, CMMI-DEV, ISO-9001, and ISO-20000 qualifications. Across the company, CACI has executed a multiyear push to implement the best practices of the ITIL framework, and the Project Management Institute’s PMBOK. Most recently, CACI achieved an enterprise-wide maturity level 3 rating against CMMI-DEV.

One aspect of the multimodel environment in which we operate is the competing nature of these models. We are becoming adept at dealing with this competition, and being able to select the bits and pieces of each model that will support the needs of a given program. Our organizational Process Asset Library contains process assets that support all of these models, as well as best practice examples that we collect and evaluate as we proceed through our project work.

This essay presents three cases of groups within CACI that have pursued credentials supporting their service-related business. None of these teams were starting from scratch, and each of them had different starting points based on prior process work, customer interest, and management vision. They arrived at different end points as well.

• Case 1: adapted CMMI-DEV to its service areas

• Case 2: uses ISO-9001 to cover services, and may move to ISO-20000

• Case 3: has made the choice to move to CMMI-SVC

All three cases exist in the multimodel world, and have had to make their choices based on a variety of factors, under a range of competitive, technical, and customer pressures.

Case 1: CMMI-DEV Maturity Level 2 to CMMI-DEV Maturity Level 3 Adapted for Services, 2004–2007

CACI’s first move into model-based services took place in a group that was developing products and delivering services. In most cases, the services were not related to the developed product, and while the distinct product and service teams shared a management team and some organizational functions, they had little need to organize between teams or to coordinate joint activities.

The drive to pursue a credential came about as management anticipated their customers’ interest in CMMI credentials. They had already installed an ISO-9001 quality management system to cover their management practices, and that had been a foundation for the first CMMI-DEV effort covering their engineering projects at maturity level 2, which they achieved in 2005.

As the service content of their business grew, it became clear to management that they could apply some of their lessons learned in the engineering areas, and the discipline of the CMMI, to their service activities. In fact, they came to realize that it would be easier to include these service functions in their process activities than it would be to work them by exception or exclusion. This insight led them to apply the CMMI-DEV model across the board.

There were challenges, of course, especially where the development of common standard processes was concerned. It was not always easy to see how some of the development engineering practices could be applied to services, and there was not much prior industry experience to rely upon. Attempts to write processes that could be applied in both development and service settings sometimes resulted in processes that were so generic in nature that significant interpretational guidance, and complex tailoring guidance, would be needed to support them. A large but very helpful effort went into building this guidance so that the project teams would know what was expected of them in their contexts of either products or services. This guidance was also very useful in the effort to put together the practice implementation indicators for the appraisal, and as supporting materials for the appraisal team members during the appraisal to remind them of the interpretations that were being relied upon.

In spite of the challenges, there were areas that were easily shared, and their experience showed that many of the project management areas and organizational functions could be defined in a way that allowed for their use in both engineering and service-related projects. They defined methods and tools for the management of requirements and risks, for example, that could be easily visualized, taught, and executed in both domains. These successes have encouraged them to continue to look for other areas where their process efforts can be shared, and to concentrate on improvements that can be leveraged across both their services and their product development programs.

Case 2: CMM-SW to CMMI-DEV and ISO 9001

This group consisted of two main organizations: one that developed and maintained a suite of software products, and another that supported the customer’s use of those products in the field (installation, training, help desk, etc.). These two programs shared a common senior management function and an overarching quality assurance support function, but little else. In fact, initially the two programs were under different contracts and interacted with very different client communities.

The drive to CMM for their engineering side began as a result of the customer’s interest, and the CACI team worked closely with the customer teams so that both CACI and the customer achieved separate CMM ratings, and eventually CMMI ratings, at nearly the same time. Successes with the results of CMM-based process improvement led enlightened management to recognize the potential benefit of applying process improvement techniques on the service side. ISO-9001 was chosen at that time because the company had a considerable experience base in implementing ISO-9001-based Quality Management Systems in other areas, and no other suitable frameworks could be found at that time.

As both the ISO-9001 and CMMI systems developed in isolation at first, senior management noticed that similar processes, tools, reporting methods, and training existed in both areas. This revelation was the drive that started an effort to consolidate similar approaches for economies of scale. Even though the types of work being done in each area were quite different, there were many areas of commonality. This was especially true in the management and support processes, where the common process improvement team could define single approaches. A side benefit of this effort was that a new mindset that emphasized the potential for common processes overcame an older mindset that focused on the differences between the development and service teams.

When the customer proposed that the two contracts be brought together under a single umbrella contract, CACI was ready to propose a unified approach that not only included shared processes in areas that made sense, but also showed how the customer would benefit from the increased interconnectedness of the programs under CACI’s management. Tighter connection between the service-side help desk staff and the development-side requirements analysts would improve the refinement of existing requirements and uncover new requirements that would enhance both the products and their support. That same connection improved the testing capabilities because the early interactions of the development and service sides ensured better understanding of customers’ needs. The help desk and other customer-facing services could be more responsive to customers through improved communications with developers, and much of this would be due to the synergy of a common language of process and product.

This group has watched other groups within CACI that have implemented ISO-20000 on their service activities, and has also begun to evaluate whether the CMMI-SVC model offers benefits to them and their customer. They wonder if they have already achieved many of the benefits of the common set of foundation processes that the CMMI constellations share. They appreciate the flexibility of the ISO-20000 standard, and its relative clarity of interpretation. In addition, the sustainment aspects of the ISO-20000 external surveillance audits (missing in the CMMI world) appeal to the people who have relied on this in the ISO-9001 world. In the end, the decision of which way to go next will be heavily influenced by the customer’s direction.

Case 3: CMM-SW to CMMI-DEV Maturity Level 3 and Maturity Level 5 to CMMI-SVC

This is another group with a long history of process improvement within CACI, dating back to the days of CMM for Software. Their engineering and development efforts cover a range of maintenance of legacy software applications, re-platforming of some of those legacy programs, and development of new programs. In addition, this same group provides a variety of specialty services to their customers that range from consulting in Six Sigma to the installation and maintenance of servers and data centers.

The drive to CMM, and subsequently to CMMI-DEV, came initially from the customer, but was fueled by CACI management’s recognition that a credential would represent a “stake in the ground,” establishing their capability. At the same time, it would also support the improvement and growth of their capability to satisfy their customer’s growing needs for higher-quality deliverables. When the customer raised the bar and identified a requirement for maturity level 4, this team had already begun their move to high maturity, targeting maturity level 5. They achieved level 5 and continue to grow their quantitative skills to this day.

This foundation in process improvement, and a culture of always looking to get better, was the basis for new inquiries into where they could apply their skills, and the obvious candidates were the services areas of their business. By now, the company had achieved several ISO-20000 credentials in a number of other IT groups, and there was certainly a motivation to take advantage of these prior successes. However, the strong CMMI awareness of this group inclined them to consider the CMMI-SVC model. The fact that the CMMI-SVC model covered a broader range of service types was important to the team in their decision, and the fact that it was organized in a way that allowed them to “reuse” and “retool” existing process assets and tools for the shared process areas is what forced the decision to go with CMMI-SVC.

A formal analysis was done and the results were shared with both CACI management and with the customer in a kickoff meeting where the plan to move forward with CMMI-SVC was presented. They anticipate that other customers will eventually see the value of the credential. They expect that CMMI-SVC will help them to grow their process excellence into the services areas, giving them the benefits that they have had for so long in their engineering and development areas.

With these three cases, all within one company, it’s clear that the claims of the CMMI product team at the SEI have merit. Adopters can use CMMI-DEV in service domains and CMMI-SVC in development, as well as in their primary intended discipline. In addition, CMMI models coexist in a compatible fashion in organizations using ISO, PMBOK, and other frameworks.

Using CMMI-DEV and ISO 20000 Assets in Adopting CMMI-SVC

By Alison Darken and Pam Schoppert

Book authors’ comments: SAIC has been a participant on both versions of the CMMI for Services model teams, and Alison Darken and Pam Schoppert worked on the initiative to adopt CMMI-SVC at SAIC. Like the prior essay authors, they found that it is feasible. They also found that it required care, even with their deep knowledge of a new model. They offer some detailed experience on what kinds of services seem to suit CMMI-SVC content best and what terminology differences among the models may call for additional understanding and adaptation of even a robust process asset set.

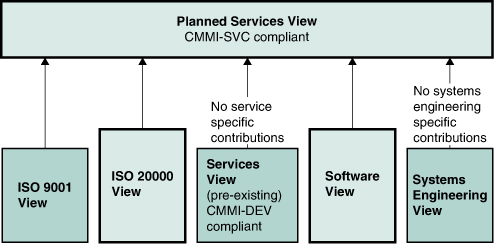

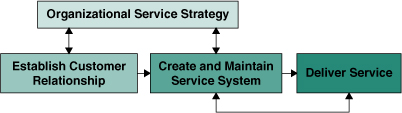

Upon release of Version 1.2 of the CMMI-SVC model, SAIC began developing a corporate-level CMMI-SVC compliant set of process documents, templates, and training as part of SAIC’s EngineeringEdge assets. The objective was to develop a CMMI-SVC compliant asset set consistent with existing process assets without exceeding CMMI-SVC requirements. The expected relationship between CMMI-SVC and the other models and standards already implemented in corporate asset collections was key to planning this development effort. At the time development began, SAIC had asset sets compliant with CMMI for Development (V1.2), ISO 20000, and ISO 9001:2000 (see Figure 6.1).

Figure 6.1 Preexisting SAIC Asset Sources

SAIC’s earlier work in organizational process development and adoption provided the CMMI-SVC initiative with the following:

• An understanding of the overall CMMI architecture and approach

• ITIL expertise

• Expectations of reuse of assets in existing “views” (e.g., asset collections)

Nine months after the project started, the CMMI-SVC compliant view was released, on time and within budget, using a part-time development team of four process engineers and managers. We learned that our existing understanding and resources, based on CMMI-DEV and ISO 20000, while helpful overall, were also the source of many unexpected challenges. Following are some of the key lessons we learned from our effort.

Understanding the Service Spectrum

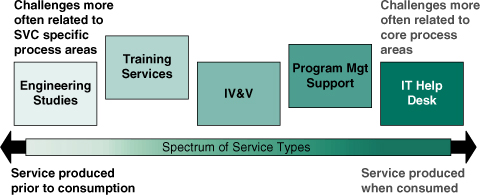

It became apparent very early on that CMMI-SVC was not a standard, cookie-cutter fit for the myriad service types performed by SAIC and other companies. Some services better match the CMMI-SVC definition in that they are simultaneously produced and consumed (e.g., help desk and technical support services). On the other side of the spectrum of service types are those that are more developmental in nature, but do not involve software or system engineering full-lifecycle development. Examples include training development, engineering studies, and independent verification and validation (IV&V).

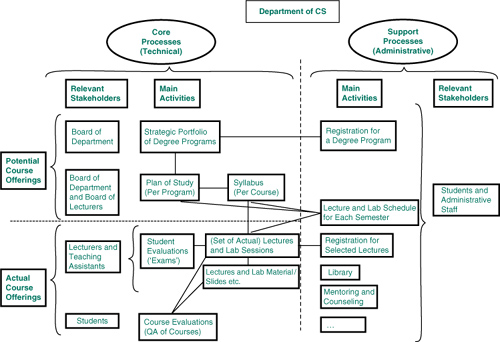

Organizations need to understand their particular service types and realize that service type can affect application of the CMMI-SVC model (see Figure 6.2). We found that the model’s service-specific process areas were easiest to apply on the right side of the spectrum where service delivery was immediate upon request. Process areas such as Service Delivery (SD) and Incident Resolution and Prevention (IRP) were a natural fit; core process areas in the area of project management required more manipulation. In contrast, services to the left of the spectrum required up-front development before a service request could be satisfied. Often, considerable design, development, and verification were necessary to deliver just a single instance of a service, such as the development and delivery of a training class. We also had to address development of tangible products often accompanying these services and requiring the same level of rigor as products produced under CMMI-DEV. The model assumes such products are part of the service system, but in some cases they are unique to that one service request and, at SAIC, are not properly treated as part of the service system (e.g., an engineering study). Because of the developmental feel and reduced volume of service instances, service-specific process areas such as Service System Development (SSD) and Capacity and Availability Management (CAM) required more massaging. Understanding the service spectrum and the specific service catalog of an organization can ease adoption and implementation of CMMI-SVC.

Figure 6.2 Applying CMMI-SVC to the Service Spectrum

Rethinking the Core Process Areas

Understanding the CMMI-SVC model required a paradigm shift. Prior knowledge of CMMI can be both an asset and a handicap in dealing with CMMI-SVC. To avoid going down the wrong path, we had to make a very conscious effort to avoid over-generalizing from past experience to CMMI-SVC. For instance, for some core process areas (Process Management process areas, Measurement and Analysis [MA], and Configuration Management [CM]), while the practices were the same, they needed to be viewed from a different perspective. The following are the areas in which we encountered a particular challenge.

• It was necessary to move from the project-centric view of CMMI-DEV and shift to a “program”-oriented process where a program was an ongoing activity that accommodated many specific projects, tasks, or individual service requests. ISO 20000 reflects this perspective, but with CMMI-SVC we were fighting the slant of the core process areas and our own past history with CMMI.

• An appreciation of the two-phase nature of a program was key. During establishment, the program matches well with a project and with the practices in CMMI-DEV. This phase produces an actual product, the service system, at its conclusion. After service delivery begins, the picture shifts away from development.

• A centralized notion of metrics as applied to software projects required rethinking as a distributed activity in terms of collection, analysis, and use. Metrics were also refocused from measuring the progress of a project (applicable during service system development) to measuring performance once delivery began.

• The CM process area in CMMI provided too little guidance and asked too little to responsibly serve an IT service project. We struggled with the tension between maintaining our goal of not exceeding the expectations of the standard and the knowledge that an IT services program would get in a lot of trouble with only that minimal level of rigor.

• It took some retraining to avoid conflating defects with incidents.

• As explained later in this essay, we adopted the term problem to refer to underlying causes. The goal in IRP related to this activity did not require the full application of Causal Analysis and Resolution (CAR), even CAR without a quantitative management component. We had to make a particular effort not to require more in this area than CMMI-SVC required.

• CMMI-SVC required more-detailed, lower level assets for IT services. We had to find a solution for this while still maintaining a flexible, generic, corporate-level set. We adopted a template for manuals that contains shells for the needed detailed work instructions.

Understanding Customer Relationships

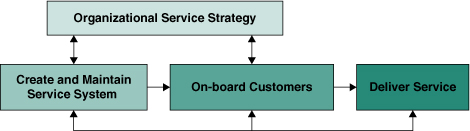

SAIC works through contracts with government and commercial customers. In a company such as SAIC, two situations need to be addressed: SAIC provides a standard set of services for many customers (see Figure 6.3), and SAIC performs one or more dedicated services under contract for a particular customer (see Figure 6.4). Our approach to establishing the service system and on-boarding customers varied based on for whom and how the service was provisioned. This led to distinct service lifecycles.

Figure 6.3 Service Provider Paradigm

Figure 6.4 Contract-Based Paradigm

The lifecycles shown in Figure 6.3 and Figure 6.4 each represent a distinct paradigm related to our services business.

• An organization may use the “field of dreams” approach of “If you build it, they will come.” In this paradigm, the organization has a vision for a service before obtaining customer commitments. The activities related to on-boarding customers come after the service catalog in STSM is developed and after the service system is established in SSD. This situation was addressed by a service provider lifecycle.

• Organizations may provision services in response to a defined service agreement. This flow begins with identification and contractual negotiation with a customer, followed by establishment of the service system and service delivery. In this situation, the contract-based lifecycle is employed. It still mandates alignment to strategic organizational service management.

Both lifecycle models have the same key elements, but the order of activities varies.

To better understand the decisions made, it helps to be aware that, overall, our service asset view and our other asset views have the following features.

• They are primarily intended for work efforts that support external customers.

• They must comply with internal SAIC corporate policies including program management, contract management, and pre-award policies. Some of these fall outside of CMMI, but are essential to the way SAIC does business.

Some work efforts or contracts are equivalent to fulfilling a single service request (e.g., one engineering study, one training course). This presents some challenges because some process areas, such as STSM, SSD, and IRP, are not cost-effective or practical for a single request. Our solution was to develop an organization-level approach for these efforts to avoid over-burdening such small contracts with the requirements of these process areas and to shift the overhead to the organization owning the contract.

Understanding the New Terminology

We found that some terminology used in CMMI was not in sync with ISO 20000 and ITIL as already implemented in our environment. Sometimes the ITIL and ISO terminology was a better fit on its own merits. There were few such terms, but they were used pervasively.

• “Service request” has a very specific meaning in the ITIL and ISO 20000 world, and it is not the same as the way it is defined in CMMI. An ITIL service request is a preapproved, standard request (e.g., change out a printer ribbon), as opposed to the use in CMMI to encompass not just preapproved, standard requests, but all customer requests. Because ISO 20000 and ITIL were already present in the organization, we were concerned about confusion. We also thought it was very useful to have a specific term for preapproved, standard requests since their handling differs from nonstandard requests.

• A term was needed to refer easily to the pursuit and management of underlying causes. The term problem is used in ITIL and makes the subject easier to discuss.

• As discussed earlier, management needed to be addressed in terms of programs, not just projects. With CMMI-SVC, Version 1.3, the term work is introduced in place of project. While this is helpful in avoiding some of the assumptions associated with project, in the SAIC environment, we see a need to address both program-type management and project management in a service context. The ongoing service effort is managed as a program, and larger individual service requests or service system modifications are managed as projects.

Understanding How to Reuse Existing CMMI Process Assets

At the time we developed our CMMI-SVC-compliant set, SAIC had three existing CMMI-DEV-compliant asset views from which to draw: Software, Systems Engineering, and Services. The latter attempted to fit services to the CMMI-DEV model. None of the assets unique to the preexisting Services View were reused, nor were any unique to the Systems Engineering View. All CMMI-DEV view assets that proved useful for CMMI-SVC were found in the Software View or were standard assets shared across multiple views. The exact relationship with the Software View is as follows.

• Sixteen percent of the Services View assets, mostly organizational assets, could be shared between views without modification.

• Twenty-seven percent, mostly from PM, QA, CM, and peer review, could be shared with relatively minor modification.

• Twenty-seven percent, unique to the Services View, were developed from a Software View source.

• The Software View was indicated in the Services View as an optional source for assets to support service system development (e.g., the Software Test Specification Template).

The types of modifications required to share were almost exclusively due to the following.

• Creation of assets unique to the Services View: References were now needed to cite both the Software View version and the Services View (e.g., citations might be required to both the Configuration Management Plan Template used in Software and Systems Engineering and the Service Configuration Management Plan Template used only in Services).

• Differences in terminology: Even in describing core process area activities, the same terminology would not always work for both software and services. For instance, using problem to describe underlying causes overloaded a term that had always been used as a synonym for defect in SAIC CMMI-DEV views. This meant we could not share our change tracking form between views.

There were some cases, such as metrics, that differed enough to require a unique asset with a different approach. In a significant number of cases, however, we didn’t feel that an asset could be shared even though both versions often directed virtually the same activities and corresponded to the same practices. The motive for not sharing, in the majority of cases, was to increase user convenience. Our philosophy is that ease of use is more important than reducing complexity from the organization’s perspective. User pushback is much more difficult to address than finding ways around maintaining multiple versions of the same thing, each suited to a different user community. We wanted to spare users from plowing through too many references to alternative versions of specific documents and so forth. This was the main reason, for instance, for developing a configuration management plan template for services distinct from that used for software and systems engineering.

We were also able to share or use as a source several of the customized training classes supporting the Software View process. Twenty-nine percent of the classes for the Services View had sources in the Software View. Forty-three percent were shared with the Software View after some modification.

Understanding How to Use and Reuse ISO 20000 Assets

Five percent of the assets unique to the Services View, all related to CMMI-SVC specific process areas, were developed from an ISO 20000 source. The small number of ISO 20000 sources is misleading. The assets developed from them were among the most significant in the revised Services View and would have required a great deal of effort to create without a source. They included plan templates for capacity and availability management, continuity management, and service transition, and a request, incident, and problem manual.

We were surprised that we couldn’t share service-specific assets and had to do so much work to create the revised Services View version from the ISO 20000 source. This was due to the following factors.

• Terminology differences. This was not as serious as it might have been, because in many cases, we adopted the ISO 20000 or ITIL terminology (e.g., service request, problem management).

• References to other ISO 20000 assets not included in the Services View.

• Lack of overlap between CMMI and ISO 20000 as to what functions received more detail, rigor, or elaboration. This was a serious issue. Some examples include the following.

  • QA: ISO 20000 appears to leave the definition of QA as understood in CMMI to the ISO 9001 standard. The only auditing discussed in the ISO 20000 standard refers to auditing against the ISO 20000 standard itself. Our ISO 9001 View did not contribute to our CMMI-SVC effort. Where the ISO 9001 scope overlapped with CMMI-SVC, we had better-matching CMMI-DEV resources available.

  • CM: ISO 20000, as an IT standard, focuses on some recordkeeping issues that are essential to IT services (e.g., a configuration management database [CMDB]) but that wouldn’t necessarily be needed in all services. It also delves more deeply into change management and specifically addresses document management.

  • PM: ISO 20000 frequently focuses on different aspects of project management than CMMI, such as the customer complaint process and financial management.

  • Organizational process: In ISO 20000, the organization and the service program are essentially identical; therefore, OPF, OPD, and OT occur within the service program.

• Structural differences as to how activities were grouped. Although this might not seem to make any difference—a practice is a practice no matter how you organize the discussion—it does result in certain natural groupings of responsibilities and practitioner ways of thinking. The main examples are inter-workings of availability, continuity, and capacity, and the definition of CM.

  • In ISO 20000, availability and continuity are paired, whereas CMMI joins availability and capacity. Furthermore, the expectations for what would be discussed within each differed in some aspects. We had created separate ISO 20000 plan templates for each of these three areas as well as a manual template for capacity management, four documents in all. For the Services View, a Capacity and Availability Plan Template and a Continuity Plan Template were sufficient to address the practices. The requirement for model representations of the service isn’t included in ISO 20000.

  • In ISO 20000, the CMMI CM practices are distributed among three functional areas: Release, Change Management, and Configuration Management. Configuration Management is confined to the recordkeeping and repository maintenance function. Attention is also given to document management. These factors introduce a larger number of practices that need to be met and a multiplicity of roles that in the CMMI-SVC View would be assigned to the CM manager and possibly to the CM team.

Specialized ISO 20000 process training courses were heavily used as sources for 29 percent of the CMMI-SVC process classes.

Conclusion

Going into the development effort, we expected a relatively painless exercise in which we would be able to reuse our CMMI-DEV assets for CMMI-SVC core process areas with relatively little modification, and base our assets associated with service-specific activities directly on ISO 20000. We discovered that these transitions were not as effortless as we had hoped, and that, in fact, without exercising some care, prior knowledge of CMMI-DEV and ISO 20000 could take us down the wrong path. On balance, it was very valuable to have a foundation in CMMI-DEV before attempting to take on CMMI-SVC, but implementers should exercise caution about applying what they know, and approach process adoption for services from a fresh perspective.

Experience-Based Expectations for CMMI-SVC

By Takeshige Miyoshi