Appendix D

Asymptotic Behavior of Functions

Asymptotic functions or notations are used to represent the growth rate of functions which are necessary in many applications in computing, communications, networking, and signal processing. Asymptotic notations represent certain characteristics of growth functions. They are also called order notations or Landau’s notations. One of the most relevant applications is estimation of computational time complexity of algorithms. In fact, for any algorithm, the resource requirements, such as time taken to complete the execution, amount of memory required for operation, or number of messages to be exchanged for functioning, can be represented as a mathematical function. Usually, such functions are represented in terms of the input size (n) where input can be the number of items to be processed by an algorithm or the number of nodes in a network. Once the resource required is estimated as a function, its growth rate can be represented using the asymptotic notations. The three most widely used notations, Big Oh (), Big Omega (), and Big Theta (), are briefly presented in this appendix.

D.1 Big Oh () Notation

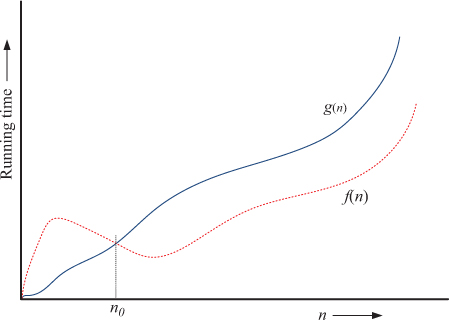

Big Oh () notation is one of the popularly used asymptotic notations. captures the upper bound of a growth function, such as the representation of time complexity of an algorithm, as a function of the input size or the resources required for the operation of a system.

For a given function, f (n), if there exist positive real numbers c > 0 and n0 such that for all values of n > n0, f (n) ≤ cg(n), then it can be represented as . That is, the function g(n) can be considered as an upper bound of the given function f (n) as the value of n grows larger. For example, a network algorithm that takes an execution time of f (n) = 5n2 + 3n + 34 can be said to be of . An algorithm that takes a constant amount of time irrespective of the input size is said to be of constant time complexity, represented as . For example, for a cellular base station that broadcasts a message to all n mobile devices in the network, the number of transmissions required for message transmissions, typically known as message complexity, can be said to be because message complexity is independent of the number of mobile devices that exist in the network. When considering, in the same example, the number of unicast transmissions to the mobile devices, one can find that the message complexity can be .

Figure D.1 shows a graphical representation of notation.

Figure D.1. A graphical representation of Big Oh () notation

D.2 Big Omega () Notation

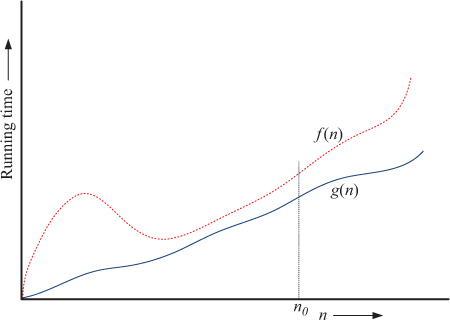

For a given function, f (n), if there exist positive real numbers c > 0 and n0 such that for all values of n > n0, f (n) ≥ cg(n), then it can be represented as f (n) = Ω(g(n)). That is, the function g(n) can be considered as a lower bound of the given function f (g). For example, a network algorithm that takes an execution time of f (n) = 5n2 + 3n + 34 can be said to be of either Ω(n2) or Ω(n). Of these two lower bounds, the tighter lower bound is Ω(n2). Figure D.2 shows a graphical representation of Ω(·) notation.

Figure D.2. A graphical representation of Big Omega (Ω) notation

D.3 Big Theta () Notation

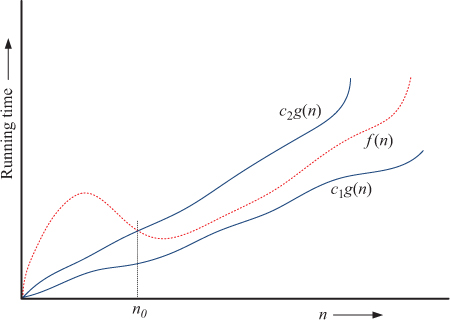

For a given function, f (n), if there exist positive real numbers c1, c2 > 0, and n0 such that for all values of n > n0, c1g(x) ≤ f (n) ≤ c2g(n), then it can be represented as . That is, the function g(n) can be considered both as an upper as well as a lower bound of the given function f (n). Therefore, the Θ(·) notation is used to represent tight bound.

Figure D.3 shows a representation of Θ(·) notation. Consider the same example we mentioned in the previous sections of a network algorithm that takes an execution time of f (n) = 5n2 + 3n + 34. Since the algorithm’s upper and lower bounds, respectively, are and Ω(n2), it can be said to be Θ(n2), which is a very tight bound.

Figure D.3. A graphical representation of Big Theta (Θ) notation

Readers can refer to more details on asymptotic notations, algorithmic time complexities, and algorithm design in [20].