On the Numerical Solution of Equilibria in Auction Models with Asymmetries within the Private-Values Paradigm

Timothy P. Hubbard, Department of Economics, Colby College, USA, and Harry J. Paarsch, Department of Economics, University of Melbourne, Australia

Abstract

We describe and compare numerical methods used to approximate equilibrium bid functions in models of auctions as games of incomplete information. In such games, private values are modeled as draws from bidder-specific type distributions and pay-your-bid rules are used to determine transactions prices. We provide a formal comparison of the performance of these numerical methods (based on speed and accuracy) and suggest ways in which they can be improved and extended as well as applied to new settings.

Keywords

First-price auctions; Asymmetric auctions; Private-values model; Numerical solutions

JEL Classification Codes

C20; D44; L1

1 Motivation and Introduction

During the past half century, economists have made considerable progress in understanding the theoretical structure of strategic behavior under market mechanisms, such as auctions, when the number of potential participants is relatively small; see Krishna (2010) for a comprehensive presentation and evaluation of progress.

Perhaps the most significant breakthrough in understanding behavior at auctions was made by Vickrey (1961) who modeled auctions as noncooperative games of incomplete information where bidders have private information concerning their type that they exploit when tendering offers for the good for sale. One analytic device commonly used to describe bidder motivation at auctions is a continuous random variable that represents individual-specific heterogeneity in types, which is typically interpreted as heterogeneity in valuations. The conceptual experiment involves each potential bidder receiving an independent draw from a distribution of valuations. Conditional on his draw, a bidder is assumed to act purposefully, maximizing either the expected profit or the expected utility of profit from winning the good for sale. Another frequently made assumption is that the bidders are ex ante symmetric, their independent draws coming from the same distribution of valuations, an assumption that then allows the researcher to focus on a representative agent’s decision rule when characterizing the Bayes-Nash equilibrium to the auction game, particularly under the pay-your-bid pricing rule, often referred to as first-price auctions, at least by economists.1

The assumption of symmetry is made largely for computational convenience. When the draws of potential bidders are independent, but from different distributions—urns, if you like—then the system of first-order differential equations that characterizes a Bayes-Nash equilibrium usually does not have a convenient closed-form solution: typically, approximate solutions can only be calculated numerically.2

Asymmetries may exist in practice for any number of reasons in addition to the standard heterogeneous-distributions case. For example, an asymmetric first-price model is relevant when bidders are assumed to draw valuations from the same distribution, but have different preferences (for instance, risk-averse bidders might differ by their Arrow-Pratt coefficient of relative risk aversion), when bidders collude and form coalitions, and when the auctioneer (perhaps the government) grants preference to a class of bidders. Bid preferences are particularly interesting because the auctioneer, for whatever reason, deliberately introduces an asymmetry when evaluating bids, even though bidders may be symmetric. In addition, admitting several objects complicates matters considerably; see, for example, Weber (1983). In fact, economic theorists distinguish between multi-object and multi-unit auctions. At multi-unit auctions, it matters not which unit a bidder wins, but rather the aggregate number of units he wins, while at multi-object auctions it matters which specific object(s) a bidder wins. An example of a multi-object auction would involve the sale of an apple and an orange, while an example of a multi-unit auction would involve the sale of two identical apples. At the heart of characterizing Bayes-Nash equilibria in private-values models of sequential, multi-unit auctions (under either first-price or second-price rules) is the solution to an asymmetric-auction game of incomplete information.

When asymmetries exist, canonical and important results from auction theory are not guaranteed to hold. For example, asymmetries can lead to inefficient allocations: outcomes in which the bidder who values the item most does not win the auction, which violates a condition required for the important Revenue Equivalence Theorem; see Myerson (1981). Identifying conditions under which auction mechanisms can be ranked in terms of expected revenue for the seller is an active area of theoretical research; Kirkegaard (2012) has recently shown that the first-price auction yields more revenue than the second-price auction when (roughly) the strong bidder’s distribution is “flatter” and “more dispersed” than the weak bidder’s distribution. Likewise, borrowing elements from first-price auctions, Lebrun (2012) demonstrated that introducing a small pay-your-bid element into second-price auctions can increase expected revenues garnered by the seller in asymmetric settings. Solving various asymmetric models and calculating expected revenue (perhaps through simulations) can suggest further directions for investigation. Thus, understanding how to solve for equilibria in models of asymmetric auctions is of central importance to economic theory as well as empirical analysis and policy evaluation.

Computation time is of critical importance to structural econometricians who often need to solve for the equilibrium (inverse-) bid functions within an estimation routine for each candidate vector of parameters when recovering the distributions of the latent characteristics, which may be conditioned on covariates as well.3 Most structural econometric work is motivated by the fact that researchers would like to consider counterfactual exercises to make policy recommendations. Because users of auctions are interested in raising as much revenue as possible (or, in the case of procurement, saving as much money as possible), the design of an optimal auction is critical to raising (saving) money. If applied models can capture reality sufficiently well, then they can influence policies at auctions in practice.4 Unfortunately, poor approximations to the bidding strategies can lead to biased and inconsistent estimates of the structural elements of the model. Consequently, both accuracy and speed are important when solving for equilibria in models of asymmetric first-price auctions. It will be important to keep both of these considerations in mind as we investigate ways of solving such models.

Our chapter is in six additional sections: in the next, we develop the notation that is used in the remainder of the chapter, then introduce some known results, and, finally, demonstrate how things work within a well-understood environment. We apologize in advance for abusing the English language somewhat: specifically, when we refer to a first-price auction, we mean an auction at which either the highest bidder wins the auction and pays his bid or, in the procurement context, the lowest bidder wins the auction and is paid his bid. This vocabulary is standard among researchers concerned with auctions; see, for example, Paarsch and Hong (2006). When the distinction is important, we shall be specific concerning what we mean.

Subsequently, in Section 3, we describe some well-known numerical strategies that have been used to solve two-point boundary-value problems that are similar to ones researchers face when investigating models of asymmetric first-price auctions. We use this section not only to introduce the strategies, but also so that we can refer to them later when discussing what researchers concerned with solving for equilibrium (inverse-) bid functions at asymmetric first-price auctions have done. In Section 4, we then discuss research that either directly or indirectly contributed to improving computational strategies to solve for bidding strategies at asymmetric first-price auctions. In particular, we focus on the work of Marshall et al. (1994), Bajari (2001), Fibich and Gavious (2003), Li and Riley (2007), Gayle and Richard (2008), Hubbard and Paarsch (2009), Fibich and Gavish (2011) as well as Hubbard et al. (2013). In Section 5, we depict the solutions to some examples of asymmetric first-price auctions to illustrate how the numerical strategies can be used to investigate problems that would be difficult to study analytically. In fact, following Hubbard and Paarsch (2011), we present one example that has received very little attention thus far: asymmetric auctions within the affiliated private-values paradigm (APVP). In Section 6, we compare the established strategies and suggest ways in which they can be extended or improved by future research. We summarize and conclude our work in Section 7. We have also provided the computer code used to solve the examples of asymmetric first-price auctions presented below at the following website: http://www.colby.edu/economics/faculty/thubbard/code/hpfpacode.zip.

2 Theoretical Model

In this section, we first develop our notation, then introduce some known results, and finally demonstrate how the methods work within a well-understood environment.

2.1 Notation

Suppose that potential bidders at the auction are members of a set ![]() where the letter

where the letter ![]() indexes the members. Because the main focus in auction theory is asymmetric information, which economic theorists have chosen to represent as random variables, the bulk of our notation centers around a consistent way to describe random variables. Typically, we denote random variables by uppercase Roman letters—for example,

indexes the members. Because the main focus in auction theory is asymmetric information, which economic theorists have chosen to represent as random variables, the bulk of our notation centers around a consistent way to describe random variables. Typically, we denote random variables by uppercase Roman letters—for example, ![]() or

or ![]() . Realizations of random variables are then denoted by lowercase Roman letters; for example,

. Realizations of random variables are then denoted by lowercase Roman letters; for example, ![]() is a realization of

is a realization of ![]() . Probability density and cumulative distribution functions are denoted

. Probability density and cumulative distribution functions are denoted ![]() and

and ![]() , respectively. When there are different distributions (urns), we again use the subscript to refer to a given bidder’s distribution, but use the set

, respectively. When there are different distributions (urns), we again use the subscript to refer to a given bidder’s distribution, but use the set ![]() numbering. Hence,

numbering. Hence, ![]() and

and ![]() for specific bidders, but

for specific bidders, but ![]() and

and ![]() , in general. If necessary, a vector

, in general. If necessary, a vector ![]() of random variables is denoted

of random variables is denoted ![]() , while a realization, without bidder

, while a realization, without bidder ![]() , is denoted

, is denoted ![]() . The vectors

. The vectors ![]() and

and ![]() are denoted

are denoted ![]() and

and ![]() , respectively.

, respectively.

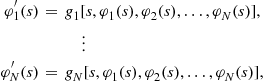

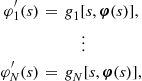

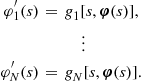

The lowercase Greek letters ![]() and

and ![]() are used to denote equilibrium bid functions:

are used to denote equilibrium bid functions: ![]() for a bid at a first-price auction where the choice variable is

for a bid at a first-price auction where the choice variable is ![]() . Again, if necessary, we use

. Again, if necessary, we use ![]() to collect all strategies, and

to collect all strategies, and ![]() to collect the choice variables, while

to collect the choice variables, while ![]() is used to collect all the strategies except that of bidder

is used to collect all the strategies except that of bidder ![]() and

and ![]() collects all the choices except that of bidder

collects all the choices except that of bidder ![]() . We use

. We use ![]() to denote the inverse-bid function and

to denote the inverse-bid function and ![]() to collect all of the inverse-bid functions. Now,

to collect all of the inverse-bid functions. Now, ![]() denotes a tender at a low-price auction, where the choice variable is

denotes a tender at a low-price auction, where the choice variable is ![]() . We use

. We use ![]() to collect all strategies and

to collect all strategies and ![]() to collect the choice variables, while

to collect the choice variables, while ![]() is used to collect all the strategies except that of bidder

is used to collect all the strategies except that of bidder ![]() and

and ![]() collects all the choices except that of bidder

collects all the choices except that of bidder ![]() .

.

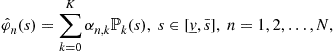

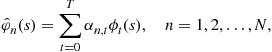

We denote by $P_k(x)$ a general family of polynomials, and use $T_k(x)$ for Chebyshev polynomials, and $B_k(x)$ for Bernstein polynomials.

We use $\boldsymbol{\alpha}$ to collect the parameters of the approximate equilibrium inverse-bid functions.

2.2 Derivation of Symmetric Bayes-Nash Equilibrium

Consider a seller who seeks to divest a single object at the highest price. The seller invites sealed-bid tenders from $N$ potential buyers. After the close of tenders, the bids are opened more or less simultaneously and the object is awarded to the highest bidder. The winner then pays the seller what he bid.

Suppose each potential buyer has a private value for the object for sale. Assume that each potential buyer knows his private value, but not those of his competitors. Assume that $V_n$, the value of potential buyer $n$, is an independent draw from the cumulative distribution function $F_V(v)$, which is continuous, having an associated probability density function $f_V(v)$ that is positive on the compact interval $[\underline{v}, \bar{v}]$ where $\underline{v}$ is weakly greater than zero. Assume that the number of potential buyers $N$ as well as the cumulative distribution function of values $F_V(v)$ and the support $[\underline{v}, \bar{v}]$ are common knowledge. This environment is often referred to as the symmetric independent private-value paradigm (IPVP).

Suppose potential buyers are risk neutral. Thus, when buyer ![]() , who has valuation

, who has valuation ![]() , submits bid

, submits bid ![]() , he receives the following pay-off:

, he receives the following pay-off:

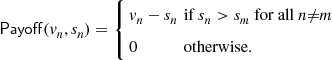

(1)

(1)

Assume that buyer ![]() chooses

chooses ![]() to maximize his expected profit

to maximize his expected profit

![]() (2)

(2)

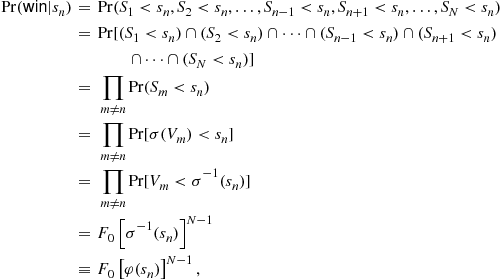

What is the structure of ![]() ? Within this framework, the identity of bidders (their subscript

? Within this framework, the identity of bidders (their subscript ![]() ) is irrelevant because all bidders are ex ante identical. Thus, without loss of generality, we can focus on the problem faced by bidder

) is irrelevant because all bidders are ex ante identical. Thus, without loss of generality, we can focus on the problem faced by bidder ![]() . Suppose the opponents of bidder

. Suppose the opponents of bidder ![]() use a bid strategy that is a strictly increasing, continuous, and differentiable function

use a bid strategy that is a strictly increasing, continuous, and differentiable function ![]() . Bidder

. Bidder ![]() will win the auction with tender

will win the auction with tender ![]() when all of his opponents bid less than him because their valuations of the object are less than his. Thus,

when all of his opponents bid less than him because their valuations of the object are less than his. Thus,

so Eq. (2) can be written as

![]() (3)

(3)

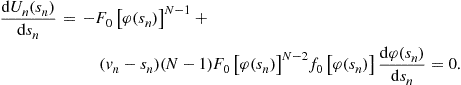

where ![]() is the inverse-bid function. Differentiating Eq. (3) with respect to

is the inverse-bid function. Differentiating Eq. (3) with respect to ![]() yields the following first-order condition:

yields the following first-order condition:

(4)

(4)

In a Bayes-Nash equilibrium, $b_1$ equals $\beta(v_1)$. Also, under monotonicity, we know from the inverse function theorem that $\varphi'(b_1)$ equals $1/\beta'(v_1)$, so dropping the 1 subscript yields

$$\beta'(v) = (N-1)\,\frac{f_V(v)}{F_V(v)}\,\big[v - \beta(v)\big]. \qquad (5)$$

Note that, within the symmetric IPVP, optimal behavior is characterized by a first-order ordinary differential equation (ODE); that is, the differential equation involves only the valuation ![]() , the bid function

, the bid function ![]() , and the first derivative of the bid function

, and the first derivative of the bid function ![]() , which we shall often denote in short-hand by

, which we shall often denote in short-hand by ![]() , below. Although the valuation

, below. Although the valuation ![]() enters nonlinearly through the functions

enters nonlinearly through the functions ![]() and

and ![]() , the differential equation is considered linear because

, the differential equation is considered linear because ![]() can be expressed as a linear function of

can be expressed as a linear function of ![]() . These features make the solution to this differential equation tractable, but as we shall see in the subsection that follows, they only hold within the symmetric IPVP.

. These features make the solution to this differential equation tractable, but as we shall see in the subsection that follows, they only hold within the symmetric IPVP.

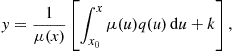

Equation (5) is among the few differential equations that have a closed-form solution. Following Boyce and DiPrima (1977) and using a notation that will be familiar to students of calculus, we note that when differential equations are of the following form:

![]()

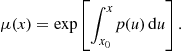

there exists a function ![]() such that

such that

![]()

Thus,

![]()

When ![]() is positive, as it will be in the auction case because it is the ratio of two positive functions multiplied by a positive integer,

is positive, as it will be in the auction case because it is the ratio of two positive functions multiplied by a positive integer,

![]()

so

![]()

whence

Therefore,

![]()

where ![]() is chosen to satisfy an initial condition

is chosen to satisfy an initial condition ![]() equals

equals ![]() .

.
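The integrating-factor argument can be checked numerically. The minimal sketch below, standard-library Python only, normalizes $\mu(x_0) = 1$ by computing $\mu(x) = \exp\big[\int_{x_0}^{x} p(t)\,dt\big]$ with composite Simpson quadrature, which makes the constant $c$ equal to $y_0$, and then evaluates $y(x)$ from the last display. The helper names `simpson` and `solve_linear_first_order` are hypothetical, chosen for illustration. Eq. (5) fits this template with $p(v) = (N-1)f_V(v)/F_V(v)$ and $q(v) = (N-1)v f_V(v)/F_V(v)$, so $\mu(v)$ is proportional to $F_V(v)^{N-1}$; because $F_V(\underline{v}) = 0$, the normalization cannot be placed exactly at $\underline{v}$, which is one reason to integrate analytically in the auction case.

```python
import math


def simpson(g, a, b, n=200):
    """Composite Simpson's rule for the integral of g over [a, b]; n is made even."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3


def solve_linear_first_order(p, q, x0, y0, x):
    """Solve y'(x) + p(x) y(x) = q(x), y(x0) = y0, with an integrating factor.

    mu(t) = exp(int_{x0}^{t} p(s) ds) is normalized so that mu(x0) = 1, hence
    y(x) = [int_{x0}^{x} mu(t) q(t) dt + y0] / mu(x).
    """
    def mu(t):
        return math.exp(simpson(p, x0, t))

    integral = simpson(lambda t: mu(t) * q(t), x0, x)
    return (integral + y0) / mu(x)


# Example: y' + 2y = 1 with y(0) = 0; the exact solution is (1 - exp(-2x)) / 2.
approx = solve_linear_first_order(lambda t: 2.0, lambda t: 1.0, 0.0, 0.0, 1.0)
exact = (1.0 - math.exp(-2.0)) / 2.0
print(approx, exact)
```

The nested quadrature is wasteful (each evaluation of $\mu$ re-integrates $p$), but it keeps the correspondence with the formulas transparent.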

To solve Eq. (5) in closed form, a condition relating $v$ and $\beta(v)$ must be known. Fortunately, economic theory provides us with this known relationship at one critical point: in the absence of a reserve price (a minimum price that must be bid), $\beta(\underline{v})$ equals $\underline{v}$. That is, a potential buyer having the lowest value $\underline{v}$ will bid his value. In the presence of a reserve price $r_0$, one has $\beta(r_0)$ equals $r_0$. The appropriate initial condition, together with the differential equation, constitutes an initial-value problem which, in the absence of a reserve price, has the following unique solution:

$$\beta(v) = v - \frac{\int_{\underline{v}}^{v} F_V(u)^{N-1}\,du}{F_V(v)^{N-1}}. \qquad (6)$$

(With a reserve price $r_0$, the lower limit of integration becomes $r_0$, for $v \geq r_0$.) This is the symmetric Bayes-Nash equilibrium bid function of the $n$th bidder; it was characterized by Holt (1980) as well as Riley and Samuelson (1981).
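Eq. (6) is straightforward to evaluate by quadrature. The sketch below uses a hypothetical `symmetric_bid` helper and composite Simpson's rule, an arbitrary choice of quadrature made for this illustration, and checks two textbook cases: with values uniform on $[0, 1]$, Eq. (6) reduces to $\beta(v) = (N-1)v/N$; with $F_V(v) = v^2$ on $[0, 1]$ and $N = 3$, it gives $\beta(v) = v - (v^5/5)/v^4 = 4v/5$, and a central-difference derivative confirms that this satisfies Eq. (5).

```python
def simpson(g, a, b, n=400):
    """Composite Simpson's rule for the integral of g over [a, b]; n is made even."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3


def symmetric_bid(v, F, v_low, n_bidders):
    """Eq. (6) without a reserve price:
    beta(v) = v - int_{v_low}^{v} F(u)^(N-1) du / F(v)^(N-1)."""
    if v <= v_low:
        return v_low  # initial condition beta(v_low) = v_low
    numerator = simpson(lambda u: F(u) ** (n_bidders - 1), v_low, v)
    return v - numerator / F(v) ** (n_bidders - 1)


def uniform_cdf(u):
    return u


# Uniform values on [0, 1] with N = 4: Eq. (6) gives beta(v) = 3v/4.
bid_uniform = symmetric_bid(0.8, uniform_cdf, 0.0, 4)


def F(u):
    return u ** 2  # CDF on [0, 1]


def f(u):
    return 2.0 * u  # matching density


# With N = 3, Eq. (6) gives beta(v) = 4v/5; check Eq. (5) by central differences.
N, v, dv = 3, 0.5, 1e-5
slope = (symmetric_bid(v + dv, F, 0.0, N) - symmetric_bid(v - dv, F, 0.0, N)) / (2 * dv)
foc_rhs = (N - 1) * f(v) / F(v) * (v - symmetric_bid(v, F, 0.0, N))
print(bid_uniform, slope, foc_rhs)  # close to 0.6, 0.8, and 0.8
```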

We next consider the case where bidders are ex ante asymmetric, proceeding in stages. In an asymmetric environment, a number of complications arise. In particular, unlike the model with identical bidders presented above, typically no closed-form expression for the bidding strategies exists in an asymmetric environment (except in a few special cases described below), so numerical methods are required.

2.3 Bidders from Two Different Urns

Consider a first-price auction with just two potential buyers in the absence of a reserve price and assuming risk neutrality. We present the two-bidder case first to highlight the interdependence among bidders and to characterize explicitly many features of the first-price auction within the IPVP when bidders are asymmetric. In particular, we contrast features of this problem with those of the symmetric case presented in the previous subsection. Suppose that bidder 1 gets an independent draw from urn 1, denoted $F_1(v)$, while bidder 2 gets an independent draw from urn 2, denoted $F_2(v)$. Assume that the two valuation distributions have the same support $[\underline{v}, \bar{v}]$. The larger of the two bids wins the auction, and the winner pays what he bid.

Now, $U_1(b_1)$, the expected profit of bid $b_1$ to player 1, can be written as

$$U_1(b_1) = (v_1 - b_1)\Pr(\text{win} \mid b_1),$$

while $U_2(b_2)$, the expected profit of bid $b_2$ to player 2, can be written as

$$U_2(b_2) = (v_2 - b_2)\Pr(\text{win} \mid b_2).$$

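Assuming each potential buyer $n$ is using a bid $b_n$ equal to $\beta_n(v_n)$ that is monotonically increasing in his value $v_n$, we can write the probability of winning the auction as

$$\Pr(\text{win} \mid b_1) = \Pr\big(\beta_2(V_2) < b_1\big) = \Pr\big(V_2 < \varphi_2(b_1)\big) = F_2\big(\varphi_2(b_1)\big),$$

$$\Pr(\text{win} \mid b_2) = \Pr\big(\beta_1(V_1) < b_2\big) = \Pr\big(V_1 < \varphi_1(b_2)\big) = F_1\big(\varphi_1(b_2)\big).$$

Thus, the expected profit function for bidder 1 is

$$U_1(b_1) = (v_1 - b_1)\,F_2\big(\varphi_2(b_1)\big),$$

while the expected profit function for bidder 2 is

$$U_2(b_2) = (v_2 - b_2)\,F_1\big(\varphi_1(b_2)\big).$$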

As in the symmetric case, the presence of bidder ![]() ’s inverse-bid function in bidder

’s inverse-bid function in bidder ![]() ’s objective makes clear the trade-off bidder

’s objective makes clear the trade-off bidder ![]() faces: by submitting a lower bid, he increases the profit he receives when he wins the auction, but he decreases his probability of winning the auction.

faces: by submitting a lower bid, he increases the profit he receives when he wins the auction, but he decreases his probability of winning the auction.

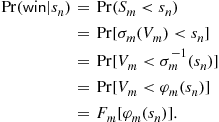

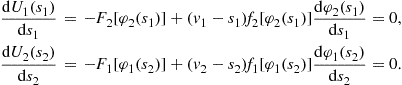

To construct the pair of Bayes-Nash equilibrium bid functions, first maximize each expected profit function with respect to its argument. The necessary first-order condition for these maximization problems are:

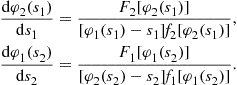

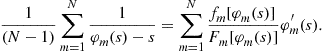

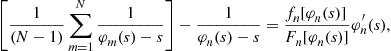

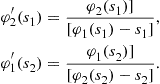

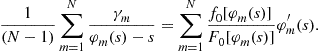

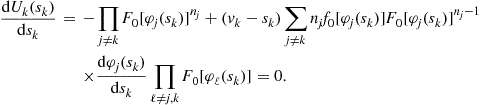

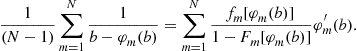

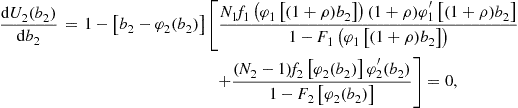

Now, a Bayes-Nash equilibrium is characterized by the following pair of differential equations:5

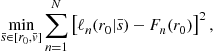

(7)

(7)

These differential equations allow us to describe some essential features of the problem. First, as within the symmetric IPVP, each individual equation constitutes a first-order differential equation because the highest derivative term in each equation is the first derivative of the function of interest. Unlike within the symmetric IPVP, however, we now have a system of differential equations, one for each bidder. Moreover, note that the functions in this system are the inverse-bid functions $\varphi_n(b)$, not the bid functions $\beta_n(v)$ themselves. Within the symmetric IPVP, however, we were concerned with an equilibrium in which all (homogeneous) bidders adopted the same bidding strategy $\beta(v)$. This, together with monotonicity of the bid function, allowed us to map the first-order condition from a differential equation characterizing the inverse-bid function $\varphi(b)$ to a differential equation characterizing the bid function $\beta(v)$.6 In the asymmetric environment, it is typically impossible to do this because, in general,

$$\varphi_1(b) \neq \varphi_2(b).$$

While we would like to solve for the bid functions, it is typically impossible to do this directly within the asymmetric IPVP.

The inverse-bid functions $\varphi_n(b)$ are helpful because they allow us to express the probability of winning the auction for any choice $b$; bidder $n$ considers the probability that the other bidder will draw a valuation that will induce him to submit a lower bid in equilibrium than the bid player $n$ submits. Because the bidders draw valuations from different urns, they do not use the same bidding strategy; the valuation for which it is optimal to submit a bid $b$ is, in general, different for the two bidders. Furthermore, the differential equations we obtain are no longer linear. Finally, note that each differential equation involves a bid $b$, the derivative of the inverse-bid function for one of the players, which we shall denote hereafter by $\varphi_n'(b)$, and the inverse-bid functions of each of the bidders, $\varphi_1(b)$ as well as $\varphi_2(b)$. Mathematicians would refer to this system of ODEs as nonautonomous because the system involves the bid $b$ explicitly.7 This last fact highlights the interdependence among players that is common to game-theoretic models. Thus, in terms of deriving the equilibrium inverse-bid functions within the asymmetric IPVP, we must solve a nonlinear system of first-order ODEs.
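To make the structure of system (7) concrete, the sketch below evaluates its right-hand side at a bid $b$ given current values of the two inverse-bid functions. The function name `two_bidder_rhs` is hypothetical. The slope of bidder 1's inverse-bid function depends on bidder 2's inverse-bid function through the gap $\varphi_2(b) - b$, and vice versa, which is the interdependence just described. As a sanity check, when both bidders draw from the uniform distribution on $[0, 1]$, the symmetric solution is $\beta(v) = v/2$, so $\varphi(b) = 2b$ and both derivatives should equal 2.

```python
def two_bidder_rhs(b, phi1, phi2, F1, f1, F2, f2):
    """Right-hand side of system (7) at bid b.

    phi1, phi2: current values of phi_1(b) and phi_2(b).
    F1, f1, F2, f2: CDFs and PDFs of urns 1 and 2.
    Returns (phi_1'(b), phi_2'(b)).
    """
    if phi1 <= b or phi2 <= b:
        raise ValueError("system (7) requires phi_n(b) > b for both bidders")
    # From bidder 2's first-order condition: the slope of phi_1 involves phi_2.
    dphi1 = F1(phi1) / (f1(phi1) * (phi2 - b))
    # From bidder 1's first-order condition: the slope of phi_2 involves phi_1.
    dphi2 = F2(phi2) / (f2(phi2) * (phi1 - b))
    return dphi1, dphi2


def uniform_cdf(v):
    return v


def uniform_pdf(v):
    return 1.0


# Symmetric uniform check: phi(b) = 2b, so phi'(b) = 2 for both bidders.
d1, d2 = two_bidder_rhs(0.3, 0.6, 0.6, uniform_cdf, uniform_pdf, uniform_cdf, uniform_pdf)
print(d1, d2)
```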

The case in which each of two bidders draws his valuation from a different urn has allowed us to contrast the features of the problem with those of the symmetric environment in a transparent way. There are also conditions that the equilibrium bid functions must satisfy, and which allow us to solve the pair of differential equations, but we delay that discussion until after we present the $N$-bidder case.

2.4 General Model

We now extend the model of the first-price auction presented above to one with $N$ potential buyers in the absence of a reserve price and assuming risk neutrality. Suppose that bidder $n$ gets an independent draw from urn $n$, denoted $F_n(v)$. Assume that all valuation distributions have a common, compact support $[\underline{v}, \bar{v}]$.8 Then the largest of the $N$ bids wins the auction, and the winner pays what he bid.

Again, ![]() , the expected profit of bid

, the expected profit of bid ![]() to player

to player ![]() , can be written as

, can be written as

![]()

Assuming each potential buyer ![]() is using a bid

is using a bid ![]() that is monotonically increasing in his value

that is monotonically increasing in his value ![]() , we can write the probability of winning the auction as

, we can write the probability of winning the auction as

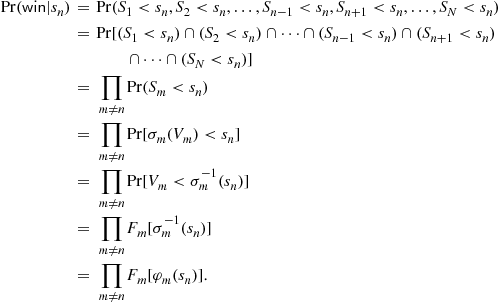

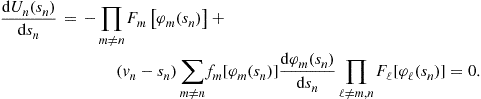

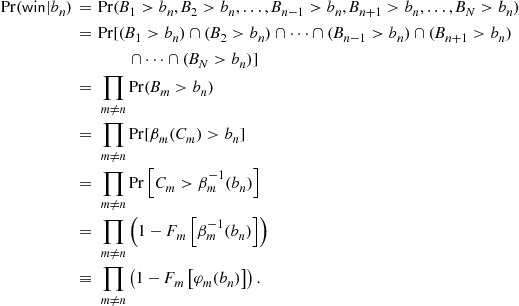

Thus, the expected profit function for bidder ![]() is

is

![]()

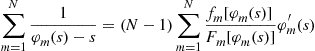

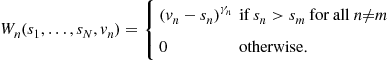

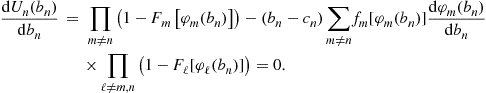
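The expected-profit function can also be explored directly. In the sketch below (hypothetical helper names, and a crude grid search rather than any method discussed in this chapter), bidder $n$'s opponents are summarized by pairs $(F_m, \varphi_m)$, the win probability is the product of the $F_m(\varphi_m(b))$, and the best reply is found by searching over bids in $[0, v_n]$. With three bidders whose values are uniform on $[0, 1]$, the symmetric equilibrium from Eq. (6) is $\beta(v) = 2v/3$, so opponents' inverse-bid functions are $\varphi(b) = 3b/2$; a bidder with $v_n = 0.9$ should then find it optimal to bid about $\beta(0.9) = 0.6$.

```python
def expected_profit(b, v, opponents):
    """U_n(b) = (v - b) * product over opponents m of F_m(phi_m(b))."""
    prob_win = 1.0
    for F_m, phi_m in opponents:
        prob_win *= F_m(phi_m(b))
    return (v - b) * prob_win


def best_response(v, opponents, n_grid=10_001):
    """Crude best reply: maximize U_n over an evenly spaced grid of bids in [0, v]."""
    bids = [v * i / (n_grid - 1) for i in range(n_grid)]
    return max(bids, key=lambda b: expected_profit(b, v, opponents))


def uniform_cdf(x):
    # Clip to [0, 1] because phi_m(b) can exceed the top of the support.
    return min(max(x, 0.0), 1.0)


def inverse_bid(b):
    # Inverse of beta(v) = 2v/3, the symmetric equilibrium for N = 3 uniform bidders.
    return 1.5 * b


opponents = [(uniform_cdf, inverse_bid), (uniform_cdf, inverse_bid)]
print(best_response(0.9, opponents))  # close to 0.6
```

That the best reply reproduces the equilibrium bid is simply the statement that $\beta$ is a mutual best response; for asymmetric urns, the same check applies to any candidate set of approximate inverse-bid functions.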

To construct the Bayes-Nash equilibrium bid functions, first maximize each expected profit function with respect to its argument. The necessary first-order condition for a representative maximization problem is:

$$-\prod_{m \neq n}F_m\big(\varphi_m(b_n)\big) + (v_n - b_n)\sum_{m \neq n} f_m\big(\varphi_m(b_n)\big)\,\varphi_m'(b_n)\prod_{\ell \neq n, m}F_\ell\big(\varphi_\ell(b_n)\big) = 0.$$

Replacing $b_n$ with a generic bid $b$ and noting that $v_n$ equals $\varphi_n(b)$, we can rearrange this first-order condition as

$$\sum_{m \neq n}\frac{f_m\big(\varphi_m(b)\big)}{F_m\big(\varphi_m(b)\big)}\,\varphi_m'(b) = \frac{1}{\varphi_n(b) - b}, \qquad (8)$$

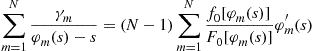

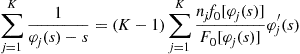

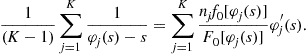

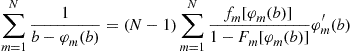

which can be summed over all ![]() bidders to yield

bidders to yield

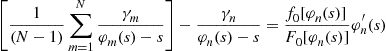

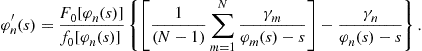

or

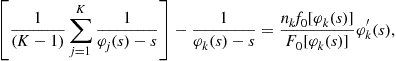

Subtracting Eq. (8) from this latter expression yields

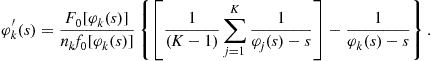

which leads to the, perhaps traditional, differential equation formulation

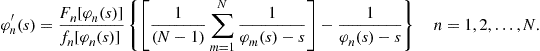

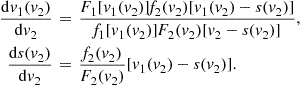

(9)

(9)
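Eq. (9) translates directly into code. The sketch below, with a hypothetical `n_bidder_rhs` helper, returns the $N$ slopes $\varphi_n'(b)$ given the current values $\varphi_1(b), \ldots, \varphi_N(b)$, in a form any ODE integrator could call. Two checks follow from the text: with three symmetric uniform bidders on $[0, 1]$, $\varphi(b) = 3b/2$, so every slope should be $3/2$; with $N = 2$, the bracket collapses to $1/(\varphi_m(b) - b)$ and Eq. (9) reproduces system (7).

```python
def n_bidder_rhs(b, phis, Fs, fs):
    """Right-hand side of Eq. (9): returns [phi_1'(b), ..., phi_N'(b)]."""
    N = len(phis)
    gaps = [phi - b for phi in phis]
    if min(gaps) <= 0.0:
        raise ValueError("Eq. (9) requires phi_n(b) > b for every bidder")
    total = sum(1.0 / g for g in gaps)  # sum over all m of 1 / (phi_m(b) - b)
    slopes = []
    for n in range(N):
        others = total - 1.0 / gaps[n]  # sum over m != n only
        bracket = -(N - 2) / gaps[n] + others
        slopes.append(Fs[n](phis[n]) / ((N - 1) * fs[n](phis[n])) * bracket)
    return slopes


def uniform_cdf(v):
    return v


def uniform_pdf(v):
    return 1.0


def square_cdf(v):
    return v ** 2  # F(v) = v^2 on [0, 1]


def square_pdf(v):
    return 2.0 * v


# Three symmetric uniform bidders: phi(b) = 1.5 b, so every slope should be 1.5.
sym = n_bidder_rhs(0.4, [0.6] * 3, [uniform_cdf] * 3, [uniform_pdf] * 3)

# N = 2 reproduces system (7); by hand at these illustrative values,
# phi_1' = 0.5 / (1.0 * 0.4) = 1.25 and phi_2' = 0.36 / (1.2 * 0.3) = 1.0.
two = n_bidder_rhs(0.2, [0.5, 0.6], [uniform_cdf, square_cdf], [uniform_pdf, square_pdf])
print(sym, two)
```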

In addition to this system of differential equations (or system (7) in the two-bidder case presented above), two types of boundary conditions exist. The first generalizes the initial condition from the symmetric environment to an asymmetric one:

Left-Boundary Condition on Bid Functions: $\beta_n(\underline{v}) = \underline{v}$ for all $n = 1, \ldots, N$. This left-boundary condition simply requires any bidder who draws the lowest valuation possible to bid his valuation. It extends the condition from the environment where there was only one type of bidder to one where there are $N$ types of bidders.9 We shall need to use the boundary condition(s) with the system of differential equations to solve for the inverse-bid functions, as discussed above. Specifically, we shall be interested in solving for a monotone pure-strategy equilibrium (MPSE) in which each bidder adopts a bidding strategy that maximizes expected pay-offs given the strategies of the other players. Given this focus, we can translate the left-boundary condition defined above into the following boundary condition which involves the inverse-bid functions:

Left-Boundary Condition on Inverse-Bid Functions: $\varphi_n(\underline{v}) = \underline{v}$ for all $n = 1, \ldots, N$. The second type of condition obtains at the right boundary. Specifically,

Right-Boundary Condition on Bid Functions: $\beta_n(\bar{v}) = \bar{s}$ for all $n = 1, \ldots, N$. The reader may find this condition somewhat surprising: even though the bidders may adopt different bidding strategies, all bidders will choose to submit the same bid when they draw the highest valuation possible. For specific details and proofs of this condition, see part (2) of Theorem 1 of Lebrun (1999), Lemma 10 of Maskin and Riley (2003), and, for a revealed preference-style argument, Footnote 12 of Kirkegaard (2009).10 Informally, at least in the two-bidder case, no bidder will submit a bid that exceeds the highest bid chosen by his opponent: were he to do so, he could decrease his bid by some small amount $\epsilon > 0$, still win the auction with certainty, and thereby increase his expected profit. This right-boundary condition also has a counterpart which involves the inverse-bid functions.

Right-Boundary Condition on Inverse-Bid Functions: $\varphi_n(\bar{s}) = \bar{v}$ for all $n = 1, \ldots, N$.

A few comments are in order here: first, because we now have conditions at both low and high valuations (bids), the problem is no longer an initial-value problem, but rather a boundary-value problem. Thus, we are interested in the solution to the system of differential equations which satisfies both the left-boundary condition on the inverse-bid functions and the right-boundary condition on the inverse-bid functions. In the mathematics literature, this is referred to as a two-point boundary-value problem. The critical difference between an initial-value problem and a boundary-value problem is that auxiliary conditions concern the solution at one point in an initial-value problem, while auxiliary conditions concern the solution at several points (in this case, two) in a boundary-value problem. The other challenging component of this problem is that the common high bid $\bar{s}$ is unknown a priori, and is determined endogenously by the behavior of bidders. This means that the high bid $\bar{s}$ must be solved for as part of the solution to the system of differential equations. That is, we have a system of differential equations that are defined over a domain (because we are solving for the inverse-bid functions) that is unknown a priori. In this sense, the problem is considered a free boundary-value problem. Note, too, that this system is overidentified: while there are $N$ differential equations, there are $2N$ boundary conditions as well. In addition, some properties of the solution are known beforehand: bidders should not submit bids that exceed their valuations and the (inverse-) bid functions must be monotonic.
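The free boundary-value structure can be made concrete with backward shooting, one common approach to problems of this kind (we sketch it here under our own assumptions; it is not necessarily the method developed in this chapter). Guess the common high bid $\bar{s}$, integrate leftward from $\varphi_n(\bar{s}) = \bar{v}$ using $\varphi_n'(s) = \{F_n[\varphi_n(s)]/f_n[\varphi_n(s)]\}\{[1/(N-1)]\sum_{m=1}^{N} 1/[\varphi_m(s) - s] - 1/[\varphi_n(s) - s]\}$, and bisect on $\bar{s}$: if some $\varphi_n(s)$ falls to $s$ (a bid reaching its value) before the left end, the guess was too high; otherwise it was too low. The function names, the fixed-step RK4 integrator, and the cutoff `eps`, which stops the integration short of the singularity at $\underline{v}$, are hypothetical choices.

```python
# Sketch: backward shooting for the free boundary-value problem (our assumptions).
# Common support [v_lower, v_upper]; the caller supplies each ratio F_n(v)/f_n(v);
# fixed-step RK4; integration stops at v_lower + eps to avoid the singularity.


def rhs_system9(s, phi, F_over_f):
    """Return [phi_n'(s)]; raise ValueError if some phi_n(s) <= s."""
    N = len(phi)
    gaps = [p - s for p in phi]
    if not all(g > 0 for g in gaps):  # also rejects NaN
        raise ValueError("infeasible point: some bid is at or above its value")
    common = sum(1.0 / g for g in gaps) / (N - 1)
    return [F_over_f[n](phi[n]) * (common - 1.0 / gaps[n]) for n in range(N)]


def feasible_backward(s_bar, v_lower, v_upper, F_over_f, eps=1e-3, n_steps=1000):
    """Integrate leftward from phi_n(s_bar) = v_upper; True if every bid stays below value."""
    s_end = v_lower + eps
    if s_bar <= s_end:
        return True
    h = (s_end - s_bar) / n_steps  # negative step: move leftward in s
    s, phi = s_bar, [v_upper] * len(F_over_f)
    try:
        for _ in range(n_steps):
            k1 = rhs_system9(s, phi, F_over_f)
            k2 = rhs_system9(s + h / 2, [p + h / 2 * k for p, k in zip(phi, k1)], F_over_f)
            k3 = rhs_system9(s + h / 2, [p + h / 2 * k for p, k in zip(phi, k2)], F_over_f)
            k4 = rhs_system9(s + h, [p + h * k for p, k in zip(phi, k3)], F_over_f)
            phi = [p + h / 6 * (a + 2 * b + 2 * c + d)
                   for p, a, b, c, d in zip(phi, k1, k2, k3, k4)]
            s += h
        rhs_system9(s, phi, F_over_f)  # feasibility check at the last point
    except (ValueError, ZeroDivisionError, OverflowError):
        return False
    return True


def solve_high_bid(v_lower, v_upper, F_over_f, tol=1e-6):
    """Bisect on the unknown common high bid s_bar."""
    lo, hi = v_lower, v_upper
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if feasible_backward(mid, v_lower, v_upper, F_over_f):
            lo = mid  # reached the left end with every bid below value: too low
        else:
            hi = mid  # some bid hit its value on the way down: too high
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    uniform = [lambda v: v] * 3  # F(v)/f(v) = v for Uniform[0, 1]
    print(solve_high_bid(0.0, 1.0, uniform))  # symmetric benchmark is (N - 1)/N
    # Asymmetric illustration: power distributions F_n(v) = v**a_n on [0, 1]
    power = [lambda v, a=a: v / a for a in (1.0, 2.0)]
    print(solve_high_bid(0.0, 1.0, power))
```

With Uniform[0,1] values, the symmetric answer $(N-1)/N$ provides a quick check; the power-distribution call illustrates an asymmetric case for which we state no benchmark. Because integration stops at `eps`, the bisection cannot resolve $\bar{s}$ more finely than the behavior of the trajectories near $\underline{v}$ allows.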

One feature of this system of differential equations that makes it interesting to computational economists, and challenging to economic theorists, is that the Lipschitz condition does not hold. A function $\mathbf{g}$ satisfies the Lipschitz condition on an $N$-dimensional interval $\mathcal{I}$ if there exists a Lipschitz constant $L$ (greater than zero) such that

$$\|\mathbf{g}(\mathbf{y}) - \mathbf{g}(\mathbf{x})\| \le L\,\|\mathbf{y} - \mathbf{x}\|$$

for a given vector norm $\|\cdot\|$ and for all $\mathbf{x} \in \mathcal{I}$ and $\mathbf{y} \in \mathcal{I}$. To get a better understanding of this, assume $N = 1$ and rewrite the Lipschitz condition as

$$\frac{|g(x + \delta) - g(x)|}{|\delta|} \le L,$$

where $\delta$ equals $(y - x)$ and we have chosen to use the $\ell_1$ norm.11 If we assume that $g$ is differentiable and we let $\delta \to 0$, then the Lipschitz condition means that

$$|g'(x)| \le L,$$

so the derivative is bounded by the Lipschitz constant.12 The system (9) does not satisfy the Lipschitz condition in a neighborhood of $s = \underline{v}$ because a singularity obtains at $\underline{v}$. To see this, note that the left-boundary condition requires that $\varphi_n(\underline{v})$ equals $\underline{v}$ for all bidders $n$ equal to $1, \ldots, N$. This condition implies that the denominator terms on the right-hand side of these equations, which involve $[\varphi_m(s) - s]$, go to zero. Note, too, that the numerators contain $F_n(\cdot)$s, which equal zero at $\underline{v}$. Because the Lipschitz condition is not satisfied for the system, much of the theory concerning systems of ODEs no longer applies.
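A small numerical illustration of the failure, under our own simplifying assumptions: take $N = 2$ bidders with Uniform[0,1] values, so that the equation for bidder 1 in system (9) reads $\varphi_1'(s) = \varphi_1(s)/[\varphi_2(s) - s]$, and evaluate the difference quotient with respect to $\varphi_2$ at the symmetric equilibrium point $\varphi_1(s) = \varphi_2(s) = 2s$. Analytically the quotient is about $2/s$, so no finite Lipschitz constant covers a neighborhood of $\underline{v} = 0$. The helper names are hypothetical.

```python
# Sketch: the right-hand side has no Lipschitz constant near the left boundary.
# Assumption (ours): two bidders with Uniform[0, 1] values, so that
# phi_1'(s) = phi_1(s) / (phi_2(s) - s), evaluated at phi_1 = phi_2 = 2s.


def g1(s, phi1, phi2):
    """Right-hand side of the equation for phi_1'(s)."""
    return phi1 / (phi2 - s)


def lipschitz_ratio(s, delta=1e-9):
    """Difference quotient of g1 in its phi_2 argument at the equilibrium point."""
    phi1 = phi2 = 2.0 * s
    return abs(g1(s, phi1, phi2 + delta) - g1(s, phi1, phi2)) / delta


for s in (0.1, 0.01, 0.001):
    # analytically the ratio is about 2 / s, which is unbounded as s -> 0
    print(f"s = {s:<6} ratio = {lipschitz_ratio(s):.1f}")
```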

While not the focus of this research, our presentation would be incomplete were we to ignore the results concerning existence and uniqueness of equilibria developed by economic theorists. The issue of existence is critical to resolve before solution methods can be applied. While computational methods could be used to approximate numerically a solution that may not exist, the value of a numerical solution is far greater when we know a solution exists than when not. The issue of uniqueness is essential to empirical researchers using data from first-price auctions. Without uniqueness, an econometrician would have a difficult task justifying that the data observed are all derived from the same equilibrium. Because the Lipschitz condition fails, one of the sufficient conditions of the Picard-Lindelöf theorem, which guarantees that a unique solution exists to an initial-value problem, does not hold. Consequently, fundamental theorems for systems of differential equations cannot be applied to the system (9).

Despite this difficulty, Lebrun (1999) proved that the inverse-bid functions are differentiable on $(\underline{v}, \bar{s}]$ and that a unique Bayes-Nash equilibrium exists when all valuation distributions have a common support (as we have assumed above) and a mass point at $\underline{v}$. He also provided sufficient conditions for uniqueness when there are no mass points, under assumptions which are quite mild and easy for a researcher to verify. Existence was also demonstrated by Maskin and Riley (2000b), while Maskin and Riley (2000a) investigated some equilibrium properties of asymmetric first-price auctions. The discussion above is most closely related to the approach taken by Lebrun (1999) as well as Lizzeri and Persico (2000), for auctions with two asymmetric bidders; these researchers established existence by showing that a solution exists to the system of differential equations.13 Reny (1999) proved existence in a general class of games, while Athey (2001) proved that a pure-strategy Nash equilibrium exists for first-price auctions with heterogeneous bidders under a variety of circumstances, some of which we consider below. For a discussion of the existence of an equilibrium in first-price auctions, see Appendix G of Krishna (2002).

2.5 Special Case

As we noted above, an explicit solution to the system (9), or system (7) in the two-bidder case, exists only in a few special cases. The special case we present here involves two bidders who draw valuations from asymmetric uniform distributions.14 For two uniform distributions to be different, they must have different supports, which requires us to modify slightly the model we presented above. Specifically, consider a first-price auction involving two risk-neutral bidders at which no reserve price exists. Suppose that bidder $n$ gets an independent draw from a uniform distribution $\mathcal{U}(\underline{v}, \bar{v}_n)$ having support $[\underline{v}, \bar{v}_n]$. For convenience, we assume the lowest possible valuation $\underline{v}$ is zero, and is common to all bidders: the bidders differ only in the highest possible valuation they can draw. The larger of the two bids wins the auction, and the winner pays what he bid.

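Within this environment,

$$F_n(v) = \frac{v}{\bar{v}_n} \quad\text{and}\quad f_n(v) = \frac{1}{\bar{v}_n}, \qquad 0 \le v \le \bar{v}_n,$$

so the probability of bidder $n$ winning the auction with a bid $s$ equals

$$\Pr(\text{win} \mid s) = F_m[\varphi_m(s)] = \frac{\varphi_m(s)}{\bar{v}_m}, \qquad m \neq n.$$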

Thus, the expected profit function for bidder 1 is

$$U_1(s; v_1) = (v_1 - s)\,\frac{\varphi_2(s)}{\bar{v}_2},$$

while the expected profit function for bidder 2 is

$$U_2(s; v_2) = (v_2 - s)\,\frac{\varphi_1(s)}{\bar{v}_1}.$$

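Taking the first-order conditions for maximization of each bidder’s expected profit, setting them equal to zero, and noting that $v_n$ equals $\varphi_n(s)$ yields the following pair of differential equations, which characterizes the Bayes-Nash equilibrium:

$$\varphi_1'(s) = \frac{\varphi_1(s)}{\varphi_2(s) - s}, \qquad \varphi_2'(s) = \frac{\varphi_2(s)}{\varphi_1(s) - s}. \tag{10}$$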

As described above, the equilibrium inverse-bid functions solve this pair of differential equations, subject to the following boundary conditions:

$$\varphi_1(0) = \varphi_2(0) = 0$$

and

$$\varphi_1(\bar{s}) = \bar{v}_1, \qquad \varphi_2(\bar{s}) = \bar{v}_2.$$

Together, these conditions imply that, while the domains of the bid functions differ, the domains of the inverse-bid functions are the same for both bidders.

This system can be solved in closed form. The first step is to find the common, a priori unknown, high bid $\bar{s}$. To do this, following Krishna (2002), we can rewrite the equation describing $\varphi_1'(s)$ by subtracting one from both sides and rearranging to obtain

$$[\varphi_1'(s) - 1][\varphi_2(s) - s] = \varphi_1(s) - \varphi_2(s) + s,$$

and, likewise, the equation describing $\varphi_2'(s)$ as

$$[\varphi_2'(s) - 1][\varphi_1(s) - s] = \varphi_2(s) - \varphi_1(s) + s.$$

Adding the two equations yields

$$[\varphi_1'(s) - 1][\varphi_2(s) - s] + [\varphi_2'(s) - 1][\varphi_1(s) - s] = 2s.$$

Note, however, that

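$$\frac{d}{ds}\big\{[\varphi_1(s) - s][\varphi_2(s) - s]\big\} = [\varphi_1'(s) - 1][\varphi_2(s) - s] + [\varphi_2'(s) - 1][\varphi_1(s) - s],$$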

so

$$\frac{d}{ds}\big\{[\varphi_1(s) - s][\varphi_2(s) - s]\big\} = 2s.$$

Integrating both sides yields

$$[\varphi_1(s) - s][\varphi_2(s) - s] = s^2, \tag{11}$$

where we have used the left-boundary condition that $\varphi_n(0)$ equals zero to determine the constant of integration. This equation can be used to solve for $\bar{s}$ using the right-boundary condition

$$(\bar{v}_1 - \bar{s})(\bar{v}_2 - \bar{s}) = \bar{s}^2,$$

so

$$\bar{s} = \frac{\bar{v}_1 \bar{v}_2}{\bar{v}_1 + \bar{v}_2}.$$

Following Krishna (2002), we can use a change of variables by setting

$$\psi_1(s) = \frac{1}{\varphi_1(s)},$$

for which

$$\psi_1'(s) = -\frac{\varphi_1'(s)}{[\varphi_1(s)]^2}.$$

Note, too, that the change of variables implies

$$\varphi_1'(s) = -\frac{\psi_1'(s)}{[\psi_1(s)]^2}.$$

The key to solving the system of differential equations is that each differential equation in the system (10) can be expressed as an alternative differential equation which depends only on the inverse-bid function, and its derivative, for a single bidder: it does not involve the other bidder’s inverse-bid function. To see this, solve for $\varphi_2(s)$ using Eq. (11) and substitute it into the equation defining $\varphi_1'(s)$ in the system (10) to obtain

$$\varphi_1'(s) = \frac{\varphi_1(s)[\varphi_1(s) - s]}{s^2}.$$

Now, using the change of variables proposed above, as well as the relationships obtaining from it, this differential equation can be written as

$$\psi_1'(s) = \frac{\psi_1(s)}{s} - \frac{1}{s^2},$$

which can be rewritten as

$$\frac{d}{ds}\left[\frac{\psi_1(s)}{s}\right] = -\frac{1}{s^3}.$$

The solution to this differential equation is

$$\frac{\psi_1(s)}{s} = \frac{1}{2s^2} + c_1,$$

where $c_1$ is the constant of integration. Using the change of variables, the inverse-bid function is

$$\varphi_1(s) = \frac{1}{\psi_1(s)} = \frac{2s}{1 + k_1 s^2},$$

where

$$k_1 = 2c_1 = \frac{1}{\bar{v}_1^2} - \frac{1}{\bar{v}_2^2}$$

is determined by the right-boundary condition that $\varphi_1(\bar{s})$ equals $\bar{v}_1$. Likewise,

![]()

where

![]()

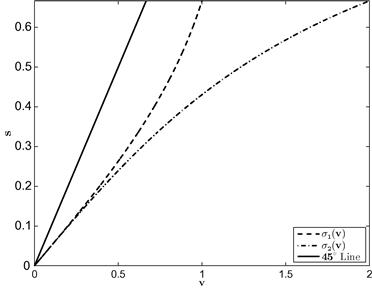

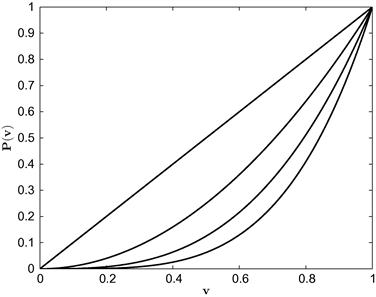

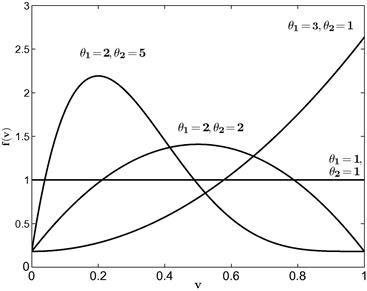

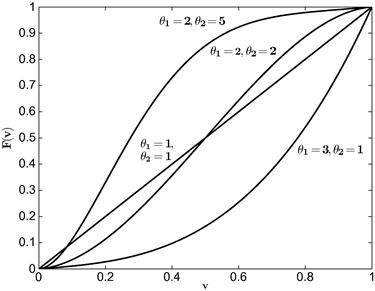

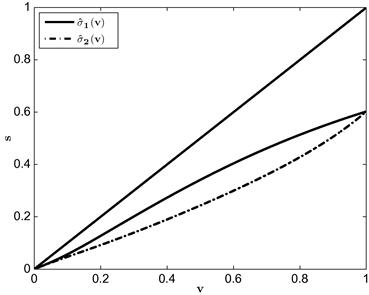

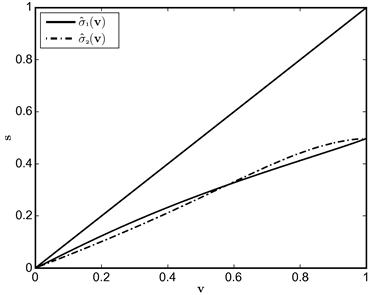

which completes the closed-form solution for the inverse-bid functions in this special case. The associated bid functions for the case where $F_1$ is Uniform[0,1] and $F_2$ is Uniform[0,2] are depicted in Figure 1.

In this example, tractability obtains because $f_n[\varphi_n(s)]/F_n[\varphi_n(s)]$, the inverse of the Mills’ ratio, is a convenient function of the inverse-bid function: it equals $1/\varphi_n(s)$. Thus, the pair of differential equations in the two-bidder case can be expressed as a pair of independent ODEs. That is, the relationship among bidders is so special that we can use the approach we used to solve for the equilibrium at a symmetric first-price auction. In short, we are able to derive closed-form expressions for the inverse-bid functions (or, likewise, for the bid functions). In general, inverses of the Mills’ ratio will involve terms that prevent such isolation and will require using numerical methods.
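The closed form is easy to evaluate and to check. The sketch below assumes the standard expressions for values drawn from Uniform[0, $\bar{v}_1$] and Uniform[0, $\bar{v}_2$] (see, e.g., Krishna, 2002): $\bar{s} = \bar{v}_1\bar{v}_2/(\bar{v}_1 + \bar{v}_2)$ and $\varphi_n(s) = 2s/(1 + k_n s^2)$ with $k_1 = 1/\bar{v}_1^2 - 1/\bar{v}_2^2 = -k_2$. The bid functions are recovered by solving $\varphi_n(s) = v$ for $s$; the function names are ours.

```python
import math

# Sketch of the closed-form two-bidder solution with values drawn from
# Uniform[0, vbar1] and Uniform[0, vbar2]. Function names are hypothetical.


def high_bid(vbar1, vbar2):
    """Common high bid s_bar = vbar1 * vbar2 / (vbar1 + vbar2)."""
    return vbar1 * vbar2 / (vbar1 + vbar2)


def k_coeffs(vbar1, vbar2):
    """k_1 = 1/vbar1**2 - 1/vbar2**2 and k_2 = -k_1."""
    k1 = 1.0 / vbar1 ** 2 - 1.0 / vbar2 ** 2
    return k1, -k1


def inverse_bid(s, k):
    """phi_n(s) = 2 s / (1 + k_n s**2)."""
    return 2.0 * s / (1.0 + k * s * s)


def bid(v, k):
    """beta_n(v): solve phi_n(s) = v, i.e. k v s**2 - 2 s + v = 0, for s."""
    if v == 0.0:
        return 0.0
    if abs(k) < 1e-14:  # symmetric case: beta(v) = v / 2
        return v / 2.0
    # root that tends to v / 2 as k -> 0 (the other root is not a valid bid)
    return (1.0 - math.sqrt(1.0 - k * v * v)) / (k * v)


if __name__ == "__main__":
    vbar1, vbar2 = 1.0, 2.0  # the Figure 1 case: Uniform[0,1] versus Uniform[0,2]
    k1, k2 = k_coeffs(vbar1, vbar2)
    print("s_bar =", high_bid(vbar1, vbar2))
    for v in (0.25, 0.5, 0.75, 1.0):
        print(f"v = {v:.2f}: beta_1 = {bid(v, k1):.4f}, beta_2 = {bid(v, k2):.4f}")
```

Verifying that $[\varphi_1(s) - s][\varphi_2(s) - s] = s^2$ holds along the computed functions is a cheap way to catch algebra slips.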

2.6 Extensions

The model presented above is relevant to a number of different research questions. In this subsection, we discuss some extensions to the model which also require the use of computational methods.15 While it may seem reasonable empirically to assume that there may exist more than one type of bidder at auction, the asymmetric first-price model we have presented can arise even when bidders draw valuations from the same distribution. In particular, we consider first the case of risk-averse bidders and then the case in which bidders collude and form coalitions. We then cast the model presented above in a procurement environment in which the lowest bidder is awarded the contract. Finally, given this procurement setting, we consider a case in which the auctioneer (in this case, the government) grants preference to a class of bidders.

2.6.1 Risk Aversion

In the discussion of asymmetric first-price auctions that we presented above, we assumed (as researchers most commonly do) that the asymmetry was relevant because bidders drew valuations from different distributions. Alternatively, we could assume that bidders are symmetric in that they all draw valuations from the same distribution, but asymmetric in that they have heterogeneous preferences. Assume that buyer $n$’s value $V_n$ is an independent draw from the (common) cumulative distribution function $F_V(v)$, which is continuous, having an associated positive probability density function $f_V(v)$ that has compact support $[\underline{v}, \bar{v}]$ where $\underline{v}$ is weakly greater than zero. Assume that the number of potential buyers $N$ as well as the cumulative distribution function of values $F_V(v)$ and the support $[\underline{v}, \bar{v}]$ are common knowledge.

We relax the assumption that bidders are risk neutral and, instead, assume that the bidders have different degrees of risk aversion. While individual valuations are private information, all bidders know that valuations are drawn from $F_V(v)$ and know each bidder’s utility function. Consider the case in which bidders have constant relative risk aversion (CRRA) utility functions but differ in their Arrow-Pratt coefficient of relative risk aversion $\rho_n$:

$$u_n(x) = x^{1 - \rho_n},$$

where $\rho_n \in [0, 1)$ for all bidders $n = 1, \ldots, N$.16 Thus, when buyer $n$ submits bid $s_n$, he receives the following pay-off:

$$u_n(v_n - s_n) = \begin{cases} (v_n - s_n)^{1 - \rho_n} & \text{if } s_n > \max_{m \neq n} s_m, \\ 0 & \text{otherwise.} \end{cases} \tag{12}$$

Under risk neutrality the profit bidder $n$ receives when he wins the auction is linear in his bid $s_n$, so the pay-off is additively separable from the bidder’s valuation. This breaks down under risk aversion as utility becomes nonlinear: utility is concave in the CRRA case.17

Assuming each potential buyer ![]() is using a bid

is using a bid ![]() that is monotonically increasing in his value

that is monotonically increasing in his value ![]() , the expected utility function for bidder

, the expected utility function for bidder ![]() is

is

![]()

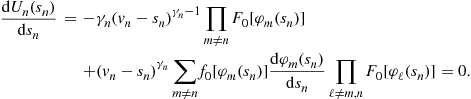

The necessary first-order condition for a representative utility maximization problem is:

Replacing ![]() with a general bid

with a general bid ![]() and noting that

and noting that ![]() equals

equals ![]() , we can rearrange this first-order condition as

, we can rearrange this first-order condition as

![]() (13)

(13)

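which can be summed over all $N$ bidders to yield

$$\sum_{n=1}^{N} \frac{1 - \rho_n}{\varphi_n(s) - s} = (N-1)\sum_{m=1}^{N} \frac{f_V[\varphi_m(s)]\,\varphi_m'(s)}{F_V[\varphi_m(s)]}$$

or

$$\sum_{m=1}^{N} \frac{f_V[\varphi_m(s)]\,\varphi_m'(s)}{F_V[\varphi_m(s)]} = \frac{1}{N-1}\sum_{m=1}^{N} \frac{1 - \rho_m}{\varphi_m(s) - s}.$$

Subtracting Eq. (13) from this latter expression yields

$$\frac{f_V[\varphi_n(s)]\,\varphi_n'(s)}{F_V[\varphi_n(s)]} = \frac{1}{N-1}\sum_{m=1}^{N} \frac{1 - \rho_m}{\varphi_m(s) - s} - \frac{1 - \rho_n}{\varphi_n(s) - s},$$

which leads to the following differential equation formulation:

$$\varphi_n'(s) = \frac{F_V[\varphi_n(s)]}{f_V[\varphi_n(s)]}\left\{\frac{1}{N-1}\sum_{m=1}^{N} \frac{1 - \rho_m}{\varphi_m(s) - s} - \frac{1 - \rho_n}{\varphi_n(s) - s}\right\}, \quad n = 1, \ldots, N. \tag{14}$$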

In addition to this system of ODEs, there are two types of boundary conditions on the equilibrium (inverse-) bid functions which mirror those of the asymmetric first-price auction:

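Left-Boundary Condition (on Inverse-Bid Functions): $\varphi_n(\underline{v}) = \underline{v}$ for all $n = 1, \ldots, N$.

Right-Boundary Condition (on Inverse-Bid Functions): $\varphi_n(\bar{s}) = \bar{v}$ for all $n = 1, \ldots, N$.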

We are interested in the solution to the system (14) which satisfies the right- and left-boundary conditions on the inverse-bid functions. In general, no closed-form solution exists and the Lipschitz condition does not hold in a neighborhood around $\underline{v}$ because of a singularity. Consequently, numerical methods are again required.
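The risk-averse system differs from the risk-neutral one only through the weights $1 - \rho_n$. Assuming CRRA utility $u_n(x) = x^{1-\rho_n}$, the system takes the form $\varphi_n'(s) = \{F_V[\varphi_n(s)]/f_V[\varphi_n(s)]\}\{[1/(N-1)]\sum_{m=1}^{N} (1-\rho_m)/[\varphi_m(s) - s] - (1-\rho_n)/[\varphi_n(s) - s]\}$, and the sketch below codes this right-hand side (the function name is hypothetical). As a check, with a common $\rho$ and Uniform[0,1] values, the familiar symmetric CRRA bid $\beta(v) = (N-1)v/(N-\rho)$ implies $\varphi(s) = (N-\rho)s/(N-1)$, so every component should equal $(N-\rho)/(N-1)$.

```python
# Sketch: right-hand side of the risk-averse system, assuming CRRA utility
# u_n(x) = x**(1 - rho_n) and a common value distribution whose ratio
# F_V(v)/f_V(v) is supplied by the caller. Names are hypothetical.


def rhs_crra(s, phi, rho, F_over_f):
    """Return [phi_n'(s)] for bidders with relative risk aversion rho_n in [0, 1)."""
    N = len(phi)
    gaps = [p - s for p in phi]
    if not all(g > 0 for g in gaps):  # also rejects NaN
        raise ValueError("infeasible point: some bid is at or above its value")
    weights = [(1.0 - r) / g for r, g in zip(rho, gaps)]
    common = sum(weights) / (N - 1)
    return [F_over_f(p) * (common - w) for p, w in zip(phi, weights)]


if __name__ == "__main__":
    uniform = lambda v: v  # F_V(v)/f_V(v) for Uniform[0, 1]
    N, rho, s = 3, 0.4, 0.5
    phi = [(N - rho) * s / (N - 1)] * N  # symmetric CRRA benchmark
    # each entry should be (N - rho)/(N - 1) = 1.3, up to rounding
    print(rhs_crra(s, phi, [rho] * N, uniform))
```

Setting every $\rho_n = 0$ recovers the risk-neutral right-hand side, which is a convenient regression test when both systems are coded side by side.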

2.6.2 Collusion or Presence of Coalitions

Consider instead a model in which all $N$ potential bidders have homogeneous, risk-neutral preferences. Furthermore, assume that all bidders draw independent valuations from the same distribution $F_V(v)$, having an associated positive probability density function $f_V(v)$ that has compact support $[\underline{v}, \bar{v}]$. Suppose, however, subsets of bidders join (collude to form) coalitions. Introducing collusion into an otherwise symmetric auction is what motivated the pioneering research of Marshall et al. (1994). Bajari (2001) proposed using numerical methods to understand better collusive behavior in a series of comparative static-like computational experiments. We discuss the contributions of these researchers later in this chapter. First, however, it is important to recognize that the symmetric first-price auction with collusion, as is typically modeled, is equivalent to the standard asymmetric first-price auction model presented above. Specifically, if the bidders form coalitions of different sizes, a distributional asymmetry is created and the model is just like the case in which each coalition is considered a bidder which draws its valuation from a different distribution.

The $N$ potential bidders form $K$ coalitions with a representative coalition $k$ having size $n_k$ with

$$\sum_{k=1}^{K} n_k = N$$

and

$$n_k \ge 1, \qquad k = 1, \ldots, K,$$

where $K$ is less than or equal to $N$. We are not concerned with how the coalition divides up the profit if it wins the item at auction. Instead, we are simply concerned with how each coalition behaves in this case. Note, too, that we allow for coalitions to be of size 1; that is, a bidder may choose not to belong to a coalition, and thus behaves independently (noncooperatively).

Assume that each coalition ![]() chooses its bid

chooses its bid ![]() to maximize its (aggregate) expected profit

to maximize its (aggregate) expected profit

![]()

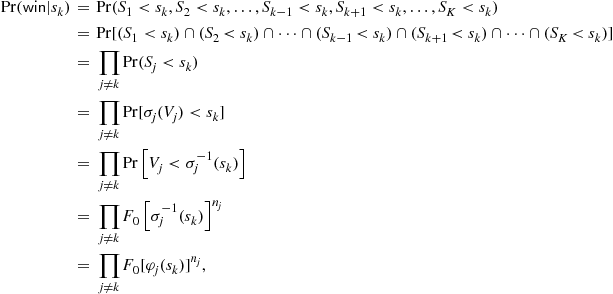

Coalition $k$ will win the auction with tender $s_k$ when all other coalitions bid less than $s_k$, that is, when the highest valuation of the object for each rival coalition induces a bid that is less than that of coalition $k$. Assuming each coalition $j$ adopts a bidding strategy $\beta_j(v_j)$ that is monotonically increasing in its value $v_j$, we can write the probability of winning the auction as

$$\Pr(\text{win} \mid s_k) = \prod_{j \neq k} F_V[\varphi_j(s_k)]^{n_j},$$

where, again, $\varphi_j(\cdot)$ is the inverse-bid function. Thus, the expected profit function of coalition $k$ is

$$U_k(s_k; v_k) = (v_k - s_k) \prod_{j \neq k} F_V[\varphi_j(s_k)]^{n_j}.$$

When the number of bidders in each coalition is different for at least two coalitions (when $n_j \neq n_k$ for some $j \neq k$), then even though all bidders draw valuations from the same distribution, an asymmetry obtains. Thus, for a given bid, each coalition faces a different probability of winning the auction. This probability of winning differs across coalitions because, when choosing its bid, each coalition $k$ must consider the distribution of the maximum of $n_j$ draws for each rival coalition $j$. If all coalitions are of the same size, then this model collapses to the symmetric IPVP with $K$ bidders for which we can solve for the (common) bidding strategy which has a closed-form solution, as shown above. When, however, the number of bidders in each coalition is different for at least two of the coalitions, the model is just like the asymmetric first-price model.

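Each coalition will choose its bid, given its (highest) valuation, to maximize its expected profit. The necessary first-order condition for a representative maximization problem is:

$$-\prod_{j \neq k} F_V[\varphi_j(s_k)]^{n_j} + (v_k - s_k) \sum_{j \neq k} n_j F_V[\varphi_j(s_k)]^{n_j - 1} f_V[\varphi_j(s_k)]\,\varphi_j'(s_k) \prod_{i \neq j, k} F_V[\varphi_i(s_k)]^{n_i} = 0.$$

Replacing $s_k$ with a general bid $s$ and noting that $v_k$ equals $\varphi_k(s)$, we can rearrange this first-order condition as

$$\frac{1}{\varphi_k(s) - s} = \sum_{j \neq k} \frac{n_j\, f_V[\varphi_j(s)]\,\varphi_j'(s)}{F_V[\varphi_j(s)]}, \tag{15}$$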

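which can be summed over all $K$ coalitions to yield

$$\sum_{k=1}^{K} \frac{1}{\varphi_k(s) - s} = (K-1)\sum_{j=1}^{K} \frac{n_j\, f_V[\varphi_j(s)]\,\varphi_j'(s)}{F_V[\varphi_j(s)]}$$

or

$$\sum_{j=1}^{K} \frac{n_j\, f_V[\varphi_j(s)]\,\varphi_j'(s)}{F_V[\varphi_j(s)]} = \frac{1}{K-1}\sum_{j=1}^{K} \frac{1}{\varphi_j(s) - s}.$$

Subtracting Eq. (15) from this latter expression yields

$$\frac{n_k\, f_V[\varphi_k(s)]\,\varphi_k'(s)}{F_V[\varphi_k(s)]} = \frac{1}{K-1}\sum_{j=1}^{K} \frac{1}{\varphi_j(s) - s} - \frac{1}{\varphi_k(s) - s},$$

which leads to the, perhaps traditional, differential equation formulation

$$\varphi_k'(s) = \frac{F_V[\varphi_k(s)]}{n_k\, f_V[\varphi_k(s)]}\left\{\frac{1}{K-1}\sum_{j=1}^{K} \frac{1}{\varphi_j(s) - s} - \frac{1}{\varphi_k(s) - s}\right\}, \quad k = 1, \ldots, K.$$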

In addition to this system of ODEs, there are two types of boundary conditions on the equilibrium (inverse-) bid functions which mirror those of the asymmetric first-price auction:

Left-Boundary Condition (on Inverse-Bid Functions): ![]() for all

for all ![]() .

.

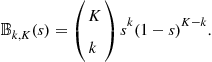

In this collusive environment, where bidders form coalitions, there is almost never a closed-form solution to the system of ODEs: one exception is when the bidders all draw valuations from a common uniform distribution. In such an environment, it is as if each coalition $k$ receives a draw from a power distribution with parameter (power) $n_k$, and the coalition game is like an asymmetric first-price auction in which each bidder (coalition) receives a draw from a different power distribution. Plum (1992) derived the explicit equilibrium bid functions in an environment in which there are two bidders (or, in this case, coalitions of equal size) at auction with different valuation supports.18 This uniform/power distribution example constitutes another very special case of an asymmetric auction. In general, no closed-form solution exists and the Lipschitz condition does not hold in a neighborhood around $\underline{v}$ because of a singularity. Again, numerical methods are required.
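Two pieces of the coalition model lend themselves to a quick sketch. First, when every bidder draws from Uniform[0,1], the highest value in a coalition of $n_k$ members has CDF $v^{n_k}$, the power distribution mentioned above, and a simulation confirms this. Second, assuming the coalition analogue of the system takes the form $\varphi_k'(s) = \{F_V[\varphi_k(s)]/(n_k f_V[\varphi_k(s)])\}\{[1/(K-1)]\sum_{j=1}^{K} 1/[\varphi_j(s) - s] - 1/[\varphi_k(s) - s]\}$, its right-hand side can be coded directly. The function names, the seed, and the number of draws are hypothetical.

```python
import random

# Sketch (names hypothetical). With Uniform[0, 1] values, the highest value in a
# coalition of n bidders has CDF v**n. The coalition system is assumed to be
#   phi_k'(s) = F(phi_k)/(n_k f(phi_k)) * [sum_j 1/(phi_j - s)/(K - 1) - 1/(phi_k - s)].


def empirical_cdf_of_max(n, v, draws=100_000, seed=0):
    """Share of simulated coalitions of size n whose highest value is <= v."""
    rng = random.Random(seed)
    hits = sum(max(rng.random() for _ in range(n)) <= v for _ in range(draws))
    return hits / draws


def coalition_rhs(s, phi, sizes, F_over_f):
    """Return [phi_k'(s)] for K coalitions with sizes n_k."""
    K = len(phi)
    gaps = [p - s for p in phi]
    if not all(g > 0 for g in gaps):  # also rejects NaN
        raise ValueError("infeasible point: some bid is at or above its value")
    common = sum(1.0 / g for g in gaps) / (K - 1)
    return [F_over_f(p) / n * (common - 1.0 / g)
            for p, n, g in zip(phi, sizes, gaps)]


if __name__ == "__main__":
    print(empirical_cdf_of_max(3, 0.7), 0.7 ** 3)  # simulation vs. power CDF
    # an asymmetric point: two coalitions of size 2 and one lone bidder
    print(coalition_rhs(0.4, [0.5, 0.5, 0.6], [2, 2, 1], lambda v: v))
```

For equal-sized coalitions ($n_k = n$) with Uniform[0,1] values, the symmetric bid $\beta(v) = n(K-1)v/[n(K-1)+1]$ makes each component equal $[n(K-1)+1]/[n(K-1)]$, which is 1.25 when $n = 2$ and $K = 3$.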

2.6.3 Procurement

We can modify the above analysis of the first-price auction with $N$ potential buyers to analyze a procurement environment in which a government agency seeks to complete an indivisible task at the lowest cost. The agency invites sealed-bid tenders from $N$ potential suppliers (firms). The bids are opened more or less simultaneously and the contract is awarded to the lowest bidder, who wins the right to perform the task. The agency then pays the winning firm its bid on completion of the task. Assume that there is no price ceiling (a maximum acceptable bid imposed by the buyer), and assume bidders (firms) are risk neutral. Suppose that bidder $n$ gets an independent cost draw $c_n$ from urn $n$, denoted $F_n(c)$. Assume that all cost distributions have a common, compact support $[\underline{c}, \bar{c}]$.

Now, $U_n(s; c_n)$, the expected profit of bid $s$ to player $n$, can be written as

$$U_n(s; c_n) = (s - c_n)\Pr(\text{win} \mid s).$$

Assuming each rival firm $m \neq n$ is using a bid $\beta_m(c_m)$ that is monotonically increasing in his cost $c_m$, with inverse-bid function $\phi_m(\cdot) = \beta_m^{-1}(\cdot)$, we can write the probability of winning the auction as

$$\Pr(\text{win} \mid s_n) = \prod_{m \neq n} \Pr\left[\beta_m(C_m) > s_n\right] = \prod_{m \neq n} \left[1 - F_m(\phi_m(s_n))\right].$$

Thus, the expected profit function for bidder $n$ is

$$U_n(s_n; c_n) = (s_n - c_n) \prod_{m \neq n} \left[1 - F_m(\phi_m(s_n))\right].$$
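As a sanity check on this winning probability, a product of rivals' survivor terms $1 - F_m(\phi_m(s))$, the sketch below compares the formula with a Monte Carlo simulation. It assumes, purely for illustration, $N = 3$ symmetric firms with costs uniform on $[0, 1]$, for which the familiar symmetric equilibrium is $\beta(c) = c + (1 - c)/N$ with inverse $\phi(b) = (Nb - 1)/(N - 1)$; the function names are hypothetical.

```python
import random
from typing import Callable, Sequence


def win_prob_formula(s: float, phis: Sequence[Callable[[float], float]],
                     cdfs: Sequence[Callable[[float], float]]) -> float:
    """Product over rivals m of the survivor term 1 - F_m(phi_m(s))."""
    prob = 1.0
    for phi, F in zip(phis, cdfs):
        prob *= 1.0 - F(phi(s))
    return prob


def win_prob_simulated(s: float, betas: Sequence[Callable[[float], float]],
                       draws: int = 200_000, seed: int = 0) -> float:
    """Share of simulated auctions in which bid s is below every rival bid.

    Rival costs are drawn uniformly on [0, 1] (an assumption of this sketch).
    """
    rng = random.Random(seed)
    wins = sum(
        all(beta(rng.random()) > s for beta in betas) for _ in range(draws)
    )
    return wins / draws


N = 3  # number of firms (illustrative)


def beta(c: float) -> float:
    """Symmetric equilibrium bid for uniform costs on [0, 1]."""
    return c + (1.0 - c) / N


def phi(b: float) -> float:
    """Inverse of beta: the cost at which a firm bids b."""
    return (N * b - 1.0) / (N - 1)


def uniform_cdf(c: float) -> float:
    return min(max(c, 0.0), 1.0)


s = 0.6
exact = win_prob_formula(s, [phi] * (N - 1), [uniform_cdf] * (N - 1))
approx = win_prob_simulated(s, [beta] * (N - 1))
print(f"formula: {exact:.4f}  simulation: {approx:.4f}")
```

Here $\phi(0.6) = 0.4$, so the formula gives $(1 - 0.4)^2 = 0.36$; the simulated share should agree up to sampling error.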

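To construct the Bayes-Nash equilibrium bid functions, first maximize each expected profit function with respect to its argument. The necessary first-order condition for a representative maximization problem is

$$\prod_{m \neq n} \left[1 - F_m(\phi_m(s_n))\right] - (s_n - c_n) \sum_{m \neq n} f_m(\phi_m(s_n))\, \phi_m'(s_n) \prod_{j \neq n, m} \left[1 - F_j(\phi_j(s_n))\right] = 0.$$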

Replacing $s_n$ with a general bid $b$ and noting that $\phi_n(b)$ equals $c_n$, we can rearrange this first-order condition as

$$\sum_{m \neq n} \frac{f_m(\phi_m(b))\, \phi_m'(b)}{1 - F_m(\phi_m(b))} = \frac{1}{b - \phi_n(b)}, \qquad n = 1, \ldots, N, \tag{16}$$

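which can be summed over all $N$ bidders to yield

$$(N - 1) \sum_{m=1}^{N} \frac{f_m(\phi_m(b))\, \phi_m'(b)}{1 - F_m(\phi_m(b))} = \sum_{m=1}^{N} \frac{1}{b - \phi_m(b)}$$

or

$$\sum_{m=1}^{N} \frac{f_m(\phi_m(b))\, \phi_m'(b)}{1 - F_m(\phi_m(b))} = \frac{1}{N - 1} \sum_{m=1}^{N} \frac{1}{b - \phi_m(b)}.$$

Subtracting Eq. (16) from this latter expression yields

$$\frac{f_n(\phi_n(b))\, \phi_n'(b)}{1 - F_n(\phi_n(b))} = \frac{1}{N - 1} \sum_{m=1}^{N} \frac{1}{b - \phi_m(b)} - \frac{1}{b - \phi_n(b)},$$

which leads to what is perhaps the traditional differential-equation formulation

$$\phi_n'(b) = \frac{1 - F_n(\phi_n(b))}{f_n(\phi_n(b))} \left[ \frac{1}{N - 1} \sum_{m=1}^{N} \frac{1}{b - \phi_m(b)} - \frac{1}{b - \phi_n(b)} \right], \qquad n = 1, \ldots, N. \tag{17}$$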
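A minimal sketch of the right-hand side of system (17) may help make its structure concrete: each derivative $\phi_n'(b)$ is the ratio of the survivor function $1 - F_n$ to the density $f_n$, both evaluated at $\phi_n(b)$, times a bracket built from the gaps $b - \phi_m(b)$. The function name and the uniform benchmark below are illustrative assumptions; with $N$ symmetric firms and costs uniform on $[0, 1]$, the closed-form inverse bid $\phi(b) = (Nb - 1)/(N - 1)$ has slope $N/(N - 1)$, which the function should reproduce.

```python
from typing import Callable, List, Sequence


def inverse_bid_derivatives(
    b: float,
    phis: Sequence[float],
    cdfs: Sequence[Callable[[float], float]],
    pdfs: Sequence[Callable[[float], float]],
) -> List[float]:
    """Evaluate the right-hand side of system (17) at bid b.

    phis[n] is the current value phi_n(b); cdfs[n] and pdfs[n] are F_n and f_n.
    Requires b > phi_n(b) for every n (each firm bids above its cost).
    """
    N = len(phis)
    gaps = [b - p for p in phis]  # b - phi_m(b)
    if min(gaps) <= 0.0:
        raise ValueError("system (17) is undefined unless b exceeds every phi_n(b)")
    mean_term = sum(1.0 / g for g in gaps) / (N - 1)  # (1/(N-1)) * sum_m 1/(b - phi_m)
    return [
        (1.0 - F(p)) / f(p) * (mean_term - 1.0 / g)  # survivor/density times bracket
        for p, g, F, f in zip(phis, gaps, cdfs, pdfs)
    ]


def uniform_cdf(c: float) -> float:
    return min(max(c, 0.0), 1.0)


def uniform_pdf(c: float) -> float:
    return 1.0


# Benchmark: N symmetric firms with uniform costs on [0, 1], where the
# closed-form inverse bid is phi(b) = (N b - 1) / (N - 1), slope N / (N - 1).
N, b = 3, 0.5
phi_b = (N * b - 1.0) / (N - 1)
print(inverse_bid_derivatives(b, [phi_b] * N, [uniform_cdf] * N, [uniform_pdf] * N))
# -> [1.5, 1.5, 1.5]
```

The explicit check on the gaps reflects the fact that (17) is only defined while every firm bids strictly above its cost.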

In addition to this system of differential equations, as in the asymmetric first-price auction, there are two types of boundary conditions on the equilibrium bid functions at an asymmetric procurement auction.

Right-Boundary Condition on Bid Functions: $\beta_n(\bar{c}) = \bar{c}$ for all $n = 1, \ldots, N$. This right-boundary condition requires any bidder who draws the highest cost possible to bid his cost.19 We shall use the boundary condition(s) with the system of differential equations to solve for the MPSE inverse-bid functions, as discussed above. Given this focus, we can translate this right-boundary condition into the following boundary condition, which involves the inverse-bid functions:

Right-Boundary Condition on Inverse-Bid Functions: $\phi_n(\bar{c}) = \bar{c}$ for all $n = 1, \ldots, N$. The second type of condition obtains at the left boundary and is analogous to the right-boundary condition from the asymmetric first-price auction. Specifically,

Left-Boundary Condition on Bid Functions: $\beta_n(\underline{c}) = \underline{b}$ for all $n = 1, \ldots, N$. This condition requires that, even though the bidders may adopt different bidding strategies, all bidders will choose to submit the same bid if they draw the lowest cost possible. Consider two firms: any bid by one that is below $\underline{b}$ would be suboptimal because the firm could strictly increase the bid by some small amount $\epsilon$ and still win the auction with certainty, while at the same time increasing its profits. See the formal arguments provided in the citations given above for the asymmetric first-price model. This left-boundary condition also has a counterpart which involves the inverse-bid functions:

Left-Boundary Condition on Inverse-Bid Functions: $\phi_n(\underline{b}) = \underline{c}$ for all $n = 1, \ldots, N$.

Thus, we are interested in the solution to the system of differential equations which satisfies both the right-boundary condition on the inverse-bid functions and the left-boundary condition on the inverse-bid functions. Because we have conditions on the inverse-bid functions at both ends of the domain, we have a two-point boundary-value problem. In the procurement environment, because the common low bid is unknown a priori, the lower boundary constitutes the free boundary.
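Because the common low bid $\underline{b}$ is unknown, one simple way to handle the free boundary is forward shooting with bisection, sketched below under stated assumptions: guess $\underline{b}$, integrate system (17) from the left boundary $\phi_n(\underline{b}) = \underline{c}$, where every gap $b - \phi_n(b)$ equals $\underline{b} - \underline{c} > 0$ and the system is well behaved, and stop just short of $\bar{c}$, where the right-hand side of (17) is of the form $0/0$. If some inverse bid catches up with the bid, the guess is treated as too low; if every inverse bid stays below the bid up to $\bar{c}$, as too high. This is a minimal sketch using classical fourth-order Runge-Kutta steps; the function names, step count, cutoff `eps`, and classification rule are illustrative assumptions (adequate for the symmetric uniform benchmark used here, not guaranteed for every asymmetric specification) and not the procedures surveyed in Section 3.

```python
import math
from typing import Callable, List, Optional, Sequence

Funcs = Sequence[Callable[[float], float]]


def rhs(b: float, phis: List[float], cdfs: Funcs, pdfs: Funcs) -> Optional[List[float]]:
    """Right-hand side of system (17); None if some gap b - phi_n(b) is not positive."""
    gaps = [b - p for p in phis]
    if not all(math.isfinite(g) and g > 0.0 for g in gaps):
        return None
    mean_term = sum(1.0 / g for g in gaps) / (len(phis) - 1)
    return [(1.0 - F(p)) / f(p) * (mean_term - 1.0 / g)
            for p, g, F, f in zip(phis, gaps, cdfs, pdfs)]


def rk4_step(b: float, phis: List[float], h: float, cdfs: Funcs, pdfs: Funcs):
    """One classical RK4 step for (17); None if any stage leaves the domain."""
    k1 = rhs(b, phis, cdfs, pdfs)
    if k1 is None:
        return None
    k2 = rhs(b + h / 2, [p + h / 2 * k for p, k in zip(phis, k1)], cdfs, pdfs)
    if k2 is None:
        return None
    k3 = rhs(b + h / 2, [p + h / 2 * k for p, k in zip(phis, k2)], cdfs, pdfs)
    if k3 is None:
        return None
    k4 = rhs(b + h, [p + h * k for p, k in zip(phis, k3)], cdfs, pdfs)
    if k4 is None:
        return None
    return [p + h / 6 * (a + 2 * q + 2 * r + d)
            for p, a, q, r, d in zip(phis, k1, k2, k3, k4)]


def shoot(b_low: float, c_low: float, c_high: float, cdfs: Funcs, pdfs: Funcs,
          steps: int = 1000, eps: float = 1e-6) -> int:
    """Integrate (17) forward from phi_n(b_low) = c_low.

    Returns -1 if some inverse bid reaches the bid before b = c_high - eps
    (trial low bid too low) and +1 otherwise (trial low bid too high).
    """
    phis = [c_low] * len(cdfs)
    b = b_low
    h = (c_high - eps - b_low) / steps
    for _ in range(steps):
        phis = rk4_step(b, phis, h, cdfs, pdfs)
        if phis is None:
            return -1
        b += h
    return 1 if all(math.isfinite(p) and b - p > 0.0 for p in phis) else -1


def solve_low_bid(c_low: float, c_high: float, cdfs: Funcs, pdfs: Funcs,
                  tol: float = 1e-6) -> float:
    """Bisect on the unknown common low bid (the free boundary)."""
    lo, hi = c_low, c_high
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if shoot(mid, c_low, c_high, cdfs, pdfs) > 0:
            hi = mid  # every inverse bid stayed below the bid: guess too high
        else:
            lo = mid  # an inverse bid caught the bid: guess too low
    return 0.5 * (lo + hi)


def uniform_cdf(c: float) -> float:
    return min(max(c, 0.0), 1.0)


def uniform_pdf(c: float) -> float:
    return 1.0


# Symmetric uniform benchmark on [0, 1]: the known common low bid is 1/N.
for n_firms in (2, 3):
    low_bid = solve_low_bid(0.0, 1.0, [uniform_cdf] * n_firms, [uniform_pdf] * n_firms)
    print(n_firms, round(low_bid, 4))
```

Starting at the left boundary sidesteps the singularity entirely; the cost is that each trial requires a full integration, and in asymmetric problems the trajectories can be very sensitive to the guessed $\underline{b}$.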

The system (17) does not satisfy the Lipschitz condition in a neighborhood of $\bar{c}$ because a singularity obtains at $\bar{c}$. To see this, note that the right-boundary condition requires that $\phi_n(\bar{c})$ equals $\bar{c}$ for all bidders $n$ equal to $1, \ldots, N$. This condition implies that the denominator terms $b - \phi_n(b)$ on the right-hand side of these equations vanish. Likewise, the numerators involve a survivor function, $1 - F_n(\phi_n(b))$, which equals zero at $\bar{c}$. Thus, again, because the Lipschitz condition is not satisfied, much of the theory concerning systems of ODEs no longer applies.
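A worked example may make the singularity concrete. Assume, purely for illustration, $N$ symmetric firms with costs uniform on $[0, 1]$, so that $F(c) = c$, $f(c) = 1$, and $\bar{c} = 1$. The familiar symmetric equilibrium is then $\beta(c) = c + (1 - c)/N$, with inverse $\phi(b) = (Nb - 1)/(N - 1)$ and common low bid $\underline{b} = 1/N$. Along this solution, the survivor term and the gap in the right-hand side of (17) are both proportional to $1 - b$:

$$
1 - F(\phi(b)) = \frac{N(1 - b)}{N - 1}, \qquad b - \phi(b) = \frac{1 - b}{N - 1},
$$

so that

$$
\phi'(b) = \frac{N(1 - b)}{N - 1}\left[\frac{1}{N - 1}\cdot\frac{N(N - 1)}{1 - b} - \frac{N - 1}{1 - b}\right] = \frac{N(1 - b)}{N - 1}\cdot\frac{1}{1 - b} = \frac{N}{N - 1}.
$$

The derivative is finite for every $b < 1$, but as $b \to \bar{c} = 1$ the survivor factor tends to zero while the bracketed term diverges, so the right-hand side cannot be evaluated at $\bar{c}$ directly.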

2.6.4 Bid Preferences

Even when bidders draw valuations or costs from the same distribution, buyers (sellers) sometimes invoke policies or rules that introduce asymmetries. Bid preference policies are a commonly studied example; see, for example, Marion (2007), Hubbard and Paarsch (2009), as well as Krasnokutskaya and Seim (2011). We shall continue with the procurement model by considering the effect of a bid preference policy. Specifically, consider the most commonly used preference program, under which the bids of preferred firms are treated differently for the purposes of evaluation only. In particular, the bids of preferred firms are typically scaled down by a discount factor equal to one plus a preference rate, denoted $\rho$. Suppose there are $N_1$ preferred bidders and $N_2$ typical (nonpreferred) bidders, where $N_1 + N_2$ equals $N$. The preference policy reduces the bids of class 1 firms for the purposes of evaluation only; a winning firm is still paid its bid on completion of an awarded contract.

Each bidder draws a firm-specific cost independently from a potentially asymmetric cost distribution $F_i(c)$, where $i \in \{1, 2\}$ denotes the class to which the firm belongs. Each firm then chooses its bid $s$ to maximize

$$U_i(s; c) = (s - c)\Pr(\text{win} \mid s).$$

Suppose that all bidders of class $i$ use a (class-symmetric) monotonically increasing strategy $\beta_i(c)$. This assumption imposes structure on the probability of winning an auction conditional on a particular bid $s$; the strategy $\beta_i$ then determines the bid $s$ given a class $i$ firm's cost draw. In particular, for a class 1 bidder,

$$\Pr(\text{win} \mid s) = \left[1 - F_1(\phi_1(s))\right]^{N_1 - 1} \left[1 - F_2\!\left(\phi_2\!\left(\frac{s}{1 + \rho}\right)\right)\right]^{N_2},$$

while for a class 2 bidder

$$\Pr(\text{win} \mid s) = \left[1 - F_1(\phi_1((1 + \rho)s))\right]^{N_1} \left[1 - F_2(\phi_2(s))\right]^{N_2 - 1},$$

where $\phi_i(\cdot)$ equals $\beta_i^{-1}(\cdot)$. These probabilities follow the derivations we have presented above after accounting for the fact that preferred (nonpreferred) bidders inflate (discount) tenders from bidders in the rival class when determining how high an opponent's cost from that class must be for the firm's own bid to win the auction. Substituting these probabilities into the expected profit for a firm belonging to class $i$ and taking first-order conditions yields

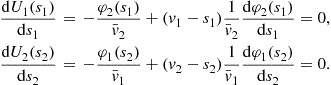

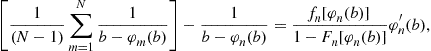

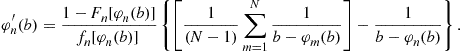

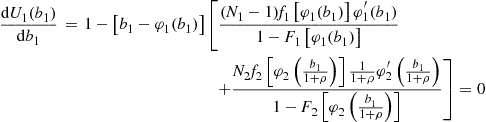

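and

$$\frac{1}{b - \phi_2(b)} = \frac{N_1 (1 + \rho)\, f_1(\phi_1((1 + \rho)b))\, \phi_1'((1 + \rho)b)}{1 - F_1(\phi_1((1 + \rho)b))} + \frac{(N_2 - 1)\, f_2(\phi_2(b))\, \phi_2'(b)}{1 - F_2(\phi_2(b))},$$

which characterize equilibrium behavior for bidders who choose to participate in such auctions.20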
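The two class-specific winning probabilities that enter these first-order conditions can be sketched as one small function. In this sketch (all names hypothetical), preferred class 1 bids are divided by $1 + \rho$ for evaluation only, rivals use increasing strategies with inverses `phi1` and `phi2`, and both classes are assumed nonempty. The cost-bidding inverse functions in the example are placeholders chosen only to make the arithmetic easy to check; they are not equilibrium strategies.

```python
from typing import Callable, Tuple

Fn = Callable[[float], float]


def win_probabilities(s: float, rho: float, n1: int, n2: int,
                      phi1: Fn, phi2: Fn, F1: Fn, F2: Fn) -> Tuple[float, float]:
    """Probability that bid s wins for a class 1 (preferred) and a class 2 firm.

    Assumes n1 >= 1 and n2 >= 1; class 1 bids are divided by (1 + rho)
    for evaluation only.
    """
    # Preferred firm: its evaluated bid s/(1+rho) must undercut each class 2
    # rival, i.e. each class 2 rival's cost must exceed phi2(s / (1 + rho)).
    p1 = ((1.0 - F1(phi1(s))) ** (n1 - 1)
          * (1.0 - F2(phi2(s / (1.0 + rho)))) ** n2)
    # Nonpreferred firm: s must undercut each preferred rival's evaluated bid
    # beta1(c)/(1+rho), i.e. each class 1 rival's cost must exceed phi1((1+rho) s).
    p2 = ((1.0 - F1(phi1((1.0 + rho) * s))) ** n1
          * (1.0 - F2(phi2(s))) ** (n2 - 1))
    return p1, p2


def uniform_cdf(c: float) -> float:
    return min(max(c, 0.0), 1.0)


def bid_cost(b: float) -> float:
    """Placeholder inverse bid (firms bid their costs); not an equilibrium."""
    return b


p1, p2 = win_probabilities(0.5, 0.1, 2, 1, bid_cost, bid_cost, uniform_cdf, uniform_cdf)
print(round(p1, 4), round(p2, 4))  # -> 0.2727 0.2025
```

With $\rho = 0$ and identical classes, the two probabilities coincide, as they should when the policy introduces no asymmetry.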

Most observed preference policies use a constant preference rate to adjust the bids of qualified firms for the purposes of evaluation only. To incorporate bid preferences in the model, using this common preference rule, the standard boundary conditions must be adjusted to depend on the class of the firm. Reny and Zamir (2004) have extended the results concerning existence of equilibrium bid functions in a general asymmetric environment; these results apply to the bid-preference case. Under the most common preference policy, the equilibrium inverse-bid functions will satisfy the class-specific conditions which are revised from the general procurement model presented above. Specifically,

Right-Boundary Conditions (on Inverse-Bid Functions):

a. for all nonpreferred bidders of class ![]() ;

;

b. for all preferred bidders of class ![]() , where

, where ![]() if

if ![]() , but when

, but when ![]() , then

, then ![]() is determined by

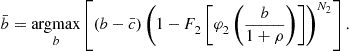

is determined by

These right-boundary conditions specify that, with a preference policy, a nonpreferred bidder will bid its cost when it has the highest cost. When just one preferred firm competes with nonpreferred firms, that firm finds it optimal to submit a bid that is greater than the highest cost, because the preference rate reduces its evaluated bid and allows it to win the auction with some probability. When, however, more than one firm receives preference, it is optimal for preferred firms to bid their costs at the right boundary. These arguments are demonstrated in Appendix A of Hubbard and Paarsch (2009).

The left-boundary conditions will also be class-specific when the preference rate $\rho$ is positive. Specifically,

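Left-Boundary Conditions (on Inverse-Bid Functions): there exists an unknown bid $\underline{b}$ such that

a. $\phi_2(\underline{b}) = \underline{c}$ for all nonpreferred bidders of class 2;

b. $\phi_1((1 + \rho)\underline{b}) = \underline{c}$ for all preferred bidders of class 1.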

These left-boundary conditions require that, when a nonpreferred firm draws the lowest cost, it tenders the lowest possible bid $\underline{b}$, whereas a preferred firm submits $(1 + \rho)\underline{b}$. This condition can be explained by an argument similar to that for the standard left-boundary condition, taking into account that preferred bids are adjusted using $1 + \rho$.

Note, too, that to ensure consistency across solutions, Hubbard and Paarsch (2009) as well as Krasnokutskaya and Seim (2011) assumed that nonpreferred players bid their costs if those costs are in the range $[\bar{c}/(1 + \rho), \bar{c}]$. Because of the preferential treatment (and assuming more than one bidder receives preferential treatment), nonpreferred players cannot win the auction when they bid higher than $\bar{c}/(1 + \rho)$. Thus, for costs in this range, many bidding strategies are consistent with a Bayes-Nash equilibrium, which is why the assumption is needed for consistency.21

In the above model, we have allowed the firms to draw costs from different distributions. If the bidders draw costs from the same distribution but are treated asymmetrically, then we still must solve an asymmetric (low-price) auction, because the discrimination among classes of bidders induces them to behave in different ways. Note, too, that unlike the canonical asymmetric auctions presented above, where the asymmetry is exogenously fixed (the distributions and utility functions are set for the bidders), in an environment with bid preferences and symmetric bidders the asymmetry is endogenous, because the preference rate $\rho$ is typically a choice variable of the procuring agency. Regardless of the source of the asymmetry, no closed-form solution exists in general. The Lipschitz condition again does not hold in a neighborhood around $\bar{c}$, so numerical methods are required.

3 Primer on Relevant Numerical Strategies

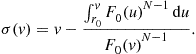

In this section, we describe several numerical strategies that have been used to solve two-point boundary-value problems similar to the ones researchers face in models of asymmetric first-price auctions. We use this section not just to introduce the strategies but also so that we can refer to them later, when discussing what researchers concerned with solving for (inverse-) bid functions at asymmetric first-price auctions have done.

3.1 Shooting Algorithms