Chapter 13. Competitor Research and Analysis

Competitor research and analysis may seem like a copycat activity, but it is not (at least not entirely). It is about understanding what your competitors are doing: examining their keyword and linking strategies while recognizing their current strengths and weaknesses. The bottom line is that you are trying to understand what is making your competitors rank.

This activity may comprise elements of emulation, but what you are really trying to do is be better than your competition. Being better (in a general sense) means providing a site with more value and better perception. In an SEO sense, it means understanding all the things that make your competitors rank.

When learning about your competition, it is good to examine their past and present activities, as well as implement ways to track their future activities. Although many tools are available for you to use to learn about your competition, for most of your work you can get by with the free tools that are available. This chapter emphasizes the free tools and research that you can use in your work.

Finding Your Competition

Before you can analyze your competitors, you need to know who your competitors are. How do you find your biggest competitors? One way to do this is to find sites that rank for your targeted keywords. The sites that sell or provide products and services similar to yours are your competitors.

There is a catch if you’re relying only on keywords, though. How do you know whether you are targeting the right keywords? What if you miss important keywords? You may be starting off in the wrong direction. This makes it all the more important to do your keyword research properly. No tool or activity will ever be perfect. You have to start somewhere.

When starting your competitor research, you will almost always want to start with your local competition. Visit the local online business directories in your niche to find potential competition. Research the Yellow Pages (Local Directory) or your area equivalent. Use your local version of Google to find businesses in your area by using your targeted keywords. Also visit the Google Local Business Center to find current advertisers. Visit the Yahoo! Directory, Dmoz.org, and BBB.org to find more competitors in your niche. Note their directory descriptions and analyze the keywords they use in these descriptions. Create a list of your top 10 to 20 local competitors.

If you have global competitors, see whether you can learn anything from their online presence. Just focusing on your local competition can be a mistake, especially if you will be providing products and services globally.

Even if your business or clients are all local, you can still pick up tips from your global equivalents. Chances are someone is doing something similar somewhere else in the world. Create a list of 10 to 20 global competitors.

You can find your competitors using a variety of online tools as well. Later in this chapter, we will explore several tools and methods that can help you identify your competitors.

Keyword-Based Competitor Research

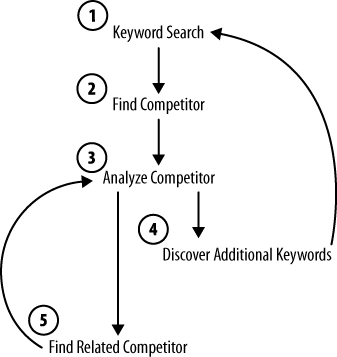

When researching your competitors, you will likely be doing many things at once. Figure 13-1 depicts the basic manual process of competitor research. You also have the option of using some of the online tools that are available to help you along the way—not only to find your competitors, but also to save you time.

The manual approach

With the manual approach, you run all the keywords on your keyword list through at least two of the major search engines (Google, Yahoo!, or Bing). Of course, not all of the search results will be those of your direct competitors. You will need to browse and research these sites to make that determination.

You might need to browse through several SERPs to get to your competition. If you do, the keywords you are targeting may not be good ones. If you find no competitor sites, you may have hit the right niche.

While going through your keyword list, analyze each competitor while finding any new keywords you may have missed. For each competitor URL, run Google’s related: command to find any additional competitors you may have missed. Repeat this process until you have run out of keywords.

Analyze your competitors’ sites using the information we already discussed regarding internal and external ranking factors. When analyzing each competitor site, pay attention to each search result and to the use of keywords within each result. Expand your research by browsing and analyzing your competitor’s site.

You can determine the kind of competitor you have just by doing some basic inspections. For starters, you may want to know whether they are using SEO. Inspect their HTML header tags for keywords and description meta tags, as these can be indications of a site using SEO.

Although the use of meta keyword tags is subsiding, many sites still use them, as they may have done their SEO a long time ago when meta keyword tags mattered. The meta description tag is important, though, as it appears in search engine results. The same can be said of the HTML <title> tag. Examine those carefully.
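
The header inspection described above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a tool discussed in this chapter; the class name HeadInspector, the function inspect_head, and the sample page source are all hypothetical.

```python
from html.parser import HTMLParser


class HeadInspector(HTMLParser):
    """Collects the <title> text and the keywords/description meta tags."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            # Meta names are matched case-insensitively.
            name = (attrs.get("name") or "").lower()
            if name in ("keywords", "description"):
                self.meta[name] = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def inspect_head(html):
    """Return (title, meta dict) for a page's HTML source."""
    parser = HeadInspector()
    parser.feed(html)
    return parser.title.strip(), parser.meta


# Hypothetical page source, for illustration only.
sample = """<html><head>
<title>Winter Clothes | Example Store</title>
<meta name="description" content="Warm winter clothes for the family.">
<meta name="Keywords" content="winter clothes, jackets, parkas">
</head><body></body></html>"""

title, meta = inspect_head(sample)
print(title)
print(meta)
```

An empty result for both meta tags does not prove a site ignores SEO, but a carefully written title and description usually suggest someone has paid attention to it.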

Continue by checking whether a robots.txt file exists. If it does, this could indicate that your competitor cares about web spiders. If your competitor is using a robots.txt file, see whether it contains a Sitemap file definition.
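
As a rough sketch of this check, Python's standard urllib.robotparser module can read a robots.txt file and report any Sitemap entries (the site_maps() method requires Python 3.8 or later). The robots.txt content, the function name summarize_robots, and the example.com URLs below are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser


def summarize_robots(text, test_url):
    """Report Sitemap entries and whether test_url is open to all spiders."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return {
        # site_maps() returns None when no Sitemap line is present.
        "sitemaps": parser.site_maps() or [],
        "allowed": parser.can_fetch("*", test_url),
    }


# Hypothetical robots.txt content, for illustration only.
robots_txt = """\
User-agent: *
Disallow: /private/
Sitemap: http://www.example.com/sitemap.xml
"""

print(summarize_robots(robots_txt, "http://www.example.com/private/page.html"))
```

In practice you would first download the competitor's robots.txt (for example, with urllib.request) and pass its text to the function.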

Sites syndicating their content will typically expose their RSS link(s) on many pages within their site. You may also subscribe to those feeds to learn of any new developments so that you can keep an eye on your competition. If a site contains a Sitemap (HTML, XML, etc.), scan through it, as it typically will contain a list of your competitor’s important URLs as well as important keywords.

For each competitor URL you find, you should do more research to determine the URL’s Google PageRank and Alexa rank, the age of the site and the page, the page copy keywords, the meta description, the size of the Google index, and the keyword search volume.

As you can see, doing all of this manually will take time. There ought to be a better (easier) way! And there is.

Utilizing SEO tools and automation

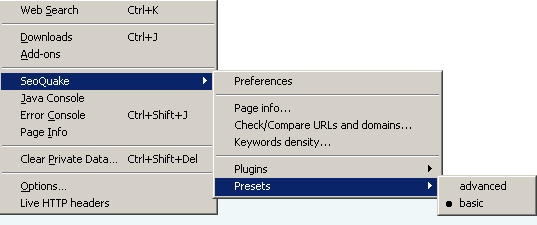

Automation cannot replace everything. You still need to visit each competitor’s site to do proper research and analysis. But automation does help in the collection of data such as Google PageRank, Google index size, and so forth. This is where tools such as SeoQuake can be quite beneficial.

You can install SeoQuake in either Firefox or Internet Explorer. It is completely free and very easy to install. It comes preconfigured with basic features, as shown in Figure 13-2.

The best way to learn about SeoQuake is to see it in action. For this small exercise, we’ll search for winter clothes in Google using the basic method as well as with the help of SeoQuake. Figure 13-3 shows how the Google SERP looks with and without SeoQuake.

SeoQuake augments each search result with its own information (at the bottom of each search result). This includes the Google PageRank value, the Google index size, the cached URL date, the Alexa rank, and many others.

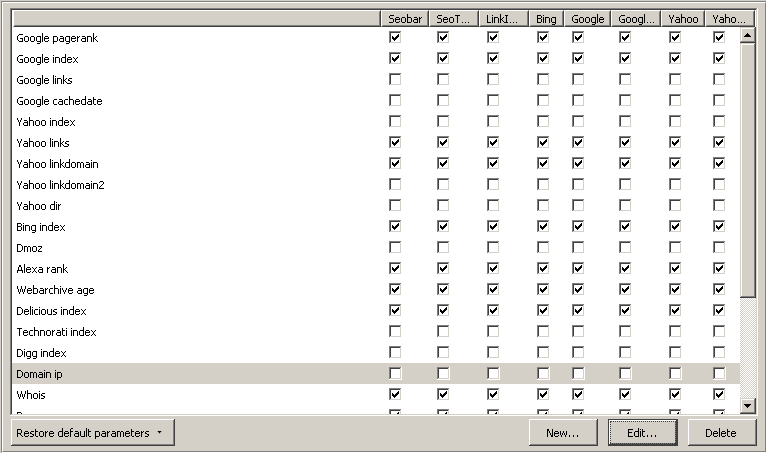

When running SeoQuake, you will see the SERP mashup with many value-added bits of information, which SeoQuake inserts on the fly. You can easily change the SeoQuake preferences by going to the Preferences section, as shown in Figure 13-4.

The beauty of the SeoQuake plug-in is in its data export feature. You can export all of the results in a CSV file that you can then view and reformat in Excel.

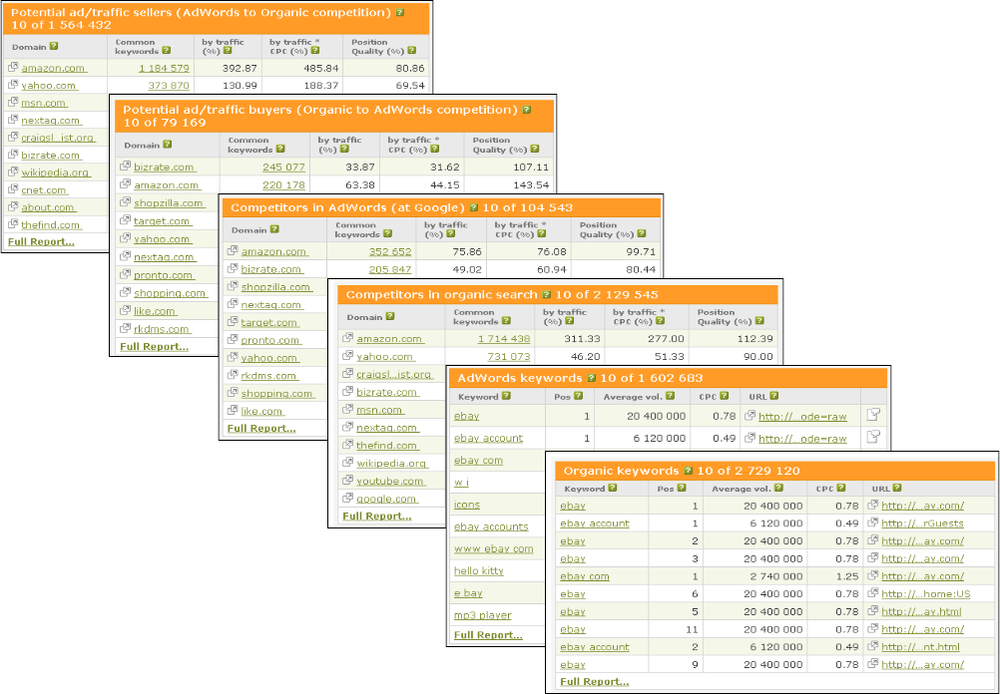

The creators of SeoQuake are also the creators of SEMRush. SEMRush provides competitor intelligence data that is available either free or commercially. You can find lots of useful information via SEMRush. Figure 13-5 shows sample output when browsing http://www.semrush.com/info/ebay.com.

You can do the same thing with your competitor URLs. Simply use the following URL format in your web browser:

    http://www.semrush.com/info/CompetitorURL.com

SEMRush provides two ways to search for competitor data. You can enter a URL or search via keywords. If you search via keywords, you will see a screen similar to Figure 13-6.

Finding Additional Competitor Keywords

Once you have your competitor list, you can do further keyword research. Many tools are available for this task.

Using the Google AdWords Keyword Tool Website Content feature

Google offers a Website Content feature in its free AdWords Keyword Tool. Using this feature, you can enter a specific URL to get competitive keyword ideas based on the URL. So far, I have found this feature to be of limited use.

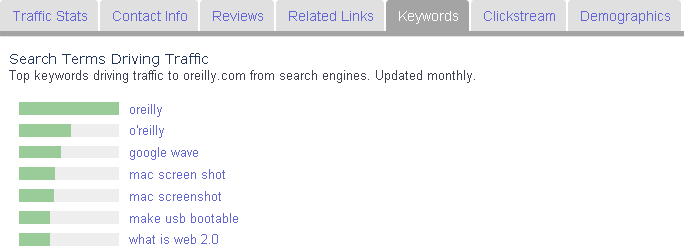

Using Alexa keywords

You can also use Alexa to find some additional keyword ideas. More specifically, Alexa can give you keywords driving traffic to a particular site. Figure 13-7 shows a portion of the results of a keyword search for oreilly.com. The actual Alexa screen shows the top 30 keyword queries (as of this writing).

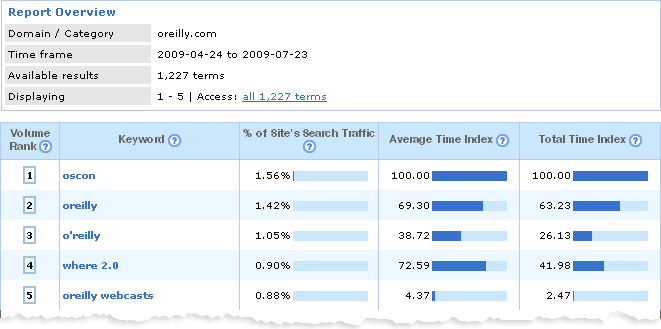

Using Compete keywords

You can use Compete to get similar data. The professional version gets you all of the data in the report. Figure 13-8 shows similar keyword data when searching for oreilly.com.

The free version of Compete gives you the top five keywords based on search volume. Although you can get by with free tools for obtaining competitor keyword data, if you do SEO on a daily basis you will need to get professional (paid) access to Compete.com. You may also want to consider using Hitwise.

Additional competitive keyword discovery tools

In addition to free tools, a variety of commercial tools are available for conducting competitor keyword research. Table 13-1 lists some of the popular ones.

Many other tools are available that focus on keyword research. Refer to Chapter 11 for more information.

Competitor Backlink Research

Backlinks are a major ranking factor. Finding your competitors’ backlinks is one of the most important aspects of competitor analysis. The following subsections go into more detail.

Basic ways to find competitor backlinks

The most popular command for finding backlinks is the link: command, which you can use in both Google and Yahoo! Site Explorer.

In addition, you can use many other commands to find references to your competitor links. The following commands may be helpful:

    "www.competitorURL.com"
    inurl:www.competitorURL.com
    inanchor:www.competitorURL.com
    intitle:www.competitorURL.com
    allintitle:www.competitorURL.com
    allintitle:www.competitorURL.com site:.org
    site:.edu inanchor:www.competitorURL.com
    site:.gov inanchor:www.competitorURL.com

You can also use variations of these commands. Although some of these commands may not yield backlinks per se, you will at least be able to see where these competitors are mentioned. The more you know about your competition, the better. To expedite the process of finding your competitors’ backlinks, you can use free and commercially available backlink checker tools.
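
If you have a long competitor list, typing these commands by hand gets tedious. The following Python sketch generates the same query strings for any domain and turns each one into a Google search URL that you can open in a browser. The function names backlink_queries and search_url are hypothetical, and www.example.com is a placeholder domain.

```python
from urllib.parse import urlencode


def backlink_queries(domain):
    """Build the reference-finding queries for one competitor domain."""
    return [
        f'"{domain}"',
        f"inurl:{domain}",
        f"inanchor:{domain}",
        f"intitle:{domain}",
        f"allintitle:{domain}",
        f"allintitle:{domain} site:.org",
        f"site:.edu inanchor:{domain}",
        f"site:.gov inanchor:{domain}",
    ]


def search_url(query):
    """Return a Google search URL for the query, suitable for manual browsing."""
    return "https://www.google.com/search?" + urlencode({"q": query})


for query in backlink_queries("www.example.com"):
    print(query, "->", search_url(query))
```

The URLs are meant for opening by hand; the sketch deliberately does not fetch them automatically.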

Free backlink checkers

Some of the free backlink checker tools available today are just mashups of Yahoo! Site Explorer data. With the latest agreement between Yahoo! and Microsoft, it will be interesting to see what happens to Yahoo! Site Explorer, as Bing will become the search provider for Yahoo!’s users.

Table 13-2 provides a summary of some of the most popular backlink checker tools currently available on the Internet. When choosing a tool, you may want to make sure it shows information such as associated Google PageRank, use of nofollow, and link anchor text.

Commercially available backlink checkers

Free tools may be sufficient for what you are looking for. However, the best of the commercially available tools give you the bigger picture. You get to see more data. You also save a lot of time. However, no tool will be perfect, especially for low-volume keywords. Table 13-3 lists some of the popular commercially available backlink checkers.

Analyzing Your Competition

You can use many tools to analyze your research data. Many of these tools provide similar information. It is best to use several tools to gauge your competitors. Even Google’s AdWords Keyword Tool is not perfect.

It will take some time for you to find what works and what doesn’t (especially with the free tools). Whatever the case may be, make sure you use several tools before drawing your conclusions. Try out the free tools and see whether you find them to be sufficient.

Historical Analysis

After you know who some of your competitors are, you will be ready to roll up your sleeves and conduct a more in-depth competitor analysis. We’ll start by examining how your competitors got to where they are, by doing some historical research.

The Internet Archive site provides an easy way to see historical website snapshots. You can see how each site evolved over time, in addition to being able to spot ownership changes. Figure 13-9 shows an example.

Note

Web archives do not store entire sites. Nonetheless, they can help you spot significant changes over time (at least when it comes to a website’s home page).

To find historical Whois information, you can use the free Who.Is service at http://www.who.is/. Alternatively, you can use the paid service offered by Domain Tools.

Web Presence and Website Traffic Analysis

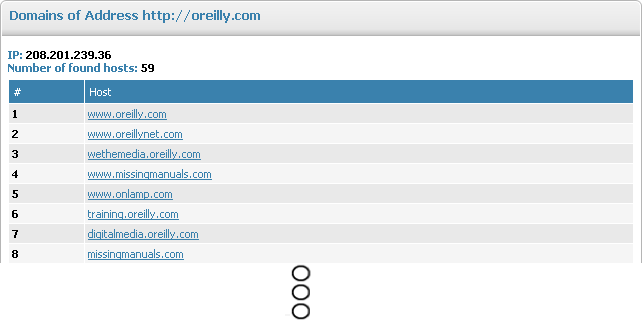

To scope your competitors’ web presence, you need to determine how many websites and subdomains they are running. Additional tools are available for estimating the traffic those sites receive.

Number of sites

Using the domain lookup tool available from LinkVendor, you can enter a domain name or IP address to get all domains running on that IP address. Figure 13-10 shows partial results from a search for all domains running on the Oreilly.com IP address (208.201.239.36).

Finding subdomains

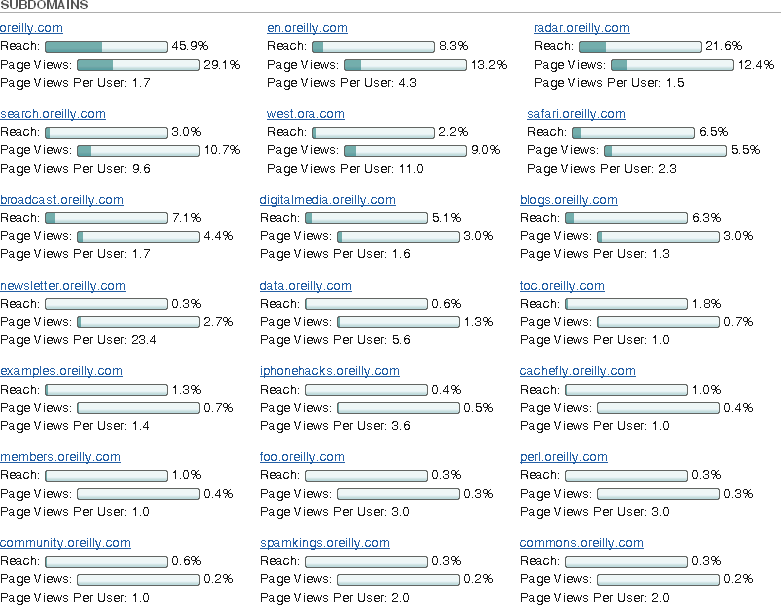

The method we discussed in the preceding section covered both domains and subdomains. If you are interested only in subdomains, you can visit the Who.Is page at http://www.who.is/website-information/. Figure 13-11 shows a portion of all the subdomains of Oreilly.com.

Figure 13-11 also shows the reach percentage, which is calculated based on the number of unique visitors to the website from a large sample of web users; the page view percentage, which represents the number of pages viewed on a website for each 1 million pages viewed on the Internet (these numbers are based on a large sample of web users); and the number of page views per user. As this data is aggregated by analyzing web usage from a large user pool, these are only approximated values. For more information, visit http://www.whois.is. The benefit of these numbers is that you can compare each subdomain in terms of popularity and relative importance.

Hosting and ownership information

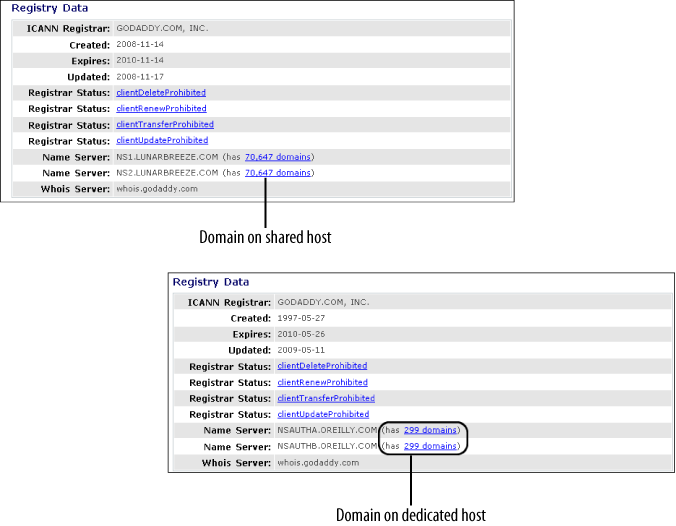

By looking at IP-related data, you can deduce several things. For instance, you can make educated guesses as to the type of hosting your competitors are using. If your competitors are running on shared hosts, this should give you a good indication of their web budget and what stage of the game they are in. There is nothing wrong with hosting sites on shared hosts. However, the big players will almost always use a dedicated host.

Figure 13-12 illustrates the two extremes. Utilizing the Registry Data section of the service provided at http://whois.domaintools.com/, we can see that the top screen fragment belongs to a shared hosting site, as its parent DNS server is also home to another 70,646 domains. In the bottom part of the figure, we can see the Oreilly.com information. In this case, even the DNS name servers are of the same domain (Oreilly.com), which indicates a more serious intent and, probably, dedicated hosting. Most big players will have their own DNS servers.

Geographical location information

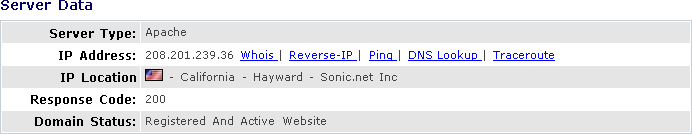

Using the same (free) Whois.domaintools.com site, you can find out your competitor’s hosting location. Figure 13-13 is a portion of the Server Data section of the results.

Estimating domain worth

Estimating the net worth of a domain is not an exact science. Many companies conduct domain name appraisals. These appraisals are typically based on a subjective formula that takes into account several factors, including the number of inbound links, Google PageRank, domain trust, and domain age.

When using this sort of data, you may be interested in seeing which websites are getting the highest appraisal (giving you another way to rank your opponents). Although these numbers are likely off, at the very least you should compare competitor domains using the same vendor to ensure that the same formula is used. Unless you are trying to buy your competitor, there is no significance in knowing the competitor’s relative worth other than to use these numbers to help you support other competitor data and findings. Both free and paid domain name appraisers are available. You can see what’s available by visiting the sites we already discussed in Chapter 3.

Determining online size

If you are dealing with small competitor sites, you can download these sites using any offline browser software, including HTTrack Website Copier, BackStreet Browser, and Offline Explorer Pro. You can also find their relative search engine index size by using the site: command on Google.com, Yahoo.com, and Bing.com.

Estimating Website Traffic

The following subsections discuss details of using Alexa and Compete to estimate competitor traffic.

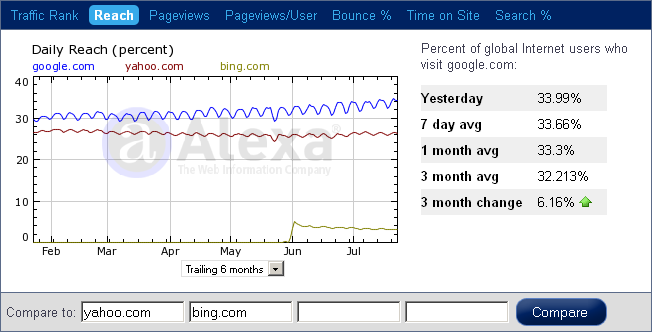

Alexa Reach

Although some people question the accuracy of data provided by Alexa, you can get some comparative data from Alexa. Figure 13-14 compares Google.com, Yahoo.com, and Bing.com. As you can see in the figure, Alexa provides several different tools. It collects its data from users who have installed the Alexa toolbar. In this example, we are showing the Reach functionality. According to Alexa:

Reach measures the number of users. Reach is typically expressed as the percentage of all Internet users who visit a given site. So, for example, if a site like yahoo.com has a reach of 28%, this means that of all global Internet users measured by Alexa, 28% of them visit yahoo.com. Alexa’s one-week and three-month average reach are measures of daily reach, averaged over the specified time period. The three-month change is determined by comparing a site’s current reach with its values from three months ago.
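
To make the averaging concrete, here is a small worked example in Python. The daily reach values are invented, and treating the three-month change as a relative percentage is an assumption; Alexa's description above does not spell out the exact formula.

```python
def average_reach(daily_reach):
    """Mean of daily reach percentages over a period."""
    return sum(daily_reach) / len(daily_reach)


def reach_change(current, three_months_ago):
    """Relative change in percent (an assumed definition of 'change')."""
    return (current - three_months_ago) / three_months_ago * 100.0


# Hypothetical daily reach values (percent of measured users), not Alexa data.
week = [27.5, 28.0, 28.5, 28.0, 27.0, 28.5, 28.5]

weekly_avg = average_reach(week)
print(f"one-week average reach: {weekly_avg:.2f}%")
print(f"change vs. 25% three months ago: {reach_change(weekly_avg, 25.0):+.1f}%")
```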

Compete traffic estimates

Compete provides several free and commercially available tools for competitor research. It is one of the top competitor research tools available on the Internet. When comparing the sites of Google, Yahoo!, and Bing, we get a graph similar to Figure 13-15.

As you can see, the data differs considerably from Alexa’s graph. This highlights the need to use several tools when doing your research to make better estimates.

Estimating Social Networking Presence

The social web is a web of the moment. What is hot now may not be hot one day, one week, or one year from now. You should examine the major social networking sites to see whether your competitors are generating any buzz.

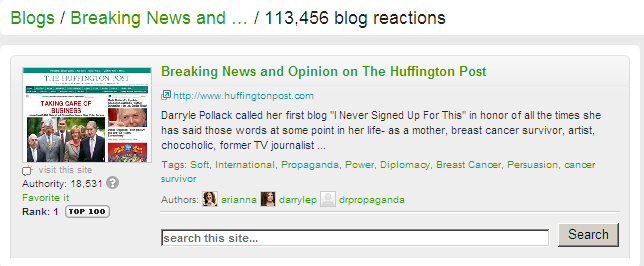

Technorati blog reactions and Authority

You can visit the Technorati website (http://technorati.com/) and enter your competitor’s URL in the search box to see whether your competitor received any blog reactions. Technorati defines blog reactions as “the number of links to the blog home page or its posts from other blogs in the Technorati index.” Figure 13-16 illustrates the concept of blog reactions.

Technorati also offers an Authority feature, which it defines as “...the number of unique blogs linking to this blog over the last six months. The higher the number, the more Technorati Authority the blog has.” You can see the Technorati Authority number in Figure 13-17 just under the blog thumbnail. This number will almost always be lower than the number of blog reactions.

Digg.com (URL history)

You can also go to http://digg.com and search for your competitor URLs to find URLs that got “dugg.” To simplify things, you can search for the root (competitor) domain URL to get multiple results (if any). Figure 13-17 illustrates the concept.

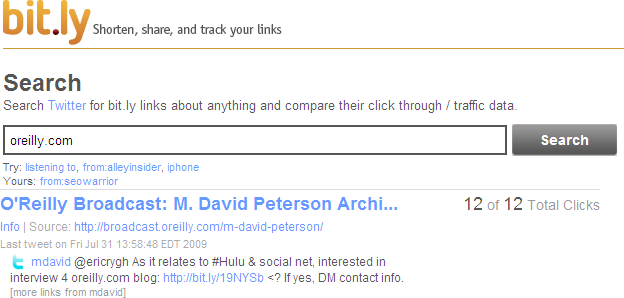

Additional tips

In addition to using Technorati and Digg, you can also go to URL shortener sites (e.g., http://bit.ly/) and search for your competitor root domain URL. You will get results similar to those shown in Figure 13-18 (if any). From there, you can see the popular links that were shortened by anyone interested.

Go to http://search.twitter.com/ and enter the base URL. See whether you get any tweet results. Continue your search on Facebook, MySpace, and others.

Competitor Tracking

In the previous sections, we discussed the different tools and methodologies of researching and analyzing your competitors. In this section, we’ll discuss how to track your competitors. You can track your competitors in many ways. First we’ll address how you may want to track a competitor’s current state, and then we’ll talk about future tracking.

Current State Competitor Auditing

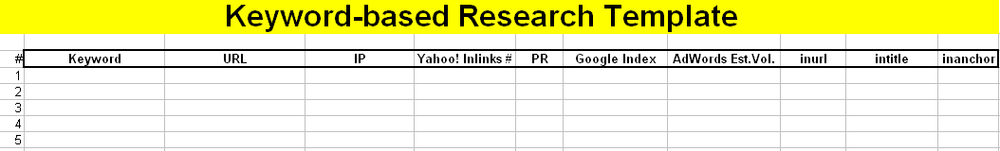

When collecting current state competitor data, it helps to use an Excel spreadsheet. For example, you can create a spreadsheet to capture keywords alongside the top URLs and other related metrics. Figure 13-19 shows one such example.

In this template, you can enter the keyword, URL, IP address, Yahoo! inlinks, Google PageRank, Google index size, AdWords estimated monthly volume, “inurl” index count, “intitle” index count, and “inanchor” index count. Note that the URL would be the top URL for a particular keyword on a particular search engine. You can reuse this template for all of the major search engines.
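
If you prefer to build the audit file programmatically, the following Python sketch writes the same columns to a CSV file that Excel can open. The column labels are paraphrased from the description above rather than copied from the book's spreadsheet, and the sample row is invented.

```python
import csv
import io

# Column labels paraphrased from the template description (an assumption).
COLUMNS = [
    "Keyword", "URL", "IP address", "Yahoo! inlinks", "Google PageRank",
    "Google index size", "AdWords monthly volume",
    "inurl count", "intitle count", "inanchor count",
]


def write_audit(rows, out):
    """Write audit rows (dicts keyed by COLUMNS) as CSV to a file-like object."""
    writer = csv.DictWriter(out, fieldnames=COLUMNS)  # missing keys become ""
    writer.writeheader()
    writer.writerows(rows)


# Hypothetical entry; metrics not yet collected are simply left blank.
rows = [{"Keyword": "winter clothes", "URL": "http://www.example.com/",
         "Google PageRank": 5}]

buffer = io.StringIO()
write_audit(rows, buffer)
print(buffer.getvalue())
```

To write to disk instead, open the target with open("audit.csv", "w", newline="") and pass that file object in place of the buffer. Keep one file per search engine, mirroring the advice to reuse the template.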

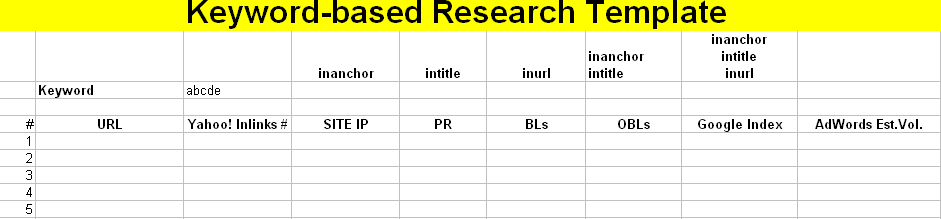

You can also take a much more detailed approach by using the template shown in Figure 13-20. In this case, you are using similar information with several major differences. You are recording the top 10 or 20 competitors for every keyword. You are also recording the number of backlinks (BLs) as well as the number of other backlinks (OBLs) found on the same backlink page. You would have to replicate this form for all of the keywords, and that would take much more effort.

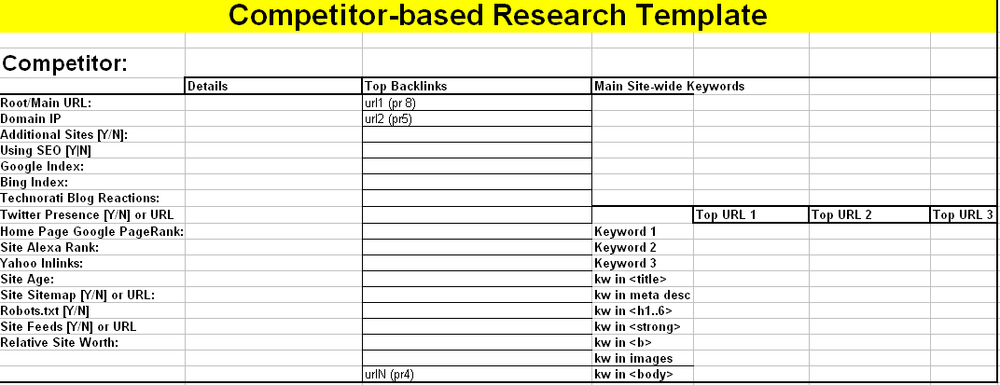

To make your research tracking more complete, you should also spend some time analyzing each competitor manually (in more detail). Figure 13-21 shows an example template you could use in this case. The spreadsheet contains several major areas, each detailing different aspects of a particular competitor.

You don’t have to follow this exact format; you can make many different permutations when documenting competitor data. Once you become familiar with your chosen tool, you can customize these templates to your own style.

You should be familiar with the metrics in Figure 13-21, as we already covered all of them throughout the chapter. The spreadsheet is available for download at http://book.seowarrior.net.

Future State Tracking

Performing regular competitor audits should be your homework. You should watch your existing competitors, but also any new ones that might show up unexpectedly. The more proactive you are, the faster you can react.

Existing competitor tracking

So, you’ve done your due diligence and have documented your (current state) competitor research. At this point, if you want to be proactive, you should think of ways to follow your competition. You want to be right on their tail by watching their every move.

As we already discussed, you could subscribe to their feeds. You could also set up a daily monitor script to watch for changes in their home pages or any other pages of interest. You could alter the monitor script (as we discussed in Chapter 6) by checking for content size changes or a particular string that could signal a change in a page.
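
The change check itself can be sketched as follows. This is not the monitor script from Chapter 6; it is an independent Python illustration of the two signals mentioned above (a content size change and the appearance of a particular string). The function names and sample pages are hypothetical, and downloading the page is left out.

```python
import hashlib


def snapshot(content):
    """Summarize a page body (bytes) so it can be compared on the next run."""
    return {"size": len(content), "sha256": hashlib.sha256(content).hexdigest()}


def detect_change(old, new_content, watch_string=None):
    """Compare new page bytes with an earlier snapshot; return (new snapshot, reasons)."""
    new = snapshot(new_content)
    reasons = []
    if new["size"] != old["size"]:
        reasons.append(f"size changed from {old['size']} to {new['size']} bytes")
    elif new["sha256"] != old["sha256"]:
        reasons.append("content changed (same size)")
    if watch_string is not None and watch_string.encode("utf-8") in new_content:
        reasons.append(f'found "{watch_string}"')
    return new, reasons


# Hypothetical page bodies from two consecutive daily runs.
baseline = snapshot(b"<html>Spring sale</html>")
baseline, reasons = detect_change(
    baseline, b"<html>Winter sale now on</html>", watch_string="Winter sale"
)
print(reasons)
```

A scheduled job would store the snapshot between runs and send a notification whenever the list of reasons is not empty.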

New competitor detection

Typically, you can detect new competitors by performing competitor research and analysis again and again. You can also monitor social networking buzz by setting up feeds that key off of particular keywords of interest.

For example, you can use custom feeds from Digg.com to monitor newly submitted URLs based on any keyword. The general format is:

    http://digg.com/rss_search?s=keyword

So, if you want to subscribe to a feed on the keyword loans, you could add the Digg.com feed to your feed reader by using the following link:

    http://digg.com/rss_search?s=loans
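
When you track many keywords, you can generate the feed links rather than typing them. The short Python sketch below follows the URL format given above; the function name digg_feed_url is hypothetical, and the sketch assumes the feed endpoint still accepts the s parameter as described.

```python
from urllib.parse import urlencode

# Feed endpoint as given in the text; assumed to accept an "s" query parameter.
DIGG_FEED_BASE = "http://digg.com/rss_search"


def digg_feed_url(keyword):
    """Return the feed URL for one keyword or phrase, URL-encoded."""
    return DIGG_FEED_BASE + "?" + urlencode({"s": keyword})


for keyword in ["loans", "car loans"]:
    print(digg_feed_url(keyword))
```

Encoding matters for multiword phrases, because spaces and punctuation cannot appear raw in a URL.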

The sky is the limit when it comes to competitor tracking and detection. All you need is a bit of creativity and the desire to be proactive. Digg.com is only one of the available platforms; you can do similar things on all the other tracking and detection platforms we discussed in this chapter.

Automating Search Engine Rank Checking

We’ll end this chapter by designing a simple automated rank-checking script. Other programs on the Internet can accomplish the same thing. Most of them will stop working anytime search engines change their underlying HTML structure, however.

A few years ago, Google provided the SOAP API toolkit to query its search index database. To use this service, webmasters had to register on the Google website to obtain their API key, which would allow them to make 1,000 queries per day. This service is no longer available.

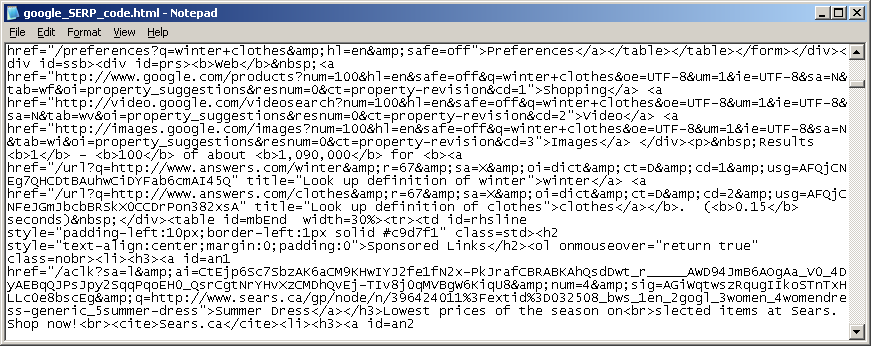

But not all search engines are the same, and finding rankings on several search engines for specific keywords and URLs can be a tedious exercise if done manually. Fortunately, there is an easier way: web scraping. To do this, you need to understand the underlying HTML structure of SERPs.

If you try to view the HTML code of Google and Bing, you will see something similar to Figure 13-22. As you can see, this is barely readable. You need to reformat this code so that you can analyze it properly. Many applications can accomplish this. One such application is Microsoft FrontPage.

Microsoft FrontPage has a built-in HTML formatter that is available in Code view by simply right-clicking over any code fragment. After you format the HTML code, things start to make sense. Identifying HTML patterns is the key task. At the time of this writing, Google’s organic search results follow this pattern:

    <!-- start of organic result 1 -->
    <li class="g">
      <h3 class="r">
        <a href="http://www.somedomain.com/" class="l"
           onmousedown="return clk(this.href,'','','res','7','')">
          Some text <em>Some Keyword</em>
        </a>
      </h3>
      <!--some other text and tags-->
    </li>
    <!--end of organic result 1 -->
    ...
    ...
    <!-- start of organic result N -->
    <li class="g">
      <h3 class="r">
        <a href="http://www.somedomain.com/" class="l"
           onmousedown="return clk(this.href,'','','res','7','')">
          Some text <em>Some Keyword</em>
        </a>
      </h3>
      <!--some other text and tags-->
    </li>
    <!--end of organic result N -->

The HTML comments (as indicated in the code fragment) are there only for clarity and do not appear in the actual Google search results page. Similarly, the Bing search results page has the following pattern:

    <!-- start of organic result 1 -->
    <li>
      <div class="sa_cc">
        <div class="sb_tlst">
          <h3><a href="http://somedomain.com/"
                 onmousedown="return si_T('&ID=SERP,245')">search result description...</a></h3>
          <!--other code-->
        </div>
      </div>
    </li>
    <!-- end of organic result 1 -->
    ...
    ...
    <!-- start of organic result N -->
    <li>
      <div class="sa_cc">
        <div class="sb_tlst">
          <h3><a href="http://somedomain.com/"
                 onmousedown="return si_T('&ID=SERP,246')">search result description...</a></h3>
          <!--other code-->
        </div>
      </div>
    </li>
    <!-- end of organic result N -->

Taking all these patterns into consideration, it should now be possible to create scripts to automate ranking checking. That is precisely what the getRankings.pl Perl script does, which you can find in Appendix A.
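
To illustrate the idea independently of the Perl script, here is a minimal Python sketch that applies the Google pattern shown earlier: it collects the link inside each h3 element with class "r" and reports the positions at which a target URL appears. It is not a port of getRankings.pl; the class and function names are hypothetical, and the sample markup is hand-written to match the pattern rather than taken from a live results page.

```python
from html.parser import HTMLParser


class GoogleResultParser(HTMLParser):
    """Collects hrefs of links found inside <h3 class="r"> elements."""

    def __init__(self):
        super().__init__()
        self.urls = []
        self._in_result_heading = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "h3" and attrs.get("class") == "r":
            self._in_result_heading = True
        elif tag == "a" and self._in_result_heading and attrs.get("href"):
            self.urls.append(attrs["href"])

    def handle_endtag(self, tag):
        if tag == "h3":
            self._in_result_heading = False


def find_positions(serp_html, target):
    """Return 1-based positions of results whose URL contains target."""
    parser = GoogleResultParser()
    parser.feed(serp_html)
    return [i for i, url in enumerate(parser.urls, start=1) if target in url]


# Hand-written sample following the pattern above (not real search results).
sample_serp = """
<li class="g"><h3 class="r"><a href="http://www.example.com/" class="l">
  Some text <em>best books</em></a></h3></li>
<li class="g"><h3 class="r"><a href="http://www.example.org/list" class="l">
  Other text</a></h3></li>
<li class="g"><h3 class="r"><a href="http://www.example.com/books" class="l">
  More text</a></h3></li>
"""

print(find_positions(sample_serp, "www.example.com"))
```

Matching on a substring is the simplest possible comparison; a sturdier version would parse each URL and compare hostnames. As with any scraper, the class names must be updated whenever the search engine changes its markup.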

Before running this script, ensure that you have the wget.exe utility in your execution path. You can run the script by supplying a single URL and keyword, or by supplying a keyword phrase as shown in the following fragment:

    perl getRankings.pl [TargetURL] [Keyword]

OR

    perl getRankings.pl [TargetURL] [Keyword1] [Keyword2] ...

Here are real examples:

    perl getRankings.pl www.randomhouse.com best books
    perl getRankings.pl en.wikipedia.org/best-selling_books best books

If you run the first example, the eventual output would look like the following:

    perl getRankings.pl www.randomhouse.com best books
    Ranking Summary Report
    ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
    Keyword/Phrase: best books
    Target URL: www.randomhouse.com
    ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
    Google.....: 1,2
    Bing.......: 40
    Note: Check with specific SE to ensure correctness.

The output of the script provides the rankings summary. The summary information should be self-explanatory. The http://www.randomhouse.com URL shows up on Google in positions 1 and 2, while on Bing it shows up in position 40.

These differing positions show that ranking algorithms vary among search engines. Also note that the script uses the top 100 search results for each of the two search engines. You can expand the script to handle 1,000 results or to parse stats from other search engines using similar web scraping techniques.

Warning

Do not abuse the script by issuing too many calls. If you do, Google and others will temporarily ban your IP from further automated queries, and you will be presented with the image validation (CAPTCHA) form when you try to use your browser for manual searches.

The intent of this script is to simplify the gathering of search engine rankings. Depending on the number of websites you manage, you may still be hitting search engines significantly. Consider using random pauses between each consecutive query. Also, search engine rankings do not change every second or every minute. Running this script once a week or once a month should be more than plenty in most cases. Use common sense while emulating typical user clicks.
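
The random pauses suggested above might look like the following in Python. This is a sketch of the throttling idea only; the function name run_with_pauses, the pause bounds, and the stand-in check function are assumptions, and the sleep function is a parameter so that the demonstration can run without actually waiting.

```python
import random
import time


def run_with_pauses(queries, check, min_pause=5.0, max_pause=15.0, sleep=time.sleep):
    """Call check(query) for each query, pausing a random interval between calls."""
    results = {}
    for i, query in enumerate(queries):
        if i > 0:
            # A random delay makes consecutive queries look less mechanical.
            sleep(random.uniform(min_pause, max_pause))
        results[query] = check(query)
    return results


# Demonstration with a stand-in check and a fake sleep that only reports the delay.
def fake_sleep(seconds):
    print(f"(would pause {seconds:.1f} seconds)")


demo = run_with_pauses(
    ["best books", "winter clothes"],
    check=lambda q: f"checked {q}",  # replace with a real rank lookup
    sleep=fake_sleep,
)
print(demo)
```

In real use, leave the sleep argument at its default so that the pauses actually happen, and keep the bounds generous.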

Summary

This chapter focused on competitor research and analysis and many of the tools you can use to perform these tasks properly and efficiently. First we examined ways to find your competitors. We talked about the manual approach, and then explored ways to automate some of the manual tasks. We explored the SeoQuake plug-in in detail.

We also examined ways to find competitor keywords as well as competitor backlinks. In addition, we talked about different ways to analyze your competition. We discussed historical analysis, web presence, and website traffic analysis.

The last section covered competitor tracking. We explored different ways to audit your competitors, and at the end of the chapter we discussed a script that can automate the process of checking competitor search engine rankings for specific keywords.