Maintaining storage infrastructure

As an IT environment grows and is renewed, so must the storage infrastructure. One of the many benefits of the IBM FlashSystem family software (IBM Spectrum Virtualize) is that it greatly simplifies the storage management tasks that system administrators need to perform.

This chapter highlights guidance for the maintenance activities of storage administration by using the IBM FlashSystem family software that is installed on the product. This guidance can help you to maintain your storage infrastructure with the levels of availability, reliability, and resiliency that are demanded by today’s applications, and to keep up with storage growth needs.

This chapter concentrates on the most important topics to consider in IBM FlashSystem administration so that you can use it as a checklist. It also provides best practice tips and guidance. To simplify the Storage Area Network (SAN) storage administration tasks that you use often, such as adding new users, storage allocation and removal, or adding and removing a host from the SAN, create step-by-step, standard procedures for them.

The discussion in this chapter focuses on the IBM FlashSystem 9200 for the sake of simplicity, using screen captures and command outputs from this model. The recommendations and practices that are discussed in this chapter are applicable to the following models:

•IBM FlashSystem 5010

•IBM FlashSystem 5015

•IBM FlashSystem 5030

•IBM FlashSystem 5035

•IBM FlashSystem 5100

•IBM FlashSystem 5200

•IBM FlashSystem 7200

•IBM FlashSystem 9100

•IBM FlashSystem 9200

Note: The practices that are described in this chapter were effective in many installations of different models of the IBM FlashSystem family. These installations were performed in various business sectors for various international organizations. They all had one common need: to manage their storage environment easily, effectively, and reliably.

This chapter includes the following topics:

10.1 User interfaces

The IBM FlashSystem family provides several user interfaces to allow you to maintain your system. The interfaces provide different sets of facilities to help resolve situations that you might encounter. The interfaces for servicing your system connect through the 1 Gbps Ethernet ports that are accessible from port 1 of each canister.

•Use the management graphical user interface (GUI) to monitor and maintain the configuration of storage that is associated with your clustered systems.

•Use the service assistant tool GUI to complete service procedures.

•Use the command-line interface (CLI) to manage your system.

The best practice is to use the interface that is most appropriate to the task that you are attempting to complete. For example, a manual software update is best performed by using the service assistant GUI or the CLI. Running fix procedures to resolve problems or configuring expansion enclosures can be performed only by using the management GUI. Creating many volumes with customized names is best performed by scripting the CLI. To ensure efficient storage administration, become familiar with all available user interfaces.
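As a minimal sketch of the scripted approach to creating many volumes, the following Python snippet generates one mkvdisk command per volume with a customized, sequential name. The pool name, I/O group, size, and naming pattern are hypothetical placeholders, and the parameter spelling should be verified against the CLI reference for your code level before any generated command is run.

```python
def build_mkvdisk_commands(prefix, count, pool, size_gb, iogrp=0, start=1):
    """Return mkvdisk command strings with sequential, zero-padded volume names."""
    if count < 1:
        raise ValueError("count must be at least 1")
    commands = []
    for index in range(start, start + count):
        name = f"{prefix}_{index:03d}"
        commands.append(
            f"mkvdisk -mdiskgrp {pool} -iogrp {iogrp} "
            f"-size {size_gb} -unit gb -name {name}"
        )
    return commands


if __name__ == "__main__":
    # Hypothetical values: four 100 GB volumes in a pool named Pool0.
    for command in build_mkvdisk_commands("DB01", 4, "Pool0", 100):
        print(command)
```

The generated lines can be reviewed first and then run in an SSH session to the management IP address, which keeps a record of exactly what was created.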

10.1.1 Management GUI

The management GUI is the primary tool that is used to service your system. Regularly monitor the status of the system using the management GUI. If you suspect a problem, use the management GUI first to diagnose and resolve the problem. Use the views that are available in the management GUI to verify the status of the system, the hardware devices, the physical storage, and the available volumes.

To access the Management GUI, start a supported web browser and go to https://<flashsystem_ip_address>, where the <flashsystem_ip_address> is the management IP address set when the clustered system is created.

For more information about the task menus and functions of the Management GUI, see Chapter 4 of Implementing the IBM System Storage SAN Volume Controller with IBM Spectrum Virtualize Version 8.4, SG24-8491.

10.1.2 Service assistant tool GUI

The service assistant interface is a browser-based GUI that can be used to service individual node canisters in the control enclosures.

Important: If used incorrectly, the service actions that are available through the service assistant can cause loss of access to data or even data loss.

You connect to the service assistant on one node canister by entering the service IP address. If there is a working communications path between the node canisters, you can view status information and perform service tasks on the other node canister by making the other node canister the current node. You do not have to reconnect to the other node. On the system itself, you can also access the service assistant interface by using the technician port.

The service assistant only provides facilities to help you service control enclosures. Always service the expansion enclosures by using the management GUI.

You can also complete the following actions using the service assistant:

•Collect logs to create and download a package of files to send to support personnel.

•Provide detailed status and error summaries.

•Remove the data for the system from a node.

•Recover a system if it fails.

•Install a code package from the support site or rescue the code from another node.

•Update code on node canisters manually.

•Configure a control enclosure chassis after replacement.

•Change the service IP address that is assigned to Ethernet port 1 for the current node canister.

•Install a temporary SSH key if a key is not installed and CLI access is required.

•Restart the services used by the system.

To access the Service Assistant Tool GUI, start a supported web browser and go to https://<flashsystem_ip_address>/service, where <flashsystem_ip_address> is the service IP address for the node canister or the management IP address for the system on which you want to work.

10.1.3 Command-line interface

The system CLI is intended for use by advanced users who are confident using a CLI. Up to 32 simultaneous interactive Secure Shell (SSH) sessions to the management IP address are supported.

Nearly all the functions that are offered by the CLI are available through the management GUI. However, the CLI does not provide the fix procedures that are available in the management GUI. Alternatively, use the CLI when you require a configuration setting that is unavailable in the management GUI.

Entering help in a CLI displays a list of all available commands. You have access to a few other UNIX commands in the restricted shell, such as grep and more, which are useful in formatting output from the CLI commands. Reverse-i-search (Ctrl+R) is also available. Table 10-1 shows a list of UNIX commands:

Table 10-1 UNIX commands available in the CLI

| UNIX command | Description |
|---|---|
| grep | Filter output by keywords |
| more | Moves through output one page at a time |
| sed | Filters output |
| sort | Sorts output |
| cut | Removes individual columns from output |
| head | Display only first lines |
| less | Moves through the output one page at a time |
| tail | Display only last lines |
| uniq | Hides any duplicates in the output |
| tr | Translates characters |
| wc | Counts lines, words and characters in the output |
| history | Display command history |
| scp | Secure copy protocol |

For more information about command reference and syntax, see the following resources:

Service command-line interface

Service CLI commands also can be run on a specific node. To run such a command in this way, log in to the service IP address of the node that requires servicing.

For more information about the use of the service command line, see this IBM Documentation web page.

USB command interface

When a USB flash drive is inserted into one of the USB ports on a node, the software searches for a control file (satask.txt) on the USB flash drive and runs the command that is specified in the file. Using the USB flash drive is required in the following situations:

•When you cannot connect to a node canister in a control enclosure using the service assistant and you want to see the status of the node.

•When you do not know, or cannot use, the service IP address for the node canister in the control enclosure and must set the address.

•When you have forgotten the superuser password and must reset the password.

For more information about the use of the USB port, see this IBM Documentation web page.

Technician port

The technician port is an Ethernet port on the back panel of the IBM FlashSystem product that you can use to configure the node. You can use the technician port to do most of the system configuration operations, including the following tasks:

•Defining a management IP address

•Initializing a new system

•Servicing the system

For more information about the use of the Technician port, see this IBM Documentation web page.

10.2 Users and groups

Almost all organizations have IT security policies that enforce the use of password-protected user IDs when their IT assets and tools are used. However, some storage administrators still use generic shared IDs, such as superuser, admin or root, in their management consoles to perform their tasks. They might even use a factory-set default password. Their justification might be a lack of time, forgetfulness, or the fact that their SAN equipment does not support the organization’s authentication tool.

SAN storage equipment management consoles often do not provide direct access to stored data, but one can easily shut down (accidentally or deliberately) a shared storage controller and any number of critical applications along with it. Moreover, having individual user IDs set for your storage administrators allows much better auditing of changes if you must analyze your logs.

IBM FlashSystem supports the following authentication methods:

•Local authentication using a password

•Local authentication using SSH keys

•Remote authentication using Lightweight Directory Access Protocol (LDAP) (Microsoft Active Directory or IBM Security Directory Server)

Local authentication is appropriate for small, single-enclosure environments. Larger environments with multiple clusters and enclosures benefit from the ease of maintenance that single sign-on (SSO) with remote authentication, for example through LDAP, provides.

By default, the following user groups are defined:

•Monitor: Users with this role can view objects but cannot manage the system or its resources. Support personnel can be assigned this role to monitor the system and to determine the cause of problems. This role must be assigned to the IBM Storage Insights user. For more information about IBM Storage Insights, see Chapter 9, “Implementing a storage monitoring system” on page 387.

•Copy Operator: Users with this role have monitor role privileges and can create, change, and manage all Copy Services functions.

•Service: Users can set the time and date on the system, delete dump files, add and delete nodes, apply service, and shut down the system. Users can also perform the same tasks as users in the monitor role.

•Administrator: Users with this role can access all functions on the system except those that deal with managing users, user groups, and authentication.

•Security Administrator: Users with this role can access all functions on the system, including managing users, user groups, and user authentication.

•Restricted Administrator: Users with this role can complete some tasks, but are restricted from deleting certain objects. Support personnel can be assigned this role to solve problems.

•3-Site Administrator: Users with this role can configure, manage, and monitor 3-site replication configurations through certain command operations only available on the 3-Site Orchestrator.

•vStorage Application Programming Interface (API) for Storage Awareness (VASA) Provider: Users with this role can manage virtual volumes (vVols) that are used by VMware vSphere and managed through IBM Spectrum Control software.

•FlashCopy Administrator: Users with this role can use the FlashCopy commands to work with FlashCopy methods and functions. For more information, see this IBM Documentation web page.

In addition to standard groups, you can also configure ownership groups to manage access to resources on the system. An ownership group defines a subset of users and objects within the system. You can create ownership groups to further restrict access to specific resources that are defined in the ownership group.

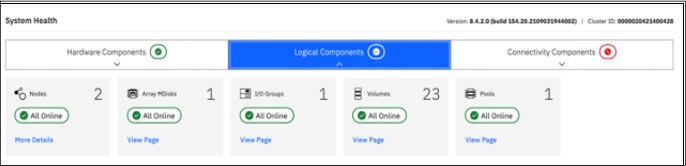

Users within an ownership group can view or change only resources within the ownership group in which they belong. For example, you can create an ownership group for database administrators to provide monitor-role access to a single pool used by their databases. Their views and privileges in the management GUI are automatically restricted, as shown in Figure 10-1.

Figure 10-1 System Health Logical Components view

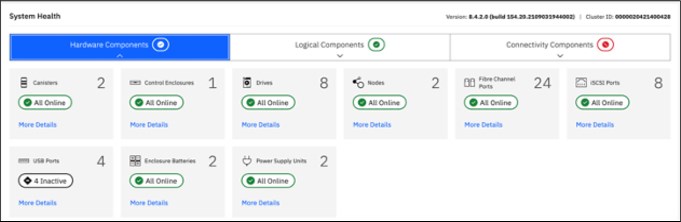

Figure 10-2 shows the Dashboard System Health hardware components view.

Figure 10-2 System Health Hardware Components view

Regardless of the authentication method you choose, complete the following tasks:

•Create individual user IDs for your Storage Administration staff. Choose user IDs that easily identify the user and meet your organization’s security standards.

•Add each individual user ID to the user group that has only enough privileges to perform the required tasks. For example, your first-level support staff probably require only Monitor group access to perform their daily tasks, whereas second-level support might require Restricted Administrator access. Consider using ownership groups to further restrict privileges.

•If required, create generic user IDs for your batch tasks, such as Copy Services or Monitoring. Include them in a Copy Operator or Monitor UserGroup. Never use generic user IDs with the SecurityAdmin privilege in batch tasks.

•Create unique SSH public and private keys for each administrator requiring local access.

•Store your superuser password in a safe location in accordance with your organization’s security guidelines and use it only in emergencies.
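The guidelines above can be turned into a simple audit of a user inventory. The following Python sketch is illustrative only: the inventory structure, the user names, and the `batch` flag are hypothetical, and the group name SecurityAdmin is used as it appears in the guideline about batch tasks.

```python
# Generic shared IDs that should not be used for day-to-day administration.
GENERIC_IDS = {"superuser", "admin", "root"}


def audit_user_assignments(assignments):
    """Return a list of findings for entries that break the guidelines.

    Each entry is a dict with the keys "name", "group", and "batch" (bool).
    """
    findings = []
    for entry in assignments:
        name = entry["name"]
        group = entry["group"]
        is_batch = entry.get("batch", False)
        if is_batch and group == "SecurityAdmin":
            findings.append(f"{name}: batch ID must not hold SecurityAdmin")
        if not is_batch and name.lower() in GENERIC_IDS:
            findings.append(f"{name}: generic shared ID used for interactive work")
    return findings


if __name__ == "__main__":
    # Hypothetical inventory, for example compiled by hand from the user list.
    inventory = [
        {"name": "jsmith", "group": "Monitor", "batch": False},
        {"name": "copyjob", "group": "SecurityAdmin", "batch": True},
        {"name": "admin", "group": "Administrator", "batch": False},
    ]
    for finding in audit_user_assignments(inventory):
        print(finding)
```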

10.3 Volumes

A volume is a logical disk that is presented to a host by an I/O group (pair of nodes), and within that group a preferred node serves I/O requests to the volume.

When you allocate and deallocate volumes to hosts, consider the following guidelines:

•Before you allocate new volumes to a server with redundant disk paths, verify that these paths are working well, and that the multipath software is free of errors. Fix disk path errors that you find in your server before you proceed.

•When you plan for future growth of space efficient volumes (VDisks), determine whether your server’s operating system supports the particular volume to be extended online. AIX V6.1 TL2 and lower, for example, do not support online expansion of rootvg logical unit numbers (LUNs). Test the procedure in a nonproduction server first.

•Always cross-check the host LUN ID information with the vdisk_UID of the IBM FlashSystem. Do not assume that the operating system recognizes, creates, and numbers the disk devices in the same sequence or with the same numbers as you created them in the IBM FlashSystem.

•Ensure that you delete any volume or LUN definition in the server before you unmap it in the IBM FlashSystem. For example, in AIX, remove the hdisk from the volume group (reducevg) and delete the associated hdisk device (rmdev).

•Consider enabling volume protection by using chsystem -vdiskprotectionenabled yes -vdiskprotectiontime <value_in_minutes>. Volume protection ensures that some CLI actions (most of those that either explicitly or implicitly remove host-volume mappings or delete volumes) are policed to prevent the removal of mappings to volumes or deletion of volumes that are considered active. A volume is considered active if the system detected I/O activity to the volume from any host within a specified time period (15 - 1440 minutes).

Note: Volume protection cannot be overridden by using the -force flag in the affected CLI commands. Volume protection must be disabled to carry out an activity that is blocked.

•Ensure that you explicitly remove a volume from any volume-to-host mappings and any copy services relationship to which it belongs before you delete it.

Attention: You must avoid the use of the -force parameter in rmvdisk.

•If you issue the svctask rmvdisk command for a volume that still has pending mappings, the IBM FlashSystem prompts you to confirm the action. Treat this prompt as a hint that you might have done something incorrectly.

•When you are deallocating volumes, plan for an interval between unmapping them from hosts (rmvdiskhostmap) and deleting them (rmvdisk). The IBM internal Storage Technical Quality Review Process (STQRP) asks for a minimum of 48 hours, with at least one business day in the interval, so that you can perform a quick backout if you later realize that you still need some data on that volume.
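Two of the numeric limits in these guidelines lend themselves to a small helper: the volume protection window (15 - 1440 minutes) and the 48-hour minimum between unmapping and deleting a volume. The following Python sketch is an illustration under assumptions: the function names are hypothetical, and the chsystem parameter spelling should be checked against the CLI reference for your code level.

```python
from datetime import datetime, timedelta

MIN_PROTECTION_MINUTES = 15
MAX_PROTECTION_MINUTES = 1440
MIN_UNMAP_TO_DELETE = timedelta(hours=48)


def volume_protection_command(minutes):
    """Build the chsystem command after validating the protection window."""
    if not MIN_PROTECTION_MINUTES <= minutes <= MAX_PROTECTION_MINUTES:
        raise ValueError(
            f"protection time must be {MIN_PROTECTION_MINUTES}-"
            f"{MAX_PROTECTION_MINUTES} minutes, got {minutes}"
        )
    return f"chsystem -vdiskprotectionenabled yes -vdiskprotectiontime {minutes}"


def earliest_delete_time(unmapped_at):
    """Return the earliest time at which a volume unmapped at unmapped_at may be deleted."""
    return unmapped_at + MIN_UNMAP_TO_DELETE


if __name__ == "__main__":
    print(volume_protection_command(60))
    # Hypothetical unmap time; the business-day requirement is not checked here.
    print(earliest_delete_time(datetime(2024, 1, 1, 9, 0)))
```

The helper checks only the 48-hour minimum. Whether the interval also spans a business day depends on the local calendar and is left to the administrator.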

For more information about volumes, see Chapter 5, “Volume types” on page 199.

10.4 Hosts

A host is a computer that is connected to the SAN switch through Fibre Channel (FC), iSCSI, or other protocols.

When you add and remove hosts in the IBM FlashSystem, consider the following guidelines:

•Before you map new servers to the IBM FlashSystem, verify that they are all error free. Fix errors that you find in your server and IBM FlashSystem before you proceed. In the IBM FlashSystem, pay special attention to anything inactive in the lsfabric command.

•Plan for an interval between updating the zoning in each of your redundant SAN fabrics, such as at least 30 minutes. This interval allows for failover to occur and stabilize, and for you to be notified if unexpected errors occur.

•After you perform the SAN zoning from one server’s host bus adapter (HBA) to the IBM FlashSystem, list its worldwide port name (WWPN) by using the lshbaportcandidate command. Use the lsfabric command to verify that it was detected by the IBM FlashSystem nodes and ports that you expected. When you create the host definition in the IBM FlashSystem (mkhost), try to avoid the -force parameter. If you do not see the host’s WWPNs, it might be necessary to scan the fabric from the host. For example, use the cfgmgr command in AIX.

For more information about hosts, see Chapter 8, “Configuring hosts” on page 367.

10.5 Software updates

Because the IBM FlashSystem might be at the core of your disk and SAN storage environment, the software update procedure requires planning, preparation, and verification. However, with the appropriate precautions, an update can be conducted easily and transparently to your servers and applications. This section highlights applicable guidelines for the IBM FlashSystem update.

Most of the following sections explain how to prepare for the software update. These sections also present version-independent guidelines on how to update the IBM FlashSystem family systems and flash drives.

Before you update the system, ensure that the following requirements are met:

•The latest update test utility is downloaded from IBM Fix Central to your management workstation. For more information, see this IBM Fix Central web page.

•The latest system update package is downloaded from IBM Fix Central to your management workstation.

•All node canisters are online.

•All errors in the system event log are addressed and marked as fixed.

•There are no volumes, MDisks, or storage systems with Degraded or Offline status.

•The service assistant IP is configured on every node in the system.

•The system superuser password is known.

•The system configuration is backed up and saved (preferably off-site), as shown in Example 10-11 on page 512.

•You can physically access the hardware.
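The requirement that no volumes, MDisks, or storage systems are in Degraded or Offline status can be checked against saved CLI listings. The following Python sketch assumes that the listing was captured with a colon delimiter (for example, by using the -delim option of the list commands) and that it has a header row containing name and status columns. The sample text is illustrative and is not real system output.

```python
def find_unhealthy(listing, delimiter=":"):
    """Return (name, status) pairs for every row whose status is not online."""
    lines = [line for line in listing.splitlines() if line.strip()]
    if not lines:
        return []
    header = lines[0].split(delimiter)
    name_col = header.index("name")
    status_col = header.index("status")
    unhealthy = []
    for line in lines[1:]:
        fields = line.split(delimiter)
        if fields[status_col] != "online":
            unhealthy.append((fields[name_col], fields[status_col]))
    return unhealthy


# Illustrative listing only; real output has more columns.
SAMPLE_LISTING = """id:name:status
0:vol_app01:online
1:vol_app02:degraded
2:vol_db01:offline
"""

if __name__ == "__main__":
    for name, status in find_unhealthy(SAMPLE_LISTING):
        print(f"{name} is {status}; resolve before updating")
```

This simple split assumes that no field value contains the delimiter itself. Choose a delimiter that does not occur in the data being listed.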

The following actions are not required, but are recommended to reduce unnecessary load on the system during the update:

•Stop all Metro Mirror, Global Mirror, or HyperSwap operations.

•Avoid running FlashCopy operations.

•Avoid migrating or formatting volumes.

•Stop collecting IBM Spectrum Control performance data for the system.

•Stop automated jobs that access the system.

•Ensure that no other processes are running on the system.

•If you want to update without host I/O, then shut down all hosts.

Note: For customers who purchased the IBM FlashSystem 9200 with a three-year warranty (9848 Models AG8 and UG8), Enterprise Class Support (ECS) is included. This support entitles the customer to two code upgrades per year, which are performed by IBM (for a total of six across the three-year warranty).

These upgrades are done by the IBM dedicated Remote Code Load (RCL) team or, where remote support is not allowed or enabled, by an onsite Systems Service Representative (SSR). A similar optional service is available for the IBM FlashSystem 7200.

For more information about ECS, see IBM FlashSystem 9200 8.4.2 Documentation - Enterprise Class Support (ECS).

10.5.1 Deciding the target software level

The first step is to determine your current and your target IBM FlashSystem software level.

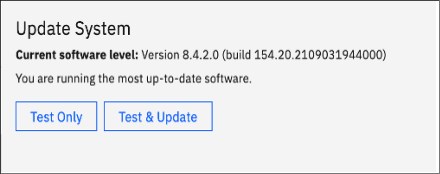

Using the example of an IBM FlashSystem 9200, log in to the web-based GUI and find the current version in one of two ways. On the right side of the top menu bar, click the question mark symbol (?) and select About IBM FlashSystem 9200 to display the current version. Alternatively, select Settings → System → Update System to display both the current and target levels.

Figure 10-3 shows the Update System output window and displays the code levels. In this example, the software level is 8.4.2.0.

Figure 10-3 Update System output window

Alternatively, if you use the CLI, run the svcinfo lssystem command. Example 10-1 shows the output of the lssystem CLI command and where the code level output can be found.

Example 10-1 lssystem command

IBM_FlashSystem:IBM Redbook FS:superuser>lssystem|grep code

code_level 8.4.2.0 (build 154.20.2109031944000)

IBM FlashSystem software levels are specified by four digits in the following format (in our example V.R.M.F = 8.4.2.0):

•V is the major version number

•R is the release level

•M is the modification level

•F is the fix level
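When current and target levels are compared in a script, the four fields must be compared as numbers and not as text, otherwise a level such as 8.10.0.0 sorts before 8.4.2.0. The following Python sketch shows one way to parse a V.R.M.F string. The class and function names are hypothetical, and the parser tolerates a trailing build identifier such as the one in the example output.

```python
from typing import NamedTuple


class SoftwareLevel(NamedTuple):
    version: int       # V: major version number
    release: int       # R: release level
    modification: int  # M: modification level
    fix: int           # F: fix level


def parse_level(text):
    """Parse 'V.R.M.F' (optionally followed by '(build ...)') into a SoftwareLevel."""
    tokens = text.split()
    if not tokens:
        raise ValueError("empty software level")
    parts = tokens[0].split(".")
    if len(parts) != 4 or not all(part.isdigit() for part in parts):
        raise ValueError(f"not a V.R.M.F level: {text!r}")
    return SoftwareLevel(*(int(part) for part in parts))


if __name__ == "__main__":
    current = parse_level("8.4.2.0 (build 154.20.2109031944000)")
    print(current)
    # Named tuples compare field by field, so numeric ordering is preserved.
    print(parse_level("8.10.0.0") > current)
```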

Use the latest IBM FlashSystem release unless you have a specific reason not to update, such as the following examples:

•The specific version of an application or other component of your SAN Storage environment has a known problem or limitation.

•The latest IBM FlashSystem software release is not yet cross-certified as compatible with another key component of your SAN storage environment.

•Your organization has mitigating internal policies, such as the use of the “latest release minus 1” or requiring “seasoning” in the field before implementation in a production environment.

For more information, see Spectrum Virtualize Family of Products Upgrade Planning.

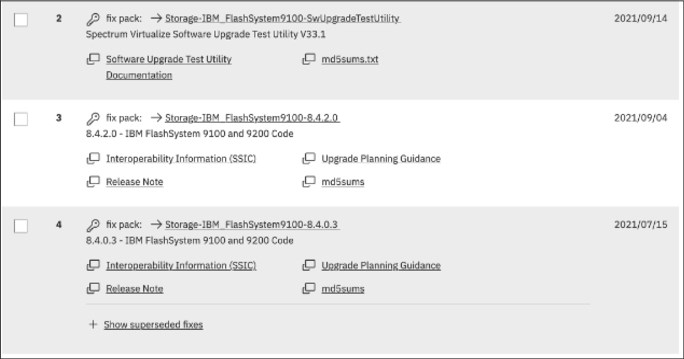

Obtaining the software packages

To obtain a new release of software for a system update, see IBM Fix Central and follow these steps:

1. From the Product selector list, type IBM FlashSystem 9200 (or whatever model is appropriate in your environment).

2. From the Installed Version list, select the current software version level that was determined in 10.5.1, “Deciding the target software level” on page 456.

3. Select Continue.

Figure 10-4 Fix Central software packages

5. Select Continue.

6. Click the option button for your preferred download options and click Continue.

7. Enter your machine type and serial number.

8. Select Continue.

9. Read the terms and conditions and then select I Agree.

10. Select Download Now and save the three files onto your management computer.

10.5.2 Hardware considerations

Before you start the update process, always check whether your IBM FlashSystem hardware and target code level are compatible.

If part or all of your current hardware is not supported at the target code level that you want to update to, replace the unsupported hardware with newer models before you update to the target code level.

Conversely, if you plan to add or replace hardware with new models to an existing cluster, you might have to update your IBM FlashSystem code first.

10.5.3 Update sequence

Check the compatibility of your target IBM FlashSystem code level with all components of your SAN storage environment (SAN switches, storage controllers, server HBAs) and its attached servers (operating systems and, where applicable, applications).

Applications often certify only the operating system that they run under and leave to the operating system provider the task of certifying its compatibility with attached components (such as SAN storage). However, various applications might use special hardware features or raw devices and certify the attached SAN storage. If you have this situation, consult the compatibility matrix for your application to certify that your IBM FlashSystem target code level is compatible.

The IBM FlashSystem Supported Hardware List provides the complete information for using your IBM FlashSystem SAN storage environment components with the current and target code level. For links to the Supported Hardware List, Device Driver, Firmware, and Recommended Software Levels for different products and different code levels, see this IBM Support web page.

By cross-checking that the version of IBM FlashSystem is compatible with the versions of your SAN environment components, you can determine which one to update first. By checking a component’s update path, you can determine whether that component requires a multistep update.

If you are not making major version or multistep updates in any components, the following update order is recommended to avoid potential problems:

1. SAN switches or directors

2. Storage controllers

3. Server HBA microcode and multipath software

4. IBM FlashSystem

5. IBM FlashSystem internal Non-Volatile Memory express (NVMe) drives

6. IBM FlashSystem Serial Attached SCSI (SAS) attached solid-state drive (SSD)

Attention: Do not update two components of your IBM FlashSystem SAN storage environment simultaneously, such as an IBM FlashSystem 9200 and one storage controller. This caution is true even if you intend to do it with your system offline. An update of this type can lead to unpredictable results, and an unexpected problem is more difficult to debug.
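The recommended order can be encoded so that a change plan is always sequenced the same way, one component at a time. The following Python sketch is illustrative: the component labels are shortened from the list above, and the function name is hypothetical.

```python
# Recommended order when no major-version or multistep updates are involved.
RECOMMENDED_ORDER = [
    "SAN switches or directors",
    "Storage controllers",
    "Server HBA microcode and multipath software",
    "IBM FlashSystem",
    "IBM FlashSystem internal NVMe drives",
    "IBM FlashSystem SAS-attached SSDs",
]


def order_plan(components):
    """Return the given components sorted into the recommended update order."""
    rank = {name: position for position, name in enumerate(RECOMMENDED_ORDER)}
    unknown = [name for name in components if name not in rank]
    if unknown:
        raise ValueError(f"unknown components: {unknown}")
    return sorted(components, key=rank.__getitem__)


if __name__ == "__main__":
    planned = ["IBM FlashSystem", "Storage controllers", "SAN switches or directors"]
    # Each step is meant to be completed and verified before the next one starts.
    for step, name in enumerate(order_plan(planned), start=1):
        print(f"{step}. {name}")
```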

10.5.4 SAN fabrics preparation

If you are using symmetrical, redundant, independent SAN fabrics, preparing these fabrics for an IBM FlashSystem update can be safer than preparing hosts or storage controllers. This statement is true assuming that you follow the guideline of a 30-minute minimum interval between the modifications that you perform in one fabric and the next. Even if an unexpected error brings down an entire SAN fabric, the IBM FlashSystem environment continues working through the other fabric and your applications remain unaffected.

Because you are updating your IBM FlashSystem, also update your SAN switch code to the latest supported level. Start with your principal core switch or director, continue by updating the other core switches, and update the edge switches last. Update one entire fabric (all switches) before you move to the next one so that a problem you might encounter affects only the first fabric. Begin your other fabric update only after you verify that the first fabric update has no problems.

If you are not running symmetrical, redundant, independent SAN fabrics, fix this problem as a high priority because it represents a single point of failure.

10.5.5 Storage controllers preparation

As with the attached hosts, it is critical that the attached storage controllers correctly handle the failover of MDisk paths. Therefore, they must be running supported microcode versions and their own SAN paths to IBM FlashSystem must be free of errors.

10.5.6 Hosts preparation

If the appropriate precautions are taken, the IBM FlashSystem update is not apparent to the attached servers and their applications. The automated update procedure updates one IBM FlashSystem node at a time, while the other node in the I/O group covers for its designated volumes.

However, to ensure that this feature works, the failover capability of your multipath software must be working correctly. This dependency can be mitigated by enabling NPIV if your current code level supports this function. For more information about N_Port ID Virtualization (NPIV), see Chapter 8, “Configuring hosts” on page 367.

Before you start IBM FlashSystem update preparation, check the following items for every server that is attached to IBM FlashSystem that you update:

•The operating system type, version, and maintenance or fix level

•The make, model, and microcode version of the HBAs

•The multipath software type, version, and error log

For information about troubleshooting, see this IBM Documentation web page.

Fix every problem or “suspect” that you find with the disk path failover capability. Because a typical IBM FlashSystem environment can have hundreds of servers that are attached to it, a spreadsheet might help you with the Attached Hosts Preparation tracking process. If you have some host virtualization, such as VMware ESX, AIX Logical Partitions (LPARs), IBM Virtual I/O Server (VIOS), or Solaris containers in your environment, verify the redundancy and failover capability in these virtualization layers.

10.5.7 Copy services considerations

When you update an IBM FlashSystem family product that participates in an intercluster Copy Services relationship, do not update both clusters in the relationship simultaneously. This situation is not verified or monitored by the automatic update process and might lead to a loss of synchronization and unavailability.

You must successfully finish the update in one cluster before you start the next one. Try to update the next cluster as soon as possible to the same code level as the first one. Avoid running them with different code levels for extended periods.

10.5.8 Running the Upgrade Test Utility

It is a requirement that you install and run the latest IBM FlashSystem Upgrade Test Utility before you update the IBM FlashSystem software. For more information, see Software Upgrade Test Utility.

This tool verifies the health of your IBM FlashSystem storage array for the update process. It also checks for unfixed errors, degraded MDisks, inactive fabric connections, configuration conflicts, hardware compatibility, drive firmware, and many other issues that might otherwise require cross-checking a series of command outputs.

Note: The Upgrade Test Utility does not log in to storage controllers or SAN switches. Instead, it reports the status of the connections of the IBM FlashSystem to these devices. It is the users’ responsibility to check these components for internal errors.

You can use the management GUI or the CLI to install and run the Upgrade Test Utility.

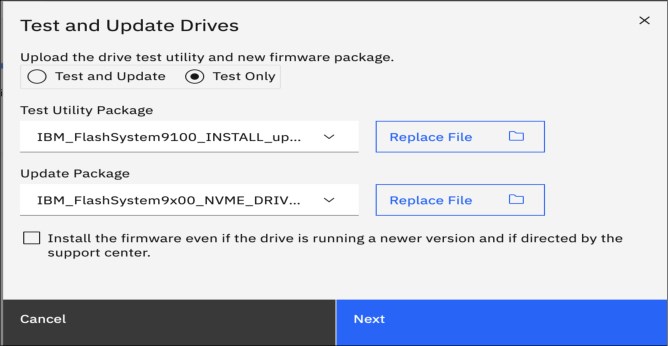

Using the management GUI

To test the software on the system, complete these steps:

1. In the management GUI, select Settings → System → Update System.

2. Click Test Only.

3. Select the test utility that you downloaded from the Fix Central support site. Upload the Test utility file and enter the code level that you are planning to update to. Figure 10-5 shows the IBM FlashSystem management GUI window that is used to install and run the Upgrade Test Utility.

Figure 10-5 IBM FlashSystem Upgrade Test Utility using the GUI

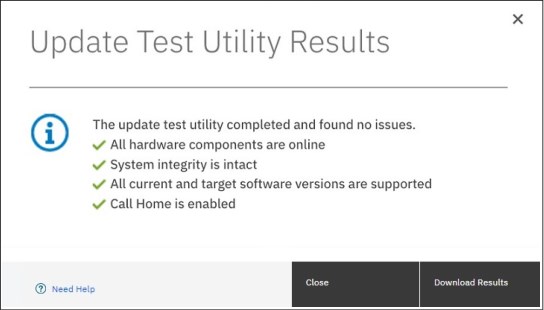

4. Click Test. The test utility verifies that the system is ready to be updated. After the Upgrade Test Utility completes, you are presented with the results. The results state that no warnings or problems were found, or direct you to more information about known issues that were discovered on the system.

Figure 10-6 shows a successful completion of the update test utility.

Figure 10-6 IBM FlashSystem Upgrade Test Utility completion panel

5. Click Download Results to save the results to a file.

6. Click Close.

Using the command line

To test the software on the system, complete these steps:

1. Using OpenSSH scp or PuTTY pscp, copy the software update file and the Software Update Test Utility package to the /home/admin/upgrade directory by using the management IP address of the IBM FlashSystem. Documentation and online help might refer to the /home/admin/update directory, which points to the same location on the system.

An example for the IBM FlashSystem 9200 is shown in Example 10-2.

Example 10-2 Copying the upgrade test utility to IBM FlashSystem 9200

C:>pscp -v -P 22 IBM_FlashSystem9200_INSTALL_upgradetest_33.1 superuser@9.10.11.12:/home/admin/upgrade

Looking up host "9.10.11.12" for SSH connection

Connecting to 9.10.11.12 port 22

We claim version: SSH-2.0-PuTTY_Release_0.74

Remote version: SSH-2.0-OpenSSH_8.0

Using SSH protocol version 2

No GSSAPI security context available

Doing ECDH key exchange with curve Curve25519 and hash SHA-256 (unaccelerated)

Server also has ssh-rsa host key, but we don't know it

Host key fingerprint is:

ecdsa-sha2-nistp521 521 a8:f0:de:cf:eb:fd:b4:74:9e:95:c7:bd:5c:f1:3b:b5

Initialised AES-256 SDCTR (AES-NI accelerated) outbound encryption

Initialised HMAC-SHA-256 (unaccelerated) outbound MAC algorithm

Initialised AES-256 SDCTR (AES-NI accelerated) inbound encryption

Initialised HMAC-SHA-256 (unaccelerated) inbound MAC algorithm

Using username "superuser".

Attempting keyboard-interactive authentication

Keyboard-interactive authentication prompts from server:

| Password:

End of keyboard-interactive prompts from server

Access granted

Opening main session channel

Opened main channel

Primary command failed; attempting fallback

Started a shell/command

Using SCP1

Connected to 9.10.11.12

Sending file IBM_FlashSystem9200_INSTALL_upgradetest_33.1, size=333865

Sink: C0644 333865 IBM_FlashSystem9200_INSTALL_upgradetest_33.1

IBM_FlashSystem9200_INSTA | 326 kB | 326.0 kB/s | ETA: 00:00:00 | 100%

Session sent command exit status 0

Main session channel closed

All channels closed

C:>

2. Ensure that the update file was copied successfully, as indicated by the exit status 0 return code. Alternatively, run the lsdumps -prefix /home/admin/upgrade command to list the files in that directory.

3. Install and run Upgrade Test Utility in the CLI, as shown in Example 10-3. In this case, the Upgrade Test Utility found no errors and completed successfully.

Example 10-3 Upgrade test using the CLI

IBM_FlashSystem:IBM Redbook FS:superuser>svctask applysoftware -file IBM_FlashSystem9200_INSTALL_upgradetest_33.1

CMMVC9001I The package installed successfully.

IBM_FlashSystem:IBM Redbook FS:superuser>svcupgradetest -v 8.4.2.0

svcupgradetest version 33.1

Please wait, the test may take several minutes to complete.

Results of running svcupgradetest:

==================================

The tool has found 0 errors and 0 warnings.

The tool has not found any problems with the cluster.

|

Note: The return code for the applysoftware command is always 1, whether the installation succeeded or failed. However, the message that is returned when the command completes reports the correct installation result.

|

Review the output to check whether the utility found any problems. The output either states that no problems were found, or directs you to details about known issues that were discovered on the system.
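When the utility is run from a script, its summary line can be checked automatically. The following Python sketch is one possible approach and not an IBM-provided tool. It assumes that the summary line uses the wording that is shown in Example 10-3. The function names are illustrative only.

```python
import re

# Summary line wording taken from Example 10-3. Other versions of the
# utility might word it differently (assumption).
SUMMARY = re.compile(r"The tool has found (\d+) errors? and (\d+) warnings?\.")


def parse_upgradetest_summary(output: str) -> tuple[int, int]:
    """Return (errors, warnings) from svcupgradetest output text."""
    match = SUMMARY.search(output)
    if match is None:
        raise ValueError("summary line not found; review the output manually")
    return int(match.group(1)), int(match.group(2))


def ready_to_update(output: str) -> bool:
    """Return True only when the utility reported no errors and no warnings."""
    errors, warnings = parse_upgradetest_summary(output)
    return errors == 0 and warnings == 0


sample = """svcupgradetest version 33.1
Please wait, the test may take several minutes to complete.
Results of running svcupgradetest:
==================================
The tool has found 0 errors and 0 warnings.
The tool has not found any problems with the cluster.
"""
print(ready_to_update(sample))
```

A check of this kind does not replace reading the full report, because warnings often include links to details that need a human decision.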

10.5.9 Updating the software

FlashSystem software can be updated by using one of the following methods:

•GUI: During a standard update procedure in the management GUI, the system updates each of the nodes systematically. This is the recommended method for updating software on nodes.

•CLI: The command-line interface gives you more control over the automatic upgrade process. You have the ability to resolve multipathing issues when nodes go offline for updates. You can also override the default 30-minute mid-point delay, pause an update, and resume a stalled update.

•Manual: To provide even more flexibility in the update process, you can manually update each node individually using the Service Assistant Tool GUI. When upgrading the software manually, you remove a node from the system, update the software on the node, and return the node to the system. You repeat this process for the remaining nodes until the last node is removed from the system. At this point, the remaining nodes switch to running the new software. When the last node is returned to the system, it updates and runs the new level of software. This action cannot be performed on an active node. To update software manually, the nodes must either be candidate nodes (a candidate node is a node that is not in use by the system and cannot process I/O) or in a service state. During this procedure, every node must be updated to the same software level and the node becomes unavailable during the update.

Whichever method (GUI, CLI, or manual) you choose to perform the update, adhere to the following guidelines for your IBM FlashSystem software update:

•Schedule the IBM FlashSystem software update for a low I/O activity time. The update process puts one node at a time offline. It also disables the write cache in the I/O group that node belongs to until both nodes are updated. Therefore, with lower I/O, you are less likely to notice performance degradation during the update.

•Never power off, reboot, or reset an IBM FlashSystem node during software update unless you are instructed to do so by IBM Support. Typically, if the update process encounters a problem and fails, it backs out. Bear in mind that the update process can take one hour per node with a further, optional, 30-minute mid-point delay.

•If you are planning for a major IBM FlashSystem version update, update your current version to its latest fix level before you run the major update.

•Check whether you are running a web browser type and version that is supported by the IBM FlashSystem target software level on every computer that you intend to use to manage your IBM FlashSystem.
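To size the maintenance window, the timing guidance above (up to one hour per node plus an optional 30-minute mid-point delay) can be turned into a rough upper bound. The following Python sketch is a planning aid under those stated figures only. The function name and defaults are illustrative, and actual update times vary.

```python
def estimate_update_minutes(node_count: int,
                            minutes_per_node: int = 60,
                            midpoint_delay_minutes: int = 30) -> int:
    """Upper-bound estimate: each node takes the full hour, plus one mid-point delay."""
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    return node_count * minutes_per_node + midpoint_delay_minutes


# One control enclosure contains two node canisters.
print(estimate_update_minutes(2))   # 150
# Four clustered control enclosures contain eight node canisters.
print(estimate_update_minutes(8))   # 510
```

Pass midpoint_delay_minutes=0 if you plan to override the delay, and add time for the Upgrade Test Utility run and post-update verification.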

This section describes the steps required to update the software.

Using the management GUI

To update the software on the system automatically, complete these steps:

1. In the management GUI, select Settings → System → Update System.

2. Click Test & Update.

3. Select the test utility and the software package that you downloaded from the Fix Central support site. The test utility verifies (again) that the system is ready to be updated.

4. Click Next. Select Automatic update.

5. Select whether you want to create intermittent pauses in the update to verify the process. Select one of the following options:

– Fully automatic update without pauses (recommended)

– Pausing the update after half of the nodes are updated

– Pausing the update before each node updates

6. Click Finish. As the canisters on the system are updated, the management GUI displays the progress for each canister.

7. Monitor the update information in the management GUI to determine when the process is complete.

Using the command line

To update the software on the system automatically, complete these steps:

1. You must run the latest version of the test utility to verify that no issues exist with the current system. See Example 10-3 on page 463.

2. Copy the software package to the IBM FlashSystem using the same method as described in Example 10-2 on page 462.

3. Before you begin the update, you must be aware of the following situations:

– The installation process fails under the following conditions:

• If the software that is installed on the remote system is not compatible with the new software or if an inter-system communication error does not allow the system to check that the code is compatible.

• If any node in the system has a hardware type that is not supported by the new software.

• If the system determines that one or more volumes in the system would be taken offline by rebooting the nodes as part of the update process. You can find details about which volumes would be affected by using the lsdependentvdisks command. If you are prepared to lose access to data during the update, you can use the force flag to override this restriction.

– The update is distributed to all the nodes in the system by using internal connections between the nodes.

– Nodes are updated one at a time.

– Nodes run the new software concurrently with normal system activity.

– While the node is updated, it does not participate in I/O activity in the I/O group. As a result, all I/O activity for the volumes in the I/O group is directed to the other node in the I/O group by the host multipathing software.

– There is a thirty-minute delay between node updates. The delay allows time for the host multipathing software to rediscover paths to the nodes that are updated. There is no loss of access when another node in the I/O group is updated.

– The update is not committed until all nodes in the system are successfully updated to the new software level. If all nodes are successfully restarted with the new software level, the new level is committed. When the new level is committed, the system vital product data (VPD) is updated to reflect the new software level.

– Wait until all member nodes are updated and the update is committed before you invoke the new functions of the updated software.

– Because the update process takes some time, the installation command completes as soon as the software level is verified by the system. To determine when the update is completed, you must either display the software level in the system VPD or look for the Software update complete event in the error/event log. If any node fails to restart with the new software level or fails at any other time during the process, the software level is backed off.

– During an update, the version number of each node is updated when the software is installed and the node is restarted. The system software version number is updated when the new software level is committed.

– When the update starts, an entry is made in the error or event log and another entry is made when the update completes or fails.

4. Issue the following CLI command to start the update process:

applysoftware -file <software_update_file>

where <software_update_file> is the filename of the software update file. If the system identifies any volumes that would go offline as a result of rebooting the nodes as part of the system update, the software update does not start. An optional force parameter can be used to indicate that the update continues regardless of the problem identified. If you use the force parameter, you are prompted to confirm that you want to continue.

5. Issue the following CLI command to check the status of the update process:

lsupdate

This command displays success when the update is complete.

6. To verify that the update has successfully completed, issue the lsnodecanistervpd command for each node in the system. The code_level field displays the new code level for each node.
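The verification in step 6 can be scripted once the lsnodecanistervpd output for each node is collected. The following Python sketch assumes that the output is a detailed view with one "field value" pair per line, as in the other detailed views in this chapter. The sample text and node names are hypothetical, and only the first token of the code_level value is compared.

```python
def code_level(vpd_output: str) -> str:
    """Return the first token of the code_level field from a detailed-view listing."""
    for line in vpd_output.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0] == "code_level":
            # Any trailing tokens (for example, build details) are ignored.
            return fields[1]
    raise ValueError("code_level field not found")


def nodes_not_at_level(vpd_by_node: dict[str, str], target: str) -> list[str]:
    """Return the names of the nodes whose code_level differs from the target level."""
    return sorted(name for name, text in vpd_by_node.items()
                  if code_level(text) != target)


# Hypothetical, abbreviated output for two nodes.
outputs = {
    "node1": "name node1\ncode_level 8.4.2.0\n",
    "node2": "name node2\ncode_level 8.4.0.2\n",
}
print(nodes_not_at_level(outputs, "8.4.2.0"))  # ['node2']
```

An empty list means that every node reports the target level.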

10.6 Drive firmware updates

Updating drive firmware is a concurrent process that can be performed online while the drive is in use, whether the drives are NVMe or SCM drives in the control enclosure or SSD drives in any SAS-attached expansion enclosures.

When used on an array member drive, the update checks for volumes that are dependent on the drive and refuses to run if any are found. Drive-dependent volumes are usually caused by non-redundant or degraded RAID arrays. Where possible, restore redundancy to the system by replacing any failed drives before upgrading drive firmware. When this is not possible, you can either add redundancy to the volume by adding a second copy in another pool or use the -force parameter to bypass the dependent volume check. Use -force only if you are willing to accept the risk of data loss on dependent volumes (if the drive fails during the firmware update).

|

Note: Due to some system constraints, it is not possible to produce a single NVMe firmware package that works on all NVMe drives on all Spectrum Virtualize code levels. Therefore, three different NVMe firmware files are available for download, depending on the size of the drives that you have installed.

|

Using the management GUI

To update the drive firmware automatically, complete the following steps:

1. Select Pools → Internal Storage → Actions → Upgrade All.

2. As shown in Figure 10-7, in the Upgrade Package text box, browse to the drive firmware package you downloaded as described in “Obtaining the software packages” on page 457.

Figure 10-7 Drive firmware upgrade

3. Click Upgrade. Each drive upgrade takes approximately 6 minutes to complete.

4. You can also update individual drives by right-clicking a single drive and selecting Upgrade.

5. To monitor the progress of the upgrade, select Monitoring → Background Tasks.

Using the command line

To update the drive firmware by using the CLI, complete these steps:

1. Copy the drive firmware package to the IBM FlashSystem by using the same method as described in Example 10-2 on page 462.

2. Issue the following CLI command to start the update process for all drives:

applydrivesoftware -file <software_update_file> -type firmware -all

where <software_update_file> is the filename of the drive firmware package. The -all option updates firmware on all eligible drives, including quorum drives, which carries a slight risk. To avoid this risk, use the -drive option instead and make sure that the quorum is moved by using the lsquorum and chquorum commands between applydrivesoftware invocations.

|

Note: The maximum number of drive IDs that can be specified on a command line using the -drive option is 128. If you have more than 128 drives, use the -all option or run multiple invocations of applydrivesoftware to complete the update.

|

3. Issue the following CLI command to check the status of the update process:

lsdriveupgradeprogress

This command displays success when the update is complete.

4. To verify that the update has successfully completed, issue the lsdrive command for each drive in the system. The firmware_level field displays the new code level for each drive. Example 10-4 demonstrates how to list the firmware level for four specific drives:

Example 10-4 List firmware level for drives 0, 1, 2, and 3

IBM_FlashSystem:GLTL-FS9K:superuser>for i in 0 1 2 3; do echo "Drive $i = `lsdrive $i|grep firmware`"; done

Drive 0 = firmware_level 1_2_11

Drive 1 = firmware_level 1_2_11

Drive 2 = firmware_level 1_2_11

Drive 3 = firmware_level 1_2_11

For more information, see this IBM Documentation web page.
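When more than 128 drives must be updated with the -drive option, the drive IDs need to be split across several applydrivesoftware invocations. The following Python sketch generates the command strings for review. It assumes that multiple drive IDs are passed to -drive as a colon-separated list, so confirm the list syntax in the command reference for your code level. The function name and the exclude parameter (for example, for drives that currently hold quorum) are illustrative.

```python
def build_drive_update_commands(package, drive_ids, exclude=(), batch_size=128):
    """Return applydrivesoftware command strings with at most batch_size drive IDs each."""
    if not 1 <= batch_size <= 128:
        raise ValueError("batch_size must be between 1 and 128")
    skipped = set(exclude)
    ids = [d for d in drive_ids if d not in skipped]
    commands = []
    for start in range(0, len(ids), batch_size):
        batch = ids[start:start + batch_size]
        # Assumption: the -drive option accepts a colon-separated ID list.
        id_list = ":".join(str(d) for d in batch)
        commands.append(
            f"applydrivesoftware -file {package} -type firmware -drive {id_list}"
        )
    return commands


# 300 drives (IDs 0 - 299), skipping drive 5 as a hypothetical quorum drive.
commands = build_drive_update_commands("<software_update_file>", range(300), exclude={5})
print(len(commands))
```

The sketch only builds strings. Run each command separately and check lsdriveupgradeprogress before you start the next batch.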

10.7 Remote Code Load

Remote Code Load (RCL) is a service offering provided by IBM, which allows code updates to be performed by remote support engineers, as opposed to an onsite Support Services Representative (SSR).

IBM Assist On-site (AOS), the remote support center, or Secure Remote Access (SRA), together with Call Home enablement, is required to enable Remote Code Load. Assist On-site is a live remote-assistance tool. When it is enabled, a member of the IBM Support team can view your desktop and share control of your mouse and keyboard to help you reach a solution. Beyond RCL, the tool can also speed up problem determination, data collection, and ultimately, problem resolution.

For more information about configuring support assistance, see this IBM Documentation web page.

Before the Assist On-site application is used, you can test your connectivity to the Assist On-site network by downloading the IBM connectivity testing tool. For more information, see this IBM Support web page.

To request the RCL for your system, see this IBM Support web page and select your product type. Then, complete the following steps:

1. At the IBM Remote Code Load web page, select Product type → Book Now - FlashSystem 9200 and 7200 Remote Code Load.

Figure 10-8 shows the RCL Schedule Service page.

Figure 10-8 FlashSystem RCL Schedule Service page

2. Click Schedule Service to start scheduling the service.

3. The next page is the Product type selection for RCL. Go to the FlashSystem 9200 option and click Select (see Figure 10-9).

Figure 10-9 RCL Product type page

4. In the RCL time frame option, select the date (see Figure 10-10) and time frame (see Figure 10-11).

Figure 10-10 Time Frame selection page

Figure 10-11 RCL Time selection page

5. Enter your booking details in the RCL booking information form (see Figure 10-12).

Figure 10-12 RCL Booking information page

10.8 Replacing Flash Core Module

Replacing a Flash Core Module (FCM) in your IBM FlashSystem requires special attention to avoid out-of-scope procedures that can damage your system. Before you start the IBM FlashSystem FCM replacement procedure, review the following items to prevent any damage to your system and FCM or to your system data:

•Do not replace, re-seat, or run any task on your FlashSystem if you are not sure of or not comfortable with the procedure. Always engage IBM Support Level 1/2 if any issue or problem occurs during a procedure that you are running.

•Call IBM Support to ensure that the logs were verified and a correct action plan was provided to replace the failed FCM.

•Confirm with the IBM System Service Representative (SSR) that the correct FRU number was shipped for the replacement and that the action plan that was provided by IBM Support was reviewed.

•Confirm that your system does not have any other FCM failure or error messages in the error log tab before conducting the replacement procedure.

If you have the IBM Expert Care coverage feature for your FlashSystem, make sure that your Technical Account Manager (TAM) is aware of the procedure and engaged with the service team to proceed with the replacement.

For more information, see this IBM Documentation web page.

|

Note: Re-seating an FCM can reformat the module in specific instances. All FCM drive failure alerts must be addressed before any re-seat or replacement procedure is done. Upon receiving any error message for the FCM drives, it is recommended to escalate the problem to IBM Support.

|

10.9 SAN modifications

When you administer shared storage environments, human error can occur when a failure is fixed, or a change is made that affects one or more servers or applications. That error can then affect other servers or applications because suitable precautions were not taken.

Human error can include some of the following examples:

•Disrupting or disabling the working disk paths of a server while trying to fix failed ones.

•Disrupting a neighbor SAN switch port while inserting or removing an FC cable or small form-factor pluggable (SFP).

•Disabling or removing the working part in a redundant set instead of the failed one.

•Making modifications that affect both parts of a redundant set without an interval that allows for automatic failover during unexpected problems.

Adhere to the following guidelines to perform these actions with assurance:

•Uniquely and correctly identify the components of your SAN.

•Use the proper failover commands to disable only the failed parts.

•Understand which modifications are necessarily disruptive, and which can be performed online with little or no performance degradation.

10.9.1 Cross-referencing WWPN

With the WWPN of an HBA, you can uniquely identify one server in the SAN. If the name of the server is changed at the operating system level but not in the IBM FlashSystem host definitions, the server continues to access its mapped volumes because the WWPN of the HBA did not change.

Alternatively, if the HBA of a server is removed and installed in a second server and the SAN zones for the first server and the IBM FlashSystem host definitions are not updated, the second server can access volumes that it probably should not access.

Complete the following steps to cross-reference HBA WWPNs:

1. In your server, verify the WWPNs of the HBAs that are used for disk access. Typically, you can complete this task by using the SAN disk multipath software of your server.

If you are using SDDPCM, run the pcmpath query wwpn command to see output similar to what is shown in Example 10-5.

Example 10-5 Output of the pcmpath query wwpn command

[root@Server127]> pcmpath query wwpn

Adapter Name PortWWN

fscsi0 10000090FA021A13

fscsi1 10000090FA021A12

If you are using server virtualization, verify the World Wide Port Names (WWPNs) in the server that is attached to the SAN, such as AIX Virtual Input/Output (VIO) or VMware ESX. Cross-reference with the output of the IBM FlashSystem lshost <hostname> command, as shown in Example 10-6 on page 473.

Example 10-6 Output of the lshost <hostname> command

IBM_FlashSystem:IBM Redbook FS:superuser>svcinfo lshost Server127

id 0

name Server127

port_count 2

type generic

mask 1111111111111111111111111111111111111111111111111111111111111111

iogrp_count 4

status active

site_id

site_name

host_cluster_id

host_cluster_name

protocol scsi

WWPN 10000090FA021A13

node_logged_in_count 1

state active

WWPN 10000090FA021A12

node_logged_in_count 1

state active

2. If necessary, cross-reference information with your SAN switches, as shown in Example 10-7. On Brocade switches, use the nodefind <WWPN> command.

Example 10-7 Cross-referencing information with SAN switches

blg32sw1_B64:admin> nodefind 10:00:00:90:FA:02:1A:13

Local:

Type Pid COS PortName NodeName SCR

N 401000; 2,3;10:00:00:90:FA:02:1A:13;20:00:00:90:FA:02:1A:13; 3

Fabric Port Name: 20:10:00:05:1e:04:16:a9

Permanent Port Name: 10:00:00:90:FA:02:1A:13

Device type: Physical Unknown(initiator/target)

Port Index: 16

Share Area: No

Device Shared in Other AD: No

Redirect: No

Partial: No

Aliases: nybixtdb02_fcs0

b32sw1_B64:admin>

For storage allocation requests that are submitted by the server support team or application support team to the storage administration team, always include the server’s HBA WWPNs to which the new LUNs or volumes are supposed to be mapped. For example, a server might use separate HBAs for disk and tape access or distribute its mapped LUNs across different HBAs for performance. You cannot assume that any new volume is supposed to be mapped to every WWPN that the server logged in to the SAN.

If your organization uses a change management tracking tool, perform all your SAN storage allocations under approved change requests with the servers’ WWPNs that are listed in the Description and Implementation sections.
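Because the server and IBM FlashSystem print WWPNs as 16 hexadecimal digits while the switch prints them colon-separated, a small normalization step makes the cross-reference less error-prone. The following Python sketch uses the WWPNs from Example 10-5 through Example 10-7. The function names are illustrative, and the lists are assumed to be copied from the respective command outputs.

```python
import re


def normalize_wwpn(raw: str) -> str:
    """Return a WWPN as 16 uppercase hex digits, accepting colon-separated input."""
    candidate = raw.strip().replace(":", "").upper()
    if not re.fullmatch(r"[0-9A-F]{16}", candidate):
        raise ValueError(f"not a valid WWPN: {raw!r}")
    return candidate


def compare_wwpns(server_wwpns, storage_wwpns):
    """Return (missing_on_storage, unexpected_on_storage) as sorted lists."""
    server = {normalize_wwpn(w) for w in server_wwpns}
    storage = {normalize_wwpn(w) for w in storage_wwpns}
    return sorted(server - storage), sorted(storage - server)


server_side = ["10000090FA021A13", "10000090FA021A12"]   # pcmpath query wwpn
storage_side = ["10000090FA021A13", "10000090FA021A12"]  # lshost Server127
switch_side = "10:00:00:90:FA:02:1A:13"                  # nodefind

print(compare_wwpns(server_side, storage_side))
print(normalize_wwpn(switch_side) in {normalize_wwpn(w) for w in server_side})
```

Two empty lists mean that the host definition matches the server. Any entry in either list is a discrepancy to resolve before a change is made.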

10.9.2 Cross-referencing LUN ID

Always cross-reference the IBM FlashSystem vdisk_UID with the server logical unit number (LUN) ID before you perform any modifications that involve IBM FlashSystem volumes. Example 10-8 shows an AIX server that is running Subsystem Device Driver Path Control Module (SDDPCM). The IBM FlashSystem vdisk_name has no relation to the AIX device name. Also, the first SAN LUN mapped to the server (SCSI_id=0) shows up as hdisk4 in the server because it had four internal disks (hdisk0 - hdisk3).

Example 10-8 Results of running the lshostvdiskmap command

IBM_FlashSystem:IBM Redbook FS:superuser>lshostvdiskmap NYBIXTDB03

id name SCSI_id vdisk_id vdisk_name vdisk_UID

0 NYBIXTDB03 0 0 NYBIXTDB03_T01 60050768018205E12000000000000000

root@nybixtdb03::/> pcmpath query device

Total Dual Active and Active/Asymmetric Devices : 1

DEV#: 4 DEVICE NAME: hdisk4 TYPE: 2145 ALGORITHM: Load Balance

SERIAL: 60050768018205E12000000000000000

==========================================================================

Path# Adapter/Path Name State Mode Select Errors

0* fscsi0/path0 OPEN NORMAL 7 0

1 fscsi0/path1 OPEN NORMAL 5597 0

2* fscsi2/path2 OPEN NORMAL 8 0

3 fscsi2/path3 OPEN NORMAL 5890 0

If your organization uses a change management tracking tool, include the vdisk_UID and LUN ID information in every change request that performs SAN storage allocation or reclaim.

|

Note: Because a host can have many volumes with the same scsi_id, always cross-reference the IBM FlashSystem volume UID with the host volume UID and record the scsi_id and LUN ID of that volume.

|
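The UID cross-reference can be automated by matching the vdisk_UID column against the SERIAL value that the host reports. The following Python sketch parses text in the formats that are shown in Example 10-8. It assumes whitespace-separated columns with no empty fields and the SDDPCM output layout from that example. The function names are illustrative.

```python
import re


def parse_vdisk_map(lshostvdiskmap_output: str) -> dict[str, str]:
    """Map vdisk_UID to vdisk_name, using the header row to locate the columns."""
    rows = [ln.split() for ln in lshostvdiskmap_output.splitlines() if ln.strip()]
    header, data = rows[0], rows[1:]
    uid_col, name_col = header.index("vdisk_UID"), header.index("vdisk_name")
    return {row[uid_col].upper(): row[name_col] for row in data}


def parse_pcmpath_devices(pcmpath_output: str) -> dict[str, str]:
    """Map SERIAL to the host device name from 'pcmpath query device' output."""
    devices = {}
    current = None
    for line in pcmpath_output.splitlines():
        name = re.search(r"DEVICE NAME:\s*(\S+)", line)
        serial = re.search(r"SERIAL:\s*([0-9A-Fa-f]+)", line)
        if name:
            current = name.group(1)
        elif serial and current:
            devices[serial.group(1).upper()] = current
            current = None
    return devices


def cross_reference(vdisks: dict[str, str], devices: dict[str, str]) -> dict:
    """Map each vdisk_name to its host device, or None if the host does not see it."""
    return {name: devices.get(uid) for uid, name in vdisks.items()}


storage_out = """id name SCSI_id vdisk_id vdisk_name vdisk_UID
0 NYBIXTDB03 0 0 NYBIXTDB03_T01 60050768018205E12000000000000000
"""
host_out = """DEV#: 4 DEVICE NAME: hdisk4 TYPE: 2145 ALGORITHM: Load Balance
SERIAL: 60050768018205E12000000000000000
"""
print(cross_reference(parse_vdisk_map(storage_out), parse_pcmpath_devices(host_out)))
```

A value of None for any volume indicates that the host does not report a device with that UID, which is worth investigating before the change proceeds.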

10.10 Server HBA replacement

Replacing a failed HBA in a server is a fairly trivial and safe operation if it is performed correctly. However, more precautions are required if your server has multiple, redundant HBAs on different SAN fabrics and the server hardware permits you to “hot” replace it (with the server still running).

Complete the following steps to replace a failed HBA and retain the working HBA:

1. In your server, identify the failed HBA and record its WWPNs. (For more information, see 10.9.1, “Cross-referencing WWPN” on page 472.) Then, place this HBA and its associated paths offline (gracefully if possible). This approach is important so that the multipath software stops attempting to recover it. Your server might even show degraded performance while you perform this task.

2. Some HBAs have an external label that shows the WWPNs. If you have this type of label, record the WWPNs before you install the new HBA in the server.

3. If your server does not support HBA hot-swap, power off your system, replace the HBA, connect the used FC cable into the new HBA, and power on the system.

If your server does support hot-swap, follow the appropriate procedures to perform a “hot” replace of the HBA. Do not disable or disrupt the working HBA in the process.

4. Verify that the new HBA successfully logged in to the SAN switch. If it logged in successfully, you can see its WWPNs logged in to the SAN switch port. Otherwise, fix this issue before you continue to the next step.

Cross-check the WWPNs that you see in the SAN switch with the ones that you recorded from the label of the new HBA in step 2, and make sure that you did not record the WWNN instead of the WWPN.

5. In your SAN zoning configuration tool, replace the old HBA WWPNs with the new ones in every alias and zone to which they belong. Do not touch the other SAN fabric (the one with the working HBA) while you perform this task.

Only one alias should use each WWPN, and zones must reference this alias.

If you are using SAN port zoning (though you should not be) and you did not move the new HBA FC cable to another SAN switch port, you do not need to reconfigure zoning.

6. Verify that the new HBA’s WWPNs appear in the IBM FlashSystem by using the lsfcportcandidate command.

If the WWPNs of the new HBA do not appear, troubleshoot your SAN connections and zoning.

7. Add the WWPNs of this new HBA in the IBM FlashSystem host definition by using the addhostport command. It is important that you do not remove the old one yet. Run the lshost <servername> command. Then, verify that the working HBA shows as active, while the failed HBA should show as inactive or offline.

8. Use the appropriate software on your server (for example, the multipath software) to recognize the new HBA and its associated SAN disk paths. Verify that all SAN LUNs have redundant disk paths through the working HBA and the new HBA.

9. Return to the IBM FlashSystem and verify again (by using the lshost <servername> command) that both the working and the new HBA’s WWPNs are active. If they are, you can remove the old HBA WWPNs from the host definition by using the rmhostport command.

10. Do not remove any HBA WWPNs from the host definition until you ensure that you have at least two active ones that are working correctly.

By following these steps, you avoid removing your only working HBA by mistake.
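The check in steps 9 and 10 can be expressed as a guard that runs before rmhostport. The following Python sketch parses lshost detailed output in the layout of Example 10-6 and allows removal only when at least two other WWPNs are active. The third WWPN in the sample is hypothetical and stands for the new HBA. The function names are illustrative.

```python
def wwpn_states(lshost_output: str) -> dict[str, str]:
    """Map each WWPN in an lshost detailed view to its state value."""
    states = {}
    current = None
    for line in lshost_output.splitlines():
        fields = line.split()
        if len(fields) != 2:
            continue
        key, value = fields
        if key == "WWPN":
            current = value.upper()
        elif key == "state" and current is not None:
            states[current] = value
            current = None
    return states


def safe_to_remove(states: dict[str, str], old_wwpn: str) -> bool:
    """True when at least two WWPNs other than old_wwpn are in the active state."""
    others_active = [w for w, s in states.items()
                     if s == "active" and w != old_wwpn.upper()]
    return len(others_active) >= 2


sample = """id 0
name Server127
port_count 3
WWPN 10000090FA021A13
node_logged_in_count 1
state active
WWPN 10000090FA021A12
node_logged_in_count 0
state offline
WWPN 10000090FA0B8C21
node_logged_in_count 1
state active
"""
print(safe_to_remove(wwpn_states(sample), "10000090FA021A12"))
```

If the function returns False, leave the host definition unchanged and continue troubleshooting the new HBA paths.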

10.11 Hardware upgrades

The IBM FlashSystem scalability features allow significant flexibility in its configuration. As discussed in previous chapters, the IBM FlashSystem family has two different types of enclosures: control enclosures and expansion enclosures.

•Control Enclosures manage your storage systems, communicate with the host, and manage interfaces. In addition, they can also house up to 24 NVMe-capable flash drives.

•Expansion Enclosures increase the available capacity of an IBM FlashSystem cluster. They communicate with the control enclosure through a dual pair of 12 Gbps serial-attached SCSI (SAS) connections. These expansion enclosures can house many flash (solid-state drive (SSD)) SAS drives.

A basic configuration of an IBM FlashSystem storage platform consists of one IBM FlashSystem control enclosure. For a balanced increase of performance and scale, up to four (depending on model) IBM FlashSystem control enclosures can be clustered into a single storage system. Similarly, to increase capacity, up to two chains (depending on model) of expansion enclosures can be added per control enclosure. Therefore, several scenarios are possible for its growth.

These processes are described next.

10.11.1 Adding control enclosures

If your IBM FlashSystem cluster is below the maximum I/O groups limit for your specific product and you intend to upgrade it, you can install another control enclosure. It is also feasible that you might have a cluster of IBM Storwize V7000 nodes that you want to add the IBM FlashSystem enclosures to because the latter are more powerful than your existing ones. Therefore, your cluster can include different node models in different I/O groups.

To install these control enclosures, determine whether you need to upgrade your IBM FlashSystem first (or the Storwize V7000 code level if, for example, you are merging an existing Storwize V7000 Gen2 cluster with an IBM FlashSystem 9200).

For more information, see 10.5.2, “Hardware considerations” on page 458.

|

Note: If exactly two control enclosures are in a system, you must set up a quorum disk or application outside of the system. If the two control enclosures lose communication with each other, the quorum disk prevents both I/O groups from going offline.

|

IBM FlashSystem 9200

To add a control enclosure to an existing FlashSystem 9200 system, the IBM SSR engineer must first install the new control enclosure in the rack and cable it to SAN or Ethernet switches or directly to the existing control enclosure. You are then able to add it to the system using the management GUI where it should automatically appear if cabled correctly. For more information, see this IBM Documentation web page.

IBM FlashSystem 9100

To add a control enclosure to an existing FlashSystem 9100 system, the IBM SSR engineer must first install the new control enclosure in the rack and cable it to SAN or Ethernet switches or directly to the existing control enclosure. You are then able to add it to the system using the management GUI where it should automatically appear if cabled correctly. For more information, see this IBM Documentation web page.

IBM FlashSystem 7200

To add a control enclosure to an existing FlashSystem 7200 system, you must first install it in the rack. Then, you must connect it to the system through a zone in the SAN or by using RDMA over Ethernet. Finally, you can add it to the system using the management GUI where it should automatically appear if cabled correctly. For more information, see this IBM Documentation web page.

IBM FlashSystem 5x00

To add a control enclosure to an existing FlashSystem 5100 system, you must first install it in the rack. Then, you must connect it to the system through a zone in the SAN or by using RDMA over Ethernet. Finally, you can add it to the system using the management GUI where it should automatically appear if cabled correctly. For more information, see this IBM Documentation web page.

After you install the new nodes, you might need to redistribute your servers across the I/O groups. Consider the following points:

•Moving a server’s volume to different I/O groups can be done online because of a feature called Non-Disruptive Volume Movement (NDVM). Although this process can be done without stopping the host, careful planning and preparation are advised. For more information about supported operating systems, see this IBM Documentation web page.

|

Note: You cannot move a volume that is in any type of Remote Copy relationship.

|

•If each of your servers is zoned to only one I/O group, modify your SAN zoning configuration as you move its volumes to another I/O group. As best you can, balance the distribution of your servers across I/O groups according to I/O workload.

•Use the -iogrp parameter of the mkhost command to define which I/O groups of the IBM FlashSystem the new servers use. Otherwise, IBM FlashSystem maps the host to all I/O groups by default, even to I/O groups that contain no nodes, and regardless of your zoning configuration.

Example 10-9 shows this scenario and how to resolve it by using the rmhostiogrp and addhostiogrp commands.

Example 10-9 Mapping the host to I/O groups

IBM_FlashSystem:IBM Redbook FS:superuser>lshost

id name port_count iogrp_count status site_id site_name host_cluster_id host_cluster_name protocol

0 Win2012srv1 2 4 online scsi

1 linuxsrv3 1 4 online scsi

IBM_FlashSystem:IBM Redbook FS:superuser>lshost Win2012srv1

id 0

name Win2012srv1

port_count 2

type generic

mask 1111111111111111111111111111111111111111111111111111111111111111

iogrp_count 4

status online

site_id

site_name

host_cluster_id

host_cluster_name

protocol scsi

WWPN 10000090FAB386A3

node_logged_in_count 2

state inactive

WWPN 10000090FAB386A2

node_logged_in_count 2

state inactive

IBM_FlashSystem:IBM Redbook FS:superuser>lsiogrp

id name node_count vdisk_count host_count site_id site_name

0 io_grp0 2 11 2

1 io_grp1 0 0 2

2 io_grp2 0 0 2

3 io_grp3 0 0 2

4 recovery_io_grp 0 0 0

IBM_FlashSystem:IBM Redbook FS:superuser>lshostiogrp Win2012srv1

id name

0 io_grp0

1 io_grp1

2 io_grp2

3 io_grp3

IBM_FlashSystem:IBM Redbook FS:superuser>rmhostiogrp -iogrp 3 Win2012srv1

IBM_FlashSystem:IBM Redbook FS:superuser>

IBM_FlashSystem:IBM Redbook FS:superuser>lshostiogrp Win2012srv1

id name

0 io_grp0

1 io_grp1

2 io_grp2

IBM_FlashSystem:IBM Redbook FS:superuser>

IBM_FlashSystem:IBM Redbook FS:superuser>addhostiogrp -iogrp io_grp3 Win2012srv1

IBM_FlashSystem:IBM Redbook FS:superuser>

IBM_FlashSystem:IBM Redbook FS:superuser>lshostiogrp Win2012srv1

id name

0 io_grp0

1 io_grp1

2 io_grp2

3 io_grp3

IBM_FlashSystem:IBM Redbook FS:superuser>lsiogrp

id name node_count vdisk_count host_count site_id site_name

0 io_grp0 2 11 2

1 io_grp1 0 0 2

2 io_grp2 0 0 2

3 io_grp3 0 0 2

4 recovery_io_grp 0 0 0

•If possible, avoid setting a server to use volumes from different I/O groups that have different node types for extended periods of time. Otherwise, as this server’s storage capacity grows, you might experience a performance difference between volumes from different I/O groups. This mismatch makes it difficult to identify and resolve any performance problems that arise.
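
The cleanup that Example 10-9 performs by hand can be sketched as a small script. The following Python sketch is illustrative only: it assumes that the plain-text lsiogrp output from the example was captured into a string and that any empty columns are trailing ones. The helper names (unused_iogrp_ids, cleanup_commands) are hypothetical. The script only builds rmhostiogrp command strings in the form that is used in Example 10-9; it does not connect to the system or run them.

```python
# Sample text copied from the lsiogrp output in Example 10-9.
LSIOGRP_OUTPUT = """\
id name node_count vdisk_count host_count site_id site_name
0 io_grp0 2 11 2
1 io_grp1 0 0 2
2 io_grp2 0 0 2
3 io_grp3 0 0 2
4 recovery_io_grp 0 0 0
"""


def unused_iogrp_ids(lsiogrp_output):
    """Return IDs of I/O groups that have no nodes but still have hosts mapped."""
    lines = [line for line in lsiogrp_output.splitlines() if line.strip()]
    header = lines[0].split()
    ids = []
    for line in lines[1:]:
        # Empty trailing columns (site_id, site_name) drop out of split(),
        # so zip() pairs only the columns that are present.
        row = dict(zip(header, line.split()))
        if int(row["node_count"]) == 0 and int(row["host_count"]) > 0:
            ids.append(row["id"])
    return ids


def cleanup_commands(host_name, iogrp_ids):
    """Build one rmhostiogrp command per I/O group ID (hypothetical helper)."""
    return [f"rmhostiogrp -iogrp {iogrp_id} {host_name}" for iogrp_id in iogrp_ids]


if __name__ == "__main__":
    for command in cleanup_commands("Win2012srv1", unused_iogrp_ids(LSIOGRP_OUTPUT)):
        print(command)
```

Review the generated commands before you run them, and confirm that the host has no volume mappings in the I/O groups that you remove.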

10.11.2 Upgrading nodes in an existing cluster

If you want to upgrade the nodes or canisters of your existing IBM FlashSystem, you can increase the cache memory size or the number of adapters in each node. You can perform the upgrade one node at a time so that it is nondisruptive to system operations. For more information, see this IBM Documentation web page.

When evaluating cache memory upgrades, consider the following points:

•As your working set and total capacity increase, consider increasing your cache memory size. The working set consists of the most frequently accessed workloads, excluding snapshots and backups. Total capacity implies more or larger workloads and a larger working set.

•If you are consolidating from multiple controllers, consider at least matching the amount of cache memory across those controllers.

•When externally virtualizing controllers (such as with IBM SAN Volume Controller), a large cache can accelerate older controllers with smaller caches.

•If you are using DRP, then maximize the cache size and consider adding SCM drives with Easy Tier for the best performance.

•If you are making heavy use of copy services, consider increasing the cache beyond just your working set requirements.

•A truly random working set might not benefit greatly from the cache.

|

Important: If you powered off a node to add memory, do not power it back on while it is still shown as offline in the management GUI. Before you increase memory, you must remove the node from the system so that it no longer appears in the management GUI or in the output of the svcinfo lsnode command.

Do not power on a node that is still in the system and showing as offline with more memory than the node had when it powered off. Such a node can cause an immediate outage or an outage when you update the system software.

|

When evaluating adapter upgrades, consider the following points:

•A single 32 Gb Fibre Channel port can deliver over 3 GBps (allowing for overheads).

•A 32 Gb FC card in each canister, with 8 ports, can deliver more than 24 GBps.

•An FCM NVMe device can perform at over 1 GBps.

•A single 32 Gb Fibre Channel port can deliver 80,000 to 125,000 IOPS with a 4k block size.

•A 32 Gb FC card in each canister, with 8 ports, can deliver up to 1,000,000 IOPS.

•A FlashSystem 9200 can deliver 1,200,000 4k read miss input/output operations per second (IOPS) and up to 4,500,000 4k read hit IOPS.

•If you have more than 12 NVMe devices, consider 2 Fibre Channel cards per canister. A third Fibre Channel card allows you to achieve up to 45 GBps.

•If you want to achieve more than 800,000 IOPS, use at least 2 Fibre Channel cards per canister.

•If the FlashSystem is performing Remote Copy or clustering, consider using separate ports to ensure that there is no conflict with host traffic.

•iSER by way of 25 GbE ports has similar capabilities to 16 Gb FC ports, but with fewer ports available overall. If you are planning to use 10 Gb iSCSI, ensure that it can service your expected workloads.
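
The rules of thumb in the preceding list lend themselves to a quick estimate. The following Python sketch only restates figures from the list: about 3 GBps and 80,000 to 125,000 IOPS per 32 Gb Fibre Channel port at a 4k block size, and the two-card guidance for more than 12 NVMe devices or more than 800,000 IOPS. The function names are hypothetical, the baseline of one card below those thresholds is an assumption, and the results are planning approximations, not measured or guaranteed values.

```python
GBPS_PER_32GB_FC_PORT = 3                   # "over 3 GBps" per port, allowing for overheads
IOPS_PER_32GB_FC_PORT = (80_000, 125_000)   # low and high estimate at a 4k block size


def port_estimates(port_count):
    """Return (GBps, low IOPS, high IOPS) for a number of 32 Gb FC ports."""
    low, high = IOPS_PER_32GB_FC_PORT
    return (port_count * GBPS_PER_32GB_FC_PORT, port_count * low, port_count * high)


def min_fc_cards_per_canister(nvme_devices, target_iops):
    """Apply the two-card guidance: more than 12 NVMe devices or more than 800,000 IOPS."""
    if nvme_devices > 12 or target_iops > 800_000:
        return 2
    return 1  # assumption: a single card is the baseline below both thresholds


if __name__ == "__main__":
    print(port_estimates(8))
    print(min_fc_cards_per_canister(nvme_devices=24, target_iops=500_000))
```

For 8 ports, the estimate is 24 GBps and up to 1,000,000 IOPS, which matches the per-card figures in the list.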

Real-time performance statistics are available in the management GUI from the Monitoring → Performance menu, as shown in Figure 10-13.

Figure 10-13 IBM FlashSystem performance statistics (IOPS)

Memory options for an IBM FlashSystem 9200 control enclosure

Each CPU has six memory channels, which are labeled A-F. Each memory channel has two dual inline memory module (DIMM) slots, numbered 0-1. For example, DIMM slots A0 and A1 are in memory channel A.

On the system board, the DIMM slots are labeled according to their memory channel and slot. They are associated with the CPU nearest to their DIMM slots. You can install three distinct memory configurations in those 24 DIMM slots in each node canister.

|

Important: The memory in both node canisters must be configured identically to create the total enclosure memory size.

|

Table 10-2 shows the available memory configuration for each FlashSystem 9200 control enclosure. Each column gives the valid configuration for each total enclosure memory size. DIMM slots are listed in the same order that they appear in the node canister.

To ensure proper cooling and a steady flow of air from the fan modules in each node canister, blank DIMMs must be inserted in any slot that does not contain a memory module.

Table 10-2 Available memory configuration for one node in a control enclosure

| DIMM Slot | Total enclosure memory 256 GB | Total enclosure memory 768 GB | Total enclosure memory 1536 GB |
| --- | --- | --- | --- |
| F0 (CPU0) | Blank | 32 GB | 32 GB |
| F1 (CPU0) | Blank | Blank | 32 GB |
| E0 (CPU0) | Blank | 32 GB | 32 GB |
| E1 (CPU0) | Blank | Blank | 32 GB |
| D0 (CPU0) | 32 GB | 32 GB | 32 GB |
| D1 (CPU0) | Blank | Blank | 32 GB |
| A1 (CPU0) | Blank | Blank | 32 GB |
| A0 (CPU0) | 32 GB | 32 GB | 32 GB |
| B1 (CPU0) | Blank | Blank | 32 GB |
| B0 (CPU0) | Blank | 32 GB | 32 GB |
| C1 (CPU0) | Blank | Blank | 32 GB |
| C0 (CPU0) | Blank | 32 GB | 32 GB |
| C0 (CPU1) | Blank | 32 GB | 32 GB |
| C1 (CPU1) | Blank | Blank | 32 GB |
| B0 (CPU1) | Blank | 32 GB | 32 GB |
| B1 (CPU1) | Blank | Blank | 32 GB |
| A0 (CPU1) | 32 GB | 32 GB | 32 GB |
| A1 (CPU1) | Blank | Blank | 32 GB |
| D1 (CPU1) | Blank | Blank | 32 GB |
| D0 (CPU1) | 32 GB | 32 GB | 32 GB |
| E1 (CPU1) | Blank | Blank | 32 GB |
| E0 (CPU1) | Blank | 32 GB | 32 GB |
| F1 (CPU1) | Blank | Blank | 32 GB |
| F0 (CPU1) | Blank | 32 GB | 32 GB |
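
The enclosure totals in Table 10-2 follow from the DIMM counts. As a cross-check, the following Python sketch encodes, for each column, the slots that hold a 32 GB DIMM in one node canister and derives the enclosure total for two identically configured canisters. The data structure and function names are illustrative and are not part of any IBM tool.

```python
DIMM_SIZE_GB = 32
CHANNELS = "ABCDEF"            # six memory channels per CPU
CPUS = (0, 1)                  # two CPUs per node canister
CANISTERS_PER_ENCLOSURE = 2    # both canisters must be configured identically

# All 24 DIMM slots of one node canister, labeled as in Table 10-2.
ALL_SLOTS = [f"{ch}{slot} (CPU{cpu})" for cpu in CPUS for ch in CHANNELS for slot in (0, 1)]

# Populated slots per canister, keyed by total enclosure memory in GB (from Table 10-2).
POPULATED = {
    256: [f"{ch}0 (CPU{cpu})" for cpu in CPUS for ch in "AD"],
    768: [f"{ch}0 (CPU{cpu})" for cpu in CPUS for ch in CHANNELS],
    1536: list(ALL_SLOTS),
}


def enclosure_memory_gb(populated_slots):
    """Total enclosure memory for two canisters with the same populated slots."""
    return len(populated_slots) * DIMM_SIZE_GB * CANISTERS_PER_ENCLOSURE


def blank_slots(populated_slots):
    """Slots that need a blank DIMM to maintain airflow."""
    return [slot for slot in ALL_SLOTS if slot not in populated_slots]


if __name__ == "__main__":
    for total_gb, slots in POPULATED.items():
        print(total_gb, len(slots), len(blank_slots(slots)), enclosure_memory_gb(slots))
```

For example, the 256 GB configuration populates 4 slots per canister (A0 and D0 on each CPU), which leaves 20 slots per canister that need blank DIMMs.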

Memory options for an IBM FlashSystem 9100 control enclosure

Each of the six memory channels in each CPU has two DIMM slots, for a total of 12 DIMM slots per CPU, which means 24 DIMM slots per node canister and 48 DIMM slots per enclosure. You can install six distinct memory configurations in those 24 DIMM slots in each node canister. Each canister must have the same amount of memory and the same configuration.

Initially, each control enclosure ships with one of the following features, depending on what has been ordered, as shown in Table 10-3.

Table 10-3 Base memory features

| Feature | Memory per enclosure | Maximum per enclosure |
| --- | --- | --- |
| ACG0 | 128 GB base cache memory (eight 16 GB DIMMs - 2 per CPU) | 1 |
| ACG1 | 768 GB base cache memory (twenty-four 32 GB DIMMs - 6 per CPU) | 1 |

You can order the features that are listed in Table 10-4 to upgrade to more memory at any time.

Table 10-4 Memory features

| Feature | Memory per enclosure | Maximum per enclosure |
| --- | --- | --- |
| ACGA | 128 GB memory upgrade (eight 16 GB DIMMs) | 3 |
| ACGB | 768 GB memory upgrade (twenty-four 32 GB DIMMs) | 2 |

Memory options for an IBM FlashSystem 7200 control enclosure

Table 10-5 lists the various memory options available for the IBM FlashSystem 7200 by feature code.

Table 10-5 IBM FlashSystem 7200 memory options

| Base memory (GB) | Field Upgrade ACGJ (GB) | Field Upgrade ACGB (GB) | Total memory (GB) |
| --- | --- | --- | --- |
| 256 | N/A | N/A | 256 |
| 256 | 512 | N/A | 768 |
| 256 | 512 | 768 | 1536 |

Memory options for an IBM FlashSystem 5000 control enclosure

The IBM FlashSystem 5000 family consists of different models, and each model type provides a different set of features. Table 10-6 lists the memory features of the FlashSystem 5000 and 5100 models.

Table 10-6 Memory options

| Platform | FS5010 | FS5015 | FS5030 | FS5035 | FS5100 |
| --- | --- | --- | --- | --- | --- |
| Option 1 per node | 1 x 8 GB | 1 x 16 GB | 1 x 16 GB | 1 x 16 GB | 2 x 16 GB |
| Option 2 per node | 1 x 16 GB | 2 x 16 GB | 2 x 16 GB | 2 x 16 GB | 6 x 16 GB |
| Option 3 per node | 2 x 16 GB | N/A | N/A | N/A | 6 x 16 GB + 6 x 32 GB |
| Maximum per I/O Group | 64 GB | 64 GB | 64 GB | 64 GB | 576 GB |
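
The "Maximum per I/O Group" row in Table 10-6 is consistent with the largest per-node option multiplied by the number of nodes in an I/O group. The following Python sketch checks that arithmetic. The dictionary layout and function name are illustrative, and the factor of two nodes per I/O group is an assumption that is stated in the code.

```python
NODES_PER_IO_GROUP = 2  # assumption: two node canisters form one I/O group

# Largest per-node DIMM option per platform, as (count, size in GB) pairs from Table 10-6.
LARGEST_OPTION = {
    "FS5010": [(2, 16)],
    "FS5015": [(2, 16)],
    "FS5030": [(2, 16)],
    "FS5035": [(2, 16)],
    "FS5100": [(6, 16), (6, 32)],
}


def max_memory_per_io_group_gb(platform):
    """Sum the largest per-node DIMM option and scale it to the I/O group."""
    per_node_gb = sum(count * size_gb for count, size_gb in LARGEST_OPTION[platform])
    return per_node_gb * NODES_PER_IO_GROUP


if __name__ == "__main__":
    for platform in LARGEST_OPTION:
        print(platform, max_memory_per_io_group_gb(platform))
```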

Memory options for an IBM FlashSystem 5200 control enclosure