Connecting IBM Spectrum Virtualize and IBM Storwize in storage area networks

The storage area network (SAN) is one of the most important aspects when implementing and configuring IBM Spectrum Virtualize and IBM FlashSystem.

This chapter does not describe how to design and build a flawless SAN from the beginning. Rather, it provides guidance about how to connect IBM Spectrum Virtualize and Storwize in a SAN to achieve a stable, redundant, resilient, scalable, and high-performing environment. However, you can take the principles here into account when building your SAN.

Important: This chapter was written specifically for IBM FlashSystem 9200; however, most of the general principles also apply to IBM FlashSystem 9100.

If you are in doubt as to whether the principles are applicable to FlashSystem 9100, contact your local IBM representative.

For more information, see IBM FlashSystem 9200 Product Guide, REDP-5586.


2.1 SAN topology general guidelines

The SAN topology requirements for IBM FlashSystem do not differ much from those of any other SAN. A well-sized and well-designed SAN allows you to build a redundant and failure-proof environment while minimizing performance issues and bottlenecks. Therefore, before installing any of the products covered by this book, ensure that your environment follows a proper SAN design and architecture, with vendor-recommended SAN devices and code levels.

For more SAN design and preferred practices, see the Brocade publication SAN Fabric Administration Best Practices Guide Support Perspective.

A topology is described in terms of how the switches are interconnected. Several SAN topologies exist, such as core-edge, edge-core-edge, and full mesh. Each topology has its own uses, scalability, and cost, so some topologies fit certain SAN demands better than others. Regardless of the environment demands, a few best practices must be followed to keep your SAN working correctly, performing well, redundant, and resilient.

2.1.1 SAN performance and scalability

Regardless of the storage and the environment, planning and sizing the SAN makes a difference when growing your environment and when troubleshooting problems.

Because most SAN installations continue to grow over the years, the leading SAN vendors design their products to support growth. Keep in mind that your SAN must be designed to accommodate both short-term and medium-term growth.

From the performance standpoint, the following topics must be evaluated and considered:

•Host-to-storage fan-in/fan-out ratios

•Host to inter-switch link (ISL) oversubscription ratio

•Edge switch to core switch oversubscription ratio

•Storage to ISL oversubscription ratio

•Size of the trunks

•Slow-drain device issues, which must be monitored

From the scalability standpoint, ensure that your SAN will support the new storage and host traffic. Make sure that the chosen topology supports growth not only in performance but also in port density.

If new ports need to be added to the SAN, you might need to drastically modify the SAN to accommodate a larger-than-expected number of hosts or storage systems. Sometimes these changes increase the number of hops on the SAN, which can cause performance and ISL congestion issues. For more information, see 2.1.2, “ISL considerations”.

Consider the use of SAN director-class switches. They reduce the number of switches in a SAN and provide the best scalability available. Most of the SAN equipment vendors provide high port density switching devices.

Therefore, if possible, plan for the maximum size configuration that you expect your IBM FlashSystem installation to reach. Planning for the maximum size does not mean that you must purchase all of the SAN hardware initially. It only requires you to design the SAN to be able to reach the expected maximum size.

2.1.2 ISL considerations

ISLs interconnect the SAN switches and provide SAN flexibility and scalability. For this reason, they can be considered the core of a SAN topology, and they are also sometimes the main cause of issues that affect a SAN. Therefore, take extra care when planning and sizing the ISLs in your SAN.

Regardless of your SAN size, topology, or the size of your FlashSystem installation, consider the following practices for your SAN inter-switch link design:

•Beware of the ISL oversubscription ratio

The standard recommendation is an oversubscription ratio of up to 7:1 (seven hosts sharing a single ISL). However, the appropriate ratio can vary according to your SAN behavior. Most successful SAN designs are planned with a 7:1 ratio, and some extra ports are reserved to support a 3:1 ratio. High-performance SANs, however, start at a 3:1 ratio.

Exceeding the standard 7:1 oversubscription ratio requires you to implement fabric bandwidth threshold alerts. If the utilization of your ISLs exceeds 70%, schedule fabric changes to distribute the load further.

•Avoid unnecessary ISL traffic

If you plan to use external virtualized storage systems, connect all FlashSystem canister ports in a clustered system to the same SAN switches or directors as all of the storage devices with which the clustered FlashSystem is expected to communicate. Storage traffic and internode traffic must never cross an ISL, except during migration scenarios.

Keep high-bandwidth servers and I/O-intensive applications on the same SAN switches as the FlashSystem host ports. Placing these servers on a separate switch can cause unexpected ISL congestion problems. Also, placing a high-bandwidth server on an edge switch wastes ISL capacity.

•Properly size the ISLs on your SAN. They must have adequate bandwidth and buffer credits to avoid traffic or frame congestion. A congested inter-switch link can affect the overall fabric performance.

•Always deploy redundant ISLs on your SAN. Using an extra ISL avoids congestion if an ISL fails because of certain issues, such as a SAN switch line card or port blade failure.

•Use the link aggregation features, such as Brocade Trunking or Cisco Port Channel, to obtain better performance and resiliency.

•Avoid exceeding two hops between the FlashSystem and the hosts. More than two hops are supported. However, when ISLs are not sized properly, more than two hops can lead to ISL performance issues and buffer credit starvation (SAN congestion).

When sizing over two hops, consider that all the ISLs going to the switch where the FlashSystem is connected will also handle the traffic coming from the switches on the edges, as shown in Figure 2-1.

Figure 2-1 ISL data flow

•If possible, use SAN directors to avoid many ISL connections. Problems that are related to oversubscription or congestion are much less likely to occur within SAN director fabrics.

•When you interconnect SAN directors through ISLs, spread the ISL cables across different blades of each director. If an entire blade fails, the ISLs remain redundant through the links that are connected to the other blades.

•Plan for the peak load, not for the average load.
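The oversubscription and utilization guidelines in this list reduce to simple arithmetic. The following Python sketch is a hypothetical planning helper, not an IBM or switch-vendor tool: it divides the total device-port bandwidth on an edge switch by the aggregate ISL bandwidth toward the core, and compares the peak (not average) ISL load with the 70% threshold. The function names and the sample port counts are illustrative assumptions.

```python
# Hypothetical planning helper; thresholds are the guideline values in this section.
STANDARD_MAX_RATIO = 7.0   # 7:1 device-to-ISL oversubscription
UTILIZATION_ALERT = 0.70   # redistribute load when ISLs exceed 70%


def oversubscription_ratio(device_ports: int, device_gbps: float,
                           isl_count: int, isl_gbps: float) -> float:
    """Return N for an N:1 ratio (device bandwidth / ISL bandwidth)."""
    if isl_count <= 0:
        raise ValueError("at least one ISL is required")
    return (device_ports * device_gbps) / (isl_count * isl_gbps)


def isl_utilization(peak_gbps: float, isl_count: int, isl_gbps: float) -> float:
    """Fraction of the aggregate ISL (trunk) bandwidth used at peak load."""
    return peak_gbps / (isl_count * isl_gbps)


def review_edge_switch(device_ports: int, device_gbps: float, isl_count: int,
                       isl_gbps: float, peak_gbps: float) -> list:
    """Return a list of findings; an empty list means both checks pass."""
    findings = []
    ratio = oversubscription_ratio(device_ports, device_gbps, isl_count, isl_gbps)
    if ratio > STANDARD_MAX_RATIO:
        findings.append(f"{ratio:.1f}:1 exceeds 7:1; set bandwidth threshold alerts")
    util = isl_utilization(peak_gbps, isl_count, isl_gbps)
    if util > UTILIZATION_ALERT:
        findings.append(f"peak ISL utilization {util:.0%} exceeds 70%; redistribute load")
    return findings


# Illustrative edge switch: 56 host ports and 8 ISLs, all at 32 Gbps.
print(oversubscription_ratio(56, 32, 8, 32))          # 7.0
print(review_edge_switch(56, 32, 8, 32, peak_gbps=190))
```

Because the check takes the peak load as input, it follows the guideline to plan for peak rather than average load. In a multi-hop design, add the traffic that arrives from the outer edge switches to the peak load of the ISLs that lead to the FlashSystem switch.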

2.2 SAN topology-specific guidelines

Some preferred practices apply to all SANs, as described in 2.1, “SAN topology general guidelines”. However, each SAN topology also has its own specific preferred practices. This section describes the differences between the topology types and highlights the specific considerations for each.

This section covers the following topologies:

•Single switch fabric

•Core-edge fabric

•Edge-core-edge

•Full mesh

2.2.1 Single switch SANs

The most basic IBM FlashSystem topology consists of a single switch per SAN fabric. This switch can range from a 24-port 1U switch for a small installation of a few hosts and storage devices, to a director with hundreds of ports. This low-cost design solution has the advantage of simplicity and is a sufficient architecture for small-to-medium FlashSystem installations.

One of the advantages of a single switch SAN is that when all servers and storage systems are connected to the same switch, there are no hops.

Note: To meet redundancy and resiliency requirements, a single switch solution needs at least two SAN switches or SAN directors, one per fabric.

The preferred practice is to use a multi-slot director-class single switch rather than setting up a core-edge fabric that is made up solely of lower-end switches, as described in 2.1.1, “SAN performance and scalability”.

The single switch topology, as shown in Figure 2-2, has only two switches; therefore, the FlashSystem ports must be equally distributed on both fabrics.

Figure 2-2 Single switch topology

Note: To correctly size your network, always account for short-term and mid-term growth to avoid running out of ports (in this topology, the port limit is set by the switch size). Adding other switches to the network changes the topology type.

2.2.2 Basic core-edge topology

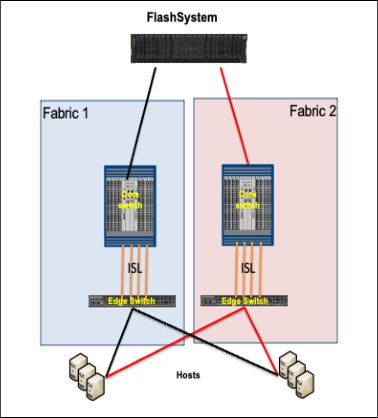

The core-edge topology (as shown in Figure 2-3) is easily recognized by most SAN architects. This topology consists of a switch in the center (usually, a director-class switch) that is surrounded by other switches. The core switch contains all FlashSystem ports and high-bandwidth hosts, and it connects to the edge switches through ISLs. The edge switches can be of any size, from 24-port switches up to multi-slot directors.

Figure 2-3 Core/Edge Topology

When the FlashSystem and servers are connected to different switches, the hop count for this topology is one.

Note: This topology is commonly used because you can easily grow your SAN by adding edge switches to the core switch. When adding new edge switches, consider the ISL ratio and the use of physical ports on the core switch.

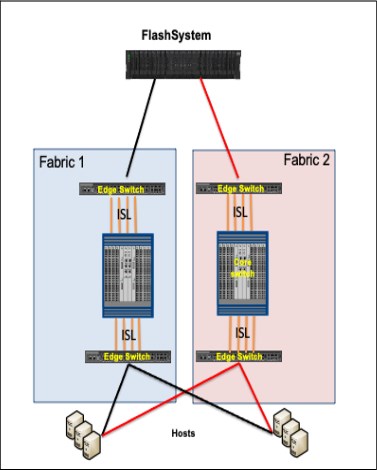

2.2.3 Edge-core-edge topology

Edge-core-edge is the most scalable topology. It is used for installations where a core-edge fabric made up of multi-slot director-class SAN switches is insufficient. This design is useful for large, multi-clustered system installations. Similar to a regular core-edge, the edge switches can be of any size, and multiple ISLs must be installed per switch.

Figure 2-4 shows an edge-core-edge topology with two different edge pairs: one pair is dedicated to the FlashSystem and high-bandwidth servers, and the other pair is dedicated to servers.

Figure 2-4 Edge-Core-Edge topology

Performance can be slightly affected if the number of hops increases, depending on the total number of switches and the distance between host and IBM FlashSystem.
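Hop count is the number of ISLs that a frame crosses between the switch where the host is attached and the switch where the FlashSystem is attached. The following Python sketch models one fabric as an undirected graph of switches and uses a breadth-first search to find the shortest path; the switch names are hypothetical. It reproduces the hop counts described in this section: zero for a single switch, one for core-edge with the FlashSystem on the core, and two for edge-core-edge with the FlashSystem on a dedicated storage edge.

```python
from collections import deque


def hop_count(isls, src_switch, dst_switch):
    """Return the number of ISLs on the shortest path between two switches."""
    graph = {}
    for a, b in isls:  # each ISL is an undirected link between two switches
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    hops = {src_switch: 0}
    queue = deque([src_switch])
    while queue:  # breadth-first search visits switches in hop order
        switch = queue.popleft()
        if switch == dst_switch:
            return hops[switch]
        for neighbor in graph.get(switch, ()):
            if neighbor not in hops:
                hops[neighbor] = hops[switch] + 1
                queue.append(neighbor)
    raise ValueError(f"{dst_switch} is not reachable from {src_switch}")


core_edge = [("core", "edge1"), ("core", "edge2")]
edge_core_edge = [("host_edge", "core"), ("core", "storage_edge")]

print(hop_count([], "switch1", "switch1"))                     # 0: single switch
print(hop_count(core_edge, "edge1", "core"))                   # 1: core-edge
print(hop_count(edge_core_edge, "host_edge", "storage_edge"))  # 2: edge-core-edge
```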

Edge-core-edge fabrics allow better isolation between tiers. For more information, see 2.2.6, “Device placement”.

2.2.4 Full-mesh topology

In a full-mesh topology (see Figure 2-5), every switch is interconnected to every other switch in the same fabric. Therefore, server and storage placement is not a concern, because the number of hops between any two switches is never more than one.

Figure 2-5 Full-mesh topology

Note: Each ISL uses one physical port on each switch that it connects. Depending on the total number of switches and the switch size, you can easily run out of ports, so calculate the port usage before you configure a full-mesh SAN.
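The port cost of a full mesh grows quickly because every switch needs ISLs to every other switch. The following Python sketch estimates it under two stated assumptions: each pair of switches is connected by a configurable number of ISLs (two by default, following the redundant-ISL guideline in 2.1.2), and every switch has the same number of ports (48 here is a hypothetical value).

```python
def full_mesh_usage(switches: int, isls_per_pair: int = 2,
                    ports_per_switch: int = 48) -> dict:
    """Estimate ISL and port consumption for a full-mesh fabric."""
    if switches < 2:
        raise ValueError("a mesh needs at least two switches")
    # Every switch links to each of the other (switches - 1) switches.
    isl_ports_per_switch = isls_per_pair * (switches - 1)
    # Each ISL joins two switches, so count each ISL port pair once.
    total_isls = switches * isl_ports_per_switch // 2
    device_ports = ports_per_switch - isl_ports_per_switch
    if device_ports < 0:
        raise ValueError("not enough ports for the mesh ISLs")
    return {"total_isls": total_isls,
            "isl_ports_per_switch": isl_ports_per_switch,
            "device_ports_per_switch": device_ports}


for n in (3, 5, 8):
    print(n, full_mesh_usage(n))
```

With these assumptions, growing from five to eight switches raises the ISL count from 20 to 56 and leaves only 34 of 48 ports per switch for hosts and storage.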

2.2.5 IBM FlashSystem as a multi SAN storage

IBM FlashSystem now features a maximum of 24 ports. In addition to the increased throughput capacity, this number of ports enables new possibilities and allows different kinds of topologies and migration scenarios.

One of these topologies is the use of a FlashSystem as a multi SAN storage between two isolated SANs. This configuration is useful for storage migration or sharing resources between SAN environments without merging them.

To use external storage with IBM FlashSystem, the external storage must be zoned to IBM FlashSystem and configured as virtualized storage. This feature can be used in storage migration and decommissioning processes and can speed up host migration. In some cases, depending on the external storage configuration, virtualizing external storage with IBM FlashSystem can improve performance because of the FlashSystem cache capacity and processing power.

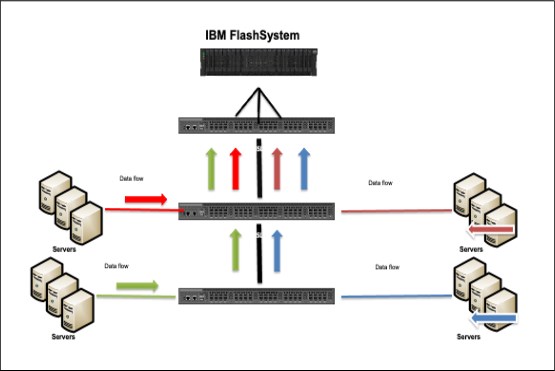

Figure 2-6 shows an example of FlashSystem used as multi-SAN storage.

Figure 2-6 FlashSystem as a multi SAN storage.

Notice in Figure 2-6 that both SANs (shown in blue and red) are isolated. When connected to both SANs, IBM FlashSystem can allocate storage to hosts on both SANs and can virtualize storage systems from each SAN. For example, older storage on the red SAN can be attached to IBM FlashSystem and provide capacity to hosts on the blue SAN. This configuration is commonly used for migration purposes or in cases where the older storage has lower performance than IBM FlashSystem.

2.2.6 Device placement

In a well-sized environment, frame congestion on the fabric is unusual. Device placement seeks to balance the traffic across the fabric so that it flows along planned paths, avoiding congestion and performance issues. Traffic can be balanced by isolating it with zoning, virtual switches, or traffic isolation zoning.

Keeping the traffic local is a strategy to minimize the traffic between switches (and across ISLs) by attaching storage systems and hosts to the same SAN switch, as shown in Figure 2-7.

Figure 2-7 Storage and hosts attached to the same SAN switch

This solution fits small and medium SANs well. However, it is not as scalable as other available topologies. As stated in 2.2.3, “Edge-core-edge topology”, the most scalable SAN topology is edge-core-edge. Besides scalability, this topology provides various resources to isolate traffic and reduce possible SAN bottlenecks.

Figure 2-8 shows an example of traffic segregation on the SAN using edge-core-edge topology.

Figure 2-8 Segregation using edge-core-edge

Even when tiers share the same core switches, you can use virtual switches (for details, see 2.2.7, “SAN partitioning”) to isolate one tier from another. This configuration helps avoid traffic congestion caused by slow-drain devices that are connected to the backup tier switch.

2.2.7 SAN partitioning

SAN partitioning is a hardware-level feature that allows SAN switches to share hardware resources by partitioning their hardware into different, isolated virtual switches. Brocade and Cisco provide SAN partitioning features called Virtual Fabric and Virtual SAN (VSAN), respectively.

Hardware-level fabric isolation is accomplished through the concept of switch virtualization, which allows you to partition physical switch ports into one or more “virtual switches.” Virtual switches are then connected to form virtual fabrics.

As the number of available ports on a switch continues to grow, switch partitioning allows storage administrators to take advantage of high port density switches by dividing physical switches into different virtual switches. From a device perspective, SAN partitioning is completely transparent; therefore, the same guidelines and practices that apply to physical switches also apply to virtual switches.

Although the main purposes of SAN partitioning are port consolidation and environment isolation, this feature is also instrumental in the design of a business continuity solution that is based on FlashSystem.

Note: When director-class switches are used, use ports from different blades to balance the load and avoid a single point of failure.

For more information about IBM FlashSystem business continuity solutions, see Chapter 7, “Ensuring business continuity”.

2.3 IBM FlashSystem 9200 controller ports

Port connectivity options are significantly increased with IBM FlashSystem 9200 hardware. Models 9846-AG8 and 9848-AG8 deliver up to twelve 16 Gb or twelve 32 Gb FC ports per node canister, as listed in Table 2-1.

Table 2-1 FlashSystem 9200

| Feature | FlashSystem 9200 |
|---|---|
| Fibre Channel HBA | 3x Quad 16 Gb or 3x Quad 32 Gb |
| Ethernet I/O | 2x Dual 25 Gb iWARP/RoCE for iSCSI or iSER |
| Built-in ports | 4x 10 Gb for internet small computer systems interface (iSCSI) |
| Serial-attached SCSI (SAS) expansion ports | 1x Quad 12 Gb SAS (2 ports active) |

Note: FlashSystem 9200 node canisters feature three Peripheral Component Interconnect Express (PCIe) slots in which you can combine cards as needed. If expansion enclosures are used, one of the slots must hold the SAS expansion card, which leaves two slots for Fibre Channel host bus adapter (HBA) cards or for Internet Wide-area RDMA Protocol (iWARP) or RDMA over Converged Ethernet (RoCE) Ethernet cards.

For more information, see this IBM Documentation web page.

This section describes some preferred practices and use cases that show how to connect a FlashSystem on the SAN to use this increased capacity.

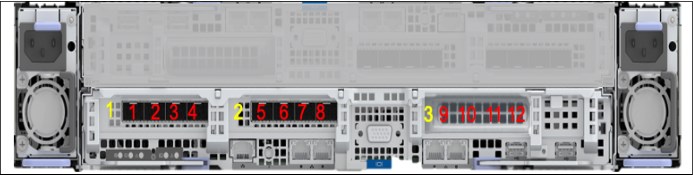

2.3.1 Slots and ports identification

The IBM FlashSystem 9200 can have up to three quad Fibre Channel (FC) HBA cards (12 FC ports) per node canister. Figure 2-9 shows the port location in the rear view of the FlashSystem 9200 node canister.

Figure 2-9 Port location in FlashSystem 9200 rear view

For maximum redundancy and resiliency, spread the ports across different fabrics. Because the port count varies according to the number of cards included in the solution, try to keep the port count equal on each fabric.

2.3.2 Port naming and distribution

In the field, fabric naming conventions vary. However, it is common to find fabrics with names such as PROD_SAN_1 and PROD_SAN_2, or PROD_SAN_A and PROD_SAN_B. This type of naming convention simplifies management and troubleshooting: the 1 and 2 (or A and B) suffixes indicate that the devices connected to those fabrics carry the redundant paths of the same servers and SAN devices.

To simplify SAN connection identification and troubleshooting, connect all odd-numbered ports to the odd (or “A”) fabric and all even-numbered ports to the even (or “B”) fabric, as shown in Figure 2-10.

Figure 2-10 FlashSystem 9200 port distribution
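The odd/even rule is easy to apply programmatically. The following Python sketch assumes the port numbering that the alias examples in Table 2-2 imply: port IDs run sequentially across quad-port cards, so card 2 port 3 is port ID 7. It maps each card and port to a port ID and then to fabric A (odd) or fabric B (even). The function names are hypothetical.

```python
PORTS_PER_CARD = 4   # quad-port FC HBAs
MAX_CARDS = 3        # up to three FC cards per node canister


def port_id(card: int, port: int) -> int:
    """Sequential port ID across cards (assumed: card 2 port 3 -> 7)."""
    if not (1 <= card <= MAX_CARDS and 1 <= port <= PORTS_PER_CARD):
        raise ValueError(f"invalid card {card} / port {port}")
    return (card - 1) * PORTS_PER_CARD + port


def fabric_for(pid: int) -> str:
    """Odd port IDs go to fabric A, even port IDs to fabric B."""
    return "A" if pid % 2 == 1 else "B"


for card in range(1, MAX_CARDS + 1):
    for port in range(1, PORTS_PER_CARD + 1):
        pid = port_id(card, port)
        print(f"card {card} port {port}: port ID {pid:2d} -> fabric {fabric_for(pid)}")
```

Because each card has an even number of ports, this rule splits every card evenly across both fabrics, which keeps the port count equal on each fabric.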

As a preferred practice, assign specific uses to specific FlashSystem 9200 ports. This technique helps to optimize the port utilization by aligning the internal allocation of hardware CPU cores and software I/O threads to those ports.

Figure 2-11 shows the specific port use guidelines for the FlashSystem 9200.

Figure 2-11 Port masking configuration on FlashSystem 9200

Note: Consider the following points:

•If you use an IBM FlashSystem 9200 system with a single I/O group, the system keeps localfcportmask set to 111111111111 and does not allow you to change this setting. This behavior is not a problem because the inter-node traffic occurs on the internal PCI mid-plane link. The port-masking recommendations that are shown in Figure 2-11 apply to systems with more than one I/O group.

•Depending on the workload or number of I/O groups, you can also reserve ports 1 and 2 from card 3 for inter-node traffic. In this case, four ports (port IDs 3, 4, 9, and 10) are available for inter-node traffic, and localfcportmask is set to 001100001100.
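The mask values in this note are binary strings in which the rightmost character represents port ID 1. The following Python sketch builds and decodes such a 12-port mask; decoding 001100001100 gives port IDs 3, 4, 9, and 10, that is, card 1 ports 3 and 4 plus card 3 ports 1 and 2 (assuming sequential port IDs across quad-port cards). The helper names are hypothetical. The resulting string is the kind of value that is passed to the `chsystem -localfcportmask` CLI parameter, but check the command reference for your code level before you apply it.

```python
def build_port_mask(port_ids, width: int = 12) -> str:
    """Build a port mask string; the rightmost character is port ID 1."""
    ports = set(port_ids)
    for pid in ports:
        if not 1 <= pid <= width:
            raise ValueError(f"port ID {pid} is outside 1..{width}")
    # Walk from the highest port ID (leftmost) down to port ID 1 (rightmost).
    return "".join("1" if pid in ports else "0" for pid in range(width, 0, -1))


def ports_in_mask(mask: str) -> list:
    """Return the port IDs enabled in a mask string, lowest first."""
    return [i + 1 for i, bit in enumerate(reversed(mask)) if bit == "1"]


print(build_port_mask(range(1, 13)))   # 111111111111 (single I/O group)
print(build_port_mask({3, 4, 9, 10}))  # 001100001100
print(ports_in_mask("001100001100"))   # [3, 4, 9, 10]
```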

Host and storage ports have different traffic behavior, so sharing a port between host and storage traffic maximizes port performance and utilization by taking advantage of its full-duplex bandwidth. For this reason, sharing host and storage traffic on the same ports is generally the preferred practice. However, traffic segregation can also provide benefits for troubleshooting and host zoning management. Consider, for instance, SAN congestion caused by a slow-drain device.

In this case, segregating the ports simplifies identification of the device that is causing the problem, and it limits the effects of the congestion to either the host ports or the back-end ports. Furthermore, dedicating ports to host traffic reduces the possible combinations of host zoning and simplifies SAN management. Implement port traffic segregation only in configurations with 12 ports.

Buffer credits

FlashSystem 9200 has a predefined number of buffer credits, which determines the throughput that can be sustained over distance: 4-port 16 Gbps adapters have 40 credits available per port, which is enough to saturate links of up to 5 km (3.1 miles) at 16 Gbps.
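The 40-credit and 5 km figures are consistent with a common Fibre Channel rule of thumb: for full-size (about 2 KB) frames, a link needs roughly one buffer credit per kilometer for every 2 Gbps of link speed, that is, credits ≈ distance (km) × speed (Gbps) / 2. The following Python sketch applies that approximation. It is not an IBM formula, it ignores encoding overhead, and it scales the estimate up for smaller average frames, which need proportionally more credits.

```python
import math


def credits_needed(distance_km: float, speed_gbps: float,
                   avg_frame_bytes: int = 2048) -> int:
    """Approximate buffer credits needed to keep a link saturated."""
    full_frame_credits = distance_km * speed_gbps / 2  # rule of thumb, ~2 KB frames
    # Smaller frames mean more frames in flight, so more credits.
    return math.ceil(full_frame_credits * 2048 / avg_frame_bytes)


def max_saturated_distance_km(credits: int, speed_gbps: float) -> float:
    """Inverse of the rule of thumb for full-size frames."""
    return credits * 2 / speed_gbps


print(max_saturated_distance_km(40, 16))            # 5.0, matching the text
print(max_saturated_distance_km(40, 32))            # 2.5 if a 32 Gbps port had 40 credits
print(credits_needed(5, 16))                        # 40
print(credits_needed(5, 16, avg_frame_bytes=1024))  # 80 with half-size frames
```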

Notes: Consider the following points:

•For stretched cluster and IBM HyperSwap configurations that do not use ISLs for the internode communication, set the switch port buffer credits to match the IBM FlashSystem 9200 port.

•Balance your bandwidth and make sure that you have enough incoming bandwidth to saturate the back-end bandwidth. Attempt to balance your I/O across the ports.

2.4 Zoning

This section describes the zoning recommendations for FlashSystem 9200. For more information about external storage virtualization zoning, see IBM System Storage SAN Volume Controller, IBM Storwize V7000, and IBM FlashSystem 7200 Best Practices and Performance Guidelines, SG24-7521 (the recommendations are the same).

Important: Errors that are caused by incorrect FlashSystem 9200 zoning often are difficult to isolate, and the steps to fix them can affect the SAN environment. Therefore, create your zoning configuration carefully.

The initial configuration for FlashSystem 9200 requires the following three zone types:

•Internode and intra-cluster zones

•Replication zones (if using replication)

•Host to FlashSystem 9200 zoning

Each zone type has its own guidelines, which are described in 2.4.1, “Types of zoning”.

Note: Although an internode or intra-cluster zone is not necessary for a non-clustered FlashSystem 9200 system, creating one is generally preferred.

2.4.1 Types of zoning

Modern SAN switches offer two types of zoning: port zoning and worldwide port name (WWPN) zoning. The preferred method is to use only WWPN zoning. A common misconception is that WWPN zoning provides poorer security than port zoning, which is not the case. Modern SAN switches enforce the zoning configuration directly in the switch hardware. Also, you can use port binding functions to enforce that a WWPN is connected to a particular SAN switch port.

Zoning types and NPIV: Avoid the use of a zoning configuration that has a mix of port and WWPN zoning. For NPIV configurations, host zoning must use the WWPN zoning type.

Traditional zone design preferred practice calls for single-initiator zoning, which means that a zone can consist of many target devices but only one initiator. Target devices usually wait for an initiator to connect to them, while initiators actively attempt to connect to every device to which they are zoned. The single-initiator approach therefore removes the possibility that a misbehaving initiator affects other initiators.

The drawback of single-initiator zoning is that on a large SAN, the resulting large number of zones can make the SAN administrator's job more difficult and can exceed the zone database size limits.

Cisco and Brocade have both developed features that can reduce the number of zones by allowing the SAN administrator to control which devices in a zone can talk to other devices in the zone. The features are called Cisco Smart Zoning and Brocade Peer Zoning. Both Cisco Smart Zoning and Brocade Peer Zoning are supported with IBM Spectrum Virtualize and Storwize systems. A brief overview of both is provided below.

Cisco Smart Zoning

Cisco Smart Zoning is a feature that, when enabled, restricts the initiators in a zone to communicating only with target devices in the same zone. For example, a SAN administrator can place all of the host ports of a VMware cluster in the same zone as the storage ports that all the hosts need to access. Smart Zoning configures the access control lists in the fabric routing table to allow the hosts to communicate only with target ports.

Brocade Peer Zoning

Brocade Peer Zoning is a feature that provides similar functionality by restricting which devices in the same zone can see each other. However, Peer Zoning is implemented such that some devices in the zone are designated as principal devices. The non-principal devices can communicate only with the principal devices, not with each other.

As with Cisco, the communication is enforced in the fabric routing table. For more information about Peer Zoning, see section 4.2.3 of Modernizing Your IT Infrastructure with IBM b-type Gen 6 Storage Networking and IBM Spectrum Storage Products, SG24-8415.
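The difference between single-initiator zoning, a traditional multi-member zone, and peer zoning can be expressed as the set of device pairs that are allowed to communicate. The following Python sketch uses hypothetical port names for four ESXi host ports and two FlashSystem ports. It treats only principal-to-non-principal pairs as allowed in a peer zone, which is what the text describes; communication between principal devices is not covered here and is modeled as blocked, an assumption to check against the Brocade documentation.

```python
from itertools import combinations


def single_initiator_zones(initiators, targets):
    """One zone per initiator, each containing that initiator plus all targets."""
    return {f"z_{init}": [init, *targets] for init in initiators}


def traditional_zone_pairs(members):
    """In a traditional zone, every member can talk to every other member."""
    return {frozenset(pair) for pair in combinations(members, 2)}


def peer_zone_pairs(principals, non_principals):
    """Peer zone sketch: only principal <-> non-principal pairs are allowed."""
    return {frozenset((p, n)) for p in principals for n in non_principals}


hosts = ["esx1_hba1", "esx2_hba1", "esx3_hba1", "esx4_hba1"]
storage = ["fs_n1p1", "fs_n2p1"]

print(len(single_initiator_zones(hosts, storage)))   # 4 zones to maintain
print(len(traditional_zone_pairs(hosts + storage)))  # 15 pairs, incl. host-to-host
print(len(peer_zone_pairs(storage, hosts)))          # 8 pairs, all host-to-storage
```

The single peer zone allows the same eight host-to-storage pairs as the four single-initiator zones while blocking host-to-host communication, which is the zone-count reduction that Smart Zoning and Peer Zoning are designed to provide.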

Note: Use Smart and Peer zoning for the host zoning only. For intra-cluster, back-end, and replication zoning, use traditional zoning instead.

Simple zone for small environments

As an option for small environments, the IBM FlashSystem-based system supports a simple set of zoning rules that enable a small set of host zones to be created for different environments. For systems with fewer than 64 attached hosts, zones that contain host HBAs must contain no more than 40 initiators, including ports that act as initiators, such as the IBM Spectrum Virtualize-based system ports, which are both targets and initiators.

Therefore, a valid zone can be 32 host ports plus 8 IBM FlashSystem based system ports. Include only one port from each node in the I/O groups that are associated with this host.

Note: Do not place more than one HBA port from the same host in the same zone. Also, do not place dissimilar hosts in the same zone. Dissimilar hosts are hosts that are running different operating systems or are different hardware products.
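The simple-zone rules and the note above can be checked before a zone is committed. The following Python sketch is a hypothetical pre-check, not an IBM tool: it counts every member as an initiator (host ports, plus system ports, which act as both target and initiator), rejects more than one port from the same host, and flags dissimilar hosts. It models host similarity by operating system only; a real check would also compare hardware types.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Member:
    alias: str
    host: Optional[str] = None     # set for host HBA ports
    os_type: Optional[str] = None  # set for host HBA ports
    node: Optional[int] = None     # set for FlashSystem ports


def check_simple_zone(members, attached_hosts: int, io_group_nodes) -> list:
    """Return a list of rule violations; an empty list means the zone passes."""
    problems = []
    if attached_hosts >= 64:
        problems.append("simple zoning applies only to fewer than 64 hosts")
    if len(members) > 40:  # host ports and system ports all count as initiators
        problems.append(f"{len(members)} initiators exceed the limit of 40")
    host_ports = [m for m in members if m.host is not None]
    owners = [m.host for m in host_ports]
    if len(owners) != len(set(owners)):
        problems.append("more than one HBA port from the same host")
    if len({m.os_type for m in host_ports}) > 1:
        problems.append("dissimilar hosts in the same zone")
    zone_nodes = sorted(m.node for m in members if m.node is not None)
    if zone_nodes != sorted(io_group_nodes):
        problems.append("need exactly one port from each node in the I/O groups")
    return problems


# 32 host ports plus one port from each of 8 nodes: the 40-initiator maximum.
io_nodes = range(1, 9)
zone = [Member(f"host{i}_hba1", host=f"host{i}", os_type="linux") for i in range(32)]
zone += [Member(f"fs_n{n}_p1", node=n) for n in io_nodes]
print(check_simple_zone(zone, attached_hosts=32, io_group_nodes=io_nodes))  # []
```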

2.4.2 Pre-zoning tips and shortcuts

Several tips and shortcuts are available for FlashSystem 9200 zoning, as described in this section.

Naming convention and zoning scheme

When you create and maintain a FlashSystem 9200 zoning configuration, you must have a defined naming convention and zoning scheme. If you do not define a naming convention and zoning scheme, your zoning configuration can be difficult to understand and maintain.

Remember that environments have different requirements, which means that the level of detail in the zoning scheme varies among environments of various sizes. Therefore, ensure that you have an easily understandable scheme with an appropriate level of detail. Then make sure that you use it consistently and adhere to it whenever you change the environment.

For more information about FlashSystem 9200 naming conventions, see 10.14.1, “Naming conventions”.

Aliases

Use zoning aliases when you create your FlashSystem 9200 zones if they are available on your specific type of SAN switch. Zoning aliases make your zoning easier to configure and understand, and they reduce the possibility of errors (see Table 2-2).

Table 2-2 Alias names examples

| Port/WWPN | Use | Alias |
|---|---|---|
| Card 1 Port 1 physical WWPN | External storage back-end | FS9200_N1P1_STORAGE |
| Card 1 Port 1 NPIV WWPN | Host attachment | FS9200_N1P1_HOST_NPIV |
| Card 1 Port 2 physical WWPN | External storage back-end | FS9200_N1P2_STORAGE |
| Card 1 Port 2 NPIV WWPN | Host attachment | FS9200_N1P2_HOST_NPIV |
| Card 1 Port 3 physical WWPN | Inter-node traffic | FS9200_N1P3_CLUSTER |
| Card 1 Port 3 NPIV WWPN | Not used | No alias |
| Card 1 Port 4 physical WWPN | Inter-node traffic | FS9200_N1P4_CLUSTER |
| Card 1 Port 4 NPIV WWPN | Not used | No alias |
| Card 2 Port 3 physical WWPN | Replication traffic | FS9200_N1P7_REPLICATION |
| Card 2 Port 3 NPIV WWPN | Not used | No alias |
| Card 2 Port 4 physical WWPN | Replication traffic | FS9200_N1P8_REPLICATION |
| Card 2 Port 4 NPIV WWPN | Not used | No alias |

Note: Table 2-2 does not list every port. Remember that NPIV ports can be used for host attachment only. If you are using external virtualized back ends, use the physical port WWPN. For replication and inter-node traffic, also use the physical WWPN. In the alias examples that are listed in Table 2-2, N stands for node and P for the port number, and all examples are from node 1. A node 2 example is FS9200_N2P4_CLUSTER.
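A consistent alias scheme is easier to generate than to type by hand. The following Python sketch reproduces the alias format in Table 2-2, FS9200_N<node>P<port ID>_<USE>, with an _NPIV suffix for NPIV host-attachment WWPNs. It assumes that port IDs run sequentially across quad-port cards, which is what the Table 2-2 examples imply (card 2 port 3 is P7), and it rejects NPIV aliases for anything other than host attachment, following the note above. The function name is hypothetical.

```python
USE_SUFFIX = {"storage": "STORAGE", "host": "HOST",
              "cluster": "CLUSTER", "replication": "REPLICATION"}
PORTS_PER_CARD = 4


def fs9200_alias(node: int, card: int, port: int, use: str, npiv: bool = False) -> str:
    """Build an alias such as FS9200_N1P7_REPLICATION (format from Table 2-2)."""
    if use not in USE_SUFFIX:
        raise ValueError(f"unknown use {use!r}")
    if npiv and use != "host":
        # NPIV WWPNs are for host attachment only; back-end, replication,
        # and inter-node zones use the physical WWPN.
        raise ValueError("NPIV WWPNs are used for host attachment only")
    port_id = (card - 1) * PORTS_PER_CARD + port  # assumed sequential numbering
    name = f"FS9200_N{node}P{port_id}_{USE_SUFFIX[use]}"
    return name + "_NPIV" if npiv else name


print(fs9200_alias(1, 1, 1, "host", npiv=True))  # FS9200_N1P1_HOST_NPIV
print(fs9200_alias(1, 2, 3, "replication"))      # FS9200_N1P7_REPLICATION
print(fs9200_alias(2, 1, 4, "cluster"))          # FS9200_N2P4_CLUSTER
```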

One approach is to create template zones from the host to the FlashSystem 9200. Each zone should contain one alias from the host, which must contain a single initiator, and one alias from each FlashSystem 9200 node canister (preferably the same port).

As an example, create the following aliases and zones:

•One zone alias for each FlashSystem 9200 port

•One alias for each host initiator

•One zone that contains a host initiator alias, FlashSystem 9200 port 1 from node 1, and port 1 from node 2. Name this zone HOST1_HBA1_T1_FS9200.

•(Optional) A second zone that contains another host initiator alias, FlashSystem 9200 port 3 from node 1, and port 3 from node 2. Name this zone HOST2_HBA1_T2_FS9200.

By creating template zones, you limit the number of paths on the host side to four for each volume and keep a good workload balance among the FlashSystem 9200 ports. Table 2-3 shows how the aliases are distributed if you create the template zones described in the example.

Table 2-3 Template examples

| Template | FS9200 ports on Fabric A | FS9200 ports on Fabric B |
|---|---|---|
| T1 | Node 1 port 1, Node 2 port 1 | Node 1 port 2, Node 2 port 2 |
| T2 | Node 1 port 3, Node 2 port 3 | Node 1 port 4, Node 2 port 4 |
| T3 | Node 1 port 5, Node 2 port 5 | Node 1 port 6, Node 2 port 6 |
| T4 | Node 1 port 7, Node 2 port 7 | Node 1 port 8, Node 2 port 8 |

Note: The number of templates varies depending on how many Fibre Channel ports are in your system and how many are dedicated to host access. The port numbers are examples; you can use different ports depending on the number of HBA cards. Plan accordingly.
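The template approach can be scripted so that hosts are spread across templates in rotation. The following Python sketch assumes hosts with two HBA ports (HBA1 on fabric A, HBA2 on fabric B) and the four templates in Table 2-3, where template Tn uses port 2n-1 on fabric A and port 2n on fabric B on both nodes. Zone names follow the HOST1_HBA1_T1_FS9200 pattern from the example; the member alias names (FS9200_N1P1 and so on) are simplified, hypothetical placeholders.

```python
# Template Tn: port 2n-1 on fabric A and port 2n on fabric B (Table 2-3).
TEMPLATES = {f"T{n}": {"A": 2 * n - 1, "B": 2 * n} for n in range(1, 5)}
HBA_FABRICS = (("HBA1", "A"), ("HBA2", "B"))


def template_zones(hosts, nodes=(1, 2)):
    """Assign hosts to templates round-robin and build one zone per host HBA."""
    names = list(TEMPLATES)  # T1, T2, T3, T4
    zones = {}
    for index, host in enumerate(hosts):
        template = names[index % len(names)]
        for hba, fabric in HBA_FABRICS:
            fs_port = TEMPLATES[template][fabric]
            members = [f"{host}_{hba}"] + [f"FS9200_N{n}P{fs_port}" for n in nodes]
            zones[f"{host}_{hba}_{template}_FS9200"] = (fabric, members)
    return zones


zones = template_zones(["HOST1", "HOST2", "HOST3", "HOST4", "HOST5"])
for name, (fabric, members) in zones.items():
    print(fabric, name, members)
```

Each host gets one zone per fabric with one FlashSystem port per node, which yields the four paths per volume described in the text; the fifth host wraps around to template T1.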

2.4.3 IBM FlashSystem 9200 internode communications zones

Internode (or intra-cluster) communication is critical to the stable operation of the cluster. The ports that carry internode traffic are used for mirroring write cache and metadata exchange between node canisters.

In the FlashSystem family, internode communication occurs primarily through the internal PCI connectivity between the two canisters of a control enclosure. However, for clustered IBM FlashSystem systems, the internode communication requirements are similar to those of the SAN Volume Controller.

To establish efficient, redundant, and resilient intra-cluster communication, the intra-cluster zone must contain at least two ports from each node/canister.

For FlashSystem 9200 clusters with two or more I/O groups and eight ports, isolate the intra-cluster traffic by dedicating node ports specifically to inter-node communication. The ports to be used for intra-cluster communication vary according to the port count. See Figure 2-11 for port assignment recommendations.

Note: On NPIV-enabled configurations, use the physical WWPN for the intra-cluster zoning.

Only 16 port logins are allowed from one node to another node in a SAN fabric. Ensure that you apply the proper port masking to restrict the number of port logins. For FlashSystem 9200 clusters with two or more I/O groups, without port masking, any FlashSystem 9200 port that is a member of the same zone can be used for intra-cluster communication, including the FlashSystem 9200 ports that connect to hosts and external virtualized back ends.
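The effect of port masking on the 16-login limit can be estimated by counting port pairs. The following Python sketch assumes that, within one fabric, every unmasked port on one node is zoned to every unmasked port on the other node and that each such port pair accounts for one login; the port lists are hypothetical examples that follow the odd-ports-on-fabric-A convention. Check the exact login accounting against the IBM documentation for your code level.

```python
LOGIN_LIMIT = 16  # port logins allowed from one node to another in a fabric


def node_to_node_logins(ports_a, ports_b, mask_ports=None) -> int:
    """Estimate logins from node A to node B in one fabric."""
    if mask_ports is not None:  # keep only ports enabled for inter-node traffic
        ports_a = [p for p in ports_a if p in mask_ports]
        ports_b = [p for p in ports_b if p in mask_ports]
    return len(ports_a) * len(ports_b)


fabric_a = [1, 3, 5, 7, 9, 11]  # odd port IDs of a 12-port node on fabric A

unmasked = node_to_node_logins(fabric_a, fabric_a)
masked = node_to_node_logins(fabric_a, fabric_a, mask_ports={3, 4, 9, 10})
print(unmasked, unmasked <= LOGIN_LIMIT)  # 36 False
print(masked, masked <= LOGIN_LIMIT)      # 4 True
```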

2.4.4 IBM FlashSystem 9200 host zones

The preferred practice to connect a host to a FlashSystem 9200 is to create a single zone for each host port. This zone must contain the host port and one port from each FlashSystem 9200 node canister that the host must access, as shown in Figure 2-12.

Figure 2-12 Typical host to FlashSystem 9200 zoning

This configuration provides four paths to each volume: two preferred paths (one per fabric) and two non-preferred paths. Multipathing software (such as AIX PCM, SDDDSM, and VMware NMP) and the FlashSystem 9200 are optimized to work with four paths per volume.
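As a rough check of a host zoning plan, the following Python sketch counts the preferred and non-preferred paths that a host would see to one volume. The zone names, node names, and port labels are hypothetical, and the model assumes that only ports on the nodes of the volume's I/O group provide paths to it.

```python
RECOMMENDED_PATHS = 4


def count_paths(host_zones, io_group_nodes, preferred_node):
    """Return (preferred, non_preferred) path counts for one volume.

    host_zones maps each host-port zone to the FlashSystem 9200
    (node, port) members it contains. Assumption: only ports on nodes of
    the volume's I/O group provide paths to the volume.
    """
    preferred = non_preferred = 0
    for storage_ports in host_zones.values():
        for node, _port in storage_ports:
            if node not in io_group_nodes:
                continue
            if node == preferred_node:
                preferred += 1
            else:
                non_preferred += 1
    return preferred, non_preferred


def assess(total_paths):
    """Classify a path count against the guidance in this section."""
    if total_paths < RECOMMENDED_PATHS:
        return "below minimum redundancy"
    if total_paths == RECOMMENDED_PATHS:
        return "recommended"
    return "more paths than recommended"


# Two host ports, one per fabric, each zoned to one port on each canister.
zones = {
    "host_fabricA": [("node1", "P1"), ("node2", "P1")],
    "host_fabricB": [("node1", "P2"), ("node2", "P2")],
}
preferred, non_preferred = count_paths(zones, {"node1", "node2"}, "node1")
print(preferred, non_preferred, assess(preferred + non_preferred))  # 2 2 recommended
```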

|

NPIV consideration: The recommendations in this section also apply to NPIV-enabled configurations. For a list of the systems that support NPIV, see this IBM Support web page.

|

When the recommended number of paths to a volume is exceeded, path failures are sometimes not recovered in the required amount of time. In some cases, too many paths to a volume can cause excessive I/O waits, resulting in application failures and, under certain circumstances, reduced performance.

|

Note: Eight paths per volume is also supported. However, this design provides no performance benefit and, in some circumstances, can reduce performance. It also does not significantly improve reliability or availability. Fewer than four paths, however, do not satisfy the minimum redundancy, resiliency, and performance requirements.

|

To obtain the best overall performance of the system and to prevent overloading, the workload on each FlashSystem 9200 port must be balanced. Balancing the workload typically involves zoning approximately the same number of host FC ports to each FlashSystem 9200 FC port.
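The following Python sketch shows one way to spread host ports evenly over FlashSystem 9200 ports by assigning them in rotation. It works on one fabric and one canister's ports at a time, and the port names are hypothetical. Equal port counts approximate equal workload only when hosts generate similar I/O; heavily loaded hosts might need to be weighted separately.

```python
from collections import Counter
from itertools import cycle


def assign_round_robin(host_ports, storage_ports):
    """Assign host FC ports to FlashSystem 9200 FC ports in rotation."""
    rotation = cycle(storage_ports)
    return {host_port: next(rotation) for host_port in host_ports}


def port_loads(assignment, storage_ports):
    """Number of host ports zoned to each FlashSystem 9200 port."""
    counts = Counter(assignment.values())
    return {port: counts.get(port, 0) for port in storage_ports}


# Ten host ports in one fabric, spread over four ports of one canister.
host_ports = [f"host{i}_fcs0" for i in range(10)]
storage_ports = ["node1_P1", "node1_P2", "node1_P3", "node1_P4"]
loads = port_loads(assign_round_robin(host_ports, storage_ports), storage_ports)
print(loads)  # {'node1_P1': 3, 'node1_P2': 3, 'node1_P3': 2, 'node1_P4': 2}
```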

Hosts with four or more host bus adapters

If your host has four HBAs instead of two, more planning is required. Because eight paths is not an optimal number, configure your FlashSystem 9200 host definitions (and zoning) as though the single host is two separate hosts. During volume assignment, alternate each volume between the two “pseudo-hosts.”

The reason for not assigning one HBA to each path is that the FlashSystem 9200 I/O group works as a cluster. When a volume is created, one node is assigned as the preferred node and the other node serves only as a backup node for that specific volume. Therefore, assigning one HBA to each path never balances the workload for that particular volume. It is better to balance the load by I/O group instead, so that volumes are assigned to nodes automatically.

Figure 2-13 shows an example of four-port host zoning.

Figure 2-13 Four port host zoning

Because the optimal number of volume paths is four, you must create two or more hosts on FlashSystem 9200. During volume assignment, alternate which volume is assigned to each of the “pseudo-hosts” in a round-robin fashion.

|

Note: Pseudo-hosts are not a defined function or feature of SAN Volume Controller/Storwize. To create a pseudo-host, you simply add another host ID to the SAN Volume Controller and Storwize host configuration. Instead of creating one host ID with four WWPNs, you define two hosts with two WWPNs each. Therefore, pay extra attention to the SCSI IDs that are assigned to each pseudo-host to avoid having two different volumes from the same storage subsystem with the same SCSI ID.

|
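The following Python sketch illustrates the round-robin assignment and the SCSI ID caution in the note. Host and volume names are hypothetical. A single counter supplies the SCSI IDs so that no two volumes share an ID across the pseudo-hosts of one server; the ID modeled here is the one you assign when you create each host mapping.

```python
from collections import defaultdict


def map_volumes(volumes, pseudo_hosts):
    """Alternate volumes across pseudo-hosts (round-robin).

    A single counter supplies SCSI IDs, so no two volumes share an ID even
    though they are mapped to different pseudo-hosts of the same server.
    """
    mappings = {host: {} for host in pseudo_hosts}
    for scsi_id, volume in enumerate(volumes):
        host = pseudo_hosts[scsi_id % len(pseudo_hosts)]
        mappings[host][volume] = scsi_id
    return mappings


def duplicate_scsi_ids(mappings):
    """Return the SCSI IDs that are used by more than one volume."""
    owners = defaultdict(set)
    for volumes in mappings.values():
        for volume, scsi_id in volumes.items():
            owners[scsi_id].add(volume)
    return sorted(sid for sid, vols in owners.items() if len(vols) > 1)


plan = map_volumes(["vol0", "vol1", "vol2", "vol3"], ["srv1_a", "srv1_b"])
print(plan)  # {'srv1_a': {'vol0': 0, 'vol2': 2}, 'srv1_b': {'vol1': 1, 'vol3': 3}}
print(duplicate_scsi_ids(plan))  # []
```

If each pseudo-host instead numbered its own mappings from 0, `duplicate_scsi_ids()` would report the colliding IDs, which is the situation the note warns against.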

ESX Cluster zoning

For ESX Clusters, you must create separate zones for each host node in the ESX Cluster as shown in Figure 2-14.

Figure 2-14 ESX Cluster zoning

Ensure that you apply the following preferred practices to your ESX VMware clustered hosts configuration:

•Zone a single ESX cluster so that its I/O does not traverse ISLs.

•Spread multiple host clusters evenly across the FlashSystem 9200 node ports and I/O groups.

•Create one host entity in FlashSystem 9200 for each host node and group them in a host cluster entity.

•Create a separate zone to the FlashSystem 9200 for each host node of the ESX cluster.

When you allocate a LUN or volume to a clustered system, use a host cluster on FlashSystem 9200. By doing so, all hosts in the cluster see the same SCSI ID for every volume, which avoids outages that are caused by SCSI ID mismatches.
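To show why the host cluster matters, the following Python sketch detects volumes that are mapped with different SCSI IDs on different ESX hosts, and models a host-cluster style mapping as one shared volume-to-ID table applied to every host. Host and volume names are hypothetical, and the functions are illustrative, not a product API.

```python
from collections import defaultdict


def inconsistent_scsi_ids(host_mappings):
    """Return volumes whose SCSI ID differs between hosts of a cluster.

    host_mappings: {host: {volume: scsi_id}}
    """
    ids = defaultdict(set)
    for volumes in host_mappings.values():
        for volume, scsi_id in volumes.items():
            ids[volume].add(scsi_id)
    return sorted(volume for volume, seen in ids.items() if len(seen) > 1)


def map_to_host_cluster(volumes, hosts):
    """Model of a host-cluster mapping: one shared ID table for all hosts."""
    shared = {volume: scsi_id for scsi_id, volume in enumerate(volumes)}
    return {host: dict(shared) for host in hosts}


# Volumes mapped host by host, with one ID assigned differently on esx02.
per_host = {
    "esx01": {"datastore1": 0, "datastore2": 1},
    "esx02": {"datastore1": 0, "datastore2": 2},
}
print(inconsistent_scsi_ids(per_host))  # ['datastore2']
clustered = map_to_host_cluster(["datastore1", "datastore2"], ["esx01", "esx02"])
print(inconsistent_scsi_ids(clustered))  # []
```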

AIX VIOs: LPM zoning

When zoning IBM AIX® VIOs to IBM FlashSystem 9200, plan carefully. Because this configuration is complex, it is common to create more than four paths to each volume or to fail to provide proper redundancy. The following preferred practices can help you avoid degraded path errors on IBM Spectrum Virtualize/Storwize while keeping four paths per volume:

•Create two separate and isolated zones on each fabric for each LPAR.

•Do not put the active and inactive LPAR WWPNs in the same zone or in the same IBM FlashSystem 9200 host definition.

•Map LUNs to the virtual host FC HBA port WWPNs, not to the physical host FC adapter WWPN.

•When using NPIV, generally do not exceed a ratio of eight virtual ports to one physical adapter. This configuration avoids I/O bandwidth oversubscription of the physical adapters.

•Create a pseudo-host in the IBM Spectrum Virtualize/FlashSystem host definitions that contains only two virtual WWPNs, one from each fabric, as shown in Figure 2-15.

Figure 2-15 shows a correct SAN connection and zoning for LPARs.

Figure 2-15 LPARs SAN connections

During Live Partition Mobility (LPM), both the inactive and the active ports are active. When LPM is complete, the previously active ports show as inactive and the previously inactive ports show as active.

Figure 2-16 shows Live Partition Mobility from one hypervisor frame to another frame.

Figure 2-16 Live Partition Mobility

|

Note: During LPM, the number of paths doubles from 4 to 8. Starting with eight paths per LUN or volume results in an unsupported 16 paths during LPM, which can lead to I/O interruption.

|
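The path arithmetic for LPM, together with the NPIV ratio guideline from the list above, can be checked with the following Python sketch. Treating eight paths as the upper bound during LPM is an inference from this section (four paths doubling to eight is the expected case, and sixteen is unsupported); the function names are hypothetical.

```python
MAX_PATHS_DURING_LPM = 8           # inferred: 4 -> 8 is expected, 16 is unsupported
MAX_VIRTUAL_PORTS_PER_ADAPTER = 8  # NPIV guideline from the list above


def paths_during_lpm(steady_state_paths):
    """During LPM both the active and inactive WWPNs log in, doubling the paths."""
    return 2 * steady_state_paths


def lpm_safe(steady_state_paths):
    """True if the doubled path count stays within the inferred limit."""
    return paths_during_lpm(steady_state_paths) <= MAX_PATHS_DURING_LPM


def npiv_ratio_ok(virtual_ports, physical_adapters):
    """True if there are no more than eight virtual ports per physical adapter."""
    return virtual_ports <= MAX_VIRTUAL_PORTS_PER_ADAPTER * physical_adapters


for paths in (4, 8):
    print(paths, "->", paths_during_lpm(paths), "safe" if lpm_safe(paths) else "unsupported")
# 4 -> 8 safe
# 8 -> 16 unsupported
```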

2.5 Distance extension for Remote Copy services

To implement Remote Copy services over distance, the following options are available:

•Optical multiplexors, such as Dense Wavelength Division Multiplexing (DWDM) or Coarse Wavelength Division Multiplexing (CWDM) devices

•Long-distance SFPs and XFPs

•FC-to-IP conversion boxes

•Native IP-based replication with Spectrum Virtualize code

Of these options, the optical varieties of distance extension are preferred. IP distance extension introduces more complexity, is less reliable, and has performance limitations. However, optical distance extension is impractical in many cases because of cost or unavailability.

2.5.1 Optical multiplexors

Optical multiplexors can extend your SAN up to hundreds of kilometers at high speeds. For this reason, they are the preferred method for long-distance expansion. When you are deploying optical multiplexing, make sure that the optical multiplexor is certified to work with your SAN switch model. The FlashSystem 9200 is not tied to a particular model of optical multiplexor.

If you use multiplexor-based distance extension, closely monitor your physical link error counts in your switches. Optical communication devices are high-precision units. When they shift out of calibration, you start to see errors in your frames.

2.5.2 Long-distance SFPs or XFPs

Long-distance optical transceivers have the advantage of extreme simplicity. Although no expensive equipment is required, a few configuration steps are necessary. Use only transceivers that are designed for your particular SAN switch. Each switch vendor supports only a specific set of small form-factor pluggable (SFP) or Ten Gigabit Small Form Factor Pluggable (XFP) transceivers, so it is unlikely that Cisco SFPs will work in a Brocade switch.

2.5.3 Fibre Channel over IP

Fibre Channel over IP (FCIP) conversion is by far the most common and least expensive form of distance extension. FCIP is a technology that allows FC routing to be implemented over long distances by using the TCP/IP protocol. In most cases, FCIP is implemented in Disaster Recovery scenarios with some kind of data replication between the primary and secondary site.

FCIP is a tunneling technology, which means that FC frames are encapsulated in TCP/IP packets. As such, it is transparent to devices that are connected through the FCIP link. To use FCIP, you need a tunneling device on both sides of the TCP/IP link that integrates FC and Ethernet connectivity. Most SAN vendors offer FCIP capability through stand-alone devices (multiprotocol routers) or through blades that are integrated in director-class products. FlashSystem 9200 supports FCIP connections.

An important aspect of the FCIP scenario is the IP link quality. With IP-based distance extension, you must dedicate bandwidth to your FC-to-IP traffic if the link is shared with other IP traffic. Even if the link between the two sites is low-traffic or used only for email, do not assume that this will always be the only type of traffic. FC is sensitive to congestion, and you do not want a spyware problem or a DDoS attack on an IP network to disrupt your FlashSystem 9200.

Also, when you are communicating with your organization’s networking architects, distinguish between megabytes per second (MBps) and megabits per second (Mbps). In the storage world, bandwidth is often specified in MBps, but network engineers specify bandwidth in Mbps. If you fail to specify MBps, you can end up with an impressive-sounding 155 Mbps OC-3 link, which supplies only approximately 15 MBps to your FlashSystem 9200. After you include safety margins, this link is not as fast as you might hope; therefore, ensure that the terminology is correct.
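The conversion can be sketched in Python as follows. The 80% efficiency factor is an assumption chosen for illustration to approximate protocol overhead; it is not an IBM or carrier figure, so substitute a value measured on your own link.

```python
def mbits_to_mbytes(mbits_per_s):
    """Megabits per second to megabytes per second (8 bits per byte)."""
    return mbits_per_s / 8


def usable_mbytes(link_mbits_per_s, efficiency):
    """Raw conversion reduced by an assumed protocol-overhead efficiency factor."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return mbits_to_mbytes(link_mbits_per_s) * efficiency


oc3 = 155  # Mbps, as quoted by the network team
print(mbits_to_mbytes(oc3))     # 19.375 (MBps, raw)
print(usable_mbytes(oc3, 0.8))  # about 15.5 MBps with the assumed 80% efficiency
```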

Consider the following steps when you are planning for your FCIP TCP/IP links:

•For redundancy purposes, use as many TCP/IP links between sites as you have fabrics in each site that you want to connect. In most cases, there are two SAN FC fabrics in each site, so you need two TCP/IP connections between sites.

•Try to dedicate TCP/IP links only for storage interconnection. Separate them from other LAN/WAN traffic.

•Make sure that you have a service level agreement (SLA) with your TCP/IP link vendor that meets your needs and expectations.

•If you do not use Global Mirror with Change Volumes (GMCV), make sure that you have sized your TCP/IP link to sustain peak workloads.

•FlashSystem 9200 internal Global Mirror (GM) simulation options can help you test your applications before production implementation. You can simulate the GM environment within one FlashSystem 9200 system without a partnership with another system. Use the chsystem command with the following parameters to perform GM testing:

– gminterdelaysimulation

– gmintradelaysimulation

For more information about GM planning, see Chapter 6, “IBM FlashCopy services capabilities” on page 243.

•If you are not sure about your TCP/IP link security, enable Internet Protocol Security (IPSec) on all FCIP devices. On Brocade devices, IPSec is enabled at the Fabric OS level, so you do not need any external IPSec appliances.
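For the peak-workload sizing point, the following Python sketch estimates the link capacity that is needed to carry the peak replicated write rate. It assumes that Global Mirror must replicate every host write and that you have write-rate samples (in MBps) taken at an interval short enough to capture peaks. The 80% link efficiency and 25% headroom are illustrative assumptions to replace with your own values.

```python
def required_link_mbits(write_mbytes_samples, efficiency=0.8, headroom=0.25):
    """Link capacity (Mbps) needed to carry the peak replicated write rate.

    efficiency and headroom are illustrative assumptions, not IBM figures.
    """
    if not write_mbytes_samples:
        raise ValueError("at least one write-rate sample is required")
    peak_mbytes = max(write_mbytes_samples)
    return peak_mbytes * 8 / efficiency * (1 + headroom)


# Hypothetical per-interval host write rates (MBps) at the primary site.
samples = [12.0, 38.5, 40.0, 22.3]
print(round(required_link_mbits(samples)))  # 500
```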

In addition to planning for your TCP/IP link, consider adhering to the following preferred practices:

•Set the link bandwidth and background copy rate of the partnership between your replicating FlashSystem 9200 systems to values lower than your TCP/IP link capacity. Failing to set these values can cause an unstable TCP/IP tunnel, which can lead to stopping all of your Remote Copy relationships that use that tunnel.

•Where possible, use GMCV when replication is done over long distances.

•Use compression on corresponding FCIP devices.

•Use at least two ISLs from your local FC switch to local FCIP router.

•On a Brocade SAN, use the Integrated Routing feature to avoid merging fabrics from both sites.
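The following Python sketch derives partnership settings that stay below the physical link capacity. The 10% safety margin and the 50% background copy rate are illustrative assumptions, and the model treats the background copy rate as a percentage of the partnership link bandwidth. On recent code levels, these values correspond to the partnership's `-linkbandwidthmbits` and `-backgroundcopyrate` parameters (for example, of `mkfcpartnership` or `chpartnership`); verify the parameter names against the CLI reference for your code level.

```python
def partnership_settings(link_capacity_mbits, safety_margin=0.1, background_copy_pct=50):
    """Choose a partnership link bandwidth below the physical link capacity.

    background_copy_pct is treated as the share of the partnership bandwidth
    that background copy may use (an assumption to verify for your code level).
    """
    if not 0 < safety_margin < 1:
        raise ValueError("safety_margin must be between 0 and 1")
    if not 0 <= background_copy_pct <= 100:
        raise ValueError("background_copy_pct must be between 0 and 100")
    link_bandwidth = int(link_capacity_mbits * (1 - safety_margin))
    return {
        "link_bandwidth_mbits": link_bandwidth,
        "background_copy_rate_pct": background_copy_pct,
        "background_copy_mbits": link_bandwidth * background_copy_pct / 100,
    }


print(partnership_settings(1000))
# {'link_bandwidth_mbits': 900, 'background_copy_rate_pct': 50, 'background_copy_mbits': 450.0}
```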

For more information about FCIP, see IBM/Cisco Multiprotocol Routing: An Introduction and Implementation, SG24-7543.

2.5.4 SAN extension with Business Continuity configurations

FlashSystem 9200 HyperSwap technology provides Business Continuity solutions over metropolitan areas with distances up to 300 km. These solutions are usually achieved by using SAN extension over WDM technology, with multiple WDMs and physical links implemented to avoid single points of failure. When implementing these solutions, pay particular attention to the setup of the intra-cluster connectivity between the sites.

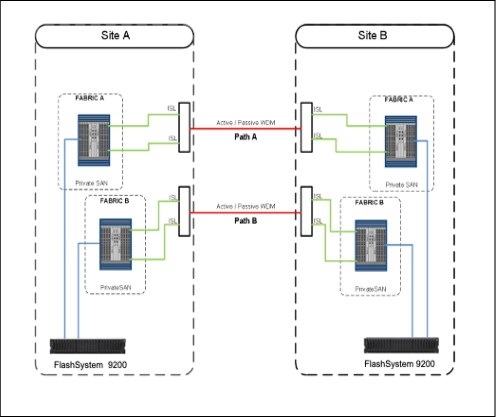

In this configuration, the intra-cluster communication is isolated in a Private SAN that interconnects Site A and Site B through a SAN extension infrastructure consisting of two DWDMs. Assume that, for redundancy reasons, two ISLs are used for each fabric for the Private SAN extension.

Two possible configurations to interconnect the Private SANs are as follows:

•In Configuration 1, shown in Figure 2-17, one ISL per fabric is attached to each DWDM. In this case, the physical paths Path A and Path B are used to extend both fabrics.

Figure 2-17 Configuration 1: Physical Paths Shared Among Fabrics

•In Configuration 2, shown in Figure 2-18, ISLs of fabric A are attached only to Path A, while ISLs of fabric B are attached only to Path B. In this case, the physical paths are not shared between the fabrics.

Figure 2-18 Configuration 2: physical paths not shared among the fabrics

With Configuration 1, if one of the physical paths fails, both fabrics are affected simultaneously, and a fabric reconfiguration occurs because of the ISL loss. This situation can lead to a temporary disruption of the intra-cluster communication and, in the worst case, to a split-brain condition. To mitigate this situation, link aggregation features such as Brocade ISL trunking can be implemented.

With Configuration 2, a physical path failure leads to a fabric segmentation of one of the two fabrics, leaving the other fabric unaffected. In this case, the intra-cluster communication would be guaranteed through the unaffected fabric.

In summary, fully understand the implications of losing a physical path or a DWDM in the SAN extension infrastructure, and implement an architecture that avoids affecting both fabrics simultaneously.
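The difference between the two configurations can be modeled with the following Python sketch, which lists the fabrics that lose ISLs and the fabrics that are segmented when one physical path fails. The ISL layouts mirror Figures 2-17 and 2-18 with two ISLs per fabric; the model is a simplification that ignores trunking and other mitigations.

```python
# Hypothetical ISL layouts for the Private SAN: (fabric, physical_path) per ISL.
CONFIG_1 = [("A", "PathA"), ("A", "PathB"), ("B", "PathA"), ("B", "PathB")]
CONFIG_2 = [("A", "PathA"), ("A", "PathA"), ("B", "PathB"), ("B", "PathB")]


def fabrics_losing_isls(isls, failed_path):
    """Fabrics that lose at least one ISL (and so go through a reconfiguration)."""
    return sorted({fabric for fabric, path in isls if path == failed_path})


def fabrics_segmented(isls, failed_path):
    """Fabrics that lose every ISL between the sites."""
    all_fabrics = {fabric for fabric, _ in isls}
    surviving = {fabric for fabric, path in isls if path != failed_path}
    return sorted(all_fabrics - surviving)


for name, isls in (("Configuration 1", CONFIG_1), ("Configuration 2", CONFIG_2)):
    print(name, fabrics_losing_isls(isls, "PathA"), fabrics_segmented(isls, "PathA"))
# Configuration 1 ['A', 'B'] []
# Configuration 2 ['A'] ['A']
```

In Configuration 1, one path failure disturbs both fabrics at once; in Configuration 2, it segments only fabric A and leaves fabric B untouched for intra-cluster traffic.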

2.5.5 Native IP replication

To enable native IP replication, FlashSystem 9200 implements the Bridgeworks SANSlide network optimization technology. For more information about this solution, see IBM SAN Volume Controller and Storwize Family Native IP Replication, REDP-5103.

It is possible to implement native IP-based replication on the FlashSystem 9200. Native means the FlashSystem 9200 does not need any FCIP routers to create a replication partnership. This partnership is based on the Internet Protocol network (IP) instead of the Fibre Channel (FC) network.

The main design point for the initial SANSlide implementation and subsequent enhancements, including the addition of replication compression, is to reduce link utilization so that the links can run closer to their respective line speed at distance and over poor-quality links. IP replication compression does not significantly increase the effective bandwidth of the links beyond their physical line speed.

If you require bandwidth that exceeds the line speed of the physical links, consider alternative technologies such as FCIP, where compression is done in the tunnel and often yields an increase in effective bandwidth of 2:1 or more.

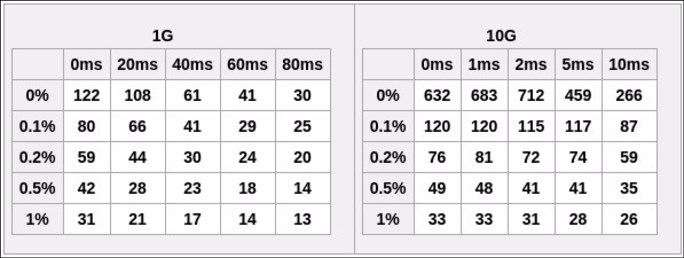

It is important to understand that the effective bandwidth of an IP link is highly dependent on latency and the quality of the link in terms of the rate of packet loss. Even a small amount of packet loss and resulting retransmits will significantly degrade the bandwidth of the link.

Figure 2-19 shows the effect of distance and packet loss on the effective bandwidth of the links in MBps. The numbers reflect the pre-compression data rate with compression on and 50% compressible data. These numbers are as tested and can vary depending on specific link and data characteristics.

Figure 2-19 Effect of distance on packet loss

|

Notes: Consider the following points:

•The maximum bandwidth for a typical IP replication configuration that consists of two 1-Gb links is approximately 244 MBps at zero latency and zero packet loss.

•When two links are used, replication performs at twice the speed of the lower performing link. For example, the maximum combined data rate for two 1 Gb IP links at 0 latency and 0% packet loss on link A and 0.1% packet loss on link B is 160 MBps.

•10 Gb links should not be used with latencies beyond 10 ms. Beyond 10 ms, a 1 Gb link begins to outperform a 10 Gb link.

•The FlashSystem 9200 supports volume compression. However, replication runs above volume compression in the IBM Spectrum Virtualize software stack, which means that volumes are replicated at their full uncompressed capacity. This behavior differs from some storage systems, such as the IBM XIV, where replication runs below volume compression and therefore replicates the compressed capacity of the volumes. This difference must be considered when sizing workloads that are moving from one storage system technology to another.

|
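The two-link rule and the uncompressed-capacity point can be sketched in Python as follows. The per-link rates are illustrative: 122 MBps is half of the approximate 244 MBps two-link maximum, and 80 MBps is the slower-link rate implied by the 160 MBps example. The volume sizes are hypothetical.

```python
def combined_replication_rate(link_a_mbytes, link_b_mbytes):
    """Per the notes above: two links run at twice the rate of the slower link."""
    return 2 * min(link_a_mbytes, link_b_mbytes)


def replicated_capacity_gib(volumes):
    """Replication runs above volume compression, so sum uncompressed sizes."""
    return sum(volume["uncompressed_gib"] for volume in volumes)


print(combined_replication_rate(122, 122))  # 244
print(combined_replication_rate(122, 80))   # 160
vols = [{"uncompressed_gib": 500, "compressed_gib": 200},
        {"uncompressed_gib": 300, "compressed_gib": 150}]
print(replicated_capacity_gib(vols))  # 800, not the 350 GiB stored after compression
```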

2.6 Tape and disk traffic that share the SAN

If you have free ports on your core switch, you can place tape devices (and their associated backup servers) on the FlashSystem 9200 SAN. However, do not put tape and disk traffic on the same FC HBA.

Modern tape devices have high bandwidth requirements. To avoid ISL congestion on your SAN, do not place tape ports and their backup servers on different switches.

During your backup SAN configuration, use switch virtualization (for example, Brocade Virtual Fabrics or Cisco VSANs) to separate the traffic types. Backup traffic has a different frame pattern than production traffic and can affect production performance.

Backup jobs often use all available network resources to finish writing to their destination target. Until a job is finished, the bandwidth is occupied and other frames cannot access the network.

Figure 2-20 shows the difference between these two types of frames.

Figure 2-20 Access methods - FC Frames

Backup frames write data sequentially (the path is released only after writing is done), while production frames read and write data randomly, with reads and writes constantly occurring on the same physical path. If backup and production share the same environment, production frames (reads and writes) can run only when backup frames are complete, which causes latency on your production SAN.

Figure 2-21 shows one example of a backup and production SAN configuration to avoid congestion that is caused by high bandwidth use from the backup process.

Figure 2-21 Production and Backup Fabric

2.7 Switch interoperability

|

Note: For more information about interoperability, see IBM System Storage Interoperation Center (SSIC).

|

FlashSystem 9200 is flexible regarding switch vendors. All of the node canister connections on a specific FlashSystem 9200 single or clustered system must go to the switches of a single vendor. That is, you must not have several nodes or node ports plugged into vendor A and several nodes or node ports plugged into vendor B.
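A simple way to check the single-vendor rule against a cabling inventory is sketched below in Python. The inventory format, switch names, and vendor names are hypothetical.

```python
def vendors_in_use(port_to_switch, switch_vendor):
    """Vendors of the switches that the system's node canister ports connect to."""
    return {switch_vendor[switch] for switch in port_to_switch.values()}


def single_vendor(port_to_switch, switch_vendor):
    """True if every node canister port connects to switches of one vendor."""
    return len(vendors_in_use(port_to_switch, switch_vendor)) == 1


switch_vendor = {"swA1": "VendorA", "swB1": "VendorA", "swB2": "VendorB"}
ok = {"node1_P1": "swA1", "node1_P2": "swB1", "node2_P1": "swA1", "node2_P2": "swB1"}
mixed = dict(ok, node2_P2="swB2")
print(single_vendor(ok, switch_vendor), single_vendor(mixed, switch_vendor))  # True False
```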

FlashSystem 9200 supports combinations of SANs that are made up of switches from multiple vendors in the same SAN. However, this approach is not preferred in practice. Despite years of effort, interoperability among switch vendors is less than ideal because FC standards are not rigorously enforced. Interoperability problems between switch vendors are notoriously difficult and disruptive to isolate.

Also, it can take a long time to obtain a fix. For these reasons, run multiple switch vendors in the same SAN only long enough to migrate from one vendor to another, if this setup is possible with your hardware.

You can run a mixed-vendor SAN if you have agreement from both switch vendors that they fully support attachment with each other.

Interoperability between Cisco switches and Brocade switches is not recommended, except during fabric migrations, and then only if you have a back-out plan in place. Also, when connecting BladeCenter switches to a core switch, consider the use of the N-Port ID Virtualization (NPIV) technology.

When you have SAN fabrics with multiple vendors, pay special attention to any particular requirements. For example, observe from which switch in the fabric the zoning must be performed.
