Planning storage pools

This chapter describes considerations for planning storage pools for an IBM FlashSystem implementation. It explains various pool configuration options, including Easy Tier and data reduction pools (DRPs). It also provides best practices on implementation and an overview of some typical operations with MDisks.

This chapter includes the following topics:

4.1 Introduction to pools

In general, a storage pool or pool, sometimes referred to as a managed disk group, is a grouping of storage capacity that is used to provision volumes and logical units (LUNs) that can subsequently be made visible to hosts.

IBM FlashSystem supports the following types of pools:

•Standard pools: Parent pools and child pools

•DRPs: Parent pools and quotaless child pools

Standard pools have been available since the initial release of IBM Spectrum Virtualize in 2003 and can include fully allocated or thin-provisioned volumes.

Real-time Compression (RtC) is allowed only with standard pools on some older IBM SAN Volume Controller hardware models and should not be implemented in new configurations.

Note: The latest node hardware does not support RtC. SA2 and SV2 IBM SAN Volume Controller node hardware does not support the use of RtC volumes. To migrate a system to use these node types, all RtC volumes must first be migrated to uncompressed standard pool volumes or into a DRP.

IBM FlashSystem systems that use standard pools cannot be configured to use RtC.

DRPs represent a significant enhancement to the storage pool concept. Until now, the virtualization layer was primarily a simple layer that performed lookups between virtual and physical extents. With the introduction of data reduction technology (compression and deduplication), an uncomplicated way to stay thin has become more of a requirement.

DRPs increase infrastructure capacity usage by employing new efficiency functions, which reduces storage costs. The pools enable you to automatically de-allocate (not to be confused with deduplicate) and reclaim the capacity of thin-provisioned volumes that contain deleted data. In addition, for the first time, the pools enable this reclaimed capacity to be reused by other volumes.

Either pool type can be made up of different tiers. A tier defines a performance characteristic of that subset of capacity in the pool. Often, no more than three tier types are defined in a pool (fastest, average, and slowest). The tiers and their usage are managed automatically by the Easy Tier function.

4.1.1 Standard pool

Standard pools (also referred to as traditional storage pools) provide storage in IBM FlashSystem by using a fixed allocation unit, the extent. Standard pools are still a valid method of providing capacity to hosts. For more information about guidelines for implementing standard pools, see 4.2, “Storage pool planning considerations” on page 132.

IBM FlashSystem can define parent and child pools. A parent pool has all the capabilities and functions of a normal IBM FlashSystem pool. A child pool is a logical subdivision of a storage pool or managed disk group. Like a parent pool, a child pool supports volume creation and migration.

When you create a child pool in a standard parent pool, you must specify a capacity limit for the child pool. This limit allows for a quota of capacity to be allocated to the child pool. This capacity is reserved for the child pool and is deducted from the available capacity in the parent pool. This process differs from the way in which child pools are implemented in a DRP. For more information, see “Quotaless data reduction child pool” on page 118.

A child pool inherits its tier setting from the parent pool. Changes to a parent’s tier setting are inherited by child pools.

A child pool supports the Easy Tier function if Easy Tier is enabled on the parent pool. The child pool also inherits Easy Tier status, pool status, capacity information, and back-end storage information. The I/O activity of a parent pool is the sum of its own I/O activity and that of its child pools.

Parent pools

Parent pools receive their capacity from MDisks. To track the space that is available on an MDisk, the system divides each MDisk into chunks of equal size. These chunks are called extents and are indexed internally. The choice of extent size affects the total amount of storage that is managed by the system. The extent size remains constant throughout the lifetime of the parent pool.

All MDisks in a pool are split into extents of the same size. Volumes are created from the extents that are available in the pool. You can add MDisks to a pool at any time to increase the number of extents that are available for new volume copies or to expand volume copies. The system automatically balances volume extents between the MDisks to provide the best performance to the volumes.

You cannot use the volume migration functions to migrate volumes between parent pools that feature different extent sizes. However, you can use volume mirroring to move data to a parent pool that has a different extent size.

Choose the extent size wisely according to your future needs. A small extent size limits your overall usable capacity, but a larger extent size can waste storage. For example, if you select an extent size of 8 GiB but then create only a 6 GiB volume, one entire extent (8 GiB) is allocated to this volume and 2 GiB are unused.
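The rounding effect in this example can be sketched in a few lines of Python. This is only an illustration of the arithmetic, assuming that a volume always consumes a whole number of extents; the function names are hypothetical and are not part of any IBM tool.

```python
GIB = 1024 ** 3

def allocated_bytes(volume_bytes: int, extent_bytes: int) -> int:
    """Capacity taken from the pool when a volume is rounded up to whole extents."""
    extents = -(-volume_bytes // extent_bytes)  # integer ceiling division
    return extents * extent_bytes

def unused_bytes(volume_bytes: int, extent_bytes: int) -> int:
    """Capacity that is allocated to the volume but lies beyond its size."""
    return allocated_bytes(volume_bytes, extent_bytes) - volume_bytes

# The example from the text: a 6 GiB volume in a pool with 8 GiB extents.
print(unused_bytes(6 * GIB, 8 * GIB) // GIB)  # 2
```

With a 1 GiB extent size, the same 6 GiB volume would leave no unused capacity, which is the trade-off to weigh against the larger manageable capacity that bigger extents allow.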

When you create or manage a parent pool, consider the following general guidelines:

•Ensure that all MDisks that are allocated to the same tier of a parent pool are the same RAID type. This configuration ensures that the same resiliency is maintained across that tier. Similarly, for performance reasons, do not mix RAID types within a tier. The performance of all volumes is reduced to the lowest achiever in the tier and a mismatch of tier members can result in I/O convoying effects where everything is waiting on the slowest member.

•An MDisk can be associated with only one parent pool.

•You should specify a warning capacity for a pool. A warning event is generated when the amount of space that is used in the pool exceeds the warning capacity. The warning threshold is especially useful with thin-provisioned volumes that are configured to automatically use space from the pool.

•Volumes are associated with just one pool, except for the duration of any migration between parent pools.

•Volumes that are allocated from a parent pool are by default striped across all the storage that is placed into that parent pool. Wide striping can provide performance benefits.

•You can only add MDisks that are in unmanaged mode to a parent pool. When MDisks are added to a parent pool, their mode changes from unmanaged to managed.

•You can delete MDisks from a parent pool under the following conditions:

– Volumes are not using any of the extents that are on the MDisk.

– Enough free extents are available elsewhere in the pool to move extents that are in use from this MDisk.

– The system ensures that all extents that are used by volumes in the child pool are migrated to other MDisks in the parent pool to ensure that data is not lost.

Important: Before you remove MDisks from a parent pool, ensure that the parent pool has enough capacity for child pools that are associated with the parent pool.

•If the parent pool is deleted, you cannot recover the mapping that existed between extents that are in the pool or the extents that the volumes use. If the parent pool includes associated child pools, you must delete the child pools first and return their extents to the parent pool. After the child pools are deleted, you can delete the parent pool. The MDisks that were in the parent pool are returned to unmanaged mode and can be added to other parent pools. Because the deletion of a parent pool can cause a loss of data, you must force the deletion if volumes are associated with it.

Note: Deleting a child or parent pool is unrecoverable. If you force-delete a pool, all volumes in that pool are deleted, even if they are mapped to a host and are still in use. Use extreme caution when force-deleting pool objects because volume-to-extent mapping cannot be recovered after the delete is processed. Force-deleting a storage pool is possible only with the command-line tools. See the rmmdiskgrp command help for details.

•When you delete a pool with mirrored volumes, consider the following points:

– If the volume is mirrored and the synchronized copies of the volume are all in the same pool, the mirrored volume is destroyed when the storage pool is deleted.

– If the volume is mirrored and a synchronized copy exists in a different pool, the volume remains after the pool is deleted.

You might not be able to delete a pool or child pool if Volume Delete Protection is enabled. In code versions 8.3.1 and later, Volume Delete Protection is enabled by default. However, the granularity of protection is improved; you can now specify Volume Delete Protection to be enabled or disabled on a per-pool basis, rather than on a system basis as was previously the case.

Child pools

Instead of being created directly from MDisks, child pools are created from existing capacity that is allocated to a parent pool. As with parent pools, volumes can be created that specifically use the capacity that is allocated to the child pool. Child pools have properties similar to those of parent pools and can be used for volume copy operations.

Child pools are created with fully-allocated physical capacity; that is, the physical capacity that is applied to the child pool is reserved from the parent pool, as though you created a fully-allocated volume of the same size in the parent pool.

The allocated capacity of the child pool must be smaller than the free capacity that is available to the parent pool. The allocated capacity of the child pool is no longer reported as free space of its parent pool. Instead, the parent pool reports the entire child pool as used capacity. Therefore, you must monitor the used capacity of the child pool itself rather than its free capacity.
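The accounting that is described here can be modeled with a small Python sketch. It is a simplified illustration only: the class and attribute names are hypothetical, capacities are whole GiB, and extent rounding is ignored.

```python
from dataclasses import dataclass, field

@dataclass
class ParentPool:
    capacity_gib: int
    volume_used_gib: int = 0                        # used by volumes in the parent itself
    child_quota_gib: dict = field(default_factory=dict)

    @property
    def used_gib(self) -> int:
        # The parent reports each child pool's full quota as used capacity.
        return self.volume_used_gib + sum(self.child_quota_gib.values())

    @property
    def free_gib(self) -> int:
        return self.capacity_gib - self.used_gib

    def create_child(self, name: str, quota_gib: int) -> None:
        # The child quota must be smaller than the parent's free capacity.
        if quota_gib >= self.free_gib:
            raise ValueError("child pool quota must be smaller than parent free capacity")
        self.child_quota_gib[name] = quota_gib

parent = ParentPool(capacity_gib=100, volume_used_gib=20)
parent.create_child("child0", 30)
print(parent.used_gib, parent.free_gib)  # 50 50
```

In this model the parent shows 50 GiB used even if no volume exists in child0 yet, which is why the used capacity inside the child pool is the figure to watch.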

When you create or work with a child pool, consider the following general guidelines:

•Child pools are created automatically by the IBM Spectrum Connect VASA client to implement VMware vVols.

•As with parent pools, you can specify a warning threshold that alerts you when the capacity of the child pool is reaching its upper limit. Use this threshold to ensure that access is not lost when the capacity of the child pool is close to its allocated capacity.

•On systems with encryption enabled, child pools can be created to migrate existing volumes in a non-encrypted pool to encrypted child pools. When you create a child pool after encryption is enabled, an encryption key is created for the child pool even when the parent pool is not encrypted. You can then use volume mirroring to migrate the volumes from the non-encrypted parent pool to the encrypted child pool.

•Ensure that any child pools that are associated with a parent pool have enough capacity for the volumes that are in the child pool before removing MDisks from a parent pool. The system automatically migrates all extents that are used by volumes to other MDisks in the parent pool to ensure data is not lost.

•You cannot shrink the capacity of a child pool to less than its real capacity. The system uses reserved extents from the parent pool that use multiple extents. The system also resets the warning level when the child pool is shrunk, and issues a warning if the level is reached when the capacity is shrunk.

•The system supports migrating a copy of volumes between child pools within the same parent pool or migrating a copy of a volume between a child pool and its parent pool. Migrations between a source and target child pool with different parent pools are not supported. However, you can migrate a copy of the volume from the source child pool to its parent pool. The volume copy can then be migrated from the parent pool to the parent pool of the target child pool. Finally, the volume copy can be migrated from the target parent pool to the target child pool.

•Migrating a volume between parent and child pool (with the same encryption key or no encryption) results in a nocopy migration. That is, the data does not move. Instead, the extents are re-allocated to the child or parent pool and the accounting of the used space is corrected. That is, the free extents are reallocated to the child or parent to ensure the total capacity allocated to the child pool remains unchanged.

•A special form of quotaless data reduction child pool can be created from a data reduction parent pool. For more information, see “Quotaless data reduction child pool” on page 118.
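The migration rule for child pools in the guidelines above can be expressed as a short Python sketch that returns the sequence of pools a volume copy passes through. The function name and the parent_of mapping are hypothetical, and the sketch deliberately ignores other constraints, such as the requirement that parent pools have the same extent size for volume migration.

```python
def migration_path(source: str, target: str, parent_of: dict) -> list:
    """Return the pools a volume copy visits, in order.

    parent_of maps each child pool name to its parent pool name.
    Pools that are not keys in parent_of are treated as parent pools.
    """
    if source == target:
        return [source]
    src_parent = parent_of.get(source, source)
    dst_parent = parent_of.get(target, target)
    if src_parent == dst_parent:
        # Same parent (child to child, or child to or from its parent): direct.
        return [source, target]
    path = [source]
    if source != src_parent:
        path.append(src_parent)      # step 1: child to its own parent
    if target != dst_parent:
        path.append(dst_parent)      # step 2: parent to the target's parent
    path.append(target)              # final step: into the target pool
    return path

parent_of = {"childA": "P1", "childB": "P1", "childC": "P2"}
print(migration_path("childA", "childB", parent_of))  # ['childA', 'childB']
print(migration_path("childA", "childC", parent_of))  # ['childA', 'P1', 'P2', 'childC']
```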

Small Computer System Interface unmap in a standard pool

A standard pool can use Small Computer System Interface (SCSI) unmap space reclamation, but not as efficiently as a DRP.

When a host submits a SCSI unmap command to a volume in a standard pool, the system changes the unmap command into a write_same command of zeros. This unmap command becomes an internal special command and can be handled accordingly by different layers in the system.

For example, the cache does not mirror the data; instead, it passes the special reference to zeros. The RtC functions reclaim those areas (assuming 32 KB or larger) and shrink the volume allocation.

The back-end layers also submit the write_same command of zeros to the internal or external MDisk devices. For a Flash or SSD-based MDisk, this process results in the device freeing the capacity back to its available space. Therefore, it shrinks the used capacity on Flash or SSD, which helps to improve performance and the efficiency of garbage collection on the device. The process of reclaiming space is called garbage collection.

For Nearline SAS drives, the write_same of zeros commands can overload the drives themselves, which can result in performance problems.

Important: A standard pool does not shrink its used space as the result of a SCSI unmap command. The backend capacity might shrink its used space, but the pool used capacity does not shrink. The exception is with RtC volumes, where the reused capacity of the volume might shrink; however, the pool allocation to that RtC volume remains unchanged. This means that an RtC volume can reuse that unmapped space first before requesting more capacity from the thin provisioning code.

Thin-provisioned volumes in a standard pool

A thin-provisioned volume presents a different capacity to mapped hosts than the capacity that the volume uses in the storage pool. IBM FlashSystem supports thin-provisioned volumes in standard pools.

Note: Although DRPs fundamentally support thin-provisioned volumes, they are intended to be used in conjunction with compression and deduplication. With DRPs, you should avoid the use of thin-provisioned volumes without additional data reduction.

In standard pools, thin-provisioned volumes are created as a specific volume type; that is, based on capacity-savings criteria. These properties are managed at the volume level. The virtual capacity of a thin-provisioned volume is typically significantly larger than its real capacity. Each system uses the real capacity to store data that is written to the volume, and metadata that describes the thin-provisioned configuration of the volume. As more information is written to the volume, more of the real capacity is used.

The system identifies read operations to unwritten parts of the virtual capacity and returns zeros to the server without the use of any real capacity. For more information about storage system, pool, and volume capacity metrics, see Chapter 9, “Implementing a storage monitoring system” on page 387.

Thin-provisioned volumes can also help simplify server administration. Instead of assigning a volume with some capacity to an application and increasing that capacity as the needs of the application change, you can configure a volume with a large virtual capacity for the application. You can then increase or shrink the real capacity as the application needs change, without disrupting the application or server.

It is important to monitor physical capacity if you want to provide more space to your hosts than is physically available in your IBM FlashSystem. For more information about monitoring the physical capacity of your storage, and an explanation of the difference between thin provisioning and over-allocation, see 9.5, “Creating alerts for IBM Spectrum Control and IBM Storage Insights” on page 425.

Thin provisioning on top of Flash Core Modules

If you use the compression functions that are provided by the IBM Flash Core Modules (FCMs) in your FlashSystem to add data reduction to a standard pool while maintaining maximum performance, make sure that you understand the capacity reporting, particularly if you want to thin provision on top of the FCMs.

The FCM RAID array reports its theoretical maximum capacity, which can be as much as 4:1 relative to its physical capacity. This capacity is the maximum that can be stored on the FCM array. However, it might not reflect the compression savings that you achieve with your data.

It is recommended that you start conservatively, especially if you are allocating this capacity to IBM SAN Volume Controller or another IBM FlashSystem (the virtualizer).

You must first understand your expected compression ratio. In an initial deployment, plan for approximately 50% of the expected savings. You can easily add volumes on the back-end storage system and present them as new external MDisk capacity to the virtualizer later if your compression ratio is met or bettered.

For example, assume that you have 100 TiB of physical usable capacity in an FCM RAID array before compression. Your comprestimator results show savings of approximately 2:1, which suggests that you can write 200 TiB of volume data to this RAID array.

Start at 150 TiB of volumes that are mapped as external MDisks to the virtualizer. Monitor the real compression rates and usage, and over time add the other 50 TiB of MDisk capacity to the same virtualizer pool. Be sure to leave spare space for unexpected growth, and consider the guidelines that are outlined in 3.2, “Arrays” on page 78.
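The conservative starting point in this example can be reproduced with the following Python sketch. It assumes that "50% of the expected savings" means counting only half of the capacity gain that the estimated compression ratio predicts; the function name and the savings_fraction parameter are hypothetical.

```python
def initial_mdisk_tib(physical_tib: float, expected_ratio: float,
                      savings_fraction: float = 0.5) -> float:
    """Capacity to present to the virtualizer at initial deployment."""
    expected_total = physical_tib * expected_ratio     # 200 TiB in the example
    expected_savings = expected_total - physical_tib   # 100 TiB gained by compression
    return physical_tib + savings_fraction * expected_savings

start = initial_mdisk_tib(100, 2.0)
later = 100 * 2.0 - start          # capacity held back until the ratio is confirmed
print(start, later)  # 150.0 50.0
```

Raising savings_fraction toward 1.0 presents more capacity up front and leaves less margin if the real compression ratio is lower than the estimate.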

If you often over-provision your hosts at much higher rates, you can use a standard pool and create thin-provisioned volumes in that pool. However, be careful that you do not run out of space. You now need to monitor the backend controller pool usage and the virtualizer pool usage in terms of volume thin provisioning over-allocation. In essence, you are double accounting with the thin provisioning; that is, expecting 2:1 on the FCM compression, and then whatever level you over-provision at the volumes.

If you know that your hosts rarely grow to use the provisioned capacity, this process can be safely done; however, the risk comes from run-away applications (writing large amounts of capacity) or an administrator suddenly enabling application encryption and writing to fill the entire capacity of the thin-provisioned volume.
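The double accounting can be made concrete with a short Python sketch. The 3:1 volume over-provisioning level and the 50% fill level are hypothetical values chosen for illustration; only the 2:1 FCM compression ratio and the 100 TiB array come from the example in this section.

```python
def host_visible_tib(physical_tib: float, fcm_ratio: float, thin_ratio: float) -> float:
    """Virtual capacity that hosts see when both layers are over-allocated."""
    return physical_tib * fcm_ratio * thin_ratio

def physical_needed_tib(provisioned_tib: float, fill_fraction: float,
                        actual_ratio: float) -> float:
    """Physical capacity consumed if hosts fill part of what was provisioned."""
    return provisioned_tib * fill_fraction / actual_ratio

provisioned = host_visible_tib(100, fcm_ratio=2.0, thin_ratio=3.0)
print(provisioned)                                   # 600.0
print(physical_needed_tib(provisioned, 0.5, 2.0))    # 150.0
```

In this hypothetical case, hosts that fill only half of their provisioned capacity already need 150 TiB of physical space on a 100 TiB array, even though the 2:1 compression ratio is achieved. Both the back-end usage and the virtualizer pool usage therefore need monitoring.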

4.1.2 Data reduction pools

IBM FlashSystem uses innovative DRPs that incorporate deduplication and hardware-accelerated compression technology, plus SCSI unmap support. It also uses all of the thin provisioning and data efficiency features that you expect from IBM Spectrum Virtualize-based storage to potentially reduce your CAPEX and OPEX. Also, all of these benefits extend to over 500 heterogeneous storage arrays from multiple vendors.

DRPs were designed with space reclamation being a fundamental consideration. DRPs provide the following benefits:

•Log Structured Array allocation (re-direct on all overwrites)

•Garbage collection to free whole extents

•Fine-grained (8 KB) chunk allocation/de-allocation within an extent

•SCSI unmap and write same (Host) with automatic space reclamation

•Support for “back-end” unmap and write same

•Support for compression

•Support for deduplication

•Support for traditional fully allocated volumes

Data reduction can increase storage efficiency and reduce storage costs, especially for flash storage. Data reduction reduces the amount of data that is stored on external storage systems and internal drives by compressing and deduplicating capacity and providing the ability to reclaim capacity that is no longer in use.

The potential capacity savings that compression alone can provide are shown directly in the GUI by way of the included “comprestimator” functions. Since version 8.4 of the Spectrum Virtualize software, comprestimator is always on and you can see the overall expected savings in the dashboard summary view. The specific savings per volume are also available in the volumes views.

To estimate potential total capacity savings that data reduction technologies (compression and deduplication) can provide on the system, use the Data Reduction Estimation Tool (DRET). This tool is a command line, host-based utility that analyzes user workloads that are to be migrated to a new system. The tool scans target workloads on all attached storage arrays, consolidates these results, and generates an estimate of potential data reduction savings for the entire system.

Download DRET and its readme file to a Windows client and follow the installation instructions in the readme. The readme file also describes how to use DRET on a variety of host servers.

The DRET can be downloaded from this IBM Support web page.

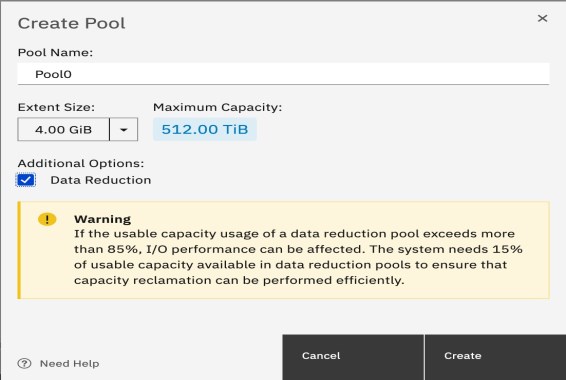

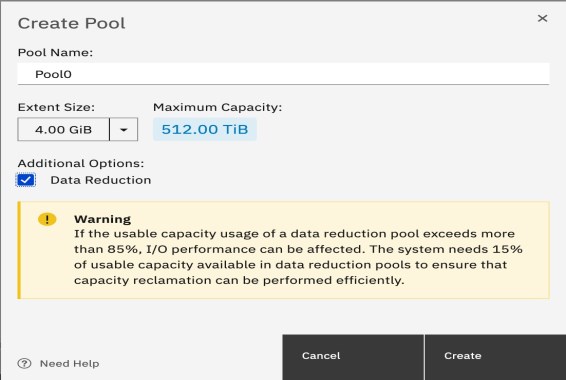

To use data reduction technologies on the system, you must create a DRP, and create compressed or compressed and deduplicated volumes.

For more information, see 4.1.4, “Data reduction estimation tools” on page 125.

Quotaless data reduction child pool

From version 8.4, DRP added support for a special type of child pool, known as a quotaless child pool.

The concepts and high-level description of parent-child pools are the same as for standard pools, with a few major exceptions:

•You cannot define a capacity or quota for a DRP child pool.

•A DRP child pool shares the same encryption key as its parent.

•Capacity warning levels cannot be set on a DRP child pool. Instead, you must rely on the warning levels of the DRP parent pool.

•A DRP child pool consumes space from the DRP parent pool as volumes are written to it.

•Child and parent pools share the same data volume; therefore, data is de-duplicated between parent and child volumes.

•A DRP child pool can use 100% of the capacity of the parent pool.

•The migratevdisk commands can now be used between parent and child pools. Because they share the encryption key, this operation becomes a “nocopy” operation.

•From code level 8.4.2.0, throttling is supported on DRP child pools.

To create a DRP child pool, use the new pool type of child_quotaless.

Because a DRP shares capacity between volumes (when deduplication is used), it is virtually impossible to attribute capacity ownership of a specific grain to a specific volume because the grain might be used by two or more volumes, which is the value proposition of deduplication. This sharing is the reason for the differences between standard and DRP child pools.

Object-based access control (OBAC) or multi-tenancy can now be applied to DRP child pools or volumes because OBAC requires a child pool to function.

VMware vVols for DRP is not yet supported or certified at the time of this writing.

SCSI unmap

DRPs support end-to-end unmap functionality. Space that is freed from the hosts by means of a SCSI unmap command results in the reduction of the used space in the volume and pool.

For example, a user deletes a small file on a host, which the operating system turns into a SCSI unmap for the blocks that made up the file. Similarly, a large amount of capacity can be freed if the user deletes (or vMotions) a volume that is part of a data store on a host. This process might result in many contiguous blocks being freed. Each of these contiguous blocks results in a SCSI unmap command being sent to the storage device.

In a DRP, when the IBM FlashSystem receives a SCSI unmap command, the capacity that is allocated within that contiguous chunk is freed. The deletion is asynchronous, and the unmapped capacity is first added to the “reclaimable” capacity, which is later physically freed by the garbage collection code. For more information, see 4.1.5, “Understanding capacity use in a data reduction pool” on page 130.

Similarly, deleting a volume at the DRP level frees all of the capacity back to the pool. The DRP also marks those blocks as “reclaimable” capacity, which the garbage collector later frees back to unused space. After the garbage collection frees an entire extent, a new SCSI unmap command is issued to the backend MDisk device.
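The asynchronous nature of space reclamation can be pictured as capacity moving between three buckets. The following Python sketch is a deliberately simplified model with hypothetical names; it does not represent the actual DRP implementation or its reporting fields.

```python
class DrpCapacityModel:
    """Toy model: valid data -> reclaimable -> free."""

    def __init__(self, valid_gib: int, free_gib: int):
        self.valid_gib = valid_gib
        self.reclaimable_gib = 0
        self.free_gib = free_gib

    def unmap(self, gib: int) -> None:
        # A host unmap (or a volume deletion) only marks capacity as reclaimable.
        gib = min(gib, self.valid_gib)
        self.valid_gib -= gib
        self.reclaimable_gib += gib

    def garbage_collect(self, gib: int) -> int:
        # Garbage collection later returns reclaimable capacity to free space.
        freed = min(gib, self.reclaimable_gib)
        self.reclaimable_gib -= freed
        self.free_gib += freed
        return freed

pool = DrpCapacityModel(valid_gib=80, free_gib=20)
pool.unmap(30)
print(pool.valid_gib, pool.reclaimable_gib, pool.free_gib)  # 50 30 20
pool.garbage_collect(10)
print(pool.valid_gib, pool.reclaimable_gib, pool.free_gib)  # 50 20 30
```

The point of the model is that free capacity does not rise immediately after an unmap; it rises only as garbage collection works through the reclaimable capacity.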

Unmapping can help ensure good MDisk performance; for example, Flash drives can reuse the space for wear-leveling and to maintain a healthy capacity of “pre-erased” (ready to be used) blocks.

Virtualization devices like IBM FlashSystem with external storage can also forward unmap information (such as when extents are deleted or migrated) to other storage systems.

Enabling, monitoring, throttling, and disabling SCSI unmap

By default, host-based unmap support is disabled on all products other than the FlashSystem 9000 series. Backend unmap is enabled by default on all products.

To enable or disable host-based unmap, run the following command:

chsystem -hostunmap on|off

To enable or disable backend unmap, run the following command:

chsystem -backendunmap on|off

You can check how much SCSI unmap processing is occurring on a per-volume or per-pool basis by using the performance statistics. This information can be viewed with Spectrum Control or Storage Insights.

Note: SCSI unmap might add more workload to the backend storage. Use performance monitoring to detect possible effects. If the SCSI unmap workload is affecting performance, consider the data rates that are observed and take the necessary steps. Unmap rates in the GiBps range can be expected if you just deleted many volumes.

You can throttle the amount of “offload” operations (such as the SCSI unmap command) using the per-node settings for offload throttle. For example:

mkthrottle -type offload -bandwidth 500

This setting limits each node to 500 MiBps of offload commands.
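To get a feel for what such a limit means, the following Python sketch estimates the minimum time that is needed to process a given amount of unmapped capacity. It is a back-of-envelope calculation that assumes the offload throttle is the only limiting factor and that the work is spread evenly across nodes; the function name and the 10 TiB figure are hypothetical.

```python
MIB_PER_TIB = 1024 * 1024

def min_unmap_seconds(unmap_tib: float, nodes: int, throttle_mibps: int = 500) -> float:
    """Lower bound on the time to process unmaps under a per-node throttle."""
    return unmap_tib * MIB_PER_TIB / (nodes * throttle_mibps)

# 10 TiB of unmaps on a two-node system throttled to 500 MiBps per node.
print(round(min_unmap_seconds(10, nodes=2) / 3600, 1))  # 2.9
```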

You can also stop the IBM FlashSystem from processing SCSI unmap operations for one or more host systems. You might find an over-zealous host, or not have the ability to configure the settings on some of your hosts. To modify a host to disable unmap, change the host type:

chhost -type generic_no_unmap <host_id_or_name>

If you experience severe performance problems as a result of SCSI unmap operations, you can disable SCSI unmap on the entire IBM FlashSystem for the front end (host), backend, or both.

Fully allocated volumes in a DRP

It is possible to create fully allocated volumes in a DRP.

A fully allocated volume uses the entire capacity of the volume. That is, when the volume is created, that space is reserved (used) from the DRP and is not available for other volumes in the DRP.

Data is not deduplicated or compressed in a fully allocated volume. Because such a volume does not use the internal fine-grained allocation functions, its allocation and performance are the same as or better than those of a fully allocated volume in a standard pool.

Compressed and deduplicated volumes in a DRP

It is possible to create compressed-only volumes in a DRP.

A compressed volume is by its nature thin-provisioned. A compressed volume uses only its compressed data size in the pool. The volume grows only as you write data to it.

It is possible, but not recommended, to create a deduplicated-only volume in a DRP. A deduplicated volume is thin-provisioned in nature. The additional processing that is required to also compress the deduplicated block is minimal; therefore, it is recommended that you create a compressed and deduplicated volume rather than only a deduplicated volume.

The DRP first looks for deduplication matches; then, it compresses the data before writing it to the storage.
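The order of operations (deduplicate first, then compress only what is new) can be illustrated with a minimal Python sketch. It is not the algorithm that the product uses: the SHA-256 fingerprint, the zlib compressor, the dictionary that stands in for the shared data store, and the function names are all assumptions made for illustration. Only the 8 KB granularity is taken from this chapter.

```python
import hashlib
import zlib

GRAIN = 8 * 1024  # 8 KiB grains, matching the fine-grained allocation size

def write(data: bytes, grain_store: dict) -> list:
    """Store data grain by grain and return the list of grain references."""
    refs = []
    for offset in range(0, len(data), GRAIN):
        grain = data[offset:offset + GRAIN]
        key = hashlib.sha256(grain).hexdigest()
        if key not in grain_store:                    # 1. look for a dedup match
            grain_store[key] = zlib.compress(grain)   # 2. compress only new grains
        refs.append(key)
    return refs

def read(refs: list, grain_store: dict) -> bytes:
    """Rebuild the original data from its grain references."""
    return b"".join(zlib.decompress(grain_store[key]) for key in refs)

store = {}
data = b"A" * GRAIN * 3 + b"B" * GRAIN
refs = write(data, store)
print(len(refs), len(store))  # 4 2
```

Four grains are written, but only two unique grains are stored, and each of those is held in compressed form.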

Thin-provisioned only volumes in a DRP

It is not recommended that you create a thin-provisioned only volume in a DRP.

Thin-provisioned volumes use the fine-grained allocation functions of DRP. The main benefit of DRP is in the data reduction functions (compression and deduplication). Therefore, if you want to create a thin-provisioned volume in a DRP, create a compressed volume.

Note: In some cases, when the backend storage is thin-provisioned or data reduced, the GUI might not offer the option to create only thin-provisioned volumes in a DRP. The option is withheld because its use is strongly discouraged: it can cause extreme capacity-monitoring problems with a high probability of running out of space.

DRP internal details

DRPs consist of various internal metadata volumes, and it is important to understand how these metadata volumes are used and mapped to user volumes. Each user volume has a corresponding journal, forward lookup, and directory volume.

The internal layout of a DRP is different from a standard pool. A standard pool creates volume objects within the pool. Some fine-grained internal metadata is stored within a thin-provisioned or real-time-compressed volume in a standard pool. Overall, the pool contains volume objects.

A DRP reports volumes to the user in the same way as a standard pool. However, internally it defines a Directory Volume for each user volume that is created within the pool. The directory points to grains of data that are stored in the Customer Data Volume. All volumes in a single DRP use the same Customer Data Volume to actually store their data. Therefore, deduplication is possible across volumes in a single DRP.

Other internal volumes are created, one per DRP. There is one Journal Volume per I/O group that can be used for recovery purposes, to replay metadata updates if needed. There is one Reverse Lookup Volume per I/O group that is used by garbage collection.

Figure 4-1 shows the difference between DRP volumes and volumes in standard pools.

Figure 4-1 Standard and data reduction pool - volumes

The Customer Data Volume uses greater than 97% of pool capacity. The I/O pattern is a large sequential write pattern (256 KB) that is coalesced into full stride writes, and you typically see a short random read pattern.

Directory Volumes occupy approximately 1% of pool capacity. They typically have a short 4 KB random read and write I/O. The Journal Volume occupies approximately 1% of pool capacity, and shows large sequential write I/O (256 KB typically).

Journal Volumes are only read for recovery scenarios (for example, T3 recovery). Reverse Lookup Volumes are used by the garbage-collection process and occupy less than 1% of pool capacity. Reverse Lookup Volumes have a short, semi-random read/write pattern.

The primary task of garbage collection (see Figure 4-2) is to reclaim space; that is, to track all of the regions that were invalidated, and to make this capacity usable for new writes. As a result of compression and deduplication, when you overwrite a host-write, the new data does not always use the same amount of space as the previous data. As a result, writes always occupy new space on back-end storage while the old data is still in its original location.

Figure 4-2 Garbage Collection principle

For garbage collection, stored data is divided into regions. As data is overwritten, a record is kept of which areas of those regions have been invalidated. Regions that have many invalidated parts are potential candidates for garbage collection. When the majority of a region has invalidated data, it is fairly inexpensive to move the remaining data to another location, therefore freeing the whole region.

DRPs include built-in services to enable garbage collection of unused blocks. Therefore, many smaller unmaps end up enabling a much larger chunk (extent) to be freed back to the pool. Trying to fill small holes is inefficient because too many I/Os are needed to keep reading and rewriting the directory. Therefore, garbage collection waits until an extent has many small holes, then moves the remaining data out of the extent, compacts it, and rewrites it elsewhere. When an extent is empty, it can be freed back to the virtualization layer (and to the back end with unmap), or it can be reused for new data (or rewrites).

The reverse lookup metadata volume tracks the extent usage, or more importantly the holes created by overwrites or unmaps. Garbage collection looks for extents with the most unused space. After a whole extent has had all valid data moved elsewhere, it can be freed back to the set of unused extents in that pool, or it can be reused for new written data.
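The candidate selection described above can be illustrated with a minimal sketch. The `Region` class, the `pick_gc_candidates` function, and the 50% threshold are hypothetical names and values chosen for illustration; they are not the product's internal data structures or tuning parameters.

```python
from dataclasses import dataclass


@dataclass
class Region:
    """Hypothetical model of a region tracked for garbage collection."""
    region_id: int
    total_grains: int
    invalid_grains: int  # grains invalidated by overwrites or unmaps

    @property
    def invalid_fraction(self) -> float:
        return self.invalid_grains / self.total_grains

    @property
    def valid_grains(self) -> int:
        # Data that must be moved elsewhere before the region can be freed.
        return self.total_grains - self.invalid_grains


def pick_gc_candidates(regions, min_invalid_fraction=0.5):
    """Return regions that are mostly invalidated, most-invalidated first.

    The 0.5 threshold is an assumption used only to express
    "the majority of a region has invalidated data".
    """
    candidates = [r for r in regions if r.invalid_fraction > min_invalid_fraction]
    return sorted(candidates, key=lambda r: r.invalid_fraction, reverse=True)


regions = [
    Region(region_id=0, total_grains=100, invalid_grains=10),
    Region(region_id=1, total_grains=100, invalid_grains=90),
    Region(region_id=2, total_grains=100, invalid_grains=60),
]

for region in pick_gc_candidates(regions):
    print(f"region {region.region_id}: move {region.valid_grains} valid grains, then free")
```

Sorting by invalidated fraction reflects the cost argument in the text: the fewer valid grains that remain, the less data must be moved to free the whole region.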

Because garbage collection needs to move data to free regions, it is suggested that you size pools to keep a specific amount of free capacity available. This practice ensures that some free space is always available for garbage collection. For more information, see 4.1.5, “Understanding capacity use in a data reduction pool” on page 130.

4.1.3 Standard pools versus data reduction pools

When it comes to designing pools during the planning of an IBM FlashSystem project, it is important to know all requirements, and to understand the upcoming workload of the environment. The IBM FlashSystem is flexible in creating and using pools. This section describes how to figure out which types of pool or setup you can use.

Some of the information that you should be aware of in the planned environment is as follows:

•Is your data compressible?

•Is your data deduplicable?

•What are the workload and performance requirements?

– Read/write ratio

– Block size

– Input/Output Operations per Second (IOPS), MBps, and response time

•Flexibility for the future

•Thin provisioning

Determine if your data is compressible

Compression is one option of DRPs. The compression algorithm reduces the on-disk footprint of data that is written to thin-provisioned volumes. In IBM FlashSystem, compression and deduplication are performed inline rather than as a background task. DRP provides unmap support at the pool and volume level. Out-of-space situations can be managed at the DRP pool level.

Compression can be enabled in DRPs on a per-volume basis, and thin provisioning is a prerequisite. Incoming I/O is split into fixed 8 KiB blocks for internal handling, and compression is performed on each 8 KiB block. These compressed blocks are then consolidated into 256 KiB chunks of compressed data. This consolidation provides consistent write performance because it allows the cache to build full stride writes, which enables the most efficient RAID throughput.
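The split-compress-consolidate flow can be sketched as follows. This is only an illustration under stated assumptions: Python's `zlib` stands in for the system's compression algorithm (which the text does not name), and the greedy packing in the hypothetical `pack_chunks` function is a simplification of how compressed blocks are gathered into 256 KiB chunks.

```python
import zlib

GRAIN = 8 * 1024     # fixed internal block size from the text
CHUNK = 256 * 1024   # target size of a consolidated write


def compress_grains(data: bytes):
    """Split data into 8 KiB grains and compress each grain independently."""
    return [zlib.compress(data[i:i + GRAIN]) for i in range(0, len(data), GRAIN)]


def pack_chunks(compressed):
    """Greedily pack compressed grains into chunks of at most 256 KiB."""
    chunks, current = [], bytearray()
    for blob in compressed:
        if current and len(current) + len(blob) > CHUNK:
            chunks.append(bytes(current))
            current = bytearray()
        current += blob
    if current:
        chunks.append(bytes(current))
    return chunks


# Highly repetitive sample data (about 2 MB), chosen so that it compresses well.
data = b"log line: status=OK\n" * 100_000
grains = compress_grains(data)
chunks = pack_chunks(grains)

stored = sum(len(c) for c in chunks)
print(f"{len(grains)} grains packed into {len(chunks)} chunk(s), "
      f"{stored} bytes stored for {len(data)} bytes written")
```

Because each grain is compressed on its own, any grain can be read back and decompressed without touching its neighbors, which fits the short random read pattern described earlier for the Customer Data Volume.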

Data compression techniques depend on the type of data that must be compressed and on the desired performance. Effective compression savings generally rely on the accuracy of your planning and on understanding whether the specific data is compressible. Several methods are available to help you decide whether your data is compressible, including the following examples:

•General assumptions

•Tools

General assumptions

IBM FlashSystem compression is lossless; that is, data is compressed without losing any of the data. The original data can be recovered after the compress and expand cycle. Good compression savings might be achieved in the following environments (and others):

•Virtualized Infrastructure

•Database and Data Warehouse

•Home Directory, Shares, and shared project data

•CAD/CAM

•Oil and Gas data

•Log data

•SW development

•Text and some picture files

However, if the data is already compressed, the savings are smaller or even negative. Pictures (for example, GIF, JPG, and PNG), audio (MP3 and WMA), video (AVI and MPG), and even compressed database data might not be good candidates for compression.

Table 4-1 lists the compression ratios of common data types and applications that typically compress well.

Table 4-1 Compression ratios of common data types

| Data Types/Applications | Compression Ratio |
| --- | --- |
| Databases | Up to 80% |
| Server or Desktop Virtualization | Up to 75% |
| Engineering Data | Up to 70% |
| Email | Up to 80% |

Also, do not compress encrypted data (for example, data that is encrypted on the host or by the application). Compressing already encrypted data does not result in much savings because the data appears pseudo-random. The compression algorithm relies on patterns to gain efficient size reduction. Because encryption destroys such patterns, the compression algorithm is unable to provide much data reduction.

For more information about compression, see 4.1.4, “Data reduction estimation tools” on page 125.

|

Note: Saving assumptions that are based on the type of data are imprecise. Therefore, you should determine compression savings with the proper tools.

|

Determine if your data is a deduplication candidate

Deduplication is done by using hash tables to identify previously written copies of data. If duplicate data is found, instead of writing the data to disk, the algorithm references the previously found data.

•Deduplication uses 8 KiB deduplication grains and an SHA-1 hashing algorithm.

•Data reduction pools build 256 KiB chunks of data consisting of multiple de-duplicated and compressed 8 KiB grains.

•Data reduction pools will write contiguous 256 KiB chunks allowing for efficient write streaming with the capability for cache and RAID to operate on full stride writes.

•Data reduction pools deduplicate data first and then compress it.

•The scope of deduplication is within a DRP within an I/O Group.
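The hash-table lookup described above can be illustrated with a minimal sketch that uses 8 KiB grains and SHA-1, as stated in the list. The `dedup_write` function and the in-memory `store` dictionary are hypothetical; the real implementation keeps its lookup structures in pool metadata and is not shown in this chapter.

```python
import hashlib

GRAIN = 8 * 1024  # deduplication grain size from the text


def dedup_write(data: bytes, store: dict):
    """Write data grain by grain and return one reference per grain.

    `store` maps a SHA-1 digest to the single stored copy of a grain.
    A grain whose digest is already present is not stored again;
    only a reference to the existing copy is recorded.
    """
    refs = []
    for offset in range(0, len(data), GRAIN):
        grain = data[offset:offset + GRAIN]
        digest = hashlib.sha1(grain).digest()
        if digest not in store:
            store[digest] = grain
        refs.append(digest)
    return refs


store = {}
grain_a = bytes([1]) * GRAIN
grain_b = bytes([2]) * GRAIN
payload = grain_a + grain_b + grain_a + grain_a

refs = dedup_write(payload, store)
print(f"{len(refs)} grains written, {len(store)} unique grains stored")

# Reading back follows the references to the stored copies.
restored = b"".join(store[r] for r in refs)
```

In this sketch the `store` dictionary plays the role of a single deduplication scope, which corresponds to one DRP within one I/O group in the text.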

General assumptions

Some environments have data with high deduplication savings and are therefore good candidates for deduplication. Examples include virtual desktop and some virtual machine environments.

IBM provides the Data Reduction Estimation Tool (DRET) to help determine the deduplication capacity-saving benefits.

4.1.4 Data reduction estimation tools

IBM provides two tools to estimate the savings when you use data reduction technologies.

•Comprestimator

This tool is built into the IBM FlashSystem. It reports the expected compression savings on a per-volume basis in the GUI and command line.

•Data Reduction Estimation Tool (DRET)

The DRET tool must be installed on a host and used to scan the volumes that are mapped to that host. It is primarily used to assess the deduplication savings. The DRET tool is the most accurate way to determine the estimated savings. However, it must scan all of your volumes to provide an accurate summary.

Comprestimator

Comprestimator is provided in the following ways:

•As a stand-alone, host-based command-line utility. It can be used to estimate the expected compression for block volumes where you do not have an IBM Spectrum Virtualize product providing those volumes.

•Integrated into the IBM FlashSystem. In software versions before 8.4, triggering a volume sampling (or all volumes) was done manually.

•Integrated into the IBM FlashSystem and always on, in versions 8.4 and later.

Host-based Comprestimator

The tool can be downloaded from this IBM Support web page.

IBM FlashSystem Comprestimator is a command-line and host-based utility that can be used to estimate an expected compression rate for block devices.

Integrated Comprestimator for software levels before 8.4.0

IBM FlashSystem also features an integrated Comprestimator tool that is available through the management GUI and CLI. If you are considering applying compression to existing non-compressed volumes in an IBM FlashSystem, you can use this tool to evaluate whether compression will generate capacity savings.

To access the Comprestimator tool in management GUI, select Volumes → Volumes.

If you want to analyze all the volumes in the system, click Actions → Capacity Savings → Estimate Compression Savings.

If you want to evaluate the capacity savings of only some volumes, select those volumes and click Actions → Capacity Savings → Analyze, as shown in Figure 4-3.

Figure 4-3 Capacity savings analysis

To display the results of the capacity savings analysis, click Actions → Capacity Savings → Download Savings Report, as shown in Figure 4-3, or enter the command lsvdiskanalysis in the command line, as shown in Example 4-1.

Example 4-1 Results of capacity savings analysis

IBM_FlashSystem:superuser>lsvdiskanalysis TESTVOL01

id 64

name TESTVOL01

state estimated

started_time 201127094952

analysis_time 201127094952

capacity 600.00GB

thin_size 47.20GB

thin_savings 552.80GB

thin_savings_ratio 92.13

compressed_size 21.96GB

compression_savings 25.24GB

compression_savings_ratio 53.47

total_savings 578.04GB

total_savings_ratio 96.33

margin_of_error 4.97

IBM_FlashSystem:superuser>

The following actions are preferred practices:

•After you run Comprestimator, consider applying compression only on those volumes that show greater than or equal to 25% capacity savings. For volumes that show less than 25% savings, the trade-off between space saving and hardware resource consumption to compress your data might not make sense. With DRPs, the penalty for the data that cannot be compressed is no longer seen. However, the DRP includes overhead in grain management.

•After you compress your selected volumes, review which volumes gain the most space-saving benefits from thin provisioning rather than compression. Consider moving these volumes to thin provisioning only. This configuration requires some effort, but saves hardware resources that are then available to give better performance to those volumes that achieve more benefit from compression than from thin provisioning.
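The 25% guideline can be applied to the report shown in Example 4-1 with a short script. The following sketch parses a subset of that output as plain "key value" lines; the `parse_analysis`, `gb`, and `worth_compressing` helpers are hypothetical names, and the assumption that all sizes are reported in GB holds only for this sample.

```python
# Subset of the fields shown in Example 4-1.
SAMPLE = """\
id 64
name TESTVOL01
state estimated
capacity 600.00GB
thin_size 47.20GB
thin_savings 552.80GB
thin_savings_ratio 92.13
compressed_size 21.96GB
compression_savings 25.24GB
compression_savings_ratio 53.47
total_savings 578.04GB
total_savings_ratio 96.33
margin_of_error 4.97
"""


def parse_analysis(text: str) -> dict:
    """Turn 'key value' lines into a dictionary of strings."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if line:
            key, _, value = line.partition(" ")
            fields[key] = value
    return fields


def gb(value: str) -> float:
    """Convert a size such as '47.20GB' to a float (assumes GB units only)."""
    if not value.endswith("GB"):
        raise ValueError(f"unexpected unit in {value!r}")
    return float(value[:-2])


def worth_compressing(fields: dict, threshold: float = 25.0) -> bool:
    """Apply the guideline: compress only at or above 25% estimated savings."""
    return float(fields["compression_savings_ratio"]) >= threshold


fields = parse_analysis(SAMPLE)
# In this sample, the reported ratio matches compression_savings / thin_size.
recomputed = round(gb(fields["compression_savings"]) / gb(fields["thin_size"]) * 100, 2)
print(fields["name"], recomputed, worth_compressing(fields))
```

For the sample volume, the compression savings are measured against the thin-provisioned size (47.20 GB), not the full 600 GB capacity, so most of the total savings in this case come from thin provisioning.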

You can customize the Volume view to view the metrics you might need to help make your decision, as shown in Figure 4-4.

Figure 4-4 Customized view

Integrated Comprestimator for software version 8.4 and onwards

Because the newer code levels include an always-on Comprestimator, you can view the expected capacity savings in the main dashboard view, pool views, and volume views. You do not need to first trigger the “estimate” or “analyze” tasks; these are performed automatically as background tasks.

Data Reduction Estimation Tool

IBM provides the Data Reduction Estimation Tool (DRET) to support both deduplication and compression. The host-based CLI tool scans target workloads on various older storage arrays (from IBM or another company), merges all scan results, and then provides an integrated system-level data reduction estimate for your IBM FlashSystem planning.

The DRET uses advanced mathematical and statistical algorithms to perform an analysis with a low memory “footprint”. The utility runs on a host that can access the devices to be analyzed. It performs only read operations, so it has no effect on the data stored on the device. Depending on the configuration of the environment, in many cases the DRET is used on more than one host to analyze additional data types.

It is important to understand block device behavior, when analyzing traditional (fully allocated) volumes. Traditional volumes that were created without initially zeroing the device might contain traces of old data on the block device level. Such data is not accessible or viewable on the file system level. When the DRET is used to analyze such volumes, the expected reduction results reflect the savings rate to be achieved for all the data on the block device level, including traces of old data.

Regardless of the block device type being scanned, it is also important to understand a few principles of common file system space management. When files are deleted from a file system, the space they occupied before the deletion becomes free and available to the file system. The freeing of space occurs even though the data on disk was not actually removed, but rather the file system index and pointers were updated to reflect this change.

When the DRET is used to analyze a block device used by a file system, all underlying data in the device is analyzed, regardless of whether this data belongs to files that were already deleted from the file system. For example, you can fill a 100 GB file system and use 100% of the file system, then delete all the files in the file system making it 0% used. When scanning the block device used for storing the file system in this example, the DRET (or any other utility) can access the data that belongs to the files that are deleted.

To reduce the impact of the block device and file system behavior, it is recommended that you use the DRET to analyze volumes that contain as much active data as possible rather than volumes that are mostly empty of data. This approach increases the accuracy level and reduces the risk of analyzing old data that was deleted but might still have traces on the device.

The DRET can be downloaded from this IBM Support web page.

Example 4-2 shows the DRET command line.

Example 4-2 DRET command line

Data-Reduction-Estimator -d <device> [-x Max MBps] [-o result data filename] [-s Update interval] [--command scan|merge|load|partialscan] [--mergefiles Files to merge] [--loglevel Log Level] [--batchfile batch file to process] [-h]

The DRET can be used on the following client operating systems:

•Windows 2008 Server, Windows 2012

•Red Hat Enterprise Linux Version 5.x, 6.x, 7.x (64-bit)

•Ubuntu 12.04

•ESX 5.0, 5.5, 6.0

•AIX 6.1, 7.1

•Solaris 10

|

Note: According to the results of the DRET, use DRPs to take advantage of the available data deduplication savings, unless performance requirements exceed what DRP can deliver.

Do not enable deduplication if the data set is not expected to provide deduplication savings.

|

Determining the workload and performance requirements

An important factor of sizing and planning for an IBM FlashSystem environment is the knowledge of the workload characteristics of that specific environment.

Sizing and performance is affected by the following workloads, among others:

•Read/Write ratio

The read/write ratio affects performance because a higher proportion of writes generates more IOPS in the DRP. To size an environment effectively, take the read/write ratio into account. During a write I/O, the data is stored on the data disk, the forward lookup structure is updated, and the I/O is then completed.

DRPs use metadata. Even when volumes are not in the pool, some of the space in the pool is used to store the metadata. The space that is allocated to metadata is relatively small. Regardless of the type of volumes that the pool contains, metadata is always stored separately from customer data.

In DRPs, the maintenance of the metadata results in I/O amplification. I/O amplification occurs when a single host-generated read or write I/O results in more than one back-end storage I/O request because of advanced functions. A read request from the host results in two I/O requests, a directory lookup and a data read. A write request from the host results in three I/O requests, a directory lookup, a directory update, and a data write. Therefore, keep in mind that DRPs create more IOPS on the FCMs or drives.

•Block size

The concept of block size is simple, but its impact on storage performance can be significant. In general, larger blocks affect performance more than smaller blocks. Understanding and accounting for block sizes in the design, optimization, and operation of the storage system leads to more predictable behavior of the entire environment.

|

Note: Where possible, limit the maximum transfer size sent to the IBM FlashSystem to no more than 256 KiB. This limit is a general best practice and is not specific to DRP.

|

•IOPS, MBps, and response time

Storage is constrained by IOPS, throughput, and latency, so it is crucial to design the solution for both speed and bandwidth. Suitable sizing requires knowledge of the expected requirements.

•Capacity

During the planning of an IBM FlashSystem environment, physical capacity must be sized accordingly. Compression and deduplication might save space, but metadata also consumes a small amount of space. For optimal performance, our recommendation is to fill the DRP to a maximum of 85% of its capacity.
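The I/O amplification described under the read/write ratio earlier in this list (two back-end I/Os per host read, three per host write) lends itself to a rough estimate. The following sketch is a simplified worst-case model: the `backend_iops` function is a hypothetical helper, and it deliberately ignores cache hits, coalescing of writes, and garbage-collection traffic, all of which change the real figure.

```python
READ_AMPLIFICATION = 2   # directory lookup + data read
WRITE_AMPLIFICATION = 3  # directory lookup + directory update + data write


def backend_iops(host_iops: float, read_fraction: float) -> float:
    """Estimate back-end IOPS generated by a DRP for a given host workload.

    read_fraction is the share of host I/Os that are reads (0.0 to 1.0).
    """
    if not 0.0 <= read_fraction <= 1.0:
        raise ValueError("read_fraction must be between 0.0 and 1.0")
    reads = host_iops * read_fraction
    writes = host_iops - reads
    return reads * READ_AMPLIFICATION + writes * WRITE_AMPLIFICATION


# Example workload: 10,000 host IOPS with a 75/25 read/write ratio.
estimate = backend_iops(10_000, 0.75)
print(f"estimated back-end IOPS: {estimate:.0f}")
```

The model makes the sizing point concrete: as the write share grows, the amplification factor moves from 2 toward 3, so a write-heavy workload places more load on the FCMs or drives than the host IOPS figure alone suggests.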

Before planning a new environment, consider monitoring the storage infrastructure requirements with monitoring or management software (such as IBM Spectrum Control or IBM Storage Insights). At busy times, the peak workload (such as IOPS or MBps) and peak response time provide you with an understanding of the required workload plus expected growth. Also, consider allowing enough room for the performance that is required during planned and unplanned events (such as upgrades and possible defects or failures).

It is important to understand the relevance of application response time rather than internal response time with required IOPS or throughput. Typical OLTP applications require IOPS and low latency.

Do not place capacity over performance while designing or planning a storage solution. Even if capacity is sufficient, the environment can suffer from low performance. Deduplication and compression might satisfy capacity needs, but the design should also aim for robust application performance.

To size an IBM FlashSystem environment, your IBM account team or IBM Business Partner must access IBM Storage Modeller (StorM). The tool can be used to determine if DRPs can provide suitable bandwidth and latency. If the data does not deduplicate (according to the DRET), the volume can be either fully allocated or compressed only.

Flexibility for the future

During the planning and configuration of storage pools, you must decide which pools to create. Because the IBM FlashSystem enables you to create standard pools or DRPs, you must decide which type best fits the requirements.

Verify whether performance requirements meet the capabilities of the specific pool type. For more information, see “Determining the workload and performance requirements” on page 128.

For more information about the dependencies with child pools regarding vVols, see 4.3.3, “Data reduction pool configuration limits” on page 142, and “DRP restrictions” on page 143.

If other important factors do not lead you to choose standard pools, then DRPs are the right choice. Using DRPs can increase storage efficiency and reduce costs because it reduces the amount of data that is stored on hardware and reclaims previously used storage resources that are no longer needed by host systems.

DRPs provide great flexibility for future use because they add the ability of compression and deduplication of data at the volume level in a specific pool, even if these features are initially not used at creation time.

Note that it is not possible to convert a pool. If you must change the pool type (from standard pool to DRP, or vice versa), it will be an offline process and you will have to migrate your data as described in 4.3.6, “Data migration with DRP” on page 145.

|

Note: We recommend the use of DRPs with fully allocated volumes if the restrictions and capacity do not affect your environment. For more information about the restrictions, see “DRP restrictions” on page 143.

|

4.1.5 Understanding capacity use in a data reduction pool

This section describes capacity terms associated with DRPs.

After a reasonable period of time, the DRP will have approximately 15-20% of overall free space. The garbage collection algorithm must balance the need to free space with the overhead of performing garbage collection. Therefore, the incoming write/overwrite rates and any unmap operations will dictate how much “reclaimable space” is present at any given time. The capacity in a DRP consists of the components that are listed in Table 4-2 on page 131.

Table 4-2 DRP capacity uses

| Use | Description |
| --- | --- |
| Reduced Customer Data | The data that is written to the DRP, in compressed and deduplicated form. |
| Fully Allocated Data | The amount of capacity allocated to fully allocated volumes (assumed to be 100% written). |
| Free | The amount of free space, not in use by any volume. |
| Reclaimable Data | The amount of garbage in the pool. This is old (overwritten) data that is yet to be freed, data that has been unmapped but not yet freed, or data associated with recently deleted volumes. |
| Metadata | Approximately 1 - 3% overhead for DRP metadata volumes. |

Balancing how much garbage collection is done versus how much free space is available dictates how much reclaimable space is present at any time. The system dynamically adjusts the target rate of garbage collection to maintain a suitable amount of free space.

Figure 4-5 Data reduction pool capacity use example

Consider the following points:

•If you create a large capacity of fully allocated volumes in a DRP, you are taking this capacity directly from free space only. This could result in triggering heavy garbage collection if there is little free space remaining and a large amount of reclaimable space, as shown in Figure 4-5.

•If you create a large number of fully allocated volumes and experience degraded performance due to garbage collection, you can reduce the required work by temporarily deleting unused fully-allocated volumes.

•When deleting a fully-allocated volume, the capacity is returned directly to free space.

•When deleting a thin-provisioned volume (compressed or deduplicated), the following two-phase approach is used:

a. The grain must be inspected to determine if this was the last volume that referenced this grain (deduplicated):

• If so, the grains can be freed.

• If not, the grain references need to be updated and the grain might need to be re-homed to belong to one of the remaining volumes that still require this grain.

b. When all grains that are to be deleted are identified, these grains are returned to the “reclaimable” capacity. It is the responsibility of garbage collection to convert them to free space.

c. The garbage-collection process runs in the background, attempting to maintain a sensible amount of free space. If there is little free space and you delete a large number of volumes, the garbage-collection code might trigger a large amount of backend data movement and could result in performance issues.

•Deleting a volume might not immediately create free space.

•If you are at risk of running out of space, but a lot of reclaimable space exists, you can force garbage collection to work harder by creating a temporary fully allocated volume to reduce the amount of real free space and trigger more garbage collection.

|

Important: Use extreme caution when using up all or most of the free space with fully-allocated volumes. Garbage collection requires free space to coalesce data blocks into whole extents and hence free capacity. If little free space is available, the garbage collector must work harder to free space.

|

•It might be worth creating some “get out of jail free” fully-allocated volumes in a DRP. This type of volume reserves some space that you can quickly return to the free space resources if you reach a point where you are almost out of space, or when garbage collection is struggling to free capacity in an efficient manner.

Consider these points:

– This type of volume should not be mapped to hosts.

– This type of volume should be labeled accordingly. For example, “RESERVED_CAPACITY_DO_NOT_USE”
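The capacity components in Table 4-2 and the reserved-volume idea above can be combined into a simple planning check. The following sketch is illustrative only: the `DrpCapacity` class and its field names are hypothetical, the sample figures are invented, and treating everything that is not free (including reclaimable data) as used against the 85% guideline is an assumption.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class DrpCapacity:
    """Hypothetical breakdown of DRP capacity, following Table 4-2 (TiB)."""
    total: float
    reduced_customer_data: float
    fully_allocated: float
    reclaimable: float
    metadata: float

    @property
    def used(self) -> float:
        return (self.reduced_customer_data + self.fully_allocated
                + self.reclaimable + self.metadata)

    @property
    def free(self) -> float:
        return self.total - self.used

    @property
    def used_fraction(self) -> float:
        return self.used / self.total

    def exceeds_guideline(self, limit: float = 0.85) -> bool:
        """True when the pool is fuller than the 85% recommendation."""
        return self.used_fraction > limit

    def release_reserve(self, size: float) -> "DrpCapacity":
        """Model deleting a reserved fully allocated volume.

        Its capacity returns directly to free space, without waiting
        for garbage collection.
        """
        if size > self.fully_allocated:
            raise ValueError("reserve is larger than fully allocated capacity")
        return replace(self, fully_allocated=self.fully_allocated - size)


pool = DrpCapacity(total=100, reduced_customer_data=62,
                   fully_allocated=10, reclaimable=12, metadata=3)
print(f"free: {pool.free} TiB, over guideline: {pool.exceeds_guideline()}")

relieved = pool.release_reserve(5)
print(f"free: {relieved.free} TiB, over guideline: {relieved.exceeds_guideline()}")
```

The sketch shows why a reserved fully allocated volume is useful: deleting it increases free space immediately, whereas the 12 TiB of reclaimable data in the example becomes free only after garbage collection has processed it.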

4.2 Storage pool planning considerations

The implementation of storage pools in an IBM FlashSystem requires a holistic approach that involves application availability and performance considerations. Usually a trade-off between these two aspects must be taken into account.

The main best practices in the storage pool planning activity are described in this section. Most of these practices apply to both standard and DRP pools, except where otherwise specified. For additional specific best practices for DRPs, see 4.6, “Easy Tier, tiered and balanced storage pools” on page 169. For more information, see specific practices for high-availability solutions.

4.2.1 Planning for availability

By design, IBM Spectrum Virtualize based storage systems take the entire storage pool offline if a single MDisk in that storage pool goes offline. This means that the storage pool’s quantity and size define the failure domain. Reducing the hardware failure domain for back-end storage is only part of your considerations. When you are determining the storage pool layout, you must also consider application boundaries and dependencies to identify any availability benefits that one configuration might have over another.

Reducing the hardware failure domain, such as placing the volumes of an application into a single storage pool, is not always an advantage from the application perspective. Conversely, splitting the volumes of an application across multiple storage pools increases the chances of having an application outage if one of the storage pools that is associated with that application goes offline.

Finally, increasing the number of pools to reduce the failure domain is not always a viable option. For instance, in IBM FlashSystem configurations that do not include expansion enclosures, the number of physical drives is limited (up to 24), and creating more arrays reduces the usable space because of spare and protection capacity.

Consider, for instance, a single I/O group FlashSystem configuration with 24 NVMe drives of 7.68 TB each. Creating a single DRAID 6 array provides 146.3 TB of available physical capacity, whereas creating two DRAID 6 arrays provides 137.2 TB, a reduction of 9.1 TB.

When virtualizing external storage, remember that the failure domain is defined by the external storage itself, rather than by the pool definition on the front-end system. For instance, if you provide 20 MDisks from external storage and all of these MDisks are using the same physical arrays, the failure domain becomes the total capacity of these MDisks, no matter how many pools you have distributed them across.

The following actions are the starting preferred practices when planning storage pools for availability:

•Create separate pools for internal storage and external storage, unless you are creating a hybrid pool managed by Easy Tier (see 4.2.5, “External pools” on page 138).

•Create a storage pool for each external virtualized storage subsystem, unless you are creating a hybrid pool managed by Easy Tier (see 4.2.5, “External pools” on page 138).

|

Note: If capacity from different external storage is shared across multiple pools, provisioning groups are created.

IBM SAN Volume Controller detects that resources (MDisks) share physical storage and monitors provisioning group capacity; however, monitoring physical capacity must still be done. MDisks in a single provisioning group should not be shared between storage pools because capacity consumption on one pool can affect free capacity on other pools. IBM SAN Volume Controller detects this condition and shows that the pool contains shared resources.

|

•Use dedicated pools for image mode volumes.

|

Limitation: Image Mode volumes are not supported with DRPs.

|

•For Easy Tier-enabled storage pools, always allow free capacity for Easy Tier to deliver better performance.

•Consider implementing child pools when you must have a logical division of your volumes for each application set. Cases often exist where you want to subdivide a storage pool but maintain a larger number of MDisks in that pool. Child pools are logically similar to storage pools, but allow you to specify one or more subdivided child pools. Thresholds and throttles can be set independently per child pool.

|

Note: Throttling is supported on DRP child pools in code versions 8.4.2.0 and later.

|

When you are selecting storage subsystems, the decision often comes down to the ability of the storage subsystem to be more reliable and resilient, and to meet application requirements. Because IBM Spectrum Virtualize does not provide any physical-level data redundancy for virtualized external storage, the availability characteristics of the storage subsystems’ controllers have the most impact on the overall availability of the data that is virtualized by IBM Spectrum Virtualize.

4.2.2 Planning for performance

When planning storage pools for performance, the capability to stripe across disk arrays is one of the most important advantages that IBM Spectrum Virtualize provides. To implement performance-oriented pools, create large pools with many arrays rather than more pools with few arrays. This approach usually works better for performance than spreading the application workload across many smaller pools, because typically the workload is not evenly distributed across the volumes, and then across the pools.

Adding more arrays to a pool, rather than creating a new pool, can be a way to improve the overall performance if the added arrays have the same or better performance characteristics than the existing ones.

Note that in IBM FlashSystem configurations, arrays built from FCM and SAS SSD drives have different characteristics, both in terms of performance and data reduction capabilities. Therefore, when using FCM and SAS SSD arrays in the same pool, follow these recommendations:

•Enable the Easy Tier function (see 4.6, “Easy Tier, tiered and balanced storage pools” on page 169). Easy Tier treats the two array technologies as different tiers (tier0_flash for FCM arrays and tier1_flash for SAS SSD arrays), so the resulting pool is a multi-tiered pool with inter-tier balancing enabled.

•Strictly monitor the FCM physical usage. As Easy Tier moves the data between the tiers, the compression ratio can vary frequently and an out-of-space condition can be reached without changing the data contents.

The number of arrays that are required in terms of performance must be defined in the pre-sales or solution design phase, but when sizing the environment remember that adding too many arrays to a single storage pool increases the failure domain, and therefore it is important to find the trade-off between the performance, availability, and scalability cost of the solution.

Using the following external virtualization capabilities, you can boost the performance of the back-end storage systems:

•Using wide-striping across multiple arrays

•Adding additional read/write cache capability

It is typically understood that wide-striping can add approximately 10% additional I/O operations per second (IOPS) performance to the back-end system by using these mechanisms.

Another factor is the ability of the virtualized-storage subsystems to be scaled up or scaled out. For example, IBM System Storage DS8000 series is a scale-up architecture that delivers the best performance per unit, and the IBM FlashSystem series can be scaled out with enough units to deliver the same performance.

With a virtualized system, there is debate as to whether to scale out the back-end systems, or add them as individual systems behind IBM FlashSystem. Either case is valid. However, adding individual controllers is likely to allow IBM FlashSystem to generate more I/O, based on queuing and port-usage algorithms. It is recommended that you add each controller (I/O Group) of an IBM FlashSystem back end as its own controller; that is, do not cluster the IBM FlashSystem when it acts as an external storage controller behind another Spectrum Virtualize product, such as IBM SAN Volume Controller. This approach requires additional management IP addresses and configuration. However, it provides the best scalability in terms of IBM FlashSystem performance.

A significant consideration when you compare native performance characteristics between storage subsystem types is the amount of scaling that is required to meet the performance objectives. Although lower-performing subsystems can typically be scaled to meet performance objectives, the additional hardware that is required lowers the availability characteristics of the IBM FlashSystem cluster.

All storage subsystems possess an inherent failure rate. Therefore, the failure rate of a storage pool becomes the failure rate of the storage subsystem times the number of units.
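The multiplication rule above can be illustrated with a short Python sketch. It assumes that the units fail independently and that any single unit failure takes the pool offline; the per-unit probability that is used is a hypothetical value, not a measured one. Under those assumptions, the exact figure is 1 - (1 - p)^n, which the "rate times number of units" rule approximates well when p is small.

```python
def pool_failure_probability(unit_failure_probability: float, units: int) -> float:
    """Probability that at least one of `units` independent units fails."""
    if not 0.0 <= unit_failure_probability <= 1.0:
        raise ValueError("unit_failure_probability must be between 0 and 1")
    if units < 0:
        raise ValueError("units must not be negative")
    return 1.0 - (1.0 - unit_failure_probability) ** units


def linear_estimate(unit_failure_probability: float, units: int) -> float:
    """Rule of thumb from the text: per-unit failure rate times number of units."""
    return unit_failure_probability * units


if __name__ == "__main__":
    p = 0.01  # hypothetical per-unit failure probability over some period
    for n in (1, 2, 4, 8):
        exact = pool_failure_probability(p, n)
        approx = linear_estimate(p, n)
        print(f"units={n}  rule of thumb={approx:.4f}  exact={exact:.4f}")
```

The two figures stay close for small per-unit probabilities, which is why the simple multiplication is a reasonable planning estimate.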

The following actions are the starting preferred practices when planning storage pools for performance:

•Create a dedicated storage pool with dedicated resources if there is a specific performance application request.

•When using external storage in an Easy Tier enabled pool, do not intermix MDisks in the same tier with different performance characteristics.

•In a FlashSystem clustered environment, create storage pools with IOgrp or Control Enclosure affinity. That means you have to use only arrays or MDisks supplied by the internal storage that is directly connected to one IOgrp SAS chain only. This configuration avoids unnecessary IOgrp-to-IOgrp communication traversing the SAN and consuming Fibre Channel bandwidth.

•Use dedicated pools for image mode volumes.

|

Limitation: Image Mode volumes are not supported with DRPs.

|

•For Easy Tier-enabled storage pools, always allow free capacity for Easy Tier to deliver better performance.

•Consider implementing child pools when you must have a logical division of your volumes for each application set. Cases often exist where you want to subdivide a storage pool but maintain a larger number of MDisks in that pool. Child pools are logically similar to storage pools, but allow you to specify one or more subdivided child pools. Thresholds and throttles can be set independently per child pool.

|

Note: Before code version 8.4.2.0, throttling is not supported on DRP child pools.

|

Cache partitioning

The system automatically defines a logical cache partition per storage pool. Child pools do not count towards cache partitioning. The cache partition number matches the storage pool ID.

A cache partition is a logical threshold that stops a single partition from consuming the entire cache resource. This partition is provided as a protection mechanism and does not affect performance in normal operations. The partitioning takes effect only when a storage pool becomes overloaded, at which point it essentially slows down write operations in the pool to the speed that the back end can handle. Overloaded means that the front-end write throughput is greater than the throughput that the back-end storage in the pool can sustain. This situation should be avoided.

In recent versions of IBM Spectrum Control, the fullness of the cache partition is reported and can be monitored. You should not see partitions reaching 100% full. If you do, it suggests that the corresponding storage pool is overloaded, and workload should be moved from that pool or additional storage capability should be added to it.

4.2.3 Planning for capacity

Capacity planning is never an easy task. Capacity monitoring has become more complex with the advent of data reduction. It is important to understand the terminology used to report usable, used, and free capacity.

The terminology and its reporting in the GUI changed in recent versions and is listed in Table 4-3.

Table 4-3 Capacity terminology in 8.4.0

| Old term | New term | Meaning |
| Physical Capacity | Usable Capacity | The amount of capacity that is available for storing data on a system, pool, array, or MDisk after formatting and RAID techniques are applied. |
| Volume Capacity | Provisioned Capacity | The total capacity of all volumes in the system. |
| N/A | Written Capacity | The total capacity that is written to the volumes in the system. This is shown as a percentage of the provisioned capacity and is reported before any data reduction. |

The usable capacity describes the amount of capacity that can be written to on the system and includes any back-end data reduction (that is, the “virtual” capacity is reported to the system).

|

Note: In DRP, the rsize parameter, used capacity, and tier capacity are not reported per volume. These items are reported only at the parent pool level because of the complexities of deduplication capacity reporting.

|

Figure 4-6 Example dashboard capacity view

For FlashCore Modules (FCMs), this is the maximum capacity that can be written to the system. However, the smaller capacity drives (4.8 TB) report 20 TiB as usable. The actual usable capacity might be lower, depending on the data reduction that the FCM compression actually achieves.

Plan for the default 2:1 compression ratio, which gives an average of approximately 10 TiB of usable space for these modules. If you plan to provision to the maximum stated usable capacity when the small capacity FCMs are used, carefully monitor the actual data reduction.

The larger FCMs, 9.6 TB and above, report just over 2:1 usable capacity; that is, 22, 44, and 88 for the 9.6, 19.2, and 38.4 TB modules, respectively.
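To make the ratios behind these figures explicit, the following Python sketch divides the reported usable capacity by the module size and compares it with a 2:1 planning figure. The numbers come from the text; the text mixes TB and TiB and gives no unit for the larger modules, so treating the values as directly comparable is an assumption, and the function names are illustrative only.

```python
# Module size (TB) -> capacity reported as usable, as quoted in the text.
# Assumption: the figures are treated as if they shared one unit.
REPORTED_USABLE = {4.8: 20.0, 9.6: 22.0, 19.2: 44.0, 38.4: 88.0}

PLANNING_RATIO = 2.0  # the default 2:1 compression planning figure


def implied_ratio(module_size: float, reported_usable: float) -> float:
    """Data reduction ratio needed to actually fill the reported capacity."""
    return reported_usable / module_size


def planned_capacity(module_size: float, ratio: float = PLANNING_RATIO) -> float:
    """Capacity to plan for when only `ratio`:1 compression is assumed."""
    return module_size * ratio


if __name__ == "__main__":
    for size, reported in REPORTED_USABLE.items():
        print(
            f"{size:>5} TB module: reported {reported:>5.1f}, "
            f"implied ratio {implied_ratio(size, reported):.2f}:1, "
            f"plan for {planned_capacity(size):.1f} at 2:1"
        )
```

The small module stands out because its reported capacity implies a ratio of more than 4:1, which is why closer monitoring is suggested for it.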

The provisioned capacity shows the total provisioned capacity in terms of the volume allocations. This is the “virtual” capacity that is allocated to fully allocated and thin-provisioned volumes. In theory, it is the capacity that could be written if the hosts that use the volumes filled all of them to 100%.

The written capacity is the actual amount of data that has been written into the provisioned capacity.

•For fully-allocated volumes, the written capacity is always 100% of the provisioned capacity.

•For thin-provisioned volumes (including data-reduced volumes), the written capacity is the actual amount of data that the host writes to the volumes.
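The relationship between provisioned and written capacity can be sketched in Python as follows. The `Volume` class, its field names, and the sample values are hypothetical and serve only to illustrate the two rules above; they do not correspond to any system API.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class Volume:
    name: str
    provisioned_gib: float
    fully_allocated: bool
    host_written_gib: float = 0.0  # only meaningful for thin-provisioned volumes

    @property
    def written_gib(self) -> float:
        # Fully allocated volumes always count as 100% written.
        if self.fully_allocated:
            return self.provisioned_gib
        # Thin-provisioned volumes count what the host actually wrote.
        return min(self.host_written_gib, self.provisioned_gib)


def capacity_summary(volumes: Iterable[Volume]) -> Tuple[float, float, float]:
    """Return (provisioned GiB, written GiB, written as % of provisioned)."""
    volumes = list(volumes)
    provisioned = sum(v.provisioned_gib for v in volumes)
    written = sum(v.written_gib for v in volumes)
    percent = 100.0 * written / provisioned if provisioned else 0.0
    return provisioned, written, percent


if __name__ == "__main__":
    sample = [
        Volume("full01", provisioned_gib=100, fully_allocated=True),
        Volume("thin01", provisioned_gib=300, fully_allocated=False, host_written_gib=60),
    ]
    print(capacity_summary(sample))
```

As in the reporting that is described earlier, the percentage is calculated before any data reduction is applied.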

The final set of capacity numbers relates to the data reduction. This is reported in two ways:

•As the savings from DRP (compression and deduplication) provided at the DRP level, as shown in Figure 4-7 on page 138.

•As the FCM compression.

Figure 4-7 Compression Savings dashboard report

4.2.4 Extent size considerations

When adding MDisks to a pool they are logically divided into chunks of equal size. These chunks are called extents and are indexed internally. Extent sizes can be 16, 32, 64, 128, 256, 512, 1024, 2048, 4096, or 8192 MB. IBM Spectrum Virtualize architecture can manage 2^22 extents for a system, and therefore the choice of extent size affects the total amount of storage that can be addressed. For the capacity limits per extent, see V8.4.2.x Configuration Limits and Restrictions for IBM FlashSystem 9200.
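As a rough illustration of how the extent size bounds the addressable capacity, the following Python sketch multiplies each extent size by the 2^22 extent limit that is stated above. It assumes that the sizes listed in MB are binary units (MiB); the constant and function names are illustrative only, and the published configuration limits remain the authoritative source.

```python
MAX_EXTENTS = 2 ** 22  # extents that the system can manage, per the text
EXTENT_SIZES_MB = (16, 32, 64, 128, 256, 512, 1024, 2048, 4096, 8192)

MIB_PER_TIB = 1024 * 1024


def max_addressable_tib(extent_size_mb: int) -> float:
    """Upper bound on addressable capacity (TiB) for a given extent size.

    Assumption: the extent size is expressed in binary megabytes (MiB).
    """
    if extent_size_mb not in EXTENT_SIZES_MB:
        raise ValueError(f"unsupported extent size: {extent_size_mb} MB")
    return extent_size_mb * MAX_EXTENTS / MIB_PER_TIB


if __name__ == "__main__":
    for size in EXTENT_SIZES_MB:
        print(f"{size:>5} MB extents -> {max_addressable_tib(size):>8,.0f} TiB")
```

Under this assumption, the smallest extent size addresses 64 TiB and each doubling of the extent size doubles that bound.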

When planning for the extent size of a pool, remember that you cannot change the extent size later; it must remain constant throughout the lifetime of the pool.

For pool-extent size planning, consider the following recommendations:

•For standard pools, usually 1 GB is suitable.

•For DRPs, use 4 GB (see 4.6, “Easy Tier, tiered and balanced storage pools” on page 169 for further considerations on extent size on DRP).

•With Easy Tier enabled hybrid pools, consider smaller extent sizes to better utilize the higher tier resources and therefore provide better performance.

•Keep the same extent size for all pools if possible. The extent-based migration function is not supported between pools with different extent sizes. However, you can use volume mirroring to create copies between storage pools with different extent sizes.

|

Limitation: Extent-based migrations from standard pools to DRPs are not supported unless the volume is fully allocated.

|

4.2.5 External pools

IBM FlashSystem-based storage systems have the ability to virtualize external storage systems. This section describes special considerations when configuring storage pools with external storage.

Availability considerations

IBM FlashSystem external storage virtualization feature provides many advantages through consolidation of storage. You must understand the availability implications that storage component failures can have on availability domains within the IBM FlashSystem cluster.

IBM Spectrum Virtualize offers significant performance benefits through its ability to stripe across back-end storage volumes. However, consider the effects that various configurations have on availability.

When you select MDisks for a storage pool, performance is often the primary consideration. However, in many cases, the availability of the configuration is traded for little or no performance gain.

Remember that IBM FlashSystem must take the entire storage pool offline if a single MDisk in that storage pool goes offline. Consider an example where you have 40 external arrays of 1 TB each for a total capacity of 40 TB with all 40 arrays in the same storage pool.

In this case, you place the entire 40 TB of capacity at risk if one of the 40 arrays fails (which causes the storage pool to go offline). If you instead spread the 40 arrays over several storage pools, an array failure (an offline MDisk) affects less storage capacity, which limits the failure domain.
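The effect of spreading arrays over more pools can be sketched in Python. The example reuses the 40 arrays of 1 TB each from the text and assumes that the arrays are divided evenly between the pools; the function name and the pool counts that are shown are illustrative only.

```python
def capacity_at_risk_tb(total_arrays: int, array_size_tb: float, pools: int) -> float:
    """Capacity that goes offline when one array fails.

    Assumes the arrays are spread evenly over `pools` storage pools and that
    a single offline MDisk takes its whole pool offline.
    """
    if pools < 1 or total_arrays < 1:
        raise ValueError("total_arrays and pools must be at least 1")
    if total_arrays % pools != 0:
        raise ValueError("arrays must divide evenly between the pools")
    arrays_per_pool = total_arrays // pools
    return arrays_per_pool * array_size_tb


if __name__ == "__main__":
    for pools in (1, 2, 4, 8):
        at_risk = capacity_at_risk_tb(40, 1.0, pools)
        print(f"{pools} pool(s): {at_risk:.0f} TB offline if one array fails")
```

Smaller failure domains come at the cost of narrower striping per pool, which is the availability and performance trade-off that this section describes.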

To ensure optimum availability with well-designed storage pools, consider the following preferred practices:

•Each storage pool should contain only MDisks from a single storage subsystem. An exception exists when you are working with Easy Tier hybrid pools. For more information, see 4.6, “Easy Tier, tiered and balanced storage pools” on page 169.

•It is suggested that each storage pool contains only MDisks from a single storage tier (SSD or Flash, Enterprise, or NL_SAS) unless you are working with Easy Tier hybrid pools. For more information, see 4.6, “Easy Tier, tiered and balanced storage pools” on page 169.

IBM Spectrum Virtualize does not provide any physical-level data redundancy for virtualized external storage. The availability characteristics of the storage subsystems’ controllers have the most impact on the overall availability of the data that is virtualized by IBM Spectrum Virtualize.

Performance considerations

Performance is a determining factor, where adding IBM FlashSystem as a front-end results in considerable gains. Another factor is the ability of your virtualized storage subsystems to be scaled up or scaled out. For example:

•IBM System Storage DS8000 series is a scale-up architecture that delivers the best performance per unit.

•IBM FlashSystem series can be scaled out with enough units to deliver the same performance.

A significant consideration when you compare native performance characteristics between storage subsystem types is the amount of scaling that is required to meet the performance objectives. Although lower-performing subsystems can typically be scaled to meet performance objectives, the additional hardware that is required lowers the availability characteristics of the IBM FlashSystem cluster.

All storage subsystems possess an inherent failure rate. Therefore, the failure rate of a storage pool becomes the failure rate of the storage subsystem times the number of units.

Number of MDisks per pool

The number of MDisks per pool can also affect availability and performance.

The backend storage access is controlled through MDisks where the IBM FlashSystem acts like a host to the backend controller systems. Just as you have to consider volume queue depths when accessing storage from a host, these systems must calculate queue depths to maintain high throughput capability while ensuring the lowest possible latency.

For more information about the queue depth algorithm, and the rules about how many MDisks to present for an external pool, see “Volume considerations” on page 100.

That section describes how many volumes to create on the back-end controller (which are seen as MDisks by the virtualizing controller) based on the type and number of drives (such as HDD and SSD).

4.3 Data reduction pools best practices

This section describes the DRP planning and implementation best practices.

For information about estimating the deduplication ratio for a specific workload, see “Determine if your data is a deduplication candidate” on page 124.

4.3.1 Data reduction pools with IBM FlashSystem NVMe attached drives

|

Important: If you plan to use DRP with deduplication and compression enabled with FCM storage, assume zero extra compression from the FCMs. That is, use the reported physical or usable capacity from the RAID array as the usable capacity in the pool and ignore the above maximum effective capacity.

The reason for assuming zero extra compression from the FCMs is that the DRP function sends compressed data to the FCMs, and that data cannot be compressed further. Therefore, the data reduction (effective) capacity savings are reported at the front-end pool level, and the back-end pool capacity is almost 1:1 with the physical capacity.

Some small amount of other compression savings might be seen because of the compression of the DRP metadata on the FCMs.

|