Chapter 15

Presenting Evidence and Justifying Opinions

In This Chapter

Spotting everyday unreliable evidence

Questioning scientists

Struggling with statistics

Physicists have shown that all matter consists of a few basic particles ruled by a few basic forces. Scientists have also stitched their knowledge into an impressive, if not terribly detailed, narrative of how we came to be . . . I believe that this map of reality that scientists have constructed, and this narrative of creation, from the big bang through the present, is essentially true. It will thus be as viable 100 or even 1,000 years from now as it is today. I also believe that, given how far science has already come, and given the limits constraining further research, science will be hard-pressed to make any truly profound additions to the knowledge it has already generated. Further research may yield no more great revelations or revolutions but only incremental returns.

—John Horgan (The End of Science: Facing the Limits of Knowledge in the Twilight of the Scientific Age, 1996, Addison-Wesley)

Sounds pretty authoritative? Since John Horgan, sometime editor of Scientific American, wrote these words, astronomers have decided that about 90 per cent of the universe consists of previously unappreciated ‘dark matter’, not the stuff John Horgan was thinking of at all. Plus, even familiar ‘facts’ such as the number of planets in the Solar System have been put up for debate.

Astronomy's a good example of a ‘hard’ science where many people think that ‘facts are facts’ and opinions are at their best ‘facts in waiting’. But in plenty of cases, the facts turn out to be a matter of opinion and the consensus view changes over time.

In this chapter I have a thorough look at the difference between facts and opinions, in everyday life as well as the realm of ‘scientific knowledge’, to try to separate those that deserve your respect from those that don't. I also cover the confusions that can result from numbers and statistics and give you a chance to test your own Critical Thinking skills with a look at the debate on whether smoke alarms save lives.

Challenging Received Wisdom about the World

I don't want to startle you (well maybe a little!) but an awful lot of what people tell you they know for certain is wrong. This section will give you some new perspectives on how facts and opinions become blurred and impact on the decisions you take in your everyday life, such as what to do if you have a cold, who you vote for or what you choose to eat.

Lesson One in Critical Thinking is that you need to always be aware that what you think on any issue may be wrong. For most people, that bit's easy. That's why students often look things up in encyclopedias instead, as do scholarly authors and journalists.

Even prestigious academic journals regularly publish papers that are factually challenged. Some of the views ought to be suspect, because they're facilitated by large research grants, or based on studies conducted by activist campaigners. Remember that claim that the Himalayan glaciers would melt within 30 years? That went past the highest and most distinguished panel of scientific experts ever: the Intergovernmental Panel on Climate Change. Yet the original research was the work of a couple of green campaigners and amounted to little more than speculation.

Investigating facts and opinions in everyday life

Definitely, at some point or other, in order to be a Critical Thinker, you have to decide what is a ‘fact’ and what is a subjective opinion. The need to do this touches every area of life, far more than you probably realise. (See the nearby sidebar ‘Defending society against science’ for some warnings.)

Part of living in a modern technological age is that nobody understands the world around them — how it works, who runs it and why — and so they rely on other people to understand it for them. That's why when you research a topic, you head straight for a book, and why that book relies on the views of other books and other people, in a long chain of research and opinions. Read enough such things and you become an expert — but only in a tiny area. Very few areas are left to ‘ordinary folk’ to have an opinion.

In all areas of life — not just in the artificial, protected world of student essays — people need Critical Thinking skills. In this section I give you some examples to illustrate why. In other words, I construct an argument using hypothetical examples as evidence.

Treating a troubled child

If you're a parent and you have children who just don't seem to want to behave in school or at home, an expert opinion from a psychoanalyst is often part of the solution. In the US particularly, these experts frequently diagnose medical disorders such as attention deficit disorder (usually abbreviated to ADD or sometimes ADHD) and recommend drugs such as Ritalin or Adderall to alter the behaviour of children and young people. Some of the children are as young as age 3!

The drugs, despite being stimulants, are considered to have desirable effects in terms of curbing ‘hyperactivity’ and helping the individual to focus, work and learn. Experts have prescribed them for millions of children, as well as school and college students. With so many experts agreeing, presumably the drugs work, and at the very least the disorder is real enough. But where's the line between fact and opinion here?

Sceptical voices certainly exist, but more remarkably, in 2014, the most consulted professional reference work for psychiatry itself decided that the ‘disorder’ didn't really exist. One of the most respected experts in the area, Dr Bruce Perry, told the London newspaper The Observer that the label of ADHD covered such a broad set of symptoms that it would cover perfectly normal people. You can't cure people of being normal!

Choosing who to trust

My second everyday example is that big issue of ‘who to trust’. Which programs or columnists to rely on to find out about what's really going on around the world? Where to turn for medical advice? And, of course, which journalist to read for good advice on who to vote for. Rather than decide for yourself or maybe chat to some friends, wouldn't it be better to take some of the insightful analysis from highly respected (and highly paid) newspaper columnists or TV and radio pundits?

Actually, research shows that pundits have less influence than we (or indeed they) think. The reason is that people choose the pundit (or the newspaper) that says the kind of things they think anyway. We all do this, so don't be ashamed! But nonetheless deciding to read certain newspaper columns or watch certain TV programs means you start to soak up a flood of information that, well, you can't check, but you assume is true anyway.

Advice offered by a newspaper columnist, or in an editorial for that matter, that the way to prosperity lies in building lots more houses and sending Polish plumbers home, will be presented as sound reasoning — but may really be windy rhetoric and hot air, maybe fuelled by errors or gaps in the writer's research and topped by popular prejudices. Even if the articles are better than that, readable summaries of complex issues — even then, they're just not things to rely on for accuracy or as evidence.

Fixing a sickly car

My final example involves another everyday problem — who do you believe when your car breaks down? Yourself, the neighbour or the garage mechanic?

This example illustrates how being much more knowledgeable (as someone who works every day with cars certainly is) does not necessarily equate with being right.

I admit I'd take my car straight to the garage — no tinkering under the hood with a pair of nylon tights (not to wear, that is, but to replace the fan belt) or poking a long piece of wire into engine orifices to spot any build-up of carbon.

But although I rely on garage mechanics to mend my car, I certainly don't believe automatically what they say. I've had too many completely contradictory opinions on the same car to imagine that whatever mechanics do know, it's somehow infallible and beyond sceptical challenge. As many a consumer TV programme has shown, a car secretly prepared to have no faults or (more excitingly) to have one dangerous one, can be taken around as many garages as you like and have as many different expert diagnoses (and expensive repairs) as you choose to pay for. Only very occasionally, it seems, does the diagnosis and repair correspond to the fault. Put another way, it pays to be a bit sceptical next time someone says your ‘big end’ needs replacing.

However, if people sometimes suspect that scruffy dungaree-wearing mechanics aren't as expert as they seem, the experts in suits still get automatic respect. Expertise is all about appearances. Check out the nearby sidebar ‘Trust me — I have a PhD’ for more examples.

‘Eat my (fatty) shorts!’: What is a healthy diet?

This section describes some food controversies to illustrate how medical experts sometimes present opinions as facts.

Don't be confused by its constant claims to the contrary: medicine is just as much a creature of ‘fashion’ as any teenager buying into the latest fad. Take one myth you probably assume is a fact: fat in food is dangerous for you. A consensus built up around this idea during the 1970s (along with that conviction that enormous flares on trouser legs were cool). This consensus (the food one!) still exists, even though it never had any scientific basis, by which I mean hard facts and careful research. (You can read more about this in Chapter 2.) The key point, however, is this: the evidence for fatty foods causing heart disease involved cherry-picking the research. Countries that seemed to show the expected ‘fatty diets = high levels of heart disease’ link were included in the final survey, and countries where the evidence pointed the other way were excluded.

This research preference for ‘positive’ outcomes, which are often also the findings that fit the researcher's (or company's) requirements, is the elephant in the room for ‘evidence-based medicine’.

When a view becomes so widespread that everyone you come across thinks it must be true, you have a ‘consensus’. But unfortunately, it still doesn't make the view true. Notice that no one is lying about the drugs — but the evidence has been skewed and distorted.

Digging into Scientific Thinking

It's easy to assume that, with so many things being discovered every day and the internet apparently providing one-click answers to everything you can think of to ask, there must be a simple, and hence ‘knowable’, answer to everything. This section is about how, in fact, many questions have no answer. Paradoxes and contradictions sit at the heart of maths and physics, every bit as much as they do in areas of life where you might more expect to find them, such as politics or even human relationships. Sounds weird? Read on! In the process you will pick up some big ideas for the evaluation of even little debates. One such idea, invaluable for Critical Thinkers, is to recognise that arguments that neglect the complexity of issues often mislead, and that generalisations need to be openly admitted and, where necessary, abandoned.

Changing facts in a changing world

The idea that facts are facts and fixed forever is one of the things that makes them so useful and seem so very different from opinions (which people change all the time). That's certainly one of the assumptions in the famous claim (well, within philosophy anyway) of the French mathematician Pierre Simon Laplace back in the 18th century that knowledge of facts bestows almost God-like powers. He wrote:

We may regard the present state of the universe as the effect of its past and the cause of its future. An intellect which at a certain moment would know all forces that set nature in motion, and all positions of all items of which nature is composed, if this intellect were also vast enough to submit these data to analysis, it would embrace in a single formula the movements of the greatest bodies of the universe and those of the tiniest atom; for such an intellect nothing would be uncertain and the future just like the past would be present before its eyes.

—Pierre Simon Laplace (A Philosophical Essay on Probabilities, 1951, translated into English by Truscott, FW and Emory, FL, Dover Publications)

If Laplace were alive today, Google's immense collection of facts would appear to be getting near to his dream! Or would it? Problems exist with the idea that facts are facts and that you can dream one day of collecting so many of them that you can start to predict everything else from the ones that you have already.

Take the work of Edward Lorenz, a mathematician and a meteorologist. In the 1960s, as weather modelling was starting, he quite by chance found that if he adjusted the numbers entered into his weather models by even a tiny fraction, the weather predicted could change from another sunny day in Nevada to a devastating cyclone in Texas.
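The kind of sensitivity Lorenz stumbled on can be reproduced in miniature. The following is only a sketch under stated assumptions: it does not use Lorenz's weather model but a much simpler stand-in, the logistic map x → 4x(1 − x), and the starting values, step count and function name are all made up for illustration.

```python
# Illustrative stand-in for a weather model: the logistic map x -> 4x(1 - x).
# This is NOT Lorenz's model; it is a simpler system that shows the same
# sensitivity to tiny changes in the starting numbers.

def trajectory(x0, steps):
    """Return the list of values produced by iterating the map from x0."""
    values = [x0]
    for _ in range(steps):
        x = values[-1]
        values.append(4.0 * x * (1.0 - x))
    return values

# Two starting points that differ by one part in ten billion (assumed values).
run_a = trajectory(0.2, 60)
run_b = trajectory(0.2 + 1e-10, 60)

# Gap between the two runs at every step.
gaps = [abs(a - b) for a, b in zip(run_a, run_b)]

for step in (0, 10, 20, 30, 40, 50, 60):
    print(f"step {step:2d}: gap = {gaps[step]:.3e}")
```

Running it shows the two runs agreeing closely for the first few steps and then drifting apart until they bear no resemblance to each other, which is the practical obstacle to long-range prediction that the paragraph above describes.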

Or take the length of the lunar month. You've got a calendar on your wall that seems pretty reliable, but (as Hindu priests realised thousands of years ago) the exact time between two full moons as seen from Earth is actually impossible to ever calculate, because of what scientists call ‘feedback effects’. The moon is affected by both the Earth and the Sun, and in turn affects the movements of the Earth.

Clearly, many things in the world aren't predictable in practice or in theory. In fact a lot of things that affect the world aren't just hard to express precisely — they're impossible, from weather patterns to lunar months. And chaos reigns from stock movement fluctuations to the rise and fall of populations or the spreading of diseases.

In terms of weather, as Edward Lorenz memorably put it, the mere flap of a butterfly's wing in one country can ‘cause’ a hurricane a week later somewhere else, as a cascade of tiny effects changes outcomes at higher and higher levels.

Here's a quote to muse on:

The truth is that science was never really about predicting. Geologists do not really have to predict earthquakes; they have to understand the process of earthquakes. Meteorologists don't have to predict when lightning will strike. Biologists do not have to predict future species. What is important in science and what makes science significant is explanation and understanding.

—Noson Yanofsky (The Outer Limits of Reason: What Science, Mathematics, and Logic Cannot Tell Us, 2013, MIT Press)

Teaching facts or indoctrinating?

Sceptical philosopher Paul Feyerabend wrote that facts, especially ‘scientific facts’, are taught at a very early age and in the same manner as religious ‘facts’ were taught to children for many, many years. Nowadays, people frown at children being fed ‘dogma’ by priests in religious schools, yet the truths of science and maths are held in such high respect that in many subject areas no attempt is made to awaken the critical abilities of children or students. Indeed, Feyerabend, a university teacher himself, says that in his experience at universities the situation is even worse, because what he calls the indoctrination is carried out in a much more systematic manner.

You probably think that scientists are quite above indoctrination — forcing their views on others — and that such things are objective, neutral and, well ‘scientific’. Aren't their theories, according to the influential account of Karl Popper, only accepted after they're thoroughly tested?

. . . we shall always find what we want: we shall look for, and find, confirmations, and we shall look away from, and not see, whatever might be dangerous to our pet theories.

—Karl Popper (Poverty of Historicism)

Popper described himself as a critical rationalist, meaning that he was critical of philosophers who looked for certainty through logic. He argued that no ‘theory-free’, infallible observations exist, but instead that all observation is theory-laden, and involves seeing the world through the distorting glass (and filter) of a pre-existing conceptual scheme. To cut a long story short, all measurements and observations are a matter of opinion.

All scientific theories are like this, making universal claims for their truth unverifiable.

Tackling the assertibility question

How do you separate out cranky views that aren't supported by evidence from reasonable theories that may be worth serious consideration? This problem is sometimes called the assertibility question (AQ), because you're asking what evidence allows you to assert that the claim is true.

Here's a useful checklist for scientific theories from a recent book called Nine Crazy Ideas in Science (Princeton UP 2002). Professor Robert Ehrlich advises everyone to test theories considered controversial by ‘mainstream’ scientists, as he puts it, on a ‘Cuckoo scale’. He offers various questions to ask about theories such as ‘radiation exposure is good for you’, or ‘distributing more guns reduces crime’, which I sum up as follows:

- How well does the idea fit with common sense? Is the idea nutty?

- Who proposed the idea, and does the person have a built-in bias towards it being true?

- Do proposers use statistics in an honest way? Do they back it up with references to other work that supports the approach?

- Does the idea explain too much — or too little — to be useful?

- How open are the proponents of the idea about their methods and data?

- How many free parameters exist (see the nearby sidebar ‘Parameters: The elephant in the theory’ for an explanation of the term)?

Ehrlich thinks that these questions will root out dodgy theories, and most likely they would, along with all other new ideas. But Ehrlich has his own dangerous assumptions. He seems to assume that orthodox opinion is to be preferred to new ideas, and thereby shows a surprising blindness to the true history of science. Yesterday's cuckoo theory is today's orthodoxy and today's orthodoxy is tomorrow's cuckoo theory.

Resisting the pressure to conform

A much-cited experiment by the American social psychologist, Solomon Asch, back in the 1950s, found that people are quite prepared to change their minds on even quite straightforward factual matters in order to ‘go along with the crowd’ or in many cases, the experts.

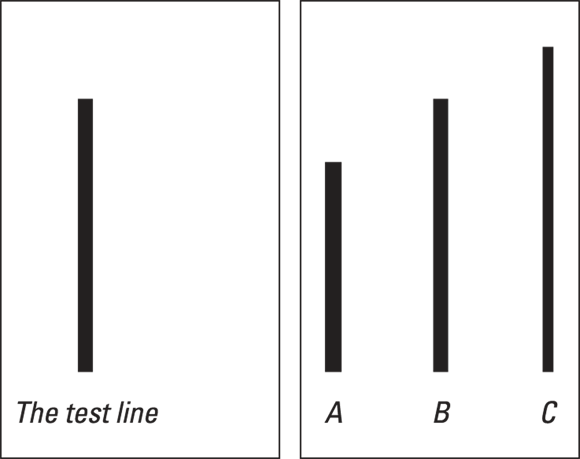

Dr Asch showed a group of volunteers a card with a line on it, and then a card with three lines drawn on it, and asked them to determine which of the lines matched the first card (see Figure 15-1). Unknown to one of the group, all the other participants weren't, in fact, volunteers, but stooges. These people had been previously instructed to assert things that were obviously not the case, for instance, by choosing a line that was obviously shorter than the one sought, or that was a bit longer. Revealingly, when enough of their companions told them to do so, around one third of people were prepared to ‘change their minds’ and (disregarding all the evidence) bend pliantly to peer pressure.

Figure 15-1: The lines test: Which line do you think is the match? Sure?

Cheating when choosing lines is one thing, but changing your answer to fit in with everyone else on complicated issues you don't really understand is, well, sort of understandable. You can't really blame people for doing so, especially when to do otherwise would mean exploring scientific issues they're unused to processing.

On the other hand, most things aren't as complicated as particular experts like to make out. Experts such as Albert Einstein, and the founding father of modern atomic theory, Ernest Rutherford, were highly concerned to ensure, at least in principle, that anyone could understand their theories.

Following the evidence, not the crowd

In many areas of life, people are in the position of having to make irrevocable decisions on the strength of others’ advice.

China has a popular new antiques TV show called Collection World in which amateur collectors meet professional experts. The amateurs get their most prized Qing dynasty vases, or delicately carved wooden chests, authenticated (authoritatively dated and valued by the experts). The twist is, however, that if the experts say the work is a modern reproduction (a fake) the owner must immediately take a sledgehammer to their pride and joy, and smash it to pieces!

But the ‘expert panel’ is in reality constructed of people who had no knowledge of antiquities: just people who happened to be handy — researchers or technicians. How many ancient vases and works of art have been smashed for the amusement of TV audiences, no one will ever know. That's showbiz! If watching it makes you want to cry out — ‘Stop! That's a work of art, you cynical frauds!’ — then the programme is twice as much fun. After all, even the best experts make mistakes, don't they. . . . Tune in next week!

Take big questions like: When does life begin? When does life end? What should people do in between those two points? These are scientific questions, yes, but they are also ethical, human issues. An interaction is necessary — a kind of democratic interaction — between those who say they know, and those of us who rely on them to be our guides.

Rules of the scientific journal: Garbage-in, garbage-out

One of the most downloaded papers in recent years on scientific method is ‘Why Most Published Research Findings Are False’, by John Ioannidis, a Greek-American professor of Hygiene and Epidemiology. He writes that across the whole range of supposedly precise, objective sciences a research claim is more likely to be false than true. Moreover, he adds that in many scientific areas of investigation today, research findings are more often simply accurate reflections of the latest fashionable view (and bias) in the area.

A number of reasons exist for this situation, which all can be shown quite objectively, by considering the context of modern scientific research. Much hinges on the correct use of statistics, and scientists are no better at this than anyone else. In particular, the smaller the studies conducted and the smaller the effect sizes in a scientific field, the less likely the research findings are to be true. The later section ‘Counting on the Fact that People Don't Understand Numbers: Statistical Thinking’ illustrates how statistics can mislead.

Finally, Professor Ioannidis warns, the greater the flexibility in designs, definitions, outcomes and analytical modes in a scientific field, the more dodgy the research findings. This is simply because flexibility increases the potential for transforming what would be ‘negative’ results into ‘positive’ results. All this explains why you often read in the paper or see on the TV news about some major new discovery making scientists very excited — followed some months later by a teeny-weeny story about the findings being ‘not quite all they seemed at first to be’.
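The arithmetic behind the point about small studies can be sketched briefly. In his paper, Ioannidis gives the chance that a ‘positive’ finding is true as PPV = (1 − β)R / (R − βR + α), where R is the pre-study odds that a tested relationship is real, 1 − β is the study's power and α is the false-positive rate. The function name and the example numbers below are assumptions chosen purely for illustration, and the sketch leaves out the bias term that the paper adds later.

```python
def positive_predictive_value(prior_odds, power, alpha=0.05):
    """Chance that a statistically significant finding is actually true.

    prior_odds: R, ratio of true to false relationships tested in a field
    power:      1 - beta, chance a study detects an effect that is real
    alpha:      chance a study 'detects' an effect that is not there
    """
    true_positives = power * prior_odds   # (1 - beta) * R
    false_positives = alpha               # alpha * 1 (per false relationship)
    return true_positives / (true_positives + false_positives)

# Hypothetical scenarios (illustrative numbers, not taken from the chapter).
large_study = positive_predictive_value(prior_odds=1.0, power=0.8)
small_study = positive_predictive_value(prior_odds=0.1, power=0.2)

print(f"well-powered study, even odds:     {large_study:.2f}")
print(f"small study, long-shot hypothesis: {small_study:.2f}")
```

With these made-up inputs, the underpowered study of an unlikely hypothesis produces ‘positive’ findings that are more often false than true, while the well-powered study of a plausible one does not.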

Proving it!

All reasons for claims must answer the assertibility question (AQ) (see the earlier section ‘Tackling the assertibility question’): ‘How do you know that such-and-such a claim is true?’ You're asking what evidence allows someone to assert that the claim is true. You ask it when you're presented with a claim and the proponent should respond with a reason to believe the claim is true.

Claims are rarely as objective as they seem. In some cases, no evidence is produced, because none is needed. Instead, the effort is put into showing that the conclusion follows from certain stated assumptions.

- As neutral observer: Looking at an argument put by someone else.

- As participant: Trying to judge your own argument.

- As referee: Looking at arguments being debated and perhaps evaluated by others, say, in a text.

If it's your argument, you need to provide sufficient evidence to support it. Sufficient is something of a value judgement though — do you really need to prove that say, water flows downhill, in order to argue that the collapse of a dam will threaten the village just underneath it?

In both cases you need to judge whether evidence offered is true and relevant. In my collapsing dam example, discussing the political situation in the country isn't relevant unless you can show that there are direct links between that and the issue at hand. There may well be! For example, the government may be in the habit of ignoring earthquake risks in order to increase the amount of electricity generated. In this case, politics is part of the apparently objective task of evaluating an argument.

The other part of evaluating an argument is checking that the structure of the argument is valid. This is much less of a matter of judgement, and much more a matter of applying a rulebook. A valid, sound and logical argument has to be free of fallacies (as I discuss in Chapter 13).

Critical Thinking is about bringing things upfront that may otherwise remain in the back of your head. Another advantage of putting them upfront is to make sure that they're going on and have not been overlooked or forgotten!

- Get the feel of the shape of the argument — is it a chain of reasoning or a piecing together of a jigsaw of evidence? Assign weights to reasons and note any weak points in the logic of the argument.

- Reverse the conclusion to see how this perspective changes your view of the argument and evidence presented. It should be in direct conflict: if not, the reasons aren't persuasive after all.

- Sort the reasons into similar kinds. Look for purely logical reasons to support the form of the argument, but also examine the quality of the evidence and the methodology behind any statistics.

- Treat methodological assumptions with especial care. In many, many practical areas, the methodology chosen determines the results that emerge — yet the validity of the methods themselves isn't challenged. Make sure to look for bias in the starting points that decide the methodology.

- Use mind maps (see Chapter 7) and doodles.

Counting on the Fact that People Don't Understand Numbers: Statistical Thinking

If a man stands with his left foot on a hot stove and his right foot in a refrigerator, the statistician would say that, on the average, he's comfortable.

—Walter Heller (quoted in Harry Hopkins, The Numbers Game: the Bland Totalitarianism, 1973, Brown & Co)

Strangely, many people find statistical flukes perturbing and extraordinary: for example, a run of 40 ‘tails’ when tossing a coin or a set of perfect hands dealt out in Bridge (all spades to one, all hearts to another and so on).

I say ‘strangely’ because such events and such arrangements are no less likely than any other: the significance is only in people's minds and yet they think it extraordinary. (In a sense, any sequence is unique, but only some of them seem to form a pattern.) Plus, people do put an absurd amount of faith in ‘rare events’ never happening. As George Carlin, the US social critic, puts it: ‘Think about how stupid the average person is; now realise half of them are dumber than that.’
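The claim that a run of 40 tails is no less likely than any other exact sequence can be checked with a few lines of arithmetic. This is a minimal sketch assuming a fair coin; the ‘mixed’ sequence and the function name are invented for the illustration.

```python
from fractions import Fraction
from math import comb

TOSSES = 40

def probability_of_exact_sequence(sequence):
    """Probability of one specific heads/tails sequence from a fair coin."""
    return Fraction(1, 2) ** len(sequence)

all_tails = "T" * TOSSES
# An arbitrary mixed sequence of the same length, made up for this example.
mixed = "HTTHTHHT" * 5

p_tails = probability_of_exact_sequence(all_tails)
p_mixed = probability_of_exact_sequence(mixed)
print("same probability:", p_tails == p_mixed)

# Why the run still *looks* special: only one sequence is 'all tails',
# but a great many different sequences contain exactly 20 heads.
print("sequences with exactly 20 heads:", comb(TOSSES, 20))
```

The two exact sequences come out equally (im)probable. What differs is how many sequences share a description such as ‘about half heads’, which is why only some outcomes strike people as a pattern.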

George mocks those of us whose eyes glaze over a bit when statistics are introduced. But well, facts and stats are hard to separate so everyone really has to learn strategies for dealing with numerical claims. The first step though is to become aware of the issue. So now try assessing this real-life issue.

The fire service estimates that you are twice as likely to die in a fire in a house with no smoke alarm as in a house that has one. The figure is based on US research that shows that between 1975 and 2000 the use of smoke alarms rose from less than 10 per cent to at least 95 per cent, and that over the same period the number of home fire deaths was cut in half.

In the average year in the UK, the fire service is called out to over 600,000 fires. These result in over 800 deaths and over 17,000 injuries. Many of the fires are in houses with no smoke alarm fitted. If people had an early warning and were able to get out in time many lives could be saved and injuries prevented. Smoke alarms give this kind of early warning. Conclusion: Smoke alarms save lives.

Assuming of course that the argument does contain a flaw, which of the following objections best describes that flaw:

- 1: A factual objection: Actually, only about 50,000 of the 600,000 emergency call outs to fires are to household fires, and so the great bulk of deaths and injuries can't possibly be prevented no matter how many alarms are fitted in homes.

- 2: An objection on the principles: Millions of people never experience a household fire. Why should they be told that they have to fit a smoke alarm because of the tiny minority who do?

- 3: A logical objection: Correlation isn't causation. The drop in lives lost in domestic fires could be for other reasons than that of more smoke alarms being fitted.

- 4: A ‘causal’ objection: This argument assumes that having a smoke alarm means that an early warning will sound, but no one may be around when the alarm goes off! Even if someone is, the alarm may make next to no difference as to how quickly the person spots the fire and it certainly doesn't mean that the fire service gets to the house in time.

- 5: Another logical objection: The argument is a non-sequitur, because it assumes that having a smoke alarm gives early warning of fires, whereas, in fact, the alarm may be broken. Broken alarms may lull residents into a false sense of security by their presence and thus make them more likely to ignore early signs of fires and sensible procedures in general.

- 6: A practical objection: The argument overlooks the possibility that fires may not be anywhere near the room with the alarm.

- 7: Another practical objection: The argument ignores the fact of different kinds of smoke and that only some kinds (burning toast, for example) set fire alarms off.

Answers to Chapter 15’s Exercise

The real weakness in the argument is the one picked out by Objection 3 (correlation isn't causation), and it's a very common error!

The argument doesn't allow for the fact that during the 20th century a steady trend downwards already existed in deaths from fires. In the decades before smoke alarms started to be installed the trend downward was actually steeper! In the 1920s and 1930s, homes used open fires for heating rooms and water, as well as candles or gas for light. By 1950 electricity had taken over these functions in most houses, and solid fuel or gas boilers were providing hot water in posher homes. These changes clearly reduced the likelihood of domestic fires, and thus saved lives.
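How a background trend can manufacture a convincing correlation can be sketched with invented data. In the example below the end points (ownership rising from about 10 to 95 per cent, deaths halving between 1975 and 2000) echo the argument being tested, but the year-by-year figures, the starting total of 1,000 deaths and the helper name are assumptions, and the deaths series is deliberately built so that alarms play no part in it.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

years = list(range(1975, 2001))

# Alarm ownership rising from 10% to 95%. The straight-line path between
# the two end points is an assumption.
ownership = [0.10 + 0.85 * (y - 1975) / 25 for y in years]

# Fire deaths halving over the period purely because of a background trend
# (for example safer heating and lighting). Alarms play NO part in this
# made-up series.
deaths = [1000 * 0.5 ** ((y - 1975) / 25) for y in years]

r = pearson(ownership, deaths)
print(f"correlation between alarm ownership and deaths: {r:.3f}")
```

The correlation comes out strongly negative even though, by construction, the alarms did nothing. That does not show smoke alarms are useless; it shows only that the two trends moving together cannot, on their own, settle the question.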

Here's my view of the other objections:

- Objections 1, 4, 6 and 7: All miss the point — a reduction is claimed for deaths in fires in homes thanks to smoke alarms — despite many of them not working, different kinds of smoke and so on.

- Objection 2: Although I agree with the principle of not forcing people to have the alarms, this wasn't the argument, which is about whether or not alarms save lives.

- Objection 5: More of a counter-argument than a non-sequitur. It seems to say that if smoke alarms save some lives by giving early warning, they may cost some lives by lulling people into a false sense of security. As I say above, some evidence for this makes it my ‘second best answer’.

Lesson Two is harder learnt: what you read others saying may be wrong too. A lot of people never seem to quite get to grips with this idea. Take child psychology, my first example as to why you should be sceptical of what may at first appear to be a settled consensus. Because even if all the experts agree on something today, that doesn't mean they will tomorrow (and maybe they already don't if you look a bit further).

Critical Thinking is all about explanation and understanding — and even the best explanation involves both facts and opinions. Test your skills on the following argument on smoke alarm advice: