The three R's, Reading, wRiting and aRithmetic, are considered the basis of the skills children learn in schools. When you think about it, these same concepts apply to software as well. The foundations of any programs—whether web applications, batch jobs, or anything else—are the input of data, the processing of it in some way, and the output of data.

This concept is no more obvious than when you use Spring Batch. Each step consists of an ItemReader, an ItemProcessor, and an ItemWriter. Reading in any system isn't always straight forward, however. There are a number of different formats in which input can be provided; flat files, XML, and databases are just some of the potential input sources.

Spring Batch provides standard ways to handle most forms of input without the need to write code as well as the ability to develop your own readers for formats that are not supported, like reading a web service. This chapter will walk through the different features ItemReaders provide within the Spring Batch framework.

Up to this chapter we have vaguly discussed the concept of an ItemReader but we have not looked at the interface that Spring Batch uses to define input operations. The org.springframework.batch.item.ItemReader<T> interface defines a single method, read that is used to provide input for a step. Listing 7-1 shows the ItemReader interface.

Example 7.1. org.springframework.batch.item.ItemReader<T>

package org.springframework.batch.item;

public interface ItemReader<T> {

T read() throws Exception, UnexpectedInputException, ParseException,

NonTransientResourceException;

}The ItemReader interface shown in Listing 7-1 is a strategy interface. Spring Batch provides a numer of implementations based on the type of input to be processed. Flat files, databases, JMS resources and other sources of input all have implementations provided by Spring Batch. You can also implement your own ItemReader by implementing the ItemReader or any one of its subinterfaces.

The read method of the ItemReader interface returns a single item to be processed by your step as it is called by Spring Batch. This item is what your step will count as it maintains how many items within a chunk has been processed. The item will be passed to any configured ItemProcessor before being sent as part of a chunk to the ItemWriter.

The best way to understand how to use the ItemReader interface is to put it to use. In the next section you will begin to look at the many ItemReader implementations provided by Spring Batch by working with the FlatFileItemReader.

When I think of file IO in Java, I can't help but cringe. The API for IO is marginally better than the API for handling dates in this language, and you all know how good that is. Luckily, the guys at Spring Batch have addressed most of this by providing a number of declarative readers that allow you to declare the format of what you're going to read and they handle the rest. In this section, you'll be looking at the declarative readers that Spring Batch provides and how to configure them for file-based IO.

When I talk about flat files in the case of batch processes, I'm talking about any file that has one or more records. Each record can take up one or more lines. The difference between a flat file and an XML file is that the data within the file is non-descriptive. In other words, there is no meta information within the file itself to define the format or meaning of the data. In contrast, in XML, you use tags to give the data meaning.

Before you get into actually configuring an ItemReader for a flat file, let's take a look at the pieces of reading a file in Spring Batch. The authors of the framework did a good job in creating an API that makes sense and can be easily related to concepts that most of us already know.

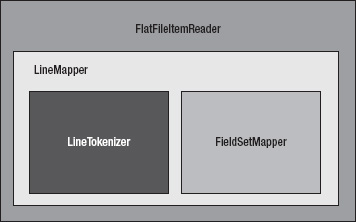

Figure 7-1 shows the components of the FlatFileItemReader. The org.springframework.batch.item.file.FlatFileItemReader consists of two main components: a Spring Resource that represents the file to be read and an implementation of the org.springfamework.batch.item.file.LineMapper interface. The LineMapper serves a similar function as the RowMapper does in Spring JDBC. When using a RowMapper in Spring JDBC, a ResultSet representing a collection of fields is provided for you map to objects.

The FlatFileItemReader allows you to configure a number of attributes about the file you're reading. Table 7-1 shows the options that you are likely to use and explains their meanings.

Table 7.1. FlatFileItemReader Configuration Options

Option | Type | Default | Description |

|---|---|---|---|

Comments | String [] | null | This array of strings indicates what prefixes will be considered line comments and skipped during file parsing. |

Encoding | String | ISO-8859-1 | The character encoding for the file. |

lineMapper | LineMapper | null (required) | This class will take each line of a file as a String and convert it into a domain object (item) to be processed. |

int | 0 | When running a job, the FlatFileItemReader can be configured to skip lines at the beginning of the file before parsing. This number indicates how many. | |

RecordSeparatorPolicy | DefaultRecordSeparatorPolicy | Used to determine the end of each record. By default, an end of line character indicates the end of a record; however, this can be used to determine how to handle things like quoted strings across lines. | |

Resource | Resource | null (required) | The resource to be read. |

LineCallbackHandler | null | Callback interface called with the line skipped. Every line skipped will be passed to this callback. | |

Strict | boolean | false | An Exception will be thrown if the resource is not found in strict mode. |

With Spring Batch's LineMapper interface, a String is provided, representing a single record from a file. With the raw String from the file, there is a two-step process for getting it to the domain object you will later work with. These two steps are handled by the LineTokenizer and FieldSetMapper.

A LineTokenizer implementation parses the line into a

org.springframework.batch.item.file.FieldSet. The provided String represents the entire line from the file. In order to be able to map the individual fields of each record to your domain object, you need to parse the line into a collection of fields. The FieldSet in Spring Batch represents that collection of fields for a single row.The FieldSetMapper implementation maps the FieldSet to a domain object. With the line divided into individual fields, you can now map each input field to the field of your domain object just like a RowMapper would map a ResultSet row to the domain object.

Sounds simple doesn't it? It really is. The intricacies come from how to parse the line and when you look at objects that are built out of multiple records from your file. Let's take a look at reading files with fixed-width records first.

When dealing with legacy mainframe systems, it is common to have to work with fixed-width files due to the way COBOL and other technologies declare their storage. Because of this, you need to be able to handle fixed with files as well.

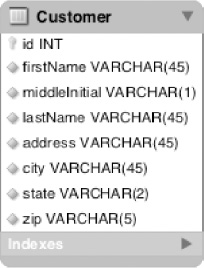

You can use a customer file as your fixed-width file. Consisting of a customer's name and address, Table 7-2 outlines the format of your customer file.

Table 7.2. Customer File Format

Field | Length | Description |

|---|---|---|

First Name | 10 | Your customer's first name. |

Middle Initial | 1 | The customer's middle initial. |

Last Name | 10 | The last name of the customer. |

Address Number | 6 | The street number piece of the customer's address. |

Street | 20 | The name of the street where the customer lives. |

City | 10 | The city the customer is from. |

State | 2 | The two letter state abbreviation. |

Zip Code | 5 | The customer's postal code. |

Defining the format for a fixed with file is important. A delimited file describes its fields with its delimiters. XML or other structured files are self-describing given the metadata the tags provide. Database data has the metadata from the database describing it. However, fixed-width files are different. They provide zero metadata to describe their format. If you look at Listing 7-1, you can see an example of what the previous description looks like as your input file.

Example 7.1. customer.txt, the Fixed-Width File

Michael TMinella 123 4th Street Chicago IL60606 Warren QGates 11 Wall Street New York NY10005 Ann BDarrow 350 Fifth Avenue New York NY10118 Terrence HDonnelly 4059 Mt. Lee Drive HollywoodCA90068

To demonstrate how each of these readers work, you will create a single-step job that reads in a file and writes it right back out. For this job, copyJob, you will create a copyJob.xml file with the following beans:

customerFile: The input file.

outputFile: The file you will copy the input file to.

customerReader: The FlatFileItemReader.

outputWriter: The FlatFileItemWriter.

copyStep: The step definition for your job.

copyJob: The job definition.

Your customerFile and outputFile beans will be nothing more than Spring's org.springframework.core.io.FileSystemResource beans. Each of the file-related beans has the scope step because they can't be bound until the step begins (unlike normal Spring beans, which are instantiated and wired upon the application's startup)[18]. The customerReader is an instance of the FlatFileItemReader. As covered previously, the FlatFileItemReader consists of two pieces, a resource to read in (in this case, the customerFile) and a way to map each line of the file (a LineMapper implementation).

Note

The "step" scope is a new bean scope provided by Spring Batch. This scope allows bean properties to be set when the step is excuted instead of on application startup (as is the default in Spring).

For the LineMapper implementation, you are going to use Spring Batch's org.springframework.batch.item.file.DefaultLineMapper. This LineMapper implementation is intended for the two-step process of mapping lines to domain objects you talked about previously: parsing the line into a FieldSet and then mapping the fields of the FieldSet to a domain object, the Customer object in your case.

To support the two step mapping process, the DefaultLineMapper takes two dependencies: a LineTokenizer implementation which will parse the String that is read in from your file into a FieldSet and a FieldSetMapper implementation to map the fields in your FieldSet to the fields in your domain object. Listing 7-2 shows the customerFile and customerReader bean definitions.

Example 7.2. customerFile and customerReader in copyJob.xml

<?xml version="1.0" encoding="UTF-8"?>

<beans:beans xmlns="http://www.springframework.org/schema/batch"

xmlns:beans="http://www.springframework.org/schema/beans"

xmlns:util="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans-3.0.xsd

http://www.springframework.org/schema/util

http://www.springframework.org/schema/util/spring-util.xsd

http://www.springframework.org/schema/batch

http://www.springframework.org/schema/batch/spring-batch-2.1.xsd">

<beans:import resyource="../launch-context.xml"/>

<beans:bean id="customerFile"

class="org.springframework.core.io.FileSystemResyource" scope="step">

<beans:constructor-arg value="#{jobParameters[customerFile]}"/>

</beans:bean>

<beans:bean id="customerReader"

class="org.springframework.batch.item.file.FlatFileItemReader">

<beans:property name="resyource" ref="customerFile" />

<beans:property name="lineMapper">

<beans:bean

class="org.springframework.batch.item.file.mapping.

DefaultLineMapper">

<beans:property name="lineTokenizer">

<beans:bean

class="org.springframework.batch.item.file.transform.

FixedLengthTokenizer">

<beans:property name="names"

value="firstName,middleInitial,lastName,addressNumber,street,

city,state,zip"/>

<beans:property name="columns"

value="1-10,11,12-21,22-27,28-47,48-56,57-58,59-63"/>

</beans:bean>

</beans:property>

<beans:property name="fieldSetMapper">

<beans:beanclass="org.springframework.batch.item.file.mapping.

BeanWrapperFieldSetMapper">

<beans:property name="prototypeBeanName" value="customer"/>

</beans:bean>

</beans:property>

</beans:bean>

</beans:property>

</beans:bean>

<beans:bean id="customer" class="com.apress.springbatch.chapter7.Customer"

scope="prototype"/>

...Listing 7-2 begins with the customerFile, which is a reference to the file that will be read in by the customerReader. Note that the actual name of the customer file will be passed in as a job parameter at runtime.

From there you have your customerReader. The reader, as noted previously, consists of two pieces: the file to be read in and a LineMapper instance. When you look at the LineMapper interface, as shown in Listing 7-3, you can see that it's nearly identical to Spring's RowMapper.

Example 7.3. The LineMapper Interface

package org.springframework.batch.item.file;

import org.springframework.batch.item.file.mapping.FieldSetMapper;

import org.springframework.batch.item.file.transform.LineTokenizer;

public interface LineMapper<T> {

T mapLine(String line, int lineNumber) throws Exception;

}For each line in your file, Spring Batch will call the mapLine method of the LineMapper implementation configured. In your case, that method will do two things; first, it will use the org.springframework.batch.item.file.transform.FixedLengthTokenizer to divide the string up into a FieldSet based upon the columns you configured. Then it will pass the FieldSet to the org.springframework.batch.item.file.mapping.BeanWrapperFieldSetMapper, which will use the names of each field to map the fields to the bean you requested (Customer in this case).

When working with fixed-width files, you use the FixedLengthTokenizer to parse your records into FieldSets. This implementation of the LineTokenizer interface takes three parameters:

columns (required): The column number ranges that define each field.

names (optional): A name to associate with each of the ranges specified in the list of columns.

strict (optional): A Boolean telling the reader if an exception should be thrown if a line of an invalid length is passed in (fixed-width files are expected to have all records be the same length).

With the LineTokenizer configured, you have a way to parse your line into a FieldSet. Now you need to map the FieldSet into the fields of your domain object. In this case, you are going to use the BeanWrapperFieldSetMapper. This implementation of the FieldSetMapper interface uses the bean spec to map fields in the FieldSet to fields in the domain object by name (the Customer object will have a getFirstName() and a setFirstName(String name), etc). The only thing you need to supply for the BeanWrapperFieldSetMapper is a reference to the bean it will be using, in your case it is the reference to the customer bean.

Note

The FixedLengthTokenizer doesn't trim any leading or trailing characters (spaces, zeros, etc) within each field. To do this, you'll have to implement your own LineTokenizer or you can trim in your own FieldSetMapper.

To put your reader to use, you need to configure your step and job. You will also need to configure a writer so that you can see that everything works. You will be covering writers in depth in the next chapter so you can keep the writer for this example simple. Listing 7-4 shows how to configure a simple writer to output the domain objects to a file.

Example 7.4. A Simple Writer

...

<beans:bean id="outputFile"

class="org.springframework.core.io.FileSystemResyource" scope="step">

<beans:constructor-arg value="#{jobParameters[outputFile]}"/>

</beans:bean>

<beans:bean id="outputWriter"

class="org.springframework.batch.item.file.FlatFileItemWriter">

<beans:property name="resource" ref="outputFile" />

<beans:property name="lineAggregator">

<beans:bean class="org.springframework.batch.item.file.transform.

FormatterLineAggregator">

<beans:property name="fieldExtractor">

<beans:bean class="org.springframework.batch.item.file.transform.

BeanWrapperFieldExtractor">

<beans:property name="names" value="firstName,middleInitial,

lastName,addressNumber,street,city,state,zip" />

</beans:bean>

</beans:property>

<beans:property name="format" value=" %s %s. %s, %s %s, %s %s %s" />

</beans:bean>

</beans:property>

</beans:bean>

...Looking at the output file resource and the writer in Listing 7-4, you can see a pattern between the readers and writers. The writer has two dependencies: the file resource to write to and a lineAggregator. The lineAggregator is used to take an object and convert it to the string that will be written to the file.

Your job configuration is very simple. As shown in Listing 7-5, a simple step that consists of the reader and writer with a commit count of 10 records is all you need. Your job uses that single step.

Example 7.5. The copyFileStep and copyFileJob

...

<step id="copyFileStep">

<tasklet>

<chunk reader="customerFileReader" writer="outputWriter"

commit-interval="10"/>

</tasklet>

</step>

<job id="copyJob">

<step id="step1" parent="copyFileStep"/>

</job>

</beans:beans>The interesting piece of all of this is the small amount of code required to read and write this file. In this example, the only code you need to write is the domain object itself (Customer). Once you build your application, you can execute it with the command shown in Listing 7-6.

Example 7.6. Executing the copyJob

java -jar copyJob.jar jobs/copyJob.xml copyJob customerFile=/input/customer.txt outputFile=/output/output.txt

The output of the job is the same contents of the input file formatted according to the format string of the writer, as shown in Listing 7-7.

Example 7.7. Results of the copyJob

Michael T. Minella, 123 4th Street, Chicago IL 60606 Warren Q. Gates, 11 Wall Street, New York NY 10005 Ann B. Darrow, 350 Fifth Avenue, New York NY 10118 Terrence H. Donnelly, 4059 Mt. Lee Drive, Hollywood CA 90068

Fixed-width files are a form of input provided for batch processes in many enterprises. As you can see, parsing the file into objects via FlatFileItemReader and FixedLengthTokenizer makes this process easy. In the next section you will look at a file format that provides a small amount of metadata to tell us how the file is to be parsed.

Delimited files are files that provide a small amount of metadata within the file to tell us what the format of the file is. In this case, a character acts as a divider between each field in your record. This metadata provides us with the ability to not have to know what defines each individual field. Instead, the file dictates to use what each field consists of by dividing each record with a delimiter.

As with fixed-width records, the process is the same to read a delimited record. The record will first be tokenized by the LineTokenizer into a FieldSet. From there, the FieldSet will be mapped into your domain object by the FieldSetMapper. With the process being the same, all you need to do is update the LineTokenizer implementation you use to parse your file based upon a delimiter instead of premapped columns. Let's start by looking at an updated customerFile that is delimited instead of fixed-width. Listing 7-8 shows your new input file.

Example 7.8. A Delimited customerFile

Michael,T,Minella,123,4th Street,Chicago,IL,60606 Warren,Q,Gates,11,Wall Street,New York,NY,10005 Ann,B,Darrow,350,Fifth Avenue,New York,NY,10118 Terrence,H,Donnelly,4059,Mt. Lee Drive,Hollywood,CA,90068

You'll notice right away that there are two changes between the new file and the old one. First, you are using commas to delimit the fields. Second, you have trimmed all of the fields. Typically when using delimited files, each field is not padded to a fixed-width like they are in fixed-width files. Because of that, the record length can vary, unlike the fixed-width record length.

As mentioned, the only configuration update you need to make to use the new file format is how each record is parsed. For fixed-width records, you used the FixedLengthTokenizer to parse each line. For the new delimited records, you will use the org.springframework.batch.item.file.transform.DelimitedLineTokenizer to parse the records into a FieldSet. Listing 7-9 shows the configuration of the reader updated with the DelimitedLineTokenizer.

Example 7.9. customerFileReader with the DelimitedLineTokenizer

...

<beans:bean id="customerFile"

class="org.springframework.core.io.FileSystemResyource" scope="step">

<beans:constructor-arg value="#{jobParameters[customerFile]}"/>

</beans:bean>

<beans:bean id="customerFileReader"

class="org.springframework.batch.item.file.FlatFileItemReader">

<beans:property name="resyource" ref="customerFile" />

<beans:property name="lineMapper">

<beans:bean

class="org.springframework.batch.item.file.mapping.DefaultLineMapper">

<beans:property name="lineTokenizer">

<beans:bean

class="org.springframework.batch.item.file.transform.DelimitedLineTokenizer">

<beans:property name="names"

value="firstName,middleInitial,lastName,addressNumber,street,city,state,zip"/>

<beans:property name="delimiter" value=","/>

</beans:bean>

</beans:property>

<beans:property name="fieldSetMapper">

<beans:bean class="org.springframework.batch.item.file.mapping.

BeanWrapperFieldSetMapper">

<beans:property name="prototypeBeanName" value="customer"/>

</beans:bean>

</beans:property>

</beans:bean>

</beans:property>

</beans:bean><beans:bean id="customer" class="com.apress.springbatch.chapter7.Customer" scope="prototype"/> ...

The DelimitedLineTokenizer allows for two options that you'll find very useful. The first is the ability to configure the delimiter. A comma is the default value; however, any single character can be used. The second option is the ability to configure what value will be used as a quote character. When this option is used, that value will be used instead of " as the character to indicate quotes. This character will also be able to escape itself. Listing 7-10 shows an example of how a string is parsed when you use # character as quote character.

Example 7.10. Parsing a Delimited File with the Quote Character Configured

Michael,T,Minella,#123,4th Street#,Chicago,IL,60606 Is parsed as Michael T Minella 123,4th Street Chicago IL 60606

Although that's all that is required to process delimited files, it's not the only option you have. The current example maps address numbers and streets to two different fields. However, what if you wanted to map them together into a single field as represented in the domain object in Listing 7-11?

Example 7.11. Customer with a Single Street Address Field

package com.apress.springbatch.chapter7;

public class Customer {

private String firstName;

private String middleInitial;

private String lastName;

private String addressNumber;

private String street;

private String city;

private String state;

private String zip;

// Getters & setters go here

...

}With the new object format, you will need to update how the FieldSet is mapped to the domain object. To do this, you will create your own implementation of the org.springframework.batch.item.file.mapping.FieldSetMapper interface. The FieldSetMapper interface, as shown in Listing 7-12, consists of a single method, mapFieldSet, that allows you to map the FieldSet as it is returned from the LineTokenizer to the domain object fields.

Example 7.12. The FieldSetMapper Interface

package org.springframework.batch.item.file.mapping;

import org.springframework.batch.item.file.transform.FieldSet;

import org.springframework.validation.BindException;

public interface FieldSetMapper<T> {

T mapFieldSet(FieldSet fieldSet) throws BindException;

}To create your own mapper, you will implement the FieldSetMapper interface with the type defined as Customer. From there, as shown in Listing 7-13, you can map each field from the FieldSet to the domain object, concatenating the addressNumber and street fields into a single address field per your requirements.

Example 7.13. Mapping Fields from the FieldSet to the Customer Object

package com.apress.springbatch.chapter7;

import org.springframework.batch.item.file.mapping.FieldSetMapper;

import org.springframework.batch.item.file.transform.FieldSet;

import org.springframework.validation.BindException;

public class CustomerFieldSetMapper implements FieldSetMapper<Customer> {

public Customer mapFieldSet(FieldSet fieldSet) throws BindException {

Customer customer = new Customer();

customer.setAddress(fieldSet.readString("addressNumber") +

" " + fieldSet.readString("street"));

customer.setCity(fieldSet.readString("city"));

customer.setFirstName(fieldSet.readString("firstName"));

customer.setLastName(fieldSet.readString("lastName"));

customer.setMiddleInitial(fieldSet.readString("middleInitial"));

customer.setState(fieldSet.readString("state"));

customer.setZip(fieldSet.readString("zip"));

return customer;

}

}The FieldSet methods are very similar to the ResultSet methods of the JDBC realm. Spring provides a method for each of the primitive data types, String (trimmed or untrimmed), BigDecimal, and java.util.Date. Each of these different methods has two different varieties. The first takes an integer as the parameter where the integer represents the index of the field to be retrieved in the record. The other version, shown in Listing 7-14, takes the name of the field. Although this approach requires you to name the fields in the job configuration, it's a more maintainable model in the long run. Listing 7-14 shows the FieldSet interface.

Example 7.14. FieldSet Interface

package org.springframework.batch.item.file.transform;

import java.math.BigDecimal;

import java.sql.ResultSet;

import java.util.Date;

import java.util.Properties;

public interface FieldSet {

String[] getNames();

boolean hasNames();

String[] getValues();

String readString(int index);

String readString(String name);

String readRawString(int index);

String readRawString(String name);

boolean readBoolean(int index);

boolean readBoolean(String name);

boolean readBoolean(int index, String trueValue);

boolean readBoolean(String name, String trueValue);

char readChar(int index);

char readChar(String name);

byte readByte(int index);

byte readByte(String name);

short readShort(int index);

short readShort(String name);

int readInt(int index);

int readInt(String name);

int readInt(int index, int defaultValue);

int readInt(String name, int defaultValue);

long readLong(int index);

long readLong(String name);

long readLong(int index, long defaultValue);

long readLong(String name, long defaultValue);

float readFloat(int index);

float readFloat(String name);

double readDouble(int index);

double readDouble(String name);

BigDecimal readBigDecimal(int index);

BigDecimal readBigDecimal(String name);

BigDecimal readBigDecimal(int index, BigDecimal defaultValue);

BigDecimal readBigDecimal(String name, BigDecimal defaultValue);

Date readDate(int index);

Date readDate(String name);

Date readDate(int index, Date defaultValue);

Date readDate(String name, Date defaultValue);

Date readDate(int index, String pattern);

Date readDate(String name, String pattern);

Date readDate(int index, String pattern, Date defaultValue);Date readDate(String name, String pattern, Date defaultValue);

int getFieldCount();

Properties getProperties();

}Note

Unlike the JDBC ResultSet, which begins indexing columns at 1, the index used by Spring Batch's FieldSet is zero-based.

To put the CustomerFieldSetMapper to use, you need to update the configuration to use it. Replace the BeanWrapperFieldSetMapper reference with your own bean reference, as shown in Listing 7-15.

Example 7.15. customerFileReader Configured with the CustomerFieldSetMapper

...

<beans:bean id="customerFile"

class="org.springframework.core.io.FileSystemResource" scope="step">

<beans:constructor-arg value="#{jobParameters[customerFile]}"/>

</beans:bean>

<beans:bean id="customerFileReader"

class="org.springframework.batch.item.file.FlatFileItemReader">

<beans:property name="resource" ref="customerFile" />

<beans:property name="lineMapper">

<beans:bean

class="org.springframework.batch.item.file.mapping.DefaultLineMapper">

<beans:property name="lineTokenizer">

<beans:bean class="org.springframework.batch.item.file.transform.

DelimitedLineTokenizer">

<beans:property name="names"

value="firstName,middleInitial,lastName,addressNumber,street,

city,state,zip"/>

<beans:property name="delimiter" value=","/>

</beans:bean>

</beans:property>

<beans:property name="fieldSetMapper">

<beans:bean

class="com.apress.springbatch.chapter7.CustomerFieldSetMapper"/>

</beans:property>

</beans:bean>

</beans:property>

</beans:bean>

...Note that with your new CustomerFieldSetMapper, you don't need to configure the reference to the Customer bean. Since you handle the instantiation yourselves, this is no longer needed.

Parsing files with the standard Spring Batch parsers, as you have shown, requires nothing more than a few lines of XML. However, not all files consist of Unicode characters laid out in a format that is easy for Java to understand. When dealing with legacy systems, it's common to come across data storage techniques that require custom parsing. In the next section, you will look at how to implement your own LineTokenizer to be able to handle custom file formats.

In the previous section you looked at how to address the ability to tweak the mapping of fields in your file to the fields of your domain object by creating a custom FieldSetMapper implementation. However, that is not the only option. Instead, you can create your own LineTokenizer implementation. This will allow you to parse each record however you need.

Like the FieldSetMapper interface, the org.springframework.batch.item.file.transform.LineTokenizer interface has a single method: tokenize. Listing 7-16 shows the LineTokenizer interface.

Example 7.16. LineTokenizer interface

package org.springframework.batch.item.file.transform;

public interface LineTokenizer {

FieldSet tokenize(String line);

}For this approach you will use the same delimited input file you used previously; however, since the domain object has the address number and the street combined into a single field, you will combine those two tokens into a single field in the FieldSet. Listing 7-17 shows the CustomerFileLineTokenizer.

Example 7.17. CustomerFileLineTokenizer

package com.apress.springbatch.chapter7;

import java.util.ArrayList;

import java.util.List;

import org.springframework.batch.item.file.transform.DefaultFieldSetFactory;

import org.springframework.batch.item.file.transform.FieldSet;

import org.springframework.batch.item.file.transform.FieldSetFactory;

import org.springframework.batch.item.file.transform.LineTokenizer;

public class CustomerFileLineTokenizer implements LineTokenizer {

private String delimiter;

private String names;

private FieldSetFactory fieldSetFactory = new DefaultFieldSetFactory();

public FieldSet tokenize(String record) {

String[] fields = record.split(delimiter);

List<String> parsedFields = new ArrayList<String>();for (int i = 0; i < fields.length; i++) {

if (i == 4) {

parsedFields.set(i - 1,

parsedFields.get(i - 1) + " " + fields[i]);

} else {

parsedFields.add(fields[i]);

}

}

FieldSet fieldSet =

fieldSetFactory.create(parsedFields.toArray(new String [0]),

names.split(","));

return fieldSet;

}

public void setDelimiter(String delimiter) {

this.delimiter = delimiter;

}

public void setNames(String names) {

this.names = names;

}

}The tokenize method of the CustomerFileLineTokenizer takes each record and splits it based upon the delimiter that was configured with Spring. You loop through the fields, combining the third and fourth fields together so that they are a single field. You then create a FieldSet using the DefaultFieldSetFactory, passing it the one required parameter (an array of values to be your fields) and one optional parameter (an array of names for the fields). This LineTokenizer names your fields so that you can use the BeanWrapperFieldSetMapper to do your FieldSet to domain object mapping without any additional code.

Configuring the CustomerFileLineTokenizer is identical to the configuration for the DelimitedLineTokenizer with only the class name to change. Listing 7-18 shows the updated configuration.

Example 7.18. Configuring the CustomerFileLineTokenizer

...

<beans:bean id="customerFile"

class="org.springframework.core.io.FileSystemResyource" scope="step">

<beans:constructor-arg value="#{jobParameters[customerFile]}"/>

</beans:bean>

<beans:bean id="customerFileReader"

class="org.springframework.batch.item.file.FlatFileItemReader">

<beans:property name="resource" ref="customerFile" />

<beans:property name="lineMapper">

<beans:bean

class="org.springframework.batch.item.file.mapping.DefaultLineMapper">

<beans:property name="lineTokenizer">

<beans:bean class="com.apress.springbatch.chapter7.CustomerFileLineTokenizer">

<beans:property name="names"

value="firstName,middleInitial,lastName,address,city,state,zip"/>

<beans:property name="delimiter" value=","/>

</beans:bean>

</beans:property>

<beans:property name="fieldSetMapper">

<beans:bean class="org.springframework.batch.item.file.mapping.

BeanWrapperFieldSetMapper">

<beans:property name="prototypeBeanName" value="customer"/>

</beans:bean>

</beans:property>

</beans:bean>

</beans:property>

</beans:bean>

<beans:bean id="customer" class="com.apress.springbatch.chapter7.Customer"

scope="prototype"/>

...The sky's the limit with what you can do with your own LineTokenizer and FieldSetMapper. Other uses for custom LineTokenizers could include:

Parsing legacy file encodings like EBCDIC.

Parsing third party file formats like Microsoft's Excel Worksheets.

Handling special type conversion requirements.

However, not all files are as simple as the customer one you have been working with. What if your file contains multiple record formats? The next section will discuss how Spring Batch can choose the appropriate LineTokenizer to parse each record it comes across.

Up to this point you have been looking at a customer file that contains a collection of customer records. Each record in the file has the exact same format. However, what if you received a file that had customer information as well as transaction information? Yes, you could implement a single custom LineTokenizer. However there are two issues with this approach.

Complexity: If you have a file that has three, four, five, or more line formats—each with a large number of fields—this single class can get out of hand quickly.

Separation of concerns: The LineTokenizer is intended to parse a record. That's it. It should not need to determine what the record type is prior to the parsing.

With this in mind, Spring Batch provides another LineMapper implementation: the org.springframework.batch.item.file.mapping.PatternMatchingCompositeLineMapper. The previous examples used the DefaultLineMapper, which provided the ability to use a single LineTokenizer and a single FileSetMapper. With the PatternMatchingCompositeLineMapper, you will be able to define a Map of LineTokenizers and a corresponding Map of FieldSetMappers. The key for each map will be a pattern that the LineMapper will use to identify which LineTokenizer to use to parse each record.

Let's start this example by looking at the updated input file. In this case, you still have the same customer records. However, interspersed between each customer record is a random number of transaction records. To help identify each record, you have added a prefix to each record. Listing 7-19 shows the updated input file.

Example 7.19. The Updated customerInputFile

CUST,Warren,Q,Darrow,8272 4th Street,New York,IL,76091 TRANS,1165965,2011-01-22 00:13:29,51.43 CUST,Ann,V,Gates,9247 Infinite Loop Drive,Hollywood,NE,37612 CUST,Erica,I,Jobs,8875 Farnam Street,Aurora,IL,36314 TRANS,8116369,2011-01-21 20:40:52,-14.83 TRANS,8116369,2011-01-21 15:50:17,-45.45 TRANS,8116369,2011-01-21 16:52:46,-74.6 TRANS,8116369,2011-01-22 13:51:05,48.55 TRANS,8116369,2011-01-21 16:51:59,98.53

In the file shown in Listing 7-19, you have two comma-delimited formats. The first consists of the standard customer format you have been working to up to now with the concatenated address number and street. These records are indicated with the prefix CUST. The other records are transaction records; each of these records, prefixed with the TRANS, prefix, are also comma-delimited, with the following three fields:

Account number: The customer's account number.

Date : The date the transaction occurred. The transactions may or may not be in date order.

Amount: The amount in dollars for the transaction. Negative values symbolize debits and positive amounts symbolize credits.

Listing 7-20 shows the code for the Transaction domain object.

Example 7.20. Transaction Domain Object Code

package com.apress.springbatch.chapter7;

import java.text.DateFormat;

import java.text.SimpleDateFormat;

import java.util.Date;

public class Transaction {

private String accountNumber;

private Date transactionDate;

private Double amount;

private DateFormat formatter = new SimpleDateFormat("MM/dd/yyyy");

public String getAccountNumber() {

return accountNumber;

}

public void setAccountNumber(String accountNumber) {

this.accountNumber = accountNumber;

}

public Date getTransactionDate() {

return transactionDate;

}

public void setTransactionDate(Date transactionDate) {

this.transactionDate = transactionDate;

}

public Double getAmount() {

return amount;

}

public void setAmount(Double amount) {

this.amount = amount;

}

public String getDateString() {

return formatter.format(transactionDate);

}

}With the record formats identified, you can look at the reader. Listing 7-21 shows the configuration for the updated customerFileReader. As mentioned, using the PatternMatchingCompositeLineMapper, you map two instances of the DelimitedLineTokenizer, each with the correct record format configured. You'll notice that you have an additional field named prefix for each of the LineTokenizers. This is to address the string at the beginning of each record (CUST and TRANS). Spring Batch will parse the prefix and name it prefix in your FieldSet; however, since you don't have a prefix field in either of your domain objects, it will be ignored in the mapping.

Example 7.21. Configuring the customerFileReader with Multiple Record Formats

...

<beans:bean id="customerFile"

class="org.springframework.core.io.FileSystemResyource" scope="step">

<beans:constructor-arg value="#{jobParameters[customerFile]}"/>

</beans:bean>

<beans:bean id="customerFileReader"

class="org.springframework.batch.item.file.FlatFileItemReader">

<beans:property name="resyource" ref="customerFile" />

<beans:property name="lineMapper">

<beans:bean class="org.springframework.batch.item.file.mapping.

PatternMatchingCompositeLineMapper">

<beans:property name="tokenizers">

<beans:map>

<beans:entry key="CUST*" value-ref="customerLineTokenizer"/>

<beans:entry key="TRANS*" value-ref="transactionLineTokenizer"/>

</beans:map>

</beans:property>

<beans:property name="fieldSetMappers">

<beans:map>

<beans:entry key="CUST*" value-ref="customerFieldSetMapper"/>

<beans:entry key="TRANS*" value-ref="transactionFieldSetMapper"/>

</beans:map>

</beans:property>

</beans:bean>

</beans:property>

</beans:bean>

<beans:bean id="customerLineTokenizer"

class="org.springframework.batch.item.file.transform.

DelimitedLineTokenizer">

<beans:property name="names"

value="prefix,firstName,middleInitial,lastName,address,city,state,zip"/>

<beans:property name="delimiter" value=","/>

</beans:bean>

<beans:bean id="transactionLineTokenizer"

class="org.springframework.batch.item.file.transform.

DelimitedLineTokenizer">

<beans:property name="names"

value="prefix,accountNumber,transactionDate,amount"/>

<beans:property name="delimiter" value=","/>

</beans:bean>

<beans:bean id="customerFieldSetMapper"

class="org.springframework.batch.item.file.mapping.

BeanWrapperFieldSetMapper">

<beans:property name="prototypeBeanName" value="customer"/>

<beans:property name="strict" value="false"/></beans:bean> <beans:bean id="transactionFieldSetMapper" class="com.apress.springbatch.chapter7.TransactionFieldSetMapper"/> <beans:bean id="customer" class="com.apress.springbatch.chapter7.Customer" scope="prototype"/> ...

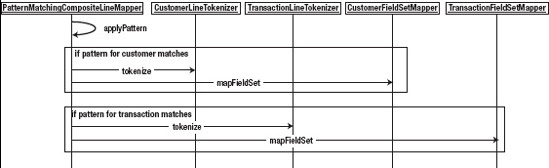

The configuration of the customerFileReader is beginning to get a bit verbose. Let's walk through what will actually happen when this reader is executed. If you look at Figure 7-2, you can follow the flow of how the customerFileReader will process each line.

As Figure 7-2 shows, the PatternMatchingCompositeLineMapper will look at each record of the file and apply your pattern to it. If the record begins with CUST,* (where * is zero or more characters), it will pass the record to the customerLineTokenizer for parsing. Once the record is parsed into a FieldSet, it will be passed to the customerFieldSetMapper to be mapped to the domain object. However, if the record begins with TRANS,*, it will be passed to the transactionLineTokenizer for parsing with the resulting FieldSet being passed to the custom transactionFieldSetMapper.

But why do you need a custom FieldSetMapper? It's necessary for custom type conversion. By default, the BeanWrapperFieldSetMapper doesn't do any special type conversion. The Transaction domain object consists of an accountNumber field, which is a String; however, the other two fields, transactionDate and amount, are a java.util.Date and a Double, respectively. Because of this, you will need to create a custom FieldSetMapper to do the required type conversions. Listing 7-22 shows the TransactionFieldSetMapper.

Example 7.22. TransactionFieldSetMapper

package com.apress.springbatch.chapter7;

import org.springframework.batch.item.file.mapping.FieldSetMapper;

import org.springframework.batch.item.file.transform.FieldSet;

import org.springframework.validation.BindException;

public class TransactionFieldSetMapper implements FieldSetMapper<Transaction> {

public Transaction mapFieldSet(FieldSet fieldSet) throws BindException {

Transaction trans = new Transaction();trans.setAccountNumber(fieldSet.readString("accountNumber"));

trans.setAmount(fieldSet.readDouble("amount"));

trans.setTransactionDate(fieldSet.readDate("transactionDate",

"yyyy-MM-dd HH:mm:ss"));

return trans;

}

}As you can see, the FieldSet interface, like the ResultSet interface of the JDBC world, provides custom methods for each data type. In the case of the Transaction domain object, you use the readDouble method to have the String in your file converted into a Java.lang.Double and you use the readDate method to parse the string contained in your file into a Java.util.Date. For the date conversion, you specify not only the field's name but also the format of the date to be parsed.

Unfortunately, with two different item types now being processed by the step at the same time, you won't be able to use the same ItemWriter you have been up to now. I would love to be able to tell you that Spring Batch has the equivalent delegator for the writer side as it does with the reader side and the PatternMatchingCompositeLineMapper. Unfortunately, it doesn't. Instead, you will need to create a custom ItemWriter that will delegate to the appropriate writer based upon the type of item to be printed. Chapter 9 covers the details of this writer implementation. However, to be able to see the results of the job, Listing 7-23 shows the implementation of the LineAggregator interface that will delegate the items accordingly.

Example 7.23. CustomerLineAggregator

package com.apress.springbatch.chapter7;

import org.springframework.batch.item.file.transform.LineAggregator;

public class CustomerLineAggregator implements LineAggregator<Object> {

private LineAggregator<Customer> customerLineAggregator;

private LineAggregator<Transaction> transactionLineAggregator;

public String aggregate(Object record) {

if(record instanceof Customer) {

return customerLineAggregator.aggregate((Customer) record);

} else {

return transactionLineAggregator.aggregate((Transaction) record);

}

}

public void setCustomerLineAggregator(

LineAggregator<Customer> customerLineAggregator) {

this.customerLineAggregator = customerLineAggregator;

}

public void setTransactionLineAggregator(

LineAggregator<Transaction> transactionLineAggregator) {

this.transactionLineAggregator = transactionLineAggregator;} }

The LineAggregator implementation in Listing 7-23 is quite simple. It takes an item, determines its type, and passes the item to the appropriate LineAggregator implementation based upon the type. The configuration for the previous LineAggregator is shown in Listing 7-24.

Example 7.24. outputWriter Configuration

...

<beans:bean id="outputFile"

class="org.springframework.core.io.FileSystemResource" scope="step">

<beans:constructor-arg value="#{jobParameters[outputFile]}"/>

</beans:bean>

<beans:bean id="outputWriter"

class="org.springframework.batch.item.file.FlatFileItemWriter">

<beans:property name="resource" ref="outputFile" />

<beans:property name="lineAggregator">

<beans:bean

class="com.apress.springbatch.chapter7.CustomerLineAggregator">

<beans:property name="customerLineAggregator"

ref="customerLineAggregator" />

<beans:property name="transactionLineAggregator"

ref="transactionLineAggregator" />

</beans:bean>

</beans:property>

</beans:bean>

<beans:bean id="customerLineAggregator"

class="org.springframework.batch.item.file.transform.

FormatterLineAggregator">

<beans:property name="fieldExtractor">

<beans:bean class="org.springframework.batch.item.file.transform.

BeanWrapperFieldExtractor">

<beans:property name="names" value="firstName,middleInitial,lastName,

address,city,state,zip" />

</beans:bean>

</beans:property>

<beans:property name="format" value="%s %s. %s, %s, %s %s %s" />

</beans:bean>

<beans:bean id="transactionLineAggregator" class="org.springframework.batch.

item.file.transform.FormatterLineAggregator">

<beans:property name="fieldExtractor">

<beans:bean class="org.springframework.batch.item.file.transform.

BeanWrapperFieldExtractor">

<beans:property name="names" value="accountNumber,amount,dateString" />

</beans:bean>

</beans:property>

<beans:property name="format" value="%s had a transaction of %.2f on %s" /></beans:bean> ...

As you can see from Listing 7-24, configuring each of the two LineAggregators to which the CustomerLineAggregator delegates are based upon the same configuration that you used previously. The only difference is you have a delegation step in front of them.

When you execute the job, you're able to read in the two different record formats, parse them into their respective domain objects, and print them out into two, different record formats. A sample of the results of this job is shown in Listing 7-25.

Example 7.25. Results of Running the copyJob Job with Multiple Record Formats

Warren Q. Darrow, 8272 4th Street, New York IL 76091 1165965 had a transaction of 51.43 on 01/22/2011 Ann V. Gates, 9247 Infinite Loop Drive, Hollywood NE 37612 Erica I. Jobs, 8875 Farnam Street, Aurora IL 36314 8116369 had a transaction of −14.83 on 01/21/2011 8116369 had a transaction of −45.45 on 01/21/2011 8116369 had a transaction of −74.60 on 01/21/2011 8116369 had a transaction of 48.55 on 01/22/2011 8116369 had a transaction of 98.53 on 01/21/2011

The ability to process multiple records from a single file is a common requirement in batch processing. However, this example assumes that there was no real relationship between the different records. What if there is? The next section will look at how to read multiline records into a single item.

In the last example, you looked at the processing of two different record formats into two different, unrelated items. However, if you take a closer look at the file format you were using, you can see that the records you were reading were actually related (as shown in the output of the job). While not related by a field in the file, the transaction records are the transaction records for the customer record above it. Instead of processing each record independently, doesn't it make more sense to have a Customer object that has a collection of Transaction objects on it?

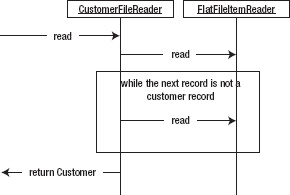

To make this work, you will need to perform a small bit of trickery. The examples provided with Spring Batch use a footer record to identify the true end of a record. Although convenient, many files seen in batch do not have that trailer record. With your file format, you run into the issue of not knowing when a record is complete without reading the next row. To get around this, you can implement your own ItemReader that adds a bit of logic around the customerFileReader you configured in the previous section. Figure 7-3 shows the flow of logic you will use within your custom ItemReader.

As Figure 7-3 shows, your read method will begin by determining if a Customer object has already been read. If it hasn't, it will attempt to read one from the FlatFileItemReader. Assuming you read a record (you won't have read one once you reach the end of the file), you will initialize the transaction List on the Customer object. While the next record you read is a Transaction, you will add it to the Customer object. Listing 7-26 shows the implementation of the CustomerFileReader.

Example 7.26. CustomerFileReader

package com.apress.springbatch.chapter7;

import Java.util.ArrayList;

import org.springframework.batch.item.ExecutionContext;

import org.springframework.batch.item.ItemStreamException;

import org.springframework.batch.item.ItemStreamReader;

import org.springframework.batch.item.ParseException;

import org.springframework.batch.item.UnexpectedInputException;

public class CustomerFileReader implements ItemStreamReader<Object> {

private Object curItem = null;

private ItemStreamReader<Object> delegate;

public Object read() throws Exception {

if(curItem == null) {

curItem = (Customer) delegate.read();

}

Customer item = (Customer) curItem;

curItem = null;

if(item != null) {

item.setTransactions(new ArrayList<Transaction>());

while(peek() instanceof Transaction) {item.getTransactions().add((Transaction) curItem);

curItem = null;

}

}

return item;

}

public Object peek() throws Exception, UnexpectedInputException,

ParseException {

if (curItem == null) {

curItem = delegate.read();

}

return curItem;

}

public void setDelegate(ItemStreamReader<Object> delegate) {

this.delegate = delegate;

}

public void close() throws ItemStreamException {

delegate.close();

}

public void open(ExecutionContext arg0) throws ItemStreamException {

delegate.open(arg0);

}

public void update(ExecutionContext arg0) throws ItemStreamException {

delegate.update(arg0);

}

}The CustomerFileReader has two key methods that you should look at. The first is the read() method. This method is responsible for implementing the logic involved in reading and assembling a single Customer item including its child transaction records. It does so by reading in a customer record from the file you are reading. It then reads the related transaction records until the next record is the next customer record. Once the next customer record is found, the current customer is considered complete and returned by your ItemReader. This type of logic is called control break logic.

The other method of consequence is the peak method. This method is used to read ahead while still working on the current Customer. It caches the current record. If the record has been read but not processed, it will return the same record again. If the record has been processed (indicated to this method by setting curItem to null), it will read in the next record[19].

You should notice that your custom ItemReader does not implement the ItemReader interface. Instead, it implements on of its subinterfaces, the ItemStreamReader interface. The reason for this is that when using one of the Spring Batch ItemReader implementations, they handle the opening and closing of the resource being read as well as maintaining the ExecutionContext as records are being read. However, if you implement your own, you need to manage that yourself. Since you are just wrapping a Spring Batch ItemReader (the FlatFileItemReader), you can use it to maintain those resources.

To configure the CustomerFileReader, the only dependency you have is the delegate. The delegate in this case is the reader that will do the actual reading and parsing work for you. Listing 7-27 shows the configuration for the CustomerFileReader.

Example 7.27. CustomerFileReader Configuration

...

<beans:bean id="customerFile"

class="org.springframework.core.io.FileSystemResyource" scope="step">

<beans:constructor-arg value="#{jobParameters[customerFile]}"/>

</beans:bean>

<beans:bean id="customerFileReader" class="com.apress.springbatch.chapter7.CustomerFileReader">

<beans:property name="delegate" ref="trueCustomerFileReader"/>

</beans:bean>

<beans:bean id="trueCustomerFileReader"

class="org.springframework.batch.item.file.FlatFileItemReader">

<beans:property name="resource" ref="customerFile" />

<beans:property name="lineMapper">

<beans:bean class="org.springframework.batch.item.file.mapping.

PatternMatchingCompositeLineMapper">

<beans:property name="tokenizers">

<beans:map>

<beans:entry key="CUST*" value-ref="customerLineTokenizer"/>

<beans:entry key="TRANS*" value-ref="transactionLineTokenizer"/>

</beans:map>

</beans:property>

<beans:property name="fieldSetMappers">

<beans:map>

<beans:entry key="CUST*" value-ref="customerFieldSetMapper"/>

<beans:entry key="TRANS*" value-ref="transactionFieldSetMapper"/>

</beans:map>

</beans:property>

</beans:bean>

</beans:property>

</beans:bean>

<beans:bean id="customerLineTokenizer"

class="org.springframework.batch.item.file.transform.

DelimitedLineTokenizer">

<beans:property name="names" value="prefix,firstName,middleInitial,

lastName,address,city,state,zip"/>

<beans:property name="delimiter" value=","/>

</beans:bean>

<beans:bean id="transactionLineTokenizer"

class="org.springframework.batch.item.file.transform.DelimitedLineTokenizer">

<beans:property name="names"

value="prefix,accountNumber,transactionDate,amount"/>

<beans:property name="delimiter" value=","/>

</beans:bean>

<beans:bean id="customerFieldSetMapper"

class="org.springframework.batch.item.file.mapping.

BeanWrapperFieldSetMapper">

<beans:property name="prototypeBeanName" value="customer"/>

</beans:bean>

<beans:bean id="transactionFieldSetMapper"

class="com.apress.springbatch.chapter7.TransactionFieldSetMapper"/>

<beans:bean id="customer" class="com.apress.springbatch.chapter7.Customer"

scope="prototype"/>

...The configuration in Listing 7-27 should look familiar. It's essentially the exact same as the configuration you used for multiple record formats (see Listing 7-19). The only addition, as highlighted in bold, is the configuration of your new CustomerFileReader with its reference to the old ItemReader and renaming the old ItemReader.

With the updated object model, the previous method for writing to your output file won't work for this example. Because of this, I chose to use Spring Batch's PassThroughLineAggregator to write the output for this example. It calls the item's toString() method and writes the output to the output file. Listing 7-28 shows the updated ItemWriter configuration.

Example 7.28. Updated outputWriter Configuration

...

<beans:bean id="outputFile"

class="org.springframework.core.io.FileSystemResyource" scope="step">

<beans:constructor-arg value="#{jobParameters[outputFile]}"/>

</beans:bean>

<beans:bean id="outputWriter"

class="org.springframework.batch.item.file.FlatFileItemWriter">

<beans:property name="resource" ref="outputFile" />

<beans:property name="lineAggregator">

<beans:bean class="org.springframework.batch.item.file.transform.

PassThroughLineAggregator"/>

</beans:property>

</beans:bean>

...For each Customer object, it will print how many transactions the user has. This will provide enough detail for you to verify that your reading worked correctly. With the PassThroughLineAggregator configured as it is, you only need to override the Customer's toString() method to format the output. Listing 7-29 shows the updated method.

Example 7.29. Customer's toString() Method

...

@Override

public String toString() {

StringBuilder output = new StringBuilder();

output.append(firstName);

output.append(" ");

output.append(middleInitial);

output.append(". ");

output.append(lastName);

if(transactions != null&& transactions.size() > 0) {

output.append(" has ");

output.append(transactions.size());

output.append(" transactions.");

} else {

output.append(" has no transactions.");

}

return output.toString();

}

...With a run of the job, you can see each of your customers and the number of transaction records you read in. It's important to note that when reading records in this way, the customer record and all the subsequent transaction records are considered a single item. The reason for this is that Spring Batch considers an item to be any object that is returned by the ItemReader. In this case, the Customer object is the object returned by the ItemReader so it is the item used for things like commit counts, etc. Each Customer object will be processed once by any configured ItemProcessor you add and once by any configured ItemWriter. The output from the job configured with the new ItemReaders can be seen in Listing 7-30.

Example 7.30. Output from Multiline Job

Warren Q. Darrow has 1 transactions. Ann V. Gates has no transactions. Erica I. Jobs has 5 transactions.

Multiline records are a common element in batch processing. Although they are a bit more complex than basic record processing, as you can see from this example, there is still only a minimal amount of actual code that needs to be written to handle these robust situations.

The last piece of the flat file puzzle is to look at input situations where you read in from multiple files. This is a common requirement in the batch world and it's covered in the next section.

The examples up to this point have been based around a customer file with transactions for each customer. Many companies have multiple departments or locations that sell things. Take, for example, a restaurant chain with restaurants nationwide. Each location may contribute a file with the same format to be processed. If you were to process each one with a separate writer like you have been up to now, there would be a number of issues from performance to maintainability. So how does Spring Batch provide for the ability to read in multiple files with the same format?

Using a similar pattern to the one you just used in the multiline record example, Spring Batch provides an ItemReader called the MultiResourceItemReader. This reader wraps another ItemReader like the CustomerFileItemReader did; however, instead of defining the resource to be read as part of the child ItemReader, a pattern that defines all of the files to be read is defined as a dependency of the MultiResourceItemReader. Let's take a look.

You can use the same file format as you did in your multi-record example (as shown in Listing 7-19), which will allow you to use the same ItemReader configuration you created in the multiline example as well. However, if you have five of these files with the filenames customerFile1.txt, customerFile2.txt, customerFile3.txt, customerFile4.txt, and customerFile5.txt, you need to make two small updates. The first is to the configuration. You need to tweak your configuration to use the MultiResyourceItemReader with the correct resource pattern. You will also remove the reference to the input resource (<beans:property name="resource" ref="customerFile" />) from the FlatFileItemReader that you have used up to this point. Listing 7-31 shows the updated configuration.

Example 7.31. Configuration to Process Multiple Customer Files

...<beans:bean id="customerFileReader"class="org.springframework.batch.item.file.MultiResourceItemReader"><beans:property name="resources" value="file:/Users/mminella/temp/customerFile*.csv"/><beans:property name="delegate" ref="fullCustomerFileReader"/></beans:bean><beans:bean id="fullCustomerFileReader"class="com.apress.springbatch.chapter7.CustomerFileReader"> <beans:property name="delegate" ref="trueCustomerFileReader"/> </beans:bean> <beans:bean id="trueCustomerFileReader" class="org.springframework.batch.item.file.FlatFileItemReader"> <beans:property name="lineMapper"> <beans:bean class="org.springframework.batch.item.file.mapping. PatternMatchingCompositeLineMapper"> <beans:property name="tokenizers"> <beans:map> <beans:entry key="CUST*" value-ref="customerLineTokenizer"/> <beans:entry key="TRANS*" value-ref="transactionLineTokenizer"/> </beans:map> </beans:property> <beans:property name="fieldSetMappers"> <beans:map> <beans:entry key="CUST*" value-ref="customerFieldSetMapper"/> <beans:entry key="TRANS*" value-ref="transactionFieldSetMapper"/> </beans:map> </beans:property> </beans:bean> </beans:property>

</beans:bean>

<beans:bean id="customerLineTokenizer"

class="org.springframework.batch.item.file.transform.

DelimitedLineTokenizer">

<beans:property name="names" value="prefix,firstName,middleInitial,

lastName,address,city,state,zip"/>

<beans:property name="delimiter" value=","/>

</beans:bean>

<beans:bean id="transactionLineTokenizer"

class="org.springframework.batch.item.file.transform.

DelimitedLineTokenizer">

<beans:property name="names"

value="prefix,accountNumber,transactionDate,amount"/>

<beans:property name="delimiter" value=","/>

</beans:bean>

<beans:bean id="customerFieldSetMapper"

class="org.springframework.batch.item.file.mapping.

BeanWrapperFieldSetMapper">

<beans:property name="prototypeBeanName" value="customer"/>

<beans:property name="strict" value="false"/>

</beans:bean>

<beans:bean id="transactionFieldSetMapper"

class="com.apress.springbatch.chapter7.TransactionFieldSetMapper"/>

<beans:bean id="customer" class="com.apress.springbatch.chapter7.Customer"

scope="prototype"/>

...The other change you need to make is to the CustomerFileReader code. Previously, you were able to use the ItemStreamReader interface as what you implemented and the delegate's type. However, that won't be specific enough this time around. Instead, you are going to need to use one of the ItemStreamResource's sub interfaces. The ResourceAwareItemReaderItemStream interface is for any ItemReader that reads its input from resources. The reason you will want to make the two changes is that you will need to be able to inject multiple Resources into the ItemReader.

By implementing org.springframework.batch.item.file.ResourceAwareItemStreamItemReader, you will be required to add one additional method: setResource. Like the open, close and update methods of the ItemStreamReader interface, you will just be calling the setResource method on the delegate in your implementation. The other change you need to make is to have your delegate be of the type ResourceAwareItemStreamItemReader. Since you are using the FlatFileItemReader as your delegate, you won't need to use a different ItemReader as the delegate. The updated code is listed in Listing 7-32.

Example 7.32. CustomerFileReader

package com.apress.springbatch.chapter7; import java.util.ArrayList; import org.springframework.batch.item.ExecutionContext; import org.springframework.batch.item.ItemStreamException; import org.springframework.batch.item.ParseException; import org.springframework.batch.item.UnexpectedInputException; import org.springframework.batch.item.file.ResourceAwareItemReaderItemStream; import org.springframework.core.io.Resource;public class CustomerFileReader implementsResourceAwareItemReaderItemStream<Object> {private Object curItem = null;private ResourceAwareItemReaderItemStream<Object> delegate;public Object read() throws Exception { if (curItem == null) { curItem = (Customer) delegate.read(); } Customer item = (Customer) curItem; curItem = null; if (item != null) { item.setTransactions(new ArrayList<Transaction>()); while (peek() instanceof Transaction) { item.getTransactions().add((Transaction) curItem); curItem = null; } } return item; } public Object peek() throws Exception, UnexpectedInputException, ParseException { if (curItem == null) { curItem = delegate.read(); } return curItem; } public void setDelegate( ResourceAwareItemReaderItemStream<Object> delegate) { this.delegate = delegate;

}

public void close() throws ItemStreamException {

delegate.close();

}

public void open(ExecutionContext arg0) throws ItemStreamException {

delegate.open(arg0);

}

public void update(ExecutionContext arg0) throws ItemStreamException {

delegate.update(arg0);

}

public void setResource(Resyource arg0) {

delegate.setResource(arg0);

}

}The sole difference from a processing standpoint between what is shown in Listing 7-33 and what you originally wrote in Listing 7-26 is the ability to inject a Resource. This allows Spring Batch to create each of the files as needed and inject them into the ItemReader instead of the ItemReader itself being responsible for file management.

When you run this example, Spring Batch will iterate through all of the resources that match your provided pattern and execute your reader for each file. The output for this job is nothing more than a larger version of the output from the multiline record example.

Example 7.33. Output from Multiline Job

Warren Q. Darrow has 1 transactions. Ann V. Gates has no transactions. Erica I. Jobs has 5 transactions. Joseph Z. Williams has 2 transactions. Estelle Y. Laflamme has 3 transactions. Robert X. Wilson has 1 transactions. Clement A. Blair has 1 transactions. Chana B. Meyer has 1 transactions. Kay C. Quinonez has 1 transactions. Kristen D. Seibert has 1 transactions. Lee E. Troupe has 1 transactions. Edgar F. Christian has 1 transactions.

It is important to note that when dealing with multiple files like this, Spring Batch provides no added safety around things like restart. So in this example, if your job started with files customerFile1.csv, customerFile2.csv, and customerFile3.csv and it were to fail after processing customerFile2.csv, and you added a customerFile4.csv before it was restated, customerFile4.csv would be processed as part of this run even though it didn't exist when the job was first executed. To safeguard against this, it's a common practice to have a directory for each batch run. All files that are to be processed for the run go into the appropriate directory and are processed. Any new files go into a new directory so that they have no impact on the currently running execution.

I have covered many scenarios involving flat files—from fixed-width records, delimited records, multiline records, and even input from multiple files. However, flat files are not the only type of files that you are likely to see. You have spent a large amount of this book (and will still spend a large amount more) looking at XML, yet you haven't even looked at how Spring Batch processes it. Let's see what Spring Batch can do for you when you're faced with XML files.

When I began talking about file-based processing at the beginning of this chapter, I talked about how different file formats have differing amounts of metadata that describe the format of the file. I said that fixed-width records have the least amount of metadata, requiring the most information about the record format to be known in advance. XML is at the other end of the spectrum. XML uses tags to describe the data in the file, providing a full description of the data it contains.

Two XML parsers are commonly used: DOM and SAX. The DOM parser loads the entire file into memory in a tree structure for navigation of the nodes. This approach is not useful for batch processing due to the performance implications. This leaves you with the SAX parser. SAX is an event-based parser that fires events when certain elements are found.

In Spring Batch, you use a StAX parser. Although this is an event-based parser similar to SAX, it has the advantage of allowing for the ability to parse sections of your document independently. This relates directly with the item oriented reading you do. A SAX parser would parse the entire file in a single run; the StAX parser allows you to read each section of a file that represents an item to be processed at a time.

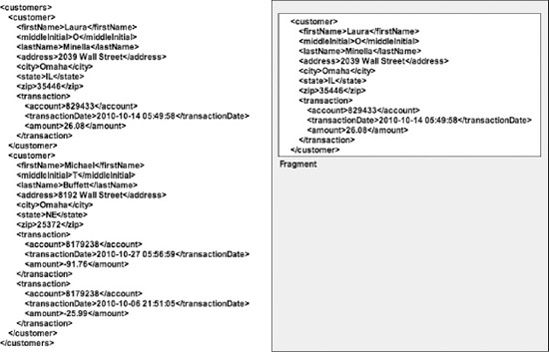

Before you look at how to parse XML with Spring Batch, let's look at a sample input file. To see how the XML parsing works with Spring Batch, you will be working with the same input: your customer file. However, instead of the data in the format of a flat file, you will structure it via XML. Listing 7-34 shows a sample of the input.

Example 7.34. Customer XML File Sample

<customers>

<customer>

<firstName>Laura</firstName>

<middleInitial>O</middleInitial>

<lastName>Minella</lastName>

<address>2039 Wall Street</address>

<city>Omaha</city>

<state>IL</state>

<zip>35446</zip>

<transaction>

<account>829433</account>

<transactionDate>2010-10-14 05:49:58</transactionDate>

<amount>26.08</amount>

</transaction>

</customer>

<customer>

<firstName>Michael</firstName>

<middleInitial>T</middleInitial>

<lastName>Buffett</lastName>

<address>8192 Wall Street</address>

<city>Omaha</city>

<state>NE</state>

<zip>25372</zip><transaction>

<account>8179238</account>

<transactionDate>2010-10-27 05:56:59</transactionDate>

<amount>-91.76</amount>

</transaction>

<transaction>

<account>8179238</account>

<transactionDate>2010-10-06 21:51:05</transactionDate>

<amount>-25.99</amount>

</transaction>

</customer>

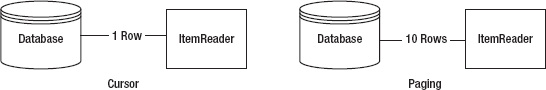

</customers>The customer file is structured as a collection of customer sections. Each of these contains a collection of transaction sections. Spring Batch parses lines in flat files into FieldSets. When working with XML, Spring Batch parses XML fragments that you define into your domain objects. What is a fragment? As Figure 7-4 shows, an XML fragment is a block of XML from open to close tag. Each time the specified fragment exists in your file, it will be considered a single record and converted into an item to be processed.

In the customer input file, you have the same data at the customer level. You also have a collection of transaction elements within each customer, representing the list of transactions you put together in the multiline example previously.

To parse your XML input file, you will use the org.springframework.batch.item.xml.StaxEventItemReader that Spring Batch provides. To use it, you define a fragment root element name, which identifies the root element of each fragment considered an item in your XML. In your case, this will be the customer tag. It also takes a resource, which will be the same your customerFile bean as it has been previously. Finally, it takes an org.springframework.oxm.Unmarshaller implementation. This will be used to convert the XML to your domain object. Listing 7-35 shows the configuration of your customerFileReader using the StaxEventItemReader implementation.

Example 7.35. customerFileReader Configured with the StaxEventItemReader

...

<beans:bean id="customerFile"

class="org.springframework.core.io.FileSystemResource" scope="step">

<beans:constructor-arg value="#{jobParameters[customerFile]}"/>

</beans:bean>

<beans:bean id="customerFileReader"

class="org.springframework.batch.item.xml.StaxEventItemReader">

<beans:property name="fragmentRootElementName" value="customer" />

<beans:property name="resource" ref="customerFile" />

<beans:property name="unmarshaller" ref="customerMarshaller" />

</beans:bean>

...Spring Batch is not picky about the XML binding technology you choose to use. Spring provides Unmarshaller implementations that use Castor, JAXB, JiBX, XMLBeans, and XStream in their oxm package. For this example, you will use the XStream binding framework.

For your customerMarshaller configuration, you will use the org.springframework.oxm.xstream.XStreamMarshaller implementation provided by Spring. To parse your customer file, there are three things you will need to configure on the XStreamMarshaller instance.

Aliases: This is a map of tag names to fully qualified class names that tells the unmarshaller what each tag maps to.

implicitCollection: This is a map of fields to fully qualified classes that indicate what fields on the class specified are collections consisting of another type.

Converters: Although XStream is pretty smart and can figure out how to convert most of your XML file from the Strings it sees in the file to the required data type in your objects, you need to help it out on the transaction date. For XStream to be able to parse the transaction date, you will need to provide a DateConverter instance configured with the correct date format.

Listing 7-36 shows how to configure your XStreamMarshaller with these dependencies.

Example 7.36. customerMarshaller Configuration

...

<beans:bean id="customerMarshaller"

class="org.springframework.oxm.xstream.XStreamMarshaller">

<beans:property name="aliases">

<beans:map><beans:entry key="customer"

value="com.apress.springbatch.chapter7.Customer" />

<beans:entry key="transaction"

value="com.apress.springbatch.chapter7.Transaction" />

<beans:entry key="account" value="java.lang.String"/>

<beans:entry key="zip" value="java.lang.String"/>

</beans:map>

</beans:property>

<beans:property name="implicitCollection">

<beans:map>

<beans:entry key="transactions"

value="com.apress.springbatch.chapter7.Customer"/>

</beans:map>

</beans:property>

<beans:property name="converters">

<beans:list>

<beans:ref local="dateConverter"/>

</beans:list>

</beans:property>

</beans:bean>

<beans:bean id="dateConverter"

class="com.thoughtworks.xstream.converters.basic.DateConverter">

<beans:constructor-arg value="yyyy-MM-dd HH:mm:ss"/>

<beans:constructor-arg value="yyyy-MM-dd HH:mm:ss"/>

</beans:bean>

...As you can see in Listing 7-36, you configure the aliases to tell your parser what each tag maps to. Note that you don't need to map every tag since the parser can figure out most of the Strings. However, in the case of the zip code and account number, you need to let XStream know that those are not any type of number field. You also let your parser know what each of the two root tags map to: transaction maps to the Transaction class and customer maps to the Customer class. The implicitCollection dependency identifies that the field transactions on the Customer object is a collection. Finally, you provide a list of converters for XStream to use when it finds types that it can't parse by default. In your case, you provide the com.thoughtworks.xstream.converters.basic.DateConverter with the correct format for the dates found in your file.

That's all you need to parse XML into items in Spring Batch! By running this job, you will get the same output as you did from the multiline record job.