In Chapter 2, you created your first job. You walked through the configuration of a job and steps, executed the job, and configured a database to store your job repository. In that "Hello, World!" example, you began to scratch the surface of what jobs and steps are in Spring Batch. This chapter continues to explore jobs and steps at a much deeper level. You begin by learning what a job and a step are in relation to the Spring Batch framework.

From there, you dive into great detail about what happens when jobs or steps are executed, from loading them and validating that they're valid to running all the way through their completion. Then, you dig into some code, see the various parts of jobs and steps that you can configure, and learn best practices along the way. Finally, you see how different pieces of the batch puzzle can pass data to each other via the various scopes involved in a Spring Batch process.

Although you dive deep into steps in this chapter, the largest parts of a step are their readers and writers, which aren't covered here. Chapters 7 and 9 explore the input and output functionality available in Spring Batch. This chapter keeps the I/O aspects of each step as simple as possible so you can focus on the intricacies of steps in a job.

With the proliferation of web applications, you may have become used to the idea of an application being broken up into requests and responses. Each request contains the data for a single unique piece of processing that occurs. The result of the request is typically a view of some kind being returned to the user. A web application can be made up of dozens to literally hundreds of unique interactions like this, each structured the same way, as shown in Figure 4-1.

Yet when you think about batch jobs, you're really talking about a collection of actions. The term flow[8] is a good way to describe a job. Using the web application example again, think about how the checkout process of a shopping cart application works. When you click Check Out with items in your cart, you're walked through a series of steps: register or sign in, confirm shipping address, enter billing information, confirm order, submit order. This flow is similar to what a job is.

For the purpose of this book, a job is defined as a unique, ordered list of steps that can be executed from start to finish independently. Let's break down this definition so you can get a better understanding of what you're working with:

Unique: Jobs in Spring Batch are configured via XML similar to how beans are configured using the core Spring framework and are reusable as a result. You can execute a job as many times as you need to with the same configuration. Because of this there is no reason to define the same job multiple times.

Ordered list of steps:[9] Going back to the checkout flow example, the order of the steps matter. You can't validate your shipping address if you haven't registered one in the first place. You can't execute the checkout process if your shopping cart is empty. The order of steps in your job is important. You can't generate a customer's statement until their transactions have been imported into your system. You can't calculate the balance of an account until you've calculated all of your fees. You structure jobs in a sequence that allows all steps to be executed in a logical order.

Can be executed from start to finish: Chapter 1 defined a batch process as a process that can run without additional interaction to some form of completion. A job is a series of steps that can be executed without external dependencies. You don't structure a job so that the third step is to wait until a file is sent to a directory to be processed. Instead, you have a job begin when the file has arrived.

Independently: Each batch job should be able to execute without external dependencies affecting it. This doesn't mean a job can't have dependencies. On the contrary, there are not many practical jobs (except "Hello, World") that don't have external dependencies. However, the job should be able to manage those dependencies. If a file isn't there, it handles the error gracefully. It doesn't wait for a file to be delivered (that's the responsibility of a scheduler, and so on). A job can handle all elements of the process it's defined to do.

As a comparison, Figure 4-2 shows how a batch process executes versus the web application in Figure 4-1.

As you can see in Figure 4-2, a batch process is executed with all of the input available for it as it runs. There are no user interactions. Each step is executed to completion against a dataset before the next step is executed. Before you dig deeply into how to configure the various features of a job in Spring Batch, let's talk about a job's execution lifecycle.

When a job is executed, it goes through a lifecycle. Knowledge of this lifecycle is important as you structure your jobs and understand what is happening as they run. When you define a job in XML, what you're really doing is providing the blueprint for a job. Just like writing the code for a Java class is like defining a blueprint for the JVM from which to create an instance, your XML definition of a job is a blueprint for Spring Batch to create an instance of your job.

The execution of a job begins with a job runner. The job runner is intended to execute the job requested by name with the parameters passed. Spring Batch provides two job runners:

CommandLineJobRunner: This job runner is intended to be used from a script or directly from the command line. When used, theCommandLineJobRunnerbootstraps Spring and executes the job requested with the parameters passed.JobRegistryBackgroundJobRunner: When using a scheduler like Quartz or a JMX hook to execute a job, typically Spring is bootstrapped and the Java process is live before the job is to be executed. In this case, aJobRegistryis created when Spring is bootstrapped containing the jobs available to run. TheJobRegistryBackgroundJobRunneris used to create theJobRegistry.

CommandLineJobRunner and JobRegistryBackgroundJobRunner (both located in the org.springframework.batch.core.launch.support package) are the two job runners provided by the framework. You used CommandLineJobRunner in Chapter 2 to run the "Hello, World!" job, and you continue to use it through out the book.

Although the job runner is what you use to interface with Spring Batch, it's not a standard piece of the framework. There is no JobRunner interface because each scenario would require a different implementation (although both of the two job runners provided by Spring Batch use main methods to start). Instead, the true entrance into the framework's execute is an implementation of the org.springframework.batch.core.launch.JobLauncher interface.

Spring Batch provides a single JobLauncher, the org.springframework.batch.core.launch.support.SimpleJobLauncher. This class uses the TaskExecutor interface from Core Spring to execute the requested job. You see in a bit at how this is configured, but it's important to note that there are multiple ways to configure the org.springframework.core.task.TaskExecutor in Spring. If an org.springframwork.core.task.SyncTaskExecutor is used, the job is executed in the same thread as the JobLauncher. Any other option executes the job in its own thread.

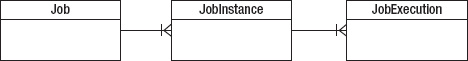

When a batch job is run, an org.springframework.batch.core.JobInstance is created. A JobInstance represents a logical run of the job and is identified by the job name and the parameters passed to the job for this run. A run of the job is different than an attempt at executing the job. If you have a job that is expected to run daily, you would have it configured once in your XML (defining the blueprint). Each day you would have a new run or JobInstance because you pass a new set of parameters into the job (one of which is the date). Each JobInstance would be considered complete when it has an attempt or JobExecution that has successfully completed.

Note

A JobInstance can only be executed once to a successful completion. Because a JobInstance is identified by the job name and parameters passed in, this means you can only run a job once with the same parameters.

You're probably wondering how Spring Batch knows the state of a JobInstance from attempt to attempt. In Chapter 2, you took a look at the job repository, and in it there was a batch_job_instance table. This table is the base from which all other tables are derived. It's the batch_job_instance and batch_job_params that identify a JobInstance (the batch_job_instance.job_key is actually a hash of the name and parameters).

An is an actual attempt to run the job. If a job runs from start to finish the first time, there is only one JobExecution related to a given JobInstance. If a job ends in an error state after the first run, a new JobExecution is created each time an attempt is made to run the JobInstance (by passing in the same parameters to the same job). For each JobExecution that Spring Batch creates for your job, a record in the batch_job_execution table is created. As the JobExecution executes, its state is maintained in the batch_job_execution_context as well. This allows Spring Batch to restart a job at the correct point if an error occurs.

Enough about theory. Let's get into some code. This section digs into the various ways to configure a job. As mentioned in Chapter 2, as with all of Spring, Spring Batch configurations are done via XML. With that in mind, one of the very welcome features added to Spring Batch 2 was the addition of a batch XSD to make configuration of batch jobs more concise.

Note

A good best practice is to configure each job in its own XML file named after the name of the job.

Listing 4-1 shows the shell of a basic Spring Batch job. For the record, this isn't a valid job. A job in Spring Batch is required to have at least one step or be declared abstract.[10] In any case, the focus here is on the job and not the steps, so you add steps to the job later in this chapter.

You used this format in Chapter 2's "Hello, World!" job, and it should look familiar to anyone who has used Spring before. Just like most other extensions of the Spring framework, you configure beans like any other use of Spring and have an XSD that defines domain-specific tags. In this case, you include the XSD for Spring Batch in the beans tag.

Example 4.1. basicJob.xml

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns:batch="http://www.springframework.org/schema/batch"

xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans-3.0.xsd

http://www.springframework.org/schema/batch

http://www.springframework.org/schema/batch/spring-batch-2.1.xsd">

<import resource="../launch-context.xml"/>

<batch:job id="basicJob">

...

</batch:job>

</beans>The first piece of the basicJob.xml file after the beans tag is an import for the launch-context.xml file, which is located in the src/main/resources directory of your project. You used this file in Chapter 2 without really going into it, so let's look at it now. Listing 4-2 shows launch-context.xml. Notice that this launch-context.xml is a significantly slimmed-down version of what came out of the zip file. This book discusses the rest of the file as you use its parts in future chapters. For now, let's focus on the pieces that you need to make Spring Batch work.

Example 4.2. launch-context.xml

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:p="http://www.springframework.org/schema/p"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans-3.0.xsd">

<bean id="dataSource" class="org.apache.commons.dbcp.BasicDataSource">

<property name="driverClassName" value="${batch.jdbc.driver}" />

<property name="url" value="${batch.jdbc.url}" />

<property name="username" value="${batch.jdbc.user}" /><property name="password" value="${batch.jdbc.password}" />

</bean>

<bean id="transactionManager"

class="org.springframework.jdbc.datasource.DataSourceTransactionManager"

lazy-init="true">

<property name="dataSource" ref="dataSource" />

</bean>

<bean id="placeholderProperties"

class="org.springframework.beans.factory.config.PropertyPlaceholderConfigure"

>

<property name="location" value="classpath:batch.properties" />

<property name="systemPropertiesModeName"

value="SYSTEM_PROPERTIES_MODE_OVERRIDE" />

<property name="ignoreUnresolvablePlaceholders" value="true" />

<property name="order" value="1" />

</bean>

<bean id="jobRepository"

class="org.springframework.batch.core.repository.support.JobRepositoryFactoryBean"

p:dataSource-ref="dataSource" p:transactionManager-ref="transactionManager" />

<bean id="jobLauncher"

class="org.springframework.batch.core.launch.support.SimpleJobLauncher">

<property name="jobRepository" ref="jobRepository" />

</bean>

</beans>launch-context.xml has most of the elements discussed in the previous section and their dependencies. It starts with a datasource. You use standard Spring configuration to configure a datasource that Spring Batch uses to access the job repository and that is also available for any other database access your batch processes may require. It's important to note that the database used by Spring Batch for the JobRepository isn't required to be the same as the schema (or schemas) used for business processing.

transactionManager also is configured in this file. Transaction processing is important in batch jobs given that you process large volumes of data in chunks and each chunk being committed at once. This again is a standard configuration using core Spring components.

Notice that you're using properties to specify values that may change from environment to environment. After transactionManager, you configure Spring's PropertyPlaceholderConfigurer to handle the population of these properties at runtime. You're using the batch.properties file to specify the values, which is included in the source provided in the zip file.

Next you have jobRepository. This is the first Spring Batch component you're going to configure in the launch-context.xml file. jobRepository is used to maintain the state of the job and each step for Spring Batch. In this case, you're configuring the handle that the framework uses to perform CRUD operations on the database. Chapter 5 goes over some advanced configurations of jobRepository including changing schema prefixes, and so on. This example configuration provides its two required dependencies: a datasource and a transaction manager.

The last piece of launch-context.xml you have here is the jobLauncher bean. As the previous section said, the job launcher is the gateway into Spring Batch framework from an execution standpoint. It is configured with the jobRepository as a dependency.

With the common components defined, let's go back to basicJob.xml. With regard to configuration, 90% of the configuration of a job is the ordered definition of the steps, which is covered later in this chapter. Note about the basicJob that you haven't configured any reference to a job repository or a transaction manager. This is because by default, Spring uses the jobRepository with the name jobRepository and the transaction manager named transactionManager. You see how to specifically configure these elements in Chapter 5, which discusses using JobRepository and its metadata.

Most of the options related to configuring a job are related to execution, so you see those later when you cover job execution. However, there is one instance when you can alter the job configuration that makes sense to discuss here: the use of inheritance.

Like most other object-oriented aspects of programming, the Spring Batch framework allows you to configure common aspects of your jobs once and then extend the base job with other jobs. Those other jobs inherit the properties of the job they're extending. But there are some caveats to inheritance in Spring Batch. Spring Batch allows the inheritance of all job-level configurations from job to job. This is an important point. You can't define a job that has common steps you can inherit. Things you're allowed to inherit are the ability to restart a job, job listeners, and a validator for any parameters passed in. To do so, you do two things: declare the parent job abstract and specify it as the parent job in any job that wants to inherit functionality from it.

Listing 4-3 configures a parent job to be restartable[11] and then extends it with sampleJob. Because sampleJob extends baseJob, it's also restartable. Listing 4-4 shows how you can configure an abstract job that has a parameter validator configured and extend it to inherit the validator as well.

Example 4.3. inheritanceJob.xml with Job Inheritance

<job id="baseJob" abstract="true" restartable="true">

</job>

<job id="inheritanceJob" parent="baseJob">

...

</job>Example 4.4. Parameter Validator Inheritance

<job id="baseJob" abstract="true" restartable="true">

<validator ref="myParameterValidator"/>

</job>

<job id="sampleJob1" parent="baseJob">

...

</job>Although most of a job's configuration can be inherited from a parent job, not all of it is. Following is a list of the things you can define in a parent job that are inherited by its children:

Restartable: Specifies whether a job is restartable or not

A parameter incrementer: Increments job parameters with each

JobExecutionListeners: Any job-level listeners

Job parameter validator: Validates that the parameters passed to a job meet any requirements

All of these concepts are new and are discussed later in this chapter. For now, all you need to be aware of is that when these values are set on an abstract job, any job extending the parent job inherits them. Things the child doesn't inherit include step configurations, step flows, and decisions. These must be defined in any job using them.

Inheritance can be helpful not only to consolidate the configuration of common attributes but also to standardize how certain things are done. Because the last example began looking at parameters and their validation, that seems like a logical next topic.

You've read a few times that a JobInstance is identified by the job name and the parameters passed into the job. You also know that because of that, you can't run the same job more than once with the same parameters. If you do, you receive an org.springframework.batch.core.launch.JobInstanceAlreadyCompleteException telling you that if you'd like to run the job again, you need to change the parameters (as shown in Listing 4-5).

Example 4.5. What Happens When You Try to Run a Job Twice with the Same Parameters

2010-11-28 21:06:03,598 ERROR

org.springframework.batch.core.launch.support.CommandLineJobRunner.main()

[org.springframework.batch.core.launch.support.CommandLineJobRunner] - <Job Terminated in

error: A job instance already exists and is complete for parameters={}. If you want to run

this job again, change the parameters.>

org.springframework.batch.core.repository.JobInstanceAlreadyCompleteException: A job instance

already exists and is complete for parameters={}. If you want to run this job again, change

the parameters.

at

org.springframework.batch.core.repository.support.SimpleJobRepository.createJobExecution(Simpl

eJobRepository.java:122)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

...So how do you pass parameters to your jobs? Spring Batch allows you not only to pass parameters to your jobs but also to automatically increment them[12] or validate them before your job runs. You start by looking at how to pass parameters to your jobs.

Passing parameters to your job depends on how you're calling your job. One of the functions of the job runner is to create an instance of org.springframework.batch.core.JobParameters and pass it to the JobLauncher for execution. This makes sense because the way you pass parameters is different if you launch a job from a command line than if you launch your job from a Quartz scheduler. Because you've been using CommandLineJobRunner up to now, let's start there.

Passing parameters to CommandLineJobRunner is as simple as passing key=value pairs on the command line. Listing 4-6 shows how to pass parameters to a job using the way you've been calling jobs up to this point.

Example 4.6. Passing Parameters to the CommandLineJobRunner

java -jar sample-application-0.0.1-SNAPSHOT.jar jobs/sampleJob.xml sampleJob name=Michael

In Listing 4-6, you pass one parameter, name. When you pass parameter into your batch job, your job runner creates an instance of JobParameters, which serves as a container for all the parameters the job received.

JobParameters isn't much more than a wrapper for a java.util.Map<String, JobParameter> object. Notice that although you're passing in Strings in this example, the value of the Map is an org.springframework.batch.core.JobParameter instance. The reason for this is type. Spring Batch provides for type conversion of parameters, and with that, type-specific accessors on the JobParameter class. If you specify the type of parameter to be a long, it's available as a java.lang.Long. String, Double, and java.util.Date are all available out of the box for conversion. In order to utilize the conversions, you tell Spring Batch the parameter type in parentheses after the parameter name, as shown in Listing 4-7. Notice that Spring Batch requires that the name of each be all lowercase.

Example 4.7. Specifying the Type of a Parameter

java -jar sample-application-0.0.1-SNAPSHOT.jar jobs/sampleJob.xml sampleJob param1(string)=Spring param2(long)=33

To view what parameters have been passed into your job, you can look in the job repository. Chapter 2 noted that there is a table for job parameters called batch_job_params, but because you didn't pass any parameters to your job, it was empty. If you explore the table after executing the examples in Listings 4-6 and 4-7, you should see what is shown in Table 4-1.

Table 4.1. Contents of BATCH_JOB_PARAMS

JOB_ INSTANCE_ ID | TYPE_CD | KEY_NAME | STRING_VAL | DATE_VAL | LONG_VAL | DOUBLE_VAL |

|---|---|---|---|---|---|---|

|

|

|

| |||

|

|

|

| |||

|

|

|

|

Now that you know how to get parameters into your batch jobs, how do you access them once you have them? If you take a quick look at the ItemReader, ItemProcessor, ItemWriter, and Tasklet interfaces, you quickly notice that all the methods of interest don't receive a JobParameters instance as one of their parameters. There are a few different options depending on where you're attempting to access the parameter:

ChunkContext: If you look at theHelloWorldtasklet, you see that theexecutemethod receives two parameters. The first parameter isorg.springframework.batch.core.StepContribution, which contains information about where you are in the step (write count, read count, and so on). The second parameter is an instance ofChunkContext. It provides the state of the job at the point of execution. If you're in a tasklet, it contains any information about the chunk you're processing. Information about that chunk includes information about the step and job. As you might guess,ChunkContexthas a reference toorg.springframework.batch.core.scope.context.StepContext, which contains yourJobParameters.Late binding: For any piece of the framework that isn't a tasklet, the easiest way to get a handle on a parameter is to inject it via the Spring Configuration. Given that

JobParametersare immutable, binding them during bootstrapping makes perfect sense.

Listing 4-8 shows an updated HelloWorld tasklet that utilizes a name parameter in the output as an example of how to access parameters from ChunkContext.

Example 4.8. Accessing JobParameters in a Tasklet

package com.apress.springbatch.chapter4;

import org.springframework.batch.core.StepContribution;

import org.springframework.batch.core.scope.context.ChunkContext;

import org.springframework.batch.core.step.tasklet.Tasklet;

import org.springframework.batch.repeat.RepeatStatus;

import org.springframework.batch.item.ExecutionContext;

public class HelloWorld implements Tasklet {

private static final String HELLO_WORLD = "Hello, %s";public RepeatStatus execute( StepContribution step,

ChunkContext context ) throws Exception {

String name =

(String) context.getStepContext().getJobParameters().get("name");

System.out.println( String.format(HELLO_WORLD, name) );

return RepeatStatus.FINISHED;

}

}Although Spring Batch stores the job parameters in an instance of the JobParameter class, when you obtain the parameters this way getJobParameters() returns a Map<String, Object>. Because of this, the previous cast is required.

Listing 4-9 shows how to use Spring's late binding to inject job parameters into components without having to reference any of the JobParameters code. Besides the use of Spring's EL (Expression Language) to pass in the value, any bean that is going to be configured with late binding is required to have the scope set to step.

Example 4.9. Obtaining Job Parameters via Late Binding

<bean id="helloWorld" class="com.apress.springbatch.chapter4.HelloWorld"

scope="step">

<property name="name" value="#{jobParameters[name]}"/>

</bean>It's important to note that in order for the configuration in Listing 4-9 to work, the HelloWorld class needs to be updated to accept the new parameter. Listing 4-10 shows the updated code for this method of parameter association.

Example 4.10. Updated HelloWorld Tasklet

package com.apress.springbatch.chapter4;

import org.springframework.batch.core.StepContribution;

import org.springframework.batch.core.scope.context.ChunkContext;

import org.springframework.batch.core.step.tasklet.Tasklet;

import org.springframework.batch.repeat.RepeatStatus;

import org.springframework.batch.item.ExecutionContext;

public class HelloWorld implements Tasklet {

private static final String HELLO_WORLD = "Hello, %s";

private String name;

public RepeatStatus execute( StepContribution step,

ChunkContext context ) throws Exception {

String name =

(String) context.getStepContext().getJobParameters().get("name");

System.out.println( String.format(HELLO_WORLD, name) );

return RepeatStatus.FINISHED;

}public void setName(String newName) {

name = newName;

}

public String getName() {

return name;

}

}With the ability to pass parameters into your jobs as well as put them to use, two parameter-specific pieces of functionality are built into the Spring Batch framework that the chapter discusses next: parameter validation and the ability to increment a given parameter with each run. Let's start with parameter validation because it's been alluded to in previous examples.

Whenever a piece of software obtains outside input, it's a good idea to be sure the input is valid for what you're expecting. The web world uses client-side JavaScript as well as various server-side frameworks to validate user input, and validation of batch parameters is no different. Fortunately, Spring has made it very easy to validate job parameters. To do so, you just need to implement the org.springframework.batch.core.JobParametersValidator interface and configure your implementation in your job. Listing 4-11 shows an example of a job parameter validator in Spring Batch.

Example 4.11. A Parameter Validator that Validates the Parameter Name Is a String

package com.apress.springbatch.chapter4;

import java.util.Map;

import org.springframework.batch.core.*;

import org.apache.commons.lang.StringUtils;

public class ParameterValidator implements JobParametersValidator{

public void validate(JobParameters params) throws

JobParametersInvalidException {

String name = params.getString("name");

if(!StringUtils.isAlpha(name)) {

throw new

JobParametersInvalidException("Name is not alphabetic");

}

}

}As you can see, the method of consequence is the validate method. Because this method is void, the validation is considered passing as long as a JobParametersInvalidException isn't thrown. In this example, if you pass the name 4566, the exception is thrown and the job completes with a status of COMPLETED. This is important to note. Just because the parameters you passed in weren't valid doesn't mean the job didn't complete correctly. In the case where invalid parameters are passed, the job is marked as COMPLETED because it did all valid processing for the input it received. And when you think about this, it makes sense. A JobInstance is identified by the job name and the parameters passed into the job. If you pass in invalid parameters, you don't want to repeat that, so it's ok to declare the job completed.

In addition to implementing your own custom parameter validator as you did earlier, Spring Batch offers a validator to confirm that all the required parameters have been passed: org.springframework.batch.core.job.DefaultJobParametersValidator. To use it, you configure it the same way you would your custom validator. DefaultJobParametersValidator has two optional dependencies: requiredKeys and optionalKeys. Both are String arrays that take in a list of parameter names that are either required or are the only optional parameters allowed. Listing 4-12 shows two configurations for DefaultJobParametersValidator as well as how to add it to your job.

Example 4.12. DefaultJobParametersValidator Configuration in parameterValidatorJob.xml

<beans:bean id="requiredParamValidator"

class="org.springframework.batch.core.job.DefaultJobParametersValidator">

<beans:property name="requiredKeys" value="batch.name,batch.runDate"/>

</beans:bean>

<beans:bean id="optionalParamValidator"

class="org.springframework.batch.core.job.DefaultJobParametersValidator">

<beans:property name="requiredKeys" value="batch.name,batch.runDate"/>

<beans:property name="optionalKeys" value="batch.address"/>

</beans:bean>

<job id="parameterValidatorJob">

...

<validator ref="requiredParamValidator"/>

</job>If you use requiredParamValidator, your job throws an exception if you don't pass the parameters batch.name and batch.runDate. You're allowed to pass more parameters in if required, but those two can't be null. On the other hand, if you use optionalParamValidator, the job once again throws an exception if batch.name and batch.runDate aren't passed to the job, but it also throws an exception if any parameters in addition to batch.address are passed. The difference between the two validators is that the first one can accept any parameters in addition to the required ones. The second one can only accept the three specified. In either case, if the invalid scenario occurs, a JobParametersInvalidException is thrown and the job is marked as completed as previously discussed.

Up to now, you've been running under the limitation that a job can only be run once with a given set of parameters. If you've been following along with the examples, you've probably hit what happens if you attempt to run the same job twice with the same parameters as shown in Listing 4-5. However, there is a small loophole: using JobParametersIncrementer.

org.springframework.batch.core.JobParametersIncrementer is an interface that Spring Batch provides to allow you to uniquely generate parameters for a given job. You can add a timestamp to each run. You may have some other business logic that requires a parameter to be incremented with each run. The framework provides a single implementation of the interface, which increments a single long parameter with the default name run.id.

Listing 4-13 shows how to configure a JobParametersIncrementer for your job by adding the reference to the job.

Example 4.13. Using a JobParametersIncrementer in a Job

<beans:bean id="idIncrementer"

class="org.springframework.batch.core.launch.support.RunIdIncrementer"/>

<job id="baseJob" incrementer="idIncrementer">

...

</job>Once you've configured JobParametersIncrementer (the framework provides org.springframework.batch.core.launch.support.RunIdIncrementer in this case), there are two more things you need to do to make this work. First you need to add the configuration for a JobExplorer implementation. Chapter 5 goes into detail about what JobExplorer is and how to use it. For now, just know that Spring Batch needs it to increment parameters. Listing 4-14 shows the configuration, but it's already configured in the launch-context.xml that is included in the zip file distribution.

Example 4.14. Configuration for JobExplorer

<bean id="jobExplorer"

class="org.springframework.batch.core.explore.support.JobExplorerFactoryBean">

<property name="dataSource" ref="dataSource"/>

</bean>The last piece of the puzzle to use a JobParametersIncrementer affects how you call your job. When you want to increment a parameter, you need to add the parameter –next to the command when you call your job. This tells Spring Batch to use the incrementer as required.

Now when you run your job with the command in Listing 4-15, you can run it as many times as you want with the same parameters.

Example 4.15. Command to Run a Job and Increment Parameters

java –jar sample-application-0.0.1-SNAPSHOT.jar jobs/sampleJob.xml sampleJob name=Michael -next

In fact, go ahead and give it a try. When you've run the sampleJob three or four times, look in the batch_job_params table and see how Spring Batch is executing your job with two parameters: one String named name with the value Michael, and one long named run.id. run.id's value changes each time, increasing by one with each execution.

You saw earlier that you may want to have a parameter be a timestamp with each run of the job. This is common in jobs that run once a day. To do so, you need to create your own implementation of JobParametersIncrementer. The configuration and execution are the same as before. However, instead of using RunIdIncrementer, you use DailyJobTimestamper, the code for which is in Listing 4-16.

Example 4.16. DailyJobTimestamper.java

package com.apress.springbatch.chapter4; import org.springframework.batch.core.JobParameters; import org.springframework.batch.core.JobParametersBuilder; import org.springframework.batch.core.JobParametersIncrementer; import java.util.Date; import org.apache.commons.lang.time.DateUtils;

public class DailyJobTimestamper implements JobParametersIncrementer {

/**

* Increment the current.date parameter.

*/

public JobParameters getNext( JobParameters parameters ) {

Date today = new Date();

if ( parameters != null && !parameters.isEmpty() ) {

Date oldDate = parameters.getDate( "current.date", new Date() );

today = DateUtils.addDays(oldDate, 1);

}

return new JobParametersBuilder().addDate( "current.date", today )

.toJobParameters();

}

}It's pretty obvious that job parameters are an important part of the framework. They allow you to specify values at runtime for your job. They also are used to uniquely identify a run of your job. You use them more throughout the book for things like configuring the dates for which to run the job and reprocessing error files. For now, let's look at another powerful feature at the job level: job listeners.

When you use a web application, feedback is essential to the user experience. A user clicks a link, and the page refreshes within a few seconds. However, as you've seen, batch processes don't provide much in the way of feedback. You launch a process, and it runs. That's it. Yes, you can query the job repository to see the current state of your job, and there is the Spring Batch Admin web application, but many times you may want something to happen at a given point in your job. Say you want to send an email if a job fails. Maybe you want to log the beginning and ending of each job to a special file. Any processing you want to occur at the beginning (once the JobExecution is created and persisted but before the first step is executed) or end of a job is done with a job listener.

There are two ways to create a job listener. The first is by implementing the org.springframework.batch.core.JobExecutionListener interface. This interface has two methods of consequence: beforeJob and afterJob. Each takes JobExecution as a parameter, and they're executed—you guessed it, before the job executes and after the job executes, respectively. One important thing to note about the afterJob method is that it's called regardless of the status the job finishes in. Because of this, you may need to evaluate the status in which the job ended to determine what to do. Listing 4-17 has an example of a simple listener that prints out some information about the job being run before and after as well as the status of the job when it completed.

Example 4.17. JobLoggerListener.java

package com.apress.springbatch.chapter4;

import org.springframework.batch.core.JobExecution;

import org.springframework.batch.core.JobExecutionListener;

public class JobLoggerListener implements JobExecutionListener {public void beforeJob(JobExecution jobExecution) {

System.out.println(jobExecution.getJobInstance().getJobName()

+ " is beginning execution");

}

public void afterJob(JobExecution jobExecution) {

System.out.println(jobExecution.getJobInstance()

.getJobName()

+ " has completed with the status " +

jobExecution.getStatus());

}

}If you remember, the book previously stated that Spring Batch doesn't support annotations yet for its configuration. That was a lie. A small number of annotations are supported, and @BeforeJob and @AfterJob are two of them. When using the annotations, the only difference, as shown in Listing 4-18, is that you don't need to implement the JobExecutionListener interface.

Example 4.18. JobLoggerListener.java

package com.apress.springbatch.chapter4;

import org.springframework.batch.core.JobExecution;

import org.springframework.batch.core.JobExecutionListener;

import org.springframework.batch.core.annotation.AfterJob;

import org.springframework.batch.core.annotation.BeforeJob;

public class JobLoggerListener {

@BeforeJob

public void beforeJob(JobExecution jobExecution) {

System.out.println(jobExecution.getJobInstance().getJobName()

+ " is beginning execution");

}

@AfterJob

public void afterJob(JobExecution jobExecution) {

System.out.println(jobExecution.getJobInstance()

.getJobName()

+ " has completed with the status " +

jobExecution.getStatus());

}

}The configuration of these two options is the same in either case. Back in the world of XML, you can configure multiple listeners in your job, as shown in Listing 4-19.

Example 4.19. Configuring Job Listeners in listenerJob.xml

<beans:bean id="loggingListener"

class="com.apress.springbatch.chapter4.JobLoggerListener"/>

<job id="listenerJob" incrementer="idIncrementer">

...

<listeners>

<listener ref="loggingListener"/>

</listeners>

</job>Earlier, this chapter discussed job inheritance. This inheritance has an impact on how you configure listeners within your job. When you have a job that has listeners and it has a parent that also has listeners, you have two options. The first option is to let the child's listeners override the parent's. If this is what you want, then you do nothing different. However, if you want both the parent's and the child's listeners to be executed, then when you configure the child's list of listeners, you use the merge attribute as shown in Listing 4-20.

Example 4.20. Merging Listeners Configured in a Parent and Child Job

<beans:bean id="loggingListener"

class="com.apress.springbatch.chapter4.JobLoggerListener"/>

<beans:bean id="theEndListener"

class="com.apress.springbatch.chapter4.JobEndingListener"/>

<job id="baseJob">

...

<listeners>

<listener ref="loggingListener"/>

</listeners>

</job>

<job id="listenerJob" parent="baseJob">

...

<listeners merge="true">

<listener ref="theEndListener"/>

</listeners>

</job>Listeners are a useful tool to be able to execute logic at certain points of your job. Listeners are also available for many other pieces of the batch puzzle, such as steps, readers, writers, and so on. You see each of those as you cover their respective components later in the book. For now, there is just one more piece to cover that pertains to jobs: ExecutionContext.

Batch processes are stateful by their nature. They need to know what step they're on. They need to know how many records they have processed within that step. These and other stateful elements are vital to not only the ongoing processing for any batch process but also restarting it if the process failed before. For example, suppose a batch process that processes a million transactions a night goes down after processing 900,000 of those records. Even with periodic commits along the way, how do you know where to pick back up when you restart? The idea of reestablishing that execution state can be daunting, which is why Spring Batch handles it for you.

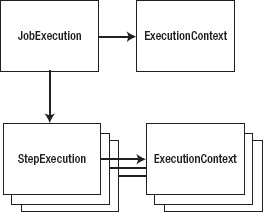

You read earlier about how a JobExecution represents an actual attempt at executing the job. It's this level of the domain that requires state to be maintained. As a JobExecution progresses through a job or step, the state changes. This state is maintained in ExecutionContext.

If you think about how web applications store state, typically it's through the HttpSession.[13] ExecutionContext is essentially the session for your batch job. Holding nothing more than simple key-value pairs, ExecutionContext provides a way to store state within your job in a safe way. One difference between a web application's session and ExecutionContext is that you actually have multiple ExecutionContexts over the course of your job. JobExecution has an ExecutionContext, as does each StepExecution (which you'll see later in this chapter). This allows data to be scoped at the appropriate level (either data-specific for the step or global data for the entire job). Figure 4-4 shows how these elements are related.

ExecutionContext provides a "safe" way to store data. The storage is safe because everything that goes into an ExecutionContext is persisted in the job repository. You briefly looked at the batch_job_execution_context and batch_step_execution_context tables in Chapter 2, but they didn't contain any meaningful data at the time. Let's look at how to add data to and retrieve data from the ExecutionContext and what it looks like in the database when you do.

The ExecutionContext is part of the JobExecution or StepExecution as mentioned earlier. Because of this, to get a handle on the ExecutionContext, you obtain it from the JobExecution or StepExecution based on which you want to use. Listing 4-21 shows how to get a handle on ExecutionContext in the HelloWorld tasklet and add to the context the name of the person you're saying hello to.

Example 4.21. Adding a Name to the Job's ExecutionContext

package com.apress.springbatch.chapter4;

import org.springframework.batch.core.StepContribution;

import org.springframework.batch.core.scope.context.ChunkContext;

import org.springframework.batch.core.step.tasklet.Tasklet;

import org.springframework.batch.repeat.RepeatStatus;

import org.springframework.batch.item.ExecutionContext;

public class HelloWorld implements Tasklet {

private static final String HELLO_WORLD = "Hello, %s";

public RepeatStatus execute( StepContribution step,

ChunkContext context ) throws Exception {

String name =

(String) context.getStepContext()

.getJobParameters()

.get("name");

ExecutionContext jobContext = context.getStepContext()

.getStepExecution()

.getJobExecution()

.getExecutionContext();

jobContext.put("user.name", name);

System.out.println( String.format(HELLO_WORLD, name) );

return RepeatStatus.FINISHED;

}

}Notice that you have to do a bit of traversal to get to the job's ExecutionContext. All you're doing in this case is going from the chunk to the step to the job, working your way up the tree of scopes. If you look at the API for StepContext, you see that there is a getJobExecutionContext() method. This method returns a Map<String, Object> that represents the current state of the job's ExecutionContext. Although this is a handy way to get access to the current values, it has one limiting factor in its use: updates made to the Map returned by the StepContext.getJobExecutionContext() method aren't persisted to the actual ExecutionContext. Thus any changes you make to that Map that aren't also made to the real ExecutionContext are lost in the event of an error.

Listing 4-21's example showed using the job's ExecutionContext, but the ability to obtain and manipulate the step's ExecutionContext works the same way. In that case, you get the ExecutionContext directly from the StepExecution instead of the JobExecution. Listing 4-22 shows the code updated to use the step's ExecutionContext instead of the job's.

Example 4.22. Adding a Name to the Job's ExecutionContext

package com.apress.springbatch.chapter4; import org.springframework.batch.core.StepContribution; import org.springframework.batch.core.scope.context.ChunkContext; import org.springframework.batch.core.step.tasklet.Tasklet;

import org.springframework.batch.repeat.RepeatStatus;

import org.springframework.batch.item.ExecutionContext;

public class HelloWorld implements Tasklet {

private static final String HELLO_WORLD = "Hello, %s";

public RepeatStatus execute( StepContribution step,

ChunkContext context ) throws Exception {

String name =

(String) context.getStepContext()

.getJobParameters()

.get("name");

ExecutionContext jobContext = context.getStepContext()

.getStepExecution()

.getExecutionContext();

jobContext.put("user.name", name);

System.out.println( String.format(HELLO_WORLD, name) );

return RepeatStatus.FINISHED;

}

}As your jobs process, Spring Batch persists your state as part of committing each chunk. Part of that persistence is the saving of the job and current step's ExecutionContexts. Chapter 2 went over the layout of the tables. Let's go ahead and execute the sampleJob job with the updates from Listing 4-21 to see what the values look like persisted in the database. Table 4-2 shows what the batch_job_execution_context table has in it after a single run with the name parameter set as Michael.

Table 4.2. Contents of BATCH_JOB_EXECUTION_CONTEXT

JOB_EXECUTION_ID | SHORT_CONTEXT | SERIALIZED_CONTEXT |

|---|---|---|

|

{"map":{"entry":{"string":["user.name",

"Michael"]}}}

|

|

Table 4-2 consists of three columns. The first is a reference to the JobExecution that this ExecutionContext is related to. The second is a JSON representation of the Job's ExecutionContext. This field is updated as processing occurs. Finally, the SERIALIZED_CONTEXT field contains a serialized Java object. The SERIALIZED_CONTEXT is only populated while a job is running or when it has failed.

This section of the chapter has gone through different pieces of what a job is in Spring Batch. In order for a job to be valid, however, it requires at least one step, which brings you to the next major piece of the Spring Batch framework: steps.

If a job defines the entire process, a step is the building block of a job. It's an independent, sequential batch processor. I call it a batch processor for a reason. A step contains all of the pieces a job requires. It handles its own input. It has its own processor. It handles its own output. Transactions are self-contained within a step. It's by design that steps are as disjointed as they're. This allows you as the developer to structure your job as freely as needed.

In this section you take the same style deep dive into steps that you did with jobs in the previous section. You cover the way Spring Batch breaks processing down in a step by chunks and how that has changed because previous versions of the framework. You also look at a number of examples on how to configure steps within your job including how to control the flow from step to step and conditional step execution. Finally you configure the steps required for your statement job. With all of this in mind, let's start looking at steps by looking at how steps process data.

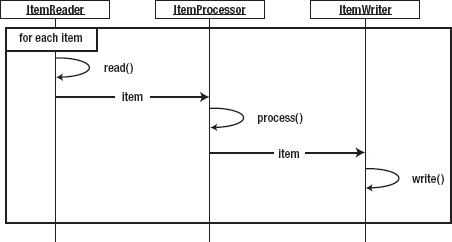

Batch processes in general are about processing data. When you think about what a unit of data to be processed is, there are two options: an individual item or a chunk of items. An individual item consists of a single object that typically represents a single row in a database or file. Item-based processing, therefore, is the reading, processing, and then writing of your data one row, record, or object at a time, as Figure 4-5 shows.

As you can imagine, there can be significant overhead with this approach. The inefficiency of writing individual rows when you know you'll be committing large numbers of rows to a database or writing them to a file can be enormous.

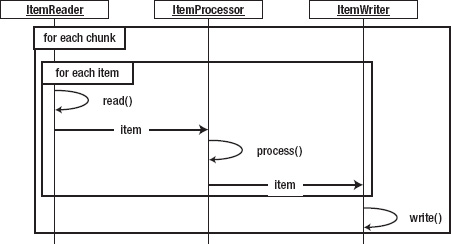

When Spring Batch 1.x came out in 2008, item-based processing was the way records were processed. Since then the guys at SpringSource and Accenture have upgraded the framework, and in Spring Batch 2, they introduced the concept of chunk-based processing. A chunk in the world of batch processing is a subset of the records or rows that need to be processed, typically defined by the commit interval. In Spring Batch, when you're working with a chunk of data, it's defined by how many rows are processed between each commit.

Figure 4-6 shows how data flows through a batch process when designed for chunk processing. Here you see that although each row is still read and processed individually, all the writing for a single chunk occurs at once when it's time to be committed. This small tweak in processing allows for large performance gains and opens up the world to many other processing capabilities.

One of the things that chunk-based processing allows you to do is to process chunks remotely. When you consider things like networking overhead, it's cost prohibitive to process individual items remotely. However, if you can send over an entire chunk of data at once to a remote processor, then instead of making performance worse, it can improve performance dramatically.

As you learn more about steps, readers, writers, and scalability throughout the book, keep in mind the chunk-based processing that Spring Batch is based on. Let's move on by digging into how to configure the building blocks of your jobs: steps.

By now, you've identified that a job is really not much more than an ordered list of steps to be executed. Because of this, steps are configured by listing them within a job. Let's examine how to configure a step and the various options that are available to you.

When you think about steps in Spring Batch, there are two different types: a step for chunk-based processing and a tasklet step. Although you used a tasklet step previously in the "Hello, World!" job, you see more detail about it later. For now, you start by looking at how to configure chunk-based steps.

As you saw earlier, chunks are defined by their commit intervals. If the commit interval is set to 50 items, then your job reads in 50 items, processes 50 items, and then writes out 50 items at once. Because of this, the transaction manager plays a key part in the configuration of a chunk-based step. Listing 4-23 shows how to configure a basic step for chunk-oriented processing.

Example 4.23. stepJob.xml

<?xml version="1.0" encoding="UTF-8"?>

<beans:beans xmlns="http://www.springframework.org/schema/batch"

xmlns:beans="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans-3.0.xsd

http://www.springframework.org/schema/batch

http://www.springframework.org/schema/batch/spring-batch-2.1.xsd">

<beans:import resource="../launch-context.xml"/>

<beans:bean id="inputFile"

class="org.springframework.core.io.FileSystemResource" scope="step">

<beans:constructor-arg value="#{jobParameters[inputFile]}"/>

</beans:bean>

<beans:bean id="outputFile"

class="org.springframework.core.io.FileSystemResource" scope="step">

<beans:constructor-arg value="#{jobParameters[outputFile]}"/>

</beans:bean>

<beans:bean id="inputReader"

class="org.springframework.batch.item.file.FlatFileItemReader">

<beans:property name="resource" ref="inputFile"/>

<beans:property name="lineMapper">

<beans:bean

class="org.springframework.batch.item.file.mapping.PassThroughLineMapper"/>

</beans:property>

</beans:bean>

<beans:bean id="outputWriter"

class="org.springframework.batch.item.file.FlatFileItemWriter">

<beans:property name="resource" ref="outputFile"/>

<beans:property name="lineAggregator">

<beans:bean

class="org.springframework.batch.item.file.transform.PassThroughLineAggregator"/>

</beans:property>

</beans:bean>

<job id="stepJob">

<step id="step1">

<tasklet>

<chunk reader="inputReader" writer="outputWriter"

commit-interval="50"/>

</tasklet>

</step>

</job>

</beans:beans>Listing 4-23 may look intimidating, but let's focus on the job and step configuration at the end. The rest of the file is the configuration of a basic ItemReader and ItemWriter, which are covered in Chapters 7 and 9, respectively. When you look through the job in Listing 4-23, you see that the step begins with the step tag. All that is required is the id or name, like any other Spring Bean. Within the step tag is a tasklet tag. The org.springframework.batch.core.step.tasklet.Tasklet interface is really a strategy interface for the type of step you're going to execute. In this case, you're configuring org.springframework.batch.core.step.item.ChunkOrientedTasklet<I>. You don't have to worry about configuring the class specifically here; just be aware that other types of tasklets can be used. The last piece of the example step is the chunk tag. Here you're defining what a chunk is for your step. You're saying to use the inputReader bean (an implementation of the ItemReader interface) as the reader and the outputWriter bean (an implementation of the ItemWriter interface) as the writer, and that a chunk consists of 50 items.

Note

When you're configuring beans with Spring, it's better to use the id attribute than the name attribute. They both have to be unique for Spring to work, but using the id attribute allows XML validators to enforce it.

It's important to note the commit-interval attribute. It's set at 50 in the example. This means no records will be written until 50 records are read and processed. If an error occurs after processing 49 items, Spring Batch will roll back the current chunk (transaction) and mark the job as failed. If you were to set the commit-interval value to 1, your job would read in a single item, process that item, and then write that item. Essentially, you would be going back to item based processing. The issue with this is that there is more than just that single item being persisted at the commit-interval. The state of the job is being updated in the job repository as well. You experiment with the commit-interval later in this book but you needed to know now that it's important to set commit-interval as high as reasonably possible.

Although the majority of your steps will be chunk-based processing and therefore use ChunkOrientedTasklet, that isn't the only option. Spring Batch provides three other implementations of the Tasklet interface: CallableTaskletAdapter, MethodInvokingTaskletAdapter, and SystemCommandTasklet. Let's look at CallableTaskletAdapter first.

org.springframework.batch.core.step.tasklet.CallableTaskletAdapter is an adapter that allows you to configure an implementation of the java.util.concurrent.Callable<RepeatStatus> interface. If you're unfamiliar with this newer interface, the Callable<V> interface is similar to the java.lang.Runnable interface in that it's intended to be run in a new thread. However, unlike the Runnable interface, which doesn't return a value and can't throw checked exceptions, the Callable interface can return a value (a RepeatStatus, in this case) and can throw checked exceptions.

The adapter is actually extremely simple in its implementation. It calls the call() method on your Callable object and returns the value that the call() method returns. That's it. Obviously you would use this if you wanted to execute the logic of your step in another thread than the thread in which the step is being executed. If you look at Listing 4-24, you can see that to use this adapter, you configure CallableTaskletAdapter as a normal Spring bean and then reference it in the tasklet tag. In the configuration of the CallableTaskletAdapter bean shown in Listing 4-24, CallableTaskletAdapter contains a single dependency: the callable object itself.

Example 4.24. Using CallableTaskletAdapter

<?xml version="1.0" encoding="UTF-8"?>

<beans:beans xmlns="http://www.springframework.org/schema/batch"

xmlns:beans="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans-3.0.xsd

http://www.springframework.org/schema/batch

http://www.springframework.org/schema/batch/spring-batch-2.1.xsd">

<beans:import resource="../launch-context.xml"/>

<beans:bean id="callableObject"

class="com.apress.springbatch.chapter4.CallableLogger"/>

<beans:bean id="callableTaskletAdapter"

class="org.springframework.batch.core.step.tasklet.CallableTaskletAdapter">

<beans:property name="callable" ref="callableObject"/>

</beans:bean>

<job id="callableJob">

<step id="step1">

<tasklet ref="callableTaskletAdapter"/>

</step>

</job>

</beans:beans>One thing to note with CallableTaskletAdapter is that although the tasklet is executed in a different thread than the step itself, this doesn't parallelize your step execution. The execution of this step won't be considered complete until the Callable object returns a valid RepeatStatus object. Until this step is considered complete, no other steps in the flow in which this step is configured will execute. You see how to parallelize processing in a number of ways, including executing steps in parallel, later in this book.

The next Tasklet implementation is org.springframework.batch.core.step.tasklet.MethodInvokingTaskletAdapter. This class is similar to a number of utility classes available in the Spring framework. It allows you to execute a preexisting method on another class as the step of your job. Say for example you already have a service that does a piece of logic that you want to run once in your batch job. Instead of writing an implementation of the Tasklet interface that really just wraps that method call, you can use MethodInvokingTaskletAdapter to call the method. Listing 4-25 shows an example of the configuration for MethodInvokingTaskletAdapter.

Example 4.25. Using MethodInvokingTaskletAdapter

<?xml version="1.0" encoding="UTF-8"?>

<beans:beans xmlns="http://www.springframework.org/schema/batch"

xmlns:beans="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans-3.0.xsd

http://www.springframework.org/schema/batch

http://www.springframework.org/schema/batch/spring-batch-2.1.xsd">

<beans:import resource="../launch-context.xml"/>

<beans:bean id="service"

class="com.apress.springbatch.chapter4.ChapterFourService"/>

<beans:bean id="methodInvokingTaskletAdapter"

class="org.springframework.batch.core.step.tasklet.MethodInvokingTaskletAdapter">

<beans:property name="targetObject" ref="service"/>

<beans:property name="targetMethod" value="serviceMethod"/>

</beans:bean>

<job id="methodInvokingJob">

<step id="step1">

<tasklet ref="methodInvokingTaskletAdapter"/>

</step>

</job>

</beans:beans>The example shown in Listing 4-25 specifies an object and a method. With this configuration, the adapter calls the method with no parameters and returns an ExitStatus.COMPLETED result unless the method specified also returns the type org.springframework.batch.core.ExitStatus. If it does return an ExitStatus, the value returned by the method is returned from the tasklet. If you want to configure a static set of parameters, you can use the late-binding method of passing job parameters that you read about earlier in this chapter, as shown in Listing 4-26.

Example 4.26. Using MethodInvokingTaskletAdapter with Parameters

beans:bean id="methodInvokingTaskletAdapter"

class="org.springframework.batch.core.step.tasklet.MethodInvokingTaskletAdapter"

scope="step">

<beans:property name="targetObject" ref="service"/>

<beans:property name="targetMethod" value="serviceMethod"/>

<beans:property name="arguments" value="#{jobParameters[message]}"/>

</beans:bean>

<job id="methodInvokingJob">

<step id="step1">

<tasklet ref="methodInvokingTaskletAdapter"/>

</step>

</job></beans:beans>

The last type of Tasklet implementation that Spring Batch provides is org.springframework.batch.core.step.tasklet.SystemCommandTasklet. This tasklet is used to—you guessed it—execute a system command! The system command specified is executed asynchronously. Because of this, the timeout value (in milliseconds) as shown in Listing 4-27 is important. The interruptOnCancel attribute in the listing is optional but indicates to Spring Batch whether to kill the thread the system process is associated with if the job exits abnormally.

Example 4.27. Using SystemCommandTasklet

<?xml version="1.0" encoding="UTF-8"?>

<beans:beans xmlns="http://www.springframework.org/schema/batch"

xmlns:beans="http://www.springframework.org/schema/beans"

xmlns:xsi=http://www.w3.org/2001/XMLSchema-instance

xsi:schemaLocation="http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans-3.0.xsd

http://www.springframework.org/schema/batch

http://www.springframework.org/schema/batch/spring-batch-2.1.xsd">

<beans:import resource="../launch-context.xml" />

<beans:bean id="tempFileDeletionCommand"

class="org.springframework.batch.core.step.tasklet.SystemCommandTasklet">

<beans:property name="command" value="rm – rf /temp.txt " />

<beans:property name="timeout" value="5000" />

<beans:property name="interruptOnCancel" value="true" />

</beans:bean>

<job id="systemCommandJob">

<step id="step1">

<tasklet ref="tempFileDeletionCommand" />

</step>

</job>

</beans:beans>SystemCommandTasklet allows you to configure a number of parameters that can have an effect on how a system command executes. Listing 4-28 shows a more robust example.

Example 4.28. Using SystemCommandTasklet with Full Environment Configuration

<beans:bean id="touchCodeMapper"

class="org.springframework.batch.core.step.tasklet.SimpleSystemProcessExitCodeMapper"/>

<beans:bean id="taskExecutor"

class="org.springframework.core.task.SimpleAsyncTaskExecutor"/>

<beans:bean id="robustFileDeletionCommand"

class="org.springframework.batch.core.step.tasklet.SystemCommandTasklet"><beans:property name="command" value="touch temp.txt" />

<beans:property name="timeout" value="5000" />

<beans:property name="interruptOnCancel" value="true" />

<beans:property name="workingDirectory"

value="/Users/mminella/spring-batch" />

<beans:property name="systemProcessExitCodeMapper"

ref="touchCodeMapper"/>

<beans:property name="terminationCheckInterval" value="5000" />

<beans:property name="taskExecutor" ref="taskExecutor" />

<beans:property name="environmentParams"

value="JAVA_HOME=/java,BATCH_HOME=/Users/batch" />

</beans:bean>

<job id="systemCommandJob">

<step id="step1">

<tasklet ref="robustFileDeletionCommand" />

</step>

</job>

</beans:beans>Listing 4-28 includes five more optional parameters in the configuration:

workingDirectory: This is the directory from which to execute the command. In this example, it's the equivalent of executingcd ˜/spring-batchbefore executing the actual command.

systemProcessExitCodeMapper: System codes may mean different things depending on the command you're executing. This property allows you to use an implementation of theorg.springframework.batch.core.step.tasklet.SystemProcessExitCodeMapperinterface to map what system-return codes go with what Spring Batch status values. Spring provides two implementations of this interface by default:org.springframework.batch.core.step.tasklet.ConfigurableSystemProcessExitCodeMapper, which allows you to configure the mapping in your XML configuration, andorg.springframework.batch.core.step.tasklet.SimpleSystemProcessExitCodeMapper, which returnsExitStatus.FINISHEDif the return code was 0 andExitStatus.FAILEDif it was anything else.

terminationCheckInterval: Because the system command is executed in an asynchronous way by default, the tasklet checks periodically to see if it has completed. By default, this value is set to one second, but you can configure it to any value you wish in milliseconds.

taskExecutor: This allows you to configure your ownTaskExecutorto execute the system command. You're highly discouraged from configuring a synchronous task executor due to the potential of locking up your job if the system command causes problems.

environmentParams: This is a list of environment parameters you can set prior to the execution of your command.

You've seen over the previous section that many different tasklet types are available in Spring Batch. Before moving off the topic, however, there is one other tasklet type to discuss: the tasklet step.

The tasklet step is different than the others you've seen. But it should be the most familiar to you, because it's what you used in the "Hello, World!" job. The way it's different is that in this case, you're writing your own code to be executed as the tasklet. Using MethodInvokingTaskletAdapter is one way to define a tasklet step. In that case, you allow Spring to forward the processing to your code. This lets you develop regular POJOs and use them as steps.

The other way to create a tasklet step is to implement the Tasklet interface as you did when you created the HelloWorld tasklet in Chapter 2. There, you implement the execute method required in the interface and return a RepeatStatus object to tell Spring Batch what to do after you completed processing. Listing 4-29 has the HelloWorld tasklet code as you constructed it in Chapter 2.

Example 4.29. HelloWorld Tasklet

package com.apress.springbatch.chapter2;

import org.springframework.batch.core.StepContribution;

import org.springframework.batch.core.scope.context.ChunkContext;

import org.springframework.batch.core.step.tasklet.Tasklet;

import org.springframework.batch.repeat.RepeatStatus;

public class HelloWorld implements Tasklet {

private static final String HELLO_WORLD = "Hello, world!";

public RepeatStatus execute( StepContribution arg0,

ChunkContext arg1 ) throws Exception {

System.out.println( HELLO_WORLD );

return RepeatStatus.FINISHED;

}

}When processing is complete in your Tasklet implementation, you return an org.springframework.batch.repeat.RepeatStatus object. There are two options with this: RepeatStatus.CONTINUABLE and RepeatStatus.FINISHED. These two values can be confusing at first glance. If you return RepeatStatus.CONTINUABLE, you aren't saying that the job can continue. You're telling Spring Batch to run the tasklet again. Say, for example, that you wanted to execute a particular tasklet in a loop until a given condition was met, yet you still wanted to use Spring Batch to keep track of how many times the tasklet was executed, transactions, and so on. Your tasklet could return RepeatStatus.CONTINUABLE until the condition was met. If you return RepeatStatus.FINISHED, that means the processing for this tasklet is complete (regardless of success) and to continue with the next piece of processing.

You configure a tasklet step as you configure any of the other tasklet types. Listing 4-30 shows HelloWorldJob configured using the HelloWorld tasklet.

Example 4.30. HelloWorldJob

<beans:bean id="helloWorld" class="com.apress.springbatch.chapter2.HelloWorld/>

<job id="helloWorldJob">

<step id="helloWorldStep">

<tasklet ref="helloWorld"/>

</step>

</job>

...You may be quick to point out that this listing isn't the same as it was in Chapter 2, and you would be correct. The reason is that you haven't seen one other feature used in Chapter 2: step inheritance.

Like jobs, steps can be inherited from each other. Unlike jobs, steps don't have to be abstract to be inherited. Spring Batch allows you to configure fully defined steps in your configuration and then have other steps inherit them. Let's start discussing step inheritance by looking at the example used in Chapter 2, the HelloWorldJob in Listing 4-31.

Example 4.31. HelloWorldJob

<beans:bean id="helloWorld"

class="com.apress.springbatch.chapter2.HelloWorld"/>

<step id="helloWorldStep">

<tasklet ref="helloWorld"/>

</step>

<job id="helloWorldJob">

<step id="step1" parent="helloWorldStep"/>

</job>In Listing 4-31, you configure the tasklet implementation (the helloWorld bean), and then you configure the step that references the tasklet (helloWorldStep). Spring Batch doesn't require that the step element be nested in a job tag. Once you're defined your step, helloWorldStep, you can then inherit it when you declare the steps in sequence in your actual job, helloWorldJob. Why would you do this?

In this simple example, there is little benefit to this approach. However, as steps become more complex, experience shows that it's best to configure your steps outside the scope of your job and then inherit them with the steps in the job. This allows the actual job declaration to be much more readable and maintainable.

Obviously, readability isn't the only reason to use inheritance, and that isn't all that is going on even in this example. Let's dive deeper. In this example, what you're really doing in step1 is inheriting the step helloWorldStep and all its attributes. However, step1 chooses not to override any of them.

Step inheritance provides a more complete inheritance model than that of job inheritance. In step inheritance you can fully define a step, inherit the step, and then add or override any of the values you wish. You can also declare a step abstract and place only common attributes there.

Listing 4-32 shows an example of how steps can add and override attributes configured in their parent. You start with the parent step, vehicleStep, which declares a reader, writer, and commit-interval. You then create two steps that inherit from vehicleStep: carStep and truckStep. Each uses the same reader and writer that has been configured in vehicleStep. In each case, they add an item processor that does different things. carStep has chosen to use the inherited commit-interval of 50 items, whereas truckStep has overridden the commit-interval and set it to 5 items.

Example 4.32. Adding Attributes in Step Inheritance

<step id="vehicleStep"> <tasklet> <chunk reader="vehicleReader" writer="vehicleWriter" commit-interval="50"/> </tasklet> </step> <step id="carStep" parent="vehicleStep"> <tasklet> <chunk processor="carProcessor"/> </tasklet> </step> <step id="truckStep" parent="vehicleStep"> <tasklet> <chunk processor="truckProcessor" commit-interval="5"/> </tasklet> </step> <job id="exampleJob"> <step id="step1" parent="carStep" next="step2"/> <step id="step2" parent="truckStep"/> </job>

By declaring a step abstract, as in Java, you're allowed to leave things out that would otherwise be required. In an abstract step, as in Listing 4-33, you're allowed to leave off the reader, writer, processor, and tasklet attributes. This would normally cause an initialization error when Spring tried to build the step; but because it's declared abstract, Spring knows that those will be populated by the steps that inherit it.

Example 4.33. An Abstract Step and Its Implementations

<beans:bean id="inputFile"

class="org.springframework.core.io.FileSystemResource" scope="step">

<beans:constructor-arg value="#{jobParameters[inputFile]}"/>

</beans:bean>

<beans:bean id="outputFile"

class="org.springframework.core.io.FileSystemResource" scope="step">

<beans:constructor-arg value="#{jobParameters[outputFile]}"/>

</beans:bean>

<beans:bean id="inputReader"

class="org.springframework.batch.item.file.FlatFileItemReader">

<beans:property name="resource" ref="inputFile"/>

<beans:property name="lineMapper"><beans:bean

class="org.springframework.batch.item.file.mapping.PassThroughLineMapper"/>

</beans:property>

</beans:bean>

<beans:bean id="outputWriter"

class="org.springframework.batch.item.file.FlatFileItemWriter">

<beans:property name="resource" ref="outputFile"/>

<beans:property name="lineAggregator">

<beans:bean

class="org.springframework.batch.item.file.transform.PassThroughLineAggregator"/>

</beans:property>

</beans:bean>

<step id="commitIntervalStep" abstract="true">

<tasklet>

<chunk commit-interval="15"/>

</tasklet>

</step>

<step id="copyStep" parent="commitIntervalStep">

<tasklet>

<chunk reader="inputReader" writer="outputWriter" />

</tasklet>

</step>

<job id="stepInheritanceJob">

<step id="step1" parent="copyStep" />

</job>

</beans:beans>In Listing 4-33, commitIntervalStep is an abstract step that is used to configure the commit interval for any step that extends this step. You configure the required elements of a step in the step that extends the abstract step, copyStep. Here you specify a reader and writer. copyStep has the same commit-interval of 15 that commitIntervalStep has, without the need to repeat the configuration.

Step inheritance allows you to configure common attributes that can be reused from step to step as well as structure your XML configuration in a maintainable way. The last example of this section used a couple of attributes that were chunk specific. To better understand them, let's go over how you can use the different features that Spring Batch provides in its chunk-based processing.

Because chunk-based processing is the foundation of Spring Batch 2, it's important to understand how to configure its various options to take full advantage of this important feature. This section covers the two options for configuring the size of a chunk: a static commit count and a CompletionPolicy implementation. All other chunk configuration options relate to error handling and are discussed in that section.

To start looking at chunk configuration, Listing 4-34 has a basic example of nothing more than a reader, writer, and commit-interval configured. The reader is an implementation of the ItemReader interface, and the writer an implementation of ItemWriter. Each of these interfaces has its own dedicated chapter later in the book, so this section doesn't go into detail about them. All you need to know is that they supply input and output, respectively, for the step. The commit-interval defines how many items make up a chunk (50 items, in this case).

Example 4.34. A Basic Chunk Configuration

<?xml version="1.0" encoding="UTF-8"?>

<beans:beans xmlns="http://www.springframework.org/schema/batch"

xmlns:beans="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans-3.0.xsd

http://www.springframework.org/schema/batch

http://www.springframework.org/schema/batch/spring-batch-2.1.xsd">

<beans:import resource="../launch-context.xml" />

<beans:bean id="inputFile"

class="org.springframework.core.io.FileSystemResource" scope="step">

<beans:constructor-arg value="#{jobParameters[inputFile]}"/>

</beans:bean>

<beans:bean id="outputFile"

class="org.springframework.core.io.FileSystemResource" scope="step">

<beans:constructor-arg value="#{jobParameters[outputFile]}"/>

</beans:bean>

<beans:bean id="inputReader"

class="org.springframework.batch.item.file.FlatFileItemReader">

<beans:property name="resource" ref="inputFile"/>

<beans:property name="lineMapper">

<beans:bean

class="org.springframework.batch.item.file.mapping.PassThroughLineMapper"/>

</beans:property>

</beans:bean>

<beans:bean id="outputWriter"

class="org.springframework.batch.item.file.FlatFileItemWriter">

<beans:property name="resource" ref="outputFile"/>

<beans:property name="lineAggregator">

<beans:bean