CHAPTER 8

Data analysis

“Data! Data! Data!” he cried impatiently. “I can’t make bricks without clay.”

Sherlock Holmes

The Adventure of the Copper Beeches (1892)

IN Chapter 6, we designed algorithms to analyze and manipulate text, which is stored as a sequence of characters. In this chapter, we will design algorithms to process and learn from more general collections of data. The problems in this chapter involve earthquake measurements, SAT scores, isotope ratios, unemployment rates, meteorite locations, consumer demand, river flow, and more. Data sets such as these have become a (if not, the) vital component of many scientific, non-profit, and commercial ventures. Many of these now employ experts in data science and/or data mining who use advanced techniques to transform data into valuable information to guide the organization.

To solve these problems, we need an abstract data type (ADT) in which to store a collection of data. The simplest and most intuitive such abstraction is a list, which is simply a sequence of items. In previous chapters, we discovered how to generate Python list objects with the range function and how to accumulate lists of coordinates to visualize in plots. To solve the problems in this chapter, we will also grow and shrink lists, and modify and rearrange their contents, without having to worry about where or how they are stored in memory.

8.1 SUMMARIZING DATA

A list is represented as a sequence of items, separated by commas, and enclosed in square brackets ([ ]). Lists can contain any kind of data we want; for example:

>>> sales = [32, 42, 11, 15, 58, 44, 16]

>>> unemployment = [0.082, 0.092, 0.091, 0.063, 0.068, 0.052]

>>> votes = ['yea', 'yea', 'nay', 'yea', 'nay']

>>> points = [[2, 1], [12, 3], [6, 5], [3, 14]]

The first example is a list representing hundreds of daily sales, the second example is a list of the 2012 unemployment rates of the six largest metropolitan areas, and the third example is a list of votes of a five-member board. The last example is a list of (x, y) coordinates, each of which is represented by a two-element list. Although they usually do not in practice, lists can also contain items of different types:

>>> crazy = [15, 'gtaac', [1, 2, 3], max(4, 14)]

>>> crazy

[15, 'gtaac', [1, 2, 3], 14]

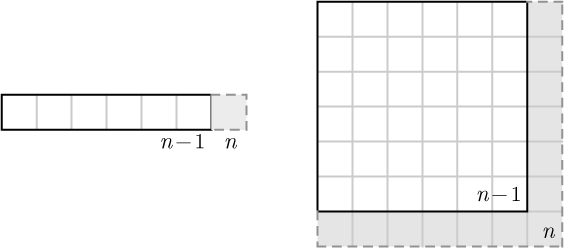

Since lists are sequences like strings, they can also be indexed and sliced. But now indices refer to list elements instead of characters and slices are sublists instead of substrings:

>>> sales[1]

42

>>> votes[:3]

['yea', 'yea', 'nay']

Now suppose that we are running a small business, and we need to get some basic descriptive statistics about last week’s daily sales, starting with the average (or mean) daily sales for the week. Recall from Section 1.2 that, to find the mean of a list of numbers, we need to first find their sum by iterating over the list. Iterating over the values in a list is essentially identical to iterating over the characters in a string, as illustrated below.

def mean(data):

"""Compute the mean of a list of numbers.

Parameter:

data: a list of numbers

Return value: the mean of the numbers in data

"""

sum = 0

for item in data:

sum = sum + item

return sum / len(data)

In each iteration of the for loop, item is assigned the next value in the list named data, and then added to the running sum. After the loop, we divide the sum by the length of the list, which is retrieved with the same len function we used on strings.

Reflection 8.1 Does this work when the list is empty?

If data is the empty list ([ ]), then the value of len(data) is zero, resulting in a “division by zero” error in the return statement. We have several options to deal with this. First, we could just let the error happen. Second, if you read Section 7.2, we could use an assert statement to print an error message and abort. Third, we could detect this error with an if statement and return something that indicates that an error occurred. In this case, we will adopt the last option by returning None and indicating this possibility in the docstring.

1 def mean(data):

2 """Compute the mean of a non-empty list of numbers.

3

4 Parameter:

5 data: a list of numbers

6

7 Return value: the mean of numbers in data or None if data is empty

8 """

9

10 if len(data) == 0:

11 return None

12

13 sum = 0

14 for item in data:

15 sum = sum + item

16 return sum / len(data)

This for loop is yet another example of an accumulator, and is virtually identical to the countLinks function that we developed in Section 4.1. To illustrate what is happening, suppose we call mean from the following main function.

def main():

sales = [32, 42, 11, 15, 58, 44, 16]

averageSales = mean(sales)

print('Average daily sales were', averageSales)

main()

The call to mean(sales) above will effectively execute the following sequence of statements inside the mean function. The changing value of item assigned by the for loop is highlighted in red. The numbers on the left indicate which line in the mean function is being executed in each step.

14 sum = 0 # sum is initialized

15 item = 32 # for loop assigns 32 to item

16 sum = sum + item # sum is assigned 0 + 32 = 32

15 item = 42 # for loop assigns 42 to item

16 sum = sum + item # sum is assigned 32 + 42 = 74

⋮

15 item = 16 # for loop assigns 16 to item

16 sum = sum + item # sum is assigned 202 + 16 = 218

17 return sum / len(data) # returns 218 / 7 ≈ 31.14

Reflection 8.2 Fill in the missing steps above to see how the function arrives at a sum of 218.

The mean of a data set does not adequately describe it if there is a lot of variability in the data, i.e., if there is no “typical” value. In these cases, we need to accompany the mean with the variance, which is measure of how much the data varies from the mean. Computing the variance is left as Exercise 8.1.10.

Now let’s think about how to find the minimum and maximum sales in the list. Of course, it is easy for us to just look at a short list like the one above and pick out the minimum and maximum. But a computer does not have this ability. Therefore, as you think about these problems, it may be better to think about a very long list instead, one in which the minimum and maximum are not so obvious.

Reflection 8.3 Think about how you would write an algorithm to find the minimum value in a long list. (Similar to a running sum, keep track of the current minimum.)

As the hint suggests, we want to maintain the current minimum while we iterate over the list with a for loop. When we examine each item, we need to test whether it is smaller than the current minimum. If it is, we assign the current item to be the new minimum. The following function implements this algorithm.

def min(data):

"""Compute the minimum value in a non-empty list of numbers.

Parameter:

data: a list of numbers

Return value: the minimum value in data or None if data is empty

"""

if len(data) == 0:

return None

minimum = data[0]

for item in data[1:]:

if item < minimum:

minimum = item

return minimum

Before the loop, we initialize minimum to be the first value in the list, using indexing. Then we iterate over the slice of remaining values in the list. In each iteration, we compare the current value of item to minimum and, if item is smaller than minimum, update minimum to the value of item. At the end of the loop, minimum is assigned the smallest value in the list.

Reflection 8.4 If the list [32, 42, 11, 15, 58, 44, 16] is assigned to data, then what are the values of data[0] and data[1:]?

Let’s look at a small example of how this function works when we call it with the list containing only the first four numbers from the list above: [32, 42, 11, 15]. The function begins by assigning the value 32 to minimum. The first value of item is 42. Since 42 is not less than 32, minimum remains unchanged. In the next iteration of the loop, the third value in the list, 11, is assigned to item. In this case, since 11 is less than 32, the value of minimum is updated to 11. Finally, in the last iteration of the loop, item is assigned the value 15. Since 15 is greater than 11, minimum is unchanged. At the end, the function returns the final value of minimum, which is 11. A function to compute the maximum is very similar, so we leave it as an exercise.

Reflection 8.5 What would happen if we iterated over data instead of data[1:]? Would the function still work?

If we iterated over the entire list instead, the first comparison would be useless (because item and minimum would be the same) so it would be a little less efficient, but the function would still work fine.

Now what if we also wanted to know on which day of the week the minimum sales occurred? To answer this question, assuming we know how indices correspond to days of the week, we need to find the index of the minimum value in the list. As we learned in Chapter 6, we need to iterate over the indices in situations like this:

def minDay(data):

"""Compute the index of the minimum value in a non-empty list.

Parameter:

data: a list of numbers

Return value: the index of the minimum value in data

or -1 if data is empty

"""

if len(data) == 0:

return -1

minIndex = 0

for index in range(1, len(data)):

if data[index] < data[minIndex]:

minIndex = index

return minIndex

This function performs almost exactly the same algorithm as our previous min function, but now each value in the list is identified by data[index] instead of item and we remember the index of current minimum in the loop instead of the actual minimum value.

Reflection 8.6 How can we modify the minDay function to return a day of the week instead of an index, assuming the sales data starts on a Sunday.

One option would be to replace return minIndex with if/elif/else statements, like the following:

if minIndex == 0:

return 'Sunday'

elif minIndex == 1:

return 'Monday'

⋮

else:

return 'Saturday'

But a more clever solution is to create a list of the days of the week that are in the same order as the sales data. Then we can simply use the value of minIndex as an index into this list to return the correct string.

days = ['Sunday', 'Monday', 'Tuesday', 'Wednesday', 'Thursday',

'Friday', 'Saturday']

return days[minIndex]

There are many other descriptive statistics that we can use to summarize the contents of a list. The following exercises challenge you to implement some of them.

Exercises

8.1.1. Suppose a list is assigned to the variable name data. Show how you would

- print the length of

data - print the third element in

data - print the last element in

data - print the last three elements in

data - print the first four elements in

data - print the list consisting of the second, third, and fourth elements in

data

8.1.2. In the mean function, we returned None if data was empty. Show how to modify the following main function so that it properly tests for this possibility and prints an appropriate message.

def main():

someData = getInputFromSomewhere()

average = mean(someData)

print('The mean value is', average)

8.1.3. Write a function

sumList(data)

that returns the sum of all of the numbers in the list data. For example, sumList([1, 2, 3]) should return 6.

8.1.4. Write a function

sumOdds(data)

that returns the sum of only the odd integers in the list data. For example, sumOdds([1, 2, 3]) should return 4.

8.1.5. Write a function

countOdds(data)

that returns the number of odd integers in the list data. For example, countOdds([1, 2, 3]) should return 2.

8.1.6. Write a function

multiples5(data)

that returns the number of multiples of 5 in a list of integers. For example, multiples5([5, 7, 2, 10]) should return 2.

8.1.7. Write a function

countNames(words)

that returns the number of capitalized names in the list of strings named words. For example, countNames(['Fili', 'Oin', 'Thorin', 'and', 'Bilbo', 'are', 'characters', 'in', 'a', 'book', 'by', 'Tolkien']) should return 5.

8.1.8. The percentile associated with a particular value in a data set is the number of values that are less than or equal to it, divided by the total number of values, times 100. Write a function

percentile(data, value)

that returns the percentile of value in the list named data.

meanSquares(data)

that returns the mean (average) of the squares of the numbers in a list named data.

variance(data)

that returns the variance of a list of numbers named data. The variance is defined to be the mean of the squares of the numbers in the list minus the square of the mean of the numbers in the list. In your implementation, call your function from Exercise 8.1.9 and the mean function from this section.

8.1.11. Write a function

max(data)

that returns the maximum value in the list of numbers named data. Do not use the built-in max function.

8.1.12. Write a function

shortest(words)

that returns the shortest string in a list of strings named words. In case of ties, return the first shortest string. For example, shortest(['spider', 'ant', 'beetle', 'bug'] should return the string 'ant'.

8.1.13. Write a function

span(data)

that returns the difference between the largest and smallest numbers in the list named data. Do not use the built-in min and max functions. (But you may use your own functions.) For example, span([9, 4, 2, 1, 7, 7, 3, 2]) should return 8.

maxIndex(data)

that returns the index of the maximum item in the list of numbers named data. Do not use the built-in max function.

8.1.15. Write a function

secondLargest(data)

that returns the second largest number in the list named data. Do not use the built-in max function. (But you may use your maxIndex function from Exercise 8.1.14.)

search(data, target)

that returns True if the target is in the list named data, and False otherwise. Do not use the in operator to test whether an item is in the list. For example, search(['Tris', 'Tobias', 'Caleb'], 'Tris') should return True, but search(['Tris', 'Tobias', 'Caleb'], 'Peter') should return False.

8.1.17. Write a function

search(data, target)

that returns the index of target if it is found in the list named data, and -1 otherwise. Do not use the in operator or the index method to test whether items are in the list. For example, search(['Tris', 'Tobias', 'Caleb'], 'Tris') should return 0, but search(['Tris', 'Tobias', 'Caleb'], 'Peter') should return -1.

8.1.18. Write a function

intersect(data1, data2)

that returns True if the two lists named data1 and data2 have any common elements, and False otherwise. (Hint: use your search function from Exercise 8.1.16.) For example, intersect(['Katniss', 'Peeta', 'Gale'], ['Foxface', 'Marvel', 'Glimmer']) should return False, but intersect(['Katniss', 'Peeta', 'Gale'], ['Gale', 'Haymitch', 'Katniss']) should return True.

8.1.19. Write a function

differ(data1, data2)

that returns the first index at which the two lists data1 and data2 differ. If the two lists are the same, your function should return -1. You may assume that the lists have the same length. For example, differ(['CS', 'rules', '!'], ['CS', 'totally', 'rules!']) should return the index 1.

8.1.20. A checksum is a digit added to the end of a sequence of data to detect error in the transmission of the data. (This is a generalization of parity from Exercise 6.1.11 and similar to Exercise 6.3.17.) Given a sequence of decimal digits, the simplest way to compute a checksum is to add all of the digits and then find the remainder modulo 10. For example, the checksum for the sequence 48673 is 8 because (4 + 8 + 6 + 7 + 3) mod 10 = 28 mod 10 = 8. When this sequence of digits is transmitted, the checksum digit will be appended to the end of the sequence and checked on the receiving end. Most numbers that we use on a daily basis, including credit card numbers and ISBN numbers, as well as data sent across the Internet, include checksum digits. (This particular checksum algorithm is not particularly good, so “real” checksum algorithms are a bit more complicated; see, for example, Exercise 8.1.21.)

- Write a function

checksum(data)

that computes the checksum digit for the list of integers nameddataand returns the list with the checksum digit added to the end. For example,checksum([4, 8, 6, 7, 3])should return the list[4, 8, 6, 7, 3, 8]. - Write a function

check(data)

that returns a Boolean value indicating whether the last integer indatais the correct checksum value. - Demonstrate a transmission (or typing) error that could occur in the sequence

[4, 8, 6, 7, 3, 8](the last digit is the checksum digit) that would not be detected by thecheckfunction.

8.1.21. The Luhn algorithm is the standard algorithm used to validate credit card numbers and protect against accidental errors. Read about the algorithm online, and then write a function

validateLuhn(number)

that returns True if the number if valid and False otherwise. The number parameter will be a list of digits. For example, to determine if the credit card number 4563 9601 2200 1999 is valid, one would call the function with the parameter [4, 5, 6, 3, 9, 6, 0, 1, 2, 2, 0, 0, 1, 9, 9, 9]. (Hint: use a for loop that iterates in reverse over the indices of the list.)

8.2 CREATING AND MODIFYING LISTS

We often want to create or modify data, rather than just summarize it. We have already seen some examples of this with the list accumulators that we have used to build lists of data to plot. In this section, we will revisit some of these instances, and then build upon them to demonstrate some of the nuts and bolts of working with data stored in lists. Several of the projects at the end of this chapter can be solved using these techniques.

List accumulators, redux

We first encountered lists in Section 4.1 in a slightly longer version of the following function.

import matplotlib.pyplot as pyplot

def pond(years):

""" (docstring omitted) """

population = 12000

populationList = [population]

for year in range(1, years + 1):

population = 1.08 * population - 1500

populationList.append(population)

pyplot.plot(range(years + 1), populationList)

pyplot.show()

return population

In the first red statement, the list named populationList is initialized to the single-item list [12000]. In the second red statement, inside the loop, each new population value is appended to the list. Finally, the list is plotted with the pyplot.plot function.

We previously called this technique a list accumulator, due to its similarity to integer accumulators. List accumulators can be applied to a variety of problems. For example, consider the find function from Section 6.5. Suppose that, instead of returning only the index of the first instance of the target string, we wanted to return a list containing the indices of all the instances:

def find(text, target):

""" (docstring omitted) """

indexList = []

for index in range(len(text) - len(target) + 1):

if text[index:index + len(target)] == target:

indexList.append(index)

return indexList

In this function, just as in the pond function, we initialize the list before the loop, and append an index to the list inside the loop (wherever we find the target string). At the end of the function, we return the list of indices. The function call

find('Well done is better than well said.', 'ell')

would return the list [1, 26]. On the other hand, the function call

find('Well done is better than well said.', 'Franklin')

would return the empty list [] because the condition in the if statement is never true.

This list accumulator pattern is so common that there is a shorthand for it called a list comprehension. You can learn more about list comprehensions by reading the optional material at the end of this section, starting on Page 368.

Lists are mutable

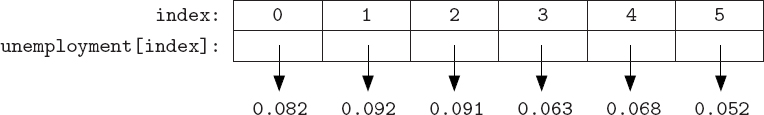

We can modify an existing list with append because, unlike strings, lists are mutable. In other words, the components of a list can be changed. We can also change individual elements in a list. For example, if we need to change the second value in the list of unemployment rates, we can do so:

>>> unemployment = [0.082, 0.092, 0.091, 0.063, 0.068, 0.052]

>>> unemployment[1] = 0.062

>>> unemployment

[0.082, 0.062, 0.091, 0.063, 0.068, 0.052]

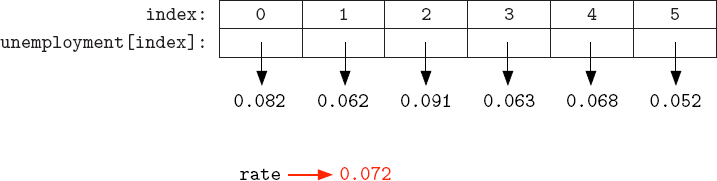

We can change individual elements in a list because each of the elements is an independent reference to a value, like any other variable name. We can visualize the original unemployment list like this:

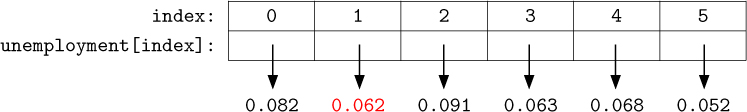

So the value 0.082 is assigned to the name unemployment[0], the value 0.092 is assigned to the name unemployment[1], etc. When we assigned a new value to unemployment[1] with unemployment[1] = 0.062, we were simply assigning a new value to the name unemployment[1], like any other assignment statement:

Suppose we wanted to adjust all of the unemployment rates in this list by subtracting one percent from each of them. We can do this with a for loop that iterates over the indices of the list.

>>> for index in range(len(unemployment)):

unemployment[index] = unemployment[index] - 0.01

>>> unemployment

[0.072, 0.052, 0.081, 0.053, 0.058, 0.042]

This for loop is simply equivalent to the following six statements:

unemployment[0] = unemployment[0] - 0.01

unemployment[1] = unemployment[1] - 0.01

unemployment[2] = unemployment[2] - 0.01

unemployment[3] = unemployment[3] - 0.01

unemployment[4] = unemployment[4] - 0.01

unemployment[5] = unemployment[5] - 0.01

Reflection 8.7 Is it possible to achieve the same result by iterating over the values in the list instead? In other words, does the following for loop accomplish the same thing? (Try it.) Why or why not?

for rate in unemployment:

rate = rate - 0.01

This loop does not modify the list because rate, which is being modified, is not a name in the list. So, although the value assigned to rate is being modified, the list itself is not. For example, at the beginning of the first iteration, 0.082 is assigned to rate, as illustrated below.

Then, when the modified value rate - 0.01 is assigned to rate, this only affects rate, not the original list, as illustrated below.

List parameters are mutable too

Now let’s put the correct loop above in a function named adjust that takes a list of unemployment rates as a parameter. We can then call the function with an actual list of unemployment rates that we wish to modify:

def adjust(rates):

"""Subtract one percent (0.01) from each rate in a list.

Parameter:

rates: a list of numbers representing rates (percentages)

Return value: None

"""

for index in range(len(rates)):

rates[index] = rates[index] - 0.01

def main():

unemployment = [0.053, 0.071, 0.065, 0.074]

adjust(unemployment)

print(unemployment)

main()

The list named unemployment is assigned in the main function and then passed in for the parameter rates to the adjust function. Inside the adjust function, every value in rates is decremented by 0.01. What effect, if any, does this have on the list assigned to unemployment? To find out, we need to look carefully at what happens when the function is called.

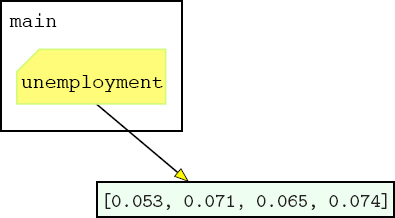

Right after the assignment statement in the main function, the situation looks like the following, with the variable named unemployment in the main namespace assigned the list [0.053, 0.071, 0.065, 0.074].

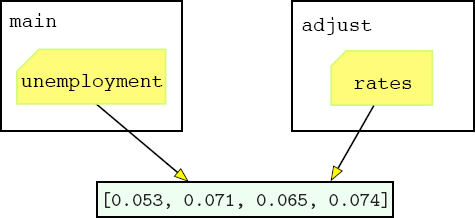

Now recall from Section 3.5 that, when an argument is passed to a function, it is assigned to its associated parameter. Therefore, immediately after the adjust function is called from main, the parameter rates is assigned the same list as unemployment:

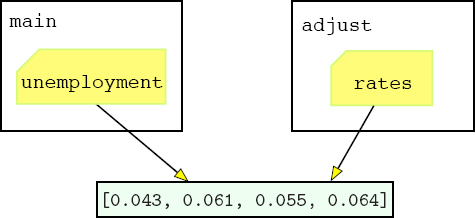

After adjust executes, 0.01 has been subtracted from each value in rates, as the following picture illustrates.

But notice that, since the same list is assigned to unemployment, these changes will also be reflected in the value of unemployment back in the main function. In other words, after the adjust function returns, the picture looks like this:

So when unemployment is printed at the end of main, the adjusted list [0.043, 0.061, 0.055, 0.064] will be displayed.

Reflection 8.8 Why does the argument’s value change in this case when it did not in the parameter passing examples in Section 3.5? What is different?

The difference here is that lists are mutable. When you pass a mutable value as an argument, any changes to the associated formal parameter inside the function will be reflected in the value of the argument. Therefore, when we pass a list as an argument to a function, the values in the list can be changed inside the function.

What if we did not want to change the argument to the adjust function (i.e., unemployment), and instead return a new adjusted list? One alternative, illustrated in the function below, would be to make a copy of rates, using the list method copy, and then modify this copy instead.

def adjust(rates):

""" (docstring omitted) """

ratesCopy = rates.copy()

for index in range(len(ratesCopy)):

ratesCopy[index] = ratesCopy[index] - 0.01

return ratesCopy

The copy method creates an independent copy of the list in memory, and returns a reference to this new list so that it can be assigned to a variable name (in this case, ratesCopy). There are other solutions to this problem as well, which we leave as exercises.

Tuples

Python offers another list-like object called a tuple. A tuple works just like a list, with two substantive differences. First, a tuple is enclosed in parentheses instead of square brackets. Second, a tuple is immutable. For example, as a tuple, the unemployment data would look like (0.053, 0.071, 0.065, 0.074).

Tuples can be used in place of lists in situations where the object being represented has a fixed length, and individual components are not likely to change. For example, colors are often represented by their (red, green, blue) components (see Box 3.2) and two-dimensional points by (x, y).

>>> point = (4, 2)

>>> point

(4, 2)

>>> green = (0, 255, 0)

Reflection 8.9 Try reassigning the first value in point to 7. What happens?

>>> point[0] = 7

TypeError: 'tuple' object does not support item assignment

Tuples are more memory efficient than lists because extra memory is set aside in a list for a few appends before more memory must be allocated.

List operators and methods

Two operators that we used to create new strings can also be used to create new lists. The repetition operator * creates a new list that is built from repeats of the contents of a smaller list. For example:

>>> empty = [0] * 5

>>> empty

[0, 0, 0, 0, 0]

>>> ['up', 'down'] * 4

['up', 'down', 'up', 'down', 'up', 'down', 'up', 'down']

The concatenation operator + creates a new list that is the result of “sticking” two lists together. For example:

>>> unemployment = [0.082, 0.092, 0.091, 0.063, 0.068, 0.052]

>>> unemployment = unemployment + [0.087, 0.101]

>>> unemployment

[0.082, 0.092, 0.091, 0.063, 0.068, 0.052, 0.087, 0.101]

Notice that the concatenation operator combines two lists to create a new list, whereas the append method inserts a new element into an existing list as the last element. In other words,

unemployment = unemployment + [0.087, 0.101]

accomplishes the same thing as the two statements

unemployment.append(0.087)

unemployment.append(0.101)

However, using concatenation actually creates a new list that is then assigned to unemployment, whereas using append modifies an existing list. So using append is usually more efficient than concatenation if you are just adding to the end of an existing list.

Reflection 8.10 How do the results of the following two statements differ? If you want to add the number 0.087 to the end of the list, which is correct?

unemployment.append(0.087)

and

unemployment.append([0.087])

The list class has several useful methods in addition to append. We will use many of these in the upcoming sections to solve a variety of problems. For now, let’s look at four of the most common methods: sort, insert, pop, and remove.

The sort method simply sorts the elements in a list in increasing order. For example, suppose we have a list of SAT scores that we would like to sort:

>>> scores = [620, 710, 520, 550, 640, 730, 600]

>>> scores.sort()

>>> scores

[520, 550, 600, 620, 640, 710, 730]

Note that the sort and append methods, as well as insert, pop and remove, do not return new lists; instead they modify the lists in place. In other words, the following is a mistake:

>>> scores = [620, 710, 520, 550, 640, 730, 600]

>>> newScores = scores.sort()

Reflection 8.11 What is the value of newScores after we execute the statement above?

Printing the value of newScores reveals that it refers to the value None because sort does not return anything (meaningful). However, scores was modified as we expected:

>>> newScores

>>> scores

[520, 550, 600, 620, 640, 710, 730]

The sort method will sort any list that contains comparable items, including strings. For example, suppose we have a list of names that we want to be in alphabetical order:

>>> names = ['Eric', 'Michael', 'Connie', 'Graham']

>>> names.sort()

>>> names

['Connie', 'Eric', 'Graham', 'Michael']

Reflection 8.12 What happens if you try to sort a list containing items that cannot be compared to each other? For example, try sorting the list [3, 'one', 4, 'two'].

The insert method inserts an item into a list at a particular index. For example, suppose we want to insert new names into the sorted list above to maintain alphabetical order:

>>> names.insert(3, 'John')

>>> names

['Connie', 'Eric', 'Graham', 'John', 'Michael']

>>> names.insert(0, 'Carol')

>>> names

['Carol', 'Connie', 'Eric', 'Graham', 'John', 'Michael']

The first parameter of the insert method is the index where the inserted item will reside after the insertion.

The pop method is the inverse of insert; pop deletes the list item at a given index and returns the deleted value. For example,

>>> inMemoriam = names.pop(3)

>>> names

['Carol', 'Connie', 'Eric', 'John', 'Michael']

>>> inMemoriam

'Graham'

If the argument to pop is omitted, pop deletes and returns the last item in the list.

The remove method also deletes an item from a list, but takes the value of an item as its parameter rather than its position. If there are multiple items in the list with the given value, the remove method only deletes the first one. For example,

>>> names.remove('John')

>>> names

['Carol', 'Connie', 'Eric', 'Michael']

Reflection 8.13 What happens if you try to remove 'Graham' from names now?

*List comprehensions

As we mentioned at the beginning of this section, the list accumulator pattern is so common that there is a shorthand for it called a list comprehension. A list comprehension allows us to build up a list in a single statement. For example, suppose we wanted to create a list of the first 15 even numbers. Using a for loop, we can construct the desired list with:

evens = [ ]

for i in range(15):

evens.append(2 * i)

An equivalent list comprehension looks like this:

evens = [2 * i for i in range(15)]

The first part of the list comprehension is an expression representing the items we want in the list. This is the same as the expression that would be passed to the append method if we constructed the list the “long way” with a for loop. This expression is followed by a for loop clause that specifies the values of an index variable for which the expression should be evaluated. The for loop clause is also identical to the for loop that we would use to construct the list the “long way.” This correspondence is illustrated below:

NumPy is a Python module that provides a different list-like class named array. (Because the numpy module is required by the matplotlib module, you should already have it installed.) Unlike a list, a NumPy array is treated as a mathematical vector. There are several different ways to create a new array. We will only illustrate two:

>>> import numpy

>>> a = numpy.array([1, 2, 3, 4, 5])

>>> print(a)

[1 2 3 4 5]

>>> b = numpy.zeros(5)

>>> print(b)

[ 0. 0. 0. 0. 0.]

In the first case, a was assigned an array created from a list of numbers. In the second, b was assigned an array consisting of 5 zeros. One advantage of an array over a list is that arithmetic operations and functions are applied to each of an array object’s elements individually. For example:

>>> print(a * 3)

[ 3 6 9 12 15]

>>> c = numpy.array([3, 4, 5, 6, 7])

>>> print(a + c)

[ 4 6 8 10 12]

There are also many functions and methods available to array objects. For example:

>>> print(c.sum())

25

>>> print(numpy.sqrt(c))

[ 1.73205081 2. 2.23606798 2.44948974 2.64575131]

An array object can also have more than one dimension, as we will discuss in Chapter 9. If you are interested in learning more about NumPy, visit http://www.numpy.org.

List comprehensions can also incorporate if statements. For example, suppose we wanted a list of the first 15 even numbers that are not divisible by 6. A for loop to create this list would look just like the previous example, with an additional if statement that checks that 2 * i is not divisible by 6 before appending it:

evens = [ ]

for i in range(15):

if 2 * i % 6 != 0:

evens.append(2 * i)

This can be reproduced with a list comprehension that looks like this:

evens = [2 * i for i in range(15) if 2 * i % 6 != 0]

The corresponding parts of this loop and list comprehension are illustrated below:

In general, the initial expression in a list comprehension can be followed by any sequence of for and if clauses that specify the values for which the expression should be evaluated.

Reflection 8.14 Rewrite the find function on Page 361 using a list comprehension.

The find function can be rewritten with the following list comprehension.

def find(text, target):

""" (docstring omitted) """

return [index for index in range(len(text) - len(target) + 1)

if text[index:index + len(target)] == target]

Look carefully at the corresponding parts of the original loop version and the list comprehension version, as we did above.

Exercises

8.2.1. Show how to add the string 'grapes' to the end of the following list using both concatenation and the append method.

fruit = ['apples', 'pears', 'kiwi']

squares(n)

that returns a list containing the squares of the integers 1 through n. Use a for loop.

8.2.3. (This exercise assumes that you have read Section 6.7.) Write a function

getCodons(dna)

that returns a list containing the codons in the string dna. Your algorithm should use a for loop.

8.2.4. Write a function

square(data)

that takes a list of numbers named data and squares each number in data in place. The function should not return anything. For example, if the list [4, 2, 5] is assigned to a variable named numbers then, after calling square(numbers), numbers should have the value [16, 4, 25].

swap(data, i, j)

that swaps the positions of the items with indices i and j in the list named data.

8.2.6. Write a function

reverse(data)

that reverses the list data in place. Your function should not return anything. (Hint: use the swap function you wrote above.)

8.2.7. Suppose you are given a list of 'yea' and 'nay' votes. Write a function

winner(votes)

that returns the majority vote. For example, winner(['yea', 'nay', 'yea']) should return 'yea'. If there is a tie, return 'tie'.

8.2.8. Write a function

delete(data, index)

that returns a new list that contains the same elements as the list data except for the one at the given index. If the value of index is negative or exceeds the length of data, return a copy of the original list. Do not use the pop method. For example, delete([3, 1, 5, 9], 2) should return the list [3, 1, 9].

8.2.9. Write a function

remove(data, value)

that returns a new list that contains the same elements as the list data except for those that equal value. Do not use the builit-in remove method. Note that, unlike the built-in remove method, your function should remove all items equal to value. For example, remove([3, 1, 5, 3, 9], 3) should return the list [1, 5, 9].

8.2.10. Write a function

centeredMean(data)

that returns the average of the numbers in data with the largest and smallest numbers removed. You may assume that there are at least three numbers in the list. For example, centeredMean([2, 10, 3, 5]) should return 4.

8.2.11. On Page 365, we showed one way to write the adjust function so that it returned an adjusted list rather than modifying the original list. Give another way to accomplish the same thing.

8.2.12. Write a function

shuffle(data)

that shuffles the items in the list named data in place, without using the shuffle function from the random module. Instead, use the swap function you wrote in Exercise 8.2.5 to swap 100 pairs of randomly chosen items. For each swap, choose a random index for the first item and then choose a greater random index for the second item.

8.2.13. We wrote the following loop on Page 362 to subtract 0.01 from each value in a list:

for index in range(len(unemployment)):

unemployment[index] = unemployment[index] - 0.01

Carefully explain why the following loop does not accomplish the same thing.

for rate in unemployment:

rate = rate - 0.01

smooth(data, windowLength)

that returns a new list that contains the values in the list data, averaged over a window of the given length. This is the problem that we originally introduced in Section 1.3.

8.2.15. Write a function

median(data)

that returns the median number in a list of numbers named data.

8.2.16. Consider the following alphabetized grocery list:

groceries = ['cookies', 'gum', 'ham', 'ice cream', 'soap']

Show a sequence of calls to list methods that insert each of the following into their correct alphabetical positions, so that the final list is alphabetized:

'jelly beans''donuts''bananas''watermelon'

Next, show a sequence of calls to the pop method that delete each of the following items from the final list above.

'soap''watermelon''bananas''ham'

8.2.17. Given n people in a room, what is the probability that at least one pair of people shares a birthday? To answer this question, first write a function

sameBirthday(people)

that creates a list of people random birthdays and returns True if two birthdays are the same, and False otherwise. Use the numbers 0 to 364 to represent 365 different birthdays. Next, write a function

birthdayProblem(people, trials)

that performs a Monte Carlo simulation with the given number of trials to approximate the probability that, in a room with the given number of people, two people share a birthday.

8.2.18. Write a function that uses your solution to the previous problem to return the smallest number of people for which the probability of a pair sharing a birthday is at least 0.5.

8.2.19. Rewrite the squares function from Exercise 8.2.2 using a list comprehension.

8.2.20. (This exercise assumes that you have read Section 6.7.) Rewrite the getCodons function from Exercise 8.2.3 using a list comprehension.

8.3 FREQUENCIES, MODES, AND HISTOGRAMS

In addition to the mean and range of a list of data, which are single values, we might want to learn how data values are distributed throughout the list. The number of times that a value appears in a list is called its frequency. Once we know the frequencies of values in a list, we can represent them visually with a histogram and find the most frequent value(s), called the mode(s).

Tallying values

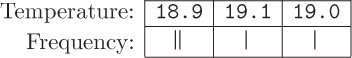

To get a sense of how we might compute the frequencies of values in a list, let’s consider the ocean buoy temperature readings that we first encountered in Chapter 1. As we did then, let’s simplify matters by just considering one week’s worth of data:

temperatures = [18.9, 19.1, 18.9, 19.0, 19.3, 19.2, 19.3]

Now we want to iterate over the list, keeping track of the frequency of each value that we see. We can imagine using a simple table for this purpose. After we see the first value in the list, 18.9, we create an entry in the table for 18.9 and mark its frequency with a tally mark.

The second value in the list is 19.1, so we create another entry and tally mark.

The third value is 18.9, so we add another tally mark to the 18.9 column.

The fourth value is 19.0, so we create another entry in the table with a tally mark.

Continuing in this way with the rest of the list, we get the following final table.

Or, equivalently:

Now to find the mode, we find the maximum frequency in the table, and then return a list of the values with this maximum frequency: [18.9, 19.3].

Dictionaries

Notice how the frequency table resembles the picture of a list on Page 361, except that the indices are replaced by temperatures. In other words, the frequency table looks like a generalized list in which the indices are replaced by values that we choose. This kind of abstract data type is called a dictionary. In a dictionary, each index is replaced with a more general key. Unlike a list, in which the indices are implicit, a dictionary in Python (called a dict object) must define the correspondence between a key and its value explicitly with a key:value pair. To differentiate it from a list, a dictionary is enclosed in curly braces ({ }). For example, the frequency table above would be represented in Python like this:

>>> frequency = {18.9: 2, 19.1: 1, 19.0: 1, 19.2: 1, 19.3: 2}

The first pair in frequency has key 18.9 and value 2, the second item has key 19.1 and value 1, etc.

If you type the dictionary above in the Python shell, and then display it, you might notice something unexpected:

>>> frequency

{19.0: 1, 19.2: 1, 18.9: 2, 19.1: 1, 19.3: 2}

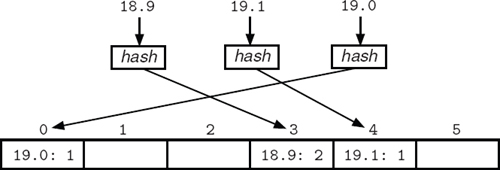

The items appear in a different order than they were originally. This is okay because a dictionary is an unordered collection of pairs. The displayed ordering has to do with the way dictionaries are implemented, using a structure called a hash table. If you are interested, you can learn a bit more about hash tables in Box 8.2.

Each entry in a dictionary object can be referenced using the familiar indexing notation, but using a key in the square brackets instead of an index. For example:

>>> frequency[19.3]

2

>>> frequency[19.1]

1

As we alluded to above, the model of a dictionary in memory is similar to a list (except that there is no significance attached to the ordering of the key values):

Each entry in the dictionary is a reference to a value in the same way that each entry in a list is a reference to a value. So, as with a list, we can change any value in a dictionary. For example, we can increment the value associated with the key 19.3:

>>> frequency[19.3] = frequency[19.3] + 1

Now let’s use a dictionary to implement the algorithm that we developed above to find frequencies and the mode(s) of a list. To begin, we will create an empty dictionary named frequency in which to record our tally marks:

def mode(data):

"""Compute the mode of a non-empty list.

Parameter:

data: a list of items

Return value: the mode of the items in data

"""

frequency = { }

A Python dictionary is implemented with a structure called a hash table. A hash table contains a fixed number of indexed slots in which the key:value pairs are stored. The slot assigned to a particular key:value pair is determined by a hash function that “translates” the key to a slot index. The picture below shows how some items in the frequency dictionary might be placed in an underlying hash table.

In this illustration, the hash function associates the pair 18.9: 2 with slot index 3, 19.1: 1 with slot index 4, and 19.0: 1 with slot index 0.

The underlying hash table allows us to access a value in a dictionary (e.g., frequency[18.9]) or test for inclusion (e.g., key in frequency) in a constant amount of time because each operation only involves a hash computation and then a direct access (like indexing in a string or a list). In contrast, if the pairs were stored in a list, then the list would need to be searched (in linear time) to perform these operations.

Unfortunately, this constant-time access could be foiled if a key is mapped to an occupied slot, an event called a collision. Collisions can be resolved by using adjacent slots, using a second hash function, or associating a list of items with each slot. A good hash function tries to prevent collisions by assigning slots in a seemingly random manner, so that keys are evenly distributed in the table and similar keys are not mapped to the same slot. Because hash functions tend to be so good, we can still consider an average dictionary access to be a constant-time operation, or one elementary step, even with collisions.

Each entry in this dictionary will have its key equal to a unique item in data and its value equal to the item’s frequency count. To tally the frequencies, we need to iterate over the items in data. As in our tallying algorithm, if there is already an entry in frequency with a key equal to the item, we will increment the item’s associated value; otherwise, we will create a new entry with frequency[item] = 1. To differentiate between the two cases, we can use the in operator: item in frequency evaluates to True if there is a key equal to item in the dictionary named frequency.

for item in data:

if item in frequency: # item is already a key in frequency

frequency[item] = frequency[item] + 1 # count the item

else:

frequency[item] = 1 # create a new entry item: 1

The next step is to find the maximum frequency in the dictionary. Since the frequencies are the values in the dictionary, we need to extract a list of the values, and then find the maximum in this list. A list of the values in a dictionary object is returned by the values method, and a list of the keys is returned by the keys method. For example, if we already had the complete frequency dictionary for the example above, we could extract the values with

>>> frequency.values()

dict_values([1, 1, 2, 1, 2])

The values method returns a special kind of object called a dict_values object, but we want the values in a list. To convert the dict_values object to a list, we simply use the list function:

>>> list(frequency.values())

[1, 1, 2, 1, 2]

We can get a list of keys from a dictionary in a similar way:

>>> list(frequency.keys())

[19.0, 19.2, 18.9, 19.1, 19.3]

The resulting lists will be in whatever order the dictionary happened to be stored, but the values and keys methods are guaranteed to produce lists in which every key in a key:value pair is in the same position in the list of keys as its corresponding value is in the list of values.

Returning to the problem at hand, we can use the values method to retrieve the list of frequencies, and then find the maximum frequency in that list:

frequencyValues = list(frequency.values())

maxFrequency = max(frequencyValues)

Finally, to find the mode(s), we need to build a list of all the items in data with frequency equal to maxFrequency. To do this, we iterate over all the keys in frequency, appending to the list of modes each key that has a value equal to maxFrequency. Iterating over the keys in frequency is done with the familiar for loop. When the for loop is done, we return the list of modes.

modes = [ ]

for key in frequency:

if frequency[key] == maxFrequency:

modes.append(key)

return modes

With all of these pieces in place, the complete function looks like the following.

Figure 8.1 A histogram displaying the frequency of each temperature reading in the list [18.9, 19.1, 18.9, 19.0, 19.3, 19.2, 19.3].

def mode(data):

""" (docstring omitted) """

frequency = { }

for item in data:

if item in frequency:

frequency[item] = frequency[item] + 1

else:

frequency[item] = 1

frequencyValues = list(frequency.values())

maxFrequency = max(frequencyValues)

modes = [ ]

for key in frequency:

if frequency[key] == maxFrequency:

modes.append(key)

return modes

As a byproduct of computing the mode, we have also done all of the work necessary to create a histogram for the values in data. The histogram is simply a vertical bar chart with the keys on the x-axis and the height of the bars equal to the frequency of each key. We leave the creation of a histogram as Exercise 8.3.7. As an example, Figure 8.1 shows a histogram for our temperatures list.

We can use dictionaries for a variety of purposes beyond counting frequencies. For example, as the name suggests, dictionaries are well suited for handling translations. The following dictionary associates a meaning with each of three texting abbreviations.

>>> translations = {'lol': 'laugh out loud', 'u': 'you', 'r': 'are'}

We can find the meaning of lol with

>>> translations['lol']

'laugh out loud'

There are more examples in the exercises that follow.

Exercises

printFrequencies(frequency)

that prints a formatted table of frequencies stored in the dictionary named frequency. The key values in the table must be listed in sorted order. For example, for the dictionary in the text, the table should look something like this:

Key Frequency

18.9 2

19.0 1

19.1 1

19.2 1

19.3 2

(Hint: iterate over a sorted list of keys.)

8.3.2. Write a function

wordFrequency(text)

that returns a dictionary containing the frequency of each word in the string text. For example, wordFrequency('I am I.') should return the dictionary {'I': 2, 'am': 1}. (Hint: the split and strip string methods will be useful; see Appendix B.)

8.3.3. The probability mass function (PMF) of a data set gives the probability of each value in the set. A dictionary representing the PMF is a frequency dictionary with each frequency value divided by the total number of values in the original data set. For example, the probabilities for the values represented in the table in Exercise 8.3.1 are shown below.

Key Probability

18.9 2/7

19.0 1/7

19.1 1/7

19.2 1/7

19.3 2/7

Write a function

pmf(frequency)

that returns a dictionary containing the PMF of the frequency dictionary passed as a parameter.

wordFrequencies(fileName)

that prints an alphabetized table of word frequencies in the text file with the given fileName.

firstLetterCount(words)

that takes as a parameter a list of strings named words and returns a dictionary with lower case letters as keys and the number of words in words that begin with that letter (lower or upper case) as values. For example, if the list is ['ant', 'bee', 'armadillo', 'dog', 'cat'], then your function should return the dictionary {'a': 2, 'b': 1, 'c': 1, 'd': 1}.

8.3.6. Similar to the Exercise 8.3.5, write a function

firstLetterWords(words)

that takes as a parameter a list of strings named words and returns a dictionary with lower case letters as keys. But now associate with each key the list of the words in words that begin with that letter. For example, if the list is ['ant', 'bee', 'armadillo', 'dog', 'cat'], then your function should return the dictionary {'a': ['ant', 'armadillo'], 'b': ['bee'], 'c': ['cat'], 'd': ['dog']}.

histogram(data)

that displays a histogram of the values in the list data using the bar function from matplotlib. The matplotlib function bar(x, heights, align = 'center') draws a vertical bar plot with bars of the given heights, centered at the x-coordinates in x. The bar function defines the widths of the bars with respect to the range of values on the x-axis. Therefore, if frequency is the name of your dictionary, it is best to pass in range(len(frequency)), instead of list(frequency.keys()), for x. Then label the bars on the x-axis with the matplotlib function xticks(range(len(frequency)), list(frequency.keys())).

8.3.8. Write a function

bonus(salaries)

that takes as a parameter a dictionary named salaries, with names as keys and salaries as values, and increases the salary of everyone in the dictionary by 5%.

8.3.9. Write a function

updateAges(names, ages)

that takes as parameters a list of names of people whose birthday it is today and a dictionary named ages, with names as keys and ages as values, and increments the age of each person in the dictionary whose birthday is today.

8.3.10. Write a function

seniorList(students, year)

that takes as a parameter a dictionary named students, with names as keys and class years as values, and returns a list of names of students who are graduating in year.

8.3.11. Write a function

createDictionary()

that creates a dictionary, inserts several English words as keys and the Pig Latin (or any other language) translations as values, and then returns the completed dictionary.

Next write a function

translate()

that calls your createDictionary function to create a dictionary, and then repeatedly asks for a word to translate. For each entered word, it should print the translation using the dictionary. If a word does not exist in the dictionary, the function should say so. The function should end when the word quit is typed.

8.3.12. Write a function

txtTranslate(word)

that uses a dictionary to return the English meaning of the texting abbreviation word. Incorporate translations for at least ten texting abbreviations. If the abbreviation is not in the dictionary, your function should return a suitable string message instead. For example, txtTranslate('lol') might return 'laugh out loud'.

login(passwords)

that takes as a parameter a dictionary named passwords, with usernames as keys and passwords as values, and repeatedly prompts for a username and password until a valid pair is entered. Your function should continue to prompt even if an invalid username is entered.

8.3.14. Write a function

union(dict1, dict2)

that returns a new dictionary that contains all of the entries of the two dictionaries dict1 and dict2. If the dictionaries share a key, use the value in the first dictionary. For example, union({'pies': 3, 'cakes': 5}, {'cakes': 4, 'tarts': 2} should return the dictionary {'pies': 3, 'cakes': 5, 'tarts': 2}.

8.3.15. The Mohs hardness scale rates the hardness of a rock or mineral on a 10-point scale, where 1 is very soft (like talc) and 10 is very hard (like diamond). Suppose we have a list such as

rocks = [('talc', 1), ('lead', 1.5), ('copper', 3),

('nickel', 4), ('silicon', 6.5), ('emerald', 7.5),

('boron', 9.5), ('diamond', 10)]

where the first element of each tuple is the name of a rock or mineral, and the second element is its hardness. Write a function

hardness(rocks)

that returns a dictionary organizing the rocks and minerals in such a list into four categories: soft (1–3), medium (3.1–5), hard (5.1–8), and very hard (8.1–10). For example, given the list above, the function would return the dictionary

{'soft': ['talc', 'lead', 'copper'],

'medium': ['nickel'],

'hard': ['silicon', 'emerald'],

'very hard': ['boron', 'diamond']}

8.3.16. (This exercise assumes that you have read Section 6.7.) Rewrite the complement function on page 302 using a dictionary. (Do not use any if statements.)

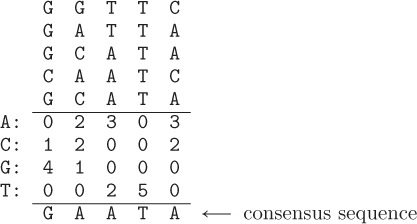

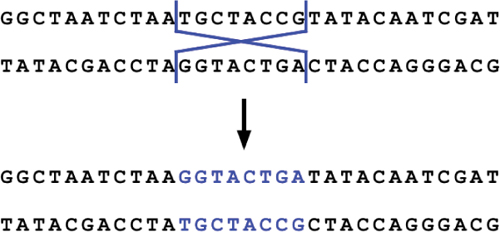

8.3.17. (This exercise assumes that you have read Section 6.7.) Suppose we have a set of homologous DNA sequences with the same length. A profile for these sequences contains the frequency of each base in each position. For example, suppose we have the following five sequences, lined up in a table:

Their profile is shown below the sequences. The first column of the profile indicates that there is one sequence with a C in its first position and four sequences with a G in their first position. The second column of the profile shows that there are two sequences with A in their second position, two sequences with C in their second position, and one sequence with G in its second position. And so on. The consensus sequence for a set of sequences has in each position the most common base in the profile. The consensus for this list of sequences is shown below the profile.

A profile can be implemented as a list of 4-element dictionaries, one for each column. A consensus sequence can then be constructed by finding the base with the maximum frequency in each position. In this exercise, you will build up a function to find a consensus sequence in four parts.

- Write a function

profile1(sequences, index)

that returns a dictionary containing the frequency of each base in position

indexin the list of DNA sequences namedsequences. For example, suppose we pass in the list['GGTTC', 'GATTA', 'GCATA', 'CAATC', 'GCATA']forsequences(the sequences from the example above) and the value 2 forindex. Then the function should return the dictionary{'A': 3, 'C': 0, 'G': 0, 'T': 2}, equivalent to the third column in the profile above. - Write a function

profile(sequences)

that returns a list of dictionaries representing the profile for the list of DNA sequences named

sequences. For example, given the list of sequences above, the function should return the list[{'A': 0, 'C': 1, 'G': 4, 'T': 0},

{'A': 2, 'C': 2, 'G': 1, 'T': 0},

{'A': 3, 'C': 0, 'G': 0, 'T': 2},

{'A': 0, 'C': 0, 'G': 0, 'T': 5},

{'A': 3, 'C': 2, 'G': 0, 'T': 0}]Your

profilefunction should call yourprofile1function in a loop. - Write a function

maxBase(freqs)

that returns the base with the maximum frequency in a dictionary of base frequencies named

freqs. For example,maxBase({'A': 0, 'C': 1, 'G': 4, 'T': 0})should return

'G'. - Write a function

consensus(sequences)

that returns the consensus sequence for the list of DNA sequences named

sequences. Yourconsensusfunction should call yourprofilefunction and also call yourmaxBasefunction in a loop.

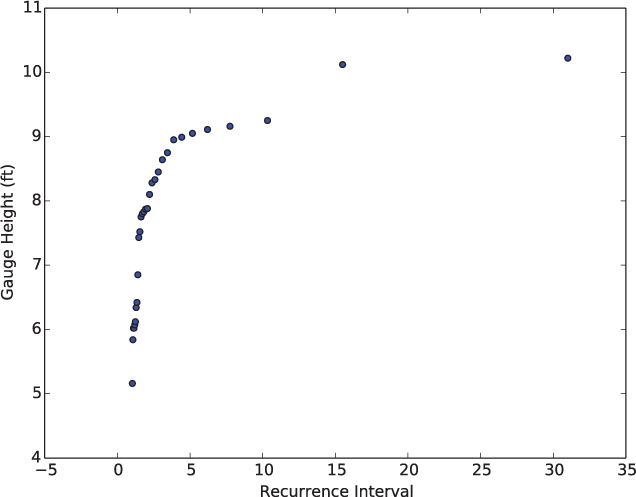

8.4 READING TABULAR DATA

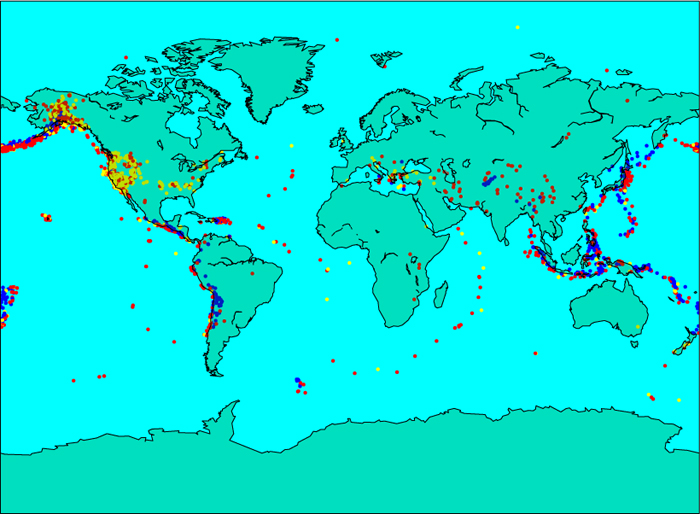

In Section 6.2, we read one-dimensional text from files and designed algorithms to analyze the text in various ways. A lot of data, however, is maintained in the form of two-dimensional tables instead. For example, the U.S. Geological Survey (USGS) maintains up-to-the-minute tabular data about earthquakes happening around the world at http://earthquake.usgs.gov/earthquakes/feed/v1.0/csv.php. Earthquakes typically occur on the boundaries between tectonic plates. Therefore, by extracting this data into a usable form, and then plotting the locations of the earthquakes with matplotlib, we can visualize the locations of the earth’s tectonic plates.

The USGS earthquake data is available in many formats, the simplest of which is called CSV, short for “comma-separated values.” The first few columns of a USGS data file look like the following.

time,latitude,longitude,depth,mag,magType,...

2013-09-24T20:01:22.700Z,40.1333,-123.863,29,1.8,Md,...

2013-09-24T18:57:59.000Z,59.8905,-151.2392,56.4,2.5,Ml,...

2013-09-24T18:51:19.100Z,37.3242,-122.1015,0.3,1.8,Md,...

2013-09-24T18:40:09.100Z,34.3278,-116.4663,8.5,1.2,Ml,...

2013-09-24T18:20:06.300Z,35.0418,-118.3227,1.3,1.4,Ml,...

2013-09-24T18:09:53.700Z,32.0487,-115.0075,28,3.2,Ml,...

As you can see, CSV files contain one row of text per line, with columns separated by commas. The first row contains the names of the fifteen columns in this file, only six of which are shown here. Each additional row consists of fifteen columns of data from one earthquake. The first earthquake in this file was first detected at 20:01 UTC (Coordinated Universal Time) on 2013-09-24 at 40.1333 degrees latitude and –123.863 degrees longitude. The earthquake occurred at a depth of 29 km and had magnitude 1.8.

The CSV file containing data about all of the earthquakes in the past month is available on the web at the URL

http://earthquake.usgs.gov/earthquakes/feed/v1.0/summary/all_month.csv

(If you have trouble with this file, you can try smaller files by replacing all_month with 2.5_month or 4.5_month. The numbers indicate the minimum magnitude of the earthquakes included in the file.)

Reflection 8.15 Enter the URL above into a web browser to see the data file for yourself. About how many earthquakes were recorded in the last month?

We can read the contents of CSV files in Python using the same techniques that we used in Section 6.2. We can either download and save this file manually (and then read the file from our program), or we can download it directly from our program using the urllib.request module. We will use the latter method.

To begin our function, we will open the URL, and then read the header row containing the column names, as follows. (We do not actually need the header row; we just need to get past it to get to the data.)

import urllib.request as web

def plotQuakes():

"""Plot the locations of all earthquakes in the past month.

Parameters: None

Return value: None

"""

url = 'http://earthquake.usgs.gov/...' # see above for full URL

quakeFile = web.urlopen(url)

header = quakeFile.readline()

To visualize fault boundaries with matplotlib, we will need all the longitude (x) values in one list and all the latitude (y) values in another list. In our plot, we will color the points according to their depths, so we will also need to extract the depths of the earthquakes in a third list. To maintain an association between the latitude, longitude, and depth of a particular earthquake, we will need these lists to be parallel in the sense that, at any particular index, the longitude, latitude, and depth at that index belong to the same earthquake. In other words, if we name these three lists longitudes, latitudes, and depths, then for any value of index, longitudes[index], latitudes[index], and depths[index] belong to the same earthquake.

In our function, we next initialize these three lists to be empty and begin to iterate over the lines in the file:

longitudes = []

latitudes = []

depths = []

for line in quakeFile:

line = line.decode('utf-8')

To extract the necessary information from each line of the file, we can use the split method from the string class. The split method splits a string at every instance of a given character, and returns a list of strings that result. For example, 'this;is;a;line'.split(';') returns the list of strings ['this', 'is', 'a', 'line']. (If no argument is given, it splits at strings of whitespace characters.) In this case, we want to split the string line at every comma:

row = line.split(',')

Figure 8.2 Plotted earthquake locations with colors representing depths (yellow dots are shallower, red dots are medium, and blue dots are deeper).

The resulting list named row will have the time of the earthquake at index 0, the latitude at index 1, the longitude at index 2 and the depth at index 3, followed by 11 additional columns that we will not use. Note that each of these values is a string, so we will need to convert each one to a number using the float function. After converting each value, we append it to its respective list:

latitudes.append(float(row[1]))

longitudes.append(float(row[2]))

depths.append(float(row[3]))

Finally, after the loop completes, we close the file.

quakeFile.close()

Once we have the data from the file in this format, we can plot the earthquake epicenters, and depict the depth of each earthquake with a color. Shallow (less than 10 km deep) earthquakes will be yellow, medium depth (between 10 and 50 km) earthquakes will be red, and deep earthquakes (greater than 50 km deep) will be blue. In matplotlib, we can color each point differently by passing the scatter function a list of colors, one for each point. To create this list, we iterate over the depths list and, for each depth, append the appropriate color string to another list named colors:

colors = []

for depth in depths:

if depth < 10:

colors.append('yellow')

elif depth < 50:

colors.append('red')

else:

colors.append('blue')

Finally, we can plot the earthquakes in their respective colors:

pyplot.scatter(longitudes, latitudes, 10, color = colors)

pyplot.show()

In the call to scatter above, the third argument is the square of the size of the point marker, and color = colors is a keyword argument. (We also saw keyword arguments briefly in Section 4.1.) The name color is the name of a parameter of the scatter function, for which we are passing the argument colors. (We will only use keyword arguments with matplotlib functions, although we could also use them in our functions if we wished to do so.)

The complete plotQuakes function is shown below.

import urllib.request as web

import matplotlib.pyplot as pyplot

def plotQuakes():

"""Plot the locations of all earthquakes in the past month.

Parameters: None

Return value: None

"""

url = 'http://earthquake.usgs.gov/...' # see above for full URL

quakeFile = web.urlopen(url)

header = quakeFile.readline()

longitudes = []

latitudes = []

depths = []

for line in quakeFile:

line = line.decode('utf-8')

row = line.split(',')

latitudes.append(float(row[1]))

longitudes.append(float(row[2]))

depths.append(float(row[3]))

quakeFile.close()

colors = []

for depth in depths:

if depth < 10:

colors.append('yellow')

elif depth < 50:

colors.append('red')

else:

colors.append('blue')

pyplot.scatter(longitudes, latitudes, 10, color = colors)

pyplot.show()

The plotted earthquakes are shown in Figure 8.2 over a map background. (Your plot will not show the map, but if you would like to add it, look into the Basemap class from the mpl_toolkits.basemap module.) Geologists use illustrations like Figure 8.2 to infer the boundaries between tectonic plates.

Reflection 8.16 Look at Figure 8.2 and try to identify the different tectonic plates. Can you infer anything about the way neighboring plates interact from the depth colors?

For example, the ring of red and yellow dots around Africa encloses the African plate, and the dense line of blue and red dots northwest of Australia delineates the boundary between the Eurasian and Australian plates. The depth information gives geologists information about the types of the boundaries and the directions in which the plates are moving. For example, the shallow earthquakes on the west coast of North America mark a divergent boundary in which the plates are moving away from each other, while the deeper earthquakes in the Aleutian islands near Alaska mark a subduction zone on a convergent boundary where the Pacific plate to the south is diving underneath the North American plate to the north.

Exercises

8.4.1. Modify plotQuakes so that it also reads earthquake magnitudes into a list, and then draws larger circles for higher magnitude earthquakes. The sizes of the circles can be changed by passing a list of sizes, similar to the list of colors, as the third argument to the scatter function.

8.4.2. Modify the function from Exercise 8.3.5 so that it takes a file name as a parameter and uses the words from this file instead. Test your function using the SCRABBLE® dictionary on the book web site.1

8.4.3. Modify the function from Exercise 8.3.13 so that it takes a file name as a parameter and creates a username/password dictionary with the usernames and passwords in that file before it starts prompting for a username and password. Assume that the file contains one username and password per line, separated by a space. There is an example file on the book web site.

8.4.4. Write a function

plotPopulation()

that plots the world population over time from the tab-separated data file on the book web site named worldpopulation.txt. To read a tab-separated file, split each line with line.split(' '). These figures are U.S. Census Bureau midyear population estimates from 1950–2050. Your function should create two plots. The first shows the years on the x-axis and the populations on the y-axis. The second shows the years on the x-axis and the annual growth rate on the y-axis. The growth rate is the difference between this year’s population and last year’s population, divided by last year’s population. Be sure to label your axes in both plots with the xlabel and ylabel functions. What is the overall trend in world population growth? Do you have any hypotheses regarding the most significant spikes and dips?

8.4.5. Write a function

plotMeteorites()

that plots the location (longitude and latitude) of every known meteorite that has fallen to earth, using a tab-separated data file from the book web site named meteoritessize.txt. Split each line of a tab-separated file with line.split(' '). There are large areas where no meteorites have apparently fallen. Is this accurate? Why do you think no meteorites show up in these areas?

plotTemps()

that reads a CSV data file from the book web site named madison_temp.csv to plot several years of monthly minimum temperature readings from Madison, Wisconsin. The temperature readings in the file are integers in tenths of a degree Celsius and each date is expressed in YYYYMMDD format. Rather than putting every date in a list for the x-axis, just make a list of the years that are represented in the file. Then plot the data and put a year label at each January tick with

pyplot.plot(range(len(minTemps)), minTemps)

pyplot.xticks(range(0, len(minTemps), 12), years)

The first argument to the xticks function says to only put a tick at every twelfth x value, and the second argument supplies the list of years to use to label those ticks. It will be helpful to know that the data file starts with a January 1 reading.

8.4.7. Write a function

plotZebras()

that plots the migration of seven Burchell’s zebra in northern Botswana. (The very interesting story behind this data can be found at https://www.movebank.org/node/11921.) The function should read a CSV data file from the book web site named zebra.csv. Each line in the data file is a record of the location of an individual zebra at a particular time. Each individual has a unique identifier. There are over 50,000 records in the file. For each record, your function should extract the individual identifier (column index 9), longitude (column index 3), and latitude (column index 4). Store the data in two dictionaries, each with a key equal to an identifier. The values in the two dictionaries should be the longitudes and latitudes, respectively, of all tracking events for the zebra with that identifier. Plot the locations of the seven zebras in seven different colors to visualize their migration patterns.

How can you determine from the data which direction the zebras are migrating?

8.4.8. On the book web site, there is a tab-separated data file named education.txt that contains information about the maximum educational attainment of U.S. citizens, as of 2013. Each non-header row contains the number of people in a particular category (in thousands) that have attained each of fifteen different educational levels. Look at the file in a text editor (not a spreadsheet program) to view its contents. Write a function

plotEducation()

that reads this data and then plots separately (but in one figure) the educational attainment of all males, females, and both sexes together over 18 years of age. The x-axis should be the fifteen different educational attainment levels and the y axis should be the percentage of each group that has attained that level. Notice that you will only need to extract three lines of the data file, skipping over the rest. To label the ticks on the x-axis, use the following:

pyplot.xticks(range(15), titles[2:], rotation = 270)

pyplot.subplots_adjust(bottom = 0.45)

The first statement labels the x ticks with the educational attainment categories, rotated 270 degrees. The second statement reserves 45% of the vertical space for these x tick labels. Can you draw any conclusions from the plot about relative numbers of men and women who pursue various educational degrees?

*8.5 DESIGNING EFFICIENT ALGORITHMS

For nearly all of the problems we have encountered so far, there has been only one algorithm, and its design has been relatively straightforward. But for most real problems that involve real data, there are many possible algorithms, and a little more ingenuity is required to find the best one.

To illustrate, let’s consider a slightly more involved problem that can be formulated in terms of the following “real world” situation. Suppose you organized a petition drive in your community, and have now collected all of the lists of signatures from your volunteers. Before you can submit your petition to the governor, you need to remove duplicate signatures from the combined list. Rather than try to do this by hand, you decide to scan all of the names into a file, and design an algorithm to remove the duplicates for you. Because the signatures are numbered, you want the final list of unique names to be in their original order.

As we design an algorithm for this problem, we will keep in mind the four steps outlined in Section 7.1:

Understand the problem.

Design an algorithm.

Implement your algorithm as a program.

Analyze your algorithm for clarity, correctness, and efficiency.

Reflection 8.17 Before you read any further, make sure you understand this problem. If you were given this list of names on paper, what algorithm would you use to remove the duplicates?

The input to our problem is a list of items, and the output is a new list of unique items in the same order they appeared in the original list. We will start with an intuitive algorithm and work through a process of refinement to get progressively better solutions. In this process, we will see how a critical look at the algorithms we write can lead to significant improvements.

A first algorithm

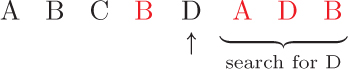

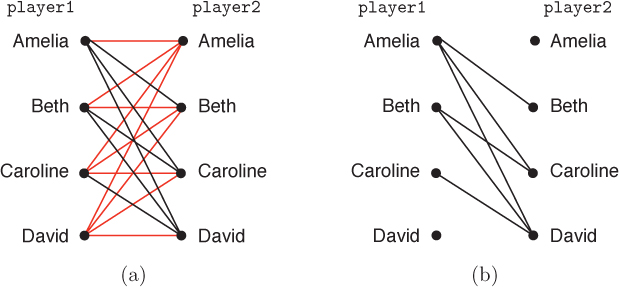

There are several different approaches we could use to solve this problem. We will start with an algorithm that iterates over the items and, for each one, marks any duplicates found further down the list. Once all of the duplicates are marked, we can remove them from a copy of the original list. The following example illustrates this approach with a list containing four unique names, abbreviated A, B, C, and D. The algorithm starts at the beginning of the list, which contains the name A. Then we search down the list and record the index of the duplicate, marked in red.

Next we move on to the second item, B, and mark its duplicates.

Some items, like the next item, C, do not have any duplicates.

The item after C is already marked as a duplicate, so we skip the search.

The next item, D, is not marked so we search for its duplicates down the list.

Finally, we finish iterating over the list, but find that the remaining items are already marked as duplicates.

Once we know where all the duplicates are, we can make a copy of the original list and remove the duplicates from the copy. This algorithm, partially written in Python, is shown below. We keep track of the “marked” items with a list of their indices. The portions in red need to be replaced with calls to appropriate functions, which we will develop soon.

1 def removeDuplicates1(data):

2 """Return a list containing only the unique items in data.

3

4 Parameter:

5 data: a list

6

7 Return value: a new list of the unique values in data,

8 in their original order

9 """

10

11 duplicateIndices = [ ] # indices of duplicate items

12 for index in range(len(data)):

13 if index is not in duplicateIndices:

14 positions = indices of later duplicates of data[index]

15 duplicateIndices.extend(positions)

16

17 unique = data.copy()

18 for index in duplicateIndices:

19 remove data[index] from unique

20

21 return unique

To implement the red portion of the if statement on line 13, we need to search for index in the list duplicateIndices. We could use the Python in operator (if index in duplicateIndices:), but let’s instead revisit how to write a search function from scratch. Recall that, in Section 6.5, we developed a linear search algorithm (named find) that returns the index of the first occurrence of a substring in a string. We can use this algorithm as a starting point for an algorithm to find an item in a list.

Reflection 8.18 Look back at the find function on Page 283. Modify the function so that it returns the index of the first occurrence of a target item in a list.

The linear search function, based on the find function but applied to a list, follows.

def linearSearch(data, target):

"""Find the index of the first occurrence of target in data.

Parameters:

data: a list object to search in

target: an object to search for

Return value: the index of the first occurrence of target in data

"""

for index in range(len(data)):

if data[index] == target:

return index

return -1