Chapter 3. Cybersecurity Experimentation and Test Environments

Scientific inquiry and experimentation require time, space, and materials. Depending on type, scale, cost, and other factors, you may want to run an experiment on your laptop, in a lab, on a cloud, or in the real world. In the checklist for experimentation in Chapter 2, an early step in testing a hypothesis was to “identify the environment or test facility where you will conduct experimentation.” This chapter explores that topic and explains the trade-offs and choices for different types of experimentation.

One way to think about experimentation is as an ecosystem: the “living” environment and the digital organisms within it. The most obvious ecosystem is the real world. Knowledge about cybersecurity science is certainly gained by observing and interacting with the real world, and some scientists firmly believe that experimentation should start with the real world because it grounds science in reality.

Sometimes the real world is inappropriate or otherwise undesirable for testing and evaluation. It would be unethical, dangerous, and probably illegal to study the effects of malware by releasing it onto the Internet. It is also challenging to observe or measure real-world systems without affecting them. This phenomenon is called the observer effect. Studying the way that users make decisions about cybersecurity choices is valuable, but once subjects know that a researcher is observing them, their behavior changes.

Consider a noncyber analogy. When scientists want to learn about monkeys, sometimes the scientists go into the jungle and observe the monkeys in the wild. The advantage is an opportunity to learn about the monkeys in an undisturbed, natural habitat. Disadvantages include the cost and inconvenience of going into the jungle, and the inability to control all aspects of the experiment. Scientists also learn about monkeys in zoos. A zoo provides more structure and control over the environment while allowing the animals some freedom to exert their natural behavior. Finally, scientists learn about monkeys in cages. This is a highly restrictive ecosystem that enables the scientist to closely monitor and control many variables but greatly inhibits the free and natural behavior of the animal. Each environment is useful for different purposes.

Scientists use the term ecological validity to indicate how well a study approximates the real world. In a study of passwords generated by participants for fictitious accounts versus their real passwords, the experimenters said “this is the first study concerning the ecological validity of password creation in user studies.…” In many cases, and especially in practical cybersecurity, test environments that reflect the production environment are preferred because you want the test results to mimic performance of the same solution in the wild. Unfortunately, there is no standard measurement or test for ecological validity. It is the experimenter’s duty to address challenges to validity.

This chapter will look at environments and test facilities for cybersecurity experimentation. The first section introduces modeling and simulation, one way to test hypotheses offline. Next, we’ll discuss open datasets that you can use for testing. Then we’ll look at desktop, cloud, and testbed options that offer choices in cost and scale. Keep in mind that there may be no single right answer for how to conduct your tests and experiments. In fact, you might choose to use more than one environment. People who study botnet behavior, for example, often start with a simulation, then run a controlled test on a small network, and compare these results to real-world data.

Modeling and Simulation

Modeling and simulation are methods of scientific exploration that are carried out in artificial environments. For the results to be useful in the real world, these techniques require informed design and clear statement of assumptions, configurations, and implementations. Modeling and simulation are especially useful in exploring large-scale systems, complex systems, and new conceptual designs. For example, they might be used to investigate an Internet of the future, or how malware spreads on an Internet scale. Questions such as these might only be answered by modeling and simulation, especially if an emergent behavior is not apparent until the experimental scale is large enough.

While “modeling and simulation” are often used together as a single discipline, they are individual concepts. Modeling is the creation of a conceptual object that can predict the behavior of real systems under a set of assumptions and conditions. For example, you could create a model to describe how smartphones move around inside a city. Simulation is the process of applying the model to a particular use case in order to predict the system’s behavior. The smartphone simulation could involve approximating an average workday by moving 100,000 hypothetical smartphones around a city of a certain size.
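To make the distinction concrete, here is a minimal Python sketch of the smartphone example. The `step` function plays the role of the model (one assumed rule for how a phone moves), and `simulate` applies that model to a population of phones. The grid-shaped city, the random-walk movement rule, and all function and parameter names are illustrative assumptions, not a validated mobility model.

```python
import random

# The five moves a phone may make in one time step (including staying put).
MOVES = [(0, 1), (0, -1), (1, 0), (-1, 0), (0, 0)]


def step(position, city_size, rng):
    """Model: a phone moves at most one block per time step, inside the city."""
    x, y = position
    dx, dy = rng.choice(MOVES)
    # Clamp to the grid so a phone never leaves the city limits.
    x = min(max(x + dx, 0), city_size - 1)
    y = min(max(y + dy, 0), city_size - 1)
    return (x, y)


def simulate(num_phones, city_size, num_steps, seed=0):
    """Simulation: apply the model to many phones over many time steps."""
    rng = random.Random(seed)  # a fixed seed makes the run repeatable
    phones = [
        (rng.randrange(city_size), rng.randrange(city_size))
        for _ in range(num_phones)
    ]
    for _ in range(num_steps):
        phones = [step(p, city_size, rng) for p in phones]
    return phones


if __name__ == "__main__":
    final_positions = simulate(num_phones=1000, city_size=50, num_steps=100)
    print("distinct occupied blocks:", len(set(final_positions)))
```

Changing the rule inside `step` changes the model, while changing the arguments to `simulate` changes the use case being simulated.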

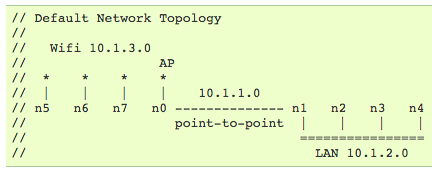

Modeling and simulation can be done in small environments (like on your laptop) and large environments (like supercomputers). Software like MATLAB and R can run many kinds of prebuilt simulations, and contain powerful programming languages with flexibility for new experiments. Simulations can be written in traditional programming languages, using special libraries devoted to those tasks. Some modeling and simulation tools are tailored for specific purposes. For example, ns-3 is an open source simulation environment for networking research. Figure 3-1 shows a basic wireless topology that can be created in ns-3 for a functional network simulation; it follows one online tutorial.

Figure 3-1. A simulated wireless network topology in ns-3

The usefulness of modeling and simulation is primarily limited by the ability to define and create a realistic model. Figuring out how to model network traffic, system performance, user behavior, and any other relevant variables is a challenging task. Within the cybersecurity community there remain unsolved questions about how to quantify and measure whether an experiment is realistic enough.

Realistic human behavior is highly desirable in simulations. A simulated network without any simulated user traffic or activity has limited value, and the omission can make the simulation ineffective. It can be useful to simulate normal activity in some scenarios, and malicious or anomalous activity in other experiments. One solution is to replay previously recorded traffic from real users or networks. This requires access to such datasets and limits your control over the type and tempo of activity. Another solution is to use customizable software agents. Note that these agents are more advanced than network traffic generators because they attempt to simulate real human behavior. Examples of software agents include NCRBot, built for the National Cyber Range, and SIMPass, specifically designed to simulate human password behavior. DASH is an agent-based platform for simulating human behavior that was designed specifically for the DETER Testbed (see Table 3-4).
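As a rough illustration of the agent idea, the following Python sketch generates a timeline of user actions separated by random "think times." It is far simpler than tools such as NCRBot, SIMPass, or DASH and is not based on their designs. The action names, the action mix, and the exponential think-time assumption are all hypothetical choices made for this example.

```python
import random

# Hypothetical user actions and an assumed probability mix.
ACTIONS = ["browse_web", "send_email", "open_file", "idle"]
WEIGHTS = [0.5, 0.2, 0.2, 0.1]


def generate_session(duration_s, mean_think_time_s, seed=None):
    """Return a list of (timestamp_seconds, action) events for one simulated user."""
    rng = random.Random(seed)
    now = 0.0
    events = []
    while True:
        # Assumption: the pause between actions is exponentially distributed.
        now += rng.expovariate(1.0 / mean_think_time_s)
        if now >= duration_s:
            break
        action = rng.choices(ACTIONS, weights=WEIGHTS, k=1)[0]
        events.append((now, action))
    return events


if __name__ == "__main__":
    for timestamp, action in generate_session(300, mean_think_time_s=20, seed=7):
        print(f"{timestamp:7.1f}s  {action}")
```

Unlike replayed traffic, an agent like this lets you control the type and tempo of activity by adjusting the weights and the mean think time.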

Open Datasets for Testing

Publicly available datasets are good for science. A dataset, or corpus, allows researchers to reproduce experiments and compare the implementation and performance of tools using the same data. Public datasets also save you from having to find relevant and representative data, or worry about getting permission to use private or proprietary data. The Enron Corpus is one example of a public dataset, and contains over 600,000 real emails from the collapsed Enron Corporation. This collection has been a valuable source of data for building and testing cybersecurity solutions. Additional datasets are listed in Table 3-1.
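The value of a common dataset can be sketched in a few lines of Python: two tools are scored against the same labeled data, so their results are directly comparable. The five-message corpus and both keyword "detectors" below are made up purely for illustration; they are not drawn from the Enron Corpus or any real tool.

```python
def precision_recall(predictions, labels):
    """Compute (precision, recall) from parallel lists of booleans."""
    pairs = list(zip(predictions, labels))
    tp = sum(1 for p, y in pairs if p and y)
    fp = sum(1 for p, y in pairs if p and not y)
    fn = sum(1 for p, y in pairs if not p and y)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Toy stand-in for a shared corpus: (message text, is_phishing).
CORPUS = [
    ("Please verify your password at this link", True),
    ("Meeting moved to 3pm", False),
    ("Urgent: confirm your account password", True),
    ("Lunch on Friday?", False),
    ("Your invoice is attached", False),
]


def detector_a(text):
    """Hypothetical tool A: flags any message mentioning a password."""
    return "password" in text.lower()


def detector_b(text):
    """Hypothetical tool B: flags urgency or attachments."""
    lowered = text.lower()
    return "urgent" in lowered or "attached" in lowered


def evaluate(detector, corpus):
    """Score one detector against every labeled message in the corpus."""
    predictions = [detector(text) for text, _ in corpus]
    labels = [label for _, label in corpus]
    return precision_recall(predictions, labels)


if __name__ == "__main__":
    print("A:", evaluate(detector_a, CORPUS))
    print("B:", evaluate(detector_b, CORPUS))
```

Because both detectors see identical inputs, any difference in the scores reflects the tools rather than the data.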

The primary challenges with creating open datasets are realism and privacy. The community has not yet discovered how to create sufficiently realistic artificial (laboratory-created) cyber data.

Warning

Data from real, live networks and the Internet often contains sensitive personal information or company details, and could reveal security vulnerabilities of the data provider if publicly distributed. Anonymization of IP addresses and personally identifiable information is one way to sanitize live data. Another is to restrict a dataset to particular uses or users.
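One simple way to anonymize IP addresses is keyed-hash pseudonymization, sketched below in Python. The same input address always maps to the same pseudonym under a given secret key, so flows can still be correlated. This is an illustrative assumption, not the method used by any dataset listed in this chapter. It does not preserve network prefixes, and two addresses can occasionally collide. The function names and the log format are hypothetical.

```python
import hashlib
import hmac
import ipaddress
import re

# Loose pattern for dotted-quad strings; validity is checked separately.
IPV4_PATTERN = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def pseudonymize_ip(ip_text, secret_key):
    """Map an IPv4 address to a consistent pseudonym using HMAC-SHA256."""
    address = ipaddress.IPv4Address(ip_text)
    digest = hmac.new(secret_key, address.packed, hashlib.sha256).digest()
    # The first four bytes of the digest become the pseudonymous address.
    return str(ipaddress.IPv4Address(digest[:4]))


def anonymize_line(line, secret_key):
    """Replace every valid IPv4 address in a log line with its pseudonym."""
    def replace(match):
        try:
            return pseudonymize_ip(match.group(0), secret_key)
        except ipaddress.AddressValueError:
            return match.group(0)  # not a valid address; leave it untouched
    return IPV4_PATTERN.sub(replace, line)


if __name__ == "__main__":
    key = b"keep-this-key-secret"  # placeholder key for the example
    print(anonymize_line("ALLOW src=192.0.2.10 dst=198.51.100.7 port=443", key))
```

The secret key must be protected or discarded after sanitizing; anyone who holds it can recompute pseudonyms for candidate addresses and reverse the mapping.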

| Dataset | Description |
|---|---|
| MIT Lincoln Laboratory IDS Datasets | Examples of background and attack traffic |
| NSA Cyber Defense Exercise Dataset | Snort, DNS, web server, and Splunk logs |
| Internet-Wide Scan Data Repository | Large collection of Internet-wide scanning data from Rapid7, the University of Michigan, and others |
| Center for Applied Internet Data Analysis (CAIDA) Datasets | Internet measurement data gathered in collaboration with numerous institutions and with academic, commercial, and noncommercial contributors, including anonymized Internet traces, Code Red worm propagation, and passive traces on high-speed links |
| Protected Repository for the Defense of Infrastructure Against Cyber Threats (PREDICT) | Several levels of datasets (unrestricted, quasi-restricted, and restricted), including BGP routing data, blackhole data, IDS and firewall data, and unsolicited bulk email data |
| Amazon Web Services Datasets | Public datasets that can easily be attached to Amazon cloud-based applications, including the Enron Corpus (email), Common Crawl corpus (millions of crawled web pages), and geographical data |

Desktop Testing

Desktop testing is perhaps the most common environment for cybersecurity science. Commodity laptops and workstations often provide sufficient computing resources for developers, administrators, and scientists to run scientific experiments. Using one’s own computer is also convenient and cost-effective. Desktop virtualization solutions such as QEMU, VirtualBox, and VMware Workstation are widespread and offer the additional benefits of snapshots and revertible virtual machines.

DARPA has built and released an open source operating system extension to Linux called DECREE (DARPA Experimental Cybersecurity Research Evaluation Environment) that is tailored especially for computer security research and experimentation. The platform is intentionally simple (just seven system calls), safe (custom executable format), and reproducible (from the kernel up). DECREE is available on GitHub as a Vagrant box and also works in VirtualBox and VMware.

Scientific tests do not inherently require specialized hardware or software. Depending on what you are studying, common desktop applications such as Microsoft Excel can be used to analyze data. In other cases, it is convenient or necessary to use benchmarking or analysis software to collect performance metrics. Many users prefer virtualization to compartmentalize their experiments or to create a virtual machine pre-loaded with useful tools. Table 3-2 is a brief list of free and open source software that could be used for a science-oriented cybersecurity workstation.

| Software | Function |
|---|---|
| R | Statistical computing and graphics |
| gnuplot | Function and data plotting |
| LaTeX | Document preparation |
| Scilab | Numerical computation |
| SciPy | Python packages for mathematics, science, and engineering |
| IPython | Shell for interactive computing |
| pandas | Python data manipulation and analysis library |
| KVM and QEMU | Virtualization |
| Wireshark | Network traffic capture and analysis |
| ns-3 | Modeling and simulation |
| Scapy | Packet manipulation |
| gcc | GNU compiler collection |
| binutils | GNU binary utilities |
| Valgrind | Instrumentation framework for dynamic analysis |
| iperf | TCP/UDP bandwidth measurement |
| netperf | Network performance benchmark |
| RAMspeed | Cache and memory benchmark |
| IOzone | Filesystem benchmark |
| LMbench | Performance analysis |
| Peach Fuzzer | Fuzzing platform |

Desktop testing is mostly limited by the resources of the machine, including memory, CPU, storage, and network speed. Comparing the performance and correctness of one encryption algorithm against another can be done with desktop-quality resources. An average workstation running ns-3 can easily handle thousands of simulated hosts. However, the US Army Research Laboratory ran an ns-3 scaling experiment in 2012 and achieved 360,448,000 simulated nodes using 176 servers. Malware analysis, forensics, software fuzzing, and many other scientific questions can be explored on your desktop, and they can produce significant and meaningful scientific results.
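A desktop comparison of this kind can be sketched with Python's standard library alone. Because the standard library does not ship encryption ciphers, the example below compares two hash algorithms (SHA-256 and SHA3-256) as a stand-in; the same pattern would apply to ciphers from a third-party library. The `benchmark` helper, its parameters, and the 1 MiB input size are assumptions chosen for illustration.

```python
import hashlib
import timeit

# Published SHA-256 test vector for the input b"abc", used as a correctness check.
SHA256_ABC = "ba7816bf8f01cfea414140de5dae2223b00361a396177a9cb410ff61f20015ad"


def check_correctness():
    """Confirm the implementation reproduces a known answer before timing it."""
    return hashlib.sha256(b"abc").hexdigest() == SHA256_ABC


def benchmark(algorithm, data, repeats=5, number=20):
    """Return the best observed time, in seconds, to hash `data` once."""
    def run():
        hashlib.new(algorithm, data).digest()
    # Taking the minimum of several repeats reduces noise from other processes.
    timings = timeit.repeat(run, repeat=repeats, number=number)
    return min(timings) / number


if __name__ == "__main__":
    assert check_correctness()
    payload = b"\x00" * (1024 * 1024)  # 1 MiB of input
    for name in ("sha256", "sha3_256"):
        seconds = benchmark(name, payload)
        print(f"{name}: {seconds * 1000:.3f} ms per MiB")
```

Results will vary with hardware, library builds, and background load, so record the machine's specifications alongside any numbers you report.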

Cloud Computing

If a desktop environment is too limiting for your experiment, cloud computing is another option. Cloud computing offers two key advantages: cost and scale. Inherent in the definition of cloud computing is metered service, paying only for what you use. For experimentation, this is almost always cheaper than buying the same number of servers on-site. Given the seemingly “unlimited” resources of major cloud providers, you also benefit from very large-scale environments that are impractical and cost-prohibitive on-site. Compared with desktop testing, which can be slow and resource-limited, you can quickly provision a temporary cloud machine (or cluster of machines) with very large CPU, memory, or networking resources. In cases where your work can be parallelized, the cloud architecture can also help get your work done faster. Password cracking is commonly used as an example of an embarrassingly parallel workload, and cloud-based password cracking has garnered much media attention.
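The following Python sketch shows why password cracking parallelizes so easily: the candidate space is split into chunks that share no state, so each chunk can be searched independently. The lowercase-only alphabet, the unsalted SHA-256 hash, and the function names are simplifying assumptions for illustration. Use it only against hashes you are authorized to test.

```python
import hashlib
import itertools
import string

ALPHABET = string.ascii_lowercase


def sha256_hex(text):
    """Hash a candidate password (unsalted, for illustration only)."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def search_chunk(task):
    """Search all candidates that begin with one letter; return a match or None."""
    target_hex, first_char, length = task
    for tail in itertools.product(ALPHABET, repeat=length - 1):
        candidate = first_char + "".join(tail)
        if sha256_hex(candidate) == target_hex:
            return candidate
    return None


def crack(target_hex, length, map_fn=map):
    """Split the search into 26 independent chunks and dispatch them via map_fn."""
    tasks = [(target_hex, first_char, length) for first_char in ALPHABET]
    for result in map_fn(search_chunk, tasks):
        if result is not None:
            return result
    return None


if __name__ == "__main__":
    target = sha256_hex("cab")  # known test password
    print(crack(target, length=3))
```

By default the chunks run one after another with the built-in `map`. Passing the `map` method of a `concurrent.futures.ProcessPoolExecutor` as `map_fn` would spread them across local cores, and the same partitioning could assign each chunk to a separate cloud machine.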

Cloud environments provide several scientifically relevant attributes. First, reproducibility is enhanced because you can precisely describe the environment used for a test. With Amazon Web Services, for example, each virtual machine image (an Amazon Machine Image, or AMI) has a unique identifier that you can reference. To document the hardware and software setup for your experiment, you might say, “I used ami-a0c7a6c8 running on an m1.large instance.” Microsoft, Rackspace, and other cloud providers have similar constructs. Table 3-3 describes several cloud providers.
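One lightweight way to capture such a description is to record it in a machine-readable file alongside your results. The Python sketch below gathers basic platform facts and merges in experiment-specific details such as the image identifier from the example sentence. The `describe_environment` helper and the field names `image_id` and `instance_type` are hypothetical, not part of any cloud provider's API.

```python
import json
import platform


def describe_environment(**extra):
    """Collect basic facts about the machine, plus any caller-supplied details."""
    record = {
        "python_version": platform.python_version(),
        "os": platform.system(),
        "os_release": platform.release(),
        "machine": platform.machine(),
    }
    # Caller-supplied fields, such as a cloud image identifier, are merged in.
    record.update(extra)
    return record


if __name__ == "__main__":
    environment = describe_environment(
        image_id="ami-a0c7a6c8",   # value taken from the example sentence above
        instance_type="m1.large",
    )
    print(json.dumps(environment, indent=2, sort_keys=True))
```

Saving this output with each run gives other researchers a concrete starting point for rebuilding the same environment.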

| Cloud provider | Description |
|---|---|
| Amazon Web Services | One of the largest and most widely used cloud providers, including a free tier |
| PlanetLab | Publicly available cloud-based global testbed aimed at network and distributed systems research |
| CloudLab | A “scientific instrument” with instrumentation and transparency to see how the system is operating, and the ability to publish hardware and software profiles for external repeatability |

Many companies, universities, and organizations now have their own on-premise cloud or cloudlike solution for internal use. This environment combines the attributes and benefits of cloud computing with increased security, local administration, and support. You may benefit from this kind of shared resource for conducting tests and experiments.

Cybersecurity Testbeds

Cybersecurity testbeds, sometimes called ranges, have emerged in the past decade to provide shared resources devoted to furthering cybersecurity research and experimentation. Testbeds can include physical and/or virtual components, and may be general purpose or highly specialized for a specific focus area. In addition to the collection of hardware and software, most testbeds include support tools: testbed control and provisioning, network or user emulators, and instrumentation for data collection and situational awareness. Table 3-4 lists some testbeds applicable to cybersecurity. While some testbeds are completely open to the public, many are restricted to academia or other limited communities. Every year, new testbeds and testbed research appear at research workshops such as CSET and LASER.

For those committed to scientific experimentation in the long term, investing in public or private testbed infrastructure is advantageous. Your cybersecurity testbed could be dual-purposed for nonscientific business processes as well, including training, quality assurance, or testing and evaluation (see Table 3-4). Experiment facilities with limited capacity or capabilities can, unfortunately, restrict the research questions that a researcher is able to explore. Therefore, carefully consider what you will invest in before committing.

| Testbed | Focus area |
|---|---|
| Anubis | Malware analysis |
| Connected Vehicle Testbed | Connected vehicles |
| DETER | Cybersecurity experimentation and testing |
| DRAKVUF | Virtualized, desktop dynamic malware analysis |
| EDURange | Training and exercises |
| Emulab | Network testbed |
| Future Internet of Things (FIT) Lab | Wireless sensors and Internet of Things |
| Future Internet Research & Experimentation (FIRE) | European federation of testbeds |
| GENI (Global Environment for Network Innovations) | Networking and distributed systems |
| NITOS (Network Implementation Testbed using Open Source) | Wireless |
| OFELIA (OpenFlow in Europe: Linking Infrastructure and Applications) | OpenFlow software-defined networking |
| ORBIT (Open-Access Research Testbed for Next-Generation Wireless Networks) | Wireless |
| PlanetLab | Global-scale network research |
| StarBed | Internet simulations |

One testbed that you might not immediately think of is a human testbed. It can be tricky to find environments with a large number of voluntary human subjects willing to participate in your study or experiment. Amazon Mechanical Turk was designed as a marketplace for crowdsourced human work, where workers are paid small amounts for completing tasks. Researchers have found that results from Mechanical Turk can be scientifically valid and that the platform can rapidly produce inexpensive, high-quality data.

A Checklist for Selecting an Experimentation and Test Environment

Here is a 10-point checklist to use when deciding on an experimentation or test environment:

1. Identify the technical requirements for your test or experiment.
2. Establish what testbed(s) you may have access to based on your affiliation (e.g., business sector, public, academic, etc.).
3. Estimate how much money you want to spend.
4. Decide how much control and flexibility you want over the environment.
5. Determine how much realism, fidelity, and ecological validity you need in the environment.
6. Establish how much time, expertise, and desire you have to spend configuring the test environment.
7. Calculate the scale/size you plan the experiment to be.
8. Consider whether a domain-specific testbed (e.g., malware, wireless, etc.) is appropriate.
9. Identify the dataset that you will use, if required.
10. Create a plan to document and describe the environment to others in a repeatable way.

Conclusion

This chapter described important considerations for choosing the environment or test facility for experimentation. The key takeaways were:

- Cybersecurity experiments vary in their ecological validity, which is how well they approximate the real world.
- Modeling and simulation are useful in exploring large-scale systems, complex systems, and new conceptual designs. Modeling and simulation are primarily limited by the ability to define and create a realistic model.
- There are a variety of open datasets available for tool testing and scientific experimentation. Public datasets allow researchers to reproduce experiments and compare tools using common data.
- Cybersecurity experimentation can be done on desktop computers, cloud computing environments, and cybersecurity testbeds. Each brings a different amount of computational resources and cost.

References

David Balenson, Laura Tinnel, and Terry Benzel. Cybersecurity Experimentation of the Future (CEF): Catalyzing a New Generation of Experimental Cybersecurity Research.

Michael Gregg. The Network Security Test Lab (Indianapolis, IN: Wiley, 2015).

Mohammad S. Obaidat, Faouzi Zarai, and Petros Nicopolitidis (eds.). Modeling and Simulation of Computer Networks and Systems (Waltham, MA: Morgan Kaufmann, 2015).

William R. Shadish, Thomas D. Cook, and Donald T. Campbell. Experimental and Quasi-experimental Designs for Generalized Causal Inference (Boston, MA: Houghton Mifflin, 2002).

Angela B. Shiflet and George W. Shiflet. Introduction to Computational Science: Modeling and Simulation for the Sciences (Second Edition) (Princeton, NJ: Princeton University Press, 2014).

USENIX Workshops on Cyber Security Experimentation and Test (CSET)