Chapter 9. Malware Analysis

The field of malware analysis is a prime candidate for scientific exploration. Experimentation is worthwhile because the malware problem affects all computer users and because advances in the field can be broadly useful. Malware also evolves over time, creating an enormous dataset with a long history that we can study. Security researchers have conducted scientific experiments that produced practical advances not only in tools and techniques for malware analysis but also in understanding how malware spreads and how to deter and mitigate the threat.

People who do malware analysis every day know the value of balancing automation for repetitive tasks with manual, in-depth analysis. In one interview with [IN]SECURE, Michael Sikorski, researcher and coauthor of Practical Malware Analysis, described his approach to analyzing a new piece of malware: “I start my analysis by running the malware through our internal sandbox and seeing what the sandbox outputs.” He follows that with basic static analysis and then dynamic analysis, which together drive full disassembly analysis. Any time you see a prospect for automation, you have an opportunity to study the process scientifically and later evaluate the improvements.
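Basic static analysis often begins with chores such as pulling printable strings out of a binary, which is exactly the kind of repetitive step worth automating. The following minimal sketch mimics the classic `strings` utility under simple assumptions: it scans raw bytes and keeps runs of printable ASCII that are at least `minLen` characters long (4 here, the usual `strings` default). The name `extractStrings` is hypothetical and not part of any particular sandbox or tool.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical helper: collect runs of printable ASCII (0x20-0x7E) that are
// at least minLen bytes long, in the order they appear in the input.
std::vector<std::string> extractStrings(const std::vector<std::uint8_t>& bytes,
                                        std::size_t minLen = 4) {
    assert(minLen > 0);
    std::vector<std::string> found;
    std::string current;
    for (std::uint8_t b : bytes) {
        if (b >= 0x20 && b <= 0x7E) {
            current.push_back(static_cast<char>(b));  // extend the current run
        } else {
            if (current.size() >= minLen) found.push_back(current);
            current.clear();  // a non-printable byte ends the run
        }
    }
    if (current.size() >= minLen) found.push_back(current);  // run reaching end of input
    return found;
}
```

For example, for the bytes `00 "cmd.exe" 00 "ab" FF "http"`, the function returns `cmd.exe` and `http` and drops `ab` as too short. As with any step that touches a live sample, run it inside an isolated analysis environment.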

Recall from the discussion of test environments in Chapter 3 that cybersecurity science, particularly in malware analysis, can be dangerous. When experimenting with malware, you must take extra precautions and safeguards to protect yourself and others from harm. We discuss safe options such as sandboxes and simulators later in this chapter.

Malware analysis has improved in many ways with the help of scientific advances in a range of fields. Consider the disassembly of compiled binary code, a fundamental task in malware analysis. IDA Pro uses recursive descent disassembly to distinguish code from data by determining whether a given machine instruction is referenced from another location. The recursive descent technique is not new: it has been applied in compilers for decades and has been the subject of many academic research papers. Malware analysis tools, such as disassemblers, are enabled and improved through science.
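To see how following control flow separates code from data, consider the toy sketch below. It uses a hypothetical four-opcode instruction set rather than x86 so that the logic stays visible: starting from an entry point, it decodes an instruction, recursively follows branch targets, continues past instructions that can fall through, and stops at returns. Any byte never reached this way is classified as data. This illustrates the general technique only; it is not IDA Pro's implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical toy instruction set (not x86), used only to illustrate the idea:
//   0x01      NOP  1 byte, falls through to the next instruction
//   0x02 tt   JMP  2 bytes, unconditional jump to absolute offset tt
//   0x03 tt   JZ   2 bytes, conditional jump: both tt and the fall-through are reachable
//   0x04      RET  1 byte, ends this control-flow path
constexpr std::uint8_t kNop = 0x01, kJmp = 0x02, kJz = 0x03, kRet = 0x04;

// Follow control flow from addr, marking every byte that is reached as code.
void descend(const std::vector<std::uint8_t>& image, std::size_t addr,
             std::vector<bool>& isCode) {
    while (addr < image.size() && !isCode[addr]) {  // stop at already-visited code
        const std::uint8_t op = image[addr];
        if (op == kNop) {
            isCode[addr] = true;
            addr += 1;
        } else if (op == kRet) {
            isCode[addr] = true;
            return;
        } else if (op == kJmp || op == kJz) {
            if (addr + 1 >= image.size()) return;     // truncated instruction
            isCode[addr] = true;
            isCode[addr + 1] = true;                  // operand byte
            descend(image, image[addr + 1], isCode);  // recurse into the branch target
            if (op == kJmp) return;                   // JMP never falls through
            addr += 2;                                // JZ continues with the next instruction
        } else {
            return;  // unknown opcode: stop following this path
        }
    }
}

// Bytes never reached from the entry point are classified as data.
std::vector<bool> recursiveDescent(const std::vector<std::uint8_t>& image,
                                   std::size_t entry) {
    std::vector<bool> isCode(image.size(), false);
    descend(image, entry, isCode);
    return isCode;
}
```

For the image `01 02 05 AA BB 04` (NOP, JMP 5, two data bytes, RET) with entry point 0, bytes 0, 1, 2, and 5 are marked as code and `AA BB` as data, whereas a linear sweep would try to decode `AA` as an instruction. Real disassemblers must also handle indirect jumps, calls, and overlapping instructions, which this toy ignores.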

An Example Scientific Experiment in Malware Analysis

For an example of scientific experimentation in malware analysis, look at the paper “A Clinical Study of Risk Factors Related to Malware Infections” by Lévesque et al. (2013). The abstract that follows describes an interesting malware-related experiment that looks not at the malware itself but at users confronted with malware infection. Humans are clearly part of the operating environment, and they play a role in detecting and responding to malware threats. In this experiment, the researchers instrumented laptops for 50 test subjects and observed how the systems performed and how users interacted with them in practice. During the four-month study, the AV product reported 95 detections on 19 different user machines, and manual analysis revealed 20 possible infections on 12 different machines. The team used general regression, logistic regression, and statistical analysis to determine that user characteristics (such as age) were not significant risk factors but that certain types of user behavior were indeed significant.

Abstract from a malware analysis experiment

The success of malicious software (malware) depends upon both technical and human factors. The most security-conscious users are vulnerable to zero-day exploits; the best security mechanisms can be circumvented by poor user choices. While there has been significant research addressing the technical aspects of malware attack and defense, there has been much less research reporting on how human behavior interacts with both malware and current malware defenses.

In this paper we describe a proof-of-concept field study designed to examine the interactions between users, antivirus (anti-malware) software, and malware as they occur on deployed systems. The four-month study, conducted in a fashion similar to the clinical trials used to evaluate medical interventions, involved 50 subjects whose laptops were instrumented to monitor possible infections and gather data on user behavior. Although the population size was limited, this initial study produced some intriguing, non-intuitive insights into the efficacy of current defenses, particularly with regards to the technical sophistication of end users. We assert that this work shows the feasibility and utility of testing security software through long-term field studies with greater ecological validity than can be achieved through other means.
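The logistic regression mentioned above models the probability of a binary outcome (infected or not) as a function of candidate risk factors. The following is a minimal sketch under simplifying assumptions: one predictor, hypothetical toy data, and plain gradient ascent on the log-likelihood. It is not the study's analysis code; a real analysis would use a statistics package that also reports standard errors and p-values, which are what decide whether a factor is significant.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct LogisticFit {
    double intercept;  // b0: log-odds of infection when x = 0
    double slope;      // b1: change in log-odds per unit of x
};

// Fit P(y = 1 | x) = 1 / (1 + exp(-(b0 + b1 * x))) by batch gradient ascent
// on the average log-likelihood. Each y[i] must be 0 or 1.
LogisticFit fitLogistic(const std::vector<double>& x, const std::vector<int>& y,
                        double learningRate = 0.1, int iterations = 5000) {
    assert(x.size() == y.size() && !x.empty());
    double b0 = 0.0, b1 = 0.0;
    const double n = static_cast<double>(x.size());
    for (int it = 0; it < iterations; ++it) {
        double g0 = 0.0, g1 = 0.0;
        for (std::size_t i = 0; i < x.size(); ++i) {
            const double p = 1.0 / (1.0 + std::exp(-(b0 + b1 * x[i])));
            g0 += (y[i] - p);         // d(logL)/d(b0)
            g1 += (y[i] - p) * x[i];  // d(logL)/d(b1)
        }
        b0 += learningRate * g0 / n;
        b1 += learningRate * g1 / n;
    }
    return {b0, b1};
}

// exp(b1) is the odds ratio: how much the odds of infection multiply per unit of x.
double oddsRatio(const LogisticFit& fit) { return std::exp(fit.slope); }
```

With a hypothetical binary behavior indicator where 1 of 4 users without the behavior and 3 of 4 users with it were infected, the fit converges to a slope of about 2.197 (2 ln 3), which is an odds ratio of about 9.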

You can imagine that this kind of real-world testing would be useful for antivirus vendors and other cybersecurity solution providers. In the next section, we discuss the benefits of different experimental environments for malware analysis.

Scientific Data Collection for Simulators and Sandboxes

Experimental discovery with malware is a routine activity for malware analysts, even when it isn’t scientific. Dynamic analysis, in which an analyst observes the malware executing, can sometimes reveal the software’s functionality more quickly than static analysis, in which the analyst dissects and analyzes the file without executing it. Because malware interacts with its target, it changes the target environment, sometimes in unexpected ways. Malware analysts benefit from analysis environments, especially virtual machines, that allow them to quickly and easily revert or rebuild the execution environment to a known state. Scientific reproducibility is rarely the primary goal of this practice.
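Reverting to a known state also gives you a clean baseline to compare against. One simple way to record what a sample changed is to snapshot the environment before and after execution, for example as a map from file path to content hash, and diff the two. The sketch below assumes the snapshots were already collected; the `Snapshot` format and the function names are hypothetical, and a real sandbox would also track registry keys, processes, and network activity.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical snapshot format: file path -> content hash (e.g., a SHA-256 hex string).
using Snapshot = std::map<std::string, std::string>;

struct SnapshotDiff {
    std::vector<std::string> added, removed, modified;
};

// Compare a clean baseline with the state captured after the sample ran.
SnapshotDiff diffSnapshots(const Snapshot& before, const Snapshot& after) {
    SnapshotDiff d;
    for (const auto& [path, hash] : after) {
        auto it = before.find(path);
        if (it == before.end()) {
            d.added.push_back(path);      // new file dropped during execution
        } else if (it->second != hash) {
            d.modified.push_back(path);   // same path, different contents
        }
    }
    for (const auto& entry : before) {
        if (after.count(entry.first) == 0) d.removed.push_back(entry.first);
    }
    return d;
}
```

Because `std::map` keeps its keys sorted, the three lists come out in a stable order, which makes it easier to compare repeated runs of the same sample.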

Different malware analysis environments have their own methods for collecting scientific measurements during experimentation. Commercial, open source, and homegrown malware analysis environments provide capabilities that help the malware analyst monitor the environment to answer the questions “What does this malware do, and how does it work?” One open source network simulator is ns-3, which has built-in data collection features and also allows you to use third-party tools. The ns-3 framework is built to collect data during experiments. Traces can come from a variety of trace sources, which signal events that happen in a simulation.

A trace source could indicate when a packet is received by a network device and provide access to the packet contents. For point-to-point links, pcap tracing is enabled through the PointToPointHelper class. Here’s how to set that up so that ns-3 writes packet captures whose filenames start with experiment1 (one file per device, such as experiment1-0-0.pcap):

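```cpp
#include "ns3/point-to-point-module.h"
using namespace ns3;

// Inside main(): create the helper, install devices with it, then enable pcap tracing
PointToPointHelper pointToPoint;
pointToPoint.EnablePcapAll ("experiment1");  // one file per device: experiment1-<node>-<device>.pcap
```

FlowMonitor is another ns-3 module that provides statistics on network flows. Here is an example of how to add flow monitoring to all ns-3 nodes and print per-flow statistics: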

```cpp
#include <iostream>
#include "ns3/core-module.h"
#include "ns3/flow-monitor-module.h"
using namespace ns3;

// Inside main(), after the topology, IP stack, and applications are set up:
// install FlowMonitor on all nodes
FlowMonitorHelper flowmon;
Ptr<FlowMonitor> monitor = flowmon.InstallAll ();

// Run the simulation for 10 seconds
Simulator::Stop (Seconds (10));
Simulator::Run ();

// Print per-flow statistics
monitor->CheckForLostPackets ();
Ptr<Ipv4FlowClassifier> classifier =
    DynamicCast<Ipv4FlowClassifier> (flowmon.GetClassifier ());
FlowMonitor::FlowStatsContainer stats = monitor->GetFlowStats ();
for (const auto& entry : stats)
  {
    Ipv4FlowClassifier::FiveTuple t = classifier->FindFlow (entry.first);
    const FlowMonitor::FlowStats& fs = entry.second;
    std::cout << "Flow " << entry.first << " (" << t.sourceAddress << " -> "
              << t.destinationAddress << ")\n";
    std::cout << "  Tx Bytes: " << fs.txBytes << "\n";
    std::cout << "  Rx Bytes: " << fs.rxBytes << "\n";
    // Throughput over the flow's active period, in megabits (10^6 bits) per second
    double duration = (fs.timeLastRxPacket - fs.timeFirstTxPacket).GetSeconds ();
    double mbps = duration > 0 ? fs.rxBytes * 8.0 / duration / 1e6 : 0.0;
    std::cout << "  Throughput: " << mbps << " Mbps\n";
  }
Simulator::Destroy ();
```

It is easy to add measurement software to sandboxes, especially in a virtual environment. You can install software on the virtual machine to collect network traffic, performance loads, and timing. There are now easily accessible tools for virtual machine introspection (VMI), which lets you monitor a virtual machine from outside the machine using tools on the host. LibVMI, for example, allows you to access a guest’s memory and CPU state. The primary benefit is that no monitoring software runs inside the guest, so malware inside the guest virtual machine has a much harder time detecting that it is being observed. PyVMI is a Python adapter for LibVMI, which allows you to instrument data collection however you want. You can also use PyVMI with Volatility for runtime memory analysis.
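Under the hood, a process listing such as Volatility’s `pslist` walks a kernel data structure in guest memory. On 32-bit Windows 7, each process has an `EPROCESS` structure, and processes are chained in a circular doubly linked list through its `ActiveProcessLinks` field; in a LibVMI profile, `win_tasks` is that field’s offset and `win_pid` is the offset of `UniqueProcessId`. The sketch below walks such a list using those offsets (0xb8 and 0xb4, the values in the example profile that follows). It is a simplified illustration, not the LibVMI or PyVMI API: guest memory is a hypothetical flat byte buffer, and the virtual-to-physical address translation that real introspection requires is skipped.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Offsets from the example Windows 7 (32-bit) LibVMI profile.
constexpr std::uint32_t kTasksOffset = 0xb8;  // win_tasks: EPROCESS.ActiveProcessLinks
constexpr std::uint32_t kPidOffset = 0xb4;    // win_pid:   EPROCESS.UniqueProcessId

// Hypothetical, simplified guest memory: a flat buffer in which an address is
// just an index. Real VMI must translate guest virtual addresses via page tables.
std::uint32_t read32(const std::vector<std::uint8_t>& mem, std::uint32_t addr) {
    assert(static_cast<std::size_t>(addr) + 4 <= mem.size());
    return static_cast<std::uint32_t>(mem[addr]) |
           (static_cast<std::uint32_t>(mem[addr + 1]) << 8) |
           (static_cast<std::uint32_t>(mem[addr + 2]) << 16) |
           (static_cast<std::uint32_t>(mem[addr + 3]) << 24);  // little-endian
}

// Walk the circular ActiveProcessLinks list from its head (PsActiveProcessHead
// in a real kernel) and collect the PID stored in each EPROCESS on the list.
std::vector<std::uint32_t> listPids(const std::vector<std::uint8_t>& mem,
                                    std::uint32_t listHead) {
    std::vector<std::uint32_t> pids;
    std::uint32_t entry = read32(mem, listHead);  // the head's Flink
    while (entry != listHead) {
        const std::uint32_t eprocess = entry - kTasksOffset;  // back up to the EPROCESS start
        pids.push_back(read32(mem, eprocess + kPidOffset));
        entry = read32(mem, entry);  // Flink is the first field of a LIST_ENTRY
        if (pids.size() > mem.size()) break;  // guard against a corrupted, non-circular list
    }
    return pids;
}
```

Against a live guest, the Volatility session below performs this kind of walk through the PyVMI address space.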

```shell
[~]$ # Copy the PyVMI address space file to Volatility's plugins folder
[~]$ cp libvmi/tools/pyvmi/pyvmiaddressspace.py volatility/plugins/addrspaces/
[~]$ # Create a LibVMI profile for the virtual machine
[~]$ # Here is an example entry for a Windows 7 VM
[~]$ cat /etc/libvmi.conf
win7 {
    ostype = "Windows";
    win_tasks = 0xb8;
    win_pdbase = 0x18;
    win_pid = 0xb4;
}
[~]$ # Run Volatility, specifying the Xen VM ("win7") as the URN for the
[~]$ # address space
[~]$ python vol.py -l vmi://win7 pslist
```

Game Theory for Malware Analysis

Cybersecurity involves interactions between attackers and defenders, and it is affected by the probability of a system being attacked. This interaction can be described in terms of a game. Game theory, the study and mathematical modeling of strategic decision-making, took its name in the 1940s and soon spread from economics to biology and later to computer science. The “games” that we study could be chess or blackjack, but game-theoretic models are just as useful for cyber-related topics such as peer-to-peer file sharing, online advertising auctions, and computer hacking. The games are represented by mathematical models that describe the players, the actions available to the players, the payoffs of those actions, and the information available to each player. Players use strategies to pick actions based on information in order to maximize payoffs.

Applying game theory goes something like this. Each player makes moves from a set of available moves and follows constraints established for the game. Players have an information set, meaning their knowledge of different variables at a particular point in time. Players also have a strategy, which is a rule that tells the player what action to take at each instance of the game, given her information set. The outcome is the set of interesting elements that you, the modeler, pick from the values of the actions, payoffs, and other variables after the game is played out.

Why would game theory be useful to you if the approach is largely theoretical? Here is one example to help illustrate the answer. Say you manage security for a startup video streaming company. You need to figure out how many servers to deploy around the globe with the assumption that these servers are likely to be attacked. Modeling the interaction between your company and adversaries can shed light on the number of servers required.

Game theory has an interesting solution concept called the Nash equilibrium, named for mathematician John Forbes Nash, Jr. (subject of the movie A Beautiful Mind). A Nash equilibrium is a combination of strategies, one per player, in which no player has an incentive to deviate from his or her chosen strategy, given the strategies the other players have chosen. Mutually assured destruction is often described as a Nash equilibrium: because using the weapon would destroy both sides, neither side gains by striking first.
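For small games you can find pure-strategy Nash equilibria by brute force: a cell of the payoff matrix is an equilibrium if neither player can do better by switching only his or her own action. The sketch below checks every cell of a two-player game. The function name and the payoff values in the example are hypothetical, chosen only to illustrate the check.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// rowPay[i][j] and colPay[i][j] are the payoffs to the row and column players
// when the row player picks action i and the column player picks action j.
// Returns every (i, j) that is a pure-strategy Nash equilibrium.
std::vector<std::pair<std::size_t, std::size_t>>
pureNashEquilibria(const Matrix& rowPay, const Matrix& colPay) {
    assert(!rowPay.empty() && rowPay.size() == colPay.size());
    const std::size_t rows = rowPay.size(), cols = rowPay[0].size();
    std::vector<std::pair<std::size_t, std::size_t>> result;
    for (std::size_t i = 0; i < rows; ++i) {
        for (std::size_t j = 0; j < cols; ++j) {
            bool rowBest = true, colBest = true;
            for (std::size_t k = 0; k < rows; ++k)  // can the row player gain by deviating?
                if (rowPay[k][j] > rowPay[i][j]) rowBest = false;
            for (std::size_t k = 0; k < cols; ++k)  // can the column player gain by deviating?
                if (colPay[i][k] > colPay[i][j]) colBest = false;
            if (rowBest && colBest) result.emplace_back(i, j);
        }
    }
    return result;
}
```

With hypothetical payoffs of 0 for mutual restraint (action 0), -10 for both sides when one strikes (action 1) and the other retaliates, and -20 when both strike, the only pure-strategy equilibrium is mutual restraint, matching the mutually assured destruction story. Matching pennies, by contrast, has no pure-strategy equilibrium at all, which is why mixed (randomized) strategies matter.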

Note

Honeypots are realistic-looking decoy systems that network defenders use to lure attackers. The honeypot allows a defender to observe and monitor attackers’ activities in a controlled environment, thus informing the defender about how to better defend his or her environment.

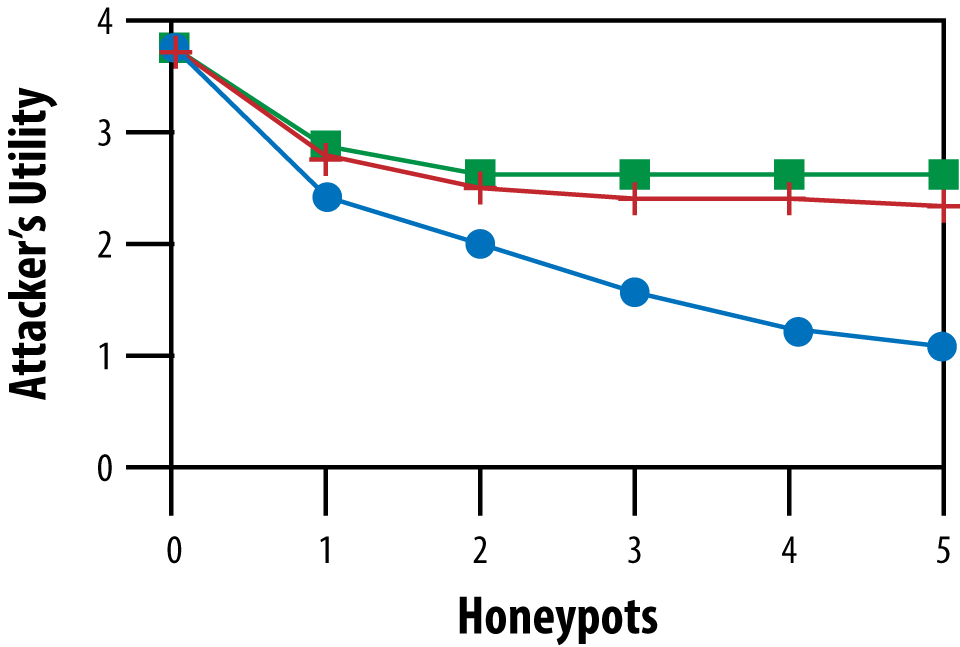

Several years ago, a team of researchers studied how honeypots could best be used to deceive potential attackers.1 Network administrators were familiar with the benefits of honeypots, but were largely guessing about how many honeypots to deploy and where to place them in the network to maximize network defense. Malware analysts, for their part, might be interested in placing honeypots where they are more likely to attract malware and generate data to aid malware analysis. In Figure 9-1, you can see one result from the researchers’ report showing that the Nash equilibrium strategy significantly outperforms the baseline strategies. Game theory also allowed these researchers to show that the optimal strategy for honeypots is randomized and distributed throughout the network, rather than always masquerading as the most or least valuable machines in the network.

Figure 9-1. Exploitability of defender strategies for the honeypot selection game; Xs = Nash Equilibrium, Squares = Maximum, Pluses = Random
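The value of randomization shows up even in a tiny zero-sum version of the honeypot problem. In the hypothetical setup below (not the model from the cited paper, which covers much larger networks), the defender, as the row player, chooses which of two machines to turn into a honeypot, and the attacker, as the column player, chooses which machine to attack. When no saddle point (pure-strategy equilibrium) exists, each player mixes so that the opponent is indifferent between its two actions, which yields the standard closed-form solution for 2x2 zero-sum games.

```cpp
#include <cassert>
#include <cmath>

// Solution of a 2x2 zero-sum game. a[i][j] is the row player's payoff;
// the column player receives -a[i][j].
struct MixedSolution {
    double rowProb0;  // probability the row player picks row 0
    double colProb0;  // probability the column player picks column 0
    double value;     // expected payoff to the row player at equilibrium
};

MixedSolution solveZeroSum2x2(const double a[2][2]) {
    // A saddle point (minimum of its row and maximum of its column)
    // is a pure-strategy equilibrium.
    for (int i = 0; i < 2; ++i) {
        for (int j = 0; j < 2; ++j) {
            const bool rowMin = a[i][j] <= a[i][1 - j];
            const bool colMax = a[i][j] >= a[1 - i][j];
            if (rowMin && colMax) return {i == 0 ? 1.0 : 0.0, j == 0 ? 1.0 : 0.0, a[i][j]};
        }
    }
    // Otherwise each player mixes so that the opponent is indifferent.
    const double d = a[0][0] - a[0][1] - a[1][0] + a[1][1];
    assert(std::fabs(d) > 1e-12);
    return {(a[1][1] - a[1][0]) / d,
            (a[1][1] - a[0][1]) / d,
            (a[0][0] * a[1][1] - a[0][1] * a[1][0]) / d};
}
```

For example, suppose machine 0 is worth 10 to the attacker, machine 1 is worth 4, and hitting the honeypot costs the attacker 5. The defender’s payoff matrix is `{{5, -4}, {-10, 5}}`, and the solver returns rowProb0 = 0.625, colProb0 = 0.375, and value = -0.625: the defender disguises the honeypot as the more valuable machine only 62.5% of the time, not always.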

Compare the Nash equilibrium to a cat-and-mouse game, which is the language often used to describe the relationship between attackers and defenders in cybersecurity. We tend to think of cybersecurity as having no equilibrium, but rather as a game of constant pursuit in which both attackers and defenders can improve their situation by changing actions. Moving target defense (described in Chapter 10) is one such situation.

Another game-theoretic model is the Stackelberg game. Stackelberg security games are attacker-defender games in which the defender attempts to allocate limited resources to protect a set of targets, and the adversary plans to attack one of those targets. In these games, the defender first commits to a strategy, assuming that the adversary can observe that strategy. Then the adversary chooses a response after seeing the defender’s strategy. This assumption may be stronger than reality, where defenders may face uncertainty about the attacker’s ability to observe the defense strategy.
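The commit-then-observe structure is easy to see in a small brute-force sketch. Assume one defender resource split as coverage probabilities over two targets, with hypothetical payoffs for each target. For each candidate coverage level, the sketch predicts the attacker’s best response (breaking ties in the defender’s favor, as in the common strong Stackelberg equilibrium) and keeps the commitment that gives the defender the highest expected payoff. The grid search, the `Target` fields, and the function names are illustrative choices; practical solvers use mathematical programming instead.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical payoffs for one target in a Stackelberg security game.
struct Target {
    double defCovered, defUncovered;  // defender payoff if this target is attacked
    double attCovered, attUncovered;  // attacker payoff if it attacks this target
};

struct Commitment {
    double coverage0;       // probability the single resource protects target 0
    std::size_t attacked;   // attacker's best response to that commitment
    double defenderUtility;
};

// Grid search over coverage of two targets (coverage of target 1 = 1 - coverage0).
Commitment bestCommitment(const Target t[2], int steps = 1000) {
    assert(steps > 0);
    Commitment best{0.0, 0, -1e300};
    for (int s = 0; s <= steps; ++s) {
        const double c0 = static_cast<double>(s) / steps;
        const double c[2] = {c0, 1.0 - c0};
        double att[2], def[2];
        for (int k = 0; k < 2; ++k) {
            att[k] = c[k] * t[k].attCovered + (1 - c[k]) * t[k].attUncovered;
            def[k] = c[k] * t[k].defCovered + (1 - c[k]) * t[k].defUncovered;
        }
        // The attacker observes the coverage and attacks its best target;
        // ties are broken in the defender's favor.
        std::size_t a;
        if (att[0] > att[1]) a = 0;
        else if (att[1] > att[0]) a = 1;
        else a = def[0] >= def[1] ? 0 : 1;
        if (def[a] > best.defenderUtility) best = {c0, a, def[a]};
    }
    return best;
}
```

With two identical targets, the best commitment is an even 50/50 split. With hypothetical asymmetric payoffs `{{0, -10, -1, 10}, {0, -2, -1, 2}}`, the search covers the valuable target with probability 0.786, just enough that the attacker prefers the less valuable target, and the defender’s expected payoff is about -1.57.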

Game theory has some strong limitations to consider. First, players in real-life versions of a game are not as rational as the model assumes. Second, in most practical settings, the costs and motivations of other players are uncertain and difficult to determine. Third, game theory often ignores human components of real-life decisions, such as the regret (or fear of regret) of poor decisions.

You can run game-theoretic scientific experiments with mathematical software such as Mathematica and Maple, with the GAMS modeling system, or with free, open source software such as Gambit. These software packages allow you to model the games described above, including Stackelberg security games.

Case Study: Identifying Malware Families with Science

In this section, we consider a hypothetical scientific experiment for a method of categorizing malware binaries into families. We first look at related work that others have done on the topic so that we can differentiate this experiment from it. Then, we describe how one might run a new experiment.

Building on Previous Work

From 2010 to 2014, DARPA ran a research program called Cyber Genome. The project was designed to determine whether there were discernible “fingerprints” of malware authors in malware binaries. Another goal was to study the ability to detect malware genealogy and construct “family trees” using similarity metrics. Some companies that participated in the research went on to create commercial products, including Cynomix, a cloud-based “patent-pending cyber genome analysis technology” from Invincea.

Having heard about DARPA’s research, assume that you want to write a program to automatically determine whether you have seen a similar variant of a piece of malware before. This technique is similar to using biological DNA profiling to identify evidence of a genetic relationship. This feature might be useful in an antivirus product or network security appliance where you could tell users “we haven’t seen this file before, but it appears similar to Trojan X.”
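One common baseline for this kind of similarity check (not necessarily what Cyber Genome performers or Cynomix used) treats each binary as the set of its byte n-grams and compares the sets with the Jaccard index, |A ∩ B| / |A ∪ B|. The sketch below assumes the samples are already unpacked and loaded as byte vectors; the function names and the default n = 4 are hypothetical choices.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <set>
#include <string>
#include <vector>

// Collect the distinct byte n-grams of a sample, each stored as a string of raw bytes.
std::set<std::string> byteNgrams(const std::vector<std::uint8_t>& bytes, std::size_t n = 4) {
    assert(n > 0);
    std::set<std::string> grams;
    for (std::size_t i = 0; i + n <= bytes.size(); ++i)
        grams.emplace(bytes.begin() + i, bytes.begin() + i + n);
    return grams;
}

// Jaccard similarity: size of the intersection divided by size of the union.
// 1.0 means identical n-gram sets; 0.0 means no n-grams in common.
double jaccard(const std::set<std::string>& a, const std::set<std::string>& b) {
    if (a.empty() && b.empty()) return 1.0;
    std::size_t common = 0;
    for (const auto& g : a) common += b.count(g);
    return static_cast<double>(common) / (a.size() + b.size() - common);
}
```

For example, the byte strings `01 02 03 04 05` and `01 02 03 04 06` share one of three distinct 4-grams, so their similarity is 1/3. Packed or encrypted samples defeat this kind of raw-byte comparison, which is why the sketch assumes unpacked input.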

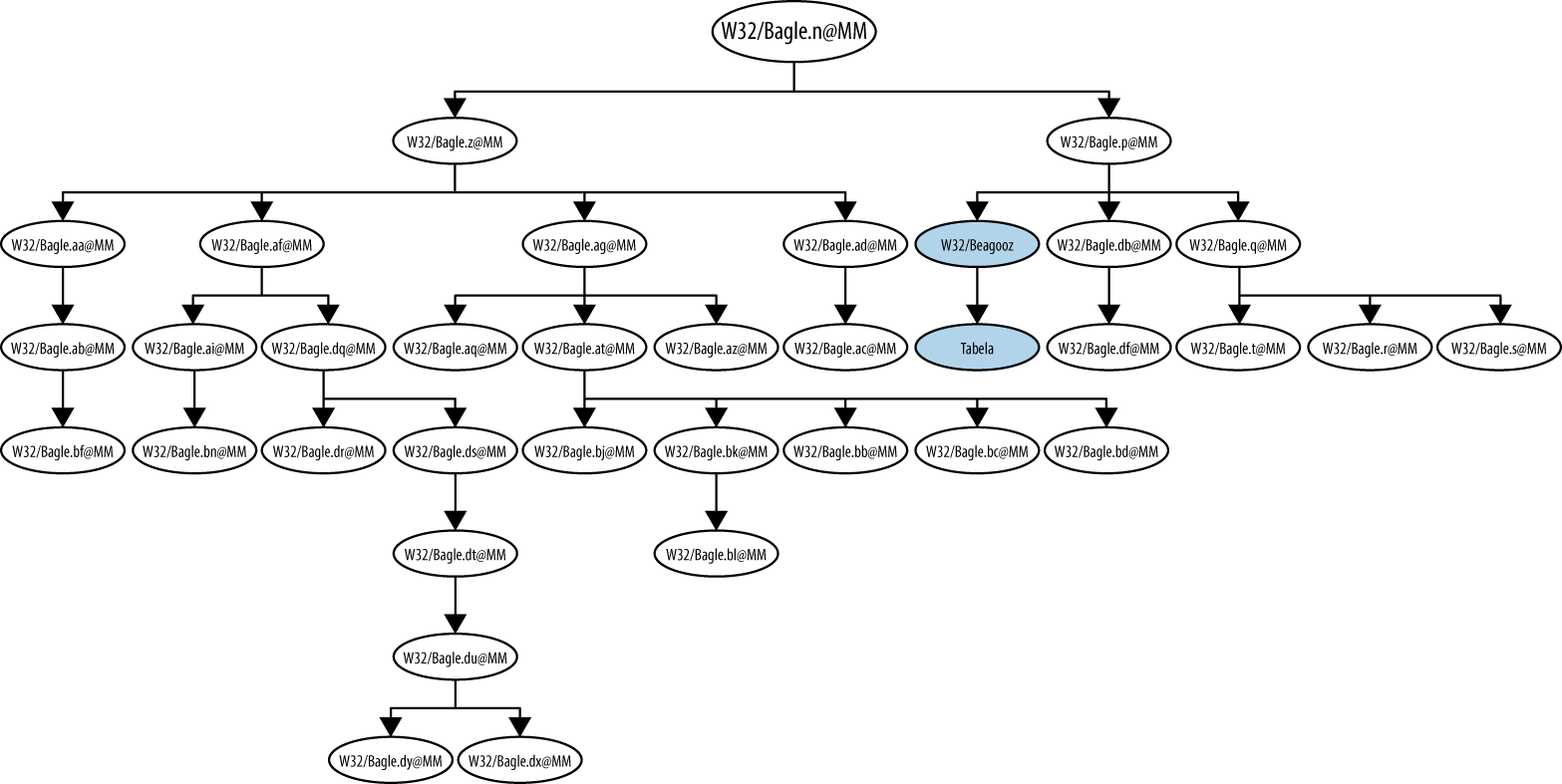

In 2009, researchers building algorithms for determining malware relationships constructed the image in Figure 9-2 to illustrate the biggest of 14 families of Bagle malware in their dataset. The research team also tested their algorithms with the Mytob malware family, which shows relationships with the Mydoom, Polybot, and Gaobot malware.

Figure 9-2. A family tree of variants of the Bagle worm, from “An Empirical Study of Malware Evolution” (2009)
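If you can score how similar two variants are, one simple way to draw a rough family tree is a minimum spanning tree: treat 1 minus similarity as a distance and link each variant to its nearest relative already in the tree. The sketch below uses Prim’s algorithm on a hypothetical distance matrix; it illustrates one possible technique and is not necessarily how the 2009 study produced Figure 9-2.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <utility>
#include <vector>

// Prim's algorithm over a dense, symmetric distance matrix.
// Returns the tree edges as (parent, child) pairs, rooted at variant 0.
std::vector<std::pair<std::size_t, std::size_t>>
familyTree(const std::vector<std::vector<double>>& dist) {
    const std::size_t n = dist.size();
    std::vector<std::pair<std::size_t, std::size_t>> edges;
    if (n == 0) return edges;
    std::vector<bool> inTree(n, false);
    std::vector<double> best(n, std::numeric_limits<double>::infinity());
    std::vector<std::size_t> parent(n, 0);
    best[0] = 0.0;
    for (std::size_t iter = 0; iter < n; ++iter) {
        // Attach the variant that is closest to the tree built so far.
        std::size_t u = n;
        for (std::size_t v = 0; v < n; ++v)
            if (!inTree[v] && (u == n || best[v] < best[u])) u = v;
        inTree[u] = true;
        if (u != 0) edges.emplace_back(parent[u], u);
        // Update each remaining variant's distance to the tree.
        assert(dist[u].size() == n);
        for (std::size_t v = 0; v < n; ++v) {
            if (!inTree[v] && dist[u][v] < best[v]) {
                best[v] = dist[u][v];
                parent[v] = u;
            }
        }
    }
    return edges;
}
```

With hypothetical distances in which each variant is closest to the next one in sequence (0.1, 0.2, and 0.3 between consecutive variants and larger values elsewhere), the result is the chain 0-1-2-3. A spanning tree does not say which variant came first, so ordering a lineage needs extra evidence, such as first-seen dates.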

A New Experiment

You have a novel algorithm for cyber DNA profiling that you think can match new, never-before-seen malware samples with digital relatives in a known set. You want to offer evidence for this claim, so you embark on experimentation to evaluate this hypothesis:

My cyber DNA profiling algorithm can correctly identify digital genetic relationships of new samples with known malware families with 95% confidence.

One approach to testing this hypothesis is to simply try malware samples and see how well your algorithm works. A key challenge with this kind of testing, however, is that you have to know ground truth to determine whether or not the algorithm performed correctly. During the testing, you essentially need to know the answer about which malware family the test sample belongs to before you start. Even if you are successful in this regard, perhaps by using known variants of malware as the researchers did with Mytob and Bagle, people may be unconvinced by your results.
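Once you have ground-truth labels, one way to make a claim like “95% confidence” concrete is to report the algorithm’s accuracy on the labeled test samples together with a 95% confidence interval. The sketch below uses the Wilson score interval for a binomial proportion, a common choice, with z = 1.96 for a two-sided 95% level; the function name and the counts in the example are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Interval { double low, high; };

// Wilson score interval for `correct` successes out of `total` trials.
// z = 1.96 corresponds to a two-sided 95% confidence level.
Interval wilsonInterval(int correct, int total, double z = 1.96) {
    assert(total > 0 && correct >= 0 && correct <= total);
    const double n = total;
    const double p = correct / n;  // observed accuracy
    const double z2 = z * z;
    const double denom = 1.0 + z2 / n;
    const double center = (p + z2 / (2.0 * n)) / denom;
    const double half = z * std::sqrt(p * (1.0 - p) / n + z2 / (4.0 * n * n)) / denom;
    return {center - half, center + half};
}
```

For example, 95 correct family assignments out of 100 test samples give an interval of roughly 0.888 to 0.978, so that experiment alone would not show that the true accuracy is at least 95%.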

Ground truth malware sets for testing are a challenge for everyone building malware solutions. One research team doing malware clustering in 2009 chose to use 2,658 samples (from a set of 14,212) that a majority of six antivirus programs agreed upon. In 2013, students at the Naval Postgraduate School manually created a small dataset of ground truth malware for their Ground Truth Malware Database. Malware samples with and without labels can be obtained for free from various websites, including Contagio Malware Dump, Open Malware, and VirusShare.
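The 2009 team’s approach of keeping only samples on which a majority of six antivirus programs agreed can be expressed as a small filter. The sketch assumes each engine’s verdict has already been normalized to a family name, with an empty string meaning no detection. That normalization step is hypothetical here, and in practice it is often the hardest part, because vendors name families differently.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <optional>
#include <string>
#include <vector>

// Returns the family name that more than half of the engines agree on,
// or std::nullopt when there is no strict majority.
std::optional<std::string> majorityLabel(const std::vector<std::string>& verdicts) {
    assert(!verdicts.empty());
    std::map<std::string, std::size_t> votes;
    for (const auto& v : verdicts)
        if (!v.empty()) ++votes[v];  // empty string = engine did not detect the sample
    for (const auto& [family, count] : votes)
        if (2 * count > verdicts.size()) return family;
    return std::nullopt;
}
```

With six engines, a label needs at least four votes: four “bagle” verdicts keep the sample as ground truth for that family, while a 3-2 split discards it.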

The sample size required for this experiment is impossible to estimate because there is no way to accurately determine whether you have a representative sample of all malware. People are likely to question your results if you use a sample size that they consider too small. In general, aim for hundreds or thousands of samples whenever possible.
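Statistics cannot tell you whether your samples represent all malware, but they can tell you roughly how many labeled test samples you need to pin down an accuracy estimate. Assuming the usual normal approximation, a 95% confidence level (z = 1.96), a desired margin of error E, and the conservative worst case p = 0.5, the estimate is:

$$
n \ge \frac{z^2\,p(1-p)}{E^2} = \frac{1.96^2 \times 0.5 \times 0.5}{0.05^2} \approx 384.2 \quad\Rightarrow\quad n = 385
$$

So even a margin of plus or minus 5 percentage points calls for several hundred samples, consistent with the advice to aim for hundreds or thousands.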

How to Find More Information

Advances and scientific results in malware analysis are shared at cybersecurity and topic-specific workshops and conferences. REcon is one annual reverse engineering conference. Virus Bulletin hosts an annual international conference on malware and other cyber threats. The IEEE International Conference on Malicious and Unwanted Software (MALWARE) presents theoretical and applied knowledge of malware-related tools, practices, and incidents. The Conference on Decision and Game Theory for Security (GameSec) is one forum for academic and industrial researchers in game theory and technological systems.

Conclusion

This chapter presented the application of cybersecurity science to malware analysis. The key takeaways are:

Malware analysis tools are enabled and improved through science. Security researchers conduct experiments that produce practical advances in tools and techniques for malware analysis, in understanding how malware spreads, and in how to deter and mitigate the threat.

Malware analysis simulators and sandboxes sometimes have features that support the scientific method, including reproducible execution, reliable data collection, and virtual machine introspection.

Game theory is one way to practice cybersecurity science, analyzing interactions between attackers and defenders as a strategic game.

We applied the scientific method to malware analysis using a hypothetical case to study the ability to identify relationships between similar malware binaries.

References

Matthew O. Jackson. “A Brief Introduction to the Basics of Game Theory” (2011)

Michael Sikorski and Andrew Honig. Practical Malware Analysis (San Francisco, CA: No Starch Press, 2012)

Steven Tadelis. Game Theory: An Introduction (Princeton, NJ: Princeton University Press, 2013)

1 Radek Píbil, Viliam Lisy, Christopher Kiekintveld, Branislav Bosansky, and Michal Pechoucek. “Game Theoretic Model of Strategic Honeypot Allocation in Computer Networks,” In: Decision and Game Theory for Security. Conference on Decision and Game Theory for Security, Budapest, 2012-11-05/2012-11-06. Heidelberg: Springer-Verlag, GmbH, 2012, pp. 201-220. Lecture Notes in Computer Science. vol. 7638. 2012.