Chapter 17. Testing database access

Dependency is the key problem in software development at all scales.... Eliminating duplication in programs eliminates dependency.

Kent Beck, Test-Driven Development: By Example

This chapter covers

- Challenges of database testing

- Introduction to DbUnit

- Advanced DbUnit techniques

- DbUnit best practices

The persistence layer (or, roughly speaking, the database access code) is undoubtedly one of the most important parts of any enterprise project. Despite its importance, the persistence layer is hard to unit test, mainly because of the following issues:

- Unit tests must exercise code in isolation; the persistence layer requires interaction with an external entity, the database.

- Unit tests must be easy to write and run; code that accesses the database can be cumbersome.

- Unit tests must be fast to run; database access is relatively slow.

We call these issues the database unit testing impedance mismatch, in reference to the object-relational impedance mismatch (which describes the difficulties of using a relational database to persist data when an application is written using an object-oriented language).

The database-testing mismatch can be minimized using specialized tools, one of them being DbUnit. In this chapter, we show how DbUnit can be used to test database code, and we not only describe its basic concepts but also present techniques that make its usage more productive and the resulting code easier to maintain.

17.1. The database unit testing impedance mismatch

Let’s take a deeper look at the three issues that compose the database unit testing impedance mismatch.

17.1.1. Unit tests must exercise code in isolation

From a purist point of view, tests that exercise database access code can’t be considered unit tests because they depend on an external entity, the almighty database. What should they be called then? Integration tests? Functional tests? Non-unit unit tests?

Well, the answer is, there is no secret formula! In other words, database tests can fit into many categories, depending on the context.

Pragmatically speaking, though, database access code can be exercised by both unit and integration tests:

- Unit tests are used to test classes that interact directly with the database (like DAOs). Such tests guarantee that these classes execute the proper SQL statements, assemble the right objects, and so on. Although these tests depend on external entities (such as the database and/or persistence frameworks), they exercise classes that are building blocks in a bigger application (and hence are units).

- Similarly, unit tests can be written to test the upper layers (like façades), without the need to access the database. In these tests, the persistence layer can be emulated by mocks or stubs.

- Even with both layers (persistence and upper) unit tested aside, it’s still necessary to write integration tests that access

the database, because some situations can arise only in end-to-end scenarios (like the dreaded lazy-initialization exception

that frequently haunts JPA applications).[1]

1 If you don’t have a clue as to what we’re talking about, don’t panic! JPA testing and its issues will be explained in detail in the next chapter.

Despite the theoretical part of the issue, there’s still a practical question: can’t the data present in the database get in the way of the tests?

Yes, it can, so before you run the tests, you must assure that the database is in a known state. Fortunately, there are plenty of tools that can handle this task, and in this chapter we analyze one of them, DbUnit.

17.1.2. Unit tests must be easy to write and run

It doesn’t matter how much a company, project manager, or technical leader praises unit tests; if they’re not easy to write and run, developers will resist writing them. Moreover, writing code that accesses the database isn’t the sexiest of tasks. One would have to write SQL statements, mix many levels of try-catch-finally code, convert SQL types to and from Java, and so on.

Therefore, in order for database unit tests to thrive, it’s necessary to alleviate the database burden on developers. Luckily again, there are tools that provide such alleviation, and DbUnit is one of them.

17.1.3. Unit tests must be fast to run

Let’s say you’ve overcome the first two issues and have a nice environment, with hundreds of unit tests exercising the objects that access the database, and where a developer can easily add new ones. All seems nice, but when a developer runs the build (and they should do that many times a day, at least after updating their workspace and before submitting changes to the source control system), it takes 10 minutes for the build to complete, 9 of them spent in the database tests. What should you do then?

This is the hardest issue, because it can’t always be solved. Typically, the delay is caused by the database access per se, because the database is probably a remote server, accessed by dozens of users. A possible solution is to move the database closer to the developer, by either using an embedded database (if the application uses standard SQL that enables a database switch) or locally installing lighter versions of the database.

Definition

Embedded database—An embedded database is a database that’s bundled within an application instead of being managed by external servers (which is the typical scenario). A broad range of embedded databases is available for Java applications, most of them based on open source projects, such as HSQLDB (http://hsqldb.org), H2 (http://h2database.com), Derby (http://db.apache.org/derby), and Java DB (http://developers.sun.com/javadb). Notice that the fundamental characteristic of an embedded database is that it’s managed by the application and not the language it’s written in. For instance, both HSQLDB and Derby support client/server mode (besides the embedded option), although SQLite (which is a C-based product) could also be embedded in a Java application.

In the following sections, we show how DbUnit (and, to a lesser degree, embedded databases) can be used to solve the database unit testing impedance mismatch.

17.2. Introducing DbUnit

DbUnit (http://www.dbunit.org) is a JUnit[2] extension created by Manuel LaFlamme in 2002, when Java Unit testing was still in its infancy and there was no framework focused on database testing. At about the same time, Richard Dallaway wrote an online article titled “Unit testing database code” (http://dallaway.com/acad/dbunit.html), which inspired the creation of DbUnit.

2 Although it can be used without JUnit.

Since then, DbUnit has became the de facto Java framework for database testing, and its development has had its up and downs. After a period of high activity, when most of its codebase was created, it faced a long drought. Fortunately, though, new developers jumped in and, during the time this book was written, several new versions have been cut, providing many improvements and bug fixes.

Although DbUnit comprises hundreds of classes and interfaces, DbUnit usage roughly consists of moving data to and from the database, and that data is represented by datasets (more specifically, classes that implement the IDataSet interface).

In the following subsections, we examine the basic usage of datasets and some other DbUnit artifacts.

17.2.1. The sample application

Throughout this chapter, we use DbUnit to unit test the persistence layer of a Java application. In order to simplify, this layer consists of only the interface defined in listing 17.1.

Listing 17.1. DAO interface used in the examples

public interface UserDao {

long addUser(User user) throws SQLException;

User getUserById(long id) throws SQLException;

}

The DAO implementation (using plain JDBC) isn’t shown here but is available for download at the book’s website. The User object is a simple POJO,[3] described in listing 17.2.

3 Plain old Java object.

Listing 17.2. Domain model used in the examples

public class User {

private long id;

private String username;

private String firstName;

private String lastName;

// getters and setters omitted

}

The User object will be mapped in the database by the users table, which can be created using the SQL statement shown in listing 17.3.

Listing 17.3. SQL script that creates the users table

CREATE TABLE users (

id INTEGER GENERATED BY DEFAULT AS IDENTITY(START WITH 1),

username VARCHAR(10),

first_name VARCHAR(10),

last_name VARCHAR(10) )

Finally, the examples will use HSQLDB as the database, because it’s Java based and doesn’t require any further configuration. HSQLDB is also flexible: it can be run as client/server or embedded, using disk or memory. The simplest—and fastest—mode is as an in-memory embedded database, and that’s the mode used in the examples.

17.2.2. Setting up DbUnit and running the sample application

DbUnit itself comprises just one JAR (dbunit.jar), and the only required external dependency is the logging framework, SLF4J (Simple Logging Façade for Java). SLF4J requires two JARs: slf4j-api.jar (which contains only the framework interfaces) and an implementation, such as slf4j-nop.jar (which doesn’t log anything; we talk more about logging later on). Of course, because DbUnit will connect to a database, it’s also necessary to add the JDBC driver to the classpath; in the sample application, it’s hsqldb.jar.

The sample application is available in two flavors: Maven and Ant. To run the tests on Maven, type 'mvn clean test'. Similarly, to use Ant instead, type 'ant clean test'. The application is also available as two Eclipse projects, one with the required libraries (under the lib directory) and another with the project itself.

17.3. Using datasets to populate the database

Let’s start by writing a unit test for the getUserById() method.

First, we need to analyze what the method does: it fetches data from the relational database, creates a Java object, populates that object with the fetched data, and then returns the object.

Consequently, our test case must prepare the database with the proper data, run the code being tested, and verify that the object returned contains the expected data. The latter two steps can be done with trivial Java code, whereas the former needs interaction with a database—that’s where DbUnit is handy.

Data in DbUnit is represented by a dataset (interface org.dbunit.dataset. IDataSet), and DbUnit provides dozens of different IDataSet implementations, the most common ones being XmlDataSet and FlatXmlDataset. In our example, we need to insert a row in the table users with the values id=1, username=ElDuderino, firstName=Jeffrey, and lastName=Lebowsky. Let’s see how this data could be represented on these two different dataset implementations, first in the XmlDataSet format (listing 17.4).

Listing 17.4. XmlDataSet representation of users table

<?xml version="1.0"?>

<!DOCTYPE dataset SYSTEM "dataset.dtd">

<dataset>

<table name="users">

<column>id</column>

<column>username</column>

<column>first_name</column>

<column>last_name</column>

<row>

<value>1</value>

<value>ElDuderino</value>

<value>Jeffrey</value>

<value>Lebowsky</value>

</row>

</table>

</dataset>

The XmlDataSet format is self-described, but it has two problems. First, it’s verbose. As you can see in the previous example, a simple row in a table required 16 lines of XML code. The advantage of this format is that it follows a well-defined DTD (available inside DbUnit’s JAR), which could avoid problems caused by bad XML syntax. But that brings up the second issue: DbUnit doesn’t validate the DTD (that DOCTYPE line could be removed or even changed to any garbage, and the result would be the same).

Although the lack of XML validation is a DbUnit bug, the verboseness of the format is a design option. A much simpler option is to use FlatXmlDataSet, where each line describes a row in the database. Listing 17.5 shows the same dataset using the flat XML format.

Listing 17.5. FlatXmlDataSet representation of users table (user.xml)

<?xml version="1.0"?>

<dataset>

<users id="1" username="ElDuderino"

first_name="Jeffrey" last_name="Lebowsky" />

</dataset>

The FlatXmlDataSet format is much clearer and easier to maintain,[4] so we use it in our examples. Listing 17.6 shows our first test case.

4 This format has its issues as well, which we will cover later in the chapter.

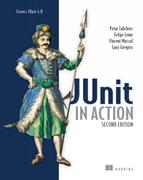

Listing 17.6. Initial test case for UserDaoJdbcImpl (UserDaoJdbcImplTest)

Our test case ![]() doesn’t need to extend any DbUnit or JUnit class. Although it doesn’t sound like a big deal in this example, being forced

to extend a superclass could be a big limitation in real life, especially if you use different testing frameworks such as

TestNG.[5]

doesn’t need to extend any DbUnit or JUnit class. Although it doesn’t sound like a big deal in this example, being forced

to extend a superclass could be a big limitation in real life, especially if you use different testing frameworks such as

TestNG.[5]

5 Such testing framework independence is a DbUnit 2.2+ feature; before that release, all classes had to extend DatabaseTestCase, which would make it hard to use DbUnit with TestNG.

Remember that test methods (such as ![]() and

and ![]() ) are still code, and hence “real code” best practices should be applied to them as well. In particular, because opening a

database connection can be an expensive operation (in terms of time and number of concurrent connections), it’s a good practice to open it at the beginning of the tests and close it at the end. Alternatively,

if a connection pool was used instead, these methods could be defined at @Before/@After, respectively.

) are still code, and hence “real code” best practices should be applied to them as well. In particular, because opening a

database connection can be an expensive operation (in terms of time and number of concurrent connections), it’s a good practice to open it at the beginning of the tests and close it at the end. Alternatively,

if a connection pool was used instead, these methods could be defined at @Before/@After, respectively.

At ![]() , connection is a just a regular JDBC connection (java.sql.Connection), but dbunitConnection is a DbUnit IDatabaseConnection instance. DbUnit uses IDatabaseConnection to encapsulate access to the database, and it provides implementations for the most common databases. The advantage of using

a specialized IDatabaseConnection implementation (HsqldbDatabaseConnection in this case) rather than the generic one (DatabaseConnection) is that it can handle nuances specific to that database, like conversion between nonstandard SQL types and Java classes.

, connection is a just a regular JDBC connection (java.sql.Connection), but dbunitConnection is a DbUnit IDatabaseConnection instance. DbUnit uses IDatabaseConnection to encapsulate access to the database, and it provides implementations for the most common databases. The advantage of using

a specialized IDatabaseConnection implementation (HsqldbDatabaseConnection in this case) rather than the generic one (DatabaseConnection) is that it can handle nuances specific to that database, like conversion between nonstandard SQL types and Java classes.

Notice that in this example, both connection and dbunitConnection were created using hardcoded values; in real projects, a better practice would be to define these settings externally, such

as in a property file. That would allow the same tests to be run in different environments, such as using alternate databases

or getting the connection from a pooled data source. Getting an IDataSet from an XML file is so common that it deserves its proper method ![]() . It’s also a good practice to load these XML files as resources in the classpath, instead of physical files in the operating

system. And if the file isn’t found in the classpath (which is a common scenario when you’re writing the test cases—you might

have forgotten to create the file or misspelled its name), getResourcesAsStream()returns null (instead of throwing a resource-not-found exception), which in turn would cause a NullPointerException in the caller method. Because an NPE is a sure bet to cause a lot of headaches, adding an assertNotNull()

. It’s also a good practice to load these XML files as resources in the classpath, instead of physical files in the operating

system. And if the file isn’t found in the classpath (which is a common scenario when you’re writing the test cases—you might

have forgotten to create the file or misspelled its name), getResourcesAsStream()returns null (instead of throwing a resource-not-found exception), which in turn would cause a NullPointerException in the caller method. Because an NPE is a sure bet to cause a lot of headaches, adding an assertNotNull() ![]() with a meaningful message is a one-liner that can save you time troubleshooting.

with a meaningful message is a one-liner that can save you time troubleshooting.

Finally, ![]() the DbUnit job is effectively performed. We have a dataset (setupDataSet) with the data we want to insert and a connection to the database (dbunitConnection). All that’s left is the class responsible to do the dirty work, and that’s DatabaseOperation or, more precisely, one of its subclasses. In this case, we use CLEAN_INSERT, which first deletes all rows from all tables defined in dataset and then inserts the new ones. See the next section for

more details about DatabaseOperation and its implementations.

the DbUnit job is effectively performed. We have a dataset (setupDataSet) with the data we want to insert and a connection to the database (dbunitConnection). All that’s left is the class responsible to do the dirty work, and that’s DatabaseOperation or, more precisely, one of its subclasses. In this case, we use CLEAN_INSERT, which first deletes all rows from all tables defined in dataset and then inserts the new ones. See the next section for

more details about DatabaseOperation and its implementations.

A final note about transactions: in order to keep this example simple, we aren’t dealing with transactions at all, and every database operation is done in one transaction (using JDBC’s autocommit feature). Although this simplification is fine here, usually the test cases must be aware of the transaction semantics. For instance, a transaction could be started before the test (using a @Before method) and committed afterwards (using @After), the test cases could explicitly set the autocommit property (particularly if the connections were obtained from a pool), and so on. The exact approach depends on many factors, like the type of test (unit or integration) being written and the underlying technologies used in the code tested (like pure JDBC or ORM frameworks).[6]

6Chapter 18 offers more detailed insight on transactions in JPA-based test cases.

The UserDaoJdbcImplTest class in listing 17.6 defines four methods, but only one is effectively a test case—the other three are helpers used in the testing infrastructure. As you add more test cases, the proportion tends to revert, although it’s common to add more helper methods as well. These helpers can typically be reused by other test classes.

Consequently, it’s good practice to create a superclass that defines only these infrastructure methods and then make the real test classes extend this superclass. This best practice applies not only to DbUnit-based tests but also to testing in general.

The class in the listing 17.6 example could be refactored into two classes, an AbstractDbUnitTestCase superclass (with methods setupDatabase(), close-Database(), and getDataSet()) and the UserDaoJdbcImplTest properly speaking (which for now contains only testGetUserById()). The next example will use this technique.

Now let’s look at DatabaseOperation in detail.

17.3.1. DatabaseOperation dissected

DatabaseOperation is the class used to send datasets to the database. Although DbUnit makes good use of interfaces and implementations, DatabaseOperation is one of the few concepts that isn’t defined by an interface. Instead, it’s defined as an abstract class with an abstract method (execute(), which takes as parameters a dataset and a database connection). The reason for such different design is to facilitate its use, because the abstract class also defines constants for its implementations (DbUnit was created on Java 1.3/1.4 when there was no native enum), so the operations can be executed with just one line of code, as we saw in the first example.

The implementations provided by DatabaseOperation as static fields are as follows:

- UPDATE—Update the database with the dataset content. It assumes the rows in the dataset already exist in the database (that is, the database contains rows with the same primary keys as the rows in the dataset); if they don’t exist, DbUnit will throw an exception.

- INSERT—Insert the dataset rows in the database. Similarly to UPDATE, DbUnit will throw an exception if any row already exists. Because rows are inserted in the order in which they appear in the dataset, care must be taken when tables have foreign keys: rows must be defined in the dataset using the right insertion order.

- REFRESH—This is a mix of INSERT and UPDATE: rows that exist in the dataset but not in the database are inserted, but rows that exist in both are updated.

- DELETE—Delete from the database only the rows present in the dataset, in the reverse order in which they appear in the dataset.

- DELETE_ALL—Delete from the database all rows from each table present in the dataset. Although it’s an aggressive approach in many cases (such as when the database is shared by many developers or it contains data that shouldn’t be deleted), it’s the simplest way to guarantee the state of the database (because sometimes the database might contain data that isn’t deleted by DELETE and hence can interfere in the test results).

- TRUNCATE—Same purpose as DELETE_ALL, but faster, because it uses the SQL’s TRUNCATE TABLE. The only drawback is that not all databases support such SQL operation.

- CLEAN_INSERT—Composite operation, first calls DELETE_ALL, then INSERT, using the same dataset.

- TRANSACTION(operation)—Not exactly a field but a method. It creates a DatabaseOperation that will wrap another operation inside a database transaction. It’s particularly useful in cases where tables have circular dependency and rows can’t be inserted outside a transaction with deferred constraints.

- CLOSE_CONNECTION(operation)—Another wrapper, it executes the operation and then automatically closes the connection. This can be useful in teardown methods.

- NONE—An empty operation that does nothing.

That’s it; we wrote our first DbUnit test case and set the foundation for most of the tests to come.

17.4. Asserting database state with datasets

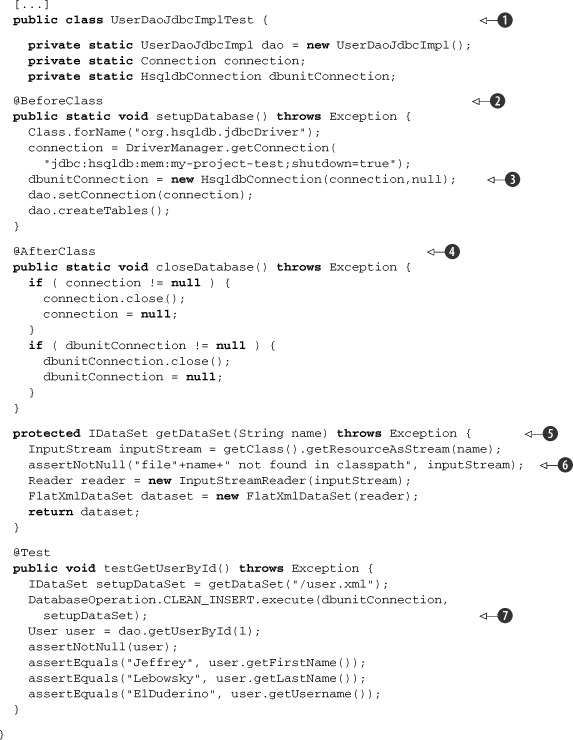

Another common use of datasets is to assert that the database has the right data after an insert or update. Back to our DAO example: we need a test case for the addUser() method, and the workflow for this test is the opposite from getUserById()’s test. Here we first create a User object, ask our DAO to persist it, then use a DbUnit dataset to assert that the data was properly inserted. The code snippet in listing 17.7 is our first attempt at such a test case.

Listing 17.7. Test case for addUser() method

We start by extending the DbUnit class AbstractDbUnitTestCase ![]() . The @Test method testAddUser() first creates and populates a User object

. The @Test method testAddUser() first creates and populates a User object ![]() .

.

Next, we ask DAO to persist the User object and check that it generates a valid ID ![]() (a positive value, in this case) and that the returned ID matches the object. Such checking is important in situations where

the caller needs to use the new ID (for instance, in a web page that generates a link to edit the newly created object). It

may sound trivial and redundant in this case, but you’d be surprised by how often the ID isn’t set correctly in more complex

combinations (like multilayered applications with Spring managing transactions and Hibernate being used as the persistence

layer).

(a positive value, in this case) and that the returned ID matches the object. Such checking is important in situations where

the caller needs to use the new ID (for instance, in a web page that generates a link to edit the newly created object). It

may sound trivial and redundant in this case, but you’d be surprised by how often the ID isn’t set correctly in more complex

combinations (like multilayered applications with Spring managing transactions and Hibernate being used as the persistence

layer).

At ![]() we use the same dataset (user.xml) and same method (getDataSet()) as the previous example—it’s always a good practice to reuse code and testing artifacts. At

we use the same dataset (user.xml) and same method (getDataSet()) as the previous example—it’s always a good practice to reuse code and testing artifacts. At ![]() IDatabaseConnection.createDataSet() returns a dataset containing all tables (with all rows) in the database. Finally, at

IDatabaseConnection.createDataSet() returns a dataset containing all tables (with all rows) in the database. Finally, at ![]() Assertion.assertEquals() compares both datasets, and if a discrepancy is found, it fails with the proper message. Notice that we did not statically

import this method. Because both JUnit’s Assert and DbUnit’s Assertion have assertEquals() methods, if you static import both, chances are a call to assertEquals() will reference the wrong one.

Assertion.assertEquals() compares both datasets, and if a discrepancy is found, it fails with the proper message. Notice that we did not statically

import this method. Because both JUnit’s Assert and DbUnit’s Assertion have assertEquals() methods, if you static import both, chances are a call to assertEquals() will reference the wrong one.

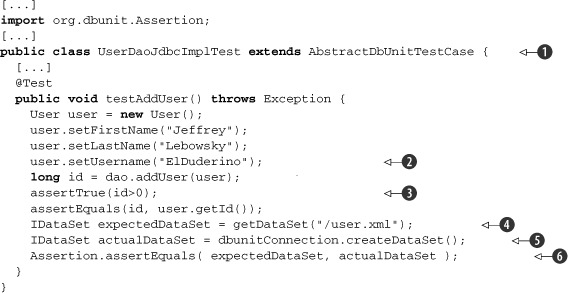

In the previous example, testGetUserById() fetched an object from the database and asserted its attributes, but testAddUser() did the opposite (instantiated a new object, filled its attributes, and then inserted it in the database). As your test cases grow, more and more tests will need to do the same. To avoid the DRY (don’t repeat yourself) syndrome, it’s better to create a helper class containing methods and constants for these tasks. Doing so improves reuse in the Java classes and facilitates maintenance of dataset files. If you use Java 5 and static imports, accessing members in this helper class is simple. Listing 17.8 shows a revised version of testAddUser() using this practice.

Listing 17.8. Revised version of testAddUser(), using a helper class

The newUser() method is statically imported ![]() and called

and called ![]() in the helper class

in the helper class ![]() . The helper class itself

. The helper class itself ![]() provides an assertUser() method

provides an assertUser() method ![]() (used by testGetUserById()); it uses constants

(used by testGetUserById()); it uses constants ![]() to define the User attributes.

to define the User attributes.

17.4.1. Filtering data sets

The method IDatatabaseConnection.createDataSet() returns a dataset representing the whole database. This is fine in our example, where the database has only one table and the database access is fast (because it’s an embedded database). In most other cases, though, it would be overkill—either the test would fail because it would return tables that we’re not interested in or it would be slow to run.

The simplest way to narrow the field is by filtering the tables returned by createDataSet(), by passing an array containing the name of the tables that should be returned. Applying that change to the previous example, we have the following:

IDataSet actualDataSet = dbunitConnection.createDataSet(

new String[] { "users" } );

A similar approach is to use a FilteredDataSet to wrap the dataset containing the full database:

IDataSet actualDataSet = dbunitConnection.createDataSet();

FilteredDataSet filteredDataSet = new FilteredDataSet(

new String[] {"users"}, actualDataSet );

A FilteredDataSet decorates a given dataset using an ITableFilter, which in turn is a DbUnit interface that defines which tables belongs to a dataset and in what order they should be retrieved. In the previous example, the constructor implicitly creates a SequenceTableFilter, which returns the tables in the order defined by the array passed as a parameter.

Finally, a third option is to use a QueryDataSet, where you explicitly indicate which table should be present in the dataset. The next example returns a dataset that has the exact same contents as the previous example:

QueryDataSet actualDataSet = new QueryDataSet(dbunitConnection);

actualDataSet.addTable("users");

Comparing the three options, the overloaded createDataSet() is obviously simpler in this case. But the other options have their usefulness in different scenarios:

- QueryDataSet is more flexible, because you can also provide the query that will be used to populate the dataset (if you don’t provide

one, it assumes SELECT * FROM table_name). Using a query, you can narrow the field even more, by selecting only the rows the test case is interested in, which is

useful when the database contains a lot of data, for example:

QueryDataSet actualDataSet = new QueryDataSet(dbunitConnection);

actualDataSet.addTable("users",

"select * from users where id = " + id); - FilteredDataSet can be used with any ITableFilter, such as a filter that returns tables in the right foreign-key dependency order (as will be shown in section 17.6).

17.4.2. Ignoring columns

If you run the previous test method alone, it will pass. But if you append it to the existing UserDaoJdbcImplTest class and run the whole class, it will fail:

junit.framework.AssertionFailedError: row count (table=users) expected:<1>but was:<2>

at junit.framework.Assert.fail(Assert.java:47)

This is a common problem when using DbUnit—and one of the most annoying. A test case passes when it’s run alone but fails when run as part of a suite. In this particular case, our user.xml dataset has only one row, and this is what we assume the database should contain after we insert the User object. When many tests are run, it fails because the database contains something else. Where does the extra row come from? From the previous test (testGetUserById()) execution, because that method also inserted a row. We could say the culprit is the previous test, which did not clean itself up.[7] It’s the test case’s responsibility to make sure the database is in a known state before the test is run. This is achieved by using the same dataset and a DELETE_ALL operation:

7 The DbUnit documentation states: “Good setup don’t need cleanup” and you “should not be afraid to leave your trace after a test.” This isn’t always true, though, as you’ll see in section 17.8.

IDataSet setupDataSet = getDataSet("/user.xml");

DatabaseOperation.DELETE_ALL.execute(dbunitConnection, setupDataSet);

If we add this code and run the whole test again, the test fails:

junit.framework.AssertionFailedError: value (table=users, row=0, col=id):expected:<1> but was:<2>

at junit.framework.Assert.fail(Assert.java:47)

at org.dbunit.Assertion.assertEquals(Assertion.java:147)

at org.dbunit.Assertion.assertEquals(Assertion.java:80)

Now the number of rows is correct (because we deleted all rows from the users table before running the test), but the ID of the inserted row doesn’t match what we expect. This is another common problem, and it happens frequently when the database generates the ID. Although we cleaned up the rows, we didn’t reset the primary key generation, so the next row inserted has an ID of 2 and not 1 as we expected.

There are many solutions for this issue, ranging from simple (like ignoring the ID column in the comparison) to sophisticated (like taking control of how IDs are generated—we show this approach in the next chapter). For now, let’s just ignore the ID column, using the method Assertion.assertEqualsIgnoreCols() instead of Assertion.assertEquals():

Assertion.assertEqualsIgnoreCols( expectedDataSet, actualDataSet, "users",

new String[] { "id" } );

Listing 17.9. Second approach for testAddUser()

[...]

public class UserDaoJdbcImplTest extends AbstractDbUnitTestCase {

@Test

public void testAddUserIgnoringIds() throws Exception {

IDataSet setupDataSet = getDataSet("/user.xml");

DatabaseOperation.DELETE_ALL.execute(dbunitConnection, setupDataSet);

User user = newUser();

long id = dao.addUser(user);

assertTrue(id>0);

IDataSet expectedDataSet = getDataSet("/user.xml");

IDataSet actualDataSet = dbunitConnection.createDataSet();

Assertion.assertEqualsIgnoreCols( expectedDataSet, actualDataSet,

"users", new String[] { "id" } );

}

}

Although ignoring the column is the simplest approach to the problem (in the sense that it doesn’t require any advanced technique), it introduces a maintenance bug: now it’s necessary to keep both the dataset file (user.xml) and the Java class (which has references to both the users table and the ID column) in sync. In the next section we examine a better (although not yet optimal) approach, where the database information (table and column names) is contained just in the dataset file.

17.5. Transforming data using ReplacementDataSet

DbUnit provides a simple yet powerful IDataSet implementation called ReplacementDataSet. In the following sections, we explore how it can be used to solve some common problems.

17.5.1. Using ReplacementDataSet to handle the different IDs issue

Let’s try a different approach for the “same dataset, different IDs” issue. Instead of ignoring the ID column, couldn’t we dynamically change a dataset value before the test case uses it?

Changing the data inside a dataset would be quite complicated. Fortunately, though, DbUnit provides the ReplacementDataSet class, which decorates an existing dataset to dynamically replace tokens, according to your needs.

Back to our problem: first we need to change the dataset XML file, by replacing the hardcoded IDs by a token (we used [ID] in this case, but it could anything, as long as that string doesn’t occur somewhere else in the dataset). Listing 17.10 shows the new XML.

Listing 17.10. user-token.xml, a dataset that uses a token for IDs

<?xml version="1.0"?>

<dataset>

<users id="[ID]" username="ElDuderino"

first_name="Jeffrey" last_name="Lebowsky" />

</dataset>

Next, we change the test case class, with the changed (and new) methods shown in listing 17.11.

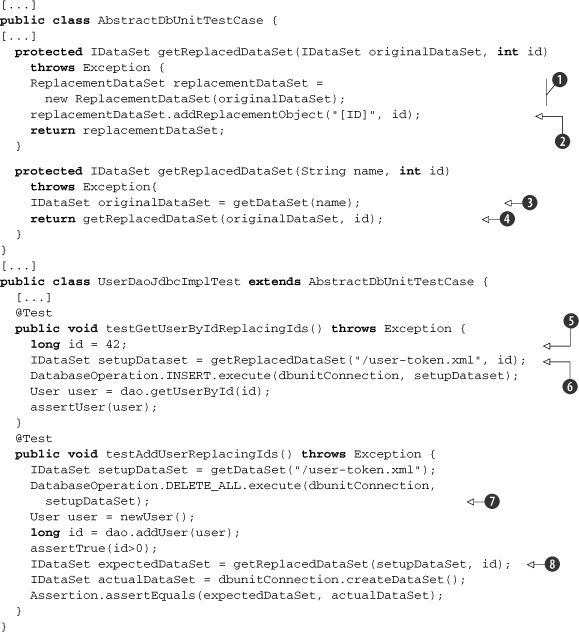

Listing 17.11. Changes on UserDaoJdbcImplTest to handle dynamic IDs

In the first getReplacedDataSet() utility method, the ReplacementDataSet constructor ![]() takes as a parameter the dataset it’s decorating. Notice that the original dataset remains intact, and the method returns

a new dataset. Next, we define what must be replaced

takes as a parameter the dataset it’s decorating. Notice that the original dataset remains intact, and the method returns

a new dataset. Next, we define what must be replaced ![]() , using addReplacementObject() (the API also provides an addReplacementSubstring() method, but addReplacementObject() is the most common option). The second getReplacedDataSet() utility method gets a dataset

, using addReplacementObject() (the API also provides an addReplacementSubstring() method, but addReplacementObject() is the most common option). The second getReplacedDataSet() utility method gets a dataset ![]() and calls the first getReplacedDataSet() method we defined

and calls the first getReplacedDataSet() method we defined ![]() .

.

In the first test, the value of the ID ![]() doesn’t matter, as long as the same value is used in both places (getReplacedDataSet() and getUserById()). Next, we call

doesn’t matter, as long as the same value is used in both places (getReplacedDataSet() and getUserById()). Next, we call ![]() the new method, which reads the original XML file and returns a dataset with the [ID] dynamically replaced.

the new method, which reads the original XML file and returns a dataset with the [ID] dynamically replaced.

In the second test, we use DELETE_ALL ![]() to clean up the database; note that the IDs are irrelevant. But if we used another DatabaseOperation (like DELETE), we’d need to use a decorated dataset here as well. For the next part of the test

to clean up the database; note that the IDs are irrelevant. But if we used another DatabaseOperation (like DELETE), we’d need to use a decorated dataset here as well. For the next part of the test ![]() , we need to use a decorated dataset in the assertion; we use the ID returned by the DAO itself. If the test still fails because

of a wrong ID, then something is wrong with the DAO class.

, we need to use a decorated dataset in the assertion; we use the ID returned by the DAO itself. If the test still fails because

of a wrong ID, then something is wrong with the DAO class.

Next, let’s look at how DbUnit handles NULL values.

This example uses hard coded 1s in method calls:

IDataSet setupDataset =

getReplacedDataSet("/user-token.xml", 1);

DatabaseOperation.INSERT.execute(dbunitConnection,

setupDataset);

User user = dao.getUserById(1);

If you didn’t write that code (or even if you wrote it a long time ago), the following questions might pop up in your mind: What does the 1 in the first line stands for? Is that method replacing just one line? Does it sound like that 1 is directly related to the 1 in the third line? Now take a look back at the testGetUserById() method from the previous listing. Would you have the same doubts? This example illustrates how a subtle change (which costs just a few seconds of a developer’s time) makes code much more understandable (and consequently easier to maintain). Create variables or constants whenever appropriate, even if the variable will be used only once.

17.5.2. Handling NULL values

Another situation where a ReplacementDataSet is useful is to represent NULL values (SQL’s NULL, not Java’s null) in a dataset. The way DbUnit handles NULL in FlatXmlDataSet files is tricky and deserves clarification:

- If a column exists in the database but is missing in an XML line, then the value of that column (for that row in the dataset) is assumed to be NULL.

- But that applies only if the column was present in the first line of XML. DbUnit uses the first line to define which columns a table is made of.

- This is true unless the database columns are defined in a DTD!

It’s frustrating to spend hours trying to figure out why your test case is failing, just to realize you were caught by a DbUnit idiosyncrasy.[8] Let’s try to make it clearer with a naive example, where we have two XML files with the exact same lines but in different order (as shown by listings 17.12 and 17.13).

8 This situation has improved in more recent versions of DbUnit. Although the idiosyncrasy still exists, at least now DbUnit is aware of the problems it can cause and logs a warning message whenever it finds a line in the XML file with different columns than the first one.

Listing 17.12. user-ok.xml, where the first line has all columns

<?xml version="1.0"?>

<dataset>

<users id="1" username="ElDuderino"

first_name="Jeffrey" last_name="Lebowsky" />

<users id="2" username="TheStranger"/>

</dataset>

Listing 17.13. user-reverted.xml, where the first line is incomplete

<?xml version="1.0"?>

<dataset>

<users id="2" username="TheStranger"/>

<users id="1" username="ElDuderino"

first_name="Jeffrey" last_name="Lebowsky" />

</dataset>

Now let’s write a test case (listing 17.14) that does the following:

- It uses the first dataset (user-ok.xml) to populate the database. Because the second line doesn’t have the first_name and last_name attributes, DbUnit inserts NULL in the equivalent database columns.

- Then it compares the database contents with the contents of both datasets (user-ok.xml and user-reverted.xml).

Because both datasets contain the same lines (but in different order), both tests should pass, but the user-reverted.xml assertion fails, complaining that the dataset expected two columns but the database contained four:

junit.framework.ComparisonFailure: column count(table=users, expectedColCount=2, actualColCount=4) expected:

<[[id, username]]> but was:<[[FIRST_NAME, ID, LAST_NAME, USERNAME]]>

at org.dbunit.Assertion.assertEquals(Assertion.java:244)

at org.dbunit.Assertion.assertEquals(Assertion.java:204)

at org.dbunit.Assertion.assertEquals(Assertion.java:186)

The reason for such failure is item 2: DbUnit uses the content of the first line to define how many columns it is expecting thereafter.

Listing 17.14. Test case that demonstrates the missing column issue

[...]

public class NULLTest extends AbstractDbUnitTestCase {

@Test

public void testNULL() throws Exception {

IDataSet okDataset = getDataSet("/user-ok.xml");

DatabaseOperation.CLEAN_INSERT.execute(dbunitConnection, okDataset);

IDataSet actualDataSet = dbunitConnection.createDataSet();

assertEquals(okDataset, actualDataSet);

IDataSet revertedDataSet = getDataSet("/user-reverted.xml");

Assertion.assertEquals(revertedDataSet, actualDataSet);

}

}

There are many ways to resolve this issue. The two most common ones are

- Using a ReplacementDataSet

- Using a DTD

Let’s look at both in detail.

Using a ReplacementDataSet

We already used a ReplacementDataSet to replace the [ID] token; we could reuse the same dataset to also replace NULL values. All we need is to define a new token (say, [NULL]) and add another replacement role:

replacementDataSet.addReplacementObject("[NULL]", null);

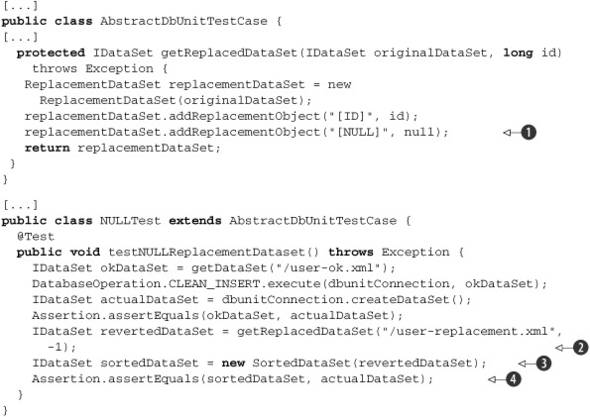

Listing 17.15 shows all relevant changes, with comments.

Listing 17.15. ReplacementDataSet approach to the missing column issue

This is the same method we used before; we just added a new replacement role here ![]() .

.

In ![]() , in order to simplify, we’re using the method that expects an ID and passing a bogus value (-1), because it won’t be replaced anyway (as the dataset doesn’t have any [ID] token). Ideally, though, getReplacedDataSet() should be overloaded to handle the situation where the ID isn’t necessary. Better yet, the tokens to be replaced shouldn’t

be passed as parameters (we look at how to do that later, in section 17.7.3).

, in order to simplify, we’re using the method that expects an ID and passing a bogus value (-1), because it won’t be replaced anyway (as the dataset doesn’t have any [ID] token). Ideally, though, getReplacedDataSet() should be overloaded to handle the situation where the ID isn’t necessary. Better yet, the tokens to be replaced shouldn’t

be passed as parameters (we look at how to do that later, in section 17.7.3).

If in ![]() we compared actualDataSet against revertedDataSet (which is the original XML file with the proper [NULL] tokens replaced), the assertion would still fail. Although this time the number of columns is correct, the order would be

reverted; the database query would return rows 1 and 2, whereas the dataset defines the order as 2 and 1. In order to solve

this issue without changing the order in the dataset (whose wrong order is the whole purpose of the example), we wrapped the dataset in a SortedDataSet

we compared actualDataSet against revertedDataSet (which is the original XML file with the proper [NULL] tokens replaced), the assertion would still fail. Although this time the number of columns is correct, the order would be

reverted; the database query would return rows 1 and 2, whereas the dataset defines the order as 2 and 1. In order to solve

this issue without changing the order in the dataset (whose wrong order is the whole purpose of the example), we wrapped the dataset in a SortedDataSet ![]() , which returns a new dataset with the tables sorted by the order in which its columns were defined in the database. In this

example, it would sort first by ID, which is the first column in the CREATE TABLE statement. If any two or more lines had the same ID (which isn’t the case here, because ID is the primary key), then it would

sort them by username (the second column), first_name (third column), and so on.

, which returns a new dataset with the tables sorted by the order in which its columns were defined in the database. In this

example, it would sort first by ID, which is the first column in the CREATE TABLE statement. If any two or more lines had the same ID (which isn’t the case here, because ID is the primary key), then it would

sort them by username (the second column), first_name (third column), and so on.

Using a DTD

You can explicitly define the database structure (instead of letting DbUnit implicitly “guess” it when it reads the XML first line) in a DTD and add that DTD to the dataset header, as shown in listings 17.16 and 17.17.

Listing 17.16. New version of user-reverted.xml, with DTD declaration

<?xml version="1.0"?>

<!DOCTYPE dataset SYSTEM "target/test-classes/user.dtd">

<dataset>

<users id="2" username="TheStranger"/>

<users id="1" username="ElDuderino"

first_name="Jeffrey" last_name="Lebowsky" />

</dataset>

Listing 17.17. user.dtd

<!ELEMENT dataset (users*)>

<!ATTLIST users

id CDATA #REQUIRED

username CDATA #REQUIRED

first_name CDATA #REQUIRED

last_name CDATA #REQUIRED

>

Notice the odd location (target/test-classes/) of the user.dtd file; that’s the relative directory where our test artifacts are compiled. Unfortunately, DbUnit supports only physical locations, so the DTD path must be relative to the project’s root directory or an absolute path in the filesystem. Ideally, DbUnit should support looking up the DTDs in the classpath.

Once user-reverted.xml is changed, the method testNULL() can be run again (without any change) and will succeed.

Both approaches have their advantages and disadvantages. Using a DTD adds more validation to the datasets (which can prevent other errors), at the cost of a more complicated initial setup (creating the DTD, making sure it’s in the right place, and so on). On the other hand, using [NULL] makes the datasets clearer, because its presence explicitly indicates that a value is NULL. It also has a setup cost, but if you’re already using a ReplacementDataSet, that cost is minimal (just one more line of code). Hence, the decision depends more on the project context and personal preferences than on the technical merits of the approach per se.

Logging

Earlier on we said that DbUnit would warn us about the missing column in the XML issue, but if you run the testNULL() method, it fails without any warning. If that happens, it means DbUnit logging is somehow disabled.

There’s no API or DbUnit configuration file to explicitly enable logging. Instead, you must configure SLF4J in your project. It isn’t the intent of this book to explain how SLF4J works or why the DbUnit chose this tool (until release 2.2, DbUnit used no logging framework at all). Briefly, you need to add to the project’s classpath a JAR containing a real SL4J implementation. In our cases we didn’t see any log because the project’s Ant script is explicitly using sl4j-nop.jar, which doesn’t log anything (this is also the default implementation included in your Maven project if you just add DbUnit as a project dependency).

If your project already uses a logging framework (like log4j or Java’s java.util. logging), chances are there’s an SLF4J provider for that framework, so you can just include its JAR (such as sl4j-log4j12.jar) in the classpath. If you don’t use any logging framework, the easiest solution (the one that requires no extra configuration) is to add sl4j-simple.jar. This provider sends info messages to System.out, sends warnings and errors to System.err, and ignores all other logging levels (like debug and trace).

Adding sl4j-simple.jar to the classpath and running the test case again, we get the aforementioned warning:

474 [main] WARN org.dbunit.dataset.xml.FlatXmlProducer - Extra columns online 2. Those columns will be ignored.

474 [main] WARN org.dbunit.dataset.xml.FlatXmlProducer - Please add theextra columns to line 1, or use a DTD to make sure the value of those

columns are populated or specify 'columnSensing=true' for your

FlatXmlProducer.

474 [main] WARN org.dbunit.dataset.xml.FlatXmlProducer - See FAQ for moredetails.

Whenever you’re facing problems that sound like a DbUnit bug or usage issue, try enabling the lower logging levels[9] like debug or trace. DbUnit will output a lot of debugging information, which will hopefully help you resolve the issue.

9 Notice that you’ll need a better SL4J implementation than sl4j-simple in this case.

17.6. Creating datasets from existing database data

So far in the examples, we created dataset XML files from scratch, in a bottom-up approach. This is the ideal situation when you’re doing pure TDD, but often you need to create these files from the data already in the database.

For instance, you might be working on a big project, where the database development is done by a separate team of DBAs and a QA team maintains a database instance full of testing data. Typically in these situations, your Java code (and test cases) will have to deal with complex scenarios, like tables with dozens of columns and many foreign key relationships. It would be unpractical and error prone to create the datasets from scratch, so you can leverage the existing data to create the initial files and then prune the data your test cases don’t need.

Even if your project is simpler and you can create the XML files from scratch in your typical development cycle, you may face bugs that are hard to reproduce in a test case. For instance, the user accesses a web application, executes a couple of inserts and updates, and then a page displays a table with incorrect data. In this case, instead of trying to reproduce all steps through code in your test case, you could just manually reproduce the steps, then export the relevant database contents to a dataset, and reproduce only the buggy method call in the test case.

In its simplest form, exporting a dataset is a straightforward task: all you need to do is create a dataset object containing the data you want to export (for instance, using DatabaseConnection.createDataset() to export the whole database or a QueryDataSet to narrow the data) and then call a static method from the dataset format class (like FlatXmlDataSet):

IDataSet fullDataSet = dbunitConnection.createDataSet();

FileOutputStream xmlStream = new FileOutputStream("full-database.xml");

FlatXmlDataSet.write(fullDataSet, xmlStream);

Similarly, you could also generate the dataset’s DTD:

FileOutputStream dtdStream = new FileOutputStream("full-database.dtd");

FlatDtdDataSet.write(fullDataSet, dtdStream);

This simple approach works fine most of the time, but it has a drawback: the tables in the dataset are created in no particular order. Therefore, if one table has a foreign key constraint with another table, and they’re generated in the wrong order, attempts to insert the dataset into the database will mostly likely fail (because of constraint violations).

Fortunately, the solution for this problem is also simple; all it takes is to wrap the dataset in a FilteredDataSet that uses a DatabaseSequenceFilter, which in turn will return the tables in the right order:

IDataSet fullDataSet = dbunitConnection.createDataSet();

ITableFilter filter = new DatabaseSequenceFilter(dbunitConnection);

FilteredDataSet filteredDatSet = new FilteredDataSet(filter, fullDataSet);

FileOutputStream xmlStream = new FileOutputStream("full-database.xml")

FlatXmlDataSet.write(fullDataSet, xmlStream);

17.7. Advanced techniques

In this section, we analyze techniques that make DbUnit usage easier to understand and maintain. These techniques don’t employ any particular DbUnit feature, just advanced Java and JUnit APIs.

17.7.1. DbUnit and the Template Design Pattern

If you look at the previous examples from a higher level, you might realize they all follow the same workflow:

- Prepare the database, using a dataset XML file.

- Develop some Java code for the test.

- (Optionally) Compare the state of the database with another dataset file.

Going further, only step 2 is specific for each test; steps 1 and 3 are the same (except for the XML file locations) for all tests. In the examples so far, we achieved a level of reuse by delegating part of step 1 to helper methods (like getDataSet() and getReplacedDataSet()), but we can improve reuse even more if we use the Template Design Pattern.

The Template (or Template Method) is a behavioral design pattern described in the classic GoF[10] book. In this pattern, a superclass defines the overall skeleton of an algorithm (that is, the template) but leaves some details to be filled in by subclasses.

10 See Design Patterns: Elements of Reusable Object-Oriented Software, by Eric Gamma et al (the Gang of Four).

Back to our example: the template is the workflow we just described, where a superclass defines the skeleton and takes care of steps 1 and 3, and the subclasses are responsible for step 2.

The most common—and simpler—way to implement the template pattern in Java is through an abstract superclass that implements the template and defines abstract methods for the steps the subclasses must implement. This isn’t a good approach in our case, because it would allow each subclass to have only one test method, which in turn would require dozens or even hundreds of test classes in a typical project.

A second approach is to create an interface that defines the steps the template method isn’t responsible for and receive an implementation (which is typically an anonymous class) of that interface as parameter. This is the approach the Spring Framework uses in its templates (like JdbcTemplate and HibernateTemplate), and it’s the approach used in listing 17.18.

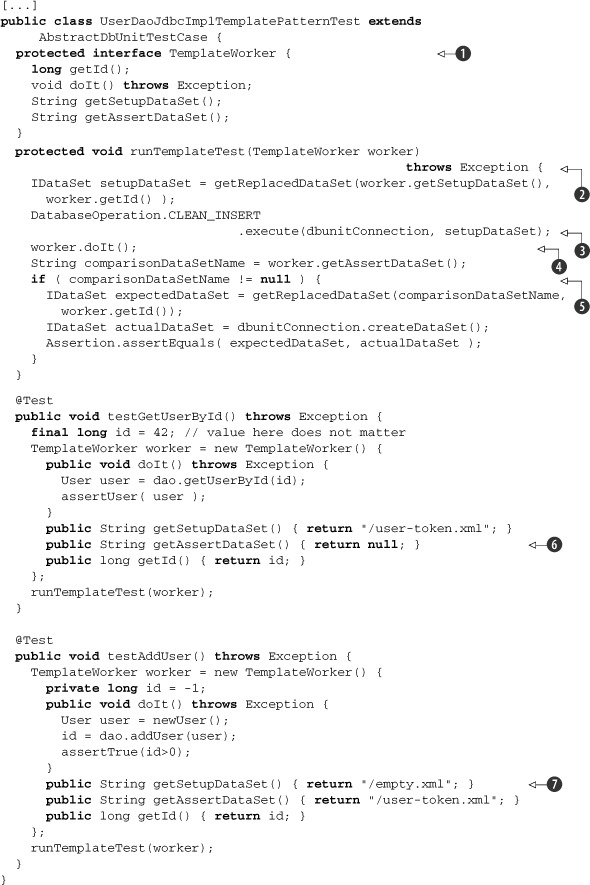

Listing 17.18. UserDaoJdbcImplTest using the Template Design Pattern

We start by defining the interface TemplateWorker ![]() , which will be implemented as an inner class by the test cases that use the template method. In the template method runTemplateTest

, which will be implemented as an inner class by the test cases that use the template method. In the template method runTemplateTest ![]() , notice that

, notice that ![]() ,

, ![]() , and

, and ![]() match the three workflow steps described previously.

match the three workflow steps described previously.

In the first test method, testGetUserById(), it isn’t necessary to check the database state after this test case is run, so null is returned ![]() .

.

In the second test method testAddUser(), we use CLEAN_INSERT to prepare the database; in this test case we opted for a total cleanup with "/empty.xml" ![]() , a dataset that contains all tables used by the test cases (its content is shown in listing 17.19).

, a dataset that contains all tables used by the test cases (its content is shown in listing 17.19).

Listing 17.19. empty.xml, dataset used to clean up the database

<?xml version="1.0"?>

<dataset>

<users/>

</dataset>

The problem with this approach is that it’s too verbose and unnatural, because we have to create an inner class on each test method and do the work inside that class (instead of inside the method). In the next section we show a much cleaner approach.

17.7.2. Improving reuse through custom annotations

Since their introduction to the Java language, annotations have grown in popularity and are used by many development tools, such as JUnit itself (we’ve been using JUnit annotations, such as @Test, throughout this book). What most developers don’t realize, though, is that they don’t need to limit themselves to using third-party annotations; they can create their own project-specific annotations. Although Joshua Bloch preaches the opposite in Effective Java Second Edition,[11] we believe that custom annotations can boost a project’s productivity, particularly in the test-cases arena.

11 Item 35, page 175: “Most programmers will have no need to define annotation types.”

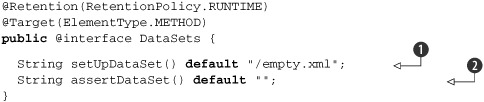

That being said, let’s use custom annotations as a third approach to the template pattern implementation. The idea is to clear the noise out of the test method and let it focus on step 2. We use annotations to pass the information necessary to complete steps 1 and 3. Listing 17.20 shows the custom annotation, and listing 17.21 shows the new test methods.

Listing 17.20. Custom annotation @DataSets

This listing defines an annotation called DataSets. The annotation attribute setUpDataSet ![]() defines the dataset used to prepare the database. If not specified, the default value is "/empty.xml", which will clean up the entire database.

defines the dataset used to prepare the database. If not specified, the default value is "/empty.xml", which will clean up the entire database.

Similarly, assertDataSet() defines the dataset that will be used to check the database state after the test is executed. Because not all test cases

must check that (typically, test cases for methods that load data don’t), the default is "" ![]() , which in our case means no dataset. (Notice that the meaning of an annotation value is relevant to the classes that will use the annotation. Because it isn’t possible to use null, we use the empty string to indicate no dataset.)

, which in our case means no dataset. (Notice that the meaning of an annotation value is relevant to the classes that will use the annotation. Because it isn’t possible to use null, we use the empty string to indicate no dataset.)

Listing 17.21. UserDaoJdbcImplTest using custom annotations

[...]

public class UserDaoJdbcImplAnnotationTest extends

AbstractDbUnitTemplateTestCase {

@Test

@DataSets(setUpDataSet="/user-token.xml")

public void testGetUserById() throws Exception {

User user = dao.getUserById(id);

assertUser(user);

}

@Test

@DataSets(assertDataSet="/user-token.xml")

public void testAddUser() throws Exception {

User user = newUser();

id = dao.addUser(user);

assertTrue(id>0);

}

}

Compare this new test class with the previous example (listing 17.19). You barely notice the template pattern being used.

The magic is done by the AbstractDbUnitTemplateTestCase, which extends AbstractDbUnitTestCase and uses a custom TestRunner; this TestRunner intercepts the test methods[12] and plays the template role. Listing 17.22 shows this new superclass.

12 This technique is explained in more detail in appendix B.

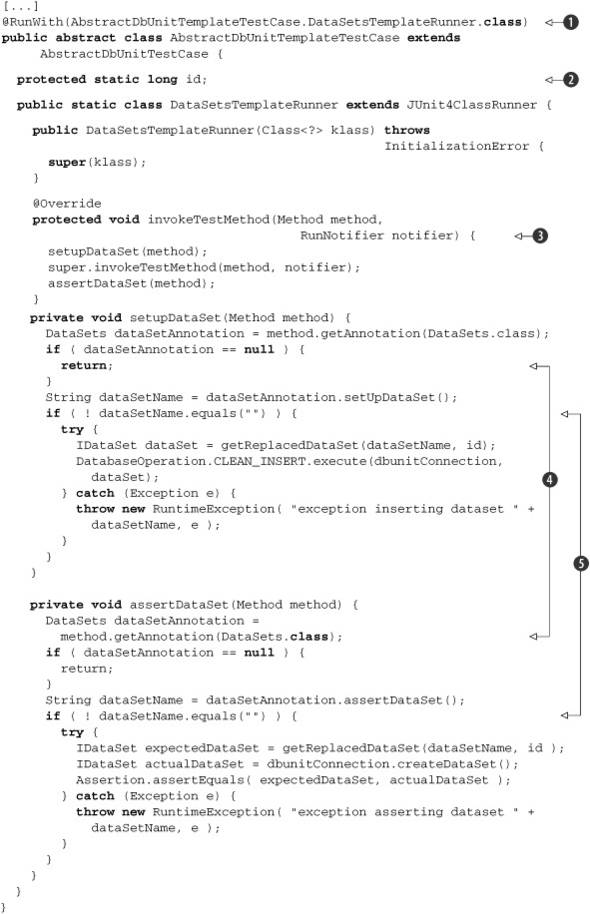

Listing 17.22. New superclass, AbstractDbUnitTemplateTestCase

The dirty work is done by DataSetsTemplateRunner ![]() , a static inner class. AbstractDbUnitTemplateTestCase itself does almost nothing other than using @RunWith to drive the test.

, a static inner class. AbstractDbUnitTemplateTestCase itself does almost nothing other than using @RunWith to drive the test.

The variable id ![]() can’t be passed around in annotations, because it can have dynamic values (like in testAddUser()), and annotations can receive only literals (because they’re defined at compile time), so it must be shared among test cases.

Such an approach might annoy purists, but keeping state in tests is not only acceptable in some cases but often is the best

approach for a given problem. The state (id) in this case is passed around only to solve an issue caused by the way the tests are executed.

can’t be passed around in annotations, because it can have dynamic values (like in testAddUser()), and annotations can receive only literals (because they’re defined at compile time), so it must be shared among test cases.

Such an approach might annoy purists, but keeping state in tests is not only acceptable in some cases but often is the best

approach for a given problem. The state (id) in this case is passed around only to solve an issue caused by the way the tests are executed.

The template method invokeTestMethod() ![]() defines the three steps of the workflow described earlier. First, the annotation is read

defines the three steps of the workflow described earlier. First, the annotation is read ![]() and the dataset is used only if the annotation value isn’t an empty string

and the dataset is used only if the annotation value isn’t an empty string ![]() .

.

17.7.3. Using Expression Language in datasets

Our getReplacedDataSet() method has two issues: it requires passing the tokens to be replaced (like id) as parameters and then explicitly calls addReplacementObject() for each token.

If later on we create a test case where it’s necessary to replace two ids, and we know they will be generated in sequence, the method is

IDataSet dataSet = getReplacedDataSet(dataSetName, id, id+1);

The method changes to

public static IDataSet getReplacedDataSet(IDataSet originalDataSet,

long id, long id2) {

[...]

replacementDataSet.addReplacementObject("[ID2]", id2);

[...]

}

And the dataset XML would have both [ID] and [ID2]:

<dataset>

<users id="[ID]" ... />

<users id="[ID2]" ... />

</dataset>

That looks like an ugly hack, not to mention the changes to DataSetsTemplateRunner. A much cleaner approach would be to figure out the tokens dynamically, where the following apply:

- Values to be replaced aren’t passed by parameter but added to a context.

- Tokens are evaluated so that values in the context are replaced dynamically. It’s better yet if the syntax allows basic operations, like [ID+1].

Fortunately, there’s a standard Java technology that fits perfectly in this description, the Expression Language (EL).

EL has been around in Java for many years and has made steady progress toward being an integral part of the platform: first as part of JSP tag attributes on JSTL 1.0 and JSF 1.0, then available anywhere inside a JSP 2.0 page, and finally as a standalone Java API (javax.el). Besides the standard Java EL, many open source projects offer alternative EL libraries, like OGNL (http://ognl.org) and Marmalade (http://marmalade.codehaus.org).

Using EL, the same dataset would be expressed as

<dataset>

<users id="${id}" ... />

<users id="${id+1}" ... />

</dataset>

And the getReplacedDataSet () would not require id parameters anymore; instead, the id would be bound to the EL context:

getContext().bind( "id", id );

IDataSet dataSet = getReplacedDataSet(dataSetName);

Listing 17.23 shows the changes necessary to support EL. Notice that, despite EL being a standard API, it’s still necessary to create an ELContext implementation (ELContextImpl, in our example), and that isn’t a trivial task (because of lack of documentation). It’s out of the scope of this book to explain how that class was implemented (although the code is available for download), but a quick explanation can be found at the author’s blog (http://weblogs.java.net/blog/felipeal/).

Listing 17.23. New AbstractDbUnitELTemplateTestCase that supports EL

We start by creating a new EL context object ![]() before each test and making it available through the method getContext()

before each test and making it available through the method getContext()![]() , so the test cases could bind more objects to the context as needed. Using the EL context, the id is bound

, so the test cases could bind more objects to the context as needed. Using the EL context, the id is bound ![]() before the setup dataset is read and bound again

before the setup dataset is read and bound again ![]() before the assert dataset is read. This is necessary because the test case might have changed the id (like on testAddUser()), and the id is represented by a primitive type (if it was a mutable object, this second bind would not be necessary).

before the assert dataset is read. This is necessary because the test case might have changed the id (like on testAddUser()), and the id is represented by a primitive type (if it was a mutable object, this second bind would not be necessary).

In the method getReplacedDataSet() ![]() , the only relevant change (aside from the absence of the id parameter) is that it now uses a custom dataset (ELAwareFlatXmlDataSet).

, the only relevant change (aside from the absence of the id parameter) is that it now uses a custom dataset (ELAwareFlatXmlDataSet).

In the class ELAwareFlatXmlDataSet ![]() , we override the method row(), such that it passes each dataset value to the EL engine for evaluation. The code at

, we override the method row(), such that it passes each dataset value to the EL engine for evaluation. The code at ![]() shows a subtle trick: instead of passing the EL context as a parameter to ELAwareFlatXmlDataSet constructor, it’s accessed with a call to getContext(). This is necessary because row() is used during XML parsing, and FlatXmlDataSet parses the XML in the constructor. This is a bad practice on DbUnit’s part—a constructor shouldn’t call methods that can

be overridden by subclasses.

shows a subtle trick: instead of passing the EL context as a parameter to ELAwareFlatXmlDataSet constructor, it’s accessed with a call to getContext(). This is necessary because row() is used during XML parsing, and FlatXmlDataSet parses the XML in the constructor. This is a bad practice on DbUnit’s part—a constructor shouldn’t call methods that can

be overridden by subclasses.

We note the optimization at ![]() : if the value isn’t enclosed in ${}, there’s no need to evaluate it.

: if the value isn’t enclosed in ${}, there’s no need to evaluate it.

Finally, we get to where the EL engine does its job of evaluating the expression ![]() , according to the values bound in the context.

, according to the values bound in the context.

Listing 17.24 shows the new test case. Notice that the only differences from the previous version (listing 17.20) are the superclass and the dataset being used (which is shown in listing 17.25).

Listing 17.24. UserDaoJdbcImplELTest using custom annotations

[...]

public class UserDaoJdbcImplAnnotationTest extends

AbstractDbUnitELTemplateTestCase {

@Test

@DataSets(setUpDataSet="/user-EL.xml")

public void testGetUserById() throws Exception {

User user = dao.getUserById(id);

assertUser(user);

}

@Test

@DataSets(assertDataSet="/user-EL.xml")

public void testAddUser() throws Exception {

User user = newUser();

id = dao.addUser(user);

assertTrue(id>0);

}

}

Listing 17.25. user-EL.xml, dataset that uses EL syntax for tokens

<?xml version="1.0"?>

<dataset>

<users id="${id}" username="ElDuderino"

first_name="Jeffrey" last_name="Lebowsky" />

</dataset>

Now that we’ve covered some of the advanced techniques you can use with DbUnit, we look next at best practices that are specific to database access testing.

17.8. Database access testing best practices

Throughout this chapter, we described in detail best practices that apply to our examples. In this final section, we present additional best practices.

17.8.1. Use one database per developer

When you run a database test case, the test can leave the database in an unknown state. Furthermore, the actions of other users can affect the test results. One solution to this problem is to have each developer and build machine use their own database.

If you’re fortunate enough to be developing an application that can be run in different database products (for instance, if it uses only standard SQL statements or if the SQL is managed by an ORM tool), then the best approach is to use an embedded database. Not only would each developer would have their own instance, but the database access would be fast.

If the embedded database approach isn’t possible, then you should try to install a matching database in each developer’s machine (many database vendors, such as Oracle, provide a light version of their product, a good option for this approach).

If neither the embedded nor the light database is possible, then try to allocate one database instance for each developer in the database server. In the worst case, if not even that is possible (too many instances could be costly in resources or in license fees), allocate a few instances to be used for test cases and a few others for regular development.

17.8.2. Make sure the target database is tested

If you can implement the embedded database approach, but the final application will be deployed in another database product, make sure the application is tested against the target database. A reasonable approach is to let the developers use the embedded database but have a daily or continuous build use the target database.

Don’t make the mistake of assuming databases can be substituted at will; there are always incompatibilities, even if you use an ORM tool. The sooner you catch these issues, the better.

17.8.3. Create complementary tests for loading and storing data

As the old sayings goes, “everything that goes up must come down.” If you write a test case that verifies an object is correctly stored in the database, chances are you should write the test that asserts it’s loaded correctly. And if you keep that in mind when you write one of them, it makes it easy to write the other one; you could reuse the same dataset or even write special infrastructure to handle both.

17.8.4. When writing load test cases, cover all the basic scenarios

All versions of testGetUserById() used in this chapter covered just one scenario: the database contained a row with the tested ID and only that row. That was enough for the purpose of describing the techniques, but in a real project you should test other scenarios, such as testing an ID that doesn’t exist in the database, testing an empty database, testing when more than one row is available, and testing joins when multiple rows are available.

The last scenario deserves special attention, because it’s a common issue when you use an ORM tool and you’re testing a method that uses a complex query where one or more tables are selected using a join. Let’s say a User object has a List<Telephone> relationship, the Telephone class is mapped to a telephones table with a foreign key to the users table, you’re using JPA in the persistence layer, and the getUserById() must do a join fetch to get all telephones in just one query (if you aren’t familiar with these concepts, don’t worry; we explain them better in chapter 18). You write just one test case, where the dataset contains only one row in both users and telephone rows, and you implement the JPA query as something like "from User user left join fetch user.telephones where user.id = ?". Your test case passes, so you commit your code. Then once the application is in production, and a user happens to have more than one telephone, your code returns two users for the query. After half a day of debugging, you figure out the fix would be to change the query to "select distinct(user) from User user left join fetch user.telephones where user.id = ?". Had your initial test case covered more than the canonical scenario, you wouldn’t have had this bug. This particular issue is common.

17.8.5. Plan your dataset usage

As your application grows and you write more database test cases, your datasets become hard to manage. If you have 20 dataset XML files containing a particular table, and that table changes, then you have to change 20 XML files. This is probably the biggest DbUnit drawback, and unfortunately it’s a problem without a clear solution.

The best “practice” here is to be aware of this problem and plan in advance. With this in mind, the following techniques can mitigate the problem:

- Use the same dataset for loading/storing an object.

- Keep datasets small, restricting the tables and columns compared.

- If you always use the same values for the same objects (as described in “Best practice: use a helper class to create and assert object instances”), at least you can apply the changes with just one search-and-replace command. Or, in a more sophisticated approach, you could keep smaller XML files for groups of objects and then use XML include or CompositeDataSet to join them. Keep in mind, though, that any of these approaches brings more complexity to the test cases, and you might end up with something that’s harder to maintain than a good, old search and replace.

17.8.6. Test cleanup

In all examples so far, the test cases set up the database but didn’t bother to clean it up after they did their job. This is typically a good practice and is indeed one of the best practices endorsed by DbUnit.

Notice our emphasis on the typically, though. The problem of not cleaning up the database after the test is done is that a test case could make another test’s life miserable if it inserts data that’s hard to be cleaned, like rows with foreign keys. Back to the users/telephones tables example, let’s say a test case adds one row to each table, with the telephones row having a foreign key to users, and this test case doesn’t clean up these rows after it’s run. Then a second test case is going to use the same users row, but it doesn’t care about the telephones table. If this test tries to remove the users row at setup, it will fail because of a foreign key violation.

So, long story short, although typically a good setup doesn’t need cleanup, it doesn’t hurt to clean up, especially when the test case inserts rows with foreign key constraints.

17.9. Summary

The persistence layer is undoubtedly one of the most important parts of any enterprise application, although testing it can be a challenge: test cases must be agile, but database characteristics make them bureaucratic. Although JUnit itself doesn’t have an answer to this problem, many tools do. In this chapter, we used DbUnit to validate an application’s database access.

DbUnit is a stable and mature project, comprising a few dozen interfaces, implementations, and helpers. Despite this high number of classes, DbUnit usage is relatively simple, because it consists of setting up the database before a test is run and comparing its state afterwards.

Although DbUnit is a great tool, it’s a low-level library, which provides the basic blocks for database testing. To use it efficiently, it’s necessary to define infrastructure classes and methods that at the same time leverage DbUnit strengths and provide reuse throughout the project. With creativity, experience, and planning, it’s possible to write DbUnit tests in an efficient and enjoyable way.

In this chapter, we demonstrated through progressive examples how to use DbUnit to populate the database before tests, assert the database contents after the tests, create datasets from existing databases, and use advanced APIs to make tests easier to write and maintain. In the next chapter, we show how to extend these techniques to JPA-based applications, and in chapter 19 we cover tools that enhance JUnit, including Unitils, which provides some of this chapter’s techniques out of the box.