12 Replication And Synchronization

Replication refers to the concept of storing several copies of a data record at different database servers. These copies are called replicas and the number of copies is called the replication factor. When applying replication to large data sets, first a fragmentation of the data set is obtained and then the fragments are replicated among a distributed database system. Replication on the one hand improves reliability and availability of a distributed system because replicas can serve as backup copies whenever one of the servers fails and becomes unavailable. But even if no failures occur, replication enables load balancing (by redirecting client requests to idle replicas) or data locality (by redirecting client requests to replicas closest to them). Yet, this kind of concurrent accesses to different replicas lead to consistency problems of the data records. Techniques for ordering these accesses have to be implemented.

In this chapter, we discuss the replication models of master-slave and multi-master replication. We present protocols for distributed concurrency control and consensus. We extensively discuss logical clocks as a method to order events in a distributed database system.

12.1 Replication Models

Replication has several advantages:

–it improves the reliability of the distributed database system by offering higher data availability: if one of the replicas fails to handle a user query, another replica can take over.

–and it offers lower latency than a non-replicated system by enabling load balancing, data locality and parallelization: any replica can answer any user’s read request and so the database system can redirect requests to idle copies; moreover, replicas answering a user request should at best be closest to the user’s location to reduce latency while other replicas answer requests of other users.

The advantages of replication however imply a major disadvantage: replication causes consistency problems in a distributed database system. When a user updates a data record at one server, network delays or even network failures may prevent the database system from updating all other replicas of the data record quickly; this leads to replicas having outdated data which could be read by other users in the meantime. Moreover there is the concurrency problem: two or more users might concurrently update the same data record on different replicas and the database system must offer a mechanism to resolve this conflict.

The two basic models of replication are master-slave and multi-master replication. While the consistency problem exists for both, the concurrency problem is avoided in the master-slave case but at the cost of higher latency for write requests.

12.1.1 Master-Slave Replication

In master-slave replication, write requests are handled only by a single dedicated server that is called the master. After a write, the master is responsible for updating all other servers that hold a replica – the so-called slaves. Read requests can be accepted by both the master and the slaves. Figure 12.1 shows an example of a master server with two slaves, one client executing write and read requests and one client only executing read requests. Master-slave replication offers enough redundancy in case of a master failure: when the master fails, one of the slaves can be elected to be the new master and all write request are redirected to it.

Having a single master server for all write requests in the database system is a bottleneck that slows down the processing of writes tremendously. A pragmatic solution is to partition the set of all data records into disjoint subsets and to each such subset assign one server as the master server. This master assignation could be combined with the partitioning process discussed in Chapter 11: In combination with data partitioning, data records in the same partition (or fragment, or shard) are copied to the same replication servers and one of the servers is designated master for the entire partition while the others act as slaves. In Figure 12.2, we see two data records (A and B) with a replication factor 2. One server is the master server for record A and only this server will accept write requests for record A. It is then responsible to update the second server – acting as a slave for record A. Similarly, the second server is master for record B while the other server is slave for record B.

Fig. 12.3. Multi-master replication

12.1.2 Multi-Master Replication

When all servers holding a replica of a data record can process write request, they all act as masters for the data record; hence, we can talk of multi-master replication in this case. An equivalent term is peer-to-peer replication based on the fact that the masters are peers with identical capabilities and they have to synchronize one with the other. Multi-master replication offers higher write availability than master-slave replication because clients can contact any replica server with a write request and hence write requests can be processed in parallel. In Figure 12.3 all servers accept write and read requests for a data item and the servers have to regularly synchronize their state among themselves. Due to the consistency problem, some clients may retrieve outdated data whenever the replica answering the client’s read request has not finished the synchronization process. Even worse a situation arises due to the concurrency problem: replicas may be in conflict when different clients wrote to different replicas without a synchronization step in between the writes.

12.1.3 Replication Factor and the Data Replication Problem

Maintaining more replicas can improve reliability (for example, reduce the amount of data loss) in case of failures in the distributed database system. On the other hand, more replicas lead to more overhead for keeping all replicas synchronized. When designing a distributed database, the core question is: How many replicas are sufficient for a reliable distributed database system? Assume that we have 2 as the replication factor: that is, one master stores the primary copy while and one slave stores a secondary copy in the master-slave replication case; or alternatively two master servers each store a copy of a data item in the multi-master case. Ideally, if just one of the servers fails or is temporarily unavailable, the other server will take over and answer all incoming user requests including the writes. As soon as the first server recovers, it has to synchronize with the second server and reconcile its database state to reflect the most recent writes before it can answer any new user requests (see Figure 12.4).

Fig. 12.5. Failure and recovery of two servers

However, with two-way replication, a couple of error cases can occur. For example, if the second server fails, too, before the first one has recovered, any writes accepted by the second server will not be visible to the first server (see Figure 12.5); it might hence return stale data to a read request. After both servers have recovered, the writes accepted by the servers independently need to be ordered in a reconciliation step; after the reconciliation, both servers should reflect the most recent database state. If the second server does not recover at all, all updates accepted independently by it (without having reconciled the updates with the first server) will be lost.

A replication factor of 3 is widely accepted as a good trade-off between reliability and complexity of replica maintenance. However, with 3-way replication, too, it might happen that all three replicas fail or are not able to communicate for some time.

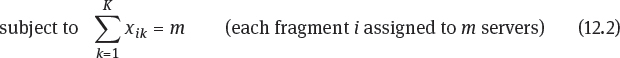

An extension of the basic Data Distribution Problem in Section 11.3.1, the Data Replication Problem expresses that replicas will be placed on distinct servers. In the ILP representation, the variables yk for the bins and xik for fragments are kept.

![]()

![]()

When solving this optimization problem, the resulting assignment of the x-variables represents an assignment of fragments to servers where m replicas of each fragment are assigned to m different servers.

12.1.4 Hinted Handoff and Read Repair

Hinted handoff has been devised as a flexible mechanism to handle temporary failures: if a replica is unavailable, the write requests (or any system messages) for this replica are delegated to another available server with a hint that these requests and messages should be relayed to the replica server as soon as possible. To maintain the replication factor, the other server itself should not hold a replica of the affected data item. When the connection to the replica server is reestablished, the hinted server holding the delegated requests and messages can pass them on to the replica; the replica can then update its state before accepting new requests.

Yet, the relaying server itself might become unavailable or might fail before it can deliver requests and messages to the unavailable replica. To reduce the adverse effects of such a situation, background tasks can be run to keep replicas synchronized even in case of more complex failure cases. One way to deal with this is to regularly run an epidemic protocol (see Section 10.4) in the background which will synchronize the replicas. Another option is called read repair proceeding as follows. Whenever a data item is requested by a client, a coordinator node sends out the read request to a couple of replicas; after the responses are retrieved, the coordinator checks these responses for inconsistencies. The replicas holding outdated data are updated with the current value which in turn is also returned to the requesting client. Read repair is often combined with read quorums (see Section 13.1.1): Because the coordinator nodes needs a set of unambiguous responses anyway, it contacts a set of replicas larger than the needed quorum, returns the majority response to the client and sends repair instructions to those replicas that are not yet synchronized.

12.2 Distributed Concurrency Control

Distributed concurrency control ensures the correct execution of operations (more generally, transactions) that affect data that are stored in a distributed fashion on different database servers. In case of data replication, a typical application of a concurrency control protocol is synchronizing all replicas of a record (on all database servers that hold a replica) when a write on the record was issued to one of the database servers. That is, if there a n replicas and one of them has been updated the remaining n − 1 replicas have to be updated, too.

Specifications of concurrency control protocols use the term agent for each stakeholder participating in the protocol; each agent can furthermore have different roles. The most important role is the coordinator: the coordinator is the database server that communicates with all other agents and is responsible for either leading the distributed operation to success or aborting it in its entirety. Concurrency protocols hence offer a solution to the consensus problem: the consensus problem requires a set of agents to agree on a single value.

The two-phase commit protocol presented in Section 12.2.1 requires all participating agents to agree to a proposed value in order to accept the value as the currently globally valid state among all agents. In contrast, quorum consensus protocols only require a certain majority of agents to agree on a proposed value where the exact definition of majority depends on a balance between read and write behaviour and the types of failures that the protocol should be resistant against. The Paxos algorithm as a prominent and widely-used quorum consensus protocol is presented in Section 12.2.2. Lastly, multi-version concurrency control as a timestamp-based concurrency mechanism that offers non-blocking reads is introduced in Section 12.2.3.

12.2.1 Two-Phase Commit

The two-phase commit (2PC) addresses the execution of a distributed transaction where all agents have to acknowledge a successful finalization of the transaction. 2PC is initiated by the coordinator of the transaction who wants to reach a consensus for the transaction results by all agents. In the simplest case, all agents try to agree on accepting a single value of an update request that has been received by the coordinator. The two-phase commit (2PC) protocol has a voting phase and a decision phase. In each phase, the coordinator sends one message to all agents and – if everything is working according to the default protocol – receives a reply from each agent. Timeouts and restarts of the coordinator or any of the other agents have to be handled by additional protocols.

In the case that no timeouts and no restarts occur, the agents can either jointly agree to commit the value or the coordinator decides to abort the transaction. In the voting phase, the coordinator sends all agents a prepare message asking them whether they are able to commit the transaction. Each agent can then vote by either replying ready (the agent is willing to accept the transaction) or failed (the agent does not accept the transaction) – or it does not reply at all which causes a timeout protocol to handle this situation.

In the decision phase, the coordinator notifies the agents of a common decision resulting from the votes: the transaction can only be globally committed if all agents voted ready in which case the coordinator sends a commit message to all agents; afterwards, all agents have to send an acknowledgement to the coordinator to achieve a global commit. This commit case is shown in Figure 12.6.

The abort case applies if at least one agent voted failed. In order to abort the transaction globally, the coordinator has to send an abort message to all agents that have voted ready. Afterwards, the agents that voted ready have to acknowledge the abort and have to internally undo all transaction operations (rollback).

A major problem with a large-scale application of the two-phase commit protocol is that a failure of a single agent will lead to a global abort of the transaction. Moreover, the protocol highly depends on the central role of a single coordinator. In particular, the state between the two phases – before the coordinator sends his decision to all agents – is called the in-doubt state: in case the coordinator irrecoverably fails before sending his decision, the agents cannot proceed to either commit or abort the transaction. This problem can only be solved by a complex recovery procedure that contacts all other agents and asks for their votes again. Even more severe is the case that both the coordinator and one agent fail during the in-doubt state. In this case, one cannot say whether the failed agent already received a commit or an abort message from the failed coordinator. The entire system is hence blocked until at least one of them recovers.

A so-called three-phase commit protocol adds another phase (the pre-commit phase) to avoid this blocking behavior; it however comes at the cost of more message delays and hence a larger roundtrip time.

12.2.2 Paxos Algorithm

Starting with [Lam98] a family of consensus protocols called Paxos algorithms has been devised. Different versions of the Paxos algorithm can ensure progress under different failure models provided that a certain majority of agents is alive and working correctly.

The first and basic Paxos algorithm is meant to cope with non-Byzantine failures (in particular, crash failures, message loss, duplication and reordering of messages) as long as more than half of the accepting agents follow the protocol. From a database system point of view, the Paxos algorithm can be applied to keep a distributed DBMS in a consistent state. A client can for example issue a read request for some database record; the database servers then have to come to a consensus on what the current state (and hence the most recent value) of the record is. In this setting, the database servers act as one or more agents in the Paxos protocol. The following types of agents take part in a Paxos protocol:

Proposer: A proposer is an agent that waits for a client request and then initiates the consensus process by asking acceptor agents to send a possible response value. A proposer assigns a number to its request (called proposal number, or sometimes command number or ballot number). Depending on the answers received from the acceptors, the proposer chooses a response value. This response value is sent to all acceptors once more to obtain a final consensus.

Leader: For handling a specific client request, one of the proposers is elected to be the leader for this specific client request. There may be several proposers competing to be leader and they may issue proposals with different proposal numbers. Acceptor: An acceptor can accept a proposal based on the proposed value and on the proposal number; a correct acceptor only accepts proposals which are numbered higher than any proposal it has accepted before. This is why an acceptor has to always remember the highest proposal it accepted so far – even if it crashes and later on restarts; thus persistent disk storage and recovery is needed for acceptors.

Learner: A learner is any other agent that is interested in the value on which the acceptors agreed. Learners will be informed of a response value by each of the acceptors. If a majority of acceptors advocates a certain value, this value is chosen as the consensus outcome. One of the learners can return the response value to the client. Usually, the leader is also a learner so that he receives the notification that his chosen value was finally agreed to by a majority of acceptors.

The basic Paxos algorithm consists of a read phase (Phase 1) and a write phase (Phase 2) as shown in Figure 12.8. In the read phase, the leader prepares the consensus by communicating with the acceptors to retrieve their current state. This is necessary because the acceptors might be in different states and they might have already answered requests sent by other proposers (with different proposal numbers). For the read phase to be successful, the leader has to receive an answer from a majority of acceptors. In the write phase, the leader can choose a value from the answers sent to him by the acceptors; he then sends the chosen value to all acceptors. Each acceptor will notify all learners of the chosen value. Again for a learner to effectively learn the value, he has to receive notifications from a majority of acceptors. The two phases each consist of two messages:

Phase 1a: The leader chooses a proposal number to identify himself. He sends a prepare message with his proposal number propNum to the acceptors.

Phase 1b: The acceptors reply with an acknowledgement message promise. The acknowledgement contains the proposal number propNum as well as the highest proposal number maxAcceptPropNum for which the acceptor has previously sent an accepted message and the corresponding value of this accepted message; that is, maxAcceptPropNum is different from propNum and stems from a previous run of Phase 2 for which however no consensus was reached due to some failure. By sending an acknowledgement message promise for proposal number propNum each acceptor informs the leader of the value he is willing to accept as the consensus result. And the acceptor promises not to send any more accepted messages for proposal numbers less than propNum.

Phase 2a: When the leader receives a promise message from a majority of acceptors, he compares all replies and identifies the one with the highest proposal number maxAcceptPropNum; he chooses the corresponding value as the possible consensus value. If there is no such highest proposal number, he can choose a value freely – hoping that a majority of acceptors will finally approve his choice. After choosing a value, the leader sends an accept message (with his proposal number propNum and the chosen value) to the acceptors for final approval.

Phase 2b: An acceptor accepts a chosen value for a given propNum by sending an accepted message (with proposal number propNum and the chosen value) to all learners – as long as he has not sent a promise message for a higher proposal number (in a different run of phase 1). As an alternative the acceptors can send their accepted messages to the leader who in turn notifies all the learners; this reduces the amount of messages sent (when there is more than one learner) at the cost of introducing an additional message delay (because learners have to wait for the notification from the leader).

With the basic Paxos protocol the following safety properties are guaranteed to hold for each individual run of the protocol (see [Lam05]):

Nontriviality: Any value that a learner learns must have been proposed by a proposer.

Stability: A learner learns one single value or none at all.

Consistency: All learners learn the same value; that is, no two learners learn different values.

Paxos also has the following liveness property (for a given learner and a given proposed value) under the assumption that the given learner, the proposer of the value and the necessary majority of acceptors are working correctly and all messages eventually reach their destination:

Liveness: If some value has been proposed, a learner will learn a value – although not necessarily the proposed one and although several rounds of communications (that is, message delays) might be necessary to establish the learned value.

The basic Paxos protocol can support non-Byzantine failures of the participating agents as follows:

Failures of proposers: The leader can fail as long as there is at least one backup proposer who can be the new leader and eventually gets his chosen value accepted. That is why the read phase (Phase 1) is necessary: in Phase 1 the new leader retrieves the highest proposal number for which an accepted message has ever been sent previously by any of the acceptors – but no learner has received a majority of accepted messages for this proposal number so far. In case the proposal number chosen by the new leader (in his prepare message in the current run of Phase 1) is superseded by a proposal number sent by any other proposer, the leader can start a new run of Phase 1 with a higher proposal number. This is shown in Figure 12.9. One problem of this restart behavior is the case of competing proposers (also called dueling proposers; see Figure 12.10): two or more proposer are trying to be leaders but they are never able to finish Phase 2 because in the meantime a majority of acceptors has already sent a promise message for another higher proposal number – hence making it impossible for them to send an accepted message for the lower proposal number. In this case it can happen that the competing proposers each try to increase their proposal numbers forever without making progress so that no consensus is reached. A practical recommendation to avoid this case is to introduce small random delays before starting a new run of Phase 1 so that one of the competing proposers gets a chance to finish Phase 2.

Failures of learners: If all learners fail, the consensus value will not be sent to the client although a majority of acceptors accepted a chosen value. Hence, there must be at least one learner working correctly (and timely). A practical solution is to have one distinguished learner (who could act as the leader at the same time) to be responsible for returning the consensus value to the client – but to also have one or more backup learners that can take over in case the distinguished learners fails (or delays).

Failures of acceptors: Regarding the acceptors, for a consensus to be successful with a certain proposal number, the leader has to receive promise messages for the proposal number from a majority of acceptors and later on at least one learner has to receive accepted messages for the proposal number from a majority of acceptors – although not necessarily the same acceptors have to send the promise and accepted messages. Now we can establish the minimum quorum size needed in basic Paxos. The basic Paxos protocol can tolerate F faulty acceptors as long as there are at least F + 1 non-faulty acceptors that agree on a value. That is, for a total of N > 2 · F acceptors, basic Paxos can work reliably, even if F acceptors fail. In other words, basic Paxos can proceed with a quorum size of F + 1 as long as there are at most F faulty acceptors – and their messages are eventually received by proposers and learners (see Figure 12.11).

If however a majority of acceptors fails, a new run of Phase 1 has to be started with a higher proposal number and with a quorum containing other acceptors than the failed ones (see Figure 12.12).

Several variants of the basic Paxos protocol have been devised to offer enhanced functionalities. One such variant of Paxos called cheap Paxos relies on the optimistic perspective that F failing acceptors can be tolerated by having F + 1 active acceptors and F auxiliary acceptors. The auxiliary acceptors need not take part in the Paxos protocol as long as the active acceptors are working correctly; only if one of the active acceptors fails, one auxiliary acceptor is included in the protocol as a replacement for the faulty acceptor – and only until the faulty acceptor has recovered. This version of Paxos reduces message transmissions (because only F + 1 acceptors are contacted in the best case) and the auxiliary acceptors can be idle (or processing other tasks) as long as no faults occur.

A further generalization of Paxos – called generalized Paxos – relies on the observation that commutative commands can be executed in any order. Instead of enforcing a total order of all commands, it hence suffices to have a partial order; in other words, non-commutative commands have to be executed in the right sequence by all agents, whereas commutative commands can be executed in any order by the agents. Note that in this case consensus is reached not only for a single client request but for a continuous sequence of commands.

12.2.3 Multiversion Concurrency Control

In a distributed database system, lock-based concurrency control on replicated data is very expensive as locks must be managed globally for all servers. It may also lead to deadlocks more often than on a single centralized server.

That is why a form of multiversion concurrency control (MVCC) is often employed. MVCC-based transactions consist of

–a read phase where a local copy for the transaction is created which the transaction can operate on;

–a validation phase where the MVCC system checks whether the transaction is allowed to apply its modification to the global authoritative data set;

–and a write phase where the modified data are copied to the global data set.

The main advantage of MVCC is that each client sees its own copy of the current values in the database; that is, the database is always accessible for read access without restrictions – a feature called non-blocking reads. When writes take place inside a transaction then the client version is compared with the current version in the database system at commit time: when the client version is older than the database version, writes of other transactions have occurred in between. To avoid consistency problems, the client transaction must be aborted and restarted with a new version.

Although MVCC offers non-blocking reads and less overhead than lock-based approaches, MVCC has some disadvantages. Maintaining versions for different clients for one has a storage space problem: several copies of data items have to be held available for the accessing clients and the clients produce new versions during the interaction. And due to the late abort at commit time, many restarts of transaction may occur. For more details on MVCC see the Section 13.1.2 on snapshot isolation.